Figures & data

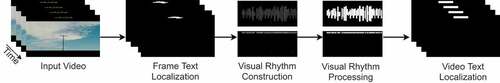

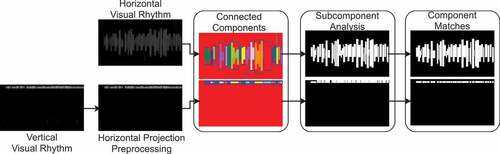

Figure 4. Steps of visual rhythm processing. First, the connected components of each visual rhythm are detected. They are then filtered by a subcomponent analysis. Finally, caption positions are retrieved from the resulting visual rhythms.

Table 1. Information on the videos collected from YouTube to compose the dataset. All videos were tagged with a Creative Commons license.

Table 2. Different scripts considered in this work.

Table 3. Results obtained for detecting frames with captions for the different videos in the dataset.

Table 4. Results obtained for detecting frames with captions for different scripts.

Table 5. Results obtained for detecting frames with captions for different caption characteristics.

Table 6. Average accuracy achieved for video caption localization.

Table 7. Average accuracy obtained for caption localization for each script.

Table 8. Average accuracy obtained for caption localization for different caption characteristics.