Figures & data

Table 1. Summary of the literature on decision-making for product lifecycle management.

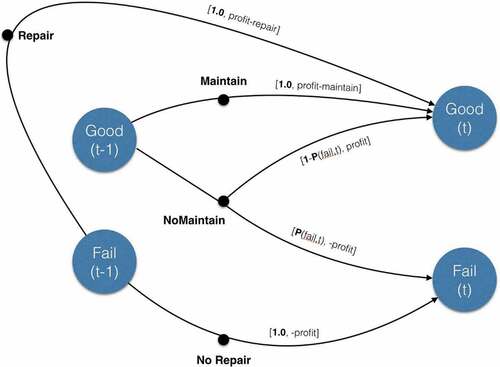

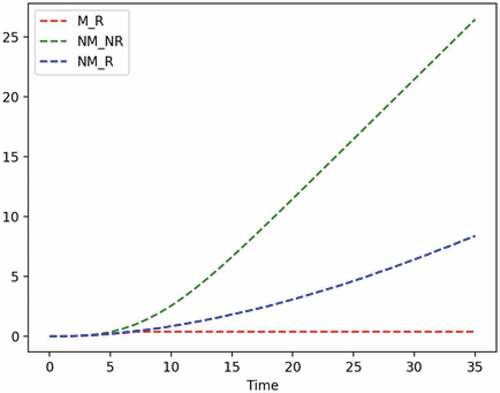

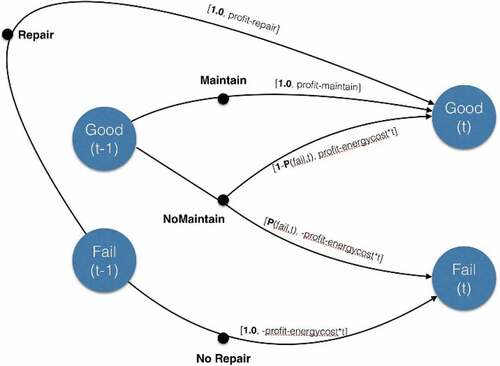

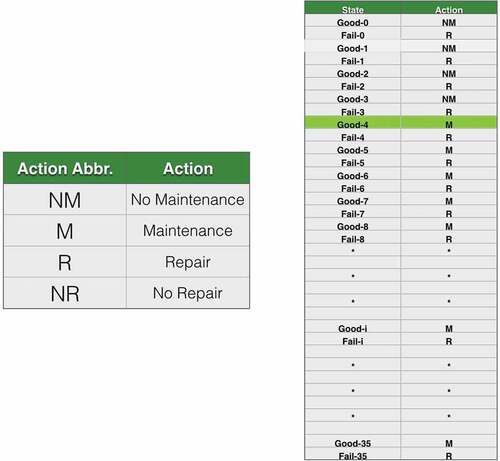

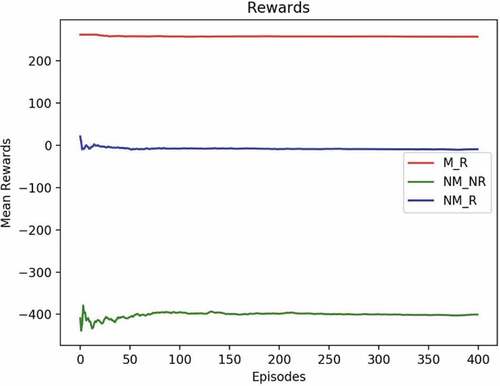

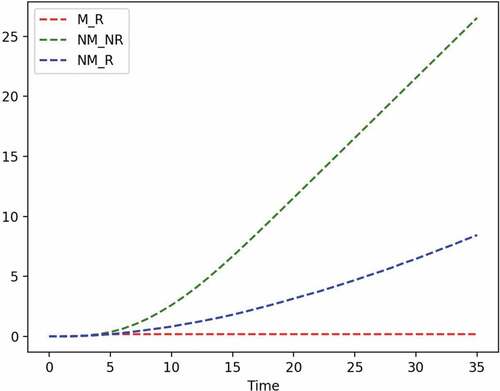

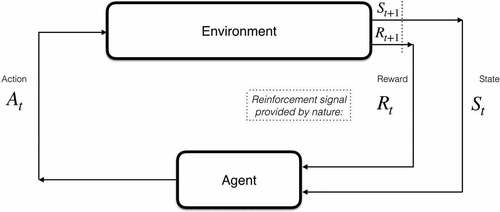

Figure 19. Reinforcement learning (RL) extends learning to solving a decision problem at the same time. Given only the problem state $x_t$ and the reward $r_t$ for that state, the agent tries to learn how to maximise profits (or minimise costs) over time. This can be visualised as a Bayesian network augmented with action nodes, in which at each time step $t$ the agent must choose an action based on the history of observed states and rewards.
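To make the state, action and reward loop of Figure 19 concrete, here is a minimal sketch of tabular Q-learning, one standard RL algorithm, on a hypothetical five-state chain. The environment, the function names `step`, `q_learning` and `greedy_policy`, and all parameter values are illustrative assumptions, not the method used in this work. The agent observes only the current state and the reward, and learns action values whose greedy policy maximises discounted cumulative reward. A small per-step cost stands in for operating costs. Unlike the figure, the sketch assumes that the current state already summarises the relevant history (the Markov assumption).

```python
import random

N_STATES = 5  # hypothetical chain: states 0..4, state 4 is terminal


def step(state: int, action: int, n_states: int = N_STATES):
    """Hypothetical environment: action 0 moves left, action 1 moves right.

    Reaching the last state pays a reward of 1 and ends the episode;
    every other step incurs a small cost (reward -0.01).
    """
    delta = 1 if action == 1 else -1
    next_state = max(0, min(n_states - 1, state + delta))
    done = next_state == n_states - 1
    reward = 1.0 if done else -0.01
    return next_state, reward, done


def q_learning(n_states=N_STATES, episodes=500, alpha=0.1, gamma=0.9,
               epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration (illustrative values)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise act greedily.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max((0, 1), key=lambda a: q[state][a])
            next_state, reward, done = step(state, action, n_states)
            # Bootstrap from the best next action unless the episode ended.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Pick the highest-valued action in each state (ties go to action 0)."""
    return [max((0, 1), key=lambda a: row[a]) for row in q]


q = q_learning()
print(greedy_policy(q)[:-1])  # learned actions for the non-terminal states
```

With these settings the learned policy should move right in every non-terminal state, because that reaches the reward with the fewest costly steps. A tabular method is used only because it keeps the update rule visible; larger problems would typically replace the table with a function approximator.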