Figures & data

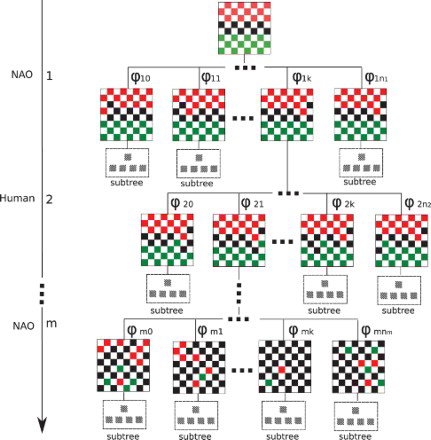

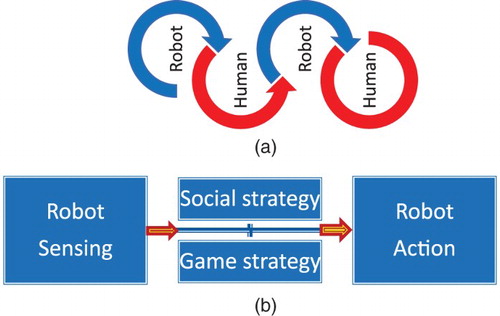

Figure 1. The human–robot interaction process in games. (a) The human–robot interaction process as turn-taking between a human and a robot. (b) An individual robot turn consists of sensing/perception of the game state, a strategy module comprising a game strategy and a social strategy, and an action towards the human. Unlike existing studies, this study grounds social interaction in the strategy module, rather than adding social features to the observable action or having the robot perceive social cues.
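The turn structure in panel (b) can be sketched as a small pipeline: sense, then strategy, then act. This is a minimal illustration under stated assumptions, not the authors' implementation. All names (`robot_turn`, `sense`, `game_strategy`, `social_strategy`, `act`) are hypothetical. It also assumes, for illustration only, that the game strategy returns ranked candidate moves and that the social strategy chooses among them. Under that assumption, social behavior shapes which move is played rather than being added to the observable action.

```python
from typing import Callable, List

Board = List[List[str]]  # hypothetical board representation, e.g. 'b', 'w', '.'


def robot_turn(sense: Callable[[], Board],
               game_strategy: Callable[[Board], List[str]],
               social_strategy: Callable[[Board, List[str]], str],
               act: Callable[[str], None]) -> str:
    """One robot turn: sensing/perception -> strategy module -> action.

    Assumption (not from the source): the game strategy returns candidate
    moves ordered best-first, and the social strategy picks one of them.
    """
    state = sense()                            # perceive the current game state
    candidates = game_strategy(state)          # game strategy: ranked candidate moves
    move = social_strategy(state, candidates)  # social strategy: choose among candidates
    act(move)                                  # act towards the human
    return move
```

For example, a hypothetical social strategy could sometimes return a weaker candidate to keep the game balanced for a child, without changing how the move itself is executed.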

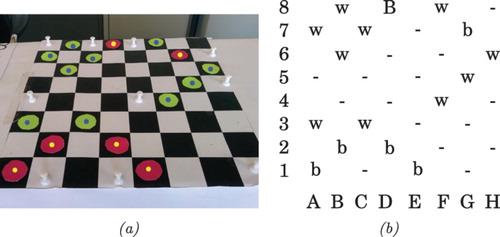

Figure 3. Piece detection and state estimation example. (a) Image captured with the NAO camera. All pieces of the robot and of the opponent are detected and their centers are estimated. (b) Representation of the game state in the robot's “mind”. The robot plays with the black pieces, denoted b, and the opponent's pieces are denoted w (for white). In the color prints and in the designed game, red and green are used instead of black and white, since these colors may be more enjoyable for the children.
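The step from detected piece centers (panel a) to a symbolic board (panel b) can be sketched as mapping each center to a grid cell. This is a minimal sketch under loud assumptions. It is not the method used with NAO. The function name `estimate_state` and its parameters are hypothetical. It assumes the image is already rectified to a top-down, axis-aligned view, so that pixel coordinates map linearly to board cells. In practice a perspective correction, such as a homography, would normally come first. It also assumes a square `n` × `n` board with a known origin and cell size in pixels.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates of a detected piece center


def estimate_state(robot_centers: List[Point],
                   opponent_centers: List[Point],
                   board_origin: Point,
                   cell_size: float,
                   n: int) -> List[List[str]]:
    """Build an n x n board: 'b' = robot piece, 'w' = opponent piece, '.' = empty.

    Assumes a rectified, axis-aligned board image (hypothetical setup).
    """
    board = [['.'] * n for _ in range(n)]

    def to_cell(p: Point) -> Optional[Tuple[int, int]]:
        # Integer division of the offset from the board origin gives the cell index.
        col = int((p[0] - board_origin[0]) // cell_size)
        row = int((p[1] - board_origin[1]) // cell_size)
        if 0 <= row < n and 0 <= col < n:
            return row, col
        return None  # detections outside the board are ignored

    for label, centers in (('b', robot_centers), ('w', opponent_centers)):
        for p in centers:
            cell = to_cell(p)
            if cell is not None:
                board[cell[0]][cell[1]] = label
    return board
```

With a 3 × 3 grid of 20-pixel cells starting at (0, 0), a robot piece centered at (15, 15) falls in row 0, column 0, and an opponent piece at (45, 25) falls in row 1, column 2.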