Figures & data

Table 1. Data set information, where #Instances is the number of instances and #Features is the number of features.

Table 2. Data set information after preprocessing.

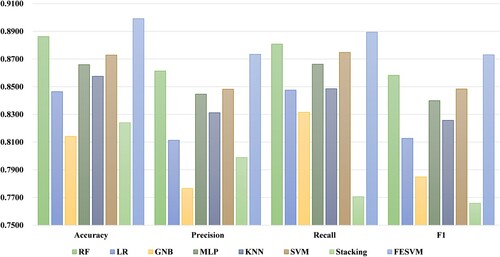

Table 3. Experimental results from the RF, LR, GNB, MLP, KNN, SVM, Stacking, and FESVM using the first eight data sets in Table .

Table 4. Experimental results from the RF, LR, GNB, MLP, KNN, SVM, Stacking, and FESVM using the ninth to sixteenth data sets in Table .

Table 5. Experimental results from the RF, LR, GNB, MLP, KNN, SVM, Stacking, and FESVM using the last four data sets in Table .

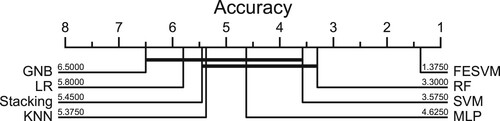

Table 6. Statistical test results for the RF, LR, GNB, MLP, KNN, SVM, Stacking, and FESVM in terms of accuracy (p-values less than 0.05 are highlighted in bold).

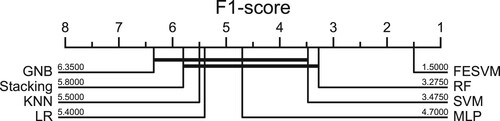

Table 7. Statistical test results for the RF, LR, GNB, MLP, KNN, SVM, Stacking, and FESVM in terms of F1-Score (p-values less than 0.05 are highlighted in bold).

Table 8. Comparison of the computation time of our proposed FESVM on the 20 data sets with different input features, where t1 is the computation time using all features, t2 is the computation time using the generated features, t3 is the computation time using the selected features, and t4 is the computation time using the features obtained from both feature generation and feature selection.