Figures & data

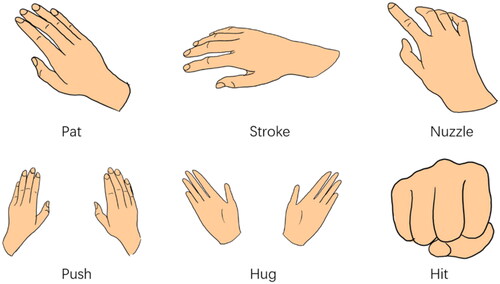

Table 1. Selection of MST gestures.

Table 2. Visual design inspiration.

Table 3. Details of vibrotactile stimuli for the MST gestures selected from Wei et al. (2022b).

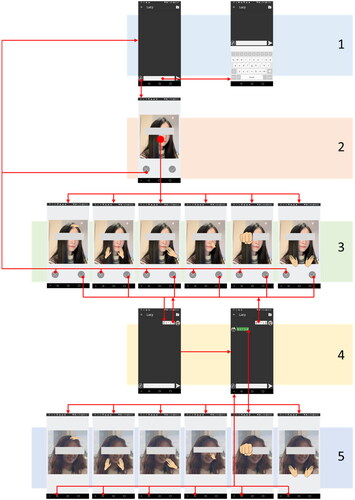

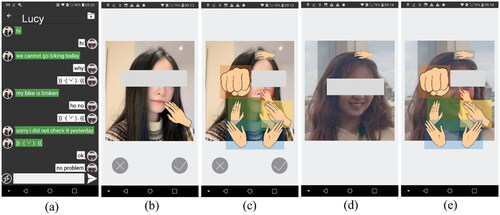

Figure 2. Interface for texting. (a) Interface for texting words, (b) interface in which participants input MST gestures (the avatar is the experimenter), (c) positions of each MST gesture, (d) interface in which participants receive MST gestures (the avatar is the participant), and (e) positions of each MST gesture. We developed this texting interface based on an open-source application (https://github.com/Baloneo/LANC).

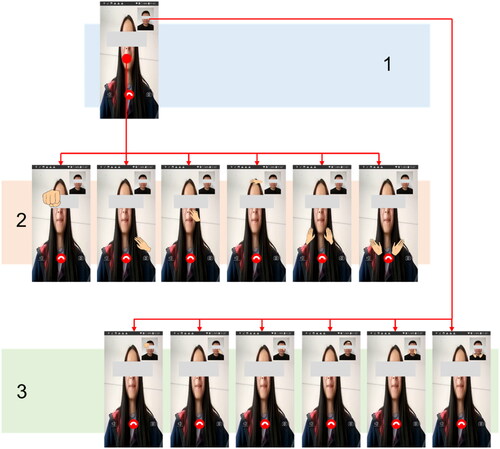

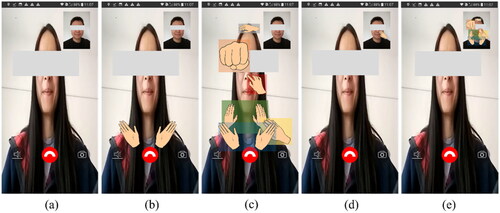

Figure 3. Interface for video calls. (a) Original interface for video calls, (b) interface in which participants input MST gestures (the full-screen image is the experimenter), (c) positions of each MST gesture on the experimenter’s image, (d) interface in which participants receive MST gestures (the image box in the upper right corner shows the participant), and (e) positions of each MST gesture on the participant’s image. We developed this video calling interface based on an open-source application (https://github.com/xmtggh/VideoCalling).

Table 4. Description of the interface structure of texting.

Table 5. Description of the interface structure of video calling.

Table 6. Descriptions of variables.

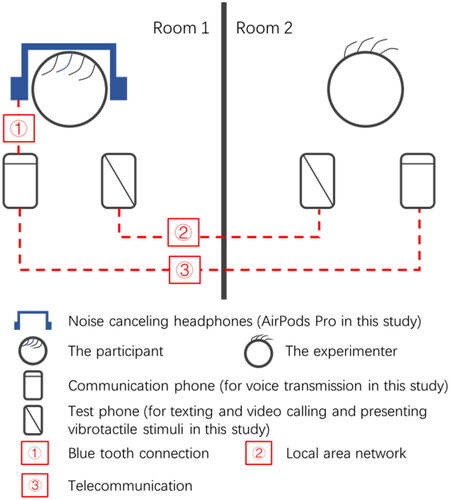

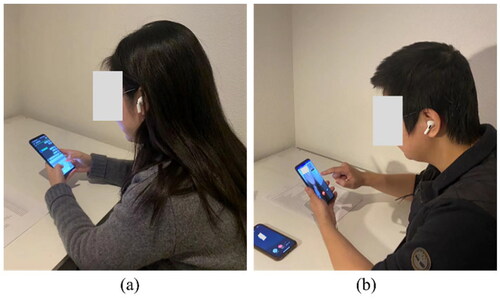

Figure 6. Test environment. (a) Texting and (b) video calling (the second phone on the desk is used for voice transmission).

Table 7. Example questionnaire of NMSPM (Harms & Biocca, 2004).

Table 8. Test communication modes, conditions, and activities for participants.

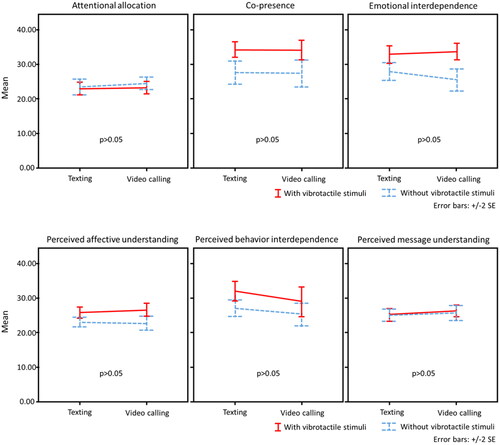

Figure 8. Dimensions of social presence. Between-subjects analysis: video calling and texting (VT: vibrotactile stimuli).

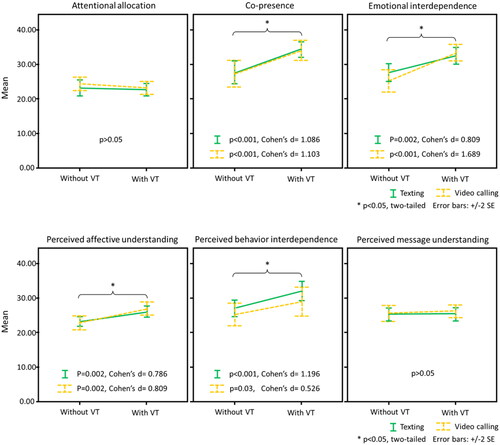

Figure 9. Dimensions of social presence. Within-subjects analysis: with VT and without VT (VT: vibrotactile stimuli).

Table 9. Qualitative analysis of texting.

Table 10. Qualitative analysis of video calling.