Figures & data

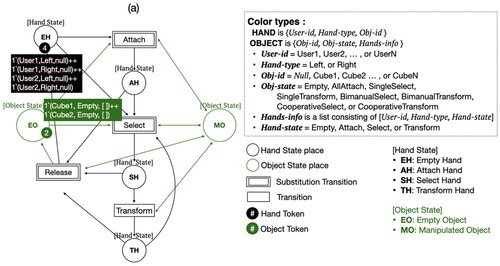

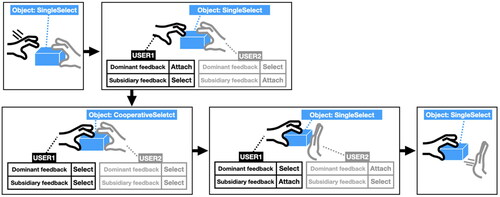

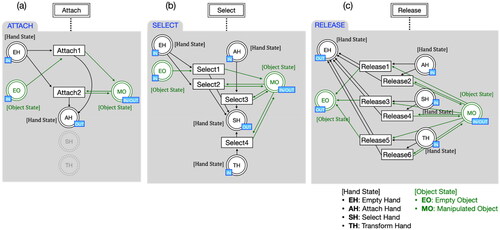

Figure 5. Substitution transitions in the collaborative manipulation model: (a) attach, (b) select, and (c) release.

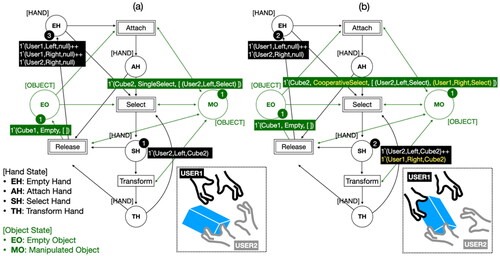

Figure 6. Example of the CPN (a) when User2 selects Cube2 with the left hand and (b) when User1 and User2 select Cube2 together.

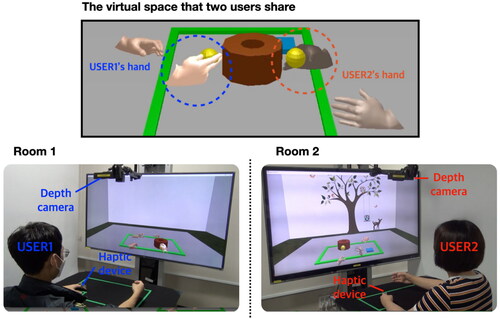

Figure 8. Experimental system setup. The display was a 40″ three-dimensional monitor. We used the 3GearSystems camera as a depth camera to recognize the pose of the participant’s hands and to track their movement. To receive feedback on the collaborator’s interaction, participants wore a vibrating haptic device on their left index finger. The participants’ experimental systems, located in separate places, were networked with each other so that participants could collaborate by sharing the same virtual space (top).

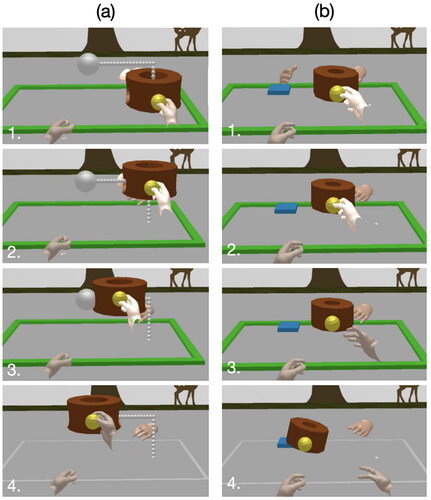

Figure 9. Experimental tasks. (a) Task 1: Move together (1. Two users grasp together, 2–3. Two users move together, 4. Two users stop together). (b) Task 2: Handover (1. The giver grasps, 2. The receiver grasps, 3. The giver releases, 4. The receiver moves).

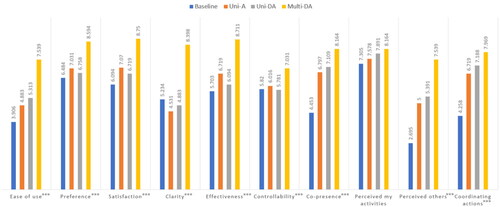

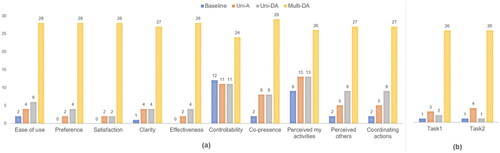

Table 1. Statements in the questionnaire (five-point Likert items).

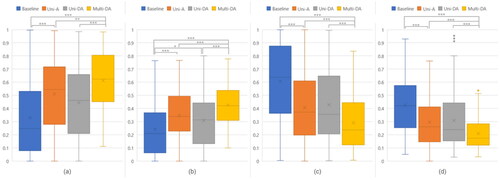

Figure 10. Moving together task: Ratio of collaborative manipulation to (a) cooperative distance, (b) cooperative time, (c) single distance, and (d) single time (x: mean, y: ratio from 0 to 1, *, ***: pairwise significant difference).

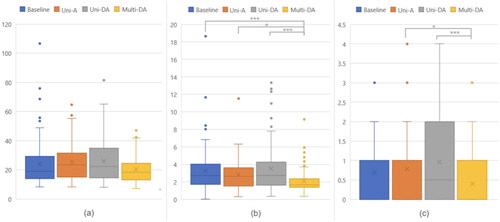

Figure 11. Handover task: (a) completion time (x: mean, y: seconds), (b) handover time (x: mean, y: seconds), and (c) failure count (x: mean, y: count) (*, ***: pairwise significant difference).