Figures & data

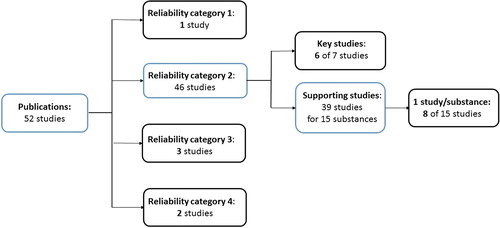

Table 1. Peer-reviewed studies included in this investigation. Studies are listed according to the reliability category assigned by the registrant: category 1 = Reliable without restriction, category 2 = Reliable with restriction, category 3 = Not reliable, and category 4 = Not assignable.

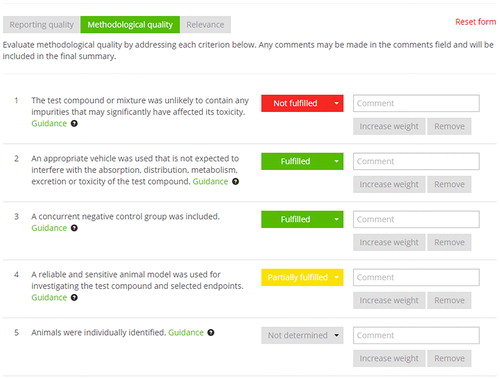

Table 2. SciRAP reporting and methodology criteria considered key for evaluating reliability based on expert judgment and “red criteria” in ToxRTool.

Table 3. Principles for categorizing studies into reliability categories 1–4 based on the SciRAP evaluation.

Table 4. Reliability category and rationale for reliability as registered in the REACH registration dossier for the 20 selected studies and resulting reliability evaluation with the SciRAP tool.

Table 5. SciRAP evaluation of reporting quality criteria for the 20 studies included in this investigation, listed according to the reliability category assigned with the SciRAP tool. Each criterion was evaluated as F = fulfilled, PF = partially fulfilled, NF = not fulfilled, ND = not determined, or NA = not applicable.

Table 6. SciRAP evaluation of methodology quality criteria for the 20 studies included in this investigation, listed according to the reliability category assigned with the SciRAP tool. Each criterion was evaluated as F = fulfilled, PF = partially fulfilled, NF = not fulfilled, ND = not determined, or NA = not applicable.