Figures & data

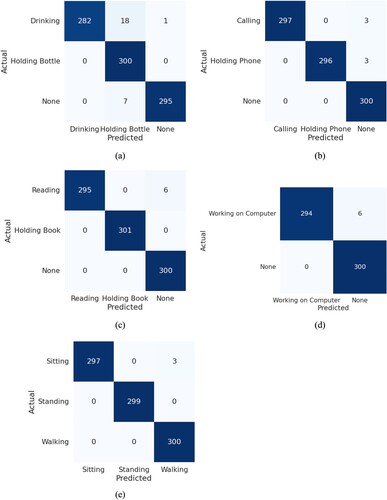

Figure 4. Model accuracies of the proposed method: (a) bottle, (b) mobile phone, (c) book, (d) keyboard, and (e) actions (no interaction).

Table 1. Robustness experiment.

Table 2. Robustness with various subjects.

Table 3. Robustness with various camera angles.

Table 4. Robustness with various camera frame sizes.

Table 5. Robustness in various environments.

Table 6. Ablation study on the explanatory variables in the proposed method.

Table 7. Comparison of accuracy when considering multi-camera complementarity.

Table 8. Effect of training dataset size on accuracy.

Table 9. Effect of the number of recognized interaction models on accuracy.

Table 10. Recognition speed experiment on various processors.

Table A1. Comparison of accuracy when considering depth coordinates.