Figures & data

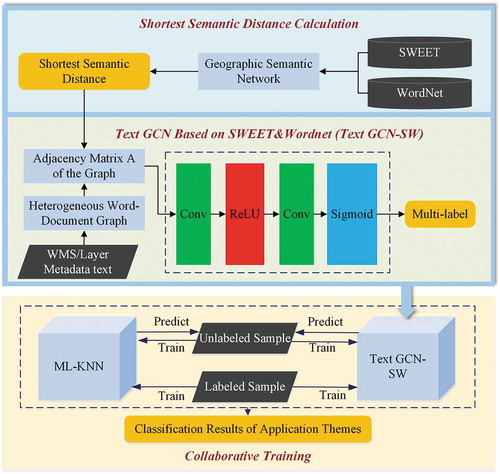

Figure 1. Architecture of the proposed collaborative training model Text GCN-SW-KNN, which combines ML-KNN and Text GCN-SW; the first two tiers show the working mechanism of Text GCN-SW

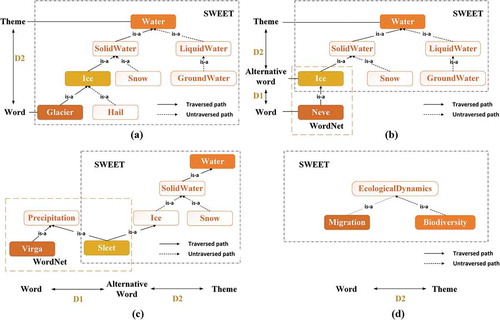

Figure 2. Example shortest paths between feature words and the theme within SWEET and WordNet. (a) Feature word A is included in SWEET; (b) Feature word A is not included in SWEET but is included in WordNet; (c) Feature word A and alternative word B are two subclasses apart; (d) Feature word A and Theme T are two subclasses apart

Table 1. Co-training algorithm based on Text GCN-SW and ML-KNN

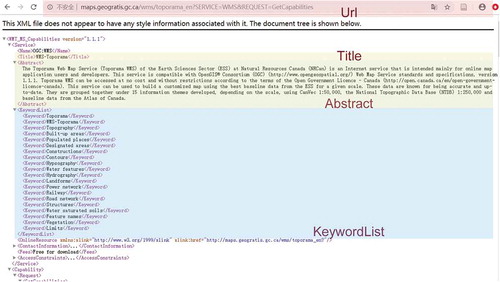

Figure 3. An example WMS metadata document in XML format, including fields such as URL, title, abstract and keywords

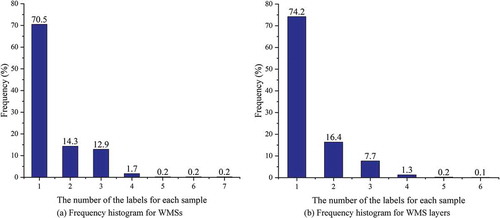

Figure 4. Frequency histogram of the number of labels per WMS and per layer in the 501 selected samples

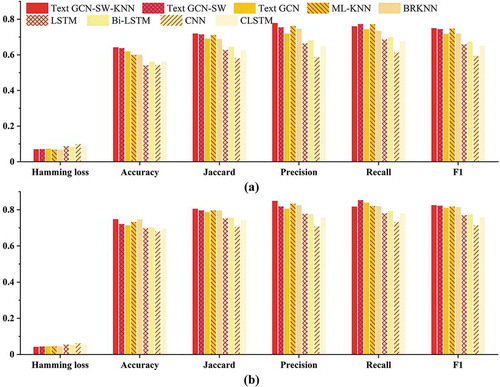

Figure 5. Performance comparison of Text GCN-SW-KNN and eight baselines for service-level and layer-level classification. (a) Results for WMS; (b) results for WMS layers

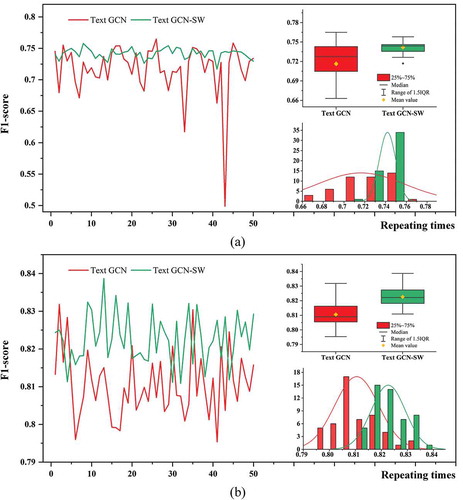

Figure 6. Stability of the F1-score across repeated experiments for Text GCN and Text GCN-SW. (a) Results for WMS; (b) results for WMS layers

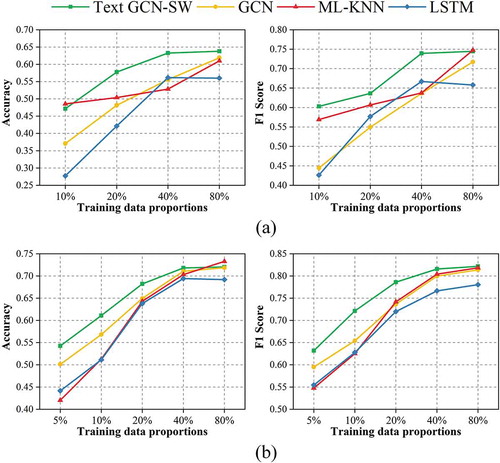

Figure 7. Accuracy and F1-score for Text GCN, Text GCN-SW, ML-KNN and LSTM with different proportions of the training data. (a) Results for WMS; (b) results for WMS layers

Table A1. Performance metrics of Text GCN-SW-KNN and eight baselines for WMS service-level classification

Table A2. Performance metrics of Text GCN-SW-KNN and eight baselines for WMS layer-level classification

Data availability statement

The code and data that support the findings of this study are openly available on GitHub at https://github.com/ZPGuiGroupWhu/Text-based-WMS-Application-Theme-Classification/tree/master.