Figures & data

Table 1. The Findable Accessible Interoperable and Reusable (FAIR) Guiding Principles for manual (human-driven) and automated (machine-driven) activities attempting to find and/or process scholarly/scientific digital data and non-data research objects – from data in the conventional sense to analytical pipelines (GO FAIR – International Support and Coordination Office, Citation2021; Wilkinson et al., Citation2016). Quoted from (Wilkinson et al., Citation2016), “four foundational principles – Findability, Accessibility, Interoperability, and Reusability (FAIR) – serve to guide [contemporary digital, e.g. web-based] producers and publishers” involved with “both manual [human-driven] and automated [machine-driven] activities attempting to find and process scholarly digital [data and non-data] research objects – from data [in the conventional sense] to analytical pipelines … An example of [scholarly/scientific digital] non-data research objects are analytical workflows, to be considered a critical component of the scholarly/scientific ecosystem, whose formal publication is necessary to achieve [transparency, scientific reproducibility and reusability]”. All scholarly/scientific digital data and non-data research objects, ranging from data in the conventional sense, either numerical or categorical variables as outcome (product), to analytical pipelines (processes), synonym for data and information processing systems, “benefit from application of these principles, since all components of the research process must be available to ensure transparency, [scientific] reproducibility and reusability”. Hence, “the FAIR principles apply not only to ‘data’ in the conventional sense, but also to the algorithms, tools, and workflows that led to that data … While there have been a number of recent, often domain-focused publications advocating for specific improvements in practices relating to data management and archival, FAIR differs in that it describes concise, domain-independent, high-level principles that can be applied to a wide range of scholarly [digital data and non-data] outputs. The elements of the FAIR Principles are related, but independent and separable. The Principles define characteristics that contemporary data resources, tools, vocabularies and infrastructures should exhibit to assist discovery and reuse by third-parties … Throughout the Principles, the phrase ‘(meta)data’ is used in cases where the Principle should be applied to both metadata and data.”

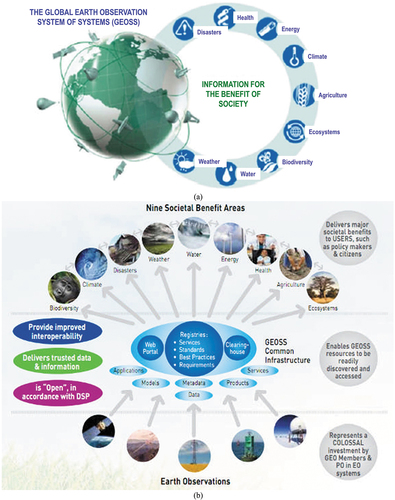

Figure 1. Group on Earth Observations (GEO)’s implementation plan for years 2005–2015 of the Global Earth Observation System of (component) Systems (GEOSS) (EC – European Commission and GEO – Group on Earth Observations, Citation2014; GEO – Group on Earth Observations, Citation2005, Citation2019; Mavridis, Citation2011), unaccomplished to date and revised by a GEO’s second implementation plan for years 2016–2025 of a new GEOSS, regarded as expert EO data-derived information and knowledge system (GEO – Group on Earth Observations, Citation2015; Nativi et al., Citation2015, Citation2020; Santoro et al., Citation2017). (a) Adapted from (EC – European Commission and GEO – Group on Earth Observations, Citation2014; Mavridis, Citation2011). Graphical representation of the visionary goal of the GEOSS applications. Nine “Societal Benefit Areas” are targeted by GEOSS: disasters, health, energy, climate, water, weather, ecosystems, agriculture and biodiversity. (b) Adapted from (EC – European Commission and GEO – Group on Earth Observations, Citation2014; Mavridis, Citation2011). GEO’s vision of a GEOSS, where interoperability of interconnected component systems is an open problem, to be accomplished in agreement with the Findable Accessible Interoperable and Reusable (FAIR) criteria for scientific data (product and process) management (GO FAIR – International Support and Coordination Office, Citation2021; Wilkinson et al., Citation2016), see . In the terminology of GEOSS, a digital Common Infrastructure is required to allow end-users to search for and access to the interconnected component systems. In practice, the GEOSS Common Infrastructure must rely upon a set of interoperability standards and best practices to interconnect, harmonize and integrate data, applications, models, and products of heterogeneous component systems (Nativi et al., Citation2015, p. 3). In 2014, GEO expressed the utmost recommendation that, for the next 10 years 2016–2025 (GEO – Group on Earth Observations, Citation2015), the second mandate of GEOSS is to evolve from an EO big data sharing infrastructure, intuitively referred to as data-centric approach (Nativi et al., Citation2020), to an expert EO data-derived information and knowledge system (Nativi et al., Citation2015, pp. 7, 22), intuitively referred to as knowledge-driven approach (Nativi et al., Citation2020). The formalization and use of the notion of Essential (Community) Variables and related instances (see ) contributes to the process of making GEOSS an expert information and knowledge system, capable of EO sensory data interpretation/transformation into Essential (Community) Variables in support of decision making (Nativi et al., Citation2015, p. 18, Citation2020). By focusing on the delivery to end-users of EO sensory data-derived Essential (Community) Variables as information sets relevant for decision-making, in place of delivering low-level EO big sensory data, the Big Data requirements of the GEOSS digital Common Infrastructure are expected to decrease (Nativi et al., Citation2015, p. 21, Citation2020).

Table 2. Essential climate variables (ECVs) defined by the World Climate Organization (WCO) (Bojinski et al., Citation2014), pursued by the European Space Agency (ESA) Climate Change Initiative’s parallel projects (ESA – European Space Agency, Citation2017b, Citation2020a, Citation2020b) and by the Group on Earth Observations (GEO)’s second implementation plan for years 2016–2025 of the Global Earth Observation System of (component) Systems (GEOSS) (GEO – Group on Earth Observations, Citation2015; Nativi et al., Citation2015, Citation2020; Santoro et al., Citation2017), see . In the terrestrial layer, ECVs are: River discharge, water use, groundwater, lakes, snow cover, glaciers and ice caps, ice sheets, permafrost, albedo, land cover (including vegetation types), fraction of absorbed photosynthetically active radiation, leaf area index (LAI), above-ground biomass, soil carbon, fire disturbance, soil moisture. All these ECVs can be estimated from Earth observation (EO) imagery by means of either physical or semi-empirical data models, e.g. Clever’s semi-empirical model for pixel-based LAI estimation from multi-spectral (MS) imagery (Van der Meer & De Jong, Citation2011).

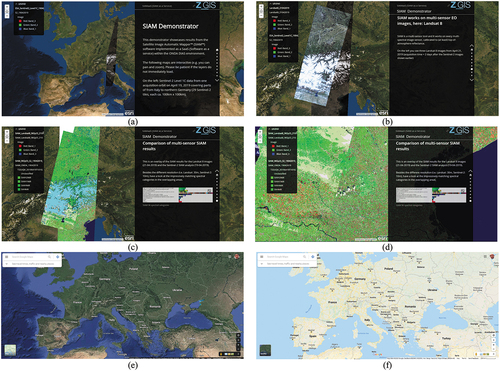

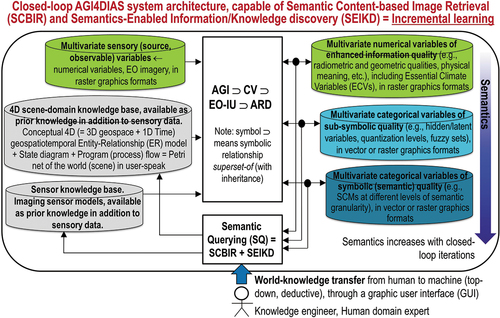

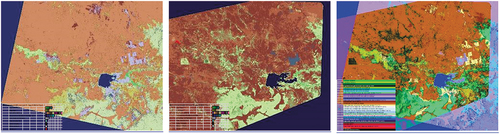

Figure 2. Two examples of semantic/ontological interoperability over heterogeneous sensory data sources (true-facts, observables). The first example is provided by the demonstrator of the Satellite Image Automatic Mapper™ (SIAM™) lightweight computer program (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b; Baraldi, Puzzolo, Blonda, Bruzzone, & Tarantino, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b), installed onto the Serco ONDA Data and Information Access Services (DIAS) Marketplace (Baraldi, Citation2019a). This demonstrator works as proof-of-concept of an Artificial General Intelligence (AGI) (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; EC – European Commission, Citation2019; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jajal, Citation2018; Jordan, Citation2018; Mindfire Foundation, Citation2018; Mitchell, Citation2021; Practical AI, Citation2020; Saba, Citation2020c; Santoro et al., Citation2021; Sweeney, Citation2018a; Thompson, Citation2018; Wolski, Citation2020a, Citation2020b), suitable for Earth observation (AGI4EO) applications, namely, ‘AGI for Data and Information Access Services (DIAS) = AGI4DIAS = AGI-enabled DIAS = Semantics-enabled DIAS 2.0 (DIAS 2nd generation) = AGI + DIAS 1.0 + Semantic content-based image retrieval (SCBIR) + Semantics-enabled information/knowledge discovery (SEIKD)’ = EquationEquation (1)(1)

(1) , envisioned by portions of the existing literature (Augustin, Sudmanns, Tiede, & Baraldi, Citation2018; Augustin, Sudmanns, Tiede, Lang, & Baraldi, Citation2019; Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Baraldi, Tiede, Sudmanns, Belgiu, & Lang, Citation2016; Baraldi, Tiede, Sudmanns, & Lang, Citation2017; Dhurba & King, Citation2005; FFG – Austrian Research Promotion Agency, Citation2015, Citation2016, Citation2018, Citation2020; Planet, Citation2018; Smeulders, Worring, Santini, Gupta, & Jain, Citation2000; Sudmanns, Augustin, van der Meer, Baraldi, and Tiede, Citation2021; Sudmanns, Tiede, Lang, & Baraldi, Citation2018; Tiede, Baraldi, Sudmanns, Belgiu, & Lang, Citation2017). In the present work, an integrated AGI4DIAS infrastructure = ‘AGI + DIAS 1.0 + SCBIR + SEIKD = DIAS 2.0ʹ is proposed as viable alternative to, first, traditional metadata text-based image retrieval systems, such as popular EO (raster-based) data cubes (Open Data Cube, Citation2020; Baumann, Citation2017; CEOS – Committee on Earth Observation Satellites, Citation2020; Giuliani et al., Citation2017, Citation2020; Lewis et al., Citation2017; Strobl et al., Citation2017), including the European Commission (EC) DIAS 1st generation (DIAS 1.0) (EU – European Union, Citation2017, Citation2018), and, second, prototypical content-based image retrieval (CBIR) systems, whose queries are input with text information, summary statistics or by either image, object or multi-object examples (Datta, Joshi, Li, & Wang, Citation2008; Kumar, Berg, Belhumeur, & Nayar, Citation2011; Ma & Manjunath, Citation1997; Shyu et al., Citation2007; Smeulders et al., Citation2000; Smith & Chang, Citation1996; Tyagi, Citation2017). Typically, existing DIAS and prototypical CBIR systems are affected by the so-called data-rich information-poor (DRIP) syndrome (Ball, Citation2021; Bernus & Noran, Citation2017). Figure captions are as follows. (a) Mosaic of twenty-nine 12-band Sentinel-2 images, acquired across Europe on 19 April, 2019, radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values, depicted in true-colors, 10 m resolution, equivalent to a QuickLook™ technology. (b) Mosaic of three 11-band Landsat-8 images acquired across Europe on 21 April, 2019, radiometrically calibrated into TOARF values, depicted in true-colors, 30 m resolution, equivalent to a QuickLook technology. (c) Overlap between two SIAM’s output maps in semi-symbolic color names (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b), automatically generated in near real-time from, respectively, the input 10 m resolution Sentinel-2 image mosaic, shown in Figure 2(a), and the 30 m resolution Landsat-8 image mosaic shown in Figure 2(b). The same map legend applies to the two input data sets. Semantic (symbolic) interoperability is accomplished by SIAM, independent of the input data source. In practice, SIAM instantiates a QuickMap™ technology, where a categorical map, provided with its discrete and finite map legend, is sensory data-derived automatically, without human-machine interaction and in near real-time, either on-line or off-line. (d) Zoom-in of the two SIAM maps, featuring the same semantics, independent of changes in the input sensory data source. In practice, SIAM accomplishes semantic interoperability by featuring robustness to changes in input data and scalability to changes in sensor specifications. (e) Second example of semantic interoperability over heterogeneous sensory data sources. Taken from Google Earth = Google Maps_Satellite View, it is equivalent to a QuickLook technology. It shows multi-source sensory data (quantitative/unequivocal information-as-thing) (Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003), equivalent to subsymbolic numerical variables, to be interpreted by users. (f) Taken from Google Maps_Map View (qualitative/equivocal information-as-data-interpretation) (Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003), equivalent to a symbolic interpretation of Google Maps_Satellite View data. In practice, Google Maps_Map View works as QuickMap technology. To be run automatically and in near real-time, either on-line or off-line, a QuickMap technology accomplishes data compression through discretization/categorization, together with interpretation of a continuous or discrete numerical variable belonging to a (2D) image-plane into a discrete and finite categorical variable provided with semantics, belonging to an ontology (mental model, conceptual model) of the 4D geospace-time real-world (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990).

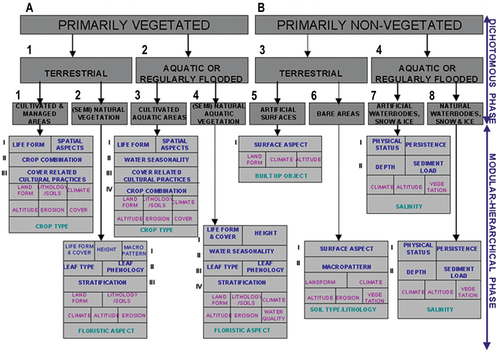

Figure 3. Adapted from (Ahlqvist, Citation2008; Di Gregorio, Citation2016; Di Gregorio & Jansen, Citation2000; Owers et al., Citation2021). Example of a standard land cover (LC) class taxonomy, developed by the geographic information science (GIScience) community (Buyong, Citation2007; Couclelis, Citation2010, Citation2012; Ferreira et al., Citation2014; Fonseca et al., Citation2002; Goodchild et al., Citation2007; Hitzler et al., Citation2012; Kuhn, Citation2005; Longley et al., Citation2005; Maciel et al., Citation2018; Sheth, Citation2015; Sonka et al., Citation1994; Stock et al., Citation2011; Hu, Citation2017). In conceptual terms, a taxonomy (legend) of LC classes is a hierarchical (multi-level, featuring inter-level parent-child relationships) vocabulary of entities (referents, classes of real-world objects) (Ball, Citation2021; Chen, Citation1976). Any LC class taxonomy is part-of a conceptual world model (world ontology, mental model of the world) (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990), consisting of entities (Ball, Citation2021; Chen, Citation1976), inter-entity relationships/predicates (Ball, Citation2021; Chen, Citation1976), facts (Campagnola, Citation2020), events/occurrents and processes/phenomena (Ferreira et al., Citation2014; Fonseca et al., Citation2002; Galton & Mizoguchi, Citation2009; Kuhn, Citation2005; Maciel et al., Citation2018; Tiede et al., Citation2017). Proposed by the Food and Agriculture Organization (FAO) of the United Nations (UN), the well-known hierarchical Land Cover Classification System (LCCS) taxonomy (Di Gregorio & Jansen, Citation2000) is two-stage and fully-nested. It consists of a first-stage fully-nested 3-level 8-class FAO LCCS Dichotomous Phase (DP) taxonomy, which is general-purpose, user- and application-independent. It consists of a sorted set of three dichotomous layers (Di Gregorio & Jansen, Citation2000): (i) Primarily Vegetated versus Primarily Non-Vegetated. In more detail, Primarily Vegetated applies to areas whose vegetative cover is at least 4% for at least two months of the year. Vice versa, Primarily Non-Vegetated areas have a total vegetative cover of less than 4% for more than 10 months of the year. (ii) Terrestrial versus aquatic. (iii) Managed versus natural or semi-natural. These three dichotomous layers deliver as output the following 8-class FAO LCCS-DP taxonomy. (A11) Cultivated and Managed Terrestrial (non-aquatic) Vegetated Areas. (A12) Natural and Semi-Natural Terrestrial Vegetation. (A23) Cultivated Aquatic or Regularly Flooded Vegetated Areas. (A24) Natural and Semi-Natural Aquatic or Regularly Flooded Vegetation. (B35) Artificial Surfaces and Associated Areas. (B36) Bare Areas. (B47) Artificial Waterbodies, Snow and Ice. (B48) Natural Waterbodies, Snow and Ice. The general-purpose user- and application-independent 3-level 8-class FAO LCCS-DP taxonomy is preliminary to a second-stage FAO LCCS Modular Hierarchical Phase (MHP) taxonomy, consisting of a battery of user- and application-specific one-class classifiers, equivalent to one-class grammars (syntactic classifiers) (Di Gregorio & Jansen, Citation2000). In recent years, the two-phase FAO LCCS taxonomy has become increasingly popular (Ahlqvist, Citation2008; Durbha et al., Citation2008; Herold et al., Citation2009, Citation2006; Jansen et al., Citation2008; Owers et al., Citation2021). For example, it is adopted by the ongoing European Space Agency (ESA) Climate Change Initiative’s parallel projects (ESA – European Space Agency, Citation2017b, Citation2020a, Citation2020b). One reason for its popularity is that the FAO LCCS hierarchy is “fully nested” while alternative LC class hierarchies, such as the Coordination of Information on the Environment (CORINE) Land Cover (CLC) taxonomy (Bossard et al., Citation2000), the U.S. Geological Survey (USGS) Land Cover Land Use (LCLU) taxonomy by J. Anderson (Lillesand & Kiefer, Citation1979), the International Global Biosphere Programme (IGBP) DISCover Data Set Land Cover Classification System (EC – European Commission, Citation1996) and the EO Image Librarian LC class legend (Dumitru et al., Citation2015), start from a first-level taxonomy which is already multi-class.

Figure 4. Adapted from (Tyagi, Citation2017). Typical architecture of a content-based image retrieval (CBIR) system (Datta et al., Citation2008; Kumar et al., Citation2011; Ma & Manjunath, Citation1997; Shyu et al., Citation2007; Smeulders et al., Citation2000; Smith & Chang, Citation1996; Tyagi, Citation2017), whose prototypical instantiations have been presented in the remote sensing (RS) and computer vision (CV) literature as an alternative to traditional metadata text-based image retrieval systems in operational mode (Airbus, Citation2018; Planet, Citation2017). CBIR system prototypes support no semantic CBIR (SCBIR) operation because they lack ‘Computer Vision (CV) ⊃ EO image understanding (EO-IU)’ capabilities, i.e. they lack “intelligence” required to transform EO big sensory data into systematic, operational, timely and comprehensive information-as-data-interpretation (Capurro & Hjørland, Citation2003), known that relationship ‘EO-IU ⊂ CV ⊂ Artificial General Intelligence (AGI) ⊂ Cognitive science’ = EquationEquation (2)(2)

(2) holds (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; EC – European Commission, Citation2019; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jajal, Citation2018; Jordan, Citation2018; Mindfire Foundation, Citation2018; Mitchell, Citation2021; Practical AI, Citation2020; Saba, Citation2020c; Santoro et al., Citation2021; Sweeney, Citation2018a; Thompson, Citation2018; Wolski, Citation2020a, Citation2020b).

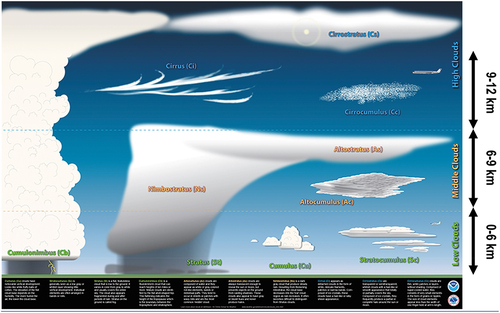

Figure 5. Cloud classification according to the U.S. National Weather Service (U.S. National Weather Service, Citation2019). “Ice clouds, also called cirrus clouds, which include cirrus, cirrostratus, and cirrocumulus, are made up of ice crystals” (Borduas & Donahue, Citation2018). Located in the upper troposphere (6–10 km) or lower stratosphere (10–16 km) (Borduas & Donahue, Citation2018; NIWA – National Institute of Water and Atmospheric Research, Citation2018; U.S. National Weather Service, Citation2019; UCAR – University Corporation for Atmospheric Research, Center for Science Education – SCIED, Citation2018). Worth mentioning, the troposphere is typically located between 0 and 10 km of height (Borduas & Donahue, Citation2018; NIWA – National Institute of Water and Atmospheric Research, Citation2018; U.S. National Weather Service, Citation2019; UCAR – University Corporation for Atmospheric Research, Center for Science Education – SCIED, Citation2018), known that the height of the top of the troposphere, called the tropopause (NIWA – National Institute of Water and Atmospheric Research, Citation2018), varies with latitude (it is lowest over the Poles and highest at the equator) and by season (it is lower in winter and higher in summer) (UCAR – University Corporation for Atmospheric Research, Center for Science Education – SCIED, Citation2018). In more detail, the tropopause can be as high as 20 km near the equator and as low as 7 km over the Poles in winter (NIWA – National Institute of Water and Atmospheric Research, Citation2018; UCAR – University Corporation for Atmospheric Research, Center for Science Education – SCIED, Citation2018). Given that, above the troposphere, the stratosphere is typically located between 10 and 30 km of height (Baraldi, Citation2017; Borduas & Donahue, Citation2018; NIWA – National Institute of Water and Atmospheric Research, Citation2018; U.S. National Weather Service, Citation2019; UCAR – University Corporation for Atmospheric Research, Center for Science Education – SCIED, Citation2018).

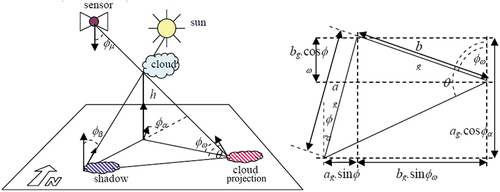

Figure 6. Adapted from (Baraldi & Tiede, Citation2018a, Citation2018b). Physical model, known a priori (available in addition to data), of the Sun-cloud-satellite geometry for arbitrary viewing and illumination conditions. Left: Actual 3D representation of the Sun/ cloud/ cloud–shadow geometry. Cloud height, h, is a typical unknown variable. Right: Apparent Sun/ cloud/ cloud–shadow geometry in a 2D soil projection, with ag = h ⋅ tanφβ, bg = h ⋅ tanφμ.

Figure 7. Adapted from (Swain & Davis, Citation1978). In the y-axis, reflectance values in physical range [0.0, 1.0] are represented as % values in range [0.0, 100.0], either ‘Top-of-atmosphere reflectance (TOARF) ⊇ Surface reflectance (SURF) ⊇ Surface albedo’ = EquationEquation (8)(8)

(8) (refer to the farther Subsection 3.3.2), where surface albedo is included in the list of terrestrial Essential Climate Variables (ECVs) defined by the World Climate Organization (WCO), see . This graph shows the typical spectral response characteristics of “green (photosynthetically active) vegetation” in reflectance values (Liang, Citation2004; Swain & Davis, Citation1978), where chlorophyll absorption phenomena occur jointly with water content absorption phenomena, according to a convergence-of-evidence approach (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990). “In general, the total reflectance of a given object across the entire solar spectrum (also termed albedo) is strongly related to the physical condition of the relevant targets (shadowing effects, slope and aspect, particle size distribution, refraction index, etc.), whereas the spectral peaks or, vice versa, absorption valleys are more closely related to the chemical condition of the sensed target (material surface-specific absorption)” (Van der Meer & De Jong, Citation2011, p. 251). In the remote sensing (RS) literature and in the RS meta-science common practice, chlorophyll absorption phenomena are traditionally intercepted by so-called vegetation spectral indexes, such as the well-known two-band Normalized Difference Vegetation Index, NDVI (DLR – Deutsches Zentrum für Luft-und Raumfahrt e.V. and VEGA Technologies, Citation2011; ESA – European Space Agency, Citation2015; Jordan, Citation1969; Liang, Citation2004; Neduni & Sivakumar, Citation2019; Rouse, Haas, Scheel, & Deering, Citation1974; Sykas, Citation2020; Tucker, Citation1979), where NDVI ∈ [−1.0, 1.0] = f1(Red, NIR) = f1(Red0.65÷0.68, NIR0.78÷0.90) = (NIR0.78÷0.90 – Red0.65÷0.68)/(NIR0.78÷0.90 + Red0.65÷0.68), which is monotonically increasing with the dimensionless Vegetation Ratio Index (VRI), where VRI ∈ (0, +∞) = NIR0.78÷0.90/Red0.65÷0.68 = (1. + NDVI)/(1. – NDVI) (Liang, Citation2004). Water content absorption phenomena in phenology are traditionally intercepted by so-called moisture or, vice versa, drought spectral indexes, such as the two-band Normalized Difference Moisture Index, NDMI (Liang, Citation2004; Neduni & Sivakumar, Citation2019; Sykas, Citation2020), equivalent to the Normalized Difference Water Index defined by Gao, NDWIGao (Gao, Citation1996), where NDMI ∈ [−1.0, 1.0] = NDWIGao = f2(NIR, MIR1) = f2(NIR0.78÷0.90, MIR1.57÷1.65) = (NIR0.78÷0.90 – MIR1.57÷1.65)/(NIR0.78÷0.90 + MIR1.57÷1.65), which is inversely related to the so-called Normalized Difference Bare Soil Index (NDBSI), NDBSI ∈ [−1.0, 1.0] = f3(NIR, MIR1) = (MIR1.55÷1.75 – NIR0.78÷0.90)/(MIR1.55÷1.75 + NIR0.78÷0.90) adopted in (Kang Lee, Dev Acharya, & Ha Lee, Citation2018; Roy, Miyatake, & Rikimaru, Citation2009), see . Popular two-band spectral indexes are conceptually equivalent to the angular coefficient/ slope/ 1st-order derivative of a tangent to the spectral signature in one point (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b; Liang, Citation2004). Three-band spectral indexes are conceptually equivalent to a 2nd-order derivative as local measure of function concavity (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b; Liang, Citation2004). Noteworthy, no combination of derivatives of any order can work as set of basis functions, capable of universal function approximation (Bishop, Citation1995; Cherkassky & Mulier, Citation1998). It means that if, in the fundamental feature engineering phase (Koehrsen, Citation2018) preliminary to feature (pattern) recognition (analysis, classification, understanding), any two-band or three-band scalar spectral index extraction occurs, then it always causes an irreversible loss in (lossy compression of) the multivariate shape and multivariate intensity information components of a spectral signature (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b).

![Figure 7. Adapted from (Swain & Davis, Citation1978). In the y-axis, reflectance values in physical range [0.0, 1.0] are represented as % values in range [0.0, 100.0], either ‘Top-of-atmosphere reflectance (TOARF) ⊇ Surface reflectance (SURF) ⊇ Surface albedo’ = EquationEquation (8)(8) \lsquoDNs≥ 0atEOLevel0⊇TOARF∈0.0, 1.0atEOLevel1⊇SURF∈0.0, 1.0atEOLevel2/currentARD⊇Surfacealbedo∈0.0, 1.0at,say,EOLevel3/nextgenerationARD\rsquo(8)(8) (refer to the farther Subsection 3.3.2), where surface albedo is included in the list of terrestrial Essential Climate Variables (ECVs) defined by the World Climate Organization (WCO), see Table 2. This graph shows the typical spectral response characteristics of “green (photosynthetically active) vegetation” in reflectance values (Liang, Citation2004; Swain & Davis, Citation1978), where chlorophyll absorption phenomena occur jointly with water content absorption phenomena, according to a convergence-of-evidence approach (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990). “In general, the total reflectance of a given object across the entire solar spectrum (also termed albedo) is strongly related to the physical condition of the relevant targets (shadowing effects, slope and aspect, particle size distribution, refraction index, etc.), whereas the spectral peaks or, vice versa, absorption valleys are more closely related to the chemical condition of the sensed target (material surface-specific absorption)” (Van der Meer & De Jong, Citation2011, p. 251). In the remote sensing (RS) literature and in the RS meta-science common practice, chlorophyll absorption phenomena are traditionally intercepted by so-called vegetation spectral indexes, such as the well-known two-band Normalized Difference Vegetation Index, NDVI (DLR – Deutsches Zentrum für Luft-und Raumfahrt e.V. and VEGA Technologies, Citation2011; ESA – European Space Agency, Citation2015; Jordan, Citation1969; Liang, Citation2004; Neduni & Sivakumar, Citation2019; Rouse, Haas, Scheel, & Deering, Citation1974; Sykas, Citation2020; Tucker, Citation1979), where NDVI ∈ [−1.0, 1.0] = f1(Red, NIR) = f1(Red0.65÷0.68, NIR0.78÷0.90) = (NIR0.78÷0.90 – Red0.65÷0.68)/(NIR0.78÷0.90 + Red0.65÷0.68), which is monotonically increasing with the dimensionless Vegetation Ratio Index (VRI), where VRI ∈ (0, +∞) = NIR0.78÷0.90/Red0.65÷0.68 = (1. + NDVI)/(1. – NDVI) (Liang, Citation2004). Water content absorption phenomena in phenology are traditionally intercepted by so-called moisture or, vice versa, drought spectral indexes, such as the two-band Normalized Difference Moisture Index, NDMI (Liang, Citation2004; Neduni & Sivakumar, Citation2019; Sykas, Citation2020), equivalent to the Normalized Difference Water Index defined by Gao, NDWIGao (Gao, Citation1996), where NDMI ∈ [−1.0, 1.0] = NDWIGao = f2(NIR, MIR1) = f2(NIR0.78÷0.90, MIR1.57÷1.65) = (NIR0.78÷0.90 – MIR1.57÷1.65)/(NIR0.78÷0.90 + MIR1.57÷1.65), which is inversely related to the so-called Normalized Difference Bare Soil Index (NDBSI), NDBSI ∈ [−1.0, 1.0] = f3(NIR, MIR1) = (MIR1.55÷1.75 – NIR0.78÷0.90)/(MIR1.55÷1.75 + NIR0.78÷0.90) adopted in (Kang Lee, Dev Acharya, & Ha Lee, Citation2018; Roy, Miyatake, & Rikimaru, Citation2009), see Table 3. Popular two-band spectral indexes are conceptually equivalent to the angular coefficient/ slope/ 1st-order derivative of a tangent to the spectral signature in one point (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b; Liang, Citation2004). Three-band spectral indexes are conceptually equivalent to a 2nd-order derivative as local measure of function concavity (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b; Liang, Citation2004). Noteworthy, no combination of derivatives of any order can work as set of basis functions, capable of universal function approximation (Bishop, Citation1995; Cherkassky & Mulier, Citation1998). It means that if, in the fundamental feature engineering phase (Koehrsen, Citation2018) preliminary to feature (pattern) recognition (analysis, classification, understanding), any two-band or three-band scalar spectral index extraction occurs, then it always causes an irreversible loss in (lossy compression of) the multivariate shape and multivariate intensity information components of a spectral signature (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b).](/cms/asset/9826b4c3-5c67-4754-9f0a-c1696bf79555/tbed_a_2017549_f0007_b.gif)

Figure 8. Adapted from (Malenovsky et al., Citation2007). Spectral resolution (“full-width-half-maximum”, FWHM), central wavelength λ, extent (λmax – λmin) and sampling interval of imaging spectroscopy data.

Figure 9. Adapted from (Van der Meer and De Jong, Citation2011). Inter-band Pearson’s linear cross-correlation (PLCC) coefficient, in range [−1.0, 1.0] (Kreyszig, Citation1979; Sheskin, Citation2000; Tabachnick & Fidell, Citation2014), for the main factors resulting from a principal component analysis and factor rotation for an agricultural data set acquired by the 220-band Jet Propulsion Laboratory (JPL) 220-band Airborne Visible Near Infrared Imaging Spectrometer (AVIRIS). Flevoland test site, July 5th 1991. It shows that, a global image-wide inter-band PLCC estimate, where typical local image non-stationarities are lost (Egorov, Roy, Zhang, Hansen, and Kommareddy, Citation2018) because averaged (wiped out), according to the central limit theorem (Kreyszig, Citation1979; Sheskin, Citation2000; Tabachnick & Fidell, Citation2014), scores (fuzzy) “high” (close to 1) within each of the three portions of the electromagnetic spectrum, namely, visible (VIS) ∈ [0.35 μm, 0.72 μm], Near-Infrared (NIR) ∈ [0.72 μm, 1.30 μm] and Middle-Infrared (MIR) ∈ [1.30 μm, 3.00 μm), see .

![Figure 9. Adapted from (Van der Meer and De Jong, Citation2011). Inter-band Pearson’s linear cross-correlation (PLCC) coefficient, in range [−1.0, 1.0] (Kreyszig, Citation1979; Sheskin, Citation2000; Tabachnick & Fidell, Citation2014), for the main factors resulting from a principal component analysis and factor rotation for an agricultural data set acquired by the 220-band Jet Propulsion Laboratory (JPL) 220-band Airborne Visible Near Infrared Imaging Spectrometer (AVIRIS). Flevoland test site, July 5th 1991. It shows that, a global image-wide inter-band PLCC estimate, where typical local image non-stationarities are lost (Egorov, Roy, Zhang, Hansen, and Kommareddy, Citation2018) because averaged (wiped out), according to the central limit theorem (Kreyszig, Citation1979; Sheskin, Citation2000; Tabachnick & Fidell, Citation2014), scores (fuzzy) “high” (close to 1) within each of the three portions of the electromagnetic spectrum, namely, visible (VIS) ∈ [0.35 μm, 0.72 μm], Near-Infrared (NIR) ∈ [0.72 μm, 1.30 μm] and Middle-Infrared (MIR) ∈ [1.30 μm, 3.00 μm), see Figure 7.](/cms/asset/fe48e3a3-184a-4435-bcd0-26afcfe6c83d/tbed_a_2017549_f0009_c.jpg)

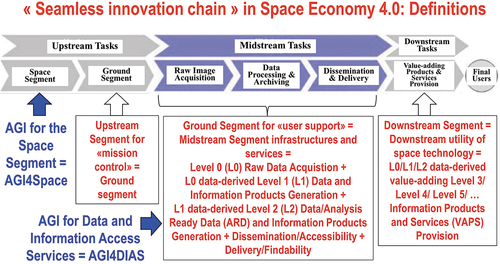

Figure 10. In a new notion of Space Economy 4.0, envisioned by Mazzucato and Robinson in 2017 in their original work for the European Space Agency (ESA) (Mazzucato & Robinson, Citation2017), first-stage “horizontal” (enabling) capacity building, coping with background conditions necessary to specialization, is preliminary to second-stage “vertical” (deep and narrow) specialization policies, suitable for coping with a potentially huge worldwide market of institutional and private end-users of space technology. Definitions adopted herein, in agreement with the new notion of Space Economy 4.0 proposed by Mazzucato and Robinson (Mazzucato & Robinson, Citation2017), are: Space segment, Upstream segment for «mission control» = Ground segment for «mission control», Ground segment for «user support» = Midstream segment infrastructures and services, Downstream segment = Downstream utility of space technology (Mazzucato & Robinson, Citation2017, pp. 6, 57), capable of transforming quantitative (unequivocal) Earth observation (EO) big sensory data into sensory data-derived value-adding information products and services (VAPS), suitable for use by a potentially huge worldwide market of institutional and private end-users of space technology. Artificial General Intelligence (AGI) for EO (AGI4EO) technologies should be applied as early as possible in the “seamless innovation chain” required by a new notion of Space Economy 4.0, starting from AGI for space segment (AGI4Space) applications, which include the notion of future intelligent EO satellites, provided with AGI onboard (EOportal, Citation2020; ESA – European Space Agency, Citation2019; Esposito et al., Citation2019a, Citation2019b; GISCafe News, Citation2018; Zhou, Citation2001), and AGI for Data and Information Access Services (AGI4DIAS) at the midstream segment (see ), such as systematic generation of multi-sensor Analysis Ready Data (ARD) and information products, eligible for direct use in analysis at the downstream segment, without requiring laborious data pre-processing (Baraldi & Tiede, Citation2018a, Citation2018b; CEOS – Committee on Earth Observation Satellites, Citation2018; Dwyer et al., Citation2018; Helder et al., Citation2018; NASA – National Aeronautics and Space Administration, Citation2019; Qiu et al., Citation2019; USGS – U.S. Geological Survey, Citation2018a, Citation2018c).

Figure 11. (a) Adapted from (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b), this original graph postulates that, within the multi-disciplinary cognitive science domain (Ball, Citation2021; Capra & Luisi, Citation2014; Hassabis et al., Citation2017; Hoffman, Citation2008, Citation2014; Langley, Citation2012; Miller, Citation2003; Mindfire Foundation, Citation2018; Mitchell, Citation2019; Parisi, Citation1991; Santoro et al., Citation2021; Serra & Zanarini, Citation1990; Varela et al., Citation1991; Wikipedia, Citation2019), encompassing disciplines like philosophy (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020), semiotics (Ball, Citation2021; Peirce, Citation1994; Perez, Citation2020, Citation2021; Salad, Citation2019; Santoro et al., Citation2021; Wikipedia, Citation2021e), linguistics (Ball, Citation2021; Berlin & Kay, Citation1969; Firth, Citation1962; Rescorla, Citation2019; Saba, Citation2020a, Citation2020c), anthropology (Harari, Citation2011, Citation2017; Wikipedia, Citation2019), neuroscience (Barrett, Citation2017; Buonomano, Citation2018; Cepelewicz, Citation2021; Hathaway, Citation2021; Hawkins, Citation2021; Hawkins, Ahmad, and Cui, Citation2017; Kaufman, Churchland, Ryu, and Shenoy, Citation2014; Kosslyn, Citation1994; Libby & Buschman, Citation2021; Mason & Kandel, Citation1991; Salinas, Citation2021b; Slotnick et al., Citation2005; Zador, Citation2019), focusing on the study of the brain machinery in the mind-brain problem (Hassabis et al., Citation2017; Hoffman, Citation2008; Serra & Zanarini, Citation1990; Westphal, Citation2016), computational neuroscience (Beniaguev et al., Citation2021; DiCarlo, Citation2017; Gidon et al., Citation2020; Heitger et al., Citation1992; Pessoa, Citation1996; Rodrigues & Du Buf, Citation2009), psychophysics (Benavente et al., Citation2008; Bowers & Davis, Citation2012; Griffin, Citation2006; Lähteenlahti, Citation2021; Mermillod et al., Citation2013; Parraga et al., Citation2009; Vecera & Farah, Citation1997), psychology (APS – Association for Psychological Science, Citation2008; Hehe, Citation2021) computer science, formal logic (Laurini & Thompson, Citation1992; Sowa, Citation2000), mathematics, physics, statistics and (the meta-science of) engineering (Langley, Citation2012; Santoro et al., Citation2021; Wikipedia, Citation2019), semantic relationship ‘Human vision → Computer Vision (CV) ⊃ Earth observation (EO) image understanding (EO-IU)’ = EquationEquation (4)(4)

(4) holds true, where symbol ‘→’ denotes semantic relationship part-of (without inheritance) pointing from the supplier to the client, not to be confused with relationship subset-of, ‘⊃’, meaning specialization with inheritance from the superset (at left) to the subset, in agreement with symbols adopted by the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software (Fowler, Citation2003). The working hypothesis ‘Human vision → CV ⊃ EO-IU’ = EquationEquation (4)

(4)

(4) means that human vision is expected to work as lower bound of CV, i.e. a CV system is required to include as part-of a computational model of human vision (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Iqbal & Aggarwal, Citation2001), consistent with human visual perception, in agreement with a reverse engineering approach to CV (Baraldi, Citation2017; Bharath & Petrou, Citation2008; DiCarlo, Citation2017). In practice, to become better conditioned for numerical solution (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Bishop, Citation1995; Cherkassky & Mulier, Citation1998; Dubey, Agrawal, Pathak, Griffiths, & Efros, Citation2018), an inherently ill-posed CV system is required to comply with human visual perception phenomena in the multi-disciplinary domain of cognitive science. (b) In this original graph, the inherently vague/equivocal notion of Artificial Intelligence is disambiguated into the two concepts of Artificial General Intelligence (AGI) and Artificial Narrow Intelligence (ANI), which are better constrained to be better understood (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; EC – European Commission, Citation2019; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jajal, Citation2018; Mindfire Foundation, Citation2018; Practical AI, Citation2020; Santoro et al., Citation2021; Sweeney, Citation2018a; Wolski, Citation2020a, Citation2020b). This graph postulates that semantic relationship ‘EO-IU ⊂ CV ⊂ Artificial General Intelligence (AGI) ← Artificial Narrow Intelligence (ANI) ← (Inductive/ bottom-up/ statistical model-based) Machine Learning-from-data (ML) ⊃ (Inductive/ bottom-up/ statistical model-based) Deep Learning-from-data (DL)’ = ‘EO-IU ⊂ CV ⊂ AGI ← ANI ← ML ⊃ DL’ = EquationEquation (5)

(5)

(5) holds, where ANI is formulated as ‘ANI = [DL ⊂ ML logical-OR Traditional deductive Artificial Intelligence (static expert systems, non-adaptive to data, often referred to as Good Old-Fashioned Artificial Intelligence, GOFAI (Dreyfus, Citation1965, Citation1991, Citation1992; Santoro et al., Citation2021)]’ = EquationEquation (6)

(6)

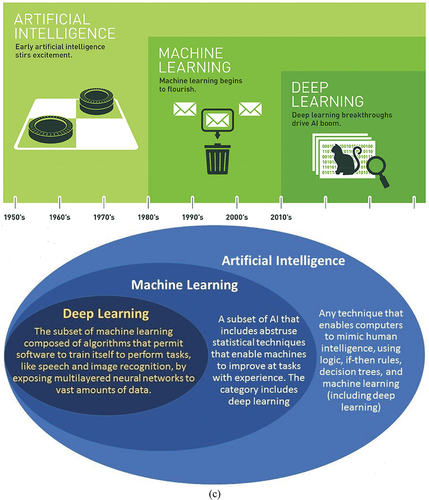

(6) , in agreement with the entity-relationship model shown in (a). (c) In recent years, an increasingly popular thesis is that semantic relationship ‘A(G/N)I ⊃ ML ⊃ DL’ = EquationEquation (7)

(7)

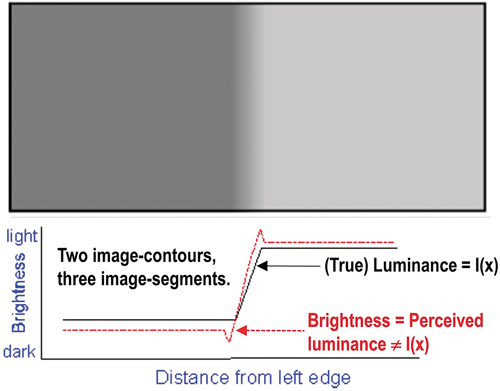

(7) holds (Claire, Citation2019; Copeland, Citation2016). For example, in (Copeland, Citation2016), it is reported that: “since an early flush of optimism in the 1950s, smaller subsets of Artificial Intelligence – first machine learning, then deep learning, a subset of machine learning – have created even larger disruptions.” It is important to stress that the increasingly popular postulate (axiom) ‘A(G/N)I ⊃ ML ⊃ DL’ = EquationEquation (7)

(7)

(7) (Claire, Citation2019; Copeland, Citation2016), see Figure 11(c), is inconsistent with (alternative to) semantic relationship ‘AGI ← ANI ← ML ⊃ DL’ = EquationEquation (5)

(5)

(5) , depicted in Figure 11(a) and Figure 11(b). The latter is adopted as working hypothesis by the present paper.

![Figure 11. (a) Adapted from (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b), this original graph postulates that, within the multi-disciplinary cognitive science domain (Ball, Citation2021; Capra & Luisi, Citation2014; Hassabis et al., Citation2017; Hoffman, Citation2008, Citation2014; Langley, Citation2012; Miller, Citation2003; Mindfire Foundation, Citation2018; Mitchell, Citation2019; Parisi, Citation1991; Santoro et al., Citation2021; Serra & Zanarini, Citation1990; Varela et al., Citation1991; Wikipedia, Citation2019), encompassing disciplines like philosophy (Capurro & Hjørland, Citation2003; Dreyfus, Citation1965, Citation1991, Citation1992; Fjelland, Citation2020), semiotics (Ball, Citation2021; Peirce, Citation1994; Perez, Citation2020, Citation2021; Salad, Citation2019; Santoro et al., Citation2021; Wikipedia, Citation2021e), linguistics (Ball, Citation2021; Berlin & Kay, Citation1969; Firth, Citation1962; Rescorla, Citation2019; Saba, Citation2020a, Citation2020c), anthropology (Harari, Citation2011, Citation2017; Wikipedia, Citation2019), neuroscience (Barrett, Citation2017; Buonomano, Citation2018; Cepelewicz, Citation2021; Hathaway, Citation2021; Hawkins, Citation2021; Hawkins, Ahmad, and Cui, Citation2017; Kaufman, Churchland, Ryu, and Shenoy, Citation2014; Kosslyn, Citation1994; Libby & Buschman, Citation2021; Mason & Kandel, Citation1991; Salinas, Citation2021b; Slotnick et al., Citation2005; Zador, Citation2019), focusing on the study of the brain machinery in the mind-brain problem (Hassabis et al., Citation2017; Hoffman, Citation2008; Serra & Zanarini, Citation1990; Westphal, Citation2016), computational neuroscience (Beniaguev et al., Citation2021; DiCarlo, Citation2017; Gidon et al., Citation2020; Heitger et al., Citation1992; Pessoa, Citation1996; Rodrigues & Du Buf, Citation2009), psychophysics (Benavente et al., Citation2008; Bowers & Davis, Citation2012; Griffin, Citation2006; Lähteenlahti, Citation2021; Mermillod et al., Citation2013; Parraga et al., Citation2009; Vecera & Farah, Citation1997), psychology (APS – Association for Psychological Science, Citation2008; Hehe, Citation2021) computer science, formal logic (Laurini & Thompson, Citation1992; Sowa, Citation2000), mathematics, physics, statistics and (the meta-science of) engineering (Langley, Citation2012; Santoro et al., Citation2021; Wikipedia, Citation2019), semantic relationship ‘Human vision → Computer Vision (CV) ⊃ Earth observation (EO) image understanding (EO-IU)’ = EquationEquation (4)(4) \lsquo(Inductive/ bottom-up/ statistical model-based) DL-from-data⊂(Inductive/ bottom-up / statistical model-based) ML-from-data→AGI⊃CV←Human vision\rsquo(4) holds true, where symbol ‘→’ denotes semantic relationship part-of (without inheritance) pointing from the supplier to the client, not to be confused with relationship subset-of, ‘⊃’, meaning specialization with inheritance from the superset (at left) to the subset, in agreement with symbols adopted by the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software (Fowler, Citation2003). The working hypothesis ‘Human vision → CV ⊃ EO-IU’ = EquationEquation (4)(4) \lsquo(Inductive/ bottom-up/ statistical model-based) DL-from-data⊂(Inductive/ bottom-up / statistical model-based) ML-from-data→AGI⊃CV←Human vision\rsquo(4) means that human vision is expected to work as lower bound of CV, i.e. a CV system is required to include as part-of a computational model of human vision (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Iqbal & Aggarwal, Citation2001), consistent with human visual perception, in agreement with a reverse engineering approach to CV (Baraldi, Citation2017; Bharath & Petrou, Citation2008; DiCarlo, Citation2017). In practice, to become better conditioned for numerical solution (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Bishop, Citation1995; Cherkassky & Mulier, Citation1998; Dubey, Agrawal, Pathak, Griffiths, & Efros, Citation2018), an inherently ill-posed CV system is required to comply with human visual perception phenomena in the multi-disciplinary domain of cognitive science. (b) In this original graph, the inherently vague/equivocal notion of Artificial Intelligence is disambiguated into the two concepts of Artificial General Intelligence (AGI) and Artificial Narrow Intelligence (ANI), which are better constrained to be better understood (Bills, Citation2020; Chollet, Citation2019; Dreyfus, Citation1965, Citation1991, Citation1992; EC – European Commission, Citation2019; Fjelland, Citation2020; Hassabis et al., Citation2017; Ideami, Citation2021; Jajal, Citation2018; Mindfire Foundation, Citation2018; Practical AI, Citation2020; Santoro et al., Citation2021; Sweeney, Citation2018a; Wolski, Citation2020a, Citation2020b). This graph postulates that semantic relationship ‘EO-IU ⊂ CV ⊂ Artificial General Intelligence (AGI) ← Artificial Narrow Intelligence (ANI) ← (Inductive/ bottom-up/ statistical model-based) Machine Learning-from-data (ML) ⊃ (Inductive/ bottom-up/ statistical model-based) Deep Learning-from-data (DL)’ = ‘EO-IU ⊂ CV ⊂ AGI ← ANI ← ML ⊃ DL’ = EquationEquation (5)(5) \lsquoARD⊂EO-IU⊂CV⊂AGI←ANI←ML⊃DL⊃DeepConvolutionalNeuralNetworkDCNN\rsquo(5) holds, where ANI is formulated as ‘ANI = [DL ⊂ ML logical-OR Traditional deductive Artificial Intelligence (static expert systems, non-adaptive to data, often referred to as Good Old-Fashioned Artificial Intelligence, GOFAI (Dreyfus, Citation1965, Citation1991, Citation1992; Santoro et al., Citation2021)]’ = EquationEquation (6)(6) x0026;ANI=[DCNN⊂DL⊂MLlogical0ORTraditionaldeductiveArtificial Intelligencex0026;(staticexpertsystems,non0adaptivetodata,alsoknownasGoodOld0Fashionedx0026;Artificial Intelligence,GOFAI](6) , in agreement with the entity-relationship model shown in (a). (c) In recent years, an increasingly popular thesis is that semantic relationship ‘A(G/N)I ⊃ ML ⊃ DL’ = EquationEquation (7)(7) \lsquoA(G/N)I⊃ML⊃DL⊃DCNN\rsquo(7) holds (Claire, Citation2019; Copeland, Citation2016). For example, in (Copeland, Citation2016), it is reported that: “since an early flush of optimism in the 1950s, smaller subsets of Artificial Intelligence – first machine learning, then deep learning, a subset of machine learning – have created even larger disruptions.” It is important to stress that the increasingly popular postulate (axiom) ‘A(G/N)I ⊃ ML ⊃ DL’ = EquationEquation (7)(7) \lsquoA(G/N)I⊃ML⊃DL⊃DCNN\rsquo(7) (Claire, Citation2019; Copeland, Citation2016), see Figure 11(c), is inconsistent with (alternative to) semantic relationship ‘AGI ← ANI ← ML ⊃ DL’ = EquationEquation (5)(5) \lsquoARD⊂EO-IU⊂CV⊂AGI←ANI←ML⊃DL⊃DeepConvolutionalNeuralNetworkDCNN\rsquo(5) , depicted in Figure 11(a) and Figure 11(b). The latter is adopted as working hypothesis by the present paper.](/cms/asset/b29472e6-c5ea-4c54-b733-1ccd114b1b34/tbed_a_2017549_f0011a_c.jpg)

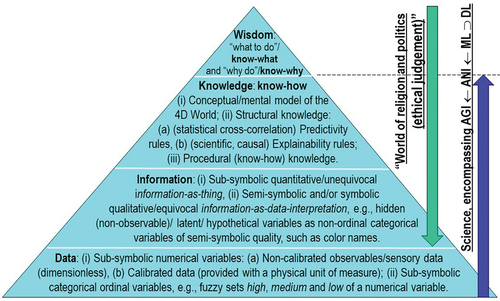

Figure 12. Adapted from (Wikipedia, Citation2020a). The increasingly popular Data-Information-Knowledge-Wisdom (DIKW) pyramid (Rowley, Citation2007; Rowley & Hartley, Citation2008; Wikipedia, Citation2020a; Zeleny, Citation1987, Citation2005; Zins, Citation2007), also known as the DIKW hierarchy, refers loosely to a class of models for representing purported structural and/or functional relationships between data, information, knowledge, and wisdom (Zins, Citation2007). Typically, “information is defined in terms of data, knowledge in terms of information and wisdom in terms of knowledge” (Rowley, Citation2007; Rowley & Hartley, Citation2008).

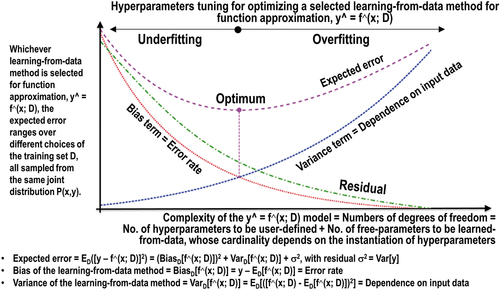

Figure 13. Hyperparameter tuning for model optimization and the bias-variance trade-off (Bishop, Citation1995; Cherkassky & Mulier, Citation1998; Geman et al., Citation1992; Koehrsen, Citation2018; Mahadevan, Citation2019; Sarkar, Citation2018; Wikipedia, Citation2010; Wolpert, Citation1996; Wolpert & Macready, Citation1997). As a general rule, “proper feature engineering will have a much larger impact on model performance than even the most extensive hyperparameter tuning. It is the law of diminishing returns applied to machine learning: feature engineering gets you most of the way there, and hyperparameter tuning generally only provides a small benefit. This demonstrates a fundamental aspect of machine learning: it is always a game of trade-offs. We constantly have to balance accuracy vs interpretability, bias vs variance, accuracy vs run time, and so on” (Koehrsen, Citation2018). On the one hand, “if you shave off your hypothesis with a big Occam’s razor, you will be likely left with a simple model, one which cannot fit all the data. Consequently, you have to supply more data to have better confidence. On the other hand, if you create a complex (and long) hypothesis, you may be able to fit your training data really well (low bias, low error rate), but this actually may not be the right hypothesis as it runs against the maximum a posteriori (MAP) principle of having a hypothesis with small entropy (with Entropy = -log2P(Hypothesis) = length(Hypothesis)) in addition to a small error rate” (Sarkar, Citation2018).

Figure 14. Adapted from (Koehrsen, Citation2018). The so-called “black box problem” closes in on machine learning (ML)-from-data algorithms in general. In artificial neural networks (ANNs), typically based on the McCulloch and Pitts (MCP) neuron model, conceived almost 80 years ago as a simplified neurophysiological version of biological neurons (McCulloch & Pitts, Citation1943), or its improved recent versions (Cimpoi et al., Citation2014; Krizhevsky et al., Citation2012), in compliance with the mind-brain problem (Hassabis et al., Citation2017; Hoffman, Citation2008; Serra & Zanarini, Citation1990; Westphal, Citation2016), the difficulty for the system to provide a suitable explanation for how it arrived at an answer is referred to as “the black box problem”, which affects how an ANN can hit the point of reproducibility or at least traceability/interpretability (Koehrsen, Citation2018; Lukianoff, Citation2019). This chart shows “a (highly unscientific) version of the accuracy vs interpretability trade-off, to highlight a fundamental aspect of ML: it is always a game of trade-offs. We constantly have to balance accuracy vs interpretability, bias vs variance, accuracy vs run time, and so on” (Koehrsen, Citation2018), also refer to .

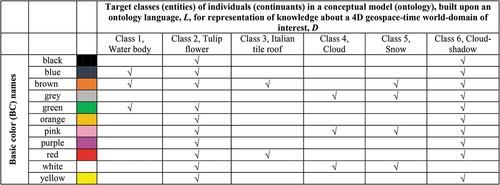

Figure 15. Example of latent/hidden variable as a discrete and finite vocabulary of basic color (BC) names (Baraldi & Tiede, Citation2018a, Citation2018b; berlin & Kay, Citation1969; Griffin, Citation2006). This graphical model of color naming is adapted from (Shotton, Winn, Rother, & Criminisi, Citation2009; Wikipedia, Citation2015). Let us consider z as a (subsymbolic) numerical variable, such as multi-spectral (MS) color values of a population of spatial units belonging to a (2D) image-plane, where spatial units can be either (0D) pixel, (1D) line or (2D) polygon (OGC – Open Geospatial Consortium Inc, Citation2015), with vector data z ∈ ℜMS, where ℜMS represents a multi-spectral (MS) data space, while c represents a categorical variable of symbolic classes in the 4D geospace-time scene-domain, pertaining to the physical real-world, with c = 1, …, ObjectClassLegendCardinality. (a) According to Bayesian theory, posterior probability , where color names ks, equivalent to color (hyper)polyhedra (Benavente et al., Citation2008; Griffin, Citation2006; Parraga et al., Citation2009) in a numerical color (hyper)space ℜMS, provide a partition of the domain of change, ℜMS, of numerical variable z (refer to the farther Figure 29). (b) For discriminative inference, the arrows in the graphical model are reversed using Bayes rule. Hence, a vocabulary of color names, physically equivalent to a partition of a numerical color space ℜMS into color name-specific hyperpolyhedra, is conceptually equivalent to a latent/ hidden/ hypothetical variable linking observables (subsymbolic sensory data) in the real world, specifically, color values, to a categorical variable of semantic (symbolic) quality in the mental (conceptual) model of the physical world (world ontology, world model) (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990).

Figure 16. Augmented (better constrained) version of the traditional Data-Information-Knowledge-Wisdom (DIKW) pyramid (Rowley, Citation2007; Rowley and Hartley, Citation2008; Wikipedia, Citation2020a; Zeleny, Citation1987; Zeleny, Citation2005; Zins, Citation2007) (see ), where “information is typically defined in terms of data, knowledge in terms of information, and wisdom in terms of knowledge” (Rowley, Citation2007). Intuitively, Zeleny defines knowledge as “know-how” (procedural knowledge) (Saba, Citation2020b) and wisdom as “what to do, act or carry out”/know-what and “why do”/know-why (Zeleny, Citation1987; Zeleny, Citation2005). The conceptual and factual gap between knowledge as “know-how” (factual statement) (Harari, Citation2017, p. 222) and wisdom as “what to do”/know-what and “why do”/know-why (ethical judgement) (Harari, Citation2017, p. 222) is huge to be filled by any human-like Artificial General Intelligence (AGI), capable of human personal and collective (societal) intelligence (Benavente, Vanrell, and Baldrich, Citation2008; Harari, Citation2017). This is acknowledged by Yuval Noah Harari, who writes: filling the gap from knowledge as “know-how” (factual statement) to wisdom as “what to do”/know-what and “why do”/know-why (ethical judgement) is equivalent to crossing “the border from the land of science into that of religion” (Harari, Citation2017, p. 244). About the relationship between religion and science, “science always needs religious assistance in order to create viable human institutions. Scientists study how the world functions, but there is no scientific method for determining how humans ought to behave. Only religions provide us with the necessary guidance” (Harari, Citation2017, p. 219).

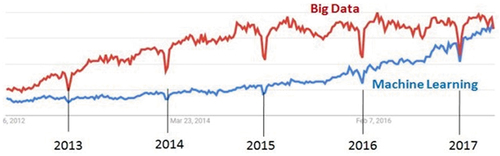

Figure 17. Adapted from (Camara, Citation2017). Google Trends: Big data vs Machine learning (ML) search trends, May 6, 2012 – May 6, 2017.

Figure 18. Lesson to be learned from big data analysis, required to cope with the six Vs of volume, velocity, variety, veracity, volatility and value (Metternicht et al., Citation2020). For example, the popular Google Earth Engine (Gorelick et al., Citation2017) is typically considered a valuable instance of the set of algorithms suitable for working on EO large image databases. Noteworthy, the Google Earth Engine typically adopts a pixel-based-through-time image analysis approach, synonym for spatial-context insensitive 1D image analysis. The computer vision (CV) and remote sensing (RS) communities have been striving to abandon 1D analysis of (2D) imagery since the 1970s (Nagao & Matsuyama, Citation1980). In spite of these efforts, to date, in the RS common practice, traditional suboptimal algorithms for 1D analysis of (2D) imagery implemented in the Google Earth Engine platform are considered the state-of-the-art in EO big data cube analysis (Gorelick et al., Citation2017). Only a small subset of data processing algorithms scores “(fuzzy) high” in a minimally dependent maximally informative (mDMI) set of outcome and process quantitative quality indicators (OP-Q2Is), including accuracy, efficiency, robustness to changes in input data, robustness to changes in input parameters, scalability, timeliness, costs and value (Baraldi, Citation2017; Baraldi & Boschetti, Citation2012a, Citation2012b; Baraldi et al., Citation2014, Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b) (refer to Subsection 3.1), in both small data analysis and big data analysis. Only this small subset of data processing algorithms belongs to the domain of interest of Artificial General Intelligence (AGI) = EquationEquation (5)(5)

(5) , see .

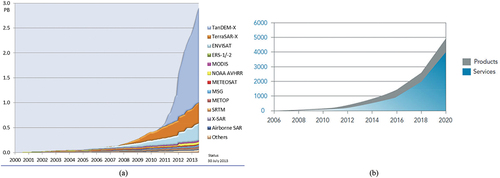

Figure 19. (a) Adapted from (Manilici et al., Citation2013). Deutsches Zentrum für Luft- und Raumfahrt (German Aerospace Center, DLR) archive size in petabytes (PB) of optical and synthetic aperture radar (SAR) data acquired by spaceborne imaging sensors. Status on July 30, 2013. Also refer to (Belward & Skøien, Citation2015; NASA – National Aeronautics and Space Administration, Citation2016; Pinna & Ferrante, Citation2009). (b) Global European annual satellite EO products and services turnover, in millions of euros (eoVox, Citation2008). Unfortunately, this latter estimate, which is monotonically increasing with the number of EO data users, provides no clue at all on the average per user productivity, monotonically increasing with the average number of different EO images actually used (interpreted, rather than downloaded) by a single user per year. Our conjecture is that existing suboptimal EO image understanding (EO-IU) systems, not provided with the Artificial General Intelligence (AGI) capability required to accomplish EO big data interpretation in operational mode (see ), have been outpaced in productivity by the exponential rate of collection of EO sensory data, whose quality and quantity are ever-increasing, see .

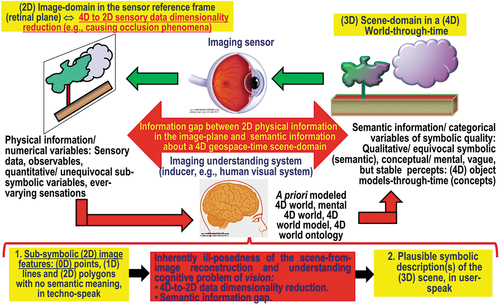

Figure 20. Synonym for scene-from-image reconstruction and understanding (Matsuyama & Hwang, Citation1990), vision is a cognitive problem (Ball, Citation2021; Capra & Luisi, Citation2014; Hassabis et al., Citation2017; Hoffman, Citation2008, Citation2014; Langley, Citation2012; Miller, Citation2003; Mindfire Foundation, Citation2018; Mitchell, Citation2019; Parisi, Citation1991; Santoro et al., Citation2021; Serra & Zanarini, Citation1990; Varela et al., Citation1991; Wikipedia, Citation2019), i.e. it is an inherently qualitative/ equivocal/ ill-posed information-as-data-interpretation task (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Capurro & Hjørland, Citation2003), see . Encompassing both biological vision and computer vision (CV) (see ), vision is very difficult to solve because: (i) non-deterministic polynomial (NP)-hard in computational complexity (Frintrop, Citation2011; Tsotsos, Citation1990), and (ii) inherently ill-posed in the Hadamard sense (Baraldi, Citation2017; Baraldi & Tiede, Citation2018a, Citation2018b; Hadamard, Citation1902; Matsuyama & Hwang, Citation1990) (refer to Section 2). Vision is inherently ill-posed because affected by: (a) a 4D-to-2D data dimensionality reduction, from the 4D geospatio-temporal scene-domain to the (2D, planar) image-domain, which is responsible of occlusion phenomena, and (b) a semantic information gap (Matsuyama & Hwang, Citation1990), from ever-varying subsymbolic sensory data (sensations) in the physical world-domain to stable symbolic percepts in the mental model of the physical world (modeled world, world ontology, real-world model). Since it is inherently ill-posed, vision requires a priori knowledge in addition to sensory data to become better posed for numerical solution (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Bishop, Citation1995; Cherkassky & Mulier, Citation1998; Dubey et al., Citation2018).

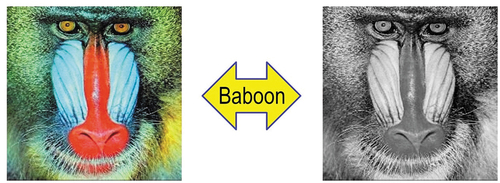

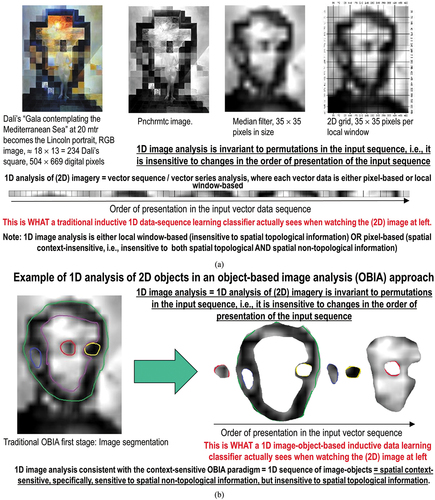

Figure 21. An observation (true-fact) familiar to all human beings wearing sunglasses, such as “spatial information dominates color information in vision” (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b), is typically questioned or disagreed upon by many computer vision (CV) and remote sensing (RS) experts and/or practitioners. At right, a panchromatic Baboon is as easy to be visually identified as such as its chromatic counterpart, at left, by any human photointerpreter. This perceptual fact works as proof-of-concept that the bulk of visual information is spatial rather than colorimetric in both the 4D geospace-time scene-domain and its projected (2D) image-plane. In spite of this unequivocal true-fact, to date, the great majority of the CV and RS communities adopt 1D non-retinotopic/2D spatial topology non-preserving (Baraldi, Citation2017; Baraldi & Alpaydin, Citation2002a, Citation2002b; Fritzke, Citation1997; Martinetz et al., Citation1994; Öğmen & Herzog, Citation2010; Tsotsos, Citation1990) image analysis algorithms, either pixel-based or local window-based, insensitive to permutations in the input data sequence (Cimpoi et al., Citation2014; Krizhevsky et al., Citation2012), where inter-object spatial topological relationships (e.g. adjacency, inclusion, etc.) and inter-object spatial non-topological relationships (e.g. spatial distance and angle measure) are oversighted in the (2D) image-plane (refer to Subsection 3.3.4), also refer to .

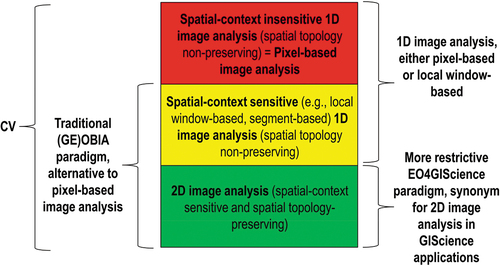

Figure 22. Examples of 1D image analysis approaches (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Matsuyama & Hwang, Citation1990), 2D spatial topology non-preserving (non-retinotopic) in the (2D) image-domain (Baraldi, Citation2017; Baraldi & Alpaydin, Citation2002a, Citation2002b; Fritzke, Citation1997; Martinetz et al., Citation1994; Öğmen & Herzog, Citation2010; Tsotsos, Citation1990). Intuitively, 1D image analysis is insensitive to permutations in the input data set (Cimpoi et al., Citation2014; Krizhevsky et al., Citation2012). Synonym for 1D analysis of a 2D gridded data set, non-retinotopic 1D image analysis is affected by spatial data dimensionality reduction, from 2D to 1D. The (2D) image at top/left is transformed into the 1D vector data stream (sequence) shown at bottom/right, where vector data are either pixel-based (2D spatial context-insensitive) or spatial context-sensitive, e.g. local window-based or image-object-based. This 1D vector data stream means nothing to a human photo interpreter. When it is input to either an inductive learning-from-data classifier or a deductive learning-by-rule classifier, the 1D vector data sequence is what the classifier actually sees when watching the (2D) image at left. Undoubtedly, computers are more successful than humans in 1D image analysis. Nonetheless, humans are still far more successful than computers in 2D image analysis, which is 2D spatial context-sensitive and 2D spatial topology-preserving (retinotopic) (Baraldi, Citation2017; Baraldi & Alpaydin, Citation2002a, Citation2002b; Fritzke, Citation1997; Martinetz et al., Citation1994; Öğmen & Herzog, Citation2010; Tsotsos, Citation1990), see . (a) Pixel-based (2D spatial context-insensitive) or (spatial context-sensitive) local window-based 1D image analysis. (b) Typical example of 1D image analysis approach implemented within the (geographic) object-based image analysis (GEOBIA) paradigm (Baraldi, Lang, Tiede, & Blaschke, Citation2018; Blaschke et al., Citation2014). In the so-called (GE)OBIA approach to CV, spatial analysis of (2D) imagery is intended to replace traditional pixel-based/2D spatial context-insensitive image analysis algorithms, dominating the RS literature to date. In practice, (GE)OBIA solutions can pursue either (2D spatial topology non-preserving, non-retinotopic) 1D image analysis or (2D spatial topology preserving, retinotopic) 2D image analysis (Baraldi et al., Citation2018). Also refer to and .

Figure 23. Alternative to 1D analysis of (2D) imagery (see .), image analysis (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; DiCarlo, Citation2017; Öğmen & Herzog, Citation2010; Tsotsos, Citation1990) is synonym for 2D analysis of (2D) imagery, meaning 2D spatial context-sensitive and 2D spatial topology-preserving (retinotopic) feature mapping in a (2D) image-domain (Baraldi, Citation2017; Baraldi & Alpaydin, Citation2002a, Citation2002b; Fritzke, Citation1997; Martinetz et al., Citation1994; Öğmen & Herzog, Citation2010; Tsotsos, Citation1990). Intuitively, 2D image analysis is sensitive to permutations in the input data set (Cimpoi et al., Citation2014; Krizhevsky et al., Citation2012). Activation domains of physically adjacent processing units in the 2D array (grid, network) of 2D convolutional spatial filters are spatially adjacent regions in the 2D visual field (image-plane). Distributed processing systems capable of 2D image analysis, such as physical model-based (‘handcrafted’) 2D wavelet filter banks (Baraldi, Citation2017; Burt & Adelson, Citation1983; DiCarlo, Citation2017; Jain & Healey, Citation1998; Mallat, Citation2009; Marr, Citation1982; Sonka et al., Citation1994), typically provided with a high degree of biological plausibility in modelling 2D spatial topological and spatial non-topological information components (DiCarlo, Citation2017; Heitger et al., Citation1992; Kosslyn, Citation1994; Mason & Kandel, Citation1991; Öğmen & Herzog, Citation2010; Rappe, Citation2018; Rodrigues & Du Buf, Citation2009; Slotnick et al., Citation2005; Tsotsos, Citation1990; Vecera & Farah, Citation1997) and/or deep convolutional neural networks (DCNNs), typically learned inductively (bottom-up) from data end-to-end (Cimpoi et al., Citation2014; Krizhevsky et al., Citation2012), are eligible for outperforming traditional 1D image analysis approaches. Will computer vision (CV) systems ever become as good as humans in 2D image analysis? Also refer to . Unfortunately, due to bad practices in multi-scale spatial filtering implementation (Geirhos et al., Citation2018; Zhang, Citation2019), modern inductive DCNN algorithms typically neglect the spatial ordering of object parts, i.e. they are insensitive to the shuffling of image parts (Brendel, Citation2019; Brendel & Bethge, Citation2019). In practice, large portions of modern inductive DCNNs adopt a decision-strategy very similar to that of traditional 1D image analysis approaches, such as bag-of-local-features models (Brendel, Citation2019; Brendel & Bethge, Citation2019), insensitive to permutations in the 1D sequence of input data (Bourdakos, Citation2017), see .

Figure 24. A (geographic) object-based image analysis (GEOBIA) paradigm (Blaschke et al., Citation2014), reconsidered (Baraldi et al., Citation2018). In the (GE)OBIA subdomain of computer vision (CV) (see ), spatial analysis of (2D) imagery is intended to replace traditional pixel-based (2D spatial context-insensitive) image analysis algorithms, dominating the remote sensing (RS) literature and common practice to date. In practice, (GE)OBIA solutions encompass either 1D image analysis (spatial topology non-preserving, non-retinotopic) or 2D image analysis (spatial topology preserving, retinotopic). To overcome limitations of the existing GEOBIA paradigm (Blaschke et al., Citation2014), in a more restrictive definition of EO for Geographic Information Science (GIScience) (EO4GIScience) (see ), novel EO4GIScience applications consider mandatory a 2D image analysis approach, see (Baraldi et al., Citation2018).

Figure 25. Adapted from (Bourdakos, Citation2017). Modern inductive learned-from-data end-to-end Deep Convolutional Neural Networks (DCNNs) typically neglect the spatial ordering of object parts, i.e. they are insensitive to the shuffling (permutations) of image parts. Hence, they are similar to traditional 1D image analysis approaches, such as bag-of-local-features models (Brendel, Citation2019; Brendel & Bethge, Citation2019). Experimental evidence and conclusions reported in (Brendel, Citation2019; Brendel & Bethge, Citation2019) are aligned with those reported in (Bourdakos, Citation2017). These experimental results highlight how little we have yet understood about the inner working of DCNNs. For example, a well-trained DCNN has difficulty with the concept of “correct face” whose parts, such as an eye and a mouth, are in the wrong place. In addition to being easily fooled by images with features in the wrong place, a DCNN is also easily confused when viewing an image in a different orientation. One way to combat this is with excessive training of all possible angles, but this takes a lot of time and seems counter intuitive (Bourdakos, Citation2017).

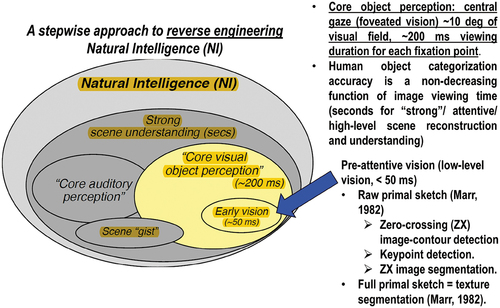

Figure 26. Adapted from (DiCarlo, Citation2017). As instance of the science of Natural Intelligence, reverse engineering primate visual perception adopts a stepwise approach (Baraldi, Citation2017; Bharath & Petrou, Citation2008; DiCarlo, Citation2017), in agreement with the seminal work by Marr (Marr, Citation1982).

Figure 27. Mach bands visual illusion (Pessoa, Citation1996). Below, in the image profile, in black: Ramp in (true, quantitative) luminance units across space. In red: Brightness (qualitative, perceived luminance) across space, where brightness is defined as a subjective aspect of vision, i.e. brightness is the perceived luminance of a surface (Boynton, Citation1990). Where a luminance (radiance, intensity) ramp meets a plateau, there are spikes of brightness, although there is no discontinuity in the luminance profile. Hence, human vision detects two boundaries, one at the beginning and one at the end of the ramp in luminance, independent of the ramp slope. Since there is no discontinuity in luminance where brightness is spiking, the Mach bands effect is called a visual ‘illusion’. In the words of Luiz Pessoa, ‘if we require that a brightness model should at least be able to predict Mach bands, the bright and dark bands which are seen at ramp edges, the number of published models is surprisingly small’ (Pessoa, Citation1996). The important lesson to be learned from the Mach bands illusion is that, in vision, local variance, contrast and 1st-order derivative (gradient) are statistical features (data-derived numerical variables) computed locally in the (2D) image-domain not suitable to detect image-objects (segments, closed contours) required to be perceptually ‘uniform’ (‘homogeneous’) in agreement with human vision (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Iqbal & Aggarwal, Citation2001). In other words, these popular local statistics, namely, local variance, contrast and 1st-order derivative (gradient), are not suitable visual features if detected image-segments and/or image-contours are required to be consistent with human visual perception, including ramp-edge detection. This straightforward (obvious), but not trivial observation is at odd with a large portion of the existing computer vision (CV) and remote sensing (RS) literature, where many CV algorithms for semi-automatic image segmentation (Baatz & Schäpe, Citation2000; Camara et al., Citation1996; Espindola et al., Citation2006) or (vice versa) semi-automatic image-contour detection (Heitger et al., Citation1992), such as the popular Canny edge detector (Canny, Citation1986), are based on empirical thresholding the local variance, contrast or first-order gradient based on data-dependent heuristic criteria.

Figure 28. To comply with working hypothesis ‘Human vision → CV ⊃ EO-IU’ = EquationEquation (4)(4)

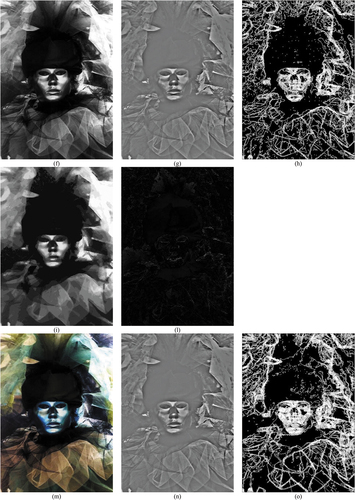

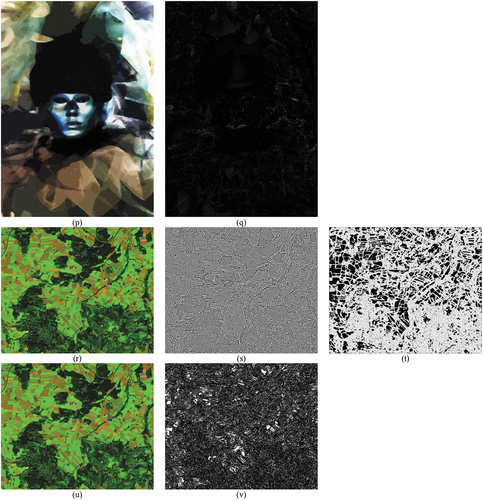

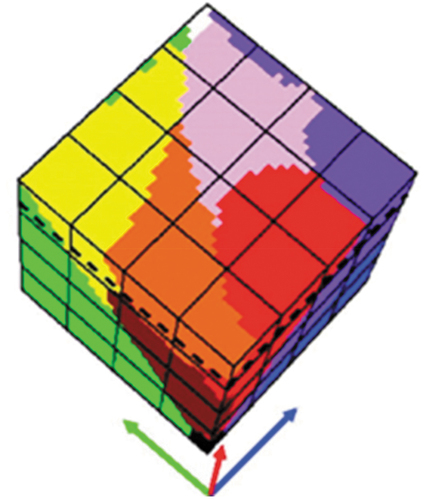

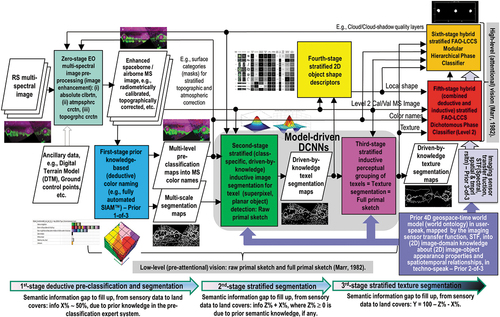

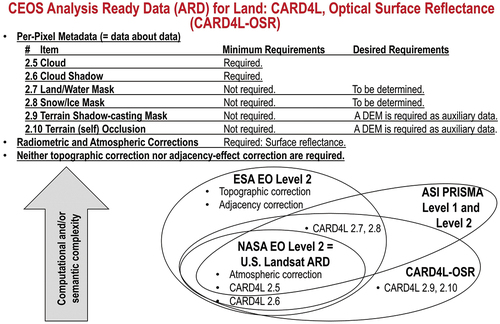

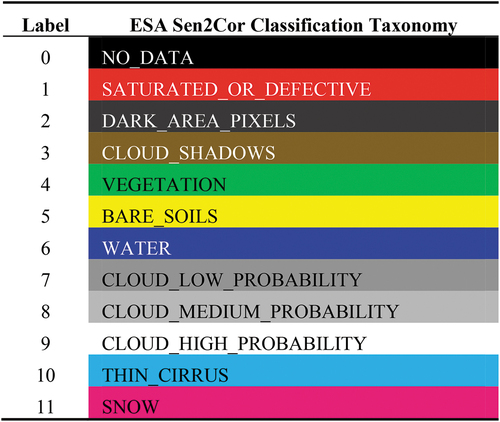

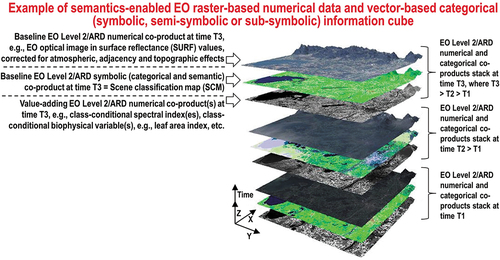

(4) (see ), a low-level ‘CV ⊃ EO-IU’ subsystem is implemented as a top-down/ deductive/ physical model-based (“handcrafted”) 2 grid (array) of wavelet-based multi-scale multi-orientation low-pass 2D Gaussian filters and band-pass trimodal even-symmetric 2D spatial filters (Baraldi, Citation2017). It is equivalent to a prior knowledge-based (physical model-based) deep convolutional neural network (DCNN), alternative to popular inductive DCNNs learned-from-data end-to-end (Cimpoi et al., Citation2014; Krizhevsky et al., Citation2012). Capable of automatic zero-crossing image-contour detection and image segmentation/partitioning, it complies with human visual perception (Baraldi, Citation2017; Baraldi et al., Citation2018a, Citation2018b; Baraldi & Tiede, Citation2018a, Citation2018b; Iqbal & Aggarwal, Citation2001), with regard to: (1) the Mach bands visual illusion (Pessoa, Citation1996) and (2) the perceptual true-fact that human panchromatic and chromatic vision mechanisms are nearly as effective in scene-from-image reconstruction and understanding. It is tested on complex spaceborne/airborne EO optical images in agreement with a stepwise approach, consistent with the property of systematicity of natural language/thought (Fodor, Citation1998; Peirce, Citation1994; Rescorla, Citation2019; Saba, Citation2020a) (refer to Subsection 3.4), to be regarded as commonsense knowledge, agreed upon by the entire human community (refer to Section 2). Testing images start from “simple” synthetic imagery of “known”, but challenging complexity in 2D spatial and colorimetric information components. If first-level testing with synthetic imagery is successful, next, natural panchromatic and RGB images, intuitive to cope with, are adopted for second-level testing purposes. Finally, complex spaceborne/airborne EO optical imagery, depicting manmade objects of known shape and size together with textured areas, are employed for third-level testing. (a) SUSAN synthetic panchromatic image, byte encoded in range {0, 255}, image size (rows × columns × bands) = 370 × 370 × 1. No histogram stretching applied for visualization purposes. Step edges and ramp edges at known locations (the latter forming the two inner rectangles visible at the bottom right corner) form angles from acute to obtuse. According to human vision, 31 image-segments can be detected as reference “ground-truth”. (b) Sum (synthesis) of the wavelet-based near-orthogonal multi-scale multi-orientation image decomposition. Filter value sum in range from −255.0 to +255.0. (c) Automated (requiring no human–machine interaction) image segmentation into zero-crossing segments generated from zero-crossing pixels detected by the multi-scale multi-orientation trimodal even-symmetric 2D spatial filter bank, different from Marr’s single-scale isotropic zero-crossing pixel detection (Marr, Citation1982). Exactly 31 image-segments are detected with 100% contour accuracy. Segment contours depicted with 8-adjacency cross-aura values in range {0, 8}, see . (d) Image-object mean view = object-wise constant input image reconstruction. No histogram stretching applied for visualization purposes. (e) Object-wise constant input image reconstruction compared with the input image, per-pixel root mean square error (RMSE) in range [0.0, 255.0], equivalent to a vector discretization/quantization (VQ) quality assessment in image decomposition (analysis, encoding) and reconstruction (synthesis, decoding) (Baraldi, Citation2017, Citation2019a; Baraldi et al., Citation2010a, Citation2010b, Citation2018a, Citation2018b, Citation2006; Baraldi & Tiede, Citation2018a, Citation2018b). (f) Natural panchromatic image of an actress wearing a Venetian carnival mask, image size (rows × columns × bands) = 438 × 310 × 1. No histogram stretching applied for visualization purposes. (g) Same as (b). (h) Same as (c), there is no CV system’s free- parameter to be user-defined. (i) Same as (d). (l) Same as (e). (m) Natural RGB-color image of an actress wearing a Venetian carnival mask, image size (rows × columns × bands) = 438 × 310 × 3. No histogram stretching applied for visualization purposes. (n) Same as (b). (o) Same as (c), there is no CV system’s free- parameter to be user-defined. (p) Same as (d). (q) Same as (e). (r) Zoom-in of a Sentinel-2A MSI Level-1 C image of the Earth surface south of the city of Salzburg, Austria. Acquired on 2015–09-11. Spatial resolution: 10 m. Radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values in range [0.0, 1.0], byte-coded into range {0, 255}, it is depicted as a false color RGB image, where: R = Middle-InfraRed (MIR) = Band 11, G = Near IR (NIR) = Band 8, B = Blue = Band 2. Standard ENVI histogram stretching applied for visualization purposes. Image size (rows × columns × bands) = 545 × 660 × 3. (s) Same as (b). (t) Same as (c), there is no CV system’s free-parameter to be user-defined. (u) Same as (d), but now a standard ENVI histogram stretching is applied for visualization purposes. (v) Same as (e).