Figures & data

Table 1. Data sets for gender detection

Table 2. Data sets for speaker identification. The number of audio recordings for each speaker is shown below the corresponding speaker ID

Table 3. Hyper-parameters of MLP used for both tasks

Table 4. Hyper-parameters of CNN used for both tasks

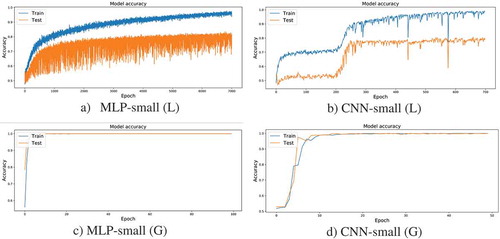

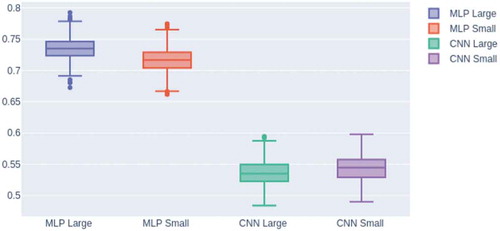

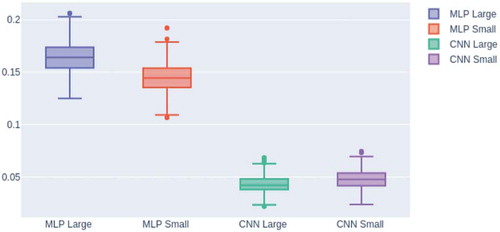

Table 5. Results for gender recognition with different features: L denotes the normalized, flattened long MFCC vector; G denotes the z-score and Gramian matrix transformation. P is precision, R is recall, and F1 is the F1-score

Table 6. Results for speaker identification