Figures & data

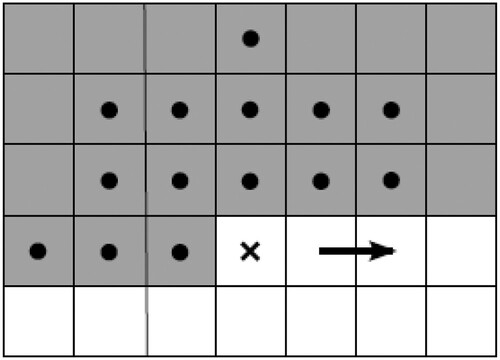

Figure 1. Sixth-order unilateral contextual neighbourhood used for the CAR model. X marks the current pixel, the bullets are pixels in the neighbourhood, the arrow shows the movement direction, and the grey area indicates acceptable neighbourhood pixels.

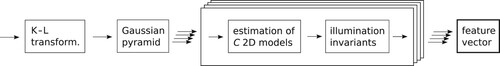

Figure 2. Flowchart of the texture analysis algorithm using 2D random field models; the K-L transformation step is optional.

Table 1. Feature vector sizes of the compared textural features.

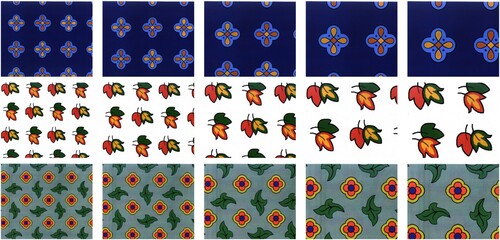

Figure 5. Appearance of patterns from the UEA database at varying scales; from the left, the scale factors are 50%, 60%, 75%, 90%, and 100%.

Figure 11. Appearance of two veneers from the Wood UTIA BTF database at varying scales; from the left, the scale factors are 50%, 60%, 75%, 90%, and 100%.

Figure 4. Appearance of patterns from the UEA database under varying illumination spectra (three columns on the left) and with additional acquisition devices (two columns on the right).

Table 2. Classification accuracy [%] averaged over all scales and illumination conditions on the UEA dataset.

Figure 6. Progression of classification accuracy [%] with decreasing scale differences between training and test sets (UEA dataset). Left: one training sample per class; right: six training samples per class.

![Figure 6](/cms/asset/71619ebf-f158-4a4c-b30f-12cdd154c7e4/tjit_a_2265190_f0006_oc.jpg)

Figure 7. Classification accuracy [%] for all combinations of training and test set scales on the UEA dataset, using one training sample per class.

![Figure 7](/cms/asset/50c4d433-7ebf-4f22-8922-92e7c4dd044c/tjit_a_2265190_f0007_oc.jpg)

Figure 8. Classification accuracy [%] on the UEA dataset with one training sample. Left: training sample with a scale factor of 1; right: training sample with a scale factor of 0.5.

![Figure 8](/cms/asset/147db2d1-2a42-44ef-beec-24fd6691eba9/tjit_a_2265190_f0008_oc.jpg)

Figure 10. Appearance of four veneers from the Wood UTIA BTF database under varying illumination directions. The left column is illuminated along the surface normal, and the illumination tilt increases to the right. The illumination tilts are 0, 30, 60, 60, and 75 degrees, and the illumination azimuths are 0, 90, 180, 252, and 345 degrees, respectively.

Table 3. Classification accuracy [%] averaged over all scales and illumination angles on the Wood UTIA BTF dataset.

Figure 12. Progression of classification accuracy [%] with decreasing scale differences between training and test sets (Wood UTIA BTF). Left: one training sample per class; right: six training samples per class.

![Figure 12](/cms/asset/f7f860fc-dc96-4eb6-be0d-d5c3b93292f6/tjit_a_2265190_f0012_oc.jpg)

Figure 13. Classification accuracy [%] for all combinations of training and test set scales on the Wood UTIA BTF dataset, using one training sample per class.

![Figure 13](/cms/asset/ead7d251-77d8-46a3-a2a5-4f04fc99742b/tjit_a_2265190_f0013_oc.jpg)

Figure 14. Classification accuracy [%] on the Wood UTIA BTF dataset with one training sample. Left: training sample with a scale factor of 1; right: training sample with a scale factor of 0.5.

![Figure 14](/cms/asset/fbd3b972-4615-42d5-b602-55bffe25b34f/tjit_a_2265190_f0014_oc.jpg)

Table 4. Classification accuracy [%] for different illumination tilts (declination angle from the surface normal) without any scale variation (Wood UTIA BTF).