Figures & data

Table 1. Iteration steps of the PM, NAG, and TAG algorithms with learning rate , while the GD algorithm takes 117 steps.

Table 2. Iteration steps of the PM, NAG, and TAG algorithms with learning rate , while the GD algorithm takes 54 steps.

Table 3. Iteration steps of the PM, NAG, and TAG algorithms with learning rate , while the GD algorithm takes 44 steps.
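Tables 1 to 3 compare iteration counts at a fixed learning rate. To illustrate what such a count measures, the sketch below runs plain gradient descent (GD), Polyak heavy-ball momentum (assumed here to be what PM denotes) and Nesterov accelerated gradient (NAG) on a diagonal quadratic. It returns the number of steps until the gradient norm falls below a tolerance. The test problem, learning rate `lr`, momentum `mu`, tolerance `tol`, and the helper names are hypothetical choices, not the paper's settings. TAG is omitted because its update rule is not given in this section.

```python
# Minimal sketch. Assumptions: f(x) = 0.5 * sum(d_i * x_i^2) (diagonal quadratic),
# fixed learning rate lr, momentum mu, stopping rule ||grad|| < tol.
# TAG is not reproduced because its update rule is not stated here.
import math


def grad(diag, x):
    # Gradient of 0.5 * sum(d_i * x_i^2) is d_i * x_i componentwise.
    return [d * xi for d, xi in zip(diag, x)]


def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))


def count_steps(method, diag, x0, lr, mu=0.9, tol=1e-6, max_iter=100000):
    x = list(x0)
    v = [0.0] * len(x)  # velocity (momentum buffer)
    for k in range(max_iter):
        g = grad(diag, x)
        if norm(g) < tol:
            return k  # number of updates performed so far
        if method == "GD":
            x = [xi - lr * gi for xi, gi in zip(x, g)]
        elif method == "PM":
            # Polyak heavy ball: v <- mu*v - lr*grad(x); x <- x + v
            v = [mu * vi - lr * gi for vi, gi in zip(v, g)]
            x = [xi + vi for xi, vi in zip(x, v)]
        elif method == "NAG":
            # Nesterov: gradient taken at the look-ahead point x + mu*v
            look = [xi + mu * vi for xi, vi in zip(x, v)]
            gl = grad(diag, look)
            v = [mu * vi - lr * gi for vi, gi in zip(v, gl)]
            x = [xi + vi for xi, vi in zip(x, v)]
        else:
            raise ValueError(f"unknown method: {method}")
    return max_iter
```

For example, `count_steps("NAG", [1.0, 100.0], [1.0, 1.0], lr=0.01)` should need far fewer steps than the GD call with the same arguments. Along the slow direction (eigenvalue 1), plain GD contracts only by the factor `1 - lr` per step, whereas the momentum updates contract faster.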

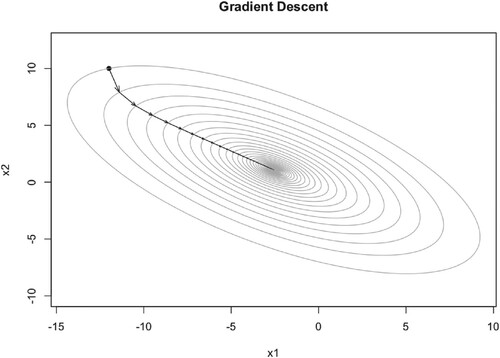

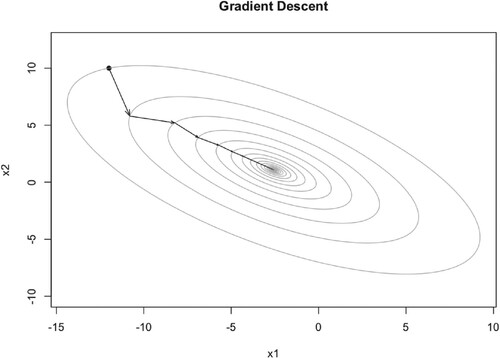

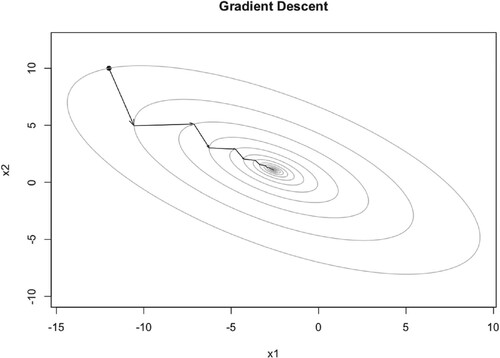

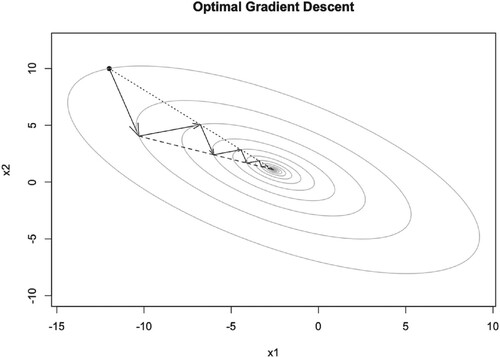

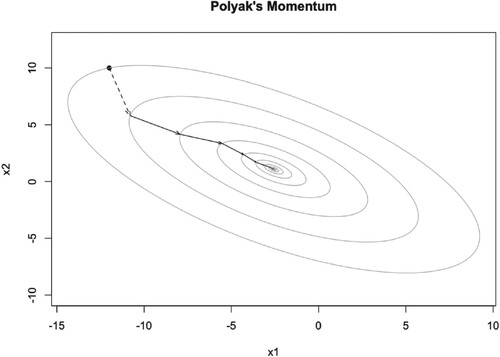

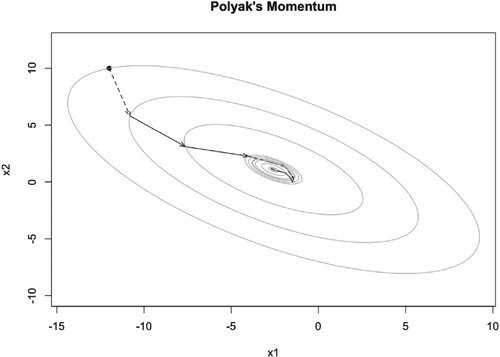

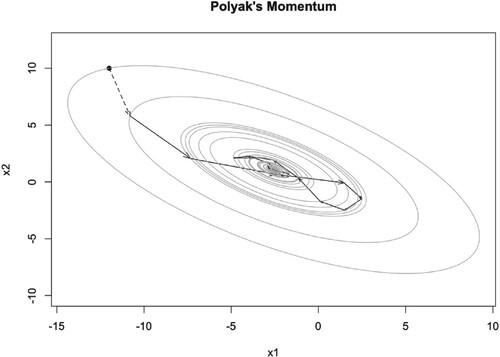

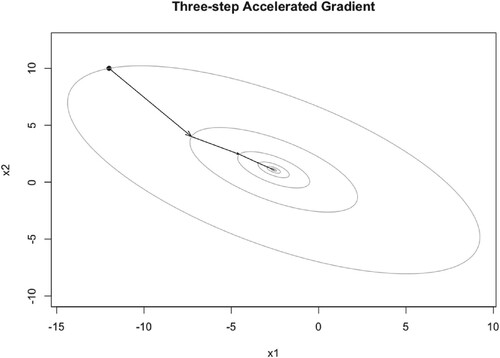

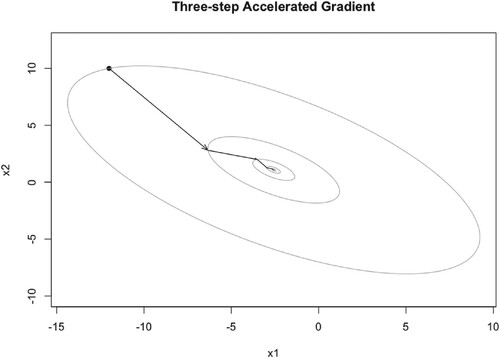

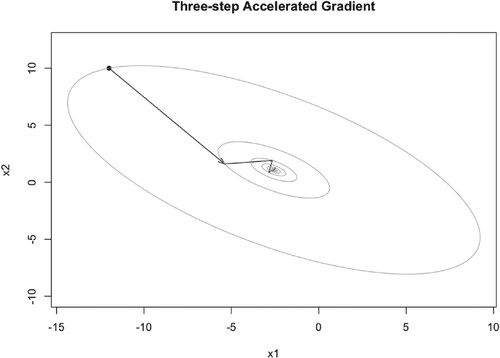

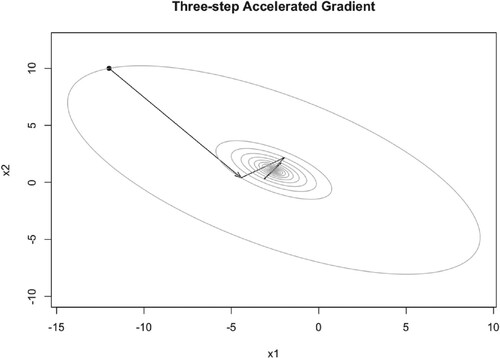

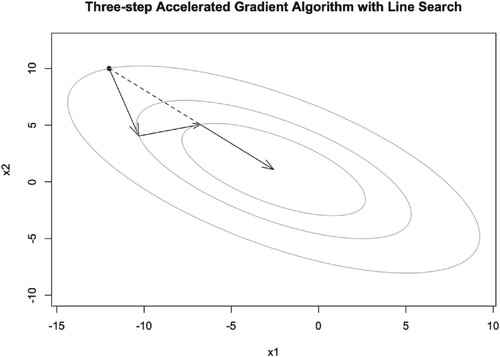

Figure 12. Illustration of the TAG iteration trajectory for a two-dimensional quadratic function with line search.
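Figure 12 combines TAG with a line search. This section does not state which line search is used. For a quadratic f(x) = 0.5 x^T A x - b^T x, however, the exact minimizer along any descent direction d has the closed form alpha = -(g^T d) / (d^T A d). The sketch below applies that rule with the steepest-descent direction d = -g on a hypothetical 2-D quadratic. It illustrates only the line-search step, not the TAG direction, and the helper names are hypothetical.

```python
# Minimal sketch: exact line search on a 2-D quadratic f(x) = 0.5 x^T A x - b^T x.
# Assumptions: A is symmetric positive definite; the direction is d = -grad
# (steepest descent), used only for illustration and not the TAG direction.


def matvec(A, x):
    return [A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1]]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def exact_step(A, g, d):
    # Minimizer of phi(a) = f(x + a*d) for a quadratic: a = -(g.d) / (d.A d)
    return -dot(g, d) / dot(d, matvec(A, d))


def trajectory(A, b, x0, tol=1e-8, max_iter=1000):
    x = list(x0)
    path = [tuple(x)]
    for _ in range(max_iter):
        Ax = matvec(A, x)
        g = [Ax[0] - b[0], Ax[1] - b[1]]  # gradient A x - b
        if dot(g, g) ** 0.5 < tol:
            break
        d = [-g[0], -g[1]]
        a = exact_step(A, g, d)
        x = [x[0] + a * d[0], x[1] + a * d[1]]
        path.append(tuple(x))
    return path
```

With exact line search, each new gradient is orthogonal to the previous search direction, so consecutive steepest-descent steps are orthogonal and the path zigzags toward the minimizer A^{-1} b.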

Table 4. Iteration steps and runtime (within parentheses) for the 10-dimensional quadratic function.

Table 5. Iteration steps and runtime (within parentheses) for the 100-dimensional quadratic function.

Table 6. Iteration steps and runtime (within parentheses) for the 500-dimensional quadratic function.

Table 7. Iteration steps, runtime (within parentheses), and optimal μ with its range (within brackets) for the 10-dimensional FLETCHCR function.

Table 8. Iteration steps, runtime (within parentheses), and optimal μ with its range (within brackets) for the 100-dimensional FLETCHCR function.

Table 9. Iteration steps, runtime (within parentheses), and optimal μ with its range (within brackets) for the 500-dimensional FLETCHCR function.
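Tables 7 to 9 use the FLETCHCR test function. Assuming the usual definition from the CUTE/CUTEst collection, f(x) = sum_{i=1}^{n-1} c (x_{i+1} - x_i + 1 - x_i^2)^2 with c = 100, a minimal sketch of the function and its analytic gradient follows. The constant `c` and the helper names are assumptions, and the starting point used in the experiments is not stated in this section.

```python
# Minimal sketch of FLETCHCR, assuming the CUTE/CUTEst form with c = 100.


def fletchcr(x, c=100.0):
    # Sum of c * r_i^2 with residuals r_i = x_{i+1} - x_i + 1 - x_i^2
    return sum(c * (x[i + 1] - x[i] + 1.0 - x[i] ** 2) ** 2
               for i in range(len(x) - 1))


def fletchcr_grad(x, c=100.0):
    g = [0.0] * len(x)
    for i in range(len(x) - 1):
        r = x[i + 1] - x[i] + 1.0 - x[i] ** 2
        # d/dx_i of c*r^2 is 2c*r*(-1 - 2*x_i); d/dx_{i+1} of c*r^2 is 2c*r
        g[i] += 2.0 * c * r * (-1.0 - 2.0 * x[i])
        g[i + 1] += 2.0 * c * r
    return g
```

Under this definition the global minimizer is x = (1, ..., 1) with f = 0. That value, together with a finite-difference comparison of the gradient, gives a simple correctness check.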

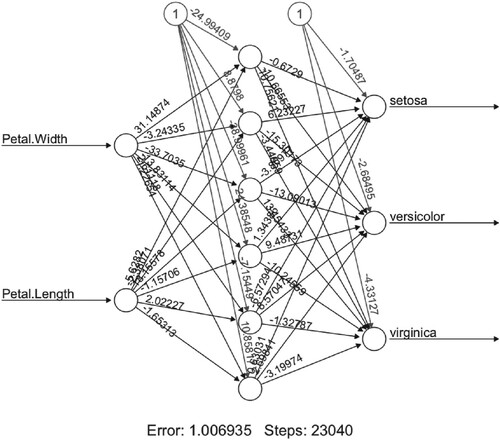

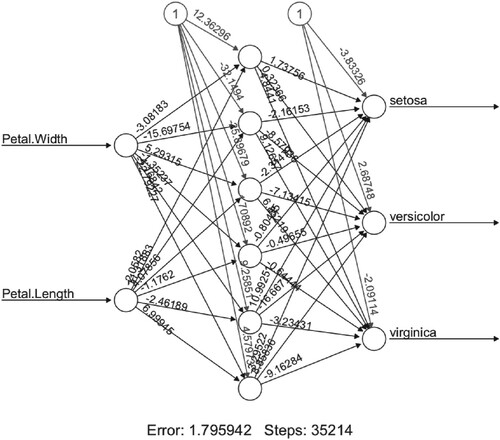

Table 10. Iteration steps and runtime (within parentheses) of the BPPM, BPNAG, and BPTAG algorithms with learning rate for the iris data set, while the steps and runtime of the BPGD algorithm are 64822 and 17.88 s, respectively.

Table 11. Iteration steps and runtime (within parentheses) of the BPPM, BPNAG, and BPTAG algorithms with learning rate for the iris data set, while the steps and runtime of the BPGD algorithm are 48849 and 11.77 s, respectively.

Table 12. Iteration steps and runtime (within parentheses) of the BPPM, BPNAG, and BPTAG algorithms with learning rate for the iris data set, while the steps and runtime of the BPGD algorithm are 33503 and 8.09 s, respectively.

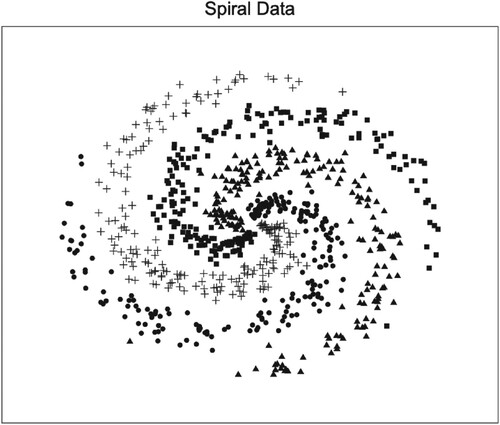

Table 13. Training accuracy of the BPPM, BPNAG, and BPTAG algorithms with learning rate for the spiral data set, while the accuracy of the BPGD algorithm is 0.8775.

Table 14. Training accuracy of the BPPM, BPNAG, and BPTAG algorithms with learning rate for the spiral data set, while the accuracy of the BPGD algorithm is 0.8950.

Table 15. Training accuracy of the BPPM, BPNAG, and BPTAG algorithms with learning rate for the spiral data set, while the accuracy of the BPGD algorithm is 0.8975.

Table 16. Iteration steps and runtime (within parentheses) of the BPPMSGD, BPNASG, and BPTASG algorithms with learning rate for the iris data set, while the steps and runtime of the BPSGD algorithm are 123859 and 22.13 s, respectively.

Table 17. Training accuracy of the BPPMSGD, BPNASG, and BPTASG algorithms with learning rate for the spiral data set, while the accuracy of the BPSGD algorithm is 0.8887.