Figures & data

Figure 1. The eight rudimentary surgical gestures, or surgemes, common in a four-throw suturing task, as defined by a senior cardiac surgeon. 1) Reach for needle. 2) Position needle. 3) Insert and push needle through tissue. 4) Move to middle with needle (left hand). 5) Move to middle with needle (right hand). 6) Pull suture with left hand. 7) Pull suture with right hand. 8) Orient needle with both hands. [Color version available online.]

![Figure 1. The eight rudimentary surgical gestures, or surgemes, common in a four-throw suturing task, as defined by a senior cardiac surgeon. 1) Reach for needle. 2) Position needle. 3) Insert and push needle through tissue. 4) Move to middle with needle (left hand). 5) Move to middle with needle (right hand). 6) Pull suture with left hand. 7) Pull suture with right hand. 8) Orient needle with both hands. [Color version available online.]](/cms/asset/c509d97b-83f0-4a0e-9ada-f5d07d47d84f/icsu_a_198819_f0001_b.jpg)

Table I. The subset of 72 data points used in the study. The full set of 192 data points available through the da Vinci API also includes Cartesian positions, rotation matrices, and other data.

Figure 2. Functional block diagram of the system used to recognize elementary surgical motions in this study.

Figure 3. Creating a super feature vector that encapsulates temporal information from neighboring data samples. This example assumes a subsampling granularity of s = 2. [Color version available online.]

![Figure 3. Creating a super feature vector that encapsulates temporal information from neighboring data samples. This example assumes a subsampling granularity of s = 2. [Color version available online.]](/cms/asset/22560205-e5c8-43ef-9af3-df57d98f9ab3/icsu_a_198819_f0003_b.jpg)
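The caption does not spell out exactly which neighbors are stacked, so the following is a minimal Python sketch under an assumed convention: the super feature vector for sample t concatenates the m + 1 frames at offsets -(m/2)s, ..., 0, ..., +(m/2)s. That choice spans sm + 1 samples, matching the temporal window size used to order Table IV. It also assumes m is even and clamps indices at the ends of the recording; the function name `super_feature_vector` is hypothetical.

```python
from typing import List, Sequence


def super_feature_vector(
    frames: Sequence[Sequence[float]], t: int, m: int, s: int
) -> List[float]:
    """Concatenate neighboring frames around index t into one vector.

    Hypothetical convention: frames at offsets k*s for k = -m/2 .. m/2 are
    stacked, giving m + 1 frames that span s*m + 1 samples. m must be even.
    Indices that fall outside the sequence are clamped to the nearest end.
    """
    if m % 2 != 0:
        raise ValueError("this sketch assumes an even temporal length m")
    if s < 1:
        raise ValueError("subsampling granularity s must be >= 1")
    half = m // 2
    last = len(frames) - 1
    vector: List[float] = []
    for k in range(-half, half + 1):
        idx = min(max(t + k * s, 0), last)  # clamp at sequence boundaries
        vector.extend(frames[idx])
    return vector


# Toy sequence: 20 frames with 2 variables each (frame i = [i, 10*i]).
frames = [[float(i), 10.0 * i] for i in range(20)]
print(super_feature_vector(frames, t=10, m=4, s=2))
# [6.0, 60.0, 8.0, 80.0, 10.0, 100.0, 12.0, 120.0, 14.0, 140.0]
```

Under this convention, m = 8 and s = 2 stack 9 frames spanning 17 samples. With the 72 variables of Table I per frame, each super feature vector would have 9 × 72 = 648 entries. Setting m = 0 reduces to the single current frame, that is, no temporal information.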

Figure 4. Cartesian position plots of the da Vinci left master manipulator, identified by motion class, during performance of a four-throw suturing task. Panel (a, left) shows the expert surgeon's data; panel (b, right) shows the intermediate surgeon's data. [Color version available online.]

![Figure 4. Cartesian position plots of the da Vinci left master manipulator, identified by motion class, during performance of a four-throw suturing task. Panel (a, left) shows the expert surgeon's data; panel (b, right) shows the intermediate surgeon's data. [Color version available online.]](/cms/asset/5220dc4a-7b90-4cfb-b6f7-358cb71f2484/icsu_a_198819_f0004_b.jpg)

Figure 5. Recognition rates of the four motion class definitions for the expert surgeon, across all 14 temporal sizes and window removal sizes w = 3, 5, and 7. Motion class 11234455 had the highest average recognition rate for all three LDA dimensions. [Color version available online.]

![Figure 5. Recognition rates of the four motion class definitions for the expert surgeon, across all 14 temporal sizes and window removal sizes w = 3, 5, and 7. Motion class 11234455 had the highest average recognition rate for all three LDA dimensions. [Color version available online.]](/cms/asset/271fca1d-d848-4283-a793-408ba9a23bf0/icsu_a_198819_f0005_b.jpg)

Figure 6. Recognition rates of the six motion class definitions for the intermediate surgeon, across all 14 temporal sizes and window removal sizes w = 3, 5, and 7. Motion class 12344567 had the highest average recognition rate for LDA dimensions 4 and 5, while 12233456 had the highest rate for dimension 3. [Color version available online.]

![Figure 6. Recognition rates of the six motion class definitions for the intermediate surgeon, across all 14 temporal sizes and window removal sizes w = 3, 5, and 7. Motion class 12344567 had the highest average recognition rate for LDA dimensions 4 and 5, while 12233456 had the highest rate for dimension 3. [Color version available online.]](/cms/asset/5dd2ae0b-9dca-4271-9eda-568c409afc90/icsu_a_198819_f0006_b.jpg)

Table II. The four motion classes used for the expert data. Each motion class defines the mapping from the surgeme in the vocabulary to the corresponding motion in the class.

Table III. The six motion classes used for the intermediate data. Each motion class defines the mapping from the surgeme in the vocabulary to the corresponding motion in the class.

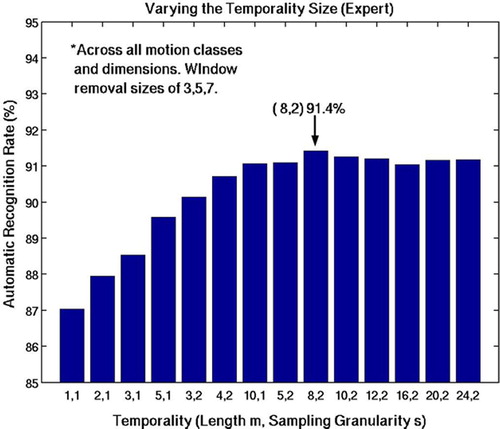
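Tables II and III identify each motion class by a digit string, and the 8-digit strings named in Figures 5 and 6 (for example 11234455) suggest one digit per surgeme of Figure 1. The following is a minimal Python sketch that assumes the i-th digit gives the motion assigned to surgeme i, and uses that to relabel a per-sample surgeme sequence. Both the reading of the strings and the function names `motion_class_table` and `relabel` are hypothetical.

```python
from typing import Dict, List, Sequence


def motion_class_table(motion_class: str) -> Dict[int, int]:
    """Build a surgeme -> motion lookup from an 8-digit motion-class string.

    Hypothetical reading: the i-th digit is the motion assigned to surgeme i.
    """
    if len(motion_class) != 8 or not motion_class.isdigit():
        raise ValueError("expected one digit per surgeme (8 digits)")
    return {surgeme: int(digit) for surgeme, digit in enumerate(motion_class, start=1)}


def relabel(surgemes: Sequence[int], motion_class: str) -> List[int]:
    """Map a per-sample sequence of surgeme labels (1-8) to motion labels."""
    table = motion_class_table(motion_class)
    return [table[s] for s in surgemes]


print(relabel([1, 2, 3, 4, 5, 6, 7, 8], "11234455"))  # [1, 1, 2, 3, 4, 4, 5, 5]
```

Under this reading, class 11234455 collapses the eight surgemes into five motions, while 12344567 maps surgemes 4 and 5 to the same motion and keeps seven.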

Figure 7. For the expert data, a temporal length of m = 8 with a subsampling granularity of s = 2 gave the highest average recognition rate. Each (m, s) pair was tested across all four motion classes, all three LDA dimensions, and window removal sizes of 3, 5, and 7. Using no temporal information gave the lowest average recognition rate.

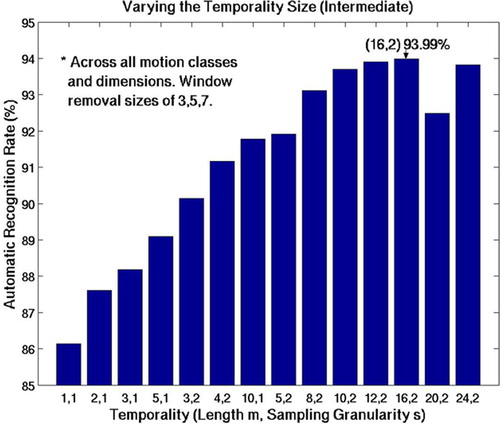

Figure 8. For the intermediate data, a temporal length of m = 16 with a subsampling granularity of s = 2 gave the highest average recognition rate. Each (m, s) pair was tested across all six motion classes, all three LDA dimensions, and window removal sizes of 3, 5, and 7. Using no temporal information gave the lowest average recognition rate.

Table IV. The combinations of temporal length m and subsampling granularity s that were tested, ordered by increasing temporal window size (sm + 1).

Figure 9. Data after LDA reduction to d = 3 dimensions, with temporal length m = 6. The expert surgeon's motions (left) separate more distinctly than the intermediate surgeon's (right). [Color version available online.]

![Figure 9. Data after LDA reduction to d = 3 dimensions, with temporal length m = 6. The expert surgeon's motions (left) separate more distinctly than the intermediate surgeon's (right). [Color version available online.]](/cms/asset/937dd318-0e6e-4fe6-b2cc-0126b7c6fb43/icsu_a_198819_f0009_b.jpg)
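Figure 9 shows feature vectors projected by linear discriminant analysis (LDA) to d dimensions. The captions do not state which LDA variant was used, so the following is a sketch of standard Fisher LDA in NumPy, not necessarily the authors' implementation. It accumulates the within-class scatter S_W and the between-class scatter S_B, then whitens with respect to S_W. A small ridge term, which is an assumption, keeps S_W invertible. Finally it keeps the d leading eigenvectors, which solves S_B v = λ S_W v. The name `lda_fit` is hypothetical. scikit-learn's `LinearDiscriminantAnalysis(n_components=d)` is an off-the-shelf alternative.

```python
import numpy as np


def lda_fit(X: np.ndarray, y: np.ndarray, d: int, ridge: float = 1e-6) -> np.ndarray:
    """Return an (n_features, d) Fisher LDA projection matrix.

    X: (n_samples, n_features) feature rows; y: (n_samples,) class labels.
    """
    n_features = X.shape[1]
    overall_mean = X.mean(axis=0)
    s_within = np.zeros((n_features, n_features))
    s_between = np.zeros((n_features, n_features))
    for label in np.unique(y):
        Xc = X[y == label]
        class_mean = Xc.mean(axis=0)
        centered = Xc - class_mean
        s_within += centered.T @ centered
        offset = (class_mean - overall_mean)[:, None]
        s_between += Xc.shape[0] * (offset @ offset.T)

    # Whitening matrix W with W.T @ (S_W + ridge*I) @ W = I.
    w_vals, w_vecs = np.linalg.eigh(s_within + ridge * np.eye(n_features))
    whiten = w_vecs / np.sqrt(w_vals)

    # In whitened space, S_B v = lam S_W v becomes an ordinary symmetric
    # eigenproblem; keep the eigenvectors of the d largest eigenvalues.
    b_vals, b_vecs = np.linalg.eigh(whiten.T @ s_between @ whiten)
    top = np.argsort(b_vals)[::-1][:d]
    return whiten @ b_vecs[:, top]


rng = np.random.default_rng(0)
# Toy data (not from the study): three classes in 4-D, separated on two axes.
centers = np.array([[0.0, 0.0, 0.0, 0.0], [6.0, 0.0, 0.0, 0.0], [0.0, 6.0, 0.0, 0.0]])
X = np.vstack([rng.normal(c, 1.0, size=(40, 4)) for c in centers])
y = np.repeat([0, 1, 2], 40)
W = lda_fit(X, y, d=2)
Z = X @ W  # projected data, one row per sample
print(Z.shape)  # (120, 2)
```

Whitening first turns the generalized eigenproblem into a symmetric one, so `numpy.linalg.eigh` can be used without SciPy.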

Figure 10. Comparison of automatic segmentation of robot-assisted surgical motion with manual segmentations. Note that most errors occur at the transitions. [Color version available online.]

![Figure 10. Comparison of automatic segmentation of robot-assisted surgical motion with manual segmentations. Note that most errors occur at the transitions. [Color version available online.]](/cms/asset/c15ba6b0-1493-4ecc-922e-29e596016ba6/icsu_a_198819_f0010_b.jpg)

Figure 11. Recognition rates for the expert data as a function of window removal size, across all temporal sizes and motion classes, with LDA dimension 4. Window removal sizes of 5 or 7 gave the highest recognition rates. [Color version available online.]

![Figure 11. Recognition rates for the expert data as a function of window removal size, across all temporal sizes and motion classes, with LDA dimension 4. Window removal sizes of 5 or 7 gave the highest recognition rates. [Color version available online.]](/cms/asset/0a2901e1-5819-42ed-a7c5-14e3699b8674/icsu_a_198819_f0011_b.jpg)

Figure 12. Recognition rates for the intermediate data as a function of window removal size, across all temporal sizes and motion classes, with LDA dimension 5. Window removal sizes of 5 or 7 gave the highest recognition rates. [Color version available online.]

![Figure 12. Recognition rates for the intermediate data as a function of window removal size, across all temporal sizes and motion classes, with LDA dimension 5. Window removal sizes of 5 or 7 gave the highest recognition rates. [Color version available online.]](/cms/asset/ee390c35-fc72-4552-b1fb-5f75a96daec1/icsu_a_198819_f0012_b.jpg)
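The captions of Figures 10 through 12 indicate that most errors fall at transitions between motions, and that removing a window of w samples around each transition gives higher recognition rates. The following is a minimal Python sketch of one way to apply that when scoring. It rests on two assumptions the captions do not state. First, the window is centered on the first sample of each new motion in the manual segmentation. Second, the recognition rate is the fraction of remaining samples whose automatic label matches the manual one. Whether the study also removed these samples from training is likewise not stated here. The function names are hypothetical.

```python
from typing import List, Sequence


def keep_mask(true_labels: Sequence[int], w: int) -> List[bool]:
    """Mark samples to keep after removing w samples around each transition.

    Hypothetical convention: for odd w, samples i - w//2 .. i + w//2 are
    dropped, where i is the first sample of a new motion. w <= 0 keeps all.
    """
    n = len(true_labels)
    keep = [True] * n
    if w <= 0:
        return keep
    half = w // 2
    for i in range(1, n):
        if true_labels[i] != true_labels[i - 1]:
            for j in range(max(0, i - half), min(n, i + half + 1)):
                keep[j] = False
    return keep


def recognition_rate(true_labels: Sequence[int], predicted: Sequence[int], w: int) -> float:
    """Fraction of kept samples whose predicted motion matches the manual label."""
    if len(true_labels) != len(predicted):
        raise ValueError("label sequences must have equal length")
    mask = keep_mask(true_labels, w)
    pairs = [(t, p) for t, p, k in zip(true_labels, predicted, mask) if k]
    if not pairs:
        raise ValueError("window removal left no samples to score")
    return sum(t == p for t, p in pairs) / len(pairs)


manual = [1, 1, 1, 1, 1, 2, 2, 2, 2, 2]
automatic = [1, 1, 1, 1, 2, 2, 2, 2, 2, 2]  # one error just before the transition
print(recognition_rate(manual, automatic, w=0))  # 0.9
print(recognition_rate(manual, automatic, w=3))  # 1.0
```

In the toy example, the single boundary error is excluded once w = 3, which illustrates why scores rise when transition samples are dropped.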

Table V. Results of training on the combined expert and intermediate data sets, which only slightly decreased the classifier's recognition rates compared with training on each set alone. The combined classifier used motion class definition 12344455, temporal size m = 8 and s = 2, and LDA dimension d = 5. The standalone classifiers used expert = {11234455, m = 8, s = 2, d = 5} and intermediate = {12344567, m = 16, s = 2, d = 5}.