Abstract

Hoerl and Kennard (1970a) introduced the ridge regression estimator as an alternative to the ordinary least squares (OLS) estimator in the presence of multicollinearity. In ridge regression, the ridge parameter plays an important role in parameter estimation. In this article, a new method for estimating ridge parameters is proposed for both ordinary ridge regression (ORR) and generalized ridge regression (GRR). A simulation study evaluates the performance of the proposed estimators using the mean squared error (MSE) criterion and indicates that, under certain conditions, they perform well compared to OLS and the other well-known estimators reviewed in this article.

1 Introduction

In the presence of multicollinearity, the OLS estimator yields regression coefficients whose absolute values are too large and whose signs may reverse with negligible changes in the data (Buonaccorsi, 1996); in short, the OLS estimator performs poorly. Ridge regression, proposed by Hoerl and Kennard (1970a), is one of the most widely used remedies for multicollinearity. In ridge regression an additional parameter, the ridge parameter (k), plays a vital role in controlling the bias of the regression toward the mean of the response variable. Hoerl and Kennard also proposed the generalized ridge regression (GRR) procedure, which allows a separate ridge parameter for each regressor. Using GRR, it is easier to find optimal values of the ridge parameters, i.e., values for which the MSE of the ridge estimator is minimum. In addition, if the optimal values of the biasing constants differ significantly from each other, this estimator has the potential to save a greater amount of MSE than the OLS estimator (Stephen and Christopher, 2001). In both ORR and GRR, as k increases from zero toward infinity, the regression estimates tend toward zero. Although these estimators are biased, for certain values of k they yield a smaller mean squared error than the OLS estimator (see Hoerl and Kennard, 1970a). The ridge parameter k proposed by Hoerl et al. (1975) performs fairly well.

Much of the discussion on ridge regression concerns the problem of finding a good empirical value of k. Many researchers have suggested methods for choosing the ridge parameter, including Hoerl and Kennard (1970a), Hoerl et al. (1975), McDonald and Galarneau (1975), Hocking et al. (1976), Lawless and Wang (1976), Gunst and Mason (1977), Lawless (1978), Golub et al. (1979), Nordberg (1982), Nomura (1988), Saleh and Kibria (1993), Haq and Kibria (1996), Kibria (2003), Pasha and Shah (2004), Khalaf and Shukur (2005), Norliza et al. (2006), Alkhamisi and Shukur (2007), Mardikyan and Cetin (2008), Dorugade and Kashid (2010) and Al-Hassan (2010), to mention a few. The objective of this article is to investigate some of the popular existing techniques and to compare them on the basis of their mean squared error properties. Moreover, we suggest some methods for estimating the ridge parameters in ORR and GRR that produce ridge estimators with smaller MSE than the other estimators. The organization of the article is as follows.

In this article, we introduce alternative ordinary and generalized ridge estimators and study their performance by means of simulation techniques. Comparisons are made with other ridge-type estimators evaluated elsewhere, and the estimators to be included in this study are described in Section 2. In Section 3, we propose some new methods for estimating the ridge parameter. In Section 4, we illustrate the simulation technique that we have adopted in the study and related results of the simulations appear in the tables and figures. In Section 5, we give a brief summary and conclusion.

2 Model and estimators

Consider the widely used linear regression model

$$Y = X\beta + \varepsilon \tag{1}$$

where Y is an n × 1 vector of observations on a response variable, β is a p × 1 vector of unknown regression coefficients, X is an n × p matrix of observations on p predictor (or regressor) variables and ε is an n × 1 vector of errors with E(ε) = 0 and V(ε) = $\sigma^2 I_n$. For convenience, we assume that X and Y are standardized so that X′X is a non-singular correlation matrix and X′Y is the vector of correlations between X and Y. This paper is concerned with data exhibiting multicollinearity, which leads to a high MSE for the OLS estimator $\hat{\beta} = (X'X)^{-1}X'Y$, meaning that $\hat{\beta}$ is an unreliable estimator of β.

Let Λ and T be the matrices of eigenvalues and eigenvectors of X′X, respectively, satisfying T′X′XT = Λ = diag(λ1, λ2, …, λp), where λi is the ith eigenvalue of X′X and T′T = TT′ = Ip. We then obtain the equivalent model

$$Y = Z\alpha + \varepsilon \tag{2}$$

where Z = XT, which implies Z′Z = Λ, and α = T′β (see Montgomery et al., 2006). The OLS estimator of α is given by

$$\hat{\alpha} = \Lambda^{-1} Z'Y \tag{3}$$

Therefore, the OLS estimator of β is given by $\hat{\beta} = T\hat{\alpha}$.
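The canonical transformation above can be sketched numerically. The following is a minimal illustration in Python with NumPy, not code from the article; the function name `canonical_ols` and the toy data are hypothetical and serve only to show that the estimate obtained in canonical coordinates maps back to the usual OLS estimate.

```python
import numpy as np

def canonical_ols(X, y):
    """OLS computed in the canonical (eigenvector) coordinates of X'X."""
    lam, T = np.linalg.eigh(X.T @ X)   # T' X'X T = diag(lam), T orthogonal
    Z = X @ T                          # canonical regressors, Z'Z = diag(lam)
    alpha_hat = (Z.T @ y) / lam        # Lambda^{-1} Z'y (Lambda is diagonal)
    beta_hat = T @ alpha_hat           # back-transform: beta = T alpha
    return lam, T, alpha_hat, beta_hat

# Hypothetical toy data, used only to exercise the function.
rng = np.random.default_rng(0)
X = rng.standard_normal((30, 3))
y = X @ np.array([2.0, 3.0, 5.0]) + rng.standard_normal(30)
lam, T, alpha_hat, beta_hat = canonical_ols(X, y)
```

Because T is orthogonal, `beta_hat` agrees with a direct least-squares solve on `X` and `y`; the canonical form is convenient mainly because Z′Z is diagonal, so each component can be shrunk separately.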

2.1 Generalized ridge estimator (GRR)

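The GRR estimator of α is defined by

$$\hat{\alpha}_{GR} = (I - KA^{-1})\hat{\alpha} = A^{-1}Z'Y \tag{4}$$

where $\hat{\alpha}$ is the OLS estimator of α, K = diag(k1, k2, …, kp), ki ⩾ 0, i = 1, 2, …, p, are the ridge parameters for the individual regressors and A = Λ + K.

Hence the GRR estimator of β is $\hat{\beta}_{GR} = T\hat{\alpha}_{GR}$, and the mean squared error of $\hat{\alpha}_{GR}$ is

$$\mathrm{MSE}(\hat{\alpha}_{GR}) = \sigma^2 \sum_{i=1}^{p} \frac{\lambda_i}{(\lambda_i + k_i)^2} + \sum_{i=1}^{p} \frac{k_i^2 \alpha_i^2}{(\lambda_i + k_i)^2} \tag{5}$$

In the case of GRR, various methods are available in the literature for determining a separate ridge parameter for each regressor. The well-known methods used in this study are given below.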

- (1) Hoerl and Kennard (1970a) proposed the ridge parameter

  $$k_{i(HK)} = \frac{\hat{\sigma}^2}{\hat{\alpha}_i^2} \tag{6}$$

- (2) Nomura (1988) proposed a ridge parameter given by

  (7)

- (3) Troskie and Chalton (1996) proposed a ridge parameter given by

  (8)

- (4) Firinguetti (1999) proposed a ridge parameter given by

  (9)

- (5) Batah et al. (2008) proposed a ridge parameter given by

  (10)

where $\hat{\alpha}_i$ is the ith element of $\hat{\alpha}$ and $\hat{\sigma}^2$ is the OLS estimator of σ², i.e., the residual mean square of the OLS fit.
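As an illustration of how a generalized ridge fit proceeds with the Hoerl and Kennard parameter of Equation (6), here is a minimal Python sketch. It makes two assumptions that are not taken from the article: the error variance is estimated as the residual sum of squares divided by n − p, and the function names `grr_fit` and `grr_mse` are hypothetical. The MSE function uses the standard variance-plus-squared-bias expression for a generalized ridge estimator in canonical form.

```python
import numpy as np

def grr_fit(X, y):
    """Generalized ridge fit with k_i = sigma2_hat / alpha_hat_i**2."""
    n, p = X.shape
    lam, T = np.linalg.eigh(X.T @ X)
    Z = X @ T
    alpha_hat = (Z.T @ y) / lam                 # OLS in canonical form
    resid = y - Z @ alpha_hat
    sigma2_hat = (resid @ resid) / (n - p)      # assumed divisor n - p
    k = sigma2_hat / alpha_hat ** 2             # one ridge parameter per component
    alpha_gr = (Z.T @ y) / (lam + k)            # (Lambda + K)^{-1} Z'y
    return lam, alpha_hat, alpha_gr, k, T @ alpha_gr

def grr_mse(lam, k, alpha, sigma2):
    """Total variance plus squared bias of the generalized ridge estimator."""
    variance = sigma2 * np.sum(lam / (lam + k) ** 2)
    bias_sq = np.sum((k * alpha) ** 2 / (lam + k) ** 2)
    return variance + bias_sq

# Hypothetical toy data.
rng = np.random.default_rng(1)
X = rng.standard_normal((40, 4))
y = X @ np.array([2.0, 3.0, 5.0, 1.0]) + rng.standard_normal(40)
lam, alpha_hat, alpha_gr, k, beta_gr = grr_fit(X, y)
```

Each component of `alpha_gr` equals the OLS component multiplied by λi/(λi + ki), so components with small eigenvalues or small estimated coefficients are shrunk the most.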

2.2 Ordinary ridge estimator (ORR)

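Setting k1 = k2 = … = kp = k, with k ⩾ 0, the ordinary ridge regression (ORR) estimator of β is

$$\hat{\beta}_R = (X'X + kI)^{-1}X'Y \tag{11}$$

and the mean squared error of $\hat{\beta}_R$ is

$$\mathrm{MSE}(\hat{\beta}_R) = \sigma^2 \sum_{i=1}^{p} \frac{\lambda_i}{(\lambda_i + k)^2} + k^2 \sum_{i=1}^{p} \frac{\alpha_i^2}{(\lambda_i + k)^2} \tag{12}$$

When k = 0 in Equation (12), the MSE of the OLS estimator of α is recovered. Hence

$$\mathrm{MSE}(\hat{\alpha}) = \sigma^2 \sum_{i=1}^{p} \frac{1}{\lambda_i}$$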

Hoerl et al. (1975) suggested choosing a value of k small enough that the mean squared error of the ridge estimator is less than that of the OLS estimator.
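The trade-off described here can be checked numerically. The sketch below is illustrative only: `orr_estimator` and `orr_mse` are hypothetical names, the MSE function uses the standard expression for the ordinary ridge estimator (total variance plus squared bias), and the eigenvalues and coefficients are made-up values chosen to mimic an ill-conditioned design.

```python
import numpy as np

def orr_estimator(X, y, k):
    """Ordinary ridge estimator (X'X + kI)^{-1} X'y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + k * np.eye(p), X.T @ y)

def orr_mse(lam, alpha, sigma2, k):
    """MSE of the ordinary ridge estimator for eigenvalues lam and canonical coefficients alpha."""
    lam = np.asarray(lam, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    variance = sigma2 * np.sum(lam / (lam + k) ** 2)
    bias_sq = k ** 2 * np.sum(alpha ** 2 / (lam + k) ** 2)
    return variance + bias_sq

# Hypothetical ill-conditioned case: one eigenvalue close to zero.
lam = np.array([1.9, 0.1])
alpha = np.array([1.0, 1.0])
mse_ols = orr_mse(lam, alpha, 1.0, 0.0)     # k = 0 gives sigma^2 * sum(1 / lam)
mse_ridge = orr_mse(lam, alpha, 1.0, 0.1)   # a small positive k
```

In this made-up case the small eigenvalue dominates the OLS MSE, and a modest k lowers the variance term by far more than it adds in squared bias, so `mse_ridge` is smaller than `mse_ols`.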

In case of ORR also, many researchers have suggested different ways of estimating the ridge parameter. Some of the well known methods for choosing the ridge parameter value are listed below.

| (1) |

| ||||

| (2) |

| ||||

| (3) |

| ||||

| (4) |

| ||||

| (5) |

| ||||

| (6) |

| ||||

| (7) |

| ||||

| (8) |

| ||||

| (9) |

| ||||

| (10) |

| ||||

| (11) |

| ||||

| (12) |

| ||||

| (13) |

| ||||

| (14) |

| ||||

| (15) |

| ||||

3 Proposed ridge parameter

Hoerl and Kennard (1970a) showed that the bias and the total variance of the parameter estimates are, respectively, monotonically increasing and decreasing functions of the ridge parameter. They also suggested the value ki(HK) for the ith ridge parameter in GRR, given in Equation (6). Hoerl et al. (1975) suggested a modification of ki(HK) for use in ORR, which performs fairly well. Lawless and Wang (1976) modified ki(HK) by multiplying the denominator of Equation (6) by the ith eigenvalue λi, so that the adjustment depends on the strength of the multicollinearity. Their estimator reduces the bias but results in a greater total variance of the parameter estimates.

In this article, we suggest an estimator whose bias differs only a little from that of the estimator given by Hoerl et al. (1975) and whose total variance is substantially lower than that of the estimator given by Lawless and Wang (1976), thereby improving the mean squared error of estimation and prediction. The modification multiplies the denominator of Equation (6) by λmax/2. The suggested estimator is

$$k_{i(AD)} = \frac{2\hat{\sigma}^2}{\lambda_{\max}\,\hat{\alpha}_i^2}, \quad i = 1, 2, \ldots, p \tag{28}$$

where λmax is the largest eigenvalue of X′X.
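To make the three related choices concrete, the sketch below computes them side by side. It reads the text literally: the Hoerl and Kennard value is σ̂²/α̂i², the Lawless and Wang variant multiplies that denominator by λi, and the proposed variant multiplies it by λmax/2. The function names and the input numbers are hypothetical and chosen only for easy arithmetic.

```python
import numpy as np

def k_hk(alpha_hat, sigma2_hat):
    """Hoerl-Kennard style parameter: sigma2_hat / alpha_hat_i**2."""
    return sigma2_hat / alpha_hat ** 2

def k_lw(lam, alpha_hat, sigma2_hat):
    """Denominator multiplied by the i-th eigenvalue, as described for Lawless and Wang."""
    return sigma2_hat / (lam * alpha_hat ** 2)

def k_ad(lam, alpha_hat, sigma2_hat):
    """Denominator multiplied by lam_max / 2, as described for the proposed parameter."""
    return 2.0 * sigma2_hat / (lam.max() * alpha_hat ** 2)

# Hypothetical inputs.
lam = np.array([2.5, 1.0, 0.5])
alpha_hat = np.array([1.0, 2.0, 0.5])
sigma2_hat = 1.0

print(k_hk(alpha_hat, sigma2_hat))       # [1.   0.25 4.  ]
print(k_lw(lam, alpha_hat, sigma2_hat))  # [0.4  0.25 8.  ]
print(k_ad(lam, alpha_hat, sigma2_hat))  # [0.8 0.2 3.2]
```

Under this reading the proposed parameter is a constant multiple, 2/λmax, of the Hoerl and Kennard value, so all components are rescaled by the same factor, whereas the Lawless and Wang variant rescales each component by its own eigenvalue.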

As a result, the denominator of the alternative estimator given by Equation (28) is larger than that of Hoerl and Kennard (1970a) by a factor of λmax/2. Hence, we can write

This clearly indicates that our suggested estimator lies between the estimators given by Hoerl et al. (1975) and Lawless and Wang (1976). Kibria (2003) proposed new ridge parameters by modifying the quantity

. By adopting the algorithms outlined in Kibria (2003), we propose the following new methods for determining the ridge parameter k in the case of ORR:

(29)

(30)

(31)

(32)

where $\hat{\alpha}_i$ is the ith element of $\hat{\alpha}$ and $\hat{\sigma}^2$ is the OLS estimator of σ², i.e., the residual mean square of the OLS fit.

Result 1

Result 2

If λmax is close to ‘p’ then k4(AD) ≅ 2k14, and if λmax is close to ‘1’ then k4(AD) ≅ 2k1.

Hoerl et al. (1975) have shown that . Using this and the inequality from Result 1,

. Hence k4(AD) satisfies the upper bound on the ridge parameter stated by Hoerl and Kennard (1970a).

The proposed estimator is examined by means of a simulation study, which we present in the next section.

4 Performance of the proposed ridge parameter

In this section, we examine the performance of the ridge estimator using the proposed ridge parameters, in both ORR and GRR, against the other ridge parameters (k) reviewed in this article. The comparison is based on the ratio of the average MSE (AMSE) of the OLS estimator to the AMSE of the ridge estimator, computed for the proposed and the other ridge parameters. The performance of the new ridge estimators given in (28)–(32) is studied in two parts. In Part A, the proposed ridge estimators are evaluated by simulation for ORR. In Part B, a simulation study evaluates the proposed ridge estimator for GRR.

4.1 Part A

We consider the true model Y = Xβ + ε, where ε follows a normal distribution N(0, $\sigma^2 I_n$), and the explanatory variables are generated (see Batah et al., 2008) from

$$x_{ij} = (1 - \rho^2)^{1/2} u_{ij} + \rho\, u_{ip}, \quad i = 1, 2, \ldots, n, \; j = 1, 2, \ldots, p$$

where the uij are independent standard normal random numbers and ρ² is the correlation between xij and xij′ for j, j′ < p and j ≠ j′ (j, j′ = 1, 2, …, p). When j or j′ = p, the correlation is ρ. We consider p = 4 predictor variables and ρ = 0.9. The variables are standardized so that X′X is in correlation form, and the standardized X is used to generate Y with β = (2, 3, 5, 1)′. We simulated data with sample sizes n = 20, 50 and 100 and error variances σ² = 1, 5, 10 and 25. Ridge estimates are computed using the ridge parameters given in (13)–(27) and (29)–(32), and the MSE of each ridge estimator is obtained from Equation (12). The experiment is repeated 2000 times to obtain the AMSE. We first computed the AMSE ratios, AMSE(OLS)/AMSE(ridge), of the OLS estimator over the different ridge estimators for various values of the triplet (ρ, n, σ²) and report them in Table 1. We consider the method that leads to the maximum AMSE ratio to be the best from the MSE point of view.
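The simulation design of Part A can be sketched as follows. This is a simplified illustration under stated assumptions, not the article's code: the regressors are generated as x_ij = sqrt(1 − ρ²) u_ij + ρ u_ip, a form consistent with the correlations described above; the response is not standardized; σ² is estimated with divisor n − p; and a single ridge parameter is used, the Hoerl, Kennard and Baldwin choice k = p σ̂² / (α̂′α̂), written here from its standard form. All function names are hypothetical.

```python
import numpy as np

def generate_X(n, p, rho, rng):
    """Correlated regressors, centred and scaled so that X'X is a correlation matrix."""
    u = rng.standard_normal((n, p))
    X = np.sqrt(1.0 - rho ** 2) * u + rho * u[:, [p - 1]]   # assumed generation scheme
    X = X - X.mean(axis=0)
    return X / np.sqrt((X ** 2).sum(axis=0))

def orr_mse(lam, alpha, sigma2, k):
    """Variance plus squared bias of the ordinary ridge estimator."""
    return (sigma2 * np.sum(lam / (lam + k) ** 2)
            + k ** 2 * np.sum(alpha ** 2 / (lam + k) ** 2))

def amse_ratio(n, p, rho, sigma2, beta, reps, rng):
    """AMSE(OLS) / AMSE(ridge), averaging the theoretical MSE over replications."""
    total_ols = 0.0
    total_ridge = 0.0
    for _ in range(reps):
        X = generate_X(n, p, rho, rng)
        y = X @ beta + np.sqrt(sigma2) * rng.standard_normal(n)
        lam, T = np.linalg.eigh(X.T @ X)
        Z = X @ T
        alpha = T.T @ beta                       # true canonical coefficients
        alpha_hat = (Z.T @ y) / lam              # OLS estimate
        resid = y - Z @ alpha_hat
        sigma2_hat = (resid @ resid) / (n - p)   # assumed divisor n - p
        k = p * sigma2_hat / (alpha_hat @ alpha_hat)
        total_ols += orr_mse(lam, alpha, sigma2, 0.0)
        total_ridge += orr_mse(lam, alpha, sigma2, k)
    return total_ols / total_ridge

rng = np.random.default_rng(2024)
beta = np.array([2.0, 3.0, 5.0, 1.0])
# 200 replications keep the example fast; the study described above uses 2000.
ratio = amse_ratio(n=20, p=4, rho=0.9, sigma2=1.0, beta=beta, reps=200, rng=rng)
print(f"AMSE(OLS) / AMSE(ridge) = {ratio:.3f}")
```

A ratio above 1 means the ridge estimator has the smaller average MSE. Other ridge parameters can be compared by replacing the line that computes `k`, and the grid of (ρ, n, σ²) values can be covered by looping over calls to `amse_ratio`.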

Table 1 Ratio of AMSE of OLS over various ridge estimators for different ‘k’.

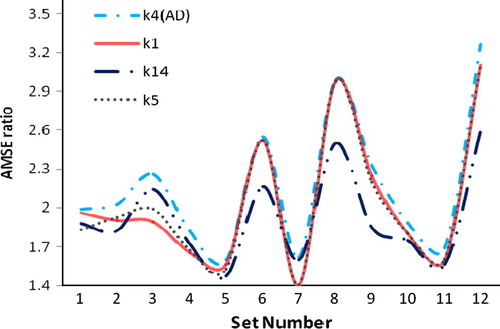

Figure 1 presents the same values reported in Table 1. Only the AMSE ratios for k1, k5, k14 and k4(AD) are shown, because the values for the remaining choices of k are less important for the comparison. The input values are n, ρ and σ², ordered by increasing value: for a fixed value of ρ the value of n changes, and for fixed values of (ρ, n) the value of σ² changes. There are 12 sets of (ρ, n, σ²) values, arranged as (0.9, 20, 1), (0.9, 20, 5), …, (0.9, 100, 25) and numbered 1, 2, …, 12, respectively.

Figure 1 Ratio of AMSE of OLS over various ridge estimators for different ‘k’ (p = 4, β = (2,3,5,1)′ and ρ = 0.9).

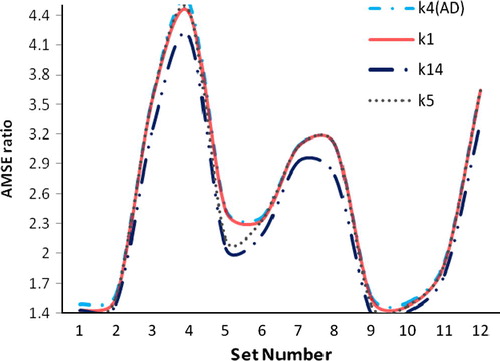

The same procedure is carried out for another choice, p = 3 and β = (3, 1, 5)′, and the resulting AMSE ratios are shown in Figure 2.

Figure 2 Ratio of AMSE of OLS over various ridge estimators for different ‘k’ (p = 3, β = (3,1,5)′ and ρ = 0.9).

From Table 1 and Figures 1 and 2, we observe that the proposed ridge parameters k1(AD), k2(AD), k3(AD) and k4(AD) perform better than OLS. In particular, k4(AD) performs as well as, or slightly better than, the ridge parameters proposed by Hoerl et al. (1975) and Dorugade and Kashid (2010), and it performs better than the other ridge parameters reviewed in this article for all combinations of correlation between predictors (ρ), sample size (n) and variance of the error term (σ²) used in this simulation study.

4.2 Part B

Here we evaluate the performance of the proposed ridge parameter in the case of GRR. We generate data exhibiting multicollinearity using the procedure discussed in Part A, generating Y with β = (2, 3, 5, 1)′. We consider p = 4 predictor variables and ρ = 0.9, sample sizes n = 20, 50 and 100, and error variances σ² = 1, 5, 10 and 25. Generalized ridge estimators are computed using the ridge parameters given in (6)–(10) and Equation (28), and the MSE of each estimator is obtained from Equation (5). The experiment is repeated 2000 times to obtain the AMSE.

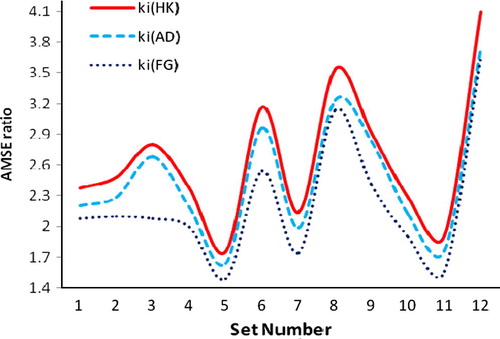

We computed the AMSE ratios, AMSE(OLS)/AMSE(ridge), of the OLS estimator over the different estimators for various values of the triplet (ρ, n, σ²). These ratios are reported in Table 2; only the AMSE ratios for ki(HK), ki(FG) and ki(AD) are shown in Figure 3.

Figure 3 Ratio of AMSE of OLS over various ridge estimators for different ‘ki’ (p = 4, β = (2,3,5,1)′ and ρ = 0.9).

Table 2 Ratio of AMSE of OLS over various ridge estimators for different ‘ki’.

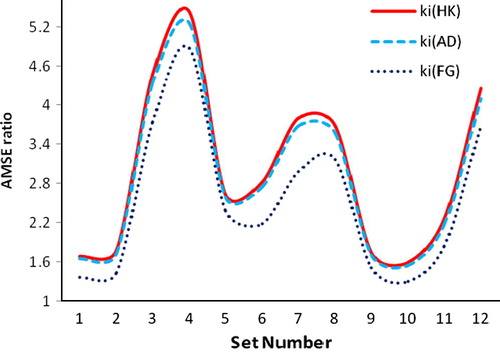

The same procedure is carried out for another choice, p = 3 and β = (3, 1, 5)′, and the resulting AMSE ratios are shown in Figure 4.

Figure 4 Ratio of AMSE of OLS over various ridge estimators for different ‘ki’ (p = 3, β = (3,1,5)′ and ρ = 0.9).

From Table 2 and Figures 3 and 4, we conclude that the proposed ridge parameter ki(AD) and the ridge parameter ki(HK) proposed by Hoerl and Kennard (1970a) perform equivalently, whereas ki(AD) performs better than OLS, ki(HMO), ki(FG), ki(TC) and ki(F) for all combinations of correlation between predictors (ρ), sample size (n) and variance of the error term (σ²) used in this simulation study.

5 Conclusion

In this article we have proposed a new method for estimating the ridge parameter in the presence of multicollinearity. The performance of the proposed ridge parameter is evaluated through a simulation study for different combinations of correlation between predictors (ρ), number of explanatory variables (p), sample size (n) and variance of the error term (σ²). The evaluation compares the AMSE ratios of the OLS estimator over the proposed estimator and over the other estimators reviewed in this article. We found that the proposed estimator performs satisfactorily relative to the other estimators in the presence of multicollinearity.

Acknowledgments

The author is very grateful to the reviewers and the editor for their detailed comments and constructive suggestions, which resulted in the present version. The present studies were supported in part by University Grants Commission, India, Project No. F.No.23-1743/10 (WRO), dated 22.9.10.

Notes

Peer review under responsibility of University of Bahrain.

References

- Y. Al-Hassan. Performance of new ridge regression estimators. Journal of the Association of Arab Universities for Basic and Applied Science 9, 2010, 23–26.

- M.A. Alkhamisi, G. Shukur. A Monte Carlo study of recent ridge parameters. Communications in Statistics – Simulation and Computation 36 (3), 2007, 535–547.

- F.S. Batah, T. Ramnathan, S.D. Gore. The efficiency of modified jackknife and ridge type regression estimators: a comparison. Surveys in Mathematics and its Applications 24 (2), 2008, 157–174.

- J.P. Buonaccorsi. A modified estimating equation approach to correcting for measurement error in regression. Biometrika 83, 1996, 433–440.

- A.V. Dorugade, D.N. Kashid. Alternative method for choosing ridge parameter for regression. International Journal of Applied Mathematical Sciences 4 (9), 2010, 447–456.

- L. Firinguetti. A generalized ridge regression estimator and its finite sample properties. Communications in Statistics – Theory and Methods 28 (5), 1999, 1217–1229.

- G.H. Golub, M. Heath, G. Wahba. Generalized cross-validation as a method for choosing a good ridge parameter. Technometrics 21 (2), 1979, 215–223.

- R.F. Gunst, R.L. Mason. Biased estimation in regression: an evaluation using mean squared error. Journal of the American Statistical Association 72, 1977, 616–628.

- M.S. Haq, B.M.G. Kibria. A shrinkage estimator for the restricted linear regression model: ridge regression approach. Journal of Applied Statistical Science 3 (4), 1996, 301–316.

- R.R. Hocking, F.M. Speed, M.J. Lynn. A class of biased estimators in linear regression. Technometrics 18 (4), 1976, 425–437.

- A.E. Hoerl, R.W. Kennard. Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12, 1970, 55–67.

- A.E. Hoerl, R.W. Kennard, K.F. Baldwin. Ridge regression: some simulations. Communications in Statistics 4, 1975, 105–123.

- G. Khalaf, G. Shukur. Choosing ridge parameter for regression problem. Communications in Statistics – Theory and Methods 34, 2005, 1177–1182.

- B.M. Kibria. Performance of some new ridge regression estimators. Communications in Statistics – Simulation and Computation 32 (2), 2003, 419–435.

- J.F. Lawless, P. Wang. A simulation study of ridge and other regression estimators. Communications in Statistics – Theory and Methods 14, 1976, 1589–1604.

- J.F. Lawless. Ridge and related estimation procedure. Communications in Statistics – Theory and Methods 7 (2), 1978, 139–164.

- S. Mardikyan, E. Cetin. Efficient choice of biasing constant for ridge regression. International Journal of Contemporary Mathematical Sciences 3 (11), 2008, 527–547.

- Masuo Nomura. On the almost unbiased ridge regression estimation. Communications in Statistics – Simulation and Computation 17 (3), 1988, 729–743.

- G.C. McDonald, D.I. Galarneau. A Monte Carlo evaluation of some ridge-type estimators. Journal of the American Statistical Association 70 (350), 1975, 407–412.

- D.C. Montgomery, E.A. Peck, G.G. Vining. Introduction to Linear Regression Analysis. 2006. John Wiley and Sons.

- L. Nordberg. A procedure for determination of a good ridge parameter in linear regression. Communications in Statistics A11, 1982, 285–309.

- A. Norliza, H.A. Maizah, A. Robin. A comparative study on some methods for handling multicollinearity problems. Mathematika 22 (2), 2006, 109–119.

- G.R. Pasha, M.A. Shah. Application of ridge regression to multicollinear data. Journal of Research (Science) 15 (1), 2004, 97–106.

- A.K. Saleh, B.M. Kibria. Performances of some new preliminary test ridge regression estimators and their properties. Communications in Statistics – Theory and Methods 22, 1993, 2747–2764.

- G.W. Stephen, J.P. Christopher. Generalized ridge regression and a generalization of the Cp statistic. Journal of Applied Statistics 28 (7), 2001, 911–922.

- C.G. Troskie, D.O. Chalton. A Bayesian estimate for the constants in ridge regression. South African Statistical Journal 30, 1996, 119–137.