Abstract

The qualitative behavior of the differential equation $\dot{x} = f(t, x)$, where x is real and f is periodic in t, is determined by the attracting periodic solutions. This article gives an exposition of a method of Ilyashenko that uses complex analysis to determine an upper bound on the number of periodic solutions. The method is then applied to a well-known model of a single neuron or pool of neurons.

1 Introduction

Many real-valued quantities can be modeled at time t by a differential equation of the form $\dot{x} = f(t, x)$. A simple example is logistic population growth with periodic harvesting [Citation3]. If f(t, x) depends only on x, the equation is called autonomous. If the time dependence has period T, then interest focuses on the periodic solutions with period T, because these solutions include the attractors that exhibit the long-term behavior of the system. For small periodic perturbations of an autonomous equation, general conclusions can be drawn about the number of periodic solutions. For one such method, see [Citation3] and [Citation11]. However, for large perturbations, until recently there was no general method for placing upper bounds on that number.

Yulij Ilyashenko has found such upper bounds by using one-variable complex analysis, showing once again the power of complex analysis in real variable problems. In [Citation15, Citation17], his approach is developed and then applied to special cases of Hilbert’s still partly unsolved sixteenth problem. We hope to encourage wider knowledge and broader application of this lovely work.

This article begins with an exposition of Ilyashenko’s method, continues with a description of a well-known neural model [25, pp. 78–80], and then applies Ilyashenko’s method to the neural model. The elements of Ilyashenko’s method are summarized after Theorem 2 in Section 3. In [Citation15], Ilyashenko applied his method to functions f(t, x) that are polynomial in x. We show how it also can be applied to transcendental functions such as those used in the neural model. The article concludes with questions and directions for further research. Those primarily interested in the neural application can begin with Section 4 and refer as necessary to previous sections.

After an introduction to differential equations and to complex variables, students may wish to attempt some of the problems posed in this article. For example, the exercise suggested after Proposition 10 (Section 6) only uses complex variables. Some special cases of Question 2 (Section 7) involve patching together solutions of first-order linear ordinary differential equations. Furthermore, methods involving nullclines (Section 4) and computer-assisted numerical computation can be used in the search for periodic solutions.

2 The Poincaré Map, Jensen’s Formula, and the Poincaré Metric

This section discusses the Poincaré map, Jensen’s formula, and the Poincaré metric. The first is central to the study of periodically forced differential equations. The other two topics can be approached after an undergraduate course in complex analysis. They are used in Section 3 to develop Ilyashenko’s method. As is common, in describing functions on subsets of the complex numbers, the terms analytic, complex analytic, and holomorphic will be used synonymously.

2.1 The Poincaré map

Consider a periodically forced differential equation $\dot{x} = f(t, x)$, where for all t, $f(t + T, x) = f(t, x)$. For convenience, we assume that the period T is 1. Therefore, a periodic solution is a solution x(t) of the differential equation such that for all t, $x(t + 1) = x(t)$. The time-one map P(x), usually referred to as the Poincaré map, is defined as follows [3, pp. 209–211], [11, p. 116]. Suppose x(t) is a solution with initial condition $x(0) = x_0$. Then $P(x_0) = x(1)$, supposing that x(1) is defined. We will refer to $F(x) = P(x) - x$ as the displacement map. Observe that periodic solutions of the differential equation are in one-to-one correspondence with fixed points of the Poincaré map P(x) and thus with zeros of the displacement map F(x). For example, the initial value problem $\dot{x} = x$ with $x(0) = x_0$ has the solution $x(t) = x_0 e^{t}$ with $x(1) = e x_0$. The Poincaré map is $P(x) = ex$ and the displacement map is $F(x) = (e - 1)x$. The only periodic solution is x(t) = 0, which corresponds to the fixed point x = 0 of the Poincaré map.
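The time-one map can also be approximated numerically, which is useful when no closed-form solution is available. The following is a minimal sketch, not part of the original exposition: it integrates the equation over one period with the classical fourth-order Runge–Kutta scheme and checks the worked example, whose vector field is f(t, x) = x. The function names `time_one_map` and `displacement` are hypothetical helpers introduced here for illustration.

```python
import math


def time_one_map(f, x0, steps=1000):
    """Approximate P(x0) = x(1) for x' = f(t, x), x(0) = x0, using classical RK4."""
    h = 1.0 / steps
    t, x = 0.0, x0
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h * k1 / 2)
        k3 = f(t + h / 2, x + h * k2 / 2)
        k4 = f(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return x


def displacement(f, x0, steps=1000):
    """Approximate the displacement F(x0) = P(x0) - x0."""
    return time_one_map(f, x0, steps) - x0


def linear_field(t, x):
    """Right-hand side of the worked example x' = x."""
    return x


p_half = time_one_map(linear_field, 0.5)       # should be close to e * 0.5
f_zero = displacement(linear_field, 0.0)       # the fixed point x = 0
f_half = displacement(linear_field, 0.5)       # should be close to (e - 1) * 0.5
```

Zeros of `displacement` correspond to initial values of periodic solutions, so a root finder applied to it gives a numerical search for periodic solutions.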

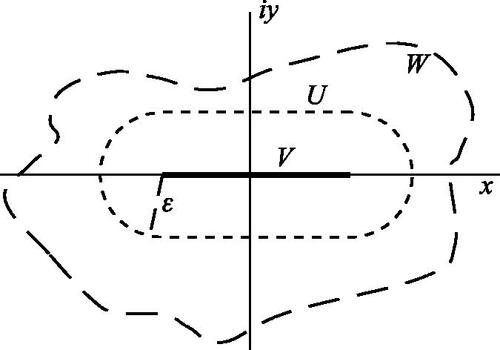

Let , where V is a finite real closed interval and W is an open neighborhood of V in the complex numbers

. Suppose the continuous function

is complex analytic for all fixed t and that f(t, z) restricts on

to a function f(t, x) determining a real-valued differential equation

. If the Poincaré map P(x) and thus the displacement map F(x) are defined for all x in V, it follows that the displacement map F(z) for the differential equation

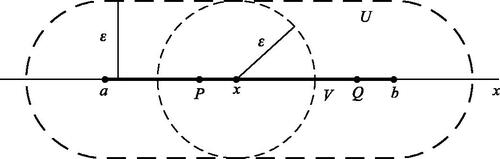

extends F(x) and that F(z) is defined and analytic on some ϵ-neighborhood U of V in

[7, p. 36], [22, p. 44] (see ). Ilyashenko applies to the displacement map F(z) his general theorem that gives an upper bound for the number of zeros on V of any function that is complex analytic in an ϵ-neighborhood U of V in

(Theorem 2).

2.2 Jensen’s formula

Ilyashenko’s method is based on a novel application of Jensen’s formula, a result that was published in 1899 in Acta Mathematica as a letter from Jensen to Mittag-Leffler, the founder and editor of that distinguished journal [Citation19]. This formula relates the position of the zeros of a function within a disk to the logarithm of its absolute value on the boundary of the disk. Accounts of how the foundations of complex analysis were understood at the time are found in [Citation6, Citation10].

Johan Jensen was a Danish electrical engineer and amateur mathematician who never held an academic position. It is surprising and also gratifying that this outsider was able to conjecture and prove in a few lines an important result that had eluded some of the greatest mathematicians of the nineteenth century. We are informed in a biographical article on Jensen by Børge Jessen, himself a distinguished Danish mathematician, that Jensen was an admirer of Weierstrass [Citation20]. This is evident in Jensen’s own proof of his formula [Citation19], a proof based on power series methods. Such methods were preferred by Weierstrass and his followers. Jensen uses the term holomorphic, a term introduced by followers of Cauchy, but the term was sometimes also used by followers of Weierstrass [6, p. 718]. The result is stated and proved elegantly and correctly, as one would expect from Jessen’s remark that “his papers are patterns of exact and precise exposition” [Citation20]. There is a great charm to Jensen’s letter. He begins by addressing Monsieur le Professeur and then chattily notes his attendance at some lectures recently given by the addressee. There is a buoyant enthusiasm throughout, from the title that announces a new and important result, to the hopes expressed at the end that his result will enable progress to be made on the Riemann conjecture regarding the zeros of the zeta function. While these hopes were not realized, Jensen’s formula was used extensively in succeeding investigations of entire functions; that is, functions holomorphic on the whole complex plane [1, p. 208].

Jensen’s Formula ([1, p. 207], [28, p. 181])

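Suppose f is holomorphic in the closed disk $|z| \le \rho$ of radius ρ, f(0) is nonzero, and f has n zeros in the interior of the disk, counted with multiplicities, and these zeros occur at points $a_1, \ldots, a_n$ (see the corresponding figure). Then

$$\log |f(0)| = -\sum_{i=1}^{n} \log\frac{\rho}{|a_i|} + \frac{1}{2\pi}\int_0^{2\pi} \log\left|f(\rho e^{i\theta})\right|\,d\theta.$$

Proofs of Jensen’s formula rely on variations of the following procedure that cancels the zeros of f in the interior of the disk of radius ρ. Let $F(z) = f(z) \prod_{i=1}^{n} \frac{\rho^2 - \bar{a}_i z}{\rho (z - a_i)}$. Note that $|F(0)| = |f(0)| \prod_{i=1}^{n} \frac{\rho}{|a_i|}$. On the circle $|z| = \rho$, let $z = \rho e^{i\theta}$, and observe that for each term $\frac{\rho^2 - \bar{a}_i z}{\rho (z - a_i)}$ the numerator and denominator have the same absolute value and so $\left|\frac{\rho^2 - \bar{a}_i z}{\rho (z - a_i)}\right| = 1$. Therefore $|F(z)| = |f(z)|$ on the circle $|z| = \rho$. We then take a branch of the complex logarithm of F(z). Jensen works with a power series representation that he integrates term by term about the circle [Citation19], Saks and Zygmund directly apply the Cauchy integral theorem for a circle [Citation28], while Ahlfors uses the result that $\log |F(z)|$ is a well-defined harmonic function on $|z| \le \rho$ and thus satisfies the mean value property for such functions [1, p. 165], a property that he previously establishes using the Cauchy integral theorem.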
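Jensen’s formula is easy to test numerically. The sketch below is an illustration only: the cubic polynomial and its zeros are arbitrary choices made here, not taken from the article. It compares the mean of log |f| over the circle of radius ρ = 1, computed by a uniform Riemann sum, with the quantity log |f(0)| + Σ log(ρ/|a_i|), where the sum runs over the zeros inside the disk.

```python
import cmath
import math

# Hypothetical test function: a cubic with two zeros inside the unit disk
# and one zero outside it.
zeros = [0.5 + 0j, -0.3j, 2.0 + 0j]


def poly(z):
    """Evaluate the monic polynomial with the zeros listed above."""
    value = 1.0 + 0j
    for a in zeros:
        value *= z - a
    return value


def circle_mean_log_abs(f, rho, samples=4096):
    """Average of log|f| over equally spaced points on the circle |z| = rho."""
    total = 0.0
    for n in range(samples):
        z = rho * cmath.exp(2j * math.pi * n / samples)
        total += math.log(abs(f(z)))
    return total / samples


rho = 1.0
inside = [a for a in zeros if abs(a) < rho]

# Boundary side of Jensen's formula: (1 / 2 pi) * integral of log|f(rho e^{i theta})|.
boundary_mean = circle_mean_log_abs(poly, rho)

# Interior side: log|f(0)| plus the contribution of each zero inside the disk.
interior_side = math.log(abs(poly(0))) + sum(math.log(rho / abs(a)) for a in inside)
```

For this example both sides equal log 2, the contribution of the single zero outside the disk.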

Ilyashenko relies on the following corollary to Jensen’s formula. As there are some subtle points, a proof of the corollary is given below.

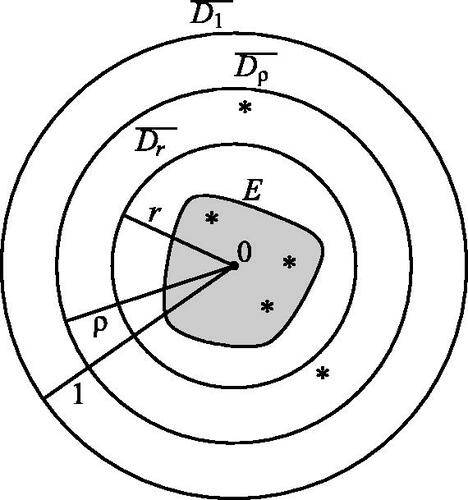

Corollary to Jensen’s Formula

Suppose f is holomorphic in the open disk D1 of radius 1 and continuous in the closed disk $\bar{D}_1$ of radius 1 and f(0) is nonzero. Let $M = \max_{|z| \le 1} |f(z)|$. Let E be a compact subset of the open disk D1 that is contained in a closed disk $\bar{D}_r$ of radius r centered at 0, with $0 < r < 1$. Let N be the number of zeros counted with multiplicity of f restricted to E (see Figure 3). Then:

Fig. 3 Corollary to Jensen’s formula: n = 5 zeros of f(z); N = 3 zeros in compact subset E of $\bar{D}_r$.

(a) $N \le \dfrac{\log(M/|f(0)|)}{\log(1/r)}$.

(b) If $m = \max_{z \in E} |f(z)|$ and $|f(0)| = m$, then $N \le \dfrac{\log(M/m)}{\log(1/r)}$.

Proof.

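(a) We can choose ρ arbitrarily close to 1 with $r < \rho < 1$. Then f is holomorphic on the closed disk of radius ρ. Suppose f has n zeros in the interior of the disk of radius ρ, counted with multiplicity, and these zeros occur at points $a_1, \ldots, a_n$. For all θ, $\rho e^{i\theta}$ is contained in $\bar{D}_1$ and thus $|f(\rho e^{i\theta})| \le M$. By Jensen’s formula,

$$\log|f(0)| + \sum_{i=1}^{n} \log\frac{\rho}{|a_i|} = \frac{1}{2\pi}\int_0^{2\pi} \log\left|f(\rho e^{i\theta})\right|\,d\theta \le \log M$$

and therefore $\sum_{i=1}^{n} \log\frac{\rho}{|a_i|} \le \log\frac{M}{|f(0)|}$. Every term of this sum is nonnegative because $|a_i| < \rho$. For each zero $a_i$ of f that is contained in E, we have $|a_i| \le r$ and thus $\log\frac{\rho}{|a_i|} \ge \log\frac{\rho}{r}$. So $N \log\frac{\rho}{r} \le \log\frac{M}{|f(0)|}$, for all ρ with $r < \rho < 1$. Letting ρ approach 1, we obtain the desired conclusion.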

(b) Part (b) follows from part (a) by assuming that $|f(0)| = m$. □

2.3 Poincaré metric

The Poincaré metric is well-suited to studying complex analytic functions on the open unit disk D1. A detailed treatment can be found in [Citation21].

For the Poincaré metric, a differentiable curve C in the disk D1, given by $z(t)$ with $a \le t \le b$, has length determined by the integral

$$\int_a^b \frac{2\,|z'(t)|}{1 - |z(t)|^2}\,dt,$$

an integral that is often written invariantly as $\int_C \frac{2\,|dz|}{1 - |z|^2}$. The distance $d_{D_1}(z_1, z_2)$ between two points in D1 is defined by the infimum of the lengths of all curves connecting z1 and z2. The term Poincaré metric refers both to the distance $d_{D_1}(z_1, z_2)$ and to the function $\Delta_{D_1}(z) = \frac{2}{1 - |z|^2}$. This function is sometimes called the metric density. Note that when defining the Poincaré metric some writers such as those of [Citation21] divide by 2 the distance function and the metric density that are defined above.

The curve-lengths and distances are invariant under the fractional linear transformations that map the disk D1 homeomorphically to itself. These fractional linear transformations are the isometries of the Poincaré metric.
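The invariance can be checked numerically. The sketch below assumes the normalization with metric density 2/(1 − |z|²), for which the distance has the standard closed form d(z1, z2) = 2 artanh(|z1 − z2| / |1 − z̄1 z2|). That closed form is a well-known fact supplied here and is not stated in the text above. The helper names and the sample points are hypothetical choices for illustration.

```python
import cmath
import math


def poincare_distance(z1, z2):
    """Distance in the unit disk for the metric density 2 / (1 - |z|^2)."""
    return 2.0 * math.atanh(abs(z1 - z2) / abs(1 - z1.conjugate() * z2))


def disk_automorphism(a, alpha):
    """Fractional linear map z -> e^{i alpha} (z - a) / (1 - conj(a) z), with |a| < 1."""
    def transform(z):
        return cmath.exp(1j * alpha) * (z - a) / (1 - a.conjugate() * z)
    return transform


def radial_length(r, steps=20000):
    """Midpoint-rule value of the length integral of 2/(1 - x^2) along [0, r]."""
    h = r / steps
    return h * sum(2.0 / (1.0 - ((i + 0.5) * h) ** 2) for i in range(steps))


z1, z2 = 0.3 + 0.4j, -0.5 + 0.1j
T = disk_automorphism(0.2 - 0.6j, 1.1)

before = poincare_distance(z1, z2)
after = poincare_distance(T(z1), T(z2))   # unchanged if T is an isometry

# Along a radius the length integral gives log((1 + r) / (1 - r)).
radial = radial_length(0.6)
closed_form = math.log((1 + 0.6) / (1 - 0.6))
```

The last two values agree because a radial segment from the origin is a shortest path in this metric.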

By using the Riemann mapping theorem, the Poincaré metric can also be defined on any open set U properly contained in $\mathbb{C}$ provided that U is homeomorphic to D1. According to that justly celebrated theorem, the homeomorphism from U to D1 can be chosen to be a complex analytic function $\psi: U \to D_1$. The distance $d_U(z_1, z_2)$ between two points in U can then be defined as $d_{D_1}(\psi(z_1), \psi(z_2))$ using the previous definition of the Poincaré metric on D1 (see the corresponding figure). The metric on U is well-defined because any two such complex analytic homeomorphisms $\psi_1$ and $\psi_2$ from U to D1 differ by a fractional linear transformation T of D1 (that is, $\psi_2 = T \circ \psi_1$), and, as mentioned above, such transformations T are isometries of the Poincaré metric on D1. Similarly, the Riemann map $\psi$ from U to D1 determines a positive real metric density $\Delta_U(z) = \frac{2\,|\psi'(z)|}{1 - |\psi(z)|^2}$ at each point z in U and the length of any curve C in U is given by $\int_C \Delta_U(z)\,|dz|$ [Citation21]. If the curve is given parametrically by $z(t)$ with $a \le t \le b$, then the length of C is determined by the integral $\int_a^b \Delta_U(z(t))\,|z'(t)|\,dt$.

The inclusion principle is a fundamental property of the Poincaré metric. Let $d_U$ and $d_W$ be the Poincaré metrics as defined above on U and W, respectively, where U is contained in W, and let $\Delta_U$ and $\Delta_W$ be the respective metric densities. Then for all z1 and z2 in U, we have $d_U(z_1, z_2) \ge d_W(z_1, z_2)$. Intuitively z1 and z2 are closer to the boundary of U than they are to the boundary of W, so their Poincaré distance in U is greater than their Poincaré distance in W (see the corresponding figure). Similarly, at all points z in U, we have $\Delta_U(z) \ge \Delta_W(z)$.

The Poincaré metric on an open disk of radius ϵ is an important special case for what follows. For $U = D_\epsilon$, the open disk of radius ϵ centered at the origin, the Riemann map from U to D1 is defined by $\psi(z) = z/\epsilon$. So $\Delta_U(0) = 2/\epsilon$. Similarly, for any open disk U of radius ϵ centered at a point p in $\mathbb{C}$, we obtain $\Delta_U(p) = 2/\epsilon$.

3 Ilyashenko’s Theorems on Zeros and Periodic Solutions

In this section, we draw two theorems from Ilyashenko’s article [Citation15]. These results place upper bounds on the number of zeros of a complex analytic function f on a compact set K contained in a domain U homeomorphic to the open unit disk. The section concludes with an application of Ilyashenko’s method to a differential equation arising from Hilbert’s sixteenth problem. Formula (6) in [Citation15] should have a factor of 2 inside the exponential function and thus read . The appropriate changes have been made throughout this article including in the statement of Theorem 3.

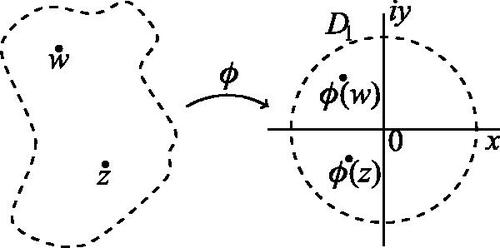

Theorem 1

([Citation15]). For some domain U, assume there is a function analytic on U that maps the closure

homeomorphically onto the closed disk

. These conditions are satisfied if U is the interior of a Jordan curve. Let f be holomorphic on U and continuous on its closure

, with

. Let K be a compact subset of U, with

such that

. Let N be the number of zeros counted with multiplicity of f(z) restricted to K and let σ be the diameter of K in the Poincaré metric on U. Then:

For any r < 1 such that

, we have

.

for

and

for

.

.

Proof.

(a) For a proof that the interior of a Jordan curve satisfies the hypothesis of Theorem 1, see [Citation30]. Choose a point P in K such that . By composing if necessary with a fractional linear transformation T on

, we can assume that

. The composition

is holomorphic on the open disk D1 of radius 1 and continuous on the closed disk

(see ) Then

, and N is the number of zeros counted with multiplicity of g restricted to

. The result follows by applying the corollary to Jensen’s formula to the function g and the compact set

.

(b) If σ = 0, it follows that K contains only one point and that N = 0. The theorem follows trivially. Therefore, we will assume that .

As above, choose a point P in K such that and

. Because

is an isometry between the Poincaré metric on U and the Poincaré metric on D1, σ is also the diameter of

in the Poincaré metric on D1. By the compactness of

, there is some closed disk with center 0 that contains

and has a minimal radius

with

; that is,

(). By part (a),

. It remains to determine

in terms of σ.

We observed above that 0 is in . Furthermore, by the definition of

, there is a point Q in

that lies on the boundary of the disk of radius

. Therefore, using the Poincaré metric on D1 and its isotropy property,

By elementary algebra and properties of the logarithm, for all with

, the inequality

is equivalent to the inequality

. Therefore,

.

The inequality implies

. Using calculus,

for all x,

. Therefore, for

, and so

.

The inequality implies

and thus

.

(c) Part (c) follows directly from part (b). ■
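For orientation, the chain of estimates behind part (b) can be sketched as follows. This is a reconstruction under stated assumptions, not necessarily the exact inequalities of [Citation15]: it assumes the metric density $2/(1 - |z|^2)$ on D1, writes $r_0$ for the minimal radius of a closed disk about 0 containing the image of K, takes Q to be a point of that image with $|Q| = r_0$, and assumes that part (a) supplies the bound $N \le \log(M/m)/\log(1/r_0)$.

```latex
\begin{align*}
\sigma \;\ge\; d_{D_1}(0, Q)
  &= \int_0^{r_0} \frac{2\,dx}{1 - x^2}
   = \log\frac{1 + r_0}{1 - r_0}
  \quad\Longrightarrow\quad
  r_0 \le \frac{e^{\sigma} - 1}{e^{\sigma} + 1}, \\[4pt]
\log\frac{1}{r_0}
  &\ge \log\frac{e^{\sigma} + 1}{e^{\sigma} - 1}
   = 2\,\operatorname{artanh}\!\left(e^{-\sigma}\right)
   \ge 2\,e^{-\sigma}, \\[4pt]
N &\le \frac{\log(M/m)}{\log(1/r_0)}
   \le \frac{e^{\sigma}}{2}\,\log\frac{M}{m}
   \le e^{\sigma}\,\log\frac{M}{m}.
\end{align*}
```

The middle line uses the elementary inequality $\operatorname{artanh}(y) \ge y$ for $0 \le y < 1$. The final line shows why an exponential in the Poincaré diameter σ appears in bounds of this type.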

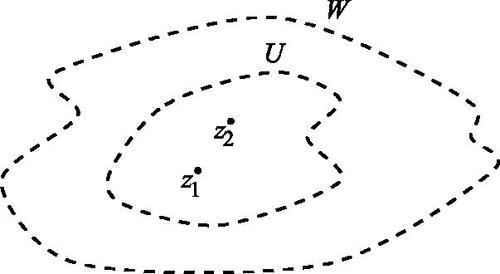

Theorem 2

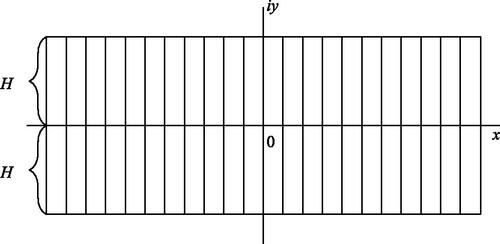

([Citation15]). Let the “stadium” U be the open ϵ-neighborhood of a finite closed real interval $V = [a, b]$ of length L in the usual Euclidean metric and with diameter σ in the Poincaré metric on U (see Figure 7). Let f be holomorphic on U and continuous on its closure $\bar{U}$, with $M = \max_{z \in \bar{U}} |f(z)|$ and $m = \max_{x \in V} |f(x)| > 0$. Let N be the number of zeros counted with multiplicity of f(z) restricted to V. Then:

Fig. 7 Theorem 2: The diameter σ of V in the Poincaré metric on U equals $d_U(P, Q)$ for some points P and Q in V. As shown in the proof of Theorem 2, $\sigma \le 2L/\epsilon$.

(a) $\sigma \le 2L/\epsilon$.

(b) $N \le e^{2L/\epsilon} \log(M/m)$.

Proof.

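(a) Since V is compact, the diameter σ of V equals the distance of two points P and Q in V (see the corresponding figure). This distance is at most equal to the Poincaré arclength along V between P and Q, which is at most equal to the length $L_U$ of V in the Poincaré metric on U. We have $L_U = \int_a^b \Delta_U(x)\,dx$, where $\Delta_U(x)$ is the metric density of U evaluated at x. For each x between a and b, let $D_x$ be the open disk of radius ϵ centered at x, and let $\Delta_{D_x}$ be the metric density for the Poincaré metric on $D_x$. Since $D_x$ is contained in U, by the inclusion principle we have $\Delta_U(z) \le \Delta_{D_x}(z)$ for all z in $D_x$. Letting z = x, we have $\Delta_U(x) \le \Delta_{D_x}(x) = 2/\epsilon$. Therefore,

$$L_U = \int_a^b \Delta_U(x)\,dx \le \int_a^b \frac{2}{\epsilon}\,dx = \frac{2L}{\epsilon},$$

where $L = b - a$ is the usual Euclidean length of the interval V. So $\sigma \le L_U \le 2L/\epsilon$.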

(b) Part (b) follows from part (a) and Theorem 1c. ■

3.1 Ilyashenko’s method

Consider a periodically forced differential equation $\dot{x} = f(t, x)$, where for all t, $f(t + T, x) = f(t, x)$. For convenience, we assume that the period T = 1. Upper bounds on the number of real periodic solutions of $\dot{x} = f(t, x)$ are determined by upper bounds on the number of zeros of the displacement map F. Using Theorem 2b, the method requires

a closed interval V containing the initial points of all real periodic solutions,

an ϵ-neighborhood U of V with closure $\bar{U}$ on which the displacement map F is defined and analytic,

the maximum M of $|F|$ on the closure of U, and

the maximum m of $|F|$ on V such that $m > 0$.
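The ingredients listed above can be combined in a short computation. The sketch below is illustrative only and rests on an assumption: it takes the bound in the form N ≤ e^{2L/ϵ} log(M/m), which is what results from combining an estimate σ ≤ 2L/ϵ for the Poincaré diameter with a zero count of the form e^σ log(M/m). The function name `stadium_zero_bound` and the toy data are hypothetical. The toy data come from the displacement map F(z) = (e − 1)z of the example in Section 2.1, with V = [−1, 1] and ϵ = 1.

```python
import math


def stadium_zero_bound(length, eps, big_m, small_m):
    """Assumed bound e^{2 L / eps} * log(M / m) on the number of zeros on V.

    length  -- Euclidean length L of the real interval V
    eps     -- radius of the neighborhood U of V
    big_m   -- maximum M of |F| on the closure of U
    small_m -- maximum m of |F| on V (must be positive)
    """
    if not (length > 0 and eps > 0 and 0 < small_m <= big_m):
        raise ValueError("need L > 0, eps > 0, and 0 < m <= M")
    sigma_bound = 2.0 * length / eps          # upper estimate for the Poincare diameter
    return math.exp(sigma_bound) * math.log(big_m / small_m)


# Toy data: F(z) = (e - 1) z on V = [-1, 1] with eps = 1.
# On the closed stadium the largest |z| is 2; on V the largest |x| is 1.
scale = math.e - 1.0
bound = stadium_zero_bound(length=2.0, eps=1.0, big_m=2.0 * scale, small_m=1.0 * scale)
```

Here the bound is about 37.8, while F has exactly one zero on V. The estimate is far from sharp in this example, which is consistent with the exponential character of the results discussed later in this section.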

Using this method, Ilyashenko has obtained results pertinent to aspects of Hilbert’s sixteenth problem. For a survey, see [Citation16]. Hilbert’s problem involves upper bounds on the number of limit cycles of polynomial planar differential equations, but a simplified version involves a one-dimensional equation of the form $\dot{x} = f(t, x)$ that is polynomial in x and periodic in t. Recall that a limit cycle is an attracting or repelling periodic solution. Charles Pugh inquired whether there was an upper bound on the number n of limit cycles that depended only on the degree d of the polynomial.

For d = 2, there are no more than two limit cycles. Furthermore, in the cubic case, for with the coefficient

of constant sign for all t, it was known that there could be no more than three limit cycles [Citation3]. An important negative result was obtained by Lins Neto who showed that for any degree

, and any n > 0, there is such an equation having at least n limit cycles [Citation23]. In particular, in the cubic case d = 3, the constant sign condition on

is necessary to obtain the upper bound n = 3. However, it remained to determine upper bounds on n using not only the degree d but also properties of the coefficient functions of the polynomial.

Theorem 3

([Citation15]). Suppose f(t, x) is of period 1 in t and polynomial of degree in x with the xd term having coefficient equal to 1 and where C > 1 is greater than the maximum of the absolute value of the coefficients of f. Then for the differential equation

there is some number N(C, d), depending only on d and C, such that the number of limit cycles is at most N(C, d). Moreover,

Stephen Smale has directed attention to the Liénard equation, another special case of Hilbert’s sixteenth problem [Citation29]. By reducing the problem to a one-dimensional periodically forced equation and using a technique more elaborate but similar to that in the previous theorem, Ilyashenko and Panov obtained the following result:

Theorem 4

([Citation17]). Consider the Liénard equation

where

, and n is odd. Then the number of limit cycles

.

The reason for the double or triple exponential character of these estimates will be suggested by the proofs of similar results in Section 5, results that apply Ilyashenko’s method to certain equations that arise from the neural model of Section 4 and for which f is transcendental in x.

Returning to the constant sign cubic case, suppose f(t, x) is qualitatively similar to functions with

of constant sign for all t; that is, for all t there is one inflection point and no more than two turning points, and either for all t,

, or for all t,

. It was conjectured that as in the constant sign cubic case, the differential equation

would have no more than three limit cycles. This conjecture was recently answered in the negative by Decker and Noonburg, with examples drawn from the neural model of Section 4 exhibiting an arbitrarily large number of limit cycles [Citation8, Citation9].

4 Periodic Solutions of a Neural Model

4.1 Sigmoid response functions

In the simplest models, a neuron gives an all-or-nothing response to a stimulus; that is, the response is gated by a translate of the unit step function. However, to use the resources of calculus and differential equations, it is preferable to have a response function S that rises smoothly from 0 to 1 as x passes from $-\infty$ to $+\infty$. We normalize the model as follows.

Definition.

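A sigmoid response function S is a continuously twice differentiable increasing function of the real numbers that is concave up on $(-\infty, 0)$ and concave down on $(0, \infty)$ with $\lim_{x \to -\infty} S(x) = 0$, $\lim_{x \to +\infty} S(x) = 1$, and $S'(0) = 1$.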

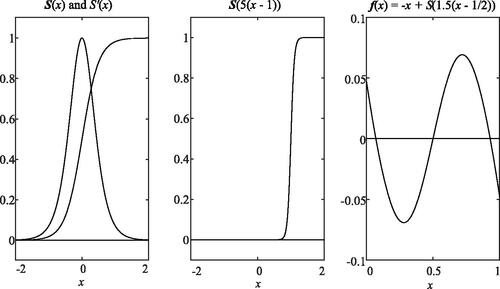

In neural modeling, sigmoid response functions are often implemented using elementary functions. Four common examples are

, where the error function

We plot together with its bell-shaped derivative

(see ).

For any number r and for any k > 0, a transformation of S(x) yields the function $g(x) = S(k(x - r))$, with $g'(r) = k$. The maximum value k of the derivative of this function g(x) occurs at its threshold x = r. For fixed r, as k approaches $+\infty$, we see that g(x) approaches the unit step function translated to the threshold x = r. This behavior is illustrated in the plot of

where

().

4.2 Neural models

The usual model for a single neuron is the four-dimensional Hodgkin–Huxley ordinary differential equation that led to the 1963 Nobel Prize in Physiology or Medicine for its discoverers. However, simpler models are easier to manipulate and may reveal important qualitative features of neural behavior. The two-dimensional Fitzhugh–Nagumo ordinary differential equation is such a simplification [Citation24]. A further reduction yields an experimentally tested one-dimensional model derived from electrophysiological considerations [18, pp. 37, 55, 89, 106]. The model considered in this article shares qualitative features with this electrophysiological model [Citation14], [25, pp. 78–80]. Furthermore, the model in this article has been applied successfully to the collective behavior of a single pool of neurons and of interacting pools of neurons including the much studied Wilson–Cowan oscillator [Citation26, Citation31]. It is also used in differentiable artificial neural networks [Citation13].

Let x represent the activation level of a neuron. Then x would decay exponentially if it were not for direct or indirect self-stimulation that is gated through a sigmoid response function S with threshold r and maximum derivative k. The resulting one-dimensional autonomous differential equation is $\dot{x} = -x + S(k(x - r))$. We first discuss the qualitative features of this autonomous equation. We then discuss the periodically forced equation where the threshold r varies periodically.

For any sigmoid response function S, if $f(x) = -x + S(k(x - r))$ is the right-hand side of the differential equation, we obtain $f'(x) = 0$ if and only if $S'(k(x - r)) = 1/k$. The bell-shaped properties of $S'$ imply that the equation $S'(k(x - r)) = 1/k$ has two solutions for k > 1, one solution for k = 1, and no solutions for k < 1. Therefore, f has at most three zeros, and the differential equation $\dot{x} = f(x)$ has at most three equilibria. As an example, we graph f using

, k = 1.5, and r = 1/2 ().

For the example above, at the equilibrium with the smallest x value and at the equilibrium with the largest x value, the derivative $f'(x) < 0$, implying that these equilibria are sinks or attractors; that is, solutions with initial conditions sufficiently close to such an equilibrium converge to the equilibrium as time t increases. At the middle equilibrium, the derivative $f'(x) > 0$, implying that this equilibrium is a source or repeller; that is, solutions with initial conditions sufficiently close to the equilibrium converge to the equilibrium as time t decreases and move away from the equilibrium as time t increases. It is this behavior that makes this equation a useful neural model, as neurons and neuron populations are often in either a low-activation state or in a high-activation state. Furthermore, because the derivative $f'(x)$ is nonzero at each of the three equilibria, it follows for sufficiently small perturbations of f and $f'$ that the equilibria persist, respectively, as attractors and as a repeller [Citation3], [5, pp. 84–89], [Citation11]. In the language of dynamical systems theory, an equilibrium at which $f'(x)$ is nonzero is called hyperbolic, and a differential equation $\dot{x} = f(x)$ such that the number and type of equilibria persist under sufficiently small perturbations of f and $f'$ is called structurally stable [Citation12].
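The classification of equilibria described above can be reproduced numerically. The sketch below is an illustration under assumptions: it takes the autonomous equation in the form x' = −x + S(k(x − r)) with k = 1.5 and r = 1/2, and it uses the logistic function S(x) = 1/(1 + e^{−4x}) as one possible sigmoid with S'(0) = 1. The response function used for the article's own plot may differ. The helper names are hypothetical.

```python
import math

K, R = 1.5, 0.5  # maximum derivative k and threshold r from the example


def S(x):
    """Logistic sigmoid normalized so that S'(0) = 1 (an assumed choice)."""
    return 1.0 / (1.0 + math.exp(-4.0 * x))


def S_prime(x):
    s = S(x)
    return 4.0 * s * (1.0 - s)


def f(x):
    """Right-hand side of the autonomous equation x' = -x + S(k (x - r))."""
    return -x + S(K * (x - R))


def f_prime(x):
    return -1.0 + K * S_prime(K * (x - R))


def bisect(g, lo, hi, tol=1e-12):
    """Shrink a bracket [lo, hi] across which g changes from <= 0 to > 0 or back."""
    g_lo_positive = g(lo) > 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if (g(mid) > 0) == g_lo_positive:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def equilibria(lo=-0.25, hi=1.25, intervals=1000):
    """Locate zeros of f by scanning a grid for changes in the sign test f > 0."""
    xs = [lo + (hi - lo) * i / intervals for i in range(intervals + 1)]
    return [bisect(f, a, b) for a, b in zip(xs, xs[1:]) if (f(a) > 0) != (f(b) > 0)]


roots = equilibria()
kinds = ["sink" if f_prime(x) < 0 else "source" for x in roots]
```

With these assumed choices the scan finds three equilibria, a sink near each end of the unit interval and a source at x = 1/2, matching the qualitative picture in the text.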

4.3 Periodic stimulation and periodic solutions

Abnormal oscillatory behavior of regions of the brain is characteristic both of epilepsy and Parkinson’s disease and is much studied and modeled [Citation2]. Periodic stimulation of parts of the brain is increasingly used in the treatment of those cases of epilepsy that do not respond to medication [Citation27]. Periodic stimulation of a single neuron or of a pool of neurons may come from other parts of the brain or from external sources. Periodic input into the one-variable neuron model is equivalent to a periodic change in the threshold r. Therefore, we consider the periodically stimulated nonautonomous one-dimensional ordinary differential equation model $\dot{x} = -x + S(k(x - r(t)))$, where S is a sigmoid response function, k > 0, and r is continuous with period 1.

To be specific at this point, we consider a periodic perturbation of the autonomous equation discussed above: , where

, k = 1.5,

, where

and r1 is continuous with period 1.

When the equilibria are hyperbolic, as in the unperturbed example, then for a sufficiently small magnitude perturbation r1 about the threshold r0, the three equilibria persist as three periodic solutions. The low and high excitation solutions are attractors and the middle solution is a repeller [Citation3, Citation11], [26, pp. 1595–1598].

More generally, consider for this example the nullclines $f(t, x) = 0$ and the generalized nullclines $\frac{\partial f}{\partial x}(t, x) = 0$ [3, pp. 205–208]. For the unperturbed example, the nullclines are three horizontal lines

, and

corresponding to the three equilibria x0, x1, and x2, while the generalized nullclines are two horizontal lines

and

corresponding to the two critical points c1 and c2. For small enough perturbations, the nullclines persist as curves

, and

in the (t, x)-plane that are not solutions of the differential equation, but rather indicate where the slope field of the differential equation is horizontal. If constants

and d2 exist with

, then the differential equation has at least three periodic solutions. Furthermore, if

for

and

and

for

, then there are precisely three periodic solutions, with the low and high excitation solutions attractors and the middle solution a repeller [Citation3, Citation11, Citation26].

These methods break down when the values of k and r become sufficiently large so that the nullclines are no longer separated by constants or no longer persist as three separate curves but rather have branches that join and separate in the interval . In such cases, the number of periodic solutions may be less than, equal to, or greater than three [Citation3, Citation11, Citation26].

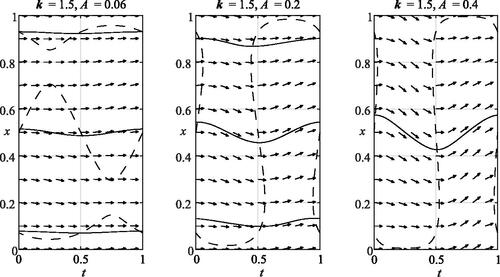

To be specific, consider the following example: , where

, k = 1.5,

, where

and

. As shown in Figure 9, for A = 0.06 the three nullclines are separated by constants and there are precisely three periodic solutions. For $A = 0.2$, the nullclines no longer persist as separate curves, but there are still precisely three periodic solutions. For A = 0.4, the nullclines no longer persist as separate curves and there is only one periodic solution.

Fig. 9 Nullclines (dashes), slope field, and periodic solutions (solid lines) for k = 1.5, r = 1/2, and A = 0.06, A = 0.2, and A = 0.4.
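A numerical search for periodic solutions of a forced equation of this kind can be sketched as follows. The sketch rests on assumptions that are not taken from the article: the logistic response function S(x) = 1/(1 + e^{−4x}) and the forcing r(t) = 1/2 + A sin(2πt) are illustrative choices, and the helper names are hypothetical. The code approximates the time-one map with the classical Runge–Kutta scheme and counts sign changes of the displacement on a grid of initial values in [0, 1]. Such a count is only a numerical indication of the number of periodic solutions, not a proof.

```python
import math


def S(x):
    """Logistic sigmoid with S'(0) = 1 (an assumed response function)."""
    return 1.0 / (1.0 + math.exp(-4.0 * x))


def make_field(k, r0, amplitude):
    """Return f(t, x) = -x + S(k (x - r(t))) with r(t) = r0 + amplitude * sin(2 pi t)."""
    def f(t, x):
        r = r0 + amplitude * math.sin(2.0 * math.pi * t)  # assumed forcing shape
        return -x + S(k * (x - r))
    return f


def time_one_map(f, x0, steps=200):
    """Approximate the Poincare map P(x0) = x(1) using classical RK4."""
    h = 1.0 / steps
    t, x = 0.0, x0
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h * k1 / 2)
        k3 = f(t + h / 2, x + h * k2 / 2)
        k4 = f(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return x


def count_sign_changes(f, intervals=400):
    """Count changes in the test F(x0) > 0 over a uniform grid of x0 in [0, 1]."""
    xs = [i / intervals for i in range(intervals + 1)]
    disp = [time_one_map(f, x) - x for x in xs]
    return sum(1 for a, b in zip(disp, disp[1:]) if (a > 0) != (b > 0))


autonomous_count = count_sign_changes(make_field(1.5, 0.5, 0.0))
forced_count = count_sign_changes(make_field(1.5, 0.5, 0.06))
```

With k = 1.5, threshold 1/2, and amplitude 0.06, the count under these assumed choices is expected to agree with the three periodic solutions described for the small-amplitude case. Larger amplitudes can be explored by changing the last argument of `make_field`.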

For the example above, the function f(t, x) seems qualitatively cubic-like (see the last paragraph of Section 3), that is, similar to a function with the coefficient

negative for all t, and it was known that differential equations of the form

with

of constant sign have no more than three periodic solutions [Citation3]. So it was conjectured that differential equations from the neural model of the form

also have no more than three periodic solutions.

This conjecture was answered in the negative by Decker and Noonburg who, using a careful bifurcation analysis, explored an example with five periodic solutions [Citation8]. Such an example is provided by the equation , with

, k = 3,

, A = 0.37, and

.

Furthermore, by first studying examples where and r(t) is piecewise linear, and then smoothing these examples, Decker and Noonburg demonstrated that for any n > 0, there exist k > 0 and a continuous periodic function r, such that there are at least n periodic solutions for the equation

[Citation9].

Many questions remain. One such is to find an upper bound N for the number of periodic solutions that is dependent on k and on S, but is independent of the perturbation r. In Section 5, we find an answer to such questions using complex analytic techniques developed by Yulij Ilyashenko.

5 Upper Bounds for Number of Periodic Solutions of Neural Models

Ilyashenko’s method finds upper bounds on the number of real periodic solutions of $\dot{x} = f(t, x)$ by finding upper bounds on the number of zeros of the displacement map F (see Section 2). The method requires (see Theorem 2)

a closed interval V containing the initial points of all real periodic solutions,

an ϵ-neighborhood U of V with closure $\bar{U}$ on which the displacement map F(z) is defined and analytic,

the maximum M of $|F|$ on the closure of U, and

the maximum m of $|F|$ on V such that $m > 0$.

For the periodically forced neuron of Section 4, these elements are established in Proposition 5. They are then used in Theorems 9 and 11 to obtain results on the number of real periodic solutions.

Recall that a function on a subset of $\mathbb{C}$ is defined to be complex analytic if it is complex analytic in some neighborhood of that subset [Citation1]. Note that F may fail to be defined at a point z0 in U if f is undefined at z0, or if f fails to be complex analytic at z0, or if the solution z(t) starting at z0 fails to be defined for all t less than or equal to the period of the differential equation.

Definition.

A complex sigmoid response function S is a sigmoid response function that is complex analytic on the real line.

Definition.

A complex sigmoid response function has (H, B) bounded derivative if $H > 0$, B > 1, $S'$ is complex analytic in the closed H-neighborhood of the real axis, and for all z in that H-neighborhood we have $|S'(z)| \le B$ (see the corresponding figure).

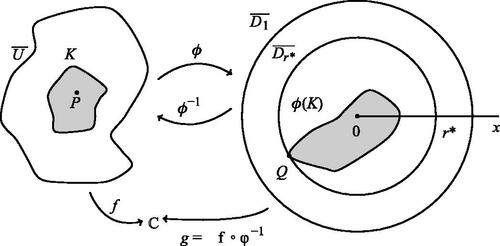

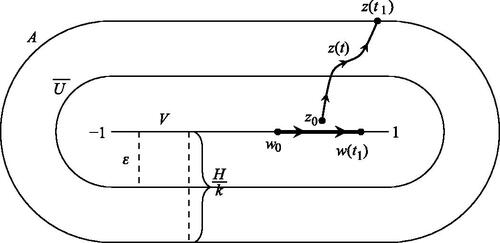

Proposition 5.

Let S be a complex sigmoid response function with (H, B) bounded derivative. Consider the differential equation , where

and r(t) is real-valued and continuous with period 1. Let V be the interval

. Suppose

, U is the ϵ-neighborhood of V, A is the closed

-neighborhood of V, P is the time-one (i.e., Poincaré) map,

is the displacement function,

, and

. Then:

V contains the initial points of all real periodic solutions of the differential equation.

On the closure of U, the Poincaré map P and the displacement function F are defined and analytic and P takes values in A.

.

.

Proof.

Note that the three lemmas included in the proof of Proposition 5 are only used for the proof of part (b).

(a) Consider a solution x(t) with initial condition x0. The bounds on S, $0 \le S(x) \le 1$, imply $f(t, x) > 0$ for x < 0 and $f(t, x) < 0$ for x > 1. If $x_0 < 0$, then there is a neighborhood of x0, such that, for all t, x(t) is increasing while in that neighborhood, showing that x(t) is not a periodic solution. Similarly, x(t) is not a periodic solution whenever $x_0 > 1$. Therefore, the closed unit interval and hence V contain all initial points for all periodic solutions.

Note that

and that, by the assumption

, A is a subset of the closed H-neighborhood of the real axis. Therefore the functions f(t, z) and

are defined for all (t, z) with z in A, as is the differential equation

. Suppose z0 is in the closure of U and let z(t) be the solution of

with initial condition

. We need to show that z(t) is defined and in A for all

(see ). Suppose not. We use the following three lemmas to derive the contradiction

. Lemma 7 is a corollary of Gronwall’s inequality and is much used in the theory of differential equations.

Lemma 6.

Let . For all fixed t and all points P and Q in A,

; i.e., for all fixed t, D is a Lipschitz constant in z for f(t, z) on A.

Proof.

Note that the maximum D exists since f is periodic in t and A is compact. Since A is convex, the straight-line path C from P to Q lies in A.

See [1, p. 104]. □
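The estimate behind this proof is the standard path-integral bound. As a sketch, assume that D denotes the maximum of the modulus of the partial derivative of f with respect to z over all t and all z in A, and parametrize the segment C by γ(s) = P + s(Q − P) for 0 ≤ s ≤ 1 (the symbol γ is a label introduced here):

```latex
\begin{align*}
|f(t,Q) - f(t,P)|
  &= \left| \int_C \frac{\partial f}{\partial z}(t,z)\, dz \right| \\
  &= \left| \int_0^1 \frac{\partial f}{\partial z}\bigl(t,\gamma(s)\bigr)\,(Q-P)\, ds \right| \\
  &\le \int_0^1 D\,|Q-P|\, ds \;=\; D\,|Q-P| .
\end{align*}
```

Convexity of A is what guarantees that γ(s) stays in A, so that the bound D applies along the whole path.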

Lemma 7.

([4, p. 103], [12, pp. 169, 297]). For some , suppose

and

are solutions of

that are contained in A for all t with

, and that for all fixed t, D is a Lipschitz constant in z for f(t, z) in A. Then

for all t with

.
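For reference, the textbook form of this corollary of Gronwall's inequality is sketched below. The symbols z_1 and z_2 for the two solutions and T for the length of the time interval are labels chosen here for illustration.

```latex
% Both solutions satisfy z_i(t) = z_i(0) + \int_0^t f(s, z_i(s))\, ds, so
% u(t) = |z_1(t) - z_2(t)| obeys the integral inequality
\[
u(t) \;\le\; u(0) + D \int_0^t u(s)\, ds , \qquad 0 \le t \le T ,
\]
% and Gronwall's inequality then gives the exponential bound
\[
|z_1(t) - z_2(t)| \;\le\; |z_1(0) - z_2(0)|\, e^{D t} , \qquad 0 \le t \le T .
\]
```

In words, two solutions that stay in a region where f is Lipschitz in z with constant D can separate at most exponentially fast, at rate D.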

Lemma 8.

Let . Then

.

Proof.

If z is in the closed -neighborhood A of V, then

. Moreover,

because r is real-valued. So

, which implies

, because S has an (H, B) bounded derivative. Therefore, for all z in A,

. ■

Now suppose that there exists some , such that either z(t) is not defined or that z(t) is defined but it is not in A. In either case there exists

, such that

is on the boundary of A and z(t) is in A for all

. The distance from

to V is

().

Choose a point w0 in V such that and let w(t) be the solution of

with initial condition

(). As argued in the proof of part (a),

for x < 0 and

for x > 1. Therefore, for all

, w(t) is defined and in V and consequently in A.

on the boundary of the

neighborhood A of V implies

.

Since , it follows by Lemmas 6, 7, 8 that

By choice of w(0), , implying the contradiction

. Therefore, for all

, z(t) is defined and in A. In particular, for all z0 in the closure of U, the Poincaré map

is defined and in A.

It remains to show that the Poincaré map P and the displacement function F are complex analytic on the closure of U. The assumption implies that

and thus also S are complex analytic on an open neighborhood W of A. Therefore, for all fixed t, the function

is complex analytic on W. We have just shown that solutions with initial conditions in the closure of U remain in A and thus in W for all

. It follows from classical results on the analyticity of solutions with respect to initial conditions that the Poincaré function P and thus also the displacement function

are complex analytic on the closure of U [22, p. 44].

(c) By part (b), z in

implies P(z) in A. The real line is invariant for P, so either z and P(z) are both in the closed upper half of A or they are both in the closed lower half of A. Therefore, M is at most equal to the diameter of the closed upper half of A. This region is formed by a rectangle of length 2 and height

together with quarter circles of radius

at each end (). The region has diameter

.
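The diameter can be checked directly. Write h for the radius of the neighborhood A and R for the closed upper half of A (both labels are introduced here for illustration). Any two points p and q of A have nearest points p' and q' in V, and V has length 2, so the triangle inequality bounds their distance. The bound is attained by the two points on the real axis at distance h beyond the ends of V, and both of these lie in R.

```latex
\[
|p - q| \;\le\; |p - p'| + |p' - q'| + |q' - q| \;\le\; h + 2 + h ,
\]
\[
\operatorname{diam}(R) \;=\; 2 + 2h ,
\qquad\text{so}\qquad M \;\le\; 2 + 2h .
\]
```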

(d) To find a lower bound for m we estimate

from below, where we choose x(t) to be the solution of the differential equation

with initial condition

. For comparison, consider the exponential differential equation

, with initial condition

and solution

. Since for all x < 0,

, it follows that for all

. Therefore

. ■

We now apply Ilyashenko’s Theorem 2 using the estimates in Proposition 5.

Theorem 9.

Let S be a complex sigmoid response function with (H, B) bounded derivative. Let N be the number of real periodic solutions for the differential equation , where k > 0 and r(t) is real valued and continuous with period 1. Then:

(a) .

(b) If

, then

, where

.

Proof.

(a) Suppose . By Proposition 5a, N equals the number of zeros of the displacement function F(z) on the interval

. Under the assumptions of Theorem 2,

, where L = 2 is the length of V. By Proposition 5b, these assumptions are satisfied by

. By parts (c) and (d) of Proposition 5, we estimate

and

. Substituting in

, we obtain the result.

If , then for real x,

for all t, which yields the bound

for the derivative of the Poincaré map P [3, pp. 109–110], [11, p. 130]. Therefore the displacement map

is monotone decreasing and has at most one zero, implying that there is at most one periodic solution.

(b) Suppose ; then we have

6 Explicit Upper Bound on the Number of Periodic Solutions

In this section, we consider the complex sigmoid response function from Section 4, and letting B = 2 we determine an explicit value of H such that the derivative

is (H, B) bounded. We then use Theorem 9 to put an upper bound on the number N of real periodic solutions for the differential equation

, where

and r is real-valued with period 1. The value of H is determined by the magnitude of

near the real axis and, therefore, influenced by the location of the poles of S near the real axis.

Proposition 10.

has (H, B) bounded derivative

where

and B = 2.

Proof.

The derivative . The following statements are equivalent:

,

,

, and

, where

.

This last inequality is satisfied for all x and y with . ■
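How the size of the derivative near the real axis depends on the nearest poles can be explored numerically. The sketch below uses the logistic function S(z) = 1/(1 + e^{-z}) as an assumed stand-in, which need not be the function treated in Proposition 10, and the helper names `dS` and `sup_abs_dS` are hypothetical. For this stand-in, the poles are at the odd multiples of iπ, and |S'(x + iy)| = 1/(4(sinh²(x/2) + cos²(y/2))), so the supremum over the strip |y| ≤ H is attained at x = 0, y = ±H.

```python
import cmath
import math

def dS(z):
    """Derivative of the logistic function S(z) = 1/(1 + exp(-z))."""
    e = cmath.exp(-z)
    return e / (1.0 + e) ** 2

def sup_abs_dS(H, x_max=10.0, nx=401, ny=41):
    """Largest sampled |S'(x + iy)| on the grid |x| <= x_max, |y| <= H."""
    best = 0.0
    for i in range(nx):
        x = -x_max + 2.0 * x_max * i / (nx - 1)   # grid contains x = 0
        for j in range(ny):
            y = -H + 2.0 * H * j / (ny - 1)       # grid contains y = -H and y = H
            best = max(best, abs(dS(complex(x, y))))
    return best

if __name__ == "__main__":
    for H in (0.0, math.pi / 4, math.pi / 2, 3.0):
        print(H, sup_abs_dS(H))
```

For this stand-in, the sampled supremum is 0.25 on the real axis and 0.5 on the strip of half-width π/2, and it grows without bound as H approaches π, the distance from the real axis to the nearest pole.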

As an exercise, using properties of elementary complex analytic functions, similar estimates can be obtained for the other examples of sigmoid response functions that are listed in Section 4.

Exercise. is (H, B) bounded with:

B = 2 and , for

,

B = 2 and , for

, and

B = 2 and , for

.

Theorem 11.

Let N be the number of real periodic solutions to the differential equation , where k > 0, r is real-valued and continuous with period 1, and

. Then

where

, approximating to the least integer larger than C.

Proof.

If , Theorem 11 follows by applying Theorem 9b, using B = 2 and the value

determined in Proposition 10. If

, then for real x,

for all t, which yields the bound

for the derivative of the Poincaré map P [3, pp. 109–110], [11, p. 130]. Therefore the displacement map

is monotone decreasing and has at most one zero, implying that there is at most one periodic solution. ■
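The role of the displacement function can be illustrated numerically. Solutions of period 1 correspond to zeros of F(x0) = P(x0) − x0, so counting sign changes of F on a grid gives a lower estimate for N that can be set against a theoretical upper bound. The sketch below assumes a model of the form x' = −x + S(kx + r(t)) with the logistic function as S. This form, the forcing terms, and the helper names `make_rhs`, `time_one_map`, and `displacement_sign_changes` are hypothetical choices for illustration, not the article's exact setup.

```python
import math

def S(u):
    """Logistic sigmoid, used here only as a stand-in response function."""
    return 1.0 / (1.0 + math.exp(-u))

def make_rhs(k, r):
    """Assumed model x' = -x + S(k*x + r(t)), where r has period 1."""
    return lambda t, x: -x + S(k * x + r(t))

def time_one_map(f, x0, steps=400):
    """Approximate the Poincare map P(x0) = x(1) with classical RK4."""
    h = 1.0 / steps
    t, x = 0.0, x0
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h * k1 / 2)
        k3 = f(t + h / 2, x + h * k2 / 2)
        k4 = f(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return x

def displacement_sign_changes(f, a=-0.5, b=1.5, n=201):
    """Count sign changes of F(x0) = P(x0) - x0 on a uniform grid in [a, b]."""
    xs = [a + (b - a) * i / (n - 1) for i in range(n)]
    Fs = [time_one_map(f, x) - x for x in xs]
    return sum(1 for u, v in zip(Fs, Fs[1:]) if u * v < 0)

if __name__ == "__main__":
    # Small k: the x-derivative of the right-hand side is negative everywhere.
    weak = make_rhs(1.0, lambda t: 0.5 * math.sin(2.0 * math.pi * t))
    # Larger k with an offset in r: the unforced equation is bistable.
    strong = make_rhs(10.0, lambda t: -5.0 + 0.5 * math.sin(2.0 * math.pi * t))
    print(displacement_sign_changes(weak))
    print(displacement_sign_changes(strong))
```

With the logistic stand-in, S' is at most 1/4 on the real line, so for k = 1 the displacement function is strictly decreasing and the count is 1. For k = 10 with the offset forcing, the count is at least 3. A grid count of this kind can miss closely spaced zeros, so it only bounds N from below.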

7 Conclusions and Open Questions

After an introductory course in differential equations and an introductory course in complex analysis, the method of Ilyashenko can be used to study other accessible and illuminating problems involving upper bounds on the number of real periodic solutions of equations of the form , where f is periodic in t and complex analytic in x. Delicate work is required to understand the particular function f and its derivative

and how these determine the elements of the approach (see Section 5).

For example, undergraduates may wish to consider the autonomous population model , where r is the intrinsic growth/decay rate, L is the carrying capacity, M is the minimal sustainable population in the absence of harvesting, H is the harvesting rate, and where r, L, and M are assumed to be positive with M < L [3, p. 217]. If H = 0, there are three equilibria: x = 0, x = L, and x = M. For small continuous periodic perturbations of the constants, the three equilibria persist as periodic solutions. Furthermore, whatever their size, as long as the periodic perturbations r(t), L(t), and M(t) remain positive, the coefficient of $x^3$ remains of constant negative sign, and this implies that there can be no more than three periodic solutions. If r is allowed to vary in sign, one may search for examples with more than three periodic solutions, and then use Ilyashenko’s method to determine upper bounds on the number of such solutions.

Even for the neural models studied in this article, better estimates might be obtained by setting , for c > 0, and allowing B to approach 1 and c to approach 0.

Ilyashenko believes that the double or triple exponential estimates are likely to be far too big [Citation15, Citation17]. He quotes Smale’s conjecture for the Liénard equation, that the number of periodic solutions is likely to be polynomial in d and independent of the magnitude C of the coefficients. Ilyashenko suggests that sharper estimates might be found by complexifying time t and studying the resulting foliations that have Riemann surfaces as leaves.

The estimate from Theorem 11 that , with

, suggests a very large number of periodic solutions that could not possibly be detected in either natural or artificial neural networks. However, this upper bound is independent of the periodic stimulation r and suggests the existence of a more plausible upper bound that cannot yet be theoretically demonstrated. Furthermore, seemingly impractical mathematical idealizations often find unexpected applications.

We conclude with three questions suggested by these studies. The last question is vague and requires further precision.

Question 1. Suppose a complex sigmoid response function S is complex analytic in some neighborhood of fixed width about the real axis. Does such an S have a derivative that is (H, B) bounded for some H > 0 and B > 1?

Question 2. The piecewise linear function has derivative

. Replace S(x) by L(x) in the neural model we have studied. Using real variable methods find an upper bound N for the number of periodic solutions to

, where k > 0 and r is continuous and has period 1 in t. Using such methods can one obtain an estimate comparable to or better than the estimates in Theorem 11? To begin, consider the case

for n an integer starting with n = 1.

Question 3. For , for a fixed number k > 0 and a fixed complex sigmoid response function S, let N(k, S) be the supremum of the number of periodic solutions, where the supremum is taken over the set of continuous functions r with period 1. Note that Theorem 11 gives an estimate for N(k, S), where

. Let N(k) be the infimum of N(k, S) where the infimum is taken over the set of complex sigmoid response functions S. What is the “best” upper estimate for N(k) that can be obtained using Ilyashenko’s method and (H, B) bounded derivatives as in Section 5?

ACKNOWLEDGMENT

This article was inspired by discussions I had with my University of Hartford colleagues Robert Decker, Virginia W. Noonburg, and Ben Pollina. Valuable suggestions were offered by Linda Keen, Malgorzata Marciniak, John Mitchell, and Zbigniew Nitecki. Nine of the figures were rendered by Andrew Starnes, who used the LaTeX package PGFPlots. and were generated with Matlab. The meticulous work of the editors and reviewers was of great help and is much appreciated.

Additional information

Notes on contributors

Diego M. Benardete

Diego Mair Benardete received his doctorate in mathematics from the City University of New York in 1985 and has ever since researched pure and applied aspects of dynamical systems and differential equations. At the University of Hartford, he enjoys teaching undergraduate mathematics. He also has an amateur’s interest in history, literature, philosophy, and religion that manifests itself professionally in the study of the history and philosophy of mathematics.

References

- Ahlfors, L. (1979). Complex Analysis: An Introduction to the Theory of Analytic Functions of One Complex Variable, 3rd ed. New York, NY: McGraw-Hill.

- Ashwin, P., Coombes, S., Nicks, R. (2016). Mathematical frameworks for oscillatory network dynamics in neuroscience. J. Math. Neurosci. 6: Art. 2, 92 pp.

- Benardete, D., Noonburg, V. W., Pollina, B. (2008). Qualitative tools for studying periodic solutions and bifurcations as applied to the periodically harvested logistic equation. Amer. Math. Monthly. 115(3): 202–219.

- Birkhoff, G., Rota, G-C. (1962). Ordinary Differential Equations. Waltham, MA: Ginn and Company.

- Blanchard, P., Devaney, R. L., Hall, G. R. (1998). Differential Equations. Pacific Grove, CA: Brooks/Cole.

- Bottazzini, U., Gray, J. (2013). Hidden Harmony—Geometric Fantasies: The Rise of Complex Function Theory. New York: Springer.

- Coddington, E. A., Levinson, N. (1985). Theory of Ordinary Differential Equations. Malabar, FL: Krieger. Reprint of original edition, (1955). New York, NY: McGraw-Hill.

- Decker, R., Noonburg, V. W. (2012). A periodically forced Wilson–Cowan system with multiple attractors. SIAM J. Math. Anal. 44(2): 887–905.

- Decker, R., Noonburg, V. W. A single neuron equation with multiple periodic cycles. In preparation.

- Gray, J. (2015). The Real and the Complex: A History of Analysis in the 19th Century. New York, NY: Springer.

- Hale, J. K., Kocak, H. (1991). Dynamics and Bifurcations. New York, NY: Springer.

- Hirsch, M. W., Smale, S. (1974). Differential Equations, Dynamical Systems, and Linear Algebra. Orlando, FL: Academic Press.

- Hopfield, J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proc. Nat. Acad. Sci. 79: 2554–2558. DOI: https://doi.org/10.1073/pnas.79.8.2554.

- Hoppensteadt, F. C., Izhikevich, E. (1997). Weakly Connected Neural Networks. New York, NY: Springer.

- Ilyashenko, Yu. (2000). Hilbert-type numbers for Abel equations, growth and zeros of holomorphic functions. Nonlinearity. 13(4): 1337–1342. DOI: https://doi.org/10.1088/0951-7715/13/4/319.

- Ilyashenko, Yu. (2002). Centennial history of Hilbert’s 16th problem. Bull. Amer. Math. Soc. (N.S.). 39(3): 301–354.

- Ilyashenko, Yu., Panov, A. (2001). Some upper estimates of the number of limit cycles of planar vector fields with an application to the Liénard equations. Moscow Math. J. 1(4): 583–599. DOI: https://doi.org/10.17323/1609-4514-2001-1-4-583-599.

- Izhikevich, E. (2007). Dynamical Systems in Neuroscience: The Geometry of Excitability and Bursting. Cambridge, MA: MIT Press.

- Jensen, J. (1899). Sur un nouvel et important théorème de la théorie des fonctions. Acta Math. 22: 359–364. DOI: https://doi.org/10.1007/BF02417878.

- Jessen, B. (1973). Jensen, Johan Ludwig William Valdemar. In: Gillispie, C. C., ed. Dictionary of Scientific Biography, Vol. 7. New York: Scribner, p. 101.

- Keen, L., Lakic, N. (2007). Hyperbolic Geometry from a Local Viewpoint. New York: Cambridge Univ. Press.

- Lefschetz, S. (1963). Differential Equations: Geometric Theory, 2nd ed. New York: John Wiley.

- Lins Neto, A. (1980). On the number of solutions of the equation $dx/dt = \sum_{j=0}^{n} a_j(t)\,x^j$, $0 \le t \le 1$, for which $x(0) = x(1)$. Invent. Math. 59(1): 67–76.

- Murray, J. D. (1993). Mathematical Biology, 2nd ed. New York, NY: Springer.

- Noonburg, V. W. (2019). Differential Equations: From Calculus to Dynamical Systems, 2nd ed. Providence, RI: MAA Press.

- Noonburg, V. W., Benardete, D., Pollina, B. (2003). A periodically forced Wilson–Cowan system. SIAM J. Appl. Math. 63(5): 1585–1603.

- Rolston, J. D., Englot, D. J., Wang, D. D., Shih, T., Chang, E. F. (2012). Comparison of seizure control outcomes and the safety of vagus nerve, thalamic deep brain, and responsive neurostimulation: evidence from randomized control trials. Neurosurg. Focus. 32(3): E14.

- Saks, S., Zygmund, A. (1971). Analytic Functions. Amsterdam: Elsevier.

- Smale, S. (1998). Mathematical problems for the next century. Math. Intelligencer. 20: 7–15. DOI: https://doi.org/10.1007/BF03025291.

- Veech, W. A. (1967). A Second Course in Complex Analysis. New York: W. A. Benjamin.

- Wilson, H. R., Cowan, J. D. (1972). Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12: 1–24. DOI: https://doi.org/10.1016/S0006-3495(72)86068-5.