?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Monte Carlo integration is a commonly used technique to compute intractable integrals and is typically thought to perform poorly for very high-dimensional integrals. To show that this is not always the case, we examine Monte Carlo integration using techniques from the high-dimensional statistics literature by allowing the dimension of the integral to increase. In doing so, we derive nonasymptotic bounds for the relative and absolute error of the approximation for some general classes of functions through concentration inequalities. We provide concrete examples in which the magnitude of the number of points sampled needed to guarantee a consistent estimate varies between polynomial to exponential, and show that in theory arbitrarily fast or slow rates are possible. This demonstrates that the behavior of Monte Carlo integration in high dimensions is not uniform. Through our methods we also obtain nonasymptotic confidence intervals which are valid regardless of the number of points sampled.

1 Introduction

Monte Carlo integration is a simple and widely used numerical integration method which leverages randomness and computational power to approximate intractable integrals. Chapters 1 and 2 of Owen (Citation2013) provide some historical details as well as an introduction to the subject. Roughly speaking, we can think of this method as generating a random grid of points on which we evaluate the function being integrated, and average these values to form an estimate of the integral. As a result, Monte Carlo integration is generally thought to perform poorly for very high-dimensional functions. The intuition behind this is clear: the curse of dimensionality makes covering the region of integration exponentially more difficult as the dimension of the integral increases. However, this poor scaling of the accuracy of the method is not immediate from the usual statement about the error rates of Monte Carlo methods. While it is true that the approximation error is (Owen Citation2013, chap. 2.1) on the absolute scale regardless of the dimension of the integrand, where n is the sample size, the constant involved in this rate can depend on the dimension of the integral. Furthermore, for many statistical applications the relative accuracy is of interest rather than the absolute accuracy, and these two metrics can behave quite differently. In order to characterize the dependence of the constant on the dimension of the integral, we derive the order of n needed to guarantee a vanishing relative error as the dimension of the integral increases. Formally, we wish to integrate a sequence of functions

from

with respect to a sequence of probability densities

. The Monte Carlo procedure uses the following approximation:

(1)

(1) where Xi for

are iid samples from the probability densities

with support

. We measure the difficulty of the problem by the relationship required between p and n(p) such that when

, for every

(2)

(2)

where n(p), the sample of points being sampled, is an increasing function in p. We assume that the expectations are nonzero in order to make condition (2) well-defined. The growth rate of the function n(p) measures the complexity of the problem with respect to the dimensionality of the integral, that is, it is the sampling cost of producing an asymptotically consistent estimate in relative error. If the dimension of the integral is fixed, then consistency is guaranteed so long as

. We show in the sequel that this cost can vary depending on the function being integrated, and can range from exponential to polynomial in dependency. Therefore, the performance of Monte Carlo procedures is not uniformly affected by the dimensionality of the integral.

Although considering a changing integrand may appear unusual at first, this type of scheme has been used as a theoretical device in the proof for the accuracy of quasi-Monte Carlo methods (Lemieux Citation2009; Owen Citation2019), where a carefully chosen deterministic grid is used instead of a randomly sampled grid. In this setting, uncertainty quantification is obtained through the Koksma-Hlawka inequality (Hickernell Citation2014), which is shown by constructing a adversarial function which changes with the number of grid points, n. This regime is also common in high-dimensional statistics, in which a statistical model is allowed to increase in complexity as the sample size increases, see Vershynin (Citation2018) and Wainwright (Citation2019) for an overview of high-dimensional probability and statistics, respectively. In our case, we can imagine that the function of interest lies somewhere along the sequence and we wish to give some guidance on the required size of n to satisfy a certain accuracy requirement, depending on the placement of this function. Even though the statement in (2) is asymptotic, we use nonasymptotic concentration bounds throughout this work. Through this approach we obtain nonasymptotic

confidence intervals for the absolute error, and we derive the relationship between the sampling cost of Monte Carlo procedures in high dimensions and the structure of

and

.

We first provide some background information on Monte Carlo integration and concentration inequalities. We then examine some results for approximating volumes in high dimensions, and general integrals under structural assumptions. We then provide a comparison between asymptotic and non-asymptotic confidence intervals in Section 5. Calculations and derivations are deferred to the Appendix. We take to mean that

, and take

to mean that there exists a constant N0 such that for all

for some B > 0. We use uppercase letters such as X or Xi to denote a vector-valued random variable. When invoking the Lipschitz property we take it to be with respect to the Euclidean norm. The Lipschitz property implies the uniform continuity of a function, and therefore bound its rate of increase uniformly across its domain. This fact will prove helpful in obtaining faster rates of concentration.

2 Background

Monte Carlo integration is justified by the application of the central limit theorem (CLT) in which we write

where

is a probability measure. The addition of

makes integration on infinite domains feasible; some texts take

as the uniform probability measure when considering domains with finite Lebesgue measure. If we are able to sample independent and identically distributed points

from the density

, we can estimate the integral through:

(3)

(3)

Assuming that , we are then guaranteed a consistent estimate as

by the strong law of large numbers.

The approximation error is typically obtained by using an empirical estimate of the variance and appealing to the CLT to produce an asymptotically valid confidence interval. However, noting that the estimate introduced in (3) is an average, quantifying the approximation error is equivalent to quantifying how rapidly an average concentrates around its expectation. Seeing the problem from this perspective allows us to use powerful concentration inequalities to bound the non-asymptotic approximation error; see Boucheron, Lugosi, and Massart (Citation2013) for a textbook discussing concentration inequalities and their uses. In order to use these bounds however, we require additional assumptions on and

. We introduce some particular structures which lead to rapid concentration, including averages of independent sub-Gaussian random variables, concave/convex Lipschitz functions on the hypercube and Lipschitz functions of strongly log-concave random variables.

2.1 Averages of Sub-Gaussian Variables

Sub-Gaussian random variables are a class of random variables whose tail probabilities can be dominated by those of a Gaussian random variable with mean μ and variance . As Gaussian distributions concentrated around their mean at an exponentially fast rate (Durrett Citation2019, Theorem 1.2.6), we can consequently expect the same behavior from sub-Gaussian random variables. It can be shown that for a random variable to have lighter tail than a Gaussian distribution with mean μ and variance

is mathematically equivalent to upper bounding the moment generating function of the centered random variable by that of a Gaussian with variance

(Wainwright Citation2019, Theorem 2.6). More precisely, a random variable Z is said to be sub-Gaussian with mean μ and a proxy variance

if the moment generating function of

satisfies:

The proxy variance may not be unique in this definition, but taking

to be as small as possible while satisfying the above condition results in a sharper bound. Then

, the average of iid realizations

satisfies

(4)

(4)

(see Wainwright Citation2019, sec. 2). For the purpose of verifying condition (2), the bound in (4) can be re-expressed as

(5)

(5) through simple algebraic manipulations. This form is more useful for studying the relative accuracy of Monte Carlo integration. We assume the expectation is nonzero or the ratio in (5) is ill-defined. As we do not know the expectation, we need to bound it from below in order to use (5) in practice; for convex functions this can sometimes be accomplished by Jensen’s inequality. One important class of sub-Gaussian random variables are bounded random variables taking value in the interval

, in which case the proxy variance

is

; this is a consequence of Hoeffding’s Lemma (Boucheron, Lugosi, and Massart Citation2013, Lemma 2.2).

2.2 Convex/Concave Lipschitz Functions of Random Variables on the Unit Cube

Beyond simple averages, there are concentration results for convex/concave Lipschitz functions of random variables on the hypercube. For a Lipschitz convex/concave function g(x) with Lipchitz constant L, and for a random variable whose components are independently distributed,

(6)

(6)

which implies

(7)

(7) by Theorem 3.24 in Wainwright (Citation2019). We will apply this bound to averages of convex/concave Lipschitz functions in Section 4, by using the fact that averages of such functions remains convex/concave Lipschitz. The surprising aspect of this bound is that it is dimension free, as it holds for all convex/concave Lipschitz continuous functions of arbitrary dimension. However, as we will see in the examples in Section 4, it is possible that the Lipschitz constant L increases with the dimension of the function.

2.3 Lipschitz Functions of Strongly Log-concave Random Variables

For some , a density

is strongly γ-log-concave if it satisfies the following:

for all

and all

. Then for any L-Lipschitz function g and a strongly γ-log-concave random variable X:

and

by Theorem 3.16 in Wainwright (Citation2019). Strongly log-concave distributions can also be characterized as the product of a log-concave density with a multivariate normal distribution (Saumard and Wellner Citation2014). We will apply this bound to averages of iid realizations of g(X) by using properties of strongly γ-log-concave random variables in Section 4. This bound also does not depend on the dimension of the integral, although once again, the constants involved can potentially increase with p. Intuitively, the strongly log-concave density must be uni-modal and “hump-like” on the log scale, and as this is exponentiated the resulting density concentrates rapidly around its mean.

3 Estimation of High-Dimensional Volumes

Estimation of volumes is a classic application of Monte Carlo integration. Suppose we are interested in calculating the unknown volume (Lebesgue measure) of a set F which is contained within a set with known finite volume. Then if we can sample Xi’s from the uniform distribution supported on

, we can approximate the volume of F by:

As the random variable only takes the value of 0 or

, it is sub-Gaussian with

. Noting this and using the sub-Gaussian bound in Section 2.1 gives:

Proposition 1.

Suppose that both and

are finite. Then:

This implies the nonasymptotic confidence interval

for

and

where Xi are iid copies of a uniform random variable supported on

.

The typical example provided in textbooks such as (Ross Citation2013, chap. 3.2), is the problem of computing the value of π or the area of a circle inscribed in a square. We extend the latter to the case of a hypersphere contained in a hypercube and showcase how the curse of dimensionality manifests itself in volume estimation.

Example 1.

Let and let

, where F is a hypersphere of dimension p with radius 1, while

is the hypercube centered at 0 with sides of length 2. In this case we know that

and

, thus,

The absolute error is decaying extremely quickly, as the volume of the unit sphere decays exponentially to 0 as the dimension increases. Therefore, the chance of hitting the sphere by sampling from the unit cube is also exponentially decaying to 0. Our estimate will essentially be 0, but this is quite close to the volume of an unit sphere in high dimensions. However, in order to satisfy our relative consistency requirement, we need the number of Xi sampled to be , by Stirling’s approximation. Thus, the numerical cost of integrating this function is exponentially increasing in the dimension of the sphere.

In general this bound can be improved through a tighter control of the ratio . In fact, if this ratio is bounded below by a constant independent of dimension, then the relative error would be exponentially decreasing in n and we would obtain a dimension-free result. However, it is increasingly difficult to find a set

to cover F in high dimensions, thus, this may not be feasible in practice.

As noted by a reviewer, this example is part of the broader problem of estimating a small probability or proportion. Consider the problem of estimating the probability with the estimator

then:

by (5), where Xi are iid copies of X. The requirement for relative consistency is satisfied if

, therefore, depending on the speed at which

an arbitrarily fast or slow rate of growth of the function n(p) is possible.

4 Expectations of High-Dimensional Functions

We consider two additional examples of Monte Carlo integration for expectations of smooth functions, going beyond the estimation of probability and proportions to demonstrate the general utility of the results presented in Section 2. The indicator function used in Section 3 is, in some sense, ill behaved, as it is not a continuous function and can change very rapidly in a small neighborhood. Considering functions with restrictions on their rate of growth through the Lipschitz property, along with other structural assumptions induces much faster rates of concentration.

4.1 Lipschitz Convex or Concave Distributions on the Unit Square

Proposition 2.

For convex or concave Lipschitz function with Lipschitz constant Lp, and a random variable X whose components are independently distributed and supported on

,

This implies the nonasymptotic confidence interval

for

and

where Xi are iid copies of X.

The proof follows from the observation that a sum of Lipschitz convex/concave functions is still Lipschitz convex/concave and the previously discussed concentration inequality. The rate of convergence for the relative accuracy only depends on the dimension through the Lipschitz constant Lp and . These can increase with dimension, which places constraints on the growth rate of p relative to n, as demonstrated in Example 2.

Example 2.

Consider

for any q > 1. We apply Proposition 2 to show that n(p) has a polynomial dependence on p for our relative consistency condition to hold. We first note that

is

-Lipschitz, where

as

since

for all

, and

for

. The expectation of this function can be bounded from below by Jensen’s inequality:

This results in:

meaning that it is sufficient for

to guarantee an asymptotically consistent estimator in relative accuracy.

Remark 1.

Proposition 2 applies to any function defined on a hyper-rectangle as we may shift the domain of a function to the unit cube by centering and rescaling the function, although this will change the Lipschitz constant of the function.

4.2 Strongly Log-Concave Distributions

For Lipschitz functions of strongly log-concave distributions the concentration can also be quite rapid. This allows us to consider an example of a function defined on a infinite domain and remove the convexity or concavity assumption on the function .

Proposition 3.

For a function with Lipschitz constant Lp, and a strongly γ-log-concave random variable X,

This implies the nonasymptotic confidence interval

for

and

where Xi are iid copies of X.

This is proven by noting that an average of Lipschitz function is Lipschitz and the fact that the joint distribution of independent strongly γ-log-concave distribution is still strongly γ-log-concave, as shown in the Appendix. One particularly important member of strongly log-concave distributions is the multivariate normal distribution with nonsingular covariance matrix Σ, which is strongly γ-log-concave, with γ equal to the minimal eigenvalue of .

Example 3.

Consider the following expectation where follows a multivariate normal distribution with 0 mean and a covariance matrix Σ such that

. This condition on the minimum eigenvalue implies that the distribution of X is strongly γ-log-concave. We wish to estimate:

This function is -Lipschitz due to the fact that:

and the fact that

. Finally we may bound the expectation of this function from below by

, thus,

meaning that

is sufficient to guarantee a relative consistent estimate.

5 Nonasymptotic and Asymptotic Confidence Intervals

We briefly discuss and compare the non-asymptotic confidence intervals presented in Sections 3 and 4 to asymptotic and exact confidence intervals in a simple example to better understand their coverage properties. The nonasymptotic confidence intervals considered in the previous sections will tend to be conservative for most distributions. This is necessary as the probability bounds used are applicable to a wide class of distributions and the equality in the bound cannot hold for all values of , thus, they must in general overstate the probability of the event.

Example 4.

We observed a sample from a binomial distribution with parameters (k, p) where k is the number of coin tosses and p is the probability of heads. We treat k as known and consider the problem of providing a 90% and 95% confidence interval for p. We consider three possible confidence intervals, the Clopper-Pearson exact confidence interval, the Bayesian credible interval obtained with the prior (which is the Jeffreys’ prior) and the nonasymptotic confidence interval from Proposition 1. The Bayesian credible interval is used instead of the usual normal approximation, as for certain values of p we are likely to obtain an observation of 0 or k, which results in an invalid approximation. This credible interval also coincides with the standard normal approximation as

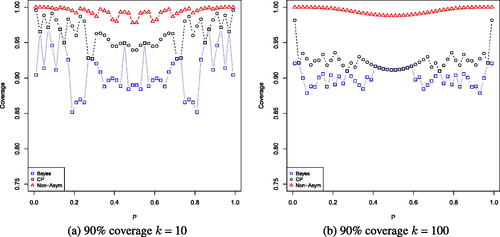

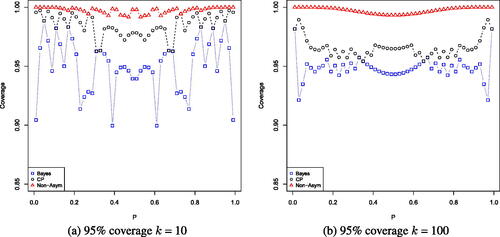

by the Bernstein von-Mises theorem (Van der Vaart Citation2000, chap. 10), and is therefore asymptotically valid. Exact coverage probabilities for 90% and 95% nominal confidence intervals are given in and . We see that the proposed nonasymptotic interval tends to be more conservative than the Clopper-Pearson interval. Although, we do note the Bayesian credible interval suffers poor coverage for certain values of the parameter p even if the sample size is relatively high.

Fig. 1 Exact coverage probabilities for a nominal 90% confidence interval, for k = 10 on the left and k = 100 on the right. Non-asymptotic coverage probabilities are given by triangles, Clopper-Pearson exact confidence interval coverage probabilities are given by circles and Bayesian credible interval coverage probabilities are given by squares.

Fig. 2 Exact coverage probabilities for a nominal 95% confidence interval, for k = 10 on the left and k = 100 on the right. Nonasymptotic coverage probabilities are given by triangles, Clopper-Pearson exact confidence interval coverage probabilities are given by circles and Bayesian credible interval coverage probabilities are given by squares.

In general, if sufficient knowledge of the distribution is available to the practitioner, it is possible to obtain better alternatives to the non-asymptotic intervals by performing a bespoke analysis. In the binomial example, exact intervals are available as we have the analytical form of the probability mass function. However, it is not always the case that such knowledge available to us. Although, we do note that if an exact interval is unavailable, the asymptotic confidence intervals may produce invalid anti-conservative statements while the nonasymptotic intervals provides valid conservative statements.

6 Discussion

We have shown that the applicability of Monte Carlo integration varies substantially depending on the specific combination of the function being integrated and the measure it is integrated against, and we relate the approximation error to the rate of concentration of a sum of iid random variables. We note that the procedure can be catastrophically bad for approximating volumes, but for integrating specific functions it may perform well. The analysis can potentially be extended to sub-exponential random variables, or random variables defined on manifolds by exploiting the numerous available concentration inequalities. Another important extension is to consider non iid random variables. This generalization could help in providing non-asymptotic bounds on the performance of Monte Carlo Maximum Likelihood Estimates for exponential graphical models as introduced in Geyer and Thompson (Citation1992) and general Monte Carlo maximum likelihood estimation approaches. This can potentially be done through the bounds of strongly log-concave densities presented in Section 4.2, however, we would need to ensure that the sampled distribution is strongly log-convex, which is a nontrivial assumption to verify.

There are also other variants of Monte Carlo which are used in high dimensions. While quasi-Monte Carlo methods has a absolute error rate of , these methods perform better than the

factor in the error might suggest. For example, it has been observed that quasi-Monte Carlo performs well in some financial applications in very high dimensions (Paskov and Traub Citation1996). This may be due to the fact that this error bound is obtained through a worst case scenario analysis and the true dependence on dimension may be much smaller. Determining the effective dimension of the problem is still an open question, see Owen and Pan (Citation2021).

Supplementary Materials

The supplementary materials contains the code used to produce and .

Supplementary_code.zip

Download Zip (2.4 KB)Acknowledgments

We would like to thank two anonymous reviewers and the associate editor for their comments which lead to improvements in the quality and exposition of the article. We also thank Michaël Lanlancette and Heather S. Battey for comments and feedback on a draft version of this article.

Disclosure Statement

The author reports there are no competing interests to declare.

References

- Boucheron, S., Lugosi, G., and Massart, P. (2013), Concentration Inequalities: A Nonasymptotic Theory of Independence, Oxford: Oxford University Press.

- Durrett, R. (2019), Probability: Theory and Examples, Cambridge: Cambridge University Press.

- Geyer, C. J., and Thompson, E. A. (1992), “Constrained Monte Carlo Maximum Likelihood for Dependent Data,” Journal of the Royal Statistical Society, Series B, 54, 657–683. DOI: 10.1111/j.2517-6161.1992.tb01443.x.

- Hickernell, F. J. (2014), “Koksma-Hlawka Inequality,” Wiley StatsRef: Statistics Reference Online.

- Lemieux, C. (2009), Monte Carlo and Quasi-Monte Carlo sampling, New York: Springer.

- Owen, A. B. (2013), “Monte Carlo Theory, Methods and Examples,” unpublished manuscript. https://artowen.su.domains/mc/

- ———(2019), “Monte Carlo Book: The Quasi-Monte Carlo Parts,” unpublished manuscript. https://artowen.su.domains/mc/practicalqmc.pdf

- Owen, A. B., and Pan, Z. (2021), “Where are the logs?” arXiv:2110.06420.

- Paskov, S., and Traub, J. F. (1996), “Faster Valuation of Financial Derivatives,” Journal of Portfolio Management, 22, 113–120. DOI: 10.3905/jpm.1995.409541.

- Ross, S. M. (2013), Simulation, New York: Academic Press.

- Saumard, A., and Wellner, J. A. (2014), “Log-concavity and Strong Log-Concavity: A Review,” Statistics Surveys, 8, 45–114. DOI: 10.1214/14-SS107.

- Van der Vaart, A. W. (2000), Asymptotic Statistics, Cambridge: Cambridge University Press.

- Vershynin, R. (2018), High-Dimensional Probability: An Introduction with Applications in Data Science, Cambridge: Cambridge University Press.

- Wainwright, M. J. (2019), High-Dimensional Statistics: A Non-Asymptotic Viewpoint, Cambridge: Cambridge University Press.

Appendix A.

Appendix

A.1 Properties of Lipschitz and Log-Concave Distributions

Lemma 1.

If

are Lp-Lipschitz functions with respect to the Euclidean norm for

, then

is a

-Lipschitz function.

If

are Lp-Lipschitz convex/concave functions with respect to the Euclidean norm for

, then

is a

-Lipschitz convex/concave function.

Proof.

Let , then

where the last inequality follows from Jensen’s inequality for the concave function

. The second statement follows from the first by noting that sums of convex/concave functions remain convex/concave. □

This basic property of strongly log-concave distribution is shown in Saumard and Wellner (Citation2014), but due to notational differences we provide a proof for the convenience of the reader.

Lemma 2.

Let Xi be independent copies of a γ strongly log-concave distribution for , then the joint distribution

is also a γ strongly log-concave distribution.

Proof.

Let Xi be distributed according to Let

, for

then by the log-concavity of each xi, for each

and all

which implies

where the final inequality follows from the triangle inequality. □

A.2 Proof of Propositions 2 and 3

A.2.1 Proof of Proposition 2

The first and third part of the proposition follow from choosing the function in bound (5), and noting by Lemma 1 that g is convex/concave with Lipschitz constant

. The confidence interval is obtained by setting:

and solving for δ.

A.2.2 Proof of Proposition 3

The proof for the first and third statements is almost the same as that of Proposition 2. We let and note that this function is

-Lipschitz and combine this with the fact that the joint distribution of

is also strongly γ-log-concave (as shown in Lemma 2) gives the desired result. The confidence interval is obtained by setting:

and solving for δ.