?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

In this paper, we establish an initial theory regarding the second-order asymptotical regularization (SOAR) method for the stable approximate solution of ill-posed linear operator equations in Hilbert spaces, which are models for linear inverse problems with applications in the natural sciences, imaging and engineering. We show the regularizing properties of the new method, as well as the corresponding convergence rates. We prove that, under the appropriate source conditions and by using Morozov's conventional discrepancy principle, SOAR exhibits the same power-type convergence rate as the classical version of asymptotical regularization (Showalter's method). Moreover, we propose a new total energy discrepancy principle for choosing the terminating time of the dynamical solution from SOAR, which corresponds to the unique root of a monotonically non-increasing function and allows us to also show an order optimal convergence rate for SOAR. A damped symplectic iterative regularizing algorithm is developed for the realization of SOAR. Several numerical examples are given to show the accuracy and the acceleration effect of the proposed method. A comparison with other state-of-the-art methods are provided as well.

COMMUNICATED BY:

1. Introduction

We are interested in solving linear operator equations,

(1)

(1) where A is an injective and compact linear operator acting between two infinite dimensional Hilbert spaces

and

. For simplicity, we denote by

and

the inner products and norms, respectively, for both Hilbert spaces. Since A is injective, the operator equation (Equation1

(1)

(1) ) has a unique solution

for every y from range

of the linear operator A. In this context,

is assumed to be an infinite dimensional subspace of

.

Suppose that, instead of the exact right-hand side , we are given noisy data

obeying the deterministic noise model

with noise level

. Since A is compact and

, we have

and the problem (Equation1

(1)

(1) ) is ill-posed. Therefore, regularization methods should be employed for obtaining stable approximate solutions.

Loosely speaking, two groups of regularization methods exist: variational regularization methods and iterative regularization methods. Tikhonov regularization is certainly the most prominent variational regularization method (cf., e.g. [Citation1]), while the Landweber iteration is the most famous iterative regularization approach (cf., e.g. [Citation2,Citation3]). In this paper, our focus is on the latter, since from a computational viewpoint the iterative approach seems more attractive, especially for large-scale problems.

For the linear problem (Equation1(1)

(1) ), the Landweber iteration is defined by

(2)

(2) where

denotes the adjoint operator of A. We refer to [Citation2, Section 6.1] for the regularization property of the Landweber iteration. The continuous analogue of (Equation2

(2)

(2) ) can be considered as a first-order evolution equation in Hilbert spaces

(3)

(3) if an artificial scalar time t is introduced, and

in (Equation2

(2)

(2) ). Here and later on, we use Newton's conventions for the time derivatives. The formulation (Equation3

(3)

(3) ) is known as Showalter's method, or asymptotic regularization [Citation4,Citation5]. The regularization property of (Equation3

(3)

(3) ) can be analysed through a proper choice of the terminating time. Moreover, it has been shown that by using Runge–Kutta integrators, all of the properties of asymptotic regularization (Equation3

(3)

(3) ) carry over to its numerical realization [Citation6].

From a computational viewpoint, the Landweber iteration, as well as the steepest descent method and the minimal error method, is quite slow. Therefore, in practice accelerating strategies are usually used; see [Citation3,Citation7] and references therein for details.

Over the last few decades, besides the first-order iterative methods, there has been increasing evidence to show that the discrete second-order iterative methods also enjoy remarkable acceleration properties for ill-posed inverse problems. The well-known methods are the Levenberg–Marquardt method [Citation8], the iteratively regularized Gauss–Newton method [Citation9], the ν-method [Citation2, Section 6.3], and the Nesterov acceleration scheme [Citation10]. Recently, a more general second-order iterative method – the two-point gradient method – has been developed in [Citation11]. In order to understand better the intrinsic properties of the discrete second-order iterative regularization, we consider in this paper the continuous version

(4)

(4) of the second-order iterative method in the form of an evolution equation, where

and

are the prescribed initial data and

is a constant damping parameter.

From a physical viewpoint, the system (Equation4(4)

(4) ) describes the motion of a heavy ball that rolls over the graph of the residual norm square functional

and that keeps rolling under its own inertia until friction stops it at a critical point of

. This nonlinear oscillator with damping, which is called the Heavy Ball with Friction (HBF) system, has been considered by several authors from an optimization viewpoint, establishing different convergence results and identifying the circumstances under which the rate of convergence of HBF is better than the one of the first-order methods; see [Citation12–14]. Numerical algorithms based on (Equation4

(4)

(4) ) for solving some special problems, e.g. inverse source problems in partial differential equations, large systems of linear equations, and the nonlinear Schrödinger problem, etc., can be found in [Citation15–18]. The main goal of this paper is the intrinsic structure analysis of the theory of the second-order iterative regularization and the development of new iterative regularization methods based on the framework (Equation4

(4)

(4) ).

The remainder of the paper is structured as follows: in Section 2, we extend the theory of general affine regularization schemes for solving linear ill-posed problems to a more general setting, adapted for the analysis of the second-order model (Equation4(4)

(4) ). Then, the existence and uniqueness of the second-order flow (Equation4

(4)

(4) ), as well as some of its properties, are discussed in Section 3. Section 4 is devoted to the study of the regularization property of the dynamical solution to (Equation4

(4)

(4) ), while Section 5 presents the results about convergence rates under the assumption of conventional source conditions. In Section 6, based on the Störmer–Verlet method, we develop a novel iterative regularization method for the numerical implementation of the second-order asymptotical regularization (SOAR). Some numerical examples, as well as a comparison with four existing iterative regularization methods, are presented in Section 7. Finally, concluding remarks are given in Section 8.

2. General affine regularization methods

In this section, we consider general affine regularization schemes based on a family of pairs of piecewise continuous functions (

) for regularization parameters

. Once a pair of generating functions

is chosen, the approximate solution to (Equation1

(1)

(1) ) can be given by the procedure

(5)

(5)

Remark 2.1

The affine regularization procedure defined by formula (Equation5(5)

(5) ) is designed in particular for the second-order evolution equation (Equation4

(4)

(4) ). If one sets

, the proposed regularization method coincides with the classical linear regularization schema for general linear ill-posed problems; see, e.g. [Citation19]. However, as the numerical experiments in Section 7 will show, the initial data influence the behaviour of the regularized solutions obtained by (Equation4

(4)

(4) ). By finding an appropriate choice of the triple

, the second-order analogue of the asymptotical regularization yields an accelerated procedure with approximate solutions of higher accuracy.

To evaluate the regularization error for the procedure (Equation5

(5)

(5) ) in combination with the noise-free intermediate quantity

(6)

(6) where evidently

, we introduce the concepts of index functions and profile functions from [Citation19,Citation20], as follows:

Definition 2.1

A real function is called an index function if it is continuous, strictly increasing and satisfies the condition

.

Definition 2.2

An index function f, for which holds, is called a profile function to

under the assumptions stated above.

Having a profile function f estimating the noise-free error and taking into account that

is an upper bound for the noise-propagation error

, which is independent of

, we can estimate the total regularization error

as

(7)

(7) for all

. If we denote by

(8)

(8) the bias function related to the major part of the regularization method

from (Equation5

(5)

(5) ), then

is evidently a profile function to

and we have

(9)

(9)

Proposition 2.1

Assume that the pairs of functions are piecewise continuous in α and satisfy the following three conditions:

For any fixed

:

and

.

Two constants

and

exist such that

and

hold for all

.

A constant

exists such that

for all

.

Then, if the regularization parameter is chosen so that

the approximate solution in (Equation5

(5)

(5) ) converges to the exact solution

as

.

Proof.

From the properties (i) and (ii) of Proposition 2.1 we deduce for point-wise convergence

and

for any

(see, e.g. [Citation2, Theorem 4.1]). Therefore, by the estimate (Equation9

(9)

(9) ) we can derive that

as

.

Proposition 2.1 motivates us to call the procedure (Equation5(5)

(5) ) a regularization method for the linear inverse problem (Equation1

(1)

(1) ) if the pair of functions

satisfies the three requirements (i), (ii) and (iii).

Example 2.1

For the Landweber iteration (Equation2(2)

(2) ) with the step size

, we have

and

. It is not difficult to show, e.g. in [Citation19,Citation21], that

is a regularization method by Proposition 2.1 with constants

,

and

.

Consider the continuous version of the Landweber iteration (Equation3(3)

(3) ), i.e. Showalter's method. It is not difficult to show that

and

, and hence

. Obviously,

is a regularization method with

,

and

by noting that

and

[Citation5].

Note that the three requirements (i)–(iii) in Proposition 2.1 are not enough to ensure rates of convergence for the regularized solutions. More precisely, for rates in the case of ill-posed problems, additional smoothness assumptions on in correspondence with the forward operator A and the regularization method under consideration have to be fulfilled. This allows us to verify the specific profile functions

in formula (Equation7

(7)

(7) ) that are specified for our second-order method in formula (Equation9

(9)

(9) ). Once a profile function f is given, together with the property (iii) in Proposition 2.1, we obtain from the estimate (Equation7

(7)

(7) ) that

(10)

(10) Moreover, if we consider the auxiliary index function

(11)

(11) and choose the regularization parameter a priori as

, then we can easily see that

(12)

(12) Hence, the convergence rate

of the total regularization error as

depends on the profile function f only, but for our suggested approach, f is a function of

and on the damping parameter η.

In order to verify the profile function f in detail, it is of interest to consider how sensitive the regularization method is with respect to a priori smoothness assumptions. In this context, the concept of qualification can be exploited for answering this question: the higher its qualification, the more the method is capable of reacting to smoothness assumptions. Expressing the qualification by means of index functions ψ, the traditional concept of qualifications with monomials for

from [Citation5] (see also [Citation2]) has been extended in [Citation19,Citation20] to general index functions ψ. We adapt this generalized concept to our class of methods (Equation5

(5)

(5) ) in the following definition.

Definition 2.3

Let ψ be an index function. A regularization method (Equation5(5)

(5) ) for the linear operator equation (Equation1

(1)

(1) ) generated by the pair

is said to have the qualification ψ with constant

if both inequalities

(13)

(13) are satisfied for all

.

Remark 2.2

Since the bias function of Showalter's method equals and

, set

in the following identity:

(14)

(14) to conclude that for all exponents p>0 the monomials

are qualifications for Showalter's method. We will show that an analogue result also holds for the SOAR method – see Proposition 4.1 – and will apply this fact to obtaining associated convergence rates.

3. Properties of the second-order flow

We first prove the existence and uniqueness of strong global solutions of the second-order equation (Equation4(4)

(4) ). Then, we study the long-term behaviour of the dynamical solution

of (Equation4

(4)

(4) ) and the residual norm functional

.

Definition 3.1

is a strong global solution of (Equation4

(4)

(4) ) with initial data

if

, and

are locally absolutely continuous [Citation22],

holds for almost every

.

Theorem 3.1

For any pair there exists a unique strong global solution of the second-order dynamical system (Equation4

(4)

(4) ).

Proof.

Denote by , and rewrite (Equation4

(4)

(4) ) as a first-order differential equation

(15)

(15) where

,

and I denotes the identity operator in

. Since A is a bounded linear operator, both

and B are also bounded linear operators. Hence, by the Cauchy–Lipschitz–Picard theorem, the first-order autonomous system (Equation15

(15)

(15) ) has a unique global solution for the given initial data

.

Now, we start to investigate the long-term behaviours of the dynamical solution and the residual norm functional. These properties will be used for the study of convergence rate in Section 5.

Lemma 3.1

Let be the solution of (Equation4

(4)

(4) ). Then,

and

as

. Moreover, we have the following two limit relations:

(16)

(16) and

(17)

(17)

The proof of the above lemma uses the idea given in [Citation14], and can be found in Appendix A.1. If does not belong to the domain

of the Moore–Penrose inverse

of A, it is not difficult to show that there is a ‘blow-up’ for the solution

of the dynamical system (Equation4

(4)

(4) ) in the sense that

as

. Contrarily, for

, i.e. if the noisy data

satisfy the Picard condition, one can show more assertions concerning the long-term behaviour of the solution to the evolution equation (Equation4

(4)

(4) ), and we refer to Lemma 3.2 for results in the case of noise-free data

. In this work, for the inverse problem with noisy data, we are first and foremost interested in the case that

may occur, since the set

is of the first category and the chance to meet such an element is negligible.

At the end of this section, we show some properties of of (Equation4

(4)

(4) ) with noise-free data.

Lemma 3.2

Let be the solution of (Equation4

(4)

(4) ) with the exact right-hand side y as data. Then, in the case

we have

.

and

as

.

and

as

.

as

.

The proof of Lemma 3.2 follows as a special case for in [Citation22], and it is given in Appendix A.2. The rate

as

given in Lemma 3.2 for the second-order evolution equation (Equation4

(4)

(4) ) should be compared with the corresponding result for the first-order method, i.e. the gradient decent methods, where one only obtains

as

. If we consider a discrete iterative method with the number k of iterations, assertion (iv) in Lemma 3.2 indicates that in comparison with gradient descent methods, the second-order methods (Equation4

(4)

(4) ) need the same computational complexity for the number k of iterations, but can achieve a higher order

of accuracy for the objective functional as

.

4. Convergence analysis for noisy data

This section is devoted to the verification of the pair of generator functions occurring in formula (Equation6

(6)

(6) ) associated with the second-order equation problem (Equation4

(4)

(4) ) with the inexact right-hand side

and the corresponding regularization properties.

Let be the well-defined singular system for the compact and injective linear operator A, i.e. we have

and

with ordered singular values

as

. Since the eigenelements

and

form complete orthonormal systems in

and

, respectively, the equation in (Equation4

(4)

(4) ) is equivalent to

(18)

(18) Using the decomposition

under the basis

in

, we obtain

(19)

(19)

In order to solve the above differential equation, we have to distinguish three different cases: (a) the overdamped case: , (b) the underdamped case: there is an index

such that

, and (c) the critical damping case: an index

exists such that

. In this section, we discuss for simplicity the overdamped case only. The other two cases are studied similarly, and the corresponding details can be found in Appendix 2. We remark that all results that concluded in the overdamped case also hold for the other two cases, but with different value of positive constants

in Proposition 2.1 and γ in Definition 2.3.

In the overdamped case, the characteristic equation of (Equation19(19)

(19) ), possessing the form

, which has two independent solutions

and

for all

, where

. Hence, it is not difficult to show that the general solution to (Equation19

(19)

(19) ) in the overdamped case is

(20)

(20)

Introducing the initial conditions in (Equation4(4)

(4) ) to obtain a system for

yields

(21)

(21) or equivalently with the decomposition

for all

(22)

(22) which gives

(23)

(23) By a combination of (Equation23

(23)

(23) ), the definition of

and the decomposition of

we obtain

where

(24)

(24) We find the form required for the generator functions in formula (Equation6

(6)

(6) ) if we set

(25)

(25) Then the corresponding bias function

is

(26)

(26)

Theorem 4.1

The functions in (Equation25

(25)

(25) ) based on (Equation4

(4)

(4) ) satisfy the conditions (i)–(iii) of Proposition 2.1, which means that we consequently have a regularization method with the procedure (Equation5

(5)

(5) ) for the linear inverse problem (Equation1

(1)

(1) ).

Proof.

We check all of the three requirements in Proposition 2.1. The first condition obviously holds for and

, defined in (Equation25

(25)

(25) ) and (Equation26

(26)

(26) ), respectively.

The second condition can be obtained by using

(27)

(27) It remains to bound

in Proposition 2.1. By the inequality

for a>0, we obtain

which implies that

Therefore, the third requirement in Proposition 2.1 holds for

with

(28)

(28) Finally, by the proof above, we see that the upper bound

for the affine regularization method with

can be selected arbitrarily.

Proposition 4.1

For all exponents p>0 the monomials are qualifications with the constants

(29)

(29) for the SOAR method in the overdamped case.

Proof.

Set in (Equation14

(14)

(14) ) and use the following inequality:

and the inequality (Equation27

(27)

(27) ), and we can derive that

Similarly, we have

which completes the proof.

The assertion of Theorem 4.1 and analogues to Proposition 4.1 can be found in Appendix 2 for the other values of the constant occurring as a parameter in the second-order differential equation of problem (Equation4

(4)

(4) ). In particular, this means the underdamped case (b), as well as the critical damping case (c).

5. Convergence rate results

Under the general assumptions of the previous sections, the rate of convergence of as

in the case of precise data, and of

as

in the case of noisy data, can be arbitrarily slow (cf. [Citation23]) for solutions

which are not smooth enough. In order to prove convergence rates, some kind of smoothness assumptions imposed on the exact solution must be employed. Such smoothness assumptions can be expressed by range-type source conditions (cf., e.g. [Citation2]), approximate source conditions (cf. [Citation24]), and variational source conditions occurring in form of variational inequalities (cf. [Citation25]). Now, range-type source conditions have the advantage that, in many cases, interpretations in the form of differentiability of the exact solution, boundary conditions, or similar properties are accessible. Hence, we focus in the following on the traditional range-type source conditions only. More precisely, we assume that an element

and numbers p>0 and

exist such that

(30)

(30) Moreover, the initial data

is supposed to satisfy such source conditions as well, i.e.

(31)

(31) For the choice

, the condition (Equation31

(31)

(31) ) is trivially satisfied. However, following the discussions in Sections 2 and 6, the regularized solutions essentially depend on the value of

. A good choice of

provides an acceleration of the regularization algorithm. In practice, one can choose a relatively small value of

to balance the source condition and the acceleration effect.

Proposition 5.1

Under the source conditions (Equation30(30)

(30) ) and (Equation31

(31)

(31) ),

is a profile function for the SOAR, where the constant γ is defined in (Equation29

(29)

(29) ).

Proof.

Combining the formulas (Equation9(9)

(9) ), (Equation30

(30)

(30) ) and (Equation31

(31)

(31) ) yields

This proves the proposition.

Theorem 5.1

A priori choice of the regularization parameter

If the terminating time of the second-order flow (Equation4

(4)

(4) ) is selected by the a priori parameter choice

(32)

(32) with the constant

then we have the error estimate for

(33)

(33) where the constant

and

.

Proof.

By the discussion in Section 2, we choose the value of such that

. By solving this equation we directly obtain

. Setting

and using the estimate (Equation12

(12)

(12) ), this gives the relations (Equation32

(32)

(32) ) and

Finally, we use the inequality

to get the bound

(the upper bound

is required for the affine regularization (Equation5

(5)

(5) ) in both the underdamped and critical cases; see the appendix for details).

In practice, the stopping rule in (Equation32(32)

(32) ) is not realistic, since a good terminating time point

requires knowledge of ρ (a characteristic of unknown exact solution). Such knowledge, however, is not necessary in the case of a posteriori parameter choices. In the following two subsections, we consider two types of discrepancy principles for choosing the terminating time point a posteriori.

5.1. Morozov's conventional discrepancy principle

In our setting, Morozov's conventional discrepancy principle means searching for values T>0 satisfying the equation

(34)

(34) where

is a constant, and the number

is defined in Proposition 2.1.

Lemma 5.1

If then the function

has at least one root.

Proof.

The continuity of is obvious according to Theorem 3.1. On the other hand, from (Equation16

(16)

(16) ) and the assumption of the lemma, we conclude that

which implies the existence of the root of

.

Theorem 5.2

A posteriori choice I of the regularization parameter

Suppose that and the source conditions (Equation30

(30)

(30) ) and (Equation31

(31)

(31) ) hold. If the terminating time

of the second-order flow (Equation4

(4)

(4) ) is chosen according to the discrepancy principle (Equation34

(34)

(34) ), we have for any

and p>0 the error estimates

(35)

(35) and

(36)

(36) where

is defined in the Theorem 5.1,

and

.

Proof.

Using the moment inequality and the source conditions (Equation30

(30)

(30) )–(Equation31

(31)

(31) ), we deduce that

(37)

(37) Since the terminating time

is chosen according to the discrepancy principle (Equation34

(34)

(34) ), we derive that

(38)

(38) Now we combine the estimates (Equation37

(37)

(37) ) and (Equation38

(38)

(38) ) to obtain, with the source conditions, that

(39)

(39) where

.

On the other hand, in a similar fashion to (Equation38(38)

(38) ), it is easy to show that

If we combine the above inequality with the source conditions (Equation30

(30)

(30) )–(Equation31

(31)

(31) ) and the qualification inequality (2.3), we obtain

which yields the estimate (Equation35

(35)

(35) ). Finally, using (Equation35

(35)

(35) ) and (Equation39

(39)

(39) ), we conclude that

This completes the proof.

Remark 5.1

If the function has more than one root, we recommend selecting

from the rule

In other words,

is the first time point for which the size of the residual

has about the order of the data error. By Lemma 5.1 such

always exists.

It is easy to show that is bounded by a decreasing function as the proof of Proposition 5.2 will show. Roughly speaking, the trend of

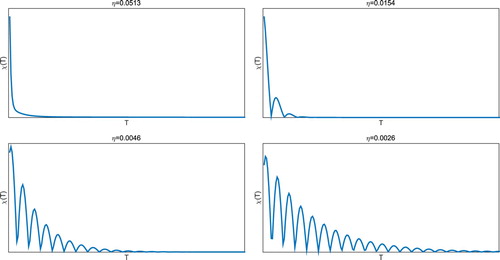

is to be a decreasing function, where oscillations may occur, and we refer to Figure . On the other hand, one can anticipate that the more oscillations of the discrepancy function

occur, the smaller the damping parameter η is. This is an expected result due to the behaviour of damped Hamiltonian systems.

Figure 1. The behaviour of from (Equation34

(34)

(34) ) with different damping parameters η.

5.2. The total energy discrepancy principle

For presenting a newly developed discrepancy principle, we introduce the total energy discrepancy function as follows:

(40)

(40) where

as before.

Proposition 5.2

The function is continuous and monotonically non-increasing. If

, then

has a unique solution.

Proof.

The continuity of is obvious according to Theorem 3.1. The non-growth of

is straight-forward according to

. Furthermore, from (Equation16

(16)

(16) ), (Equation17

(17)

(17) ) and the assumption of the proposition, we derive that

(41)

(41) and that, moreover,

. This implies the existence of roots for

.

Finally, let us show that has a unique solution. We prove this by contradiction. Since

is a non-increasing function, a number

exists so that

for

with some positive

. This means that

in

. Hence,

in

. Using equation (Equation4

(4)

(4) ) we conclude that for all

:

. Since

, we obtain that

for

, which implies that

. This contradicts the fact in (Equation41

(41)

(41) ).

Theorem 5.3

A posteriori choice II of the regularization parameter

Assume that and a positive number

exists such that for all

, the unique root

of

satisfies the inequality

, where

is a constant, independent of δ. Then, under the source conditions (Equation30

(30)

(30) ) and (Equation31

(31)

(31) ), for any

and p>0 we have the error estimates

(42)

(42) where

is defined in Theorem 5.1, and constants

and

are the same as in Theorem 5.2.

Proof.

The proof can be done along the lines and using the tools of the proof of Theorem 5.2.

In the simulation Section 7.1, we will computationally show that the assumptions occurring in the above theorem can happen in practice. Empirically, when the value of the initial velocity is not too small () or the noise is small enough (

), the additional assumption

in Theorem 5.3 always holds.

6. A novel iterative regularization method

Roughly speaking, the second-order evolution equation (Equation4(4)

(4) ) with an appropriate numerical discretization scheme for the artificial time variable yields a concrete second-order iterative method. Just as with the Runge–Kutta integrators [Citation6] or the exponential integrators [Citation26] for solving first-order equations, the damped symplectic integrators are extremely attractive for solving second-order equations, since the schemes are closely related to the canonical transformations [Citation27], and the trajectories of the discretized second flow are usually more stable.

The simplest discretization scheme should be the Euler method. Denote by , and consider the following Euler iteration:

(43)

(43) By elementary calculations, scheme (Equation43

(43)

(43) ) expresses the form of following three-term semi-iterative method:

(44)

(44) with a specially defined parameters

and

. It is well known that the semi-iterative method (Equation44

(44)

(44) ), equipped with an appropriate stopping rule, yields order optimal regularization method with (asymptotically) much fewer iterations than the classical Landweber iteration [Citation2, Section 6.2].

In this paper, we develop a new iterative regularization method based on the Störmer–Verlet method, which also belongs to the family of symplectic integrators and takes the form

(45)

(45)

Proposition 6.1

For any fixed damping parameter η, if the step size is chosen by

(46)

(46) then, the scheme (Equation45

(45)

(45) ) is convergent. Consequently, for any fixed T, there exists a pair of parameters

satisfying

and the condition (Equation46

(46)

(46) ), such that

as

. Here

and

are solutions to (Equation45

(45)

(45) ) and (Equation4

(4)

(4) ), respectively.

Proof.

Denote by and rewritten (Equation45

(45)

(45) ) as

(47)

(47) where

and

By Taylor's theorem and the finite difference formula, it is not difficult to show the consistency of the scheme (Equation45(45)

(45) ). It is well known that boundedness implies the convergence of consistent schemes for any problem [Citation28], hence, it suffices to show the boundedness of the scheme (Equation45

(45)

(45) ). The asymptotical behaviour

follows from the convergence result and the second order of the Störmer–Verlet method. Furthermore, a sufficient condition for the boundedness of the iterative algorithm (Equation45

(45)

(45) ) is that the operator B is non-expansive. Hence, it is necessary to prove that

.

Using the singular value decomposition, we have , where Φ is a unitary matrix and

, where

.

By the elementary calculations, we obtain that the eigenvalues of B equal

(48)

(48)

Denote by the index of

, corresponding to the maximal absolute value of

, i.e.

There are three possible cases here: the overdamped case (), the underdamped case (

), and the critical damping case (

).

Let us consider these cases, respectively. For the chosen time step size in (Equation46

(46)

(46) ), we have

. Therefore, for the overdamped case,

Define

with a>1 (by the condition (Equation46

(46)

(46) ), it holds that

), and we have

(49)

(49) Note that

(50)

(50) Combine (Equation49

(49)

(49) ) and (Equation50

(50)

(50) ) to obtain

Now, consider the underdamped case. In this case, the complex eigenvalue

satisfies

which implies that

Finally, consider the critical damping case. Similarly, we have

, which yields the desired result.

At the end of this second, we show the convergence rate of the scheme (Equation45(45)

(45) ).

Theorem 6.1

Under the assumptions of Theorem 5.2 or 5.3, if the time step size is chosen by then for any

and p>0 we have the convergence rate

(51)

(51) where

,

,

, and

is the root of (Equation34

(34)

(34) ) or (Equation40

(40)

(40) ). Here,

denotes the standard floor function and

is defined in Theorem 5.1.

Proof.

It follows from Proposition 6.1 that the choice yields

with some constant C for all

.

Combine the above inequality and the results in Theorems 5.2 and 5.3 to obtain

for some constant

. This gives the estimate (Equation51

(51)

(51) ).

7. Numerical simulations

In this section, we present some numerical results for the following integral equation:

(52)

(52) If we choose

, the operator A is compact, selfadjoint and injective. It is well known that the integral equation (Equation52

(52)

(52) ) has a solution

if

. Moreover, the operator A has the eigensystem

, where

and

. Furthermore, using the interpolation theory (see e.g. [Citation29]) it is not difficult to show that for

In general, a regularization procedure becomes numerically feasible only after an appropriate discretization. Here, we apply the linear finite elements to solve (Equation52

(52)

(52) ). Let

be the finite element space of piecewise linear functions on a uniform grid with step size

. Denote by

the orthogonal projection operator acting from

into

. Define

and

. Let

be a basis of the finite element space

, then, instead of the original problem (Equation52

(52)

(52) ), we solve the following system of linear equations:

(53)

(53) where

and

.

As shown in [Citation2], the finite dimensional projection error plays an important role in the convergence rates analysis. For the compact operator A,

as

. Moreover, if the noise level

slowly enough as

, the quality

has no influence and we obtain the same convergence rates as in Theorems 5.2 and 5.3.

Uniformly distributed noises with the magnitude are added to the discretized exact right-hand side:

(54)

(54) where

returns a pseudo-random value drawn from a uniform distribution on [0,1]. The noise level of measurement data is calculated by

, where

denotes the standard vector norm in

.

To assess the accuracy of the approximate solutions, we define the -norm relative error for an approximate solution

(

):

where

is the exact solution to the corresponding model problem.

7.1. Influence of parameters

The purpose of this subsection is to explore the dependence of the solution accuracy and the convergence rate on the initial data , damping parameter η and the discrepancy functions χ and

, and thus to give a guide on the choices of them in practice.

In this subsection, we solve integral equation (Equation52(52)

(52) ) with the exact right-hand side

. Then, the exact solution

, and

for all

. Denote by ‘DP’ and ‘TEDP’ the newly developed iterative scheme (Equation45

(45)

(45) ) equipped with the Morozov's conventional discrepancy function

and the total energy discrepancy functions

, respectively.

The results about the influence of the solution accuracy (L2Err) and the convergence rate (iteration numbers ) on the initial data

are given in Tables and , respectively. As we can see, both the initial data

and

influence the solution accuracy as well as the convergence rate. Moreover, when the value of the damping parameter is not too small (see Tables and ) the results (both solution accuracy and convergence rate) by the methods ‘DP’ and ‘TEDP’ almost coincide with each other. This result verifies the rationality of the assumption in Theorem 5.3.

Table 1. The dependence of the solution accuracy and the convergence rate on the initial data

.

.

.

.

Table 2. The dependence of the solution accuracy and the convergence rate on the initial data

.

.

.

.

Table 3. The dependence of the solution accuracy and the convergence rate on the damping parameter η.

.

.

In Table , we displayed the numerical results with different value of damping

parameters η. With the appropriate choice of the damping parameter, say in

our example, the SOAR not only

gives the most accurate result but exhibits an acceleration effect. The

critical value of the damping parameter, say

, also provides an accurate result.

But it requires a few more steps. The influence of the damping parameter on

the residual functional can be found in Figure . It shows that at the same

time point, the larger the damping parameter, the smaller the residual norm

functional.

7.2. Comparison with other methods

In order to demonstrate the advantages of our algorithm over the traditional approaches, we solve the same problems by four famous iterative regularization methods – the Landweber method, the Nesterov's method, the ν-method and the conjugate gradient method for the normal equation (CGNE, cf., e.g. [Citation30]). The Landweber method is given in (Equation2(2)

(2) ), while Nesterov's method is defined as [Citation31]

(55)

(55) where

(we choose

in our simulations). Moreover, we select the Chebyshev method as our special ν-method, i.e.

[Citation2, Section 6.3]. For all of four traditional iterative regularization methods, we use the Morozov's conventional discrepancy principle as the stopping rule.

We consider the following two different right-hand sides for the integral equation (Equation52(52)

(52) ).

Example 1:

. Then,

, and

for all

. This example uses the discretization size n=400. Other parameters are

.

Example 2:

. Then,

, and

for all

. This example uses the discretization size n=400. Other parameters are

.

The results of the simulation are presented in Table , where we can conclude that, in general, the SOAR need less iteration and CPU time, and offers a more accurate regularization solution. Moreover, with respect to the Morozov's conventional discrepancy principle, the newly developed total energy discrepancy principle provides more accurate results in most cases. Concerning the number of iterations, the CGNE method performed much better than all of the other methods. However, the accuracy of the CGNE method is much worse than other methods (including DP and TEDP), since the step size of CGNE is too large to capture the optimal point and the semi-convergence effect disturbs the iteration rather early. Note that we set a maximal iteration number in all of our simulations.

Table 4. Comparisons with the Landweber method, the Nesterov's method, the Chebyshev method, and the CGNE method.

8. Conclusion and outlook

In this paper, we have investigated the method of SOAR for solving the ill-posed linear inverse problem Ax=y with the compact operator A mapping between infinite dimensional Hilbert spaces. Instead of y, we are given noisy data obeying the inequality

. We have shown regularization properties for the dynamical solution of the second-order equation (Equation4

(4)

(4) ). Moreover, by using Morozov's conventional discrepancy principle on the one hand and a newly developed total energy discrepancy principle on the other hand, we have proven the order optimality of SOAR. Furthermore, based on the framework of SOAR, by using the Störmer–Verlet method, we have derived a novel iterative regularization algorithm. The convergence property of the proposed numerical algorithm is proven as well. Numerical experiments in Section 7 show that, in comparison with conventional iterative regularization methods, SOAR is a faster regularization method for solving linear inverse problems with high levels of accuracy.

Various numerical results show that the damping parameter η in the second-order equation (Equation4(4)

(4) ) plays a prominent role in regularization and acceleration. Therefore, how to choose an optimal damping parameter should be studied in the future. Moreover, using the results of the nonlinear Landweber iteration, it will be possible to develop a theory of SOAR for wide classes of nonlinear ill-posed problems. Furthermore, it could be very interesting to investigate the case with the dynamical damping parameter

. For instance, the second-order equation (Equation4

(4)

(4) ) with

(

) presents the continuous version of Nesterov's scheme [Citation32], and the discretization of (Equation4

(4)

(4) ) with

yields the well-known ν-methods [Citation2, Section 6.3]. Even in the linear case (Equation1

(1)

(1) ), to the best of our knowledge, it is not quite clear whether Nesterov's approach equipped with a posteriori choice of the regularization parameter is an accelerated regularization method for solving ill-posed inverse problems. In our opinion, however, the SOAR can be a candidate for the systematic analysis of general second-order regularization methods.

Disclosure statement

No potential conflict of interest was reported by the authors.

ORCID

Y. Zhang http://orcid.org/0000-0003-4023-6352

Additional information

Funding

References

- Tikhonov A, Leonov A, Yagola A. Nonlinear ill-posed problems. Vols. I and II. London: Chapman and Hall; 1998.

- Engl H, Hanke M, Neubauer A. Regularization of inverse problems. New York (NY): Springer; 1996.

- Kaltenbacher B, Neubauer A, Scherzer O. Iterative regularization methods for nonlinear ill-posed problems. Berlin: Walter de Gruyter GmbH & Co. KG; 2008.

- Tautenhahn U. On the asymptotical regularization of nonlinear ill-posed problems. Inverse Probl. 1994;10:1405–1418. doi: 10.1088/0266-5611/10/6/014

- Vainikko G, Veretennikov A. Iteration procedures in ill-posed problems. Moscow: Nauka; 1986. (In Russian)

- Rieder A. Runge–Kutta integrators yield optimal regularization schemes. Inverse Probl. 2005;21:453–471. doi: 10.1088/0266-5611/21/2/003

- Neubauer A. On Landweber iteration for nonlinear ill-posed problems in Hilbert scales. Numer Math. 2000;85:309–328. doi: 10.1007/s002110050487

- Jin Q. On a regularized Levenberg–Marquardt method for solving nonlinear inverse problems. Numer Math. 2010;115:229–259. doi: 10.1007/s00211-009-0275-x

- Jin Q. On the discrepancy principle for some Newton type methods for solving nonlinear inverse problems. Numer Math. 2009;111:509–558. doi: 10.1007/s00211-008-0198-y

- Neubauer A. On Nesterov acceleration for Landweber iteration of linear ill-posed problems. J Inverse Ill-Pose Probl. 2017;25:381–390.

- Hubmer S, Ramlau R. Convergence analysis of a two-point gradient method for nonlinear ill-posed problems. Inverse Probl. 2017;33:095004. doi: 10.1088/1361-6420/aa7ac7

- Alvarez F. On the minimizing property of a second-order dissipative system in Hilbert spaces. SIAM J Control Optim. 2000;38:1102–1119. doi: 10.1137/S0363012998335802

- Alvarez F, Attouch H, Bolte J, et al. A second-order gradient-like dissipative dynamical system with hessian-driven damping. Application to optimization and mechanics. J Math Pures Appl. 2002;81:747–779. doi: 10.1016/S0021-7824(01)01253-3

- Attouch H, Goudou X, Redont P. The heavy ball with friction method. I. The continuous dynamical system. Commun Contemp Math. 2000;2:1–34.

- Zhang Y, Gong R, Cheng X, et al. A dynamical regularization algorithm for solving inverse source problems of elliptic partial differential equations. Inverse Probl. 2018;34:065001.

- Edvardsson S, Neuman M, Edström P, et al. Solving equations through particle dynamics. Comput Phys Commun. 2015;197:169–181. doi: 10.1016/j.cpc.2015.08.028

- Edvardsson S, Gulliksson M, Persson J. The dynamical functional particle method: an approach for boundary value problems. J Appl Mech. 2012;79:021012. doi: 10.1115/1.4005563

- Sandin P, Gulliksson M. Numerical solution of the stationary multicomponent nonlinear schrodinger equation with a constraint on the angular momentum. Phys Rev E. 2016;93:033301. doi: 10.1103/PhysRevE.93.033301

- Hofmann B, Mathé P. Analysis of profile functions for general linear regularization methods. SIAM J Numer Anal. 2007;45:1122–1141. doi: 10.1137/060654530

- Mathé P, Pereverzev SV. Geometry of linear ill-posed problems in variable Hilbert scales. Inverse Probl. 2003;19(3):789–803. doi: 10.1088/0266-5611/19/3/319

- Mathé P. Saturation of regularization methods for linear ill-posed problems in Hilbert spaces. SIAM J Numer Anal. 2006;42:968–973. doi: 10.1137/S0036142903420947

- Boţ R, Csetnek E. Second order forward–backward dynamical systems for monotone inclusion problems. SIAM J Control Optim. 2016;54:1423–1443. doi: 10.1137/15M1012657

- Schock E. Approximate solution of ill-posed equations: arbitrarily slow convergence vs. superconvergence. In: Hämmerlin G, Hoffmann KH, editors. Constructive methods for the practical treatment of integral equations. Vol. 73. Basel: Birkhäuser; 1985 p. 234–243.

- Hein T, Hofmann B. Approximate source conditions for nonlinear ill-posed problems – chances and limitations. Inverse Probl. 2009;25:035003.

- Hofmann B, Kaltenbacher B, Poschl C, et al. A convergence rates result for Tikhonov regularization in Banach spaces with non-smooth operators. Inverse Probl. 2007;23:987–1010. doi: 10.1088/0266-5611/23/3/009

- Hochbruck M, Lubich C, Selhofer H. Exponential integrators for large systems of differential equations. SIAM J Sci Comput. 1998;19:1152–1174.

- Hairer E, Wanner G, Lubich C. Geometric numerical integration: structure-preserving algorithms for ordinary differential equations. 2nd ed. New York (NY): Springer; 2006.

- Tadmor E. A review of numerical methods for nonlinear partial differential equations. Bull Am Math Soc. 2012;49(4):507–554. doi: 10.1090/S0273-0979-2012-01379-4

- Lions J, Magenes E. Non-homogeneous boundary value problems and applications. Vol. I. Berlin: Springer; 1972.

- Hanke M. Conjugate gradient type methods for ill-posed problems. New York (NY): Wiley; 1995.

- Nesterov Y. A method of solving a convex programming problem with convergence rate. Sov Math Dokl. 1983;27:372–376.

- Su W, Boyd S, Candes E. A differential equation for modeling Nesterov's accelerated gradient method: theory and insights. J Mach Learn Res. 2016;17:1–43.

Appendix 1. Proofs in Section 3

A.1. Proof of Lemma 3.1

Define the Lyapunov function of (Equation4(4)

(4) ) by

. It is not difficult to show that

(A1)

(A1) by looking at equation (Equation4

(4)

(4) ) and the differentiation of the energy function

. Hence,

is non-increasing, and consequently,

. Therefore,

is uniform bounded. Integrating both sides in (EquationA1

(A1)

(A1) ), we obtain

which yields

.

Now, let us show that for any the following inequality holds:

(A2)

(A2)

Consider for every the function

. Since

and

for every

. Taking into account (Equation4

(4)

(4) ), we get

(A3)

(A3)

On the other hand, by the convexity inequality of the residual norm square functional , we derive

(A4)

(A4)

Combine (EquationA3(A3)

(A3) ) and (EquationA4

(A4)

(A4) ) with the definition of

to obtain

By (EquationA1

(A1)

(A1) ),

is non-increasing, hence, given t>0, for all

we have

By multiplying this inequality with

and then integrating from 0 to θ, we obtain

Integrate the above inequality once more from 0 to t together with the fact that

decreases, to obtain

(A5)

(A5) where

.

Since and

, it follows from (EquationA5

(A5)

(A5) ) that

Dividing the above inequality by

and letting

, we deduce that

Hence, for proving (EquationA2(A2)

(A2) ), it suffices to show that

. It is obviously held by noting the following inequalities:

From the inequality

, we conclude together with (EquationA2

(A2)

(A2) ) that

(A6)

(A6) Consequently, we have

Now, let us show the remaining parts of the assertion. Since is non-increasing and bounded from below by

, it converges as

. If

, then

by noting (EquationA6

(A6)

(A6) ). This contradicts the fact that

. Therefore, the limit (Equation17

(17)

(17) ) holds and

as

.

A.2. Proof of Lemma 3.2

(i) Consider for every the function

. Similarly as in (EquationA3

(A3)

(A3) ), it holds that

which implies that

(A7)

(A7) or, equivalently (by using the evolution equation (Equation4

(4)

(4) )),

(A8)

(A8)

By the assumption , we deduce that

(A9)

(A9) which means that the function

is monotonically decreasing. Hence, a real number C exists, such that

(A10)

(A10) which implies

. By multiplying this inequality with

and then integrating from 0 to T, we obtain the inequality

Hence,

is uniform bounded, and, consequently, the trajectory

is uniform bounded.

(ii) follows from Lemma 3.1.

Now, we prove assertion (iv). Define

(A11)

(A11) By elementary calculations, we derive that

which implies that (by noting

)

Integrate the above equation on to obtain together, with the nonnegativity of

,

(A12)

(A12)

On the other hand, since both and

are uniform bounded, and

as

, a constant M exists such that

. Hence, letting

in (EquationA12

(A12)

(A12) ), we obtain

(A13)

(A13)

Since is non-increasing, we deduce that

(A14)

(A14) Using (EquationA13

(A13)

(A13) ), the left side of (EquationA14

(A14)

(A14) ) tends to 0 when

, which implies that

. Hence, we conclude

, which yields the desired result in (iv).

Finally, let us show the long-term behaviour of . Integrating the inequality (EquationA8

(A8)

(A8) ) from 0 to T we obtain the fact that there exists a real number

, such that for every

(A15)

(A15) Since both

and

are global bounded (note that

), inequality (EquationA15

(A15)

(A15) ) gives

. The relations

and

as

are obvious by noting assertions (i), (ii), (iv) and the connection equation (Equation4

(4)

(4) ).

Appendix 2. Convergence analysis of the SOAR for the case when

B.1 The underdamped case:

In this case, the solution to the second-order differential equation (Equation4(4)

(4) ) reads

where

where

and

are defined in (Equation24

(24)

(24) ), and

(B1)

(B1)

As in the overdamped case, we define

(B2)

(B2) In this case, the corresponding bias function becomes

where

is given in (Equation26

(26)

(26) ).

Theorem B.1

The functions defined in (EquationB2

(B2)

(B2) ), satisfy the conditions (i)–(iii) of Proposition 2.1.

Proof.

The first requirement in Proposition 2.1 is obvious. Furthermore, using the inequalities and

we obtain

(B3)

(B3) which implies the second condition in Proposition 2.1:

with

. Similarly, we have

(B4)

(B4) which means that

with

.

Now, let us check the third condition in Proposition 2.1. Using the inequality (EquationB4(B4)

(B4) ) we obtain that for

Hence, in the case when

and

, we have

.

Finally, by defining

(B5)

(B5) we can deduce that

for

with

.

Proposition B.1

For all exponents p>0 the monomials are qualifications with the constants

for the SOAR method in the underdamped case, where γ is defined in (Equation29

(29)

(29) ) and

(B6)

(B6)

Proof.

By Proposition 4.1, we only need to show that

(B7)

(B7) for all

. Set

and

in (Equation14

(14)

(14) ) to obtain

(B8)

(B8) Then, using (EquationB4

(B4)

(B4) ) and (EquationB8

(B8)

(B8) ), we can derive that for all

which yields the first inequality in (EquationB7

(B7)

(B7) ).

Finally, from the above result, we can deduce that for all

which completes the proof.

B.2 The critical damping case:

In this case, the solution of (Equation4(4)

(4) ) is

, where

and

,

.

Define

(B9)

(B9) and obtain the corresponding bias function

Theorem B.2

The functions given in (EquationB9

(B9)

(B9) ), satisfy the conditions (i)–(iii) of Proposition 2.1.

Proof.

By Theorem B.1, we only need to check the case when . In this case, it is easy to verify that

,

and

and

for all

. Finally, by the inequality (assume that

)

, and Theorem B.1, we complete the proof with

for

,

, and

is defined in (EquationB5

(B5)

(B5) ).

Proposition B.2

For all exponents p>0 the monomials are qualifications with the constants

for the SOAR method in the critical damping case, where

(B10)

(B10) and the constants γ and

are defined in (Equation29

(29)

(29) ) and (EquationB6

(B6)

(B6) ), respectively.

Proof.

By Propositions 4.1 and B.1, we only need to show that for all

By (EquationB9

(B9)

(B9) ) and elementary calculations, we derive that

and

which yields the required result.