ABSTRACT

In this paper, a new gradient-based iterative algorithm is proposed to solve the coupled Lyapunov matrix equations associated with continuous-time Markovian jump linear systems. A necessary and sufficient condition is established for the proposed gradient-based iterative algorithm to be convergent. In addition, the optimal value of the tunable parameter achieving the fastest convergence rate of the proposed algorithm is given explicitly. Finally, some numerical simulations are given to validate the obtained theoretical results.

1. Introduction

Continuous-time Markovian jump linear systems have been widely used to describe practical plants. Stability is fundamental to the investigation of any control system. For the stability analysis of Markovian jump linear systems, the coupled Lyapunov matrix equation is an important tool, and over the past decades coupled continuous Lyapunov matrix equations have been broadly used for this purpose. In [Citation1], the existence of a unique positive definite solution of the coupled Lyapunov matrix equations was used to check the moment stability of Markovian jump linear systems. In [Citation2], the authors showed that the Markovian jump linear system is stochastically stable if and only if the associated coupled Lyapunov matrix equation has a unique positive definite solution. The solution of the coupled Lyapunov matrix equations was used in [Citation3] to check the stochastic stability of the nonhomogeneous Markovian jump system. In [Citation4], the coupled Lyapunov equations were applied to the stabilization of stochastic linear systems.

According to the above descriptions, the coupled Lyapunov matrix equation plays an important role in the stability analysis of the Markovian jump linear system. Thus, finding solutions of this kind of matrix equation has attracted much attention over the past decade. The exact solution of coupled continuous Lyapunov matrix equations was obtained in [Citation5] by using the Kronecker product and matrix inversion. However, this traditional computation method runs into difficulties because excessive computer memory is required when the matrices are high-dimensional. For this reason, iterative approaches have been widely used to solve coupled matrix equations in recent years, and some effective iterative algorithms have been developed. For example, some gradient-based iterative algorithms were presented in [Citation6,Citation7] to solve general coupled matrix equations, including the continuous coupled Lyapunov matrix equations. The reduced-rank gradient-based algorithm was developed in [Citation8] for solving coupled Sylvester matrix equations. By using the property of symmetric positive definite matrices, a gradient-based iterative algorithm was presented in [Citation9] for a type of coupled matrix equations. As a matter of fact, the above-mentioned gradient-based algorithms in [Citation8,Citation9] can also be extended to solve the coupled Lyapunov matrix equations. In addition, some explicit iterative algorithms have been proposed to solve the coupled Lyapunov matrix equation. In [Citation10], the conjugate direction method was given for solving the coupled Lyapunov matrix equations. In [Citation11], a classical Smith iterative algorithm was constructed by using the fixed point iterative approach. A simple iterative technique was introduced in [Citation12] to solve the coupled Lyapunov matrix equation.
Recently, some new gradient-based iterative algorithms were presented for solving some kinds of matrix equations [Citation13,Citation14]. These algorithms can also be extended to solve the coupled Lyapunov matrix equations.

Besides, an implicit iterative algorithm was first proposed in [Citation15] by taking the special structure of the coupled continuous-time Lyapunov matrix equations into consideration. In this algorithm, some standard continuous Lyapunov matrix equations need to be solved at each iteration step. Based on the idea in [Citation15], two modified implicit iterative algorithms were developed in [Citation16,Citation17] for solving coupled continuous Lyapunov matrix equations. Recently, a new implicit iterative algorithm was constructed in [Citation18] for solving the coupled Lyapunov matrix equation by using successive over-relaxation. More results on the solution of matrix equations can be found in [Citation19–22]. However, some standard continuous Lyapunov matrix equations need to be solved in the aforementioned implicit iterative algorithms. In addition, a main disadvantage of the existing gradient-based iterative algorithms is that their convergence rates are slow. Inspired by the above analysis, in this paper we aim to develop a new gradient-based iterative algorithm which does not need to solve standard Lyapunov equations and has a faster convergence rate than the existing iterative algorithms.

In this paper, the gradient-based iterative technique is investigated for solving the continuous coupled Lyapunov matrix equations. First, a novel and simple gradient-based iterative algorithm is constructed and analysed. It is proven that the proposed gradient-based iterative algorithm converges to the unique solution of the coupled Lyapunov matrix equations if and only if the tunable parameter satisfies a certain inequality. Moreover, an optimal value of the tunable parameter that achieves the fastest convergence rate of the algorithm is obtained. Finally, the correctness of the convergence results is verified by some simulation results.

Notation: Throughout this paper, the notation ⊗ represents the Kronecker product of two matrices. I represents an identity matrix of appropriate dimensions. For a real matrix A, we use $A^{T}$, $\|A\|_2$, $\|A\|_F$, $\lambda(A)$, $\lambda_{\max}(A)$, $\lambda_{\min}(A)$ and $\rho(A)$ to denote the transpose, the 2-norm, the F-norm, the eigenvalues, the maximal eigenvalue, the minimal eigenvalue and the spectral radius of matrix A, respectively. For two integers m and l with

denotes the set

For two square matrices E and A, let us define

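For any matrix $A = [a_1\ a_2\ \cdots\ a_n]$ with columns $a_1, \ldots, a_n$, the stretching function is defined as $\mathrm{vec}(A) = [a_1^{T}\ a_2^{T}\ \cdots\ a_n^{T}]^{T}$.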

2. Preliminaries

Consider the following continuous-time Markovian jump linear system

(1) where

is the system state, and

is a continuous-time discrete-state Markovian process taking values in a finite set

. For the Markovian jump linear system (1), the system matrices of N subsystems are

. The stationary transition probabilities of the Markovian process

are given by

(2) where

and

(

) is the transition rate from mode i at time t to mode j at time t+h. All the transition rates

,

can be collected into a transition rate matrix

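which has the following property: its off-diagonal entries are nonnegative and every row sums to zero, i.e. $\pi_{ij} \ge 0$ for $j \ne i$ and $\pi_{ii} = -\sum_{j \ne i} \pi_{ij}$, where $\pi_{ij}$ denotes the transition rate from mode $i$ to mode $j$.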
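The defining property of a transition rate matrix (nonnegative off-diagonal rates, each row summing to zero) can be checked numerically. The following is a minimal sketch, assuming NumPy; the helper names `is_transition_rate_matrix` and `short_time_transition_probabilities` and the matrix `Pi_example` are hypothetical and are not taken from the paper.

```python
import numpy as np


def is_transition_rate_matrix(Pi, tol=1e-12):
    """Check pi_ij >= 0 for j != i and that every row sums to zero."""
    Pi = np.asarray(Pi, dtype=float)
    if Pi.ndim != 2 or Pi.shape[0] != Pi.shape[1]:
        return False
    off_diagonal = Pi - np.diag(np.diag(Pi))
    nonnegative_rates = np.all(off_diagonal >= -tol)
    rows_sum_to_zero = np.allclose(Pi.sum(axis=1), 0.0, atol=tol)
    return bool(nonnegative_rates and rows_sum_to_zero)


def short_time_transition_probabilities(Pi, h):
    """First-order approximation I + h * Pi of the mode transition
    probabilities over a short interval of length h."""
    Pi = np.asarray(Pi, dtype=float)
    return np.eye(Pi.shape[0]) + h * Pi


# Illustrative three-mode rate matrix (made up for this sketch).
Pi_example = np.array([[-3.0, 1.0, 2.0],
                       [1.5, -2.0, 0.5],
                       [0.0, 4.0, -4.0]])
```

For a small h, each row of `short_time_transition_probabilities(Pi_example, h)` is a probability vector, which is the reason for the zero row-sum property.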

Let the initial condition for the system (1)–(2) be

and

the definition of asymptotically mean square stability for the system (1)–(2) can be stated as follows:

Definition 2.1

[Citation3]

The continuous-time Markovian jump linear system (1)–(2) is asymptotically mean square stable if for any

there holds

where

denotes the mathematical expectation.

It is well known that the preceding mean square stability of the continuous-time Markovian jump linear system (1)–(2) is closely related to the following continuous coupled Lyapunov matrix equations (CLMEs)

(3) where

, are arbitrarily given positive definite matrices, and

, are the unknown matrices to be determined. Regarding the mean square stability of the system (1)–(2), the following results are introduced.

Lemma 2.1

[Citation3]

The continuous-time Markovian jump linear system (1)–(2) is mean square stable if and only if the continuous CLMEs (3) have a unique solution

with

, for any given

with

.
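The stability criterion of Lemma 2.1 can be illustrated with the direct Kronecker-product solution mentioned in the Introduction. The following is a minimal sketch, assuming NumPy and assuming that the CLMEs take the commonly used form $A_i^{T} P_i + P_i A_i + \sum_{j=1}^{N} \pi_{ij} P_j + Q_i = 0$; the explicit form of (3) is not reproduced in this text, so this form is an assumption. The helper names and the two-mode example data are hypothetical.

```python
import numpy as np


def solve_clme_kron(A_list, Pi, Q_list):
    """Solve A_i^T P_i + P_i A_i + sum_j Pi[i, j] P_j + Q_i = 0 for all i
    (assumed CLME form) as one linear system in the stacked vec(P_i)."""
    N = len(A_list)
    n = A_list[0].shape[0]
    I_n = np.eye(n)
    # Coupling part: block (i, j) equals Pi[i, j] * I_{n^2}.
    M = np.kron(np.asarray(Pi, dtype=float), np.eye(n * n))
    for i, A in enumerate(A_list):
        # Column-stacking vec: vec(A^T P + P A) = (I kron A^T + A^T kron I) vec(P).
        block = slice(i * n * n, (i + 1) * n * n)
        M[block, block] += np.kron(I_n, A.T) + np.kron(A.T, I_n)
    rhs = -np.concatenate([Q.flatten(order="F") for Q in Q_list])
    p = np.linalg.solve(M, rhs)
    return [p[i * n * n:(i + 1) * n * n].reshape((n, n), order="F")
            for i in range(N)]


def is_mean_square_stable(A_list, Pi):
    """Apply the criterion of Lemma 2.1 with Q_i = I (hypothetical helper)."""
    n = A_list[0].shape[0]
    Q_list = [np.eye(n) for _ in A_list]
    try:
        P_list = solve_clme_kron(A_list, Pi, Q_list)
    except np.linalg.LinAlgError:
        return False  # singular linear system: no unique solution
    return all(np.linalg.eigvalsh((P + P.T) / 2).min() > 0 for P in P_list)


# Illustrative two-mode data (made up for this sketch).
A_list = [np.array([[-2.0, 0.5], [0.0, -3.0]]),
          np.array([[-1.5, 0.0], [0.3, -2.5]])]
Pi = np.array([[-1.0, 1.0], [2.0, -2.0]])
P_list = solve_clme_kron(A_list, Pi, [np.eye(2), np.eye(2)])
```

The linear system has dimension $Nn^2$, which is the memory burden that motivates the iterative algorithms discussed in this paper.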

Due to the significance of the continuous CLMEs in the stability analysis of the Markovian jump linear system, many researchers have paid attention to the solution of the continuous CLMEs (3). In the next section, we will investigate the iterative technique for solving the continuous CLMEs (3). Before giving the main results, we first present some classical iterative algorithms.

Algorithm 2.1

[Citation16]

(4)

Algorithm 2.2

[Citation17]

(5) where

,

, and

are some tunable parameters, which satisfy some given conditions.

In this paper, k denotes the iterative step of the iterative algorithms.

Remark 2.1

For Algorithms 2.1 and 2.2, at each iteration step, one needs to solve N standard continuous Lyapunov matrix equations in the following form,

Due to this, some additional operations are required when using Algorithms 2.1 and 2.2 for solving the continuous CLMEs (3). Thus, they are implicit iterative algorithms.
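To make the cost described in Remark 2.1 concrete, the following sketch runs a generic implicit iteration in which every step solves N standard Lyapunov equations. It assumes NumPy, the CLME form $A_i^{T} P_i + P_i A_i + \sum_{j} \pi_{ij} P_j + Q_i = 0$, and a Jacobi-type splitting that absorbs $\pi_{ii}$ into the system matrix. This splitting is an assumption made for illustration and is not claimed to coincide with Algorithm 2.1 or 2.2, whose formulas are not reproduced in this text. The small Lyapunov solver uses Kronecker products only to keep the sketch dependency-free.

```python
import numpy as np


def solve_lyapunov(A, Q):
    """Solve the standard Lyapunov equation A^T X + X A = -Q."""
    n = A.shape[0]
    I_n = np.eye(n)
    L = np.kron(I_n, A.T) + np.kron(A.T, I_n)
    x = np.linalg.solve(L, -Q.flatten(order="F"))
    return x.reshape((n, n), order="F")


def implicit_iteration(A_list, Pi, Q_list, max_iter=500, tol=1e-12):
    """Jacobi-type implicit iteration (assumed splitting):
    (A_i + pi_ii/2 I)^T P_i(k+1) + P_i(k+1) (A_i + pi_ii/2 I)
        = -(Q_i + sum_{j != i} pi_ij P_j(k))."""
    N = len(A_list)
    n = A_list[0].shape[0]
    P = [np.zeros((n, n)) for _ in range(N)]
    for k in range(1, max_iter + 1):
        P_new = []
        for i in range(N):
            A_shifted = A_list[i] + 0.5 * Pi[i, i] * np.eye(n)
            rhs = Q_list[i] + sum(Pi[i, j] * P[j] for j in range(N) if j != i)
            # One standard Lyapunov solve per mode and per iteration.
            P_new.append(solve_lyapunov(A_shifted, rhs))
        change = max(np.linalg.norm(P_new[i] - P[i]) for i in range(N))
        P = P_new
        if change < tol:
            return P, k
    return P, max_iter


# Illustrative two-mode data (made up for this sketch).
A_list = [np.array([[-2.0, 0.5], [0.0, -3.0]]),
          np.array([[-1.5, 0.0], [0.3, -2.5]])]
Pi = np.array([[-1.0, 1.0], [2.0, -2.0]])
Q_list = [np.eye(2), np.eye(2)]
P_implicit, steps = implicit_iteration(A_list, Pi, Q_list)
```

Each outer step costs N Lyapunov solves, which is the additional work that an explicit gradient-based iteration avoids.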

In addition, the gradient-based iterative algorithms proposed in [Citation7,Citation8] can also be used to solve the continuous CLMEs (3). These results are stated as follows:

Algorithm 2.3

[Citation7]

(6) with

(7)

Algorithm 2.4

[Citation8]

(8) where

Remark 2.2

It is easily noted that the gradient-based iterative Algorithms 2.3 and 2.4 involve intermediate variables and

at each iterative step. Thus, the computational cost of these two gradient-based iterative algorithms is high.

In this section, two explicit iterative algorithms and two implicit iterative algorithms for solving the continuous CLMEs (3) have been reviewed. In order to improve the convergence rate, a new gradient-based iterative algorithm to solve the continuous CLMEs (3) will be proposed in the next section.

3. A new gradient-based iterative algorithm

The basic idea of the gradient-based iterative method is to search for an optimal matrix such that a given objective function is minimized. In this case, some simplified objective functions can be given as follows:

(9) To construct the gradient-based iterative algorithm, we should first calculate the gradient of

, with respect to

. In fact, the gradient of

, with respect to

, can be derived easily as below:

(10) where

, are given by

(11) Now, based on (10) a new gradient-based iterative algorithm can be constructed to search the solution of the continuous CLMEs (3).

Algorithm 3.1

(12) where

, are defined in (7).
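The general idea of a gradient-based iteration for the CLMEs can be sketched in code. The explicit formulas of Algorithm 3.1 are not reproduced in this text, so the following is not that algorithm: it is a plain least-squares gradient descent on $J = \frac{1}{2}\sum_i \|R_i\|_F^2$, where $R_i = A_i^{T} P_i + P_i A_i + \sum_j \pi_{ij} P_j + Q_i$ is the residual of the assumed CLME form. The step size is a conservative choice made for this sketch, not the optimal value derived in the paper, and all names and data are hypothetical.

```python
import numpy as np


def residuals(A_list, Pi, Q_list, P_list):
    """R_i = A_i^T P_i + P_i A_i + sum_j pi_ij P_j + Q_i (assumed CLME form)."""
    N = len(A_list)
    return [A_list[i].T @ P_list[i] + P_list[i] @ A_list[i]
            + sum(Pi[i, j] * P_list[j] for j in range(N)) + Q_list[i]
            for i in range(N)]


def gradient_descent_clme(A_list, Pi, Q_list, max_iter=20000, tol=1e-10):
    """Gradient descent on J = 0.5 * sum_i ||R_i||_F^2 (illustrative only)."""
    N = len(A_list)
    n = A_list[0].shape[0]
    # The linear map P -> residual has 2-norm at most 2 max||A_i|| + ||Pi||,
    # so mu = 1 / bound^2 is a safe, deliberately non-optimal step size.
    bound = 2.0 * max(np.linalg.norm(A, 2) for A in A_list) + np.linalg.norm(Pi, 2)
    mu = 1.0 / bound ** 2
    P = [np.zeros((n, n)) for _ in range(N)]
    for k in range(max_iter):
        R = residuals(A_list, Pi, Q_list, P)
        if np.sqrt(sum(np.linalg.norm(Ri) ** 2 for Ri in R)) < tol:
            return P, k
        # dJ/dP_l = A_l R_l + R_l A_l^T + sum_i pi_il R_i
        grads = [A_list[l] @ R[l] + R[l] @ A_list[l].T
                 + sum(Pi[i, l] * R[i] for i in range(N))
                 for l in range(N)]
        P = [P[l] - mu * grads[l] for l in range(N)]
    return P, max_iter


# Illustrative two-mode data (made up for this sketch).
A_list = [np.array([[-2.0, 0.5], [0.0, -3.0]]),
          np.array([[-1.5, 0.0], [0.3, -2.5]])]
Pi = np.array([[-1.0, 1.0], [2.0, -2.0]])
Q_list = [np.eye(2), np.eye(2)]
P_gd, iterations = gradient_descent_clme(A_list, Pi, Q_list)
```

Every step uses only matrix multiplications and additions, so no Lyapunov solver is needed, which is the property that distinguishes explicit gradient-based schemes from the implicit ones.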

Remark 3.1

In comparison with the existing Algorithms 2.1 and 2.2, the proposed Algorithm 3.1 is explicit and does not need to solve standard Lyapunov matrix equations by applying the Matlab function 'lyap'. Thus, the computational cost of the Algorithm 3.1 should be less than that of the implicit iterative Algorithms 2.1 and 2.2.

Remark 3.2

In comparison with the existing Algorithms 2.3 and 2.4, it can be observed that the term is not included in the Algorithm 3.1 at each iterative step. In this case, the computational complexity of this gradient-based algorithm will be much lower than that of the existing iterative algorithms. Therefore, the proposed Algorithm 3.1 would have a faster convergence rate than the Algorithms 2.3 and 2.4, as evidenced in the simulations.

In the following, we will give some convergence results of the Algorithm 3.1. To this end, we first introduce the following useful lemma, which has an important role in the derivation of the main results.

Lemma 3.1

[Citation23]

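If $A \in \mathbb{R}^{m \times n}$, $X \in \mathbb{R}^{n \times p}$ and $B \in \mathbb{R}^{p \times q}$, then we have $\mathrm{vec}(AXB) = (B^{T} \otimes A)\,\mathrm{vec}(X)$.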
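The standard vectorization identity for Kronecker products from [Citation23], $\mathrm{vec}(AXB) = (B^{T} \otimes A)\,\mathrm{vec}(X)$ with column-stacking vec, can be checked numerically. The following is a minimal sketch, assuming NumPy; the helper names `vec` and `check_vec_identity` are hypothetical.

```python
import numpy as np


def vec(X):
    """Column-stacking (stretching) operator."""
    return np.asarray(X).reshape(-1, order="F")


def check_vec_identity(A, X, B):
    """Return True if vec(A X B) equals (B^T kron A) vec(X) numerically."""
    lhs = vec(A @ X @ B)
    rhs = np.kron(B.T, A) @ vec(X)
    return bool(np.allclose(lhs, rhs))


# Random test matrices with compatible (non-square) shapes.
rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4))
X = rng.standard_normal((4, 2))
B = rng.standard_normal((2, 5))
identity_holds = check_vec_identity(A, X, B)
```

Note that the identity relies on column-major stacking, hence `order="F"`; with NumPy's default row-major flattening the roles of the two Kronecker factors would be swapped.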

In addition, it is denoted that

(13) where

(14) and let

(15) where

. On the basis of the above notations (13) and (15), a necessary and sufficient condition for the convergence of the proposed Algorithm 3.1 can be given in the following theorem.

Theorem 3.1

Assume that the continuous CLMEs (3) have a unique solution

.

If

(16) then, the sequence

obtained by the Algorithm 3.1 with arbitrary initial condition converges to the unique solution of CLMEs (3) if and only if

(17) If

(18) then, the sequence

obtained by the Algorithm 3.1 with arbitrary initial condition converges to the unique solution of CLMEs (3) if and only if

(19) If neither the condition (16) nor the condition (18) is satisfied, then the Algorithm 3.1 is divergent.

Proof.

Define the iterative error matrix

(20) Substituting

(21) into the Algorithm 3.1 and adding

, on both sides of (12), yields

(22) with

where

, are defined in (20). Next, by performing the vectorization operation and using Lemma 3.1, the obtained expressions (22) can be equivalently written as

with

Further, by using the notation (14), it can be obtained from the above equations that

with

where

, are defined in (14). From the above equations, one can obtain

Then, the above relations can be compactly written as

(23) where Ω is defined in (13) and

(24) This relation implies that

, for arbitrary initial conditions if and only if

is Schur stable. Further, it is well known that

is Schur stable if and only if the eigenvalues of matrix

satisfy

From this relation, it can be obtained that

(25) Obviously, the convergence condition (17) of the Algorithm (12) can be obtained by the relation (25). The proof of this theorem is thus completed.

Remark 3.3

In Theorem 3.1, the convergence results of the Algorithm 3.1 are given under the constraint conditions (16) and (18). In future work, we will investigate how to remove these conditions by applying some other techniques.

Corollary 3.1

Assume that all the eigenvalues ,

, and

(26) If

then, the sequence

obtained by the Algorithm 3.1 with arbitrary initial condition converges to the unique solution of CLMEs (3) if and only if

If

then, the sequence

obtained by the Algorithm 3.1 with arbitrary initial condition converges to the unique solution of CLMEs (3) if and only if

Remark 3.4

In Corollary 3.1, the convergence conditions of the proposed Algorithm 3.1 are provided for two special cases: all the eigenvalues of the matrix Ω are greater than zero, or all the eigenvalues of the matrix Ω are less than zero. For the case where the matrix Ω has both positive and negative eigenvalues, it is difficult to obtain an explicit expression of the convergence conditions in the current paper. This is left for future work.

Theorem 3.2

Assume that the continuous CLMEs (3) have a unique solution

. With Ω defined in (13) and any initial condition

, the following relation holds

(27) Moreover, if all the eigenvalues

,

, then the convergence rate of the Algorithm 3.1 is maximized when

Proof.

It is known that the relation holds for any matrix A, hence it follows from (23) that

From the above relation, we conclude that the relation (27) holds.

It can be seen from the relation (27) that

can be used to measure the convergence rate of the Algorithm 3.1. Moreover, the relation (27) shows that the smaller

is, the faster the proposed Algorithm 3.1 will converge. In other words, the convergence rate of the Algorithm 3.1 is maximized if

is minimized. According to the above analysis, the iteration in (12) is convergent if and only if the eigenvalues of matrix

satisfy

In this case, we can further assume that the relation (26) holds. For a given μ, the optimal convergence factor

should satisfy the following equation

which means that

has a non-trivial solution. From the above analysis, the optimal choice of μ can be given by

Thus, the proof of this theorem is completed.
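The trade-off behind the optimal tunable parameter can be illustrated on a generic linear error recursion. The following sketch assumes that the error obeys $\eta(k+1) = (I - \mu\Omega)\,\eta(k)$ and that all eigenvalues of Ω are real and positive; both are assumptions made for illustration, since the explicit expressions of (23) and (26) are not reproduced in this text. Under these assumptions the recursion converges if and only if $0 < \mu < 2/\lambda_{\max}(\Omega)$, and the spectral radius of $I - \mu\Omega$ is smallest at $\mu = 2/(\lambda_{\min}(\Omega) + \lambda_{\max}(\Omega))$. The matrix `Omega` and the helper names are hypothetical.

```python
import numpy as np


def spectral_radius(M):
    """Largest eigenvalue modulus of a square matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(M))))


def convergence_factor(Omega, mu):
    """Spectral radius of the assumed iteration matrix I - mu * Omega."""
    return spectral_radius(np.eye(Omega.shape[0]) - mu * Omega)


def step_size_bound_and_optimum(Omega):
    """Return (2 / lambda_max, 2 / (lambda_min + lambda_max)) for a matrix
    whose eigenvalues are all real and positive."""
    lam = np.linalg.eigvals(Omega)
    if np.max(np.abs(lam.imag)) > 1e-12 or np.min(lam.real) <= 0:
        raise ValueError("this sketch assumes real, positive eigenvalues")
    lam_min, lam_max = lam.real.min(), lam.real.max()
    return 2.0 / lam_max, 2.0 / (lam_min + lam_max)


# Illustrative matrix with positive eigenvalues (made up for this sketch).
Omega = np.array([[4.0, 1.0], [1.0, 3.0]])
mu_bound, mu_opt = step_size_bound_and_optimum(Omega)
factor_opt = convergence_factor(Omega, mu_opt)
```

At the optimum the two extreme eigenvalues of $I - \mu\Omega$ have equal modulus, $(\lambda_{\max} - \lambda_{\min})/(\lambda_{\max} + \lambda_{\min})$, so moving μ in either direction enlarges one of them.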

4. Illustrative examples

In this section, we give two examples to illustrate the effectiveness of the iterative Algorithms 2.1–3.1 and validate some theoretical results on the optimal convergence. For fair comparison of different iterative algorithms, we define the iterative error versus the iteration step k as where

Example 4.1

Consider the continuous CLMEs in the form of (3) with the following system matrices

and transition rate matrix

It can be seen that the number of the subsystems is N=3, and the dimension of the above system is n=3. This example was once used in [Citation15]. Assume that the positive definite matrices

are all chosen as identity matrices. By [Citation15], the initial values of the iterative algorithms can be given by

(28) Next, several simulations are given to show different advantages of the proposed Algorithm 3.1.

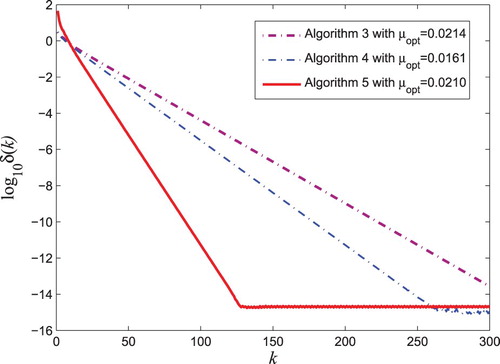

Simulation 1: In this simulation, we will verify some convergence results of the developed gradient-based iterative Algorithm 3.1 for initial conditions (28). First, we use the Algorithm 3.1 to solve the continuous CLMEs (3) for different tuning parameters μ. According to Theorem 3.1, the iterative Algorithm 3.1 converges if and only if the tunable parameter satisfies $0 < \mu < 0.0239$. When the Algorithm 3.1 is applied, by using the method in Theorem 3.2, the best tuning parameter is given by $\mu = 0.0210$. The iterative error

against k with different tuning parameters is shown in Figure 1.

Figure 1. The convergence performance of the proposed Algorithm 3.1 with different tuning parameters.

From Figure 1, it can be seen that the Algorithm 3.1 is convergent when the tunable parameter belongs to

In addition, the optimal tuning parameter leads to the fastest convergence rate.

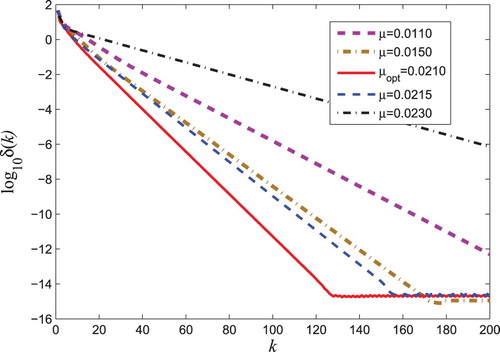

Simulation 2: In this simulation, we will compare the convergence performance of the proposed gradient-based iterative Algorithm 3.1 with the existing iterative Algorithms 2.1, 2.2, 2.3 and 2.4 in terms of computational time. By applying the method in [Citation7], we choose the step size such that the Algorithm 2.3 with initial conditions (28) has the maximal convergence rate. For the Algorithm 2.4, the fastest convergence rate can be obtained when the step size is chosen as

. By using the method in Theorem 3.2, it can be derived that the Algorithm 3.1 has the best convergence performance if

. By using different iterative algorithms to solve the continuous CLMEs (3), we obtain the computational time and the iterative errors for different algorithms, as shown in Table 1, for precision

.

From Table 1, one can see that the Algorithm 3.1 takes less computational time than the previous iterative Algorithms 2.1, 2.2, 2.3 and 2.4. In addition, the iteration errors versus k for Algorithms 2.3, 2.4 and 3.1 are shown in Figure 2.

Table 1. Comparison of the convergence performance for different iterative algorithms.

It can be seen from Figure 2 that the proposed Algorithm 3.1 is more effective and converges much faster than the previous gradient-based iterative Algorithms 2.3 and 2.4. The total numbers of iterations of Algorithms 2.3, 2.4 and 3.1 with the same cutoff error are 300, 260 and 120, respectively. Thus, the convergence rate of the proposed Algorithm 3.1 is much faster than that of the existing Algorithms 2.3 and 2.4.

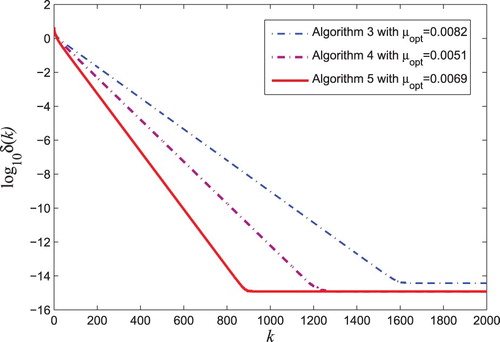

Example 4.2

In this example, we show the effectiveness of the proposed gradient-based algorithm for the continuous CLMEs (3) with high-dimensional system matrices. Consider the following continuous CLMEs in the form of (3) with N=2. The system matrices are as follows:

and transition rate matrix is

In this example, the dimension of the above system is n=9 and the positive definite matrices

are both chosen as identity matrices. Next, we compare the convergence performance of the different algorithms with zero initial conditions. For this example, by applying the method in [Citation7], we choose the step size

such that the Algorithm 2.3 with initial conditions (28) has the maximal convergence rate. For the Algorithm 2.4, the fastest convergence rate can be obtained when the step size is chosen as

. By using the method in Theorem 3.2, it can be derived that the Algorithm 3.1 has the best convergence performance if

.

First, the convergence curves for Algorithms 2.3, 2.4 and 3.1 are given in Figure 3.

Figure 3. The convergence performance of the different gradient-based iterative algorithms with high-dimensional system matrices.

For the continuous CLMEs (3) with high-dimensional system matrices, it can be seen from Figure 3 that the proposed gradient-based Algorithm 3.1 with zero initial conditions has a faster convergence rate than the previous Algorithms 2.3 and 2.4 if the tuning parameter is appropriately chosen.

For this example with high-dimensional system matrices, we next compare the proposed Algorithm 3.1 with the existing Algorithms 2.1–2.4 in terms of the computational time. When the different iterative algorithms are used to solve the coupled Lyapunov matrix equations (3) with zero initial conditions, the computational time with the cutoff iterative errors

is given in the following table.

From Table 2, it can be seen that if the parameter μ is properly chosen, then the proposed gradient-based iterative Algorithm 3.1 takes less computational time than the existing Algorithms 2.1–2.4. From these results, it can be concluded that the proposed Algorithm 3.1 has a much lower computational cost than the existing Algorithms 2.1–2.4 even if the coupled Lyapunov matrix equations (3) have high-dimensional system matrices.

Table 2. Comparison of the convergence performance of the Algorithms 2.1–3.1 with high-dimensional system matrices.

5. Conclusions

In this paper, a gradient-based iterative algorithm is given for solving the continuous CLMEs (3), which arise in continuous-time Markovian jump linear systems. The structure of this new gradient-based iterative algorithm is much simpler than that of the existing gradient-based iterative algorithms. It has been proved that the sequence generated by the proposed algorithm converges to the unique positive definite solution of the continuous CLMEs (3) with faster convergence rates in comparison with existing algorithms. Moreover, the optimal tunable parameter achieving the fastest convergence rate is explicitly provided.

In the future, it is expected that these ideas and methods can be further used to solve other matrix equations such as coupled Riccati matrix equations and discrete coupled Lyapunov equations.

Disclosure statement

No potential conflict of interest was reported by the authors.


References

- Mariton M. Almost sure and moments stability of jump linear systems. Syst Control Lett. 1988;11(5):393–397. doi: 10.1016/0167-6911(88)90098-9

- Ji Y, Chizeck HJ. Controllability, stabilizability, and continuous-time Markovian jump linear quadratic control. IEEE Trans Automat Contr. 1990;35(7):777–788. doi: 10.1109/9.57016

- Sun HJ, Wu AG, Zhang Y. Stability and stabilisation of Ito stochastic systems with piecewise homogeneous Markov jumps. Int J Syst Sci. 2018;50(2):307–319. doi: 10.1080/00207721.2018.1551977

- Luo TJ. Stability analysis of stochastic pantograph multi-group models with dispersal driven by G-Brownian motion. Appl Math Comput. 2019;354:396–410.

- Jodar L, Mariton M. Explicit solutions for a system of coupled Lyapunov differential matrix equations. Proc Edinburgh Math Soc. 1987;30(2):427–434. doi: 10.1017/S0013091500026821

- Ge ZW, Ding F, Xu L. Gradient-based iterative identification method for multivariate equation-error autoregressive moving average systems using the decomposition technique. J Franklin Inst. 2019;356(3):1658–1676. doi: 10.1016/j.jfranklin.2018.12.002

- Zhang HM. Quasi gradient-based inversion-free iterative algorithm for solving a class of the nonlinear matrix equations. Comput Math Appl. 2019;77(5):1233–1244. doi: 10.1016/j.camwa.2018.11.006

- Zhang H. Reduced-rank gradient-based algorithms for generalized coupled Sylvester matrix equations and its applications. Comput Math Appl. 2015;70(8):2049–2062. doi: 10.1016/j.camwa.2015.08.013

- Ding F, Zhang H. Gradient-based iterative algorithm for a class of the coupled matrix equations related to control systems. IET Control Theory Appl. 2014;8(15):1588–1595. doi: 10.1049/iet-cta.2013.1044

- Hajarian M. On the convergence of conjugate direction algorithm for solving coupled Sylvester matrix equations. Comput Appl Math. 2018;37(3):3077–3092. doi: 10.1007/s40314-017-0497-y

- Sun HJ, Zhang Y, Fu YM. Accelerated Smith iterative algorithms for coupled Lyapunov matrix equations. J Franklin Inst. 2017;354(15):6877–6893. doi: 10.1016/j.jfranklin.2017.07.007

- Sun HJ, Liu W, Teng Y. Explicit iterative algorithms for solving coupled discrete-time Lyapunov matrix equations. IET Control Theory Appl. 2016;10(18):2565–2573. doi: 10.1049/iet-cta.2016.0437

- Huang BH, Ma CF. Gradient-based iterative algorithms for generalized coupled Sylvester-conjugate matrix equations. Comput Math Appl. 2017;75(7):2295–2310. doi: 10.1016/j.camwa.2017.12.011

- Zhang H, Yin H. New proof of the gradient-based iterative algorithm for the Sylvester conjugate matrix equation. Comput Math Appl. 2017;74(12):3260–3270. doi: 10.1016/j.camwa.2017.08.017

- Borno I. Parallel computation of the solutions of coupled algebraic Lyapunov equations. Automatica. 1995;31(9):1345–1347. doi: 10.1016/0005-1098(95)00037-W

- Qian YY, Pang WJ. An implicit sequential algorithm for solving coupled Lyapunov equations of continuous-time Markovian jump systems. Automatica. 2015;60:245–250. doi: 10.1016/j.automatica.2015.07.011

- Wu AG, Duan GR, Liu W. Implicit iterative algorithms for continuous Markovian jump Lyapunov equations. IEEE Trans Automat Contr. 2016;61(10):3183–3189. doi: 10.1109/TAC.2015.2508884

- Wu AG, Sun HJ, Zhang Y. An SOR implicit iterative algorithm for coupled Lyapunov equations. Automatica. 2018;97:38–47. doi: 10.1016/j.automatica.2018.07.021

- Damm T, Sato K, Vierling A. Numerical solution of Lyapunov equations related to Markov jump linear systems. Numer Linear Algebra Appl. 2017;25(6):13–14.

- Hajarian M. Convergence properties of BCR method for generalized Sylvester matrix equation over generalized reflexive and anti-reflexive matrices. Linear Multilinear Algebra. 2018;66(10):1975–1990. doi: 10.1080/03081087.2017.1382441

- Zhang H. A finite iterative algorithm for solving the complex generalized coupled Sylvester matrix equations by using the linear operators. J Franklin Inst. 2017;354(4):1856–1874. doi: 10.1016/j.jfranklin.2016.12.011

- Tian ZL, Fan CM, Deng YJ, et al. New explicit iteration algorithms for solving coupled continuous Markovian jump Lyapunov matrix equations. J Franklin Inst. 2018;355(17):8346–8372. doi: 10.1016/j.jfranklin.2018.09.027

- Brewer J. Kronecker products and matrix calculus in system theory. IEEE Trans Circuits Syst. 1978;25(9):772–781. doi: 10.1109/TCS.1978.1084534