?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Many eye diseases such as Diabetic Retinopathy, Cataract, Glaucoma have their symptoms shown in the retina. To detect such diseases, the normal and abnormal retina should be differentiated. The Optic disc has been a prominent landmark for finding abnormalities in the retina. In this paper, we attempted two methods to localize the optic disc using the segmentation which is done on the interference map that is obtained from a family of generalized motion patterns of the image. In brief, we are adding motion to the image so that the bright regions can be extracted well by improving the contrast between the object and its background in the image. Then, the region of interest has been taken and binary image has been generated. In the 1st method, by thresholding, the optic disc has been segmented and its centre has been found. In the second method, the grab cut is used for segmentation. Both of our methods show better results when compared with the competing method.

1 Introduction

Diabetic Retinopathy and Glaucoma are the most common diseases related to the retina. Detecting the abnormality and symptoms in the early stage can prevent or lessen the effect of vision loss. Most common computer-aided techniques need ophthalmologist’s examination and supervision on the diagnosis results. By providing information about the abnormalities, we are reducing the burden of the ophthalmologist. Thereby also helping a non-expert understand the problem. The efficiency of diagnosis can be improved by predicting the abnormalities more accurately.

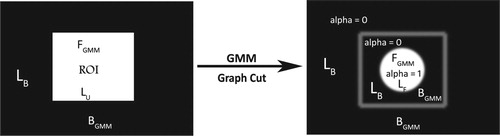

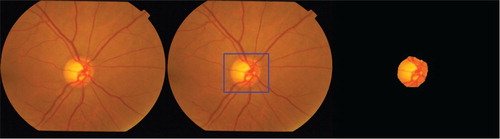

For the automated detection of the abnormality, the detection of the optic disc and macula assist us to get vital information. Many methods have been discussed in the literature for finding the optic disc by observing the intensity and geometrical features. In our work, we proposed two methods for segmenting the optic disc. The first method is an extension to [Citation1] which uses Interference map introduced in [Citation2] to segment the optic disc. We extended the concept by applying a threshold on the red channel image which proved to be more effective and efficient, based on the results we observed. The second method uses the graph cut method by using the prior information obtained from the [Citation1] method (Figure ). This graph cut based method has given even better results compared to the first method. In the following sections, we present a literature survey, give a rundown about the method we adopted and discuss the novelty we brought, followed by experimental study and conclusion.

2 Literature survey

Many optic disc segmentation methods have been proposed in the past. However, finding the exact optic disc centre and accurate segmentation is a challenging problem. A few thresholding based optic disc segmentation approaches proposed in the literature are summarized as follows.

The paper by Thomas et al. talks about using morphological techniques. The input image was filtered with a hexagonal shaped structuring element with closing and opening operations. Later thresholded to get the bright region which contains optic disc in the form of a binary image. Contours of the optic disc were obtained using gradient-based watershed transformation. However, the method was not efficient for the low contrast images and irregular illuminations [Citation3].

The paper by Ghafar et al. talks about using hough transform to get some approximate optic disc. Lee filter was applied to attenuate the distortions. The input retinal image was firstly enhanced using Sobel operator and the local thresholding was applied using mean and variance. Later, the output obtained by using Hough transform and lee filter was thresholded to obtain the optic disc by taking the maximum intensity region [Citation4].

The paper by Noor et al. talks about finding the cup to disc ratio for the diagnosis of glaucoma. The minimum, maximum and mean values from each red, red and green channels were considered for finding the multiple thresholds. Initially, the region of interest was taken. Later, post processing was applied to find the cup to disc ratio. But the performance of optic disc and optic cup segmentation were degraded [Citation5].

The paper by Tehmina et al. talks about applying Otsu’s thresholding for the image. Image pre-processing was done using bi-linear interpolation and histogram equalization to enhance resolution and contrast. Later, the noise was removed using mean and median filters. Then, Otsu’s thresholding was applied to get the initial optic disc. At last, the convex hull was applied to the obtained output to get the ultimate optic disc [Citation6].

3 GMP and interference map

3.1 Generalized motion pattern

The concept of Generalized motion pattern(GMP) was used in [Citation7]. They argue that the smear pattern holds more information about the scene and simulated it by inducing motion in a given image. The resulting sequence of images are coalesced to form a motion pattern. Such GMP is given by

(1)

(1) where

denotes the pixel location and

denotes the ith image obtained for applying ith rotation of the image forming

images totally.

is the coalescing function applied on those images for obtaining the GMP.

3.2 Interference map

The K GMPs generated with K different pivots (randomly selected points in the image) are combined together to get an Interference Map [Citation2]. Interference Map is given by

(2)

(2) The coalescing function

combines all GMPs into an Interference Map. The method [Citation1] uses this Interference Map to find the optic disc. 150 pivot points were chosen randomly and the image was rotated about the pivot point with step angle(

) 1 within range

. Median was used as coalescing function

and pointwise quartile generator as

. The interference map generated in this process gives the information about the bright regions in the image. The interference map is thresholded using (3) to give binary image which contains candidate regions.

(3)

(3)

(4)

(4) The time taken for the method [Citation1] to segment OD is high as they are taking 150 pivot points. We observed that max interference map generation works better than the median interference map generation when tested with standard dataset Drishti-GS1 [Citation8].

4 Grab cut Technique

Grab cut [Citation9] is an algorithm which is used widely for solving segmentation problems. It uses the Gaussian Mixture Model (GMM) to model the probability distribution over the pixels and trains it iteratively to segment the foreground and background regions by incorporating the graph cuts [Citation10].

A graph is constructed as follows: where

,

where is pixel location,

s and t are special vertices called source and sink respectively.

for each (u,v)

E, C(u,v) is defined using GMM as follows:

Two GMMs are created for background region and foreground region. Each one has clusters to cluster the RGB pixel values of the given image. The parameters of each gaussian cluster c are

,

and

. GMM is given by

(5)

(5) where

(6)

(6)

The labels of background and foreground are given as and

. Initially, a trimap is used which is defined as

. Where

is set of all background pixels,

is set of all unknown pixels and

is set of all foreground pixels. When the segmentation is initialized, the region around the rectangular box (ROI) is treated as a background region and stored in

. Pixel inside the rectangular box are stored in

and

will be an empty set.

The GMM for the background is created by the pixels in where

and the GMM for the foreground is created by the pixels in

where

. Iterations are performed by grab cut to assign the foreground and background labels and by clustering the pixels in

depending on the weighting function defined as [Citation11]:

(7)

(7)

(8)

(8) where

given by GMM for foreground,

given by GMM for background,

and

is RGB row vector for

for each

Let p,q be two neighbouring pixels in the image, the edge weight function for the edges between the neighbouring pixels is defined as

where where <·> denotes the expectation over the image. and

Once the graph is constructed, min cut is performed to segment foreground and background regions [Citation9]. The Figure shows the pipeline of the grabcut algorithm.

5 Proposed methods

5.1 Method 2

We adopted the method to crop the ROI from the red channel using less number of pivot points which reduces computational time by a significant margin. The ROI is of fixed size 500×500 pixels. We have experimented with different thresholds and found that below mentioned threshold was giving better results. Using the thresholding,

(9)

(9)

(10)

(10) the binary image is generated with a single candidate Optic disc region in 90% images in Drishti-GS1 dataset. Here

in (Equation (9) denotes the region of interest (ROI) and

contains our candidate Optic discs in the form of a binary image. The centre of the largest candidate regions is found and considered as Optic disc centre. Our method produced better results compared to the method discussed in [Citation1]. The proposed algorithm 1 is given in as follows.

ALGORITHM 1

Input: Fundus Image

Output: Segmented Optic disc and corresponding predicted Optic Centre.

Extract the Green channel of the input image

.

Using

as an input, max interference map

is generated as discussed in [Citation2].

Binary image is obtained from

by thresholding and centre of the candidate region in the binary image is calculated.

Consider in red channel, a square with fixed size

whose centre is the centre obtained in S3.

Binary image of the ROI, IOD is obtained through thresholding, which contains a single candidate region.

The centre of the candidate region in IOD is considered as Optic Disc Centre and the candidate region is interfered to be Optic disc.

5.2 Method 2

We used the region of interest obtained from the adopted method as input to the grab cut algorithm [Citation9]. It uses the prior information obtained from the region of interest which has foreground information as optic disc and the region outside the region of interest is treated as background region. It results the segmented optic disc region with a very good accuracy. We tested the algorithm with Drishti-GS1 dataset and got better results than our 1st method. The proposed algorithm 2 is given as follows:

ALGORITHM 2

Input: Fundus Image

Output: Segmented Optic disc.

Extract the Green channel of the input image

.

Using

as an input, max interference map

is generated as discussed in [Citation2].

Binary image is obtained from

by thresholding and centre of the candidate region in the binary image is calculated.

Consider a region of interest

in RGB colour space about the centre obtained in S3.

The ROI is given as input to the grab cut algorithm

Binary image

is obtained as output of grab cut which contains a single candidate region.

The centre of the candidate region in

is considered as Optic disc Centre and the candidate region is interfered to be Optic disc.

6. Time complexity analysis

6.1. Method 1

The time taken to construct a GMP (IGMP) is for an image of dimensions

where

. We are constructing an interference map (Iint) using K GMPs in S2. So the time taken for S2 is

. Rest all other steps take constant time. However, K and

are kept as constants in practice. Therefore, the time complexity of the first method is

.

6.2. Method 2

The time taken to construct the graphs is where

is no. of vertices and

is no. of edges. Assigning weights to the edges between pixels and terminal nodes uses GMM which runs EM algorithm to find the parameters in

[Citation12]. Here

is no. of clusters in GMM which is kept as constant. The min cut/max flow algorithm takes

[Citation13].

Hence, the time taken for the extra work done in the second method is to find region of interest which takes .

Therefore, the time complexity of the 2nd method is where V is no. of vertices i.e. mn+2 and E is no. of edges.

7 Experiments and results

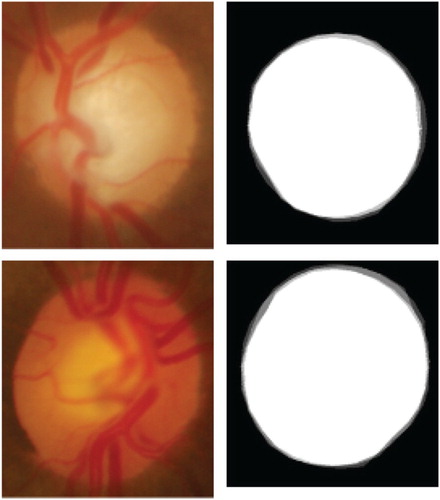

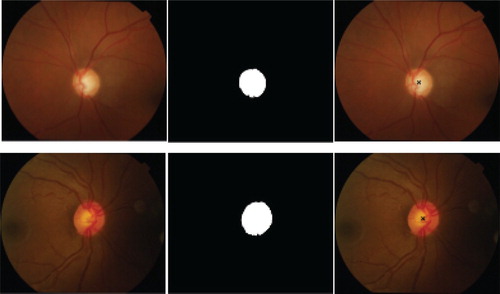

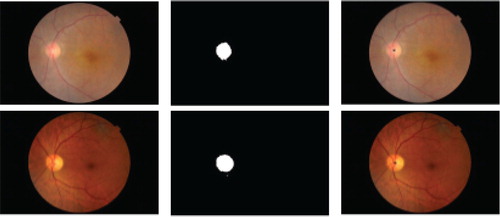

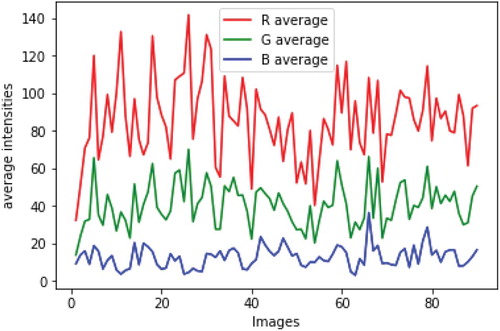

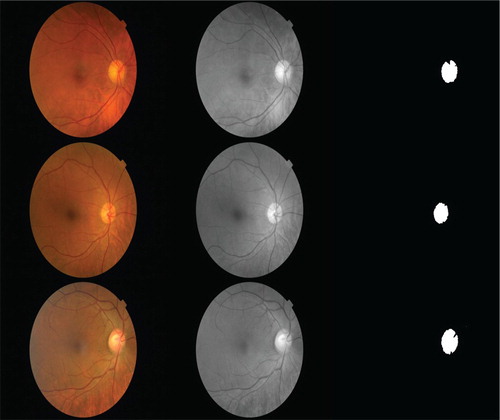

The proposed methods were evaluated on Drishti-GS1 [Citation8] dataset, which has retinal images obtained from the subjects who are Indians. Figure shows the soft map obtained on sample regions with Optic disc (OD) from the dataset. The later shows the OD centre obtained through our first approach for sample images from Drishti-GS1 dataset, which is marked with a cross in images of the last column (Figure ). Figure shows the Optic disc centre obtained from our first method for sample images from our dataset for which no Ground Truths(GT) are available. Despite intra-image variations, proposed method 1 produces very good estimates of the OD centre. The markings were uncertain for some images having irregular illuminations and noise. However, the method performs well as in most of the cases, the images are consistent in terms of illumination and colour intensities (Figure ).

It has been observed that the red component of the image has been working well for segmenting the optic disc. The red channel of the retinal image has high pixel intensities compared to green and blue channels which can be seen in Figure . Where R average , G average

and B average

are functions of number of images(image indices). Given by

(10)

(10)

(11)

(11)

(12)

(12)

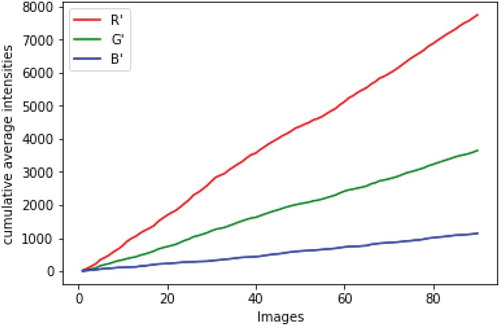

The cumulative averages have been obtained for the three channels which can be seen in Figure . The red channel grows rapidly with the increase in number of images when compared to other two channels. In Figure , ,

and

are the functions of number of images given by

(13)

(13)

(14)

(14)

(15)

(15)

Where is the red component of the image

at the location (x,y),

is the green component of the image

at the location (x,y) and

is the blue component of the image

at the location (x,y).

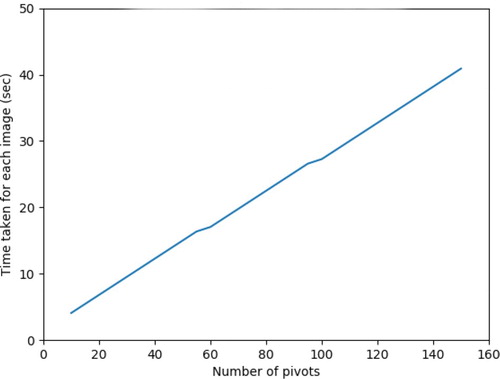

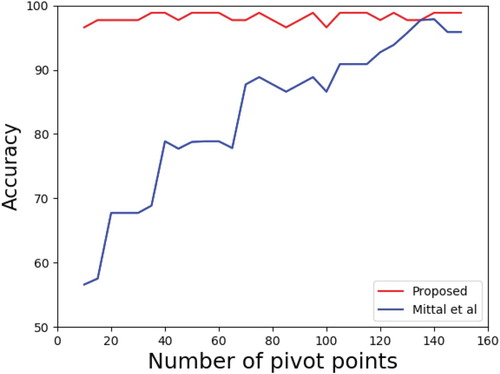

We studied the effect of the parameter setting on K, the number of pivot points. Greater the value K, more the evidence gathered in Iint at an increased computational cost (Figure ). From Figure , we can observe that the time taken to perform the proposed method for a single image increases linearly with the number of pivots. According to [Citation1], the evidence comprises of one or more bright regions, among which one serves as Optic disc. In both of our methods, we use the evidence gathered to locate the Optic disc region and use it as our ROI, so our approaches work fine even for less number of pivots. A high step angle (θ) affects localization while a low θ cannot soften out the background tissues.

R is the average of the radius of the optic discs for which GT is provided in Drishti-GS1. The accuracy is calculated based on the occurrence of predicted OD centre from the GT within a certain distance. Figure shows the effect of varying k with θ=10 and distance is R/4. The plots indicate that for , better results are obtained. If the distance between the predicted OD centre and GT OD centre is less than

, then it is marked as correct prediction and the corresponding accuracy on the dataset are given in the second column of the Table .

was assigned its optimal value (i.e,. 10). Similarly

is considered and tabulated in the third column. The third and fourth columns of the Table provide the information about Dice similarity coefficient (DSC) and Jaccard similarity coefficient (JSC) calculated using the below formula.

(16)

(16)

(17)

(17)

(18)

(18)

(19)

(19)

(20)

(20)

Table 1. Results of OD centres.

True Positive (TP) contains the number of pixels that are commonly marked as OD in GT and prediction. False Positive (FP) contains the number of pixels that are falsely predicted as OD. False Negative (FN) contains the number of pixels that are falsely predicted as non-OD.

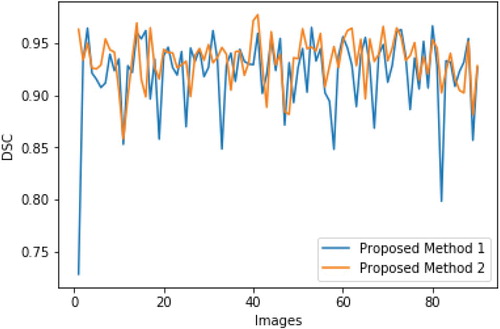

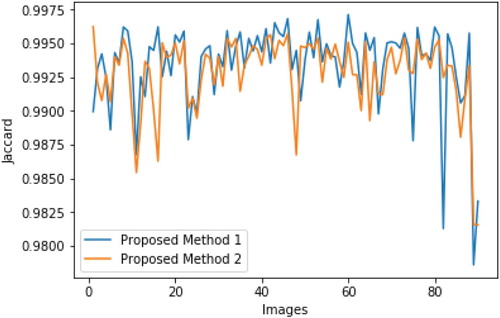

Figure shows the Optic disc centre obtained from our second method for sample images from our dataset for which no Ground Truths(GT) are available. We compared the second method with first one which gave significant results for the Drishti-GS1 dataset which can be seen in Figure . In Figure the images are in the X-axis and their respective Dice Similarity coefficients on Y-axis. In Figure the images are in the X-axis and their respective Jaccard Similarity coefficients on Y-axis. Despite the variations in contrast obtained through contrast stretching, the proposed method 2 segmented the optic disc well which can be seen in the images in the order of original, contrast stretched and optic disc segmented.

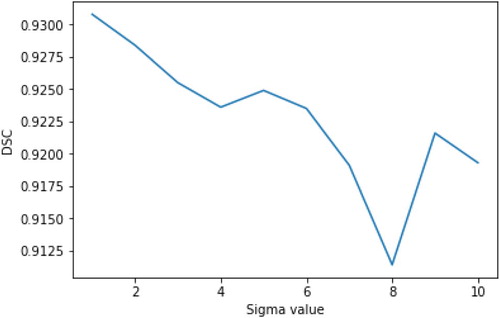

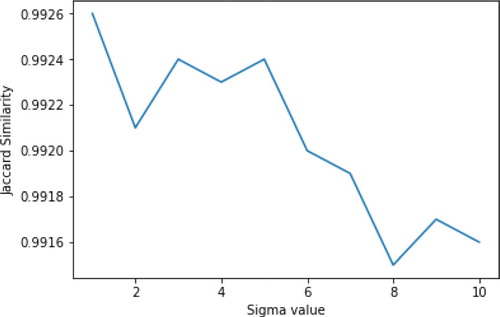

Two methods have been tested on images with different Gaussian noise levels. It has been observed that, the second method performs well despite the noise in the images whereas first method was not producing the results. In Figure , the plot shows the performance of the second method with different standard deviations of the Gaussian noise on the X-axis and the Dice Similarity Coefficient (DSC) on the Y-axis. The Dice Similarity coefficient of 0.92 was obtained for the standard deviation of 10 which is a good result despite the noise. In Figure , the plot shows the performance of the second method with different standard deviations of the gaussian noise on the X-axis and the Jaccard Coefficients (JC) on the Y-axis. The Jaccard coefficient was also not affected much with an increase in noise levels.

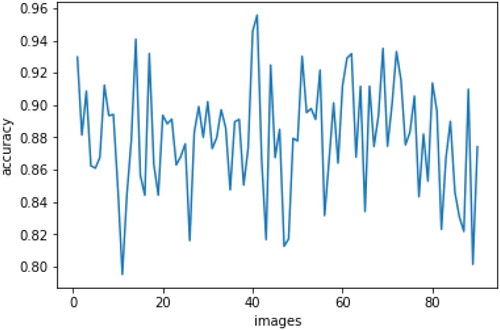

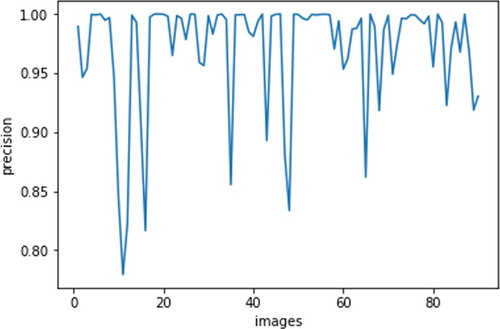

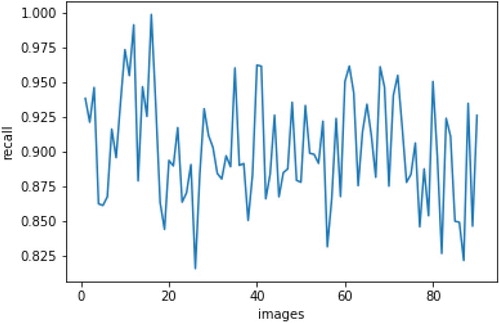

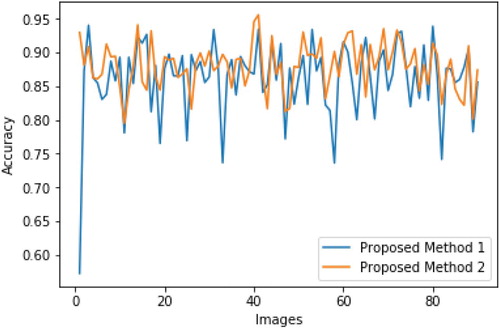

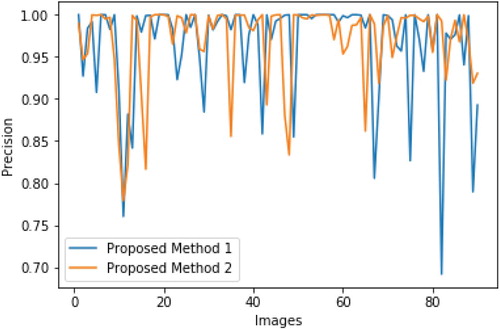

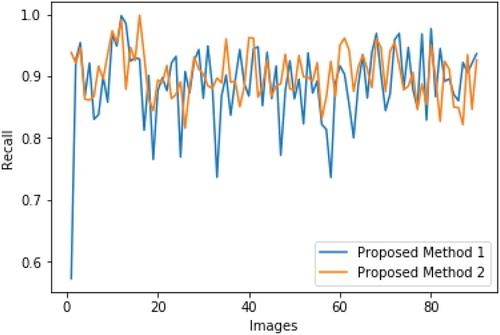

The precision, recall and accuracy for the segmentation have been obtained for both the proposed methods which can be seen in Table . The second method has outperformed the first method in terms of all metrics namely accuracy, precision and recall. The Figure gives the performance of the second method where the image numbers(indices) are in X-axis and respective accuracy on the Y-axis. The Figure gives the performance of the second method where the image numbers(indices) are in X-axis and respective precision on the Y-axis. The Figure gives the performance of the second method where the image numbers(indices) are in X-axis and respective recall on the Y-axis. The Figure gives the performance of both the methods where the image numbers(indices) are in X-axis and respective accuracy on the Y-axis. The Figure gives the performance of both the methods where the image numbers(indices) are in X-axis and respective precision on the Y-axis. The Figure gives the performance of both the methods where the image numbers(indices) are in X-axis and respective recall on the Y-axis.

Table 2. Results of Segmentation.

7 Conclusion

Two improvized methods of segmenting the optic disc have been implemented and improvement has been obtained because of localized segmentation. Applying motion to the object in the fundus image results in good contrast between optic disc and its background. Although the proposed method 1 gives good results in terms of efficiency and less time consuming compared to the competing method, its performance reduces in the case of irregularly illuminated images. The proposed method 2 uses iterative graph cuts and it is outperforming competing methods on the benchmark dataset Drishti-GS1 [Citation8] and it also found to be robust to the gaussian noise. The manual intervening for graph cut segmentation has been eliminated by using the motion models. To make it a real-time process, the number of pivot points used is minimized without affecting the efficiency. Applying motions to the image has been working well to find the bright regions and can be extended further to find the abnormalities in the retina (Figure ).

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Mittal G, Sivaswamy J. Optic disk and macula detection from retinal images using generalized motion pattern. In 2015 Fifth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), IEEE; 2015. p 1–4.

- Chakravarty A, Sivaswamy J, et al. An assistive annotation system for retinal images. In 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), IEEE; 2015. p. 1506–1509.

- Walter T, Klein J-C. Segmentation of color fundus images of the human retina: Detection of the optic disc and the vascular tree using morphological techniques. In International Symposium on Medical Data Analysis, Springer; 2001, p. 282–287.

- Abdel-Ghafar RA, Morris T. Progress towards automated detection and characterization of the optic disc in glaucoma and diabetic retinopathy. Med Inform Internet Med. 2007;32(1):19–25. doi: 10.1080/14639230601095865

- Noor NM, Abdul Khalid NE, Md Ariff N. Optic cup and disc color channel multi-thresholding segmentation. In 2013 IEEE International Conference on Control System, Computing and Engineering, IEEE; 2013, p. 530–534.

- Khalil T, Akram MU, Khalid S, et al. Improved automated detection of glaucoma from fundus image using hybrid structural and textural features. IET Image Proc. 2017;11(9):693–700. doi: 10.1049/iet-ipr.2016.0812

- Sai Deepak K, Sivaswamy J. Automatic assessment of macular edema from color retinal images. IEEE Trans Med Imaging. 2012;31(3):766–776. doi: 10.1109/TMI.2011.2178856

- Sivaswamy J, Krishnadas S, Chakravarty A, et al. A comprehensive retinal image dataset for the assessment of glaucoma from the optic nerve head analysis. JSM Biomedical Imaging Data Papers. 2015;2(1):1004.

- Rother C, Kolmogorov V, Blake A. Grabcut: Interactive foreground extraction using iterated graph cuts. In ACM transactions on graphics (TOG), ACM; 2004. Vol 23, p. 309–314.

- Boykov YY and Jolly M-P. Interactive graph cuts for optimal boundary & region segmentation of objects in nd images. In Proceedings Eighth IEEE International Conference on Computer Vision, ICCV 2001, IEEE; 2001. Vol 1, p. 105–112.

- Talbot JF, Xu X. Implementing grabcut. Brigham Young University, 3, 2006.

- Verbeek JJ, Vlassis N, Kröse B. Efficient greedy learning of Gaussian mixture models. Neural Comput. 2003;15(2):469–485. doi: 10.1162/089976603762553004

- Orlin JB. Max flows in o (nm) time, or better. In Proceedings of the Forty-Fifth Annual ACM Symposium on Theory of Computing, ACM; 2013. p. 765–774.