Abstract

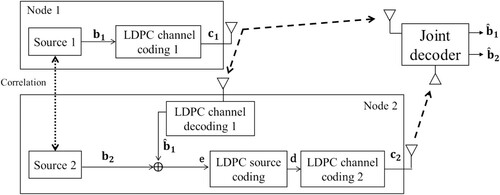

This paper investigates a new communication system in which two nodes disseminate highly correlated contents to a single destination, and which can be applied to densely deployed wireless sensor networks. Motivated by their capacity-achieving performance and existing practical implementations, the proposed communication scheme is fully based on Low-Density Parity-Check (LDPC) codes for data compression and channel coding. More specifically, we consider a network of two correlated binary sources with two orthogonal communication phases. Data are encoded at the first source with an LDPC channel code and broadcast in the first phase. Based on the data received from the first source, the second source computes the correlation vector and applies a Joint Source–Channel (JSC) LDPC code, whose output is communicated in the second phase. At the receiver, the whole network is mapped onto a joint factor graph over which an iterative message-passing joint decoder is proposed. The aim of the joint decoder is to exploit the residual correlation between the sources for better estimation. Simulation results are compared to the theoretical limits and to an LDPC-based distributed coding system where no inter-node compression is applied.

1. Introduction

In densely deployed wireless sensor or Internet of Things networks, several nodes disseminate physical information to a central node for decision-making. Due to the redundant nature of the collected data, the information gathered by these nodes is generally correlated in space (different camera views, temperature or pressure samples from different sensors, etc.). In several cases, many nodes measure the same target phenomenon; hence, the correlation of the data is very high, and the redundant data dominate the transmission bandwidth and can cause uplink congestion.

In the information and coding theory community, the problem of sending correlated data from non-communicating sources to a unique destination is called distributed source coding, where the uncompressed correlation is exploited at the destination. The information-theoretic lossless compression bounds on distributed source coding of two correlated sources are defined by the Slepian-Wolf (SW) theorem [Citation1]. In fact, the latter states that two independent and identically distributed (i.i.d) sources can be compressed to a source rate $R \geq H(S_1, S_2)$, where $H(S_1, S_2)$ is the joint entropy of the sources $S_1$ and $S_2$ [Citation1]. The result is also combined with the well-known Shannon theorem for the case of the transmission of correlated sources over noisy channels [Citation2].
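For reference, the complete Slepian-Wolf admissible rate region for two correlated sources $S_1$ and $S_2$ can be written as below. This is the standard textbook statement rather than a formula taken from this paper; the individual rates $R_1$ and $R_2$ are notation introduced here for illustration only.

```latex
\begin{align}
R_1 &\geq H(S_1 \mid S_2), \\
R_2 &\geq H(S_2 \mid S_1), \\
R_1 + R_2 &\geq H(S_1, S_2) = H(S_1) + H(S_2 \mid S_1).
\end{align}
```

The sum-rate constraint is the relevant one when an overall system rate is compared against the joint entropy of the sources.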

In this framework, several studies proposed practical joint source–channel coding and decoding techniques for the transmission of correlated sources [Citation3–6]. In Ref. [Citation3], the authors proposed Turbo-based JSC decoding that exploits the inter-source dependence to perform channel error control for Additive White Gaussian Noise (AWGN) channels. A similar system was investigated in Ref. [Citation4] to transmit correlated sources over fading multiple access channels with three different source coding strategies. Besides, the authors in Ref. [Citation5] proposed a hybrid digital/analog coding scheme to send correlated sources over discrete-memoryless two-way channels. Other contributions used channel codes to exploit the inter-source correlation in the framework of distributed source coding [Citation7–11]. LDPC-based iterative joint decoding schemes were proposed in [Citation7, Citation9, Citation10]; these schemes use two types of iterations, named local and global iterations. In the local iterations, the LDPC decoding process performs the Sum-Product (SP) algorithm independently for the two sources; the sources' correlation is then estimated during the global iterations. It was also demonstrated in [Citation12] that Turbo codes could reach the SW limit for distributed lossless source encoding.

All the presented contributions assume no inter-node communication. Hence, all the correlation is transmitted and then exploited by adequate JSC decoding. However, in wireless communications with orthogonal signalling, when the first source transmits its data, the signal is naturally broadcast and received by the second source, so the correlation can be evaluated and compressed before transmission in the second phase. This observation motivates the current work where, unlike the described solutions, inter-node compression is used based on source LDPC codes, and JSC decoding is applied to exploit the residual correlation that remains after compression.

The proposed system aims to transmit two correlated binary sources to a destination over two independent AWGN channels. At the first source, an LDPC channel code is used. At the second source, based on the data received from the first source, we apply JSC LDPC coding [Citation13] to compress and protect the correlation vector. The main contributions of this work are twofold. First, an inter-node compression coding system based on JSC LDPC codes is introduced to transmit correlated binary sources. Second, a global network Tanner graph is presented and used to apply message-passing iterative joint decoding that exploits the residual correlation of the two sources after compression. Simulation results are compared to the Shannon-SW limits and to an equivalent LDPC-based system with distributed source coding.

This paper is organized as follows. Section 2 gives a detailed overview of the system model. Section 3 describes the message-passing decoding process. Section 4 investigates the system efficiency through simulations, and Section 5 concludes the paper.

2. The proposed communication system with inter-node compression based on JSC LDPC codes

As mentioned, we study a joint coding system composed of two memoryless binary sources and one single destination. Each source communicates its own data to the destination through an independent AWGN channel, based on LDPC codes. As shown in Figure 1, the source $S_1$ generates a binary i.i.d sequence $s_1$. We assume that the sequence $s_1$ is $k$ bits long and is encoded by an LDPC channel code providing a codeword $c_1$ such that

$$c_1 = G_1^T s_1 \tag{1}$$

with the generator matrix $G_1$ of size ($k \times n_1$). Then, the encoded bit-stream $c_1$ is broadcast over AWGN channels by means of BPSK modulation. We denote by $R_{c1} = k/n_1$ the first source LDPC channel coding rate. Besides, the second source $S_2$ generates binary data $s_2$, which are supposed to be correlated with $s_1$ by means of a crossover probability p. In other words, $s_2 = s_1 \oplus z$, where z is a random variable with probabilities $P(z = 1) = p$ and $P(z = 0) = 1 - p$. The probability (1-p) is defined as the correlation ratio between the two sources. The smaller p is, the more correlated the sources are.
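The source model above can be illustrated with a short simulation. The following is a minimal sketch, assuming the second sequence is the first one XORed with an i.i.d. Bernoulli(p) flip pattern; the function name `correlated_sources`, its parameters, and the fixed seed are hypothetical choices made for illustration, not part of the paper.

```python
import random


def correlated_sources(k, p, seed=0):
    """Return (s1, s2, z) with s1 uniform i.i.d. bits and s2 = s1 XOR z, P(z=1) = p."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    s1 = [rng.randint(0, 1) for _ in range(k)]
    z = [1 if rng.random() < p else 0 for _ in range(k)]
    s2 = [a ^ b for a, b in zip(s1, z)]
    return s1, s2, z


s1, s2, z = correlated_sources(k=1800, p=0.01)
# Fraction of positions where the two sources differ (empirical crossover).
crossover = sum(a != b for a, b in zip(s1, s2)) / len(s1)
print(f"empirical crossover probability: {crossover:.4f}")
```

The empirical crossover fluctuates around p for finite k, which is why short blocks may look more or less correlated than the nominal value.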

At the second source, we can recover a noisy version of $c_1$ after the wireless broadcast transmission phase of $S_1$. The received version is then decoded at the second source to reconstruct an estimate of $s_1$, called $\hat{s}_1$. The latter is obtained after BP iterative decoding on the Tanner graph of LDPC channel code 1, constructed based on the parity check matrix $H_1$. Then, we construct the correlation vector as $e = \hat{s}_1 \oplus s_2$, being the XOR of the vectors $\hat{s}_1$ and $s_2$. The obtained vector e is very redundant, which motivates the use of an LDPC source encoder [Citation13] with an ($m \times k$) parity check matrix $H_s$ providing a compressed sequence as

$$d = H_s e \tag{2}$$

The compressed sequence d is then encoded by a second LDPC channel code using the generator matrix $G_2$ with $m \times n_2$ dimensions as

$$c_2 = G_2^T d \tag{3}$$

The codeword $c_2$ is modulated by BPSK and transmitted to the destination over an AWGN channel. $R_s$ denotes the source coding rate of $S_2$ and $R_{c2}$ is the corresponding channel coding rate. Since we consider orthogonal signalling, the overall rate of the proposed system is $R = 2k/(n_1 + n_2)$.
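The compression step at the second source can be illustrated as a syndrome computation over GF(2). The sketch below assumes that the compressed sequence is the product of the source parity check matrix and the correlation vector, taken modulo 2, in the manner of the LDPC source encoder of [Citation13]. The 3 × 6 matrix is a toy example chosen for readability and is not an LDPC design from the paper; the names `syndrome`, `H_s`, `e`, and `d` are illustrative.

```python
def syndrome(H, e):
    """Return d = H e (mod 2) for a binary matrix H (list of rows) and a bit vector e."""
    return [sum(h * x for h, x in zip(row, e)) % 2 for row in H]


# Toy parity check matrix (hypothetical): 3 check rows, 6 correlation bits.
H_s = [
    [1, 1, 0, 1, 0, 0],
    [0, 1, 1, 0, 1, 0],
    [1, 0, 1, 0, 0, 1],
]

# A sparse correlation vector: the two sources differ in one position only.
e = [0, 0, 1, 0, 0, 0]

d = syndrome(H_s, e)
print(d)  # 3 compressed bits instead of 6
```

Because e is sparse when the sources are highly correlated, a short syndrome is usually enough for the decoder to recover it, which is what makes the compression worthwhile.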

3. The joint network-mapped iterative decoder

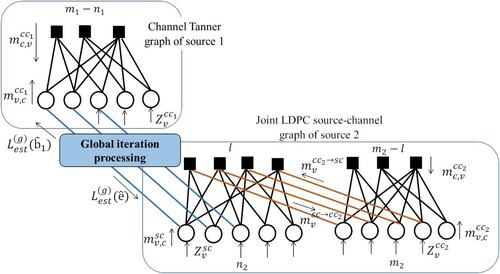

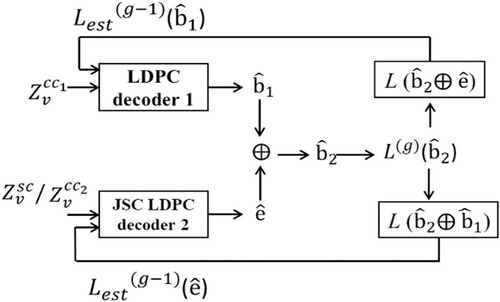

The aim of the joint decoder, applied at the receiver, is to generate the best estimates of the original source data based on error-correcting LDPC decoding, while exploiting the residual correlation between the two sources after LDPC correlation compression.

Based on the factor graph representation available for source and channel LDPC codes, the network joint decoder of correlated sources can be described by two elementary connected decoders as shown in Figure 2. The global graph components are the channel decoder graph of source 1 and the joint source–channel decoder graph of source 2. The decoding process uses message transfer based on the Belief Propagation (BP) algorithm and is composed of two types of iterations: local iterations l and global iterations g. First, a local processing is applied where the LDPC channel decoder 1 of source 1 and the joint source–channel decoder 2 of source 2 apply the BP algorithm with a maximum number of local iterations. After these local iterations, the outputs of the elementary decoders provide the estimates of $s_1$ and e, denoted respectively by $\hat{s}_1$ and $\hat{e}$, which are inherently related to the original sequence $s_2$ of the second source. We propose at a second decoding stage to compute an estimate of the sources' correlation using global iterations to enhance the performance of the joint decoders by providing extra a priori information. The latter is provided by the correlation update process depicted in Figure 3, where the $\hat{s}_2$ vector is estimated as

$$\hat{s}_2 = \hat{s}_1 \oplus \hat{e} \tag{4}$$

As shown in Figure 3, the correlation estimate, applied by updating the log-likelihood ratios (LLRs) during global iterations, provides a priori LLR messages that are passed respectively to the channel decoder graph 1 and the joint source–channel LDPC decoder 2 for further local iterations. We note that at the first global iteration g = 0, these messages are initialized to zero, and for the next iterations, they are appended to the systematic variable nodes of each corresponding decoder. The joint global decoder message transfer is described in more detail as follows for a specific l-th local and g-th global iteration.

Figure 3. The global iteration joint decoder block diagram with correlation exploitation and LLRs updating.

For the elementary LDPC channel decoder 1, the messages sent by the variable nodes to a check node c are

(5)

where the channel observation LLRs are used to initialize the variable nodes, and the a priori LLRs correspond to the estimates of the first source vector components at the global iteration g. The expression of these a priori LLRs is

(6)

where the correlation LLRs correspond to the components of the correlation vector and are estimated as [Citation9]

(7)

with k and the Hamming weight being computed, respectively, as the length and the weight of the estimated correlation sequence $\hat{s}_1 \oplus \hat{s}_2$.
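The correlation update described above can be sketched as follows. This is an assumed form, not the paper's exact equations: the crossover probability is estimated as the Hamming weight of the XOR of the two estimated sequences divided by their length, and the correlation LLR is taken as the logarithm of (1 - p̂)/p̂. The function names, the clipping constant `eps`, and the LLR sign convention (positive means "bits agree") are hypothetical choices.

```python
import math


def xor(a, b):
    """Bitwise XOR of two equal-length bit lists."""
    return [x ^ y for x, y in zip(a, b)]


def correlation_llr(s1_hat, s2_hat, eps=1e-9):
    """LLR of the correlation variable from the Hamming weight of s1_hat XOR s2_hat."""
    k = len(s1_hat)
    weight = sum(xor(s1_hat, s2_hat))
    # Clip the estimate so the logarithm stays finite when weight is 0 or k.
    p_hat = min(max(weight / k, eps), 1.0 - eps)
    return math.log((1.0 - p_hat) / p_hat)


# Toy estimates: the decoders output s1_hat and e_hat, then s2_hat = s1_hat XOR e_hat.
s1_hat = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]
e_hat = [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
s2_hat = xor(s1_hat, e_hat)

llr_z = correlation_llr(s1_hat, s2_hat)
print(f"correlation LLR: {llr_z:.4f}")
```

A large positive value indicates that the two sequences rarely differ, so the a priori information passed between the decoders is strong.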

These messages are computed after the local iterations at the second source joint graph by

(8)

The messages delivered by the check nodes of the channel decoder 1 to the connected variable nodes are evaluated as

(9)
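As an illustration of the message transfer in an elementary decoder, the sketch below implements the textbook sum-product updates for one variable node and one check node, with an extra a priori term added at the variable node. It is a generic formulation and may differ in notation and detail from the equations of this paper; the function names, the dictionary-based message storage, and the BPSK mapping (bit 0 sent as +1) are assumptions.

```python
import math


def bpsk_awgn_llr(y, sigma2):
    """Channel LLR for BPSK over AWGN, assuming bit 0 -> +1 and noise variance sigma2."""
    return 2.0 * y / sigma2


def variable_to_check(channel_llr, prior_llr, incoming, exclude):
    """Variable-node message to check `exclude`: channel + a priori + other checks."""
    extrinsic = sum(m for c, m in incoming.items() if c != exclude)
    return channel_llr + prior_llr + extrinsic


def check_to_variable(incoming, exclude):
    """Check-node message to variable `exclude` using the tanh rule."""
    prod = 1.0
    for v, m in incoming.items():
        if v != exclude:
            prod *= math.tanh(m / 2.0)
    # Keep the product strictly inside (-1, 1) so atanh stays finite.
    prod = max(min(prod, 1.0 - 1e-12), -1.0 + 1e-12)
    return 2.0 * math.atanh(prod)


# One variable node connected to checks 0, 1, 2, with a correlation prior of 0.5.
v2c = variable_to_check(1.0, 0.5, {0: 0.2, 1: -0.3, 2: 0.4}, exclude=1)
# One check node connected to variables 0, 1, 2.
c2v = check_to_variable({0: 2.0, 1: -1.0, 2: 0.7}, exclude=2)
print(v2c, c2v)
```

Setting `prior_llr` to zero recovers plain channel decoding, which corresponds to the first global iteration where no correlation information is available yet.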

The message transfer of the joint source–channel LDPC decoding process for the second source elementary decoder behaves in the same way [Citation13]. However, in the proposed system, we append the correlation update messages to the variable nodes of the LDPC source decoding part. Hence, after a fixed number of local iterations, the updated LLR for the variable nodes of the LDPC source decoder is calculated as

(10)

where the a priori LLRs are associated with the correlation vector. These LLRs are evaluated as

(11)

where the remaining term is given by

(12)

After a fixed number of global iterations of the joint global decoder, the first source sequence is estimated based on the following a posteriori LLR

(13)

Also, the information vector is estimated based on the correlation vector LLRs calculated by

(14)

4. Simulation results

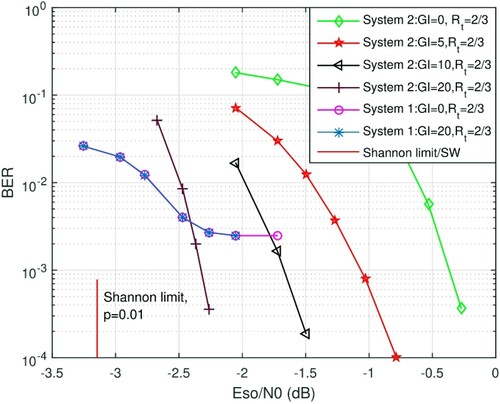

In this section, we investigate the performance of the proposed inter-node compression scheme with JSC LDPC codes. Also, we compare the performance of our proposed system to a distributed coding system [Citation11] where no communication is allowed between the two sources, and the correlation is fully exploited at the decoder (not compressed). The distributed coding system is composed of two binary sources, where data are encoded independently by LDPC channel codes and then sent to the destination through AWGN channels.

We consider a binary i.i.d sequence with information length k = 1800 bits, a regular LDPC channel code with a code rate $R_{c1} = 1/2$, and degrees defined by ($d_v$ = 3, $d_c$ = 6) at source $S_1$. At the second source, we assume the same sequence length of 1800 bits and apply JSC source and channel regular LDPC codes with respective rates $R_s$ and $R_{c2}$, both with (3, 6) degrees. The overall rate of the system is R = 2/3. At the decoder, we apply the described message-passing algorithm with a fixed maximum number of local iterations and different numbers of global iterations, denoted by GI. Also, we assume that the two sources are at the same distance from the destination and consequently have the same signal-to-noise ratio. However, we suppose that $S_2$ is very close to $S_1$ and that the inter-node link suffers from no communication errors ($p_e = 0$). We studied in [Citation14] the case where the wireless link between sources is noisy with a bit error probability pe. This case can be tackled by applying an LLR updating function [Citation14] and is omitted in this paper. For the reference distributed system, we consider channel coding rates $R_{c1} = 2/3$ and $R_{c2} = 2/3$ with constant degrees (3, 9), which means a global rate equal to 2/3.
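The rate budget of both systems can be checked with a few lines of arithmetic. The sketch below rests on assumptions that are not stated explicitly in the text: the design rate of a regular LDPC code with variable degree dv and check degree dc is 1 - dv/dc, a (3, 6)-regular source parity check matrix halves the sequence length, and the overall rate is the number of source bits divided by the number of transmitted channel bits. The variable names are illustrative.

```python
from fractions import Fraction


def regular_ldpc_design_rate(dv, dc):
    """Design rate of a regular LDPC code with variable degree dv and check degree dc."""
    return 1 - Fraction(dv, dc)


k = 1800  # information bits per source

# Proposed system: source 1 uses a (3, 6) channel code.
n1 = int(k / regular_ldpc_design_rate(3, 6))  # channel bits sent in phase 1

# Assumption: a (3, 6)-regular source parity check matrix has k * 3 / 6 rows,
# so the correlation vector is compressed to m syndrome bits.
m = k * 3 // 6
n2 = int(m / regular_ldpc_design_rate(3, 6))  # channel bits sent in phase 2

overall_rate = Fraction(2 * k, n1 + n2)

# Reference distributed system: both sources use a (3, 9) channel code.
reference_rate = regular_ldpc_design_rate(3, 9)

print(n1, m, n2, overall_rate, reference_rate)
```

Under these assumptions both systems spend the same number of channel bits per source bit, which is what makes the BER comparison at equal overall rate meaningful.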

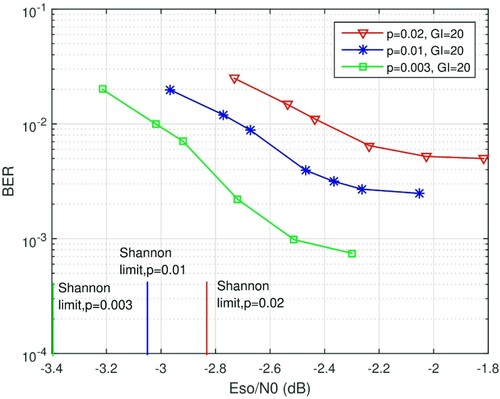

In Figure 4, we plot the BER performance as a function of Eso/N0 for the inter-node compression system (system 1) and the distributed coding system (system 2). The crossover probability p between the two sources used in this experiment is equal to 0.01. To analyze the efficiency of the proposed joint decoder, we also consider the theoretical SW/Shannon bound proposed in [Citation15], where Eso/N0 should be greater than

(15)

where Eso is the energy per source bit, $N_0$ is the noise power spectral density, $H(S_1, S_2)$ is the sources' joint entropy, and R is the overall coding rate. The joint entropy is given by $H(S_1, S_2) = H(S_1) + H_b(p)$, with $H_b(p) = -p \log_2 p - (1 - p) \log_2 (1 - p)$, and depends on the first source entropy H(S1) (equal to 1 here) and on the crossover probability p.
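The joint entropy used in the bound can be evaluated numerically. The sketch below assumes the chain rule for a binary symmetric correlation model, where the joint entropy equals the first source entropy plus the binary entropy of the crossover probability; the function names are illustrative.

```python
import math


def binary_entropy(p):
    """Binary entropy function in bits, with the convention 0 * log2(0) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def joint_entropy(p, h_s1=1.0):
    """Joint entropy of two binary sources linked by crossover probability p."""
    return h_s1 + binary_entropy(p)


for p in (0.02, 0.01, 0.003):
    print(f"p = {p}: joint entropy = {joint_entropy(p):.4f} bits")
```

As p decreases, the joint entropy approaches the entropy of a single source, so the theoretical limit on Eso/N0 becomes less demanding for highly correlated sources.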

Figure 4. BER performance, at equivalent rates, of the inter-source correlation compression and distributed coding systems with crossover probability p = 0.01.

From the obtained results, we observe that the performance of system 1 in the waterfall region is better than that of system 2. The proposed system provides a gain of about 2.2 dB with no global iterations (GI = 0).

The gap of the proposed system with respect to the SW/Shannon theoretical limit is less than 1 dB. However, while it is clear that the performance of the distributed system 2 is substantially improved after exploiting the sources' correlation during the global iterations, these iterations have no impact on the performance of our proposed system with inter-node compression. This behaviour is explained by the fact that the compression scheme applied at the second source drastically reduces the correlation between the sources; hence, there is no residual redundancy to exploit. Consequently, if no extra computation is allowed at the destination node, the inter-node compression can be beneficial since we can reach fair performance with no need for global iterations. Finally, we also observe a higher error floor, explained by the compression loss, which depends on the source coding operation applied at the second source and is specific to the joint LDPC source coding process.

We previously demonstrated in [Citation16] that, in the case of point-to-point communication, the joint LDPC system achieves larger improvements and lower error floors when the source is highly correlated. In the second set of experiments, we study, in Figure 5, the effect of the crossover probability p on the performance of the inter-node compression system in the waterfall and error floor regions.

We consider three crossover probabilities: p = 0.02, p = 0.01, and p = 0.003. Also, we use the theoretical limit of Eso/N0 as a benchmark for the different values of p. We recall that the number of global iterations is oversized, especially for high values of p where no improvement is obtained, due to LDPC correlation compression. We can conclude from the results that a lower parameter p yields better performance in both the waterfall and error floor regions. We observe a gain of about 0.3 dB with p = 0.003 compared to the case where p = 0.01, together with a reduced error floor. The practical system behaviour also agrees with the theoretical limits, and a gap of less than 1 dB is always achieved.

5. Conclusion

In this paper, we studied a joint source–channel coding system that compresses the inter-node correlations based on LDPC codes. First, we presented the system model, which is composed of two binary sources and one destination, with two communication phases. Second, we developed a joint decoder with two stages of iterations to exploit the source correlation. Based on computer simulations, we demonstrated that the proposed system, without applying any global iterations, could provide slightly better results in the waterfall region than a distributed coding system exploiting the source correlation, at the cost of a quality loss for high SNRs. The proposed system can be beneficial for downlink wireless sensor network systems, where the computation cost is increased at the intermediate source with XORing and LDPC compression, but very low processing is needed at the destination by omitting global iterations.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Slepian D, Wolf J. Noiseless coding of correlated information sources. IEEE Trans Inf Theory. 1973;19(4):471–480.

- Garcia-Frias J, Zhao Y. Near-Shannon/Slepian-Wolf performance for unknown correlated sources over AWGN channels. IEEE Trans Comm. 2005;53(4):555–559.

- Garcia-Frias J. Joint source-channel decoding of correlated sources over noisy channels. Data Compression Conf.; 2001. p. 283–292.

- Argyriou A, Alay O, Palantas P. Modeling the lossy transmission of correlated sources in multiple access fading channels. Phy Comm. 2017;24:34–45.

- Weng JJ, Alajaji F, Linder T. Joint source-channel coding for the transmission of correlated sources over two-way channels. IEEE ISIT; 2019. p. 1322–1329.

- Huo Y, Zhu C, Hanzo L. Spatio-temporal iterative source–channel decoding aided video transmission. IEEE Trans Veh Technol. 2013;62(4):1597–1609.

- Daneshgaran F, Laddomada M, Mondin M. LDPC-based channel coding of correlated sources with iterative joint decoding. IEEE Trans Comm. 2006;54(4):577–582.

- Aljohani AJ, Ng SX. Distributed joint source-channel coding-based adaptive dynamic network coding. IEEE Access. 2020;8:86715–86731.

- Asvadi R, Matsumoto T, Juntti M. Joint distributed source-channel decoding for LDPC-coded binary Markov sources. IEEE PIMRC; 2013. p. 807–811.

- Khas M, Saeedi H, Asvadi R. LDPC code design for correlated sources using EXIT charts. IEEE ISIT; 2017. p. 2945–2949.

- Nangir M, Asvadi R, Ahmadian-Attari M, et al. Analysis and code design for the binary CEO problem under logarithmic loss. IEEE Trans Commun. 2018;66(12):6003–6014.

- Aaron A, Girod B. Compression with side information using turbo codes. Data Compression Conf.; 2002. p. 252–261.

- Fresia M, Perez-Cruz F, Poor HV, et al. Joint source and channel coding. IEEE Signal Process Mag. 2010;27(6):104–113.

- Abdessalem MB, Zribi A, Matsumoto T, et al. LDPC-based joint source channel coding and decoding strategies for single relay cooperative communications. Phys Commun. 2020;38:100947.

- Garcia-Frias J. Joint source-channel decoding of correlated sources over noisy channels. Data Compression Conf.; 2001. p. 283–292.

- Ben Abdessalem M, Zribi A, Bouallegue A. Analysis of joint source channel low-density parity-check (LDPC) coding for correlated sources transmission over noisy channels. Intl. Conf Communications, Networking and Mobile Computing, 2017.