Abstract

This paper reviews the key perspectives on human error and analyses the core theories and methods developed and applied over the last 60 years. These theories and methods have sought to improve our understanding of what human error is, and how and why it occurs, to facilitate the prediction of errors and use these insights to support safer work and societal systems. Yet, while this area of Ergonomics and Human Factors (EHF) has been influential and long-standing, the benefits of the ‘human error approach’ to understanding accidents and optimising system performance have been questioned. This state of science review analyses the construct of human error within EHF. It then discusses the key conceptual difficulties the construct faces in an era of systems EHF. Finally, a way forward is proposed to prompt further discussion within the EHF community.

Practitioner statement: This state-of-science review discusses the evolution of perspectives on human error, as well as trends in the theories and methods applied to understand, prevent and mitigate error. It concludes that, although a useful contribution has been made, we must move beyond a focus on individual error to a focus on systems failure if we are to understand and optimise whole systems.

1. Introduction

Many of us, particularly those working within the safety-critical industries, received a fundamental education in human error models (e.g. Rasmussen 1982; Reason 1990, 1997) and methods (e.g. Kirwan 1992a, 1992b). The simplicity of the term ‘human error’ can be a blessing. We have likely used it to explain our role to those outside the discipline. Indeed, the familiarity of human error within everyday language may have facilitated buy-in for the importance of ergonomics and human factors (EHF).¹ However, its simplicity may also be a curse (Shorrock 2013), with unintended consequences for safety and justice, and for the EHF discipline generally.

Currently, EHF is in the midst of a shift that is changing the nature of many long-standing concepts and introducing subtleties that are harder to explain to clients of EHF services, the media, the justice system and the public. The movement from the ‘old view’ to the ‘new view’ of human error proposed by Dekker (2006), and from Safety-I to Safety-II (Hollnagel et al. 2013; Hollnagel 2014), considers many of these challenges and introduces newer concepts to safety management. Whether considered a paradigm shift (e.g. Provan et al. 2020) or more of an evolution in thinking, recent discourse has challenged the practical usefulness of human error methods and theories (Salmon et al. 2017). Underpinning this is a fundamental change in focus in EHF, from analysing human-technology interactions to a broader, more holistic form of thinking that draws on various aspects of complexity science (Dekker 2011b; Salmon et al. 2017; Walker et al. 2010).

Along with this is the recognition that activity occurs within sociotechnical systems, comprising human and technical components that work together to achieve a common goal. In complex sociotechnical systems, outcomes (e.g. behaviours, accidents, successes) emerge from the interactions between multiple system components (i.e. humans and technologies). These interactions are dynamic, non-linear (i.e. the magnitude of an effect is not proportional to that of its cause) and non-deterministic (i.e. uncertain and difficult to predict). People act locally, without knowledge of the system as a whole; thus, different perspectives and worldviews exist. Importantly, complex sociotechnical systems are generally open to their environment and must respond and adapt to environmental changes. These aspects differentiate complex systems from merely complicated systems, in which the relationships between components can be analysed with more certainty. Many traditional engineered artefacts, such as a jumbo jet or an automobile, can be conceptualised as complicated systems. These systems can be reduced to their parts, analysed, and then reassembled into the whole. However, once a social element (i.e. human interaction) falls within the system boundary, the system becomes complex (Cilliers 1998; Dekker, Cilliers, and Hofmeyr 2011). Complex systems are indivisible, and therefore the system must be the unit of analysis (Ottino 2003). Ackoff (1973) described how we must move away from ‘machine age’ views of the world, which assume that systems are complicated and can thus be treated in a reductionist manner (i.e. broken into constituent parts, analysed and reassembled into the whole). The alternative is systems thinking: a way of viewing the world in terms of systems, emphasising interactions and relationships, multiple perspectives, and patterns of cause and effect. Here, the system is the unit of analysis, and component behaviour should only be considered within the context of the whole. A key implication of systems thinking is that accidents cannot be attributed to the behaviour of an individual component (i.e. a human error); instead, we must examine how interactions between components failed, that is, how the system itself failed. Note that, in using the term ‘system failure’, we acknowledge that systems themselves only function; outcomes are defined as successes or failures from the perspective of human stakeholders (i.e. whether or not stakeholders’ purposes and expectations of the system are met).

There have been many influential works outlining this case for change (e.g. Rasmussen 1997; Leveson 2004; Dekker 2002; Hollnagel 2014). Although these debates began over thirty years ago (Senders and Moray 1991), they remain unresolved. While systems thinking perspectives are experiencing something of a resurgence in EHF (Salmon et al. 2017), this perspective has yet to filter through to the media or justice systems (e.g. Gantt and Shorrock 2017). This creates a difficult situation in which ambiguous messages from the EHF community about the validity and utility of human error are potentially damaging to the discipline (Shorrock 2013). This state of science review is therefore timely. This paper aims to summarise the history and current state of human error research, critically evaluate the role of human error in modern EHF research and practice, summarise the arguments for a shift to systems thinking approaches, and provide recommendations for EHF researchers and practitioners to take the discipline forward.

1.1. Consequences of ‘human error’

Human error continues to be cited as the cause of most accidents (Woods et al. 2010). Emerging in the 1970s as a focus of accident investigations following disasters such as Three Mile Island and Tenerife, human error explanations came to supplement the earlier engineering-led focus on equipment and technical failures (Reason 2008). At present, it is commonly stated across the safety-critical domains that human failure causes most accidents (in the range of 50–90%, depending on the domain; e.g. Baybutt 2002; Guo and Sun 2020; Shappell and Wiegmann 1996). The United States National Highway Traffic Safety Administration (NHTSA 2018) assigns 94% of road crashes to the driver, a figure interpreted as ‘94% of serious accidents are caused by human error’ (e.g. Rushe 2019). In aviation, human error is implicated in the majority of the UK Civil Aviation Authority’s (CAA) ‘significant seven’ accident causes (CAA 2011), and it has been estimated that medical error is the third leading cause of death in the USA (Makary and Daniel 2016). It is no surprise that figures like these are used to justify replacing humans with automation, or introducing stricter behavioural controls (i.e. rules or procedures) backed by punishment to deter non-compliance. However, despite (and sometimes because of) safety controls such as automation, behavioural controls and punishments, accidents still occur. Indeed, in many domains the long-term decline in accident rates in more developed countries has now plateaued, for example in aviation (Wiegmann and Shappell 2003), road (Department of Transport 2017; NHTSA 2019), and rail (Walker and Strathie 2016). The traditional view of human error has taken us as far as it can and, in doing so, has increasingly exposed the systemic nature of the errors ‘left over’, along with the limitations of existing models and methods (Leveson 2011; Salmon et al. 2017).

1.2. Origins and use of the term

The human tendency to attribute causation to the actions of another human appears to be part of our nature (Reason Citation1990). Yet, efforts to translate this common attribution into a scientific construct have proved difficult. To provide context for the modern usage of the concept of error, we begin by exploring its history in common language and its early development as a scientific construct.

The term ‘error’ has a long history. The Latin errorem, from which error derives, meant ‘a wandering, straying, a going astray; meandering; doubt, uncertainty’; also ‘a figurative going astray, mistake’.² Around 1300, the Middle English errour meant, among other things, ‘deviation from truth, wisdom, good judgement, sound practice or accuracy made through ignorance or inadvertence; something unwise, incorrect or mistaken’ or an ‘offense against morality or justice; transgression, wrong-doing, sin’ (Kurath 1953/1989). The term ‘accident’ derives from the Latin cadere, meaning ‘to fall’,³ which is similar in meaning to errorem in that both imply movement away from some objective ‘correct path’. This requires a judgement to be made as to what the ‘correct path’ is and the nature of any transgression from it. Judgement is therefore key. It is defined as ‘the ability to make considered decisions or come to sensible conclusions’ or ‘a misfortune or calamity viewed as a divine punishment’.⁴ ‘Judgement’ is the noun and ‘to judge’ the verb, and herein lies the etymological relationship between human error and ‘blame’: blame connotes ‘responsibility’, ‘condemnation’ or even ‘damnation’. Individual responsibility is fundamental to Western criminal law (Horlick-Jones 1996), and the overlapping relationships between error, accident, judgement and blame play out regularly in legal judgements and popular discourse, in what Horlick-Jones refers to as a ‘blamist’ approach.

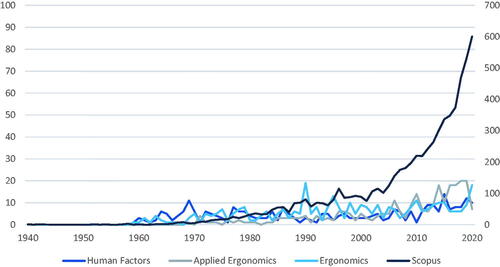

Human error is used more precisely, and more extensively, within the EHF literature. This is shown in Figure 1, which is based on a keyword search (for ‘human error’ and ‘error’) of the titles, abstracts and keywords of articles in three of the top EHF journals (based on impact factor) and of the wider literature indexed by Scopus. Figure 1 shows that the term ‘human error’ began to be used in the mid-to-late 1960s, which aligns with the establishment of specialised EHF journals (e.g. Ergonomics in 1957). Use of the term has increased somewhat over time within EHF journals, but much more sharply within the wider academic literature indexed by Scopus. Clearly, this is a topic that continues to engage writers, both positively and negatively.

Figure 1. Trends in the use of the term ‘human error’ across journal articles from 1940 to 2020, comparing: Human Factors, Applied Ergonomics, Ergonomics and all records in Scopus (note, Scopus figures refer to secondary y axis due to higher numbers overall).

1.3. The emergence of human error as a scientific concept

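Interest in error within psychology predates World War II, but the scientific origins of human error as a construct lie in the 1940s. Its development is traced here across four key periods.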

1.3.1. 1900 to World War II

Human error was a topic of interest to the followers of Freud and psychodynamics, to the behaviourists, and to those from the Gestalt school of psychology. Freud saw the unconscious as playing a role in behaviour (e.g. the eponymous ‘Freudian slip’; Freud and Brill 1914). In early psychophysics research, the causes of error were not studied per se, but errors were used as an objectively observable measure of performance (Amalberti 2001; Green and Swets 1966). Behaviourist research shared this interest in observable indicators of error (Watson 1913), with some limited interest in phenomena such as negative transfer of training (e.g. Singleton 1973). Errors of perception were a common subject of study within the Gestalt school (Wehner and Stadler 1994). Systematic errors in interpreting visual illusions, for example, can be seen as representing early human error ‘mechanisms’ (Reason 1990). An interesting exception to the more dominant schools of behaviourism and psychophysics was Bartlett’s (1932) schema theory, which focussed on the role of internal scripts in guiding behaviour. To an extent, Bartlett’s work presaged the coming of the ‘cognitive revolution’.

1.3.2. World War II – a turning point

The equipment and technologies deployed during World War II created an imperative to understand and address human error. In 1942 a young psychology graduate, Alphonse Chapanis, joined the Army Air Force Aero Medical Lab as its first psychologist. He studied the controls of the Boeing B-17, an aircraft that had been over-represented in crash landings. Chapanis identified that the flaps and landing gear had identical switches which were co-located and operated in sequence. He determined that, during the high-workload period of landing, pilots were retracting the landing gear instead of the flaps. Chapanis solved the design issue by attaching a small rubber wheel to the landing gear lever and a small wedge shape to the flap lever, in what we would now call analogical mapping (Gentner 1983) or shape coding. Fitts and Jones (1947) built on Chapanis’ work by showing that many other so-called “pilot errors” (quotation marks were used by Fitts and Jones) were in fact problems of cockpit design: “Practically all pilots of present-day AAF aircraft, regardless of experience or skill, report that they sometimes make errors in using cockpit controls. The frequency of these errors and therefore the incidence of aircraft accidents can be reduced substantially by designing and locating controls in accordance with human requirements” (p. 2). As a result of this pioneering work, not only did these (so-called) pilot errors virtually disappear, but more formalised research into human error also began.

1.3.3. Post-World War II to 1980s

Following World War II, the language and metaphors of new fields of enquiry such as cybernetics and computing found expression in new concepts of human error in the emerging field of cognitive psychology. Information processing models, such as Broadbent’s (1958) stage model of attention, made explicit the idea that different cognitive information processing units perform different functions which enable humans to process information from the environment and to act on this information. One of the first information processing-based approaches to human error was Payne and Altman’s (1962) taxonomy. This categorised errors associated with sensation or perception as ‘input errors’, errors associated with information processing as ‘mediation errors’, and errors associated with physical responses as ‘output errors’. Not all errors, therefore, were the same.

The pioneering work of Chapanis and Fitts also revealed that those committing an error were often as perplexed as the psychologists as to why it had occurred. This prompted greater attention to the psychological underpinnings of error which, in turn, began to challenge the ‘rational actor’ or utility theories implicit in human error thinking up to that point; such theories were first proposed by Daniel Bernoulli in the 1700s and were popular in economics around the 1950s (Tversky 1975). The idea of local or bounded rationality (Simon 1957) was proposed, whereby the rationality of decisions or actions must be defined from the local perspective of the person acting in the particular situation, taking into account their knowledge, their goals and the environmental constraints under which they are operating (Gibson 1979; Woods and Cook 1999). Far from seeking to rationally optimise an outcome, humans were observed to operate as ‘satisficers’ (Simon 1956) who make use of ‘fast and frugal’ heuristics within an adaptive toolbox of strategies (Gigerenzer 2001). Rasmussen and Jensen (1974) demonstrated the benefits of studying normal performance and adaptability during real-world problem-solving, noting that the processes employed by people quite often differed from what “the great interest of psychologists in complex, rational problem-solving leads one to expect” (Rasmussen and Jensen 1974, 7). In another early paper, while still focussed on the categorisation of errors, Buck (1963) demonstrated the benefits of observing normal performance in train driving, as opposed to reviewing failures alone.

1.3.4. 1980s onwards

In the early 1980s, Norman proposed the Activation-Trigger-Schema model of error (Norman 1981), drawing on Bartlett’s schema theory from the pre-World War II era. Only a few years later, as the cognitive systems engineering field began to grow, the human error approach began to be questioned. The NATO Conference on Human Error, organised by Neville Moray and John Senders, provided a key forum for discussion. A position paper submitted by Woods (1983) called for the need to look ‘behind human error’ and to consider how design leads to ‘system-induced error’ (Wiener 1977) rather than ‘human error’. Hollnagel (1983) questioned the existence of human error as a phenomenon and called instead for a focus on understanding decision making and action in a way that accounts for performance variability. In the 1990s these views gathered support but were far from mainstream (we will return to them later), and human error remained largely in step with a rational view of human behaviour. Pheasant (1991), for example, defined error as “an incorrect belief or an incorrect action” (p. 181), and Sanders and McCormick (1993) referred to error as “an inappropriate or undesirable human decision or behaviour” (p. 658). Clearly, there was growing tension between a deterministic view of error and one grounded in bounded rationality and the environment within which errors occur, as exemplified by concepts such as situated action (Suchman 1987) and distributed cognition (Hutchins 1995).

Once environmental and other systemic factors were admitted into the causation of errors, the natural next step was to focus on the dynamic aspects of the complex systems within which errors take place. Rasmussen’s (1997) model of migration proposed that behaviour within a system varies within a core set of system constraints, with behaviours adapting in line with gradients towards efficiency (influenced by management pressure) and least effort (influenced by individual preferences), eventually migrating over time towards the boundary of unacceptable performance. More recently, resilience engineering has emerged to consider “the intrinsic ability of a system to adjust its functioning prior to, during, or following changes and disturbances, so that it can sustain required operations under both expected and unexpected conditions” (Hollnagel 2014). This development led to a re-branding from Safety-I thinking (i.e. a focus on preventing accidents and incidents) to Safety-II (understanding everyday functioning and how things usually ‘go right’). While it has been emphasised that these views are complementary rather than conflicting, Safety-II advocates a much stronger focus on normal performance variability within a system, especially at the higher system levels (e.g. government, regulators), which have traditionally taken a Safety-I view (Hollnagel et al. 2013).

From the etymology of the word error, the growing reference to human error in the scientific literature, and the origins of different theoretical bases, it is clear that human error, as a concept, is central to the discipline, yet not fully resolved. Early EHF work on the concept quickly recognised that human error is very often ‘design-induced error’, and there has been tension ever since between that view and a more mechanistic, colloquial view of error and ‘blamism’. This tension permeates the current state of science. We next consider, more generally, how human error is viewed from different perspectives, including by those outside the EHF discipline. We propose a broad set of perspectives on human error and its role in safety and accident causation (acknowledging that safety is not the only relevant context for discussions of human error). We then explore how ‘human error’ has been defined, modelled and analysed through the application of EHF methods.

2. Perspectives, models and methods for understanding human error

2.1. Perspectives on safety and human error

In this section, we propose four perspectives to synthesise our understanding of human error: the mechanistic, individual, interactionist and systems perspectives. The key aspects of each perspective are summarised in Table 1.

Table 1. Perspectives on safety management and accident causation.

The perspectives broadly represent the evolution of safety management practices over time. While the concept of human error and blame has been prevalent in society throughout history, formal safety approaches such as accident investigation began from an engineering perspective (Reason 2008), followed by the introduction of psychology and EHF, and later the adoption of systems theory and complexity science within EHF. The perspectives could also be seen to fit along a continuum between Dekker’s (2006) ‘old view’ and ‘new view’ of human error, with the mechanistic and individual perspectives representing the old view, the interactionist perspective tending towards the new view, and the systems perspective representing the new view.

2.1.1. The mechanistic perspective

The mechanistic perspective focuses on technology and views human behaviour in a deterministic manner. Underpinned by engineering principles and Newtonian science, this perspective suggests that human behaviour can be predicted with some certainty and that the probability of human failure can be calculated. Reductionist in nature, the mechanistic perspective takes a micro view and aligns with Safety-I thinking in its focus on preventing failures. It tends to view error as a cause of accidents.

2.1.2. The individual perspective

This perspective can be conceptualised as addressing ‘bad apples’ (Dekker 2006) or bad behaviours. A reference to the ‘human factor’ may indicate that this perspective is in use. This approach is often associated with ‘blamism’ and is considered outdated within EHF. It can, however, still be found within safety practice, exemplified in some behaviour-based safety approaches and in the education and enforcement interventions commonly applied to address public safety issues. For example, this approach continues to dominate road safety research and practice, whereby driver behaviour is seen as the primary cause of road crashes and driver education and enforcement are common intervention strategies (e.g. Salmon et al. 2019).

2.1.3. The interactionist perspective

Generally applied in a Safety-I context, this perspective still views error as the cause of accidents, but acknowledges the contributory role of contextual and organisational factors. Sometimes referred to as ‘simplistic systems thinking’ (Mannion and Braithwaite 2017), it does consider system influences on behaviour, but often in a linear or mechanistic fashion, and it is limited to the organisational context. Unlike the mechanistic and individual perspectives, the interactionist perspective does not connote a negative view of humans; often quite the reverse (e.g. Branton’s (1916–90) person-centred approach to ergonomics; Osbourne et al. 2012).

2.1.4. The systems perspective

This perspective, underpinned by systems theory and complexity science, takes a broader view of system behaviour across multiple organisations and acknowledges wider societal influences. It can be differentiated from the interactionist perspective in three ways: it takes the system itself as the unit of analysis (often considering elements beyond the boundary of the organisation); it considers non-linear interactions; and it generally views accidents as ‘systems failures’ rather than adopting an ‘error-as-cause’ view. Uniquely, this perspective can explain accidents where there is no underlying ‘error’, but where the normal performance of individuals across levels of the system leads the system to shift beyond the boundary of safe operation. Johnston and Harris (2019), discussing the Boeing 737 MAX failures, explain that “one must also remember that nobody at Boeing wanted to trade human lives for increased profits… Despite individual beliefs and priorities, organisations can make and execute decisions that none of the participants truly want…” (p. 10). The systems perspective is more often aligned with Safety-II, with the aim of optimising performance rather than only preventing failure. It is also compatible with human-centred design approaches, whereby user needs and perspectives are considered within a broader understanding of system functioning (e.g. Clegg 2000).

2.2. Human performance models

Stemming predominantly from the individual and interactionist EHF perspectives, human performance models (see Table 2) provide conceptual representations of the mechanisms by which errors arise. Each model should be interpreted in the context within which it was developed, as the models reflect the state of the science at the time. Structural models, such as information processing approaches, align with the individual perspective. These models focus on cognitive structures and cognition ‘in the head’. In contrast, functional models, such as the perceptual cycle model (Neisser 1976), rewritable routines (Baber and Stanton 1997; Stanton and Baber 1996) and the contextual control model (Hollnagel 1993; Hollnagel and Woods 2005), align with the interactionist perspective and consider goal-directed interactions between humans and their environment. Importantly, human error will appear phenomenologically different depending on the modelling lens through which it is viewed. Some models will illuminate certain perspectives more than others, and some will have higher predictive validity than others in some situations. The model selected, therefore, shapes the human error ‘reality’ that is observed.

Table 2. Overview of human performance and human error models; in order of publication date.

2.3. Human error methods

The selection of models also influences the methods applied. Two decades ago, Kirwan (1998a) reviewed and analysed 38 approaches to human error identification, and many more have been developed since. These include human reliability analysis (HRA), human error identification (HEI) and accident analysis methods. We therefore limited our review (see Table 3) to the methods described in Stanton et al. (2013), as these represent methods that are generally available and in use by EHF practitioners. To gauge the relative influence of each method, we used Scopus to identify citations and other article metrics for the seminal publication describing each method. While these citation counts are a coarse measure and tell us nothing about utilisation in practice,⁵ they provide some insight into the prominence of the methods within the academic literature.

Table 3. Human error methods and taxonomies.

Broadly, the human error methods reviewed fall into two classes, retrospective and prospective, although a subset spans both classes.

Retrospective human error analysis methods provide insight into the behaviours that contributed to an accident or adverse event, with most methods encouraging the analyst to classify errors (e.g. slip, lapse or mistake) and performance shaping factors (e.g. time pressure, supervisor actions, workplace procedures). The Human Factors Analysis and Classification System (HFACS; Wiegmann and Shappell 2001) was the only purely retrospective method identified. Originally developed for aviation, HFACS has been adapted for several safety-critical domains (e.g. maritime, Chauvin et al. 2013; mining, Patterson and Shappell 2010; and rail, Madigan, Golightly, and Madders 2016). HFACS provides taxonomies of error and failure modes across four organisational levels (unsafe acts, preconditions for unsafe acts, unsafe supervision, and organisational influences).

Prospective human error methods are used to identify the possible error types that may occur during specific tasks, so that design remedies can be implemented in advance. Generally applied in conjunction with task analysis, they enable the analyst to systematically identify the errors that could be made. The Systematic Human Error Reduction and Prediction Approach (SHERPA; Embrey 1986, 2014), for example, provides analysts with a taxonomy of behaviour-based ‘external error modes’, along with ‘psychological error mechanisms’, with which to identify credible errors for each operation derived from a task analysis of the process being assessed. Credible errors and relevant performance influencing factors are described and rated for probability and criticality before suitable remedial measures are identified. Prospective methods can also support HRA, whereby tasks are assessed for the quantitative probability of human error, taking into account baseline human error probabilities and relevant performance shaping factors. Examples include the Human Error Assessment and Reduction Technique (HEART; Williams 1986) and the Cognitive Reliability and Error Analysis Method (CREAM; Hollnagel 1998). As can be seen in Table 3, CREAM was the most frequently cited of the methods reviewed.
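The HEART-style quantification step mentioned above can be sketched in a few lines. The sketch below is a minimal illustration, assuming the commonly described HEART weighting, in which each error-producing condition (EPC) contributes an assessed effect of (maximum multiplier − 1) × proportion of affect + 1, and the task's nominal human error probability (HEP) is multiplied by the product of these effects. The function names and every numerical input are hypothetical; a real assessment would take nominal HEPs and EPC multipliers from the published HEART tables and would rest on the analyst judgement that the method depends on.

```python
from math import prod


def assessed_effect(max_multiplier: float, proportion_of_affect: float) -> float:
    """Weight an error-producing condition (EPC) by the analyst's judged
    proportion of affect, using the usual HEART-style formula:
    (max_multiplier - 1) * proportion_of_affect + 1."""
    if max_multiplier < 1.0:
        raise ValueError("EPC multipliers are expected to be >= 1")
    if not 0.0 <= proportion_of_affect <= 1.0:
        raise ValueError("proportion_of_affect must lie in [0, 1]")
    return (max_multiplier - 1.0) * proportion_of_affect + 1.0


def human_error_probability(nominal_hep: float,
                            epcs: list[tuple[float, float]]) -> float:
    """Multiply the nominal HEP for the generic task type by the assessed
    effect of each EPC; the result is capped at 1.0 because it is a probability."""
    if not 0.0 <= nominal_hep <= 1.0:
        raise ValueError("nominal_hep must lie in [0, 1]")
    effects = [assessed_effect(m, p) for m, p in epcs]
    return min(1.0, nominal_hep * prod(effects))


# Hypothetical inputs for illustration only (not taken from the HEART tables):
# a nominal HEP of 0.003 and two EPCs given as (max multiplier, proportion of affect).
example_epcs = [(11.0, 0.4), (3.0, 0.5)]
hep = human_error_probability(0.003, example_epcs)
print(f"Assessed HEP: {hep:.3f}")
```

With these hypothetical inputs the assessed effects are 5.0 and 2.0, so the nominal HEP of 0.003 is scaled by a factor of 10 to about 0.03. The cap at 1.0 is applied simply because the result is a probability; given the uncertainty in both the nominal values and the analyst's weightings, the output is better read as an indication of which conditions dominate than as a precise estimate.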

To varying extents, all human error methods rely on an underlying mechanistic, individual or interactionist perspective. There is no doubt that such approaches are useful, but caution is recommended. The accuracy of HRA approaches has been challenged given uncertainties surrounding error probability estimates (Embrey 1992) and overall methodological reliability and validity (Stanton et al. 2013). It has also been suggested that categorising and counting errors supports a worldview of humans as unreliable system components that need to be controlled, or even replaced by automation (Woods and Hollnagel 2006). Both Embrey (1992) and Stanton et al. (2006) suggest that methods should focus on the identification of potential errors rather than their quantification, the benefit being the qualitative insights gained and the opportunities to identify remedial measures that can inform design or redesign.

As may be expected, none of the methods reviewed adopt a systems perspective.

3. Use, misuse and abuse of human error

It is clear that the current state of science comprises multiple perspectives on human error, multiple theoretical frames and multiple methods. All have played a role in raising the profile of EHF within the safety-critical industries. Similarly, from the perspective of public awareness of EHF, the idea of human error is widespread within media reporting and tends to be used synonymously with EHF (Gantt and Shorrock 2017). Media ‘blamism’ has been shown to make people more ready to agree that culpable individuals deserve punishment and less ready to assign responsibility to the wider organisation (Nees, Sharma, and Shore 2020). Indeed, the media tend to modify the term to assign blame to the person closest to the event (e.g. pilot error, driver error, nurse error). The unresolved tension between human error and design error leads to unintended consequences. Even using such terms encourages a focus on the ‘human factor’ rather than the wider system (Shorrock 2013). To paraphrase Parasuraman and Riley (1997) on automation: the term human error is ‘used’, ‘misused’ and ‘abused’. A discussion of these topics helps to further explore the current state of science.

3.1. Use and utility of human error

3.1.1. An intuitive and meaningful construct

Human error is “intuitively meaningful” (Hollnagel and Amalberti Citation2001, 2). It is easy to explain to people outside of the EHF discipline or those new to EHF. Framing actions as errors (i.e. unintentional acts or omissions, as opposed to intentional violations) may help to reduce unjust blame. Most people will also agree with the phrase “to err is human” (Alexander Pope 1688–1744), at least in principle. Indeed, trial-and-error is an important part of normal learning processes, and errors help keep systems safe by building operator expertise in managing them (Amalberti Citation2001). Singleton (Citation1973, 731) noted that skilled operators make “good use of error” and “are effective because of their supreme characteristics in error correction rather than error avoidance”.

3.1.2. Errors and heroic recoveries emerge from the same underlying processes

Errors can also facilitate serendipitous innovation (Reason Citation2008). For example, had Wilson Greatbatch not installed a resistor of the wrong size into his experimental heart rhythm recording device in 1956, he would not have noticed that the resulting circuit emitted electrical pulses, and likely would not have been inspired to develop the implantable pacemaker (Watts Citation2011). Similarly, had Alexander Fleming kept a clean and ordered laboratory, he would not have returned from vacation to find mould growing in a petri dish, leading to the discovery of penicillin (Bennett and Chung Citation2001). In line with Safety-II arguments, these events demonstrate that the processes underpinning human error are the same as those which lead to desirable outcomes. Consequently, eliminating error via automation or strict controls may also eliminate human innovation, adaptability, creativity and resilience.

3.1.3. Learning opportunities

Human error models and taxonomies have been an important tool in EHF for decades. They have facilitated learning from near misses and accidents (e.g. Chauvin et al. Citation2013; Baysari, McIntosh, and Wilson Citation2008), the design of improved human-machine interfaces (e.g. Rouse and Morris Citation1987), and the design of error-tolerant systems (e.g. Baber and Stanton Citation1994).

3.2. Misuse and abuse of human error

Conversely, there are several ways in which human error has been misused, abused or both. These relate to a lack of precision in the construct and the way it is used in language. They also relate to an underlying culture of blamism, simplistic explanations of accident causation, a focus on frontline workers, and the implementation of inappropriate fixes.

3.2.1. A lack of precision in theory and use in language

A key scientific criticism of the notion of human error is that it represents a ‘folk model’ of cognition. That is, human error is a non-observable construct, used to make causal inferences, without clarity on the mechanism behind causation (Dekker and Hollnagel Citation2004). This notion is supported by the fact that there are multiple, competing models of human error. In terms of language, Hollnagel and Amalberti (Citation2001) suggest that misuse of human error can be related to it being regarded as a cause, a process or a consequence:

Human error as a cause: When human error is used as a cause or explanation for an adverse event, it represents a stopping point for the investigation. This hinders learning, as broader factors that played a contributory role in the accident may be overlooked. “Human error is not an explanation of failure, it demands an explanation” (Dekker Citation2006, 68). Framing error as a cause of failure generates several negative outcomes, such as reinforcing blame, recommending inappropriate countermeasures (e.g. re-training, behaviour modification) and failing to act on the systemic issues that are the real underlying causes of failure. Reason (Citation2000) provided the analogy of swatting mosquitoes versus draining swamps. We can either swat the mosquitoes (by blaming and re-training individuals), or drain the swamps within which the mosquitoes breed (by addressing systemic factors). However, the actions of those at the frontline remain a focus of official accident investigations. For example, the investigation into the 2013 train derailment at Santiago de Compostela by Spain’s Railway Accident Investigation Commission focussed on the error of the train driver (Vizoso Citation2018), and the investigation by France’s Bureau of Enquiry and Analysis for Civil Aviation Safety into the Air France 447 crash focussed on the pilots’ failure to control the aircraft (Salmon, Walker, and Stanton Citation2016).

Human error as a process: When human error is discussed as a process or an event, the focus is on the error itself, rather than its outcomes. One of the issues with this approach is that error is often defined as a departure from a ‘good’ process (Woods and Cook Citation2012), that is, from some ‘ground truth’ in an objective reality. But this raises further questions, such as which standard is applicable and how we account for local rationality. It also raises the question of how other deviations should be treated, given that they are relatively common, usually occur without adverse consequences, and often lead to successful outcomes.

Confounding error with its outcome: The final usage of human error discussed by Hollnagel and Amalberti (Citation2001) is where the error is defined in terms of its consequence, i.e. the accident event. Human error used in this sense is confounded with the harm that has occurred. As an example, although the seminal healthcare report To Err is Human (Kohn, Corrigan, and Donaldson Citation1999) called for systemic change, and was a catalyst for a focus on patient safety, it also confounded medical harm with medical error, thus placing the focus on individual healthcare worker performance. Referring to human error also had the unintended consequence of leading the healthcare sector to believe that it could address the issues it faced without assistance from other disciplines, and thus to fall behind modern developments in safety science (Wears and Sutcliffe Citation2019).

As Hollnagel and Amalberti (Citation2001) note, in each of these usages the term carries negative connotations. This is important because, although many people in everyday life and workplaces will acknowledge that ‘everyone makes mistakes’, we are cognitively biased to assume that bad things (i.e. errors and associated adverse outcomes) happen to bad people: the so-called ‘just world hypothesis’ (see Furnham Citation2003 for a review; also Reason Citation2000). Similarly, the ‘causality credo’ (Hollnagel Citation2014) is the idea that for every accident there must be a cause, and generally a bad one (as bad causes precede bad consequences), that these bad causes can be traced back until a ‘root cause’ (or set of causes) is identified, and that all accidents are preventable by finding and treating these causes. This mindset reinforces a focus on uncovering ‘bad things’. However, as we have seen, contemporary thinking posits that people act in a context of bounded and local rationality, where adaptation and variability are not only common but in fact necessary to maintain system performance. The contribution of ‘normal performance’ to accidents is now widely accepted (Dekker Citation2011b; Perrow Citation1984; Salmon et al. Citation2017).

3.2.2. Blame and simplistic explanations of accidents

The causality credo aligns with the legal tradition of individual responsibility for accidents, where the bad cause is either a crime or a failure to fulfil a legal duty. Dekker (Citation2011a) describes the shift in societal attitudes from the period before the 17th century, when religion and superstition accounted for misfortunes, to the adoption of what was considered a more scientific and rational concept of an ‘accident’. Accidents were seen as “merely a coincidence in space and time with neither human nor divine motivation” (Green Citation2003, 31). It was not until the advent of large-scale industrial catastrophes such as Tenerife in 1977 and Three Mile Island in 1979 that a risk management approach came to the fore, accompanied by a reduction in public acceptance of risk to the point of “zero tolerance of failure” (Dekker Citation2011a, 123). This has brought additional pressure to identify a blameworthy actor and ensure they are brought to justice.

Another element is outcome bias, whereby blame and criminalisation are more likely when the consequences of an event are more severe (Henriksen and Kaplan Citation2003; Dekker Citation2011b). Defensive attribution theory (Shaver Citation1970) proposes that more blame is attributed in high-severity, as opposed to low-severity, accidents, as a high-severity event evokes our self-protective defences against the randomness of accidents. Increased blame then provides a sense of control over the world. Attributing blame following an adverse event, particularly a catastrophic one, restores a sense of trust in ‘experts’ (who may be individuals or organisations). This repair of trust is needed for the continuation of technological expansion and suggests a “fundamental, almost primitive, need to blame…” (Horlick-Jones Citation1996, 71).

Hindsight also plays an important role (Dekker Citation2006). Post-accident, it is easy to fall into the trap of viewing the events and conditions leading up to the accident as linear and deterministic. Hindsight bias in combination with attribution bias helps to strengthen the beliefs of managers, judges, investigators and others who review accidents, allowing them to ‘persuade themselves… that they would never have been so thoughtless or reckless’ (Hudson Citation2014, 760). This ‘creeping determinism’ (Fischhoff Citation1975) also increases confidence in our ability to predict future events, meaning that we may fail to consider causal pathways that have not previously emerged. Criminal law in Western countries compounds these biases, as it tends to be predicated on the notion that adverse events arise from the actions of rational actors acting freely (Horlick-Jones Citation1996).

3.2.3. Focus on frontline workers

Human error models and methods, almost universally, fail to consider that errors are made by humans at all levels of a system (Dallat, Salmon, and Goode Citation2019). Rather, they lead analysts to focus on the behaviour of operators and users, particularly those on the ‘front-line’ or ‘sharp-end’, such as pilots, control room operators, and drivers. This is inconsistent with contemporary understandings of accident causation, which emphasise the role that the decisions and actions of other actors across the work system play in accident trajectories (Rasmussen Citation1997; Salmon et al. Citation2020). Further, decades of research on safety leadership (Flin and Yule Citation2004) and safety climate (Mearns, Whitaker, and Flin Citation2003) highlight the importance of managerial decisions and actions in creating safe (or unsafe) environments. Nonetheless, despite decades of research, our knowledge of human error is still largely limited to frontline workers or users, while the nature and prevalence of errors at higher levels of safety-critical systems have received much less attention. This may be explained by the fact that the relationship or coupling between behaviour and the outcome affects how responsibility is ascribed (Harvey and Rule Citation1978). Key aspects of this include causality and foreseeability (Shaver Citation1985). There is often a time lag between decisions or actions made by those away from the frontline and the accident event. Within the intervening time frame, many opportunities exist for other decisions and actions, or circumstances, to change the course of events. Further, where decisions are temporally separated from the event, it is more difficult to foresee the consequences, particularly in complex systems where unintended consequences are not uncommon.

The way in which we define human error may also generate difficulties when considering the role of those at higher levels of the system. Frontline workers generally operate under rules and procedures which provide a normative standard against which their behaviour can be judged. In contrast, designers, managers and others generally operate with more degrees of freedom. It is easier to understand their decisions as involving trade-offs between competing demands, such as a manager unable to employ additional staff due to budgetary constraints or a designer forfeiting functionality to maximise usability. In many ways, the copious rules and procedures that we place on frontline workers to constrain their behaviour mask our ability to see their decisions and actions as involving the same sorts of trade-offs (e.g. between cost and quality, efficiency and safety), and help to reinforce the focus on human error.

Other reasons for the focus on the frontline worker are more pragmatic such as the practical difficulties of identifying specific decisions that occurred long before the event that may not be well-documented or remembered. Even where those involved could be identified, it may be difficult to locate them. Focussing on the frontline worker, or ‘the last person to touch the system’, provides an easy explanation. Simple causal explanations are preferred by managers and by courts (Hudson Citation2014) and are less costly than in-depth investigations that can identify systemic failures.

Further, research has shown that simple causal explanations reduce public uncertainty about adverse events, such as school shootings (Namkoong and Henderson Citation2014). It is also convenient for an organisation to focus on individual responsibility. This is not only legally desirable but frames the problem in a way that enables the organisation to continue to operate as usual, leaving its structures, culture and power systems intact (Catino Citation2008; Wears and Sutcliffe Citation2019).

3.2.4. Inappropriate fixes

A human error focus has in practice led to frequent recommendations for inappropriate system fixes or countermeasures. For example, in many industries accident investigations still lead to recommendations focussed on frontline workers such as re-training or education around risks (Reason Citation1997; Dekker Citation2011b). Further, the pervasiveness of the hierarchy of control approach in safety engineering and occupational health and safety (OHS) can reinforce the philosophy that humans are a ‘weak point’ in systems and need to be controlled through engineering and administrative mechanisms. This safety philosophy, without appropriate EHF input, can reinforce person-based interventions which attempt to constrain behaviour, such as the addition of new rules and procedures to an already overwhelming set of which no one has full oversight or understanding. This contrasts with traditional EHF interventions based on human-centred design, improving the quality of working life, and promoting worker wellbeing. A contemporary example is a trend towards the use of automation in more aspects of our everyday lives, such as driving, underpinned by the argument that humans are inherently unreliable. This embodies the deterministic assumption underlying many approaches to safety and accident prevention which ignores fundamental attributes of complex systems such as non-linearity, emergence, feedback loops and performance variability (Cilliers Citation1998; Grant et al. Citation2018). Thus, fixes are based on inappropriate assumptions of certainty and structure, rather than supporting humans to adapt and cope with complexity. On a related note, the increasing imposition of rules, procedures and technologies adds to complexity and coupling within the system, which can in turn increase opportunities for failure (Perrow Citation1984).

4. Systems perspectives and methods in EHF

The appropriate use of human error terms, theories, and methods has unquestionably enabled progress to be made, but the underlying tension between human error and ‘systems failure’ remains unresolved. The misuse and abuse of human error lead to disadvantages which may be slowing progress in safety improvement. In response, perspectives on human error which were considered radical in the 1990s are becoming more mainstream, and the current state of science is pointing towards a systems approach. Indeed, EHF is regularly conceptualised as a systems discipline (e.g. Dul et al. Citation2012; Moray Citation2000; Wilson Citation2014; Salmon et al. Citation2017). Specifically, when considering failures, it is proposed that we go further than human error and design error (Fitts and Jones Citation1947), further even than Wiener’s (Citation1977) ‘system-induced failure’, to recognise accidents as ‘systems failures’.

Taking a systems approach, whether to examine failure or to support success, requires structured and systematic methods to support the practical application of constructs such as systems theory and systems thinking. While methods from the interactionist perspective may be appropriate in some circumstances, for example, in comparing design options or predictive risk assessment, they do not take the whole system as the unit of analysis and consider potential non-linear interactions.

A core set of systems methods is now in use (Hulme et al. Citation2019). These include AcciMap (Svedung and Rasmussen Citation2002), the Systems Theoretic Accident Model and Processes (STAMP; Leveson Citation2004), Cognitive Work Analysis (CWA; Vicente Citation1999), the Event Analysis of Systemic Teamwork (EAST; Walker et al. Citation2006), the Networked Hazard Analysis and Risk Management System (Net-HARMS; Dallat, Salmon, and Goode Citation2018) and the Functional Resonance Analysis Method (FRAM; Hollnagel Citation2012). These methods take into account the key properties of complex systems. In comparison to human error methods, systems methods offer a fundamentally distinct perspective by taking the system as the unit of analysis, rather than commencing with a focus on human behaviour. Consequently, the analyses produced are useful in identifying the conditions or components that might interact to create the types of behaviours that other methods would classify as errors. Importantly, this includes failures but also normal performance. Notably, the boundary of the system of interest must always be defined for a particular purpose and from a particular perspective, and this may be broader (e.g. societal; Salmon et al. Citation2019) or narrower (e.g. comprising an operational team and associated activities, contexts and tools; Stanton Citation2014). Boundary definitions will depend upon the level of detail required and a judgement on the strength of external influences, but are vital for defining the ‘unit of analysis’.

Table 4. Human error defined in relation to complex systems properties.

While systems methods have been in use for some time, there continue to be calls for more frequent applications across a range of domains (Hulme et al. Citation2019; Salmon et al. Citation2017), including aviation (e.g. Stanton, Li, and Harris Citation2019), healthcare (Carayon et al. Citation2014), nuclear power (e.g. Alvarenga, Frutuoso e Melo, and Fonseca Citation2014) and the risk of terrorism (Salmon, Carden, and Stevens Citation2018). They are seeing increasing use in practice because they are better able to cope with the complexity of modern sociotechnical systems. For example, in the context of air traffic management, EUROCONTROL has argued for a move away from simplistic notions of human error, and towards systems thinking and associated concepts and methods, such as those cited in this paper (Shorrock et al. Citation2014). Methods such as Net-HARMS (Dallat, Salmon, and Goode Citation2018) have been developed with the express purpose of being used by practitioners as well as researchers.

A significant benefit of the systems perspective and systems methods is that they address the issue of blame and simplistic explanations of accidents, expanding the message beyond EHF professionals and the organisations within which they work. As one example, the EUROCONTROL Just Culture Task Force has led to the acknowledgement of just culture in EU regulations (Van Dam, Kovacova, and Licu Citation2019) and has delivered, over 15 years, education and training on just culture and the judiciary. This includes workshops and annual conferences that bring together prosecutors, air traffic controllers, pilots, and safety and EHF specialists (Licu, Baumgartner, and van Dam Citation2013), with content on systems thinking as well as just culture. Guidance is also provided for interfacing with the media, to help educate journalists about just culture and ultimately to facilitate balanced and non-judgemental media reporting of air traffic management incidents (EUROCONTROL Citation2008). While it is acknowledged that culture change is a slow process, these activities have a long-term focus with the aim of shifting mindsets over time.

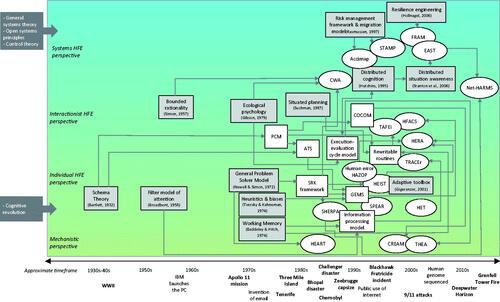

5. A summary of the shift from human performance to systems ergonomics

The rise of the systems perspective in EHF has undoubtedly impacted the popularity and use of human error terms, theories and methods. Figure 2 summarises the development over time of the theories, models and methods that relate to the four perspectives on human error. This highlights the proliferation of model and method development in a relatively short period, particularly from the late 1970s to the early 2000s. Hollnagel (Citation2009) attributes this surge in method development, particularly of human error methods, to the Three Mile Island nuclear meltdown in 1979. He identifies this as the beginning of the second age of human factors, in which the human is viewed as a liability. It is worth highlighting that many of the systems methods were developed in parallel to the human error methods, with some notable examples also stemming from the nuclear sector (e.g. CWA). Figure 2 suggests that EHF has spent the past three decades operating under multiple perspectives, rather than necessarily experiencing a paradigm shift. Interestingly, there have been some examples of integration between perspectives, such as the Net-HARMS method drawing from SHERPA, and CWA’s adoption of the SRK taxonomy. However, given that EHF is no longer a ‘new’ discipline, with this journal alone recently celebrating 60 years of publication, it is timely to discuss a clearer way forward.

Figure 2. Overview of the evolution of models (white boxes), methods (white ovals), and underpinning theory (grey boxes) by perspective. Note, alignment against perspectives is intended to be approximate and fuzzy rather than a strict classification.

6. To err within a system is human: a proposed way forward

The concept of human error has reached a critical juncture. Whilst it continues to be used by researchers and practitioners worldwide, there are increasing questions regarding its utility, its validity and, ultimately, its relevance given the move towards the systems perspective. Following Shorrock (Citation2013), we suggest that three camps exist within EHF: 1) a group that continues to use the term with ‘good intent’, arguing that we must continue to talk of error in order to learn from it; 2) a group that continues to use the term for convenience (i.e. when communicating in non-EHF arenas) but rejects the simplistic concept, instead focussing on wider organisational or systemic issues; and 3) a group that has abandoned the term, arguing that the concept lacks clarity and utility and that its use is damaging. We predict that this third group will continue to grow. We acknowledge that the concept of error can have value from a psychological point of view, in describing behaviour that departs from an individual’s expectation and intention. It might also be considered proactively in system design; however, interactionist HEI methods that focus on all types of performance, rather than on errors or failures alone, provide analysts with a more nuanced view. Importantly, however, we have seen how the concept of human error has been misused and abused, particularly in association with an error-as-cause view, leading to unintended consequences including blame and inappropriate fixes.

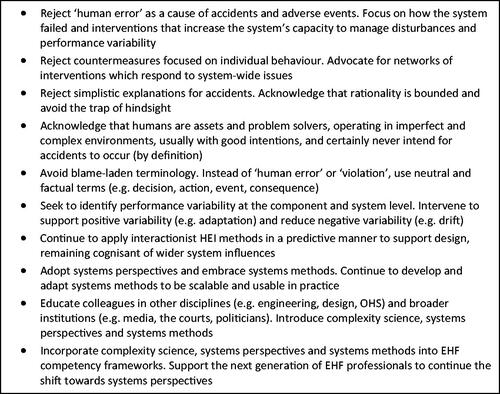

A set of practical recommendations is offered as a way of moving EHF, and our colleagues within related disciplines, away from a focus on individual, mechanistic, blameworthy ‘human error’ and towards a holistic conception of system performance. These are shown in Figure 3.

Figure 3. A proposed way forward.

Importantly, we must consider the implications of the proposed changes for other areas of the discipline. A shift from a human error perspective to a systems perspective would fundamentally change how we view and measure constructs such as situation awareness (see Stanton et al. Citation2017), workload (Salmon et al. Citation2017) and teamwork, for example. There are also significant implications for areas such as job and work design, where we may see a resurgence of interest in approaches underpinned by sociotechnical systems theory (Clegg Citation2000), along with new understandings of how organisations can support workers, for example by promoting agency in responding to uncertainty (Griffin and Grote Citation2020). A final note as we draw our discussion to a close is that a key systems thinking principle relates to maintaining awareness of differing worldviews and perspectives within a complex system. Donella Meadows (1941–2001) highlighted a vital consideration for any discipline when she suggested that the highest leverage point for system change is the power to transcend paradigms. There is no certainty in any worldview, and flexibility in thinking, rather than rigidity, can indeed be the basis for “radical empowerment” (Meadows Citation1999, 18).

7. Conclusions

Human error has helped advance our understanding of human behaviour and has provided us with a set of methods that continue to be used to this day. It remains, however, an elusive construct. Its scientific basis, and its use in practice, have been called into question. While its intuitive nature has no doubt assisted EHF to gain buy-in within various industries, its widespread use within and beyond the discipline has resulted in unintended consequences. A recognition that humans only operate as part of wider complex systems leads to the inevitable conclusion that we must move beyond a focus on an individual error to systems failure to understand and optimise whole systems. Theories and methods taking a systems perspective exist to support this shift, and the current state of science points to their uptake increasing. We hope that our proposed way forward provides a point of discussion and stimulates debate for the EHF community as we face new challenges in the increasingly complex sociotechnical systems in which we apply EHF.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CWA | cognitive work analysis |
| EAST | event analysis of systemic teamwork |
| EHF | ergonomics and human factors |
| FRAM | functional resonance analysis method |
| HEI | human error identification |
| HFACS | human factors analysis and classification system |
| HRA | human reliability analysis |
| Net-HARMS | networked hazard analysis and risk management system |
| OHS | occupational health and safety |
| SHERPA | systematic human error reduction and prediction approach |
| STAMP | systems theoretic accident model and processes |

Acknowledgements

We sincerely thank Rhona Flin for her insightful comments on a draft version of this paper. We also thank Alison O’Brien and Nicole Liddell for their assistance with proofreading and literature searching. Finally, we are immensely grateful to the anonymous peer reviewers for their valuable comments which have strengthened the paper.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

Additional information

Funding

Notes

1 Note, the terms ergonomics and human factors can be used interchangeably, and the discipline is also known as HFE.

2 From https://www.etymonline.com/word/error [accessed 6 June 2020]

4 From https://www.encyclopedia.com/social-sciences-and-law/law/law/judgment [accessed 6 June 2020].

5 Contemporary metrics such as social media engagements and mentions in news articles or blog posts were not available for these publications given most were released some time ago.

6 No seminal paper could be identified for human error HAZOP.

7 The citation search was refined to papers also including the words ‘HEIST’ OR ‘Human error identification in systems tool’, given that the seminal publication contains broader content beyond describing the HEIST method.

8 The citation search was refined to papers also including the words ‘SPEAR’ OR ‘Systems for Predicting Human Error and Recovery’, given that the seminal publication contains broader content beyond describing the SPEAR method.

References

- Ackoff, R. L. 1973. “Science in the Systems Age: Beyond IE, OR, and MS.” Operations Research 21 (3): 661–671. doi:10.1287/opre.21.3.661.

- Akyuz, E., and M. Celik. 2015. “A Methodological Extension to Human Reliability Analysis for Cargo Tank Cleaning Operation on Board Chemical Tanker Ships.” Safety Science 75: 146–155. doi:10.1016/j.ssci.2015.02.008.

- Alexander, T. M. 2019. “A Case Based Human Reliability Assessment Using HFACS for Complex Space Operations.” Journal of Space Safety Engineering 6 (1): 53–59. doi:10.1016/j.jsse.2019.01.001.

- Alvarenga, M. A. B., P. F. Frutuoso e Melo, and R. A. Fonseca. 2014. “A Critical Review of Methods and Models for Evaluating Organizational Factors in Human Reliability Analysis.” Progress in Nuclear Energy 75: 25–41. doi:10.1016/j.pnucene.2014.04.004.

- Amalberti, R. 2001. “The Paradoxes of Almost Totally Safe Transportation Systems.” Safety Science 37 (2–3): 109–126. doi:10.1016/S0925-7535(00)00045-X.

- Baber, C., and N. A. Stanton. 1994. “Task Analysis for Error Identification: A Methodology for Designing Error-Tolerant Consumer Products.” Ergonomics 37 (11): 1923–1941. doi:10.1080/00140139408964958.

- Baber, C., and N. A. Stanton. 1996. “Human Error Identification Techniques Applied to Public Technology: Predictions Compared with Observed Use.” Applied Ergonomics 27 (2): 119–131. doi:10.1016/0003-6870(95)00067-4.

- Baber, C., and N. A. Stanton. 1997. “Rewritable Routines in Human Interaction with Public Technology.” International Journal of Cognitive Ergonomics 1 (4): 237–249.

- Barajas-Bustillos, M. A., A. Maldonado-Macias, M. Ortiz-Solis, A. Realyvazquez-Vargas, and J. L. Hernández-Arellano. 2019. “A Cognitive Analyses for the Improvement of Service Orders in an Information Technology Center: A Case of Study.” Advances in Intelligent Systems and Computing 971: 188–198.

- Bartlett, F. C. 1932. Remembering: A Study of Experimental and Social Psychology. Cambridge: Cambridge University Press.

- Baybutt, P. 2002. “Layers of Protection Analysis for Human Factors (LOPA-HF).” Process Safety Progress 21 (2): 119–129. doi:10.1002/prs.680210208.

- Baysari, M. T., A. S. McIntosh, and J. R. Wilson. 2008. “Understanding the Human Factors Contribution to Railway Accidents and Incidents in Australia.” Accident Analysis and Prevention 40 (5): 1750–1757. doi:10.1016/j.aap.2008.06.013.

- Baysari, M. T., C. Caponecchia, and A. S. McIntosh. 2011. “A Reliability and Usability Study of TRACEr-RAV: The Technique for the Retrospective Analysis of Cognitive Errors – for Rail, Australian Version.” Applied Ergonomics 42 (6): 852–859. doi:10.1016/j.apergo.2011.01.009.

- Bennett, J. W., and K.-T. Chung. 2001. “Alexander Fleming and the Discovery of Penicillin.” Advances in Applied Microbiology 49: 163–184.

- Broadbent, D. 1958. Perception and Communication. London: Pergamon Press.

- Buck, L. 1963. “Errors in the Perception of Railway Signals.” Ergonomics 6 (2): 181–192. doi:10.1080/00140136308930688.

- CAA. 2011. CAA ‘Significant Seven’ Task Force Reports. West Sussex: UK Civil Aviation Authority.

- Carayon, P., T. B. Wetterneck, A. J. Rivera-Rodriguez, A. S. Hundt, P. Hoonakker, R. Holden, and A. P. Gurses. 2014. “Human Factors Systems Approach to Healthcare Quality and Patient Safety.” Applied Ergonomics 45 (1): 14–25. doi:10.1016/j.apergo.2013.04.023.

- Catino, M. 2008. “A Review of Literature: Individual Blame vs. Organizational Function Logics in Accident Analysis.” Journal of Contingencies and Crisis Management 16 (1): 53–62. doi:10.1111/j.1468-5973.2008.00533.x.

- CCPS (Center for Chemical Process Safety). 1994. Guidelines for Preventing Human Error in Process Safety. New York: American Institute of Chemical Engineers.

- Chauvin, C., S. Lardjane, G. Morel, J.-P. Clostermann, and B. Langard. 2013. “Human and Organisational Factors in Maritime Accidents: Analysis of Collisions at Sea Using the HFACS.” Accident Analysis & Prevention 59: 26–37. doi:10.1016/j.aap.2013.05.006.

- Chen, D., Y. Fan, C. Ye, and S. Zhang. 2019. “Human Reliability Analysis for Manned Submersible Diving Process Based on CREAM and Bayesian Network.” Quality and Reliability Engineering International 35 (7): 2261–2277.

- Cilliers, P. 1998. Complexity and Postmodernism: Understanding Complex Systems. London: Routledge.

- Clegg, C. W. 2000. “Sociotechnical Principles for System Design.” Applied Ergonomics 31 (5): 463–477. doi:10.1016/S0003-6870(00)00009-0.

- Dallat, C., P. M. Salmon, and N. Goode. 2018. “Identifying Risks and Emergent Risks across Sociotechnical Systems: The NETworked Hazard Analysis and Risk Management System (NET-HARMS).” Theoretical Issues in Ergonomics Science 19 (4): 456–482. doi:10.1080/1463922X.2017.1381197.

- Dallat, C., P. M. Salmon, and N. Goode. 2019. “Risky Systems versus Risky People: To What Extent Do Risk Assessment Methods Consider the Systems Approach to Accident Causation? A Review of the Literature.” Safety Science 119: 266–279. doi:10.1016/j.ssci.2017.03.012.

- Dekker, S. 2002. “Reconstructing Human Contributions to Accidents: The New View on Error and Performance.” Journal of Safety Research 33 (3): 371–385. doi:10.1016/S0022-4375(02)00032-4.

- Dekker, S. 2006. The Field Guide to Understanding Human Error. Aldershot: Ashgate.

- Dekker, S. 2011a. “The Criminalization of Human Error in Aviation and Healthcare: A Review.” Safety Science 49 (2): 121–127. doi:10.1016/j.ssci.2010.09.010.

- Dekker, S. 2011b. Drift into Failure: From Hunting Broken Components to Understanding Complex Systems. Surrey: Ashgate.

- Dekker, S., P. Cilliers, and J.-H. Hofmeyr. 2011. “The Complexity of Failure: Implications of Complexity Theory for Safety Investigations.” Safety Science 49 (6): 939–945. doi:10.1016/j.ssci.2011.01.008.

- Dekker, S. W., and E. Hollnagel. 2004. “Human Factors and Folk Models.” Cognition, Technology and Work 6 (2): 79–86. doi:10.1007/s10111-003-0136-9.

- Department for Transport. 2020. Reported Road Casualties in Great Britain: 2019 Annual Report. https://www.gov.uk/government/statistics/reported-road-casualties-great-britain-annual-report-2019

- Dul, J., R. Bruder, P. Buckle, P. Carayon, P. Falzon, W. S. Marras, J. R. Wilson, and B. van der Doelen. 2012. “A Strategy for Human Factors/Ergonomics: Developing the Discipline and Profession.” Ergonomics 55 (4): 377–395. doi:10.1080/00140139.2012.661087.

- Embrey, D. 2014. “SHERPA: A Systematic Human Error Reduction and Prediction Approach to Modelling and Assessing Human Reliability in Complex Tasks.” In 22nd European Safety and Reliability Annual Conference (ESREL 2013). Amsterdam, The Netherlands: CRC Press.

- Embrey, D. E. 1986. “SHERPA: A Systematic Human Error Reduction and Prediction Approach.” Proceedings of the International Topical Meeting on Advances in Human Factors in Nuclear Power Systems, Knoxville, Tennessee.

- Embrey, D. E. 1992. “Quantitative and Qualitative Prediction of Human Error in Safety Assessments.” I. Chem. E. Symposium Series No. 130. London: I. Chem. E.

- EUROCONTROL. 2018. Just Culture Guidance Material for Interfacing with the Media. Edition 1.0. https://skybrary.aero/bookshelf/books/4784.pdf

- Fischhoff, B. 1975. “Hindsight Is Not Equal to Foresight: The Effect of Outcome Knowledge on Judgment under Uncertainty.” Journal of Experimental Psychology: Human Perception and Performance 1 (3): 288–299.

- Fitts, P. M., and R. E. Jones. 1947. Analysis of Factors Contributing to 460 “Pilot Error” Experiences in Operating Aircraft Controls. Dayton, OH: Aero Medical Laboratory, Air Materiel Command, Wright-Patterson Air Force Base.

- Flin, R., and S. Yule. 2004. “Leadership for Safety: Industrial Experience.” Quality and Safety in Health Care 13 (Suppl 2): ii45–ii51. doi:10.1136/qshc.2003.009555.

- Freud, S., and A. A. Brill. 1914. Psychopathology of Everyday Life. New York: Macmillan Publishing.

- Furnham, A. 2003. “Belief in a Just World: Research Progress over the Past Decade.” Personality and Individual Differences 34 (5): 795–817. doi:10.1016/S0191-8869(02)00072-7.

- Gantt, R., and S. Shorrock. 2017. “Human Factors and Ergonomics in the Media.” In Human Factors and Ergonomics in Practice: Improving System Performance and Human Well-Being in the Real World, edited by S. Shorrock and C. Williams, 77–91. Boca Raton, FL: CRC Press.

- Gentner, D. 1983. “Structure-Mapping: A Theoretical Framework for Analogy.” Cognitive Science 7 (2): 155–170. doi:10.1207/s15516709cog0702_3.

- Ghasemi, M., J. Nasleseraji, S. Hoseinabadi, and M. Zare. 2013. “Application of SHERPA to Identify and Prevent Human Errors in Control Units of Petrochemical Industry.” International Journal of Occupational Safety and Ergonomics 19 (2): 203–209. doi:10.1080/10803548.2013.11076979.

- Gibson, J. J. 1979. The Ecological Approach to Visual Perception. Hillsdale, NJ: Erlbaum.

- Gigerenzer, G. 2001. “The Adaptive Toolbox.” In Bounded Rationality: The Adaptive Toolbox, edited by G. Gigerenzer and R. Selten, 37–50. Cambridge, MA: The MIT Press.

- Grant, E., P. M. Salmon, N. Stevens, N. Goode, and G. J. M. Read. 2018. “Back to the Future: What Do Accident Causation Models Tell us about Accident Prediction?” Safety Science 104: 99–109. doi:10.1016/j.ssci.2017.12.018.

- Green, D. M., and J. A. Swets. 1966. Signal Detection Theory and Psychophysics. Oxford, England: John Wiley.

- Green, J. 2003. “The Ultimate Challenge for Risk Technologies: Controlling the Accidental.” In Constructing Risk and Safety in Technological Practice, edited by J. Summerton and B. Berner, 29–42. London: Routledge.

- Griffin, M. A., and G. Grote. 2020. “When is More Uncertainty Better? A Model of Uncertainty Regulation and Effectiveness.” Academy of Management Review 45 (4): 745–765. doi:10.5465/amr.2018.0271.

- Guo, Y., and Y. Sun. 2020. “Flight Safety Assessment Based on an Integrated Human Reliability Quantification Approach.” PLOS ONE 15 (4): e0231391. doi:10.1371/journal.pone.0231391.

- Harris, D., N. A. Stanton, A. Marshall, M. S. Young, J. Demagalski, and P. Salmon. 2005. “Using SHERPA to Predict Design-Induced Error on the Flight Deck.” Aerospace Science and Technology 9 (6): 525–532. doi:10.1016/j.ast.2005.04.002.

- Harvey, M. D., and B. G. Rule. 1978. “Moral Evaluations and Judgments of Responsibility.” Personality and Social Psychology Bulletin 4 (4): 583–588. doi:10.1177/014616727800400418.

- Henriksen, K., and H. Kaplan. 2003. “Hindsight Bias, Outcome Knowledge and Adaptive Learning.” Quality and Safety in Health Care 12 (Suppl 2): ii46–ii50. doi:10.1136/qhc.12.suppl_2.ii46.

- Hollnagel, E. 1983. Human Error: Position Paper for NATO Conference on Human Error, August 1983, Bellagio, Italy. https://erikhollnagel.com/

- Hollnagel, E. 1993. Human Reliability Analysis: Context and Control. London: Academic Press.

- Hollnagel, E. 1998. Cognitive Reliability and Error Analysis Method (CREAM). Oxford: Elsevier Science Ltd.

- Hollnagel, E. 2009. The ETTO Principle: Efficiency-Thoroughness Trade-off: Why Things That Go Right Sometimes Go Wrong. Surrey: Ashgate.

- Hollnagel, E. 2012. FRAM: The Functional Resonance Analysis Method: Modelling Complex Socio-Technical Systems. Surrey: Ashgate.

- Hollnagel, E. 2014. Safety-I and Safety-II: The Past and Future of Safety Management. Farnham, UK: Ashgate.

- Hollnagel, E., and D. D. Woods. 2005. Joint Cognitive Systems: Foundations of Cognitive Systems Engineering. Boca Raton, FL: Taylor & Francis.

- Hollnagel, E., J. Leonhardt, T. Licu, and S. Shorrock. 2013. From Safety-I to Safety-II: A White Paper. Brussels: EUROCONTROL.

- Hollnagel, E., and R. Amalberti. 2001. “The Emperor’s New Clothes or Whatever Happened to Human Error?” Invited Keynote Presentation at 4th International Workshop on Human Error, Safety and System Development. Linköping, June 11–12, 2001.

- Horlick-Jones, T. 1996. “The Problem of Blame.” In Accident and Design: Contemporary Debates on Risk Management, edited by C. Hood and D. K. C. Jones, 61–71. London: UCL Press.

- Hu, J., L. Zhang, Q. Wang, and B. Tian. 2019. “A Structured Hazard Identification Method of Human Error for Shale Gas Fracturing Operation.” Human and Ecological Risk Assessment: An International Journal 25 (5): 1189–1206. doi:10.1080/10807039.2018.1461008.

- Hudson, P. 2014. “Accident Causation Models, Management and the Law.” Journal of Risk Research 17 (6): 749–764. doi:10.1080/13669877.2014.889202.

- Hulme, A., N. A. Stanton, G. H. Walker, P. Waterson, and P. M. Salmon. 2019. “What Do Applications of Systems Thinking Accident Analysis Methods Tell us about Accident Causation? A Systematic Review of Applications between 1990 and 2018.” Safety Science 117: 164–183. doi:10.1016/j.ssci.2019.04.016.

- Hutchins, E. 1995. Cognition in the Wild. Cambridge, MA: MIT Press.

- Igene, O. O., and C. Johnson. 2020. “Analysis of Medication Dosing Error Related to Computerised Provider Order Entry System: A Comparison of ECF, HFACS, STAMP and AcciMap Approaches.” Health Informatics Journal 26 (2): 1017–1042. doi:10.1177/1460458219859992.

- Johnston, P., and R. Harris. 2019. “The Boeing 737 MAX Saga: Lessons for Software Organizations.” Software Quality Professional 21 (3): 4–12.

- Kirwan, B. 1992a. “Human Error Identification in Human Reliability Assessment. Part 1: Overview of Approaches.” Applied Ergonomics 23 (5): 299–318. doi:10.1016/0003-6870(92)90292-4.

- Kirwan, B. 1992b. “Human Error Identification in Human Reliability Assessment. Part 2: Detailed Comparison of Techniques.” Applied Ergonomics 23 (6): 371–381. doi:10.1016/0003-6870(92)90368-6.

- Kirwan, B. 1994. A Guide to Practical Human Reliability Assessment. London: Taylor and Francis.

- Kirwan, B. 1998a. “Human Error Identification Techniques for Risk Assessment of High Risk Systems-Part 1: Review and Evaluation of Techniques.” Applied Ergonomics 29 (3): 157–177.

- Kirwan, B. 1998b. “Human Error Identification Techniques for Risk Assessment of High Risk Systems-Part 2: Towards a Framework Approach.” Applied Ergonomics 29 (5): 299–318. doi:10.1016/s0003-6870(98)00011-8.

- Kirwan, B., and L. K. Ainsworth. 1992. A Guide to Task Analysis. London: Taylor & Francis.

- Kohn, L. T., J. M. Corrigan, and M. S. Donaldson. 1999. To Err is Human: Building a Safer Health System. Washington, DC: Institute of Medicine, National Academy Press.

- Kurath, H. [1953] 1989. Middle English Dictionary. Ann Arbor, Michigan: The University of Michigan Press.

- Leveson, N. 2004. “A New Accident Model for Engineering Safer Systems.” Safety Science 42 (4): 237–253. doi:10.1016/S0925-7535(03)00047-X.

- Leveson, N. 2011. “Applying Systems Thinking to Analyze and Learn from Events.” Safety Science 49 (1): 55–64. doi:10.1016/j.ssci.2009.12.021.

- Licu, T., M. Baumgartner, and R. van Dam. 2013. “Everything You Always Wanted to Know about Just Culture (but Were Afraid to Ask).” Hindsight 18: 14–17.

- Madigan, R., D. Golightly, and R. Madders. 2016. “Application of Human Factors Analysis and Classification System (HFACS) to UK Rail Safety of the Line Incidents.” Accident Analysis & Prevention 97: 122–131. doi:10.1016/j.aap.2016.08.023.

- Makary, M., and M. Daniel. 2016. “Medical Error—the Third Leading Cause of Death in the US.” BMJ 353: i2139.

- Mannion, R., and J. Braithwaite. 2017. “False Dawns and New Horizons in Patient Safety Research and Practice.” International Journal of Health Policy and Management 6 (12): 685–689. doi:10.15171/ijhpm.2017.115.

- Maxion, R. A., and R. W. Reeder. 2005. “Improving User-Interface Dependability through Mitigation of Human Error.” International Journal of Human-Computer Studies 63 (1–2): 25–50. doi:10.1016/j.ijhcs.2005.04.009.

- Meadows, D. H. 1999. Leverage Points: Places to Intervene in a System. Hartland, VT: The Sustainability Institute.

- Mearns, K., S. M. Whitaker, and R. Flin. 2003. “Safety Climate, Safety Management Practice and Safety Performance in Offshore Environments.” Safety Science 41 (8): 641–680. doi:10.1016/S0925-7535(02)00011-5.

- Moray, N. 2000. “Culture, Politics and Ergonomics.” Ergonomics 43 (7): 858–868. doi:10.1080/001401300409062.

- Namkoong, J.-E., and M. D. Henderson. 2014. “It’s Simple and I Know It!: Abstract Construals Reduce Causal Uncertainty.” Social Psychological and Personality Science 5 (3): 352–359. doi:10.1177/1948550613499240.

- Nees, M. A., N. Sharma, and A. Shore. 2020. “Attributions of Accidents to Human Error in News Stories: Effects on Perceived Culpability, Perceived Preventability, and Perceived Need for Punishment.” Accident Analysis & Prevention 148: 105792. doi:10.1016/j.aap.2020.105792.

- Neisser, U. 1976. Cognition and Reality. San Francisco: W.H. Freeman.