Abstract

In safety-critical automatic systems, safety can be compromised if operators lack engagement. Effective detection of undesirable engagement states can inform the design of interventions for enhancing engagement. However, the existing engagement measurement methods suffer from several limitations that reduce their effectiveness in the work environment. A novel engagement evaluation methodology, which adopts Artificial Intelligence (AI) technologies, is proposed. It was developed using motorway control room operators as subjects. OpenPose and Open Source Computer Vision Library (OpenCV) were used to estimate the body postures of operators, then a Support Vector Machine (SVM) was utilised to build the engagement evaluation model based on discrete states of operator engagement. The average accuracy of the evaluation results reached 0.89 and the weighted average precision, recall, and F1-score were all above 0.84. This study emphasises the importance of specific data labelling when measuring typical engagement states, forming the basis for potential control room improvements.

Practitioner summary: This study demonstrates an automatic, real-time, objective, and relatively unobtrusive method for measuring dynamic operator engagement states. Computer vision technologies were used to estimate body posture, then machine learning (ML) was utilised to build the engagement evaluation model. The overall evaluation shows the effectiveness of this framework.

Abbreviations: AI: Artificial Intelligence; OpenCV: Open Source Computer Vision Library; SVM: Support Vector Machine; UWES: Utrecht Work Engagement Scale; ISA Engagement Scale: Intellectual, Social, Affective Engagement Scale; DSSQ: Dundee Stress State Questionnaire; SSSQ: Short Stress State Questionnaire; EEG: electroencephalography; ECG: Electrocardiography; VMOE: Video-based Measurement for Operator Engagement; CMU: Carnegie Mellon University; CNN: Convolutional Neural Network; 2D: two dimensional; ML: Machine learning.

1. Introduction

With the development of information, communication, and control technologies, automation has improved operation processes in safety-critical domains by enhancing safety and efficiency. There are several studies aiming to optimise the use of automation technology (e.g. Sarikan and Ozbayoglu Citation2018; Formosa et al. Citation2020; Chen et al. Citation2021) and ergonomic effectiveness (e.g. Shi and Rothrock Citation2022; Stanton et al. Citation2022). However, the scarcity of studies from a user experience point of view in this domain could pose limitations on the effectiveness of the control systems. The intended performance of such systems could be impaired if the operators have a low level of engagement at work (defined below) no matter how much the systems have been improved purely in terms of technology and effectiveness. A range of negative aspects and issues to do with negligence have been widely studied in automatic and safety-critical domains, relating to, for example, mental workload (e.g. Gao et al. Citation2013; Liu, Gao, and Wu Citation2023), vigilance (e.g. Finomore et al. Citation2009; Neigel et al. Citation2020), situation awareness (e.g. Lau, Jamieson, and Skraaning Citation2016a, Citation2016b), and musculoskeletal issues (e.g. Bazazan et al. Citation2019; Tavakkol et al. Citation2020). However, according to the principles of positive psychology, attention should be given not only to addressing the negative aspects but also to building a positive mentality (Seligman Citation2002). Engagement at work is a positive, fulfilling, work-related state of mind (Schaufeli et al. Citation2002). The term engagement was originally proposed in academic literature by Kahn (Citation1990) as ‘the harnessing of organization members’ selves to their work roles…people employ and express themselves physically, cognitively, and emotionally during role performances (694)’. Engagement at work contributes to individual health, job satisfaction (Shimazu et al. Citation2015), and well-being (Bakker et al. Citation2008). Notably, it is widely recognised that individual engagement is a contributing factor to work performance (e.g. Christian, Garza, and Slaughter Citation2011; Bakker and Demerouti Citation2008). Thus, it is a significant and positive experience that should be considered when designing augmentation of roles that use automation (Roto, Palanque, and Karvonen Citation2019; Ferraro et al. Citation2022). However, insufficient engagement among operators occurs frequently in many automatic systems, such as in nuclear power control (Izsó and Antaiovits Citation1997), CCTV monitoring (Smith Citation2002), and motorway control (Jin, Mitchell, and May Citation2020). Some of these control rooms are closely linked to public safety, and so insufficient engagement of operators at some crucial moments in these control rooms could threaten public safety. Therefore, careful consideration should be given to means of enhancing the engagement of operators in automatic and highly safety-critical systems (Smith, Blandford, and Back Citation2009; Schaeffer and Lindell Citation2016; Jin, Mitchell, and May Citation2020). Engagement is a dynamic experience (Kahn Citation1990), and understanding and measuring an experience are key prerequisites for improving it (Battarbee and Koskinen Citation2005). Valid measurement of dynamic engagement in real control rooms can reveal promising pathways for facilitating engagement and enhancing operator performance.

1.1. Measurement

The measurement of engagement at work by means of self-reported questionnaires is widely used in many industries. Examples include the Utrecht Work Engagement Scale (UWES) (Schaufeli, Bakker, and Salanova Citation2006), the Oldenburg Burnout Inventory (Demerouti and Bakker Citation2008), and the Intellectual, Social, Affective Engagement Scale (ISA Engagement Scale) (Soane et al. Citation2012). In the safety-critical context and automatic control rooms, the Dundee Stress State Questionnaire (DSSQ) (e.g. Reinerman-Jones, Matthews, and Mercado Citation2016; Reinerman et al. Citation2020; Ferraro et al. Citation2022) and the Short Stress State Questionnaire (SSSQ) (Bernhardt and Poltavski Citation2021) have been put forward as means of measuring task engagement. The DSSQ attempts to integrate psychological aspects of task engagement within several meta-factors (Matthews et al. Citation2002), and the SSSQ (Helton and Näswall Citation2015) is a simplified version of the DSSQ. Although these surveys provide rich insights into the impacts and dimensions of engagement, they do not adequately provide real-time information, since they are based on post-hoc self-assessments. Furthermore, because of the biases of self-assessment resulting from subjective responses, more objective indicators are needed (Reinerman-Jones, Matthews, and Mercado Citation2016).

Considerable improvements have been made in measuring operator engagement and related psychological concepts (e.g. attention, vigilance, situation awareness) from the viewpoints of efficiency, objectivity, automation, and continuity by testing various physiological signals. Relevant techniques include electroencephalography (EEG) (e.g. Mijović et al. Citation2017; Bernhardt et al. Citation2019), eye tracking (e.g. Wright, Chen, and Barnes Citation2018; Shi and Rothrock Citation2022) and electrocardiography (ECG) (e.g. Bernhardt et al. Citation2019). However, the complexity of the equipment required and the high intrusiveness of the sensors have restricted the scalability of the implementation and the generalisability of these techniques in actual safety-critical contexts.

Non-contact data collection (e.g. filming subject body postures) increases the likelihood that operators will work in their normal way during measurement of engagement. Many studies in non-safety-critical contexts have shown that body posture is an effective indicator for measuring some aspects of cognitive ergonomics, such as cognitive workload changes (Debie et al. Citation2021), attention (Diaz Citation2015) and affective state (Charles-Edwards et al. Citation2004). Engagement is not an exception (e.g. Klein and Celik Citation2017; Rajavenkatanarayanan et al. Citation2018; Kaur et al. Citation2019).

The analysis of the links between body posture and engagement has greatly benefitted from the use of machine learning (ML) and computer vision technologies. Due to the dynamic nature of safety-critical systems, unpredictable control room situations often arise that may complicate the interpretation of operator postures and activities. ML is an efficient tool for data mining, training, and building models using sample data, and it can identify the complex relationships between data and varying body postures with high accuracy (Bourahmoune and Amagasa Citation2019; Ren et al. Citation2020; Ran et al. Citation2021). The application of relatively non-intrusive sensors together with ML enables the classification of various posture categories without significant disturbance, examples being the measuring of lifting postures by analysing kinematic variables recorded by compact wearable sensors in a gym (Hawley, Hamilton-Wright, and Fischer Citation2023), and classifying sitting postures by analysing pressures recorded by sensors in a chair in an office environment (Roh et al. Citation2018) or in a learning context (D’Mello, Chipman, and Graesser Citation2007). However, these sensor-based measurements that require physical contact may remain suboptimal in real-world applications, because of the conservative culture of safety-critical contexts where measurement techniques should be minimally intrusive and discreet. Furthermore, physical contact with operators when collecting biometric signals has potential for changing their behaviour or causing distraction. Because of their negligible intrusiveness, non-contact computer vision technologies (e.g. analysing body postures) have been used with ML for detecting student engagement changes in actual classrooms (e.g. Villa et al. Citation2020; Goldberg et al. Citation2021) and for detecting body postural changes of operators in training contexts (Nakajima Citation2004). 
This suggests that measuring operator engagement by using the combination of ML and computer vision technologies to analyse body postures in actual safety-critical control rooms is very promising.

Furthermore, care is needed when selecting a suitable measuring strategy based on ML and the nature of the data to effectively support design-related and other interventions (Chan et al. Citation2022). Kaur et al. (Citation2019) adopted unsupervised learning algorithms, such as K-Means, to predict student engagement based on detecting patterns of body posture; however, the potential risk with this approach is that invalid engagement may be indicated. This is because the use of unsupervised learning algorithms for evaluating engagement does not benefit from the progress made in human data labelling (Leong Fwa and Marshall Citation2018). For example, in a control room, the range of body postures of an operator using a computer either to browse working materials or to watch entertaining videos can be quite similar: the results of unsupervised learning algorithms, by evaluating only body postures, could indicate both these activities as showing the same state of engagement. This is because unsupervised learning algorithms mostly realise classifications by recognising patterns within the data; the definition of each class is given by analysis of the classification results (Sinaga and Yang Citation2020). This may decrease the effectiveness of the measurements, hindering the use of data-driven interventions for enhancing engagement.

The development and use of a valid engagement measurement methodology may aid in informing interventions by effectively indicating the distribution of typical engagement states. In the current study, the following five typical engagement states were defined:

State 0. The operator is not engaged at all and is avoiding work.

State 1. The operator is a little engaged and is standing by, available for work.

State 2. The operator is moderately engaged and is working in a leisurely manner.

State 3. The operator is very engaged and is working in an orderly and independent way.

State 4. The operator is extremely engaged and fully committed.

These states were generated based on a qualitative approach; a combination of multiple data sets was analysed by using a frame-driven thematic analysis (Braun and Clarke Citation2006) to describe the typical intensities of engagement of motorway operators in terms of the following four work engagement indicators:

Mental resilience: energetic arousal caused by the tasks (Matthews et al. Citation2013) and persistence while facing difficulties (Schaufeli et al. Citation2002).

Value: the importance or significance of the tasks (Kahn Citation1990) and competitive motivation in completing the tasks (Matthews et al. Citation2013).

Mood: the enjoyment (Schaufeli et al. Citation2002) and interest generated by the experience of doing the tasks (Matthews et al. Citation2013).

Cognitive involvement: concentration on the tasks at hand, the distorted sense of time (Schaufeli et al. Citation2002), cognitive vigilance (Kahn Citation1990), and the difficulty of detaching oneself from current tasks (Schaufeli et al. Citation2002).

These definitions are used by an instrument named Video-based Measurement for Operator Engagement (VMOE) [in press]. VMOE has been developed for manually assessing which of the five typical engagement states of an operator is observed. VMOE recommended four work-related indicators as cut-off points for measuring these five states (Appendix A). They are developed based on existing engagement indicators, including: (i) active participation in target activities (O’Malley et al. Citation2003; Alimoglu et al. Citation2014), (ii) focus [since highly engaged employees ignore everything else around them (Schaufeli et al. Citation2002)], (iii) interaction (Alimoglu et al. Citation2014) and (iv) the relatedness of communications with target activities (Wood et al. Citation2016). VMOE explains these indicators further to adapt them to the context of motorway control rooms.

The process of manual recognition of affective states is cumbersome (Gunes and Piccardi Citation2009), and because these five states require targeted recognition for informing design or other interventions, supervised learning algorithms were adopted for evaluating engagement state by means of body posture in this current study. Support Vector Machine (SVM) is a method of supervised learning; SVM builds an inference model to create boundaries between different data classes, to recognise targeted data by assessing features (Liu et al. Citation2010). The combination of image processing using SVM and manual assessment of postures has already been applied to assessing simple operator postural changes based on assessing low pixel silhouettes (Nakajima Citation2004). Proper feature selection is crucial for SVM when investigating the relationship between variations in complex body postures and engagement changes (Klein and Celik Citation2017). Notably, the range of specific operator work-related tasks may pose new challenges when locating key anatomical points to ensure effective engagement measurement and innovative approaches may be required.

Although similar sitting postures are generally adopted by operators in both non-safety-critical and safety-critical situations, the SVM models (that differentiate between engagement states) developed separately for these two environments may not be generalisable to each other, because their working situations (i.e. working tools and tasks) are usually different. For example, TV walls are often not found in non-safety-critical environments, and the tasks in such contexts may not involve long-term monitoring and response to emergencies. These contextual differences are likely to lead to different engagement implications of similar postures in the two different environments. For example, an operator looking up at a TV wall may indicate that (s)he is monitoring the system, while the same posture in a non-safety-critical environment may be observed when an employee is chatting with colleagues. Therefore, even though many posture estimation models have been trained with SVM in non-safety-critical contexts for evaluating mentality (e.g. Roh et al. Citation2018; Hawley, Hamilton-Wright, and Fischer Citation2023), they are not necessarily applicable in safety-critical settings, especially when specific operator engagement states have been selected as measurement criteria. Nevertheless, no studies were found that report the training of an SVM model using posture data of operators in safety-critical settings for measuring typical operator engagement states.

Notably, the conservative culture associated with the safety-critical domain (Savioja, Liinasuo, and Koskinen Citation2014) may lead to obstacles to the collection of sufficient standardised data for ML purposes. The lack of training datasets may threaten the effectiveness of ML evaluations by weakening randomness in data processing. To achieve resilience (Bergström, van Winsen, and Henriqson Citation2015), a methodology to cope with such inherent risks in data collection should be developed for measuring engagement. In this connection, an approach that incorporates SVM has the potential to reduce the impact of a shortage of training datasets (Li et al. Citation2022) by determining a threshold for the requirement of training datasets (Hawley, Hamilton-Wright, and Fischer Citation2023). Using this approach is preferable to assuming that sufficient training datasets will always be available. The alternative approach of collecting data in simulated environments (e.g. Nakajima Citation2004; Zhang et al. Citation2020) is not recommended because of the difficulties in simulating dynamic operator behaviours in actual work contexts.

1.2. Aim and overview

This paper describes the development and verification of an automatic, real-time, objective, and relatively unintrusive engagement evaluation procedure for measuring typical operator engagement states in actual safety-critical control room environments. This is a methodology that guides the development of SVM models for processing data from real working environments. Firstly, each operator was videoed using a simple camera, and the engagement experience in each video was divided into segments so that each segment showed a typical engagement state as defined by VMOE. Secondly, key body posture features in each video were identified. Thirdly, the positions of these key features were estimated using computer vision algorithms. Consequently, the body posture estimation results and the previously determined engagement states formed a sample dataset. The dataset formed in this way was randomly divided into a training dataset and a testing dataset to train and test an SVM model so that it could determine the engagement state objectively and automatically. To ensure the effectiveness of each model, a conservative threshold for the size of the training dataset was adopted. Finally, for each SVM model, the effectiveness and accuracy of the determination of the five typical engagement states were assessed. The testing results established the feasibility of this methodology for building an engagement measurement that can reveal opportunities for improving engagement in this safety-critical domain.

2. Methods

2.1. Subject selection

Motorway control room seated operators were selected as subjects for defining typical operator engagement states for VMOE and providing data for training the SVM model in this study because the working environment of motorway operators is similar to conditions in many safety-critical control rooms (e.g. nuclear power stations, power plants, and railway control rooms). Operators in these control rooms generally work while sitting, and almost all the tasks of the operator are accomplished by the upper part of the body. A TV wall, computers, and telephones are always their main working tools; their tasks usually involve daily monitoring, emergency response, and routine work (e.g. Izsó and Antaiovits Citation1997; Smith, Blandford, and Back Citation2009; Savioja, Liinasuo, and Koskinen Citation2014; Schaeffer and Lindell Citation2016; Jin Citation2022). Experience is largely context-dependent (Law et al. Citation2009); operators experience similar engagement states while conducting similar tasks and using similar work tools, and operators normally adopt particular body postures while performing specific types of tasks and using particular work tools. Because the work tools and tasks are similar within a range of safety-critical control rooms, it is reasonable to generalise links between engagement and body postures across a range of similar control rooms.

2.2. Participants and engagement rating results

All the research data in this paper was collected from the real environment of a motorway control room in one province in Southwest China. All the observed subjects were regular operators in the motorway control room, working normally. There were three male operators and seven female operators, aged between 25 and 50, all of them with over three years of work experience. Four day shifts and six night shifts were filmed in this study (Table 1). The night shift was from 4:30 pm to 9:30 am, and the day shift was from 9:30 am to 4:30 pm. These videos were all shot between 9:00 am and 10:00 pm, because the traffic volumes on a motorway change frequently during this period. Video data for this period can better reflect the dynamic working status of an operator. Factors outside the observer’s control also influenced the recording; for example, an operator asked that one part of a video be deleted because (s)he thought that some sensitive issues had been mentioned during recording, and another operator suddenly decided to withdraw from participation for personal reasons. Therefore, the time and duration of the video clips differed. A summary of the video clips is shown in Table 1. The Ethics Sub-Committee of Loughborough University granted full ethical approval for this study.

Table 1. The list of all video clips.

The varying engagement states in each video (each showing one operator) were assessed according to the criteria of VMOE. Each of the ten video recordings was scored by one of ten trained postgraduate students, all majoring in enterprise management and having volunteered as scorers. They were first given instructions about using VMOE, and the duties of motorway operators (Jin Citation2022) were explained. Then each student was asked to carefully divide one video into many independent segments based on the operator behaviour they were seeing at each instant, according to the VMOE rating rubric (Appendix A) and the VMOE instruction manual (Appendix B), assigning to each segment an engagement state from 0 to 4 according to VMOE. A change in the engagement state signalled the start of a new segment, and a timestamp (h:min:s) was inserted when (s)he believed that the engagement state had changed. Each student was told they could review the video clip during scoring as often as they wished. To encourage the students to rate these videos carefully according to VMOE, all rating processes were supervised and guided by two cognitive scientists (i.e. the first and second authors) who worked with students to decide the ratings when they were not sure about scoring of particular clips. The engagement state rating results from the ten operators for the discrete segments in the ten corresponding videos were used as the dataset for the subsequent evaluation models.
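The segment-based rating described above can be turned into one label per analysed second before it is paired with posture data. The following is a minimal sketch, not the procedure used by the scorers: the function names `to_seconds` and `labels_per_second` and the example ratings are hypothetical, and it assumes that each timestamp marks the start of a segment and that the first segment starts at 0:00:00.

```python
from bisect import bisect_right


def to_seconds(stamp: str) -> int:
    """Convert an 'h:min:s' timestamp into seconds from the start of the clip."""
    hours, minutes, seconds = (int(part) for part in stamp.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def labels_per_second(segments, clip_length_s):
    """Expand (start_timestamp, state) pairs into one engagement state per second.

    Each segment runs from its own start time to the start of the next
    segment; the last segment runs to the end of the clip.
    """
    if not segments:
        raise ValueError("at least one segment is required")
    starts = [to_seconds(stamp) for stamp, _ in segments]
    states = [state for _, state in segments]
    if starts != sorted(starts) or starts[0] != 0:
        raise ValueError("segments must be ordered and begin at 0:00:00")
    if any(state not in range(5) for state in states):
        raise ValueError("engagement states must be integers from 0 to 4")
    # bisect_right locates the segment with the latest start <= current second
    return [states[bisect_right(starts, second) - 1]
            for second in range(clip_length_s)]


# Hypothetical ratings for a 10-second excerpt (illustration only)
example = [("0:00:00", 2), ("0:00:04", 3), ("0:00:07", 0)]
print(labels_per_second(example, 10))  # [2, 2, 2, 2, 3, 3, 3, 0, 0, 0]
```

One label per second matches the sampling rate of the posture estimation in section 2.3, so each analysed frame can be paired with exactly one rated state.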

2.3. Body posture estimation

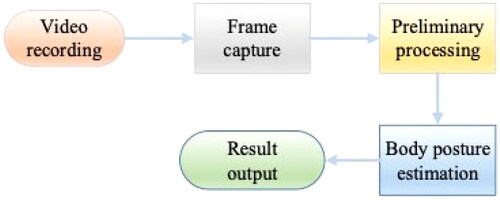

The purpose of this part of the study was to acquire information on body posture from the video recording using computer vision algorithms; this process is called body posture estimation. The process of body posture estimation is depicted in Figure 1 and involves video recording, frame capture, preliminary processing, body posture estimation, and result output. The details of each step are presented below.

Figure 1. The process of body posture estimation.

In the video recording and frame capture step, an ordinary camera (the rear camera of a smartphone) was used to capture the body posture of the operator at work. The resolution of the camera was set to 1920 × 1080 pixels and the frame rate of the recorded video was 30 frames per second. The body posture was estimated every 30 frames, namely once every second. As most of the body of the operator would be hidden by the work bench or seat if photographed from the front or rear, the body posture could only be captured from the side. The 2 o’clock and 10 o’clock positions were selected as the best places for the camera to capture the body posture in the right-left, front-back, and up-down dimensions. During the video recording of each operator, the camera was not moved. However, it was not possible to keep the position and orientation of the camera identical for all operators because of the following considerations:

Operators usually sit in different positions, and so the position of the camera also needed to be changed to film them. As the devices of the monitoring system occupied space, the preferred space for placing the camera was not always available.

Unexpected situations: a chosen camera location might need to be changed if the space was unexpectedly required by an operator or colleague for a work-related reason.

Minor variations in the positioning of the video camera for each operator might have led to inconsistent data collection conditions, blurring the relationship between posture and engagement. Therefore, rather than aggregating all the video data, a specific model was trained and tested for each video according to a consistent methodology.

In the preliminary processing step, some invalid data, which are inevitable in the actual working environment, were removed. The criteria for discarding data were as follows: (a) if the observed operator left the working position or (b) if other people blocked the observed operator partially or totally. In these situations, body posture estimation was not feasible. The last column of Table 1 shows the number of available images for each video recording. In addition to discarding invalid data, it was necessary to rotate and resize the frames according to the needs of the body posture estimation and other analysis. The OpenCV-Python library was used to capture and process frames in the preliminary processing of the images in this study.
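The frame capture and resizing steps can be sketched with OpenCV-Python as follows. This is an illustrative sketch under stated assumptions rather than the code used in the study: the function names `sampled_frame_indices` and `iter_sampled_frames` and the optional `size` parameter are hypothetical, and the discarding of invalid frames (operator absent or blocked) is not automated here.

```python
def sampled_frame_indices(total_frames: int, fps: int = 30, every_s: int = 1):
    """Indices of the frames kept when one frame is analysed every `every_s` seconds."""
    step = fps * every_s
    return list(range(0, total_frames, step))


def iter_sampled_frames(video_path: str, step: int = 30, size=None):
    """Yield (frame_index, image) for every `step`-th frame of a video file.

    `size` is an optional (width, height) passed to cv2.resize; the target
    size needed by the pose model is an assumption left to the caller.
    """
    import cv2  # imported lazily so the helper above runs without OpenCV

    capture = cv2.VideoCapture(video_path)
    try:
        index = 0
        while True:
            ok, frame = capture.read()
            if not ok:  # end of the file or an unreadable frame
                break
            if index % step == 0:
                if size is not None:
                    frame = cv2.resize(frame, size)
                yield index, frame
            index += 1
    finally:
        capture.release()


# A 3-second recording at 30 frames per second yields three analysed frames
print(sampled_frame_indices(90))  # [0, 30, 60]
```

Sampling by frame index rather than by wall-clock time keeps the analysed frames aligned with per-second engagement labels, provided the recording really has a constant frame rate.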

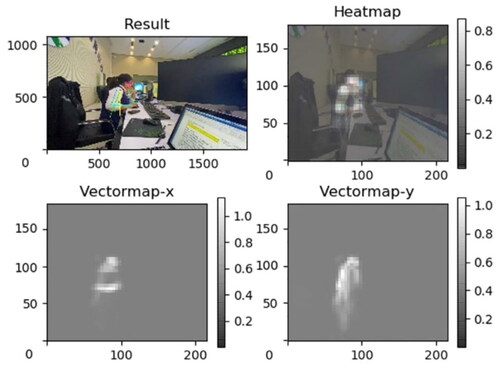

In the body posture estimation step, the OpenPose model was applied; this model is an open-source library developed at Carnegie Mellon University (CMU) based on Convolutional Neural Network (CNN) and Convolutional Architecture for Fast Feature Embedding (Caffe) frameworks (Caffe | Deep Learning Framework 2022) (Jia et al. Citation2014). The OpenPose model has shown excellent performance and efficiency in multi-person two-dimensional (2D) posture detection (Cao et al. Citation2021). It has been implemented in popular ML frameworks, such as Keras, TensorFlow, and PyTorch. In this study, OpenPose in TensorFlow was used to estimate body posture from 2D images. As many surveys suggest, TensorFlow is the most popular and the most mentioned ML framework in scientific papers, and it is the primary choice of data scientists around the world (Dinghofer and Hartung Citation2020). Positions in these images are expressed in pixels, the basic unit of computer image processing. One example of the estimation results using TensorFlow is shown in Figure 2. The first panel shows the final estimation results in the original image, in which the key points and the limbs of an observed operator are detected accurately. The x-axis and y-axis show that the size of the original image is 1920 × 1080 pixels, as mentioned above. The second panel is the heatmap of the key points, which shows the confidence level for each key point at every pixel location in the image. The third and fourth panels are the vector maps of the x and y components, which show the part affinity fields (2D vector fields for each limb) for part association. The last three panels (left to right, from top to bottom) are resized to suit the requirements of the OpenPose model.
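To illustrate how a key-point heatmap relates to a position in the original frame, the sketch below takes the strongest response in a small confidence map and scales it back to 1920 × 1080 pixels. It is a deliberately simplified, single-person illustration: the function names and the example values are hypothetical, and the full OpenPose pipeline additionally uses peak suppression and the part affinity fields to assemble key points into bodies.

```python
def heatmap_peak(heatmap):
    """Return (row, col, confidence) of the strongest response in a 2D heatmap."""
    best = (0, 0, heatmap[0][0])
    for r, row in enumerate(heatmap):
        for c, value in enumerate(row):
            if value > best[2]:
                best = (r, c, value)
    return best


def to_image_pixels(row, col, heatmap_shape, image_shape=(1080, 1920)):
    """Scale a heatmap cell to pixel coordinates (x, y) in the original frame."""
    h_rows, h_cols = heatmap_shape
    img_rows, img_cols = image_shape
    # Use the cell centre so the estimate is not biased toward the top-left corner
    x = (col + 0.5) * img_cols / h_cols
    y = (row + 0.5) * img_rows / h_rows
    return x, y


# Hypothetical 3 x 4 confidence map for one key point (illustration only)
nose_map = [
    [0.01, 0.02, 0.05, 0.01],
    [0.03, 0.10, 0.93, 0.08],
    [0.02, 0.04, 0.07, 0.01],
]
row, col, confidence = heatmap_peak(nose_map)
print(row, col, confidence)                 # 1 2 0.93
print(to_image_pixels(row, col, (3, 4)))    # (1200.0, 540.0)
```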

Figure 2. An example of body posture estimation.

To evaluate engagement accurately, this study used the picture itself, rather than any part of the body, as the frame of reference. This is because changes in engagement were reflected not only by changes in posture but also by changes in body position. Therefore, the fixed picture is more suitable as a reference than the body posture. The left edge of the picture is the y-axis, the lower edge of the picture is the x-axis, and the lower-left corner of the picture is the origin.
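Image libraries such as OpenCV index pixels from the top-left corner, with the vertical coordinate increasing downwards, so a conversion is needed to obtain the lower-left origin described above. The sketch below shows one way to do this; the function name is hypothetical, and it assumes that the pose estimator reports positions as top-left-origin pixel coordinates.

```python
def to_picture_coordinates(x_px: float, y_px_from_top: float,
                           image_height: int = 1080):
    """Convert top-left-origin pixel coordinates to a lower-left-origin system.

    The x value is unchanged; the y value is measured upwards from the
    lower edge of the picture instead of downwards from the upper edge.
    """
    return x_px, image_height - y_px_from_top


# A point 100 pixels below the upper edge lies 980 pixels above the lower edge
print(to_picture_coordinates(640, 100))  # (640, 980)
```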

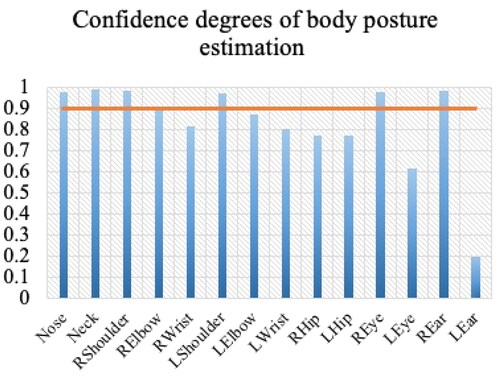

In the result output step, as much valid body posture information as possible was retained, while eliminating unrelated information. As shown in Figure 2, the aim of body posture estimation was to locate key anatomical points or joints. To locate the possible anatomical points more comprehensively, clip 2 in Table 1, being the longest video, was selected for estimating the degree of confidence for the body posture. The results are shown in Figure 3: the nose, neck, shoulders, right eye, and right ear were taken into consideration, for all of which the confidence levels exceeded 90 percent. However, the locations of the eyes, nose, and ears change in line with the movement of the head. Hence, the locations of the right eye, nose, and right ear provide similar information about body posture. It is therefore redundant to record the locations of all three features (right eye, nose, and right ear), and so only the nose was selected. In conclusion, the nose, neck, and right shoulder were selected as the key anatomical points for estimating body posture.
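The selection logic described above (keep points with high confidence, then keep a single representative of the head) can be sketched as follows. The function names, the key-point names, and every numerical value in the example are hypothetical and serve only to illustrate the two filtering steps and the resulting feature row.

```python
from statistics import mean

# Points that move together with the head and therefore carry similar information
HEAD_POINTS = {"nose", "right_eye", "right_ear"}


def select_key_points(confidences, threshold=0.90, head_representative="nose"):
    """Keep points whose mean confidence exceeds the threshold, with one head point."""
    reliable = [name for name, values in confidences.items()
                if mean(values) > threshold]
    return [name for name in reliable
            if name not in HEAD_POINTS or name == head_representative]


def feature_vector(frame_points, selected):
    """Flatten the (x, y) positions of the selected points into one feature row."""
    return [coord for name in selected for coord in frame_points[name]]


# Hypothetical per-frame confidences (illustration only, not the study's data)
confidences = {
    "nose": [0.95, 0.97],
    "neck": [0.96, 0.94],
    "right_shoulder": [0.93, 0.95],
    "right_eye": [0.94, 0.92],
    "right_ear": [0.92, 0.94],
    "right_wrist": [0.40, 0.55],
}
selected = select_key_points(confidences)
print(selected)  # ['nose', 'neck', 'right_shoulder']

frame = {"nose": (900, 700), "neck": (880, 600), "right_shoulder": (860, 590)}
print(feature_vector(frame, selected))  # [900, 700, 880, 600, 860, 590]
```

With three key points, each analysed frame contributes a six-value feature row, which keeps the input to the classifier small.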

2.4. Engagement evaluation based on SVM

In this study, a non-linear SVM with the Gaussian kernel function was used to detect the relationship between body posture data and engagement evaluation states. Because of its good performance in evaluation, this is a popular research approach in the assessment area and is widely used in practical applications (Zhao et al. Citation2009; Zhang et al. Citation2020). The SVM model was implemented and trained with the Scikit-learn library in Python. The training of SVM defines its decision boundary, which is the optimum hyperplane with maximum classification margins that segregate learning samples appropriately. If the input data cannot satisfactorily be classified linearly, SVM can map it into a high dimensional feature space, using a nonlinear transformation to convert the problem into a linear classification (Liu et al. Citation2010).
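For reference, the standard textbook form of the Gaussian (radial basis function) kernel and of the resulting binary decision function is given below. The symbols are introduced here for illustration: the x_i are training feature vectors, the y_i are binary labels in {-1, +1}, the α_i are the learned multipliers, b is the bias, C is the penalty parameter, and γ is the kernel coefficient.

```latex
K(\mathbf{x}_i, \mathbf{x}_j) = \exp\!\left(-\gamma \,\lVert \mathbf{x}_i - \mathbf{x}_j \rVert^{2}\right), \qquad \gamma > 0

f(\mathbf{x}) = \operatorname{sign}\!\left(\sum_{i=1}^{n} \alpha_i \, y_i \, K(\mathbf{x}_i, \mathbf{x}) + b\right), \qquad 0 \le \alpha_i \le C
```

A larger γ makes the influence of each training sample more local, while a larger C penalises training errors more heavily. For a problem with more than two classes, such as the five engagement states, Scikit-learn's `SVC` combines binary classifiers of this form in a one-vs-one scheme.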

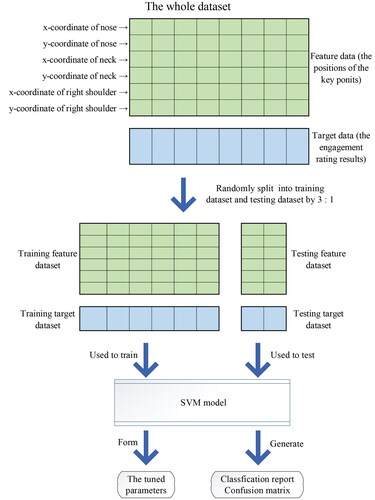

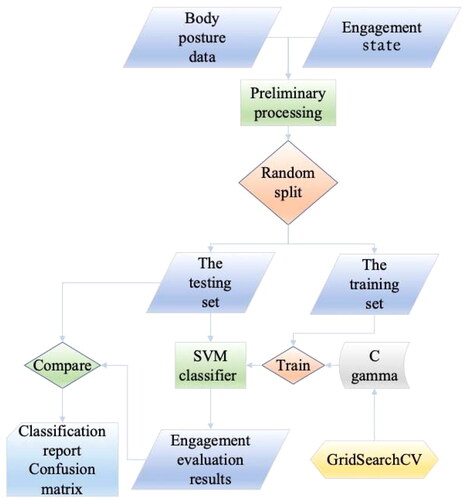

As shown in Figure 4, the positions of the key points from body posture estimation in section 2.3 were the feature data, and the engagement rating results in section 2.2 were the target data in the study. The feature data and target data were then both split into training and testing datasets in the ratio 3:1. A very similar study used 935 and 1118 images as two thresholds to recognise operator body postures in safety-critical systems by using SVM and image processing, and it achieved very high accuracy (Nakajima Citation2004). Another similar study took 800 images as a training dataset (Juang et al. Citation2012), and the image resolution (in pixels) used in these two studies was lower than that of the current study. To ensure the validity of the evaluation, a sufficiently large training dataset is necessary to create robust boundaries when using SVM. The threshold for the training dataset was set at 1408 images; this was the smallest training dataset among the clips whose training datasets exceeded the 1118 images used in the abovementioned studies. Clips 1, 5, 6, and 9 were excluded because they did not meet this threshold. A systematic trial and error approach was adopted in the search for the optimal parameters (Khalifa and Htike Citation2013). In the training process, two significant hyperparameters [i.e. (i) the penalty parameter C and (ii) the kernel function coefficient gamma] were tuned by using GridSearchCV. GridSearchCV trained the models with all the hyperparameter sets in the tuned parameters (i.e. a pre-set collection of potential hyperparameter sets). The collection of penalty parameter C was preset to [10, 100, 1000, 10,000, 100,000], and the collection of kernel function coefficient gamma was preset to [0.0001, 0.001, 0.01, 0.1, 1, 10, 100, 1000, 10,000]. 
The testing dataset, which was a new group of the feature data and target data, was used to validate the performance of the trained SVM model by comparing the predicted results from the model with the engagement rating results. GridSearchCV helped search for the optimal hyperparameter set which enables the model to provide the most correctly predicted results in the testing dataset. The whole process of engagement evaluation is shown in .
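The split-and-search procedure described above can be sketched with Scikit-learn as follows. This is a minimal illustration under stated assumptions, not the authors' code: the data are synthetic stand-ins (three illustrative states, six features per image), and the stratified split, the random seeds, and the three-fold cross-validation are choices made only for this example. The two hyperparameter collections are copied from the text.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for the real data (assumption): each image is described by
# six numbers, e.g. the (x, y) positions of nose, neck and right shoulder.
rng = np.random.default_rng(0)
n_states, n_per_state = 3, 80
centres = rng.normal(scale=3.0, size=(n_states, 6))
X = np.vstack([c + rng.normal(size=(n_per_state, 6)) for c in centres])
y = np.repeat(np.arange(n_states), n_per_state)

# 3:1 split into training and testing data, as described in the text.
# Stratifying by state is an assumption, not something the study reports.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Candidate values for C and gamma, copied from the text.
param_grid = {
    "C": [10, 100, 1000, 10_000, 100_000],
    "gamma": [0.0001, 0.001, 0.01, 0.1, 1, 10, 100, 1000, 10_000],
}

# GridSearchCV fits one model per (C, gamma) pair and, by default, ranks the
# pairs by cross-validated accuracy on the data passed to fit().
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=3)
search.fit(X_train, y_train)

best_params = search.best_params_
test_accuracy = search.score(X_test, y_test)  # accuracy of the refitted best model
print(best_params, round(test_accuracy, 2))
```

Note that, by default, `GridSearchCV` selects hyperparameters by cross-validation within the training data; the held-out testing data are used here only for the final accuracy check.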

Figure 3. Confidence degrees of body posture estimation: the orange line indicates a confidence level of 90%.

Figure 4. The SVM model of engagement evaluation.

Figure 5. The process of engagement evaluation.

Because precision and recall within a model typically trade off against each other, it is advisable to determine the relative importance of these metrics according to the objective of the evaluation when adjusting or training the SVM model, if the research aim is to realise some specific design goals. For example, when the cost of missing the input data of one class is high, the focus should be on improving the recall of this class rather than its precision. An example of this situation could be where operators in a highly safety-critical domain are required to respond quickly to unexpected events (Ikuma et al. 2014). In this case, if the model does not recognise that the engagement state of an operator is 0 when an emergency occurs, the design interventions for helping the operator improve his/her engagement state cannot be applied at the required time. If the operator remains in a low state of engagement instead of responding to the emergency, motorway safety will be threatened. The recall of state 0 should therefore be increased so that failures to detect engagement state 0 occur as rarely as possible, even if the result is a loss of precision, meaning that other states are more often incorrectly evaluated as state 0. Although it is necessary to consider the relative importance of precision and recall in effectively evaluating a particular engagement state, the model is more widely applicable if it can almost always correctly identify each state of engagement, because this will reveal more opportunities for improving engagement.
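One possible way to favour the recall of a single state when training an SVM is to give that state a larger class weight. The sketch below is not part of the study: it uses synthetic two-feature data in which state 0 is rare, and the values of `C`, `gamma`, and the weight of 10 are arbitrary choices for illustration. Whether recall actually rises, and how much precision is lost, depends on the data.

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic, overlapping two-feature data (assumption): state 0 is rare.
rng = np.random.default_rng(1)
X = np.vstack([
    rng.normal(loc=0.0, scale=1.0, size=(40, 2)),   # state 0 (minority)
    rng.normal(loc=1.5, scale=1.0, size=(360, 2)),  # state 1 (majority)
])
y = np.array([0] * 40 + [1] * 360)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=1
)


def state0_scores(class_weight):
    """Fit an RBF SVM and return (precision, recall) for state 0 on the test set."""
    model = SVC(kernel="rbf", C=10, gamma=0.1, class_weight=class_weight)
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    precision = precision_score(y_test, pred, pos_label=0, zero_division=0)
    recall = recall_score(y_test, pred, pos_label=0, zero_division=0)
    return precision, recall


plain = state0_scores(None)        # every state weighted equally
weighted = state0_scores({0: 10})  # errors on state 0 cost ten times more
print("unweighted precision/recall for state 0:", plain)
print("weighted   precision/recall for state 0:", weighted)
```

Comparing the two printed pairs shows how the balance between precision and recall for state 0 shifts for this particular dataset.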

The aim of this study is to develop an effective and balanced methodological framework that focuses on generally accurate evaluations of all engagement states rather than the precision or recall of some particular engagement states. Therefore, it is necessary to consider both precision and recall for all engagement states; higher values of these two metrics, and therefore of the F1-score of all engagement states, are desirable. The F1-score is comprehensive, taking both precision and recall into account. The macro average of the F1-score is the arithmetic mean of the F1-scores of all engagement states, so each class is treated equally, but it may be significantly influenced by the performance of minor classes (for which there are few data), possibly resulting in underestimation of the performance of major classes. The weighted average of the F1-score takes the imbalance of classes into account and can better reflect the performance of most classes, especially when the distribution of different engagement states is very uneven. This may better serve data collection in the real world, because the amount of data collected for each class in actual situations is not always sufficient or equal, and because not all engagement states occur to the same extent throughout the videos. In addition, accuracy is the other significant metric for assessing model performance, since it reflects the proportion of all results that are correctly evaluated. Therefore, for all engagement states, the F1-score and accuracy are both significant for comprehensively evaluating the model’s performance in this study.
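The difference between the macro and weighted averages can be seen in a small worked example. The per-state F1-scores and image counts below are hypothetical values chosen for illustration, not results from this study.

```python
# Hypothetical per-state F1-scores and numbers of test images (not study data).
f1_by_state = {0: 0.95, 1: 0.90, 2: 0.40}
support_by_state = {0: 500, 1: 400, 2: 20}


def macro_average(scores):
    """Arithmetic mean: every state counts equally."""
    return sum(scores.values()) / len(scores)


def weighted_average(scores, support):
    """Mean weighted by the number of test images in each state."""
    total = sum(support.values())
    return sum(scores[s] * support[s] for s in scores) / total


macro_f1 = macro_average(f1_by_state)
weighted_f1 = weighted_average(f1_by_state, support_by_state)
print(f"macro F1 = {macro_f1:.2f}, weighted F1 = {weighted_f1:.2f}")
```

Here the rare state 2 pulls the macro average down to 0.75, while the weighted average stays at about 0.92 because states 0 and 1 account for almost all of the images.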

3. Results

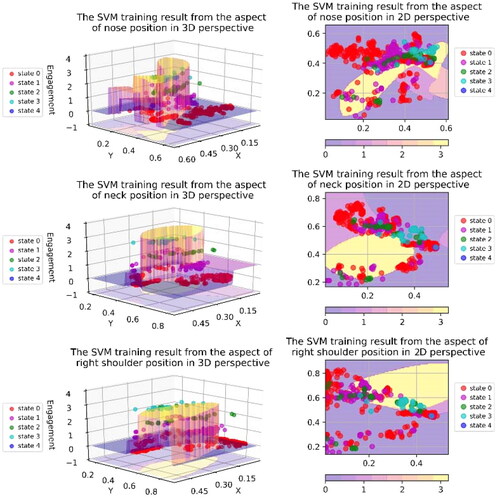

The performance of the engagement evaluation model, which had been trained on the training dataset as described above, was assessed in detail using the testing dataset. As discussed in section 2.3, the evaluation model was trained and tested on data from only one recording of one operator at a time, and all the video clips that could provide more than 1408 images () were processed in this way. To illustrate the classifying mechanism of the classifier model clearly and directly, the decision boundary and the testing set can be visualised graphically when the feature data are two-dimensional or three-dimensional. However, in this study, the feature data, namely the body posture estimation result, were six-dimensional, as shown in the six rows of the first green matrix in . Such data cannot be visualised in a chart with at most three dimensions. To overcome this, the feature data were divided into three sets of two-dimensional points to be drawn separately, namely the positions of the nose, neck, and right shoulder. The decision boundary was projected onto each of these three position planes. Notably, this process was a conversion from high-dimensional space to low-dimensional space, which resulted in information loss and data overlap. Therefore, some decision domains, which are enclosed by decision boundaries, are not visible in the figures. For the same reason, the decision boundary may appear not to distinguish clearly between the position data of the different rated engagement states.

An example visualisation is provided to clarify the mechanism of the classifier model: two-dimensional and three-dimensional views for video clip 3 are shown in Figure 6. In these figures, the coordinates of the points are the locations of the nose, neck, or right shoulder. The colour of a point indicates its engagement state as rated by VMOE, as shown in the legend. The differentiated regions are decision domains. The colour of a decision domain indicates the engagement state that the SVM model assigns to points within that domain, which can be read from the colour bar. Two-dimensional visualisation is the main form of academic visualisation, providing a direct view of the position data and the decision domains. Due to the information loss and data overlap mentioned above, elements of different engagement states overlap each other in two-dimensional visualisation, as illustrated in . Alternatively, in the three-dimensional visualisation, the z-coordinate denotes the engagement state. The position data and the decision domains of different engagement states are distributed in the x-y planes at the corresponding z-coordinates. Using three-dimensional visualisation, it is easy to observe whether the points are in the corresponding decision domains. The two-dimensional perspective is the projection of the three-dimensional plot onto the x-y plane at z-coordinate −1.

Figure 6. The classifying mechanism of video clip 3.

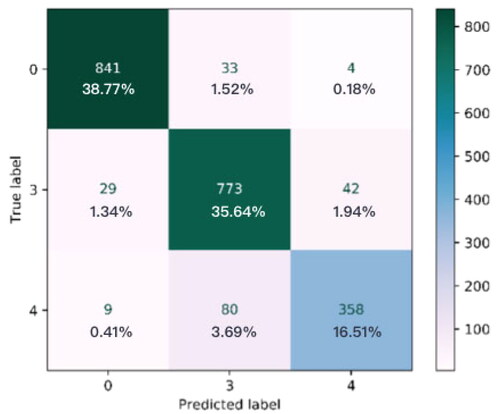

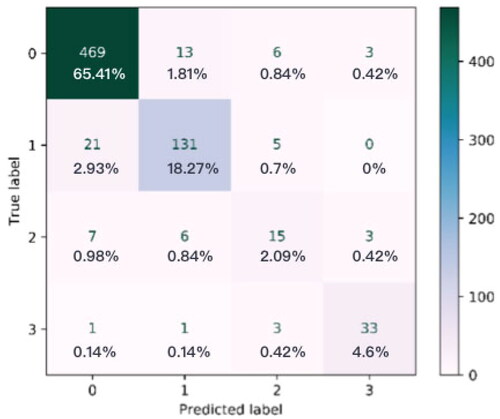

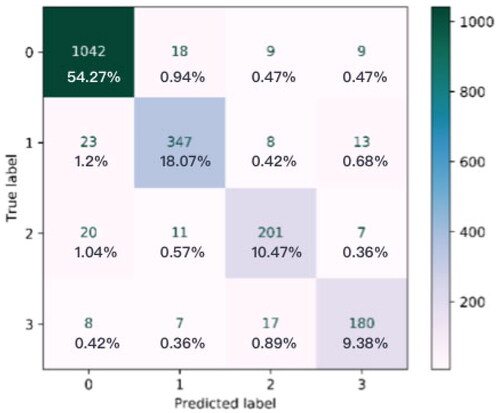

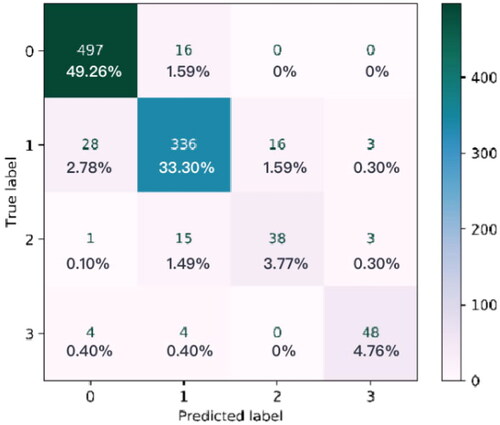

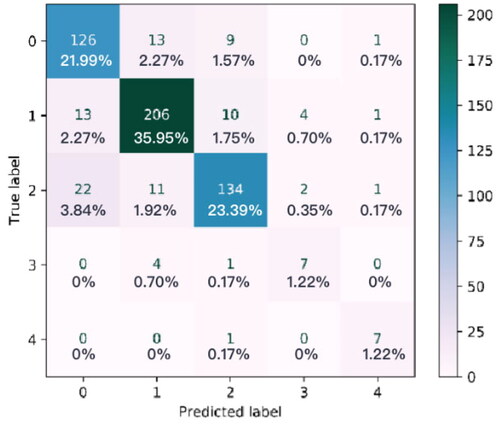

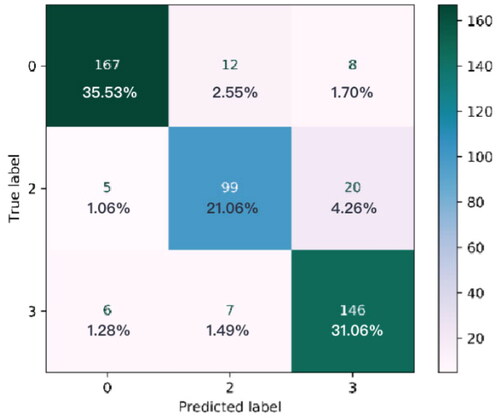

The engagement evaluation results of each video clip are also displayed in a confusion matrix, which provides the numbers of correctly evaluated results and incorrectly evaluated results. In the confusion matrix, the vertical axis (termed ‘true labels’) indicates the true engagement states derived from the expert assessment of the video clips. The horizontal axis (termed ‘predicted labels’) indicates the predicted engagement states generated by the model. The diagonal elements represent the numbers of real engagement states which were predicted as such by the model (i.e. the match between the true and predicted labels), while off-diagonal elements represent the cases where the actual engagement states were predicted incorrectly by the model. The confusion matrices of the video clips (i.e. the numbers of images and their proportions) are shown in Figures 7–12, and all percentages are rounded to two decimal places.
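The layout described above, with true labels as rows and predicted labels as columns, can be sketched in a few lines of plain Python. The label lists are made-up examples and the function name `confusion_matrix` is used here only for illustration.

```python
def confusion_matrix(true_labels, predicted_labels, states):
    """Rows are true states, columns are predicted states."""
    index = {state: i for i, state in enumerate(states)}
    matrix = [[0] * len(states) for _ in states]
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t]][index[p]] += 1
    return matrix


# Made-up ratings and predictions for seven images (not study data).
y_true = [0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2, 0]

cm = confusion_matrix(y_true, y_pred, states=[0, 1, 2])
correct = sum(cm[i][i] for i in range(len(cm)))  # diagonal = correct evaluations

for row in cm:
    print(row)
print("correctly evaluated images:", correct)
```

In this toy case the diagonal holds 5 of the 7 images; the two off-diagonal entries are a state 0 image evaluated as state 1 and a state 2 image evaluated as state 0.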

Figure 7. Confusion matrix for video clip 2.

Figure 8. Confusion matrix for video clip 3.

Figure 9. Confusion matrix for video clip 4.

Figure 10. Confusion matrix for video clip 7.

Figure 11. Confusion matrix for video clip 8.

Figure 12. Confusion matrix for video clip 10.

The classification reports corresponding to the confusion matrices are shown in Tables 2–7, and all results are given to two decimal places. Several metrics are presented in the classification result as follows: (a) precision (for each engagement state, the proportion of correctly evaluated results among all results evaluated as that state); (b) recall (for each engagement state, the proportion of correctly evaluated results among all results that truly belong to that state); (c) F1-score (the harmonic mean of precision and recall); (d) accuracy (for all engagement states, the proportion of correctly evaluated results in all results); (e) the macro average of precision, recall, and F1-score (the arithmetic mean of precision, recall, and F1-score of all engagement states included); (f) the weighted average of precision, recall, and F1-score (the weighted mean of precision, recall, and F1-score of all included engagement states, weighted according to the proportions of the corresponding engagement states in all testing data).
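The per-state metrics defined above follow directly from a confusion matrix. The sketch below computes them in plain Python for a made-up 3 × 3 matrix (rows are true states, columns are predicted states); the function name `classification_metrics` and the numbers are illustrative assumptions, not values from the study.

```python
def classification_metrics(cm):
    """Return per-state precision, recall, F1 and support, plus overall accuracy."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    report = {}
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        true_k = sum(cm[k])                            # row sum (support)
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / true_k if true_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[k] = {"precision": precision, "recall": recall,
                     "f1": f1, "support": true_k}
    accuracy = sum(cm[k][k] for k in range(n)) / total
    return report, accuracy


# Made-up confusion matrix for three states with ten test images each.
example_cm = [
    [8, 2, 0],
    [1, 6, 3],
    [0, 1, 9],
]
report, accuracy = classification_metrics(example_cm)
for state, m in report.items():
    print(state, {name: round(value, 2) for name, value in m.items()})
print("accuracy:", round(accuracy, 2))
```

The macro and weighted averages in items (e) and (f) are then the plain and support-weighted means of the per-state values in `report`.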

Table 2. Classification result for video clip 2.

Table 3. Classification result for video clip 3.

Table 4. Classification result for video clip 4.

Table 5. Classification result for video clip 7.

Table 6. Classification result for video clip 8.

Table 7. Classification result for video clip 10.

4. Discussion

This study presents an innovative methodology for measuring operator engagement. It combines manually measured typical engagement states with an analysis of vision-based body posture data, generated by Openpose and classified by SVM, to realise automated, real-time, objective, effective, and less intrusive engagement evaluation of operators in the actual safety-critical working environment of a motorway control room. The results in show the effectiveness, practicality, and robustness of the models developed by this methodology. This indicates that the supervised learning method based on image processing data is effective at predicting operator engagement in actual workplace settings, and it is reasonable to conclude that the five typical engagement states of the operators were generally visually differentiated by scorers using the manual third-party coding approach termed VMOE. Compared with self-assessment methods and their limitations (Reinerman-Jones, Matthews, and Mercado 2016), this automated real-time measurement technique is more objective and efficient. Because of its satisfactory performance, this measurement can effectively inform interventions to enhance operator engagement by indicating changes in real-time operator engagement states in control rooms.

Because of the relatively sophisticated methodological framework and the adoption of advanced AI algorithms, the method employed in this study offers superior performance compared with some previous studies. The weighted averages of the precisions, recalls, and F1-scores of video clips 2, 3, 4, 7, 8, and 10 were all above 0.84, and the highest weighted average F1-score was 0.92 (). The average F1-score of all the engagement states in these video clips was 0.85, which is higher than the 0.81 achieved in one former related study (Brenner et al. 2021). The highest accuracy was 0.92 () and the average accuracy of all these video clips was 0.89; both results are higher than the 0.71 obtained in another previous related study (Ashwin and Guddeti 2019). This suggests that the training datasets were sufficient for building robust models once an effective threshold for training dataset size had been applied to create robust boundaries for SVM. The numbers of images and pixels used in the current study are much higher than the thresholds used in two other closely related studies that achieved satisfactory data classifications by using SVM (Nakajima 2004; Juang et al. 2012). The models were evaluated on the testing datasets, and the convincing results indicate that the proposed methodology can build effective models.

This study explores an effective way of providing sufficient information about body posture for tracking operator engagement in the actual working environment. Body postures in control rooms are usually more varied and unpredictable, owing to sudden emergencies and other unexpected events, than those in other environments where measuring engagement has been widely studied, such as classrooms or simulated laboratories (e.g. Klein and Celik 2017; Villa et al. 2020; Bustos-López et al. 2022). If only a few indicators of body posture are used, such as the body lean angle or the slouch factor in the classroom (Sanghvi et al. 2011), or if unnecessarily complex features are used, such as numerous silhouetted form features without precisely defined key points in a simulated laboratory (Nakajima 2004), it may not be possible to describe the body posture fully and efficiently, making it even more difficult for the SVM model to distinguish operator engagement states. To alleviate these issues, this research used data for three anatomical key points, because the combination of these key points can describe the posture more comprehensively and can therefore be used to evaluate the engagement state more accurately. Encouraging evaluation results confirmed that the hypothesised anatomical key points used to provide information on body posture in this study are sufficient to track the five operator engagement states in the actual working environment, even though some key anatomical points could not always be located. For example, in , engagement states cannot be classified properly by using the position of only one of the three anatomical key points.

4.1. Contribution

This study is the first (within mainstream published literature) to evaluate typical operator engagement states in an actual safety-critical domain by measuring body posture with the help of ML. The high accuracy obtained with training samples from operational staff shows the potential of applying this methodology for measuring operator engagement states in real safety-critical control rooms. This research expands the application of AI in security and automated systems. Previous studies mainly used AI to monitor physical status, such as traffic flow (Formosa et al. 2020; Chen et al. 2021), to strengthen system safety. This study opens up a new perspective on using AI to measure typical and valid operator engagement states, which can effectively inform interventions that enhance operator engagement, leading to better performance and well-being.

Inspired by Chan et al.’s (2022) review of ML application and its satisfactory performance in relation to measuring operator engagement, the evaluation method discussed in this paper can be used in the design scenarios listed below for enhancing operator engagement. Because engagement is a positive and beneficial work experience, these scenarios help broaden the path for enhancing employee well-being (Shimazu et al. 2015) and performance (Christian, Garza, and Slaughter 2011), thereby improving system safety from the perspective of positive psychology. Additionally, further development of any of these opportunities requires full and robust consideration of the ethics of using AI in this work context, as the consequences for the operators could be either positive or negative (Hagendorff 2020). Potential applications include: (1) setting experience goals for control room operators by analysing fluctuations in actual engagement; (2) understanding the root causes of fluctuations in operator engagement; (3) developing effective interventions based on real-time engagement evaluation; (4) developing various user experience design tools (e.g. personas, user journey mapping) using engagement evaluation results; (5) designing patterns of operator working (e.g. working shifts) for maintaining sufficient engagement; (6) developing and implementing policy for supporting engagement.

In addition, this evaluation method has advantages over other similar methods in its high practicality, generalisability, and low cost. There have been some digital approaches for analysing body posture, such as with a motion sensing input device named Kinect (Díaz et al. 2015; Brenner et al. 2021), which is much larger and more expensive than the basic camera employed in this study. Even though the use of Kinect does not require the wearing of additional measuring equipment and could be applied in real control room settings, the relatively large size and high price of Kinect may restrict its application. Compared with other sensors, which usually require physical contact with participants for collecting data, the camera enables collection of data in a non-contact way, alleviating any interference with work. The SVM model in this study was trained on the body posture data and engagement data of motorway operators. The applicability of this trained model to other actual seated safety-critical control tasks is promising because of the similarities in the links between engagement and body posture for motorway operators and for operators in many other kinds of safety-critical control rooms. Notably, rather than building a universal model with strict requirements for data collection specifications (e.g. Nakajima 2004; Zhang et al. 2020), this methodology shows the benefits of building an optimal model for each particular situation (i.e. each operator in this case). This methodology is needed in safety-critical systems because it alleviates the impacts caused by the unavoidable variations in data collection configurations in safety-critical control rooms. Additionally, this methodology can also be used to build a universal model for evaluating operator engagement states if a consistent videoing position is possible.

4.2. Limitations and future work

Although an acceptable size of training dataset has been adopted for enhancing the robustness of the models, uneven distribution of sample data among categories (i.e. in this case among the various engagement states) is still a major challenge to the performance of ML evaluation (Kaur et al. 2019). Referring to the results of this study, the evaluation results of state 2 in video clips 3 and 7, and of states 3 and 4 in video clip 8 are not very satisfactory (see ). However, in and , the evaluation results of states 2, 3, and 4 are satisfactory, because the samples and proportions of states 3 and 4 in video clip 2 are greater than those in video clip 8, and the sample and proportion of state 2 in video clip 4 are greater than those in video clips 3 and 7 (see ). This shows that these evaluation models can distinguish all five engagement states by body posture, given comparatively evenly distributed training data.

The lack of multiple coders for the video-based engagement evaluations and assessments of intercoder reliability could be argued as a methodological weakness. Within qualitative research, there are arguments for and against deriving and reporting intercoder reliability (O’Connor and Joffe 2020), as well as issues to do with resources, feasibility, and cost-benefit. In this study, all instances of coding uncertainty were resolved through consultation between the rater and the lead authors. However, given additional resources, it would have been beneficial to employ dual coders for the assessment of the different engagement states from the videos.

In this study, the assessment of engagement has been based solely on biometric measurements. If other determinants of engagement had been available, a more precise assessment of engagement (including triangulation) might have been possible. The key to successfully recognising engagement from body posture is that body postures for different engagement states differ visibly. However, according to VMOE, one of the main differences between states 3 and 4 is the oral reaction of the operator to a disturbance at work rather than a clearly observable change in body posture. Therefore, for example, in , both the precision and the recall for state 0 are higher than those for states 3 and 4. To improve the robustness of these models, further work could be considered, such as adopting multibiometric techniques with ML (Villa et al. 2020).

Additionally, the limitations of the research environment (and the desire for real-time and non-intrusive data collection) have not enabled this study to include other constructs that might more thoroughly explain engagement and that could be incorporated into the ML model. An example is cognitive workload, which is related to subject performance and psychological state (Debie et al. 2021).

5. Conclusion

This project has developed a methodological framework for automatically evaluating real-time engagement based on body posture in a safety-critical working environment. This methodological framework is based on predefined definitions of engagement states. The body posture of control room operators was estimated using Openpose and OpenCV, and the evaluation model was built using the supervised learning algorithm SVM. Results indicated the feasibility and effectiveness of this engagement evaluation methodology, and its potential for tracking engagement automatically, objectively, and in a timely way in a seated safety-critical context. This provides a firm foundation for using AI to understand real-time operator engagement to design dynamic and individual user experiences, as well as to inform ergonomic design interventions for influencing engagement state.

Acknowledgements

The authors thank all the control room operators and student volunteers for participation. They would also like to thank the reviewers for their helpful suggestions for improving the quality of the article.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Currently, the data in the paper is not available publicly, as it is still in use by the authors for the development of other engagement evaluation methods. However, individuals interested in these data can contact Linyi Jin at [email protected] for access queries.

References

- Alimoglu, M.K., D.B. Sarac, D. Alparslan, A.A. Karakas, and L. Altintas. 2014. “An Observation Tool for Instructor and Student Behaviors to Measure in-Class Learner Engagement: A Validation Study.” Medical Education Online 19 (1): 24037. doi:10.3402/meo.v19.24037.

- Ashwin, T.S., and R.M.R. Guddeti. 2019. “Unobtrusive Behavioral Analysis of Students in Classroom Environment Using Non-Verbal Cues.” IEEE Access 7: 150693–150709. doi:10.1109/ACCESS.2019.2947519.

- Bakker, A.B., W.B. Schaufeli, M.P. Leiter, and T.W. Taris. 2008. “Work Engagement: An Emerging Concept in Occupational Health Psychology.” Work & Stress 22 (3): 187–200. doi:10.1080/02678370802393649.

- Bakker, A.B., and E. Demerouti. 2008. “Towards a Model of Work Engagement.” Career Development International 13 (3): 209–223. doi:10.1108/13620430810870476.

- Battarbee, K., and I. Koskinen. 2005. “Co-Experience: User Experience as Interaction.” CoDesign 1 (1): 5–18. doi:10.1080/15710880412331289917.

- Bazazan, A., I. Dianat, N. Feizollahi, Z. Mombeini, A.M. Shirazi, and H.I. Castellucci. 2019. “Effect of a Posture Correction–Based Intervention on Musculoskeletal Symptoms and Fatigue among Control Room Operators.” Applied Ergonomics 76: 12–19. doi:10.1016/j.apergo.2018.11.008.

- Bergström, J., R. van Winsen, and E. Henriqson. 2015. “On the Rationale of Resilience in the Domain of Safety: A Literature Review.” Reliability Engineering & System Safety 141: 131–141. doi:10.1016/j.ress.2015.03.008.

- Bernhardt, K.A., D. Poltavski, T. Petros, F.R. Ferraro, T. Jorgenson, C. Carlson, P. Drechsel, and C. Iseminger. 2019. “The Effects of Dynamic Workload and Experience on Commercially Available EEG Cognitive State Metrics in a High-Fidelity Air Traffic Control Environment.” Applied Ergonomics 77: 83–91. doi:10.1016/j.apergo.2019.01.008.

- Bernhardt, K.A., and D. Poltavski. 2021. “Symptoms of Convergence and Accommodative Insufficiency Predict Engagement and Cognitive Fatigue during Complex Task Performance with and without Automation.” Applied Ergonomics 90: 103152. doi:10.1016/j.apergo.2020.103152.

- Bourahmoune, K., and T. Amagasa. 2019. “AI-Powered Posture Training: Application of Machine Learning in Sitting Posture Recognition Using the Lifechair Smart Cushion.” IJCAI International Joint Conference on Artificial Intelligence, 2019-August, 5808–5814. doi:10.24963/ijcai.2019/805.

- Braun, V., and V. Clarke. 2006. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3 (2): 77–101. doi:10.1191/1478088706qp063oa.

- Brenner, M., H. Brock, A. Stiegle, and R. Gomez. 2021. “Developing an Engagement-Aware System for the Detection of Unfocused Interaction.” 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), 798–805. IEEE. doi:10.1109/RO-MAN50785.2021.9515353.

- Bustos-López, M., N. Cruz-Ramírez, A. Guerra-Hernández, L.N. Sánchez-Morales, N.A. Cruz-Ramos, and G. Alor-Hernández. 2022. “Wearables for Engagement Detection in Learning Environments: A Review.” Biosensors 12 (7): 509. doi:10.3390/bios12070509.

- Cao, Z., G. Hidalgo, T. Simon, S.E. Wei, and Y. Sheikh. 2021. “OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields.” IEEE Transactions on Pattern Analysis and Machine Intelligence 43 (1): 172–186. doi:10.1109/TPAMI.2019.2929257.

- Chan, V.C., G.B. Ross, A.L. Clouthier, S.L. Fischer, and R.B. Graham. 2022. “The Role of Machine Learning in the Primary Prevention of Work-Related Musculoskeletal Disorders: A Scoping Review.” Applied Ergonomics 98: 103574. doi:10.1016/j.apergo.2021.103574.

- Charles-Edwards, G.D., G.S. Payne, M.O. Leach, and A. Bifone. 2004. “Effects of Residual Single-Quantum Coherences in Intermolecular Multiple-Quantum Coherence Studies.” Journal of Magnetic Resonance 166 (2): 215–227. doi:10.1016/j.jmr.2003.10.017.

- Chen, C., Z. Liu, S. Wan, J. Luan, and Q. Pei. 2021. “Traffic Flow Prediction Based on Deep Learning in Internet of Vehicles.” IEEE Transactions on Intelligent Transportation Systems 22 (6): 3776–3789. doi:10.1109/TITS.2020.3025856.

- Christian, M.S., A.S. Garza, and J.E. Slaughter. 2011. “Work Engagement: A Quantitative Review and Test of Its Relations with Task and Contextual Performance.” Personnel Psychology 64 (1): 89–136. doi:10.1111/j.1744-6570.2010.01203.x.

- D'Mello, S.S., P. Chipman, and A. Graesser. 2007. “Posture as a Predictor of Learner’s Affective Engagement.” Proceedings of the Annual Meeting of the Cognitive Science Society, 29.

- Debie, E., R.F. Rojas, J. Fidock, M. Barlow, K. Kasmarik, S. Anavatti, M. Garratt, and H.A. Abbass. 2021. “Multimodal Fusion for Objective Assessment of Cognitive Workload: A Review.” IEEE Transactions on Cybernetics 51 (3): 1542–1555. doi:10.1109/TCYB.2019.2939399.

- Demerouti, E., and A.B. Bakker. 2008. “The Oldenburg Burnout Inventory: A Good Alternative to Measure Burnout (and Engagement).” Handbook of Stress and Burnout in Health Care 65 (7): 1–25.

- Díaz, C., W. Guerra, M. Hincapié, and G. Moreno. 2015. “Description of a System for Determining the Level of Student Learning from the Posture Evaluation.” 2015 10th Computing Colombian Conference (10CCC), 491–498. IEEE. doi:10.1109/ColumbianCC.2015.7333466.

- Dinghofer, K., and F. Hartung. 2020. “Analysis of Criteria for the Selection of Machine Learning Frameworks.” 2020 International Conference on Computing, Networking and Communications (ICNC), 373–377. IEEE. doi:10.1109/ICNC47757.2020.9049650.

- Ferraro, J.C., M. Mouloua, P.M. Mangos, and G. Matthews. 2022. “Gaming Experience Predicts UAS Operator Performance and Workload in Simulated Search and Rescue Missions.” Ergonomics 65 (12): 1659–1671. doi:10.1080/00140139.2022.2048896.

- Finomore, V., G. Matthews, T. Shaw, and J. Warm. 2009. “Predicting Vigilance: A Fresh Look at an Old Problem.” Ergonomics 52 (7): 791–808. doi:10.1080/00140130802641627.

- Formosa, N., M. Quddus, S. Ison, M. Abdel-Aty, and J. Yuan. 2020. “Predicting Real-Time Traffic Conflicts Using Deep Learning.” Accident Analysis & Prevention 136: 105429. doi:10.1016/j.aap.2019.105429.

- Gao, Q., Y. Wang, F. Song, Z. Li, and X. Dong. 2013. “Mental Workload Measurement for Emergency Operating Procedures in Digital Nuclear Power Plants.” Ergonomics 56 (7): 1070–1085. doi:10.1080/00140139.2013.790483.

- Goldberg, P., Ö. Sümer, K. Stürmer, W. Wagner, R. Göllner, P. Gerjets, E. Kasneci, and U. Trautwein. 2021. “Attentive or Not? Toward a Machine Learning Approach to Assessing Students’ Visible Engagement in Classroom Instruction.” Educational Psychology Review 33 (1): 27–49. doi:10.1007/s10648-019-09514-z.

- Gunes, H., and M. Piccardi. 2009. “Automatic Temporal Segment Detection and Affect Recognition from Face and Body Display.” IEEE Transactions on Systems, Man, and Cybernetics. Part B, Cybernetics 39 (1): 64–84. doi:10.1109/TSMCB.2008.927269.

- Hagendorff, T. 2020. “The Ethics of AI Ethics: An Evaluation of Guidelines.” Minds and Machines 30 (1): 99–120. doi:10.1007/s11023-020-09517-8.

- Hawley, S.J., A. Hamilton-Wright, and S.L. Fischer. 2023. “Detecting Subject-Specific Fatigue-Related Changes in Lifting Kinematics Using a Machine Learning Approach.” Ergonomics 66 (1): 113–124. doi:10.1080/00140139.2022.2061052.

- Helton, W.S., and K. Näswall. 2015. “Short Stress State Questionnaire: Factor Structure and State Change Assessment.” European Journal of Psychological Assessment 31 (1): 20–30. doi:10.1027/1015-5759/a000200.

- Ikuma, L.H., C. Harvey, C.F. Taylor, and C. Handal. 2014. “A Guide for Assessing Control Room Operator Performance Using Speed and Accuracy, Perceived Workload, Situation Awareness, and Eye Tracking.” Journal of Loss Prevention in the Process Industries 32: 454–465. doi:10.1016/j.jlp.2014.11.001.

- Izsó, L., and M. Antaiovits. 1997. “An Observation Method for Analyzing Operators’ Routine Activity in Computerized Control Rooms.” International Journal of Occupational Safety and Ergonomics 3 (3–4): 173–189. doi:10.1080/10803548.1997.11076374.

- Jia, Y., E. Shelhamer, J. Donahue, S. Karayev, J. Long, R. Girshick, S. Guadarrama, and T. Darrell. 2014. “Caffe: Convolutional Architecture for Fast Feature Embedding.” In MM 2014 - Proceedings of the 2014 ACM Conference on Multimedia, Orlando, Florida, 675–678. doi:10.1145/2647868.2654889.

- Jin, L., V. Mitchell, A. May, and M. Sun. 2022. “Analysis of the Tasks of Control Room Operators within Chinese Motorway Control Rooms.” In HCI in Mobility, Transport, and Automotive Systems. HCII 2022. Lecture Notes in Computer Science. Vol. 13335, 526–546. Springer International Publishing. doi:10.1007/978-3-031-04987-3_36.

- Jin, L., V. Mitchell, and A. May. 2020. “Understanding Engagement in the Workplace: Studying Operators in Chinese Traffic Control Rooms.” In Design, User Experience, and Usability. Design for Contemporary Interactive Environments: 9th International Conference, DUXU 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, Proceedings, Part II, 653–665. Springer International Publishing. doi:10.1007/978-3-030-49760-6_46.

- Juang, C.F., C.W. Liang, C.L. Lee, and I.F. Chung. 2012. “Vision-Based Human Body Posture Recognition Using Support Vector Machines.” IEEE International Conference on Awareness Science and Technology, 150–155. doi:10.1109/iCAwST.2012.6469605.

- Kahn, W.A. 1990. “Psychological Conditions of Personal Engagement and Disengagement at Work.” Academy of Management Journal 33 (4): 692–724. doi:10.5465/256287.

- Kaur, A., B. Ghosh, N.D. Singh, and A. Dhall. 2019. “Domain Adaptation Based Topic Modeling Techniques for Engagement Estimation in the Wild.” 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), 1–6. IEEE. doi:10.1109/FG.2019.8756511.

- Khalifa, O.O., and K.K. Htike. 2013. “Human Posture Recognition and Classification.” Proceedings – 2013 International Conference on Computer, Electrical and Electronics Engineering: ‘Research Makes a Difference’, ICCEEE 2013, 40–43. IEEE. doi:10.1109/ICCEEE.2013.6633905.

- Klein, R., and T. Celik. 2017. “The Wits Intelligent Teaching System: Detecting Student Engagement during Lectures Using Convolutional Neural Networks.” 2017 IEEE International Conference on Image Processing (ICIP), 2856–2860. IEEE. doi:10.1109/ICIP.2017.8296804.

- Lau, N., G.A. Jamieson, and G. Skraaning Jr. 2016a. “Empirical Evaluation of the Process Overview Measure for Assessing Situation Awareness in Process Plants.” Ergonomics 59 (3): 393–408. doi:10.1080/00140139.2015.1080310.

- Lau, N., G.A. Jamieson, and G. Skraaning Jr. 2016b. “Situation Awareness Acquired from Monitoring Process Plants – The Process Overview Concept and Measure.” Ergonomics 59 (7): 976–988. doi:10.1080/00140139.2015.1100329.

- Law, E.L.C., V. Roto, M. Hassenzahl, A.P. Vermeeren, and J. Kort. 2009. “Understanding, Scoping and Defining User Experience.” Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 719–728. New York, NY: ACM. doi:10.1145/1518701.1518813.

- Fwa, H.L., and L. Marshall. 2018. “Modeling Engagement of Programming Students Using Unsupervised Machine Learning Technique.” GSTF Journal on Computing 6 (1): 1. doi:10.5176/2251-3043_6.1.114.

- Li, J., M. Ran, W. Tong, Y. Cao, L. Cai, X. Ou, and T. Yang. 2022. “Transfer-Learning-Based SVM Method for Seismic Phase Picking with Insufficient Training Samples.” IEEE Geoscience and Remote Sensing Letters 19: 1–5. doi:10.1109/LGRS.2022.3160588.

- Liu, G., D. Zhou, H. Xu, and C. Mei. 2010. “Model Optimization of SVM for a Fermentation Soft Sensor.” Expert Systems with Applications 37 (4): 2708–2713. doi:10.1016/j.eswa.2009.08.008.

- Liu, Y., Q. Gao, and M. Wu. 2023. “Domain- and Task-Analytic Workload (DTAW) Method: A Methodology for Predicting Mental Workload during Severe Accidents in Nuclear Power Plants.” Ergonomics 66 (2): 261–290. doi:10.1080/00140139.2022.2079727.

- Matthews, G., S.E. Campbell, S. Falconer, L.A. Joyner, J. Huggins, K. Gilliland, R. Grier, and J.S. Warm. 2002. “Fundamental Dimensions of Subjective State in Performance Settings: Task Engagement, Distress, and Worry.” Emotion 2 (4): 315–340. doi:10.1037/1528-3542.2.4.315.

- Matthews, G., J. Szalma, A.R. Panganiban, C. Neubauer, and J.S. Warm. 2013. “Profiling Task Stress with the Dundee Stress State Questionnaire.” Psychology of Stress: New Research 1: 49–90.

- Mijović, P., V. Ković, M. De Vos, I. Mačužić, P. Todorović, B. Jeremić, and I. Gligorijević. 2017. “Towards Continuous and Real-Time Attention Monitoring at Work: Reaction Time versus Brain Response.” Ergonomics 60 (2): 241–254. doi:10.1080/00140139.2016.1142121.

- Nakajima, C. 2004. “Posture Recognition of Nuclear Power Plant Operators by Supervised Learning.” 2004 International Conference on Image Processing, 2004. ICIP ’04, 877–880. IEEE. doi:10.1109/ICIP.2004.1419439.

- Neigel, A.R., V.L. Claypoole, S.L. Smith, G.E. Waldfogle, N.W. Fraulini, G.M. Hancock, W.S. Helton, and J.L. Szalma. 2020. “Engaging the Human Operator: A Review of the Theoretical Support for the Vigilance Decrement and a Discussion of Practical Applications.” Theoretical Issues in Ergonomics Science 21 (2): 239–258. doi:10.1080/1463922X.2019.1682712.

- O’Connor, C., and H. Joffe. 2020. “Intercoder Reliability in Qualitative Research: Debates and Practical Guidelines.” International Journal of Qualitative Methods 19: 160940691989922. doi:10.1177/1609406919899220.

- O’Malley, K.J., B.J. Moran, P. Haidet, C.L. Seidel, V. Schneider, R.O. Morgan, P.A. Kelly, and B. Richards. 2003. “Validation of an Observation Instrument for Measuring Student Engagement in Health Professions Settings.” Evaluation & the Health Professions 26 (1): 86–103. doi:10.1177/0163278702250093.

- Rajavenkatanarayanan, A., A.R. Babu, K. Tsiakas, and F. Makedon. 2018. “Monitoring Task Engagement Using Facial Expressions and Body Postures.” In Proceedings of the 3rd International Workshop on Interactive and Spatial Computing, 103–108. doi:10.1145/3191801.3191816.

- Ran, X., C. Wang, Y. Xiao, X. Gao, Z. Zhu, and B. Chen. 2021. “A Portable Sitting Posture Monitoring System Based on a Pressure Sensor Array and Machine Learning.” Sensors and Actuators A: Physical 331: 112900. doi:10.1016/j.sna.2021.112900.

- Reinerman, L., J. Mercado, J.L. Szalma, and P.A. Hancock. 2020. “Understanding Individualistic Response Patterns When Assessing Expert Operators on Nuclear Power Plant Control Tasks.” Ergonomics 63 (4): 440–460. doi:10.1080/00140139.2019.1677946.

- Reinerman-Jones, L., G. Matthews, and J.E. Mercado. 2016. “Detection Tasks in Nuclear Power Plant Operation: Vigilance Decrement and Physiological Workload Monitoring.” Safety Science 88: 97–107. doi:10.1016/j.ssci.2016.05.002.

- Ren, W., O. Ma, H. Ji, and X. Liu. 2020. “Human Posture Recognition Using a Hybrid of Fuzzy Logic and Machine Learning Approaches.” IEEE Access 8: 135628–135639. doi:10.1109/ACCESS.2020.3011697.

- Roh, J., H.J. Park, K.J. Lee, J. Hyeong, S. Kim, and B. Lee. 2018. “Sitting Posture Monitoring System Based on a Low-Cost Load Cell Using Machine Learning.” Sensors 18 (1): 208. doi:10.3390/s18010208.

- Roto, V., P. Palanque, and H. Karvonen. 2019. “Engaging Automation at Work – A Literature Review.” In IFIP Advances in Information and Communication Technology, 158–172. Springer International Publishing. doi:10.1007/978-3-030-05297-3_11.

- Sanghvi, J., G. Castellano, I. Leite, A. Pereira, P.W. McOwan, and A. Paiva. 2011. “Automatic Analysis of Affective Postures and Body Motion to Detect Engagement with a Game Companion.” In Proceedings of the 6th International Conference on Human-Robot Interaction, 305–312. doi:10.1145/1957656.1957781.

- Sarikan, S.S., and A.M. Ozbayoglu. 2018. “Anomaly Detection in Vehicle Traffic with Image Processing and Machine Learning.” Procedia Computer Science 140: 64–69. doi:10.1016/j.procs.2018.10.293.

- Savioja, P., M. Liinasuo, and H. Koskinen. 2014. “User Experience: Does It Matter in Complex Systems?” Cognition, Technology & Work 16 (4): 429–449. doi:10.1007/s10111-013-0271-x.

- Schaeffer, J., and R. Lindell. 2016. “Emotions in Design: Considering User Experience for Tangible and Ambient Interaction in Control Rooms.” Information Design Journal 22 (1): 19–31. doi:10.1075/idj.22.1.03sch.

- Schaufeli, W.B., M. Salanova, V. González-Romá, and A.B. Bakker. 2002. “The Measurement of Engagement and Burnout: A Two Sample Confirmatory Factor Analytic Approach.” Journal of Happiness Studies 3 (1): 71–92. doi:10.1023/A:1015630930326.

- Schaufeli, W.B., A.B. Bakker, and M. Salanova. 2006. “The Measurement of Work Engagement with a Short Questionnaire: A Cross-National Study.” Educational and Psychological Measurement 66 (4): 701–716. doi:10.1177/0013164405282471.

- Seligman, M.E. 2002. “Positive Psychology, Positive Prevention, and Positive Therapy.” In Handbook of Positive Psychology, 3–9. New York, NY: Oxford University Press.

- Shi, C., and L. Rothrock. 2022. “Using Eye Movements to Evaluate the Effectiveness of the Situation Awareness Rating Technique Scale in Measuring Situation Awareness for Smart Manufacturing.” Ergonomics 1–9. doi:10.1080/00140139.2022.2132299.

- Shimazu, A., W.B. Schaufeli, K. Kamiyama, and N. Kawakami. 2015. “Workaholism vs. Work Engagement: The Two Different Predictors of Future Well-Being and Performance.” International Journal of Behavioral Medicine 22 (1): 18–23. doi:10.1007/s12529-014-9410-x.

- Sinaga, K.P., and M.S. Yang. 2020. “Unsupervised K-Means Clustering Algorithm.” IEEE Access 8: 80716–80727. doi:10.1109/ACCESS.2020.2988796.

- Smith, G.J. 2002. “Behind the Screens: Examining Constructions of Deviance and Informal Practices among CCTV Control Room Operators in the UK.” Surveillance & Society 2 (2/3): 376–395. doi:10.24908/ss.v2i2/3.3384.

- Smith, P., A. Blandford, and J. Back. 2009. “Questioning, Exploring, Narrating and Playing in the Control Room to Maintain System Safety.” Cognition, Technology & Work 11 (4): 279–291. doi:10.1007/s10111-008-0116-1.

- Soane, E., C. Truss, K. Alfes, A. Shantz, C. Rees, and M. Gatenby. 2012. “Development and Application of a New Measure of Employee Engagement: The ISA Engagement Scale.” Human Resource Development International 15 (5): 529–547. doi:10.1080/13678868.2012.726542.

- Stanton, N.A., A.P. Roberts, K.A. Pope, and D. Fay. 2022. “The Quest for the Ring: A Case Study of a New Submarine Control Room Configuration.” Ergonomics 65 (3): 384–406. doi:10.1080/00140139.2021.1961857.

- Tavakkol, R., E. Kavi, S. Hassanipour, H. Rabiei, and M. Malakoutikhah. 2020. “The Global Prevalence of Musculoskeletal Disorders among Operating Room Personnel: A Systematic Review and Meta-Analysis.” Clinical Epidemiology and Global Health 8 (4): 1053–1061. doi:10.1016/j.cegh.2020.03.019.

- Villa, M., M. Gofman, S. Mitra, A. Almadan, A. Krishnan, and A. Rattani. 2020. “A Survey of Biometric and Machine Learning Methods for Tracking Students’ Attention and Engagement.” Proceedings – 19th IEEE International Conference on Machine Learning and Applications, ICMLA 2020, 948–955. doi:10.1109/ICMLA51294.2020.00154.

- Wood, B.K., R.L. Hojnoski, S.D. Laracy, and C.L. Olson. 2016. “Comparison of Observational Methods and Their Relation to Ratings of Engagement in Young Children.” Topics in Early Childhood Special Education 35 (4): 211–222. doi:10.1177/0271121414565911.

- Wright, J.L., J.Y. Chen, and M.J. Barnes. 2018. “Human–Automation Interaction for Multiple Robot Control: The Effect of Varying Automation Assistance and Individual Differences on Operator Performance.” Ergonomics 61 (8): 1033–1045. doi:10.1080/00140139.2018.1441449.

- Zhang, X., S. Mahadevan, N. Lau, and M. B. Weinger. 2020. “Multi-Source Information Fusion to Assess Control Room Operator Performance.” Reliability Engineering & System Safety 194: 106287. doi:10.1016/j.ress.2018.10.012.

- Zhang, B., Z. Wang, W. Wang, Z. Wang, H. Liang, and D. Liu. 2020. “Security Assessment of Intelligent Distribution Transformer Terminal Unit Based on RBF-SVM.” 2020 IEEE 4th Conference on Energy Internet and Energy System Integration: Connecting the Grids towards a Low-Carbon High-Efficiency Energy System, EI2 2020, 4342–4346. doi:10.1109/EI250167.2020.9346959.

- Zhao, Z.D., Y.Y. Lou, J.H. Ni, and J. Zhang. 2009. “RBF-SVM and Its Application on Reliability Evaluation of Electric Power System Communication Network.” Proceedings of the 2009 International Conference on Machine Learning and Cybernetics, 1188–1193. IEEE. doi:10.1109/ICMLC.2009.5212365.

Appendix A:

VMOE rating rubric

Table A1. Video-based measurement for operator engagement.

Appendix B:

VMOE instruction manual

According to the VMOE instruction manual, each video recording is divided into segments, and each segment is considered to show one particular state of engagement. Each segment is assigned an engagement state score according to the VMOE rating rubric. The duration of each segment is indicated by timestamps, which record the time as h:min:s. The observer inserts a timestamp whenever they judge that the engagement state has changed.
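The segmentation procedure above can be sketched in code. The following is a minimal illustration, assuming the observer's annotations are stored as chronological pairs of an h:min:s timestamp and the state score that starts at that timestamp. The names `parse_timestamp`, `Segment`, and `build_segments`, as well as the example state values, are hypothetical and are not part of the VMOE manual.

```python
from dataclasses import dataclass


def parse_timestamp(ts: str) -> int:
    """Convert an 'h:min:s' timestamp into seconds from the start of the video."""
    hours, minutes, seconds = (int(part) for part in ts.split(":"))
    return hours * 3600 + minutes * 60 + seconds


@dataclass
class Segment:
    start_s: int  # segment start, in seconds
    end_s: int    # segment end, in seconds
    state: int    # engagement state score given by the observer

    @property
    def duration_s(self) -> int:
        return self.end_s - self.start_s


def build_segments(marks, video_end):
    """Turn chronological (timestamp, state) marks into consecutive segments.

    Assumption: each mark opens a new segment that lasts until the next
    mark, and the last segment lasts until `video_end`.
    """
    segments = []
    for i, (ts, state) in enumerate(marks):
        start = parse_timestamp(ts)
        next_ts = marks[i + 1][0] if i + 1 < len(marks) else video_end
        end = parse_timestamp(next_ts)
        if end <= start:
            raise ValueError(f"timestamps must be strictly increasing: {ts}")
        segments.append(Segment(start, end, state))
    return segments


# Hypothetical annotation of a 10 min recording.
example = build_segments(
    [("0:00:00", 2), ("0:04:30", 1), ("0:05:10", 2)], video_end="0:10:00"
)
```

Storing only the change points mirrors the manual, in which a timestamp is inserted only when the observer believes the state has changed.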

Notably, the study sets a 3 min duration criterion for segments at State 0 or State 1. If the operator continuously shows State 0 status for 3 min, the segment is defined as State 0; if the operator leaves this status within 3 min, the segment is defined as State 1. Furthermore, the analysis scope covers the target tasks that operators complete using the computer, the landline telephone, and the large TV wall, whereas tasks completed using a cell phone or involving paper documents are outside the research scope. In addition, only operators who are seated at their workplace are evaluated. Finally, the observer is encouraged to acquire background knowledge of the control room and its operators in advance, becoming well acquainted with the target tasks, operator personas, etc.
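The duration criterion and the scope restrictions can be expressed as two small checks. This is a sketch under stated assumptions rather than the study's implementation: the function names, the tool labels, and the treatment of a segment lasting exactly 3 min as State 0 are all illustrative choices not specified in the manual.

```python
STATE0_MIN_DURATION_S = 3 * 60  # 3 min criterion from the manual

# Tools named in the manual as inside the analysis scope (labels are hypothetical).
IN_SCOPE_TOOLS = {"computer", "landline_telephone", "tv_wall"}


def resolve_low_state(duration_s: int) -> int:
    """Score a segment in which the operator shows State 0 status.

    Returns 0 if the status persisted for at least 3 min, otherwise 1.
    Assumption: a duration of exactly 3 min counts as State 0.
    """
    if duration_s < 0:
        raise ValueError("duration must be non-negative")
    return 0 if duration_s >= STATE0_MIN_DURATION_S else 1


def is_in_scope(tool: str, seated: bool) -> bool:
    """Return True only for seated operators using an in-scope tool."""
    return seated and tool in IN_SCOPE_TOOLS


print(resolve_low_state(200))            # sustained beyond 3 min
print(resolve_low_state(45))             # ended within 3 min
print(is_in_scope("cell_phone", True))   # cell phone tasks are excluded
```

Keeping the threshold in a named constant makes the boundary assumption explicit and easy to change if the manual is interpreted differently.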