Abstract

This study focuses on computer-based formative assessment for supporting problem solving and reasoning in mathematics. To assist students who encountered difficulties, the software suggested descriptions of the difficulty (diagnoses) that the students could choose from. Thereafter, the software provided metacognitive and heuristic feedback as appropriate. The findings provide insight into how formative assessment can be designed to support students during problem solving and indicate that diagnosis and feedback could help promote students’ creative reasoning; in particular, the feedback guided students in justifying, monitoring, and controlling their solution method.

1. Introduction

Learning mathematics requires students to tackle mathematics problems by creating (parts of) the solution method themselves, not merely by knowing definitions and procedures and applying them when necessary. Nevertheless, various studies have shown that many students have serious difficulties in solving mathematics problems (Verschaffel et al., 2020) and that superficial solution strategies are common among students (Palm, 2008; Sidenvall et al., 2015). Reviewing the literature on the effects of traditional classroom practice, Hiebert (2003) concluded that students have few opportunities to engage in more complex processes (e.g. reasoning, communication, conjecturing, justifying) to find solution methods by themselves, and instead mostly learn facts and simple procedures (e.g. calculation, labelling, definitions). Similar results were found in a Swedish study in which 200 mathematics classrooms were observed (Boesen et al., 2014). As a result, students encounter difficulties in problem solving and in tasks that require them to create mathematical reasoning (Bergqvist et al., 2008).

Research on mathematical problem solving has advanced significantly in recent decades, but more research is needed on how students can be supported in carrying out this complex activity (Lester & Cai, 2016; Liljedahl & Cai, 2021). One such form of support is formative assessment during problem solving, since formative assessment is considered one of the promising ways to improve teaching and learning (Black & Wiliam, 2009; Stiggins, 2006; Wiliam & Thompson, 2008). Formative assessment includes feedback (i.e. information about what to do next) that is provided to the learner during an instructional sequence or learning activity, based on a diagnosis (i.e. information about what is needed), for the purpose of supporting learning (Wiliam & Thompson, 2017). According to recent reviews of formative assessment, feedback plays a vital role in making formative assessment effective in supporting students’ learning outcomes (Brookhart, 2018). In problem solving, feedback should therefore facilitate the process without providing the method or procedure for solving the task, because students learn mathematics better when they have to struggle and create their own solutions than when they are taught how to apply ready-made methods (B. Jonsson et al., 2014). Furthermore, based on their meta-analysis of studies on the effectiveness of feedback in computer-based assessment environments, Van der Kleij et al. (2015) suggested for future research that ‘digital learning environments could become even more powerful if the feedback included a diagnostic component’ (p. 504). Given these gaps, this paper investigates how computer-based formative assessment can support students’ problem solving and reasoning.

2. Research framework

2.1. Problem solving in mathematics

Problem solving is defined as ‘engaging in a task for which the solution method is not known in advance’ (NCTM, 2000, p. 51). An implication of this definition is that mathematical tasks are of two types: problems and non-problems, the latter often called routine tasks. Similar definitions of problem solving sometimes add that the task must be a challenge (Schoenfeld, 1985) or that it should require exploration (Niss, 2003). Lester and Cai (2016) stress the importance in problem solving of fostering ‘students’ ideas and asking them to clarify and justify their ideas orally and in writing’.

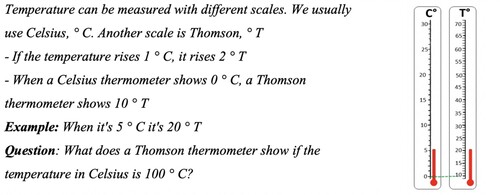

Various models of the problem-solving process are documented in the research literature (see Figure ). For example, Polya (1945) suggested that the problem-solving process involves: (i) understanding the problem; (ii) developing a plan; (iii) carrying out the plan; and (iv) looking back. Similarly, other researchers developed models of the problem-solving process, mostly for teaching purposes (e.g. Mason et al., 1982; Schoenfeld, 1992; Wilson et al., 1993; Yimer & Ellerton, 2010). Some researchers provided problem-solving models for the purpose of investigating, analysing, and explaining mathematical behaviour (e.g. Carlson & Bloom, 2005; Schoenfeld, 1985). For example, Carlson and Bloom (2005) developed a multidimensional problem-solving framework with four phases: orientation, planning, executing, and checking. In this framework, problem solving is considered cyclical and recursive (Carlson & Bloom, 2005).

Figure 1. Different phase models of problem-solving processes (Rott et al., 2021).

Schoenfeld (1985) describes four categories of knowledge that are required to be a good problem solver or to solve a problem. The cause of success or failure in a problem-solving attempt lies in one or more of these four categories (Schoenfeld, 2014). (I) Beliefs are students’ personal views on mathematics and are very important for how a student tackles novel tasks (Schoenfeld, 1985). (II) Metacognitive monitoring and control include evaluating progress and initiating effective searches for strategies and resources to select and use. These are the executive processes of reflecting on and controlling one's own thinking, and they are essential for the self-regulation needed to make wise decisions about how to use the available knowledge (e.g. Schraw et al., 2006). (III) Heuristics are general rules of thumb for mathematical problem solving, and using them involves selecting and implementing suitable ones. In other words, they are the strategies and techniques needed to solve the problem. They can be methods of solving tasks or the order of procedures needed to solve a task, and there are also more specific heuristic principles (e.g. ‘draw a figure’, ‘try a few examples’, ‘try to solve a simpler task’; see Schoenfeld, 1985). (IV) Resources are the student’s previously acquired knowledge that is necessary to solve a particular task. Students with good control try to make the most of their resources and heuristic knowledge so that they can solve novel tasks more efficiently.

Furthermore, Schoenfeld (1985) described six possible phases of the problem-solving process: (1) reading the task, (2) analysing the task, (3) exploring methods, (4) making a plan, (5) implementing the plan, and (6) verifying the result. Not all of these phases need to be present during problem solving. For instance, analysing a given task may be enough to plan how to proceed, in which case the exploration phase is not needed (Schoenfeld, 1985).

The focus of this paper is on how students can be supported during the phases of problem solving, with an emphasis on computer-based formative assessment as one approach to providing such support. To this end, Polya’s four phases and Schoenfeld’s six phases described above were modified into five phases representing problematic situations in which students might get stuck during problem solving. For example, Schoenfeld’s reading phase was modified into ‘Interpretation’ of the task, because students not only read the task but also need to interpret its meaning. In addition, Schoenfeld’s analysis and exploration phases were merged into one phase, ‘Exploration/Analysis’, because they do not necessarily occur in a fixed sequence. Schoenfeld’s (1985) planning phase was modified into the ‘Hypothesis’ phase, inspired by Lampert (1990), because students need to hypothesize a solution method and justify their hypothesis before actually applying the method. Accordingly, the ‘Justification’ and ‘Application’ phases were adopted instead of Schoenfeld’s implementation and verification phases. The resulting five phases, (1) Interpretation, (2) Exploration/Analysis, (3) Hypothesis, (4) Justification, and (5) Application, were used in the design of the diagnostic process for identifying problematic situations in order to support students who have difficulties in solving a task. Interpretation includes any actions involved in reading the task and understanding its meaning, that is, what the task is asking for. As the student clarifies the meaning of the task, the Exploration/Analysis phase takes place, which includes actions to break the task down into its parts or to search for relevant data that can be used later in the problem-solving process. During the Hypothesis phase, the student constructs a method that has the potential to solve the problem. The student then attempts to justify the hypothesis, and once it has been justified, the method is applied (Application).
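To make the modification concrete, the following Python sketch encodes the five phases and records which of Schoenfeld’s six phases each one draws on. The names `Phase`, `DERIVED_FROM` and `uncovered_schoenfeld_phases` are hypothetical illustrations, not part of the study’s software. Because Justification and Application were adopted jointly in place of implementation and verification, the sketch maps both to the same pair rather than asserting a one-to-one correspondence.

```python
from enum import Enum


class Phase(Enum):
    """The five diagnosis phases used in the study, in problem-solving order."""
    INTERPRETATION = 1
    EXPLORATION_ANALYSIS = 2
    HYPOTHESIS = 3
    JUSTIFICATION = 4
    APPLICATION = 5


# Schoenfeld's (1985) six phases, as listed in section 2.1.
SCHOENFELD_PHASES = (
    "reading", "analysing", "exploring",
    "making a plan", "implementing the plan", "verifying the result",
)

# Hypothetical encoding of the modification described above. Justification and
# Application jointly replace implementation and verification, so both entries
# point at the same pair instead of claiming a one-to-one correspondence.
DERIVED_FROM = {
    Phase.INTERPRETATION: ("reading",),
    Phase.EXPLORATION_ANALYSIS: ("analysing", "exploring"),
    Phase.HYPOTHESIS: ("making a plan",),
    Phase.JUSTIFICATION: ("implementing the plan", "verifying the result"),
    Phase.APPLICATION: ("implementing the plan", "verifying the result"),
}


def uncovered_schoenfeld_phases():
    """Return the Schoenfeld phases that no study phase draws on."""
    used = {p for sources in DERIVED_FROM.values() for p in sources}
    return [p for p in SCHOENFELD_PHASES if p not in used]
```

An empty result from `uncovered_schoenfeld_phases()` confirms that every one of Schoenfeld’s six phases is represented somewhere in the five-phase scheme.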

In addition, the metacognitive and heuristic components of problem solving described above were used to design suitable feedback. Feedback on beliefs and resources was excluded. Beliefs concern one’s personal view of mathematics, which develops over months and years and might therefore be difficult to change and measure in the short intervention of this study. Resource feedback is always task-specific and concerns basic knowledge and tools (e.g. procedures, facts, definitions, concepts), whereas the aim of this study was to suggest and evaluate general rather than task-specific feedback; resource feedback was therefore excluded.

2.2. Role of formative assessment & computer-assisted formative assessment

Formative assessment involves collecting information on students’ achievement and then using this information to decide how to provide feedback or instruction that supports students’ learning (Bennett, 2011; Black & Wiliam, 1998, 2009; Wiliam & Thompson, 2008). Among the aspects of formative assessment (which also include, e.g., peer assessment), two key aspects are emphasized in the present study: students’ involvement in their own learning through self-assessment, and feedback from an agent (e.g. a teacher or a computer) that advances students’ learning. This is because self-assessment motivates students to take responsibility for their own learning (Bennett, 2011), and ‘self-regulated students are meta-cognitively, socially, motivationally, and behaviourally active in problem solving processes’ (Clark, 2012, p. 216). A recent meta-analysis indicated that formative assessment interventions are most effective when they focus on student-initiated self-assessment (a medium-sized effect, d = .61; H. Lee et al., 2020). For example, students’ reflection on the quality of their own work and the related revision is a form of self-assessment (see Andrade & Valtcheva, 2009). Formative feedback, in turn, is ‘information communicated to the learner that is intended to modify his or her thinking or behaviour for the purpose of improving learning’ (Shute, 2008), and the purpose of providing formative feedback is to involve students in metacognitive strategies (e.g. monitoring, reflecting) (Clark, 2012). Computer-assisted formative assessment has attracted steadily growing interest in educational research (Attali & van der Kleij, 2017; Sullivan et al., 2021), especially for providing feedback. Hattie and Timperley’s (2007) meta-analysis showed that computer-assisted feedback can provide cues or reinforcement for improving learning and that it is one of the most effective forms of feedback.

In this study, the students solved tasks with the help of computer-assisted formative assessment that encouraged them to assess their own difficulties, and they received suitable feedback from the computer depending on their diagnoses. Feedback was considered a response to the students’ actions that prompted them to think and reflect by themselves and guided them to continue their reasoning towards solving the task. Students first received metacognitive feedback (MF) (Kramarski & Zeichner, 2001), which consisted of self-regulating statements to foster their thinking and their monitoring and control while working on the task. The purpose was to give the students responsibility for solving the task by creating their own solution method, that is, the ‘devolution of the problem’ (see Brousseau, 1997). Then, if necessary, students received heuristic feedback (HF), which consisted of suggestions about what to do to solve the task, for example, drawing a figure of the task or dividing the task into smaller parts. The idea was that the students were not given any template of a solution method; rather, they were given the responsibility to construct their own solution method with the support of MF and HF. That is, general MF based on the students’ own reflection was provided first, and somewhat more specific HF was provided only if MF was insufficient. In this way, a student who succeeds in constructing the solution method (or at least part of it) with MF retains more responsibility for solving the task.

2.3. Research questions

The aim of this study is to investigate how computer-based formative assessment can support students’ problem solving when they encounter difficulties. The difficulties were diagnosed according to the five phases (i.e. Interpretation, Exploration/Analysis, Hypothesis, Justification, and Application), and for each diagnosis two types of feedback (MF and HF) were available. The specific research questions were:

What kinds of problem-solving difficulties can students overcome by using MF or HF?

In what ways are students helped or not helped by the feedback?

3. Method

3.1. Computer environment: task, diagnosis, and feedback design

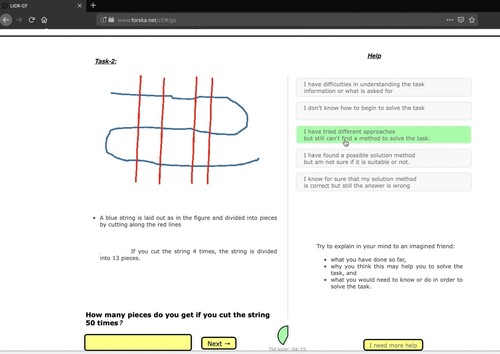

Website design: A website was constructed specifically for this study. After logging into the system, the students received instructions on the first page, which outlined the procedure for diagnosing difficulties and getting feedback during problem solving. At the bottom of the page, a ‘start’ button began the test. The test page was divided into two segments (see Figure ). The left side contained a task and the answer box, while the right side contained five descriptions of difficulties (diagnoses) the students could choose from and space for the corresponding feedback. When the students clicked on a diagnosis, they received MF. At the bottom of the MF, there was a ‘more help’ option for getting HF. Thus, the students could decide whether they needed HF to solve the task. A countdown clock was shown at the bottom of the page, and students had 10 min per task. If their answer was wrong, they could try the same task again as many times as they liked within the 10-minute limit. The diagnoses and feedback were the same for all tasks (see appendix). The components are presented below, starting with the task.
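The interaction flow on the test page can be sketched as a small state model. This is a hypothetical reconstruction in Python, not the website’s actual code: the class `TaskPage`, its methods, and the whitespace-trimmed string comparison used to check answers are assumptions. Only the order (diagnosis, then MF, then optional HF), the 10-minute limit, the right/wrong feedback and the retries come from the description above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

TIME_LIMIT_S = 10 * 60  # 10 minutes per task

DIAGNOSES = (
    "Interpretation", "Exploration/Analysis", "Hypothesis",
    "Justification", "Application",
)


@dataclass
class TaskPage:
    """Hypothetical model of one test page: task and answer box on the left,
    five diagnoses with MF and an optional 'more help' HF on the right."""
    correct_answer: str
    mf: Dict[str, str]  # diagnosis -> metacognitive feedback (same for all tasks)
    hf: Dict[str, str]  # diagnosis -> heuristic feedback (same for all tasks)
    started_at: float = 0.0
    log: List[Tuple[str, str, float]] = field(default_factory=list)
    current: Optional[str] = field(default=None, init=False)

    def _check_time(self, now: float) -> None:
        if now - self.started_at >= TIME_LIMIT_S:
            raise TimeoutError("the 10-minute limit for this task has passed")

    def choose_diagnosis(self, diagnosis: str, now: float) -> str:
        """Clicking a diagnosis always shows its MF first."""
        self._check_time(now)
        self.current = diagnosis
        self.log.append(("MF", diagnosis, now))
        return self.mf[diagnosis]

    def more_help(self, now: float) -> str:
        """The 'more help' option under an MF reveals the HF for the same diagnosis."""
        self._check_time(now)
        if self.current is None:
            raise RuntimeError("choose a diagnosis before asking for more help")
        self.log.append(("HF", self.current, now))
        return self.hf[self.current]

    def submit(self, answer: str, now: float) -> bool:
        """Corrective feedback only (right/wrong); wrong answers may be retried."""
        self._check_time(now)
        self.log.append(("answer", answer, now))
        return answer.strip() == self.correct_answer
```

Timestamps are passed in explicitly (`now`, in seconds since the task started) so that the time limit can be checked without a real clock; a live implementation would more likely read a monotonic clock instead.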

Task design: The tasks were designed so that the solution procedure would not be known to the students in advance and they had to devise their own solution method. Ten different kinds of tasks were designed to be solved using different kinds of methods, so that the students could construct a unique method for each task.

Diagnosis: The diagnoses were statements describing why the students could not continue their attempts to solve the task. The five diagnosis statements were developed from expectations about the problem-solving phases in which students might get stuck (see section 2.1).

Feedback during the problem-solving phase: Based on the diagnosis, students received MF (Figure ) and HF (Figure ). MF, provided first, consisted of general (not task-specific) prompts intended to initiate monitoring and control, in other words, self-regulation. If this was not sufficient, HF was provided in the form of general suggestions for solution strategies.

Feedback after solving the task: After providing an answer, students received corrective feedback indicating whether the answer was right or wrong.

3.2. Method of data collection

Participants: The participants were 17 undergraduate students enrolled in the mathematics course of the basic year (basår in Swedish) programme at a public university in Sweden. The basic year is a one-year programme for students who want to study science or technology at university but do not have full entrance qualifications; it offers complementary high school courses in mathematics, physics, and chemistry. Recruitment took place through an email invitation from the course coordinator and through a direct approach in which the author visited the classroom to present the research and recruit participants. An invitation was sent to 86 students (all students enrolled in the course), of whom 17 accepted. However, one student did not need any feedback from the computer, one student quit the test after working on three tasks, and one withdrew from the study. Thus, the analysis drew on the remaining 14 students. The participants were 20–25 years old, and 42.9% were male. Data were collected on a one-to-one basis, one student at a time. A pilot study with an additional five participants was conducted beforehand. The pilot results were used to verify the intelligibility of the tasks, the specificity of the feedback, the semi-structured interview, the functionality of the web application, and the optimal difficulty of the problems. All participants received three movie tickets (worth 36 euros) as an incentive for participating.

TAP: Think-aloud protocols (TAP) with audio recording were used to collect data. TAP was chosen because the reasoning process while solving a task ‘may contain more data than an ordinary written solution, which may be supplemented by, for example, think-aloud protocols and interviews’ (Lithner, 2008, p. 257). Students were therefore invited to solve the mathematical tasks while thinking aloud, and they were only prompted with ‘please, talk aloud’ when they had been silent for 5–10 s. Additionally, to capture an accurate representation of the participants’ problem-solving activities, all of the participants’ writing, drawing, and utterances were captured by a smart pen (i.e. an electronic pen) that digitally records both script and speech.

Observation & Post-interview: Along with the audio and screen recordings, each student’s problem-solving method was observed using an observation sheet (see appendix) for triangulation of the data. Each session was conducted one-to-one, with the author sitting beside the student throughout. The computer screen was recorded for triangulation of the observations. Moreover, a semi-structured interview (see appendix) was conducted with each participant after they had completed the tasks, in order to gather data about their reasoning for choosing certain feedback, whether and how the feedback was helpful, and their opinion of the feedback. The interview was conducted by the author and recorded with both an audio recorder and the smart pen.

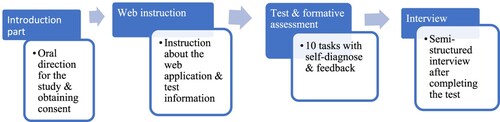

3.3. Study procedure

The study process is described in Figure . The participants sat in a laboratory setting with a personal computer. Instructions for the study were provided: the author encouraged the participants to use the feedback, explained the rules (e.g. a calculator and paper and pen could be used, mobile phones could not), and asked for their informed consent to participate. Demographic data were collected. The author then introduced the study’s web application. First, participants logged in with their names and university email addresses. They then received instructions on using the web application and information about the test, and they could start the test when they were ready.

3.4. Ethical considerations

The Swedish Research Council’s principles and guidelines (Footnote 1) were followed, and written informed consent was obtained from the students in accordance with the Declaration of Helsinki. The data collection and analysis were carried out by the author and were discussed with and read by the other project members.

4. Method of analysis

To answer the research questions regarding how computer-based formative assessment can support students’ problem solving (or reasoning), all data from the recordings were analysed task by task for each student. The analysis was performed following the two steps below:

4.1. Step 1: Identifying problematic situations and students’ interaction with the computer

As the first step, all problematic situations were noted for each task and categorized according to the student’s diagnosis (see Table ). A problematic situation was defined as any situation in which a student interrupted their problem-solving process and asked the computer for help by choosing one of the suggested diagnoses. Thereafter, the problematic situations under each of the five diagnoses were divided into two sub-categories according to whether the student chose MF and/or HF. Each problematic situation was then examined with respect to the student’s choice of action after choosing a diagnosis: (i) following MF, (ii) choosing and following HF, (iii) ignoring/skipping MF, (iv) ignoring/skipping MF but choosing and following HF, (v) ignoring/skipping HF, (vi) running out of time, (vii) giving up, or (viii) choosing another diagnosis and thereafter choosing any of actions i–vii. Actions i, iii, vi, vii and viii were sorted under receiving MF when they concerned MF (e.g. following or ignoring MF), and actions ii, iv, v, vi, vii and viii were sorted under receiving HF when they concerned HF. When a student did not find the chosen feedback helpful, they could choose another diagnosis (viii) to receive more feedback; their subsequent actions were then analysed in the same way and categorized as ‘following MF’ or ‘following HF’. Finally, if the student gave up (vii) without following the feedback or was not helped by following any of the feedback, the student’s action was identified as ‘Not helped by feedback’. This last category relates to the second research question.
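The sorting rule for actions can be written as a small lookup, which makes explicit that actions vi to viii are assigned according to the feedback level at which they occurred. This Python sketch is an illustrative reading of the coding scheme, not the author’s analysis tool; the function names and the `had_opened_hf` flag are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

# Actions i-viii from Step 1, keyed by their Roman numerals.
ACTIONS = {
    "i": "following MF",
    "ii": "choosing and following HF",
    "iii": "ignoring/skipping MF",
    "iv": "ignoring/skipping MF but choosing and following HF",
    "v": "ignoring/skipping HF",
    "vi": "running out of time",
    "vii": "giving up",
    "viii": "choosing another diagnosis",
}

MF_ACTIONS = {"i", "iii"}
HF_ACTIONS = {"ii", "iv", "v"}
# vi-viii can occur at either feedback level, so (in this reading of the text)
# they are sorted by the level the student had reached when they occurred.
EITHER_LEVEL = {"vi", "vii", "viii"}


def feedback_level(action: str, had_opened_hf: bool) -> str:
    """Return 'MF' or 'HF' for one coded action in a problematic situation."""
    if action in MF_ACTIONS:
        return "MF"
    if action in HF_ACTIONS:
        return "HF"
    if action in EITHER_LEVEL:
        return "HF" if had_opened_hf else "MF"
    raise ValueError(f"unknown action code: {action!r}")


def tally(situations: Iterable[Tuple[str, str, bool]]) -> Counter:
    """Count (diagnosis, feedback level) pairs.

    Each situation is (diagnosis, action code, had_opened_hf)."""
    return Counter((d, feedback_level(a, hf)) for d, a, hf in situations)
```

For example, a Hypothesis situation in which the student opened HF and then gave up is counted under (‘Hypothesis’, ‘HF’).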

Table 1. Overview of the problematic situations: the feedback students followed and succeeded or failed with, etc.

Table 2. Overview of problematic situations in which the students failed to diagnose correctly but could still resolve the problematic situation.

Table 3. Overview of problematic situations with incorrect diagnoses in which the students failed to resolve the problematic situation.

An example: A student chose a diagnosis and received the MF ‘Read the task carefully again and try to describe to an imaginary friend what information there is that may be useful for solving the task.’ If the student was then observed reading the task again and explaining aloud what useful information it contains, the student’s action was identified as ‘following MF’.

4.2. Step 2: Identifying if and how the chosen feedback contributes to problem solving

As the second step, data from the observations, TAP, and interviews were examined to determine whether and how the feedback contributed to problem solving, and to explore how students were helped or not helped by the feedback in each problematic situation. The following three aspects were considered:

From the TAP and observation data, whether there is evidence in the student’s reasoning that the student is following the feedback.

From the TAP and observation data, whether the student can make progress in the problematic situation by utilizing the feedback. The student’s problem solving was interpreted as progressing if the student was observed to make progress at the step at which they had encountered the difficulty or managed to move on to the next steps of problem solving (e.g. exploration, hypothesis, implementation). For example, a student who did not understand the task might be prompted by the feedback to read it again; if the student was then able to interpret the task or to move on to the next step, ‘Exploration’, the student was considered to have made progress.

From the post-interview, whether the students expressed that the provided feedback was helpful for making progress.
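The progress criterion in the second aspect can be stated as a simple predicate over the five phases used in this study. The Python below is a hypothetical sketch (names such as `made_progress` and `FeedbackEvidence` are illustrative). It records the three aspects for each situation but deliberately does not combine them, since the text does not specify a combination rule.

```python
from dataclasses import dataclass

PHASES = ["Interpretation", "Exploration/Analysis", "Hypothesis",
          "Justification", "Application"]


def made_progress(stuck_at: str, reached: str, improved_within_step: bool) -> bool:
    """Aspect 2: progress means improving at the step where the difficulty arose,
    or moving on to a later problem-solving phase."""
    return improved_within_step or PHASES.index(reached) > PHASES.index(stuck_at)


@dataclass
class FeedbackEvidence:
    """The three aspects examined for one problematic situation (Step 2)."""
    followed_feedback: bool  # aspect 1: TAP/observation show the feedback being followed
    progressed: bool         # aspect 2: TAP/observation show progress
    reported_helpful: bool   # aspect 3: post-interview statement
```

For the example in the text, a student stuck at Interpretation who moves on to Exploration/Analysis satisfies `made_progress("Interpretation", "Exploration/Analysis", False)`.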

The student’s choice of correct/incorrect diagnoses: As a final step, in all situations in which a student chose a diagnosis, the observation and TAP data were analysed to establish whether the student had chosen the correct diagnosis. For example, if the data showed that the student had gained useful information by analysing/exploring the task but was unable to move on to planning how to solve it, the diagnosis should concern ‘Hypothesis’. If the student chose ‘Hypothesis’, the diagnosis was regarded as correct, and otherwise as incorrect. The same procedure was followed for all chosen diagnosis statements.
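The example above suggests a rule of thumb: the expected diagnosis is the phase immediately after the last phase the student is seen to complete. The following Python sketch encodes that simplified rule under the assumption that the phases are traversed in order. In practice the phases need not occur linearly (see section 2.1), and correctness was judged from the observation and TAP data rather than by a fixed rule. All names are hypothetical.

```python
from typing import Optional

PHASES = ["Interpretation", "Exploration/Analysis", "Hypothesis",
          "Justification", "Application"]


def expected_diagnosis(last_completed: Optional[str]) -> str:
    """Simplified rule: the difficulty lies in the phase right after the last
    phase the data show the student completing (None = nothing completed yet)."""
    if last_completed is None:
        return PHASES[0]
    i = PHASES.index(last_completed)
    if i == len(PHASES) - 1:
        raise ValueError("all phases completed; no difficulty left to diagnose")
    return PHASES[i + 1]


def diagnosis_is_correct(chosen: str, last_completed: Optional[str]) -> bool:
    """Compare the student's chosen diagnosis with the expected one."""
    return chosen == expected_diagnosis(last_completed)
```

Under this rule, a student who has completed Exploration/Analysis but cannot plan is expected to choose ‘Hypothesis’, matching the example in the text.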

5. Results

5.1. Research question 1: What kinds of problem-solving difficulties can students overcome by using MF or HF?

The analysis began by creating an overview of the problematic situations (Table ) from the observations and the screen recordings. Each problematic situation was categorized according to the student’s choice of actions (see section 4). In the table, the columns show the number of cases for each diagnosis. The student’s choice of actions (i.e. which diagnosis the student chose and which feedback they decided to follow or not) was classified as MF or HF. The last two columns give the total numbers of correct and incorrect diagnoses chosen by the students; similarly, the last two rows give the total numbers of correct and incorrect diagnoses under each diagnosis. The fourth and fifth rows give the number of cases in which students succeeded or failed when they followed the feedback, and the sixth, seventh, eighth and ninth rows give the cases in which they skipped the feedback, gave up, ran out of time, or chose another feedback, respectively.

5.1.1. The distribution among the five types of difficulties and students’ choices

The problematic situations in which students most often asked for feedback were Exploration/Analysis (27.3% of the problematic situations), Justification (27.3%), and Hypothesis (21.5%). Students asked for feedback comparatively less often on Application (10.7%) and Interpretation (13.2%).

Students asked for MF in 74.4% of cases and HF in 25.6% of cases. Table shows that, after receiving feedback, students gave up in 3.3% of the situations, ran out of time in 9.1%, skipped the feedback in 26.4%, and chose another diagnosis in 1.7%. To estimate how often feedback helped the students, it was necessary to look more closely at the situations in which students skipped feedback, ran out of time, or gave up. In 29 of the 32 situations in which students skipped feedback, they first read the MF and immediately afterwards decided to move on or chose HF. In 2 of the 32 situations, the students decided to move on to other kinds of feedback, and in one situation the student did not follow the feedback at all. In the situations where students gave up, they had chosen HF, read it, and immediately afterwards decided to move on to another task instead of continuing with the same task. In the cases of running out of time, the students asked for feedback close to the end of the time given for the task and therefore did not have time to use it. If all these situations (i.e. skipped, ran out of time, chose another feedback, gave up) are counted as not helped by the feedback, the success rate was 52.9% (64/121).
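The reported figures can be cross-checked with a few lines of arithmetic. This Python sketch uses only numbers stated in this section (121 situations, 64 successes, the five difficulty shares, and the 29 + 2 + 1 breakdown of the 32 skipped situations); `implied_count` is a hypothetical helper that converts a rounded percentage back into the nearest whole number of situations.

```python
TOTAL_SITUATIONS = 121  # denominator of the reported success rate
HELPED = 64             # situations counted as helped by feedback

# Breakdown of the 32 skipped situations, as stated in the text.
SKIPPED_BREAKDOWN = {
    "read MF, then moved on or chose HF": 29,
    "moved on to other kinds of feedback": 2,
    "did not follow the feedback at all": 1,
}

# Share of problematic situations per diagnosis, in percent.
DIFFICULTY_SHARE = {
    "Exploration/Analysis": 27.3,
    "Justification": 27.3,
    "Hypothesis": 21.5,
    "Application": 10.7,
    "Interpretation": 13.2,
}


def percent(part: int, whole: int) -> float:
    """Percentage rounded to one decimal, as reported in the paper."""
    return round(100 * part / whole, 1)


def implied_count(share_pct: float, whole: int = TOTAL_SITUATIONS) -> int:
    """Nearest whole number of situations implied by a rounded percentage."""
    return round(share_pct * whole / 100)


if __name__ == "__main__":
    print(percent(HELPED, TOTAL_SITUATIONS))                      # 52.9
    print(sum(SKIPPED_BREAKDOWN.values()), implied_count(26.4))   # 32 32
    print(round(sum(DIFFICULTY_SHARE.values()), 1))               # 100.0
```

The check confirms that the 26.4% skipped rate corresponds to the 32 skipped situations discussed above and that the difficulty shares sum to 100%.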

5.1.2. The success rate when students followed feedback and had enough time

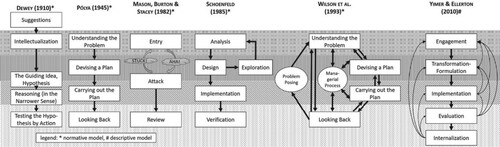

The students were free to decide whether to use the feedback, and students may seek help from the computer in different ways (Wood & Wood, 1999). Thus, in some of the situations in which students skipped the feedback, chose another feedback, or gave up, they may not actually have tried to use the feedback. The success rate for each of the five types of difficulty when students decided to follow the feedback and had enough time to solve the task after receiving it is displayed in Figure .

Figure 5. The success rate with each of the five types of difficulties if we disregard the cases of skipped, gave up, ran out of time & chose another feedback.

These results suggest that MF was often sufficient for most of the students when they had problems interpreting the task, engaging in Exploration/Analysis, or justifying their method, but that for Hypothesis (making a plan for solving the task) students to a greater extent needed HF to resume their problem-solving process.

5.2. Research question 2: In what ways are students helped or not helped by the feedback?

The qualitative analysis of the think-aloud protocols clarified how the formative assessment design worked and where it did not. To determine how students were helped (or not) by the feedback, the analysis considered whether a student used the provided feedback, whether the student succeeded in resolving the problematic situation, and whether the student’s chosen diagnosis was correct or incorrect (see Table ). If a student could resolve the problematic situation after following the feedback, the feedback was considered ‘helpful’; otherwise, it was considered ‘not helpful’. The chosen feedback helped the students in different ways depending on the problem-solving phase they found difficult.

5.2.1. In what ways was MF helpful?

Thirteen cases were found in which students overcame problematic situations in the Interpretation phase (i.e. difficulties understanding the task information or what was being asked for). The MF suggested reading the task again. Following the feedback helped the students to read the text more carefully, to pay attention to all the task information, including information they had previously missed, and to include that information in their new interpretation. For example, on task 2 (see Figure ), some students had difficulties understanding how the blue string should be divided. After following the feedback that suggested reading the task again carefully, the students understood that the blue string is laid out as in the figure and divided into pieces by cutting along the red lines.

Seventeen problematic situations were successfully resolved during Exploration/Analysis (i.e. difficulties in exploring useful data or analysing the task information). The MF suggested reading the task carefully again and trying to describe to an imaginary friend what information might be useful for solving the task. Following the feedback helped the students read the task more thoughtfully and extract relevant data and information that might be useful for solving it. The MF thus helped students engage successfully in Exploration/Analysis by identifying previously unnoticed information, which gave them a better understanding of the task and allowed them to analyse it.

In two cases, students successfully resolved problematic situations during Hypothesis (i.e. difficulties in planning a solution method). The MF suggested explaining to an imaginary friend what they had done so far, why they thought this might help to solve the task, and what they would need to know or do to solve it. After following the MF on Hypothesis, students began developing a hypothesis by going back to their solution, and they could reason about what they had done so far, what the given example stated, and why it stated this.

Eleven problematic situations were resolved during Justification (i.e. difficulties in justifying the planned strategy for solving the task). The MF suggested that students justify why their strategy was the proper way to solve the task by explaining the reasoning behind their thinking to an imaginary friend. After following the MF on Justification, students started explaining the task and then read their solution strategy from the beginning. They went back to their script to reflect on whether, and why, their solution strategy was right. The MF encouraged them to look back at their strategies; by identifying why their earlier strategies had gone wrong and what their mistake was, they could find a path to solving the task by themselves.

Six problematic situations were resolved during Application (i.e. difficulties in verifying the result). In every case where students chose to follow the MF on Application, they were observed to respond by checking their solution again from the beginning, returning to the task, and revising. The MF on Application helped by reminding students to go back to their solution method and re-examine it from the beginning, which allowed them to find simple mistakes.

The following excerpt from one student illustrates how all of the students’ responses were analysed. It shows how the student followed the feedback and how receiving MF on Hypothesis helped both her reasoning and her solution of the task.

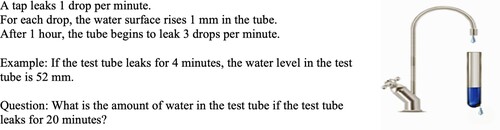

S1 needed help after trying to solve task 5 (see Figure ). First, she computed 52 − (16 × 3) = 4 mm and submitted 4 mm as the answer. After receiving corrective feedback that the answer was wrong, she clicked the third diagnosis (i.e. ‘I have tried different approaches but still can’t find a method to solve the task’) and received feedback suggesting that she explain to an imaginary friend what she had done so far, why she thought this might help her solve the task, and what she would need to know or do to solve it.

The student’s reasoning shows evidence that she was following the feedback. Just after reading it, she thought aloud: ‘So, it is the difference … … ’. This utterance, together with the observation that she began explaining the task information from the beginning and reasoning about what she had done and why (‘ … and one drop is 1 mm. … the water level in the test-tube is 52 mm. For 4 minutes 4 * 3 = 12, so when it’s 52, it has already lost 12 mm.’), was interpreted as an indication that she was following the provided feedback: she explained the task and the example again and tried to explain what she had obtained and why.

Table. Excerpts from the student’s (S1) solution.

(b) If the student can make progress utilizing the feedback: After reading the feedback, the student realized her mistake while trying to explain her reasoning to an imaginary friend. The following quote shows her recognizing her faulty hypothesis, in which she had not counted the tap’s dripping, and beginning to form the right hypothesis, as she thought aloud in the middle of her thinking process: ‘So when it has leaked for 4 minutes, there’s 16 minutes left from 20 minutes. Oh no! The tap, the tap is still dripping when the test tube is dripping. Of course! … … because 4 is what I got if the tap was not dripping and only the test tube was dripping.’

In the case of S1, we can see that she used the feedback in her argumentation and realized what was wrong with her previous solution method. The MF on Hypothesis thus helped her identify information she had missed in the task, which led to new reasoning after she received the feedback (she realized that she had counted only the test tube’s dripping and not the dripping from the tap). She could then adjust her strategy and solve the task.

(c) If students thought the feedback was helpful: In the post-interview, S1 said the following about MF on Hypothesis: ‘When I thought about what I had done so far, that one (feedback) helped, because I had to go back. And had to tell you or my imaginary friend what I have done. Because when I ended up solving it, I used what I had already done. But I added the new thing I learned that, of course, the tap is still dripping while the test tube was dripping.’

‘I think I could have solved it by reading the task again … . But I would not say what I had done so far, but this feedback pointed me to do so. I can go back as well. Not just read the task but read what I did as well, so that (feedback) helped. And I solved the task after doing so.’

5.2.2. The ways in which HF helped students overcome problematic situations

In one case, a student asked for HF on Interpretation; that is, the student had difficulty understanding the information in task 5. HF on Interpretation suggested that the student read the task carefully again and write down their own interpretation of it, using their own words, figures, examples, etc. The student was observed to respond by reading the task, writing down useful information in their notebook, and trying to draw a figure for the task. As a result, they could interpret what the task asked for and understood that they needed to determine the amount of water leaking over 20 min from the test tube, which began to leak an hour after the tap started leaking.

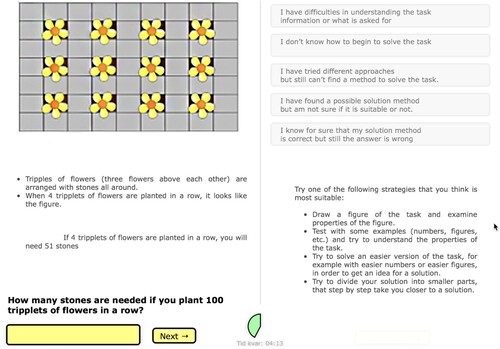

Three problematic situations were resolved during Exploration/Analysis (i.e. difficulties exploring useful data or analysing the task information), and the HF received suggested drawing a figure of the task, trying to solve an easier version of the task with simple numbers, or dividing the solution strategy into smaller parts. Following the feedback helped the students extract useful data and information, analyse the task with small numbers, identify patterns, and so on. For example, in the Flower task (see appendix), after following the HF, a student was observed to test the given example with 1, 2, 3, and 4 rows of flowers, to find useful information (i.e. that the number of stones increased by 11 for each additional row of flowers), and to make a plan for using this new-found information to solve the task.

Seven problematic situations were resolved during Hypothesis (i.e. difficulties in planning a solution method). HF on Hypothesis suggested that students divide their solution plan into smaller parts, try small numbers, etc. The HF helped by prompting students to try an easier version of the task with small numbers to get an idea for a solution. For example, in the Chessboard task (see appendix), students were observed to successfully find a pattern by testing the given task example with simple numbers (5, 8, 10, etc.). The feedback thus helped them make a plan that used their new-found pattern to solve the task.

Three problematic situations were resolved during Justification (i.e. difficulties in justifying the planned solution method). The HF suggested that students try out their solution method with some simple numbers, look for a pattern in these simple examples, estimate whether the answer is reasonable, and try to find an example showing that the method is wrong. After following the HF on Justification, students could take a reflective look at their solution, point out what had gone wrong, correct the mistake in their solution method, and justify their method.

In one case, a student asked for and followed HF on Application. He was observed to respond by checking his solution again from the beginning, especially the calculation, and the feedback helped him redo his calculation. The following excerpt illustrates how one student followed the HF on Justification and how it helped his reasoning in solving the task.

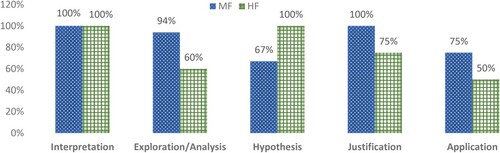

S8 was trying to solve task 4 (see Figure ) by estimation, without verifying his method. He estimated that the answer should be 400, entered it in the answer box, and received feedback that his answer was wrong. He then sought help from the computer by clicking on the fourth diagnosis (i.e. ‘I have found a possible solution method but am not sure if it is suitable or not’), read the MF, and thought aloud: ‘So, I am thinking, if C is 100, then maybe T should be 200, maybe no. That’s impossible.’ Next, he clicked on ‘more help’ to get HF, which suggested that he try out his solution method with some simple numbers and look for a pattern in these simple examples.

The student’s reasoning shows clear connections to the feedback. The feedback suggested that he test his method on one or a few simple examples, with simpler numbers than those given in the task, to see whether it works, find a pattern in these simple examples, and draw a conclusion from the observed pattern. He then explained aloud: ‘So, the pattern is every time C goes up by 1 degree, then T goes up by 2 degrees. So, I will try this method, I am going to count’. He was thereafter observed testing his method on simple examples to find a pattern and drawing a conclusion from it. Together with his utterances, these observations were interpreted as evidence that he followed the feedback. How he progressed in resolving his problematic situation is explained next.

Table. Excerpts from the student’s (S8) solution.

(b) If the student can make progress utilizing the feedback: After receiving the feedback, S8 started again by analysing the task from the beginning and tried to justify the solution method he had formulated. He then tested the task with small examples and tried to solve easier versions of it in order to find a pattern (as the feedback suggested), which led to new reasoning: he was trying, with small numbers, an easier version of the solution method he had used before the feedback, in order to get an idea for a solution. The following quote outlines his comprehension as he began to engage in Justification by finding a pattern and testing it, thinking aloud: ‘OK, C = 10, T = 30; then it’s C = 20 should be T = 50; C = 25 should be T = 60; C = 30 should be T = 70; C = 35 should be T = 80; … ’

After following the feedback, he managed, through successful engagement in Justification, to plan a solution method and decide how to move forward. Hence, he made progress by utilizing the feedback.

(c) If students thought the feedback was helpful: In the post-interview, he said that the feedback was useful for solving this task: ‘ … try to convince why or why not in the proper way. It helped me to go back and find a pattern’. However, he was not positive about the feedback overall, and in the post-interview conversation he asked for feedback connected to the task instead of general feedback.
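The pattern S8 voiced (‘every time C goes up by 1 degree, then T goes up by 2 degrees’), starting from his first pair C = 10, T = 30, amounts to the linear rule T = 2C + 10. As an illustration of the kind of small-number test the HF prompted, the Python sketch below checks that every pair in his think-aloud is consistent with that rule. It encodes only the student’s own conjecture; the actual relationship in task 4 appears only in the task figure and is not assumed here, and the function names are hypothetical.

```python
# Pairs (C, T) that S8 listed aloud while testing his pattern.
observed_pairs = [(10, 30), (20, 50), (25, 60), (30, 70), (35, 80)]


def predicted_t(c: float, start_c: float = 10, start_t: float = 30, slope: float = 2) -> float:
    """Student's conjectured rule (hypothetical helper): T rises by 2 for each
    1-unit rise in C, starting from the pair (10, 30). Equivalent to T = 2C + 10."""
    return start_t + slope * (c - start_c)


def pattern_holds(pairs) -> bool:
    """True if every stated pair agrees with the conjectured rule."""
    return all(predicted_t(c) == t for c, t in pairs)


if __name__ == "__main__":
    for c, t in observed_pairs:
        print(f"C = {c}: stated T = {t}, rule gives T = {predicted_t(c)}")
    print("Pattern consistent with all stated pairs:", pattern_holds(observed_pairs))
```

Running the script prints one line per pair and confirms that all five stated pairs fit the rule; whether the rule itself answers task 4 cannot be judged from the excerpt.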

5.2.3. In what way was the (correct/incorrect) diagnosis helpful?

Of the successful cases, students chose the correct diagnosis in 53 and an incorrect diagnosis in 11 (see Table ). A diagnosis was considered correct when the observations and recordings showed that a student needed help in a specific problematic situation and the student clicked on the corresponding diagnosis. If a student chose a diagnosis other than the one corresponding to where they needed help, the diagnosis was considered incorrect. For example, students chose MF on Interpretation four times when they were observed to need feedback on Exploration/Analysis.
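The per-phase counts reported in Sections 5.2.1 and 5.2.2 can be tallied as a consistency check. The Python sketch below sums them: MF resolved 49 situations and HF 15, giving 64, which equals the 53 correct plus 11 incorrect diagnoses in the successful cases. The MF share, 49/64 ≈ 76.6%, appears to be the basis of the 76.6% figure given in Section 6.3; that denominator is an assumption, since the text does not state it. The split for Hypothesis (2 with MF, 7 with HF) also reflects the conclusion that planning more often required HF. Variable names are illustrative.

```python
# Resolved problematic situations per problem-solving phase, as reported in
# Sections 5.2.1 (MF) and 5.2.2 (HF).
PHASES = ["Interpretation", "Exploration/Analysis", "Hypothesis", "Justification", "Application"]
mf_resolved = {"Interpretation": 13, "Exploration/Analysis": 17, "Hypothesis": 2,
               "Justification": 11, "Application": 6}
hf_resolved = {"Interpretation": 1, "Exploration/Analysis": 3, "Hypothesis": 7,
               "Justification": 3, "Application": 1}

# Diagnosis split among successful cases (Section 5.2.3).
correct_diagnoses, incorrect_diagnoses = 53, 11

total_mf = sum(mf_resolved.values())
total_hf = sum(hf_resolved.values())
total_resolved = total_mf + total_hf

# Share of resolved situations handled with MF alone (assumed denominator: all resolved cases).
mf_share = 100 * total_mf / total_resolved

if __name__ == "__main__":
    print(f"MF: {total_mf}, HF: {total_hf}, total: {total_resolved}")
    print("Matches diagnosis split:", total_resolved == correct_diagnoses + incorrect_diagnoses)
    print(f"Share resolved with MF: {mf_share:.1f}%")
    for phase in PHASES:
        print(f"{phase:>22}: MF {mf_resolved[phase]:>2}, HF {hf_resolved[phase]:>2}")
```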

When students made an incorrect diagnosis but the correct diagnosis was adjacent to the one they chose, the feedback still helped them overcome the problematic situation. For example, feedback on Exploration/Analysis helped students resume problems on Hypothesis, and feedback on Interpretation helped them overcome problematic situations on Exploration/Analysis. A plausible mechanism is as follows. The feedback on Exploration/Analysis suggested that students read the task carefully again and describe (in their mind) to an imaginary friend what information might be useful for solving it. When students followed this suggestion and began explaining the task information, they also began to construct a solution strategy by analysing the task (e.g. analysing the example and making an illustration of the task). In such cases, the feedback on Exploration/Analysis was enough, because explaining the task prompted students to move forward and construct a solution strategy. This is illustrated by S7, who needed feedback on Hypothesis but clicked on the adjacent diagnosis, Exploration/Analysis, in the Ice cream-making task (see appendix). After following the MF on Exploration/Analysis, which suggested that she read the task carefully again and describe to an imaginary friend what information might be useful for solving it, she was observed both to find useful information (i.e. that the amounts of water (1 L) and sugar (0.2 kg) are always fixed and only the concentration of berry juice varies) and to make a plan for solving the task using this new-found information. Furthermore, students who read about a specific strategy (e.g. drawing a figure) in one task tended to use the same strategy in later tasks without reading about it again.
That is, the feedback provided a strategy that the students internalized for solving problems.

In their post-interviews, students said that after reading all the diagnostic statements they easily understood where to click to get feedback. The diagnostic statements helped them locate difficulties during the problem-solving process because the diagnoses were designed around the different problem-solving phases. Some students also saw this as a way to look back on their solution and reflect on their difficulties.

5.2.4. Students failed to resume problematic situations

Eight problematic situations were observed in which students could not resume the problem-solving process with the help of feedback. In only one of these did the student choose the correct diagnosis and still fail to resolve the problematic situation; in the other seven, students chose incorrect diagnoses and failed to resume their problem-solving process (see Table ). For example, on two occasions students chose help on Exploration/Analysis when the observer noted that they needed feedback on Justification.

In these eight situations, the students did use the feedback at some point, but without resuming their problem-solving process. This happened especially when students made an incorrect diagnosis that was not adjacent (i.e. two or more steps away from the correct diagnosis). In some of the failed cases, students also did not follow the feedback properly (i.e. they were reluctant to draw a figure of the task, to extend a previously drawn figure by one or two more steps, or to test the example with simple numbers and then calculate and check the new figure), or they read only part of the feedback instead of carefully reading the entire text. Moreover, MF was not enough for all students; some needed HF. Some students also expressed frustration that the computer did not provide direct feedback about what to look for in a particular task, and they asked for feedback connected to the task.
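The notion of an adjacent diagnosis can be made concrete by numbering the problem-solving phases in order (assumed here to be Interpretation, Exploration/Analysis, Hypothesis, Justification, Application) and measuring the step distance between the diagnosis a student chose and the one the observer judged correct. The Python sketch below does this; the enum and function names are hypothetical, and the labels describe the tendencies observed in Sections 5.2.3 and 5.2.4, not a deterministic rule.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Problem-solving phases, numbered in an assumed order."""
    INTERPRETATION = 1
    EXPLORATION_ANALYSIS = 2
    HYPOTHESIS = 3
    JUSTIFICATION = 4
    APPLICATION = 5


def diagnosis_distance(chosen: Phase, needed: Phase) -> int:
    """Number of phase steps between the diagnosis a student clicked
    and the phase where the observer judged that help was needed."""
    return abs(chosen - needed)


def classify(chosen: Phase, needed: Phase) -> str:
    d = diagnosis_distance(chosen, needed)
    if d == 0:
        return "correct"
    if d == 1:
        return "incorrect, adjacent"      # observed tendency: feedback often still helped
    return "incorrect, non-adjacent"      # observed tendency: students failed to resume


if __name__ == "__main__":
    # S7: needed Hypothesis, chose Exploration/Analysis (Section 5.2.3).
    print(classify(Phase.EXPLORATION_ANALYSIS, Phase.HYPOTHESIS))
    # Failed cases: chose Exploration/Analysis, needed Justification (Section 5.2.4).
    print(classify(Phase.EXPLORATION_ANALYSIS, Phase.JUSTIFICATION))
```

For S7 the distance is 1 (adjacent), while the two failed cases in which students chose Exploration/Analysis but needed Justification have a distance of 2.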

5.3. Summary

Students were in general positive about the feedback they received and thought it was helpful. A closer look at the use of MF and HF shows that both types of feedback can be used formatively to help students overcome problematic situations during problem solving. Regarding the second research question, it can be concluded that all of the students were frequently helped by the feedback in some way. The interviews indicated that the feedback helped them try again instead of giving up, motivated them to return to the task, and suggested ways and strategies for proceeding. Students said that the feedback helped them look back, argue with themselves about why their method was right or wrong, make judgments, and move forward.

6. Discussion

6.1. Can general MF/HF be useful?

Whereas other feedback studies have provided specific feedback after an individual task (Narciss & Huth, 2006), the current study provided feedback suitable for mathematical problems in general, based on diagnoses chosen by the students to fit their specific difficulty. The current study examined the usefulness of general MF and HF for helping students solve a specific task, while earlier research (Kapa, 2007) has shown that similar kinds of feedback are helpful for the long-term development of general problem-solving abilities, because the corresponding self-reflection is an essential part of problem-solving competence. The empirical evidence from the current study on general MF and HF across the problem-solving phases indicates that there are problematic situations in which general MF or HF can help students. This study was not designed to compare the two types of feedback; rather, it showed that the helpfulness of MF and HF was closely tied to the students’ choices about using feedback during problem solving.

In contrast to experimental studies of MF, which employ MF during problem solving (Kramarski & Zeichner, 2001) together with step-by-step guidance via problem mapping or examples of solved tasks (e.g. Kapa, 2007) or hints related to the task (e.g. Harskamp & Suhre, 2007), the current exploratory study provided general MF based on students’ self-diagnosis when they encountered problematic situations. Self-diagnosis was employed to support students’ problem-solving skills, and the feedback took the form of reflective questions and/or prompts for self-explanation; such self-reflective feedback has been found to be effective for learning (Schworm & Renkl, 2002). By requiring students to make individual choices, the design of this study’s diagnoses and feedback therefore prompted students to engage cognitively in the learning process. That is, students had to be involved in their problem-solving process by clarifying and understanding the problem they were facing, and the process thus placed a strong emphasis on the consideration step in the self-regulated learning cycle (Zimmerman, 2000).

6.2. Adjacent feedback seems to help in resuming problematic situations

Whether the feedback was helpful depended on the student’s ability to decide how, when, and whether to use it. Knowing when and how to seek help during problem solving is a key self-regulatory skill (Pintrich, 2000). In the current study, identifying the correct diagnosis was important, even though some incorrect diagnoses led to progress. In particular, when an incorrect diagnosis was adjacent to the correct one, the feedback helped students resume problematic situations on Interpretation, Exploration/Analysis, and Application (see Table ). A possible explanation lies in the content of the feedback. The MF on Interpretation and on Exploration/Analysis was almost identical: both suggested that students go back to the task and carefully read the task information again. The MF also prompted self-explanation, for example ‘What information is there that may be useful for solving the task’ and ‘Why do you think that your way of trying is the proper way (or not) to solve the task’. These directive guiding questions during the problem-solving process induced the construction of complex knowledge by promoting thinking relevant to the specific solution (see King, 1994). This self-explanation helped students resolve misunderstandings by monitoring their progress during problem solving (Chi & Bassok, 1988), evaluate their current solution method (i.e. the one used before receiving feedback), and judge whether to pursue a new direction. During the Justification and Application steps, for example, students execute their planned method or strategy, which builds on the previous steps; monitoring and evaluating the strategy is therefore important to avoid faulty steps.
Thus, if students need help on the Justification step but choose the incorrect diagnosis of the Hypothesis step, the feedback they receive is often enough to help them construct the task solution, because it gives directions on what to do when one does not know how to construct a solution. These directions give students another chance to monitor and control their solution and to correct it if necessary. Another possible reason is that participants may have carried over the feedback from a previous task to the next one, since the feedback asked them to self-explain what they had done. This result is in line with other studies which found that MF reminded students to monitor and refine their use of cognitive strategies (H. W. Lee et al., 2010).

6.3. Probable reasons for skipping or ignoring (metacognitive) feedback

Regarding students’ skipping or ignoring of feedback, it must be considered that, unlike many other studies (e.g. Bokhove, 2010), this study did not provide feedback automatically but instead gave students the autonomy to choose feedback according to their problematic situation. In the current design, MF was presented first and HF second. Observations, also supported by interviews with some students, showed that some students tended to ignore the MF because it called for self-explanation, which they perceived as time-consuming given that they had to complete the task in a limited time. Students were also observed to mostly ignore MF and follow HF in the second half of the tasks, possibly because they had discovered during the first half that the MF asked them to explain and convince themselves of their work, whereas the HF gave concrete suggestions about what to do and how to do it. Although students successfully used MF in 76.6% of the situations, they tended to skip MF and move on to HF in the rest, especially on Hypothesis (making a plan). This may be because students were aware that the MF on Hypothesis was not enough to help them make a plan; in other words, it is ‘simply’ because making a plan is the hardest part and they needed more help with it. Students may therefore have wanted more HF in Hypothesis situations, because HF provided heuristic strategies for making a plan. Moreover, the support offered by MF and HF might vary among students because of individual differences in cognitive abilities. For example, students with weak cognitive skills might not be helped by general MF and HF; Fyfe et al. (2015) found that students with lower working memory benefitted less from strategy feedback.
Another reason may have been a lack of ability to use the feedback effectively right away (see A. Jonsson, 2013). Perhaps students were used to receiving solution methods from their teacher; when they received MF first in this study, they were not satisfied with it and looked for more strategies in the HF, or for direct solution methods for the task. However, if students are given HF before MF, they receive more specific guidance and lose some of the opportunity to apply their own metacognitive reflection and judgement to their solution method. Providing MF before HF, as in this study, gives students somewhat more responsibility for creating their own solution method, which is in line with Brousseau’s (1997) notion of the devolution of the problem.
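The student-initiated, MF-before-HF help flow discussed above can be sketched in Python as follows. All class, field, and method names are hypothetical; the feedback strings are abridged paraphrases of the feedback described in Section 5.2, not the software’s actual wording, and the HF text for Application is inferred from a student’s observed response, so it is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    metacognitive: str  # MF, shown first when a diagnosis is chosen
    heuristic: str      # HF, shown only when the student asks for "more help"


# Abridged paraphrases of the feedback described in Section 5.2 (not verbatim).
FEEDBACK = {
    "Interpretation": Feedback(
        "Read the task again carefully.",
        "Read the task again and write down your own interpretation "
        "using your own words, figures, and examples."),
    "Exploration/Analysis": Feedback(
        "Read the task again and describe to an imaginary friend what "
        "information might be useful for solving it.",
        "Draw a figure, try an easier version with simple numbers, "
        "or divide the solution strategy into smaller parts."),
    "Hypothesis": Feedback(
        "Explain to an imaginary friend what you have done so far, why it "
        "might help, and what you would need to know or do.",
        "Divide your solution plan into smaller parts and try small numbers."),
    "Justification": Feedback(
        "Justify to an imaginary friend why your strategy is (or is not) "
        "the proper way to solve the task.",
        "Test your method with simple numbers, look for a pattern, estimate "
        "whether the answer is reasonable, and look for a counterexample."),
    "Application": Feedback(
        "Go back to your solution method and check it from the beginning.",
        # Assumption: inferred from the observed student response.
        "Recheck your solution step by step, including the calculations."),
}


class HelpSession:
    """Feedback is never pushed automatically: the student chooses a diagnosis,
    receives MF, and may then ask for 'more help' to receive HF."""

    def __init__(self) -> None:
        self.log: list[tuple[str, str]] = []

    def choose_diagnosis(self, phase: str) -> str:
        text = FEEDBACK[phase].metacognitive  # KeyError for an unknown phase
        self.log.append((phase, "MF"))
        return text

    def more_help(self) -> str:
        if not self.log:
            raise RuntimeError("choose a diagnosis first")
        phase = self.log[-1][0]
        self.log.append((phase, "HF"))
        return FEEDBACK[phase].heuristic


if __name__ == "__main__":
    # Mirrors S8: fourth diagnosis (Justification), then "more help".
    session = HelpSession()
    print("MF:", session.choose_diagnosis("Justification"))
    print("HF:", session.more_help())
```

Keeping HF behind an explicit request is what lets a student skip straight past MF, which is the behaviour discussed in this section.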

6.4. Task-specific feedback

While this study supports providing general MF and HF to students during problem solving, the author does not claim that the MF and HF of the current study form a complete set of feedback for promoting problem-solving strategies. Rather, the author argues more generally in favour of providing general MF and HF for problem solving to foster critical thinking. It should also be noted that in some cases general MF and HF did not help students resume their problem-solving process, especially when students wrongly tried to construct a solution method by calculating an average or a percentage based on the given example. In such cases, students might need task-specific feedback, that is, feedback that specifies what to look for or what to do in a particular task. This also suggests modifying some of the feedback in the current study; one possibility would be to add task-specific feedback with hints (e.g. Harskamp & Suhre, 2007) after providing MF and HF. Moreover, the usefulness of the feedback may depend on the student’s motivation and self-efficacy, which have been found to be positively associated with effort and persistence (Schunk, 1995) as well as with enhanced cognitive engagement and the effective use of learning strategies (Zimmerman, 2000). Students with a higher level of self-efficacy might thus make greater efforts or be better able to follow MF and HF. A direction for future research could therefore be to examine how and to what extent self-efficacy and motivation affect the use of feedback.

6.5. Limitations and importance of the study

When interpreting the results of this study, some methodological limitations need to be considered. Although the students were positive about the computer programme during the interview, it is possible that they were mainly being polite to the interviewer. To minimize this influence, the students were asked during the interviews to reflect on their problematic situations (i.e. why they had used particular feedback or help and what was or was not helpful for resolving the problematic situations). Even though the diagnoses and feedback were developed based on a pilot study, choosing the correct diagnosis proved rather challenging for some students in some cases, possibly because the students were not used to diagnosing themselves and receiving feedback on problem-solving processes. Moreover, since the time for each task was fixed (i.e. 10 min; see Note 2), in some cases (9.1%, 11/121) the time was too short to resolve the problematic situation after students received feedback; in these cases, students decided to use the feedback close to the end of the allotted time and did not succeed. Other potential factors (e.g. the participants’ age, gender, and prior mathematical knowledge) might also influence the results, but these were not considered in the present study.

The present study proposes an environment that incorporates MF and HF to support problem-solving processes through a unique computerized model without a human tutor. This does not mean that the study suggests replacing the teacher’s role with a computer environment; rather, computer support can supplement teachers (e.g. teachers can use the design of this study to reduce their workload). This study can therefore be seen as an example of how computer-based general MF and HF can be used to support problem solving as a first step in providing feedback (i.e. before providing task-specific feedback to students). The results and design of this study can be used to support students while still devolving the main responsibility for constructing the solution method to the students.

This paper does not claim that the diagnoses and feedback considered here form a complete set for every problem-solving situation; there is room for evaluation and improvement. In conclusion, teachers should consider using these types of general MF and HF to develop their students’ problem-solving strategies, that is, to help students recognize general problematic situations in the different task-solving phases. Teachers can employ the findings of this study as an instrument for applying formative assessment during instruction to support creative reasoning.

Supplemental Material

Supplemental material for this article is available as an MS Word file (468.6 KB).

Acknowledgements

The author is thankful to Johan Lithner and Carina Granberg for their thoughtful comments and guidance that enriched the article.

Disclosure statement

No potential conflict of interest was reported by the author.

Notes

2 From earlier studies, it was observed that five minutes was usually sufficient time for students to solve these kinds of tasks (e.g. B. Jonsson et al., 2014).

References

- Andrade, H., & Valtcheva, A. (2009). Promoting learning and achievement through self-assessment. Theory into Practice, 48(1), 12–19. https://doi.org/10.1080/00405840802577544

- Attali, Y., & van der Kleij, F. (2017). Effects of feedback elaboration and feedback timing during computer-based practice in mathematics problem solving. Computers & Education, 110, 154–169. https://doi.org/10.1016/j.compedu.2017.03.012

- Bennett, R. E. (2011). Formative assessment: A critical review. Assessment in Education: Principles, Policy & Practice, 18(1), 5–25. https://doi.org/10.1080/0969594X.2010.513678

- Bergqvist, T., Lithner, J., & Sumpter, L. (2008). Upper secondary students’ task reasoning. International Journal of Mathematical Education in Science and Technology, 39(1), 1–12. https://doi.org/10.1080/00207390701464675

- Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7–74. https://doi.org/10.1080/0969595980050102

- Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability, 21(1), 5–31. https://doi.org/10.1007/s11092-008-9068-5

- Boesen, J., Helenius, O., Bergqvist, E., Bergqvist, T., Lithner, J., Palm, T., & Palmberg, B. (2014). Developing mathematical competence: From the intended to the enacted curriculum. The Journal of Mathematical Behavior, 33, 72–87. https://doi.org/10.1016/j.jmathb.2013.10.001

- Bokhove, C. (2010). Implementing feedback in a digital tool for symbol sense. International Journal for Technology in Mathematics Education, 17(3), 121–126.

- Brookhart, S. M. (2018). Summative and formative feedback. In A. A. Lipnevich & J. K. Smith (Eds.), The Cambridge handbook of instructional feedback (pp. 52–78). Cambridge University Press.

- Brousseau, G. (1997). Theory of didactical situations in mathematics: Didactique des Mathématiques, 1970–1990. Springer Netherlands.

- Carlson, M., & Bloom, I. (2005). The cyclic nature of problem solving: An emergent multidimensional problem-solving framework. Educational Studies in Mathematics, 58(1), 45–75. https://doi.org/10.1007/s10649-005-0808-x

- Chi, M. T., & Bassok, M. (1988). Learning from examples via self-explanations. Technical Report No. 11.

- Clark, I. (2012). Formative assessment: Assessment is for self-regulated learning. Educational Psychology Review, 24(2), 205–249. https://doi.org/10.1007/s10648-011-9191-6

- Fyfe, E., DeCaro, M., & Rittle-Johnson, B. (2015). When feedback is cognitively-demanding: The importance of working memory capacity. Instructional Science, 43(1), 73–91. https://doi.org/10.1007/s11251-014-9323-8

- Harskamp, E., & Suhre, C. (2007). Schoenfeld’s problem solving theory in a student controlled learning environment. Computers & Education, 49(3), 822–839. https://doi.org/10.1016/j.compedu.2005.11.024

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487

- Hiebert, J. (2003). What research says about the NCTM standards. In J. Kilpatrick, W. G. Martin, & D. Schifter (Eds.), A research companion to principles and standards for school mathematics (pp. 5–23). National Council of Teachers of Mathematics.

- Jonsson, A. (2013). Facilitating productive use of feedback in higher education. Active Learning in Higher Education, 14(1), 63–76. https://doi.org/10.1177/1469787412467125

- Jonsson, B., Norqvist, M., Liljekvist, Y., & Lithner, J. (2014). Learning mathematics through algorithmic and creative reasoning. The Journal of Mathematical Behavior, 36, 20–32. https://doi.org/10.1016/j.jmathb.2014.08.003

- Kapa, E. (2007). Transfer from structured to open-ended problem solving in a computerized metacognitive environment. Learning and Instruction, 17(6), 688–707. https://doi.org/10.1016/j.learninstruc.2007.09.019

- King, A. (1994). Guiding knowledge construction in the classroom: Effects of teaching children how to question and how to explain. American Educational Research Journal, 31(2), 338–368. https://doi.org/10.3102/00028312031002338

- Kramarski, B., & Zeichner, O. (2001). Using technology to enhance mathematical reasoning: Effects of feedback and self-regulation learning. Educational Media International, 38(2–3), 77–82. https://doi.org/10.1080/09523980110041458

- Lampert, M. (1990). When the problem is not the question and the solution is not the answer: Mathematical knowing and teaching. American Educational Research Journal, 27(1), 29–63. https://doi.org/10.3102/00028312027001029

- Lee, H., Chung, H. Q., Zhang, Y., Abedi, J., & Warschauer, M. (2020). The effectiveness and features of formative assessment in US K-12 education: A systematic review. Applied Measurement in Education, 33(2), 124–140. https://doi.org/10.1080/08957347.2020.1732383

- Lee, H. W., Lim, K. Y., & Grabowski, B. L. (2010). Improving self-regulation, learning strategy use, and achievement with metacognitive feedback. Educational Technology Research and Development, 58(6), 629–648. https://doi.org/10.1007/s11423-010-9153-6

- Lester, F. K., & Cai, J. (2016). Can mathematical problem solving be taught? Preliminary answers from 30 years of research. In P. Felmer, E. Pehkonen, & J. Kilpatrick (Eds.), Posing and solving mathematical problems. Research in mathematics education. Springer. https://doi.org/10.1007/978-3-319-28023-3_8

- Liljedahl, P., & Cai, J. (2021). Empirical research on problem solving and problem posing: A look at the state of the art. ZDM – Mathematics Education, 53(4), 723–735. https://doi.org/10.1007/s11858-021-01291-w

- Lithner, J. (2008). A research framework for creative and imitative reasoning. Educational Studies in Mathematics, 67(3), 255–276. https://doi.org/10.1007/s10649-007-9104-2

- Mason, J., Burton, L., & Stacey, K. (1982). Thinking mathematically. Addison-Wesley.

- Narciss, S., & Huth, K. (2006). Fostering achievement and motivation with bug-related tutoring feedback in a computer-based training for written subtraction. Learning and Instruction, 16(4), 310–322. https://doi.org/10.1016/j.learninstruc.2006.07.003

- NCTM. (2000). Principles and standards for school mathematics. National Council of Teachers of Mathematics.

- Niss, M. (2003). Mathematical competencies and the learning of mathematics: The Danish KOM Project.

- Palm, T. (2008). Impact of authenticity on sense making in word problem solving. Educational Studies in Mathematics, 67(1), 37–58. https://doi.org/10.1007/s10649-007-9083-3

- Pintrich, P. R. (2000). The role of goal orientation in self-regulated learning. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 451–502). Academic Press.

- Polya, G. (1945). How to solve it. Princeton University Press.

- Rott, B., Specht, B., & Knipping, C. (2021). A descriptive phase model of problem-solving processes. ZDM – Mathematics Education, 53(4), 737–752. https://doi.org/10.1007/s11858-021-01244-3

- Schoenfeld, A. H. (1985). Mathematical problem solving. Academic Press.

- Schoenfeld, A. H. (1992). Learning to think mathematically: Problem solving, metacognition, and sense making in mathematics. In D. A. Grouws (Ed.), Handbook of research on mathematics teaching and learning (pp. 334–370). Macmillan.

- Schoenfeld, A. H. (2014). What makes for powerful classrooms, and how can we support teachers in creating them? A story of research and practice, productively intertwined. Educational Researcher, 43(8), 404–412. https://doi.org/10.3102/0013189x14554450

- Schunk, D. H. (1995). Self-efficacy and education and instruction. In J. E. Maddux (Ed.), Self-efficacy, adaptation, and adjustment: Theory, research, and application (pp. 281–303). Plenum Press. https://doi.org/10.1007/978-1-4419-6868-5_10

- Schraw, G., Crippen, K. J., & Hartley, K. (2006). Promoting self-regulation in science education: Metacognition as part of a broader perspective on learning. Research in Science Education, 36(1), 111–139. https://doi.org/10.1007/s11165-005-3917-8

- Schworm, S., & Renkl, A. (2002). Learning by solved example problems: Instructional explanations reduce self-explanation activity.

- Shute, V. J. (2008). Focus on formative feedback. Review of Educational Research, 78(1), 153–189. https://doi.org/10.3102/0034654307313795

- Sidenvall, J., Lithner, J., & Jäder, J. (2015). Students’ reasoning in mathematics textbook task-solving. International Journal of Mathematical Education in Science and Technology, 46(4), 533–552. https://doi.org/10.1080/0020739X.2014.992986

- Stiggins, R. (2006). Assessment for learning: A key to motivation and achievement. EDge: The Latest information for the education practitioner, 2(2), 1–19.

- Sullivan, P., McBrayer, J. S., Miller, S., & Fallon, K. (2021). An examination of the use of computer-based formative assessments. Computers & Education, 173, 104274. https://doi.org/10.1016/j.compedu.2021.104274

- Van der Kleij, F. M., Feskens, R. C. W., & Eggen, T. J. H. M. (2015). Effects of feedback in a computer-based learning environment on students’ learning outcomes: A meta-analysis. Review of Educational Research, 85(4), 475–511. https://doi.org/10.3102/0034654314564881

- Verschaffel, L., Schukajlow, S., Star, J., & Van Dooren, W. (2020). Word problems in mathematics education: A survey. ZDM, 52(1), 1–16. https://doi.org/10.1007/s11858-020-01130-4

- Wiliam, D., & Thompson, M. (2008). Integrating assessment with learning: What will it take to make it work? In C. A. Dwyer (Ed.), The future of assessment: Shaping teaching and learning (pp. 53–82). Routledge.

- Wilson, J. W., Fernandez, M. L., & Hadaway, N. (1993). Mathematical problem solving. In P. S. Wilson (Ed.), Research ideas for the classroom: High school mathematics (pp. 57–78). Macmillan.

- Wood, H., & Wood, D. (1999). Help seeking, learning and contingent tutoring. Computers & Education, 33(2), 153–169. https://doi.org/10.1016/S0360-1315(99)00030-5

- Yimer, A., & Ellerton, N. F. (2010). A five-phase model for mathematical problem solving: Identifying synergies in pre-service-teachers’ metacognitive and cognitive actions. ZDM, 42(2), 245–261. https://doi.org/10.1007/s11858-009-0223-3

- Zimmerman, B. J. (2000). Attaining self-regulation: A social cognitive perspective. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 13–39). Academic Press.