ABSTRACT

Predictive Maintenance (PdM) solutions assist decision-makers by predicting equipment health and scheduling maintenance actions, but their implementation in industry remains problematic. Specifically, prior research repeatedly indicates that decision-makers often refuse to adopt the data-driven, system-generated advice in their working procedures. In this paper, we address these acceptance issues by studying how PdM implementation changes the nature of decision-makers’ work and how these changes affect their acceptance of PdM systems. We build on the human-centric Smith-Carayon Work System model to synthesise literature from research areas where system acceptance has been explored in more detail. Consequently, we expand the maintenance literature by investigating the human-, task-, and organisational characteristics of PdM implementation. Following the literature review, we distil ten propositions regarding decision-making behaviour in PdM settings. Next, we verify each proposition’s relevance through in-depth interviews with experts from both academia and industry. Based on the propositions and interviews, we identify four factors that facilitate PdM adoption: trust between decision-maker and model (maker), control in the decision-making process, availability of sufficient cognitive resources, and proper organisational allocation of decision-making. Our results contribute to a fundamental understanding of acceptance behaviour in a PdM context and provide recommendations to increase the effectiveness of PdM implementations.

1. Introduction

Modern production technologies have dramatically increased the complexity and maintenance-related costs of the production process (Jardine, Lin, and Banjevic 2006), while the requirements for the reliability of equipment are higher than ever. Predictive Maintenance (PdM), one of the protagonists of Industry 4.0, promises to meet these requirements in a cost-efficient manner (Bukhsh and Stipanovic 2020; Koochaki et al. 2012). More specifically, PdM monitors the health of asset components (such as engines and gearboxes), estimates the remaining useful life of each component, and optimises asset maintenance schedules based on the predicted future state of those components (Lee et al. 2014; Lei et al. 2018). However, although recent research has generated increasingly advanced predictive techniques and maintenance optimisation algorithms (see reviews by Alaswad and Xiang 2017 and De Jonge and Scarf 2020), few organisations have successfully implemented data-driven PdM systems (Grubic et al. 2011; Mulders and Haarman 2017).

Research seeking to address PdM implementation issues has typically concentrated on increasing PdM’s technological capabilities (Dalzochio et al. 2020; Sheikhalishahi, Pintelon, and Azadeh 2016), while some research focusses on the organisational challenges of PdM implementation (Aboelmaged 2014; Veldman, Klingenberg, and Wortmann 2011). Nowadays, however, researchers recognise that human factors (i.e. design considerations that optimise human well-being and overall performance, Dul et al. 2012) should be central in designing PdM technology (Neumann et al. 2021; Sahli, Evans, and Manohar 2021), an approach termed ‘Industry 5.0’ (European Commission 2021). More specifically, Shafiee (2015) stresses that decision-makers’ acceptance of PdM technologies is vital, as implementation is fruitless when a technology is rejected by its users. However, such rejections are typically observed in industry, as decision-makers often refuse to accept a PdM system’s advice (Ingemarsdotter et al. 2021; Tiddens, Braaksma, and Tinga 2015).

In the current paper, we define decision-makers as those employees who are responsible for operational decision-making in the domain of maintenance: instructing mechanics when to perform maintenance actions on certain components. Acceptance of PdM systems, in turn, is the degree to which these decision-makers actually use the system-generated advice in their decisions, ranging from complete rejection to perfect compliance (Venkatesh and Bala 2008).

Maintenance research typically assumes compliance with data-driven advice (Bousdekis et al. 2021), but research has recently noted the challenge of decision-makers’ limited willingness to accept PdM systems. For example, Bokrantz et al. (2020) investigated PdM implementation through focus groups and interviews and found that change culture and poor technological design encourage decision-makers’ rejection of PdM. Golightly, Kefalidou, and Sharples (2018) obtained similar findings through expert interviews, additionally encouraging organisations to increase decision-makers’ knowledge of the system to facilitate adoption. Ingemarsdotter et al. (2021) suggest, after a multiple-case study, that applying explainable algorithms for PdM reduces the barrier for its acceptance. Lundgren et al. (2022) interviewed maintenance managers, who believe high workforce age and poor organisational change culture incite resistance to PdM in decision-makers. Wellsandt et al. (2022) explored the use of ‘digital assistants’ in PdM systems to address its limited adoption and identify the benefits, limits, and challenges of adopting such technologies.

In the current paper, we aim to uncover the factors that promote decision-makers’ acceptance of PdM in their working procedures and take a more holistic approach by applying the Smith-Carayon model of the Work System (Smith and Carayon-Sainfort 1989; Carayon 2009; 2006). This model, in line with Industry 5.0, posits that humans are the centre of the Work System and that all other elements that constitute the workplace (called domains) are interdependent and should be designed around humans to facilitate wellbeing and, consequently, acceptance. We build on the model to investigate how PdM implementation transforms workplace domains and, through theory-driven research, formulate propositions describing how the integration of PdM at work impacts the acceptance of professionals working with these advanced systems. Subsequently, we verify each proposition’s relevance for literature, industry, and future research through in-depth interviews with six experts from academia and industry. Based on the propositions and interviews, we identify the overarching themes that facilitate PdM acceptance at work.

The contributions of this paper are as follows. First, we uncover several factors that influence decision-makers’ acceptance of data-driven PdM decision-support. We do so by synthesising literature from various research areas using the Work System model, a framework that explains the impact of implementing new technologies on decision-makers. As such, we propose a comprehensive framework on the adoption of data-driven PdM systems and expand the scope of previous research by exploring the impact of a multitude of interdependent work elements and decision-makers’ characteristics on PdM adoption. Through our broad review of literature, we respect the interdisciplinary nature of PdM adoption by decision-makers (Burton, Stein, and Jensen 2020). We describe the framework and review process in Section 2.

Second, following our synthesis, we provide ten testable, theory-driven, and evidence-based propositions regarding the impact of PdM implementation on decision-makers and their acceptance behaviour in Sections 3 through 7. Through expert interviews, we verify the propositions’ relevance for academia and industry. We present the interview process in Section 8 and results in Section 9. From the propositions and the interviews, we identify control, trust, demands and resources, and organisational allocation of decision-making as the overarching factors that enhance PdM adoption, presented in Section 10. Finally, Section 11 provides a discussion and puts forward a future research agenda.

2. Framework

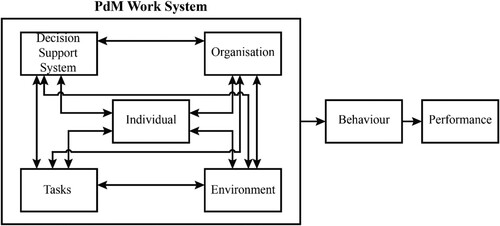

The Smith-Carayon Work System model (Smith and Carayon-Sainfort 1989; Carayon 2009; 2006), depicted in Figure 1, integrates all facets of work and systematises how technological change affects decision-makers. The model is mostly applied in healthcare research for structuring literature reviews (Peavey and Cai 2020; Salahuddin and Ismail 2015) and investigating acceptance issues with new technologies (Walker et al. 2018). As the logic underlying maintenance and healthcare decision-making processes is comparable – both intend to restore and keep their target at a healthy and functional state (Mobley 2002) – applying the Work System model is useful for maintenance research.

Figure 1. Conceptual Framework of the PdM Work System with Behavioural and subsequent Performance Outcomes, adapted from Smith and Carayon-Sainfort (1989).

Previous research on PdM implementation applies technology-focused frameworks in understanding implementation issues, dismissing tasks’ or individual decision-makers’ characteristics (see Aboelmaged 2014 and Ingemarsdotter et al. 2021). However, as PdM transforms the organisation, work environment, and decision-maker’s tasks (Neumann et al. 2021), a framework should ideally also incorporate these characteristics. Moreover, previous research often looks at implementation barriers in isolation (e.g. how the organisation hinders PdM implementation, how technology hinders PdM implementation, etc.), while a framework should recognise the interdependencies between the implementation issues (Burton, Stein, and Jensen 2020). The ergonomic and holistic Work System model meets these two requirements.

Ergonomically, the Work System model posits that technology should be human-centric (Dul et al. 2012), as implementing new technologies at work often induces adverse changes that impede employee wellbeing (Smith and Carayon 1995), resulting in strain if they are not compensated by beneficial changes (Smith and Carayon-Sainfort 1989). Strain, in turn, causes maladaptive behaviours, hindering the use and acceptance of technology at work and hurting overall performance (Bakker and Demerouti 2018). Therefore, to address people’s rejections of data-driven suggestions, we review which adverse changes PdM implementation imposes on decision-makers.

Holistically, the Work System divides jobs into components called domains and surmises that all domains interactively influence decision-maker behaviour. Note that we substitute Technology in the original model for Decision-Support System (DSS), which better fits the PdM context, rendering the following domains:

Decision-Support System: a computer programme that assists the human in the decision-making process using PdM algorithms.

Individual: the human at the centre of the model, who has both physical and psychological characteristics.

Tasks: the specific tasks or activities to be performed.

Organisation: the organisational conditions under which tasks are performed.

Environment: the physical environment where tasks are performed.

In the Work System, changes to one domain cascade to other domains. Moreover, domains are interdependent through interactions, meaning that if PdM implementation imposes changes, these will be influenced by the characteristics of other domains. Figure 1 indicates these interactions with double-headed arrows. To generate propositions for acceptance behaviour, we review which domain characteristics, domain interactions and beneficial changes to the Work System can mitigate the adverse effects of PdM implementation.

In our model, unlike Smith and Carayon-Sainfort (1989), we position Behaviour explicitly as a product of the Work System rather than as a component of it. The outcome of behaviour is Performance, which, in our model, represents the quality of the maintenance decisions made. As such, we emphasise that (acceptance) behaviour precedes performance and study how PdM characteristics influence behaviour rather than performance.

The acceptability of algorithms is an interdisciplinary topic, necessitating an integration of existing theory (Burton, Stein, and Jensen 2020). We review literature based on suggestions by Webster and Watson (2002). First, we determine our search terms by combining keywords with a conceptual model, search for relevant papers in the Scopus database to find peer-reviewed scientific material (Martín-Martín et al. 2018), and complement this set with more practice-oriented operations management materials from Google Scholar (Chapman and Ellinger 2019). Next, we apply backward citation chasing (researching papers’ reference lists) to find older materials that inspired these initial papers and forward citation chasing (researching papers referencing those found previously) to find newer materials that use or extend these papers (Webster and Watson 2002). Appendix A contains our search terms and process in more detail.

3. PdM decision support system

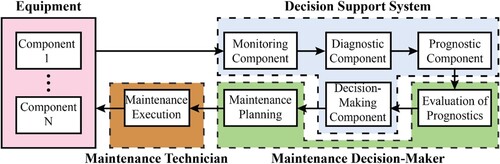

The PdM Decision Support System (DSS) facilitates informed decision-making (Arnott and Pervan 2008; Shibl, Lawley, and Debuse 2013). PdM processes consist of five successive phases (Wellsandt et al. 2022) that, traditionally, are performed by humans:

1. Signal processing: acquiring information regarding asset behaviour;

2. Diagnosis: transforming asset behaviour to diagnoses of equipment health;

3. Prognosis: predicting future equipment health based on current health;

4. Maintenance Decision-Making: generating schedules for maintenance action based on expected equipment health;

5. Acting: performing the required maintenance action on the asset.

Phase 5 is out of scope for our research, as it concerns the replacement of parts by mechanics instead of a decision-maker’s tasks. Humans are supported in Phases 1–4 by DSS units, respectively named: the monitoring, diagnostic, prognostic, and decision-making units (Asadzadeh and Azadeh 2014; Li, Chun, and Yeh Ching 2005). In this paper, we focus on Phases 3 and 4, as PdM implementation introduces the associated prognostic and decision-making units to the Work System.
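The division of Phases 1–4 over the four DSS units can be pictured as a simple pipeline. The sketch below is purely illustrative and is not taken from any of the cited systems: the function names, the health scale, the linear degradation assumption, the threshold values, and the sensor readings are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Advice:
    health: float            # 1.0 = as new, 0.0 = failed (hypothetical scale)
    rul_days: float          # estimated remaining useful life in days
    maintain_in_days: float  # suggested number of days until maintenance


def monitor(raw_vibration: list[float]) -> float:
    """Phase 1 (monitoring unit): condense raw signals into one feature."""
    return mean(raw_vibration)


def diagnose(feature: float, failure_level: float = 10.0) -> float:
    """Phase 2 (diagnostic unit): map the feature onto a health index."""
    return max(0.0, 1.0 - feature / failure_level)


def prognose(health: float, loss_per_day: float = 0.01) -> float:
    """Phase 3 (prognostic unit): extrapolate health to a RUL estimate.

    Assumes, for illustration only, that health declines linearly.
    """
    return health / loss_per_day


def decide(rul_days: float, safety_margin_days: float = 5.0) -> float:
    """Phase 4 (decision-making unit): plan maintenance before predicted failure."""
    return max(0.0, rul_days - safety_margin_days)


def advise(raw_vibration: list[float]) -> Advice:
    """Chain Phases 1-4; Phase 5 (acting) is left to the mechanics."""
    health = diagnose(monitor(raw_vibration))
    rul = prognose(health)
    return Advice(health, rul, decide(rul))
```

In this toy setting, `advise([4.0, 6.0])` yields a health index of 0.5, a remaining useful life of roughly 50 days, and advice to maintain in roughly 45 days. The decision-maker studied in this paper sits after Phases 3 and 4, evaluating the last two numbers.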

3.1. DSS: demand or resource?

Algorithms have long surpassed humans regarding the speed, quantity, and accuracy of calculations. Meta-analyses corroborate this for algorithms forecasting psychological and medical diagnoses (Dawes, Faust, and Meehl 1989; Grove et al. 2000). Reviews comparing human and automated forecasting capabilities obtain similar findings (Hogarth and Makridakis 1981; Sanders 1992). Unsurprisingly, human decisions based on DSS advice are generally of higher quality than unaided decisions (De Baets and Harvey 2018; Webby, O’Connor, and Edmundson 2005).

For decision-makers to process system-generated advice, they need to exert effort. Job elements that require physical or cognitive effort from the individual are referred to as demands, while we refer to job elements that are functional in achieving goals, reducing job demands, or stimulating personal growth as resources (Demerouti et al. 2001). As decision-makers’ (cognitive) resources are limited, well-designed DSSs reduce the demands placed on individuals and facilitate decision-making resources, increasing decision-making quality (Hertel et al. 2019). However, poorly designed systems impose additional demands such as superfluous information and complex interfaces (Meeßen, Thielsch, and Hertel 2020), decreasing decision-making quality and job performance (Demerouti et al. 2001; Webby, O’Connor, and Edmundson 2005). Eventually, when demands outweigh resources over a long period, decision-makers can refuse to use the system in an ultimate attempt to reduce demands (Cegarra and Hoc 2008; Petrou et al. 2012).

Even though PdM DSSs theoretically improve maintenance decision-making (Jardine, Lin, and Banjevic 2006), actual benefits are falling short (Grubic et al. 2011). Generally, DSS development does not consider the end-users’ needs (Meeßen, Thielsch, and Hertel 2020), to which maintenance DSSs are no exception (Labib 2004; Tretten and Karim 2014). Maintenance decision-makers are often overwhelmed by the complexity of the system (Grubic et al. 2011; Tiddens, Braaksma, and Tinga 2015) or the output (Bukhsh and Stipanovic 2020). Consequently, decision-makers often refuse to use PdM in their work setting. We therefore propose:

Proposition 1: Maintenance decision-makers who experience the PdM DSS as demand-imposing rather than resource-facilitating are less likely to use it.

3.2. Trusting the DSS

A decisive factor for PdM acceptance is whether decision-makers trust the system (Shibl, Lawley, and Debuse 2013; Ghazizadeh, Lee, and Boyle 2012). Higher levels of trust increase decision-makers’ intent to use a DSS (Meeßen, Thielsch, and Hertel 2020) and, subsequently, the acceptance of the system and its advice (John Lee and Moray 1992; Riedel et al. 2010). Three dimensions of trust exist when referring to system use (John Lee and Moray 1992; Riedel et al. 2010):

Performance-related trust: expecting consistent and quality system performance

Process-related trust: understanding the underlying characteristics of system behaviour and belief in their appropriateness

Purpose-related trust: belief in the good intentions of the system designer

3.2.1. Performance-related trust issues

When moving from traditional, scheduled maintenance to PdM, performance trust is affected. PdM systems calculate replacement intervals using monitoring, diagnostic, and prognostic DSS units, not human expertise. This is significant, as decision-makers treat system-generated and human forecasts differently. Ex-ante, decision-makers prefer system-generated advice over human advice in several forecasting settings (Logg, Minson, and Moore 2019). However, humans falsely expect that systems are perfectly accurate (Prahl and Van Swol 2017; Dzindolet et al. 2002), a criterion that is absent for advice given by humans (Alvarado-Valencia and Barrero 2014). Additionally, decision-makers believe that humans learn from errors, but DSSs cannot (Burton, Stein, and Jensen 2020; Dietvorst, Simmons, and Massey 2015; 2018). Therefore, once decision-makers ex-post observe that the DSS has made a mistake, they are reluctant to use the DSS henceforth and instead prefer to rely on human advice, a phenomenon referred to as algorithm aversion (Dietvorst, Simmons, and Massey 2015; Dzindolet et al. 2002; Prahl and Van Swol 2017). Given that the literature presented above concerns system-generated advice for forecasts, and PdM prognostics likewise forecast equipment health, we believe algorithm aversion also holds for PdM decision-making. Thus, we propose:

Proposition 2: After observing that advice quality is poor, maintenance decision-makers are more likely to continue using PdM advice generated by human experts than PdM advice generated by a DSS.

3.2.2. Process-related trust issues

Process-related trust relates to the transparency of an algorithm, i.e. the degree to which the system user understands the transformation of system inputs to outputs. DSSs with low transparency are referred to as black-boxes. Black-boxes cannot explain their decisions to decision-makers, causing low process-related trust (Rai 2020; Rudin 2019). Given the black-box nature of PdM applications (Bukhsh and Stipanovic 2020), low process trust towards them likely ensues.

Understanding the PdM algorithm and believing in its appropriateness establishes process-related trust with decision-makers (Riedel et al. 2010). Both are attainable by involving users in DSS development. Design participation increases users’ understanding of the applied models (Lawrence, Goodwin, and Fildes 2002), increasing black-box transparency. Moreover, users believe the DSS fits the workplace requirements and is relevant to their job if they were involved in its design (Bano and Zowghi 2015; Sabherwal, Jeyaraj, and Chowa 2006; J. He and King 2008). This effect is positively associated with the degree of user involvement and control in design (Lawrence, Goodwin, and Fildes 2002; Riedel et al. 2010).

The notion of perceived control influencing process trust extends into the system’s operational phase. Dietvorst, Simmons, and Massey (2018) performed a series of experiments in which decision-makers adjusted system-generated forecasts of student test scores. They found that decision-makers were more likely to use algorithms whose output they could adjust, even if the adjustment size permitted was limited (Dietvorst, Simmons, and Massey 2018). As prognostics typically apply machine learning models (Traini, Bruno, and Lombardi 2021), increasing process-related trust through such adjustments is very relevant. Therefore, we propose:

Proposition 3: DSSs that (1) have been developed with the participation of maintenance decision-makers, (2) use transparent algorithms, or (3) give decision-makers the control to adjust model outcomes are more likely to be accepted by maintenance decision-makers.
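The third element of Proposition 3, limited control over model outcomes, can be made concrete with a small sketch. It assumes, hypothetically, that the DSS output is a single numeric forecast (for example a remaining-useful-life estimate in days) and that the decision-maker may move it by at most a fixed amount. The function name and the numbers are illustrative and do not reproduce the experimental design of Dietvorst, Simmons, and Massey.

```python
def apply_bounded_adjustment(system_forecast: float,
                             human_forecast: float,
                             max_adjustment: float) -> float:
    """Return the human's forecast, clipped so that it stays within
    max_adjustment of the system-generated forecast."""
    if max_adjustment < 0:
        raise ValueError("max_adjustment must be non-negative")
    lower = system_forecast - max_adjustment
    upper = system_forecast + max_adjustment
    return min(max(human_forecast, lower), upper)


# The system predicts 40 days; the decision-maker may shift this by 5 days.
print(apply_bounded_adjustment(40.0, 30.0, 5.0))  # 35.0 (clipped to the lower bound)
print(apply_bounded_adjustment(40.0, 42.0, 5.0))  # 42.0 (within bounds, kept as is)
```

Setting `max_adjustment` to zero corresponds to full automation of the forecast, while a very large value leaves the final number entirely to the human.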

3.2.3. Purpose-related trust issues

The final dimension of trust relates not to the DSS itself but reflects trust in its designers and their intentions. DSS designers’ intentions depend on the project's initiation, i.e. the rationale for implementing the PdM DSS (Tiddens, Braaksma, and Tinga 2020). Project initiation is induced by a technology push to implement newly available PdM technologies or a decision pull that seeks a technological solution out of economic necessity (Ton et al. 2020). While initiation can be a combination of both (Tiddens, Braaksma, and Tinga 2020), increasing maintenance cost efficiency is a common motive for implementing PdM techniques (Jardine, Lin, and Banjevic 2006; Mulders and Haarman 2017; Tiddens, Braaksma, and Tinga 2015). Managers often focus on cost savings with PdM implementation, but decision-makers demand that the system create practical value for decision-making (Golightly, Kefalidou, and Sharples 2018). If decision-makers believe that managers and designers intend to cut costs instead of helping them, purpose trust suffers (Riedel et al. 2010). Therefore, we propose:

Proposition 4: When maintenance decision-makers are unconvinced by the provided rationale or the designers’ intentions explaining why a PdM DSS needs to be implemented, they are less likely to use the DSS.

3.3. Shifting preferences through information frames

The formats and frames in which decision-makers receive information also influence behaviour. The seminal paper on information frames by Tversky and Kahneman (1981) shows the effects of framing on human behaviour through the now famous Asian Disease problem: people display risk-averse behaviour through gain-frames (saving lives), whereas risk-seeking behaviour emerges through loss-frames (preventing deaths). In other words: people select sure gains over risky gains but select risky losses over sure losses. A meta-analysis of health message framing found that gain-frames are more effective in encouraging healthy prevention behaviour than loss-frames (Gallagher and Updegraff 2012). This resembles PdM decision-making, which concerns the execution of prevention behaviour (maintenance action) to boost equipment health. Here, gain-frames could be advice promoting increased safety and remaining useful life through maintenance action, whereas advice through loss-frames would emphasise the reduction in safety and remaining useful life associated with not performing maintenance action. Given that framing effects have been well-researched and persist across many decision-making settings (Kühberger, Schulte-Mecklenbeck, and Perner 1999; Williams and Noyes 2007; McNeil et al. 1982), we propose:

Proposition 5: Presenting PdM decision-making alternatives through gain-frames increases the likelihood of PM action being issued by the maintenance decision-maker compared to decision-making alternatives presented through loss-frames.

3.4. Organising automated decision-making

In an increasingly digital world, we can automate large parts of the decision-making process. Assigning tasks through direct comparisons of capabilities leaves humans with an arbitrary set of tasks (Bainbridge 1983; Hendrick 2003), as the human is assigned the tasks the developer could not formalise. As early as 1983, Bainbridge discussed how assigning tasks based on direct comparison leads to monitoring tasks for humans and increases the problems facing them. She associated automation with a loss of cognitive skills, as knowledge is not retrieved frequently in a monitoring task (Bainbridge 1983). Even though automation of decision-making processes reduces job demands initially, its passiveness can decrease job meaningfulness and, subsequently, job satisfaction (Karasek 1979).

Authors see the challenge of automating PdM decision-making (Bokrantz et al. 2017; Koomsap, Shaikh, and Prabhu 2005), but automation is not always desirable (Rudin 2019). In PdM, the signal processing phase can be automated meaningfully, but the possibilities for automating detection, prediction, and maintenance decision-making are limited as they require human interpretation of results (Wellsandt et al. 2022). Conversely, manual operation of the entire PdM process is computationally infeasible for humans. Instead of choosing between human or automated approaches, researchers suggest more value is achieved by creating settings where humans and systems cooperate to achieve a common goal (Fügener et al. 2020).

With new AI-based algorithms, PdM systems can fulfil an assistant role to decision-makers (Bousdekis et al. 2021; Wellsandt et al. 2022), but also request information from the human decision-maker before generating advice (Langer and Landers 2021). This collaboration is known as human-in-the-loop or decision augmentation in operations management (OM), or adaptable automation in ergonomics (Vagia, Transeth, and Fjerdingen 2016). In decision augmentation, the human decision-maker is involved in and in control of decision-making and determines the level of automation (Leyer and Schneider 2021; von Stietencron et al. 2021). Through augmentation, performance benefits from the combination of the algorithm’s vast computational capabilities and the human’s ability to anticipate unforeseen events beyond system parameters (de Cremer and Kasparov 2021; Fügener et al. 2020; Lepenioti et al. 2020), while decision-makers benefit through retained job meaningfulness. Therefore, we propose:

Proposition 6: Maintenance decision-making processes in which human-system interaction is organised based on the combined strength of humans and systems will outperform fully automated or completely manual solutions.

4. Tasks

PdM abandons the conservative and static maintenance intervals of more traditional maintenance policies for dynamic intervals based on equipment failure predictions. This amounts to a just-in-time approach to replacements and repairs (Koochaki et al. 2012), which has consequences for maintenance scheduling.

4.1. Human adjustments of system-generated advice

In scheduled maintenance strategies, maintenance decision-making is synonymous with maintenance scheduling. When scheduling, humans attempt to optimise the utilisation of available resources by organising maintenance activities (Pintelon, Du Preez, and Van Puyvelde 1999). Contrary to common belief in the literature, scheduling is not merely solving a mathematical problem (De Snoo, Van Wezel, and Jorna 2011); it is a dynamic task that requires flexibility when adjusting maintenance schedules (Pintelon, Du Preez, and Van Puyvelde 1999). As such, human decision-makers are essential in the scheduling process (De Snoo et al. 2011). They are more problem solvers than schedulers (Berglund and Karltun 2007), continuously rescheduling system-generated schedules based on new information (Fransoo and Wiers 2006; Van Wezel, McKay, and Wäfler 2015). Traditional maintenance advice is typically based on system age (Zhao et al. 2021), a static variable, while PdM accounts for natural equipment deterioration (He et al. 2020). As such, schedules are updated due to frequent estimates of remaining useful life or analyses of manufactured product quality (Koomsap, Shaikh, and Prabhu 2005; Zhao et al. 2021), increasing the rescheduling frequency (Fransoo and Wiers 2006).
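The contrast between age-based and prediction-based due dates, and the resulting increase in rescheduling, can be illustrated with a minimal sketch. All names, the safety margin, and the sequence of remaining-useful-life (RUL) estimates are hypothetical and chosen only to show the mechanism.

```python
from __future__ import annotations


def age_based_due_day(installed_on_day: int, fixed_interval_days: int) -> int:
    """Traditional policy: the due day depends only on component age."""
    return installed_on_day + fixed_interval_days


def prediction_based_due_day(today: int, rul_estimate_days: float,
                             safety_margin_days: float = 3.0) -> int:
    """PdM policy: the due day follows the most recent RUL estimate."""
    return today + max(0, int(rul_estimate_days - safety_margin_days))


def count_reschedules(due_days: list[int]) -> int:
    """Count how often the planned due day changes between consecutive updates."""
    return sum(1 for prev, curr in zip(due_days, due_days[1:]) if prev != curr)


# Hypothetical RUL estimates (in days) received on days 0, 10 and 20.
updates = [(0, 60.0), (10, 45.0), (20, 38.0)]

pdm_plan = [prediction_based_due_day(day, rul) for day, rul in updates]
static_plan = [age_based_due_day(0, 50) for _ in updates]

print(pdm_plan, count_reschedules(pdm_plan))        # [57, 52, 55] 2
print(static_plan, count_reschedules(static_plan))  # [50, 50, 50] 0
```

Under the static policy the due day never moves, whereas each new RUL estimate shifts the PdM due day and triggers a rescheduling decision for the human scheduler.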

Moreover, prognostics is not perfect in its predictions of equipment failure. As long as models cannot generate faultless forecasts, which is not a realistic expectation in the near future, humans will be required in the forecasting process to evaluate system output (Leitner and Leopold-Wildburger 2011; Wellsandt et al. 2022). Prior research on human-system interactions in sales forecasting indicates that humans do not strictly adhere to system-generated advice and often adjust system output (Fildes et al. 2009; Franses and Legerstee 2009; Fransoo and Wiers 2008). A literature review of empirical works on sales forecasting procedures by Perera et al. (2019) substantiates these findings. This means that, given the opportunity, humans will likely modify system-generated output in PdM settings. Hence, we propose:

Proposition 7: Humans tasked with evaluating system-generated maintenance advice will generally adjust prognostic calculations and subsequent system-generated maintenance schedules.

4.2. Prioritising scheduling over the schedule

Decision-makers may have various reasons to adjust system output, such as overcoming algorithm aversion (Dietvorst, Simmons, and Massey 2015), increasing decision-making autonomy (Bakker and Demerouti 2017), responding to incentives and preferences (Scheele, Thonemann, and Slikker 2018; Özer, Zheng, and Ren 2014), or simply demonstrating engagement with forecasting tasks to supervisors (Fildes et al. 2009; Patt and Zeckhauser 2000). However, decision-makers themselves indicate they pursue accuracy (Fildes et al. 2009). Forecasting literature prescribes that for accuracy to benefit from adjustments, they should be based on contextual information: knowledge that cannot be captured by DSS components (Perera et al. 2019; Lawrence et al. 2006).

While prognostics has a straightforward goal of generating accurate estimates, scheduling goals vary (Simões, Gomes, and Yasin 2016). Optimal schedules determine the sequence of work orders that make efficient use of available resources (Pintelon, Du Preez, and Van Puyvelde 1999). Thus, academics have developed scheduling techniques for PdM systems that minimise costs or maximise reliability based on diagnostics and prognostics (Alaswad and Xiang 2017). However, maintenance decision-makers adhere to more requirements than this optimisation puzzle (Van Wezel, McKay, and Wäfler 2015). De Snoo, Van Wezel, and Jorna (2011) introduce two categories of performance measures that schedulers apply: measures related to the scheduling product and measures related to the scheduling process. The scheduling product concerns the schedule itself and how well it conforms to internal and external constraints, with measures such as maintenance budget and the utilisation rate per machine (Simões, Gomes, and Yasin 2016). The quality of the scheduling process reflects how effectively schedules are created, communicated, and adjusted, which includes measures such as maintenance team flexibility and the number of disputes between operators and maintenance technicians (Simões, Gomes, and Yasin 2016).

When uncertainty increases, schedulers shift their focus from the scheduling product to the scheduling process (De Snoo, Van Wezel, and Jorna Citation2011). This makes sense from the scheduler's role as problem solver: uncertain environments, where dynamic information flows constantly (De Snoo, Van Wezel, and Jorna Citation2011), require more rescheduling and thus the ability to reschedule correctly (De Snoo et al. Citation2011). This is significant for PdM decision-making, where replacements are scheduled on continuously updated diagnostics and prognostics. Therefore, PdM schedules are less definitive than those based on traditional replacement intervals (Arts and Basten Citation2018), and we propose:

Proposition 8: The focus of maintenance decision-makers tasked with scheduling under PdM lies with scheduling process quality rather than scheduling product quality.

5. Individual

The individual is at the centre of the Work-System model, and (s)he is the pivot on which the domain interactions balance. Even when the Work-System domains are balanced, humans can display erratic decision-making patterns.

5.1. Biases and heuristics

Humans are boundedly rational and influenced by biases and heuristics as a consequence (Donohue, Özer, and Zheng Citation2020; Gino and Pisano Citation2008). Biases are systematic errors that affect decisions and judgments (Gino and Pisano Citation2008), representing some deviation from a rational decision-making outcome (Bendoly et al. Citation2010). Heuristics are mental shortcuts that reduce the complexity of a decision-making task to simple, judgmental operations (Loch and Wu Citation2007; Tversky and Kahneman Citation1974), representing a deviation from a rational decision-making process (Bendoly et al. Citation2010). Numerous biases and heuristics influence decision-making (see reviews by Carter, Kaufmann, and Michel Citation2007 and Gino and Pisano Citation2008). Without proper precautions, they can lead to severe systematic errors (Tversky and Kahneman Citation1974).

5.1.1. Overconfidence effects

A common bias with severe implications for operations is overconfidence (Bendoly et al. Citation2010; Hilary and Hsu Citation2011). Overconfidence is the tendency of decision-makers to believe the information they possess is more accurate than it is and to overestimate their performance (Bendoly et al. Citation2010; Gino and Pisano Citation2008). An early example of performance overestimation comes from Svenson (Citation1981), who shows that a majority of people regard themselves as above-average drivers. Literature shows the bias persists across various decision-making settings (Bendoly et al. Citation2010; Hilary and Hsu Citation2011; Lawrence et al. Citation2006).

Goodwin, Sinan Gönül, and Önkal (Citation2013) demonstrate that human forecasters tighten the confidence intervals of adjusted system-generated forecasts, showing they believe they can predict more accurately than the system. Humans also discount the advice of the algorithm compared to their own judgment, even after receiving feedback that their adjustments decrease accuracy (Dietvorst, Simmons, and Massey Citation2015; Lim and O’Connor Citation1995). Decision-makers overestimate their forecasting capabilities in point forecasts and interval forecasts (Goodwin, Moritz, and Siemsen Citation2018), both of which are applied in PdM (Jardine, Lin, and Banjevic Citation2006). Moreover, overconfidence effects increase with task complexity (Davis Citation2018), implying they will persist in PdM decision-making. Given the prevalence of overconfidence, we propose:

Proposition 9: Maintenance decision-makers generally overestimate the probability of their adjustments increasing the accuracy of system-generated predictions and the magnitude by which their adjustments to system-generated PdM predictions increase accuracy.
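One way to make the interval-tightening effect described above concrete is to compare how often realised values fall inside the stated intervals before and after human adjustment. The sketch below is only an illustration under stated assumptions: the function names and all numbers are hypothetical and are not data from this study or from the cited forecasting literature.

```python
from typing import Sequence, Tuple

Interval = Tuple[float, float]


def interval_coverage(intervals: Sequence[Interval], actuals: Sequence[float]) -> float:
    """Return the fraction of realised values that fall inside their interval."""
    if len(intervals) != len(actuals) or not actuals:
        raise ValueError("intervals and actuals must be non-empty and of equal length")
    hits = sum(1 for (low, high), actual in zip(intervals, actuals) if low <= actual <= high)
    return hits / len(actuals)


def mean_width(intervals: Sequence[Interval]) -> float:
    """Return the average width of the intervals."""
    return sum(high - low for low, high in intervals) / len(intervals)


# Hypothetical remaining-useful-life intervals in days (illustrative values only).
system_intervals = [(80, 120), (40, 70), (150, 210), (20, 45)]
# Hypothetical human-adjusted versions of the same intervals, made narrower.
adjusted_intervals = [(95, 110), (50, 60), (170, 190), (25, 35)]
# Hypothetical realised lifetimes for the same four components.
actual_lifetimes = [90, 65, 160, 30]

system_cov = interval_coverage(system_intervals, actual_lifetimes)
adjusted_cov = interval_coverage(adjusted_intervals, actual_lifetimes)

print(f"system:   width={mean_width(system_intervals):.2f}, coverage={system_cov:.2f}")
print(f"adjusted: width={mean_width(adjusted_intervals):.2f}, coverage={adjusted_cov:.2f}")
```

In this toy example, the adjusted intervals are narrower yet contain the realised lifetime less often, which is the pattern one would expect to observe if adjustments reflect overconfidence.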

6. Organisation

Organisational conditions describe to what degree teamwork, employee participation, supervision, job security, and technical training are supported or discouraged by the company (Smith and Carayon-Sainfort Citation1989). Company structure and task allocation over the hierarchical levels of the organisation are also part of this domain.

6.1. Assigning forecast ownership

Traditionally, maintenance decision-making is centralised and involves scheduling only (Crespo Marquez and Gupta Citation2006). The transition to PdM introduces prognostics to the maintenance DSS and, as depicted in Figure 2, additional human decision-making. The organisation must decide where to locate the task of evaluating prognostics. We identify three options based on Sanders and Ritzman (Citation2004): a third party, a prognostics department, or the existing maintenance department.

Figure 2. Graphical Representation of Human-System Interaction in PdM.

Outsourcing prognostics to a third party places it outside the organisation where forecast objectivity is highest (Sanders and Ritzman Citation2004). Veldman, Klingenberg, and Wortmann (Citation2011) show that companies already use external partners for specialised diagnostics, which can be extended to prognostics (Arts, Basten, and Van Houtum Citation2019; Bukhsh and Stipanovic Citation2020).

Internally, prognostics can be assigned to a separate maintenance analytics department, similar to how many companies set up departments for demand forecasting (Fildes et al. Citation2009; McCarthy et al. Citation2006). The rationale behind this division is also similar (Scheele, Thonemann, and Slikker Citation2018): a specialised unit can monitor component degradation, while a scheduling department is most knowledgeable regarding available resources for maintenance execution.

Alternatively, we can assign prognostics responsibilities to the traditional maintenance function, meaning prognostics are made and used by the same organisational unit. Communication will be easy, which is an advantage as PdM requires frequent rescheduling.

Assigning prognostics to the existing maintenance department introduces the highest risk of subjective decision-making. That is, incentives can tempt decision-makers to adjust remaining useful life estimations to their benefit (Fildes et al. Citation2009; Goodwin, Moritz, and Siemsen Citation2018; Pennings, Van Dalen, and Rook Citation2019), or decision-makers might focus on the scheduling process rather than the output. Given that the same department will be responsible for generating and using the prognostics, there is little supervision regarding its performance. The added benefits of PdM are subsequently low, as optimisation of equipment lifetime is not the primary concern.

Separating prognostics responsibilities from the existing maintenance tasks through a prognostics department or third party offers advantages in terms of effectiveness. PdM models are generally black-boxes to traditional maintenance departments (Bukhsh and Stipanovic Citation2020; Ton et al. Citation2020), to which a specialised internal business unit with a better understanding of the models is an effective solution. Even though advocacy- and incentive-based biases occur in these configurations (Pennings, Van Dalen, and Rook Citation2019), proper contracts can mitigate their effects (Scheele, Thonemann, and Slikker Citation2018; Oliva and Watson Citation2009; Topan et al. Citation2020). Moreover, the responsibility for unexpected breakdowns now lies with either department, as schedulers can be penalised for disregarding accurate information, and forecasters can be penalised for providing inaccurate prognostics. Therefore, we propose:

Proposition 10: Evaluation of prognostics will occur most effectively when those attending to this task are organisationally separated from those who attend to maintenance scheduling tasks.

7. Environment

The environmental factors of the Work System refer to the physical context in which the job is performed. Lighting, temperature, noise, and office layout are all factors that can influence decision-making behaviour. Governmental regulations and ergonomic principles exist to guide the design of the physical workplace and its environmental factors.

When making the maintenance decisions studied in this paper, decision-makers are unlikely to face the environment where the maintenance action is executed. Hence, factors such as work safety and proper workplace conditions are less relevant for maintenance decision-making. As such, the environment of maintenance decision-makers is comparable to that of decision-makers elsewhere. The physical context and the distance to the equipment under maintenance will matter, but we believe this to be practically synonymous with organisational separation as discussed in Section 6.

8. Interview procedure

To corroborate our theory-based propositions, we interview six maintenance and behavioural experts from academia and industry. The aim of the interviews is to verify the relevance of the propositions for current and future research and industry. We invited a purposive set of experts from the authors’ networks to obtain a sample with diverse backgrounds and considerable work experience (5+ years); we offer some information about them in Table 1.

Table 1. Description of Interview Participants

Sessions were conducted with respondents individually. Respondents received the propositions sequentially and never saw the propositions before the session. After respondents read a proposition, we asked three questions: A) whether the expert believes the proposition is true, B) whether it is currently relevant for maintenance operations, and C) whether it has future relevance when implementing PdM technologies (see Appendix B for exact questions). Through these questions, respondents can claim a proposition is true but practically irrelevant (for PdM implementation), or vice versa.

After six interviews, we reached thematic saturation and no additional experts were invited. Interviews lasted from 1 to 1.5 hours. Interviews were recorded, manually transcribed, and processed using NVivo 12, a software package for analysing qualitative data. Next, we coded answers with two codes per question: true or false for Question A, relevant now or not relevant now for Question B, and relevant PdM or not relevant PdM for Question C. Finally, we tallied the support expressed for the propositions by each expert, investigated their provided reasons for supporting or opposing the propositions, and reported outspoken similarities and surprising differences among them.
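The coding-and-tallying step can be sketched in a few lines of code. This is a minimal illustration, not the NVivo workflow used in the study: the respondent labels (E1 to E3), the proposition label PX, and every coded answer below are hypothetical placeholders.

```python
from collections import Counter

# Hypothetical coded answers: (respondent, proposition, question, code).
# None of these rows come from the actual interviews.
coded_answers = [
    ("E1", "PX", "A", "true"),
    ("E2", "PX", "A", "false"),
    ("E3", "PX", "A", "true"),
    ("E1", "PX", "B", "relevant now"),
    ("E2", "PX", "B", "not relevant now"),
    ("E3", "PX", "B", "relevant now"),
    ("E1", "PX", "C", "relevant PdM"),
    ("E2", "PX", "C", "relevant PdM"),
    ("E3", "PX", "C", "relevant PdM"),
]

# The code that counts as support for each of the three questions.
SUPPORTIVE_CODES = {"A": "true", "B": "relevant now", "C": "relevant PdM"}


def tally_support(answers):
    """Count supportive answers per (proposition, question) pair."""
    support = Counter()
    for _respondent, proposition, question, code in answers:
        if code == SUPPORTIVE_CODES[question]:
            support[(proposition, question)] += 1
    return support


support = tally_support(coded_answers)
summary = ", ".join(f"{q}:{support[('PX', q)]}" for q in "ABC")
print(f"PX ({summary})")  # prints "PX (A:2, B:2, C:3)"
```

The printed summary follows the same "(A:…, B:…, C:…)" notation used to report support per proposition in the results.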

9. Interview results

We structure the presentation of our results as follows. We provide a summary of the proposition, followed by the respondents’ support per question in parentheses (A: True, B: Relevant now, C: Relevant PdM). Next, we explain the majority opinion and add quotes from respondents, either to illustrate the majority opinion or express a strong minority opinion. We provide an overview of support for propositions in Table 2. Notable differences between academics’ and practitioners’ expressed support are mentioned in-text and available in Appendix C. Propositions that receive high support and are seen as relevant by experts are analysed in Section 10 (Implications) while propositions that receive low support and are seen as irrelevant by experts are analysed in Section 11 (Discussion).

Table 2. Number of Respondents that agree with the Questions per Proposition

Proposition 1 – Demanding DSS used little (A:5, B:5, C:6)

Respondents recognise the importance of P1 in PdM implementation. Since maintenance staff typically have too much work already, respondents believe that ‘systems that impose additional demands are doomed to fail’ (M3). M1 notes that decision-makers will avoid the system and instead look for workarounds. To improve PdM acceptance, DSSs should be designed as ‘an extension of decision-makers, not a burden in their daily work’ (M3).

Proposition 2 – DSSs are ignored faster than humans after erring (A:6, B:6, C:6)

‘This is true, I have seen this endlessly’ says R6 immediately after reading. ‘Even if human advice has caused many problems in the past, that advice is worth more than what comes out of a system’ (M2). According to M1, there is a ‘self-fulfilling prophecy’: humans demand perfection from a DSS they distrust and, given that prognoses are never perfect, see their distrust confirmed constantly. A2 notes that P2 will be true as long as systems are imperfect. So, respondents see P2 as pivotal in PdM implementation and stress the need for a human interpreter of prognostics. ‘Even companies with smart, self-learning maintenance prognostics algorithms let humans do the analysis and communication. All because of trust’ (A1).

Proposition 3 – DSS use increases through (1) participative design (A:5, B:4, C:3), (2) transparent algorithms (A:4, B:2, C:3), or (3) allowing adjustments to outcomes (A:4, B:4, C:4)

Respondents mostly believe all elements to be true, but do not believe P3.1 and P3.2 are practically attainable. M2 and A3 believe that you cannot assemble a collective of decision-makers to co-design the system such that all stakeholders in the company are satisfied with that collective. A1 knows P3.1 is undesirable for companies, as companies claim that ‘we are not a playground, we want things that function and we can work with’. Respondents fear that P3.2 is contradictory (M1, A1, A2, A3), as PdM algorithms are too complex to be understandable for decision-makers. Most respondents believe P3.3 will become relevant when prognostics are involved, but A2 warns that ‘adjusting forecasts helps usage, but not necessarily acceptance’.

Proposition 4 – DSSs are used less when decision-makers mistrust those implementing the system (A:5, B:6, C:6)

Companies prefer talking about how DSSs will save them money rather than expressing how automation will address decision-makers’ needs, which is how decision-makers’ mistrust arises (M1, M2 & A3). ‘The IT department within a steel production company had developed an alarm clustering tool. IT and management were pleased, but decision-makers remained unconvinced. In the end, the tool had to be cancelled’ (A1). P4 will become more prominent in the future, as manufacturers produce equipment with built-in prognostics that decision-makers believe ‘are only put in there to make more money’ (A1).

Proposition 5 – Issue PM under gain frame, wait under loss frame (A:0, B:3, C:5)

Respondents were oblivious to this framing effect and, therefore, unwilling to say P5 is true. A1 has seen a company abandon loss frames for gain frames in its DSS, but observed no different behaviour. Generally, maintenance decision-makers want to keep the asset operational and favour sure options over risky ones. ‘Perhaps, when you face scarcity or time pressure, framing might nudge decision-makers to make better decisions’ (M2). A2 believes that, if true, loss frames can help PdM attain its promise to prevent conservative maintenance action. Therefore, future research into frames is highly anticipated by most. A3 would rather first see research into what specific information is best to give decision-makers before thinking about potential framing effects.

Proposition 6 – Human-System interaction outperforms humans and systems separately (A:5, B:5, C:6)

Respondents are clear: full automation is undesirable. ‘People offer you access to additional information that is useful for analysis’ (A1), information that the system cannot possess. Respondents believe humans should be the ones to make the final decision. However, A3 warns that P6 is only true if the interaction is adequate and of high quality: ‘Currently, many systems with interaction exist that are worse than automated and manual solutions’.

Proposition 7 – Decision-makers adjust system output (A:5, B:5, C:6)

The practitioners immediately replied: true, people think they know best. During the interview, they nuanced their statements and explained that decision-makers adjust to accommodate pre-existing schedules, priorities, and intuitions. A2 believes that P7 is untrue as it only holds if the DSS is relatively new and its quality is unknown. However, the other respondents believe that ‘nobody follows a system that, even slightly, goes against their beliefs’ (M2). Respondents agree that P7 will become very relevant in the future, as adjustments can tell us a lot about the human-system interaction and where flaws in the system still exist.

Proposition 8 – Schedulers prioritise scheduling process over scheduling product (A:6, B:6, C:5)

M1 and M2 believe decision-makers are more preoccupied with gathering and communicating information than they are with making an optimal schedule. According to M3 and A1, making a schedule is not complicated. However, the process of acquiring the required information, preparing different schedules for different scenarios, and communicating the correct schedule to all stakeholders requires significant effort. A3 estimates 75% of schedulers’ time is devoted to this role of ‘information hub’, and would not be surprised to see this number increase under PdM when more asset health information is introduced.

Proposition 9 – Decision-makers overestimate the quality of their interventions (A:4, B:3, C:6)

M1 and M3 agree with P9 and have seen this many times in practice. They see a considerable threat for PdM efficacy, as ‘decision-makers need to be able to make necessary adjustments, but you have to convey that not all their adjustments are necessary or useful’ (M3). Contrarily, A1 emphasises that decision-makers are ‘very humble’ and reserved regarding their capabilities, so as not to breach trust put in them. A3 notes that P9 depends on the type of adjustment and the scope of the system. ‘It is difficult to overestimate the quality of adjustments for parameters outside of system scope. If the system is really good, then P9 becomes true’.

Proposition 10 – Scheduling and prognostics should have separate departments (A:4, B:2, C:5)

Managers affirmed the problem of conflicting interests, and they provide additional rationale for P10 by claiming scheduling and prognosis are two different organisational processes that require different skills and levels of technical know-how. Respondents suggest organising separation laterally rather than hierarchically so that prognostic and scheduling business units both have responsibility for the maintenance process. A1 and A3 are not convinced of this approach, but add that they lack the experience to explicitly claim P10 is wrong.

10. Implications and recommendations

The PdM Work System Model not only looks at how each domain influences decision-maker behaviour but also at how each domain moderates the influence that other domains have on behaviour. Now, we review the domain interactions that, according to the experts, impact PdM acceptance behaviour most. We summarise our findings into four general PdM support factors:

Setting an appropriate degree of human control in PdM decision-making (P3.3, P6, P7, P8 & P9).

Creating trust between the decision-maker and the model (maker) (P2 & P4).

Providing sufficient cognitive resources to decision-makers to deal with the high cognitive demands posed by the system while using it (P1).

Allocating decision-making responsibilities and capabilities to the appropriate organisational unit (P2, P6 & P10).

The support factor human control suggests PdM adoption benefits from explicitly taking into consideration decision-makers’ desire for control and propensity to adjust system-generated outcomes. For example, prognostic models can provide intervals instead of point forecasts, and scheduling algorithms can delegate priority-setting for maintenance tasks to human decision-makers. Such control can be achieved through decision augmentation, where human-system interaction leverages decision-makers’ contextual knowledge to attain high-quality outcomes. Acceptance of advice of employees downstream of the decision-maker (e.g. mechanics, machine operators) also benefits from human control, as employees prefer human control over the decisions that affect them (Langer and Landers Citation2021). Like Leyer and Schneider (Citation2021), our respondents warn that human control alone is not necessarily beneficial and that it should be configured appropriately. As such, we encourage maintenance researchers to explore and compare augmented decision-making designs that allow beneficial and meaningful involvement from decision-makers.

Regarding the support factor trust, decision-makers are more likely to adopt PdM technology when they understand its benefits and agree with management’s motives leading up to PdM implementation. Acceptance suffers when (decision-makers believe) managers or system-designers are driven by costs instead of decision-makers’ interests or asset health. Our respondents stress that many decision-makers assume that automation is installed to replace human resources. Therefore, managers and designers should clarify their intentions and assure themselves of decision-makers’ trust before initiating implementation. When trust is established and decision-makers welcome PdM, they are more willing to accept initial implementation issues. As such, trust can facilitate the change process.

Our results also suggest that practitioners should ensure that the available job resources meet the demands that are placed on decision-makers when implementing PdM systems. As Golightly, Kefalidou, and Sharples (Citation2018) also suggest, additional demands associated with PdM implementation can overburden available resources. Consequently, decision-makers might avoid using the system to reduce the extra demands imposed on them. When practitioners ensure that the PdM Work System’s demands on decision-makers do not outweigh their resources, decision-makers are less likely to seek the workarounds mentioned by the experts, facilitating more optimal performance. Future research identifying resources and demands in the PdM Work System increases awareness of their importance among academics and practitioners and helps them facilitate balanced work environments for decision-makers.

Finally, we advocate assigning prognostics and scheduling to separate organisational decision-making units. With this suggestion, we challenge the belief in literature that centralising PdM decisions will necessarily improve decision-making (Topan et al. Citation2020; Mezafack, Di Mascolo, and Simeu-Abazi Citation2021). Under centralisation, contextual information and the power of human-system collaboration are lost, while decision-making is at risk of corruption and advocacy bias. Moreover, our experts indicate prognostics and scheduling require vastly different skills. Through separation, existing specialists are not overburdened with new tasks while decision-making quality improves through specialisation. The experts indicate that these teams should operate in close proximity to each other, so some degree of centralisation is required. Like Ingemarsdotter et al. (Citation2021), we encourage researchers to examine what decision-making and centralisation structures promote PdM adoption.

11. Discussion and research agenda

In this paper, we make several contributions to academia and industry. First, we integrate human factors and decision-making literature with production and PdM literature using the PdM Work System model. We formulate ten theory-based propositions that express how PdM implementation influences various human factors and PdM acceptance. As such, practitioners can consult the current work to better understand the impact on maintenance decision-makers of moving from a traditional maintenance strategy to an advanced PdM-driven policy. After performing expert interviews on the relevance of our propositions, we synthesise the propositions into more general PdM Support Factors. Our support factors are design considerations that satisfy human needs and increase acceptance to attain PdM benefits, echoing the Industry 5.0 vision that technologies should benefit both the organisation and its employees. Together, the propositions and support factors have the potential to aid academics and practitioners in their efforts to design a resilient, human-centric PdM system.

During the interview analyses, we noted little recognition for P3.1, P3.2, and P5. P3.1 suggests developing algorithms with decision-makers but, according to the experts, underestimates practitioners’ preference for demonstrable products over a lengthy development procedure. The same holds for P3.2, developing transparent algorithms, while experts also believe explainable PdM is an arduous challenge. Previous research by Bokrantz et al. (Citation2020) and Ingemarsdotter et al. (Citation2021) promotes the development of transparent algorithms, but we caution future authors that companies might not be willing to invest the effort required for explaining algorithms to decision-makers. Proposition 5, regarding framing in PdM, was never regarded as true due to the unfamiliarity of respondents with the topic. However, respondents foresee value in applying frames in PdM DSSs, and they advocated for research on this topic.

Due to our thorough review of literature and subsequent interviews, we are confident the remaining propositions and their implications will hold. However, the propositions remain at a high abstraction level and, due to the theory-based research set-up, we have potentially overlooked more practice-specific propositions. Also, given the human-centric predisposition of this paper, our propositions primarily evaluate interactions with the individual. PdM Work System interactions not directly involving the individual, such as organisational attitude towards technological change (DSS-Organisation) and the organisational knowledge of maintainable equipment (Organisation-Environment), might also have acceptance-critical impact. We encourage researchers to explore new interactions and formulate new propositions, or further specify and validate existing propositions.

Both case-study and experimental research are viable follow-up research. Case studies acquire contextual data through focusing on a real-life phenomenon (Barratt, Choi, and Li Citation2011), and have been performed successfully before to investigate Work System domain interactions (Tiddens, Braaksma, and Tinga Citation2015; Veldman, Klingenberg, and Wortmann Citation2011). Propositions 3.1, 3.2, 4, 6, 8, 9, and 10 are most suitable for case study review. These propositions describe intricate relationships between decision-makers and PdM Work System domains that can only be adequately studied on the work floor.

Experiments quantify the impact of change in a variable on other variables, which is useful for formalising domain interactions. If the topic of research is reducible to an independent, manipulatable variable, experiments are suitable. This is eminently the case for propositions 1, 2, 3.3, 5 and 7.

As an example, we suggest that experimental research in PdM decision-making behaviour should apply the paper by Bolton and Katok (Citation2018) as a blueprint. Their cost-loss game is remarkably similar to PdM decision-making, as participants decide to either pay a cost to avoid a larger loss (perform PM) or to not pay this cost at the risk of paying the loss given a certain probability of losing (postpone PM). The authors study how advice formats affect human decision-making in this game. Follow-up research by Tan and Basten (Citation2021) shows the potential of this experimental setup to capture new behavioural tendencies. Propositions 2 and 5 are good candidates to be examined through a similar approach, as they represent a relationship between advice characteristics and human follow-up thereof. Research exploring the manipulation of different variables in cost-loss games, such as the advice format or source, is therefore promoted.
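The structure of such a cost-loss decision can be illustrated with a short sketch. It assumes a risk-neutral decision-maker who simply compares the certain cost of performing PM with the expected loss of postponing it. This is a standard benchmark and an assumption made here for illustration; it is not a description of the design or findings of Bolton and Katok (Citation2018). The function names and monetary values are hypothetical.

```python
def expected_cost(perform_pm: bool, cost: float, loss: float, p_failure: float) -> float:
    """Expected cost of an action: a sure cost for PM, a probabilistic loss otherwise."""
    return cost if perform_pm else p_failure * loss


def risk_neutral_choice(cost: float, loss: float, p_failure: float) -> str:
    """Pick the action with the lower expected cost (assumed risk-neutral benchmark)."""
    if not 0.0 <= p_failure <= 1.0:
        raise ValueError("p_failure must be a probability between 0 and 1")
    pm_cost = expected_cost(True, cost, loss, p_failure)
    wait_cost = expected_cost(False, cost, loss, p_failure)
    return "perform PM" if pm_cost < wait_cost else "postpone PM"


# Hypothetical values: PM costs 1000, a breakdown costs 5000,
# so the break-even failure probability is cost / loss = 0.2.
PM_COST = 1000.0
BREAKDOWN_LOSS = 5000.0

for p in (0.1, 0.3):
    print(f"p={p}: {risk_neutral_choice(PM_COST, BREAKDOWN_LOSS, p)}")
```

Under this benchmark the decision flips at a failure probability of cost divided by loss, so observed deviations from that threshold are one way an experiment could quantify the effect of advice format or source.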

This paper provides a first glimpse of how the Industry 5.0 vision can be assimilated in PdM. We hope that our results serve as a stepping stone for practitioners toward broader acceptance of PdM in industry. Additionally, we hope that this paper is used by behavioural and maintenance researchers as a resource, both through its literature review and results, for starting their own work on human factors in PdM. We believe that future research will develop interesting theories of human behaviour in PdM contexts, contributing to an understanding of the requirements that create sustainable, human-centric PdM systems.

Acknowledgements

We are grateful to the six experts for their participation and invaluable contributions to our study. This research was approved by the Ethical Review Board of the Eindhoven University of Technology, reference number ERB2021IEIS31a.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Due to the nature of this research, participants of this study did not agree for their data to be shared publicly, so supporting data is not available.

Additional information

Funding

Notes on contributors

Bas van Oudenhoven

Bas van Oudenhoven is currently pursuing a PhD degree at the Eindhoven University of Technology, where he is a researcher in the Human Performance Management group, School of Industrial Engineering. He is active in the behavioural operations management field, with a research focus on the effective use of decision-support tools in the area of Predictive Maintenance. He is a member of the consortium PrimaVera (Predictive maintenance for Very effective asset management), for which he collaborates with various high-tech public and private companies.

Philippe Van de Calseyde

Philippe Van de Calseyde is an Assistant Professor of organisational behaviour at the Eindhoven University of Technology (TU/e). His background is mainly in the areas of judgment and decision-making. Philippe’s research focuses on understanding how situational- and cognitive factors influence people’s judgments and decisions. Specific interests include how people respond to the decision-speed of others, interpersonal trust, human cooperation, negotiations, and the role of emotions in decision-making. In testing these relationships, he mostly conducts experiments and field studies.

Rob Basten

Rob Basten is an Associate Professor at the Eindhoven University of Technology (TU/e), where he is primarily occupied with after sales services for high-tech equipment. He is especially interested in using new technologies to improve services. Examples include 3D printing of spare parts on location and using condition monitoring information to perform just-in-time maintenance. He is further active in behavioural operations management, trying to understand how people can use decision support systems in such a way that they actually improve decisions and add value. Many of his research projects are interdisciplinary and performed in cooperation with high-tech industry.

Evangelia Demerouti

Evangelia Demerouti is a Full Professor at Eindhoven University of Technology (TU/e) and Distinguished Visiting Professor at the University of Johannesburg. Her research focuses on the processes enabling performance, including the effects of work characteristics, individual job strategies (including job crafting and decision-making), occupational wellbeing, and work-life balance. She studied psychology at the University of Crete (Greece) and obtained her PhD in Work and Organisational Psychology (cum laude, 1999) from the Carl von Ossietzky Universität Oldenburg (Germany). She has published over 200 national and international papers and book chapters and is associate editor of the Journal of Occupational Health Psychology.

References

- Aboelmaged, Mohamed Gamal. 2014. “Predicting E-Readiness at Firm-Level: An Analysis of Technological, Organizational and Environmental (TOE) Effects on e-Maintenance Readiness in Manufacturing Firms.” International Journal of Information Management 34 (5): 639–651. doi:10.1016/j.ijinfomgt.2014.05.002.

- Alaswad, Suzan, and Yisha Xiang. 2017. “A Review on Condition-Based Maintenance Optimization Models for Stochastically Deteriorating System.” Reliability Engineering and System Safety 157: 54–63. doi:10.1016/j.ress.2016.08.009.

- Alvarado-Valencia, Jorge A., and Lope H. Barrero. 2014. “Reliance, Trust and Heuristics in Judgmental Forecasting.” Computers in Human Behavior 36 (July): 102–113. doi:10.1016/j.chb.2014.03.047.

- Arnott, David, and Graham Pervan. 2008. “Eight Key Issues for the Decision Support Systems Discipline.” Decision Support Systems 44 (3): 657–672. doi:10.1016/j.dss.2007.09.003.

- Arts, Joachim, and Rob Basten. 2018. “Design of Multi-Component Periodic Maintenance Programs with Single-Component Models.” IISE Transactions 50 (7): 606–615. doi:10.1080/24725854.2018.1437301.

- Arts, Joachim, Rob Basten, and Geert-Jan van Houtum. 2019. “Maintenance Service Logistics.” In Operations, Logistics and Supply Chain Management, edited by Henk Zijm, Matthias Klumpp, Alberto Regattieri, and Sunderesh Heragu, 493–517. Cham: Springer. doi:10.1007/978-3-319-92447-2_22.

- Asadzadeh, S. M., and A. Azadeh. 2014. “An Integrated Systemic Model for Optimization of Condition-Based Maintenance with Human Error.” Reliability Engineering and System Safety 124: 117–131. doi:10.1016/j.ress.2013.11.008.

- Bainbridge, Lisanne. 1983. “Ironies of Automation.” Automatica 19 (6): 775–779. doi:10.1016/0005-1098(83)90046-8.

- Bakker, Arnold B., and Evangelia Demerouti. 2017. “Job Demands-Resources Theory: Taking Stock and Looking Forward.” Journal of Occupational Health Psychology 22 (3): 273–285. doi:10.1037/ocp0000056.

- Bakker, Arnold B., and Evangelia Demerouti. 2018. “Multiple Levels in Job Demands – Resources Theory: Implications for Employee Well-Being and Performance.” In Handbook of Well-Being, edited by Ed Diener, Shigehiro Oishi, and Louis Tay, 1–13. Salt Lake City, UT: DEF Publishers.

- Bano, Muneera, and Didar Zowghi. 2015. “A Systematic Review on the Relationship between User Involvement and System Success.” Information and Software Technology 58: 148–169. doi:10.1016/j.infsof.2014.06.011.

- Barratt, Mark, Thomas Y. Choi, and Mei Li. 2011. “Qualitative Case Studies in Operations Management: Trends, Research Outcomes, and Future Research Implications.” Journal of Operations Management 29 (4): 329–342. doi:10.1016/j.jom.2010.06.002.

- Bendoly, E., R. Croson, P. Goncalves, and K. Schultz. 2010. “Bodies of Knowledge for Research in Behavioral Operations.” Production and Operations Management 19 (4): 434–452. doi:10.1111/j.1937-5956.2009.01108.x.

- Berglund, M., and J. Karltun. 2007. “Human, Technological and Organizational Aspects Influencing the Production Scheduling Process.” International Journal of Production Economics 110 (1–2): 160–174. doi:10.1016/j.ijpe.2007.02.024.

- Bokrantz, Jon, Anders Skoogh, Cecilia Berlin, and Johan Stahre. 2017. “Maintenance in Digitalised Manufacturing: Delphi-Based Scenarios for 2030.” International Journal of Production Economics 191 (June): 154–169. doi:10.1016/j.ijpe.2017.06.010.

- Bokrantz, Jon, Anders Skoogh, Cecilia Berlin, Thorsten Wuest, and Johan Stahre. 2020. “Smart Maintenance: A Research Agenda for Industrial Maintenance Management.” International Journal of Production Economics 224 (October 2019): 107547. doi:10.1016/j.ijpe.2019.107547.

- Bolton, Gary E., and Elena Katok. 2018. “Cry Wolf or Equivocate? Credible Forecast Guidance in a Cost-Loss Game.” Management Science 64 (3): 1440–1457. doi:10.1287/mnsc.2016.2645.

- Bousdekis, Alexandros, Katerina Lepenioti, Dimitris Apostolou, and Gregoris Mentzas. 2021. “A Review of Data-Driven Decision-Making Methods for Industry 4.0 Maintenance Applications.” Electronics (Switzerland) 10 (7). doi:10.3390/electronics10070828.

- Bousdekis, Alexandros, Stefan Wellsandt, Enrica Bosani, Katerina Lepenioti, Dimitris Apostolou, Karl Hribernik, and Gregoris Mentzas. 2021. “Human-AI Collaboration in Quality Control with Augmented Manufacturing Analytics.” In Advances in Production Management Systems. Artificial Intelligence for Sustainable and Resilient Production Systems, edited by Alexandre Dolgui, Alain Bernard, David Lemoine, Gregor von Cieminski, and David Romero, 633, 303–310. Cham: Springer. doi:10.1007/978-3-030-85910-7_32.

- Bukhsh, Zaharah Allah, and Irina Stipanovic. 2020. “Predictive Maintenance for Infrastructure Asset Management.” IT Professional 22 (5): 40–45. doi:10.1109/MITP.2020.2975736.

- Burton, Jason W., Mari Klara Stein, and Tina Blegind Jensen. 2020. “A Systematic Review of Algorithm Aversion in Augmented Decision Making.” Journal of Behavioral Decision Making 33 (2): 220–239. doi:10.1002/bdm.2155.

- Carayon, Pascale. 2006. “Human Factors of Complex Sociotechnical Systems.” Applied Ergonomics 37 (4 SPEC. ISS.): 525–535. doi:10.1016/j.apergo.2006.04.011.

- Carayon, Pascale. 2009. “The Balance Theory and the Work System Model. Twenty Years Later.” International Journal of Human-Computer Interaction 25 (5): 313–327. doi:10.1080/10447310902864928.

- Carter, Craig R., Lutz Kaufmann, and Alex Michel. 2007. “Behavioral Supply Management: A Taxonomy of Judgment and Decision-Making Biases.” International Journal of Physical Distribution and Logistics Management 37 (8): 631–669. doi:10.1108/09600030710825694.

- Cegarra, Julien, and Jean-Michel Hoc. 2008. “The Role of Algorithm and Result Comprehensibility of Automated Scheduling on Complacency.” Human Factors and Ergonomics in Manufacturing 18 (6): 603–620. doi:10.1002/hfm.20129.

- Chapman, Karen, and Alexander E. Ellinger. 2019. “An Evaluation of Web of Science, Scopus and Google Scholar Citations in Operations Management.” The International Journal of Logistics Management 30 (4): 1039–1053. doi:10.1108/IJLM-04-2019-0110.

- Crespo Marquez, Adolfo, and Jatinder N.D. Gupta. 2006. “Contemporary Maintenance Management: Process, Framework and Supporting Pillars.” Omega 34 (3): 313–326. doi:10.1016/j.omega.2004.11.003.

- Dalzochio, Jovani, Rafael Kunst, Edison Pignaton, Alecio Binotto, Srijnan Sanyal, Jose Favilla, and Jorge Barbosa. 2020. “Machine Learning and Reasoning for Predictive Maintenance in Industry 4.0: Current Status and Challenges.” Computers in Industry. doi:10.1016/j.compind.2020.103298.

- Davis, Andrew M. 2018. “Biases in Individual Decision-Making.” In The Handbook of Behavioral Operations, edited by Karen Donohue, Elena Katok, and Stephen Leider, 149–198. Hoboken, NJ: John Wiley & Sons, Inc. doi:10.1002/9781119138341.ch5.

- Dawes, Robyn M., David Faust, and Paul E. Meehl. 1989. “Clinical versus Actuarial Judgment.” Science 243 (4899): 1668–1674. doi:10.1126/science.2648573.

- De Baets, Shari, and Nigel Harvey. 2018. “Forecasting from Time Series Subject to Sporadic Perturbations: Effectiveness of Different Types of Forecasting Support.” International Journal of Forecasting 34 (2): 163–180. doi:10.1016/j.ijforecast.2017.09.007.

- De Cremer, David, and Garry Kasparov. 2021. “AI Should Augment Human Intelligence, Not Replace It.” Harvard Business Review. https://hbr.org/2021/03/ai-should-augment-human-intelligence-not-replace-it.

- De Jonge, Bram, and Philip A. Scarf. 2020. “A Review on Maintenance Optimization.” European Journal of Operational Research 285 (3): 805–824. doi:10.1016/j.ejor.2019.09.047.

- Demerouti, Evangelia, Arnold B. Bakker, Friedhelm Nachreiner, and Wilmar B. Schaufeli. 2001. “The Job Demands-Resources Model of Burnout.” Journal of Applied Psychology 86 (3): 499–512. doi:10.1037/0021-9010.86.3.499.

- De Snoo, Cees, Wout van Wezel, and René J. Jorna. 2011. “An Empirical Investigation of Scheduling Performance Criteria.” Journal of Operations Management 29 (3): 181–193. doi:10.1016/j.jom.2010.12.006.

- De Snoo, Cees, Wout van Wezel, J. C. Wortmann, and G. J. C. Gaalman. 2011. “Coordination Activities of Human Planners during Rescheduling: Case Analysis and Event Handling Procedure.” International Journal of Production Research 49 (7): 2101–2122. doi:10.1080/00207541003639626.

- Dietvorst, Berkeley J., Joseph P. Simmons, and Cade Massey. 2015. “Algorithm Aversion: People Erroneously Avoid Algorithms after Seeing Them Err.” Journal of Experimental Psychology: General 144 (1): 114–126. doi:10.1037/xge0000033.

- Dietvorst, Berkeley J., Joseph P. Simmons, and Cade Massey. 2018. “Overcoming Algorithm Aversion: People Will Use Imperfect Algorithms If They Can (Even Slightly) Modify Them.” Management Science 64 (3): 1155–1170. doi:10.1287/mnsc.2016.2643.

- Donohue, Karen, Özalp Özer, and Yanchong Zheng. 2020. “Behavioral Operations: Past, Present, and Future.” Manufacturing & Service Operations Management 22 (1): 191–202. doi:10.1287/msom.2019.0828.

- Dul, Jan, Ralph Bruder, Peter Buckle, Pascale Carayon, Pierre Falzon, William S. Marras, John R. Wilson, and Bas van der Doelen. 2012. “A Strategy for Human Factors/Ergonomics: Developing the Discipline and Profession.” Ergonomics 55 (4): 377–395. doi:10.1080/00140139.2012.661087.

- Dzindolet, Mary T., Linda G. Pierce, Hall P. Beck, and Lloyd A. Dawe. 2002. “The Perceived Utility of Human and Automated Aids in a Visual Detection Task.” Human Factors: The Journal of the Human Factors and Ergonomics Society 44 (1): 79–94. doi:10.1518/0018720024494856.

- Elsevier B.V. 2017. “Scopus Quick Reference Guide.”

- Fildes, Robert, Paul Goodwin, Michael Lawrence, and Konstantinos Nikolopoulos. 2009. “Effective Forecasting and Judgmental Adjustments: An Empirical Evaluation and Strategies for Improvement in Supply-Chain Planning.” International Journal of Forecasting 25 (1): 3–23. doi:10.1016/j.ijforecast.2008.11.010.

- Franses, Philip Hans, and Rianne Legerstee. 2009. “Properties of Expert Adjustments on Model-Based SKU-Level Forecasts.” International Journal of Forecasting 25 (1): 35–47. doi:10.1016/j.ijforecast.2008.11.009.

- Fransoo, Jan C., and Vincent C.S. Wiers. 2006. “Action Variety of Planners: Cognitive Load and Requisite Variety.” Journal of Operations Management 24 (6): 813–821. doi:10.1016/j.jom.2005.09.008.

- Fransoo, Jan C., and Vincent C.S. Wiers. 2008. “An Empirical Investigation of the Neglect of MRP Information by Production Planners.” Production Planning and Control 19 (8): 781–787. doi:10.1080/09537280802568163.

- Fügener, Andreas, Jörn Grahl, Wolfgang Ketter, and Alok Gupta. 2020. “Cognitive Challenges in Human-AI Collaboration: Investigating the Path towards Productive Delegation.” Working Paper.

- Gallagher, Kristel M., and John A. Updegraff. 2012. “Health Message Framing Effects on Attitudes, Intentions, and Behavior: A Meta-Analytic Review.” Annals of Behavioral Medicine 43 (1): 101–116. doi:10.1007/s12160-011-9308-7.

- Ghazizadeh, Mahtab, John D. Lee, and Linda Ng Boyle. 2012. “Extending the Technology Acceptance Model to Assess Automation.” Cognition, Technology and Work 14 (1): 39–49. doi:10.1007/s10111-011-0194-3.

- Gino, Francesca, and Gary Pisano. 2008. “Toward a Theory of Behavioral Operations.” Manufacturing and Service Operations Management 10 (4): 676–691. doi:10.1287/msom.1070.0205.

- Golightly, David, Genovefa Kefalidou, and Sarah Sharples. 2018. “A Cross-Sector Analysis of Human and Organisational Factors in the Deployment of Data-Driven Predictive Maintenance.” Information Systems and E-Business Management 16 (3): 627–648. doi:10.1007/s10257-017-0343-1.

- Goodwin, Paul, Brent Moritz, and Enno Siemsen. 2018. “Forecast Decisions.” In The Handbook of Behavioral Operations, edited by Karen Donohue, Elena Katok, and Stephen Leider, 433–458. Hoboken, NJ: John Wiley & Sons, Inc. doi:10.1002/9781119138341.ch12.

- Goodwin, Paul, M. Sinan Gönül, and Dilek Önkal. 2013. “Antecedents and Effects of Trust in Forecasting Advice.” International Journal of Forecasting 29 (2): 354–366. doi:10.1016/j.ijforecast.2012.08.001.

- Grove, William M., David H. Zald, Boyd S. Lebow, Beth E. Snitz, and Chad Nelson. 2000. “Clinical versus Mechanical Prediction: A Meta-Analysis.” Psychological Assessment 12 (1): 19–30. doi:10.1037/1040-3590.12.1.19.

- Grubic, T., L. Redding, T. Baines, and D. Julien. 2011. “The Adoption and Use of Diagnostic and Prognostic Technology within UK-Based Manufacturers.” Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture 225 (8): 1457–1470. doi:10.1177/0954405410395858.

- He, Yihai, Zhaoxiang Chen, Yixiao Zhao, Xiao Han, and Di Zhou. 2020. “Mission Reliability Evaluation for Fuzzy Multistate Manufacturing System Based on an Extended Stochastic Flow Network.” IEEE Transactions on Reliability 69 (4): 1239–1253. doi:10.1109/TR.2019.2957502.

- He, Jun, and William R. King. 2008. “The Role of User Participation in Information Systems Development: Implications from a Meta-Analysis.” Journal of Management Information Systems 25 (1): 301–331. doi:10.2753/MIS0742-1222250111.

- Hendrick, Hal W. 2003. “Determining the Cost–Benefits of Ergonomics Projects and Factors That Lead to Their Success.” Applied Ergonomics 34 (5): 419–427. doi:10.1016/S0003-6870(03)00062-0.

- Hertel, Guido, Sarah M. Meeßen, Dennis M. Riehle, Meinald T. Thielsch, Christoph Nohe, and Jörg Becker. 2019. “Directed Forgetting in Organisations: The Positive Effects of Decision Support Systems on Mental Resources and Well-Being.” Ergonomics 62 (5): 597–611. doi:10.1080/00140139.2019.1574361.

- Hilary, Gilles, and Charles Hsu. 2011. “Endogenous Overconfidence in Managerial Forecasts.” Journal of Accounting and Economics 51 (3): 300–313. doi:10.1016/j.jacceco.2011.01.002.

- Hogarth, Robin M., and Spyros Makridakis. 1981. “Forecasting and Planning: An Evaluation.” Management Science 27 (2): 115–138. doi:10.1287/mnsc.27.2.115.

- Ingemarsdotter, Emilia, Marianna Lena Kambanou, Ella Jamsin, Tomohiko Sakao, and Ruud Balkenende. 2021. “Challenges and Solutions in Condition-Based Maintenance Implementation - A Multiple Case Study.” Journal of Cleaner Production 296: 126420. doi:10.1016/j.jclepro.2021.126420.