Abstract

This paper evaluates whether and to what extent the learning objectives of geography curricula emphasize students’ higher-order thinking skills (HOTS), and whether students are capable of answering HOTS-type questions, using Finnish upper secondary geography education as an example. The revised Bloom’s taxonomy was used as a framework for the content analysis. The findings show that geography has the potential to enhance students’ HOTS, but students experience difficulties when answering HOTS-type questions. The results could be used to evaluate the desired thinking skills and knowledge dimensions in geography education in order to enhance students’ meaningful learning.

Introduction

Higher-order thinking skills and geography education

The last twenty years have seen discussion of the weakened position of geography in education, reflected, for example, in falling student intakes, decreased credit hours, and geography being seen as an umbrella subject, or as an optional subject in the curriculum (see e.g. Bednarz, Heffron, and Solem 2014; Chang 2014; Lane and Bourke 2017; van der Schee, Notté, and Zwartjes 2010). At the same time, the news mainly concerns geographical themes such as tourism, the threat of global pandemics, climate change, refugees and migration, the global economy, deforestation, and forest fires. However, these are not acknowledged as geographical phenomena. The media often portrays geography as knowledge of topography and facts about the world’s places and regions (see e.g. Favier and van der Schee 2012, 666). Rather than teaching simple facts about the world, according to Favier and van der Schee (2012, 666), geography should be seen “more like an activity that students can engage in”, and geography educators should help students to learn, acquire, and use geographical knowledge, as well as to develop their geographical skills and attitudes to be able to do geography (Chang and Kidman 2019, 2; Favier and van der Schee 2012, 666; van der Schee, Notté, and Zwartjes 2010, 7).

Bednarz (2019, 521) proposes that geography has three “secret powers,” i.e. ways of thinking: spatial thinking; geographic thinking; and geospatial thinking. Additionally, it has been suggested that geographical knowledge has the potential to enhance people’s thinking and opinion-making skills, especially when combining concrete facts with abstract ideas and knowledge gained from geographical research (Béneker and Van Der Vaart 2020, 8). Moreover, geography educators should be able to communicate the thinking processes and core content of our discipline more effectively to the wider public (Bednarz 2019, 523). It is therefore interesting to examine the kind of thinking geography education can enhance.

In this paper, we have approached geographical thinking skills and knowledge dimensions through Bloom’s taxonomy, originally presented by Benjamin S. Bloom and revised by Anderson and Krathwohl in 2001 (Anderson et al. 2014). The revision includes six domains of cognitive processes divided into lower-order (LOTS) (remember, understand, apply) and higher-order thinking skills (HOTS) (analyze, evaluate, create), and four domains of knowledge: factual; conceptual; procedural; and metacognitive knowledge (Anderson et al. 2014; see also Virranmäki, Valta-Hulkkonen, and Pellikka 2020; and Tikkanen and Aksela 2012). It should be said that the division between LOTS and HOTS is contested: sometimes remembering is said to be the only lower-order thinking skill (see e.g. Anderson et al. 2014). Moreover, although the categories in the taxonomy are hierarchical, they also overlap (Krathwohl 2002, 215), forming a continuum (Anderson et al. 2014).

It has been suggested that teaching and learning should focus on HOTS and metacognitive knowledge, because education can thus enhance meaningful learning (Airasian and Miranda 2002; Bijsterbosch, van der Schee, and Kuiper 2017; Krathwohl 2002). The research of Kumpas-Lenk, Eisenschmidt, and Veispak (2018) proposed that students were more motivated, engaged, and satisfied in their studies when learning outcomes were designed to demand higher-order thinking. However, the research of Stes et al. (2012) noted that designing the learning processes to target HOTS does not mean students will produce their answers at the same level, while Anderson et al. (2014, 21) state that “not all students learn the same things from the same instruction even when the intended objective is the same,” and eventually, not all learning outcomes can always be stated as objectives.

Concerning geography education, only a few studies have examined the revised Bloom’s taxonomy and objectives in curricula, or students’ ability to use HOTS in their answers. Research into the cognitive processes and knowledge dimensions of geography assessment questions (see Virranmäki, Valta-Hulkkonen, and Pellikka 2020; Bijsterbosch, van der Schee, and Kuiper 2017; Wertheim and Edelson 2013) and geography textbook questions (see e.g. Jo and Bednarz 2009; Krause et al. 2017; Mishra 2015; Şanli 2019; Yang 2013, 2015) has concluded that LOTS are emphasized. Some researchers (see e.g. Collins 2018; De Miguel González and De Lázaro Torres 2020; Favier and van der Schee 2014; Liu et al. 2010) have noted that digital technologies, such as digital maps and geographical information systems, may be suitable for enhancing students’ HOTS. However, it has been said there is insufficient empirical evidence to show that digital maps are better for improving students’ higher-order thinking (Collins 2018, 139).

We (Virranmäki, Valta-Hulkkonen, and Pellikka 2020) have earlier examined the geography test questions during the digitalization process of the Finnish matriculation examination between 2013 and 2019 and concluded that the questions mainly required understanding of conceptual and factual knowledge, although the number of questions requiring analysis increased during the digitalization. The aim of this study is therefore to evaluate whether and to what extent the learning objectives (LO) of geography curricula might emphasize students’ higher-order thinking skills, and whether students are capable of answering HOTS-type questions in both paper-based and digital tests. This information could be used to evaluate the desired thinking skills and knowledge dimensions in geography education.

Recent changes in Finnish upper secondary geography education

From the perspective of Finnish upper secondary geography education, there have been three major changes in recent years. These changes create the background for this research, in which the main interest is to examine how the changes have influenced geography education. First, a drastic change occurred in 2014, when geography lost one of its compulsory courses in upper secondary education as a result of the Finnish government’s decision concerning the distribution of lesson hours between different subjects (Valtioneuvosto 2014). Second, the core curriculum for upper secondary education was revised in 2015. The former curriculum dated back to 2003 (see Finnish National Board of Education [FNBE] 2016, 2003). The result was that the content of the geography curriculum remained almost the same, but the order of the upper secondary school courses changed (see Appendix A). Curriculum reform, operated by the National Agency for Education, is usually carried out every tenth year. In this study, we focus on the 2003 and 2015 geography curricula. The curriculum has since been reformed in 2019, so students starting their studies in 2021 will be taught according to the new curriculum.

Third, the Matriculation Examination (M.E.) was completely digitalized nationwide during 2016–2019, following a decision of the Finnish government in 2011 (for more information, see Virranmäki, Valta-Hulkkonen, and Pellikka 2020). This meant that, before autumn 2016, all students took the tests in paper-based form, and after the digitalization reform all students have taken the examination in digital form. The M.E. is the national large-scale summative assessment of learning outcomes at the end of upper secondary general education. It has approximately 35,000 participants per year and is held biannually (in the spring and autumn) simultaneously in all Finnish upper secondary schools. It aims to examine whether students have accomplished the skills and competences defined in the National Core Curriculum for General Upper Secondary Schools (FNBE 2003, 2016). The M.E. consists of at least four tests, of which only the mother tongue test is compulsory. Students can include the geography test in their M.E., but it is not mandatory. Geography, philosophy, and German (as a foreign language) were the first subjects to be digitalized in the autumn of 2016; mathematics was the last in the spring of 2019. In addition to these major changes, from the spring of 2020 onwards, students’ admission to higher education is mainly based on their success in the M.E. rather than on entrance exams. This will increase the importance of the tests in the M.E.

We have chosen to examine geography education in the Finnish upper secondary context, in which geography belongs to the natural sciences and is taught as a named subject with one compulsory and three optional courses for all students. We acknowledge that national educational aims and school systems vary widely (see e.g. Butt and Lambert 2014, 9), and in some parts of the world, for example in Sweden, the Netherlands, China, and parts of the United States and South America, geography is taught as part of social studies. However, geography’s status as a named subject in upper secondary education is quite common across the globe: for example, in Sweden, the Netherlands, China, parts of the United States, Argentina, Brazil, Chile, Guyana, Paraguay, and Uruguay (Bednarz, Heffron, and Solem 2014; Brooks, Quian, and Salinas-Silva 2018; Uhlenwinkel et al. 2017). Furthermore, researchers have noted that geography seems to have a general “body of knowledge” (Butt and Lambert 2014, 1) and a general understanding of geography education’s goals (Chang and Seow 2018, 32). Keeping these and the major changes that have occurred in the Finnish upper secondary education scene in mind, we suggest that Finland offers a good and interesting example of geography education.

The purpose of this study is to analyze the geography objectives of the Finnish National Core Curricula for General Upper Secondary Schools published in 2003 and 2015 in terms of the levels of the cognitive and knowledge domains of revised Bloom’s taxonomy, and to examine students’ higher-order cognitive outcomes in geography tests in the paper-based (between the autumn of 2015 and spring of 2016) and digital (between the autumn of 2016 and spring of 2017) forms. Our research questions are: (1) To what extent, if at all, do the geography learning objectives of the Finnish upper secondary curricula (published in 2003 and 2015) reflect higher-order thinking skills and different geographical knowledge dimensions? (2) Are students capable of demonstrating their higher-order thinking skills when answering the Finnish M.E.’s geography test questions in the paper-based and digital forms?

Research design

Analysis through revised Bloom’s taxonomy

Krathwohl (2002, 212) describes the revised Bloom’s taxonomy as “a framework for classifying statements of what we expect or intend students to learn as a result of instruction.” We have applied the revised Bloom’s taxonomy in geography education and produced a framework (Tables 1 and 2) in which we represent the thinking skills and knowledge dimensions in the context of geography education, using the LOs of the Finnish geography curricula and students’ performance when answering the HOTS-type questions in the paper-based and digital M.E. in geography as an example. The tables present the type of skills that students are expected to show in their answers when demonstrating different cognitive processes and knowledge types. In this research, we use the term HOTS-type questions to refer to questions that require students to analyze, evaluate, or create conceptual or procedural knowledge. Metacognitive knowledge could also have been included in HOTS-type questions. However, as our previous research (Virranmäki, Valta-Hulkkonen, and Pellikka 2020) concluded, there were no questions requiring metacognitive knowledge in the geography tests of the Finnish M.E. between 2013 and 2019. For this reason, we did not analyze students’ ability to use metacognitive knowledge in their answers.

Table 1. The cognitive process domains of the Taxonomy Table applied in the context of the objectives of geography and students’ answers to the geography tests (based on Anderson et al. 2014 and Virranmäki, Valta-Hulkkonen, and Pellikka 2020).

Table 2. The knowledge domains of the Taxonomy Table applied in the context of the objectives of geography and students’ answers to the geography tests (based on Anderson et al. 2014 and Virranmäki, Valta-Hulkkonen, and Pellikka 2020).

We have also used revised Bloom’s taxonomy to analyze students’ answers, even though the taxonomy was originally developed to examine educational objectives and give “commonly understood meaning to objectives classified in one of its categories” (Krathwohl 2002, 218), because we are especially interested in students’ HOTS. To our knowledge, the SOLO-taxonomy (developed by Biggs and Collis [1982] to analyze students’ work and products) oversimplifies the higher cognitive processes. We suggest that revised Bloom’s taxonomy gives a better understanding of students’ thinking skills at the higher level of cognitive domains by differentiating three levels of thinking: analyzing, evaluating and creating. We have therefore sought to examine students’ answers from the perspective of whether they can demonstrate the required thinking skills in their answer, i.e. do students’ answers reflect higher-order thinking skills?

All researchers in this study are familiar with revised Bloom’s taxonomy. To attain consistency in the categorization of LOs and students’ answers, a preliminary framework based on revised Bloom’s taxonomy was developed by the first author (part of Tables 1 and 2). We then discussed the content of the tables and the principles of the categorization process together. The first author then made the required changes to the tables to ensure all the authors agreed on the categorization principles and process. Content analysis, which was performed utilizing this framework, was then used to revise and finalize Tables 1 and 2.

In this study (Table 1), LOTS consist of three domains. The first is remembering, which is a prerequisite for other cognitive processes, because the recognized and recalled scattered information is often used in more complex tasks (see Anderson et al. 2014, 66, 70). The second, largest, and very comprehensive category is understanding, which relates to the construction of connections between prior and new knowledge. In understanding, students mainly describe geographical phenomena by listing information and explaining concepts related to a given task. Because of its comprehensive nature, understanding is an important part of geography education and has huge potential to enhance geography learning (see also Bijsterbosch, van der Schee, and Kuiper 2017, 18). The third is applying, in which students use their prior knowledge of geographical models, theories, or procedures (e.g. calculations and spatial data analysis) to solve familiar or unfamiliar exercises or problems (see Anderson et al. 2014, 77).

The more comprehensive skills, HOTS, include the domains of “analyze”, “evaluate” and “create”. Analyzing is a continuation of understanding, because it requires students to reorganize knowledge from different sources, recognize causalities, and decide the suitable information to be used in a given situation (see Anderson et al. 2014, 79). Evaluating, in turn, is an extension of analyzing, because students are expected to use “standards or performance with clearly defined criteria” (Anderson et al. 2014, 83) to make justifiable arguments and draw a firm conclusion, based on the analysis they have done beforehand. In other words, when students are evaluating, they draw conclusions and make judgments by checking or critiquing something from a certain perspective, i.e. critical thinking is required. The most comprehensive domain is “create”, in which students solve a given problem by planning how to do it, generating different outcomes or producing a real solution for it (Anderson et al. 2014, 85). Creating requires a student to use deep understanding and synthesize scattered material to produce an organized whole. Creative and holistic thinking and synthesis are part of creating knowledge.

The knowledge domain (Table 2) of revised Bloom’s taxonomy is divided into four dimensions: factual; conceptual; procedural; and metacognitive. Factual knowledge consists of separate pieces of information (e.g. terms, facts, concepts), whereas in the conceptual domain, knowledge forms a larger entity, in which connections (for example between concepts) are seen, and learners’ everyday experiences are transferred (see Anderson et al. 2014, 42). When students use knowledge of how to do something, they are using procedural knowledge, that is, subject- or discipline-specific skills, methods, and techniques, whereas metacognitive knowledge refers to students’ own knowledge of their strengths and weaknesses when studying geography, and to the broader skills of learning and problem solving (see Anderson et al. 2014, 52–60).
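The two dimensions described above can be pictured as a small data structure. The following Python sketch is only an illustration and is not part of the study, in which the categorization was done by hand through qualitative content analysis. The class and function names are hypothetical, and the LOTS/HOTS boundary follows the division used in this paper, which, as noted earlier, is contested.

```python
from enum import IntEnum


class CognitiveProcess(IntEnum):
    """Six cognitive process domains, ordered from lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


class Knowledge(IntEnum):
    """Four knowledge dimensions of the revised taxonomy."""
    FACTUAL = 1
    CONCEPTUAL = 2
    PROCEDURAL = 3
    METACOGNITIVE = 4


def is_higher_order(process: CognitiveProcess) -> bool:
    # Division used in this paper: analyze, evaluate, and create are HOTS.
    return process >= CognitiveProcess.ANALYZE


def is_hots_type_question(process: CognitiveProcess, knowledge: Knowledge) -> bool:
    # HOTS-type question as defined in this paper: analyze, evaluate, or
    # create combined with conceptual or procedural knowledge.
    return is_higher_order(process) and knowledge in (
        Knowledge.CONCEPTUAL,
        Knowledge.PROCEDURAL,
    )


if __name__ == "__main__":
    print(is_hots_type_question(CognitiveProcess.EVALUATE, Knowledge.PROCEDURAL))
    print(is_hots_type_question(CognitiveProcess.UNDERSTAND, Knowledge.CONCEPTUAL))
```

An ordered enumeration is used here because the cognitive categories are described as hierarchical; the sketch does not attempt to model the overlap between categories.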

Content analysis of the learning objectives and students’ answers to the geography tests

The empirical part of this study consists of two different data sets, which were analyzed using the previously mentioned framework as a basis for the analysis. The geography LOs of the Finnish National Core Curriculum for General Upper Secondary Schools published in 2003 and 2015 (FNBE 2003, 2016) were analyzed between January and February 2020. The students’ answers to HOTS-type geography questions from the Finnish M.E. in both paper-based and digital forms were analyzed between January and March 2018. The data on the students’ answers was obtained from the Finnish Matriculation Examination Board (hereafter FMEB). We used a qualitative approach and theory-driven content analysis to systematically analyze the data. Additionally, researcher triangulation was used in the analysis process. The reliability of our analysis was further strengthened by dialogue between the three researchers during the research process.

The analysis process of the LOs of the geography curricula published in 2003 and 2015 was started by the first author, who collected the LOs from the curricula. She then repeatedly read the LOs, later constructing a table presenting all the LOs, categorized into revised Bloom’s taxonomy according to the previously mentioned framework. The second and third authors read this preliminary categorization individually. We later discussed the categorization at a joint meeting, ensuring that we had a common understanding of the categorization criteria (see Tables 1 and 2). Finally, the first author produced the final categorization of the LOs, which was then read and approved by the other two authors. The consistency of the produced framework was checked repeatedly during the research process.

There was a total of 76 LOs in the two analyzed curricula. These were further divided into 107 smaller LOs, because some LOs included two or more objectives. We found ten LOs that could not be categorized at any level of revised Bloom’s taxonomy, because they did not reflect any part of the taxonomy. Of the 107 LOs, 97 were therefore categorized in one or more categories of the taxonomy table; an LO could fall into several categories, because the categories are hierarchical but also overlap (Krathwohl 2002, 215). This gave a total of 174 categorizations (67 for the 2003 curriculum and 107 for the 2015 curriculum).
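The counts reported for the learning objectives can be cross-checked with a few lines of arithmetic. The Python sketch below is illustrative only. It combines the totals given above with the per-curriculum figures reported in the findings (3 of 50 smaller LOs in the 2003 curriculum and 7 of 57 in the 2015 curriculum were value-based); the variable names are hypothetical.

```python
# Smaller LOs per curriculum and the value-based LOs that could not be
# placed in the taxonomy (figures as reported in this paper).
smaller_los = {"2003": 50, "2015": 57}
value_based_los = {"2003": 3, "2015": 7}

# Categorizations per curriculum; one LO may receive several.
categorizations = {"2003": 67, "2015": 107}

total_smaller_los = sum(smaller_los.values())
total_value_based = sum(value_based_los.values())
categorized_los = total_smaller_los - total_value_based
total_categorizations = sum(categorizations.values())

if __name__ == "__main__":
    print("Smaller LOs:", total_smaller_los)
    print("Uncategorized (value-based) LOs:", total_value_based)
    print("Categorized LOs:", categorized_los)
    print("Categorizations:", total_categorizations)
```

Note that the figure 107 appears twice with different meanings: it is both the number of smaller LOs across the two curricula and the number of categorizations for the 2015 curriculum alone.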

The FMEB provided data consisting of 200 students’ answers to each of four examinations, i.e. the answers of altogether 800 students who took the geography test in the Finnish M.E. between the autumn of 2015 and spring of 2017. Thus, the data consisted of 400 students’ answers to the paper-based tests (autumn of 2015 and spring of 2016) and 400 students’ answers to the digital tests (autumn of 2016 and spring of 2017). We limited the analysis of students’ answers to the HOTS-type questions (analyze, evaluate, or create conceptual or procedural knowledge) of the Finnish M.E. (analysis found in Virranmäki, Valta-Hulkkonen, and Pellikka 2020), because we were interested in whether students were capable of demonstrating their HOTS and conceptual or procedural knowledge. The analysis is therefore based on students’ answers to 33 HOTS-type questions.

The first author was responsible for communication with the FMEB, and did a preliminary categorization (according to the preliminary framework, Tables 1 and 2) of a sample of the students’ answers from the digital test in the autumn of 2016. Several joint meetings were then organized, and the results of the preliminary categorization were discussed and evaluated by all authors. These meetings ensured that all the authors agreed on the categorization principles. Finally, the first author conducted the final analysis process.

For every question (n = 33), we analyzed a random sample of 50 students’ answers from the data of 200 students’ answers. However, for some questions the research material contained fewer than 50 answers, because only some of the 200 students had answered them. This was the case in the digital test in the autumn of 2016 for assignments 7A and 7B (35 answers each) and 9B (19 answers), and in the digital test in the spring of 2017 for assignment 5C (46 answers). In the paper-based tests, there was a total of nine assignments, consisting of 13 HOTS-type questions. Therefore, 650 students’ answers were analyzed. In the digital tests, there were 12 assignments with 20 HOTS-type questions, and 935 analyzed students’ answers. We therefore categorized a total of 1,585 answers in the content analysis.
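The number of analyzed answers follows directly from the sampling rule described above. The short Python sketch below reproduces the arithmetic, assuming that every HOTS-type question contributed a full sample of 50 answers unless a smaller number is reported; the variable names are hypothetical.

```python
SAMPLE_PER_QUESTION = 50

paper_questions = 13    # HOTS-type questions in the paper-based tests
digital_questions = 20  # HOTS-type questions in the digital tests

# Digital-test questions for which fewer than 50 answers were available.
digital_short_samples = {
    "autumn 2016, 7A": 35,
    "autumn 2016, 7B": 35,
    "autumn 2016, 9B": 19,
    "spring 2017, 5C": 46,
}

paper_answers = paper_questions * SAMPLE_PER_QUESTION

full_digital_questions = digital_questions - len(digital_short_samples)
digital_answers = (
    full_digital_questions * SAMPLE_PER_QUESTION
    + sum(digital_short_samples.values())
)

total_answers = paper_answers + digital_answers

if __name__ == "__main__":
    print("Paper-based answers:", paper_answers)
    print("Digital answers:", digital_answers)
    print("Total answers:", total_answers)
```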

Research findings

The thinking skills and geographical knowledge emphasized in the geography LOs in the 2003 and 2015 curricula

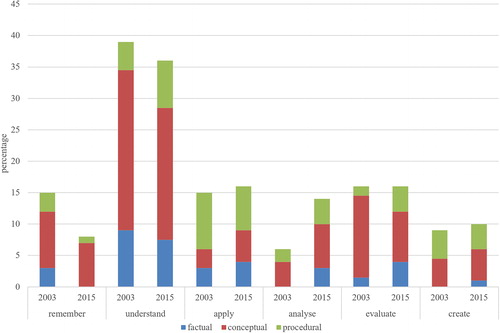

We analyzed the geography LOs of the Finnish National Core Curricula for General Upper Secondary Schools produced in 2003 and 2015. Figure 1 presents the percentages of the thinking skills and the geographical knowledge found in the analyzed LOs. We found that the 2015 curriculum’s LOs were slightly more demanding in terms of thinking skills than the 2003 curriculum’s LOs. In the 2015 curriculum, 61 percent of the LOs required lower-order thinking (remember, understand, apply); 39 percent required higher-order thinking (analyze, evaluate, create). In the 2003 curriculum, the percentages were 69 percent and 31 percent respectively. However, when the LOs requiring the use of higher-order thinking were examined more closely, only analytical thinking increased, from 6 percent to 14 percent, while the percentages of LOs emphasizing evaluating or creating remained almost the same. This means that LOs requiring students to understand the causal relationships within and between human and physical geography phenomena have become more numerous.

Figure 1. The distribution (in percentages) of the geography LOs in the 2003 (n = 67 LO) and 2015 curricula (n = 107 LO) according to the cognitive and knowledge dimensions.

When examining the knowledge dimension of the LOs of the two geography curricula, we found that most of the LOs in both curricula emphasized conceptual knowledge (60% of the 2003 curriculum’s LOs and 54% of the 2015 curriculum’s LOs), which means knowledge of classifications, categories, principles, generalizations, theories, models, and structures in geography. Second, the LOs emphasized the use of procedural knowledge (24% and 27% of LOs respectively), in other words, the knowledge of how to do geography: subject-specific skills, methods, and techniques. The LOs emphasized factual knowledge least (16% and 19% of LOs respectively), that is, knowledge of geographical terms and specific details. Metacognitive knowledge was completely lacking in the geography LOs.

In examining the distribution (percentages) of the different higher-order thinking skills and knowledge dimensions between the general LOs of geography and the course-specific LOs (Tables 3 and 4) in more depth, we found that HOTS were distributed more evenly between the different courses in the 2015 curriculum. Analytical and evaluative thinking, as well as conceptual and procedural knowledge, were pursued in all geography courses and in the general objectives. However, in both curricula, the highest level of thinking, creating, was pursued only in the general and course 4’s LOs. Additionally, we found that the only compulsory course (GE1) of the 2015 curriculum completely lacked factual knowledge and creative thinking. Sixty percent of the LOs in this course emphasized remembering and understanding conceptual knowledge, and 90 percent of the LOs were categorized as conceptual knowledge in this course.

Table 3. The distribution (in percentages) of the different higher-order thinking skills and knowledge dimensions between general and course-specific objectives in the 2003 curriculum.

Table 4. The distribution (in percentages) of the different higher-order thinking skills and knowledge dimensions between general and course-specific objectives in the 2015 curriculum.

Additionally, we found ten LOs in the geography curricula (3 out of 50 LOs in the 2003 curriculum, and 7 out of 57 LOs in the 2015 curriculum) that were categorized as value-based LOs, because they did not reflect any of the levels of revised Bloom’s taxonomy. These included tolerance and respect for cultural diversity and human rights; acting as an active global citizen promoting sustainable development; taking a stance on local and global issues; and gathering experiences of and interest in geography and how geography examines the world.

Students’ higher-order thinking skills and geographical knowledge in the paper-based and digital tests

Students’ ability to demonstrate their higher-order thinking skills and geographical knowledge in their answers to the geography tests is reported for the paper-based (Tables 5 and 7) and digital (Tables 6 and 8) forms. In examining the cognitive dimension of the students’ answers (Tables 5 and 6), we found that students were able to use analytical thinking skills in 54 percent of their answers in the paper-based tests (Table 5). This means students could select relevant information and organize it coherently, making causal relationships visible. However, analytical thinking skills were seen in only 35 percent of the students’ answers in the digital tests (Table 6), and in the majority of answers (53%), students mainly listed and explained concepts. This indicates understanding of the given geographical phenomenon rather than analysis. Overall, students had difficulties analyzing diagrams about climate change, the potential of wind energy, and geographical information system methods. Students performed well when the assignments required them to analyze maps about free-time living in Finland, the regional characteristics of world population growth, and the environmental impact of fishing and aquaculture.

Table 5. Distribution of students’ answers (n = 650) as a percentage (in numbers) at different levels of cognitive skills when the HOTS-type question (n = 13) required analyzing, evaluating, or creating in the paper-based form of the Finnish Matriculation Examination.

Table 6. Distribution of students’ answers (n = 935) as a percentage (in numbers) at different levels of cognitive skills, when the HOTS-type question (n = 20) required analyzing, evaluating, or creating in the digital form of the Finnish Matriculation Examination.

Table 7. Distribution of students’ answers (n = 650) as a percentage (in numbers) at different levels of geographical knowledge, when the HOTS-type question (n = 13) required conceptual or procedural knowledge in the paper-based form of the Finnish Matriculation Examination.

Table 8. Distribution of students’ answers (n = 935) as a percentage (in numbers) at different levels of geographical knowledge, when the HOTS-type question (n = 20) required conceptual or procedural knowledge in the digital form of the Finnish Matriculation Examination.

Our analysis revealed students had difficulties when questions required them to evaluate. In the paper-based tests, only 34 percent, and in the digital tests only 15 percent, of the students’ answers were structured in such a way that evaluative thinking was visible, i.e. conclusions and judgments were formed and justified. Most of the students’ answers were grouped either in the category of “analyze” (38% and 46.5% of answers respectively) or in the category of “understand” (24% and 34% of answers respectively). Therefore, most of the students’ answers lacked critical thinking. In the category of “evaluate”, there were assignments that required students to evaluate errors in geographical information systems or to evaluate the pros and cons of the mining industry, for example.

Students seemed to perform well when answering questions requiring them to create. Creative and holistic thinking were evident in 75 percent of the students’ answers in the paper-based tests, and in 54 percent of the students’ answers in the digital tests. In this category, the questions required students to create a map representing the larger geographical area and plan a geographical study, for example. However, students had difficulties in hypothesizing what impacts the mining industry might have in a certain area or how to improve the world’s food production. In the paper-based tests, 17 percent, and in the digital tests 15 percent, of their answers mainly showed analytical thinking, so pondering possible improvements was difficult for some students.

In examining the knowledge dimension of the students’ answers (Tables 7 and 8), we found that nearly all of the students’ answers (100% in the paper-based tests and 90% in the digital tests) showed that students were capable of answering questions requiring conceptual knowledge, that is, of using and connecting geographical concepts, theories, models, and generalizations. However, in the digital tests, 10 percent of the students’ answers showed only factual knowledge of simple facts or specific details. Additionally, procedural knowledge was evident in 73 percent of the students’ answers in the paper-based tests. Students were able to use geographical skills, techniques, and methods; those related to geographical information systems were especially well known, but students had difficulties when they were required to draw a map. In the digital tests, however, only 36 percent of the students’ answers showed procedural knowledge. There were difficulties in using knowledge of geographical information system methods, drawing up a geographical research plan, or producing an altitude profile.

Discussion and conclusion

In this research, we analyzed the LOs of the 2003 and 2015 Finnish upper secondary school geography curricula through the framework of the revised Bloom’s taxonomy to examine whether and to what extent the geography curricula emphasized students’ higher-order thinking skills. Additionally, we analyzed students’ performance in answering the HOTS-type questions of the Finnish geography M.E. between the autumn of 2015 and the spring of 2017 in both paper-based and digital forms.

Our results show that geography has the potential to enhance students’ higher-order thinking skills. We found that the current 2015 curriculum is slightly more demanding and 39 percent of LOs emphasize HOTS. However, this is mainly because of the increased requirement of analytical thinking skills (and the decreased requirement of understanding and remembering), whereas evaluative and creative thinking remained almost the same during the research period. When we examined the knowledge requirements of the geography LOs, we found that in both curricula, conceptual knowledge was the most emphasized knowledge, while procedural knowledge was the second most emphasized, and factual was the least valued knowledge type.
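How a figure such as the share of HOTS-type LOs is obtained can be sketched as follows. The snippet is a minimal illustration, assuming that each LO has already been coded to one cognitive process of the revised Bloom’s taxonomy and that analyze, evaluate, and create count as HOTS. The example codes are made up for demonstration and are not the study's data; the function name is likewise hypothetical.

```python
from collections import Counter

# Cognitive processes of the revised Bloom's taxonomy, lowest to highest.
BLOOM_LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]
# Assumption: the three highest levels are treated as higher-order thinking.
HOTS_LEVELS = {"analyze", "evaluate", "create"}


def hots_share(coded_los: list[str]) -> float:
    """Return the percentage of coded learning objectives at a HOTS level."""
    unknown = set(coded_los) - set(BLOOM_LEVELS)
    if unknown:
        raise ValueError(f"Unknown level(s): {sorted(unknown)}")
    if not coded_los:
        return 0.0
    counts = Counter(coded_los)
    hots = sum(counts[level] for level in HOTS_LEVELS)
    return 100.0 * hots / len(coded_los)


# Hypothetical codes for ten LOs, for demonstration only (not study data).
example = ["understand"] * 5 + ["apply"] + ["analyze"] * 3 + ["evaluate"]
print(f"{hots_share(example):.0f}% of the example LOs are HOTS-type")
```

Coding each LO to a single level is a simplification; an LO that combines several cognitive processes would need a rule for which level to count.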

Furthermore, we found that the distribution of the LOs of the 2015 geography curriculum between the different courses did not fully support the learning of higher-order thinking skills. The highest level of thinking, creating, is found only in the general objectives and in course 4. The curriculum reform resulted in only one compulsory geography course, which is quite one-sided in terms of cognitive and knowledge demands. However, the curriculum reform improved the structure of the curriculum so that analytical and evaluative thinking, as well as conceptual and procedural knowledge, are pursued in all geography courses, and HOTS are distributed more evenly between the different courses. We also identified value-based LOs, which increased during the curriculum reform. Teaching values, like sustainable development and diversity, is an important part of geography teaching and learning (see e.g. Uhlenwinkel et al. 2017). The value-based LOs included in the curriculum reflect geography’s ability to teach values that are important in helping students to appreciate a diverse society, in which everyone has the opportunity to participate actively (see also Bednarz 2019). The value-based LOs must therefore be included in the curriculum.

Regarding our second research question, our analysis of the students’ answers shows that students have difficulties demonstrating HOTS, especially in the digital tests. The results support the research of Stes et al. (2012) (see also Anderson et al. 2014) in concluding that not all students produce answers at the required HOTS-level. However, contrary to our findings, it has been suggested previously that digital technologies could be suitable for enhancing students’ HOTS (see e.g. Collins 2018; De Miguel González and De Lázaro Torres 2020; Favier and van der Schee 2014; Liu et al. 2010; Palladino and Goodchild 1993). In our data, the students’ answers lacked evaluative and creative, as well as holistic, thinking in both test forms, and analytical thinking in the digital tests. According to our interpretation of the revised Bloom’s taxonomy, this means that although the questions required students to understand causalities and form a coherent conclusion, make judgments, and justify their views (i.e. be critical), or hypothesize possible changes, the students were unable to show these thinking skills in their answers. Yet these skills are all an important part of geographical learning (see e.g. Bednarz 2019; Béneker and Van Der Vaart 2020). Additionally, in the digital tests, students had difficulties when required to use procedural knowledge, i.e. knowledge of how to make a geographical research plan or draw an altitude profile or map.

The reason for these problems in the digital tests may be the transition of the tests from the paper-based to the digital format. We analyzed the last two paper-based tests and the first two digital geography tests, and the newness of the digital format may have affected the students’ answers, because the students may not yet have become accustomed to studying digitally. However, the M.E. in geography will be in digital form in the future, and it is therefore important to gain more knowledge on how well students can demonstrate their knowledge and thinking skills in digital geography tests. This study provides important new knowledge on this issue.

Relying on our results and also on previous research, which states that to enhance meaningful learning, teaching and learning should focus on HOTS and metacognitive knowledge (Airasian and Miranda 2002; Bijsterbosch, van der Schee, and Kuiper 2017; Krathwohl 2002), we suggest three development aspects for consideration. First, we need a careful rethinking of the desired thinking skills and knowledge dimensions of LOs in geography, and a re-evaluation of the distribution of the HOTS-type LOs between the different geography courses. The LOs requiring evaluative and creative thinking skills, as well as the use of procedural knowledge, should clearly be reconsidered, because students have difficulties when using these skills. Second, we suggest that there is a need to engage students in planning the teaching and learning of geography, i.e. they should know what skills and knowledge they are required to use when learning geography. In this way students can “learn to use knowledge” (Béneker and Van Der Vaart 2020, 9), and “be conscious and mindful about their thinking processes” (Bednarz 2019, 525), i.e. they become aware of the metacognitive knowledge possibilities in their learning. This might empower youth to be critical thinkers and take responsibility for their own learning and doing. It requires more training for teachers and students about thinking skills. Clear instructions and careful practice in the classroom could help students to perform better when using HOTS. Students especially need practice in their evaluative thinking skills, i.e. how to draw firm conclusions based on given material and known criteria, and how to justify their views. Third, we need more discussion between teachers and students about LOs in geography to increase students’ metacognitive knowledge (see Anderson et al. 2014, 51), which is completely lacking in the current geography curriculum.

We have created a framework by applying the revised Bloom’s taxonomy. The framework reflects our understanding of the taxonomy; it should be said that the interpretation of the taxonomy is always a matter of subjective judgment (see Anderson et al. 2014, 33). However, we have attempted to describe the framework as accurately as possible so that other researchers can evaluate and use it in their own research. Using Finnish geography curriculum LOs and students’ answers to the M.E. as an example, we have shown that geography is much more than learning simple facts; it is about doing geography (see e.g. Chang and Kidman 2019, 2; Favier and van der Schee 2012, 666; van der Schee, Notté, and Zwartjes 2010, 7). Geography has the potential to enhance students’ HOTS. Geography’s position in the curriculum varies across the globe, but the subject is acknowledged to share some common knowledge and skills, which makes our findings from the Finnish context applicable elsewhere. Awareness of thinking skills and knowledge dimensions in geography curricula and students’ answers provides some insights for educators and policymakers in designing LOs and assessment questions that are more inspirational and engaging for students and that improve geography teaching and learning. Yet, researchers should also study them in different educational contexts to be able to communicate more effectively the “key ideas of our discipline and its distinct thinking processes and perspectives” (Bednarz 2019, 523) to the wider public.

Acknowledgment

We would like to thank the Finnish Matriculation Examination Board for giving us a research license for the use of the data of students' answers.

Additional information

Notes on contributors

Eerika Virranmäki

Eerika Virranmäki (MSc, Doctoral Researcher) is doing her PhD study in the Geography Research Unit at the University of Oulu. Her research interests are in the field of geography education primarily in the upper secondary schools, geographical knowledge and changing pedagogies in geography education and assessment.

Kirsi Valta-Hulkkonen

Kirsi Valta-Hulkkonen (PhD) is working as a lecturer in the Teacher Training School in the Faculty of Education at the University of Oulu. Her research interests are in the fields of geography and biology education.

Anne Pellikka

Anne Pellikka (MSc, Doctoral Researcher) is a university teacher in the didactics of biology, geography and health education in the Faculty of Education at the University of Oulu. Her research interests are in the fields of biology, geography and environmental studies in the context of teacher education.

References

- Airasian, P. W., and H. Miranda. 2002. The role of assessment in the revised taxonomy. Theory into Practice 41 (4):249–54. doi: 10.1207/s15430421tip4104_8.

- Anderson, L. W., D. R. Krathwohl, P. W. Airasian, K. A. Cruikshank, R. E. Mayer, P. R. Pintrich, J. Raths, and M. Wittrock. 2014. A taxonomy for learning, teaching, and assessing: A revision of Bloom’s. Edinburgh, UK: Pearson Education Limited.

- Bednarz, S. W. 2019. Geography’s secret powers to save the world. The Canadian Geographer / Le Géographe Canadien 63 (4):520–9. doi: 10.1111/cag.12539.

- Bednarz, S. W., S. G. Heffron, and M. Solem. 2014. Geography standards in the United States: Past influences and future prospects. International Research in Geographical and Environmental Education 23 (1):79–89. doi: 10.1080/10382046.2013.858455.

- Béneker, T., and R. Van Der Vaart. 2020. The knowledge curve: Combining types of knowledges leads to powerful thinking. International Research in Geographical and Environmental Education 29 (3):221–31. doi: 10.1080/10382046.2020.1749755.

- Biggs, J. B., and K. F. Collis. 1982. Evaluating the quality of learning: The SOLO taxonomy (structure of the observed learning outcome). New York: Academic Press.

- Bijsterbosch, E., J. van der Schee, and W. Kuiper. 2017. Meaningful learning and summative assessment in geography education: An analysis in secondary education in the Netherlands. International Research in Geographical and Environmental Education 26 (1):17–35. doi: 10.1080/10382046.2016.1217076.

- Brooks, C., G. Qian, and V. Salinas-Silva. 2018. What next for geography education? A perspective from the International Geographical Union–Commission for Geography Education. J-Reading-Journal of Research and Didactics in Geography 6 (1):5–15. doi: 10.4458/8579-01.

- Butt, G., and D. Lambert. 2014. International perspectives on the future of geography education: An analysis of national curricula and standards. International Research in Geographical and Environmental Education 23 (1):1–12. doi: 10.1080/10382046.2013.858402.

- Chang, C. H. 2014. Is Singapore’s school geography becoming too responsive to the changing needs of society? International Research in Geographical and Environmental Education 23 (1):25–39. doi: 10.1080/10382046.2013.858405.

- Chang, C. H., and G. Kidman. 2019. Curriculum, pedagogy and assessment in geographical education–for whom and for what purpose? International Research in Geographical and Environmental Education 28 (1):1–4. doi: 10.1080/10382046.2019.1578526.

- Chang, C. H., and T. Seow. 2018. Geographical education that matters – A critical discussion of consequential validity in assessment of school geography. Geographical Education 31:31–40.

- Collins, L. 2018. The impact of paper versus digital map technology on students’ spatial thinking skill acquisition. Journal of Geography 117 (4):137–52. doi: 10.1080/00221341.2017.1374990.

- De Miguel González, R., and M. L. De Lázaro Torres. 2020. WebGIS implementation and effectiveness in secondary education using the digital atlas for schools. Journal of Geography 119 (2):74–85. doi: 10.1080/00221341.2020.1726991.

- Favier, T. T., and J. van der Schee. 2012. Exploring the characteristics of an optimal design for inquiry-based geography education with Geographic Information Systems. Computers & Education 58 (1):666–77. doi: 10.1016/j.compedu.2011.09.007.

- Favier, T. T., and J. van der Schee. 2014. The effects of geography lessons with geospatial technologies on the development of high school students’ relational thinking. Computers & Education 76:225–36. doi: 10.1016/j.compedu.2014.04.004.

- Finnish National Board of Education (FNBE). 2003. Lukion opetussuunnitelman perusteet 2003 [National Core Curriculum for General Upper Secondary Schools 2003]. Accessed January 15, 2020. https://www.oph.fi/sites/default/files/documents/47345_lukion_opetussuunnitelman_perusteet_2003.pdf

- Finnish National Board of Education (FNBE). 2016. National core curriculum for general upper secondary schools 2015. Helsinki: Next Print Oy.

- Jo, I., and S. W. Bednarz. 2009. Evaluating geography textbook questions from a spatial perspective: Using concepts of space, tools of representation, and cognitive processes to evaluate spatiality. Journal of Geography 108 (1):4–13. doi: 10.1080/00221340902758401.

- Krathwohl, D. R. 2002. A revision of Bloom’s taxonomy: An overview. Theory into Practice 41 (4):212–8. doi: 10.1207/s15430421tip4104_2.

- Krause, U., T. Béneker, J. Van Tartwijk, A. Uhlenwinkel, and S. Bolhuis. 2017. How do the German and Dutch curriculum contexts influence (the use of) geography textbooks? Review of International Geographical Education Online 7 (3):235–63.

- Kumpas-Lenk, K., E. Eisenschmidt, and A. Veispak. 2018. Does the design of learning outcomes matter from students’ perspective? Studies in Educational Evaluation 59 (July):179–86. doi: 10.1016/j.stueduc.2018.07.008.

- Lane, R., and T. Bourke. 2017. Possibilities for an international assessment in geography. International Research in Geographical and Environmental Education 26 (1):71–85. doi: 10.1080/10382046.2016.1165920.

- Liu, Y., E. N. Bui, C. H. Chang, and H. G. Lossman. 2010. PBL-GIS in secondary geography education: Does it result in higher-order learning outcomes? Journal of Geography 109 (4):150–8. doi: 10.1080/00221341.2010.497541.

- Mishra, R. K. 2015. Mapping the knowledge topography: A critical appraisal of geography textbook questions. International Research in Geographical and Environmental Education 24 (2):118–30. doi: 10.1080/10382046.2014.993170.

- Palladino, S., and M. Goodchild. 1993. A place for GIS in the secondary schools? Lessons from the NCGIA secondary education project. Geo Info Systems 3 (4):45–49.

- Şanli, C. 2019. Investigation of question types in high school geography coursebooks and their analysis in accordance with the Revised Bloom’s Taxonomy. Aegean Geographical Journal 28 (2):111–27.

- Stes, A., S. de Maeyer, D. Gijbels, and P. van Petegem. 2012. Instructional development for teachers in higher education: Effects on students’ learning outcomes. Teaching in Higher Education 17 (3):295–308. doi: 10.1080/13562517.2011.611872.

- Tikkanen, G., and M. Aksela. 2012. Analysis of Finnish chemistry matriculation examination questions according to cognitive complexity. Nordic Studies in Science Education 8 (3):257–68. doi: 10.5617/nordina.532.

- Uhlenwinkel, A., T. Béneker, G. Bladh, S. Tani, and D. Lambert. 2017. GeoCapabilities and curriculum leadership: Balancing the priorities of aim-based and knowledge-led curriculum thinking in schools. International Research in Geographical and Environmental Education 26 (4):327–41. doi: 10.1080/10382046.2016.1262603.

- Valtioneuvosto. 2014. Valtioneuvoston asetus lukiolaissa tarkoitetun koulutuksen yleisistä valtakunnallisista tavoitteista ja tuntijaosta 942/2014 [Government decree on the general objectives for general upper secondary education and lesson hour distribution]. Accessed February 3, 2020. https://www.finlex.fi/fi/laki/alkup/2014/20140942

- van der Schee, J., H. Notté, and L. Zwartjes. 2010. Some thoughts about a new international geography test. International Research in Geographical and Environmental Education 19 (4):277–82. doi: 10.1080/10382046.2010.519148.

- Virranmäki, E., K. Valta-Hulkkonen, and A. Pellikka. 2020. Geography tests in the Finnish Matriculation Examination in paper and digital forms – An analysis of questions based on revised Bloom’s taxonomy. Studies in Educational Evaluation 66:100896. doi: 10.1016/j.stueduc.2020.100896.

- Wertheim, J. A., and D. C. Edelson. 2013. A road map for improving geography assessment. Geography Teacher 10 (1):15–21. doi: 10.1080/19338341.2012.758044.

- Yang, D. 2013. Comparing assessments within junior geography textbooks used in mainland China. Journal of Geography 112 (2):58–67. doi: 10.1080/00221341.2011.648211.

- Yang, D. 2015. A comparison of questions and tasks in geography textbooks before and after curriculum reform in China. Review of International Geographical Education Online 5 (3):231–48.

Appendix

Appendix A. Content of school geography in Finnish upper secondary schools according to national core curricula of 2003 and 2015.