?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Classical molecular dynamics simulates the time evolution of molecular systems through the phase space spanned by the positions and velocities of the constituent atoms. Molecular-level thermodynamic, kinetic, and structural data extracted from the resulting trajectories provide valuable information for the understanding, engineering, and design of biological and molecular materials. The cost of simulating many-body atomic systems makes simulations of large molecules prohibitively expensive, and the high-dimensionality of the resulting trajectories presents a challenge for analysis. Driven by advances in algorithms, hardware, and data availability, there has been a flare of interest in recent years in the applications of machine learning – especially deep learning – to molecular simulation. These techniques have demonstrated great power and flexibility in both extracting mechanistic understanding of the important nonlinear collective variables governing the dynamics of a molecular system, and in furnishing good low-dimensional system representations with which to perform enhanced sampling or develop long-timescale dynamical models. It is the purpose of this article to introduce the key machine learning approaches, describe how they are married with statistical mechanical theory into domain-specific tools, and detail applications of these approaches in understanding and accelerating biomolecular simulation.

GRAPHICAL ABSTRACT

1. Introduction

Classical molecular dynamics (MD) simulation is a workhorse tool for the study of molecular and atomic systems to understand and predict their behaviour by integrating Newton's equations of motion at the molecular scale [Citation1,Citation2]. The essence of the technique is to simulate the dynamical evolution of a molecular system through its phase space spanned by the atomic positions and velocities under a Hamiltonian defining the many-body interaction potential. Analysis of the resulting simulation trajectories provides a means to estimate the structural, thermodynamic, and dynamical properties of the system. Performing a molecular dynamics requires three chief ingredients: an initial system configuration, an interaction potential, and a means to integrate the classical equations of motion. This approach was anticipated in 1812 by Pierre Simon de Laplace's Gedankenexperiment that posited ‘an intelligence which could comprehend all the forces by which nature is animated and the respective positions of the beings which compose it, if moreover this intelligence were vast enough to submit these data to analysis … to it nothing would be uncertain, and the future as the past would be present to its eyes’ [Citation3]. Alder and Wainwright were the first to realise Laplace's ‘clockwork universe’ in 1957 through their pioneering molecular dynamics simulations employing state-of-the-art computers and simulation algorithms to approximate the role of the ‘all-seeing intelligence’ [Citation4,Citation5]. Modern advances in computational hardware and software and force fields constructed from quantum mechanical calculations and precise experimental measurements have enabled simulations of systems of billions [Citation6,Citation7] and even trillions [Citation8] of atoms. However, validated force fields for arbitrary materials and conditions are still lacking, and the inherently serial nature of numerical integration and the requirement for short time steps on the order of femtoseconds to preserve numerical stability have largely limited simulations of non-trivial systems to millisecond time scales [Citation9–11]. Karplus and Petsko elegantly articulated these deficiencies in their 1990 article with their assertion holding equally true today [Citation12]: ‘Two limitations in existing simulations are the approximations in the potential energy functions and the lengths of the simulations. The first introduces systematic errors and the second statistical errors.’ The continued success of MD is critically contingent on progress on both of these fronts and each is an important and active area of research in the field. The present review considers recent advances enabled by machine learning in general, and deep learning in particular, in engaging the second of these challenges.

The statistical errors in structural, thermodynamic, and kinetic properties is fundamentally a sampling problem. Simulation trajectories furnished by standard MD do not offer sufficiently comprehensive sampling of the states or events of interest to provide robust estimations of the properties of interest [Citation11,Citation12]. Proper sampling of the relevant states and transition rates is critical for the success of biomolecular simulations in applications including identification of the native and metastable states of a protein, resolution of protein binding pockets and association free energy of ligands and drugs, prediction of the permeability of membrane modulating peptides, understanding of the mechanisms of protein allostery, prediction of the stable structures and aggregation pathways of self-assembling peptides, and modelling of the activation pathways and kinetics of membrane proteins. Enhanced sampling techniques presently engage this challenge with approaches that fall largely into one of four classes [Citation13–20]. (I) Path sampling techniques that efficiently sample reactive pathways between two pre-defined states. (II) Tempering or generalised ensemble approaches that modify the system Hamiltonian to lower barrier heights and improve sampling of configurational space. (III) Decomposition techniques that break the (configurational) phase space of the system into a number of disjoint metastable states and construct a kinetic model for the dynamical transitions between these states. (IV) Collective variable (CV) biasing techniques that accelerate sampling and barrier hopping along pre-specified order parameters.

The first class of approaches – path sampling – focuses on sampling the interconversion pathways between two defined states of interest, making it less well suited to the global exploration of a previously uncharted configurational space. Recent work by Bolhuis and co-workers combining path reweighting with transition path sampling has, however, demonstrated a means to estimate the underlying free energy surface in the vicinity of the barrier and terminal states [Citation21].

The second class of approaches – tempering – is well suited to systems for which there is very little prior knowledge as to what collective variables are most important in governing the system dynamics, but suffer from the drawback that much computational effort is expended sampling modified Hamiltonians that are generally not of direct interest but serve only to support improved sampling [Citation13].

The third class of approaches – discrete kinetic models – requires the definition of a partitioning of phase space into a set of disjoint metastable states and therefore requires sampling of these thermally-relevant configurations. As such, methods from the second or fourth classes of techniques are profitably employed to efficiently sample the configurational space rather than relying upon the exploration provided by unbiased simulations.

The fourth class of approaches – collective variable biasing – appears to suffer from the deficiency that they presuppose the availability of ‘good’ CVs along which to drive sampling. We define ‘good’ in the sense that driving sampling along these CVs leads to lower variance estimators of the structural, thermodynamic, or kinetic properties of interest [Citation23–26]. As such, these CVs should typically be coincident with or closely related to the important dynamical motions of the system and drive sampling over free energy barriers connecting thermally relevant states that would be rarely be surmounted in unbiased simulations. For all but the simplest systems it is not possible to intuit good CVs [Citation27,Citation28], and accelerating bad CVs that are irrelevant to the important molecular motions can lead to poorer sampling than standard unbiased MD. For this reason, the development of techniques to determine good CVs for enhanced sampling is of ‘paramount concern in the continued evolution of such methods’ [Citation13]. In 2018 we published a review of nonlinear machine learning approaches for data-driven CV discovery [Citation16]. It is the purpose of the present review to provide an update to this fast moving field and illuminate some recent advances in employing tools from machine learning – deep learning in particular – for CV discovery and enhanced sampling. We also direct the interested reader to a number of other recent reviews of machine learning in molecular simulation [Citation29], soft materials engineering [Citation30,Citation31], materials science [Citation32], collective variable identification [Citation33], and enhanced sampling [Citation13].

The structure of this review is as follows. In Section 2, we present a brief survey of some of the most prevalent and powerful machine learning techniques that have found broad adoption within the molecular simulation community. Building upon these fundamentals, in Section 3 we detail recent advances in CV discovery and enhanced sampling enabled by these machine learning tools. We focus our discussion upon biomolecular simulations, and in particular protein folding, where many of these developments and successes have been demonstrated. We will largely focus on all-atom simulations where the sampling problem is most severe, but all techniques discussed may be equally well applied to coarse-grained calculations. Finally, in Section 4 we present our outlook upon emerging challenges and opportunities for the field.

2. Survey of popular machine learning techniques for CV discovery

The data-driven CVs sought for enhanced sampling are those which provide improved statistical estimates of the properties of interest. Typically, these CVs are correlated with the highest-variance or slowest-evolving collective degrees of freedom, and therefore can also provide molecular-level insight and understanding of system properties and behaviour. In principle, enhanced sampling could be conducted in all possible combinations of CVs and those which provide the statistically optimal estimates of the property we seek to estimate declared the ‘best’. Of course the enormous computational cost associated with a blind search in the combinatorial space of all possible CVs entirely defeats the purpose of enhanced sampling to provide efficient and accelerated property estimation. Accordingly, a constructive criterion by which to define and determine a ‘good’ CV is required [Citation34–36]. Putative CVs can be considered order parameters spanning a reduced-dimensional subspace of the molecular configurational space. The quality of the subspace defined by these CVs is frequently scored according to one of two common metrics: high-variance CVs parameterise a subspace that maximally preserves the configurational variance contained within a molecular simulation trajectory [Citation37–39], whereas slow CVs (i.e. highly autocorrelated CVs) span a subspace that maximally preserves the kinetic content.

High-variance CV discovery is more straightforward and amenable to a wide array of established machine learning and dimensionality reduction techniques. Data-driven discovery of these CVs take simulation trajectories as their input, and it is typically possible to apply these techniques to non-time ordered data and data generated by biased sampling where the thermodynamic bias can be exactly cancelled by thermodynamic reweighting [Citation40]. Conceptually, these techniques can be thought of as identifying and parameterising a low-dimensional subspace within the high-dimensional configurational phase space to which the simulation data are approximately restrained [Citation41,Citation42]. We note here the apparent ‘chicken and egg’ problem wherein CV discovery requires simulation trajectories that provide good sampling of the thermally relevant phase space, whereas the generation of such trajectories requires enhanced sampling in good CVs [Citation33]. The solution, of course, is to iterate between rounds of CV discovery and enhanced sampling until convergence of the CVs and phase space exploration [Citation33,Citation37,Citation43,Citation44].

The application of machine learning for high-variance CV discovery was pioneered through the use of linear dimensionality reduction tools such as principal component analysis (PCA) and multidimensional scaling (MDS) [Citation45,Citation46]. However, the inherent linearity of these approaches limited the capabilities in identifying the important nonlinear CVs characteristic of complex molecular systems. In more recent years, nonlinear dimensionality reduction and manifold learning techniques have been employed, including locally linear embedding (LLE) [Citation47,Citation48], Isomap [Citation49–52], local tangent space alignment [Citation53], Hessian eigenmaps [Citation54], Laplacian eigenmaps [Citation55], diffusion maps (DMAPS) [Citation41, Citation44,Citation56–58], sketch maps [Citation38,Citation59,Citation60] and t-distributed Stochastic Neighbor Embedding (t-SNE) [Citation61]. These more powerful techniques have largely superseded linear approaches but do tend to suffer from the absence of an explicit functional mapping of atomic coordinates to the CVs, which can present challenges in interpretability and implementing biased sampling [Citation37,Citation43,Citation44,Citation62, Citation63]. Deep learning techniques based on artificial neural networks have recently emerged as a means to discover high-variance nonlinear CVs that are equipped with explicit and differentiable functional mappings to the atomic coordinates [Citation37,Citation64].

Slow CV discovery tends to be more challenging and approachable with a narrower class of machine learning tools. These approaches are also more restrictive in that they typically require (long) time-ordered trajectories that have been propagated under the unbiased system Hamiltonian. Depending on the particular dynamical propagator that is implemented, approaches do exist to relax the requirement for unbiased trajectories by performing dynamical reweighting of biased simulation trajectories [Citation65–70]. Conceptually, these approaches seek linear or nonlinear functions of the configurational coordinates that are maximally autocorrelated and therefore parameterise the slowest-evolving molecular motions. In general, these approaches owe their mathematical foundations to the properties of the transfer operator (a.k.a. Perron-Frobenius operator or propagator) or its adjoint the Koopman operator [Citation71–83] and associated variational principles such as the variational approach to conformational dynamics (VAC) [Citation84,Citation85] or variational approach to Markov processes (VAMP) [Citation80]. Classical techniques for slow CV discovery include time-lagged independent component analysis (TICA) [Citation86,Citation87] kernel TICA (kTICA) [Citation88,Citation89], dynamical mode decomposition (DMD) [Citation74,Citation90–96], extended dynamical mode decomposition (EDMD) [Citation78,Citation97,Citation98], canonical correlation analysis (CCA) [Citation80,Citation99], Markov state models (MSMs) [Citation19,Citation20], or Ulam's method [Citation71,Citation100–103]. More recent approaches based on deep learning include deep CCA [Citation104], variational approach to Markov processes networks (VAMPnets) [Citation81,Citation105], state-free reversible VAMPnets (SRVs) [Citation106,Citation107], time-lagged autoencoders (TAEs) [Citation108,Citation109], and variational dynamics encoders (VDEs) [Citation109–111].

In the remainder of this section we survey four of the most popular machine learning techniques – ANNs, DMAPS, MSMs, and TICA – that serve as the foundations for many recent methodological developments in high-variance and slow CV discovery and enhanced sampling that we discuss in Section 3.

2.1. Artificial neural networks (ANNs)

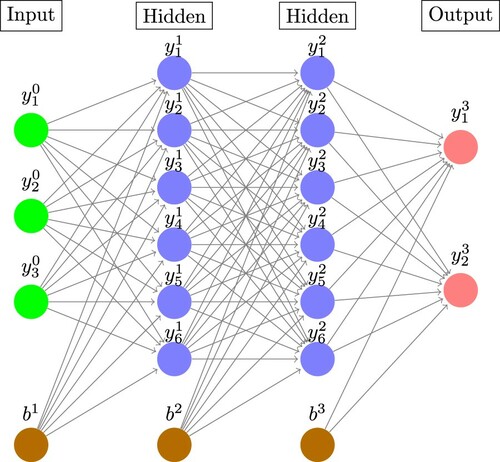

Artificial neural networks (ANNs) are collections of activation functions, or neurons, which are composited together into layers in order to approximate a given function of interest [Citation112]. Their utility and power can be largely attributed to the universal approximation theorem, [Citation113,Citation114], which states that, under mild assumptions, there exists a finite-size neural network that is capable of approximating any continuous function to arbitrary precision. In a fully-connected ANN, the neurons in each layer take as their inputs the outputs from the previous layer, apply a nonlinear activation function, and pass on their outputs to the next layer. A schematic diagram of a three-layer feed-forward fully-connected neural network in Figure . Mathematically, the output from neuron i of fully connected layer k is given by,

(1)

(1) where

and

define the layer weights and biases, respectively. The activation function

is an arbitrary nonlinear function but is often taken to be

or some form of rectified linear unit (ReLU) and is applied element-wise to the input. ANNs are typically trained by minimising an objective function (also called loss function) using some variant of stochastic gradient descent through a process known as backpropagation [Citation115–117].

Figure 1. Schematic diagram of a three-layer fully-connected feed-forward neural network. The output of neuron i from layer k is denoted and the bias node for layer k denoted

. The arrows connecting pairs of neurons are the trainable weights

. The output of each layer is computed from a weighted sum of outputs of the previous layer passed through a nonlinear activation function (Equation (Equation1

(1)

(1) )). (Image constructed using code downloaded from http://www.texample.net/tikz/examples/neural-network with the permission of the author Kjell Magne Fauske.)

Many of the advances in deep learning have been driven by novel network topologies, activation functions, and loss functions adapted to particular tasks. For example, convolutional neural networks capture spatial invariance of local features, a useful feature for image analysis [Citation118–120]. Autoassociative neural networks perform non-linear dimensionality reduction [Citation121]. Generative models, such as variational autoencoders [Citation122] and generative adversarial networks (GANs) [Citation123], are capable of synthesising new, unobserved examples that resemble existing training data. In applications to molecular systems, ANNs have been used to build biasing potentials for enhanced sampling [Citation124–128], fit ab initio potential energy surfaces [Citation129,Citation130] and determine quantum mechanical forces in MD simulations [Citation131], perform coarse graining [Citation132], and generate realistic molecular configurations [Citation133–135]. To highlight a few specific examples, PotentialNet is a novel neural network architecture which uses graph convolutions to encode molecular structures, accommodating permutation invariance and molecular symmetries [Citation136]. SchNet is a variant of a deep tensor neural network that eliminates rotational, translational, and permutational atomic symmetries by construction and has been used to fit molecular potential energy landscapes and molecular force fields [Citation137]. PointNet [Citation138] is a network designed to ingest and process point cloud data for object classification and part segmentation that eliminates permutational invariances by max pooling, and which recently found applications in local molecular structure analysis and crystal structure classification [Citation139]. CGnets learn free energy functions and force fields for coarse-grained molecular representations by fitting against all-atom force data [Citation132]. Boltzmann Generators (BG) employ a synthesis of deep learning, normalising flows, and statistical mechanics to train an invertible network capable of efficiently sampling molecular configurations from the equilibrium distribution [Citation133]. As we will see below, many cutting-edge ML approaches rely upon some form of ANN, and, with increasing frequency, deep neural networks (DNN) comprising many hidden layers.

We note that Boltzmann Generators [Citation133] represent a particularly promising and powerful enhanced sampling technique for molecular systems, and although they do not inherently rely upon the discovery or definition of CVs for their operation we identify strong synergies between these techniques. First, training of BGs generally requires a number of examples of molecular structures from metastable states of the system and CV enhanced sampling techniques may be used to efficiently furnish these training examples starting from nothing more than a single structure and a molecular force field. Second, since BGs can efficiently sample and estimate free energy differences between distantly separated states of the molecular system they may be used to efficiently generate physically realistic transition pathways between metastable states identified by CV enhanced sampling. Third, one mode of BG deployment augments the network loss function with a “reaction-coordinate loss” to promote sampling along a particular direction in phase space. CV discovery techniques can identify good reaction coordinates linking important metastable states of the system. Fourth, CV discovery and enhanced sampling may be conducted within the BG latent space to augment the power of BGs to explore previously unsampled regions of configurational space through the invertible transformation to molecular coordinates.

2.2. Diffusion maps (DMAPS)

Diffusion maps are a dimensionality reduction technique originally proposed by Coifman and Lafon that performs nonlinear dimensionality reduction by harmonic analysis of a discrete diffusion process (random walk) constructed over a high-dimensional dataset [Citation57,Citation140]. The first application of DMAPS to molecular simulations demonstrated its capacity to extract dynamically-relevant collective molecular motions [Citation41], and it has since seen widespread adoption as a method for the analysis of molecular trajectories [Citation28,Citation58,Citation141] and as a component of adaptive biasing methods [Citation43,Citation44,Citation56,Citation63]. Mathematically, DMAPS construct a random walk over the space of molecular configurations recorded over the course of a molecular simulation, which, in the continuum limit, can be shown to correspond to a Fokker-Plank (FP) diffusion process in the presence of potential wells [Citation142]. The leading eigenvectors of the Markov matrix describing the dynamics of the discrete random walk approximate the leading eigenfunctions of the associated backward FP operator describing the most slowly relaxing modes of the diffusion process [Citation140]. The algorithm proceeds by constructing a kernel matrix K defined as,

(2)

(2) where i and j index over molecular configurations,

is a user-defined distance metric such as the translationally and rotationally aligned root mean squared deviation (RMSD) between atomic coordinates, and ε is the user-defined kernel bandwidth which represents the characteristic step size of the random walk over the data [Citation42]. After row-normalising the kernel matrix to conserve hopping probabilities, a spectral decomposition gives eigenvector/eigenvalue pairs that are truncated at a gap in the eigenvalue spectrum. The resultant top k eigenvectors define the CVs spanning the low-dimensional embedding and which parameterise the intrinsic manifold upon which the diffusion process is effectively restrained. The naïve implementation of DMAPS scales quadratically in the number of data points, and so variants with reducted memory and computational costs have been developed, including landmark diffusion maps (L-DMAPS) [Citation143] and pivot diffusion maps (P-DMAPS) [Citation144].

Although a powerful nonlinear dimensionality reduction technique, DMAPS possess at least two limitations in its applications to molecular systems. The first is the assumption of diffusive dynamics over the high-dimensional data, which may or may not be a good approximation of the true molecular dynamics. The second is the absence of an explicit mapping from the atomic coordinates to the low-dimensional CVs. As a result, out of sample extension to new data points outside of the training set require the use of approximate interpolation techniques such as the Nyström extension, Laplacian pyramids, or kriging [Citation145–147]. Further, although the existence of an explicit function mapping is no guarantee of interpretability (consider ANNs), its absence can frustrate interpretability of the CVs. A degree of interpretability can be recovered by correlating the DMAPS CVs with candidate physical variables [Citation41,Citation56], perhaps within an automated search procedure [Citation27, Citation148, Citation149], by projecting representative molecular configurations over the low-dimensional embedding, or by visualising the collective modes in the high-dimensional space [Citation150, Citation151]. The absence of an explicit mapping also precludes the calculation of exact derivates of this expression, which renders diffusion maps incompatible with enhanced sampling methods such as umbrella sampling [Citation152] or metadynamics [Citation23] that require the gradients of the collective variables with respect to the atomic coordinates.

2.3. Markov state models (MSMs)

Markov state models (MSMs) are a powerful framework to gain insight from molecular simulation trajectories, and guide efficient simulations [Citation20,Citation153]. MSMs are widely used for studying many biomolecular processes including protein folding, protein association, ligand binding, and forging connections with experiment [Citation154, Citation155]. Constructing MSMs typically follows the following steps: feature extraction from the molecular simulation trajectory, feature transformation, engineering, and elimination of symmetries (e.g. translation, rotation, permutation), projection of engineered features into a low-dimensional subspace, clustering low-dimensional projections of configurations into microstates, construction of a microstate transition matrix, coarse-graining into macrostates, validation and analysis of the microstate and macrostate kinetic models for thermodynamic and dynamic properties. A schematic illustration of this pipeline is presented in Figure .

Figure 2. Schematic diagram of the Markov state model (MSM) construction and analysis pipeline. (a) Many short molecular dynamics trajectories are collected. (b) The snapshots constituting each trajectory are featurized, projected into a low-dimensional space, and clustered into microstates. Each frame in each trajectory is assigned to a microstate. For illustrative purposes, four microstates are considered and coloured green, blue, purple, and pink. (c) Counting the number of transitions between microstates furnishes the transition counts matrix. (d) Assuming the system is at equilibrium and therefore follows detailed balance, the count matrix is symmetrised and normalised to generate the reversible transition matrix defining the conditional transition probabilities between microstates. (e) The equilibrium distribution over microstates is furnished by the leading eigenvector of the transition probability matrix, here illustrated in a pie chart. States with greater populations are more thermodynamically stable. (f) The higher eigenvectors correspond to a hierarchy of increasingly fast dynamical relaxations over the microstates. The first of these possess a negative entry corresponding to the green state and positive entries for the other states, therefore characterising the net transport of probability distribution out of the green microstate and into the blue, purple, and pink. If desired, the microstates can be further coarse grained into macrostates, typically by clustering of the microstate transition matrix. Image reprinted with permission from Ref. [Citation20]. Copyright (2018) American Chemical Society.

![Figure 2. Schematic diagram of the Markov state model (MSM) construction and analysis pipeline. (a) Many short molecular dynamics trajectories are collected. (b) The snapshots constituting each trajectory are featurized, projected into a low-dimensional space, and clustered into microstates. Each frame in each trajectory is assigned to a microstate. For illustrative purposes, four microstates are considered and coloured green, blue, purple, and pink. (c) Counting the number of transitions between microstates furnishes the transition counts matrix. (d) Assuming the system is at equilibrium and therefore follows detailed balance, the count matrix is symmetrised and normalised to generate the reversible transition matrix defining the conditional transition probabilities between microstates. (e) The equilibrium distribution over microstates is furnished by the leading eigenvector of the transition probability matrix, here illustrated in a pie chart. States with greater populations are more thermodynamically stable. (f) The higher eigenvectors correspond to a hierarchy of increasingly fast dynamical relaxations over the microstates. The first of these possess a negative entry corresponding to the green state and positive entries for the other states, therefore characterising the net transport of probability distribution out of the green microstate and into the blue, purple, and pink. If desired, the microstates can be further coarse grained into macrostates, typically by clustering of the microstate transition matrix. Image reprinted with permission from Ref. [Citation20]. Copyright (2018) American Chemical Society.](/cms/asset/1b52a065-8ca2-451f-8ae5-9d9a07fb437d/tmph_a_1737742_f0002_oc.jpg)

There are many important aspects in each of these steps, a detailed discussion of which can be found in Refs. [Citation20,Citation153,Citation156]. Here we observe a few key points. The chief advantage of MSMs in furnishing long-time kinetic models, is that the simulation data required for their construction need only have reached local equilibrium, in the sense that the transition probabilities between neighbouring microstates are memoryless, allowing the MSM to be constructed exclusively from conditional probabilities that the system appears in state j at time given that system appears in state i at time t [Citation155–158]. This is an extremely valuable property since it alleviates the need for globally equilibrated simulation trajectories that can be exceedingly expensive to generate. As such, MSMs can be constructed from multiple relatively short trajectories that can be performed in parallel and initialised adaptively to provide good sampling of all relevant transitions [Citation156,Citation158]. We also observe that many steps in the MSM construction pipeline profitably employ other machine learning methods. In particular, TICA, SRVs, and VDEs are frequently used in the featurization and dimensionality reduction steps [Citation87,Citation107,Citation110] (see Sections 2.4 and 3.7), spectral clustering is employed to lump microstates into macrostates [Citation159], maximum likelihood estimation used to enforce reversibility [Citation156], and active learning used to adaptively direct sampling of undersampled microstate transitions [Citation153]. VAMPnets and Deep Generative Markov State Models are two recently-proposed approaches that employ deep learning to replace some or all of the MSM parameterisation pipeline for the construction of discrete kinetic models. We discuss these approaches in Section 3.8.

2.4. Time-lagged independent component analysis (TICA)

Time-lagged independent component analysis (TICA) (also known as second order ICA or time-structure based ICA, and equivalent to CCA employing time-lagged data for reversible processes [Citation104,Citation105,Citation108]) is a linear dimensionality reduction method that takes as input a featurization of a molecular simulation trajectory and identifies maximally autocorrelated linear projections along which the dynamical evolution of the system relaxes most slowly [Citation71,Citation84–87,Citation160–164]. This stands in contrast to PCA, which identifies linear projections along which the configurational variance in the simulation trajectory is maximal [Citation45,Citation46,Citation165]. The leading TICA components can be interpreted, within the linear approximation, as the leading ‘slow modes’ whereas the PCA components are the leading ‘high variance modes’. It can be shown that given a (possibly nonlinear) mean-zeroed featurization of the snapshots of a molecular simulation trajectory

with frames recorded at a time interval τ, the expansion coefficients

defining the hierarchy of TICA modes defined by the linear projections

follow from the solution of the following generalised eigenvalue problem [Citation30,Citation84–86],

(3)

(3) where

is a diagonal matrix of ordered eigenvalues

that rank order the corresponding eigenvectors according to an implied time scale

,

is the covariance matrix with elements

,

is the time-lagged covariance matrix with elements

, and the columns of

corresponding to the

hold the expansion coefficients.

The identification of slow modes is particularly important when we are interested in understanding or accelerating kinetic process in molecular systems, for example in protein folding [Citation10] or ligand binding [Citation166]. TICA is commonly used within the MSM pipeline to define slow low-dimensional projections of simulation trajectory data for microstate clustering (Section 2.3). It is known that MSM models built on top of TICA components are generally much better performing than those built upon structural metrics (e.g. root-mean-square deviation of atomic positions) [Citation87]. TICA coordinates have also been used as collective variables in which to conduct enhanced sampling using metadynamics [Citation167, Citation168].

3. Machine learning-enabled advances in collective variable discovery and enhanced sampling

We now proceed to detail a selection of recent advances in collective variable (CV) discovery and enhanced sampling in biomolecular simulations that have been enabled by modern machine learning techniques. The selected applications are mainly taken from the field of biomolecular simulation and largely build upon the foundations established in Section 2.

3.1. Diffusion maps-based enhanced sampling

A number of enhanced sampling techniques have emerged that rely on DMAPS to learn a low dimensional intrinsic manifold characterising the slowest motions of a macromolecular system, then use various schemes to expand the boundaries of the manifold into unexplored regions. One such method is known as diffusion-map-directed molecular dynamics (DM-d-MD). In DM-d-MD, an initial short simulation is carried out, after which the locally-scaled variant of diffusion maps is used to construct the intrinsic manifold [Citation58]. The configuration with the largest value of the first diffusion coordinate is chosen as the new frontier, and a new short simulation is started from that state. This process is repeated until no new regions are discovered. Selection bias towards frontier points perturbs sampling away from the unbiased Boltzmann distribution. In order to reconstruct accurate free energies and sample densities, additional rounds of umbrella sampling are performed at the frontier points and reweighting is employed to recover equilibrium statistics. An extended version of DM-d-MD was subsequently proposed to eliminate the need for the additional umbrella sampling and improve the selection of frontier points [Citation44]. In this extended version, swarms of simulations are initialised, terminated, and restarted over the course of the landscape exploration process to maintain an approximately uniform distribution in the first two DMAPS coordinates. By updating the statistical weights of the trajectories within this kill/spawn process the necessary reweighting factors are available to correct for the bias introduced in the selection of simulation starting points and recover estimates of the unbiased free energy landscape. An application of extended DM-d-MD to alanine-12 demonstrated impressive speedups in exploring the thermally accessible phase space compared to unbiased calculations [Citation44]. The heart of the DM-d-MD method is to accelerate sampling by the smart initialisation of unbiased simulations at the frontier of the explored phase space rather than through the imposition of artificial bias. On the one hand, this is advantageous in that all simulation trajectories evolve under the unbiased system Hamiltonian and therefore obey the true dynamics of the system. On the other hand, the absence of artificial bias means that simulations are reliant on favourable initialisation and thermal fluctuations to drive barrier crossing, so trajectories can be prone to tumble down steep free energy gradients and limit the efficiency of barrier crossing.

A second approach termed intrinsic map dynamics (iMapD) is due to Chiavazzo et al. [Citation63]. Similar to DM-d-MD, short simulations are conducted and embedded using DMAPS. The boundary of this ‘intrinsic map’ is detected and extended outwards by a certain amount using local PCA. This step is critical to iMapD, as it involves the projection of points on the intrinsic manifold into unexplored regions, effectively allowing the system to tunnel through free energy barriers. Since the projected points may lie off-manifold, a lifting step is performed where the new configurations are restrained and the remaining degrees of freedom are relaxed. Once lifting is complete, new rounds of unbiased simulation are initialised from the projected boundary points and the procedure repeated until convergence. An illustration of the operation of iMapD is presented in Figure . An application of iMapD to computationally challenging simulations of the dissociation of the Mga2 dimer demonstrated its capacity to efficiently drive dissociation in just three iterations of the technique where millisecond-long unbiased simulations failed to do so [Citation63]. Whereas DM-d-MD initialised new simulations at the frontier of the currently sampled phase space, iMapD performs a local extrapolation to seed new points beyond the current frontier, offering improved sampling efficiency and the possibility to tunnel through free energy barriers. The optimal size of the outward step can, however, be difficult to determine and, like DM-d-MD, the absence of artificial bias can impair barrier crossing efficiency.

Figure 3. Schematic illustration of iMapD. The curved teal sheet is a cartoon representation of a low-dimensional manifold residing within the high-dimensional coordinate space of the molecular system (black background) and to which the system dynamics are effectively restrained. This manifold supports the low-dimensional molecular free energy surface of system (red contours denote potential wells). The dimensionality of the manifold, good collective variables with which to parameterise it, and topography of the free energy surface are a priori unknown. iMapD commences by running short unbiased simulations to perform local exploration of the underlying manifold and which define an initial cloud of points . Boundary points are identified, here

and

, and local PCA applied to define a locally-linear approximation to the manifold geometry that is locally valid in the vicinity of each point. An outward step is then taken within these linear subspaces, here from

to expand the exploration frontier. The projected point may lie off the manifold due to the linear approximation inherent in the outward projection and so a short ‘lifting’ operation is employed to relax it back to the manifold. This point then seeds a new unbiased simulation that generates a new cloud of points

and the process is repeated until the manifold is fully explored. In this manner iMapD explores the manifold by ‘walking on clouds’. Image adapted with permission from Ref. [Citation63].

![Figure 3. Schematic illustration of iMapD. The curved teal sheet is a cartoon representation of a low-dimensional manifold residing within the high-dimensional coordinate space of the molecular system (black background) and to which the system dynamics are effectively restrained. This manifold supports the low-dimensional molecular free energy surface of system (red contours denote potential wells). The dimensionality of the manifold, good collective variables with which to parameterise it, and topography of the free energy surface are a priori unknown. iMapD commences by running short unbiased simulations to perform local exploration of the underlying manifold and which define an initial cloud of points C(1). Boundary points are identified, here BP1(1) and BP2(1), and local PCA applied to define a locally-linear approximation to the manifold geometry that is locally valid in the vicinity of each point. An outward step is then taken within these linear subspaces, here from BP1(1) to expand the exploration frontier. The projected point may lie off the manifold due to the linear approximation inherent in the outward projection and so a short ‘lifting’ operation is employed to relax it back to the manifold. This point then seeds a new unbiased simulation that generates a new cloud of points C(2) and the process is repeated until the manifold is fully explored. In this manner iMapD explores the manifold by ‘walking on clouds’. Image adapted with permission from Ref. [Citation63].](/cms/asset/c3aa5750-ea24-4fea-bd88-b36f9519c305/tmph_a_1737742_f0003_oc.jpg)

The application of artificial biasing potentials in the collective variables identified by DMAPS is made challenging by the absence of an explicit and differentiable mapping between the atomic coordinates and the DMAPS CVs. The out-of-sample extension techniques discussed in Section 2.2 furnish approximate projections for new data and enable energy biases to be applied in Monte-Carlo simulations as perturbations to the unbiased Hamiltonian conditioned on the current value of the DMAPS CVs [Citation169–171]. The approximations introduced by these extrapolations, however, typically render them too numerically unstable for reliable derivative calculation and the implementation of force biases in molecular dynamics simulation. One solution to this problem is offered by the diffusion nets (DNETS) approach of Mishne et al., who train an ANN encoder to learn a functional map from the atomic coordinates to the low-dimensional DMAPS embeddings [Citation172]. By construction, this map is both explicit and differentiable, opening the door to its use within off-the-shelf molecular dynamics enhanced sampling techniques such as umbrella sampling or metadynamics. The authors also train a ANN decoder to reconstruct molecular configurations from the DMAPS manifold, which may also be useful in ‘hallucinating’ new molecular configurations outside the currently explored phase space that may then be lifted and used to initialise new simulations in the mould of iMapD.

3.2. Smooth and nonlinear data-driven CVs (SandCV)

In a similar spirit to the DNETS approach of Mishne et al. (Section 3.1), Hashemian et al. developed an approach termed smooth and nonlinear data-driven collective variables (SandCV) to estimate explicit and differentiable expressions for CVs discovered by nonlinear dimensionality reduction and then apply bias in these CVs to perform enhanced sampling [Citation39]. In principle, SandCV is compatible with any nonlinear dimensionality technique and enhanced sampling protocol, but it was originally developed to operate with Isomap [Citation50] and adaptive biasing force (ABF) [Citation173]. The heart of SandCV is estimation of the explicit and differentiable function that projects a D-dimensional all-atom Cartesian configuration

into a point

in the d-dimensional Isomap manifold, and d<<D. The mapping

can be conceived of as the composition of three functional maps,

(4)

(4) as illustrated in Figure .

performs alignment of the atomic configuration to (some subset of) the atoms

of a reference structure,

performs a projection of the aligned configuration to the nearest neighbour point within the previously constructed Isomap manifold, and

performs projection of this point into the manifold and is itself the inverse of a function

that is a mapping from the points in the manifold back to the aligned molecular configurations achieved through a basis function expansion in a small number of landmark points. Enhanced sampling is effected by applying biasing forces over the manifold

and propagating these to forces on atoms

through the Jacobian of the mapping function

. SandCV is demonstrated in applications to alanine dipeptide in vacuum and explicit water. In an instance of transfer learning, it is shown that data-driven CVs computed for a simpler system (alanine dipeptide in vacuum) can be applied to a more complex system (alanine dipeptide in water). In a followup publication, the authors propose an extension to SandCV that builds an atlas of locally-valid CVs that are subsequently stitched together, which can be valuable in parameterising complex free energy topologies where different regions of conformational space may require different CVs for their parameterisation [Citation174].

Figure 4. Schematic illustration of SandCV. Molecular configurations are aligned to a reference configuration

then projected onto the Isomap manifold using a nearest neighbour projection and a basis function expansion in a number of landmark points

. Enhanced sampling using adaptive biasing force (ABF) is effected by propagating biasing forces over the manifold

into forces on atoms

through the Jacobian of the explicit and differentiable composite mapping function

. Image reprinted from Ref. [Citation39], with the permission of AIP Publishing.

![Figure 4. Schematic illustration of SandCV. Molecular configurations r are aligned to a reference configuration A(r) then projected onto the Isomap manifold using a nearest neighbour projection and a basis function expansion in a number of landmark points M−1∘P(x). Enhanced sampling using adaptive biasing force (ABF) is effected by propagating biasing forces over the manifold F(ξ) into forces on atoms F(r) through the Jacobian of the explicit and differentiable composite mapping function C(r)=M−1∘P∘A(r). Image reprinted from Ref. [Citation39], with the permission of AIP Publishing.](/cms/asset/ffe90e9b-25ee-43a3-8651-16a2edd0f05b/tmph_a_1737742_f0004_oc.jpg)

SandCV relies on the availability of representative configurations covering the region of configurational phase space of interest since the projection of points onto the manifold is through projection onto nearest neighbours. When no such data are available, SandCV uses initial high-temperature simulations to provide seed configurations for the manifold learning. The subsequent enhanced sampling is then able to interpolatively bridge the gaps between the sparse initial landscape, but it remains undemonstrated as to whether the algorithm can extrapolatively drive sampling into new regions of configuration space.

3.3. Molecular enhanced sampling with autoencoders (MESA)

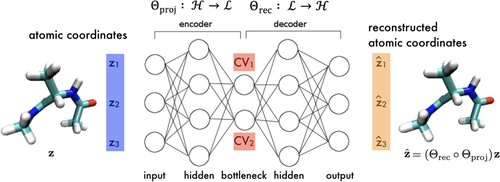

DNETS (Section 3.1) and SandCV (Section 3.2) furnish explicit and differentiable approximations linking the atomic coordinates to the low-dimensional CVs furnished by nonlinear dimensionality reduction, which can subsequently be used to conduct enhanced sampling. Chen et al. proposed an alternative nonlinear dimensionality approach based on deep learning that learns nonlinear CV that possess explicit and differentiable mappings by construction [Citation37,Citation64]. In doing so, the functional estimation step is eliminated and enhanced sampling may be conducted directly in the learned CVs without approximation error. This approach, termed molecular enhanced sampling with autoencoders (MESA), employs an deep neural network (DNN) with an autoencoding architecture or ‘autoencoder’ (AE) comprising an encoder that maps molecular configurations

in a high-dimensional coordinate space

to a nonlinear projection

in a low-dimensional latent space

, and a decoder

that approximates the reverse mapping (Figure ). The network is trained to reconstruct its own inputs (i.e. autoencode) such that

and therefore discover a low-dimensional latent space

defined by the ANN activations in a bottleneck layer that preserves the salient information necessary to perform an approximate reconstruction. The appropriate dimensionality of the latent space, and therefore number of nonlinear CVs required for reconstruction, can be tuned on-the-fly. Since the encoding

is furnished by a ANN it is explicit and differentiable by construction and can be used to propagate biasing forces in the CVs

to forces on atoms

.

Figure 5. Molecular enhanced sampling with autoencoders (MESA). An autoencoding neural network (autoencoder) is trained to reconstruct molecular configurations via a low-dimensional latent space where the CVs are defined by neuron activations within the bottleneck layer. The encoder performs the low-dimensional projection from molecular coordinates

in the high dimensional atomic coordinate space

into the low-dimensional latent space

and the decoder

performs the approximate reconstruction back to

. The encoder furnishes, by construction, an exact, explicit, and differentiable mapping from the atomic coordinates to CVs that can be modularly incorporated into any off-the-shelf CV biasing enhanced sampling technique.

To encourage complete sampling of phase space and improvement of the data-driven CVs, rounds of CV discovery and enhanced sampling are interleaved in an iterative framework comprising successive: (i) learning CVs from simulation trajectories (either the initial unbiased trajectory or biased trajectories obtained from previous iterations of CV biasing) and (ii) applying CV biasing with the learned CV to push the frontier outwards and drive exploration of new regions of phase space using umbrella sampling (but arbitrary CV biasing approaches may be employed). The process is terminated when CVs stabilise between successive rounds and the volume of phase space explores converges. Applications to alanine dipeptide and Trp-cage demonstrate the capacity of the technique to discover, sample, and determine free energy surfaces in nonlinear CVs starting from no prior knowledge of the system [Citation37]. The iterative expansion of the frontier and refinement of CVs as a function of location in phase space is analogous to that in iMapD and DM-d-MD but the application of accelerating biasing forces greatly enhances barrier crossing. The use of the explicit and differentiable mapping to perform enhanced sampling is similar to SandCV but the mathematical framework enabled by ANNs is much simpler and the functional mapping is exact by construction. The use of biasing forces does, of course, corrupt the true dynamics of the system and so dynamical observables (e.g. Markov state models) cannot be straightforwardly extracted from the simulation data. A followup paper shows that tailoring the autoencoder architecture and error functions can help discover better CVs, improve sampling efficiency, and favour the discovery of more stable and interpretable CVs [Citation64].

3.4. Reweighted autoencoded variational Bayes for enhanced sampling (RAVE)

Akin to MESA (Section 3.3), reweighted autoencoded variational Bayes for enhanced sampling (RAVE) due to Ribeiro et al. uses DNNs to learn nonlinear CVs for enhanced sampling [Citation175]. It differs from MESA in that it makes use of variational autoencoders (VAEs) [Citation122], seeks a 1D latent space encoding only the leading CV, and conducts sampling not directly in the discovered CV but in a proxy physical variable (or linear combinations thereof) that maximally resembles that of the CV. The use of VAEs compared to AEs conveys advantages in producing better regularised and continuous latent space embeddings. Identification of a physical variable χ in which to perform sampling is very attractive from an interpretability standpoint, but means sampling is necessarily performed in a proxy for the data-driven CV. The quality of the proxy variable in approximating the discovered CV is contingent on the space of candidate physical variables considered. The probability distribution in the optimal physical variable is then turned into a biasing potential

from which, by virtue of the physical nature of χ for which an explicit relation to the atomic coordinates is known, is straightforwardly converted into biasing forces. An iterative procedure very similar to MESA is then applied to drive system exploration by interleaving rounds of biased simulation and CV learning.

The restriction to single CVs is limiting, but the framework can, in principle, be extended to multidimensional CVs. One way to do so may be to employ β-VAEs to encourage independence of the various CVs [Citation176,Citation177], but an alternative approach is adopted in an extension of the framework known as Multi-RAVE in which a set of locally valid one-dimensional CVs are constructed and the piecewise sum of these position-dependent components is a single-nonlinear CVs spanning relevant configurational space [Citation178]. Numerical experiments with the disassociation of benzene from L99A lysozome predict unbinding free energies in good agreement with experiment.

3.5. Reinforcement learning based adaptive sampling (REAP)

The CVs parameterising configurational space may vary substantially as a function of location over that space. For example, those CVs appropriate to parameterise and enhance configurational sampling in the vicinity of the native fold of a protein may differ significantly from those appropriate for the unfolded ensemble, and protein activation frequently involves two (or more) distinct molecular events parameterised by different CVs that occur in series and result in characteristic ‘L-shaped’ landscapes. By maintaining a sufficiently large ensemble of CVs, one may determine on-the-fly which subset of CVs constitute the active space for enhanced sampling at any given location in phase space. This is the approach taken by reinforcement learning based adaptive sampling (REAP) introduced by Shamsi et al., which employs reinforcement learning (RL) to determine the relative importance of different candidate CVs as a system explores phase space [Citation36]. REAP proceeds by running an initial round of short molecular simulations. The resulting configurations are then clustered, the least-populated clusters identified, and a reward function measuring the normalised absolute distance from the ensemble mean evaluated for each candidate CV for each cluster. An optimisation problem is solved to maximise the overall reward function as a weighted sum of the candidate CVs, and the clusters that offer the highest rewards selected as those from which to harvest configurations to seed a new round of simulations. This process is repeated until sufficient sampling of the phase space is achieved. The key feature of any RL approach is the reward function, which in the case of REAP is designed to maximise discovery of new conformational states. Like RAVE, the success of REAP is contingent on the quality and size of the space of candidate CVs. RL remains one of the less explored areas of ML in applications to molecular simulation, and it remains to be seen what advantages it can bring to adaptive sampling relative to the unsupervised approaches discussed in Sections 3.1–3.4.

3.6. Determining collective variables through supervised learning

Supervised learning is also relatively under-explored in molecular CV discovery relative to unsupervised techniques since the output variables (a.k.a. dependent variables, labels) for which we wish to construct a model in terms of our input variables (a.k.a. independent variables, descriptors, features) are often not obvious or available. Sultan and Pande recently proposed that the (pre-defined) metastable states of a molecular system may be adopted as output variables and supervised learning deployed to construct a pairwise or one-vs-all decision function to discriminate between the states and serve as a CV for enhanced sampling [Citation179]. Such a situation may arise in protein activation where crystal structures for the active and inactive states are available but the activation pathway and mechanism is unknown. The supervised learning task is cast as a classification problem taking as input the atomic coordinates of the molecule in the various states and output as the labels of the states, and which is solved by support vector machines (SVM), logistic regression, and ANNs. The resulting decision function – distance to separating hyperplane for SVM, probability or odds ratio for logistic regression, unnormalised network output for ANN – provides an explicit and differentiable CV that is deployed in metadynamics simulations to drive sampling between states. The approach is demonstrated in applications to alanine dipeptide and chignolin, where it is shown to effectively drive reactive transitions [Citation179]. Success of the approach is predicated on prior knowledge of the relevant states, and, like path sampling, the decision function CVs are inherently interpolative and so can have difficulty driving sampling into unexplored regions of phase space.

Mendels et al. independently developed harmonic linear discriminant analysis (HLDA) and multi class HLDA (MC-HLDA) as a supervised learning approach based on a generalisation of Fisher's linear discriminant [Citation180,Citation181]. The method takes as input the means and covariance matrices within a predefined set of descriptors for K metastable states as measured by short molecular simulations. An optimisation problem is formulated to find the linear projections within the descriptor space that maximise the ratio between the between-state and within-state scatter matrices, which corresponds to maximisation of a Fisher ratio and can be solved via a generalised eigenvalue problem. The linear projections within the descriptor space furnish CVs in which to perform metadynamics enhanced sampling. An application to chignolin demonstrates that the method successfully generates reactive pathways between the folded and unfolded states, although the efficiency of the approach can be sensitive to the user-defined selection of descriptors [Citation182]. Again, this approach requires prior knowledge of the relevant metastable states, and is not designed to drive sampling into new configurational states.

3.7. Transfer operator theory and variational approaches to conformational dynamics

The CV discovery approaches discussed thus far have largely sought to discover high-variance CVs within the configurational phase space using some form of unsupervised, supervised, or reinforcement learning. We now proceed to discuss some recent developments in the data-driven discovery of slow (i.e. maximally autocorrelated) CVs that can often be more mechanistically meaningful and provide superior coordinates for the direct acceleration of the slowest dynamical processes. The theoretical foundations for the determination of these CVs is founded in spectral analysis of the transfer operator that propagates probability distributions over molecular microstates through time [Citation84,Citation85,Citation88,Citation106,Citation162,Citation163]. In an important theoretical development, Noé and Nuske showed that the spectral analysis of the operator can be performed in a data-driven fashion within the variational approach to conformational dynamics (VAC) in the case of equilibrium systems [Citation84,Citation85] or variational approach to Markov processes (VAMP) in the case of non-reversible and non-stationary dynamics [Citation80,Citation81]. These frameworks possess a pleasing parallel with the variational approach to approximate electronic wavefunctions within a given basis set through solution of the quantum mechanical Roothan-Hall equations [Citation183,Citation184]. Full details of the VAC and VAMP can be found in Refs. [Citation80,Citation81,Citation84,Citation85,Citation88,Citation106,Citation185]. Here we briefly survey a number of recently developed machine learning approaches for slow CV discovery that seek to perform data-driven diagonalisation of the transfer operator.

As discussed in Section 2.4, TICA adopts as a basis set a featurization of the atomic coordinates

(in the original TICA formulation

) and solves a generalised eigenvalue problem (Equation (Equation3

(3)

(3) )) to define maximally autocorrelated linear projections within this basis. Kernel TICA (kTICA) is a generalisation of the TICA algorithm described in Section 2.4 that employs the kernel trick to apply the TICA machinery within a nonlinear transformation of the feature space [Citation88]. The nonlinearity of the kernel function provides kTICA with greater expressive power, and the capacity to learn nonlinear slow modes from time-series data with higher fidelity than TICA [Citation88]. As is typical of kernel-based methods, kTICA is computationally expensive and sensitive to kernel selection and hyperparameter choice [Citation88, Citation89, Citation106, Citation110].

Time-lagged autoencoders (TAE) approximate slow CVs by performing nonlinear time-lagged regression using deep learning. Applied in the context of molecular simulation by Wehmeyer and Noé, TAEs employ an autoencoder architecture in which the encoder maps a configuration at time t to a latent encoding

, and the decoder maps

to a time-lagged output

that minimises the time-lagged reconstruction loss to the true time-lagged configuration

[Citation108] (Figure ). The underlying principle of operation is that minimisation of this time-lagged reconstruction loss promotes the discovery of slow CVs as the latent space variables

[Citation109]. The technique is demonstrated in applications to alanine dipeptide and villin protein, and is shown to perform favourably against TICA, particularly when suboptimal molecular featurizations are employed.

Figure 6. Block diagram of a time-lagged autoencoder (TAE). The encoder projects a molecular configuration at time t into a low-dimensional latent embedding

from which a time-lagged molecular configuration

at time

is subsequently reconstructed. For τ = 0 the TAE reduces to a standard AE and the CV discovery process is equivalent to MESA (Section 3.3). Image reprinted from Ref. [Citation108], with the permission of AIP Publishing.

![Figure 6. Block diagram of a time-lagged autoencoder (TAE). The encoder projects a molecular configuration zt at time t into a low-dimensional latent embedding et from which a time-lagged molecular configuration zt+τ at time (t+τ) is subsequently reconstructed. For τ = 0 the TAE reduces to a standard AE and the CV discovery process is equivalent to MESA (Section 3.3). Image reprinted from Ref. [Citation108], with the permission of AIP Publishing.](/cms/asset/a5bce448-1438-4a61-b1d5-4bccb86551d6/tmph_a_1737742_f0006_ob.jpg)

Variational dynamics encoders (VDEs) are a deep learning approach for slow CV discovery first introduced by Hernández et al. [Citation110]. VDEs employ a similar DNN autoencoding architecture as TAEs, but differ in their use of a VAE, as opposed to a standard AE, and a mixed loss function,

(5)

(5) where

is the time-lagged reconstruction loss,

is the autocorrelation of the learned 1D latent space CV

,

is a regularisation term that measures the similarity of the distribution of

in the latent space to a Gaussian distribution, and

is a linear mixing parameter [Citation109]. In an application to the folding of villin protein, VDEs were shown to outperform TICA in the discovery of CVs capable of resolving metastable states and that the VDE latent coordinates produced superior MSMs with slower implied timescales [Citation110].

TAEs and VDEs possess two key limitations. First, they are restricted to the discovery of 1D latent spaces and cannot be applied to learn multiple hierarchical slow modes due to the absence of orthogonality constraints in latent space [Citation109]. Second, the incorporation of the time-lagged reconstruction loss within the loss function compromises the ability of the networks to discover the highly autocorrelated (i.e. slow) modes at the expense of high-variance modes [Citation109]. In general, TAEs and VDEs discover mixtures of maximum variance modes and slow modes [Citation109].

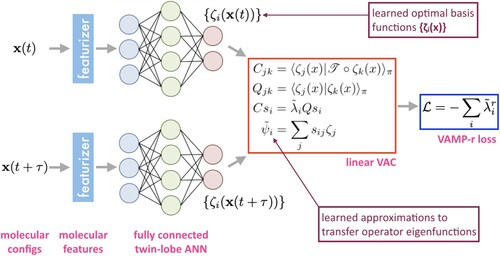

State-free reversible VAMPnets (SRVs) solve both of the deficiencies of TAEs and VDEs for equilibrium systems by employing a variational minimisation of a loss function that maximises the VAMP-2 (or more generally VAMP-r) score measuring the cumulative kinetic variance explained within the subspace of data-driven slow CVs [Citation106]. The VAMP-2 score can be interpreted as the squared sum of the exponentials of the implied timescales of the slow CVs discovered by SRVs, and is guaranteed by the VAC to reach a maximum when the approximated slow CVs are coincident with the true slow CVs of the transfer operator [Citation20,Citation81,Citation162]. SRVs can be conceived of as an application of TICA in which DNNs are employed to learn optimal nonlinear featurizations of the atomic coordinates as a learned basis set that is subsequently passed to the generalised eigenvalue problem (Equation (Equation3(3)

(3) )) [Citation106]. The idea of learning an optimal basis to pass to a linear variational approach was first proposed by Andrew et al. in the context of deep CCA [Citation104] and first applied to molecular simulations in Mardt et al.'s VAMPnets [Citation81].

SRVs employ a twin-lobe neural network that transform pairs of time-lagged molecular configurations into a space of d learned nonlinear basis functions

(Figure ). These basis functions are passed to the linear VAC where solution of the generalised eigenvalue problem furnishes approximations to the transfer operator eigenfunctions as orthogonal linear projections within this basis. The key to the entire approach is the definition of the negative VAMP-r score as a loss function under which the twin-lobed ANN is iteratively trained to learn the nonlinear basis within which linear approximations of the d leading transfer operator eigenfunctions

are computed. Once trained, the ANN and generalised eigenvalue problem define an explicit and differentiable mapping between the atomic coordinates and slow CVs that can be straightforwardly deployed in CV biasing enhanced sampling routines [Citation106]. SRVs have been demonstrated in applications to alanine dipeptide, WW domain, and Trp-cage, and proven to be a simple, efficient, and robust means for slow CV determination that possesses strong theoretical guarantees [Citation106,Citation107]. Moreover, SRVs have been shown to present an excellent and modular replacement for TICA within MSM construction pipelines. The nonlinear SRV latent space presents a kinetically superior latent space for microstate clustering than the linear embeddings furnished by TICA, with the resulting MSMs exhibiting faster implied timescale convergence and higher kinetic resolution than current state-of-the art approaches [Citation107]. Replacement of the VAC within the SRV with the more general VAMP principle serves to extend the approach to non-stationary and non-reversible processes resulting in the more general state-free non-reversible VAMPnets (SNRV).

Figure 7. State-free reversible VAMPnets. Pairs of time-lagged molecular configurations are featurized and transformed by a twin-lobe ANN into a space of nonlinear basis functions

. These basis functions are employed within a linear VAC to furnish approximations

to the leading eigenfunctions of the transfer operator. The twin-lobed ANN is trained to maximise a VAMP-r score measuring the cumulative kinetic variance explained and which reaches a maximum when the eigenfunction approximations are coincident with the true eigenfunctions of the transfer operator.

3.8. Deep learning based MSMs

Noé and co-workers recently proposed two variants of MSMs based on deep learning: VAMPnets [Citation81] and deep generative MSMs (DeepGenMSM) [Citation135]. SRVs (Section 3.7, Figure ) were inspired by VAMPnets and both approaches share a similar twin-lobe network architecture to apply deep CCA [Citation81,Citation104,Citation106]. They differ in two main respects. First, whereas SRVs pass these basis functions to a VAC analysis that is appropriate for approximating the transfer operator eigenfunctions for equilibrium data, VAMPnets pass them to a more general VAMP analysis to approximate the transfer operator singular functions for non-stationary and non-reversible data [Citation81,Citation105]. Second, whereas it is the goal of SRVs to furnish approximations to the leading modes of the transfer operator, it is the goal of VAMPnets to offer an end-to-end replacement for the entire MSM pipeline of featurization, dimensionality reduction, clustering, and kinetic model construction [Citation81]. Integration of these steps within a single framework can be advantageous in helping to avoid the extensive parameter tuning that can plague the various steps in MSM model construction (Section 2.3). VAMPnets achieve this goal by employing softmax activations in the terminal layer of the twin ANN lobes that map a time-lagged pair of molecular configurations to fuzzy state assignments

, where

and

are k-dimensional vectors defined over the k softmax output nodes of the two ANN lobes, and which assign a probability that the molecular configuration should be assigned to one of k metastable macrostates. The instantaneous and time-lagged covariance matrices

and

are then computed and used to estimate the MSM transition matrix between states

[Citation81]. VAMPnets are illustrated in an application to NTL9 where they discovers a 5-state model with kinetic properties on par with a 40-state conventional MSM, thereby illustrating the value of the approach in furnishing more parsimonious, efficient, and interpretable models without compromising kinetic accuracy [Citation81].

DeepGenMSMs are a deep learning approach to not only learn a MSM defined by a discrete transition matrix between metastable states, but also a means to generate realistic molecular trajectories including previously unseen configurations not included in the training data [Citation105,Citation135]. DeepGenMSMs are based on the following representation of the transition density between a configuration at time t and

at time

,

(6)

(6) where

is a normalised vector representing the probability that configuration

exists within each of the

metastable macrostates,

is the vector of ‘landing densities’ where

defines the probability that a system in macrostate i at time t lands in molecular configuration

at time

. The membership probabilities

and landing densities

are learned by training a two-lobe ANN architecture similar to VAMPnets to maximise the likelihood of time-lagged pairs

observed in simulation trajectories (Figure ). The MSM transition matrix

between the m metastable states is furnished by the ‘rewiring trick’ wherein

. In order to generate molecular configurations outside of the training data, it is additionally necessary to train a generator to sample from the density specified by the learned landing densities

,

(7)

(7) where i indexes over the states and ε is i.i.d. random noise sampled from a Gaussian distribution that powers the generator. Applications of DeepGenMSM to alanine dipeptide demonstrate its ability to accurately estimate the long-time kinetics and stationary distributions and also generate molecularly realistic structures including in regions of phase space where no training data was supplied [Citation135].

Figure 8. Deep Generative MSM (DeepGenMSM) and the ‘rewiring trick’. (left) The encoder within the twin-lobe ANN is trained to learn mappings of molecular configurations

to probabilistic memberships

of one of m macrostates. The generator is trained against the learned ‘landing probabilities’

that a system prepared in macrostate i will transition to molecular configuration

after a time τ. (right) The rewiring trick reconnects the generator and encoder to furnish a valid estimate

for the MSM transition matrix between the embedding into the m discrete states learned by the encoder. Image adapted from Ref. [Citation135], with permission from the author Prof. Frank Noé (Freie Universität Berlin).

![Figure 8. Deep Generative MSM (DeepGenMSM) and the ‘rewiring trick’. (left) The encoder χ(x) within the twin-lobe ANN is trained to learn mappings of molecular configurations x to probabilistic memberships y of one of m macrostates. The generator is trained against the learned ‘landing probabilities’ qi(z;τ) that a system prepared in macrostate i will transition to molecular configuration z after a time τ. (right) The rewiring trick reconnects the generator and encoder to furnish a valid estimate K~ for the MSM transition matrix between the embedding into the m discrete states learned by the encoder. Image adapted from Ref. [Citation135], with permission from the author Prof. Frank Noé (Freie Universität Berlin).](/cms/asset/a8651b78-660e-4b44-89c7-36db57fd80f4/tmph_a_1737742_f0008_oc.jpg)

3.9. Software

We list in Table software packages and libraries implementing some of the CV discovery and enhanced sampling methods discussed in this review.

Table 1. Software packages and libraries available for some of the collective variable discovery and enhanced sampling techniques discussed in this review.

4. Conclusion and outlook

It has been the goal of this review to offer a survey of some of the most exciting recent developments and applications of machine learning to collective variable discovery and enhanced sampling in biomolecular simulation. We sought to expose the essence of each method, its advantages and drawbacks, the systems in which it has been applied and demonstrated, and the availability of software implementations. We close with a retrospective assessment of the key milestones in the field and our outlook on emerging challenges and opportunities.

The origins of machine learning for CV discovery can be traced back to pioneering applications of linear dimensionality reduction techniques in the early 1990s. The first major development arrived in the early 2000s with the debut of powerful nonlinear dimensionality reduction tools. The mid-2000s witnessed the emergence of MSMs in the field. The late 2000s and early 2010s saw the introduction of techniques focussed on the discovery of slow as opposed to high-variance CVs. Advances in the past several years have been propelled in large part by deep learning methodologies coming to the fore. ANNs themselves are, of course, not a new idea, with roots dating back to Rosenblatt's perceptron in 1958 [Citation186], but the availability of fast simulation codes (e.g. Gromacs, HOOMD, LAMMPS, NAMD, OpenMM), cheap storage, inexpensive high-performance GPU hardware, and user-friendly neural network libraries (e.g. PyTorch, TensorFlow, Keras) created ideal conditions for this flare of creative new applications and has supercharged the field. There has been a tandem development of enhanced sampling techniques for accelerated sampling of configurational space. Umbrella sampling is one of the earliest techniques that is still in use today [Citation152] and which is itself based on ideas some 10 years prior by McDonald and Singer [Citation187]. There has been an enormous proliferation of techniques since that time, based on a variety of approaches to enhance sampling [Citation188]. Metadynamics [Citation23], itself based on ideas from local elevation [Citation189] and conformational flooding [Citation190], has emerged as one of the most popular, flexible, and robust enhanced sampling techniques [Citation191]. Enhanced sampling approaches have also benefited from the proliferation of deep learning technologies, and there are now a number of examples of ANN-based approaches to build biasing potentials for enhanced sampling [Citation124–128].

Looking forward, we see a number of new frontiers and important challenges for machine learning-enabled CV discovery and enhanced sampling. First, with relatively few exceptions, many of the new tools are developed and tested for relatively small systems, and tend not to be tested in applications to larger systems. Of course it is vital to validate new tools in testbed problems where the ground truth is known a priori, but demonstrating the efficacy of these approaches in applications to large biomolecules of technological, biological, or biomedical import is crucial in proving their potential in the context of impactful and challenging problems.