ABSTRACT

The reliance on the use of virtual learning environments, particularly computer-supported collaborative learning environments, is increasing in higher education institutions. This paper explores the perceived sociability of a virtual learning environment after it was implemented in an interdisciplinary project-based course in a higher education institute in Norway due to the COVID-19 pandemic. It describes the validation of a sociability scale in a sample of 1,611 students. The paper also reports on differences in perceived sociability according to gender, field of study and personality and the extent to which perceptions of sociability relate to the experience of a sudden change from a real-life learning environment to a virtual one. The results of this large-scale study suggest that the Norwegian version of the sociability scale can be used as a valid and reliable instrument to measure the perceived sociability of a virtual learning environment in the context of self-organized student project teams.

1. Introduction

The COVID-19 pandemic triggered an acceleration of online collaboration in work life and education. Due to restrictions and enforced social distancing, face-to-face collaboration was rapidly replaced by the use of a variety of collaboration platforms and online learning management systems. What many people believed to be a short-term state of emergency now seems to have led to lasting changes in education and work life, with increased use of online teaching (Farnell et al., 2021) and new hybrid collaboration practices (Sjølie & Moe, 2021; Smite et al., 2021). In light of these changes, it is imperative that students learn to work in and as teams in distributed online settings, and that teachers, educational researchers and policymakers understand how online environments enable and constrain student collaboration.

Online student collaboration has been shown to have substantial potential for promoting student learning (e.g., Saghafian & O’Neill, 2018; Tseng & Yeh, 2013). The advantages of online learning environments include the flexibility they offer students who cannot regularly attend campus (Allen & Seaman, 2016) and the opportunities they provide for student collaboration across institutions and national borders and thus for exposing students to viewpoints of people from different places (Usher & Barak, 2020). However, online collaboration also brings challenges, particularly those related to the social dimensions of teamwork. Social interaction is more difficult when communication happens digitally (Janssen & Kirschner, 2020; Sjølie et al., 2022). Without the non-verbal communication that can occur in face-to-face interactions, digital interactions are often more formal (Pérez-Mateo & Guitert, 2012), and misunderstandings occur more frequently, which can result in frustration, demotivation and disruption of performance (Oliveira et al., 2011; Usher & Barak, 2020). Alongside feelings of loneliness, unsuitable housing situations and a sense of reduced motivation and effort, reduced and impeded social interaction was found to be among the most pressing concerns among students during the COVID-19 pandemic (Almendingen et al., 2021).

Social interaction is one of the core principles of collaborative learning, and involves both a cognitive and a socio-emotional dimension (Bales, 2001). While the cognitive dimension is primarily task related and refers to the acquisition of knowledge and skills, the socio-emotional dimension refers to the relationships formed within the group and the personal well-being of the members. The socio-emotional dimension of social interaction is typically fostered in non-task contexts and is characterized as more casual than task-related interaction (Kreijns et al., 2013). Some researchers understand the socio-emotional dimension as the interaction that is perceived to go beyond what is strictly necessary to achieve an academic goal (Pérez-Mateo & Guitert, 2012). Research shows that these socio-emotional processes have remained largely neglected in the design of online learning environments. Being primarily designed for productivity, the systems’ functionality with regard to social interaction is generally directed toward cognitive processes and task-related activities (Abedin et al., 2011; Balacheff et al., 2009; Kreijns et al., 2013). Traditionally, online learning has often been only a part of the learning environment and has been combined or alternated with face-to-face meetings. In such a setup, the “in real life” meetings are expected to cater for the social dimension of a collaboration (Lowenthal & Dennen, 2017; Richardson & Swan, 2003). Recognizing the importance of developing online learning environments that nurture the social dimensions of collaboration and facilitate social interaction in both task and non-task contexts, one line of CSCL research has focused on so-called sociable learning environments.

However, recent developments in higher education reveal two gaps in research on online student collaboration. First, limited research has been conducted on learning settings where student teams self-organize their collaboration. Research has mainly focused on how the teacher and technology can facilitate and stimulate social interaction (e.g., Lin, 2020; Radkowitsch et al., 2020; Hämäläinen & Arvaja, 2009). With the increased use of collaborative activities such as project-based learning (e.g., Elken et al., 2020), there is a need to understand the forms that social interactions take in settings where students (primarily) organize and lead themselves. Second, research has largely been conducted in the context of specific platforms, often evaluating the effect of specific tools or environments on students’ learning or group performance or investigating how students interact with particular built-in affordances (Ludvigsen et al., 2021). What this research fails to consider is the recent acceleration in the development of digital tools and platforms and thus the variety of alternatives that student project teams have at their disposal. In addition to universities’ online learning management systems (e.g., Blackboard, Canvas or tailored systems), options include the many different video conference systems, tools to support creative processes (e.g., digital whiteboards), and social platforms for synchronous and asynchronous communication (e.g., Slack, Teams, Discord). These different systems and tools come with different affordances and constraints and can be combined and fitted to the particular task at hand by an individual student team. This diversity opens up new possibilities and offers flexibility, but it also brings with it the need for ways of measuring social interaction and of assessing whether and how the social needs of students are being met.

This study explores the perceived sociability of a virtual learning environment that was suddenly introduced in the context of an interdisciplinary project-based course in a higher education institute in Norway. We briefly explain the conceptual framework of sociability and then present an intensive validation study based on a large sample of 1,611 university students to examine the validity and reliability of the sociability scale. We examine differences in perceived sociability according to gender, field of study and personality. We also examine the extent to which the perception of sociability relates to the experience of a sudden change from a real-life learning environment to a virtual one in which students must largely organize themselves.

2. Theoretical framework

2.1. Social interaction in virtual learning environments

In virtual environments, all social interaction is mediated by computational artifacts (Ludvigsen et al., 2021). These artifacts have affordances that can either enable or disable certain activities and are often part of a larger digital platform, such as a wiki, a learning management system that contains all courses at a university, or a simulation platform that replicates clinical scenarios for medical students. Computational artifacts can be specifically designed to stimulate social interaction between members of a group (Kreijns et al., 2013). Systems that are particularly designed for student collaboration are generally referred to as computer-supported collaborative learning (CSCL) environments.

Based on their earlier work, Kreijns et al. (2013) proposed a framework for understanding social interaction in CSCL environments. This framework was extended by Weidlich and Bastiaens (2017), who developed the so-called SIPS model, which includes four core variables corresponding to the social aspects of a CSCL learning environment: sociability, social interaction, social presence and social space. These variables are concerned with the sociality between fellow students and are characterized as affective in that they generally relate to the socio-emotional dimensions of the learning experience (Weidlich & Bastiaens, 2019). The purpose of this model is to explain the conditions required for a sound social space to emerge within a group. One of these conditions, and the one explored in this paper, is related to the sociability of the virtual learning environment.

Sociability is an attribute of the learning environment as perceived by students. Kreijns et al. (2007) describe it as the extent to which the environment can facilitate the emergence of a sound social space within a group. Social space is defined as “the network of interpersonal/social relationships among group members embedded in the group’s norms and values, rules and roles, beliefs and ideas” (Kreijns et al., 2013, p. 234). It is often associated with concepts like a sense of community, group cohesion and a beneficial learning climate (Weidlich & Bastiaens, 2019). A sound social space is characterized by strong interpersonal relationships, trust, respect, and a strong sense of community (Kreijns et al., 2013). These qualities form the conditions for the creation of an optimal social context for collaborative learning and group performance (Rourke & Anderson, 2002; Rovai, 2002). A sociable learning environment contains social affordances that may initiate, encourage, and sustain the socio-emotional dimension of social interaction (Weidlich & Bastiaens, 2019). One concrete example of a social affordance in a physical context is the coffee machine, where people meet for informal and spontaneous conversations. The assertion is that “the greater the sociability of an environment, the more likely it is that social interaction will take place and that it will result in the emergence of a sound social space” (Kreijns et al., 2007, p. 180).

According to the SIPS model, social interaction can either predict a sound social space directly or via social presence (Weidlich & Bastiaens, 2017). Social presence refers to the degree to which people experience each other as “real” people in mediated communication (Gunawardena & Zittle, 1997). It has been identified as a vital factor in many studies, being positively associated with learning outcomes (Hostetter, 2013; Molinillo et al., 2018), trust (Tseng et al., 2019), satisfaction (Richardson et al., 2017) and the maintenance of positive relational dynamics (Remesal & Colomina, 2013). Social interaction seems to be the most important driver of social presence and of the creation of a sound social space. In contrast to the model developed by Kreijns et al. (2013), the SIPS model presents sociability not as a direct precursor to social presence but as that which exerts its effects through social interaction.

In learning environments where the teacher is mostly absent (e.g., environments based on self-organizing teams), perceived sociability is a critical factor, and this is particularly true of virtual learning environments. In other words, sociable learning environments with both educational and social functionalities fulfil not only the learning needs of the students but also their socio-emotional needs, thereby facilitating a complete learning experience.

To operationalize their framework, Kreijns et al. (2007) developed a self-reporting sociability scale. This scale was preliminarily validated in an exploratory study that evaluated the perceived degree of sociability of a particular CSCL environment. The authors concluded that the sociability scale had the potential to be used as a measure for evaluating the sociability of CSCL environments. Their study sample was small (N = 81), however, so they issued an invitation for other researchers to further validate this instrument. Other scholars have applied the instrument in various contexts. Gao et al. (2010) transformed the sociability scale in their attempt to identify factors that affect the perceived sociability of social software and to examine the impact of sociability on users’ attitudes and behaviour intentions. Abedin et al. (2012) investigated the relation between non-task sociability (in contrast to on-task interactions) of CSCL environments and learning outcomes and created and validated their own instrument, including the items used by Kreijns et al. (2007). However, to the best of our knowledge, the initial instrument has not been validated by other researchers since it was developed; thus, additional validation of the instrument is desirable. Given the innovative yet unexplored character of the instrument, more detailed knowledge of the psychometric quality of the instrument and its (descriptive) outcomes is desirable, especially in the context of self-organized student teams collaborating in online environments.

Learning environments in general, and virtual learning environments in particular, are perceived in divergent ways by their recipients. A recent review by Reis et al. (2018) showed that 90% of studies consider that this variety in perceptions of CSCL environments can be accounted for by personality traits, although the results are still incipient. To increase our understanding, it is useful to take a closer look at how different personality profiles perceive the sociability of a virtual learning environment when validating the instrument.

2.2. Aims and research questions of this study

The purpose of this study was to validate the sociability scale in a new context, that of project-based learning (self-organizing student teams) in an extended virtual learning environment in Norwegian higher education. Given the novel nature of the instrument, it was relevant to look at descriptive results related to student background characteristics, such as gender, field of study and personality. The sudden worldwide impact of the COVID-19 pandemic forced higher education institutes to change to online education. This provided a unique opportunity to examine how this sudden change was experienced by the students and how their experiences correlated with the perceived sociability of the learning environment in which they were operating.

This paper addresses the following three research questions:

RQ1: To what extent can the structure of the sociability scale be confirmed?

RQ2: To what extent are sociability scale scores related to gender, field of study and personality?

RQ3: To what extent are sociability scale scores related to the experience of a sudden change in a virtual learning environment?

The following section explains the context of the study and describes the participants, instruments, data collection and statistical analyses.

3. Methods

3.1. Context

This study was conducted among students enrolled in an interdisciplinary project course, Experts in Teams, at the Norwegian University of Science and Technology. The course included 1,799 students from all faculties at the university, divided into 97 classes of 25–35 and teams of 5–7 students. The teams worked on real-world problems and defined their own projects. No specific guidelines were provided regarding how to distribute team roles and tasks. The teaching staff for each class comprised one faculty member and two learning assistants who had been trained in team facilitation. The students were assessed as a group based on two exam reports, each accounting for 50% of the final grade: one team process report with reflections on situations from their collaboration and one team product report outlining and discussing the project results. One of the main characteristics of the course was its explicit focus on collaboration skills as a learning outcome.

Originally, the course was taught each Wednesday throughout the semester, when the student groups met face to face for a full workday, comprising 15 days in total. Attendance was compulsory, and the entire class of 30 sat in the same classroom, with the teaching staff present most of the time. In March 2020, the students were two-thirds of the way through the course when the university campus was abruptly closed due to the COVID-19 pandemic. Therefore, the final six weeks had to be completed in a fully online setting. The university’s learning management system, Blackboard Collaborate, was used as the main platform. It includes functions such as video meetings, breakout rooms, chats and file sharing. However, the students were free to use additional tools and therefore other tools were used as well, such as Slack, Zoom, Teams, Discord, Trello, Facebook, Messenger and WhatsApp.

3.2. Participants

The data for this study are from students who were enrolled in the course Experts in Teams in the spring semester of 2020. The data are part of a larger research programme examining the nature and effects of group processes. The study sample consisted of 1,611 students (29.1% women, 63.0% men, 7.9% not reported), accounting for a response rate of 89.5%. The students included in the study were from eight different faculties: Architecture and Design, Economics and Management, Engineering, Humanities, Information Technology and Electrical Engineering, Medicine and Health Sciences, Natural Sciences, and Social and Educational Sciences.

3.3. Instruments

The initial sociability scale developed by Kreijns et al. (2007) was used to measure the perceived sociability of virtual learning environments. The instrument has 10 items. Possible answers range from 1 = not applicable at all to 5 = totally applicable (the Norwegian translation can be found in Appendix A). Kreijns et al. (2007) showed that the instrument is unidimensional and has good reliability (Cronbach’s alpha = .93).

The Mini-IPIP is a personality scale based on the five-factor model of personality traits and is a short version of the 50-item IPIP-FFM (Goldberg, 1999). The five traits are extraversion, agreeableness, conscientiousness, neuroticism and intellect/imagination. Each of the five traits is measured with four items. Possible answers range from 1 = very inaccurate to 5 = very accurate. Several studies have shown acceptable to good psychometric properties of the instrument (Cooper et al., 2010; Donnellan et al., 2006), including convergent, discriminant and criterion-related validity and internal consistency.

Finally, a one-item evaluative question was used to allow students to express how they experienced the sudden change to online learning. The question was: How did you experience the period after Experts in Teams became fully digital? Possible answers were very negative, negative, no change, positive or very positive. For the analyses, the two negative categories and the two positive categories were each merged, giving three groups: (very) negative, no change and (very) positive.

3.4. Procedure and data collection

This study follows the guidelines for research ethics (NESH, 2021) and general data protection (GDPR), and approval was provided by the Norwegian Centre for Research Data (NSD). An electronic questionnaire was distributed to all registered students of the course via e-mail. The participants gave their consent to participate after being informed about the study. They were informed that they could withdraw from the study at any time and for any reason.

3.5. Statistical analysis

A number of preliminary tests were run before the process of validating the sociability scale was initiated. Descriptive statistics, Kolmogorov–Smirnov tests of normality and the inter-item correlation matrix were computed using SPSS (v. 28). The unidimensional nature of the instrument was tested by confirmatory factor analysis (CFA) using MPlus, version 8.6 (Muthén & Muthén, 1998–2017). Confirmatory factor analysis affords a stricter interpretation of unidimensionality than can be provided by more traditional methods, such as item–total correlations and exploratory factor analysis (Gerbing & Anderson, 1988). Given the non-normal distribution of the items, the robust maximum likelihood estimation method was used, which yields robust standard errors and robust test statistics for evaluating the models. The following fit indices were used to assess the model fit (cut-off scores are provided in parentheses): the root mean square error of approximation (RMSEA, < .05 to .10), the comparative fit index (CFI, > .90), the standardized root mean square residual (SRMR, < .08), and the Tucker–Lewis index (TLI, > .90) (Hooper et al., 2008; Hu & Bentler, 1999). The χ² statistic was also used but should be interpreted with caution, given its sensitivity to sample size. In the resulting model, the estimated factor loadings should be greater than .5 and the standardized residuals should be lower than 2.0 (Hair et al., 2010).
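The cut-off rules listed above can be expressed as a small check. The following is a minimal sketch, not the authors' procedure: the dictionary layout and the function name `check_fit` are illustrative assumptions, the upper end of the stated RMSEA range (.10) is taken as the acceptance threshold, and the two sets of index values are the ones reported in the Results section for the initial and the modified model.

```python
# Cut-offs as listed in the text; names and structure are illustrative assumptions.
CUTOFFS = {
    "RMSEA": ("max", 0.10),  # assumption: upper end of the stated .05 to .10 range
    "CFI": ("min", 0.90),
    "SRMR": ("max", 0.08),
    "TLI": ("min", 0.90),
}


def check_fit(indices):
    """Return {index name: True/False} depending on whether each cut-off is met."""
    result = {}
    for name, value in indices.items():
        kind, threshold = CUTOFFS[name]
        result[name] = value < threshold if kind == "max" else value > threshold
    return result


# Fit indices reported in the Results section.
initial = check_fit({"RMSEA": 0.112, "CFI": 0.888, "SRMR": 0.054, "TLI": 0.856})
modified = check_fit({"RMSEA": 0.067, "CFI": 0.962, "SRMR": 0.035, "TLI": 0.947})

print("initial model:", initial)
print("modified model:", modified)
```

Under these assumptions, the initial model meets only the SRMR criterion, whereas the modified model meets all four.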

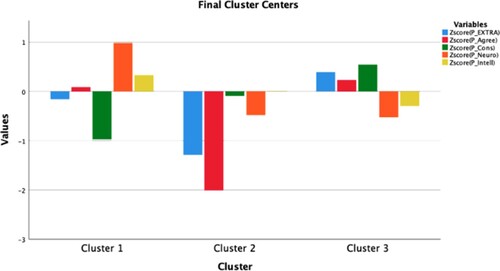

The internal consistency among the items of the scale was calculated using McDonald’s omega and Cronbach’s alpha, the latter to allow comparison with Kreijns et al. (2007). To obtain a comprehensive yet clear overview of personality patterns, non-hierarchical cluster analysis with K-means was applied with SPSS v. 28. The most common and best-known typology of the underlying Big-Five model of personality distinguishes three personality types (De Fruyt et al., 2002). Empirical evidence confirmed this choice (number of iterations, number of participants per group, number of non-significant differences between the clusters). This yielded three distinct groups with diverging personality patterns, containing 458, 298 and 690 cases, respectively. The z-scores of the final cluster centres for the three groups can be found in Table 1 and Figure 1. The results of ANOVA tests showed that the groups differed significantly, and Bonferroni post hoc tests showed that all differences were significant except the neuroticism scores for groups two and three.
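The two internal-consistency coefficients named above can be illustrated with a short sketch. It makes several assumptions: the toy responses and loadings are hypothetical, the function names are ours, and the omega formula is the simple version for a one-factor model with standardized loadings and uncorrelated errors, so it ignores error covariances such as those added to the final model in this study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha; rows is a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def mcdonald_omega(loadings):
    """Omega for a one-factor model with standardized loadings and uncorrelated errors."""
    common = sum(loadings) ** 2
    unique = sum(1 - loading ** 2 for loading in loadings)
    return common / (common + unique)


# Hypothetical toy data: 5 respondents answering 3 items on a 1-5 scale.
toy = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 4, 5], [5, 5, 4]]
alpha = cronbach_alpha(toy)

# Hypothetical standardized loadings, not the values from this study.
omega = mcdonald_omega([0.6, 0.8])

print(f"alpha = {alpha:.3f}, omega = {omega:.3f}")
```

When all loadings are equal, the two coefficients coincide, which is one reason they are often reported side by side.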

Table 1. Final Cluster Centres

4. Results

The descriptive statistics can be found in Table 2. The item means varied from M = 3.71 (Y08, “I feel comfortable with this virtual learning environment”) to M = 2.17 (Y10, “This virtual learning environment enables me to make close friendships with my teammates”). The standard deviations were all above 1, indicating considerable variability in the perceived sociability of the virtual learning environment. The Kolmogorov–Smirnov tests of normality showed a deviation from the normal distribution for some items. Therefore, robust maximum likelihood estimation was used to estimate the fit indices in the confirmatory factor analyses. The fit indices of the initial confirmatory factor analysis showed that the data did not fit the theoretical model sufficiently: chi-square = 735.99 (df = 35, p < .001); RMSEA = .112 [.105, .119]; CFI = .888; SRMR = .054; TLI = .856. The modification indices suggested that adding three error covariances could improve the model fit, namely between items Y09 and Y04, Y09 and Y10, and Y05 and Y06. Regarding content, there were similarities and overlaps between the respective items. For example, items Y09 and Y04 referred to non-task-related and spontaneous informal conversations, while Y10 referred to establishing close relationships with teammates. Items Y05 and Y06 addressed the development of a well-performing team and good working relationships. Allowing these error covariances improved the model fit, leading to acceptable to good fit indices: RMSEA = .067 [.060, .075]; CFI = .962; SRMR = .035; TLI = .947. Chi-square dropped substantially but was still not satisfactory (chi-square = 266.41, df = 32, p < .001).
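As a plausibility check, the reported RMSEA point estimates can be approximately reproduced from the chi-square values. The sketch below uses one common form of the formula, sqrt(max(chi-square - df, 0) / (df * (N - 1))), and assumes that the full sample of N = 1,611 entered the analysis. Software packages differ in details (for example, N versus N - 1, and scaling corrections under robust estimation), so this is an approximation and not the exact computation performed by MPlus.

```python
import math


def rmsea(chi_square, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


N = 1611  # assumption: all respondents contributed to the CFA

initial = rmsea(735.99, 35, N)   # initial one-factor model
modified = rmsea(266.41, 32, N)  # model with three error covariances

print(round(initial, 3), round(modified, 3))  # 0.112 0.067
```

Both values agree with the reported estimates to three decimals, which suggests that the reported chi-square values, degrees of freedom and RMSEA are mutually consistent.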

Table 2. Sociability scale items, means and standard deviations

Table 3 presents an overview of the factor loadings. All but one of the factor loadings were higher than the cut-off score of .5 (Y02 = .430). All standardized residuals were lower than 2.0, the highest being .892 for Y06. Thus, the unidimensional nature of the sociability scale can be confirmed, acknowledging the three added covariances. The internal consistency of the sociability scale was found to be good (McDonald’s omega = .892; Cronbach’s alpha = .892), but lower than the Cronbach’s alpha of .93 previously reported by Kreijns et al. (2007).

Table 3. Standardized loadings of the items of the Sociability scale

After the sum scores were calculated using the factor loading of each item, a t-test (t(1460) = -.542, p = .588) revealed no significant difference with respect to gender (women: M = 19.31, SD = 5.36; men: M = 19.47, SD = 5.41). Table 4 shows the sum score means and standard deviations of the sociability scale by faculty. The highest scoring students were from the Faculty of Architecture and Design (M = 21.32, SD = 5.85). The students with the lowest scores were from the Faculty of Social and Educational Sciences (M = 16.70, SD = 3.97) and the Faculty of Medicine and Health Sciences (M = 17.21, SD = 8.28). Caution should be exercised when interpreting these results given the low number of students from some faculties.

Table 4. Number of students, average weighted sum scores of the sociability scale and standard deviations by faculty (N = 1,611).

Despite the different personality patterns in the three clusters (see Table 1 and Figure 1), no significant differences were found in the sociability scale scores across the three groups (cluster 1: M = 19.19, SD = 5.37; cluster 2: M = 18.97, SD = 5.37; cluster 3: M = 1.54, SD = 5.38).

Experiences of the sudden change of the learning environment from weekly whole-day face-to-face group work to a virtual learning environment differed: 44.7% of the students found this experience (very) negative, 19.6% reported no difference and 35.7% experienced the change as (very) positive. An ANOVA showed significant differences on the sociability scale across these three groups. The means varied from 16.52 (SD = 4.24) for the group that experienced the change as (very) negative to 20.16 (SD = 4.67) for the group that reported no change to 22.66 (SD = 5.07) for the group that reported this as a (very) positive change.

5. Discussion

Online student collaboration in higher education has increased substantially since the beginning of this century, recently also in the context of project-based learning. In such learning activities, students work with real-life problems and organize themselves, and their activities are expected to result in some kind of product (Spronken-Smith & Walker, 2010). It may be assumed that they encounter challenges and constraints in developing team cohesion and effective collaboration that are like those faced by ad hoc project teams in organizations (Fransen et al., 2013). Therefore, to support student learning and group performance in such a context, it is important to listen to the views of students and consider how the sociality between the group members is perceived by the students.

Students’ perception of the sociability of the learning environment is relevant not only for designing virtual learning environments but also for the actual use of the environment in the context of higher education. The sudden change that took place in the daily lives of many students because of the pandemic provided a natural opportunity to study the impact of a transition from face-to-face to online collaboration. We can expect virtual learning environments to continue to be important, and it is possible that the post-COVID-19 period will see permanent and intensive use of digital teaching methods. Therefore, further insights into the relevant actors (i.e., students and teaching staff) and factors and the (dis)advantages and effects of these methods in diverse learning environments are required. This study focuses on the perceived sociability of virtual learning environments. The purpose of the study was to validate an instrument for measuring the perceived sociability of a learning environment that included freedom for students to choose among a variety of tools beyond the CSCL environment (Blackboard Collaborate) that was provided by the university. Three research questions were addressed: (1) To what extent can the structure of the sociability scale be confirmed? (2) To what extent are sociability scale scores related to variables such as gender, field of study and personality? (3) To what extent are sociability scale scores related to the experience of a sudden change in a virtual learning environment?

During the construction and refinement process of the sociability scale, Kreijns et al. (2007) dealt with the content validity to avoid construct underrepresentation and construct irrelevance (Messick, 1995). The initial scale was developed based on the literature from computer-supported cooperative work and human–computer interaction. The authors developed an approach to increase sociability, i.e. “group awareness, communication, and potential for facilitating the creation of a community of learning” (Kreijns et al., 2007, p. 184). To avoid the danger of underrepresentation, the first version of the scale included 34 test items, which, the authors argue, deliberately overrepresented the sociability construct. Redundant items were removed during the subsequent refinement process, considering the relevance of the items. In the next phase, the authors removed items based on a content analysis, i.e. when items addressed the utility or usability aspect of CSCL instead of the sociability aspect. Due to their small sample (N = 79), the authors were only able to test the construct validity by performing principal component analysis using Varimax rotation on the 10 items of the sociability scale. In our study, the large sample size (N = 1,611) allowed us to perform a thorough CFA, using the robust maximum likelihood estimation method, to test the structural validity of the instrument using MPlus v. 8.6 (Muthén & Muthén, 1998–2017). The fit indices showed acceptable to good results and confirmed the unidimensional nature of the instrument. The chi-square value of the final model was not satisfactory (chi-square = 266.41, df = 32, p < .001), but this statistic is very sensitive to sample size (in our case N = 1,611 students), which makes very small differences statistically significant (Bearden, Sharma, & Teel, 1982). In line with the findings of Kreijns et al. (2007), the McDonald’s omega in our study suggests that the internal consistency of the scale is good (omega = .892) and that the instrument is reliable. A further investigation showed no significant differences in the perceived sociability of the virtual learning environment by gender or personality pattern. However, the instrument showed significant differences between groups of students depending on how they perceived the sudden change from a real-life learning environment to an exclusively virtual learning environment. This illustrates the sensitivity of the instrument to this type of change in context.

Despite the strengths of this study, certain limitations that leave room for further validation of the instrument must be mentioned. Although the sample in this study was much larger and included students from a wider variety of disciplines than those of many other validation studies, all the students came from a single university and were enrolled in the same master’s level course. Further analyses with more diverse samples would be beneficial to confirm the robustness of the instrument. We encourage other researchers to use this same instrument, translated into the native language of their students, to facilitate further cross-validation of the instrument, for instance by examining measurement invariance.

Further research that goes beyond the validation of the instrument could provide answers to the following pertinent questions in the field of CSCL. Which group(s) can cope better (or worse) with which type of virtual learning environment in terms of sociability? Which students in terms of gender, field of study and personality traits are at risk? What is the effect of the use of a virtual learning environment on their learning outcomes? What is the impact of the virtual learning environment on interpersonal relationships, group cohesiveness, sense of belonging and social and academic integration? Some of these questions can be answered using the perceived sociability of the virtual learning environment. Therefore, a valid and reliable instrument to measure this is necessary.

6. Conclusion

In many higher education institutions, the reliance on virtual learning environments, particularly CSCL environments, is increasing. This tendency accelerated due to the COVID-19 crisis in 2020 and 2021. During this period, students in most countries across the globe had to rely on digital learning environments for their studies. It is expected that the use of virtual learning environments will continue in the post-pandemic future, including in settings such as project-based learning, where students largely depend on each other and organize themselves as a team. Therefore, the validation of an instrument for measuring the perceived sociability of virtual learning environments from the perspective of students is timely.

The results of this large-scale study suggest that the sociability scale can be used as a valid and reliable instrument to measure the perceived sociability of a virtual learning environment in the context of project-based learning. In line with the invitation issued by Kreijns et al. (2007), we encourage researchers from other countries to use a translated version of the instrument in their own country contexts and to share the data to allow for cross-validation across countries. The continued use of this instrument in learning settings involving self-organized teams will open up opportunities for additional research in the context of CSCL.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 In this paper, the terms group and team are used interchangeably. Although many researchers make a distinction between the two, often building on Katzenbach and Smith’s (1993) definition, the terms are used interchangeably in the literature across disciplines and research contexts. Both terms will therefore be used here, depending on the terminology used in the literature that is referred to.

2 The items of the scale are published with permission from Karel Kreijns.

References

- Abedin, B., Daneshgar, F., & D’Ambra, J. (2011). Enhancing non-task sociability of asynchronous CSCL environments. Computers & Education, 57(4), 2535–2547. https://doi.org/10.1016/j.compedu.2011.06.002

- Abedin, B., Daneshgar, F., & D’Ambra, J. (2012). Do nontask interactions matter? The relationship between nontask sociability of computer supported collaborative learning and learning outcomes. British Journal of Educational Technology, 43(3), 385–397. https://doi.org/10.1111/j.1467-8535.2011.01181.x

- Allen, I. E., & Seaman, J. (2016). Online report card: Tracking online education in the United States. ERIC.

- Almendingen, K., Sandsmark Morseth, M., Gjølstad, E., Brevik, A., & Tørris, C. (2021). Student’s experiences with online teaching following COVID-19 lockdown: A mixed methods explorative study. PLOS One, 16(8). https://doi.org/10.1371/journal.pone.0250378

- Balacheff, N., Ludvigsen, S., Jong, T., Lazonder, A., & Barnes, S. (2009). Technology-enhanced learning: Principles and products (1st ed.). Springer Publishing Company, Incorporated.

- Bales, R. F. (2001). Social interaction systems: Theory and measurement (1st ed.). CRC Press.

- Bearden, W. O., Sharma, S., & Teel, J. E. (1982). Sample size effects on Chi square and other statistics used in evaluating causal models. Journal of Marketing Research, 19(4).

- Cooper, A. J., Smillie, L. D., & Corr, P. J. (2010). A confirmatory factor analysis of the mini-IPIP five-factor model personality scale. Personality and Individual Differences, 48(5), 688–691. https://doi.org/10.1016/j.paid.2010.01.004

- De Fruyt, F., Mervielde, I., & van Leeuwen, K. (2002). The consistency of personality type classification across samples and five-factor measures. European Journal of Personality, 16(1_suppl), S57–S72. https://doi.org/10.1002/per.444

- Donnellan, M. B., Oswald, F. L., Baird, B. M., & Lucas, R. E. (2006). The mini-IPIP scales: Tiny-yet-effective measures of the big five factors of personality. Psychological Assessment, 18(2), 192–203. https://doi.org/10.1037/1040-3590.18.2.192

- Elken, M., Maassen, P., Nerland, M., Prøitz, T. S., Stensaker, B., & Vabø, A. (2020). Quality work in higher education. Springer.

- Farnell, T., Skledar Matijevic, A., & Scukanec Smith, N. (2021). The impact of COVID-19 on higher education: A review of emerging evidence. European Union.

- Fransen, J., Weinberger, A., & Kirschner, P. A. (2013). Team effectiveness and team development in CSCL. Educational Psychologist, 48(1), 9–24. https://doi.org/10.1080/00461520.2012.747947

- Gao, Q., Dai, Y., Fan, Z., & Kang, R. (2010). Understanding factors affecting perceived sociability of social software. Computers in Human Behavior, 26(6), 1846–1861. https://doi.org/10.1016/j.chb.2010.07.022

- Gerbing, D. W., & Anderson, J. C. (1988). An updated paradigm for scale development incorporating unidimensionality and its assessment. Journal of Marketing Research, 25(2), 186–192. https://doi.org/10.1177/002224378802500207

- Goldberg, L. R. (1999). A broad-bandwidth, public-domain, personality inventory measuring the lower-level facets of several five-factor models. In I. Mervielde, I. Deary, F. De Fruyt, & F. Ostendorf (Eds.), Personality psychology in Europe (pp. 7–28). Tilburg University Press.

- Gunawardena, C. N., & Zittle, F. J. (1997). Social presence as a predictor of satisfaction within a computer-mediated conferencing environment. American Journal of Distance Education, 11(3), 8–26. https://doi.org/10.1080/08923649709526970

- Hair, R. E., Babin, B. J., & Black, W. C. (2010). Multivariate data analysis: A global perspective. Pearson Education.

- Hämäläinen, R., & Arvaja, M. (2009). Scripted collaboration and group-based variations in a higher education CSCL context. Scandinavian Journal of Educational Research, 53(1), 1–16. https://doi.org/10.1080/00313830802628281

- Hooper, D., Coughlan, J., & Mullen, M. R. (2008). Structural equation modelling: Guidelines for determining model fit. Electronic Journal of Business Research Methods, 6(1), 53–60.

- Hostetter, C. (2013). Community matters: Social presence and learning outcomes. Journal of the Scholarship of Teaching and Learning, 13(1), 77–86.

- Hu, L. T., & Bentler, P. M. (1999). Cut-off criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55. https://doi.org/10.1080/10705519909540118

- Janssen, J., & Kirschner, P. A. (2020). Applying collaborative cognitive load theory to computer-supported collaborative learning: Towards a research agenda. Educational Technology Research and Development, 68(2), 783–805. https://doi.org/10.1007/s11423-019-09729-5

- Kreijns, K., Kirschner, P. A., & Jochems, W. (2003). Identifying the pitfalls for social interaction in computer-supported collaborative learning environments: A review of the research. Computers in Human Behavior, 19(3), 335–353. https://doi.org/10.1016/S0747-5632(02)00057-2

- Kreijns, K., Kirschner, P. A., Jochems, W., & van Buuren, H. (2007). Measuring perceived sociability of computer-supported collaborative learning environments. Computers & Education, 49(2), 176–192. https://doi.org/10.1016/j.compedu.2005.05.004

- Kreijns, K., Kirschner, P. A., & Vermeulen, M. (2013). Social aspects of CSCL environments: A research framework. Educational Psychologist, 48(4), 229–242. https://doi.org/10.1080/00461520.2012.750225

- Lin, G.-Y. (2020). Scripts and mastery goal orientation in face-to-face versus computer-mediated collaborative learning: Influence on performance, affective and motivational outcomes, and social ability. Computers & Education, 143, 103691. https://doi.org/10.1016/j.compedu.2019.103691

- Lowenthal, P. R., & Dennen, V. P. (2017). Social presence, identity, and online learning: Research development and needs. Distance Education, 38(2), 137–140. https://doi.org/10.1080/01587919.2017.1335172

- Ludvigsen, S., Lund, K., & Oshima, J. (2021). A conceptual stance on CSCL history. In U. Cress, C. Rosé, A. F. Wise, & J. Oshima (Eds.), International handbook of computer-supported collaborative learning (pp. 45–63). Springer International Publishing.

- Messick, S. (1995). Validity of psychological assessment: Validation of inferences from persons’ responses and performances as scientific inquiry into score meaning. American Psychologist, 50(9), 741–749. https://doi.org/10.1037/0003-066X.50.9.741

- Molinillo, S., Aguilar-Illescas, R., Anaya-Sánchez, R., & Vallespín-Arán, M. (2018). Exploring the impacts of interactions, social presence and emotional engagement on active collaborative learning in a social web-based environment. Computers & Education, 123, 41–52. https://doi.org/10.1016/j.compedu.2018.04.012

- Muthén, L. K., & Muthén, B. O. (1998–2017). Mplus user’s guide (8th ed.). Muthén & Muthén.

- NESH. (2021). Guidelines for research ethics in the social sciences, humanities, law and theology. Retrieved February 2, 2021, from https://www.forskningsetikk.no/retningslinjer/hum-sam/forskningsetiske-retningslinjer-forsamfunnsvitenskap-og-humaniora/

- Oliveira, I., Tinoca, L., & Pereira, A. (2011). Online group work patterns: How to promote a successful collaboration. Computers & Education, 57(1), 1348–1357. https://doi.org/10.1016/j.compedu.2011.01.017

- Pérez-Mateo, M., & Guitert, M. (2012). Which social elements are visible in virtual groups? Addressing the categorization of social expressions. Computers & Education, 58(4), 1234–1246. https://doi.org/10.1016/j.compedu.2011.12.014

- Radkowitsch, A., Vogel, F., & Fischer, F. (2020). Good for learning, bad for motivation? A meta-analysis on the effects of computer-supported collaboration scripts. International Journal of Computer-Supported Collaborative Learning, 15(1), 5–47. https://doi.org/10.1007/s11412-020-09316-4

- Reis, R. C. D., Isotani, S., Rodriquez, C. L., Lyra, K. T., Jaques, P. A., & Bittencourt, I. I. (2018). Affective states in computer-supported collaborative learning: Studying the past to drive the future. Computers & Education, 120, 29–50. https://doi.org/10.1016/j.compedu.2018.01.015

- Remesal, A., & Colomina, R. (2013). Social presence and online collaborative small group work: A socioconstructivist account. Computers & Education, 60(13), 357–367. https://doi.org/10.1016/j.compedu.2012.07.009

- Richardson, J. C., Maeda, Y., Lv, J., & Caskurlu, S. (2017). Social presence in relation to students’ satisfaction and learning in the online environment: A meta-analysis. Computers in Human Behavior, 71, 402–417. https://doi.org/10.1016/j.chb.2017.02.001

- Richardson, J. C., & Swan, K. (2003). Examining social presence in online courses in relation to students’ perceived learning and satisfaction. JALN, 7(1), 68–88.

- Rourke, L., & Anderson, T. (2002). Exploring social communication in computer conferencing. Journal of Interactive Learning Research, 13(3), 259–275.

- Rovai, A. P. (2002). Sense of community, perceived cognitive learning, and persistence in asynchronous learning networks. The Internet and Higher Education, 5(4), 319–332.

- Saghafian, M., & O’Neill, D. K. (2018). A phenomenological study of teamwork in online and face-to-face student teams. Higher Education, 75(1), 57–73. https://doi.org/10.1007/s10734-017-0122-4

- Sjølie, E., Espenes, T. C., & Buø, R. (2022). Changes in social interaction among self-organizing student teams following a transition from face-to-face to online learning. Computers & Education, 189, 104580. https://doi.org/10.1016/j.compedu.2022.104580

- Sjølie, E., & Moe, N. B. (2022). Work from X. Den digitale hverdagen [work from X. Digital collaboration in a hybrid everyday life]. In A. Rolstadås, A. Krokan, G. E. D. Øien, M. Rolfsen, G. Sand, H. Syse, L. M. Husby, & T. I. Waag (Eds.), Den digitale hverdagen (pp. 227–240). John Grieg Forlag.

- Smite, D., Tkalich, A., Moe, N. B., Papatheocharous, E., Klotins, E., & Buvik, M. P. (2021). Changes in perceived productivity of software engineers during COVID-19 pandemic: The voice of evidence. Journal of Systems and Software, 186, 111197. https://doi.org/10.1016/j.jss.2021.111197

- Spronken-Smith, R., & Walker, R. (2010). Can inquiry-based learning strengthen the links between teaching and disciplinary research? Studies in Higher Education, 35(6), 723–740. https://doi.org/10.1080/03075070903315502

- Tseng, H. W., & Yeh, H.-T. (2013). Team members' perceptions of online teamwork learning experiences and building teamwork trust: A qualitative study. Computers & Education, 63, 1–9. https://doi.org/10.1016/j.compedu.2012.11.013

- Tseng, H. W., Yeh, H.-T., & Tang, Y. (2019). A close look at trust among team members in online learning communities. International Journal of Distance Education Technologies, 17(1), 52–65. https://doi.org/10.4018/IJDET.2019010104

- Usher, M., & Barak, M. (2020). Team diversity as a predictor of innovation in team projects of face-to-face and online learners. Computers & Education, 144, 103702. https://doi.org/10.1016/j.compedu.2019.103702

- Weidlich, J., & Bastiaens, T. J. (2017). Explaining social presence and the quality of online learning with the SIPS model. Computers in Human Behavior, 72, 479–487. https://doi.org/10.1016/j.chb.2017.03.016

- Weidlich, J., & Bastiaens, T. J. (2019). Designing sociable online learning environments and enhancing social presence: An affordance enrichment approach. Computers & Education, 142, 103622. https://doi.org/10.1016/j.compedu.2019.103622