Abstract

Background and aims

Computer-aided polyp detection (CADe) may become a standard for polyp detection during colonoscopy. Several systems are already commercially available. We report on a video-based benchmark technique for the first preclinical assessment of such systems before comparative randomized trials are to be undertaken. Additionally, we compare a commercially available CADe system with our newly developed one.

Methods

ENDOTEST consisted of two datasets. The validation dataset contained 48 video snippets with 22,856 manually annotated images, of which 53.2% contained polyps. The performance dataset contained 10 full-length screening colonoscopies with 230,898 manually annotated images, of which 15.8% contained a polyp. Assessment parameters were accuracy for polyp detection and time delay to first polyp detection after polyp appearance (FDT). Two CADe systems were assessed: a commercial CADe system (GI-Genius, Medtronic) and a self-developed new system (ENDOMIND). The latter is a convolutional neural network trained on 194,983 manually labeled images extracted from colonoscopy videos recorded mainly in six different gastroenterologic practices.

Results

On the ENDOTEST, both CADe systems detected all polyps in at least one image. The per-frame sensitivity and specificity in full colonoscopies were 48.1% and 93.7%, respectively, for GI-Genius, and 54% and 92.7%, respectively, for ENDOMIND. The median FDT of ENDOMIND, 217 ms (interquartile range (IQR) 8–1533), was significantly shorter than that of GI-Genius, 1050 ms (IQR 358–2767, p = 0.003).

Conclusions

Our benchmark ENDOTEST may be helpful for preclinical testing of new CADe devices. A shorter FDT appears to be associated with a higher sensitivity and a lower specificity for polyp detection.

Introduction

Improvement of surveillance colonoscopy for the prevention of colorectal cancer (CRC) has always been a field of intensive research in gastrointestinal endoscopy. By detection and subsequent resection of adenomatous polyps, patients are protected from cancer development. Thus, the adenoma detection rate (ADR) was established as a validated marker of colonoscopy quality [Citation1]. An increase in ADR results in a decrease in interval carcinoma [Citation2]. Still, the miss rate of neoplastic lesions is unacceptably high, with great variability among individual endoscopists [Citation3, Citation4]. The implementation of artificial intelligence systems for CADe resulted in increased ADRs in multiple prospective, mostly single-center, randomized trials [Citation5]. Although surveillance colonoscopy is usually an outpatient procedure, the training data of those systems mainly rely on colonoscopy videos recorded in in-hospital settings [Citation6–8]. Several commercial CADe systems have already entered the market [Citation9–11]. GI Genius (Medtronic plc., Dublin, Ireland) was one of the first commercial CADe systems in Europe. The multicenter randomized study by Repici et al. reported an increase in ADR of 14.4 percentage points compared with colonoscopies performed without the CADe system [Citation10]. However, a direct comparison of different CADe systems using the same benchmark data has, to our knowledge, never been done. Therefore, in this study we generated ENDOTEST, a dataset that allows the comparison of different polyp detection systems. ENDOTEST includes polyp and non-polyp video sequences, as in previous studies [Citation12–14], but it also includes full-length colonoscopies annotated frame by frame for polyps.

There is a wide variety of colonoscopy databases that are fully annotated for the presence of polyps. CVC-VideoClinicDB was provided in the context of the GIANA sub-challenge that was part of the MICCAI 2017 Endoscopic Vision Challenge. This data set contains 18,733 frames from 18 videos without ground truth and 11,954 frames with ground truth [Citation15]. The SUN Colonoscopy Video Database was developed by Mori Laboratory and contains 49,135 fully annotated polyp frames from 100 different polyps. It also contains 109,554 non-polyp frames [Citation13]. The largest and most diverse is the LDPolypVideo dataset, which contains 160 colonoscopy video sequences and 40,266 frames with polyp annotations [Citation14]. However, to our knowledge, there is no publicly available dataset containing full-length colonoscopies annotated frame by frame for the presence of a polyp.

In addition, in this work we also introduce ENDOMIND, a publicly funded investigator-initiated project of artificial intelligence applications for polyp detection in screening colonoscopy. ENDOMIND was developed by computer engineers as well as endoscopists in the same work group.

The aim of this study is to describe the validation and performance comparison of the newly developed CADe system ENDOMIND, trained with multicentric outpatient colonoscopy videos, and the commercially available CADe system GI Genius, using a defined benchmark data set, ENDOTEST, that contains screening colonoscopies.

Methods

Training data set of ENDOMIND

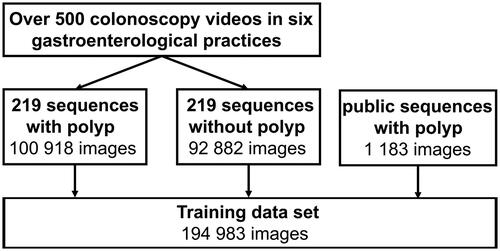

Videos from routine colonoscopies were recorded in six gastroenterologic practices in Germany. The endoscopic processors were Olympus CV-170 and CV-190 (Olympus Europa SE & Co. KG, Hamburg, Germany), Pentax i7000 (Pentax Europe GmbH, Hamburg, Germany), and Storz TC301 (Karl Storz SE & Co. KG, Tuttlingen, Germany). The recordings included retrospectively and prospectively collected endoscopic videos from January 2018 to May 2021. Over 500 colonoscopy videos were screened for polyps. Subsequently, 219 video sequences comprising 500 to 2000 images displaying one polyp were extracted. Additional sequences of the same length from the same video, showing normal mucosa, residual stool, bubbles, or water irrigation, were labeled accordingly and added to the training data.

Polyps were manually classified according to the estimated size (<5 mm, 5–10 mm, >10 mm) and Paris classification [Citation16]. A representative subset of polyps was chosen for box annotation, which included a frame-by-frame annotation of the visible polyp. This was performed by an experienced endoscopist using a custom-made annotation tool as previously described [Citation17]. Annotation of the 219 sequences with and without a visible polyp resulted in 194,983 labeled images out of which 52.4% contained a polyp. Those images were used as training set for the development of ENDOMIND ().

ENDOMIND training

For polyp detection, an artificial intelligence (AI) model was trained using a deep learning algorithm pre-trained on 1,183 publicly available polyp images [Citation18–20] (Supplementary material). Deep learning refers to a machine learning method that uses artificial neural networks with numerous intermediate layers, forming an extensive internal structure between the input and output layers. We used the YOLOv5 architecture [Citation21]. Different techniques were used to further enhance YOLOv5. Firstly, we used early stopping during training. Early stopping is a form of regularization to prevent overfitting in iterative machine learning methods. Overfitting improves performance on the training dataset but impairs generalization to real-world scenarios. Secondly, we used a dropout value of 30%. When the network is trained, 30% of the neurons in each layer of the network are randomly turned off (“dropout”) and not considered for the upcoming computational step. This technique also prevents overfitting of the model. Thirdly, we trained with different image augmentations. In deep learning, augmenting image data means using various processes to modify the original image data. For data augmentation, we used techniques including flipping, rotating, translation, scaling, and mosaic augmentation [Citation22]. ENDOMIND can be downloaded for research purposes using this link: https://www.ukw.de/research/inexen/ai-for-polyp-detection/
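The early stopping rule described above can be illustrated with a minimal sketch. The source does not state which stopping criterion or monitored quantity was used for ENDOMIND, so the validation-loss criterion, the function name `early_stopping_epoch`, the `patience` and `min_delta` parameters, and the loss values below are all assumptions made for illustration only.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the 0-based epoch at which training would stop.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs. If that never
    happens, the index of the last epoch is returned.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # New best validation loss: reset the patience counter.
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1


# Hypothetical validation losses: improvement stalls after epoch 3.
losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.57, 0.58, 0.40]
stop = early_stopping_epoch(losses, patience=3)
```

In this hypothetical run, training halts before the late improvement in the last epoch is ever seen, which is the usual trade-off when choosing a small patience value.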

Video based benchmark data set (ENDOTEST)

ENDOTEST was composed of two subsets of video-based images (or frames): the validation and the performance dataset. Both were developed from retrospective recordings of colonoscopies from two centers (University Hospital Ulm and Würzburg); these recordings are distinct from the data used for ENDOMIND training. Both centers used the Olympus CV-190 endoscopy processor. In one center, the recordings were performed using a video frame grabber (SDI2USB 3.0, Epiphan Systems Inc.) and stored with the same input resolution and minimal compression. Later, the video recordings were processed by GI Genius using an image converter (HA5, AJA Video Systems Inc.). In the second center, both signals (raw and GI Genius processed signal) were simultaneously recorded in real-time.

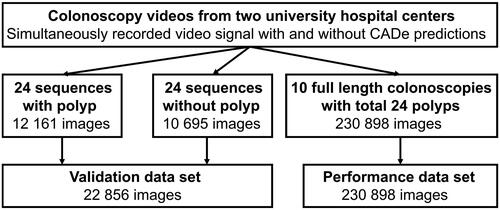

The validation dataset consists of a balanced dataset of 24 polyp and corresponding non-polyp sequences comprising a total of 22,856 images that were manually annotated by marking a bounding box over polyps (). In the validation dataset, we considered the balance of 1:1 to be appropriate, as many publicly available datasets are evaluated on, e.g., only polyp images and therefore have a ratio of 1:0 [Citation12,Citation13]. However, in general, equally balanced polyp and non-polyp video sequences do not resemble reality, where visible polyps make up a minority of the total examination length. Therefore, 10 additional full colonoscopy videos were manually annotated by an experienced board-certified gastroenterologist, who defined whether each frame contained a polyp or not. Criteria for the selection of the videos included screening as the indication for the colonoscopy, the presence of at least one adenomatous polyp, and a good bowel preparation with a BBPS score of 6 or higher. Annotation resulted in 230,898 images for the performance dataset. 15.8% of these images contained polyps. Those 24 polyps are characterized in . The performance dataset provides a more realistic scenario.

Figure 2. ENDOTEST components including the validation and performance data set for the head-to-head comparison of the GI Genius and ENDOMIND.

Table 1. Characteristics of the 24 polyps included in the 10 videos of the performance data set.

Validation of ENDOMIND and GI Genius

The manually annotated boxes using the raw colonoscopy video signal were defined as ground truth. For evaluation of the GI Genius system, the 24 polyp and corresponding non-polyp sequences were processed by the GI Genius device in real-time. The video output of GI Genius was recorded to be annotated in a second step. The resulting bounding box containing frames were reviewed by an experienced endoscopist. A frame was considered as true positive (TP) in case of overlap by the CADe bounding box and the annotated box. The absence of a CADe bounding box in a polyp-containing frame counted as a false negative (FN). A false positive detection (FP) was defined as a CADe bounding box that was not in contact with the manually annotated box.
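The frame-labeling rules above can be sketched as follows. This is an illustrative reading of the text, not the authors' evaluation tool: boxes are assumed to be axis-aligned `(x1, y1, x2, y2)` tuples, "overlap" is assumed to mean a shared area greater than zero, a frame with at least one overlapping CADe box is assumed to count as TP even if other boxes are present, and frames with neither annotation nor CADe box are assumed to be true negatives (TN). The names `boxes_overlap` and `classify_frame` are hypothetical.

```python
def boxes_overlap(a, b):
    """True if two axis-aligned boxes (x1, y1, x2, y2) share any area."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    return ax1 < bx2 and bx1 < ax2 and ay1 < by2 and by1 < ay2


def classify_frame(ground_truth_box, cade_boxes):
    """Label one frame as 'TP', 'FP', 'FN' or 'TN'.

    ground_truth_box: annotated polyp box, or None for a non-polyp frame.
    cade_boxes: list of boxes drawn by the CADe system (may be empty).
    """
    if not cade_boxes:
        # No CADe box: a miss on a polyp frame, otherwise a true negative.
        return "FN" if ground_truth_box is not None else "TN"
    if ground_truth_box is not None and any(
        boxes_overlap(ground_truth_box, box) for box in cade_boxes
    ):
        return "TP"
    # A CADe box was drawn but none touches an annotated polyp.
    return "FP"


# Hypothetical boxes in pixel coordinates.
label = classify_frame((10, 10, 50, 50), [(40, 40, 80, 80)])
```

Note that under this reading a polyp frame whose only CADe box lies elsewhere in the image is counted as FP rather than FN; a stricter protocol could count it as both.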

In contrast, the ENDOMIND algorithm was directly applied to every single frame of the raw colonoscopy videos for polyp detection. Both CADe systems were analyzed for accuracy, per-frame specificity, per-frame sensitivity, precision, and F1-score.

Performance of ENDOMIND in comparison with GI Genius

For performance evaluation, the full-length colonoscopies were processed by the GI Genius device creating videos with bounding boxes. All frames with visible bounding boxes (CADe detections) were automatically identified by a custom-made application.

ENDOMIND for polyp detection was applied to the raw colonoscopy video signal using the performance data set. For performance analysis, a video frame was defined as TP if a CADe bounding box appeared in a frame that had been manually annotated to contain a polyp. Polyp-containing frames without a CADe bounding box were considered FN. A frame was considered FP if the CADe system drew a bounding box on a video frame without a visible polyp.
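For the full-length videos, the rule above depends only on two per-frame flags: whether the frame was annotated as containing a polyp and whether a CADe bounding box was present. A minimal sketch of the tally, assuming both flags are available as equally long boolean lists (the function name `count_outcomes` and the six-frame example are hypothetical), could look like this:

```python
from collections import Counter


def count_outcomes(has_polyp, has_detection):
    """Tally TP/FP/FN/TN over two equally long per-frame boolean sequences."""
    if len(has_polyp) != len(has_detection):
        raise ValueError("both sequences must cover the same frames")
    counts = Counter({"TP": 0, "FP": 0, "FN": 0, "TN": 0})
    for polyp, detected in zip(has_polyp, has_detection):
        if polyp:
            counts["TP" if detected else "FN"] += 1
        else:
            counts["FP" if detected else "TN"] += 1
    return dict(counts)


# Hypothetical six-frame clip; the polyp is in view in frames 2 to 4.
annotated = [False, False, True, True, True, False]
detected = [False, True, False, True, True, False]
outcomes = count_outcomes(annotated, detected)
```

Unlike the box-overlap rule used for the validation sequences, this tally does not check where in the image the CADe box was drawn.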

A direct comparison of both CADe systems was performed using the standard metrics accuracy = (TP + TN)/(TP + TN + FP + FN), per-frame specificity = TN/(TN + FP), per-frame sensitivity (recall) = TP/(TP + FN), precision = TP/(TP + FP), and F1-score = 2*precision*recall/(precision + recall), calculated from the TP, FP, FN, and true negative (TN) values. Furthermore, videos were analyzed for the median first detection time. FDT was defined for each polyp as the time between the first appearance of the polyp in a video and the first marking with a bounding box by the CADe system.
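The five formulas above translate directly into code. The following sketch assumes the four frame counts are already available; the function name `frame_metrics` and the example counts are hypothetical and are not taken from the study.

```python
def frame_metrics(tp, fp, fn, tn):
    """Per-frame metrics as defined in the text, returned as fractions."""

    def ratio(numerator, denominator):
        # Return None instead of dividing by zero on degenerate inputs.
        return numerator / denominator if denominator else None

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    if precision is None or recall is None or precision + recall == 0:
        f1 = None
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "specificity": ratio(tn, tn + fp),
        "sensitivity": recall,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical counts, not taken from the study.
metrics = frame_metrics(tp=80, fp=10, fn=20, tn=90)
```

Because accuracy includes TN while precision and F1-score do not, the two aggregate values can rank the same pair of systems differently when non-polyp frames dominate.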

Statistical analysis

Statistical analysis was performed using IBM SPSS Statistics 28. The Wilcoxon signed-rank test was performed to test for significant differences between the paired groups. A p-value of <.05 indicated statistical significance.
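For readers who want to reproduce a paired comparison outside SPSS, the following is a minimal standard-library sketch of a two-sided Wilcoxon signed-rank test. It uses the normal approximation with a tie correction and no continuity correction, which may differ from the exact procedure SPSS applies; the function name `wilcoxon_signed_rank` and the paired values are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (w_plus, w_minus, p_value). Zero differences are dropped and
    tied absolute differences receive their average rank.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        # Find the end of the group of tied absolute differences.
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        group_size = j - i + 1
        tie_term += group_size**3 - group_size
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w_plus, w_minus, p_value


# Hypothetical paired detection times (ms) for five polyps.
system_a = [200, 50, 900, 30, 1500]
system_b = [1000, 400, 800, 600, 2500]
w_plus, w_minus, p = wilcoxon_signed_rank(system_a, system_b)
```

With very small samples such as this five-pair example, an exact test would be preferable to the normal approximation.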

Ethical considerations

The study including retrospective and prospective collection of examination videos and reports was approved by the responsible institutional review board (Ethical committee Stuttgart, 21 January 2021, F-2020-158). The study was registered at the German Clinical Trial Register (26 March 2021, DRKS-ID: DRKS00024150). Signed informed consent from each patient for data recording was obtained for the prospective data collection.

Results

Both systems detected all polyps in both data sets in at least one image. A summary of the comparison of both systems for both data sets included in ENDOTEST with five standard metrics is shown in . ENDOMIND had a significantly higher per-frame sensitivity (recall) but lower per-frame specificity and precision in both data sets compared to GI Genius. This is reflected in a mostly continuous detection of polyps by ENDOMIND in every frame, whereas GI Genius often stops detecting the same polyp intermittently. This results in a flickering bounding box. See Supplementary video as an example. In aggregate metrics that combine false positives and false negatives, ENDOMIND led with 85.7% accuracy and 86.6% F1-score compared to GI Genius (79% accuracy and 76% F1-score) in the balanced validation data set, while GI Genius was partially better in the unbalanced performance data set with 89.1% accuracy and 45.8% F1-score compared to ENDOMIND (85% accuracy and 45.8% F1-score). The rate of false positive detections in the full-length colonoscopies was 6% for ENDOMIND and 2% for GI Genius.

Table 2. Comparison of ENDOMIND with GI Genius regarding accuracy, precision, specificity, sensitivity and F1-score using the validation data set with balanced polyp and non-polyp images and the performance data set with more non-polyp images in the video sequences.

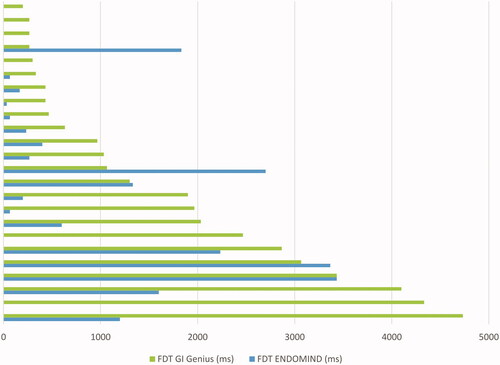

An important factor besides the recognition of a neoplastic lesion is how fast an algorithm detects a polyp and alerts the examiner, or directs the examiner via the bounding box, to examine a region more closely. We evaluated this period as FDT in the performance data set. ENDOMIND had a significantly shorter median FDT of 217 milliseconds (ms; interquartile range (IQR) 8–1533 ms) compared with 1050 ms (IQR 358–2767 ms) for GI Genius (p = .003). ENDOMIND detected 79.2% of the polyps faster than GI Genius ().
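The FDT measurement can be sketched from per-frame flags as follows. The source does not state the video frame rate or the quartile method used, so the `fps` parameter, the "inclusive" quartile method, the function names `first_detection_time_ms` and `summarize`, and all example values are assumptions for illustration.

```python
import statistics


def first_detection_time_ms(has_polyp, has_detection, fps):
    """FDT for one polyp clip: ms from first polyp frame to first detection.

    Returns None if the polyp never appears or is never detected while
    it is in view. Detections before the polyp appears are ignored.
    """
    try:
        first_visible = has_polyp.index(True)
    except ValueError:
        return None
    for frame in range(first_visible, len(has_polyp)):
        if has_polyp[frame] and has_detection[frame]:
            return (frame - first_visible) * 1000.0 / fps
    return None


def summarize(fdts_ms):
    """Return (median, Q1, Q3) of a list of FDT values in ms."""
    q1, _, q3 = statistics.quantiles(fdts_ms, n=4, method="inclusive")
    return statistics.median(fdts_ms), q1, q3


# Hypothetical clip at an assumed 25 frames per second.
polyp_visible = [False, False, True, True, True, True]
cade_box = [False, True, False, False, True, True]
fdt = first_detection_time_ms(polyp_visible, cade_box, fps=25)

# Hypothetical FDT values (ms) for five polyps.
summary = summarize([0.0, 40.0, 80.0, 120.0, 400.0])
```

At 25 frames per second one frame corresponds to 40 ms, so any FDT measured this way is quantized to multiples of the frame interval.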

Discussion

In this work we introduce ENDOTEST, a novel video-based benchmark dataset that includes polyp sequences and full-length screening colonoscopies with at least one adenomatous polyp. In addition, we have compared our recently developed CADe system, ENDOMIND, with a commercially available CADe system. ENDOMIND has been developed as part of a publicly funded investigator-initiated project. Besides the usage of data obtained from routine colonoscopies in an outpatient setting in gastroenterological practices, we aimed to evaluate the CADe system using a head-to-head comparison with the commercially available CADe system GI Genius with the software version available in March 2020. To our knowledge, there is no previous study comparing different CADe systems.

As a basis for our deep learning algorithm, we chose YOLOv5 [Citation21]. YOLOv5 offers two significant advantages: First, it is an end-to-end neural network and thereby fast and easy to use in a real-time system. Thus, it enables the system to run during the clinical routine. Second, YOLOv5 is one of the AI models with a very high detection rate while not overfitting detection box accuracy. Finding the polyp is considered more important than drawing the bounding box 100% precisely around the edges of the polyp. YOLOv5 is an algorithm maximizing those aspects.

Beside the new algorithm used for ENDOMIND, we focused on optimal training data. We decided not to include single images of polyps generated for the endoscopic report since those images often present a cleaned polyp viewed from an optimal angle. This does not reflect the look of a polyp at its first appearance. Thus, AI might not learn how to recognize polyps at this early stage of visualization. Our training data consists exclusively of videos. Accordingly, the performed annotation of polyps included the earliest time point of polyp appearance. Additionally, the endoscopies were performed in gastroenterological practices. This is in contrast to many other CADe systems where training data mainly relies on colonoscopy videos recorded in an in-hospital setting [Citation6,Citation8,Citation23]. The vast majority of the screening colonoscopies are performed in gastroenterological practices. Therefore, usage of data coming from this source as training data for the development of polyp detection systems might be better suited for the intended purpose.

Validation data sets used for different CADe systems vary largely [Citation6,Citation23,Citation24]. The proportion of polyp and non-polyp images is mostly balanced. However, differences regarding polyp morphology and size between those validation data sets prevent direct benchmarking. Still, the performance of ENDOMIND on the validation dataset of polyp sequences is comparable to other published CADe systems with a per-frame sensitivity of 82%–86% and specificity of 86%–89% on publicly available datasets. Indeed, using just still images of polyps results in a markedly higher sensitivity and specificity [Citation25]. Because validation data sets of commercially available CADe systems are not publicly available, we applied the already CE-approved CADe system GI Genius to our data set. The direct comparison showed a markedly higher per-frame sensitivity of ENDOMIND, with inferiority in regard to per-frame specificity and precision and a higher accuracy score. As with many screening tools, CADe developers prioritize sensitivity [Citation23,Citation26]. Therefore, polyp images are overrepresented in publicly available validation data sets, and results derived from those datasets are difficult to extrapolate to performance in real-life use. To overcome this issue, ENDOTEST additionally included full-length screening colonoscopies that directly resemble situations in the daily work of an endoscopist. In both evaluation data sets, ENDOMIND has a much higher per-frame sensitivity (recall), while GI Genius showed superior per-frame specificity. If the data set contains many polyp frames, as in the validation data set, this results in better aggregate values like accuracy and F1-score for ENDOMIND, and if the data set contains more images without polyps, as in the performance data set, GI Genius has better aggregate values.

Since ENDOMIND has been trained with a data set where roughly half of the images contained a polyp, this behavior could have been expected. Additionally, our data set ENDOTEST provides high quality compared to other open-source databases like SUN Colonoscopy Video Database, LDPolypVideo-Benchmark, and CVC-ClinicVideoDB [Citation12–14]. Those datasets include sequences of polyps rather than full-length colonoscopies. These polyp sequences are usually recorded after polyp identification with subsequent cleaning of the polyp and therefore do not provide a realistic scenario of the intervention. As ENDOTEST includes full-length colonoscopies, we provide a more realistic approach and therefore consider our data to be of higher quality. Furthermore, the first crucial frames of polyp appearance are included, which allows the FDT to be measured. ENDOTEST is therefore divided into two subsets: the classic evaluation using video sequences, and the full-length colonoscopies that represent a more realistic scenario.
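The dependence of the aggregate values on the share of polyp frames follows from a short identity: for a fixed sensitivity and specificity, accuracy = prevalence × sensitivity + (1 − prevalence) × specificity, where prevalence is the fraction of polyp-containing frames. The sketch below illustrates this with two hypothetical systems; their sensitivity and specificity values are invented for illustration and are not the study results.

```python
def expected_accuracy(sensitivity, specificity, prevalence):
    """Accuracy implied by fixed sensitivity/specificity at a given prevalence."""
    return prevalence * sensitivity + (1 - prevalence) * specificity


def expected_precision(sensitivity, specificity, prevalence):
    """Precision implied by fixed sensitivity/specificity at a given prevalence."""
    true_positive_share = prevalence * sensitivity
    false_positive_share = (1 - prevalence) * (1 - specificity)
    return true_positive_share / (true_positive_share + false_positive_share)


# Hypothetical systems: one tuned for sensitivity, one for specificity.
sensitive = {"sensitivity": 0.90, "specificity": 0.80}
specific = {"sensitivity": 0.70, "specificity": 0.95}

# Balanced data (half of the frames show a polyp).
balanced = (
    expected_accuracy(prevalence=0.5, **sensitive),
    expected_accuracy(prevalence=0.5, **specific),
)
# Unbalanced data (15% of the frames show a polyp).
unbalanced = (
    expected_accuracy(prevalence=0.15, **sensitive),
    expected_accuracy(prevalence=0.15, **specific),
)
```

In this hypothetical example the sensitivity-tuned system has the higher accuracy on balanced data, while the specificity-tuned system leads once polyp frames become rare; precision of the sensitivity-tuned system also falls sharply at low prevalence.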

The main reason behind optimizing sensitivity (recall) over specificity and precision was that the system should alert physicians to polyps they might miss during a colonoscopy. Such polyps are visible for a short time period only, much shorter than in the videos used in the performance evaluation, where the polyps were detected by the physicians and therefore were in view for an extended period of time. If it takes longer to detect a polyp, the polyp might go out of view and remain undetected. Therefore, the FDT is a key indicator of the benefit of a CADe. Here, ENDOMIND detected 79.2% of the polyps faster than GI Genius. This is a direct consequence of the higher sensitivity (recall) of ENDOMIND and comes at the cost of more false alarms, as the lower precision of ENDOMIND shows. However, we regard a type II error of missing a polyp as more severe than a type I error of a false alarm. Yoshida et al. described that doubling the withdrawal velocity dramatically decreases the per-lesion sensitivity of the CADe system CAD EYE (Fujifilm Europe GmbH, Düsseldorf, Germany) [Citation27]. Indeed, withdrawal time varies among endoscopists [Citation28], with an impact on ADR. Thus, a faster detection of a polyp visible only for a fraction of a second might help to urge the examiner to revisit the polyp location. The lower sensitivity of GI Genius results in inconsistent recognition of polyps with short interruptions that are visible as a flickering signal (Supplementary video).

A field for future improvement of ENDOMIND is the comparatively low precision, corresponding to the lower specificity and the higher FP rate compared to GI Genius. Pfeifer et al. showed a similar rate of FP in the commercially available CADe system Discovery AI (Pentax Europe GmbH, Hamburg, Germany) [Citation9]. A high rate of FP detections might induce the endoscopist's distrust of the CADe system. The examiner might thereby inspect bounding boxes less thoroughly. Still, compared to the GI Genius validation study published by Hassan et al., the current GI Genius FP rate in our dataset is higher [Citation24]. This once more illustrates the effect of different evaluation data sets. A uniform evaluation dataset used to measure the performance of different CADe systems is therefore needed.

Our future focus is on adding video sequences of normal mucosa to the training data in order to reduce the high rate of FP detections. Additionally, applying a threshold that suppresses short detections that the CADe flags with only low precision might help. Alternatively, a different deep learning network architecture could be chosen, as in a recently published study with only minimal false positive detections [Citation29]. Another point of future interest would be to compare the results obtained here with results on other publicly available datasets in order to assess the quality of ENDOTEST.
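One possible reading of such a threshold is a minimum-duration filter that discards detection runs lasting fewer than a given number of consecutive frames. The sketch below is only an illustration of that idea, not the authors' planned method; the function name `suppress_short_detections`, the `min_frames` parameter, and the example sequence are hypothetical, and a confidence-score threshold would be an alternative interpretation.

```python
def suppress_short_detections(has_detection, min_frames):
    """Blank out detection runs shorter than `min_frames` consecutive frames."""
    filtered = list(has_detection)
    run_start = None
    # A trailing False acts as a sentinel that closes a run at the end.
    for i, detected in enumerate(list(has_detection) + [False]):
        if detected and run_start is None:
            run_start = i
        elif not detected and run_start is not None:
            if i - run_start < min_frames:
                for k in range(run_start, i):
                    filtered[k] = False
            run_start = None
    return filtered


# Hypothetical per-frame CADe flags with two short flashes and one stable run.
raw = [True, False, False, True, True, True, False, True, True]
stable = suppress_short_detections(raw, min_frames=3)
```

This version works offline on a finished recording. In a real-time setting, a box could only be shown after `min_frames` consecutive detections, so the gain in precision would be paid for with a longer FDT.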

A limitation of our study might be the differing CADe analysis of the data sets. The FDT of the CADe GI Genius might have been influenced by the delay due to the input and output of the video signal into the device, whereas ENDOMIND predictions were made on a high-performance computer on every video frame without the delay described above. We estimate the delay of ENDOMIND during real-time endoscopy to be further increased by 25 ms. Liu P et al. reported a delay of 20 ms for their CADe system [Citation26], which is at the same level as the 50 ms reported for a CAD system by Byrne et al. [Citation30]. Additional evaluation with a complete computer setup of the CADe system directly attached to the endoscopy processor is therefore needed in the next step to overcome the mentioned limitations. Additionally, although not observed, the minimal compression of the videos during the recording of the colonoscopies could affect the CADe system, since the signal does not originate directly from the endoscopy processor.

Conclusion

In this work, we have used the generated ENDOTEST database to compare two computer-aided polyp detection systems. ENDOTEST contains full-length screening colonoscopies, annotated frame by frame for the presence of a polyp. Therefore, it resembles a more realistic scenario than previously existing databases and allows the calculation of the crucial parameter FDT. In addition, the developed CADe system prototype ENDOMIND has shown promising performance and sensitivity in this preliminary evaluation when compared with a commercially available CADe system. However, further training data is needed to increase the precision and avoid false alarms. In general, there is a lack of common definitions of quality measures of annotation and validation. Benchmark data sets for validation of CAD systems could overcome these limitations. Even more important, we currently lack realistic data sets for evaluation containing undetected polyps that are presented only for a short period of time, since their detection is the main purpose of the systems.

Author contributions

DF, AK, JT, FP and AH: study concept and design. DF, AK and JT: performed the experiments. DF, AK, JT, TR, FP and AH: interpretation of results, and drafting of the manuscript. DF, FP and AH: statistical analysis. DF, JT, MB, BS, WB, and WGZ: acquisition of data. All authors: critical revision of the article for important intellectual content and final approval of the article.

Acknowledgements

The authors acknowledge the support by Prof. J.F. Riemann, "Stiftung Lebensblicke", the Foundation for early detection of colon cancer.

Disclosure statement

The authors report there are no competing interests to declare.

References

- Kaminski MF, Robertson DJ, Senore C, et al. Optimizing the quality of colorectal cancer screening worldwide. Gastroenterology. 2020;158(2):404–417.

- Corley DA, Jensen CD, Marks AR, et al. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. 2014;370(14):1298–1306.

- Zhao S, Wang S, Pan P, et al. Magnitude, risk factors, and factors associated with adenoma miss rate of tandem colonoscopy: a systematic review and meta-analysis. Gastroenterology. 2019;156(6):1661–1674.e11.

- Brenner H, Altenhofen L, Kretschmann J, et al. Trends in adenoma detection rates during the first 10 years of the German screening colonoscopy program. Gastroenterology. 2015;149(2):356–366.e1.

- Hassan C, Spadaccini M, Iannone A, et al. Performance of artificial intelligence for colonoscopy regarding adenoma and polyp detection: a meta-analysis. Gastrointest Endosc. 2020;93(1):77–85.

- Liu W-N, Zhang Y-Y, Bian X-Q, et al. Study on detection rate of polyps and adenomas in artificial-intelligence-aided colonoscopy. Saudi J Gastroenterol. 2020;26(1):13–19.

- Wang P, Liu X, Berzin TM, et al. Effect of a deep-learning computer-aided detection system on adenoma detection during colonoscopy (CADe-DB trial): a double-blind randomised study. Lancet Gastroenterol Hepatol. 2020;5(4):343–351.

- Su J-R, Li Z, Shao X-J, et al. Impact of a real-time automatic quality control system on colorectal polyp and adenoma detection: a prospective randomized controlled study (with videos). Gastrointest Endosc. 2020;91(2):415–424.e4.

- Pfeifer L, Neufert C, Leppkes M, et al. Computer-aided detection of colorectal polyps using a newly generated deep convolutional neural network: from development to first clinical experience. Eur J Gastroenterol Hepatol. 2021;33(1S Suppl 1):e662–e669.

- Repici A, Badalamenti M, Maselli R, et al. Efficacy of real-time computer-aided detection of colorectal neoplasia in a randomized trial. Gastroenterology. 2020;159(2):512–520.e7.

- Weigt J, Repici A, Antonelli G, et al. Performance of a new integrated computer-assisted system (CADe/CADx) for detection and characterization of colorectal neoplasia. Endoscopy. 2022;54(2):180–184.

- Bernal J, Sánchez FJ, Fernández-Esparrach G, et al. WM-DOVA maps for accurate polyp highlighting in colonoscopy: validation vs. saliency maps from physicians. Comput Med Imaging Graph. 2015;43:99–111.

- Misawa M, Kudo S-E, Mori Y, et al. Development of a computer-aided detection system for colonoscopy and a publicly accessible large colonoscopy video database (with video). Gastrointest Endosc. 2021;93(4):960–967.e3.

- Ma Y, Chen X, Cheng K, et al. 2021. LDPolypVideo benchmark: a large-scale colonoscopy video dataset of diverse polyps. In de Bruijne M, Cattin PC, Cotin S, Padoy N, Speidel S, Zheng Y, Essert C (eds) Medical image computing and computer assisted intervention – MICCAI 2021. Springer International Publishing, Cham, pp 387–396.

- Angermann Q, Bernal J, Sánchez-Montes C, et al. 2017. Towards real-time polyp detection in colonoscopy videos: adapting still frame-based methodologies for video sequences analysis. In: Cardoso Arbel T, Luo X, Wesarg S, Reichl T, González Ballester MÁ, McLeod J, Drechsler K, Peters T, Erdt M, Mori K, Linguraru MG, Uhl A, Oyarzun Laura C, Shekhar R (eds) Computer assisted and robotic endoscopy and clinical Image-Based procedures. Springer International Publishing, Cham, pp 29–41.

- Endoscopic Classification Review Group. Update on the Paris classification of superficial neoplastic lesions in the digestive tract. Endoscopy. 2005;37:570–578.

- Krenzer A, Makowski K, Hekalo A, et al. Semi-automated machine learning video annotation for gastroenterologists. Stud Health Technol Inform. 2021;281:484–485.

- Ali S, Braden B, Lamarque D, et al. 2020. Endoscopy Disease Detection and Segmentation (EDD2020).

- Jha D, Smedsrud PH, Riegler MA, et al. 2020. Kvasir-SEG: a segmented polyp dataset. In: Ro YM, Cheng W-H, Kim J, Chu W-T, Cui P, Choi J-W, Hu M-C, De Neve W (eds) MultiMedia modeling. Springer International Publishing, Cham, pp 451–462.

- Vázquez D, Bernal J, Sánchez FJ, et al. A benchmark for endoluminal scene segmentation of colonoscopy images. J Healthc Eng. 2017;2017:4037190.

- Redmon J, Farhadi A. 2018. YOLOv3: An Incremental Improvement. arXiv:1804.02767 [cs].

- Shorten C, Khoshgoftaar TM. A survey on image data augmentation for deep learning. J Big Data. 2019;6(1):60.

- Wang P, Xiao X, Glissen Brown JR, et al. Development and validation of a deep-learning algorithm for the detection of polyps during colonoscopy. Nat Biomed Eng. 2018;2(10):741–748.

- Hassan C, Wallace MB, Sharma P, et al. New artificial intelligence system: first validation study versus experienced endoscopists for colorectal polyp detection. Gut. 2020;69(5):799–800.

- Wang D, Zhang N, Sun X, et al. 2019. AFP-Net: realtime anchor-free polyp detection in colonoscopy. In 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI). IEEE, Portland, OR, USA, pp 636–643.

- Liu P, Wang P, Glissen Brown JR, et al. The single-monitor trial: an embedded CADe system increased adenoma detection during colonoscopy: a prospective randomized study. Therap Adv Gastroenterol. 2020;13:1756284820979165.

- Yoshida N, Inoue K, Tomita Y, et al. An analysis about the function of a new artificial intelligence, CAD EYE with the lesion recognition and diagnosis for colorectal polyps in clinical practice. Int J Colorectal Dis. 2021;36(10):2237–2245.

- Benson ME, Reichelderfer M, Said A, et al. Variation in colonoscopic technique and adenoma detection rates at an academic gastroenterology unit. Dig Dis Sci. 2010;55(1):166–171.

- Livovsky DM, Veikherman D, Golany T, et al. Detection of elusive polyps using a large-scale artificial intelligence system (with videos). Gastrointest Endosc. 2021;94(6):1099–1109.e10.

- Byrne MF, Chapados N, Soudan F, et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut. 2019;68(1):94–100.