Abstract

The ever-changing nature of online learning foregrounds the limits of separating research from design. In this article, we take the difficulty of making generalizable conclusions about designed environments as a core challenge of studying the educational psychology of online learning environments. We argue that both research and design can independently produce empirically derived knowledge, and we examine some of the configurations that allow us to simultaneously invent and study designed online learning environments. We revisit design-based research (DBR) methods and their epistemology, and discuss how they contribute various types of usable knowledge. We further contend that design researchers can acknowledge their intent without compromising objectivity and, in so doing, promote ways in which research and design can not only produce better interventions but also transform people and systems.

Design and research: Two ways to make change in the world

The contingency of artificial phenomena has always created doubts as to whether they fall properly within the compass of science. Sometimes these doubts are directed at the… difficulty of disentangling prescription from description. This seems to me not to be the real difficulty. The genuine problem is to show how empirical propositions can be made at all about systems that, given different circumstances, might be quite other than they are. (Simon, 1969, p. x)

Educational psychology has helped bridge the gap between the understanding of the human mind and the inherently applied field of education, in which educators must continuously make decisions about their teaching and learning aims. The theory of action in this relationship is simple: if people better understand how the mind works, they can better shape teaching to meet its constraints. The presumed division of labor has researchers in educational psychology “(i) adopting a psychological perspective to human problems; (ii) uncovering mediating/psychological variables which link particular situations with specific outcomes; (iii) employing psychological knowledge to create explanatory models of complex human problems; (iv) using evidence based strategies for change; and (v) sharing and promoting big ideas from psychology” (Cameron, 2006, p. 289), while others develop and enact instruction, ideally using insights from psychologists.

However, psychology research does not completely specify how to optimally educate (although some brave people have claimed otherwise). Even if psychological theory could specify thinking as well as Newton’s laws can specify the movement of objects, the thorny problem of defining initial conditions (e.g., prior knowledge, affective state, etc.) would still exist. Even when researchers zoom out and squint a bit, they still confront aspects of complexity that make solving for a desired end state impossible. Though researchers might cycle through different levels of abstraction or different attempts to take initial conditions into account, they still come up against the challenge of turning descriptive or predictive models into actionable information. The problems of designing and studying online learning environments exemplify these challenges. In this article, we describe how design-based research (DBR) methods are uniquely positioned to address the complex challenge of balancing educational and psychological research goals with design efforts in order to produce actionable information about online learning environments.

Challenges of online learning design and research

Initial enthusiasm about online learning among educators stemmed from the freedoms it presents: freedom to avoid typical place and time constraints, to make learning more interactive, to design micro-affordances of learner engagement, to broaden access to the best teaching, and to use technology to record behaviors at a fine-grained level impractical in offline learning. This enthusiasm, however, was met with several challenges inherent in real-world learning environments. Take the case of the massive shift from physical classrooms to online spaces during the COVID-19 pandemic, when a plethora of challenges became evident: from low student engagement, to inequitable access to devices, to rote transposition of instructionist pedagogies to the online environment (Greenhow et al., 2022/this issue). Online learning in the pandemic changed almost every characteristic of school as people knew it, and challenged how educators, students, and families experienced fairly established ideas such as attention, involvement, and the social connections schools typically promote.

The pandemic also highlighted the protean arrangements of online learning. What online learning means in practice may vary greatly from context to context, and even from classroom to classroom (Greenhow et al., 2022/this issue; Heinrich et al., 2019; Lee & Hannafin, 2016). Its forms range from individual computer-assisted instruction (Fletcher-Flinn & Gravatt, 1995), to asynchronous, interactive learning settings, to synchronous interactions (Hiltz & Goldman, 2004), to teleconferencing-based models (Finkelstein, 2006), to endemic, informal interactions on social media that support learning in communities of interest (Greenhow, Sonnevend, & Agur, 2016), to communities of inquiry (Shea et al., 2022/this issue), to computer-supported collaborative learning in which interactions may be a mix of synchronous and asynchronous, but often heavily mediated (Cress et al., 2021). All of these models are subject to rapid technological change and, more importantly, to rapid changes in the practices of using technology. Take, for example, pre- and post-COVID-19 educational uses of Zoom: the platform itself evolved rapidly during the pandemic, with new features such as self-selecting breakout rooms and live closed-captioning, while familiarity with and conventions for the platform simultaneously progressed, with new etiquette arising around everything from video muting to turn-taking norms.

Additionally, any configuration of online learning may be blended with face-to-face interactions, making the study of such hybrid learning environments even more challenging. Hybrid (or “blended”) learning models are increasingly being recommended and adopted by policymakers, from K-12 schools to higher education institutions (Greenhow et al., 2022/this issue; Poirier et al., 2019; Tate & Warschauer, 2022/this issue). But how exactly one should approach such heterogeneous, ever-changing configurations to draw well-grounded conclusions remains an open question. For example, how are online and in-person components of instruction organized in relation to each other? Do instructors “flip” the classroom (e.g., requiring learners to engage with materials available online prior to a lesson), thus altering the “schedule, style, and difficulty level students are accustomed to” (Poirier et al., 2019, p. 4)? The vast differences both in the contexts of application and in the potential design features of the online learning environment as implemented make it difficult to know how to generalize empirical findings and/or recontextualize them to new circumstances. These diverse arrangements reveal some of the Herculean challenges of producing rigorous empirical knowledge about online learning environments.

Conducting a multitude of studies about the psychology of online learning in Zoom in 2019 could have advanced, as Cameron (2006) suggests, understanding of “mediating/psychological variables which link particular situations with specific outcomes,” the building of “explanatory models of complex human problems,” or the practice of “using evidence-based strategies for change” (p. 289). Even if such studies had helped arrive at both explanations and evidence suggesting particular strategies for change, researchers and practitioners would still have been ill-prepared for online learning in the pandemic because, as Simon (1969) pointed out, they would be trying to make empirical claims about “systems that, given different circumstances, might be quite other than they are” (p. x). Would models have taken into account the challenges of children at home with distracted parents working online alongside them, or of learners and educators coping with trauma? What about microcultures emerging within particular online classrooms, highly influenced by how a teacher might adapt to a rapidly changing pandemic situation? Would the research community have been able to grasp how interest-driven groups connect outside of the formal borders of instruction to further learn and specialize? Any research effort would have needed to encompass not only the intentional components of a designed environment but also exogenous components, such as the trauma produced by the pandemic, and inherited, endogenous, and rapidly changing elements, such as Zoom’s interface changes or emerging practices (Tabak, 2004).

The complexities of online learning reveal the pitfalls of a lack of mutuality between research and design. On the one hand, online learning is a complex system in the mathematical sense: identifying variables and initial conditions in sufficient detail to enable robust, comprehensive predictions is impossible. On the other hand, the research challenge of disentangling enduring psychological phenomena from how they manifest within rapidly changing instructional technologies makes it hard to know whether the results of a study should be treated as a finding about the unchanging nature of human learning or as an evaluation of an intervention at a moment in time that will never be duplicated.

Furthermore, considering how technology evolves, research often focuses either on rapid changes in software itself or on technology-mediated culture. But a third aspect is at least as important and even more ephemeral than the broader cultural or technological zeitgeist: how best to deploy the technology of the day. For example, consider the pandemic-era innovation of icebreaker games like scavenger hunts for online learners within their respective homes: such an activity can have profound effects on whether the class feels like it exists only on a screen or feels embedded in real life, and might also cause some students stress as aspects of their home life are exposed. Prior research is not equipped to predict prospectively whether such an innovation will work or how to tweak it until it does. Sandoval (2004) saw this as the problem of how enacted designs “embody” conjectures about either interventions or learning. Design is how those highly situated systems are studied, and this directly challenges the goal of developing generalizable knowledge (Kali & Hoadley, 2020). As Cuban (2003) noted, when people take essentialist stances toward technology, they tend to overlook the systems in which the technology is embedded and the other ways in which learning environments are constructed. Neither the technology nor people’s behavior with it is fixed, and this challenges any program of finding a simple answer to the question “What works?”

Three paradigms that link science and action

“The real essence of the problem is found in an organic connection between the two extreme terms—between the theorist and the practical worker—through the medium of the linking science. The decisive matter is the extent to which the ideas of the theorist actually project themselves, through the kind offices of the middleman, into the consciousness of the practitioner.” (Dewey, 1890/2003, p. 136)

When researchers attempt to link an empirical scientific stance to prescription, they run across three important conceptual paradigms. First, and most obvious, is the engineering or applied science stance. Consider bridge construction, for example. Scientific knowledge describes constraints on what types of structures will stand. Engineering principles can be used to analyze any proposed structure to predict whether it will meet its specifications or to solve specific problems such as coming up with a structure for a particular site or location. Should there be aspects of the plan that require new knowledge, applied science stands ready to fill in the gap (e.g., how deep is the bedrock in a particular location?). However, what about larger questions, like deciding whether a bridge is needed in the first place, or how much money should be spent to engineer a safer bridge? Similar dilemmas would apply to our online education scenario: for instance, how would one build an alternative to Zoom that provides a greater sense of social connection?

A second paradigm prioritizes the question of human values, which Aristotle termed phronesis (Flyvbjerg, 2001). No amount of engineering can tell us what outcomes to seek when trying to take science from description to prescription. Attempts to "scientize" human values are linked to technocratic approaches, and they famously fall flat. Problems that inherently engage human political complexities can be thought of as "wicked" (Rittel & Webber, 1973): even when goals are well-specified, scientifically derived solutions may flop because goals are filtered through human subjectivity. Flyvbjerg (2001) described this issue as a crisis in social science: as scientists, social scientists confuse their epistemologies when they treat values as akin to natural phenomena that can be determined with experimental, positivistic scientific methods. Instead, human experiences and values can be explored through the methods of the humanities and other interpretivist or relativistic ways of building knowledge. Consensus building (for instance, on whether or not to develop and deploy new affordances of an online learning platform) is neither an engineering problem nor a scientific one, even if it is something scientists and engineers must concern themselves with. Subtly, if researchers pretend that their research is value-neutral, they end up obscuring values that get deeply embedded in the research itself (Stevenson, 1989; Vakil et al., 2016). Accordingly, the question of whether virtual or hybrid classes in the pandemic should focus on traditional learning objectives or balance them with socio-emotional well-being (Archambault et al., 2022/this issue) is a choice among values that engineering research cannot answer, but that could be explored using humanistic, political, or other phronesis-centric stances.

A third paradigm is the paradigm of design

Simon (1987/1995) made a distinction between two cognitive modes: problem solving, in which a person uses a repertoire of known methods to reach a solution, and design, in which problem finding and definition are as important as solutions, outcomes may not be predictable, and a person may need to go beyond their existing repertoire of methods. Design often serves to negotiate between the paradigms of goals and values on the one hand, and engineering and science on the other. In the case of our bridge, design responds not only to the engineering constraints of making the bridge stand, but also to human concerns such as how to ensure the bridge serves a wide audience, or how to account for environmental impact. Design can produce novel ways of aligning values and engineered decisions. In the case of Zoom-based classes, design responds not only to the constraints of developing materials and activities to be delivered, but also to the more value-centric concerns of deciding when and how to set aside those objectives in favor of overriding values such as empathy in the face of trauma, or redefining assessment goals in the face of pandemic-related changes to schooling. In short, design moves beyond problem solving to include elements of problem finding, creativity, and invention (Ko, 2020; Norman, 2013). In the next section, we delve into how design might produce knowledge that is generalizable in very different ways than scientific, positivistic knowledge.

Knowledge creation in design

Good design is empirical, but is it research? Argyris and Schön (1991) characterized design as a profession: designers must filter both the scientific knowledge of the field and intimate knowledge of the particular situation through professional judgment to make decisions suited to the case at hand, just as a lawyer does in a court case or a doctor does with a patient. Designers typically gather information to support their design processes, from needs analysis to examining relevant design examples to better understanding the context of design (Bednar & Welch, 2009; Raven & Flanders, 1996). Next, the designer must realize and iterate on their designs. This process entails gathering data about the properties of proposed designs and anticipating their impacts within a system. This is what Schön (1992) called see-move-see: if someone considers rearranging furniture in their home, they can partially envision the outcome of a particular rearrangement, but often gain additional insight by trying an arrangement out. Sometimes design involves more complex evaluation, based either on "hard" criteria, like evaluating whether a particular new feature in online classes would be supported by learners’ devices, or on "soft" criteria, like getting public feedback on the esthetics of a new visual interface. Taken together, these forms of empirical work are often called design research (Laurel, 2003). Professionals like instructional designers or teachers must align known patterns and psychological findings with the particulars of the context and individual learners.

Although the term design research typically refers to the gathering of information that feeds into a creative process of design, design itself creates knowledge. Christopher Alexander et al. (1977), in their groundbreaking work on design patterns, showed how the knowledge of skilled architects could be viewed as a cumulative set of patterns, a "pattern language" that could be used to express different designs. Each pattern represented a known partial solution to a category of problems; patterns were documented by describing the essential characteristics of a design solution, the circumstances under which it might be necessary, and links to other patterns with which it was frequently composed. Considering the Argyris and Schön (1991) framing of using judgment to apply general professional knowledge to particular situations, it is evident that creating new patterns can be part of knowledge creation in design. Returning to our bridges, we could see a contribution to knowledge if a designer combines known patterns to solve a particular problem, like the suspension solution to the narrow opening of San Francisco Bay. In this case, the contribution to the field’s knowledge is the particular reference point: the Golden Gate Bridge. By contrast, as an example of creating patterns, the Bonn-Nord bridge introduced a way of arranging cables that allowed bridges to look unusually light and sweeping, a novel pattern that was later used over and over by other designers. The pattern, rather than the instance, was the primary contribution in this case.

The type of knowledge creation that connects the general to the particular, and then creates new particulars that suggest the general, is often considered "unscientific," not because it is not rooted in systematic, empirical discovery processes, but because it claims less generalizability than a law of nature that applies universally. One can envision similar knowledge creation in education, where a lesson design becomes the basis for a pattern that can then be disseminated or improved upon. For example, WebQuests, an early technology-based approach that integrated web inquiry activities into teaching and learning (Dodge, 1995), began with examples that turned into widely replicated and studied patterns for online learning (Abbit & Ophus, 2008).

Within education, the research and design landscapes encounter the three paradigms discussed previously: applied science, value-setting, and design. How do the paradigms interact, and in what ways can knowledge be generated across all three? An initial goal might be to have robust, replicable science with clear conditions of applicability, whose principles could be applied to arbitrary value-derived goals through a predictable, optimizable form of engineering design. Occasionally, in education, this arrangement is possible, and both educational psychology and instructional design default to it (for an example, see Cook et al., 2021). But with most learning through and with technology, science is less replicable and applicable (Dede, 2005; Simon, 1969). In addition, design is not predictive but iterative; it requires human judgment in context, and resists optimization because it is both particularized and ever-changing in response to shifts over time (Argyris & Schön, 1991).

Design knowledge generalizes in very different ways than scientific knowledge. Even if researchers abandon scientific modeling and prediction in favor of thumbs-up or thumbs-down evaluation, they probably will not know when two interventions are equivalent in all the ways that matter, and thus whether evaluation results can be generalized to new settings. In contrast, design knowledge explicitly arises from the conjunction of the general and the particular (Kali & Hoadley, 2020; Nelson & Stolterman, 2012), and can help fill the gap left by methods that depend on universality and complete generalizability (Maxwell, 2004). In the next section, we examine various ways in which design and research activities can be arranged methodologically, and how these arrangements aid the study and creation of online learning environments.

Linking design and research in online learning

Before exploring the variety of methods that allow researchers to build the kinds of knowledge they need, we first present the challenges facing a generalized, positivist approach to educational research. For example, in the late 20th century, when studies proliferated to examine whether computer-based multimedia improved learning, results were highly inconsistent (Fletcher-Flinn & Gravatt, 1995). Why? First, some learning environments, and some learners, may have benefited from multimedia while others may not have. Second, not all multimedia is equivalent; good studies on bad media designs muddied the waters. Third, conditions changed: what would an appropriate control condition be, especially as “business as usual” and multimedia use converged? Fourth, learners’ tools, conventions, and expectations around multimedia were a moving target (e.g., the technical and cultural changes in video literacies after the introduction of YouTube). When the field finally did make progress in this area, it was on the ways in which people process multimedia cognitively, which rendered further questions about “multimedia” per se nonsensical and shifted the research focus to the enduring cognitive processes underlying multimedia learning rather than the media formats themselves (Mayer, 1997). This shift led to research findings that were more robust and generalizable (for example, Moreno & Mayer, 1999; Sweller, 2011), but simultaneously harder to apply in design work, because applying them requires engaging the question of what cognition a given multimedia artifact might engender for a specific audience and context (for example, Aronson et al., 2013). Two key challenges are evident in this example: appropriate generalization and particularization.
First, to make valid generalizations, education researchers need a good sense of what is important about the intervention and its context in order to make any comparison, whether by creating an appropriate control condition or by describing what the intervention represents in terms of theory. In online learning, one may find it difficult to determine what the “treatment” is, or which aspects of an intervention are being changed or held constant in different cases. Interpreting results from any experiment requires opening the black box of the intervention and understanding which elements of the outcomes are attributable to hypotheses or theories, and which are attributable to the particular design that was created from those theories. Second, getting the human psychology right but testing it with the wrong design (e.g., testing the learning impact of collaborative online gaming with shoddy games that are not fun for participants) may create spurious results. In a designed environment, one needs the right particulars to obtain results that can be interpreted meaningfully.

In the learning sciences, various approaches have been developed to address these challenges, usually crossing disciplinary traditions (Hoadley, 2018) and often triangulating via a range of methods (Tobin & Ritchie, 2012), from cognitive microgenetic methods (Schoenfeld et al., 1993; Siegler & Crowley, 1991), to thick cultural descriptions (Goldman et al., 2014), to tools such as multimodal learning analytics (Blikstein, 2013). More importantly, the learning sciences have adopted a variety of methods of research on and through design, linking methods of the past with new ways of building trustworthy and applicable theories (Hoadley, 2018; Hung et al., 2005). Perhaps earliest among these were “design experiments” (Brown, 1992; Cobb et al., 2003; Collins, 1992), now more commonly termed “design-based research methods” (Design-based Research Collective, 2003; Hoadley, 2002; Sandoval & Bell, 2004). In the sections that follow, we describe design-based research (henceforth, DBR) methods as a way to address some of the challenges of knowledge production in the context of online learning, and provide a process model to help illustrate ways DBR can produce the types of knowledge needed to study online learning.

Design-based research methods

DBR attempts to understand the world by trying to change it, making it an interventionist research method. However, DBR problematizes the designed nature of interventions, recognizing that the intended design is different from what may be enacted in a complex social context, one in which both participants and designer-researchers have agency (Tabak, 2004). In DBR, hypotheses take a dualistic form as design conjectures, in which there are explicitly (at least) two degrees of freedom between inputs (context, initial conditions, participants and their histories, etc.) and final outcomes (Sandoval, 2004). Generally, this dual linkage comes from joining a design proposition (i.e., this design should achieve specified goals or mediating processes) to a proposition about people (i.e., a testable prediction that the achievement of a set of goals should engage psychological processes in a way that supports or contradicts the theory being tested). Often, instead of comparing a treatment and a control group, DBR will compare treatments as iterations on a particular design. Thus, a DBR study on whether, say, an online community of inquiry has certain impacts on learning (Shea et al., 2022/this issue) would explicitly construct two arguments linking treatments to hypothesized outcomes: what elements would foster a community of inquiry, and how such a community leads to particular learning outcomes. Such a study would proceed in a way that looks more like a design process than a simple laboratory experiment. Researchers would document the baseline, then collect data on multiple iterations as refined versions of the design are implemented in context. Throughout, the researcher would maintain a dual identity as a designer, favoring participant observation over total detachment, including tweaking the intervention mid-implementation to better match the design intent.
This identity does not prevent the researcher from drawing conclusions about the research questions, but it necessitates both a tentative stance toward generalizing any findings beyond the context of exploration and humility toward making causal claims, because outcomes could be attributed to either of the elements of the design conjectures. Iteration allows systematic exploration of interpretations through revised designs, embedded comparisons (e.g., A/B testing), reanalysis of earlier data, or shifts in the context of the research. Causal claims must be made tentatively and can only be made more strongly as further iterations and additional evidence rule out alternative interpretations.

As we work toward defining the DBR process and identifying its key characteristics, we should be clear about how DBR contrasts with dominant quantitative and qualitative traditions (see Table 1). In positivistic experimentation, there is a presumption that the researcher is flawless at specifying interventions that are implied by theory and hypotheses and at accurately reporting experimental designs and data collected, but also that the researcher may be deeply biased in collecting data, analyzing data, or in some cases in implementing interventions or control groups. To manage these biases, techniques such as double-blind experiments and sophisticated statistical analysis are used to make science as objective as possible. In DBR, the agenda of the designers is seen as a positive force rather than a threat to validity; their intimate knowledge of the design rationale positions them to ensure implementation fidelity and methodological alignment (Hoadley, 2004) between “what we wanted to test,” “what we think we actually tested,” and “what we now think we should have been testing.” Because of this intimacy, the researcher (1) reports not only the data collected but also design moves, rationale, and other aspects of the design narrative (Hoadley, 2002; Shavelson, 2003); (2) collects data broadly, both to continuously check assumptions and to support future retrospective analysis; and (3) keeps not only the implementation but also inferences or generalizations contextualized and localized. In some ways, this stance starts to resemble that of the qualitative researcher in an interpretivist tradition, in that the central role of the researcher-as-interpreter is acknowledged. Yet, in contrast to many qualitative methods, DBR researchers are interventionist and do not avoid positivistic generalizations or causal inference as end goals.
Interventions may be used to achieve desired design outcomes, but also to probe human psychology, with a goal of building theory rather than only building designed interventions. From a philosophical perspective, this aligns well with ideas of pragmatism (Legg & Hookway, 2020), which might be summarized as “knowledge is as knowledge does,” in which all truth is subordinate to the action it can motivate. In educational psychology, this might reasonably be called consequential validity (Messick, 1998). Of note, the pragmatists were divided on whether human knowing was based on a singular reality (i.e., traditional positivism) or on pluralist perspectives (i.e., more like ethnomethodology or other interpretivist traditions; see Table 1). Thus, DBR can be done in search of a singular truth, or can encompass more perspectivity (Goldman, 2004) by following a Deweyan idea of pragmatism.

Table 1. Comparing DBR with qualitative and experimental traditions.

Modeling the DBR process

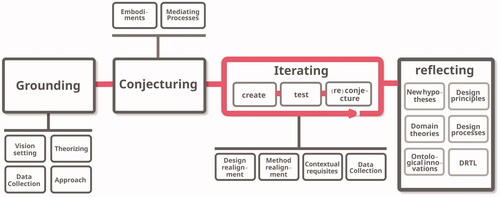

As a young research approach, DBR is still under construction. Several scholars have attempted to pin down its defining characteristics; all agree that it involves studying flexible iterations of designed interventions in a naturalistic context (Anderson & Shattuck, 2012; DBRC, 2003; Wang & Hannafin, 2005). In Figure 1, we summarize and reorganize different descriptions of the overall DBR approach in one process model. (See Appendix for a discussion of how DBR relates to other similar methodologies.) This reorganization into a single process model supports our understanding of DBR and how to use it for studying real-world learning environments. By emphasizing the ways DBR produces knowledge, this model helps illustrate how DBR addresses the problems of generalization and particularization in online learning research identified above. In particular, the process model embraces four activities that help DBR researchers produce knowledge about online learning even in the face of its dependence on interactions between context and design enactments.

Grounding

Design-based scholars of different traditions typically depict a research endeavor as starting in what we term a grounding phase.¹ In this initial stage, researchers identify a theoretical gap or problem of practice that they aim to investigate by creating and enacting a real-world intervention (Edelson, 2002; Kali & Hoadley, 2020), which includes developing a sense of the contexts in which that intervention might be tested. Grounding a project involves collecting data about current situations or existing implementations of a given intervention and analyzing those data in light of contextual factors and theory, ultimately articulating the tension between what is and what might be (Bednar & Welch, 2009; Tatar, 2007). This initial data collection may also include information about learners, specific domains, and historical data about previous experiences with existing solutions (Easterday et al., 2018).

Grounding a DBR project also means making initial higher-level choices that will bound the scope and methods of a project. For example, it means specifying stakeholders and their roles (e.g., consultant, co-designer), which in turn helps bound the perspectives and lenses the project will employ. This phase is crucial to setting the direction of a DBR project and to ensuring that “there is something worth designing … and that the team has the resources to potentially succeed” (Easterday et al., 2018, p. 138).

Conjecturing

The next phase involves laying out an initial set of high-level conjectures that will guide the development and testing of a design. Because DBR involves the generation of “knowledge about which actions under what circumstances will lead to which kind of intended consequences” (Bakker, 2018, p. 47), conjecturing gives concrete form to the problems identified in the grounding phase by tracing out a theory of action. It is therefore important, as proposed by Sandoval (2014), to map the high-level theory of action involved in a DBR project. This mapping captures the research team’s ideas about how particular embodiments might generate mediating processes that will ultimately lead learners to the hypothesized outcomes. Embodiments are the various features of a designed learning environment or intervention, such as functionalities, materials, associated tasks and structures, or imposed practices. But how might particular aspects or features of a design lead to the hypothesized outcomes? In Sandoval’s (2014) model, designs do not lead directly to outcomes; they first produce key mediating processes, which in turn produce the outcomes.

Iterating

Iterations are recurrent building-testing-reconjecturing cycles used to gain theoretical and practical insights into unclear or ill-structured aspects of a research project (Lewis et al., 2020). Iterating means taking initial abstractions, such as psychological theories, hypothesized learning trajectories, or broad design principles, and embodying them in concrete designs intended to produce the anticipated mediating processes. In each cycle of iteration, researchers collect data about how participants use, talk about, build social structures around, or even re-signify designs in their actual contexts of use. These data are then used to refine the initial conjectures about learning and to produce new designs that will ultimately test those hypotheses.

Successive iterations are the key methodological feature of a DBR project that allows researchers to make empirical claims about designed learning tools, artifacts, or interventions while they are being created and brought into play (Kali & Hoadley, 2020). In each cycle, researchers collect new data, take into account unanticipated contextual requirements, adjust their designs (deciding which aspects of the embodiment need redesigning in the next cycle, and why), and review their methods of implementation (e.g., participants’ roles, tasks, stimuli). DBR teams then record these realignments in new conjecture maps (Sandoval, 2004, 2014), hypothesized learning trajectories (Bakker, 2018), and/or thick design narratives (Hoadley, 2002). It is precisely this intense, reflective work during each iteration that permits DBR researchers to produce new knowledge by adjusting the “fit between the theory, the design, and the enactment or implementation so as to best test the theoretical conjectures” (Kali & Hoadley, 2020, p. 1).

Reflecting

The act of building and testing something in a real-world context is an intense practice of reflection through design (Schön, 1992). Reflecting involves analyzing all data collected throughout the process, including formative evaluations and successive conjecture maps, among many other sources, in light of the initial hypotheses and learning theories. The ultimate goal of this phase is to allow the research team to explore which actions or circumstances might or might not have led to changes in the studied environment (Bakker, 2018).

In pursuing this question, DBR might lead to a variety of end points that go beyond the artifact or intervention per se (Edelson, 2002). Although some scholars question which types of outcomes may properly be claimed on the basis of DBR (Dede, 2004; Kelly, 2004; Shavelson et al., 2003), we list the following:

Domain Theories: Domain theories generalize a particular piece of a high-level, hypothesized learning theory (e.g., how online environments influence learning). DBR elicits domain theories about the context of the intervention or the outcomes permitted by the design (Edelson, 2002).

Design Principles or Patterns: Design principles are generalized heuristics, developed through successive iteration and reconjecturing, that respond to a particular learning problem (Edelson, 2002). Design patterns are generalized partial solutions for particular learning problems (Goodyear, 2005; Pea & Lindgren, 2008; Rohse & Anderson, 2006). Whereas domain theories describe a phenomenon, design principles and patterns carry a prescriptive message about how and when learning environments need to be designed in certain ways to achieve the desired outcome in a particular context (Edelson, 2002; Van den Akker, 1999), with the caveat that “no two situations will be identical and that adaptation to local circumstances is always necessary” (Bakker, 2018, p. 52).

Design Processes: A design process is prescriptive procedural knowledge that describes the particular methodology for achieving a type of design, the expertise needed, and the roles that individuals involved in the process need to play (Edelson, 2002).

Ontological Innovations: Throughout successive cycles of building and testing, design researchers might encounter situations that challenge current frameworks or assumptions about a domain or design. Ontological innovations are novel explanatory constructs, categories, or taxonomies that emerge from such situations and help explain how something works (diSessa & Cobb, 2004).

New Hypotheses: Sometimes design yields questions rather than answers (Brown, 1992). Through iterative cycles, DBR researchers develop new hypotheses that may be the subject of future research endeavors (Shavelson et al., 2003).

Design Researcher Transformative Learning (DRTL): The immersive nature of DBR may also shape how researchers experience the notion of design knowledge (Kali, 2016). DRTL describes a series of “aha moments” that enable design researchers to develop new conceptualizations of “how they position themselves as actors within the situation they are exploring” (Kali & Hoadley, 2020, p. 10).

These six types of “findings” differ from those produced by either positivist research or interpretivist qualitative research (Ryu, 2020; Tabak, 2004). Even domain theories, which may look similar to those produced by experimental psychology, are epistemologically different: they represent tentative understandings that explicitly acknowledge that their generalizability to other contexts or design variations is limited, to an unknown degree. They do, however, align squarely with Deweyan ideas about the aims of inquiry:

“All the pragmatists, but most of all Dewey, challenge the sharp dichotomy that other philosophers draw between theoretical beliefs and practical deliberations. In some sense, all inquiry is practical, concerned with transforming and evaluating the features of situations in which we find ourselves.” (Legg & Hookway, 2020)

Applying the DBR process to studying and designing online learning

The reorganized DBR process model is especially useful for creating new knowledge about online learning. First, through grounding, researchers document the context of learning and establish a baseline of theories and assumptions. For example, if DBR researchers were to study how to facilitate science learning in the Zoom-based schooling of the COVID-19 pandemic, they would first need to ground their understanding of this particular problem of practice. What key components of science learning went missing when schools moved to digital environments? How did students experience the sudden loss of fundamental socio-emotional supports for learning? How might one emulate the social interactions and collective inquiry of a physical lab on a purely virtual platform? Second, through conjecturing, researchers would coordinate theories of learning and instruction with the particular context and devise a map of conjectures. In our example, researchers could hypothesize that virtual labs permitting synchronous, collective manipulation of real, live single-cell organisms (as in Hossain et al., 2018), used in conjunction with Zoom, might serve as embodiments, the tangible part of an intervention. A map of conjectures would then link such labs to key mediating processes and, through them, to outcomes: by solving problems cooperatively and manipulating a living organism in real time, students would develop a solid conceptualization of, and interest in, the subject matter in question. In this way, DBR does not assume that a designed online environment is conducive to learning by itself. Instead, it theorizes a chain linking embodiments and mediating processes that might lead to the desired outcome.

Third, through iterating, these theoretically driven conjectures would be aligned with the unique practices of the particular online learning context and audience. Through successive iterations, researchers could introduce new elements that challenge, confirm, or revise initial conjectures and assumptions. In our online science lab example, how would students engage with computerized models of biological specimen behavior within the lab? Would such models be more conducive to learning, and under what contextual conditions or corequisite supports? Finally, through reflecting, researchers would make claims about how general psychological learning processes and specific enacted designs-in-practice create particular outcomes, with an eye toward appropriate generalization. For instance, could the individual experience with online science labs accentuate the gap in socio-emotional learning left when schools moved to remote instruction? What general principles, design patterns, or new hypotheses related to how students learn with online science labs could be devised? To illustrate, Hossain et al. (2018) used DBR to test hypotheses about how students learn in a cloud-based biology lab that allows interactions with living single-cell organisms, as well as whether the effort of building such an environment was justified compared with computer simulations. Successive iterations of the online biology course led to new hypotheses (e.g., would group activities in online learning environments further facilitate learning, and how?) and a key reflection: students advance their comprehension of biological phenomena when they are able to predict and compare computational modeling results with the behavior of real living specimens.
In this case, DBR allowed researchers and practitioners alike to move beyond simply asking “what works best” or making rote comparisons between design features, and instead to engage in a successive process of reconjecturing about which embodiments and mediating processes would lead to learning in an online science lab.

Thus, this process model shows how DBR engages with and produces knowledge through four main categories of activity: grounding, conjecturing, iterating, and reflecting. Each produces knowledge that helps link across the paradigms of engineering, phronesis, and design. Within the context of online learning, framing research through design-based methods helps address some of the challenges associated with more traditional applied science paradigms, including methodological alignment, sensitivity to dynamic contexts, tentative generalization, and turning insights into designed interventions. Each of the four categories of activity produces types of knowledge that sit between the particular and the general in ways that support further exploration in both the research and the design of online learning.

Conclusion

Design-based research methods are a thirty-year-old tradition from the learning sciences that has been taken up in many domains as a way to study designed interventions that challenge the traditional relationship between research and design, as is the case with online learning. Key to the contribution and coherence of this method are different forms of knowledge, which lie less in the domain of positivistic truth statements and more in the domain of patterns, heuristics, and tentative generalizations. This aligns well with the philosophy of pragmatism as expressed by Dewey and others, in which the knowledge sought is actionable. Earlier versions of DBR emphasized the interrelationship between design, context, and inference, with strong attention to the challenges of linking different parts of design conjectures in the interpretation of data. In the last fifteen years, increasing sophistication regarding the sociocultural and sociopolitical systems that mediate outcomes has given rise to related approaches that focus more on social change, agency, equity, and practices than on technologies, as well as to efforts to reconcile DBR with mixed-methods and action-research traditions (e.g., Gutiérrez & Jurow, 2016; Ryu, 2020).

More importantly, we see that beyond designs and findings as outcomes, there is the possibility of transforming systems, organizations, and, notably, researchers and participants. Academic research in the social sciences has predominantly treated knowledge as fungible: that is, discoveries are usable by all if published well. Through a framework of design researchers’ transformative learning (Kali, 2016), we see that research can serve to transform researchers and participants themselves, creating ripple effects of design capacity more like the professional judgment of Argyris and Schön (1991) or the usable knowledge described by Lindblom and Cohen (1979). Researchers have not often thought about their own learning or knowledge management as key goals of research, but current uses of design-oriented methods like DBR may offer an opportunity to do so.

These properties of DBR make it particularly well suited for developing and studying online learning. Researchers in online learning often struggle to determine which aspects of their findings are generalizable and which are bound to a particular time, place, or community (Greenhow et al., 2022/this issue). Online learning technologies are often a moving target and, even without rapid evolution, are not monolithic. Because DBR can support contextualized and interventionist grounding, conjecturing, iterating, and reflecting, it is well suited to the ways scholars need to coordinate design-centric knowledge with psychological theories and claims. It is incumbent on those using these novel methods to help shape expectations about findings so that they are not taken as dogma or as purely positivistic truth claims. Nonetheless, by holding their knowledge claims, and their own transformation as knowers, up to scrutiny, online learning researchers have a tremendous opportunity to make research more consequential and to help move from current situations toward better futures.

Notes

1 We term this phase grounding both to evoke the inductive stance of grounded theory (Strauss & Corbin, 1994) and the linguistic idea of common ground (Clark, 2020).

References

- Abbit, J., & Ophus, J. (2008). What we know about the impacts of WebQuests: A review of research. AACE Journal, 16(4), 441–456.

- Alexander, C., Ishikawa, S., Silverstein, M., Jacobson, M., Fiksdahl-King, I., & Angel, S. (1977). A pattern language: Towns, buildings, construction. Oxford University Press.

- Anderson, G. (2017). Participatory action research (PAR) as democratic disruption: New public management and educational research in schools and universities. International Journal of Qualitative Studies in Education, 30(5), 432–449. https://doi.org/10.1080/09518398.2017.1303211

- Anderson, T., & Shattuck, J. (2012). Design-based research: A decade of progress in education research? Educational Researcher, 41(1), 16–25. https://doi.org/10.3102/0013189X11428813

- Archambault, L., Leary, H., & Rice, K. (2022/this issue). Pillars of online pedagogy: A framework for teaching in online learning environments. Educational Psychologist, 57(3), 178–191. https://doi.org/10.1080/00461520.2022.2051513

- Argyris, C., & Schön, D. A. (1991). Theory in practice: Increasing professional effectiveness (1st Classic Paperback ed.). Jossey-Bass Publishers.

- Aronson, I. D., Marsch, L. A., & Acosta, M. C. (2013). Using findings in multimedia learning to inform technology-based behavioral health interventions. Translational Behavioral Medicine, 3(3), 234–243. https://doi.org/10.1007/s13142-012-0137-4

- Bakker, A. (2018). Design research in education: A practical guide for early career researchers. Taylor & Francis Group.

- Bednar, P. M., & Welch, C. (2009). Contextual inquiry and requirements shaping. In Information systems development (pp. 225–236). Springer-Verlag. https://doi.org/10.1007/978-0-387-68772-8_18

- Bell, P. L. (2004). On the theoretical breadth of design-based research in education. Educational Psychologist, 39(4), 243–253. https://doi.org/10.1207/s15326985ep3904_6

- Blikstein, P. (2013, April). Multimodal learning analytics. In Proceedings of the Third International Conference on Learning Analytics and Knowledge (pp. 102–106). https://doi.org/10.1145/2460296.2460316

- Brown, A. L. (1992). Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. Journal of the Learning Sciences, 2(2), 141–178. https://doi.org/10.1207/s15327809jls0202_2

- Bryk, A. S., Gomez, L. M., Grunow, A., & LeMahieu, P. G. (2015). Learning to improve: How America’s schools can get better at getting better. Harvard Education Press.

- Cameron, R. J. (2006). Educational psychology: The distinctive contribution. Educational Psychology in Practice, 22(4), 289–304. https://doi.org/10.1080/02667360600999393

- Clark, H. H. (2020). Common ground. In J. Stanlaw (Ed.), The international encyclopedia of linguistic anthropology (pp. 1–5). Wiley. https://doi.org/10.1002/9781118786093.iela0064

- Cobb, P., Confrey, J., diSessa, A., Lehrer, R., & Schauble, L. (2003). Design experiments in educational research. Educational Researcher, 32(1), 9–13. https://doi.org/10.3102/0013189X032001009

- Collins, A. (1992). Toward a design science of education. In New directions in educational technology (pp. 15–22). Springer.

- Collins, A., Bielaczyc, K., & Joseph, D. (2004). Design experiments: Theoretical and methodological issues. Journal of the Learning Sciences, 13(1), 15–42. https://doi.org/10.1207/s15327809jls1301_2

- Cook, B. G., Fleming, J. I., Hart, S. A., Lane, K. L., Therrien, W. J., van Dijk, W., & Wilson, S. E. (2021). A how-to guide for open-science practices in special education research. Remedial and Special Education. Advance online publication. https://doi.org/10.1177/07419325211019100

- Cress, U., Rosé, C., Wise, A., & Oshima, J. (Eds.). (2021). International handbook of computer-supported collaborative learning. Springer.

- Cuban, L. (2003). Oversold and underused: Computers in the classroom. Harvard University Press.

- Dede, C. (2004). If design-based research is the answer, what is the question? A commentary on Collins, Joseph, and Bielaczyc; diSessa and Cobb; and Fishman, Marx, Blumenthal, Krajcik, and Soloway in the JLS special issue on design-based research. Journal of the Learning Sciences, 13(1), 105–114. https://doi.org/10.1207/s15327809jls1301_5

- Dede, C. (2005). Why design-based research is both important and difficult. Educational Technology, 45(1), 5–8.

- Design-Based Research Collective (DBRC). (2003). Design-based research: An emerging paradigm for educational inquiry. Educational Researcher, 32(1), 5–8. https://doi.org/10.3102/0013189X032001005

- Dewey, J. (2003). Psychology and social practice. In J. A. Boydston (Ed.), The Middle Works of John Dewey 1899–1924. Volume 1: 1899–1901, Essays, The School and Society, The Educational Situation (Vol. 6, pp. 132–146). InteLex. (Original work published 1900)

- diSessa, A. A., & Cobb, P. (2004). Ontological innovation and the role of theory in design experiments. Journal of the Learning Sciences, 13(1), 77–103. https://doi.org/10.1207/s15327809jls1301_4

- Dodge, B. (1995). WebQuests: A technique for internet-based learning. Distance Educator, 1(2), 10–13.

- Easterday, M. W., Lewis, D. R., & Gerber, E. M. (2014). Design-based research process: Problems, phases, and applications. In J. L. Polman, E. A. Kyza, D. K. O’Neill, I. Tabak, W. R. Penuel, A. S. Jurow, K. O’Connor, T. Lee, & L. D’Amico (Eds.), Learning and becoming in practice: The International Conference of the Learning Sciences 2014 (Vol. 1, pp. 317–324). International Society of the Learning Sciences.

- Easterday, M. W., Rees Lewis, D. G., & Gerber, E. M. (2018). The logic of design research. Learning: Research and Practice, 4(2), 131–160. https://doi.org/10.1080/23735082.2017.128636

- Edelson, D. C. (2002). Design research: What we learn when we engage in design. Journal of the Learning Sciences, 11(1), 105–121. https://doi.org/10.1207/S15327809JLS1101_4

- Egan, D. E., Remde, J. R., Gomez, L. M., Landauer, T. K., Eberhardt, J., & Lochbaum, C. C. (1989). Formative design evaluation of SuperBook. ACM Transactions on Information Systems, 7(1), 30–57. https://doi.org/10.1145/64789.64790

- Engeström, Y. (2011). From design experiments to formative interventions. Theory & Psychology, 21(5), 598–628. https://doi.org/10.1177/0959354311419252

- Engeström, Y., Sannino, A., & Virkkunen, J. (2014). On the methodological demands of formative interventions. Mind, Culture, and Activity, 21(2), 118–128. https://doi.org/10.1080/10749039.2014.891868

- Fallman, D. (2007). Why research-oriented design isn’t design-oriented research: On the tensions between design and research in an implicit design discipline. Knowledge, Technology & Policy, 20(3), 193–200. https://doi.org/10.1007/s12130-007-9022-8

- Finkelstein, J. E. (2006). Learning in real time: Synchronous teaching and learning online. Jossey-Bass (Wiley).

- Fletcher-Flinn, C. M., & Gravatt, B. (1995). The efficacy of Computer Assisted Instruction (CAI): A meta-analysis. Journal of Educational Computing Research, 12(3), 219–241. https://doi.org/10.2190/51D4-F6L3-JQHU-9M31

- Flyvbjerg, B. (2001). Making social science matter: Why social inquiry fails and how it can succeed again (S. Sampson, Trans.). Cambridge University Press. https://doi.org/10.1017/CBO9780511810503

- Goldman, R. (2004). Video perspectivity meets wild and crazy teens: A design ethnography. Cambridge Journal of Education, 34(2), 157–178. https://doi.org/10.1080/03057640410001700543

- Goldman, R., Pea, R., Barron, B., & Derry, S. J. (Eds.). (2014). Video research in the learning sciences. Routledge.

- Goodyear, P. (2005). Educational design and networked learning: Patterns, pattern languages and design practice. Australasian Journal of Educational Technology, 21(1), ajet.1344. https://doi.org/10.14742/ajet.1344

- Greenhow, C., Graham, C. R., & Koehler, M. J. (2022/this issue). Foundations of online learning: Challenges and opportunities. Educational Psychologist, 57(3), 131–147. https://doi.org/10.1080/00461520.2022.2090364

- Greenhow, C., Sonnevend, J., & Agur, C. (2016). Education and social media: Toward a digital future. The MIT Press.

- Gregory, S. (1979). Design studies—the new capability [Editorial]. Design Studies, 1(1), 2. https://doi.org/10.1016/0142-694X(79)90018-8

- Gutiérrez, K. D., & Jurow, A. S. (2016). Social design experiments: Toward equity by design. Journal of the Learning Sciences, 25(4), 565–598. https://doi.org/10.1080/10508406.2016.1204548

- Heinrich, C. J., Darling-Aduana, J., Good, A., & Cheng, H. (2019). A look inside online educational settings in high school: Promises and pitfalls for improving educational opportunities and outcomes. American Educational Research Journal, 56(6), 2147–2188. https://doi.org/10.3102/0002831219838776

- Hiltz, S. R., & Goldman, R. (2004). Learning together online. Routledge. https://doi.org/10.4324/9781410611482

- Hoadley, C. (2002). Creating context: Design-based research in creating and understanding CSCL. In G. Stahl (Ed.), Computer Support for Collaborative Learning 2002: Foundations for a CSCL Community (pp. 453–462). Lawrence Erlbaum Associates. https://repository.isls.org/bitstream/1/3808/1/453-462.pdf

- Hoadley, C. M. (2004). Methodological alignment in design-based research. Educational Psychologist, 39(4), 203–212. https://doi.org/10.1207/s15326985ep3904_2

- Hoadley, C. (2018). A short history of the learning sciences. In F. Fischer, C. E. Hmelo-Silver, S. R. Goldman, & P. Reimann (Eds.), International handbook of the learning sciences (pp. 11–23). Routledge. https://doi.org/10.4324/9781315617572-2

- Hossain, Z., Bumbacher, E., Brauneis, A., Diaz, M., Saltarelli, A., Blikstein, P., & Riedel-Kruse, I. H. (2018). Design guidelines and empirical case study for scaling authentic inquiry-based science learning via open online courses and interactive biology cloud labs. International Journal of Artificial Intelligence in Education, 28(4), 478–507. https://doi.org/10.1007/s40593-017-0150-3

- Hung, D., Looi, C.-K., & Hin, L. T. W. (2005). Facilitating inter-collaborations in the learning sciences. Educational Technology, 45(4), 41–44.

- Kali, Y. (2016). Transformative learning in design research: The story behind the scenes [Keynote presentation]. International Conference of the Learning Sciences 2016. https://doi.org/10.13140/RG.2.2.21293.33760

- Kali, Y., & Hoadley, C. (2020). Design-based research methods in CSCL: Calibrating our epistemologies and ontologies. In U. Cress, C. Rosé, A. Wise, & J. Oshima (Eds.), International handbook of computer-supported collaborative learning. Springer. https://doi.org/10.13140/RG.2.2.31503.20642

- Kelly, A. (2004). Design research in education: Yes, but is it methodological? Journal of the Learning Sciences, 13(1), 115–128. https://doi.org/10.1207/s15327809jls1301_6

- Ko, A. J. (2020). How to design. In Design Methods. University of Washington. https://faculty.washington.edu/ajko/books/design-methods/#/design

- Laurel, B. (2003). Design research: Methods and perspectives. MIT Press.

- Lee, E., & Hannafin, M. J. (2016). A design framework for enhancing engagement in student-centered learning: Own it, learn it, and share it. Educational Technology Research and Development, 64(4), 707–734. https://doi.org/10.1007/s11423-015-9422-5

- Legg, C., & Hookway, C. (2020). Pragmatism. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy. Metaphysics Research Lab, Stanford University. https://plato.stanford.edu/archives/fall2020/entries/pragmatism/

- Lewis, C. (2015). What is improvement science? Do we need it in education? Educational Researcher, 44(1), 54–61. https://doi.org/10.3102/0013189X15570388

- Lewis, D. R., Carlson, S., Riesbeck, C., Lu, K., Gerber, E., & Easterday, M. (2020). The logic of effective iteration in design-based research. In M. Gresalfi & I. S. Horn (Eds.), The interdisciplinarity of the learning sciences: 14th International Conference of the Learning Sciences (ICLS) 2020 (Vol. 2, pp. 1149–1156). International Society of the Learning Sciences.

- Lindblom, C. E., & Cohen, D. K. (1979). Usable knowledge: Social science and social problem solving. Yale University Press.

- Linn, M. C. (2000). Designing the knowledge integration environment. International Journal of Science Education, 22(8), 781–796. https://doi.org/10.1080/095006900412275

- Martin, T. H. (1979). Formative evaluation. ACM SIGSOC Bulletin, 11(1), 11–12. https://doi.org/10.1145/1103002.1103007

- Maxwell, J. A. (2004). Causal explanation, qualitative research, and scientific inquiry in education. Educational Researcher, 33(2), 3–11. https://doi.org/10.3102/0013189X033002003

- Mayer, R. E. (1997). Multimedia learning: Are we asking the right questions? Educational Psychologist, 32(1), 1–19. https://doi.org/10.1207/s15326985ep3201_1

- Messick, S. (1998). Test validity: A matter of consequence. Social Indicators Research, 45(1/3), 35–44. https://doi.org/10.1023/A:1006964925094

- Moreno, R., & Mayer, R. E. (1999). Cognitive principles of multimedia learning: The role of modality and contiguity. Journal of Educational Psychology, 91(2), 358–368. https://doi.org/10.1037/0022-0663.91.2.358

- Nelson, H. G., & Stolterman, E. (2012). The design way: Intentional change in an unpredictable world (2nd ed.). MIT Press.

- Norman, D. A. (2013). The design of everyday things: Revised and expanded. Basic Books.

- Pea, R., & Lindgren, R. (2008). Video collaboratories for research and education: An analysis of collaboration design patterns. IEEE Transactions on Learning Technologies, 1(4), 235–247. https://doi.org/10.1109/TLT.2009.5

- Penuel, W. R., Allen, A.-R., Farrell, C., & Coburn, C. E. (2015). Conceptualizing research–practice partnerships as joint work at boundaries. Journal of Education for Students Placed at Risk (JESPAR), 20(1–2), 182–197. https://doi.org/10.1080/10824669.2014.988334

- Penuel, W. R., Fishman, B. J., Haugan Cheng, B., & Sabelli, N. (2011). Organizing research and development at the intersection of learning, implementation, and design. Educational Researcher, 40(7), 331–337. https://doi.org/10.3102/0013189X11421826

- Platt, J. (1983). The development of the “participant observation” method in sociology: Origin myth and history. Journal of the History of the Behavioral Sciences, 19(4), 379–393. https://doi.org/10.1002/1520-6696(198310)19:4<379::AID-JHBS2300190407>3.0.CO;2-5

- Poirier, M., Law, J. M., & Veispak, A. (2019). A spotlight on lack of evidence supporting the integration of blended learning in K-12 education: A systematic review. International Journal of Mobile and Blended Learning, 11(4), 1–14. https://doi.org/10.4018/IJMBL.2019100101

- Raven, M. E., & Flanders, A. (1996). Using contextual inquiry to learn about your audiences. ACM SIGDOC Asterisk Journal of Computer Documentation, 20(1), 1–13. https://doi.org/10.1145/227614.227615

- Rittel, H. W., & Webber, M. M. (1973). Dilemmas in a general theory of planning. Policy Sciences, 4(2), 155–169. https://doi.org/10.1007/BF01405730

- Rohse, S., & Anderson, T. (2006). Design patterns for complex learning. Journal of Learning Design, 1(3), v1i3.35. https://doi.org/10.5204/jld.v1i3.35

- Ryu, S. (2020). The role of mixed methods in conducting design-based research. Educational Psychologist, 55(4), 232–243. https://doi.org/10.1080/00461520.2020.1794871

- Sandoval, W. A. (2004). Developing learning theory by refining conjectures embodied in educational designs. Educational Psychologist, 39(4), 213–223. https://doi.org/10.1207/s15326985ep3904_3

- Sandoval, W. A. (2014). Conjecture mapping: An approach to systematic educational design research. Journal of the Learning Sciences, 23(1), 18–36. https://doi.org/10.1080/10508406.2013.778204

- Sandoval, W. A., & Bell, P. (2004). Design-based research methods for studying learning in context: Introduction. Educational Psychologist, 39(4), 199–201. https://doi.org/10.1207/s15326985ep3904_1

- Sannino, A., & Engeström, Y. (2017). Co-generation of societally impactful knowledge in Change Laboratories. Management Learning, 48(1), 80–96. https://doi.org/10.1177/1350507616671285

- Sannino, A., & Laitinen, A. (2015). Double stimulation in the waiting experiment: Testing a Vygotskian model of the emergence of volitional action. Learning, Culture and Social Interaction, 4, 4–18. https://doi.org/10.1016/j.lcsi.2014.07.002

- Schoenfeld, A. H., Smith, J. P. I., & Arcavi, A. (1993). Learning: The microgenetic analysis of one student’s evolving understanding of a complex subject matter domain. In R. Glaser (Ed.), Advances in instructional psychology (Vol. 4, pp. 55–175). Lawrence Erlbaum Associates. https://doi.org/10.4324/9781315864341-2

- Schön, D. A. (1992). Designing as reflective conversation with the materials of a design situation. Knowledge-Based Systems, 5(1), 3–14. https://doi.org/10.1016/0950-7051(92)90020-G

- Shavelson, R. J., Phillips, D. C., Towne, L., & Feuer, M. J. (2003). On the science of education design studies. Educational Researcher, 32(1), 25–28. https://doi.org/10.3102/0013189X032001025

- Shea, P., Richardson, J., & Swan, K. (2022/this issue). Building bridges to advance the community of inquiry framework for online learning. Educational Psychologist, 57(3). https://doi.org/10.1080/00461520.2022.2089989

- Siegler, R. S., & Crowley, K. (1991). The microgenetic method: A direct means for studying cognitive development. American Psychologist, 46(6), 606–620. https://doi.org/10.1037/0003-066X.46.6.606

- Simon, H. A. (1969). The sciences of the artificial. MIT Press.

- Simon, H. A. (1995). Problem forming, problem finding, and problem solving in design. In A. Collen & W. W. Gasparski (Eds.), Design and systems: General applications of methodology (Vol. 3, pp. 245–257). Transaction Publishers. https://doi.org/10.1184/pmc/simon/box00070/fld05401/bdl0001/doc0001 (Original work published 1987)

- Stevenson, L. (1989). Is scientific research value‐neutral? Inquiry, 32(2), 213–222. https://doi.org/10.1080/00201748908602188

- Strauss, A. L., & Corbin, J. (1994). Grounded theory methodology: An overview. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (pp. 273–285). SAGE.

- Sweller, J. (2011). Cognitive load theory. In Psychology of learning and motivation (Vol. 55, pp. 37–76). Academic Press.

- Tabak, I. (2004). Reconstructing context: Negotiating the tension between exogenous and endogenous educational design. Educational Psychologist, 39(4), 225–233. https://doi.org/10.1207/s15326985ep3904_4

- Tatar, D. (2007). The design tensions framework. Human-Computer Interaction, 22(4), 413–451. https://doi.org/10.1080/07370020701638814

- Tate, T., & Warschauer, M. (2022/this issue). Equity in online learning. Educational Psychologist, 57(3). https://doi.org/10.1080/00461520.2022.2062597

- Tobin, K., & Ritchie, S. M. (2012). Multi-method, multi-theoretical, multi-level research in the learning sciences. Asia-Pacific Education Researcher, 21(1), 117–129.

- Vakil, S., McKinney de Royston, M., Suad Nasir, N., & Kirshner, B. (2016). Rethinking race and power in design-based research: Reflections from the field. Cognition and Instruction, 34(3), 194–209. https://doi.org/10.1080/07370008.2016.1169817

- Van den Akker, J. (1999). Principles and methods of development research. In Design approaches and tools in education and training (pp. 1–14). Springer.

- Wang, F., & Hannafin, M. J. (2005). Design-based research and technology-enhanced learning environments. Educational Technology Research and Development, 53(4), 5–23. https://doi.org/10.1007/BF02504682

- Weston, C., McAlpine, L., & Bordonaro, T. (1995). A model for understanding formative evaluation in instructional design. Educational Technology Research and Development, 43(3), 29–48. https://doi.org/10.1007/BF02300454

- Zimmerman, J., Forlizzi, J., & Evenson, S. (2007). Research through design as a method for interaction design research in HCI. In Conference on Human Factors in Computing Systems – Proceedings (pp. 493–502). https://doi.org/10.1145/1240624.1240704

Appendix: Disambiguating design-based research from related terms

Design-based research, although increasingly visible and widely used (Anderson & Shattuck, 2012), still confuses many readers. This is partly due to inconsistent terminology, partly due to extant methods that share characteristics with DBR, and partly due to the adoption of stances from DBR in related methodologies (what Bell [2004] calls a “manifold enterprise”). We take each of these in turn.

Other terminology for DBR

The term design-based research first appeared in 2002 (Hoadley, 2002) and was explicitly proposed as a replacement for the earlier term “design experiments” (Brown, 1992; Collins, 1992), which was confusingly similar to the concept of experimental design. In the early 2000s, some authors preferred terms such as “design studies” (e.g., Linn, 2000) or “design research” (e.g., Edelson, 2002), although both terms had previously been used for other purposes (Gregory, 1979).

Methods that share characteristics with DBR

Within the larger world of social science research methods, design-based research resembles several other methodologies (Table A1). Instructional design and technology design alike share the notion of formative design evaluation (Edelson, 2002), or simply formative evaluation (Martin, 1979; Weston et al., 1995). Formative evaluation gives top priority to improving an intervention by collecting and analyzing data to assess or improve a design, whereas DBR is positioned as research first and design second. Another related idea is developmental research (Bakker, 2018), in which research and design go hand in hand to address questions of the form “How can (a design goal) be promoted?” Developmental research, however, was not conceived as a research methodology per se; instead, it linked design and research through existing methods. On the research side, DBR shares characteristics with earlier research methods. Participatory action research (PAR; Anderson, 2017) resembles DBR in that both engage researchers and participants in agenda setting. The main difference is DBR’s emphasis on iterative refinement and conjecture mapping with respect to designs; PAR, by contrast, tends to engage more with phronesis than DBR does.

Table A1. Methods that share characteristics with DBR.

Finally, the term “design-based research” suggests a complementary term, “research-based design,” which describes how designers sometimes incorporate social science research into an overarching design goal, as when a team performs an ethnographic study to collect user needs for a product design process.

Methods linked to DBR

Several other methodologies relate to DBR more directly, either stemming from it or evolving in response to it (Table A2). The first, design-based implementation research (DBIR; Penuel et al., 2011), may be thought of as an offshoot of DBR applied to problems of practice in educational systems; it is tightly tied to the notion of research–practice partnerships (Penuel et al., 2015). DBR and DBIR share a contextualized, emergent, iterative approach to knowledge building, linked to a design process aimed at producing knowledge. The prototypical DBIR project, however, links educational systems, practitioners, and researchers not only to set a common research agenda and to test and implement designed interventions, but also, as a priority, to understand how those interventions scale within a partnership. A second method that emerges from DBR and other earlier traditions is social design experimentation (Gutiérrez & Jurow, 2016), in which DBR is blended with ecological psychology approaches to social transformation for nondominant communities. Another related method is the formative intervention (Engeström, 2011), which draws on Vygotsky’s earlier notion of double stimulation (Sannino & Laitinen, 2015). Central to formative interventions is treating participants’ agency as a necessary component of any design conjecture. Sannino and Engeström (2017) operationalize formative intervention research in “change laboratories,” which explicitly prioritize outcomes related to change in activity systems. In this sense, designed interventions often aim at shifts in individual and cultural activities and practices, always with respect to the transformation of a particular context. Another method, extended from earlier organizational science research, is improvement science in the context of networked improvement communities (NICs; Bryk et al., 2015). In this configuration, research is again iterative and embedded in cycles of design, but with an explicit focus on studying variation across people and settings and change over time, and primarily on coordinating insights by looking not only within but also across contexts.

Table A2. Other design-oriented approaches to research methods.

Each of these methods has roots predating DBR, and each might be considered an alternative to, a specialized version of, or a variant of DBR. Proponents of all four explicitly engage with the DBR literature, and all four help solve a problem left unresolved by early DBR literature: once one has saturated an understanding of an intervention in a small number of contexts, how can one aim for more transformative generalization? With DBIR and NICs, the question of scale and dissemination is prominent; with change labs and social design experimentation, lasting transformation of social structures is a goal. Also, all four place greater emphasis on participant contributions to the design and research agenda (albeit in different forms) than DBR does, whose early literature spoke of “engagement” but not of power-sharing between researchers and participants. This seems a natural and productive methodological evolution, since DBR’s tentative generalizations may not drive the desired change without a structure for doing so.