?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Many approximate Bayesian inference methods assume a particular parametric form for approximating the posterior distribution. A Gaussian distribution provides a convenient density for such approaches; examples include the Laplace, penalized quasi-likelihood, Gaussian variational, and expectation propagation methods. Unfortunately, these all ignore potential posterior skewness. The recent work of Durante et al. [Skewed Bernstein-von Mises theorem and skew-modal approximations; 2023. ArXiv preprint arXiv:2301.03038.] addresses this using skew-modal (SM) approximations, and is theoretically justified by a skewed Bernstein-von Mises theorem. However, the SM approximation can be impractical to work with in terms of tractability and storage costs, and uses only local posterior information. We introduce a variety of matching-based approximation schemes using the standard skew-normal distribution to resolve these issues. Experiments were conducted to compare the performance of this skew-normal matching method (both as a standalone approximation and as a post-hoc skewness adjustment) with the SM and existing Gaussian approximations. We show that for small and moderate dimensions, skew-normal matching can be much more accurate than these other approaches. For post-hoc skewness adjustments, this comes at very little cost in additional computational time.

1. Introduction

Fast, scalable approximations to posterior distributions have been a staple of Bayesian inference when dealing with big data [Citation1,Citation2], especially when Markov Chain Monte Carlo (MCMC) is too computationally expensive. Approximate Bayesian inference (ABI) is a class of alternative methods that includes the Laplace approximation, the fully exponential (or improved) Laplace approximation [Citation3], variational Bayes and related methods, and expectation propagation [Citation4]. These alternative methods are often deterministic, based on approximating the posterior distribution via a standard distribution, and are often significantly faster than MCMC when working with large datasets. ABI methods typically work by minimizing some distance between the approximation and the posterior distribution. By performing optimization instead of sampling, large computational savings may be made. This major benefit is reflected by the extensive application of ABI methods for inference in a wide range of fields [Citation5].

However, this increase in speed is clearly also compromised by a decrease in accuracy, as ABI-based approximations can be only as accurate as the distribution used to approximate the posterior. If the chosen distribution used is not a good fit for the posterior, then the approximation can be poor. In particular, ABI methods often base their posterior approximations on the parametric form of a multivariate Gaussian density, in order to exploit the asymptotic normality of the posterior distribution guaranteed by the Bernstein-von Mises theorem [Citation6]. While such Gaussian approximations can usually be obtained quickly and are reasonably accurate, their quality will inevitably be questionable when the posterior is not similar in shape to the multivariate Gaussian density. For example, [Citation3] note that the principal regularity condition required for reasonable Laplace approximations is that the posterior is dominated by a single mode. Furthermore, skewness in the posterior density can lead to sub-par fits, as the Gaussian density is not skewed. This can potentially become a problem when the sample size is small compared to the number of parameters in the model. For instance, [Citation7] indicate that integrated nested Laplace approximations for generalized linear mixed models tend to be less accurate for binomial data with small sample sizes, which results in skewed posterior distributions. In addition, it is pointed out in [Citation8] that both low counts in Poisson regression and imbalanced data in binary regression can lead to skewness in the posterior distribution of the latent variables.

A recent advancement by [Citation9] addresses the second problem by way of a skewed Bernstein-von Mises theorem, where the asymptotic approximation takes the form of a generalized skew-normal (GSN) distribution [Citation10]. This leads to plug-in-based skew-modal (SM) approximations which converge to the posterior distribution faster than those of the classical Bernstein-von Mises theorem (i.e. Laplace approximations) by an order of , where n is the number of observations. While this method is an important theoretical contribution and was shown to be quite accurate for certain models, it is not without its drawbacks; these are discussed in Section 2. In Section 3, we develop the skew-normal matching method, an alternate approach to fitting general skewed approximations which does not suffer from these drawbacks. In Section 4, experiments are conducted to compare the performance of skew-normal matching with that of the SM and existing Gaussian approximations. In Section 5, the results and future directions are discussed. Finally, the supplementary material contains derivations and figures.

Notation

Let denote the set of observed variables, i.e. the data. Let

denote the set of all

positive definite matrices. Let

denote the density of the

distribution evaluated at

with mean

and covariance

, and Φ denote the cumulative distribution function of the standard Gaussian distribution. The ⊙ symbol in the exponent denotes the Hadamard power, the operation where all elements in a matrix are raised to the same power. If

is a square matrix then

is the vector consisting of the diagonal elements of

. If

is a vector then

is a diagonal matrix with diagonal elements

. Functions applied to vectors are interpreted as being applied element-wise.

2. The skew-modal approximation

The SM approximation of [Citation9] is based on the (multivariate) GSN distribution [Citation10]. In p dimensions, it is denoted by , where

,

, and

are the location parameter, scale parameter, and skewing function respectively. For

, its density has the form

Consider a p-dimensional posterior distribution in

. Suppose that

is its mode,

is the negative Hessian evaluated at this point, and

is third-order derivative evaluated at this point with respect to the s, t, and lth entries of

, where

. The SM approximation sets

Such a form can be derived from applying the third-order version of the Laplace method to the posterior distribution, leading to a skewed version of the Bernstein-von Mises theorem; the details are left to [Citation9]. It can be shown that given certain regularity conditions, the total variation distance between the posterior distribution and the SM approximation is of the order

, where n is the number of observations and

denotes order in probability with respect to the true data-generating mechanism. This improves on the rate of convergence of the standard Laplace approximation by a factor of

. Again, refer to [Citation9] for more details.

The SM approximation can be impractical, as there do not exist closed forms for properties such as the moments and marginal distributions of the GSN distribution. This can be an issue for example when attempting to perform inference on the marginal distributions or use SM approximations in other methods where an explicit functional form is needed to be efficient, such as site approximations in expectation propagation. If these quantities are of interest, they need to be estimated via Monte Carlo or further approximation is required. A sample from the GSN distribution may be be generated by first sampling

and

independently, before computing

, as noted in [Citation11]. However, sampling can introduce additional complexity in the approximation; for example, choices now need to be made regarding the number of samples and how density estimation is performed.

It is also clear that computations related to the SM approximation require the storage of all third derivatives of the posterior distribution. This costs

space, which is a factor of p more than any Gaussian approximation; again, this can be impractical when working with models with a large number of parameters.

Another potential issue with the SM approximation is the fact that only derivative information about the mode is used. By basing the approximation at the mode, important posterior information can be lost, similar to the standard Laplace approximation. This concept is highlighted in [Citation8] for example, where a variational Bayes correction (based on global posterior information) to the mean of the Laplace approximation (which only uses local information about the mode) is shown to result in higher accuracies.

3. Skew-normal matching

We propose skew-normal matching, a group of more practical (and potentially more accurate), but less theoretically grounded, matching-based alternatives to the SM approximation based on a standard skew-normal approximating form. Working with the more simple standard skew-normal distribution allows for more tractable posterior inference and lower memory requirements, which increases practicality. On the other hand, matching allows for the skewed approximation to be constructed from more general posterior statistics, potentially increasing accuracy.

To start, we introduce the standard (multivariate) skew-normal (SN) distribution. While it is usually presented using the original parameterization of [Citation12], we choose a common alternate form that simplifies the exposition. In p dimensions, the SN distribution is denoted by , where

,

, and

are the location, scale, and skewness parameters respectively. For

, its density can be written as

Note that all computations related to the density only require

space, which is p times less than the SM approximation, and equal to that of a Gaussian approximation. The SN distribution is a special case of the GSN family described in Section 2. An account of the properties of the SN distribution (including how to compute the marginal distributions) is given in detail by [Citation13]. Of particular importance in this paper are the formulas for the expectation, variance, and third-order unmixed central moments (TUM), which are

respectively for

, where

. Given

and

, the vector

can be recovered via the identity

, leading to the constraint

(1)

(1)

This implies a maximum ‘size’ of the TUM vector admittable by the SN distribution with a fixed

, and will be important in the moment matching scheme.

As a natural extension to the Gaussian distribution, the SN distribution lends itself particularly well to approximating probability distributions which are potentially skewed; [Citation13] give a brief outline of existing frequentist work using SN approximations in their applications chapter. In particular, SN approximations to the discrete binomial, negative binomial, and hypergeometric distributions via moment matching have been investigated by [Citation14]. Furthermore, unobserved continuous covariates were modelled using the SN distribution by [Citation15] in the analysis of case-control data. Additionally, [Citation16] have used the skew-normal functional form as the main component of their Edgeworth-type density expansions, in order to account for skewness in the density.

Under the skew-normal matching method, key statistics of the posterior distribution are estimated and then matched with the SN density to construct a suitable SN approximation. When the parameter of interest is p-dimensional, the SN approximation has a total of

parameters to vary. Four matching schemes were devised, each of which matches

posterior statistics with those of the SN density, so that there is at most one solution in general. These are summarized in the following subsections. Each scheme supports a standalone variant, and possibly a post-hoc skewness adjustment variant. In the standalone variant, the posterior statistics of interest are estimated using methods which do not already give an approximation of the posterior distribution. In the post-hoc skewness adjustment variant, at least one posterior statistic of interest is estimated using an existing Gaussian approximation, with the remainder estimated quickly via other methods. We give the variant its name because the SN approximation can be interpreted as a post-hoc skewness adjustment to the Gaussian approximation. The motivation for this variant is to have a fast way to correct for skewness in a general setting; we believe that this is where the practicality of skew-normal matching lies. For all schemes, details on posterior statistic estimation in the standalone variants can be found either in the supplementary material or in the referenced papers.

3.1. Moment matching

In the moment matching (MM) scheme, the mean and covariance matrix

, along with the TUM vector

of the posterior distribution

are estimated. These are then matched to the SN density to form an approximation of the posterior. This is similar to the work of [Citation14], where common distributions were moment matched to an SN distribution in the univariate case. The practicality of this approach is limited as posterior moments (especially third-order moments) are often not readily available. For the standalone variant, we estimate

,

, and

using Pareto smoothed importance sampling (PSIS) [Citation17] in this paper. There is no post-hoc skewness adjustment variant, since there is no way to quickly estimate

using a standard Gaussian approximation. The final system of matching equations for the MM scheme are given by

(2a)

(2a)

(2b)

(2b)

(2c)

(2c)

(2d)

(2d)

The derivations are provided in the supplementary material. It is straightforward to obtain the values

,

, and

of the approximating SN distribution. Once

and

are known,

can also be recovered easily. The steps of the moment matching scheme are outlined in Algorithm 1.

It is important to note that the MM equations (Equation2a(2a)

(2a) ) do not always admit a solution due to constraint (Equation1

(1)

(1) ). Using constraint (Equation1

(1)

(1) ) and the Woodbury identity, it can be shown that when

there is no solution to (Equation2a

(2a)

(2a) ). This intuitively corresponds to cases where the skewness is too large compared to the covariance. In such cases, one may make an adjustment to the observed value of

to obtain an approximate solution; the updated value has the form

for some multiplier

. As

, the norm of the matched value of

approaches infinity, which can cause numerical instabilities in the computations relating to the SN distribution. Thus, the value of m should be kept close to one so that the adjustment is minimized, but no so close such that numerical instabilities arise. We recommend setting m to a moderately high initial value such as 0.99, and if numerical instabilities arise, adjusting m downwards by taking powers (i.e.

,

, and so on) until they are resolved.

3.2. Derivative matching

In the derivative matching (DM) scheme, we denote the mode by , the negative Hessian at the mode by

, and the third-order unmixed derivatives (TUD) at the mode of the observed log-posterior by

. These are matched to the corresponding values of the SN density to form an approximation of the posterior distribution

. This can be viewed as a less memory-intensive approach to that of [Citation9]. For the standalone variant, we estimate

,

, and

using the Newton-Raphson method in this paper. There is no post-hoc skewness adjustment variant, since all posterior statistics of interest can already be quickly estimated. The final system of matching equations for the DM scheme are given by

(3a)

(3a)

(3b)

(3b)

(3c)

(3c)

(3d)

(3d)

where

. The derivations are provided in the supplementary material. Here, we have extracted the common term κ appearing in (Equation3a

(3a)

(3a) )–(Equation3c

(3c)

(3c) ) as an additional equation, which facilitates a (unique) solution to (Equation3a

(3a)

(3a) ). The key idea is to reduce the original system of matching equations to a line search in one dimension for κ. Once κ is solved for, the parameters of the SN density are then easily recovered. The steps of the derivative matching scheme are outlined in Algorithm 2.

3.3. Mean-mode-Hessian matching

In the mean-mode-Hessian (MMH) matching scheme, we use the mode , negative Hessian of the observed log joint likelihood at the mode

, and the posterior mean

. We match these with the corresponding quantities of the SN density. This leads to an approximation of the posterior distribution

. Intuitively, the difference between the mode and mean provides information about the posterior skewness. For the standalone variant, we estimate

and

using Newton-Raphson, and estimate

using one of three methods: an approach using the Jensen inequality, an improved Laplace approach, and PSIS. For the post-hoc skewness adjustment variant,

can instead be taken from an existing Gaussian approximation. The system of matching equations for the MMH scheme is given by

(4a)

(4a)

(4b)

(4b)

(4c)

(4c)

(4d)

(4d)

The derivations are provided in the supplementary material. Similar to the DM scheme, an auxiliary parameter/equation for κ is introduced to facilitate solving (Equation4a

(4a)

(4a) ). The steps of the MMH matching scheme are outlined in Algorithm 3. Again, the solution reduces the original system of matching equations to a line search in one dimension for κ (see line 5 in Algorithm 3). Once κ is obtained, the parameters of the SN density are then easily recovered.

Note that in Algorithm 3 is the difference between the mean and the mode of the target distribution, and indicates the strength and direction of skewness. It is assumed to be non-zero in the algorithm; the case for

can be reduced to moment matching a multivariate Gaussian and is trivial.

3.4. Mean-mode-covariance matching

In the mean-mode-covariance (MMC) matching scheme, the mode of the observed log-posterior, along with the mean

and covariance matrix

of the posterior

are estimated and then matched to the SN density to form an approximation of the posterior distribution

. Care should be taken to avoid confusion with the MM scheme, which also uses the mean and covariance. For the standalone variant, we estimate

using Newton-Raphson, and estimate

and

using PSIS. For the post-hoc skewness adjustment variant,

and

can instead be taken from an existing Gaussian approximation. The final system of matching equations for the MMC scheme is given by

(5a)

(5a)

(5b)

(5b)

(5c)

(5c)

(5d)

(5d)

The derivations are provided in the supplementary material. Similarly to the DM and MMH schemes, an auxiliary variable κ is introduced. The solution again hinges on solving a line search for κ after which the parameters of the SN density are easily recovered. The steps of the mean-mode-covariance matching scheme are outlined in Algorithm 4. Again, note that

in Algorithm 4 indicates the strength and direction of posterior skewness, and is assumed to be non-zero.

It is important to note that the the MMC equations (Equation5a(5a)

(5a) ) do not always admit a solution. When

, step 5 in Algorithm 4 has no solution (see the supplementary material for more details). This intuitively corresponds to cases where the skewness is too large compared to the covariance. In such cases, one may make an adjustment to the observed value of

to obtain an approximate solution; the updated value has the form

for some multiplier

. Similar to the MM scheme, as

, the norm of the matched value of

approaches infinity, which can cause numerical instabilities in the computations relating to the SN distribution. As with the MM scheme, we recommend setting m to a moderately high initial value such as 0.99 (so that the adjustment is minimized), and if numerical instabilities arise, adjusting m downwards by taking powers until they are resolved.

4. Experiments

We now consider practical applications of the skew-normal matching method for simple Bayesian problems, and measure its performance on both simulated and benchmark datasets through experiments. In particular, the case of Bayesian probit regression was considered, with logistic regression results provided in the supplementary material.

The gold standard to which all other methods were compared was single-chain MCMC using the No-U-Turn sampler, as implemented via the R package rstan [Citation18,Citation19] with 50,000 MCMC iterations (including 5000 warm-up iterations). Convergence was verified by checking that the value was less than 1.1 [Citation6]. If the chain did not converge, the result was discarded. We evaluated the four skew-normal matching schemes and a selection of common ABI methods. The first of these is Laplace's method, which has seen widespread use in the approximation of posteriors and posterior integrals [Citation3,Citation20,Citation21]. Next, we considered Gaussian variational Bayes (GVB), another well-established method, with direct optimization of the evidence lower bound performed via the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm. As a related method with relative ease of implementation, the delta method variational Bayes (DMVB) variant of [Citation22] and [Citation23] was also chosen. The expectation propagation (EP) framework from [Citation4], in its classical Gaussian product-over-sites implementation, was also investigated. Finally, the SM approximation of [Citation9] (with 50,000 samples used for posterior inference) was considered as a potential theoretically justified alternative to the skew-normal matching method.

For each combination of method, , and

, either random Gaussian (

for

and

) or AR1 covariate data (

with coefficient

, for

) was generated. In both cases,

, for

. Call n = 2p the low data case and n = 4p the moderate data case; we chose n to be small relative to p so as to induce more difficult situations where the posterior distribution can become quite skewed. The corresponding response data was then randomly generated using

, with

having p entries. Data was simulated 50 times for each combination of settings. In order to avoid pathological cases, we discarded simulations where separation was detected in the data (see [Citation24] for an overview). In addition to simulated examples, a selection of eight commonly used benchmark datasets for binary classification from the UCI machine learning repository were considered. Each dataset contained a certain number of numeric and categorical predictors, in addition to a column of binary responses to be predicted on. These datasets were coded as O-rings (n = 23, p = 3), Liver (n = 345, p = 6), Diabetes (n = 392, p = 9), Glass (n = 214, p = 10), Breast cancer (n = 699, p = 10), Heart (n = 270, p = 14), German credit (n = 1000, p = 25), and Ionosphere (n = 351, p = 33), where the value p includes the intercept. Breast cancer, German credit, and Ionosphere were shortened to Breast, German, and Ion respectively. In all examples, the prior distribution was set to

).

Given the previous settings, the experiments were split into two sets. The first set of experiments focussed on the use of the skew-normal matching method as a standalone approximation. The accuracy and run times of each method was evaluated individually, and compared. Approximate solutions for the MM and MMC schemes were allowed, and for the MMH scheme, all three variants relating to the estimation of the posterior mean were considered. The second set of experiments focussed on the use of the skew-normal matching method (more specifically the MMH and MMC schemes) as a post-hoc skewness adjustment to the DMVB, GVB and EP Gaussian approximations in particular. The Laplace and SM approximations were not considered as they do not provide estimates of the either the posterior mean or covariance, which are needed for the adjustments. The change in accuracy for each combination of Gaussian approximation and skewness adjustment was evaluated and examined. Approximate solutions were not allowed for the MMC scheme, with the justification being that there is a Gaussian approximation to fall back to. In such cases, the result was discarded.

Across the experiments, accuracy was calculated using the marginal components. More specifically, the difference between matching marginal components of the approximation and the MCMC estimate were computed using the norm. An appropriate transformation was then performed on this norm (ranging between 0 and 2) in order to obtain the

accuracy. The

accuracy for the jth marginal component is given by

(6)

(6)

where

and

are the jth marginal components of the MCMC estimate and the approximation, respectively. The former was estimated via density(), the default kernel density estimation function in R. The

accuracy ranges in value from 0 to 1, with values closer to one indicating better performance. The integral in (Equation6

(6)

(6) ) was computed numerically, with the bounds of integration set to

, with

and

being the mean and covariance respectively of the MCMC samples. This range was evenly split into 1000 intervals for use with the composite trapezoidal rule.

4.1. Standalone approximations

Simulation results for probit regression with independent covariates are shown in Table . The other cases are included in the supplementary material. MM and MMC tended to have the highest accuracies, followed by MMH and DM. The SM approximation had around the same

accuracies as DM, with this accuracy improving when both p and n were high. We suspect that the relatively low accuracies of the SM approximation is mainly due to the fact that it only uses local posterior information centred around the mode, unlike most skew-normal matching schemes.

Table 1. Mean marginal accuracies (Acc.) (expressed as a percentage) and times (in seconds) across probit regression simulations with independent covariate data. The highest accuracies for each value of p appear in bold.

In both the low and moderate data cases, skew-normal matching generally performed better compared to standard ABI approaches. However, as p increased, this improvement in accuracy diminished. Furthermore, as p increased, exact solutions to the MM and MMC schemes became less common and matching became less effective compared to variational approaches; this also led to decreases in accuracy. When p was above or equal to 24, GVB and EP started to outperform the skew-normal matching method. Interestingly, the SM approximation was only seen to consistently outperform the Laplace approximation out of the Gaussian approaches, even though it was shown to offer improvements in accuracy over a wide range of Gaussian approximations in [Citation9]. We suspect that this might be a result of the benchmark dataset used in [Citation9], where n = 27 and p = 3. A small number of dimensions coupled with a relatively high number of observations means that the accuracy of the SM approximation is guaranteed by the asymptotic results of the skewed Bernstein von-Mises theorem; such asymptotics may not apply for the datasets we were using, where n was much lower relative to p.

In most situations, the skew-normal matching variants tended to be slower than their Gaussian approximations by an order of around 2 to 100, depending on the setting, with the SM approximation taking roughly the same amount of time as skew-normal matching. It should be noted that some parts of GVB and SM were implemented in for speed, and so a direct comparison with the skew-normal matching method is not available. GVB is expected to be slower than some of the skew-normal matching variants under a fair comparison.

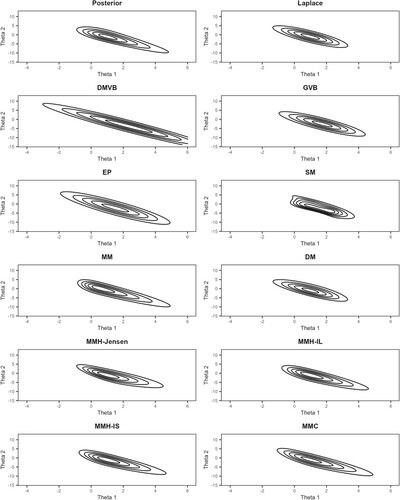

In general, the variants of the skew-normal matching method are seen to provide an increase in posterior fit compared to Gaussian approaches when p is not too high, at the cost of decreased speed. Better overall performance for the MM and MMC schemes may be achieved by only using them when an exact solution exists, and deferring to a Gaussian approximation otherwise. Figure illustrates the benefits of the skew-normal matching method in a particular low-dimensional case with p = 2 and n = 4. Note that, for this specific example, approximate solutions were not needed for the MM and MMC schemes.

Figure 1. Contour plots for the posterior and across various approximation methods, for a small probit regression example with p = 2 and n = 4.

Results for the benchmark datasets are presented in Table . A similar hierarchy of performance to the simulations was observed among the skew-normal matching variants, with MM, MMC, and MMH generally performing better, and DM performing worse. Here, the SM approximation had higher accuracies compared to DM, but lower accuracies compared to the other skew-normal matching variants. The skew-normal matching method performed as well or better compared to its Gaussian approximation methods when p was not too high, as was the case for the German credit and Ionosphere datasets. For Ionosphere in particular, approximate solutions were required for the MM and MMC schemes. As with before, the skew-normal matching method comes with the cost of decreased speed. Similar points of discussion compared to the simulations can be made for the relative performance of the SM approximation across the benchmarks.

Table 2. Mean marginal accuracies (Acc.) (expressed as a percentage) across probit regression benchmarks. The highest accuracies for each benchmark dataset appear in bold.

4.2. Post-hoc skewness adjustments

Simulation results for probit regression with independent covariates are shown in Tables and . We have included the probit case because it showed the most promising results. The other cases are included in the supplementary material.

Table 3. Mean total marginal improvement/deterioration of accuracies (expressed as a percentage) across probit regression post-hoc simulations with independent covariate data. The column ‘+’ indicates a mean total improvement in marginal

accuracy, while the column ‘−’ indicates the mean total decrease in marginal

accuracy across simulations. For example, if the marginal improvements in

accuracy for a p = 4 example were

, then the total marginal

improvement and deterioration are 6 and 5 respectively. Pairs where there is an overall improvement are highlighted in bold. Long dashes indicate no data.

Table 4. Success rates, i.e. rates where a solution exists to Algorithm 4, (expressed as a percentage) averaged over base approximations for the mean-mode-covariance based skewness adjustment. The mean-mode-Hessian based adjustments had a 100% success rate.

In the low data case, an MMH adjustment provided a noticeable increase in the quality of the posterior fit for the DMVB and GVB approximations, while a decrease in the quality of fit was seen for EP. On the other hand, MMC adjustments tended to increase or maintain accuracy for low values of p, with diminishing returns as p increased and exact solutions became rarer (as indicated by Table ). In the moderate data case, the MMH adjustment was seen to increase

accuracies across all methods for low values of p, but slightly decreased these accuracies for GVB and EP as p approached 40. In contrast, the MMC adjustment always increased or maintained the quality of the posterior fit. In general, simulations show that in most cases, some form of post-hoc skewness adjustment works reasonably well if the number of dimensions is not too high. The MMH adjustment gave very favourable results when there was low data (excluding EP, where it appeared to break down), while the MMC adjustment gave favourable results in all situations.

Results for the benchmarks are shown in Table . In the majority of cases, a post-hoc skewness adjustment was seen to give a slight improvement to the accuracy of the original Gaussian approximation. As with the simulations, MMH adjustments gave larger increases in

accuracy but also had the risk of decreasing accuracy when p was large. This was seen with the Heart, German credit, and Ionosphere datasets. On the other hand, MMC adjustments tended to give more modest increases in accuracy but had a much lower chance of decreasing posterior fit. The German credit dataset appears to be an anomaly in this regard, and requires further investigation. In general, the benchmarks show that it is beneficial to apply some form of post-hoc skewness adjustment to an existing Gaussian approximation when the number of dimensions is not too high. Some approximations are also seen to benefit from a skewness adjustment more than others.

5. Summary and future work

This paper has introduced the skew-normal matching method as an alternative to standard Gaussian-based approximations of the posterior distribution, providing a potentially more practical and accurate alternative to the SM approximation of [Citation9]. Four matching schemes are suggested, namely moment matching, derivative matching, mean-mode-Hessian matching, and mean-mode-covariance matching. Each scheme was based on matching a combination of derivatives at the mode and moments of the posterior with that of the multivariate SN density. The performance of these matching schemes was evaluated on a Bayesian probit regression model, where it was seen that for both simulated and benchmark data, the skew-normal matching method tended to outperform standard Gaussian-based approximations in terms of accuracy for low and moderate dimensions, at the cost of run time. In addition, all matching schemes except DM tended to outperform the SM approximation in the settings investigated. Each matching scheme is seen to offer a trade-off between performance and speed:

Moment matching generally outperforms standard Gaussian approximations if an exact solution exists (more so than mean-mode-Hessian matching) and the dimensionality is not too high, but is compromised by an increase in run time with the estimation of the covariance and third moments, requiring importance sampling.

Derivative matching offers an improvement in accuracy to the regular Laplace approximation when there is low data and the posterior is very skewed, at very little additional run time cost.

Mean-mode-Hessian matching provides as or better performance compared to most standard Gaussian approximations when the dimensionality is not too high, at the cost of increased run time.

Mean-mode-covariance matching generally outperforms standard Gaussian approximations if an exact solution exists (more so than mean-mode-Hessian matching) and the dimensionality is not too high, at the cost of increased run time with the estimation of the covariance, requiring importance sampling. Exact solutions are more frequent compared to moment matching.

Table 5. Mean total marginal improvement/deterioration in accuracies (expressed as a percentage) across probit regression post-hoc benchmarks. The ‘+’ columns indicates the total increases in marginal

accuracy, while the ‘−’ column indicates the total decrease in marginal

accuracy. For example, if the marginal improvements in

accuracy for a p = 4 example were

, then the total marginal

improvement and deterioration are 6 and 5 respectively. Pairs where there is an overall improvement are highlighted in bold. Long dashes indicate no data.

The MMH and MMC schemes additionally gave way to post-hoc skewness adjustments for standard Gaussian approximations. For the case of probit regression, these adjustments were shown to generally result in increased marginal accuracies compared to the base approximation:

Mean-mode-Hessian adjustments tend to give larger increases in marginal accuracies, but also risks decreasing these marginal accuracies when the dimensionality of the data is too large. Better performance was seen when there was low data.

Mean-mode-covariance adjustments tend to give more modest increases in marginal accuracies but generally did not decrease marginal accuracies.

Although the skew-normal matching method has its benefits when the posterior is known to be skewed, this is not without its drawbacks. Using a matching, rather than an optimal variational approach can impact performance for some problems. This may explain why performance breaks down when the number of dimensions is large. We are also heavily dependent on good estimates of the moments; without these, the skew-normal approximation can fail very easily. For high dimensional data where , skew normal matching is still possible, provided that the appropriate inverses exist to quantities such as the Hessian, although further work needs to be done in verifying the quality of these approximations.

There is clearly a variety of ways the work here could be extended. Other families of skewed densities could be entertained, the matching methods could be used as warm starts for skew-normal variational approximations [Citation25,Citation26], and matching with other posterior statistics is possible.

We hope that the results presented in this paper act as a benchmark for the performance of matching-based skewed approximations to posterior distributions. We believe that the skew-normal matching method fulfils a certain niche in posterior approximations and would like to see similar, if not better, methodologies being developed as approximate Bayesian inference grows and the need for such approximations increase.

Supplemental Material

Download PDF (5 MB)Disclosure statement

The authors confirm that there are no relevant financial or non-financial competing interests to report.

Additional information

Funding

References

- Graves A. Practical variational inference for neural networks. Adv Neural Inf Process Syst. 2011;24:1–9.

- Zhou X, Hu Y, Liang W, et al. Variational LSTM enhanced anomaly detection for industrial big data. IEEE Trans Ind Inform. 2020;17(5):3469–3477. doi: 10.1109/TII.9424

- Tierney L, Kadane JB. Accurate approximations for posterior moments and marginal densities. J Am Stat Assoc. 1986;81(393):82–86. doi: 10.1080/01621459.1986.10478240

- Minka TP. A family of algorithms for approximate Bayesian inference [dissertation]. Massachusetts Institute of Technology; 2001.

- Blei DM, Kucukelbir A, McAuliffe JD. Variational inference: A review for statisticians. J Am Stat Assoc. 2017;112(518):859–877. doi: 10.1080/01621459.2017.1285773

- Gelman A, Carlin JB, Stern HS, et al. Bayesian data analysis. New York: Chapman and Hall/CRC; 1995.

- Fong Y, Rue H, Wakefield J. Bayesian inference for generalized linear mixed models. Biostatistics. 2010;11(3):397–412. doi: 10.1093/biostatistics/kxp053

- van Niekerk J, Rue H. Correcting the Laplace method with variational Bayes; 2021. arXiv preprint arXiv:211112945.

- Durante D, Pozza F, Szabo B. Skewed Bernstein-von Mises theorem and skew-modal approximations; 2023. ArXiv preprint arXiv:2301.03038.

- Genton MG, Loperfido NMR. Generalized skew-elliptical distributions and their quadratic forms. Ann Inst Stat Math. 2005;57(2):389–401. doi: 10.1007/BF02507031

- Wang J, Boyer J, Genton MG. A skew-symmetric representation of multivariate distributions. Stat Sin. 2004;14(4):1259–1270.

- Azzalini A. The multivariate skew-normal distribution. Biometrika. 1996;83(4):715–726. doi: 10.1093/biomet/83.4.715

- Azzalini A, Capitanio A. The skew-normal and related families. New York: Cambridge University Press; 2014.

- Chang CH, Lin JJ, Pal N, et al. A note on improved approximation of the binomial distribution by the skew-normal distribution. Am Stat. 2008;62(2):167–170. doi: 10.1198/000313008X305359

- Guolo A. A flexible approach to measurement error correction in case–control studies. Biometrics. 2008;64(4):1207–1214. doi: 10.1111/biom.2008.64.issue-4

- Gupta AK, Kollo T. Density expansions based on the multivariate skew normal distribution. Sankhyā: Indian J Stat. 2003;65(4):821–835.

- Vehtari A, Simpson D, Gelman A, et al. Pareto smoothed importance sampling; 2021. ArXiv preprint arXiv:1507.02646.

- R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2021.

- Stan Development Team. RStan: the R interface to Stan; 2023. R package version 2.21.8.

- Raudenbush SW, Yang ML, Yosef M. Maximum likelihood for generalized linear models with nested random effects via high-order, multivariate Laplace approximation. J Comput Graph Stat. 2000;9(1):141–157.

- Rue H, Martino S, Chopin N. Approximate Bayesian inference for latent Gaussian models by using integrated nested Laplace approximations. J R Stat Soc Series B Stat Methodol. 2009;71(2):319–392. doi: 10.1111/j.1467-9868.2008.00700.x

- Braun M, McAuliffe J. Variational inference for large-scale models of discrete choice. J Am Stat Assoc. 2010;105(489):324–335. doi: 10.1198/jasa.2009.tm08030

- Wang C, Blei DM. Variational inference in nonconjugate models. J Mach Learn Res. 2013;14(1):1005–1031.

- Mansournia MA, Geroldinger A, Greenland S, et al. Separation in logistic regression: causes, consequences, and control. Am J Epidemiol. 2018;187(4):864–870. doi: 10.1093/aje/kwx299

- Ormerod JT. Skew-normal variational approximations for Bayesian inference. Technical report. 2011.

- Smith MS, Loaiza-Maya R, Nott DJ. High-dimensional copula variational approximation through transformation. J Comput Graph Stat. 2020;29(4):729–743. doi: 10.1080/10618600.2020.1740097