Abstract

Background: Ingrained assumptions about clinical placements (clerkships) for health professions students pursuing primary basic qualifications might undermine the best educational use of mobile devices.

Question: What works best for health professions students using mobile (hand-held) devices for educational support on clinical placements?

Methods: A Best Evidence Medical Education (BEME) effectiveness-review of “justification” research, complemented by “clarification” and “description” research, searched MEDLINE, Educational Resource Information Center, Web of Science, Cumulative Index to Nursing and Allied Health Literature, PsycInfo, Cochrane Central, and Scopus (1988–2016). Reviewer-pairs screened titles/abstracts. One pair coded, extracted, and synthesized evidence, working within the pragmatism paradigm.

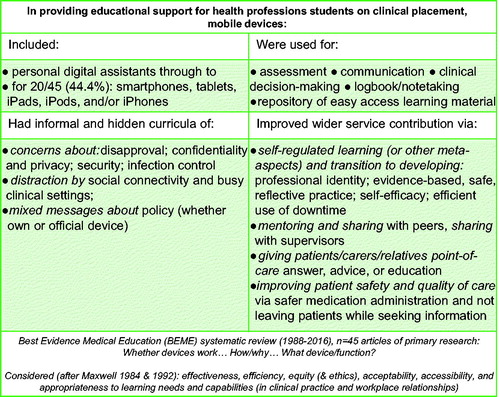

Summary of results: From screening 2279 abstracts, 49 articles met inclusion-criteria, including four systematic reviews for context. The 45 articles of at least Kirkpatrick K2 primary research mostly contributed K3 (39/45, 86.7%), mixed methods (21/45, 46.7%), and S3-strength (just over one-half) evidence. Mobile devices particularly supported students’ assessment, communication, clinical decision-making, logbook/notetaking, and accessing information (in about two-thirds). Informal and hidden curricula included concerns about disapproval, confidentiality and privacy, and security; distraction by social connectivity and busy clinical settings; and mixed messages about policy.

Discussion and conclusion: This idiosyncratic evidence-base of modest robustness suggested that mobile devices provide potentially powerful educational support on clinical placement, particularly with student transitions, metalearning, and care contribution. Explicit policy must tackle informal and hidden curricula though, addressing concerns about transgressions.

Introduction

In undergraduate medical education, mobile devices are increasingly used to enable and mediate activities of learning, educating, practising medicine, and everyday living (Masters et al. 2016), but clinical placements (clerkships) could perhaps incorporate them better. Ingrained assumptions about organizing clinical placements might clash with the idea of mobile devices supporting workplace learning.

Holmboe et al. (2011) highlighted that educator complacency about how students learn in the workplace meant persisting with clinical placements in blocks, despite this probably hindering meaningful learning and relationships with patients, colleagues, and teams. Medical students’ clinical placements have been suboptimal learning environments, providing inconsistent student experiences of active learning, coaching, feedback, and supervision (Remmen et al. 2000). Evidence suggests that longitudinal integrated placements, based on the continuity principle (Hirsh et al. 2007), improve learning and professional development (Ogur and Hirsh 2009; Walters et al. 2012). Transitions will still challenge students, though.

In the transition to clinical work in longitudinal integrated placements, for example, Dubé et al. (2015) found that students must shift from a classroom to a clinical mindset, deal with confusing learning environments, and develop their professional identity, progressively performing future clinical roles. Organizing clinical placements to provide good-quality learning experiences that reflect such transitions and continuities (clinical, professional, social, and organizational) is complex, and technology transitions should also be considered.

The evidence-base originally focused more on the technicalities of devices and functions than on how they might support transitions in learning, educating, medical practice, and everyday living, e.g. medical students’ professional development on clinical placements. Ellaway’s (2014) domains of medical learners’ use of mobile devices were:

logistical (self-managing: personal data; web-browsing; diary; contacts; maps; time);

personal (for entertainment and social networking);

learning-tools (for note-taking, accessing documents, recording);

learning resources (for timely checking and reviewing information).

Challenging ingrained assumptions, she wanted educators to be more positive about students using mobile devices as learning-tools and resources, rather than dwelling on personal use. For many health professions students, though, using mobile devices on clinical placements might be ad hoc, and discouraged as apparently transgressing boundaries (personal, professional, privacy). Ellaway (2014) recommended research to reduce negative messages from informal and hidden curricula about this use of mobile devices.

Masters et al. (2016) highlighted that, rather than just fitting mobile devices around current activities, educational practices should develop to make best use of them. Nevertheless, educators should remain alert to mobile devices disrupting educational interactions, whether in the classroom, on clinical placement, or elsewhere. Ellaway (2014) highlighted:

potential doubt and distrust in informal interactions (from other students, educators, or patients) about personal use and

inadequate system-level policy explicitly about how the curriculum acknowledges and incorporates the domain of use for learning (and probably logistics).

Misinterpretation of students’ use of mobile devices in the clinical setting is prominent (Payne et al. 2012).

The evidence-base specifically about medical students’ use of mobile devices on clinical placement has been slow to develop. By April 2011, Mosa et al. (2012) found evidence of about eleven “smartphone applications” providing educational reference-material for medical and nursing students (focused on anatomy or core clinical texts/tutorials). In the first three years of tablet technology, research had not reached medical students’ use in the clinical setting (Hogue 2013). Of the 16 articles (1999–2014) in Aungst and Belliveau’s (2015) review of “mobile smart devices” in interprofessional communications in the inpatient clinical setting, only two referred to students, and both concerned medical students.

An evidence-based approach must manage information overload, conflicting information systems, and informal and hidden curricula that discourage the use of mobile devices (from staff, patients, or other students). The “twelve tips” for medical students to get the most out of clinical placements notably omitted optimal use of mobile devices for just-in-time learning or point-of-care evidence (Bharamgoudar and Sonsale 2017). Nevertheless, mobile devices could support the tips recommending peer-to-peer learning, practising presenting findings, efficient time-management, and lifelong learning with reflective practice.

The evidence-base about nursing undergraduates originally focused on technical use of devices, functions, preferences, and barriers such as staff resistance (e.g. Williams and Dittmer 2009; George et al. 2010; Secco et al. 2013). O’Connor and Andrews (2015) considered that mobile devices might support nursing undergraduates with the transition to clinical practice, i.e. an unpredictable setting, unpredictable supervision levels, and the theory-practice gap. They found that the evidence-base was poor at defining and clarifying device terminology, focused on how devices were reshaping clinical education and practice, and focused on the complexity of sociotechnical barriers to implementation. Swan et al. (2013) had also highlighted the hostile clinical environment for nursing students using mobile devices (privacy concerns, unwelcoming clinicians, and Wi-Fi logistics). Others have highlighted that students value nurse educators role-modelling their use (Cibulka and Crane-Wider 2011). Raman’s (2015) structured review found that mobile technology enhanced nursing students' learning and performance on clinical placements, but substantial sociotechnical constraints undermined this use.

The perceived and actual usefulness of students using mobile devices is thus context-dependent and subject to mixed messages (Ellaway 2014; Masters et al. 2016). The evidence-base in health professions education must move beyond mobile device technicalities to explore how these devices support learning and patient care (Masters et al. 2016).

Here, the focus was on “small wireless, portable, handheld devices” (Willemse and Bozalek 2015, p. 2), i.e. “cellphones… mobile phones… smartphones… tablets” (Ellaway et al. 2014, p. 131). This fits with Masters et al.’s (2016) concept of a mobile device (the hardware “and, sometimes, by inference, its functionality,” other than SMS text-messaging) rather than their wider concept of mobile technologies (“software… operating systems… related infrastructure and technical protocols”) (Masters et al. 2016, p. 538).

For health professions students, the specific context of the clinical placement merits exploration, recognizing its complexity and learning transactions (Kilminster 2012). This Best Evidence Medical Education (BEME) “effectiveness-review” thus focused on the mobile device as educational support for health professions students on clinical placement.

This merited a broad interpretation of “effectiveness” (Gordon et al. 2014) as a component of improving the “quality” of outcome for the student or their service contribution, thus incorporating both educational and health services perspectives (Kirkpatrick 1996; Maxwell 1984, 1992). The focus extended beyond “justification research.” While Cook et al.’s (2008) classification of medical education research into justification, clarification, and description referred specifically to intervention studies, all three aspects illuminate medical education research generally. “Whether,” “how/why,” and “what” (Gordon 2016) all relate to this review-question.

Review-question

“What works best for health professions students using mobile (hand-held) devices for educational support on clinical placements?”

Sub-questions

Do mobile devices used on clinical placement support the education of health professions students, improving the quality of their learning (knowledge, attitude, skills, behaviour, perception, or approach)? [Justification research] How/why? [Clarification research]

What broad types of mobile devices and functions are used, what are the main learning activities supported, and what are the best conditions to support these? [Description research]

Methods

Definition of concepts

A mobile (handheld) device referred to a smartphone, tablet, or other personal digital assistant (PDA)-type instrument with advanced computing functions (e.g. Internet/Wi-Fi access, office, camera/recording, image/video display).

Health professions students referred to students in medicine, nursing, or allied health professions studying for the basic primary qualification.

Clinical placement (attachment, clerkship, rotation) referred to sustained periods of practical work (not just observation) in the clinical setting of hospitals, general practice, or community clinics, i.e. health professions students’ clinical workplace (not classroom or private study), and included simulated complex workplace practice with patients.

Synthesis of main messages

Building on a scoping-review, this BEME effectiveness-review used an eclectic approach to extract and summarize main messages about whether, how/why, and what from a disparate collection of evidence, consistent with guidance for both quantitative and qualitative reviews (Harden et al. 1999; Hammick et al. 2010; Bearman and Dawson 2013; Sharma et al. 2015). Synthesis used both content and thematic analysis, working within the pragmatism paradigm (Creswell 2003), i.e. where the review-question, rather than a particular worldview, is paramount in choosing methods.

Defining “outcomes”

Primary outcome comprised (in)direct or (un)intended impact on students (knowledge, attitudes, skills, behaviours, perceptions, or learning approaches) or on patients, staff, organization, or population. This extended beyond effectiveness to other aspects of the quality of students’ learning experience, but satisfaction was a secondary outcome. The main focus was on justification, complemented by clarification and description (Cook et al. 2008).

Measuring outcome

Two nonhierarchical classifications underpinned “measurement” of outcomes (and related processes) in self-reported or observed evidence, adapted from educational and health services perspectives on quality, respectively:

Kirkpatrick (K) four-level model of effectiveness (Kirkpatrick 1996):

– K1: reaction (e.g. preferences and technical barriers) to using the mobile device: from students re self/peers, from others, from (tracking) data about frequency/type of use

– K2a: its impact on attitude, behaviour, perception, or learning approach; K2b: its impact on knowledge or skills

– K3: its impact on how students reflect on their applied learning, their self-efficacy, and other such meta-learning

– K4a: its benefits for institutional/organizational practice; K4b: its benefits for others (patients, staff, or population), i.e. making a difference to others

Maxwell dimensions of quality (Maxwell 1984, 1992):

– effectiveness: how the device works to improve learning on clinical placement

– efficiency: how it affects the ratio of outputs to inputs

– equity: how it relates to fairness (a dimension widened to include ethical and professionalism aspects of using the device)

– acceptability: what students prefer and how satisfied they are

– accessibility: what usage/barriers, purposes, and advantages are reported

– appropriateness: how it meets or challenges learning needs and capabilities in clinical practice and work relationships (with patients/peers/staff)
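Because both classifications are nonhierarchical, one article can be coded to several K-levels and several Maxwell dimensions at once. The sketch below is a minimal Python illustration of that multi-label coding; the `ArticleCoding` record and its method names are hypothetical and do not describe how the NVivo coding was actually stored. It assumes that sub-levels such as K2a and K2b count as separate levels.

```python
from dataclasses import dataclass, field
from enum import Enum


class KLevel(Enum):
    """Kirkpatrick levels as adapted here (K2 and K4 split into a/b)."""
    K1 = "reaction"
    K2A = "attitude/behaviour/perception/approach"
    K2B = "knowledge/skills"
    K3 = "meta-learning"
    K4A = "institutional/organizational benefit"
    K4B = "benefit to patients/staff/population"


class Maxwell(Enum):
    """Maxwell dimensions of quality (equity widened to ethics/professionalism)."""
    EFFECTIVENESS = "effectiveness"
    EFFICIENCY = "efficiency"
    EQUITY = "equity"
    ACCEPTABILITY = "acceptability"
    ACCESSIBILITY = "accessibility"
    APPROPRIATENESS = "appropriateness"


@dataclass
class ArticleCoding:
    """Hypothetical coding record: an article may carry several labels of each kind."""
    article_id: str
    k_levels: set[KLevel] = field(default_factory=set)
    maxwell: set[Maxwell] = field(default_factory=set)

    def n_k_levels(self) -> int:
        # Assumption: sub-levels (e.g. K2a, K2b) are counted as separate levels.
        return len(self.k_levels)

    def eligible_for_effectiveness_review(self) -> bool:
        # K1-only primary studies were excluded; any K2-K4 code qualifies.
        return bool(self.k_levels - {KLevel.K1})


# Hypothetical example article coded at K1 and K3, with one Maxwell dimension.
example = ArticleCoding("hypothetical-01", {KLevel.K1, KLevel.K3}, {Maxwell.ACCESSIBILITY})
print(example.n_k_levels(), example.eligible_for_effectiveness_review())
```

Using sets rather than a single level field keeps the coding nonhierarchical: adding K1 to an article already coded at K3 never displaces the higher level.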

Search strategy

A scoping-exercise by OA informed options for developing the main search. The main search-strategy used key-term variants within four domains (Supplementary e-Appendix 1):

– student-type (study population) and

– mobile (handheld) device (“intervention”, but no comparison-group required) and

– clinical placement/workplace (setting of intervention) and

– learning outcome/activity (integral to that placement)
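Combining the four domains means joining key-term variants with OR inside each domain and joining the domains with AND, so a record must match at least one variant from every domain. A minimal Python sketch of that structure follows. The terms are hypothetical placeholders rather than the strategy in Supplementary e-Appendix 1, and each database (MEDLINE, Scopus, and so on) has its own field tags, truncation, and subject-heading syntax that this sketch ignores.

```python
def or_block(terms: list[str]) -> str:
    """Join the variants of one domain with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(domains: dict[str, list[str]]) -> str:
    """AND together one OR-block per domain, so every domain must match."""
    return " AND ".join(or_block(terms) for terms in domains.values())


# Hypothetical placeholder terms, one list per domain of the search strategy.
domains = {
    "student_type": ["medical student*", "nursing student*"],
    "mobile_device": ["smartphone*", "tablet*", "PDA"],
    "setting": ["clerkship*", "clinical placement*"],
    "outcome": ["learning"],
}
print(build_query(domains))
```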

To check further for mobile devices, the search included “telemedicine” and “text message,” but articles focused solely on these were excluded. The search of Latin-alphabet electronic databases spanned 1988–2016 (1988–2015 performed February 2016, supplemented in March 2017 with 2016 results), without language restrictions, in the sequence:

– MEDLINE, Educational Resource Information Center, Web of Science (core collection), Cumulative Index to Nursing and Allied Health Literature, PsycInfo, Cochrane Central, Scopus.

The scoping-review used five inclusion-criteria to find primary reports of:

1. empirical studies (primary or secondary research) of primary or secondary data collection published as: peer-reviewed journal article, including structured or systematic review; grey literature such as conference-abstracts (to check for subsequent papers) or commissioned research featuring…

2. use of mobile device by…

3. health professions students on a programme for basic primary qualification…

4. on clinical placement/clerkship in the clinical setting/workplace…

5. to support their learning, including when integral to health care delivery

Editorials, opinion-pieces, commentary-reviews, news-items, letters, narrative literature reviews, conference-abstract-only “publications” were excluded. Any of five criteria led to exclusion:

no empirical study or insufficient detail to gauge against inclusion-criteria

no use of mobile device, just desktop computing, “SMS” texting, or other such telecommunications, e.g. telemedicine, videoconferencing

health professions students studying for a postbasic/postprimary (advanced/postgraduate) qualification, e.g. “graduate nursing students” on Master’s or doctoral research programmes (rather than other graduates now undertaking a basic nursing degree)

classroom-based activity or simulated basic skills (e.g. insufficient detail about the simulated complex workplace)

healthcare delivery only, unless student learning was integral to diagnosis or treatment (e.g. not just using mobile devices to record others’ clinical practice in extracurricular audits)

KL undertook the electronic searches, managed the results in EndNote X7.7 reference management software (Thomson Reuters, then Clarivate Analytics), and deduplicated after each step by matching first on author, year, title, and reference-type, then on year, title, and reference-type. Manual searching (OA/JG) included:

reference-sections in core articles;

key journals (2012–2016): Academic Medicine, Medical Education, Medical Teacher, Advances in Health Sciences Education;

key conference proceedings (2012–2016): “Association for the Study of Medical Education,” “Association for Medical Education in Europe,” and Ottawa Conference on Medical Education.

Grey literature was not sought beyond the electronic databases and manual search outlined.
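The two-step deduplication amounts to matching on a strict key and then on a looser one that drops the author field, which catches records whose author strings were entered differently. A minimal sketch, assuming hypothetical dictionary records with `author`, `year`, `title`, and `ref_type` fields and simple case and punctuation normalization; it does not reproduce EndNote’s built-in duplicate detection.

```python
import re


def norm(value: object) -> str:
    """Lower-case and collapse punctuation/whitespace so trivial differences don't block a match."""
    return re.sub(r"[\W_]+", " ", str(value)).strip().lower()


def dedupe(records: list[dict], keys: tuple[str, ...]) -> list[dict]:
    """Keep the first record seen for each distinct normalized key tuple."""
    seen: set[tuple[str, ...]] = set()
    kept = []
    for rec in records:
        key = tuple(norm(rec.get(k, "")) for k in keys)
        if key not in seen:
            seen.add(key)
            kept.append(rec)
    return kept


def two_pass_dedupe(records: list[dict]) -> list[dict]:
    # Pass 1: author + year + title + reference type.
    first = dedupe(records, ("author", "year", "title", "ref_type"))
    # Pass 2: year + title + reference type (author dropped).
    return dedupe(first, ("year", "title", "ref_type"))
```

Running the strict pass first means the looser pass only has to judge records that already differ in their author strings.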

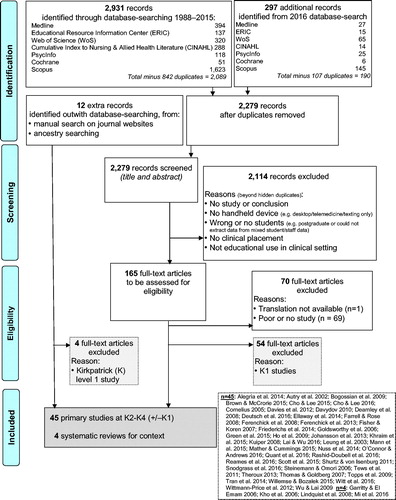

Screening

After calibrating against several examples, reviewer-pairs (GM/DCMT, DCMT/TC, JG/OA) independently screened the title and abstract of all 1988–2015 results, then discussed and resolved discrepancies in provisional inclusions/exclusions (Figure 1). Two reviewers (GM/DCMT) repeated this for the 2016 updated search. DCMT imported the full text of provisionally included articles into NVivo 10 (QSR International).

Figure 1. Search strategy to select n = 45 primary research and n = 4 systematic reviews: “What works best for health professions students using mobile (hand-held) devices for educational support on clinical placements?”.

After calibrating against several examples, GM/DCMT then independently screened these full-text articles, coding proposed inclusions to one or more K-levels. They then discussed discrepancies and confirmed the remaining articles and their K-level(s) 1–4. The BEME effectiveness-review then focused on the subset of primary studies from the scoping-review at K2–K4 (i.e. excluding K1-only), plus systematic reviews providing context.

Data abstraction for BEME effectiveness-review

Using twenty articles, two reviewers (JG/OA) piloted the feasibility of data extraction for quality-assessment. Two reviewers (GM/DCMT) then coded illustrative extracts of each article in NVivo for:

device; broad use (inductively); research approach (quantitative; qualitative; mixed methods); aim; nature of participants and sampling; number of participants and response rate; year, country, and design/method of data collection; year of publication; findings and conclusions;

K-level(s) and Maxwell dimensions of quality that relevant evidence supported;

level of evidence (Harden et al. 1999): L1 = professional judgement, i.e. the beliefs and values of experienced teachers; L2 = educational principles; L3 = professional experience; L4 (adjusted) = “empirical studies” short of L5/L6; L5 = cohort studies and related methods; and L6 = randomized controlled trials;

strength of evidence (grade) (Colthart et al. 2008; Hammick et al. 2010): “S1 = No clear conclusions can be drawn. Not significant. S2 = Results ambiguous, but there appears to be a trend. S3 = Conclusions can probably be based on the results. S4 = Results are clear and very likely to be true. S5 = Results are unequivocal.”

other context, e.g. ethics approval, theoretical frameworks used

GM/DCMT summarized the primary evidence-base for the “whom, when, and how” that mobile devices supported:

Content analysis:

characteristics of evidence: basic descriptive epidemiology (time, place, person)

nature and robustness of evidence extracted: K-level(s) and Maxwell dimension(s); indicators of level and strength

Thematic analysis:

main messages: inductively from representative extracts of quantitative and/or qualitative data

main omissions and caveats: inductively

The nature of the evidence precluded further synthesis beyond broad thematic analysis, but the overall approach was consistent with the pragmatism paradigm. To be included, systematic reviews had to double-code evidence from search-questions potentially capturing relevant evidence. All authors checked the narrative summaries against the evidence.

Results

Characteristics of studies: Time/place/person; nature of evidence; robustness

Of 2,279 records screened by abstract and title, 165 full-text articles were assessed for eligibility (Figure 1; Supplementary e-Appendix 2). Illustrating agreement within reviewer-pairs, GM/DCMT had good agreement for the 2016 update exercise, initially agreeing on 175/190 (92.1%, kappa = 0.64, p < 0.0001) abstracts before reaching consensus. Of 12 extra records identified outwith database-searching, four were Kirkpatrick (K)1-only, but eight joined the BEME effectiveness-review. The final set comprised four systematic reviews (searched to 2004, 2006, 2008, and April 2015) plus 45 primary studies (at least K2–K4 evidence) published 2002–2016 (median 2013). All four systematic reviews provided relevant S4 contextual evidence.
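Cohen’s kappa corrects raw agreement for the agreement expected by chance, κ = (p_o − p_e)/(1 − p_e). The minimal sketch below computes it from a square include/exclude agreement table; the table used in the example is hypothetical, because only the 175/190 agreement and κ = 0.64 are reported. Rearranging the formula does, however, recover the chance agreement implied by those two reported figures, p_e = (p_o − κ)/(1 − κ) ≈ 0.78, which is why a raw agreement of 92.1% yields a more modest kappa: chance agreement is high when both reviewers exclude most records.

```python
def cohens_kappa(table: list[list[int]]) -> float:
    """Cohen's kappa for a square agreement table (rows: rater A, columns: rater B)."""
    n = sum(sum(row) for row in table)
    k = len(table)
    p_o = sum(table[i][i] for i in range(k)) / n  # observed agreement (diagonal)
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n**2  # chance agreement
    return (p_o - p_e) / (1 - p_e)


def implied_chance_agreement(p_o: float, kappa: float) -> float:
    """Invert kappa = (p_o - p_e)/(1 - p_e) to recover p_e from reported figures."""
    return (p_o - kappa) / (1 - kappa)


# Hypothetical table: [[both include, A include/B exclude], [A exclude/B include, both exclude]]
print(round(cohens_kappa([[20, 5], [10, 15]]), 2))  # 0.4
# Reported 2016 update figures: 175/190 agreement, kappa = 0.64
print(round(implied_chance_agreement(175 / 190, 0.64), 3))  # 0.781
```

Because the reported kappa is rounded to two decimals, the implied chance agreement is approximate.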

Of primary studies, 24/45 (53.3%) were in North America and 11/45 (24.4%) in Australia or the United Kingdom, with only twelve countries represented overall. Furthermore, 22/45 (48.9%) focused on medical students and 20/45 (44.4%) on nursing or midwifery students (one solely midwifery and one midwifery mixed with nursing). One focused on allied health professions students and two on staff perceptions only. Of research approaches, mixed methods predominated (21/45, 46.7%), followed by quantitative (15/45, 33.3%) and qualitative (9/45, 20.0%).

The primary studies supported a median and modal number of K-levels of 2 (range 1–5), with only 3/45 (K2, K3, K3) contributing single K-level evidence. The evidence predominantly represented K3 (39/45, 86.7%), and just over three-quarters of articles provided supplementary K1 evidence (34/45, 75.6%). Twenty-four articles provided K4 evidence: 21/45 (46.7%) at K4b and 6 at K4a (some articles at both). The commonest profiles of Maxwell dimensions were evidence supporting accessibility, appropriateness, acceptability, and effectiveness (10/45, 22.2%) or that set plus efficiency (11/45, 24.4%).

Of primary studies, 19/45 (42.2%) were S4, 20/45 (44.4%) S3, 5/45 (11.1%) S2 overall (from which only S3-strength evidence was extracted), and 1/45 (2.2%) S5. Most were L4 empirical studies (38/45, 84.4%), but 5/45 (11.1%) were randomized controlled trials (L6), and two (4.4%) had longitudinal designs.
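Each percentage in these study characteristics is a simple count over the 45 primary studies. The sketch below reproduces the strength-of-evidence percentages from the counts reported above (19 S4, 20 S3, 5 S2, 1 S5); `percent_table` is an illustrative helper, not part of the review’s tooling.

```python
from collections import Counter


def percent_table(codes: list[str], denominator: int) -> dict[str, float]:
    """Percentage of articles per code, rounded to one decimal place as in the Results."""
    counts = Counter(codes)
    return {code: round(100 * n / denominator, 1) for code, n in counts.items()}


# One strength grade per primary study, using the reported counts.
strength = ["S4"] * 19 + ["S3"] * 20 + ["S2"] * 5 + ["S5"] * 1
assert len(strength) == 45
print(percent_table(strength, 45))  # {'S4': 42.2, 'S3': 44.4, 'S2': 11.1, 'S5': 2.2}
```

Because K-levels are multi-label, applying the same helper to one code per article per K-level (still dividing by 45) gives per-level percentages that can sum to more than 100%, as with K3 (86.7%) and K1 (75.6%).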

Findings

Broad types of devices, use, and functions, including “just-in-time” aspect

Over the study-period, more complex computational devices superseded PDAs. Overall, 20/45 (44.4%) primary studies focused on smartphones, tablets, iPads, iPods, and/or iPhones.

Mobile devices supported many educational functions for health professions students on clinical placement: assessment; communication; clinical decision-making; logbook/notetaking; repository for easily accessing learning material. The commonest use was as a repository in 30/45 (66.7%) primary studies, with 23/45 (51.1%) as a logbook and 16/45 (35.6%) for clinical decision-making.

Ellaway et al.’s (2014) mixed methods study found that medical students’ frequency and type of use of mobile devices reflected learning culture and context. Ambivalence in the early years, and valuing of mobile devices in clinical work in later years, illustrated such “asymmetries of use.” A particular strength was bringing theory into practice via immediate and easy point-of-care (“just-in-time”) access to information integrated in one source (Johansson et al. 2013; Willemse and Bozalek 2015; Rashid-Doubell et al. 2016). Even with unreliable Internet access in resource-limited settings (Willemse and Bozalek 2015) or off-line use generally (Shurtz and von Isenburg 2011), mobile devices added value to clinical work via convenience, portability, and immediacy (Witt et al. 2016). Together, such educational functions facilitated evidence-based, safe, and reflective practice.

Reports of the iPad facilitating evidence-based practice included medical students on clinical clerkships, where it promoted learner productivity and became integral to daily workflow over a year (Nuss et al. 2014), and nursing students at the point of simulated care, where it promoted patient education (Brown and McCrorie 2015). Meaningful literature-searching in clinical areas may well be limited in practice, though (Friederichs et al. 2014).

Evidence for context from systematic reviews

Of four systematic reviews giving contextual evidence, only two specified direct evidence about undergraduate health professions students using mobile devices to support learning on clinical placement.

Kho’s (2006) 1993–2004 systematic review of medical students’ and residents’ PDA use in medical education included supporting patient care but found only one randomized controlled trial with educational outcomes (Leung et al. 2003). No article reported objective impact on patient outcomes. While 27/67 (40.3%) articles featured medical students only, student and resident findings could not necessarily be separated in other studies. Overall for both groups, though, most evidence focused on accessing resources and tracking clinical encounters (patients, diagnoses, procedures).

Lindquist et al.’s (2008) 1996–2008 systematic review of PDA use by students and personnel in health care featured nursing and/or medical students in clinical settings in 10/48 (20.8%) articles. They reported two randomized controlled trials with educational outcomes (Leung et al. 2003; Goldsworthy et al. 2006), both included in this review. Lindquist et al. (2008) found the PDA to be valuable for undergraduate health care students, potentially improving their learning and quality of health care.

Garritty and El Emam’s (2006) 1993–2006 systematic review of estimates of current and future PDA use among health care staff identified only one undergraduate student estimate, i.e. that in 2004, 49% of Canadian medical students had a PDA or wireless device (College of Family Physicians of Canada, Canadian Medical Association, Royal College of Physicians and Surgeons of Canada, 2005). Overall, Garritty and El Emam (2006) reported little mention of students’ use of the devices in their included surveys.

Mi et al.’s (2016) 2010–April 2015 systematic review of types and uses of mobile devices by “health professions students” included students in only 14/20 studies, the other studies involving doctors in specialty training (residents). Only 15/20 (not individually identified) related to clinical settings. Overall, Mi et al. (2016) concluded that users valued portability, convenience, and access to many resources for checking evidence/knowledge and assisting learning. Nevertheless, barriers were unreliable Wi-Fi or Internet connections; inadequate screen-size, computing power, or battery power; other technical constraints; and concerns about cost, security, and how using the device would be viewed. They did not draw conclusions specifically about health professions students on clinical placements.

Evidence re logging clinical activity, workplace-based assessment, or tracking competencies

Logging clinical activity was not necessarily straightforward. Dearnley et al. (2008) reported (albeit without summarizing the basic quantitative data) that, of their first-year student midwives given Pocket PCs to maintain a clinical portfolio (for assessment) on placement, 45% avoided regularly taking the device into clinical practice. This was mostly through anxiety about losing it and considering it unacceptable to clinical colleagues and clients. Some practice mentors reportedly reinforced this by deeming it unacceptable (unlike notebook and pen) to use the device in front of clients. Green et al. (2015) found that only 29.2% of 274 senior medical students (strongly) agreed with “I consider it professional to use an iPhone in a clinical setting,” despite receiving an official device loaded with academic, assessment, and logging/reflective software for such use. Consequently, that medical school provided branded cases for the clinical setting, reinforcing that mobile learning represented the official curriculum.

Students have used mobile devices to allow formal tracking of progress with intended curriculum outcomes, e.g.:

medical students’ competencies across 19–20 internal medicine problems (Ferenchick et al. 2008; Ferenchick et al. 2013) and staff time spent observing and giving feedback on clinical evaluation exercise (CEX) assessments (Ferenchick et al. 2013);

medical students’ gender-related discussion by specialty (Autry et al. 2002);

midwifery and nursing students undergoing workplace-based assessment via clinical portfolio/logbook (Dearnley et al. 2008; Bogossian et al. 2009).

Sometimes, portfolio/logbook entries were mostly made at home (Bogossian et al. 2009) or otherwise not on placement, as the devices were left elsewhere (Dearnley et al. 2008).

Ferenchick et al. (2008) showed that competencies sampled in (albeit formative) assessment received more student attention, and Ferenchick et al. (2013) found that CEX performance did not predict objective structured clinical examination (OSCE) performance 1–11 months later. The mobile device facilitated such performance analysis. Besides use in mini-CEX (Ferenchick et al. 2013; Green et al. 2015), other assessment uses included:

Snodgrass et al.’s (2016) pilot study favorably evaluated formative feedback via iPads for physiotherapy, occupational therapy, and speech pathology students on clinical placement, using free text and standard statements (mapped to national discipline-specific competencies). The very small study-sample and insufficient detail about analyzing free-text comments weakened this evidence, but overall most students evaluated the system positively, e.g. 10/14 (strongly) agreed that “The feedback helped me to reflect on my performance.” Significantly fewer physiotherapy students than other students (strongly) agreed that the feedback highlighted areas for improvement (4/8 vs 6/6, p = 0.035). While technological constraints dominated students’ and educators’ comments on disadvantages, 2/9 participating clinical educators commented on iPad unsuitability in clinical areas requiring extra infectious disease control. Neither educators nor students reported concerns about how patients perceived their mobile device use.

Topps et al. (2009) piloted the use of a PDA (giving extra information and prompts) by six fifth-year medical students and three GP registrars (residents) to record supervisors’ 1-minute comments about their workplace professionalism on the PACE attributes (Van De Camp et al. 2004): Professional behaviour, Attitudes, Communication, and Ethics. In this small study, student and registrar findings were not presented separately, but there was good interrater reliability (Cronbach’s alpha ≈ 0.8) among five raters assessing 29 comments. Learners and raters found this workplace-based assessment feasible and acceptable, but some students felt uncomfortable asking and briefing supervisors to provide comments via the PDA.
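With raters treated as “items,” Cronbach’s alpha is α = k/(k − 1) × (1 − Σσ²ᵢ/σ²ₜ), where k is the number of raters, σ²ᵢ the variance of each rater’s scores across comments, and σ²ₜ the variance of the summed scores per comment. A minimal sketch, assuming a comments-by-raters score matrix; the ratings below are hypothetical, since Topps et al.’s rating data are not reproduced here.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a matrix with one row per rated comment and one column per rater."""
    k = len(scores[0])  # number of raters, treated as items
    rater_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])  # variance of per-comment totals
    return k / (k - 1) * (1 - sum(rater_vars) / total_var)


# Hypothetical ratings: 5 comments, each scored 1-5 by 3 raters.
ratings = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 2, 3], [1, 2, 1]]
print(round(cronbach_alpha(ratings), 2))
```

Alpha rises when raters rank the comments consistently, because the variance of the totals then grows faster than the sum of the individual raters’ variances.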

Evidence re self-regulated learning or other specific meta-aspects of learning and transition

The mobile device supporting self-regulated learning on clinical placement was a theme, with Alegría et al. (2014) reporting that this role can eclipse its use as a bedside clinical tool. Wu and Lai (2009) reported qualitative research on a PDA-based cognitive scaffolding and support system improving six psychiatric nursing students’ engagement, self-directed learning, and confidence on a clinical practicum. From such a 3-week placement, Lai and Wu (2016) also reported mixed methods research on a netbook-based e-portfolio supporting ten junior psychiatric nursing students’ improvement in “theory and practice” and self-regulated learning. The e-system facilitated reflection, integrating activities/material that instructors considered impractical for a paper-based system. While mean overall competency scores by staff improved significantly from 2.7 to 4.3 (p < 0.001) (1 = no theory and action to 5 = full theory and action) between the end of week 1 and the end of week 3, the short-term effect, very small sample-size, lack of controls, and lack of longitudinal data-linking for these individual volunteers undermined that evidence. Via a 10-item open-ended questionnaire about the e-portfolio system (apparently based on Bandura’s (1977) components of self-regulated learning, as per Zimmerman et al. 2011), all ten students considered that it supported improved self-awareness/self-observation. They cited examples of its facilitating self-assessment, access to others’ patient care assignments, and instructors’ comments (forum interaction). All except one considered that it supported self-judgement (good decision-making and further learning). Nine students also considered that additional learning material prompted “self-reaction.”

Several studies quantified specific effects of using the mobile device on learning:

Based on Ajzen’s (1991) Theory of Planned Behaviour, Mann et al. (2015) reported that undergraduate nursing students’ attitudes towards their 18 months of using an iPod Touch to access best practice resources (in the classroom or clinical setting) predicted intention to use the device in future practice (overall model on cross-sectional data: r² = 0.79, adjusting for self-efficacy and subjective norm scores). Between baseline (T1) and 18 months (T2), only subjective norm scores predicted T2 intention to use the device (overall model r² = 0.45, adjusting for self-efficacy).

Thomas and Goldberg (2007) reported how the PDA supported medical students (n = 59) in making timely reflective comments in their electronic log of patient encounters, prompted via embedded metacognitive cueing. The system facilitated monitoring of different types of reflection. There were modest-to-strong correlations between students’ logged comments classified as “diagnostic thinking” and those classified as “therapeutic relationships” (rp = 0.42, p < 0.001) or “primary interpretation” (rp = 0.60, p < 0.001), but no significant correlation with the end-of-clerkship knowledge test (rp = 0.10, p = 0.46).

In a very small sample of volunteers, Kuiper (2008) found weak-to-modest evidence that PDA-using nursing students on clinical placement scored similarly to non-PDA students (using conventional textbooks) in weekly measurement of clinical reasoning on the Outcome Present State Test (means: 65.1 vs 68.1; maximum = 74 points; p-value and test statistic not reported). PDA students scored a median of 3 on a 1–10 scale (Agree to Disagree) for “I use PDA frequently in clinical setting,” corroborated by the PDA clinical log. On the Computer Self-Efficacy tool adapted for the clinical setting (which did not explore patients’ negative perceptions of the device), the 12 PDA users’ scores suggested confidence in using the resources for assignments, self-organization, and greater clinical effectiveness, but not in being more self-reliant: for “I will be less reliant on support persons” (1 = not at all … to 10 = totally … confident), mode = 4 and median = 6.

Mobile devices supporting educational transition emerged as a theme. Rashid-Doubell et al.’s (2016) interpretative phenomenological study of five senior medical students at a Middle East international medical school highlighted how the device supported transition to “the doctor.” With growing confidence, students consulted the device less for information-checking, were more self-restrained (not losing trust by “fumbling with student props,” p. 8), used it more judiciously in front of patients, peers, and staff, and knew when to observe and practise clinical skills instead.

Efficiency was another theme. Davies et al. (2012) generated a model whereby the device promoted just-in-time learning in the clinical context, repetition of learning, supplementing rather than replacing learning, and making use of wasted time. In Quant et al.’s (2016) cross-sectional online survey across United States programmes, including osteopathy, 95% of “medical students” (731/2500, 29%, responding) considered that using medical applications saved time. Of Tran et al.’s (2014) medical students, 94% (90/96) (strongly) agreed that “Using my personal mobile phone for clinical work makes me more efficient” (although this quantitative study did not otherwise specify efficiency). Reported time efficiencies included:

medical students using the mobile device to make best use of “downtime” (Alegría et al. 2014; Davies et al. 2012);

nursing and midwifery students using iPads for quicker evidence-based decision-making and patient education with simulated patients in the “clinical setting” (Brown and McCrorie 2015);

nursing students reporting that the device made patient care “‘so much easier’ and ‘so much faster’” to provide, e.g. “[I] didn’t have to run back and forth 15 times because I forgot what the med was—helped keep [my] train of thought” (Wittmann-Price et al. 2012, p. 645);

nursing students using a PDA to reduce the theory-practice gap on a psychiatric nursing clinical practicum, saving time between logging patient episodes and receiving instructors’ comments (Wu and Lai 2009);

nursing students considering that the device improved self-organization, freeing time to spend with patients (Johansson et al. 2013; Theroux 2013);

nursing students in a workshop codesigning a clinical skills education application prioritizing better use of time and supporting quick and effective learning in busy clinical settings (O’Connor and Andrews 2016).

Evidence re alternative uses to support learning

Unusual examples of mobile devices supporting learning involved social media and the monitoring of sleep hygiene:

Reames et al. (2016) evaluated the impact on third-year medical students of receiving thrice-daily surgical learning points via Twitter on their own smartphones, set to display the tweets as banners, during their 8-week surgery clerkship. Of the 61/66 completing a preclerkship survey, 53 (87%) regularly used a smartphone. In the postclerkship survey, 59% agreed that it “somewhat or very positively affected my knowledge,” although students’ aggregate mean National Board of Medical Examiners (NBME) Shelf Examination in Surgery scores did not differ significantly from those of their predecessors the previous year (historical controls; p = 0.37). Only 1/62 (2%) reported that it “somewhat negatively affected my knowledge.” Most (53%) reported that it did not influence their clerkship engagement either way (possibly due to one-way information flow), and only 32% used the tweeting tool at least weekly. In open-ended feedback, about one-half of students considered that it had improved their learning, and the more frequent preclerkship Twitter users were generally more positive. The evidence was weakened by pre- and postintervention measures not being linked for individuals.

Steinemann and Omori’s (2006) third-year medical students used a PDA to track surgery placement hours and sleep hours for one week midclerkship. This showed that 24/37 had transgressed departmental policy; that work hours were overestimated by a mean of 19.5 hours; and that operating room hours correlated positively (and in-hospital study hours negatively) with NBME surgery scores, but negatively with clinical placement performance ratings. As the policy restricting placement hours to 80 per week was likely intended to enhance learning, well-being, and timetabling, such PDA tracking might improve student and patient experience and policy.

Evidence re concerns in the clinical setting and professional identity

Informal and hidden curricula undermine students’ use of mobile devices to support their learning (Ellaway 2014).

What might staff think?

Across many studies, students worried about staff misinterpreting their use of mobile devices as non-work-related (Alegría et al. 2014; Rashid-Doubell et al. 2016), but the tablet potentially seemed more legitimate than the smartphone in front of staff (Alegría et al. 2014) and, similarly, in front of patients (Rashid-Doubell et al. 2016). Even using tablets in front of staff might require time before students feel comfortable (Nuss et al. 2014). Others reported actual staff disapproval of students using the devices (Dearnley et al. 2008; Bogossian et al. 2009; Davies et al. 2012; Ellaway et al. 2014; Rashid-Doubell et al. 2016). Examples included students noting that staff: were “old-school” and assumed personal use (Rashid-Doubell et al. 2016); perceived that patients would be uncomfortable (e.g. Johansson et al. 2013, regarding nursing students with psychiatric in-patients); or opposed signing off a clinical task on the device (Green et al. 2015). Of Green et al.’s (2015) senior medical students using an officially issued iPhone (loaded with academic, assessment, and reflective software), only 37.4% agreed that assessors responded well to completing a mini-CEX on it.

Regarding professional identity formation, Mather and Cummings’ (2015) nurse clinical educators reported positively on their own use of mobile devices, citing freed time to be with patients, retrieval of point-of-care information, engaging patients in their own care, fewer errors, increased collegiality, and support for patient education. Nevertheless, policy preventing use of mobile devices and peers’ negative reactions precluded “side-by-side” learning with patients and students. The nurse educators considered that covert attempts to use mobile devices (by “ducking out” or “toilet learning” to ascertain, clarify, or check information) showed poor role-modelling that confused students. Nurse educators also thought that they should “announce use” to prevent nonwork use being assumed, a solution also used by Ellaway et al.’s (2014) medical students: “I say I’m going to write that into my notes and then I’ll pull out my phone to make it really clear that I’m going to do it on my phone” (p. 135). Nursing professional self-image also emerged when final-year nursing students codesigning a clinical skills-based educational application (via a socio-cognitive engineering approach) discussed how it should look and work (O’Connor and Andrews 2016). Suggestions included that they and healthcare staff should explain to patients and to each other why they were using a mobile device, to avoid misinterpretation.

Sometimes staff in clinical settings were positive about students using a device, e.g. rural supervisors in Johansson et al.’s (2013) study of nursing students; staff nurses working with nursing students on their 10-week clinical placement on a medical-surgical unit (Wittmann-Price et al. 2012); or “new school” consultants encouraging Rashid-Doubell et al.’s (2016) medical students to check information on ward rounds. Staff might indeed actively encourage students to use the devices, e.g. for nursing students to access drug information in real time (Farrell and Rose 2008).

What might patients think?

A recurrent theme was that students were reluctant to use mobile devices in front of patients for fear of being seen as unprofessional (Fisher and Koren 2007; Dearnley et al. 2008; Farrell and Rose 2008; Bogossian et al. 2009; Davies et al. 2012; Theroux 2013; Green et al. 2015; Khraim et al. 2015; Willemse and Bozalek 2015; Witt et al. 2016; Rashid-Doubell et al. 2016). Concerns included:

wasting time while there were other priorities;

missing the clinical moment;

exposing their lack of competence;

feeling inappropriate, unacceptable, distasteful, rude, or less patient-centered; or

being misinterpreted as gameplaying, socializing, or otherwise using the device for personal purposes.

When discussing their randomized controlled trial evidence about just-in-time videos on mobile devices, Tews et al. (2011) also mentioned anecdotal student concerns about such patient misperception. Nevertheless, evidence from Wu and Lai (2009) suggested that patients were accommodating and curious, and later evidence (Lai and Wu 2016) suggested that junior Taiwanese nursing students were less reticent about patients seeing them using such devices. On their 3-week psychiatry placement piloting a netbook-based e-portfolio, “our students would routinely take out their netbook and immediately record what they observed with their patients” (p. 542). In some clinical settings, students may be greatly concerned about theft of the device (Bogossian et al. 2009; Witt et al. 2016).

Quant et al.’s (2016) survey of United States medical students found that “more than 50%” considered that they appeared less engaged if using a mobile device in front of colleagues, and 54% if in front of patients. Senior students were reportedly more comfortable than junior students and more likely to agree that it looked to patients as though “You cared enough to double check…” (p. 3), but raw data and the precision of estimates were omitted. Mann et al. (2015) found that 15/23 (65.2%) nursing students given an iPod Touch to use in patient care over 18 months felt that staff and patients assumed they were using the device for nonwork purposes, perhaps because staff did not use mobile devices in patient care.

Scott et al.’s (2015) focus groups of year 2 and 3 medical students suggested that students decided for themselves whether to use a mobile device, despite the medical school prohibiting use and despite their own concerns about etiquette, privacy and security, and patients wrongly assuming personal use. Students were considerably more concerned than doctors about patients’/carers’ reactions to student use (78.0% vs 32.7%, p < 0.0001). Furthermore, 78% of these medical students were “Unsure of tutors’/clinicians’ reaction” to their using a mobile device in the clinical setting, and 20% of medical students and 21% of doctors agreed that “it distracts me” (p. 7). Despite this, most students, doctors, patients, and carers in Scott et al.’s (2015) study considered that using mobile devices in the clinical setting would aid learning and practice.

Distraction and the busy setting

Using mobile devices at the right time and place to support the right aspect of learning was another theme. Students might find the busy clinical setting too demanding to invest time in learning optimal use of a mobile device (Khraim et al. 2015). Ellaway et al.’s (2014) medical students’ concerns included becoming device-dependent and being distracted from patient-centered care.

Even with a policy not to use smartphones on clinical placement, students were distracted by their own or others’ use of the device, or witnessed other students being distracted (Cho and Lee 2016). Cho and Lee (2015, 2016) twice found that frequent users tended to disagree with smartphone restriction policies on clinical placements (r = −0.890, p < 0.05; r = −0.245, p < 0.0001, respectively). Green et al. (2015) found that medical students’ self-reported frequency and proficiency of use were each significantly associated with their agreement that the device was enhancing their learning. Rashid-Doubell et al. (2016) also reported medical students being distracted during patient observation, when social connectivity displaced information-checking.

Cho and Lee (2015) derived a measurement scale for smartphone addiction comprising: withdrawal (irritation or anxiety about not being able to take smartphone messages), tolerance (overuse and the urge to reuse the device straight away), interference with daily routines, and positive expectations. From a cross-sectional questionnaire survey (response rate: 99/218, 45.4%), Tran et al. (2014) reported on final-year medical students’ perceptions of smartphone use disrupting clinical work: 46% (45/97) had telephoned, texted, or emailed during patient encounters and 93% (89/96) perceived that the senior resident or consultant had done so. Despite this evidence of “distracted doctoring” (p. 6) from increased connectivity, 86% (82/95) (strongly) agreed that smartphone use “allows me to provide better patient care” (p. 5).

Ethical and equity concerns

The only explicit example of mobile devices supporting equity in education or care was in monitoring students’ gender-based discussions (Autry et al. 2002). Green et al. (2015) reported that students received the same mobile device partly to address equity concerns. Steinemann and Omori’s (2006) monitoring of medical students via mobile devices exposed transgression of the work-hours policy on clinical placement, an ethical and equity issue.

Regarding ethical use of the mobile device, Cho and Lee (2016) were concerned about many of their nursing students disregarding a nonuse policy, with the related distraction also reported by others (Ellaway et al. 2014).

Confidentiality was a more recent key concern (Tran et al. 2014; Willemse and Bozalek 2015; Scott et al. 2015). Tran et al. (2014) focused on patient confidentiality concerns about medical students’ unsecured smartphones: 26% (26/99) used no encryption or password protection. Although 68% (65/95) (strongly) agreed that patient-related communication with colleagues on smartphones risked privacy and confidentiality breaches, 22% (21/96) still texted or emailed patient-identifiable data to colleagues. Willemse and Bozalek (2015) reported educator concerns when nursing students “have to take pictures of the patients” (p. 8). Topps et al. (2009) reported evidence of mobile devices supporting workplace-based assessment of students’ ethical attributes.
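Tran et al.’s (2014) figures, quoted at several points in this section, use shifting denominators (95, 96, 97, 99), presumably because of item non-response. As a simple consistency check, the Python sketch below recomputes each whole-number percentage from the quoted counts. The item labels and the helper name `whole_percent` are our own shorthand, not Tran et al.’s wording.

```python
# Counts quoted in this review for Tran et al. (2014): (numerator, denominator).
# Labels are shorthand written for this sketch, not the survey's item wording.
tran_counts = {
    "more efficient with own phone": (90, 96),
    "phoned/texted/emailed during encounters": (45, 97),
    "saw senior resident/consultant do so": (89, 96),
    "smartphone allows better patient care": (82, 95),
    "no encryption or password protection": (26, 99),
    "communication risks privacy breaches": (65, 95),
    "sent patient-identifiable data": (21, 96),
}


def whole_percent(numerator, denominator):
    """Percentage rounded half up to a whole number (no banker's rounding)."""
    return int(100 * numerator / denominator + 0.5)


for label, (n, d) in tran_counts.items():
    print(f"{label}: {n}/{d} = {whole_percent(n, d)}%")

# Survey response rate, to one decimal place as reported.
print(f"response rate: {100 * 99 / 218:.1f}%")
```

Each recomputed value matches the percentage given in the text, so the differing denominators do not signal transcription errors.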

Deutsch et al. (2016) identified including digital professionalism in student orientation as a best practice for iPad implementation. Other evidence suggested the need for better staff role-modelling and institutional governance of mobile device use in the workplace, given potential interactions with students’ professional identity formation (Mather and Cummings 2015; Scott et al. 2015; Rashid-Doubell et al. 2016) and their moral development (Scott et al. 2015).

Benefits for others

Benefits for others included:

students using mobile devices to mentor other students or e-mail them educational material (Bogossian et al. 2009);

patients or carers/relatives receiving more timely point-of-care answers to questions, advice, or education from nursing students (Johansson et al. 2013);

patient safety improving through safer medication administration (Fisher and Koren 2007; Wittmann-Price et al. 2012; Theroux 2013);

patient safety and quality of care improving as nursing students did not leave patients on their own while seeking further information via the device (Johansson et al. 2013).

Quant et al.’s (2016) survey of United States medical students found that 87% considered that using applications improved patient care and 78% that it increased diagnostic accuracy. Ellaway et al.’s (2014) medical students used their official mobile devices in the clinical setting to support their own learning and their healthcare team, and sometimes to improve patient communication. Year 3 and 4 students reported using the devices (rather than the pagers issued for clinical placements) to communicate with preceptors. Nuss et al.’s (2014) 37 medical students using the iPad throughout a year of clinical clerkships mainly accessed patient data and sought evidence to improve clinical decision-making. In Shurtz and von Isenburg’s (2011) very small pilot study of medical students and preceptors using a Kindle e-reader on a 4-week family medicine clerkship, only 15/20 students and 7/14 preceptors responded to an online survey. Only 8/22 reported using the device for direct patient care, which involved answering queries in the examination room; 6 answered patient queries. Such use was rated “tolerable” by 9, “terrific” by 1, but “terrible” by 11, with preceptors significantly more likely than students to recommend it.

Evidence from intervention studies (e.g. controlled before-and-after, (non-)randomized)

Two nonrandomized intervention studies investigated the effect of mobile devices on clinical placement-related anxiety (Davydov 2010, a thesis described below; Ho et al. 2009). Ho et al.’s (2009) nonrandomized study of Year 3 medical students explored the self-reported impact of using PDA-based patient logging, resources, and reflective tools on a paediatric clinical placement. The 94/125 choosing to participate were assigned to log patient encounters electronically at the “point of care,” to be paper-based controls, or to provide baseline data. The PDA group logged 11 times as many patient encounters and considered learning and reflection to be enhanced more than the controls did (mean 3.26 vs 2.00, on a scale of 1 = not at all to 5 = extremely enhanced, p < 0.01). The intervention group performed similarly on their clinical supervisor performance rating, significantly better on the written examination, but worse on the OSCE. In focus groups, PDA students highlighted that repetition, i.e. logging and reflecting across many similar instances, enhanced their learning.

Of four randomized controlled trials, two related to medical students learning evidence-based medicine, one to medical students receiving instructions before case presentations, and one to nursing students’ self-efficacy. A further trial of nursing students learning pharmacology attempted cluster randomization:

In a pilot study randomizing medical students to three ways of electronic literature searching in the clinical setting, Friederichs et al. (2014) found that medical students rated desktop computing significantly more effective (mean = 3.22; 1 = strongly disagree, 5 = strongly agree) than tablets (2.13) and smartphones (1.68) for finding the relevant Cochrane review. Students using desktop computing were also more likely to agree (2.88) that they would try literature searching in their next clinical placement (tablet = 2.16, p < 0.001; smartphone = 1.87, p < 0.001). Students did rate the mobility of tablets (3.90) and smartphones (4.39) significantly superior to that of desktop computing (2.38, p < 0.001), rating tablets and smartphones similarly except for satisfaction with screen size (tablet 4.10, smartphone 2.00, p < 0.001). Generalizability was limited by studying just one practical day with simulated patients and by not excluding students already familiar with those mobile devices.

In a randomized controlled cross-over trial, medical students accessed evidence-based decision-making tools significantly more often via a PDA than via pocket-card guidance, and with significantly more confidence (Leung et al. 2003). On items scaled 1–6 (presumably 6 = agreeing more), the pooled effects of using the PDA InfoRetriever (vs a pocket card) were mean item-score improvements of 0.48 (95% confidence interval 0.22–0.74) for frequency of looking up evidence and 0.19 (0.04–0.33) for confidence in clinical decision-making. Leung et al.’s (2003) sample-size calculation treated an (unspecified) effect size of 0.25 as medium. While the evidence relied on self-reported measures, strengths included the complex design (randomizing to three groups, then randomizing to three 8-week clinical placements in a different order, and including a washout period), intention-to-treat analysis, and real-time, point-of-care use of evidence.
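Leung et al.’s pooled effects are given as a mean with a 95% confidence interval. Assuming, as is usual, a symmetric normal-approximation interval (estimate ± 1.96 × SE), the standard error and the implied point estimate can be recovered from the interval alone. The Python sketch below does this under that assumption; the function name `midpoint_and_se` is hypothetical. The small mismatch for the second item (midpoint 0.185 vs reported 0.19) is consistent with rounding.

```python
Z_95 = 1.96  # two-sided 95% critical value of the standard normal distribution


def midpoint_and_se(lower, upper, z=Z_95):
    """Recover point estimate and standard error from a symmetric CI.

    Assumes the interval was built as estimate +/- z * SE.
    """
    midpoint = (lower + upper) / 2
    standard_error = (upper - lower) / (2 * z)
    return midpoint, standard_error


# Intervals reported by Leung et al. (2003) for the PDA vs pocket-card effects.
reported_intervals = {
    "frequency of looking up evidence": (0.22, 0.74),
    "confidence in decision-making": (0.04, 0.33),
}
for label, (low, high) in reported_intervals.items():
    mid, se = midpoint_and_se(low, high)
    print(f"{label}: midpoint {mid:.3f}, SE {se:.3f}")
```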

In Tews et al.’s (2011) pilot randomized controlled trial, 22 Year 4 medical students were randomized to receive a “just-in-time” instructional video by iPod Touch or not. Clinical case presentations improved after the first viewing (Cohen’s effect size = 0.65, p = 0.032), and the video-watchers perceived increased confidence.
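Tews et al. report their effect as a Cohen’s effect size of 0.65. Which variant they used is not stated here; a common one, Cohen’s d with a pooled standard deviation, is sketched below in Python as an assumption. The function name `cohens_d` and the toy scores are hypothetical and only show the arithmetic.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    var_a, var_b = variance(group_a), variance(group_b)  # sample variances
    pooled_sd = sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (mean(group_a) - mean(group_b)) / pooled_sd


# Hypothetical presentation scores, not data from Tews et al. (2011).
video_group = [2, 3, 4]
no_video_group = [1, 2, 3]
print(cohens_d(video_group, no_video_group))  # 1.0
```

On Cohen’s conventional benchmarks (0.2 small, 0.5 medium, 0.8 large), a value of 0.65 lies between medium and large.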

In a pilot study randomizing nursing students to using a PDA or not on their 8-week clinical placement, Goldsworthy et al. (2006) found that self-efficacy improved significantly in each of two PDA groups compared with two non-PDA groups. Among students with pre- and postplacement scores, the 13 PDA students’ scores increased by a mean of 3.769 (on a scale from 10 to 40), whereas the 12 non-PDA students’ scores increased by only 0.667 (p = 0.002). A strength was that each of two staff members led one intervention group and one control group, thus taking their own effect into account. Complete data were available for only 25/36 participants, though. It is also unclear whether the 10-item General Self-Efficacy instrument was set against the specific context of students administering patient medication (or assumed to relate to all nursing activities during the placement). Goldsworthy et al. (2006) attributed improved self-efficacy to the device easing the potentially stressful transition to clinical settings.
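To put Goldsworthy et al.’s reported mean changes on the scale they used, the Python sketch below takes the difference in mean change between groups and expresses it as a share of the instrument’s 30-point range (10 to 40). This is a descriptive gap in unadjusted means, not a re-analysis; the reported p-value comes from the authors’ own test, and the variable names are ours.

```python
# Figures reported for Goldsworthy et al. (2006).
pda_mean_change, pda_n = 3.769, 13          # PDA students with complete data
control_mean_change, control_n = 0.667, 12  # non-PDA students with complete data
scale_min, scale_max = 10, 40               # General Self-Efficacy score range
enrolled = 36

gap = pda_mean_change - control_mean_change       # difference in mean change
gap_share = gap / (scale_max - scale_min)         # as a share of the score range
complete_share = (pda_n + control_n) / enrolled   # participants with complete data

print(f"gap {gap:.3f} points, {gap_share:.1%} of range; "
      f"{complete_share:.1%} had complete data")
```

The gap of about 3.1 points is roughly a tenth of the possible range, obtained from about 69% of those enrolled.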

Farrell and Rose’s (2008) intervention study of Year 2 nursing students randomized their 3-week clinical placements to either using a PDA (to access an online pharmacological database) or not, but the students were then allocated to those placements as usual. The students reported accessing the database up to 15 times per shift. Although Farrell and Rose (2008) reported that pharmacological knowledge increased in PDA users, the very marginal effect was not significant. The scores remained similar and did not justify the researchers’ conclusion: “Students using the PDAs demonstrated a moderate increase in their mean score, which was double the increase in the control group” (p. 13). The “increase” of 1.33 on a preplacement mean score of 16.4/35 in the PDA group was reported as “twice that” of the controls, whose preplacement mean score was 15.7 (their post-test score was unreported).
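The arithmetic behind this criticism can be made explicit. The Python sketch below assumes that “twice that” is meant literally, so the control group’s unreported gain is taken as half of the PDA group’s 1.33; this implied value is an inference, not a figure Farrell and Rose report. It expresses the gains as shares of the 35-point maximum and sets the implied between-group gap beside the baseline difference between groups, which turns out to be slightly larger.

```python
max_score = 35
pda_pre_mean, pda_gain = 16.4, 1.33  # reported PDA-group figures
control_pre_mean = 15.7              # reported control preplacement mean

# Assumption: "twice that" taken literally, so the control gain is half the PDA gain.
control_gain_implied = pda_gain / 2

between_group_gap = pda_gain - control_gain_implied
baseline_gap = pda_pre_mean - control_pre_mean

print(f"PDA gain: {pda_gain / max_score:.1%} of the maximum score")
print(f"implied gap in gains: {between_group_gap:.3f} points "
      f"({between_group_gap / max_score:.1%})")
print(f"baseline difference between groups: {baseline_gap:.2f} points")
```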

Evidence from doctoral theses

All three doctoral theses related to nursing students:

Cornelius (2005) concluded from semistructured interviews, focus groups, and field observations of nursing students and staff that a PDA-based tool supported the development of clinical competency and clinical decision-making, but that there was a tension between the device being a barrier to patient interaction and its being interesting to some patients. Fisher and Koren (2007) similarly reported a tension between nursing students’ concerns about difficulties using the device in front of patients and ward staff being “impressed to see us pull out the PDAs and use them [wishing that they had them] when they were in school” (pp. 5–6). (Wu and Lai (2009) also reported such “envy.”) Those staff encouraged such use and benefited from nursing students resolving conflicts about when to withhold medication.

Davydov (2010) reported a significant difference in pre- to postclinical placement anxiety between nursing students whose programme required the use of a PDA (n = 29) and students in programmes at two other nursing schools not requiring PDA use (n = 45). The effect sizes were minimal, though:

- State anxiety (1 = not at all, 4 = very much so): mean state anxiety decreased slightly in the programme requiring PDA use (pre- to post-test difference in means = −0.02), whereas PDA nonusers in the two control programmes increased in state anxiety (+0.27); p = 0.02.

- Trait anxiety (1 = almost never, 4 = almost always): mean trait anxiety increased slightly in both groups, but significantly less in the PDA-based clinical placements (pre- to post-test difference in means = +0.06 vs +0.37, p = 0.007).
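The two points above can be compared directly by taking, for each measure, how much more the mean rose in the control programmes than in the PDA programme. The Python sketch below does this and expresses each gap as a share of the 3-point span of the 1–4 scales. These are raw differences in means, not standardized effect sizes (the standard deviations are not given here), and the names are ours.

```python
# Mean pre- to post-test changes reported by Davydov (2010); both scales run 1-4.
mean_changes = {
    "state anxiety": {"pda": -0.02, "control": 0.27},
    "trait anxiety": {"pda": 0.06, "control": 0.37},
}
SCALE_SPAN = 4 - 1


def control_minus_pda(changes):
    """How much more each mean rose without PDAs than with them."""
    return {measure: c["control"] - c["pda"] for measure, c in changes.items()}


for measure, gap in control_minus_pda(mean_changes).items():
    print(f"{measure}: {gap:.2f} points ({gap / SCALE_SPAN:.0%} of the scale span)")
```

Both gaps are about 0.3 points, roughly a tenth of each scale’s span.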

Theroux’s (2013) case study of four nursing students found that the mobile device supported caring relationships with patients more than it disrupted them. Support for caring came from time saved, improved confidence, safer medication administration, and better decision-making (from timely access to accurate information).

Evidence re best policy for using mobile devices

Better policy was recommended. Deutsch et al.’s (2016) semistructured interviews with representatives of seven of a purposive sample of nine United States medical schools, reportedly with iPad-based programmes extending into the clinical years, generated eight best practices for implementing such programmes:

Plan well.

Define focused goals.

Promote a tablet “culture” (including clerkship directors).

Have an implementation team (including clerkship directors) and a prominent leader for students and staff to approach with ideas.

Train students in technical and digital professionalism aspects, including the law and maintaining doctor-patient relationships while using the device (according to the literature, by strategic use of awareness, alignment, assessment, and accountability), and manage student expectations.

Use student mentors and keep asking students what is needed from the device on clinical placement.

Accept variable use, as some will not integrate the device into everyday work.

Promote student and staff innovation about how to use the device.

Mather and Cummings’ (2015) study of clinical educators of nursing students highlighted that professionalism concerns will continue to undermine use of mobile devices until policies and standards guide their use in healthcare settings. Such guidance would avoid the “m-learning paradox,” whereby nurses are unable to access mobile devices in the workplace despite their potential to improve patient care and outcomes.

In Khraim et al.’s (2015) pilot mixed methods study of 13 nursing students using smartphones on clinical placement, six named unfamiliarity with institutional policy as their main concern; such policy would need to address their main barriers to use (“unprofessional” appearance, not “user-friendly,” and insufficient time to use the device). As a follow-up to their pilot study of nursing students, Wittmann-Price et al. (2012) wrote an honour code for students to sign, e.g. “Agree that the [mobile electronic device] will not be used in any patient room” (p. 645). Setting explicit policy was a recurring recommendation.

Discussion

This BEME effectiveness-review of mostly justification and clarification research found an idiosyncratic evidence-base of modest robustness about mobile devices providing educational support for health professions students on clinical placements. This evidence-base suggested that mobile devices should be a powerful tool for improving the quality of student learning and benefiting other aspects of student “caring contribution” in the clinical setting. In particular, this review begins to illuminate aspects of the devices, curriculum approaches, and potential mechanisms of action that clinical educators might consider. The approach to planning, regulating, and researching such use must, however, be more creative, relevant, and rigorous.

Main messages

The broad types of mobile devices changed considerably over the search period, but educational practice in clinical placements has been slow to exploit their potential impact on student learning. The evidence-base has been slow to develop and is patchy in direction, relevance, and rigour. Nevertheless, mobile devices have particularly supported student: assessment; communication; clinical decision-making; logbook/notetaking; and access to information, the last featuring in about two-thirds of the primary studies here.

The evidence focused on supporting diverse aspects of better quality learning such as via:

just-in-time access for learning new material or checking information, thus potentially facilitating safer, evidence-based practice via more effective, efficient, and appropriate (relevant) learning

logging clinical activity, workplace-based assessment, or tracking competencies

self-regulated learning or other specific meta-aspects of learning and transition in professional identity development, including assisting with deliberative and reflective practice, improving self-efficacy, and reducing anxiety

benefiting others, e.g. supporting peer education and patient education, prompting health care staff just in time towards best practice, and potentially improving the quality of health care

unusual examples: promoting learning-points via social media to students’ own devices and monitoring students’ sleep hygiene for compliance with clinical workplace policies

Beyond the usual focus on technical barriers relating to the device (including its connectivity and compliance with software and information systems), the conditions affecting the impact of the mobile devices centred on three main aspects of informal and hidden curricula:

concerns about: actual and perceived disapproval of peers, clinicians/educators, and patients; confidentiality and privacy; and security aspects

distraction by social connectivity (or other personal use) and the busy clinical setting

mixed messages about policy.

A further very important challenge, infection control, received much less attention than it might merit.

While this review extracted some useful evidence, there were caveats on the search-process and considerable caveats on the evidence-base.

Strengths and limitations of search and synthesis

The search and synthesis involved independent double-assessment and discussion of discrepancies against clear criteria to: include/exclude abstracts, include/exclude full articles, and code Kirkpatrick (K)-level, Maxwell dimensions, grade of strength (S), and level of evidence (L). Strong points also included double-checking the extracts that represented main methods and findings of each paper. Using those verbatim extracts in the data-appendix then provided an audit-trail to move efficiently between electronic versions of the papers when comparing or confirming key features. Visual thematic coding of full articles within NVivo was likewise a strength. The use of Kirkpatrick levels did not appear to be counterproductive with this evidence-base (Yardley and Dornan 2012). Using Maxwell dimensions of quality gave an extra (health services research) lens for conceptualizing quality improvement of student learning in the clinical setting, broadening beyond effectiveness.

Search and synthesis were limited, however, by major changes in technology (devices and applications) and terminology (e.g. m-learning came and went) over the search-period and the hotchpotch of “knowledge” generated across quantitative, qualitative, and mixed methods research combined with very disparate research questions and limitations.

Postscript: Wallace et al. (2012) was excluded, arguably harshly, because its abstract did not specifically suggest use on clinical placement. When the full paper emerged separately after this review, it was indeed hard to pin its qualitative or quantitative findings to medical students (rather than residents) on clinical placement (rather than elsewhere). This mixed methods study did, though, present rich context about how participants used mobile devices in medical education and in practice, with much potential to enhance learning and patient care. Complementing this review, emergent concerns were about: surface learning; finding proper resources; distraction; unsuitable use; access and privacy; and the need for clear policy.

Robustness of evidence-base

The main limitation was therefore that, despite completing a scoping search, the evidence-base was rather large, disjointed (e.g. heterogeneity of devices and their contribution to educational support), and difficult to filter and synthesize into meaningful messages for specific settings. Making sense of the main messages required expertise across quantitative, qualitative, and mixed methods research and persistence in extracting the relevant information despite some confusing or suboptimal write-ups. The conventional classifications for coding robustness (S1–S5; L1–L6) were limited in their contribution. Judgements made against these were rather context-dependent for an evidence-base of such mixed fortunes. To be included, papers had to report empirical studies, i.e. L4 or above. The papers’ main messages were not necessarily the focus of this review and many papers had a mixed level of robustness, e.g. an S3 message might be extracted from an overall S2 paper.

Quantitative research was sometimes undermined by small samples and incomplete, misleading, or no statistical analysis or interpretation. Qualitative research was sometimes undermined by unclear sampling, epistemology, and analysis. Mixed methods research was sometimes undermined by not being labelled as such, by giving no indication of how “mixing” was implemented, and by tokenistic treatment of the qualitative or quantitative component. Many papers did not report key methods and findings systematically (e.g. date of data collection, details of setting, sampling and response, analysis). Aims and data sometimes differed between abstracts and papers.

Even though K2–K4 articles were selected, there was often still too much focus on K1 “reaction.” The Maxwell dimensions suggested that research agendas undervalued the efficiency and equity of educational support from mobile devices and overemphasized acceptability and the technical-barrier aspect of accessibility, without sufficiently considering appropriateness (relevance) to learning needs (and service contribution) or a broader interpretation of effectiveness.

Despite such limitations, the review did, however, illuminate clearly and systematically the potential directions for developing this topic.

Recommendations

For practice

An explicit policy should indicate how health professions students should use mobile devices in the clinical setting, and positive role-modelling by staff and educators is required. The policy must clarify infection control, confidentiality, and security aspects, and be clear on how best to use the devices for professional identity development and for maintaining therapeutic, work, and educational relationships.

Students may need time and training in best use of the device, associated applications, technology transitions, security, and confidentiality.

For research

Priorities for further research on this topic must address much more clearly the “So what?” for health professions students on everyday clinical placements, e.g.:

how and why the devices work

the best software platforms and the fidelity required

cost implications of supplying and maintaining the device vs using students’ own devices

how to tackle informal and hidden curricula of perceived and actual patient, staff, and peer attitudes and assumptions and system-level constraints

how best to use the devices to support learning transitions, with optimal confidentiality and security, widening participation to fulfill educational potential

how best to use the devices to support student contribution to improving quality of health care

Study design, implementation, and write-ups must be more systematic to improve generalizability or transferability of findings, with better:

alignment of research question and research approach/methods

sampling, analysis, and interpretation

completeness of reporting of key features of curriculum setting, study design, and use of device

BEME effectiveness-reviews should continue to make best use of the evidence available, extending beyond just “what works?”

Conclusions

The current evidence-base is idiosyncratic and of modest robustness; indeed, it is like “the curate’s egg” (du Maurier cartoon 1895). This is partly due to rapid changes in technology but also to disjointed approaches to the research agenda and to the generalizability or transferability of the evidence generated. Despite this, it was important to salvage main messages rather than dismiss so many participants’ and researchers’ contributions. The aim is not to promote a particular study design but to promote better-quality evidence (Eva 2009) and to maximize opportunities to synthesize better understanding from it (Gordon et al. 2014). To widen reviews beyond the justification research of whether mobile devices work (Gordon et al. 2013; Gordon et al. 2014) is also to “recognise complexity and make theory explicit” about the content of that understanding (Kilminster 2012, p. 1027).

More robust research agendas are now required to explore priorities for making a difference to students, patients, and populations, focusing on outcomes that move beyond satisfaction with the technology and discussion of technical barriers. There is much potential for mobile devices as educational support for health professions students in the clinical setting if ingrained assumptions and system-level conflicts are challenged, but the underpinning research must be more creative, relevant, and rigorous. As Masters et al. (2016) noted, educational practices should develop to make best use of mobile devices, and the accompanying research must focus on how they support learning and patient care. Explicit policy is imperative to tackle informal and hidden curricula (Hafferty 1998; Ellaway 2014) about how students should use mobile devices in this way.

Supplemental Material

Disclosure statement

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of this article.

Additional information

Notes on contributors

Gillian Maudsley

Gillian Maudsley, MBChB, FRCPath, MPH(dist), FFPH, MEd(dist), MD, MA(dist) Learning & Teaching in Higher Education, SFHEA, Clinical Senior Lecturer in Public Health Medicine, Department of Public Health & Policy.

David Taylor

David Taylor, BSc(Hons), MEd, MA(dist) Learning & Teaching in Higher Education, PhD, EdD, PFHEA, FAcadMEd, FRSB, FAMEE, Reader in Medical Education, School of Medicine and Professor of Medical Education & Physiology, College of Medicine.

Omnia Allam

Omnia Allam, MBBS, MSc, MSc(dist), PhD, Senior Lecturer in Medical Education, School of Medicine.

Jayne Garner

Jayne Garner, BSc(Hons), MSc, PhD, Lecturer and Evaluation Officer, School of Medicine.

Tudor Calinici

Tudor Calinici, BASc, MSc, PhD, Lecturer in Medical Informatics and Biostatistics, Faculty of Medicine.

Ken Linkman

Ken Linkman, Liaison Librarian, Harold Cohen Library.

References

- Ajzen I. 1991. The theory of planned behavior. Organ Behav Hum Decis Proc. 50:179–211.

- Alegría DA, Boscardin C, Poncelet A, Mayfield C, Wamsley M. 2014. Using tablets to support self-regulated learning in a longitudinal integrated clerkship. Med Educ Online. 19:23638.

- Aungst TD, Belliveau P. 2015. Leveraging mobile smart devices to improve interprofessional communications in inpatient practice setting: a literature review. J Interprof Care. 29:570–578.

- Autry AM, Simpson DE, Bragg DSA, Meurer LN, Barnabei VM, Green SS, Bertling C, Fisher B. 2002. Personal digital assistant for “real time” assessment of women's health in the clinical years. Am J Obstet Gynecol. 187(3 Suppl):S19–S21.

- Bandura A. 1977. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 84:191–215.

- Bearman M, Dawson P. 2013. Qualitative synthesis and systematic review in health professions education. Med Educ. 47:252–260.

- Bharamgoudar R, Sonsale A. 2017. Twelve tips for medical students to make the best use of ward-based learning. Med Teach. 39:1119–1122.

- Bogossian FE, Kellett SE, Mason B. 2009. The use of tablet PCs to access an electronic portfolio in the clinical setting: a pilot study using undergraduate nursing students. Nurse Educ Today. 29:246–253.

- Brown J, McCrorie P. 2015. The iPad: tablet technology to support nursing and midwifery student learning: an evaluation in practice. Comput Inform Nurs. 33:93–98.

- Cho S, Lee E. 2015. Development of a brief instrument to measure smartphone addiction among nursing students. Comput Inform Nurs. 33:216–224.

- Cho S, Lee E. 2016. Distraction by smartphone use during clinical practice and opinions about smartphone restriction policies: a cross-sectional descriptive study of nursing students. Nurse Educ Today. 40:128–133.

- Cibulka NJ, Crane-Wider L. 2011. Introducing personal digital assistants to enhance nursing education in undergraduate and graduate nursing programs. J Nurs Educ. 50:115–118.

- College of Family Physicians of Canada, Canadian Medical Association, Royal College of Physicians and Surgeons of Canada. 2005. 2004 National Physician Survey (NPS).

- Colthart I, Bagnall G, Evans A, Allbutt H, Haig A, Illing J, McKinstry B. 2008. The effectiveness of self-assessment on the identification of learner needs, learner activity, and impact on clinical practice: BEME Guide no. 10. Med Teach. 30:124–145.

- Cook DA, Bordage G, Schmidt HG. 2008. Description, justification and clarification: a framework for classifying the purposes of research in medical education. Med Educ. 42:128–133.

- Cornelius FH. 2005. Measuring anxiety in undergraduate nursing students who use personal digital assistants (PDAs) during clinical rotations [doctor of philosophy thesis]. Philadelphia: Drexel University. p. 155.

- Creswell JW. 2003. Research design: Qualitative, quantitative, and mixed methods approaches. 2nd ed. London: Sage Publications.

- Davies BS, Rafique J, Vincent TR, Fairclough J, Packer MH, Vincent R, Haq I. 2012. Mobile medical education (MoMEd) – how mobile information resources contribute to learning for undergraduate clinical students – a mixed methods study. BMC Med Educ. 12:1.