Abstract

Students have to develop a wide variety of clinical skills, from cannulation to advanced life support, prior to entering clinical practice. An important challenge for health professions educators is implementing strategies that effectively support students in acquiring different types of clinical skills while minimizing skill decay over time. Cognitive science provides a unified approach that can inform how to maximize clinical skill acquisition and minimize skill decay. This Guide discusses the nature of expertise and mastery development, the key insights from cognitive science for clinical skill development and skill retention, and how these insights can be practically applied and integrated with current approaches to clinical skills teaching.

Introduction

Supporting students in the effective acquisition of clinical skills is becoming more of a challenge for health professions education (HPE) educators. The challenge is growing because of the increasing number of clinical skills that must be learned by the point of graduation, and because opportunities for learning clinical skills in simulation and clinical workplace settings are increasingly limited. Students need to acquire many clinical skills, depending on the specific undergraduate syllabus, and these range from simple procedural skills, such as cannulation, through to the more complex diagnostic and management reasoning skills required in advanced life support (Faustinella and Jacobs 2018). In addition, educators are becoming increasingly aware of the importance of clinical skill decay over time (Cecilio-Fernandes et al. 2018; Sall et al. 2021).

Practice points

Applying evidence-based strategies from cognitive science to current approaches for clinical skills training can enhance both the acquisition and the retention of clinical skills, minimizing skill decay.

Understanding how learners integrate declarative and procedural knowledge can help educators to decide on the most appropriate cognitive science strategy.

Educators can integrate cognitive science strategies with their current approaches for teaching clinical skills.

The aim of this Guide is to provide a cognitive science perspective on teaching clinical skills in undergraduate, postgraduate, and continuing medical education. This perspective can provide useful evidence-based insights into how teaching can ensure more effective acquisition of clinical skills and minimize skill decay. The Guide is divided into two sections. First, it discusses the theoretical foundations, including the nature of expertise and mastery development and the cognitive science basis of clinical skills development, with a focus on effective acquisition and reduced skill decay. Second, we discuss the practical implications and present a practical illustration of how the theoretical foundations can be integrated into current practices.

Theoretical foundations

The cognitive science basis of clinical skills acquisition

One of the first challenges facing HPE educators is the wide variety of skills that come under the definition of ‘clinical skill’. These range from physical examination skills and practical skills through to communication skills, treatment skills, and clinical reasoning or diagnostic decision-making skills. The tasks involved differ substantially: some are more technical or procedural in nature, whereas others are more intellectual or cognitive. There is also little consensus about which domains to include within clinical skills, and there are therefore a number of different perspectives on the optimal teaching and learning strategies for developing them (Michels, Evans and Blok 2012). From a cognitive science perspective, however, the underlying process of all clinical skills is similar and requires the effective integration of declarative and procedural knowledge.

Declarative knowledge refers to knowledge of facts or events (‘knowing what’) (Anderson 1982). In the case of clinical skills, declarative knowledge includes relevant factual or conceptual knowledge in the biomedical sciences and technical knowledge about how to undertake the skill in practice. For example, declarative knowledge for measuring blood pressure includes knowing the factual difference between the terms ‘systolic’ and ‘diastolic’ and the conceptual difference between them in terms of the contraction and relaxation phases of the cardiac cycle. Likewise, factual knowledge about the equipment to be used is necessary, alongside conceptual knowledge about how best to position the patient and the machine when taking blood pressure.

Procedural knowledge (‘knowing how’) develops as actions based on declarative knowledge become automatized through repeated practice of the skill (Anderson 1982). For example, procedural knowledge enables a learner to take a patient’s blood pressure while explaining the procedure to them. As knowledge and skill develop together, automatization leads to faster performance in practice. This concept is essential for understanding skill decay over time. Whereas procedural knowledge is usually maintained over time, declarative knowledge can decay and may be forgotten without repeated use of the skill (Anderson 1982; Anderson et al. 2004). Even when a skill has been mastered, professionals may still have some difficulty demonstrating optimal performance after a period of disuse, and this, again, is more likely to be related to the decay of declarative knowledge.

Effective skill acquisition, including the acquisition of clinical skills, should begin with constructing and maintaining declarative knowledge through specific teacher-led instruction, which may or may not begin with learners observing the teacher demonstrate the skill. Practice over time increases skill acquisition by transforming declarative knowledge into procedural knowledge through a process known as proceduralization (Taatgen and Lee 2003). Proceduralization is effective because learners’ mental effort reduces over time as they automatize performance of the skill, which also reduces the cognitive demand they experience and the number of errors they make (Anderson 1982).

Applying cognitive science to mastery learning and deliberate practice

Mastery learning and deliberate practice are currently widely used in clinical skills teaching to develop automatization through proceduralization. However, their effectiveness for skill acquisition, and for skill retention over time, can be enhanced by integrating evidence-based strategies from cognitive science into the design of their educational activities. Although many of these strategies have traditionally been associated with the development or construction of knowledge, we consider that they can also be applied to the teaching of clinical skills, because skills development requires different types of knowledge.

Mastery learning

Mastery learning is an individualized approach to acquiring a skill that depends on structured or scaffolded instruction (McGaghie 2015). More specifically, mastery learning requires individuals to achieve a defined level of proficiency before proceeding to the next instructional objective. The approach usually begins with baseline or diagnostic testing of the individual and the setting of clearly defined learning objectives. This is followed by a sequence of units of activity of increasing difficulty, designed to meet the learning objectives. There is no formal process or procedure to follow for constructing the learning objectives or for designing and developing the units of activity. Each unit of activity requires engagement by the learner and a focus on reaching the pre-specified learning objective. Each unit has a minimum passing standard that individuals need to achieve before moving on, and an assessment component to gauge the extent to which the individual has achieved it. Once individuals demonstrate success in one unit of activity, they advance to the next, and they continue to progress through the units until the mastery standard is reached. Therefore, in mastery learning, competence or achievement is evaluated entirely by the individual’s attained performance on a specified criterion test. In terms of evidence for its effectiveness as a training method, a systematic review and meta-analysis of mastery learning for health professionals using technology-enhanced simulation confirmed that the approach was superior to non-mastery instruction but took more time (Cook et al. 2013).
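The progression rule described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than a published algorithm: the unit names, the 0 to 100 score scale, the minimum passing standards, the simulated learner, and the cap on attempts per unit are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Unit:
    """One unit of activity with its own minimum passing standard (MPS)."""
    name: str
    minimum_passing_standard: float  # hypothetical score on a 0-100 scale


def mastery_progression(
    units: List[Unit],
    attempt: Callable[[Unit, int], float],
    max_attempts_per_unit: int = 10,
) -> List[Tuple[str, int, float, bool]]:
    """Move a learner through units of increasing difficulty.

    The learner repeats a unit (practice plus assessment) until the score
    meets that unit's MPS, and only then advances to the next unit.
    Returns a log of (unit name, attempt number, score, passed) entries.
    """
    log = []
    for unit in units:
        for n in range(1, max_attempts_per_unit + 1):
            score = attempt(unit, n)
            passed = score >= unit.minimum_passing_standard
            log.append((unit.name, n, score, passed))
            if passed:
                break  # MPS met: advance to the next unit
        else:
            # Attempt cap reached without meeting the MPS: stop here so the
            # learner can be offered further support before continuing.
            return log
    return log


# Hypothetical cannulation units, ordered by increasing difficulty.
units = [
    Unit("hand hygiene and equipment", 80),
    Unit("vein selection", 85),
    Unit("cannula insertion", 90),
]


def simulated_learner(unit: Unit, n: int) -> float:
    """Hypothetical learner whose score rises by 15 points per attempt."""
    return 50 + 15 * n


for entry in mastery_progression(units, simulated_learner):
    print(entry)
```

Here the simulated learner needs two attempts on the first unit and three on each of the others before advancing. The point of the sketch is that progression is gated by the criterion for each unit, not by elapsed time.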

However, research has identified decay of clinical skills even when learners practice until mastery (Higgins et al. 2021a, 2021b). For example, learners who performed central venous catheter insertion were observed to demonstrate skill decay after 6 and 12 months (Barsuk et al. 2010), and similar observations have been made for basic life support skills after 6 months (Wik et al. 2002; Srivilaithon et al. 2020). These findings highlight the importance of knowledge not only for conceptual clinical skills, such as clinical reasoning, but also for technical ones, such as cannulation and blood pressure measurement. The findings also demonstrate the importance of basing decisions about teaching time and timetabling on theory and evidence, rather than on room availability and scheduling. From a theory and evidence perspective, the decay observed is likely due to a lack of time and training to support learners in achieving proceduralization, even though they had been observed to demonstrate competence in performing the task.

From a cognitive science perspective, mastery is understood as the state in which individuals possess all the necessary knowledge in procedural form (as opposed to declarative form), and knowledge in procedural form exists as a connection between declarative knowledge and motor actions. Viewed from this perspective, skill decay is a phenomenon whereby declarative knowledge stored in memory is forgotten and, as a consequence, procedural knowledge is also lost. Achieving mastery therefore also involves preventing skill decay, or at least making it more difficult for skill decay to occur, by strengthening the connections between declarative and procedural knowledge.

At first glance, this may appear complicated or unnecessary, since clinical skills are not all the same; they range from simple (e.g. blood pressure measurement) through to complicated (e.g. central line cannulation) and complex (e.g. advanced life support). It follows that the attention given to developing and consolidating declarative knowledge should be proportionate to, and vary according to, the complexity of the skill. A simple skill, such as measuring blood pressure, requires less declarative and procedural knowledge than a complex skill, such as advanced life support, since there are fewer tasks to complete and each task is generally fairly consistent across the different situations and circumstances individuals may encounter in clinical practice. In advanced life support, by contrast, there are multiple tasks involving multiple skills, and each task varies significantly across situations and circumstances in clinical practice. Advanced life support therefore requires far more declarative and procedural knowledge than blood pressure measurement, because of the quality and quantity of skill that individuals must demonstrate on the task. Although the amount of declarative and procedural knowledge may differ across clinical skills, and across levels of expertise, secure skills development depends on effective integration of declarative and procedural knowledge in memory.

Deliberate practice

An alternative individualized method of instruction, described by Ericsson, is the expert-performance approach (Ericsson and Charness 1994). Ericsson proposed that it is first necessary to identify reproducibly superior performance in the real world, for example, ‘what good cannulation looks like’ or ‘what good resuscitation looks like’ when undertaken by an expert or someone who has mastered the skill; this is often done using cognitive task analysis (Clark et al. 2008). The challenge for educators is then to capture and reproduce this performance, ideally with standardized tasks that can be examined under controlled conditions, such as in a simulation laboratory. The purpose of this process is to identify the key actions that lead to better outcomes on tasks, as well as those that do not, so that these can be reflected back to individuals. With this information, individuals can begin to work on the cognitive processes that mediated the generation or selection of those actions, so that in the future they can select superior actions and improve their overall performance on the task. When this method is applied as a training approach, supervised and guided by a teacher, it is called ‘deliberate practice’.

Deliberate practice refers to individualized training activities specially designed by a coach or teacher to improve specific aspects of an individual’s performance through repetition and successive refinement (Ericsson and Lehmann 1996). To receive maximal benefit from teacher instruction and feedback, however, individuals have to monitor their training with full concentration. Because practicing with full concentration is effortful, the duration of training in any given session needs to be carefully managed. In addition, deliberate practice approaches are characterized by individuals receiving or self-generating immediate feedback. External feedback is important for identifying errors or mistakes, with follow-up advice about ways to improve. Likewise, self-generated feedback is important for evaluating the quality of developing internal representations, which are critical for performing independently as an expert. The final component of deliberate practice is the availability of training tasks that offer individuals opportunities for repetition, with practice structured so that individuals make gradual improvements with each attempt. All these aspects must be present before the approach can be considered ‘deliberate practice’, at least of the type based on the principles of the expert-performance approach.

Research over the past two decades has demonstrated the importance of repeated practice combined with teacher-led coaching and guided practice for optimal skill acquisition (Ericsson et al. 1993; Ericsson 2004; McGaghie et al. 2011). Although studies have found that deliberate practice improves skill acquisition and retention, recent reviews have highlighted two important issues: (1) the lack of a comparison group and (2) skill decay after 2 weeks, becoming more pronounced after 90 days (Higgins et al. 2021a, 2021b). The reasons for these issues are multifactorial, including the way in which the studies were designed and the way in which the training was delivered in practice.

We consider that current instructional techniques, such as mastery learning and deliberate practice, can be informed by evidence-based strategies from cognitive science to maximize skill retention and reduce skill decay. Mastery learning has been shown to be effective; however, we recommend greater emphasis on the development of mental models. Likewise, deliberate practice is particularly popular among educators because of its emphasis on instruction and teacher-learner relationships, but we suggest it could be more effective if greater emphasis were also given to effective knowledge construction, with the aim of preventing the decay of knowledge and skills.

Cognitive science evidence-based strategies

Spaced practice

A frequently used approach to teaching a clinical skill is for educators to describe and demonstrate the skill at the start of a session, before offering learners the opportunity to practice and ask questions towards the end of it (Bullock et al. 2015). This approach, called massed practice, concentrates all of the teaching into a single occasion. For example, advanced life support skills are generally taught as part of a single training course consisting of consecutive sessions of massed practice over 2 or 3 days. The use of massed practice for advanced life support training appears to owe more to the convenience of timetabling all sessions in one go than to any evidence-based or theory-driven basis for course design. More generally, most, if not all, clinical skills training in HPE faces the logistical challenge of delivering teaching to cohorts of learners with a limited teaching faculty.

However, evidence from cognitive science demonstrates that spacing the teaching, or spreading training time over multiple sessions, is more effective for retention (Bjork and Allen 1970; Dempster 1989; Cepeda et al. 2006). For example, Cepeda et al. (2006) conducted a meta-analysis of 184 articles comparing practice in a single session (massed) with spaced practice, and found that learners in spaced conditions retained more than those in massed conditions. Whilst much of the research into the spacing effect comes from studies of tasks that rely heavily on declarative knowledge (for a review, see Cepeda et al. 2006; Carpenter et al. 2012), there is also evidence demonstrating the benefit of spaced practice for clinical skills (for a review, see Cecilio-Fernandes et al. 2018). The optimal interval between training sessions varies depending on the type of knowledge required for the specific clinical skill. For skills that are heavily based on declarative knowledge, such as advanced life support, the optimal interval between sessions ranges from 10% to 15% of the retention interval (Carpenter et al. 2012), where the retention interval is the time between the last training session and the final test. In real-life situations, in which learners need to be able to act whenever required, planning around a single retention interval may not be feasible. Nevertheless, educators need to be aware of the length of time over which learners retain skills, rather than assume that skills are maintained indefinitely after individual training sessions.
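The 10% to 15% rule of thumb reported above can be turned into a simple planning calculation. The sketch below is an illustration under stated assumptions, not a validated scheduling tool: the 180-day retention interval, the start date, the number of sessions, and the fixed gap between sessions are all hypothetical choices, and real timetables will also be shaped by the logistical constraints discussed earlier.

```python
from datetime import date, timedelta
from typing import List, Tuple


def spacing_gap_range(retention_interval_days: float,
                      low: float = 0.10,
                      high: float = 0.15) -> Tuple[float, float]:
    """Return the (shortest, longest) suggested gap in days between sessions,
    taken as 10% to 15% of the retention interval (the time between the
    last training session and the final test)."""
    if retention_interval_days <= 0:
        raise ValueError("retention interval must be positive")
    return retention_interval_days * low, retention_interval_days * high


def spaced_schedule(first_session: date, n_sessions: int,
                    gap_days: int) -> List[date]:
    """Dates of n_sessions training sessions separated by a fixed gap."""
    return [first_session + timedelta(days=i * gap_days)
            for i in range(n_sessions)]


# Hypothetical course: learners should still perform well 180 days
# after their last advanced life support training session.
retention_days = 180
shortest, longest = spacing_gap_range(retention_days)
print(f"suggested gap: {shortest:.0f} to {longest:.0f} days")

# Pick a gap inside that range (21 days here) instead of massing all
# sessions into 2 or 3 consecutive days.
sessions = spaced_schedule(date(2024, 1, 8), n_sessions=3, gap_days=21)
final_test = sessions[-1] + timedelta(days=retention_days)
print("sessions:", [d.isoformat() for d in sessions])
print("final test:", final_test.isoformat())
```

Note that the calculation runs backwards from the retention interval: deciding how long a skill must be retained comes first, and the session spacing follows from it.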

Retrieval practice

Another frequently used way of teaching a clinical skill is to teach it once and then assess the extent to which learners have developed the skill, either immediately afterward or later as part of a summative or high-stakes assessment. However, evidence from cognitive psychology suggests that repeated testing of clinical skills, rather than no testing or a single test, is more effective for long-term skill retention.

The testing effect (Roediger and Karpicke 2006a, 2006b; Karpicke and Roediger 2008) underpins an effective strategy for acquiring several clinical skills, including basic life support (Li et al. 2011), advanced life support (Kromann et al. 2009), radiograph interpretation (Boutis et al. 2019), and clinical reasoning (Raupach et al. 2016). There is also evidence that it improves the retention of skills that rely more on declarative knowledge (Larsen et al. 2009; Larsen, Butler, Lawson, et al. 2013; Larsen, Butler and Roediger 2013).

It is important to note that ‘testing’ in this sense does not refer to the formal assessment of performance, or to ‘formative assessment’ in the traditional sense, but to a teaching strategy designed to minimize skill decay. Furthermore, the testing effect as a deliberate teaching strategy is distinct from simply providing more opportunities for learners to practice a given clinical skill. Specifically, in a clinical skills context, the testing effect refers to learners being directly observed undertaking a task, with the opportunity to integrate their declarative and procedural knowledge, and then being given feedback on their performance or on strategies for improvement.

Educators can test learners using a variety of approaches, and testing should be used as a teaching strategy as soon as learners begin to progress in skill acquisition. However, implementing it across a curriculum requires time, space, and resources, both for testing and for giving feedback. Testing also needs to be organized so that educators make observations over time and can build on previous feedback. Where teaching strategies involve a single test, or testing too soon, learners may develop an illusion of knowledge, since performance in the moment is a poor predictor of learning over time. In these situations, the ‘test’ merely assesses the fluency of short-term retention, not learners’ long-term ability to recall knowledge and skills, to transfer them across contexts, or to prevent forgetting.

Another feature of the testing effect is the role of feedback and the way it is constructed. Feedback after a test is often given by teachers without triangulating observations with past performance, but in retrieval practice educators need to proactively attend to previous attempts on a task and ensure that feedback includes a more holistic evaluation of the progress made over successive attempts. Peer-to-peer testing may be an alternative to educator-led activities, since the essential component of the strategy is the opportunity for testing during practice (the process), not the performance in and of itself (the outcome). This may be difficult to reconcile with instructional designs such as deliberate practice and mastery learning, in which testing often happens at the end of a unit, which covers only a small part of the skill training.
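To make feedback that looks back over successive attempts more concrete, the sketch below keeps a history of observed attempts and summarizes the latest one against that history rather than in isolation. It is a hypothetical illustration: the checklist items, the proportion-correct score, and the summary fields are assumptions chosen for the example, not a validated assessment instrument.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Attempt:
    session: int
    checklist: Dict[str, bool]  # hypothetical item -> performed correctly?


@dataclass
class LearnerRecord:
    attempts: List[Attempt] = field(default_factory=list)

    def record(self, attempt: Attempt) -> None:
        self.attempts.append(attempt)

    @staticmethod
    def score(attempt: Attempt) -> float:
        """Proportion of checklist items performed correctly."""
        return sum(attempt.checklist.values()) / len(attempt.checklist)

    def feedback(self) -> dict:
        """Summarize the latest attempt relative to all earlier attempts."""
        if not self.attempts:
            raise ValueError("no attempts recorded")
        latest = self.attempts[-1]
        previous = self.attempts[-2] if len(self.attempts) > 1 else None
        scores = [self.score(a) for a in self.attempts]
        items = sorted(latest.checklist)
        return {
            "latest_score": scores[-1],
            "change_since_first": scores[-1] - scores[0],
            # Items never yet performed correctly on any attempt.
            "persistent_gaps": [
                i for i in items
                if not any(a.checklist.get(i, False) for a in self.attempts)
            ],
            # Items correct now but not on the previous attempt.
            "newly_secured": [
                i for i in items
                if previous is not None and latest.checklist[i]
                and not previous.checklist.get(i, False)
            ],
            # Items correct previously but missed now: a possible sign of decay.
            "lost_since_previous": [
                i for i in items
                if previous is not None and not latest.checklist[i]
                and previous.checklist.get(i, False)
            ],
        }


record = LearnerRecord()
record.record(Attempt(1, {"confirm identity": True,
                          "apply tourniquet": False,
                          "flush cannula": False}))
record.record(Attempt(2, {"confirm identity": False,
                          "apply tourniquet": True,
                          "flush cannula": False}))
print(record.feedback())
```

In this made-up example the overall score is unchanged between the two attempts, yet the history shows one item newly secured and one item lost, which is the kind of information a single end-of-unit score would hide.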

Overlearning

Overlearning refers to individuals engaging in repeated, further practice after competence is achieved; its benefits include less skill decay through increased consolidation of procedural knowledge (Shibata et al. 2017). Overlearning as a deliberate learning strategy should not be confused with ‘practice, practice, practice’, since individuals engaged in repeated practice may simply be repeating the wrong approach over and over again. Overlearning is also distinct from deliberate practice as an instructional approach, since overlearning is often applied for proceduralization in the final part of the training.

The overall effect of overlearning depends on the type of skill and on both the quality and the quantity of learners’ repeated practice (Driskell et al. 1992). To maximize its effect, overlearning should continue for twice the period of time necessary to achieve a competent level of performance (Driskell et al. 1992; Krueger 1929; Rohrer et al. 2005). The challenge in HPE is that the time individuals take to achieve a competent level of performance varies both across and within clinical skills. Likewise, judgments about competence are unreliable across raters and, as Ericsson (2004) states, the yardstick for measuring expert performance across different clinical domains is yet to be invented. Learners therefore also need to have developed skills in self-assessment and self-regulated learning in order to engage fully with this strategy. Whilst some learners are likely to engage in overlearning independently to some degree, learners who struggle with clinical skills are likely to do so less, and require support from educators who can explain its purpose.
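The arithmetic behind this recommendation, and the timetabling problem it creates, can be sketched as follows. This rests on an explicit assumption: the phrase ‘twice the period of time necessary to achieve a competent level of performance’ is read here as 100% overlearning, that is, extra practice equal to the time already taken to reach competence, so that total practice is twice the time to competence. The learner labels and minutes are hypothetical.

```python
from typing import Dict


def overlearning_plan(minutes_to_competence: float,
                      overlearning_ratio: float = 1.0) -> Dict[str, float]:
    """Extra practice after competence, scaled to each learner's own time.

    Assumption: ratio 1.0 (100% overlearning) means extra practice equal to
    the time taken to reach competence, so total practice is doubled.
    """
    if minutes_to_competence <= 0 or overlearning_ratio < 0:
        raise ValueError("time must be positive and ratio non-negative")
    extra = minutes_to_competence * overlearning_ratio
    return {"extra_practice": extra,
            "total_practice": minutes_to_competence + extra}


# Hypothetical minutes each learner needed to reach competence on one skill.
minutes_to_competence = {"learner A": 40, "learner B": 90}

for learner, minutes in minutes_to_competence.items():
    print(learner, overlearning_plan(minutes))
```

Because learner B needs more than twice as long as learner A to reach competence, a single fixed block of post-competence practice would either shortchange one learner or waste time for the other, which is the practical difficulty noted above.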

Overlearning generates opportunities for individuals to obtain more feedback about their level of performance when the practice is observed by an educator, and to address any developing gaps before they impair performance on a task. Overlearning also accelerates automaticity and reduces the concentrated effort that individuals have to invest in the technical aspects of their performance, thereby freeing up overall cognitive capacity for monitoring their performance (Driskell et al. 1992). This is particularly relevant when making sense of performance differences between novices at summative assessments, on both simple and complex clinical skills tasks. Whilst high-performing individuals are able to manage the challenge of performing clinical skills in front of others at assessment, low performers struggle for many reasons, one of which is that they have not achieved automaticity and their performance suffers as a consequence of the additional cognitive load. Therefore, from a cognitive science perspective, overlearning should be used as a strategy to consolidate procedural knowledge, rather than as a strategy to achieve competence. Furthermore, overlearning employed before competence is achieved may lead to mastery achieved not through comprehension but through mimicry, which is another manifestation of the illusion of knowledge. In this context, by repeating the same approach over and over again, and specifically mimicking the behaviors expected of individuals demonstrating competence in a given clinical skill, low performers in particular engage in mimicry in order to progress at assessment, with little concern about their lack of knowledge or understanding of the clinical skill itself.

Interleaving

Although much of the expertise espoused in HPE comes from demonstrating clinical skills across a range of different situational contexts, there is often little variability in the types of practice opportunities afforded to learners in traditional training programs. For example, when learning cannulation, the usual practice opportunities focus on refining the motor aspects of this skill alone, often with a limited range of practice materials such as a plastic manikin. A similar approach is usually taken for a range of clinical skills, from suturing and venepuncture through to basic and advanced life support. At first sight, this focus on a single skill at a time seems rational and logical, but evidence from the cognitive science literature consistently demonstrates the benefits of interleaving as a strategy for increasing knowledge and skills development over time. Specifically, variability in skills practice, whether in the components of the overall skill or in the different skills (no matter ‘how related or unrelated’ to each other) practiced in the same session, minimizes skill decay when compared with practice that offers limited or no variability. Furthermore, individuals who engage in traditional skills practice show greater rates of decay than individuals who engage with interleaving as a skills development strategy (Spruit et al. 2014).
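The difference between traditional blocked practice and interleaved practice is easiest to see by laying out the order of repetitions within a session. The sketch below is a hypothetical illustration: the three skills, the three repetitions of each, and the simple round-robin ordering are assumptions (randomized orders are another common way to interleave and are not shown). Both schedules contain exactly the same amount of practice; only the order differs.

```python
from typing import List, Sequence


def blocked_schedule(skills: Sequence[str], reps: int) -> List[str]:
    """All repetitions of one skill before moving on (AAA BBB CCC)."""
    return [skill for skill in skills for _ in range(reps)]


def interleaved_schedule(skills: Sequence[str], reps: int) -> List[str]:
    """The same repetitions, cycling through the skills (ABC ABC ABC)."""
    return [skill for _ in range(reps) for skill in skills]


def switches(schedule: Sequence[str]) -> int:
    """Number of times the learner must change to a different skill."""
    return sum(1 for a, b in zip(schedule, schedule[1:]) if a != b)


skills = ["cannulation", "venepuncture", "suturing"]
blocked = blocked_schedule(skills, reps=3)
interleaved = interleaved_schedule(skills, reps=3)

print("blocked:    ", blocked, "switches:", switches(blocked))
print("interleaved:", interleaved, "switches:", switches(interleaved))
```

The blocked schedule asks the learner to change skill only twice in the session, whereas the interleaved schedule changes skill after every repetition.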

The way in which variability is designed into practice opportunities is also important. Research into the acquisition and retention of minimally invasive surgery skills demonstrates that practice with randomly alternating images, rather than a single or static teaching image, enhances the learning process (Jordan et al. 2000). Individuals who practiced with greater variability of images in training sessions acquired basic surgical and psychomotor skills at a much faster rate than those trained with a limited number of images. This degree of variability is found in real-life settings, but simulation can also provide this type of experience. The importance of variability is also highlighted in the literature on motor skills (Kerr and Booth 1978; Savion-Lemieux and Penhune 2005; Corrêa et al. 2014), on learning to associate painters with their painting styles (Kornell and Bjork 2008), and on problem-solving (Rohrer and Taylor 2007).

Elaboration and generation

Elaboration refers to connecting new knowledge with knowledge previously stored in memory (Bartsch et al. 2018; Holland et al. 2011). In the case of a simple clinical skill such as venepuncture or cannulation, this could involve educators supporting learners to recall the anatomy of the arm vessels before demonstrating the technical aspects of obtaining a blood sample or placing the cannula in a patient’s hand. In the case of a complex clinical skill such as advanced life support, this could involve teachers supporting learners to recall knowledge about the cardiac waveform before teaching them about rhythm recognition in the context of cardiac defibrillation.

Elaboration is a conscious effort to activate previously stored knowledge, and it occurs mainly through declarative knowledge, which is the type of knowledge that can be consciously retrieved. Since clinical skills require both declarative and procedural knowledge, elaboration may also benefit the acquisition of clinical skills by activating related stored declarative knowledge and connecting new knowledge with it. Feedback and reflection may also be used to support elaboration, since both require learners to connect what they know with new content. Supporting learners to connect new material with their previous knowledge further improves retention (Bartsch et al. 2018; Stark et al. 2002). Bartsch et al. (2018) compared elaboration with non-elaboration for remembering six nouns in serial order, and learners in the elaboration group performed better on the retention test than those in the non-elaboration group.

Generation refers to enabling learners to identify their own solutions to a given problem (in this context, undertaking a clinical skill) before specific instruction or information is given by the educator (Jacoby 1978; Slamecka and Graf 1978; Richland et al. 2009). Whilst educators may think of this strategy simply as letting learners ‘have a go first’, generation also involves deliberately enabling learners to activate some form of prior knowledge, both declarative and procedural, before instruction or new information is given to them. This activation makes it easier for learners to associate new knowledge with previous knowledge.

Although generation as an instructional approach in clinical skills training has a limited evidence base, much of the existing pedagogy used in HPE has its roots in this concept. The central tenet of discovery learning, or discovery practice, is that learners should be supported to find the answer to a given problem by themselves. Even so, many reviews of this approach suggest a strong role for learners receiving direct instruction after the initial discovery phase has ended. In theory, the initial discovery phase should activate relevant declarative knowledge, benefiting the retention and retrieval of that knowledge by connecting new knowledge with previously stored knowledge. This could be used at the beginning of every clinical skills training session by encouraging learners to try the clinical skill first. For example, the first part of advanced life support training should be a simulated scenario in which learners need to attend to a patient requiring resuscitation. In that way, learners would activate all the related knowledge they possess.

Desirable difficulties

The amount of clinical skills knowledge that learners must acquire by the end of a program of study is considerable, and so is the risk that they engage in massed practice 'just to get through' assessments. Whilst overlearning is a helpful strategy in some circumstances, overlearning merely to pass an assessment, rather than to master the clinical skill, risks learners forgetting knowledge and their skills decaying over time. Although interleaving, retrieval practice, and spaced practice mitigate some of the effects of forgetting (Bjork and Bjork 2019; Soderstrom and Bjork 2015), desirable difficulties can also be effective for reminding learners not to confuse remembering with knowing or understanding. The illusion of knowledge is the phenomenon whereby learners believe that they know something or possess a skill simply because they have watched others demonstrate it, or because they have practiced it under close observation without demonstrating it again later or in other contexts.

Teachers can also use desirable difficulties both to gain learners' attention and, through simple questions, to reinforce the difference between remembering, knowing, and understanding. Simple instructions given to the general public, such as 'can you draw a bicycle?', have been used to illustrate the illusion of knowledge phenomenon. Having gained learners' attention, teachers can then set specific tasks that induce the experience of struggling but also enable learners to experience success afterward. Difficulty is therefore only desirable if it evokes both outcomes, struggle and success (Bjork and Bjork 2011), rather than struggle alone without any support, scaffolding, or success provided by the teacher.

Current evidence also suggests that both making errors and having difficulty retrieving declarative and procedural knowledge during a task are necessary for good long-term retention of knowledge and skills (Bjork and Bjork 2011; Steenhof, Woods and Mylopoulos 2020). The evidence for desirable difficulties exists against a backdrop of other evidence proposing that practice is best when a teacher is present to eliminate errors. That body of literature goes further, implying that learning through making errors is counterproductive and that, when mistakes do happen, they are simply due to inadequate instruction (Skinner 1958). Furthermore, learning without making errors, also known as errorless learning, can 'feel good' for learners because they see themselves progressing without mistakes, which only fuels the illusion of knowing (Glenberg et al. 1982). An unintended consequence of approaches that espouse errorless learning is that learners (and instructors) become confident that individuals can perform adequately without supervision; this may be evident in the short term but may not hold over time (for a review, see Higgins et al. 2021a, 2021b).

Feedback

Feedback is one of the most studied educational interventions, and the psychology and education literature contains much evidence for its role in enhancing learning (Wisniewski, Zierer and Hattie 2019). A number of reviews and guides on giving feedback are available (for example, see Veloski et al. 2006; Wulf, Shea and Lewthwaite 2010; Tavakol et al. 2022); however, there are specific considerations, from a cognitive science perspective, when giving feedback on the development of clinical skills.

Although giving feedback to learners is essential during skill acquisition, there is a consensus within cognitive science that the amount of feedback during practice should be reduced over time. Evidence suggests that learning outcomes can improve even as the amount of feedback decreases (Kovacs and Shea 2011; Sulzenbruck and Heuer 2011). The rationale for reducing the volume of feedback over time is to minimize the risk that learners become conditioned to receiving large amounts of feedback, which can happen when feedback is given without restraint irrespective of their stage of learning. Rather than moving through developmental stages and achieving greater independence and self-awareness (Schmidt 1991; Salmoni et al. 1984), such learners become over-reliant on feedback as a way of seeking approval or permission before extending themselves or moving on with their development independently.

Progressively reducing feedback, as a form of fading scaffolding, also gives learners the opportunity to make mistakes as they gain experience and expertise. This reinforces the importance of errors for learning while keeping learners mindful that errors occur in actual clinical practice. For example, during advanced life support training, feedback should be plentiful and constant at the beginning of the course, since learners are being introduced to new knowledge and multiple skills at the same time, arguably also in a new learning and working context. By the end of the course, however, students should receive very little feedback, since they should be able to demonstrate their knowledge and skills without close supervision, as in actual clinical practice.
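As a minimal sketch of the faded-feedback idea, the Python functions below compute, for a hypothetical course of several practice sessions, the share of attempts on which an instructor gives feedback, declining linearly from every attempt in the first session to a small floor in the last. The linear shape, the 10% floor, and the names `feedback_proportion` and `feedback_attempts` are illustrative assumptions, not values or tools taken from the studies cited above.

```python
def feedback_proportion(session: int, n_sessions: int,
                        start: float = 1.0, floor: float = 0.1) -> float:
    """Share of attempts that receive feedback in a given session (1-based).

    Hypothetical linear fading schedule (an assumption, not an evidence-based
    value): the first session uses `start`, the last session uses `floor`.
    """
    if n_sessions < 1 or not 1 <= session <= n_sessions:
        raise ValueError("session must lie between 1 and n_sessions")
    if n_sessions == 1:
        return start
    step = (start - floor) / (n_sessions - 1)
    return start - step * (session - 1)


def feedback_attempts(session: int, n_sessions: int, n_attempts: int) -> list[int]:
    """Return the 1-based attempt numbers that receive feedback, spread evenly."""
    share = feedback_proportion(session, n_sessions)
    k = max(1, round(share * n_attempts))  # always keep at least one feedback point
    # Integer division spaces the k feedback points evenly across the session.
    return [i * n_attempts // k + 1 for i in range(k)]
```

For example, with four sessions of ten attempts each, `feedback_attempts(3, n_sessions=4, n_attempts=10)` selects attempts 1, 3, 6 and 8, while the final session keeps feedback on the first attempt only. A real course would tune the schedule to the skill and the learners rather than fix it in advance.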

When a clinical skill is practiced frequently, feedback should support its acquisition, whereas when it is practiced infrequently, feedback should support its retention. For example, Cecilio-Fernandes et al. (2020) compared three types of feedback (expert, augmented, and a combination of both) on the acquisition and retention of transthoracic echocardiography skills. During the acquisition phase, learners in all groups practiced until they could acquire images without any mistakes on two consecutive attempts. Learners in the expert feedback group acquired the skill faster than the other groups. However, in the retention test, learners in the combined feedback group obtained better-quality images than the other two groups. These findings indicate that one type of feedback may be optimal for acquisition and another for retention.
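The acquisition criterion described above, practicing until two consecutive attempts are error-free, can be written as a simple stopping rule. The sketch below assumes each attempt is recorded as an error count; the function name and data format are hypothetical and do not reproduce the study's actual procedure or software.

```python
from typing import Optional, Sequence


def attempts_to_criterion(errors_per_attempt: Sequence[int],
                          run_length: int = 2) -> Optional[int]:
    """Return the 1-based attempt at which the learner first completes
    `run_length` consecutive error-free attempts, or None if never reached.

    Assumption: each entry is the number of errors made on one attempt.
    """
    streak = 0
    for attempt, errors in enumerate(errors_per_attempt, start=1):
        streak = streak + 1 if errors == 0 else 0  # any error resets the run
        if streak >= run_length:
            return attempt
    return None
```

A count of attempts to criterion is one possible way of expressing how quickly a learner acquires a skill; retention would still need to be measured separately with a delayed test.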

Practical implication

We recommend that educators be provided with opportunities to adapt the main cognitive science strategies to their own local context, including consideration of the availability of local resources and existing systems of skills training (Cecilio-Fernandes and Sandars 2021). This requires faculty development if the benefit of applying insights from cognitive science to clinical skills training is to be fully realized.

The Guide has highlighted the importance of declarative and procedural knowledge for clinical skill acquisition and for reducing skill decay. To provide the most appropriate mix of declarative and procedural knowledge during clinical skills teaching, we recommend identifying the learner's skill level at the start of any clinical skills teaching. In our experience, this essential step is often omitted. Identifying which type of knowledge the learner needs to acquire to perform the skill allows the educator to tailor training to the individual learner. For example, learners without sufficient declarative knowledge may struggle to develop even the most basic skills unless those knowledge gaps are addressed beforehand. Asking specific questions, either directly or through a pre-training multiple-choice quiz, can identify deficits in declarative knowledge. Learners who already have sufficient declarative knowledge, by contrast, could be supported to move on with their training, developing more sophisticated procedural knowledge and taking on more challenging tasks. Deficits in procedural knowledge can be identified by watching individuals perform the skill. Some learners may have appropriate levels of both declarative and procedural knowledge but struggle to integrate the two across a range of tasks in different situations. For example, some learners measuring blood pressure may be unable to perform the skill correctly in patients with different physical attributes, such as limb deformities or arteriovenous fistulae in situ.
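The pre-training assessment described above can be summarized as a simple decision rule. The sketch below is a hypothetical illustration only: the 0.7 quiz pass mark, the two observation flags, and the wording of each recommendation are assumptions made for the example, not cut-offs proposed in this Guide.

```python
from dataclasses import dataclass


@dataclass
class LearnerAssessment:
    quiz_score: float             # share correct on a pre-training quiz, 0 to 1
    performs_skill: bool          # observed performing the skill correctly
    transfers_across_cases: bool  # performs correctly across varied patients


def next_step(a: LearnerAssessment, pass_mark: float = 0.7) -> str:
    """Map a pre-training assessment to a suggested training focus.

    Hypothetical rule: check declarative knowledge first, then procedural
    knowledge, then integration of both across different situations.
    """
    if a.quiz_score < pass_mark:
        return "address declarative knowledge gaps first"
    if not a.performs_skill:
        return "develop procedural knowledge through supervised practice"
    if not a.transfers_across_cases:
        return "integrate declarative and procedural knowledge across varied patients"
    return "increase the challenge of the tasks"
```

The ordering mirrors the paragraph above: gaps in declarative knowledge are addressed before procedural practice, and integration across patients with different physical attributes is considered only once both components are in place.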

A common question from medical educators is where to start. Although there is no simple answer, as it depends on each context, we recommend that medical educators start with the strategies that have the most evidence.

Feedback, spaced practice, and retrieval practice are the strategies with the strongest evidence, including in medical education. More importantly, studies have demonstrated their effect in clinical skills training, on both declarative and procedural knowledge. The evidence for overlearning and interleaving comes mostly from cognitive psychology and mainly concerns skills that depend heavily on declarative knowledge. Elaboration and generation have the least evidence for clinical skills training. Both are closely related to retrieval practice and feedback, so they may work best as part of those strategies rather than as standalone approaches.

We provide an illustrative example of how evidence-based cognitive science strategies can be implemented in clinical skills training:

Illustrative example of clinical skills training: Advanced life support training

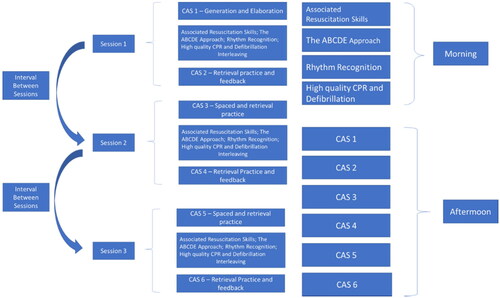

Advanced Life Support (ALS) requires multiple clinical skills, including auscultation, interpretation of electrocardiograms, and prescribing a variety of medications. The challenge for learners is integrating a large volume of declarative and procedural knowledge. In Table 1, we provide an overview of how evidence-based cognitive science strategies can be practically implemented in ALS training. Table 1 starts with the strategy that has the most evidence and, for each strategy, describes what to do, why it is important, and how to do it.

Table 1. Cognitive science strategies for ALS training, ordered by strength of evidence, with a description of what to do, why it is important, and how to do it.

Figure 1 shows, on the left, a training design for an introductory ALS course using evidence-based cognitive science strategies and, on the right, the traditional design of the same course. The course was divided into four skill rotations and workshops:

Figure 1. On the left side is a training design of an introduction to ALS course using evidence-based cognitive science strategies. On the right side is the traditional design of the same course.

- Associated Resuscitation Skills
- The ABCDE Approach to assessment inc IIO
- Rhythm Recognition
- High-quality CPR and Defibrillation

The course also includes six Cardiac Arrest Scenarios (CAS), in which students have the opportunity to practice simulated clinical scenarios.

Further development and evaluation

Cognitive science is an exciting and evolving field that has advanced both in the complexity of its cognitive models and in its explicit connections with neuroscience. Cognitive models can now also predict which areas of the brain will be activated, providing information on how declarative and procedural knowledge interact, with implications for how complex skills are acquired (Borst and Anderson 2013; Taatgen 2013). Following the successful development of intelligent tutors for teaching mathematics (Anderson et al. 1995), there is now an opportunity to consider how cognitive science principles can be applied to clinical skills training in HPE. Cognitive science may help in the design of theory-driven intelligent tutors for tasks such as clinical reasoning, removing the need for an expert to be present at all times to observe performance and give feedback. This may also be feasible for clinical skills through simulators, many of which now include feedback systems. For example, laparoscopic simulators include haptic feedback systems, which could be improved using evidence from cognitive science. Simulators could also measure the level of performance and indicate when another training session is necessary. Cognitive science may also help increase understanding of why learners experience greater difficulty when the amount of cognitive processing needed to execute a task exceeds what they can personally invest in it (Korbach et al. 2017). For example, requiring learners to perform an ultrasound examination on an obese patient before they have mastered all of its components will lead to an unsuccessful examination and compromise patient care.

Conclusion

Teaching and learning clinical skills is an essential aspect of HPE. However, current approaches still do not prevent skill decay. We encourage HPE educators to implement a variety of evidence-based cognitive science strategies alongside their current approaches to optimize clinical skill acquisition and prevent skill decay.

Authors contributions

All authors were responsible for writing the manuscript, revising it critically, and approving the final version.

Disclosure statement

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the article.

Additional information

Funding

Notes on contributors

Dario Cecilio-Fernandes

Dario Cecilio-Fernandes, MSc, PhD, AFAMEE, is a researcher in the Department of Medical Psychology and Psychiatry, School of Medical Sciences, University of Campinas, and has a research interest in assessment and skill acquisition. He also works in faculty development at the University of Campinas.

Rakesh Patel

Rakesh Patel, MB, MMEd, MD, MRCP, is an associate professor of medical education, the University of Nottingham, UK, and honorary consultant nephrologist, Nottingham University Hospitals NHS Trust, UK.

John Sandars

John Sandars, MB, MSc, MD, MRCP, MRCGP, FAcad, MEd, is Professor of Medical Education in the Health Research Institute at Edge Hill University and has a research and development interest in the use of self-regulated learning theory for performance improvement of individuals.

References

- Anderson JR, Bothell D, Byrne MD, Douglass S, Lebiere C, Qin Y. 2004. An integrated theory of the mind. Psychol Rev. 111(4):1036–1060.

- Anderson JR. 1982. Acquisition of cognitive skill. Psychol Rev. 89(4):369.

- Anderson JR, Corbett AT, Koedinger KR, Pelletier R. 1995. Cognitive tutors: lessons learned. J Learn Sci. 4(2):167–207.

- Barsuk JH, Cohen ER, McGaghie WC, Wayne DB. 2010. Long-term retention of central venous catheter insertion skills after simulation-based mastery learning. Acad Med. 85(10 Suppl):S9–S12.

- Bartsch LM, Singmann H, Oberauer K. 2018. The effects of refreshing and elaboration on working memory performance, and their contributions to long-term memory formation. Mem Cognit. 46(5):796–808.

- Bjork EL, Bjork RA. 2011. Making things hard on yourself, but in a good way: creating desirable difficulties to enhance learning. In: Gernsbacher MA, Pew RW, Hough LM, Pomerantz JR, editors. Psychology and the real world: essays illustrating fundamental contributions to society. New York (NY): Worth Publishers; p. 59–68.

- Bjork RA, Allen TW. 1970. The spacing effect: consolidation or differential encoding? J Verbal Learn Verbal Behav. 9(5):567–572.

- Bjork RA, Bjork EL. 2019. Forgetting as the friend of learning: implications for teaching and self-regulated learning. Adv Physiol Educ. 43(2):164–167.

- Borst JP, Anderson JR. 2013. Using model-based functional MRI to locate working memory updates and declarative memory retrievals in the fronto-parietal network. Proc Natl Acad Sci USA. 110(5):1628–1633.

- Boutis K, Pecaric M, Carrière B, Stimec J, Willan A, Chan J, Pusic M. 2019. The effect of testing and feedback on the forgetting curves for radiograph interpretation skills. Med Teach. 41(7):756–764.

- Bullock I, Davis M, Lockey A, Mackway-Jones K. 2015. Pocket guide to teaching for clinical instructors. New York (NY): John Wiley & Sons.

- Carpenter SK, Cepeda NJ, Rohrer D, Kang SHK, Pashler H. 2012. Using spacing to enhance diverse forms of learning: review of recent research and implications for instruction. Educ Psychol Rev. 24(3):369–378.

- Cecilio-Fernandes D, Cnossen F, Coster J, Jaarsma ADC, Tio RA. 2020. The effects of expert and augmented feedback on learning a complex medical skill. Percept Mot Skills. 127(4):766–784.

- Cecilio-Fernandes D, Cnossen F, Jaarsma ADC, Tio RA. 2018. Avoiding surgical skill decay: a systematic review on the spacing of training sessions. J Surg Educ. 75(2):471–480.

- Cecilio-Fernandes D, Sandars J. 2021. The frustrations of adopting evidence-based medical education and how they can be overcome! Med Teach. 43(1):108–109.

- Cepeda NJ, Pashler H, Vul E, Wixted JT, Rohrer D. 2006. Distributed practice in verbal recall tasks: a review and quantitative synthesis. Psychol Bull. 132(3):354–380.

- Clark RE, Feldon DF, Van Merriënboer JJ, Yates KA, Early S. 2008. Cognitive task analysis. In: Handbook of research on educational communications and technology. Oxfordshire (UK): Routledge; p. 577–593.

- Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R. 2013. Mastery learning for health professionals using technology-enhanced simulation: a systematic review and meta-analysis. Acad Med. 88(8):1178–1186.

- Corrêa UC, Walter C, Torriani-Pasin C, Barros J, Tani G. 2014. Effects of the amount and schedule of varied practice after constant practice on the adaptive process of motor learning. Motricidade. 10(4):35–46.

- Dempster FN. 1989. Spacing effects and their implications for theory and practice. Educ Psychol Rev. 1(4):309–330.

- Driskell JE, Willis RP, Copper C. 1992. Effect of overlearning on retention. J Appl Psychol. 77(5):615.

- Ericsson KA, Charness N. 1994. Expert performance: its structure and acquisition. Am Psychol. 49(8):725.

- Ericsson KA, Krampe RT, Tesch-Römer C. 1993. The role of deliberate practice in the acquisition of expert performance. Psychol Rev. 100(3):363.

- Ericsson KA, Lehmann AC. 1996. Expert and exceptional performance: evidence of maximal adaptation to task constraints. Annu Rev Psychol. 47(1):273–305.

- Ericsson KA. 2004. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad Med. 79(10):S70–S81.

- Faustinella F, Jacobs RJ. 2018. The decline of clinical skills: a challenge for medical schools. Int J Med Educ. 9:195–197.

- Glenberg AM, Wilkinson AC, Epstein W. 1982. The illusion of knowing: failure in the self-assessment of comprehension. Mem Cognit. 10(6):597–602.

- Higgins M, Madan C, Patel R. 2021a. Development and decay of procedural skills in surgery: a systematic review of the effectiveness of simulation-based medical education interventions. Surgeon. 19(4):e67–e77.

- Higgins M, Madan CR, Patel R. 2021b. Deliberate practice in simulation-based surgical skills training: a scoping review. J Surg Educ. 78(4):1328–1339.

- Holland AC, Addis DR, Kensinger EA. 2011. The neural correlates of specific versus general autobiographical memory construction and elaboration. Neuropsychologia. 49(12):3164–3177.

- Jacoby LL. 1978. On interpreting the effects of repetition: solving a problem versus remembering a solution. J Verbal Learn Verbal Behav. 17(6):649–667.

- Jordan JA, Gallagher AG, McGuigan J, McGlade K, McClure N. 2000. A comparison between randomly alternating imaging, normal laparoscopic imaging, and virtual reality training in laparoscopic psychomotor skill acquisition. Am J Surg. 180(3):208–211.

- Karpicke JD, Roediger HL. 2008. The critical importance of retrieval for learning. Science. 319(5865):966–968.

- Kerr R, Booth B. 1978. Specific and varied practice of motor skill. Percept Mot Skills. 46(2):395–401.

- Korbach A, Brünken R, Park B. 2017. Measurement of cognitive load in multimedia learning: a comparison of different objective measures. Instr Sci. 45(4):515–536.

- Kornell N, Bjork RA. 2008. Learning concepts and categories: is spacing the “enemy of induction”? Psychol Sci. 19(6):585–592.

- Kovacs AJ, Shea CH. 2011. The learning of 90° continuous relative phase with and without Lissajous feedback: external and internally generated bimanual coordination. Acta Psychol. 136(3):311–320.

- Kromann CB, Jensen ML, Ringsted C. 2009. The effect of testing on skills learning. Med Educ. 43(1):21–27.

- Krueger WCF. 1929. The effect of overlearning on retention. J Exp Psychol. 12(1):71.

- Larsen DP, Butler AC, Lawson AL, Roediger HL. 2013. The importance of seeing the patient: test-enhanced learning with standardized patients and written tests improves clinical application of knowledge. Adv Health Sci Educ Theory Pract. 18(3):409–425.

- Larsen DP, Butler AC, Roediger HL. 2009. Repeated testing improves long‐term retention relative to repeated study: a randomised controlled trial. Med Educ. 43(12):1174–1181.

- Larsen DP, Butler AC, Roediger HL. 2013. Comparative effects of test‐enhanced learning and self‐explanation on long‐term retention. Med Educ. 47(7):674–682.

- Li Q, Ma E, Liu J, Fang L, Xia T. 2011. Pre-training evaluation and feedback improve medical students’ skills in basic life support. Med Teach. 33(10):e549–e555.

- McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. 2011. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. 86(6):706–711.

- McGaghie WC. 2015. Mastery learning: it is time for medical education to join the 21st century. Acad Med. 90(11):1438–1441.

- Michels ME, Evans DE, Blok GA. 2012. What is a clinical skill? Searching for order in chaos through a modified Delphi process. Med Teach. 34(8):e573–e581.

- Raupach T, Andresen JC, Meyer K, Strobel L, Koziolek M, Jung W, Brown J, Anders S. 2016. Test‐enhanced learning of clinical reasoning: a crossover randomised trial. Med Educ. 50(7):711–720.

- Richland LE, Kornell N, Kao LS. 2009. The pretesting effect: do unsuccessful retrieval attempts enhance learning? J Exp Psychol Appl. 15(3):243–257.

- Roediger HL, Karpicke JD. 2006a. Test-enhanced learning: taking memory tests improves long-term retention. Psychol Sci. 17(3):249–255.

- Roediger HL, Karpicke JD. 2006b. The power of testing memory: basic research and implications for educational practice. Perspect Psychol Sci. 1(3):181–210.

- Rohrer D, Taylor K, Pashler H, Wixted JT, Cepeda NJ. 2005. The effect of overlearning on long‐term retention. Appl Cogn Psychol. 19(3):361–374.

- Rohrer D, Taylor K. 2007. The shuffling of mathematics problems improves learning. Instr Sci. 35(6):481–498.

- Sall D, Warm EJ, Kinnear B, Kelleher M, Jandarov R, O'Toole J. 2021. See one, do one, forget one: early skill decay after paracentesis training. J Gen Intern Med. 36(5):1346–1351.

- Salmoni AW, Schmidt RA, Walter CB. 1984. Knowledge of results and motor learning: a review and critical reappraisal. Psychol Bull. 95(3):355–386.

- Savion-Lemieux T, Penhune VB. 2005. The effects of practice and delay on motor skill learning and retention. Exp Brain Res. 161(4):423–431.

- Schmidt RA. 1991. Frequent augmented feedback can degrade learning: evidence and interpretations. In: Requin J, Stelmach GE, editors. Tutorials in motor neuroscience. Netherlands: Springer; p. 59–75.

- Shibata K, Sasaki Y, Bang JW, Walsh EG, Machizawa MG, Tamaki M, Chang L, Watanabe T. 2017. Overlearning hyperstabilizes a skill by rapidly making neurochemical processing inhibitory-dominant. Nat Neurosci. 20(3):470–475.

- Skinner BF. 1958. Teaching machines. Science. 128(3330):969–977.

- Slamecka NJ, Graf P. 1978. The generation effect: delineation of a phenomenon. J Exp Psychol Hum Learn. 4(6):592.

- Soderstrom NC, Bjork RA. 2015. Learning versus performance: an integrative review. Perspect Psychol Sci. 10(2):176–199.

- Spruit EN, Band GP, Hamming JF, Ridderinkhof KR. 2014. Optimal training design for procedural motor skills: a review and application to laparoscopic surgery. Psychol Res. 78(6):878–891.

- Srivilaithon W, Amnuaypattanapon K, Limjindaporn C, Diskumpon N, Dasanadeba I, Daorattanachai K. 2020. Retention of basic-life-support knowledge and skills in second-year medical students. Open Access Emerg Med. 12:211–217.

- Stark R, Mandl H, Gruber H, Renkl A. 2002. Conditions and effects of example elaboration. Learn Instr. 12(1):39–60.

- Steenhof N, Woods NN, Mylopoulos M. 2020. Exploring why we learn from productive failure: insights from the cognitive and learning sciences. Adv Health Sci Educ Theory Pract. 25(5):1099–1106.

- Sulzenbruck S, Heuer H. 2011. Type of visual feedback during practice influences the precision of the acquired internal model of a complex visuo-motor transformation. Ergonomics. 54(1):34–46.

- Taatgen NA, Lee FJ. 2003. Production compilation: a simple mechanism to model complex skill acquisition. Hum Factors. 45(1):61–76.

- Taatgen NA. 2013. The nature and transfer of cognitive skills. Psychol Rev. 120(3):439–471.

- Tavakol M, Scammell BE, Wetzel AP. 2022. Feedback to support examiners’ understanding of the standard-setting process and the performance of students: AMEE Guide No. 145. Med Teach. 44(6):582–595.

- Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. 2006. Systematic review of the literature on assessment, feedback and physicians’ clinical performance: BEME Guide No. 7. Med Teach. 28(2):117–128.

- Wik L, Myklebust H, Auestad BH, Steen PA. 2002. Retention of basic life support skills 6 months after training with an automated voice advisory manikin system without instructor involvement. Resuscitation. 52(3):273–279.

- Wisniewski B, Zierer K, Hattie J. 2019. The power of feedback revisited: a meta-analysis of educational feedback research. Front Psychol. 10:3087.

- Wulf G, Shea C, Lewthwaite R. 2010. Motor skill learning and performance: a review of influential factors. Med Educ. 44(1):75–84.