Abstract

Background

Artificial Intelligence (AI) is rapidly transforming healthcare, and there is a critical need for a nuanced understanding of how AI is reshaping teaching, learning, and educational practice in medical education. This review aimed to map the literature regarding AI applications in medical education, core areas of findings, potential candidates for formal systematic review and gaps for future research.

Methods

This rapid scoping review, conducted over 16 weeks, employed Arksey and O’Malley’s framework and adhered to STORIES and BEME guidelines. A systematic and comprehensive search across PubMed/MEDLINE, EMBASE, and MedEdPublish was conducted without date or language restrictions. Publications included in the review spanned undergraduate, graduate, and continuing medical education, encompassing both original studies and perspective pieces. Data were charted by multiple author pairs and synthesized into various thematic maps and charts, ensuring a broad and detailed representation of the current landscape.

Results

The review synthesized 278 publications, with a majority (68%) from North American and European regions. The studies covered diverse AI applications in medical education, such as AI for admissions, teaching, assessment, and clinical reasoning. The review highlighted AI's varied roles, from augmenting traditional educational methods to introducing innovative practices, and underscored the urgent need for ethical guidelines in AI's application in medical education.

Conclusion

The current literature has been charted. The findings underscore the need for ongoing research to explore uncharted areas and address potential risks associated with AI use in medical education. This work serves as a foundational resource for educators, policymakers, and researchers in navigating AI's evolving role in medical education. The FACETS framework is proposed to support future high-utility reporting.

Background

The use of artificial intelligence (AI) in medicine is expected to have a significant impact on patient care, medical research, and health systems (Weidener and Fischer 2023). The integration of AI, and of specific tools or techniques to access it, such as natural language processing (NLP), machine learning (ML) and generative pre-trained transformers (GPT), has the potential to transform both medical education content and processes. As technology continues to advance, it is essential to examine the impact of these developments on all aspects of medical education, from admissions to curricula, teaching, and assessment.

Practice points

What is the extent of evidence on the use of AI (in all forms including NLP, ML and GPT) in medical education and what methods of study have been employed?

What are the key content themes and areas of focus for these works?

What, if any, areas are appropriate for more in-depth systematic review?

What are the gaps in the current evidence base that future researchers should consider?

To aid consistent and transparent use of terms, Table 1 gives the definitions we have employed throughout this review.

Whilst the field is not new, the recent application of AI based on machine learning and large language models (LLMs), which form the core of AI-based chat applications such as ChatGPT by OpenAI, has made the technology more widely accessible and easy to use. Masters (2023a) characterised this monumental step as one that could lead to consideration of a pre- and post-ChatGPT world.

With the rapid expansion of AI, medical educators have become increasingly concerned about the associated risks. A recent piece from a physician defense organization (Graham 2023) warned of the risks of students employing these technologies for their assignments. Other works have cautioned the community concerning AI’s potential to undermine the integrity of academic publishing (Masters 2023b; Loh 2023). Educators have also become more excited by AI, as recent landmark works have highlighted the power of AI to enhance assessment (e.g. Schaye et al. 2022), and make educational processes such as admissions and selection more efficient (e.g. Burk-Rafel et al. 2021).

A 2021 review by Lee et al. found a diverse range of 22 published studies on what and how to teach AI within undergraduate medical education (UME), but the wider fields of graduate medical education (GME) and continuing professional development (CPD) were outside of scope. Publications on, and interest in, AI have increased exponentially in the last 12 months in response to the launch of ChatGPT, indicating the need for a new review of the evidence.

This review aims to provide insights into the current state of AI applications and challenges within the full continuum of medical education. We chose a scoping review methodology, mapping out the landscape of existing literature, to delineate the evidence base, classify methodologies, and highlight thematic content areas ripe for systematic investigation. We aim to provide the path forward, guiding AI integration into medical education and ensuring that healthcare professionals are adeptly equipped for the evolving and increasingly complex healthcare milieu.

Methods

This scoping review was conducted in a rapid timeframe, completed within 16 weeks of its inception. Despite the expedited process, we ensured systematicity, and maintained integrity and methodological precision, in line with prior publications (Gordon et al. 2020; Daniel et al. 2021). Our methodology was guided by Arksey and O’Malley’s (2005) scoping review framework, which was refined by Levac et al. (2010). In terms of reporting, we adhered to the STORIES statement (STructured apprOach to the Reporting In healthcare education of Evidence Synthesis) (Gordon and Gibbs 2014) and BEME guidance (Hammick et al. 2010). We also conformed to the PRISMA-ScR reporting standards (Tricco et al. 2018), drawing on support from Peters et al. (2020, 2022) to offset the absence of specialized reporting standards for scoping reviews in health professions education. A protocol is available at the following link (https://clok.uclan.ac.uk/49900/).

The five stages of a scoping review were followed (Arksey and O’Malley 2005):

Stage 1: identifying the research aims/questions (as stated above)

Stage 2: identifying relevant studies

Stage 3: study selection

Stage 4: charting the data

Stage 5: collating, summarizing and reporting the results

Stage 2: Search strategy

In conjunction with an experienced information specialist, we conducted a search of PubMed/MEDLINE, EMBASE, and MedEdPublish without restrictions on date or language. We used a comprehensive search strategy with both controlled vocabulary (e.g. MeSH terms) and keywords. The search terms were based on keywords related to AI, ML, NLP, GPT, and medical education. The search strategy evolved through iterative pilot searches to refine the use of complex terminology. The initial search in July 2023 yielded 1,598 publications. Reference list screening identified key publications not captured, prompting further refinement. The final, more inclusive search was executed in August 2023. The final complete search strategy is detailed in Supplementary Appendix 1.

We reviewed the bibliographies of included studies for any missed articles.

Stage 3: Study selection

The screening process was conducted by pairs of authors (AA, HU, MD, MG, RG) who independently reviewed titles, abstracts, and full texts. Discrepancies were resolved by consensus or with a third author’s input. A Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram was constructed to outline the publication selection steps.
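Agreement between independent screeners of this kind is commonly summarized with a chance-corrected statistic, and the Results report a κ value for title and abstract screening. The review does not state how κ was calculated; the following is a minimal sketch assuming Cohen's kappa for two raters, with hypothetical include/exclude decisions that are not data from this review.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must judge the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical screening decisions for ten abstracts (illustrative only).
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "include", "exclude",
              "include", "include", "exclude", "exclude", "exclude"]

kappa = cohen_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}")
```

Because kappa discounts the agreement expected by chance, it is lower than raw percentage agreement whenever one decision (here, exclusion) dominates.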

Inclusion criteria

Publications that described the use of AI, ML, NLP, or GPT in medical education

Publications that discussed AI, its impact, attitude, knowledge or view, regardless of whether they deployed AI

Publications in undergraduate medical education (UME), graduate medical education (GME) or continuing professional development

Publications with medical students, residents, fellows, or physicians. Where publications discussed multi-professional learner groups, medics had to comprise a significant proportion.

Publications focused on all relevant areas, including curriculum, teaching and learning, or assessment

Publications of all methodological forms

Publications in any language

Exclusion criteria

Publications that discussed the use of AI for diagnostic, clinical, healthcare organizational systems or governance roles in healthcare

Publications that employed AI exclusively as a research tool in health professions education

Publications employing AI to write their work without focusing on AI within their work

Stage 4: Charting the data

The approach taken was amended for each of two clear bodies of literature that were included.

The first category was articles and innovations. These include scholarly reports of original studies of the proposed or actual employment of AI or related issues in medical education.

The second category were perspectives publications. These include scholarly pieces that present a point of view on AI issues in the field of medical education. They are not original study reports but rather informed opinions that draw on the authors’ expertise, experience, or review of the existing literature.

Articles and innovations

A team of eight authors (AA, BK, CJC, HU, MH, MS, NX, RB), working in pairs, charted the data. They used a predefined data extraction form, which was piloted and refined, with the following data extracted from each study:

Study identifiers (authors, title, publication date, journal)

Study characteristics (stage of education, country of origin, medical specialty, study aims, study design)

AI application or focus of use (e.g. admissions and selection, curriculum, assessment, etc.)

AI method (e.g. was AI treated as a general concept, or were specific methods such as data mining (DM), traditional ML, deep learning (DL), and/or NLP used?)

AI use cases

Name of language model and software programs, if applicable

Rationale for AI use

Categorization per the SAMR technology integration framework (substitution, augmentation, modification, redefinition)

Description of AI implementation

Summary of results and Kirkpatrick’s outcomes, if applicable

Implications for future practice, policy or research

Perspectives

A team of four authors (JC, CM, JH, ST), working in two independent pairs, charted not only similar metadata from publications in the Perspective category but also additional details such as the underlying rationale for using AI, its applications, frameworks, and recommended topics for curriculum and research. They further considered ethical issues and the constraints of these applications.

Stage 5: Collating, summarizing and reporting the results

Articles and innovations

Utilizing data from the extraction forms, all authors collated the data into a number of tables and figures for easy visualization, to provide a map of the current evidence base. After charting, we produced a narrative account of our findings that considers the extent and range of developments included in the review, as well as the outcomes assessed. We identified areas where a paucity of research exists. We also suggested areas for future primary and secondary studies (i.e. systematic reviews).

Perspectives

Upon familiarizing itself with the data, the team developed a systematic approach for synthesis. Publications were first organized into two main categories reflecting the central themes of their perspectives: ‘Importance of Integrating AI into Medical Education’ and ‘Potential Application into Medical Education.’

The sub-team then applied a utility-focused approach to the latter category, highlighting the practical value of each publication for readers, termed ‘Contributions.’ These contributions are pivotal for making informed decisions, designing educational programs, or evaluating AI applications in medical education, despite the fact that most have yet to be studied or evaluated. The Contributions are delineated as follows: 1. Suggested Educational Applications and Developments, 2. Educational Frameworks, 3. Recommendations for Curriculum Content, Competencies, Tools and Resources, and 4. Ethics Considerations.

At each step, team members independently categorized the publications, followed by discussions to reach a consensus. We produced a narrative synopsis of our findings as ‘Brief Summary’ and ‘Key Points’ to aid reader comprehension and application.

Results

Overview

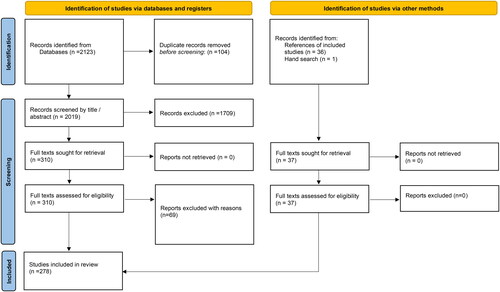

The database search yielded 2,123 publications, with 2,019 remaining after duplicate removal. Title and abstract screening narrowed the pool to 310 publications, with a high inter-rater reliability (κ = 0.86). Full-text review further excluded 70 publications, primarily for using AI solely as a research tool or lacking focus on AI, leaving 241 for inclusion. Hand-searching references added 37 publications, with 278 publications ultimately included in the review. The selection steps are shown in the PRISMA flow diagram. Full appendix tables with extracted data for all publications have been uploaded to a repository and can be accessed following this link (https://clok.uclan.ac.uk/49919/). An accompanying infographic summarizes the included studies.
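The stage-by-stage counts above can be tallied programmatically, which is a convenient way to check a PRISMA flow before reporting it. The sketch below is illustrative only: the class and field names are hypothetical, and the values are the figures as stated in this section. With those figures the full-text stage differs by one (310 − 70 = 240, against 241 stated), which the check reports rather than resolves.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Counts at each screening stage (field names are illustrative)."""
    identified: int
    after_deduplication: int
    after_title_abstract: int
    full_text_excluded: int
    included_from_databases: int
    added_by_hand_search: int
    total_included: int

    def duplicates_removed(self) -> int:
        return self.identified - self.after_deduplication

    def discrepancies(self) -> list:
        """Return a description of every stage whose arithmetic does not add up."""
        issues = []
        expected_db = self.after_title_abstract - self.full_text_excluded
        if expected_db != self.included_from_databases:
            issues.append(
                f"full-text stage: {self.after_title_abstract} - "
                f"{self.full_text_excluded} = {expected_db}, "
                f"reported {self.included_from_databases}"
            )
        expected_total = self.included_from_databases + self.added_by_hand_search
        if expected_total != self.total_included:
            issues.append(
                f"final stage: {self.included_from_databases} + "
                f"{self.added_by_hand_search} = {expected_total}, "
                f"reported {self.total_included}"
            )
        return issues


# Figures as reported in the text above.
flow = PrismaFlow(
    identified=2123,
    after_deduplication=2019,
    after_title_abstract=310,
    full_text_excluded=70,
    included_from_databases=241,
    added_by_hand_search=37,
    total_included=278,
)

print("duplicates removed:", flow.duplicates_removed())
for issue in flow.discrepancies():
    print("check:", issue)
```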

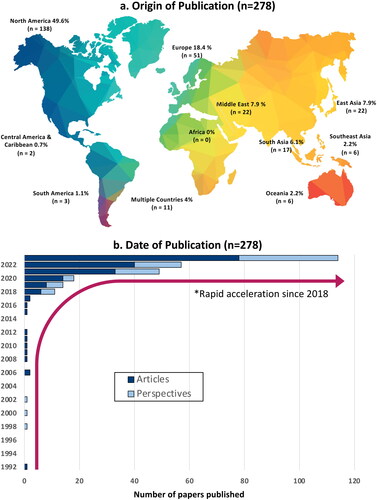

The geographic distribution of studies was predominantly Anglo-North American (n = 138, 49.6%), followed by European (n = 51, 18.4%), East Asian (n = 22, 7.9%), Middle Eastern (n = 22, 7.6%), and South Asian (n = 17, 6.1%) contributions. Other regions produced only a handful of studies and no studies were identified from Africa. The earliest publication on AI in medical education was in 1992. The temporal trend showed a surge in publications from 2018 onwards, with 11 in 2018, 14 in 2019, 18 in 2020, 49 in 2021, 57 in 2022, and 114 as of August 2023 (see Supplementary Appendices 1 (columns E and I) and 2).

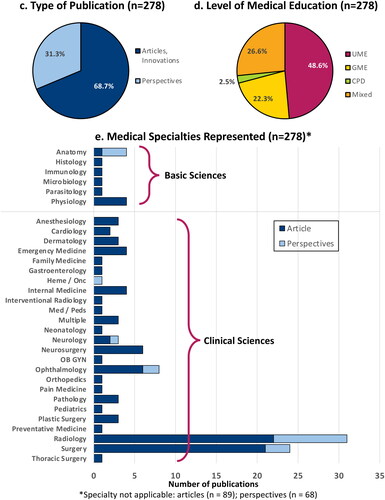

Most included publications fall under the category of articles or innovations (n = 191, 68.7%), as defined above, with the remainder being perspectives (n = 87, 31.3%). Undergraduate medical education (UME) was the focus of 48.6% (n = 135), graduate medical education (GME) 22.3% (n = 62), and continuing professional development (CPD) 2.5% (n = 7). The remaining publications spanned multiple levels (UME, GME, or CPD) (n = 74, 26.6%) (see and Supplementary Appendix 2 (column H) and 2).

Six basic science disciplines and 24 clinical specialties were represented in the included publications. Anatomy and Physiology were the most common basic science disciplines (n = 4, 1.4%, each), whereas radiology (n = 31, 11.2%) and surgery (n = 24, 8.7%) were the most common clinical specialties, followed by ophthalmology (n = 8, 2.9%) and neurosurgery (n = 6, 2.2%). One hundred fifty-seven studies (56.5%) did not specify a discipline or specialty (see and Supplementary Appendices 2 (column J) and 2).

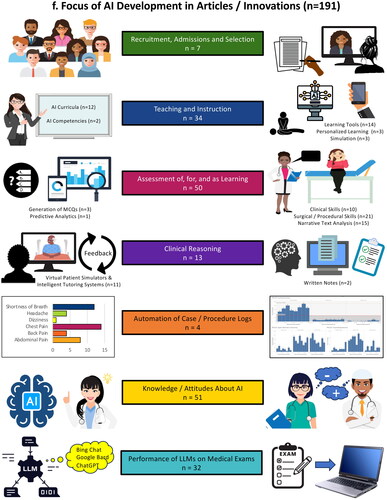

Articles and innovations included in the review

This review encompasses a wide range of articles and innovations, systematically cataloged as A1–A191 in Supplementary Table 2, facilitating cross-reference throughout this review. Of these studies, seven (3.6%) used AI for admissions and selection (A1–A7), 34 (17.8%) focused on teaching about AI or AI-augmented instruction (A8–A41), 50 (26.2%) used AI in assessment (A42–A91), thirteen (6.8%) used AI to teach or assess clinical reasoning (A92–A104), and four (2.1%) used AI for automation of case and procedure logs (A105–A108). A substantial portion (n = 51, 26.7%) investigated knowledge or attitudes about AI in medicine (A109–A159), and the performance of large language models (LLMs) on medical questions or exams was evaluated in 32 (16.8%) of the studies (A160–A191) (see Supplementary Appendix 2, column M).
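Because the catalog identifiers are assigned in contiguous blocks, the category counts follow directly from the identifier ranges. The sketch below derives counts and shares from the ranges stated above and looks up the category for a given identifier; the shortened category labels, variable names, and function names are illustrative and not taken from the supplementary files.

```python
# Identifier ranges as stated in the text (inclusive), keyed by shortened labels.
CATEGORY_RANGES = {
    "Admissions and selection": (1, 7),
    "Teaching about AI or AI-augmented instruction": (8, 41),
    "Assessment": (42, 91),
    "Clinical reasoning": (92, 104),
    "Case and procedure logs": (105, 108),
    "Knowledge or attitudes about AI": (109, 159),
    "LLM performance on questions or exams": (160, 191),
}


def category_counts(ranges):
    """Number of catalog entries in each inclusive identifier range."""
    return {name: last - first + 1 for name, (first, last) in ranges.items()}


def category_for(identifier, ranges=CATEGORY_RANGES):
    """Map an identifier such as 'A93' to its category label."""
    number = int(identifier.lstrip("A"))
    for name, (first, last) in ranges.items():
        if first <= number <= last:
            return name
    raise KeyError(f"{identifier} is outside the cataloged range")


counts = category_counts(CATEGORY_RANGES)
total = sum(counts.values())
shares = {name: 100 * count / total for name, count in counts.items()}

for name, count in counts.items():
    print(f"{name}: n = {count} ({shares[name]:.1f}%)")
print("total:", total)
print("A93 ->", category_for("A93"))
```

Computed shares can differ from published percentages in the final decimal place, depending on the rounding convention used.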

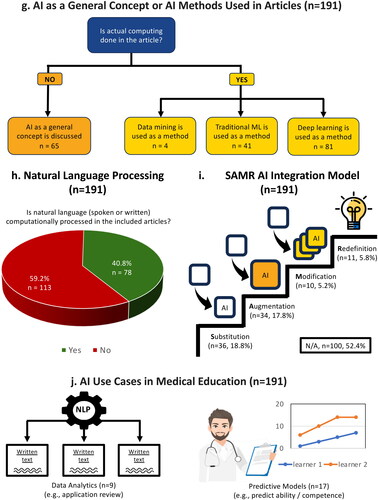

The technical application of AI varied. In 65 (34%) of the studies, AI was treated as a general concept without actual computation. The remainder of studies engaged in various computations: data mining in 4 (2.1%), traditional ML in 41 (21.5%) and DL in 81 (42.4%). NLP was used in 78 articles (40.8%) (see Supplementary Appendix 2, columns N and O; refer also to Table 1 for AI terminology).

Table 1. AI terminology.

Employing the SAMR framework to evaluate four levels of technology integration, this review found that AI primarily served as a substitute in 36 articles (18.8%), enhanced existing processes through augmentation in 34 (17.8%), contributed to task redesign or modification in 10 (5.2%), and facilitated an entirely novel, previously inconceivable task or redefinition in 11 (5.8%). For a substantial number of articles (100, 52.4%) the SAMR framework did not apply (see Supplementary Appendix 2).

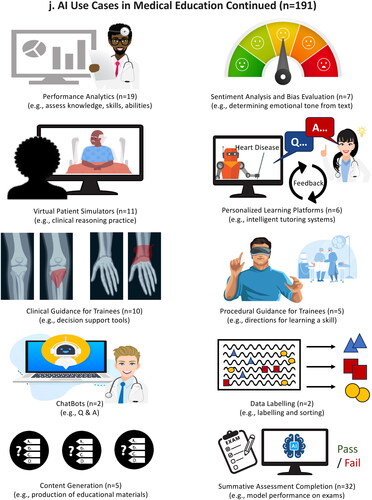

The review also identified a spectrum of AI applications, described as ‘use cases,’ which included data analytics (n = 9), predictive models (n = 17), performance analytics (n = 19), sentiment analysis or bias evaluation (n = 7), virtual patient simulators (VPSs) (n = 11), personalized learning platforms or intelligent tutoring systems (n = 6), clinical guidance for trainees (n = 10), procedural guidance for trainees (n = 5), ChatBots (n = 2), data labeling (n = 2), content generation (n = 5), and summative assessment completion (n = 32) (see Supplementary Appendix 2, column P).

AI use in admissions and selection for medical school and residency

In one study (A7), an AI Chatbot was used during recruitment to host a virtual question and answer session. In another study (A5), sentiment analysis was used to detect gender bias in letters of reference for a surgery residency over three decades. This study demonstrated that while gender bias has decreased over time, letters continue to be positively biased towards men, impacting selection. Another study (A3) compared faculty scoring of medical student performance evaluations (MSPEs) to a machine learning (ML) model’s scores. The ML model was unable to successfully rank MSPEs using commercially available sentiment analysis programs, largely because all MSPEs were highly positive. A bespoke model may be developed in the future that can better replicate faculty scores.

In one study (A4), ML was used to develop a predictive model to identify candidates likely to be ranked and matched. The predictive accuracy for ranking was outstanding, with an area under the receiver operating characteristic curve (AUROC) of 0.925. In three studies (two in UME (A2, A6) and one in GME (A1)), ML was used to screen applications. The ML models were trained on retrospective data sets, then validated on retrospective or prospective data sets. The models demonstrated impressive accuracy, with AUROCs of 0.925–0.95, and hold promise for partially automating holistic review of large numbers of applications. When applied in a restorative fashion that augments human review, ML models can be used to mitigate bias and identify applicants who may otherwise have been screened out and not offered an interview (A1). With further validation and refinement, ML models have the potential to make application screening more efficient and equitable, and the ranking process more accurate.
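For readers less familiar with the metric, AUROC can be read as the probability that a randomly chosen positive case (for example, an applicant who matched) receives a higher model score than a randomly chosen negative case. The sketch below computes it directly from that definition; the labels and scores are hypothetical and unrelated to the studies cited above, which may have used other software or estimation methods.

```python
def auroc(labels, scores):
    """AUROC via pairwise comparison of positive and negative scores.

    Equivalent to the Mann-Whitney U statistic divided by the number of
    positive-negative pairs; tied scores count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical data: 1 = applicant matched, 0 = did not match.
matched = [1, 1, 1, 0, 1, 0, 0, 0]
model_scores = [0.92, 0.85, 0.70, 0.75, 0.60, 0.40, 0.30, 0.10]

area = auroc(matched, model_scores)
print(f"AUROC = {area:.3f}")
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two groups.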

Teaching about AI and AI-augmented instruction

A total of thirty-three articles addressed teaching about or instruction with AI (A8–A41). Of these, 12 focused on AI as a learning tool, 11 on curriculum development, three on AI use for personalized education, five on the use of AI in medical simulation, and two on AI educational competencies.

Of the 12 articles on AI as a learning tool, the majority (n = 7) trained users to identify findings on images. These included histopathologic identification of glomerulopathies (A22), neuroanatomic structures (A35), general tissue types (A31), and melanomas (A25), as well as identification of clinical pathology (e.g. diabetic retinopathy (A26, A30) and hip fractures on plain radiographs (A24)). One article described AI-powered, real-time instruction on acquiring optimal echocardiographic views with point-of-care ultrasound (A32) and another trained students to develop AI algorithms for sonographic identification of pulmonary structures (A33). Another set of articles used the interactive features of chatbot-style tools for anatomy instruction (A29), delivering difficult news (A34), and LLM-based summaries of surgical procedures (A28).

Of the eleven articles that addressed AI curriculum development, a majority (n = 6) described the development of instructional modules. Four emphasized fundamental concepts in AI and ML for UME learners (A11, A13, A16, and A19). The GME/CPD studies largely focused on radiologists and included AI workshops (A14, A17), a deep-learning curriculum (A18), a data-science elective (A20) and a medical DM course (A21). One article described an introductory AI course for practicing physicians (A12).

Three articles explored ML for personalized learning, including an NLP algorithm to support trainee evaluation of geriatric patients through just-in-time learning (A36), an ML-powered adaptive learning system for preclinical students (A37), and a DL model for patient triage in ophthalmology (A38).

Five articles examined AI in medical simulation, covering interviews with standardized patients about the use of robotic standardized patients (A39), AI-based assessment of cognitive load in simulation through ECG and galvanic skin response (A40), a DL model for outcome evaluation of recorded simulation sessions (A41), an ML-based multimedia simulation of an individual nephron (A23), and generative AI for creating images of dermatologic lesions (A27).

Lastly, two articles delved into educational competencies in AI, one developing a tool for assessing medical student readiness for AI use in clinical settings (A9), and the other examining the prevalence of AI competencies in GME and CPD settings in Germany (A10).

AI use in assessment

Of the 50 articles related to AI use in assessment, a focus on psychomotor skills was prevalent, with 21 articles examining this area: 18 on surgical skills and 3 on procedural skills, with the latter group focusing exclusively on intubation techniques (A71–A73). Surgical skills assessments predominantly focused on minimally invasive and robotic surgery within general surgery (A74–A83, A85, A87–A88, A91), with a handful addressing neurosurgical (A84, A86, A89) and pediatric surgery (A90). Fifteen articles focused on narrative text analysis, 10 on clinical skills, 3 on generation of multiple choice questions (MCQs), and 1 on predictive analytics.

A notable trend involved leveraging ML as an alternative to human observation and feedback (A74, A76–A78, A83, A86, A88, A90). Many utilized virtual trainers or simulators to facilitate assessment in simulated environments. A particularly innovative approach involved cloud-based, AI-powered video analytics to monitor and assess users’ performance in minimally invasive surgery (A81). A few studies described the development of algorithms capable of discerning expertise levels or predicting surgical skill (A74–A76, A85, A86) or learning curves (A80, A84, A87, A91) based on visual or kinesthetic inputs. One study developed an ML model, integrating EEG data analysis of participants, to distinguish expert from novice surgeons (A89). These studies underscored the potential of AI to mitigate the resource intensity and inherent bias of traditional assessments.

Narrative text assessment is another area where AI is gaining ground, with sentiment analysis and NLP being common tools for extracting insights from textual data. This application of AI promises an efficient and automated analysis of extensive narrative information for enriching and contextualizing quantitative assessments such as ratings. Faculty’s narrative evaluations of residents (A55, A57, A62–A64, A67–A69) and clerkship students (A55, A56, A65) were areas where AI was deployed to inform clinical competency committees (A55), gain insights into competency development (A57, A68–A69), generate quality ratings of faculty feedback (A64, A67), facilitate objective interpretation of faculty evaluations (A65), identify learners at risk (A68), and identify biases within written student evaluations (A56, A66). NLP was further used to parse through reflective writings of students (A59), assess professionalism in short answer responses (A61), identify patterns suggestive of professionalism lapses in evaluative feedback among faculty (A60), and evaluate physician performance as it related to professionalism and patient safety (A58).

Among studies of clinical skills assessment, seven out of ten focused on UME applications. Here, VPSs were a mainstay, reducing logistical demands (e.g. time and resources) of traditional assessments, and enabling remote examinations (A42, A48–A49, A51). AI’s role extended to virtual Objective Structured Clinical Examinations (OSCEs) (A42, A49) and automated grading of OSCEs (A43–A44, A50) or both (A46, A48, A51). In GME, there were studies describing the use of a virtual provider simulator for resident training in communication skills, with automated assessment and feedback (A47) and NLP for assessment of critical care trainees’ oral case presentations (A45).

Three studies delved into AI-generated MCQs, harnessing DL and NLP to create assessment tools and study aids (A52–A54). These studies emphasized AI’s capability to streamline question development, substituting intense human efforts, though still requiring human verification to ensure quality. The benefits of AI included speed, and ChatGPT emerged as the preferred tool. Study results were mixed, however, with some skepticism of AI’s readiness to fully take over the MCQ creation process (A52–A54).

The final point to note is that predictive analytics played a notable role in evaluating surgical skills across multiple studies. However, only one study (A70) primarily focused on this aspect. This particular study investigated the prediction of medical student performance on high-stakes exams through ML. This illustrates a nascent but promising application of AI in predicting academic success in high-stakes environments.

AI to teach and assess clinical reasoning

Thirteen articles examining AI's role in teaching and assessing clinical reasoning reveal a promising frontier. Eight studies focused on the fusion of AI-powered VPSs and intelligent tutoring systems (ITSs) (A92, A95–A98, A101–A102, A104). VPSs simulate authentic patient interactions, where learners ask questions and receive answers from an avatar or via text, offering dynamic environments for learners to hone their reasoning through varied clinical scenarios. This allows learners opportunities to practice various components of the clinical reasoning process, from information gathering and hypothesis generation, to problem representation and diagnostic justification.

The ITSs complemented this by delivering personalized learning and real-time feedback, guiding learning through correct and incorrect reasoning responses (A92, A95–A97, A101–A102), and suggesting further study resources (A95). When combined with analytics (A92, A96, A101, A102), these systems could yield nuanced insights for educators and learners on performance, identifying specific areas for improvement. Six studies provided Kirkpatrick level 1 (A92, A98, A101) and/or 2b evidence supporting the use of these systems (A92, A95–A96, A101–A102).

The creation of VPS and ITS cases, a labor-intensive process, typically involved content experts. However, two studies (A101, A104) demonstrated innovative use of NLP to automate the creation of extensive case libraries (413 and 2525 cases, respectively) from actual hospital records and the New England Journal of Medicine Clinical Case Competition series. Such expansive libraries enhanced the variety of cases available to learners, addressing common challenges in teaching and assessing clinical reasoning, like case variability and the unpredictable nature of clinical encounters in real-world settings.

Further exploration into AI's utility is found in three studies that apply ML to analyze clinical reasoning in written notes (A93–A94, A99). These studies assessed different forms of clinical documentation, including post-encounter OSCE notes (A93) and diagnostic justification essays (A94) by medical students, as well as residents’ admission notes from electronic health records using a standardized rubric (IDEA tool) for clinical notes (A99). The psychometric properties and inter-rater reliability of the ML algorithms were reported as acceptable. One study achieved a kappa statistic of 0.8, meeting the reliability standard for high-stakes assessment (A93), whereas others met the standard for lower-stakes assessments. The collective findings of these studies substantiate the concept of utilizing ML to streamline assessment of clinical reasoning, thereby enhancing efficiency of scoring and significantly enriching formative feedback through assessment of clinical notes, a largely underutilized source in clinical reasoning assessment.

The final two studies in this area highlighted AI's expanding role. The first study demonstrated the use of neural networks to recognize patterns of successful student problem-solving (A100), further highlighting AI's potential in clinical reasoning assessment. The second study evaluated the effectiveness of three different LLMs at providing clinical decision support for junior doctors (A103). The authors noted that more advanced LLMs trained on medical databases, rigorously scrutinized by medical experts, could result in valuable educational tools in the future.

AI use for automation of case and procedure logs

Four of the publications detailed efforts to utilize AI/ML to automate documentation of trainee clinical and procedural experiences. In one (A107), an NLP algorithm-based system identified clinical concepts in trainee clinical notes and mapped these onto expert-defined core clinical problems, with a precision of 92.3%. A similar system based on a commercial NLP product tracked case experiences for neurology residents, with a tripling of case experiences logged in a one-month timeframe (A105). A third system (termed ‘Trove’) (A106) used an NLP algorithm to tally classes of diagnoses based on resident-created imaging impressions and to populate a dashboard for residents with accuracies ranging from 93.2 to 97.0%. Finally, a system utilizing a deep neural network with a reinforcement learning model provided suggestions for data residents needed to manually log their surgical case experiences based on EHR data and surgical case schedules (A108).
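Precision, as reported for the concept-mapping system above, is the share of the system's proposed mappings that experts judged correct. A minimal sketch follows, assuming each mapping is recorded as a (note, core problem) pair; the note identifiers, clinical problems, and function name are hypothetical and do not come from the cited study.

```python
def precision(predicted, reference):
    """Share of predicted mappings that also appear in the expert reference set."""
    predicted, reference = set(predicted), set(reference)
    if not predicted:
        raise ValueError("Precision is undefined when nothing was predicted")
    return len(predicted & reference) / len(predicted)


# Hypothetical (note_id, core_clinical_problem) pairs proposed by an NLP system.
system_mappings = [
    ("note-01", "chest pain"),
    ("note-01", "dyspnea"),
    ("note-02", "abdominal pain"),
    ("note-03", "headache"),
    ("note-03", "fever"),
]

# Hypothetical expert-confirmed mappings for the same notes.
expert_mappings = [
    ("note-01", "chest pain"),
    ("note-01", "dyspnea"),
    ("note-02", "abdominal pain"),
    ("note-03", "headache"),
    ("note-03", "syncope"),
]

value = precision(system_mappings, expert_mappings)
print(f"precision = {value:.1%}")
```

Precision alone does not show how many expert-identified problems the system missed, so it is usually read alongside recall when both are available.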

Knowledge of AI

Fifty-one of the included articles (26.7%) explored knowledge, perceptions, attitudes, hopes, and concerns related to AI in medical education.

Three studies carried out objective assessments of AI knowledge. In Spain, a study of 281 medical students used true/false questions to assess general AI knowledge, with a 65% correct response rate (A117). In Saudi Arabia, another study of 476 medical students assessed knowledge of DL, and found that 54% failed to correctly answer any of five true/false questions (A118). Across Australia, New Zealand and the United States, a third study of 245 medical students evaluated ML knowledge through MCQs, resulting in an average score of 49.7% (A120).

Self-reported knowledge of AI varied. In Western Australia, 85% of medical students claimed basic understanding of AI (A151), and 91% of pediatric ophthalmologists reported familiarity with basic AI concepts (A154). In contrast, only 31% of German medical students reported basic knowledge of AI technologies (A145), and focus group discussion showed pediatric radiology trainees at a large academic hospital struggled with AI terminology and literature appraisal (A156). A South Korean survey indicated that medical students’ primary information sources were news publications and TV (82%), social media (41%), lectures (32%), or friends or family (29%), with academic publications and books at only 22% (A127). Similarly, in a US study, 72% of medical students and 59% of faculty learned about AI from media sources (A159).

AI-related course availability was inconsistent. In Germany, 71.8% of medical schools offered AI courses, mostly as electives or extra-curricular activities (A142). However, in a sample of Canadian medical students, 85% reported no formal AI education as part of the medical degree curriculum (A146).

Attitudes towards the application of AI in clinical medicine

The studies reviewed revealed mixed attitudes toward AI in clinical medicine. Most medical students and doctors acknowledged AI's potential benefits, such as enhancing clinical judgment, research, and auditing skills, and streamlining administrative processes (A111, A117, A141). However, concerns included the ethical implications, weakened physician-patient relationships, and AI’s limitations in unexpected scenarios (A114, A119, A154, A140).

Two studies investigated medical students’ intention to use AI. One used the Unified Theory of Acceptance and Use of Technology (UTAUT) to examine the intention to use AI for diagnosis support, finding social influence to be the only key factor (A153). In the other study, intention to use AI was only observed when students had a strong belief in the role of AI in the future of the medical profession (A157).

Attitudes toward the application of AI in medical education

Two studies assessed attitudes on the use of AI in medical education. One study assessed Lebanese medical students’ views about using AI for assessment (A131). While 58% believed AI would be more objective than a human examiner, only 26.5% preferred AI assessments. Most (71%) preferred a combination of AI and humans. Another study in a Caribbean medical school found that 33% of faculty used ChatGPT, mainly for generating MCQs. The main concerns about its use were the potential for incorporation of misinformation into teaching materials and plagiarism.

Concerns and hopes for AI

Concerns about AI included its potential to reduce the demand for doctors and influence career choices, especially in radiology (A113, A115, A124, A135, A137, A147, A150). Insufficient AI training in medical curricula was another worry (A128, A143, A151). Three studies assessed aspects of AI which should be included in medical curricula, including clinical applications, algorithm development, algorithm appraisal, coding, and basics of AI, statistics, ethics, and privacy (A133, A156, A158).

Performance of LLMs on medical exams

Thirty-two studies assessed various LLMs’ abilities to perform on medical exams or on test questions drawn from large question banks (A160-A191). The LLMs tested included Microsoft’s Bing Chat, ChatGPT, GPT-3, GPT-3.5, and GPT-4, Google’s Bard, and several other pre-trained language models. They were tested on multiple choice (A160-A185, A188-A190), short answer and essay questions (A186-A187, A191), though questions with images and diagrams were typically excluded due to LLMs’ current limitations processing visuals. A few studies asked the LLM to justify its answers (A164, A168, A175-A176), which were then assessed for accuracy. LLM performance was compared to varying levels of human performance (i.e. novice to expert), as well as ‘gold standard’ answers (A160-A191). LLMs scored at or above passing thresholds on a number of local, university-derived, basic and clinical science exams (A178, A183, A184), as well as on national licensure exams (A160, A169, A180, A182, A187, A189) and specialty licensure exams (A169, A175, A181). Despite these successes, LLMs performed only modestly or poorly on other exams (A168, A170, A173-A174, A176, A190). Newer generation LLMs generally outperformed older generation models, though this was not universally true. LLMs typically performed better on general medical exams, compared to specialty or subspecialty exams, though this was also not universal. LLM performance was tested on exams from Australia (A177), Canada (A162, A174), China (A173, A190), France (A160, A170, A181), Germany (A165, A182), India (A160, A163, A183-A184, A187), Italy (A160), Japan (A189), Korea (A171, A180), Netherlands (A179), Saudi Arabia (A178), Spain (A160), Switzerland (A185), Taiwan (A173), Turkey (A166), the UK (A160, A167, A191), and the US (A160-A161, A164, A168-A169, A172-A173, A175-A176, A186, A188). In most cases, LLMs performed better on English language questions, or when questions in other languages were first translated into English. 
Their performance in other languages was highly variable (e.g. 22% accuracy in French vs. 73% in Italian; A160, A170). At this time, learners and educators should approach use of LLMs with caution due to variable accuracy (A188), though the notable improvements of newer LLMs may soon overcome this barrier. As capabilities of LLMs improve, educators must contend with concerns about the integrity of formal assessments, as well as their reliability and validity. Assessments may require redesign, and a paradigm shift towards open book tests that emphasize learners’ abilities to integrate information, rather than recall facts, may be warranted (A186).

Overview of perspectives publications included in the review

Eighty-seven perspective publications met inclusion criteria for this review, labeled P1–P87 in Supplementary Table 3. These perspectives, encompassing commentaries, editorials, and letters to the editor, offered insights on the use of AI in medical education, presenting opinions, recommendations, or examples of potential use cases of AI. We categorized these perspectives into five groups according to their primary content—general arguments for the importance of incorporating AI in medical education (n = 42); suggested educational applications or developments (n = 18); frameworks for curricular development (n = 8); recommendations for curricular content, AI competencies, or resources to support use of AI (n = 15); and ethics considerations (n = 4). Some publications had secondary content in an additional category (see Supplementary Table 3 and Supplementary Appendix 2 for additional details).

Importance of integrating AI into medical education

Many of the perspectives argued that incorporating AI in medical education is essential to prepare future physicians to apply AI to patient care (P50, P52–53, P60, P65–67, P70, P79). AI is already influencing diagnosis and treatment decisions in health care, and medical students and residents need to be ready to use AI tools effectively in the health care system. Additionally, several perspectives advocated for the use of AI to enhance teaching and learning (P55–56, P58–59, P61, P64, P69, P71, P84–86) or assessment (P48, P81). Two perspectives urged medical educators to incorporate teaching about AI’s influence and ethical and legal considerations (P57, P87). Some of the perspectives raised cautions about AI’s potential to compromise or limit clinical learning (P46, P49, P54, P63, P72–73, P82). The remaining perspectives contained general statements about the potential use of AI in medical education (P47, P49, P51, P54, P62, P68, P74–78, P80, P82–83).

Specific suggestions for educational applications and ideas for development

The 18 perspectives with specific educational applications included the following uses of AI tools in medical education:

Intelligent Tutoring Systems – AI-powered platforms providing personalized learning experiences to improve the teaching of decision-making skills (P5)

AI-assisted learner assessment – Latent Semantic Analysis to grade students’ clinical case summaries and provide feedback (P11); NLP to score clinical skills exams (P15)

ChatBot (e.g. ChatGPT) – AI processing and understanding of medical literature to teach clinical management (P10), assist with USMLE (P12) and other exam (P17) preparation, promote critical thinking, creativity, and patient communication (P8, P14, P16)

Personalized Learning Platforms – AI-driven platforms creating personalized learning paths for students (P1) and providing personalized feedback (P8)

Robot-Assisted Surgery Simulations (e.g. Virtual Reality) – AI-powered simulators for surgical training and evaluation (P13)

Enhanced anatomy education – enhancing teaching, learning, and assessment in anatomy education for deeper learning and long-term retention (P2)

AI tools to prepare applications – medical school applications (with a call for guidelines to ensure fairness in admissions) (P6) and personal statements for residency applications (P18)

AI-generated art – to enhance visual storytelling for patient encounters (P7)

Machine learning and intra-operative video analysis to improve patient care – teaching competency-based patient assessments (P9)

Frameworks for AI in medical education

Several perspectives provided frameworks to guide the incorporation of AI into medical education. An AI literacy framework for medical school described aims, opportunities, and impact of AI for selection into medical school and training programs, learning, assessment, and research (P19). A framework for radiology education described the role of AI to promote personalized learning and decision support tools (P20). A framework that emphasized digital skills provided a structure for teaching data protection, legal aspects of digital tools, digital communication, data literacy and analytics (P21). A framework for integrating AI in UME used a case-based approach with a detailed delineation of what AI content could be taught through cases (P22). A framework for early and progressive exposure to AI for medical students provided an analytics hierarchy to scaffold learning to prepare students to adapt to evolving clinical settings (P23). A framework for utilizing the rapidly expanding medical knowledge base also incorporated content about how AI relates to health care economics, regulations, and patient care (P24). An embedded AI ethics education framework provided a curricular structure for teaching the ethical dimensions of medical AI in the context of existing bioethics curricula (P25). Finally, a curriculum framework incorporated ML to build clinicians’ skills in research, applied statistics, and clinical care (P26).

Recommendations for AI curriculum content or competency, tools and resources

Fifteen perspectives offered explicit recommendations for curricular content about AI, AI competencies, and specific tools and resources that can support curriculum development for teaching about AI in medical education. Recommended content included what physicians will need to know to meet patients’ needs as AI changes the practice of medicine (P31–32), data science and concepts underlying AI (P33, P35), using AI to improve surgical outcomes (P41), surgical training for ophthalmologists (P40), AI model performance, financial implications, and generation of reports in radiology (P37–39), data science to support evidence-based medicine (P36), using AI for diagnostics and prognosis in hematology (P28), concepts underlying machine learning (P34), and ethical uses of AI in medicine (P27). One publication proposed a curriculum for a new specialty, Doctor in Medical Data Sciences (P29). One publication explained six domains of competency for effective use of AI tools in primary care, in the context of the Quintuple Aim (P30).

Ethics considerations for AI education

Fourteen perspectives addressed ethics considerations and reflected a multifaceted view of the ethical landscape AI introduces to healthcare. Many of these publications expressed caution about the limitations of AI applications in medical education. These perspectives also stressed the importance of educational approaches that equip healthcare professionals with the necessary skills to navigate AI challenges, emphasizing the irreplaceable value of human judgment and ethical reasoning in the age of AI.

Recommended focus for ethics education

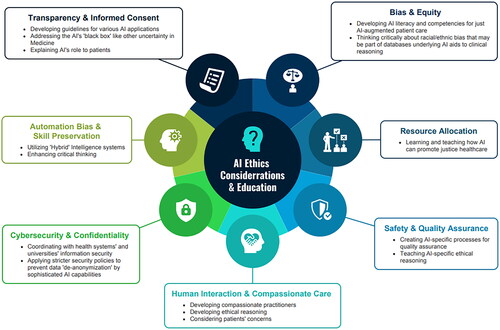

Many of the perspectives papers raised concerns about the ethics of using AI in healthcare and medical education, and discussed the responsibility of physicians to grapple with the ethical challenges that can compromise education and health care. These papers emphasized the importance of preparing current and future physicians to address these issues, while acknowledging that we have neither fully grasped the ethical implications of AI nor learned how to adequately respond to the ethical challenges. When considered collectively, these papers contribute important insights about the topics that must be addressed in medical education. Referring to these papers can provide clear guidance to medical educators about ethical issues to include in curricula. The perspectives that addressed ethics related to AI recommended ethics education on the following topics, which are summarized in the figure above:

Algorithmic Bias and Equity: Future clinicians must understand biases in health AI, especially those caused by training on non-representative data sets, which might exacerbate healthcare disparities (P42). Through an AI curriculum, physicians can reflect critically on their assumptions and the biases in both AI and clinical practice. This could help them better interpret AI outputs and recognize the limitations of current medical knowledge (P22).

Resource Allocation: AI in healthcare brings to the fore issues of just resource allocation and equity, challenging students to consider how AI can be designed and used to promote justice in healthcare (P42).

Safety and Quality Assurance: AI necessitates agreement among healthcare professionals to use AI tools responsibly and in alignment with professional obligations, such as practicing medicine safely and effectively (P25). With AI's integration into clinical practice, students must understand regulatory efforts, gaps in AI safety, and the ethical implications of using such technologies (P42).

Human Interaction and Compassionate Care: AI-based ethics training might be less likely to instill compassion in students compared to traditional methods. One publication argued that compassion can indeed be taught and that positive role models in medical education can deepen students’ understanding of compassionate practice (P44).

Data Privacy and Security: The use of AI in medicine necessitates a strong understanding of data privacy, the ethical handling of patient data, and the legal dimensions associated with AI (P42, P43). The large number of available data sets and increased power of AI capabilities make data de-anonymization (or reidentification) highly possible through cross-referencing with other data sets (P43).

Automation Bias and Skill Preservation: Clinicians must be wary of automation bias, where undue reliance on AI could lead to errors, highlighting the need for balanced trust and skepticism (P25). Ethics education must ensure AI complements, not replaces, the clinician’s role in compassionate care (P25). Training should focus on preserving clinical skills and human interactions that AI cannot replicate (P42), leveraging ‘hybrid intelligence’ systems to strengthen therapeutic patient-provider relationship and empathy (P44).

Transparency and Informed Consent: The deployment of health AI raises critical questions about informed consent and the physician’s duty to inform patients about AI's involvement in their care decisions, highlighting the need for medical students to engage in these discussions (P42). Proficiency in medical AI is intertwined with ethical competency, where ethical considerations significantly influence clinical decisions and vice versa. As future practitioners, students need to learn to identify AI’s potential consequences and how to discuss them with patients in light of ethical principles (P25). In medical education, students must be made aware of data collection, provide consent, and understand the extent and implications of their consent (P43).

Proposed educational approaches for ethics education

One recommended approach to teaching ethics about AI is the incorporation of real-life case studies in medical curricula to elucidate the multifaceted ethical issues presented by health AI, such as informed consent, bias, and transparency (P42). This case-based methodology not only enriches the theoretical understanding but also hones practical decision-making skills. An Embedded AI Ethics Education Framework, which is an incremental approach that integrates AI ethics within existing medical ethics courses, addresses the potential harms of technology misuse and equips students to conduct risk-benefit analyses fundamental to patient care (P25). A third approach uses AI scenarios currently employed in clinical practice as examples for case-based learning to prepare students to critically evaluate the technical aspects of AI alongside ethical implications (P22). This approach fosters critical reflection and encourages interdisciplinary dialogue essential for the application of AI in patient-centered care. Another approach emphasizes the limitations of AI through human-led ethics teaching, much like ethics teaching that has become commonplace in medical school curricula. This approach maintains an emphasis on human interaction and the cultivation of moral reasoning over reliance on technology, while remaining open to the potential for ‘hybrid’ intelligence systems, in which humans and machines learn from each other, resulting in superior performance in ethical decision-making. Collectively, these educational strategies underscore the necessity of equipping healthcare professionals with both technical skill and ethical discernment to navigate the complexities introduced by AI in healthcare and health professions education.

Discussion

This review embarked on a journey to map the explosion of interest in AI across the medical education landscape through the lens of published works. The detailed tables of articles and perspectives papers, summary infographics and associated narrative clarify the current state of the evidence. The first published study on AI in medical education dated back three decades to 1992, though AI has existed much longer than this. A steady flow of publications has been seen since, a testament to the enduring fascination and steady evolution of AI in medical education. The recent surge in publications may at first appear to be a growth spurt associated with maturation in the field, though our analysis suggests it is more likely a contemporaneous response to the public release of ChatGPT.

The recent advancements in AI technology have led to rapid, widespread and in many ways limitless distribution of accessible, cost-effective AI tools globally. This technological democratization is mirrored in the diverse origin of publications with almost a third from regions outside of the traditional North American or European academic hubs, more than found in most recently published BEME reviews (Daniel et al. 2022; Doyle et al. 2023; Hamza et al. 2023; Li S et al. 2023). This review reflects a shift from centralized to distributed perspectives in AI research and development, offering a more inclusive and comprehensive understanding of AI’s role in shaping medical education worldwide.

This scoping review, in charting the course of AI in medical education, reveals not just the peaks and troughs of evidence, but also an expansive change that has permeated every facet of the educational environment. When considering the impact at such a broad environmental level, our analysis, viewed through the conceptual lens of Oberg’s theory of ‘culture shock’ (Oberg 1960; Zhou et al. 2008), aligns with the theory’s four phases: Honeymoon, Frustration, Adaptation, and Acceptance. Each phase represents a distinct emotional, psychological, and behavioral response to AI integration, manifesting variably across different areas and individuals.

The initial ‘Honeymoon’ phase is characterized by a mixture of fascination and idealism, often reflected in perspective and innovation pieces. These works, often written by experts, provide valuable insights, useful resources and some directions or foundations to move the field forward. They sometimes portray an overly optimistic view of AI’s potential, lacking detailed exploration of challenges and ethical considerations.

In contrast, the ‘Frustration’ phase has been seen in evidence of attitudes of feeling overwhelmed, fear related to lack of understanding, and feeling lost and out of place. These concerns are not only valid but a necessary step in progressing towards acceptance of such a shock in the system. The ethical considerations and challenges related to specific applications of AI are key to consider. Balancing these aspects is crucial for sustainable implementation before the next phase.

The ambivalence observed in a large group of studies examining NLP, mostly ChatGPT, for medical exams (A160–A191) exemplifies the non-linear and individual nature of cultural adaptation. This straddling of both Honeymoon and Frustration phases reflects a duality: awe of AI's capabilities juxtaposed with apprehension about its implications, particularly in reducing the need for traditional medical education methods. We draw attention to this group of publications not just as an interesting example, but to highlight that further studies of this form do not appear justified unless they offer an innovative or different perspective.

As the initial frustrations and challenges of AI integration begin to crystallize, we transition into the ‘Adaptation’ phase. Educators begin to find practical and effective ways to incorporate AI tools. This phase is marked by the pragmatic ‘use cases’ of AI, showing a shift from initial awe and skepticism towards AI to a more balanced and functional integration of these technologies. However, discussions around these use cases in perspective pieces often lack depth in building a longitudinal or iterative understanding, which is critical for effective integration.

Finally, in the ‘Acceptance’ phase, we witness a transformation in the educators’ relationship with AI, evolving from mere adaptation to a state of genuine integration and comfort. This phase signifies a shift where AI is no longer viewed as an external, disruptive force but as an integral part of the educational blueprint. The subset of publications exhibits a deep understanding and skillful utilization of AI, seamlessly incorporating these technologies into their wide-ranging educational developments, characterized by a blend of creativity, critical thinking, and a forward-looking perspective that acknowledges the potential of AI while remaining cognizant of its limitations and ethical considerations. This mature phase of acceptance is not merely about recovery from the initial ‘shock’ of AI integration; it represents a proactive, thoughtful embrace of these technologies. These educators have become pioneers in a true sense, setting benchmarks for how AI can be harmoniously and innovatively integrated into medical education. Their experiences and practices offer valuable insights for the broader educational community, illustrating a roadmap from apprehension to acceptance, a journey that is as much about personal and professional growth as it is about technological adoption.

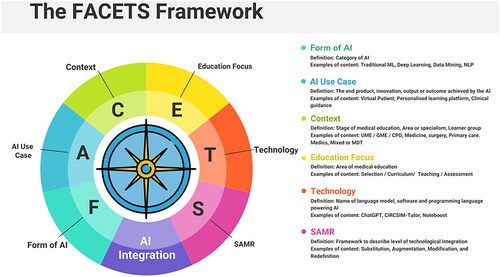

In the process of extracting and charting the data, we have identified critical gaps in how a study is reported, with a need for a ‘compass’ to navigate the roadmap in AI applications in medical education. Here, addressing gaps involves a shift from mere reporting of disparate items, such as educational contexts, stages, or AI technologies, to a comprehensive framework that supports dissemination, replication, and innovation through several elements. We propose the FACETS framework, presented in the figure above, as a structured approach for future AI studies in medical education, focusing on key elements: form, use case, context, education form, technology, and SAMR framework for technology integration. Our review reveals that these elements are commonly present in the literature, but they are typically reported in isolation, not cohesively in a single publication. Bringing together these disparate elements in a more systematic and unified manner is valuable in explaining to both educators and education researchers the specific details of the AI being employed, their relevance to past practice, and how they can be integrated into the educational context. The framework is designed not only to guide authors in conceptualizing their works but also to help readers grasp the full scope and impact of AI in medical education. This approach is essential for advancing understanding and innovation in the field, forming a core recommendation from the author team for future research.

This framework cannot be applied to perspective pieces or attitudes surveys, but the use of this framework by authors of works published regarding any AI use-case or broader innovation will be of significant benefit. The FACETS framework may aid in identifying converging areas of research and serve as a useful guide for more focused syntheses in the future.
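As a purely illustrative aid, the six FACETS elements named above could be captured as a structured record that flags which elements a study report leaves out. The sketch below is a minimal example under stated assumptions: the class, field, and function names are hypothetical, the source does not specify how each element should be coded, and the SAMR levels (substitution, augmentation, modification, redefinition) follow the commonly cited form of that model. The example values are invented for illustration and do not describe any included study.

```python
from dataclasses import dataclass, fields
from enum import Enum
from typing import List, Optional


class SAMR(Enum):
    """Levels of the SAMR model of technology integration."""
    SUBSTITUTION = "substitution"
    AUGMENTATION = "augmentation"
    MODIFICATION = "modification"
    REDEFINITION = "redefinition"


@dataclass
class FacetsReport:
    """Hypothetical record of the six FACETS elements for one study.

    Field names are assumptions made for this sketch; free text is used
    because the source does not prescribe a coding scheme.
    """
    form: Optional[str] = None
    use_case: Optional[str] = None
    context: Optional[str] = None
    education_form: Optional[str] = None
    technology: Optional[str] = None
    samr: Optional[SAMR] = None


def missing_elements(report: FacetsReport) -> List[str]:
    """Return the names of FACETS elements left unreported (None or empty)."""
    return [
        f.name
        for f in fields(report)
        if getattr(report, f.name) in (None, "")
    ]


# Illustrative values only: a report that describes three of six elements.
example = FacetsReport(
    use_case="automated logging of case experiences",
    context="residency training",
    technology="natural language processing",
)
print(missing_elements(example))  # ['form', 'education_form', 'samr']
```

A check of this kind would only indicate whether each element has been reported in some form; judging whether the description is adequate remains a matter for authors and reviewers.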

As this review was rigorous, systematic, and wide ranging in true alignment with the scoping tradition, we do not believe further scoping reviews are indicated. However, given the velocity of output, more in-depth systematic reviews will soon be indicated in the areas of admissions, teaching, assessment, and clinical reasoning. Another area where a systematic review may be warranted was out of scope for this review, namely, AI use in medical education research. Given the huge untapped potential of big data in educational institutions, this is a key area for further work.

Limitations of this review include the limitations of the scoping tradition, which, by definition, does not synthesize evidence (Levac et al. 2010). Further limitations include subjectivity in categorizing studies (e.g. some studies could be classified as both teaching and assessment), as well as issues with consistent terminology, which underscore the complexity of navigating a rapidly advancing field like AI in medical education. The review iterated the search on a number of occasions in the early phases, reflecting the vast and evolving scope of AI literature. In light of this, studies may have been missed, despite systematic and rigorous methods. The inclusion of perspective articles, while unconventional for BEME reviews, offers a diverse perspective on AI in medical education. However, this inclusion may be perceived as diluting the empirical strength of the review. Thus, a decision was made to present their synthesis transparently as a separate parallel data set within the results, as there was much value in clarifying what these articles offered (and did not offer). We did not perform the sixth stage of the Arksey and O’Malley scoping review framework. While we had AI and education experts on the author team who fulfilled this role, skipping the external expert consultation step could be viewed as a limitation.

Implications for practice and policy

This review underscores the transformative role of AI in medical education, necessitating an integration of AI into various aspects like curriculum development, teaching methodologies, and learner assessment to maintain alignment with technological advancements. It calls for updating competency frameworks and establishing standardized guidelines for AI usage in education. The need for collaborative efforts between educators, policymakers, and AI developers is highlighted to ensure effective integration and address equity and accessibility issues, ensuring AI tools are available to all without bias. Continuous investment in resources and time is essential to transition from initial fascination with AI to its profound adoption in educational settings. The review also stresses managing risks associated with AI, including ethical concerns and potential misuse. Crucially, while leveraging AI for new insights and efficiencies, the irreplaceable human element in healthcare must be preserved. Ethical education is vital, ensuring AI enhances rather than replaces the healthcare provider’s role, upholding patient-provider relationships, empathy, and respect for patient autonomy. This approach prevents the dehumanization of care and ensures AI's use bolsters rather than undermines the core human skills and values central to the medical profession.

Implications for future research

The review highlights several key areas for future research and educational development. It underscores the need for comprehensive studies on the long-term impacts of AI, both on the overall landscape of medical education and specific learning outcomes. Additionally, there is a call for research addressing potential risks associated with AI, such as automation bias, over- and under-skilling, exacerbating disparities, and the preservation of essential clinical skills. It also recommends employing the FACETS framework for future AI studies in medical education. This comprehensive approach to research and development will ensure that AI is integrated into medical education in a manner that is ethically sound, educationally effective, and cognizant of the potential risks and challenges.

Conclusions

The landscape of AI in medical education, as charted in this review, spans a wide array of stages, specialties, purposes, and use cases, primarily reflecting early adaptation phases; only a few publications describe more in-depth employment for longitudinal or deep change. The proposed FACETS framework is a key outcome, offering a structured approach for future research and practice. While no immediate areas for systematic review are identified, the dynamic nature of AI in education necessitates ongoing vigilance and adaptability.

Disclosure statement

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the article.

Additional information

Funding

Notes on contributors

Morris Gordon

Morris Gordon, MBChB, PhD, MMed, is Co-chair of the BEME Council, Coordinating Editor of the Cochrane Gut Group, Professor of Evidence Synthesis and Systematic Review and a Consultant Pediatrician in the North West of the UK.

Michelle Daniel

Michelle Daniel, MD, MHPE, is Co-chair of the BEME Council, Associate Editor for Medical Teacher, Vice Dean for Medical Education and Clinical Professor of Emergency Medicine at the University of California, San Diego School of Medicine, La Jolla, California, USA.

Aderonke Ajiboye

Aderonke Ajiboye, BDS, MPH, is a Researcher at the School of Medicine and Dentistry, University of Central Lancashire.

Hussein Uraiby

Hussein Uraiby, MBChB, MMedEd, FHEA, is a Specialty Trainee in Histopathology at the University Hospitals of Leicester NHS Trust, Leicester, UK.

Nicole Y. Xu

Nicole Y. Xu is a medical student at the University of California, San Diego School of Medicine, La Jolla, California, USA.

Rangana Bartlett

Rangana Bartlett is a recent graduate of the University of California, San Diego and current research assistant at the Icahn School of Medicine at Mount Sinai, New York, New York, USA.

Janice Hanson

Janice Hanson, PhD, EdS, MH, is Professor of Medicine, Co-Director of the Medical Education Research Unit, and Director of Education Scholarship Development at Washington University in Saint Louis, Missouri, USA.

Mary Haas

Mary Haas, MD, MHPE, is Assistant Residency Program Director and Assistant Professor, Department of Emergency Medicine, University of Michigan Medical School, Ann Arbor, Michigan, USA.

Maxwell Spadafore

Maxwell Spadafore, MD, has expertise in AI and informatics and is a Chief Resident, Department of Emergency Medicine, University of Michigan Medical School, Ann Arbor, Michigan, USA.

Ciaran Grafton-Clarke

Ciaran Grafton-Clarke, MBChB, is an NIHR Academic Clinical Fellow in Cardiology, University of East Anglia, Norwich, UK.

Rayhan Yousef Gasiea

Rayhan Yousef Gasiea, MBBS, is a junior clinical fellow in medical education at Blackpool Hospitals NHS Foundation Trust.

Colin Michie

Colin Michie, FRCPCH, FRSPH, FLS, RNutr, is Associate Dean for Research and Knowledge Exchange at the School of Medicine and Dentistry, University of Central Lancashire, Preston, UK.

Janet Corral

Janet Corral, EdD, is Professor of Medicine and Associate Dean of Undergraduate Medical Education at the University of Nevada School of Medicine, Reno, Nevada, USA.

Brian Kwan

Brian Kwan, MD, has advanced training in informatics and is an Assistant Clinical Professor and Medical Director for Education Informatics at UC San Diego School of Medicine, USA.

Diana Dolmans

Diana Dolmans, PhD, is a Professor and Chair of the Department of Educational Development & Research, staff member of the School of Health Professions Education, Faculty of Health, Medicine and Life Sciences, Maastricht University, Maastricht, NL.

Satid Thammasitboon

Satid Thammasitboon, MD, MHPE, is Director of the Center for Research, Innovation and Scholarship in Health Professions Education, Texas Children’s Hospital and Baylor College of Medicine, Houston, Texas, USA, and member of the BEME Council.

References

- Abbott KL, George BC, Sandhu G, Harbaugh CM, Gauger PG, Ötleş E, Matusko N, Vu JV. 2021. Natural language processing to estimate clinical competency committee ratings. J Surg Educ. 78(6):2046–2051. doi: 10.1016/j.jsurg.2021.06.013.

- Abd-Alrazaq A, AlSaad R, Alhuwail D, Ahmed A, Healy PM, Latifi S, Aziz S, Damseh R, Alrazak SA, Sheikh J. 2023. Large language models in medical education: opportunities, challenges, and future directions. JMIR Med Educ. 9(1):e48291. doi: 10.2196/48291.

- Abdellatif H, Al Mushaiqri M, Albalushi H, Al-Zaabi AA, Roychoudhury S, Das S. 2022. Teaching, learning and assessing anatomy with artificial intelligence: the road to a better future. Int J Environ Res Public Health. 19(21):14209. doi: 10.3390/ijerph192114209.

- Abid S, Awan B, Ismail T, Sarwar N, Sarwar G, Tariq M, Naz S, Ahmed A, Farhan M, Uzair M, et al. 2019. Artificial intelligence: medical students’ attitude in district Peshawar Pakistan. PJPH. 9(1):19–21. doi: 10.32413/pjph.v9i1.295.

- Afzal S, Dhamecha TI, Gagnon P, Nayak A, Shah A, Carlstedt-Duke J, Pathak S, Mondal S, Gugnani A, Zary N, et al. 2020. AI medical school tutor: modelling and implementation. AIME 2020. Proceedings of the 18th International Conference on Artificial Intelligence in Medicine; Aug 25–28; Minnesota. Springer International Publishing; p. 133–145. doi: 10.1007/978-3-030-59137-3_13.

- Agarwal M, Sharma P, Goswami A. 2023. Analysing the applicability of ChatGPT, Bard, and Bing to generate reasoning-based multiple-choice questions in medical physiology. Cureus. 15(6):e40977. doi: 10.7759/cureus.40977.

- Agha-Mir-Salim L, Mosch L, Klopfenstein SA, Wunderlich MM, Frey N, Poncette AS, Balzer F. 2022. Artificial intelligence competencies in postgraduate medical training in Germany. Challenges of trustable AI and added-value on health [e-book]. Amsterdam: IOS Press; p. 805–806. doi: 10.3233/shti220589.

- Ahmed Z, Bhinder KK, Tariq A, Tahir MJ, Mehmood Q, Tabassum MS, Malik M, Aslam S, Asghar MS, Yousaf Z. 2022. Knowledge, attitude, and practice of artificial intelligence among doctors and medical students in Pakistan: a cross-sectional online survey. Ann Med Surg. 76:103493. doi: 10.1016/j.amsu.2022.103493.

- Ahmer H, Altaf SB, Khan HM, Bhatti IA, Ahmad S, Shahzad S, Naseem S. 2023. Knowledge and perception of medical students towards the use of artificial intelligence in healthcare. J Pak Med Assoc. 73(2):448–451. doi: 10.47391/jpma.5717.

- Ahuja AS, Polascik BW, Doddapaneni D, Byrnes ES, Sridhar J. 2023. The digital metaverse: applications in artificial intelligence, medical education, and integrative health. Integr Med Res. 12(1):100917. doi: 10.1016/j.imr.2022.100917.

- Alamer A. 2023. Medical students’ perspectives on artificial intelligence in radiology: the current understanding and impact on radiology as a future specialty choice. Curr Med Imaging. 19(8):921–930. doi: 10.2174/1573405618666220907111422.

- Aldeman NL, de Sá Urtiga Aita KM, Machado VP, da Mata Sousa LC, Coelho AG, da Silva AS, da Silva Mendes AP, de Oliveira Neres FJ, do Monte SJ. 2021. Smartpathk: a platform for teaching glomerulopathies using machine learning. BMC Med Educ. 21(1):248. doi: 10.1186/s12909-021-02680-1.

- Alfertshofer M, Hoch CC, Funk PF, Hollmann K, Wollenberg B, Knoedler S, Knoedler L. 2023. Sailing the seven seas: a multinational comparison of ChatGPT’s performance on medical licensing examinations. Ann Biomed Eng. 8:1–4. doi: 10.1007/s10439-023-03338-3.

- Ali R, Tang OY, Connolly ID, Sullivan PL, Shin JH, Fridley JS, Asaad WF, Cielo D, Oyelese AA, Doberstein CE, et al. 2023. Performance of ChatGPT and GPT-4 on neurosurgery written board examinations. Neurosurgery. 93(6):1353–1365. doi: 10.1227/neu.0000000000002632.

- Alonso-Silverio GA, Pérez-Escamirosa F, Bruno-Sanchez R, Ortiz-Simon JL, Muñoz-Guerrero R, Minor-Martinez A, Alarcón-Paredes A. 2018. Development of a laparoscopic box trainer based on open source hardware and artificial intelligence for objective assessment of surgical psychomotor skills. Surg Innov. 25(4):380–388. doi: 10.1177/1553350618777045.

- Al Saad MM, Shehadeh A, Alanazi S, Alenezi M, Abu Alez A, Eid H, Alfaouri MS, Aldawsari S, Alenezi R. 2022. Medical students’ knowledge and attitude towards artificial intelligence: an online survey. TOPHJ. 15(1):1–8. doi: 10.2174/18749445-v15-e2203290.

- Alverson DC, Saiki SM, Jr, Caudell TP, Goldsmith T, Stevens S, Saland L, Colleran K, Brandt J, Danielson L, Cerilli L. 2006. Reification of abstract concepts to improve comprehension using interactive virtual environments and a knowledge-based design: a renal physiology model. Stud Health Technol Inform. 119:13–18.

- AlZaabi A, AlMaskari S, AalAbdulsalam A. 2023. Are physicians and medical students ready for artificial intelligence applications in healthcare? Digit Health. 9:20552076231152167. doi: 10.1177/20552076231152167.

- Antaki F, Touma S, Milad D, El-Khoury J, Duval R. 2023. Evaluating the performance of ChatGPT in ophthalmology: an analysis of its successes and shortcomings. Ophthalmol Sci. 3(4):100324. doi: 10.1016/j.xops.2023.100324.

- Arksey H, O’Malley L. 2005. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 8(1):19–32. doi: 10.1080/1364557032000119616.

- Armitage RC. 2023. ChatGPT: the threats to medical education. Postgrad Med J. 99(1176):1130–1131. doi: 10.1093/postmj/qgad046.

- Arora VM. 2018. Harnessing the power of big data to improve graduate medical education: big idea or bust? Acad Med. 93(6):833–834. doi: 10.1097/acm.0000000000002209.

- Asghar A, Patra A, Ravi KS. 2022. The potential scope of a humanoid robot in anatomy education: a review of a unique proposal. Surg Radiol Anat. 44(10):1309–1317. doi: 10.1007/s00276-022-03020-8.

- Aubignat M, Diab E. 2023. Artificial intelligence and ChatGPT between worst enemy and best friend: the two faces of a revolution and its impact on science and medical schools. Rev Neurol. 179(6):520–522. doi: 10.1016/j.neurol.2023.03.004.

- Auloge P, Garnon J, Robinson JM, Dbouk S, Sibilia J, Braun M, Vanpee D, Koch G, Cazzato RL, Gangi A. 2020. Interventional radiology and artificial intelligence in radiology: is it time to enhance the vision of our medical students? Insights Imaging. 11(1):127. doi: 10.1186/s13244-020-00942-y.

- Ayub I, Hamann D, Hamann CR, Davis MJ. 2023. Exploring the potential and limitations of chat generative pre-trained transformer (ChatGPT) in generating board-style dermatology questions: a qualitative analysis. Cureus. 15(8):e43717. doi: 10.7759/cureus.43717.

- Bakshi SK, Lin SR, Ting DS, Chiang MF, Chodosh J. 2021. The era of artificial intelligence and virtual reality: transforming surgical education in ophthalmology. Br J Ophthalmol. 105(10):1325–1328. doi: 10.1136/bjophthalmol-2020-316845.

- Baloul MS, Yeh VJ, Mukhtar F, Ramachandran D, Traynor MD, Jr, Shaikh N, Rivera M, Farley DR. 2022. Video commentary & machine learning: tell me what you see, I tell you who you are. J Surg Educ. 79(6):e263–e272. doi: 10.1016/j.jsurg.2020.09.022.

- Banerjee M, Chiew D, Patel KT, Johns I, Chappell D, Linton N, Cole GD, Francis DP, Szram J, Ross J, et al. 2021. The impact of artificial intelligence on clinical education: perceptions of postgraduate trainee doctors in London (UK) and recommendations for trainers. BMC Med Educ. 21(1):429. doi: 10.1186/s12909-021-02870-x.

- Bansal M, Jindal A. 2022. Artificial intelligence in healthcare: should it be included in the medical curriculum? A students’ perspective. Natl Med J India. 35(1):56–58. doi: 10.25259/nmji_208_20.

- Barreiro-Ares A, Morales-Santiago A, Sendra-Portero F, Souto-Bayarri M. 2023. Impact of the rise of artificial intelligence in radiology: what do students think? Int J Environ Res Public Health. 20(2):1589. doi: 10.3390/ijerph20021589.

- Bhanvadia S, Saseendrakumar BR, Guo J, Daniel M, Lander L, Baxter SL. 2022. Evaluation of bias in medical student clinical clerkship evaluations using natural language processing. Acad Med. 97(11S):S154. doi: 10.1097/ACM.0000000000004807.

- Bhattacharya P, Van Stavern R, Madhavan R. 2010. Automated data mining: an innovative and efficient web-based approach to maintaining resident case logs. J Grad Med Educ. 2(4):566–570. doi: 10.4300/jgme-d-10-00025.1.

- Bin Dahmash A, Alabdulkareem M, Alfutais A, Kamel AM, Alkholaiwi F, Alshehri S, Al Zahrani Y, Almoaiqel M. 2020. Artificial intelligence in radiology: does it impact medical students preference for radiology as their future career? BJR Open. 2(1):20200037. doi: 10.1259/bjro.20200037.

- Bisdas S, Topriceanu CC, Zakrzewska Z, Irimia AV, Shakallis L, Subhash J, Casapu MM, Leon-Rojas J, Pinto dos Santos D, Andrews DM, et al. 2021. Artificial intelligence in medicine: a multinational multi-center survey on the medical and dental students’ perception. Front Public Health. 9:795284. doi: 10.3389/fpubh.2021.795284.

- Blacketer C, Parnis R, Franke KB, Wagner M, Wang D, Tan Y, Oakden-Rayner L, Gallagher S, Perry SW, Licinio J, et al. 2021. Medical student knowledge and critical appraisal of machine learning: a multicentre international cross-sectional study. Intern Med J. 51(9):1539–1542. doi: 10.1111/imj.15479.

- Blease C, Kharko A, Bernstein M, Bradley C, Houston M, Walsh I, Mandl KD. 2023. Computerization of the work of general practitioners: mixed methods survey of final-year medical students in Ireland. JMIR Med Educ. 9(1):e42639. doi: 10.2196/42639.

- Blease C, Kharko A, Bernstein M, Bradley C, Houston M, Walsh I, Hägglund M, DesRoches C, Mandl KD. 2022. Machine learning in medical education: a survey of the experiences and opinions of medical students in Ireland. BMJ Health Care Inform. 29(1):e100480. doi: 10.1136/bmjhci-2021-100480.

- Boillat T, Nawaz FA, Rivas H. 2022. Readiness to embrace artificial intelligence among medical doctors and students: questionnaire-based study. JMIR Med Educ. 8(2):e34973. doi: 10.2196/34973.

- Bond WF, Zhou J, Bhat S, Park YS, Ebert-Allen RA, Ruger RL, Yudkowsky R. 2023. Automated patient note grading: examining scoring reliability and feasibility. Acad Med. 98(11S):S90–S97. doi: 10.1097/acm.0000000000005357.

- Booth GJ, Ross B, Cronin WA, McElrath A, Cyr KL, Hodgson JA, Sibley C, Ismawan JM, Zuehl A, Slotto JG, et al. 2023. Competency-based assessments: leveraging artificial intelligence to predict subcompetency content. Acad Med. 98(4):497–504. doi: 10.1097/acm.0000000000005115.

- Borchert RJ, Hickman CR, Pepys J, Sadler TJ. 2023. Performance of ChatGPT on the situational judgement test - a professional dilemmas–based examination for doctors in the United Kingdom. JMIR Med Educ. 9(1):e48978. doi: 10.2196/48978.

- Boscardin CK, Gin B, Golde PB, Hauer KE. 2023. ChatGPT and generative artificial intelligence for medical education: potential impact and opportunity. Acad Med. 99(1):22–27. doi: 10.1097/acm.0000000000005439.

- Brandes GIG, D'Ippolito G, Azzolini AG, Meirelles G. 2020. Impact of artificial intelligence on the choice of radiology as a specialty by medical students from the city of São Paulo. Radiol Bras. 53(3):167–170. doi: 10.1590/0100-3984.2019.0101.

- Brown JD, Kuchenbecker KJ. 2023. Effects of automated skill assessment on robotic surgery training. Int J Med Robot Comput Assist Surg. 19(2):e2492. doi: 10.1002/rcs.2492.

- Buabbas AJ, Miskin B, Alnaqi AA, Ayed AK, Shehab AA, Syed-Abdul S, Uddin M. 2023. Investigating students’ perceptions towards artificial intelligence in medical education. Healthcare. 11(9):1298. doi: 10.3390/healthcare11091298.

- Burk-Rafel J, Reinstein I, Feng J, Kim MB, Miller LH, Cocks PM, Marin M, Aphinyanaphongs Y. 2021. Development and validation of a machine learning-based decision support tool for residency applicant screening and review. Acad Med. 96(11S):S54–S61. doi: 10.1097/acm.0000000000004317.

- Busch F, Adams LC, Bressem KK. 2023. Biomedical ethical aspects towards the implementation of artificial intelligence in medical education. Med Sci Educ. 33(4):1007–1012. doi: 10.1007/s40670-023-01815-x.

- Çalışkan SA, Demir K, Karaca O. 2022. Artificial intelligence in medical education curriculum: an e-Delphi study for competencies. PLoS One. 17(7):e0271872. doi: 10.1371/journal.pone.0271872.

- Caparros GC, Portero FS. 2022. Medical students’ perceptions of the impact of artificial intelligence in radiology. Radiología. 64(6):516–524. doi: 10.1016/j.rx.2021.03.006.

- Carin L. 2020. On artificial intelligence and deep learning within medical education. Acad Med. 95(11S Association of American Medical Colleges Learn Serve Lead: proceedings of the 59th Annual Research in Medical Education Presentations):S10–S11. doi: 10.1097/acm.0000000000003630.

- Chai SY, Hayat A, Flaherty GT. 2022. Integrating artificial intelligence into haematology training and practice: opportunities, threats and proposed solutions. Br J Haematol. 198(5):807–811. doi: 10.1111/bjh.18343.

- Chen H, Gangaram V, Shih G. 2019. Developing a more responsive radiology resident dashboard. J Digit Imaging. 32(1):81–90. doi: 10.1007/s10278-018-0123-6.