ABSTRACT

The role of Earth observation (EO) data in addressing societal problems, from environmental through to humanitarian, should not be understated. Recent innovation in EO has brought analysis ready data and data cubes, which allow for rapid use of EO data. This, in combination with processing technologies such as Google Earth Engine and open source algorithms/software for EO data integration and analyses, has afforded an explosion of information to answer research questions and/or inform policy making. However, there is still a need for both training and validation data within EO projects, and these can be challenging to obtain. It has been suggested that citizen science can help to provide these data, yet there is some perceived hesitancy in using citizen science within EO projects. This paper reports on the Citizen Science 4 EO (Citizens4EO) project, which aimed to obtain an in-depth understanding of researchers’ and practitioners’ experiences with citizen science data in EO within the UK. Through a mixed methods approach (online and in-depth surveys and a spotlight case study) it was found that although the benefits of using citizen science data in EO projects were many (and highlighted in the spotlighted “Slavery from Space” case study), there were a number of common concerns around using citizen science. These concerned the mechanics of deploying citizen science and the unreliability of a potentially misinformed or undertrained citizen base. Comparing the results of this study with those of a similar survey undertaken in 2016, it is apparent that progress towards optimizing citizen science use in EO has been incremental but positive, with evidence that the benefits of citizen science for EO are being realized. We conclude by offering priority action areas to support further use of citizen science by the EO community within the UK, which ultimately should be adopted further afield.

1. Introduction

Remote sensing for Earth Observation (EO) data, and their derived products, are readily suggested as a way to meet the data gap hindering progress towards meeting the UN Sustainable Development Goals (SDGs) (Kavvada et al. Citation2020). Already a good 5 years along an ambitious timeline (now less than a decade until 2030), the need is clear for a knowledge-base that is accurate, timely and flexible, both to produce underpinning research and innovation and to monitor and track the progress of all nation states towards these global goals. The Group on Earth Observations (GEO) and its space-agency arm, the Committee on Earth Observation Satellites (CEOS), have presented an initial view as to how EO can support the population of SDG indicators, and the GEO EO4SDG programme supported the potential of EO to advance the United Nations 2030 Agenda (http://eohandbook.com/sdg/). CEOS has created an ad hoc team on the SDGs to better coordinate the activities of the CEOS agencies around the SDGs. GEO is working closely with the United Nations Statistics Division, the United Nations Committee of Experts on Global Geospatial Information Management, and the United Nations Sustainable Development Solutions Network (Anderson et al. Citation2017). Usefully, Andries et al. (Citation2018) used a Maturity Matrix Framework to assess the potential of EO approaches to populate the 232 indicators towards the SDGs. They demonstrate that satellite EO derived data can make a substantial contribution in supporting progress towards many of the SDGs, including those that are more socio-economic in nature. There is also potential to develop indicators outside the established set of SDG indicators that may be more amenable to the use of EO-derived data. Hence, maximizing how EO data and associated derived products are used is of global importance.

Big strides have been made within the field of EO to provide analysis ready data (ARD) and data cubes, which allow for rapid use of EO data and reduce the need for pre-processing and specific image processing expertise. This, in combination with processing technologies such as Google Earth Engine, Microsoft’s Planetary Computer and the Sentinel Hub Playground, and other open source algorithms/software for EO data integration and analyses, has afforded an explosion of information to answer research questions or be used in policy making (Zhao et al. Citation2021). However, there is an important caveat: to fully exploit the information carried in EO data (ARD or otherwise) there is often a need for both training and validation data. This can be an expensive component of any EO project (López-Lozano and Baruth Citation2019). Common approaches to reducing the costs associated with underpinning data acquisition have been to employ intelligent methods (e.g. intelligent training – Mathur and Foody Citation2008) or community initiatives between scientists (e.g. The Forest Observation System – Schepaschenko et al. Citation2019), but these have achieved varying degrees of success and maturity.

An alternative and very promising approach to providing underpinning training/validation data, yet one that is currently much underexploited within EO, is to look to the public to provide these necessary data, or to supplement the data collected by those with scientific authority. As a non-traditional data source, the virtues of so-called citizen science have already been stated and the concept of volunteered geographical information (VGI) has gained great traction (Goodchild and Glennon Citation2010; Fritz, Fonte and See Citation2017; Callaghan et al. Citation2019). The use of citizen scientists to conduct scientific work, performed partially or completely by usually non-expert volunteers, has dramatically increased of late in a number of fields. Traditional areas of citizen science activities, such as bird watching, are enhanced by current technological and societal activities. Further, the information that is provided by citizen scientists can now be more easily verified and tested, and therefore be integrated into research projects faster. However, despite the inherent benefits of using citizen science for data collection, its use within the field of EO has not moved apace with this trend, even though a number of commentaries have recognized and suggested an important role for citizen science in EO science (Hakley et al. Citation2018; Muzumdar, Wrigley and Ciravegna Citation2017; Kosmala et al. Citation2016a). For instance, the Horizon 2020 Space Advisory Group’s Advice on potential priorities for R&I in the Work Programme 2016–2017 notes the importance of crowdsourcing and citizen science and involvement of the public as significant. Several research projects ensued, e.g. the NASA Roses Program’s Citizen Science for Earth Systems; the ESA’s Crowd4Sat project; and the EU’s Horizon 2020 Landsense project.

A review of the papers published in 2020 using Web of Science revealed that, of all papers published in the prominent EO journals International Journal of Remote Sensing, Remote Sensing and Remote Sensing of Environment, only 10 mentioned citizen science (found by searching for the term “citizen science” in all fields). Data collected via citizen science approaches are often notoriously difficult to adopt into daily operational activities, particularly given the potentially noisy nature of the data. Redundancies and gaps arising from human behaviour and the uncertain and potentially unreliable nature of participants often complicate the process of embedding such data into highly structured and organized processes. A primary issue stems from the very nature of citizen science – relying on the goodwill and voluntary interests of citizens and communities. Whilst the proliferation of citizen science and crowdsourcing platforms makes it relatively easy to organize projects, citizen interests are highly dynamic. Initiatives that are highly topical can generate high volumes of data (e.g. Tomnod’s MH 370 crowdsourcing website had several million contributors - https://www.theguardian.com/world/2014/mar/14/tomnod-online-search-malaysian-airlines-flight-mh370), but are at risk of quickly losing public interest. The ‘non-expert’ nature of participants often further increases concerns regarding data quality and accuracy. Moreover, the nature of participation is non-standard and, with the wide variety of opportunities for participation, it is often difficult to establish a formalized process to robustly operationalize ad hoc voluntary inputs such as data from citizen scientists.

Despite these noted concerns, experts surveyed as part of the aforementioned Crowd4Sat project shared a positive outlook on the future of citizen science in the scientific community, though use of citizen science data within EO was limited: the intent was evident but the practice was not. Others view citizen science as a crucial part of EO - Grainger (Citation2017) advocates that citizen observatories are the next phase in the evolution of EO science, and over the past couple of years a number of projects have sought to build a fuller integration of citizens within EO (e.g. Karagiannopoulou et al. Citation2022). Furthermore, there is a growing body of work that specifically focuses on addressing the concerns (Foody et al. Citation2016). It could be argued that the time is right for a measurable shift in the uptake of citizen science within EO.

This paper presents findings from a UK-focused project (Citizens4EO) which had the ambitious aim of moving the EO community towards a position of optimal uptake in the use of citizen science data within projects that are EO data-focused. Project objectives were: (i) to understand how the UK EO community views the challenges, opportunities, and pitfalls in using citizen science produced underpinning data in their projects; (ii) to produce demonstrator case studies and start building a community of intent around using citizen science data within the UK; and (iii) to suggest a roadmap for future activity. Specifically, the results of an online survey and in-depth interviews are presented, as is an in-depth case study of how citizen science has been used to support a satellite-EO project (the “Slavery from Space” project), with a focus on two concerns raised by the online survey: data quality and citizen motivation. From these findings a series of actions to encourage further uptake of citizen science within EO projects are presented.

2. Methods

2.1. Online survey

An online survey, based on previous work by Muzumdar, Wrigley and Ciravegna (Citation2017), was distributed to the UK EO community through active networks (e.g. the Remote Sensing and Photogrammetry Society (RSPSoc) and the Natural Environment Research Council’s National Centre for Earth Observation (NCEO)). The online survey aimed to gain an impression of how citizen science is used or could be used across the UK’s EO community. Questions focused on participant experience and background; how citizen science was seen to be used (or not) in the general EO community within various sectors (e.g. research and development; commercial and public services); the main mechanisms of engagement of citizens for EO projects; the identification of impact, benefits and opportunities presented by use of citizen science data; any barriers to use; and whether COVID-19 had impacted on views of citizen science for EO.

By building on the previous survey by Muzumdar, Wrigley and Ciravegna (Citation2017) as part of the Crowd4Sat project, the 2020 survey afforded an insight into recent adoption (or not) of citizen science in EO. Specifically, the survey aimed to capture information on how the stakeholders expect citizen science to be employed in the near future in three different settings: Scientific (as deployers), Commercial (as deployers) and Societal (citizens, authorities, civil protection, tourism and science and academia). Adoption was categorized into short (<3 years), medium (3–5 years) and long term (5–15 years), by different types of users such as innovators, early adopters, early majority, late majority and laggards (Rogers Citation1962). A generic description of these categories was provided to the participants, as follows:

Innovators: willing to take risks. Their risk tolerance allows them to adopt technologies that may ultimately fail.

Early Adopters: judicious choice of adoption; socially forward.

Early Majority: adopt an innovation after a varying degree of time that is significantly longer than the innovators and early adopters.

Late Majority: adopt an innovation after the average participant.

Laggards: last to adopt an innovation.

The participants who were invited to respond to the survey were primarily experts in EO. In contrast, the 2016 survey invited participants who were experts in citizen science (with particular interest in EO data) and had experience in working with citizens and communities. A direct comparison of the 2020 results with those collected in 2016 was therefore not possible, but coincident themes were extracted and used to support actions offered as part of the roadmap to encourage uptake.

2.2. In depth (virtual) face-to-face interviews

The online survey also asked whether respondents were prepared to be involved in a face-to-face interview to gain a deeper analysis of the use of citizen science in EO. The interview broadly used the same framework as the online survey but employed a reduced set of open-ended questions, ending with a question supporting the development of actions to be adopted going forward. As with the potential survey participants, interviewees were experts in EO. Where appropriate, interviewees were invited to explain case studies of their use of citizen science in EO projects, to highlight a particular aspect of the use of citizen science for EO. One of these case studies was chosen as a “spotlight” based on particular concerns highlighted in the online survey and current literature. A primary issue stems from the very nature of citizen science – the reliance on the goodwill and voluntary interests of citizens and communities. Whilst the proliferation of citizen science and crowdsourcing platforms makes it relatively easy to organize projects, citizen interests are highly dynamic and there is concern over citizen motivation and associated data quality. The spotlighted ‘Slavery from Space’ project is presented below.

2.2.1. Spotlighted case study: Rights Lab Slavery from Space project – how important is citizen motivation?

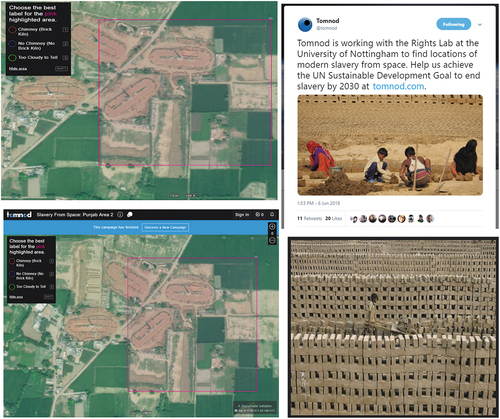

This spotlighted case study focuses on the use of EO data to map all Bull Trench brick kilns, which occur across India and surrounding countries in the “Brick Belt”. These kilns are “objects of UN Sustainable Development Goals (SDG) intersectionality” (Boyd et al. Citation2021), reflecting a material risk to SDG Target 8.7, which aims to abolish modern slavery, as well as to Target 16.2 (end abuse, exploitation, trafficking and all forms of violence and torture against children), to SDG 5 (achieve gender equality) and to the environmental SDGs (SDGs 13 and 15). Boyd et al. (Citation2021) mapped all brick kilns using machine learning deployed on Very High Resolution (VHR) satellite imagery, which required sets of kilns for training and testing purposes. These sets were generated by a crowd of citizens who tagged the presence/absence of a brick kiln on VHR satellite imagery served to them via an online citizen science platform.

On a limited budget, using citizen scientists (by way of a crowd of volunteers) to provide tagging is particularly attractive; however, as stated previously, there are often questions around a volunteered crowd’s tagging accuracy, trustworthiness, reliability and ability to sustain performance. Previous studies have shown that citizen scientists can be as accurate as experts, if not more so, and certainly good enough to perform the task set (Olteanu-Raimond et al. Citation2020). This is crowd-dependent (Comber et al. Citation2016; Kazai, Kamps and Milic-Frayling Citation2011) and treatment-dependent (Silberman et al. Citation2018), and studies can gain from incorporating information on contributors’ expertise. The motivation of the volunteers is important, with key motivations being the 4Fs (Kazai, Kamps and Milic-Frayling Citation2013; Parvanta, Roth and Keller Citation2013): fun, fortune, fame and fulfilment, with their impact on quality of contributions varying between different groups of people (Coleman, Georgiadou and Labonte Citation2009; Kaufmann, Schulze and Veit Citation2011). Fun and fame, although useful, are often the weakest motivators and can be associated with contributions of low accuracy (Kazai, Kamps and Milic-Frayling Citation2013). Fortune and fulfilment, on the other hand, are sometimes the strongest motivations and can be associated with contributions of high accuracy. There have been some studies evaluating the differences between paid and unpaid crowds (Mao et al. Citation2013; Kirilenko et al. Citation2017), and these suggest that, for tasks pertaining to social good, there is little or no difference in the accuracy of contributions. Given that much work on combating modern slavery relies on NGOs, who have limited resources, we sought to explore the differences in contributions to tagging brick kilns produced from paid and unpaid crowds.

Tomnod (formerly of Maxar) was a web platform that provided geospatial content for crowdsourcing and labelling (Baruch et al. Citation2016). In this project Maxar’s VHR WorldView imagery (RGB, 0.46 m resolution) was served to the crowd for two areas of interest (AOI) in the Punjab region of northern India. AOI 1 covered a region of 7,144 km² and AOI 2 a region of 11,363 km². For each AOI the imagery was divided into 300 by 300 m grid cells, which were served randomly to be attributed by the crowd; AOI 1 had 76,606 grid cells and AOI 2 had 122,139 grid cells. AOI 1 was served by the Tomnod platform from 6th to 26th June 2018, followed by AOI 2, served during the month of August 2019. For both AOIs, instructions and examples were shown to the crowd before inviting attributions for each of the grid cells seen. The crowd was asked if a brick kiln was present (or not) in the grid cell or if it might be obscured by cloud (see ). Instructions to the crowd for attribution differed for each AOI, with more signposting included in the instructions for AOI 2 to assist the crowd (i.e. a chimney or shadow of a chimney visible; this was added as a result of feedback from citizens undertaking the tagging in AOI 1). There were two sub-crowds looking at the two AOIs: a paid crowd recruited through Amazon Mechanical Turk and an unpaid crowd recruited via the Tomnod platform, which has a huge following of volunteers (known as Nodders).

Since the focus was a binary classification (kiln or no kiln), only four possible labelling or annotation scenarios existed: true positive (TP, here a situation in which an image with a kiln was correctly identified as containing a kiln), false positive (FP), false negative (FN) and true negative (TN). These can be summarized in a binary confusion matrix for each participant. From the confusion matrix, measures of labelling accuracy for a contributor can be estimated. Here, the attention is on the accuracy of the two groups of participants rather than that of the individuals that they contain. The accuracy with which images were annotated was quantified using standard measures of classification accuracy. Particular attention was focused on sensitivity (TP/(TP+FN)) and specificity (TN/(TN+FP)) (Hand Citation2012). Alternatively, the sensitivity could be expressed as the producer’s accuracy for the kiln class and the specificity as the producer’s accuracy for the no brick kiln class. These measures of accuracy were selected as they are widely used and their estimation is unaffected by prevalence.
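The two measures defined above can be illustrated with a minimal Python sketch. It is not the project's own analysis code: the class name `ConfusionMatrix`, the helper `tally` and the ten example labels are hypothetical and are used only to show how sensitivity and specificity follow from the four counts.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts for the binary task: kiln present (positive) vs no kiln (negative)."""
    tp: int  # kiln cell labelled as kiln
    fp: int  # no-kiln cell labelled as kiln
    fn: int  # kiln cell labelled as no kiln
    tn: int  # no-kiln cell labelled as no kiln

    def sensitivity(self) -> float:
        # TP / (TP + FN); undefined (ZeroDivisionError) if there are no kiln cells
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # TN / (TN + FP); undefined (ZeroDivisionError) if there are no no-kiln cells
        return self.tn / (self.tn + self.fp)


def tally(pairs: Iterable[Tuple[bool, bool]]) -> ConfusionMatrix:
    """Build a confusion matrix from (crowd_label, reference_label) pairs."""
    tp = fp = fn = tn = 0
    for crowd, reference in pairs:
        if crowd and reference:
            tp += 1
        elif crowd and not reference:
            fp += 1
        elif not crowd and reference:
            fn += 1
        else:
            tn += 1
    return ConfusionMatrix(tp, fp, fn, tn)


# Hypothetical labels for one group: 4 kiln cells and 6 no-kiln cells.
example = (
    [(True, True)] * 3 + [(False, True)] * 1
    + [(True, False)] * 1 + [(False, False)] * 5
)
group = tally(example)
print(group.sensitivity())  # 3 / (3 + 1) = 0.75
print(group.specificity())  # 5 / (5 + 1)

# Ten times as many no-kiln cells with the same error rate: prevalence changes,
# but sensitivity and specificity do not.
rarer = ConfusionMatrix(tp=3, fp=10, fn=1, tn=50)
```

Because each measure is computed within a single reference class, changing the ratio of kiln to no-kiln cells (as in `rarer`) leaves both values unchanged, which is the sense in which their estimation is unaffected by prevalence.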

3. Findings

3.1. Online survey

Thirty-four complete responses were obtained from respondents who had experience in using EO/Remote Sensing data in research and/or teaching, with 50% of those stating they have had experience with citizen science. This varied from respondents stating they have “some” or “limited” experience, to those supervising PhD students using citizen science data, through to leading geospatial activity in a five-year UKRI funded project which involves both citizen science and satellite-based EO methods, and to being a co-investigator in a Horizon 2020 citizen science project.

3.1.1. Scope of citizen science in EO projects

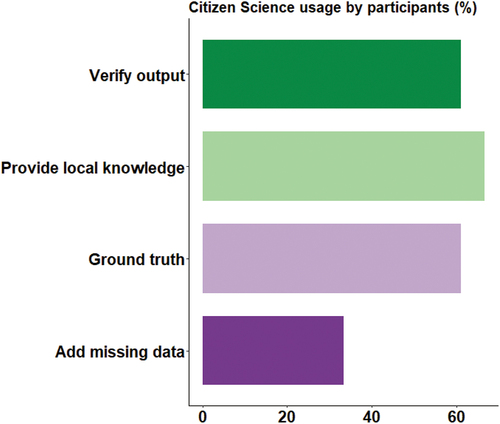

When asked which of the following matched how citizen science is currently used (i.e. data gap fill; verify EO outputs; to ground truth; to provide local knowledge), the large majority (65%) of respondents reported currently using citizen science data in at least two ways, while a minority (16%) selected all four available uses. Providing ‘local’ knowledge was the most common use, selected by two thirds of respondents, and adding missing data the least common, selected by one-third of respondents ().

3.1.2. Adoption of citizen science in EO projects

Respondents were in agreement that use of citizen science will be variable across different domains/applications of EO, and this was mirrored in responses to the adopter categories for each community. Researchers were seen to be more likely to adopt citizen science data into their EO work in the short-term than Commercial users (44% vs 22% respectively), who were more likely to adopt citizen science in their work on a long term timeframe (31% vs 18%). An example given of where early adoption may occur was with more observable phenomena, such as mapping land cover/land use. Areas that require more precise field measurements, expertise, or instrumentation were suggested as areas adopting later. Improvements and networks in technology, specifically software, sensors, and IT were considered a requirement in instigating growth in the use of citizen science data by the research/science community. Others commented on their anticipation of citizen science approaches increasingly adopted for EO validation, and how a changing climate will likely stimulate interest and subsequently increase processing power. Participants suggested that greater appreciation of information from large numbers of people can have huge value, despite potential issues from individuals, and there could be a requirement of citizen science teaching in universities (Hitchcock et al. Citation2021), in order for it to be considered as a data source for EO.

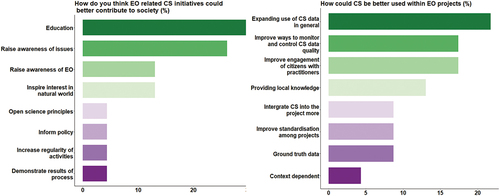

illustrates responses on how citizen science could be better used within EO projects. Roughly a third (35%) of responses referred to quality control, verification, ground truth, and calibration tasks. Several responses stated the importance of including citizen science as part of project proposals and ensuring a two-way usage of data. reports the responses to the question on how EO-related citizen science initiatives could better contribute to society where 25% of respondents highlighted the real potential for raising awareness and tackling environmental challenges, both at a local and global scale. One respondent expanded on this to mention the potential of initiatives influencing policies relating to environmental challenges. Other general comments were made on helping people explore, engage, and reconnect with nature and wildlife in their local environment. Several responses mentioned the integration of EO and citizen science initiatives into school projects and the science/geography curriculum. One response made particular mention to tapping into the growth of public interest in photography (e.g. personal drone use, social media) as a method of increasing awareness of EO. A key theme in responses concerned ensuring ongoing citizen science engagement, creating feedback loops between contributions and the projects, and preventing loss of data and tools once a project has ended.

3.1.3. Impact of citizen science for the EO community

When asked if there are any areas where citizen science could really make a difference to the EO community, under a third (29.4%) of responses considered the potential of citizen science for improving spatial and temporal coverage, particularly where there are considerable gaps in EO data (e.g. African countries, rates of satellite revisit). Under one in five (17.6%) responses mentioned the potential of citizen scientists assisting with observations in real time, such as for navigation, traffic, and disasters, and specifically for ground “truthing” and validation. Other comments were made on mapping, quantifying, and monitoring environmental change and degradation. One comment highlighted the requirement of better equipping citizen scientists with an understanding of EO data, so they can make connections between it and what is on the ground. One response indicated that citizen science projects require “readily recognizable EO data … medium and fine spatial resolution”; the respondent went on to say that much of the fine spatial resolution data is not freely/easily available, suggesting a limitation to project scope.

Applications where citizen science is used in an EO project that respondents would trust included: land cover mapping; land use mapping; urban tree size and location; pollution and urban green infrastructure; general mapping (e.g. OpenStreetMap); habitat/ecological mapping; and observations of terrestrial, aquatic, or atmospheric properties. However, respondents also noted a number of barriers currently preventing further uptake of citizen science that are procedural, technical, ethical and trust-related in nature (see next section).

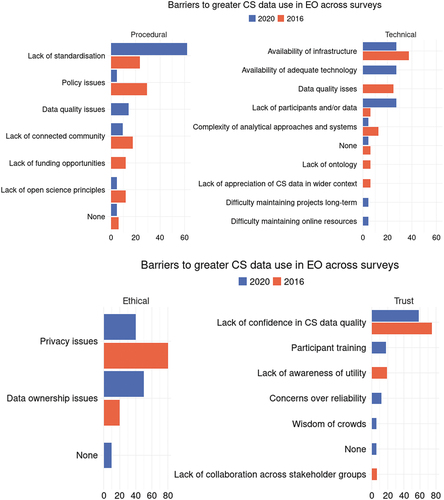

3.1.4. Barriers to adoption of citizen science in EO projects

Responses of 2020 are presented in against those obtained in 2016. Focusing on 2020 only, two-thirds of participants raised standardization measures as procedural barriers to uptake. Other concerns included issues around open data, data security and privacy, and policy support at a national level. On the other hand, one response attributed the barriers to a lack of take-up of EO data in the general user community, rather than to technological or monetary reasons. Technical barriers such as data storage infrastructure, hardware, software, and training were also noted. In terms of data collection, responses highlighted the need for reliable, customizable, user-friendly applications, which are operable on a range of devices. In addition to this, one response emphasized that tools and software should operate in many different languages. One comment also noted that barriers in different countries become much starker due to factors such as access to technology and income.

Figure 4. Themes emerging from responses to the question “What barriers do you foresee to greater uptake of citizen science data usage in EO projects?”.

The need for more work on trust and provenance was also reiterated as a requirement for ensuring consistency and reliability in citizen science data to be used. However, several responses suggested that trust in citizen science data will become less of a problem as the volume of data increases, with trends being easily identifiable. One response suggested the use of artificial intelligence methodologies for identifying fake data. Lastly, multiple responses highlighted the potential issues of data ownership and copyright agreements.

In terms of societal aspects, one participant framed this as the value gained from the engagement of citizen scientists. When asked about the accessibility of citizen science data, over 50% of responses stated that it is not easy to find out about or access citizen science data. The need for a recognized database or website for citizen science-generated data was raised. Other participants commented only on experience where their own data had been generated in collaboration with citizen scientists. According to the responses, citizen science data was most trustworthy when data is easy to collect and relatively objective, when there are clear protocols for collection, and when sensors have been well characterized and calibrated. Integrity of the data source was raised as a trust issue with regard to data quality. Another response stated that the provenance and accuracy of citizen science data are not critical, as the data can be checked and refined as necessary.

Comparing responses from the 2020 survey with those obtained in 2016 () suggests that barriers to greater use of citizen science in EO projects were not as apparent in 2020, and overall it appears the EO and CS communities have moved towards more operationalization of citizen science within EO projects. In the 2020 survey, there was less concern around policy but much more concern around data quality (though a lack of trust in the data was less of an issue in 2020 than in 2016), data ownership and how to standardize the use of citizen science within EO projects, indicating a greater awareness of the benefits of using citizen science and that questions are being asked around best practices. Answers from 2020 also related to thinking in the longer term, i.e. around maintaining projects and developing a connected community of those involved in CS4EO.

3.1.5. Impact of Covid-19 on citizen science and EO

The increased value of citizen science for EO during the period of restricted movement under the Covid-19 pandemic was recognized by the respondents. Two-thirds of respondents confirmed that, as a result of the pandemic, they more fully recognized the significant benefits possible if citizen science is used appropriately. One response reflected on the familiarity gained with communicating online during the pandemic, stating that everything, such as surveys and meetings, should go online. Other comments were made on the value of citizen science when data collection/fieldwork is not possible. Where access to the outdoors is permitted, suggestions included the possibility of assessing data products that are currently being generated, reporting outputs of field sensors, site examinations, ground truthing, and collecting photos and coordinates of local features. Other responses were more focused on stricter lockdown rules, and therefore stated the requirement for contributions to be desk-based. Additionally, one participant noted that they could have integrated citizen science with home-schooling their children during the lockdown, had they known about active projects. Another stated that one of their PhD students is considering use of a citizen science website as an alternative to fieldwork, which has been disrupted as a result of the pandemic.

3.2. Face-to-face interviews

In addition to the experiences of the Rights Lab (see section 2.2.1, where we discuss a spotlight case study), in-depth interviews were undertaken with four users of citizen science within EO projects: (i) a nature conservation body; (ii) an EO technology and innovation company; and (iii) two researchers within a University Department of Engineering. Each of these organizations had their main strengths in EO, with experience of using citizen science in their projects ranging from those who were currently heavily involved in citizen science research to those who were developing methods and tools for the application of citizen science in EO. Topic focus covered civil engineering, ecology (habitat/species mapping) and humanitarian aid coordination efforts. Common uses of citizens within projects were labelling satellite imagery to produce training data sets and community mapping to provide local knowledge. All organizations used available online citizen science platforms (e.g. Zooniverse) or outsourced recruitment of citizen scientists to specialized outfits. Efforts to improve citizen engagement, so that sufficient data are collected on a large enough scale, were seen as key. Quality in the communication of tasks, and of the justification for each aspect, was likewise seen as key to ensuring higher quality data, and using available networks of citizens on the ground can help widen the citizen base. The speed with which the available infrastructure or specialists engaged and mobilized the citizen base was seen to be very attractive. It was also noted that the larger the citizen base, the more time and effort is required to manage and coordinate with volunteers; sometimes this was a barrier to tweaking or improving a methodology during a project, due to the data that would have to be jettisoned. Obtaining satellite imagery was often noted to be slower than recruiting citizens to undertake tasks. Yet effort is key in order to maintain engagement of citizens, and feedback on their effort is also something citizens actively demand throughout a project.

Key challenges across the case study projects included citizen reliability and privacy issues. Awareness of the general quality of available satellite imagery was low, and citizens often did not understand why the imagery was of lower quality than Google Earth imagery (probably because of familiarity with VHR data in everyday life). Balancing project scale against available citizen effort was important, to ensure citizens are not overwhelmed by tasks. Additionally, data privacy and ownership can be problematic if not using well-worn infrastructure such as Zooniverse. Thought and effort need to go into the citizen recruitment process for several reasons: (a) to ensure the motivations of citizens align well with those of the project; (b) to ensure the information, and in some cases training, is sufficient for the role; and (c) to enable the maximal amount of data to be recorded. When these aspects are considered, data quality and project longevity are maximized. Standardization of approaches and methods is key, as a lack of this makes integrating wider citizen data sets harder, reducing the generalizability of findings. All organizations noted that CS4EO provides a necessary opportunity to educate the citizen base and reduce misinterpretation of EO data among wider society.

3.3. Spotlighted case study: slavery from space

The case study focused on the identification, in VHR imagery, of brick kilns, which are sites associated with slavery. In total, 317,995 images were annotated by citizens using imagery released to them in two rounds of study. Of these images, only 2804 actually contained a kiln. In each round the citizens belonged to one of two groups: unpaid volunteers and a paid group. This helped assess the impact of motivation. Feedback on labelling was provided between the two rounds, and this helped assess the importance of feedback.

In the first round of annotations (AOI 1), very similar numbers of paid and unpaid contributors took part in the study: 394 paid and 419 unpaid. In both sets of contributors, many citizens tagged only a very small number of images. The distribution of the number of images tagged per participant was distinctly non-normal in each group, with many participants labelling only a small number of images while a small number labelled a substantial number. Summary statistics of labelling (minimum, maximum, mean, median, and upper and lower quartiles) illustrate this. It seems evident that the unpaid crowd stopped tagging before the paid crowd and that the paid crowd appeared to be more dependable and likely to produce annotations. Note, for example, that the median number of images labelled by the paid crowd was 678.0, very considerably larger than the median for the unpaid crowd of 25. Note also that the lower quartile value for the paid crowd (187.0) is larger than the upper quartile of the unpaid crowd (146.0). However, it was also evident that a few unpaid participants were willing to invest substantial time and effort and produced an extremely large number of annotations. The largest individual contribution received was 73,316 labelled images from an unpaid participant, far in excess of the 6173 received from the paid participant contributing the most to the study. Overall, it seemed that the unpaid community mostly contributed fewer annotations than the paid community, except at the upper end, where a few unpaid contributors provided an order of magnitude more annotations than any paid contributor.
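
The summary statistics described above can be reproduced for any set of per-contributor tallies. The following is a minimal Python sketch, not the procedure used in the study: the function name `summarise_counts`, the `unpaid` list and its values are hypothetical, and the "inclusive" quartile method is an assumption (other quartile conventions give slightly different values).

```python
from statistics import mean, median, quantiles


def summarise_counts(counts):
    """Return min, lower quartile, median, mean, upper quartile and max
    of the number of images labelled per contributor."""
    if len(counts) < 2:
        raise ValueError("need at least two contributors")
    # "inclusive" treats the list as the full population of contributors;
    # this choice is an assumption, not taken from the study.
    q1, _, q3 = quantiles(counts, n=4, method="inclusive")
    return {
        "min": min(counts),
        "q1": q1,
        "median": median(counts),
        "mean": mean(counts),
        "q3": q3,
        "max": max(counts),
    }


# Hypothetical tallies: most contributors label few images, one labels many.
unpaid = [1, 2, 3, 5, 8, 20, 40, 150, 9000]
summary = summarise_counts(unpaid)
print(summary)
```

In a strongly skewed distribution of this kind the mean is pulled far above the median by a few prolific contributors, which is why the median and quartiles are the more informative comparison between groups.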

Table 1. Results on tagging accuracy for AOI 1

There were notable differences in the accuracy of the annotations provided by the two groups. In particular, it was evident that the annotations provided by the unpaid group were generally more accurate than those from the paid community. For example, for each accuracy measure, the mean and median accuracy obtained from the unpaid crowd were larger than the corresponding values obtained from the paid crowd.
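
To make the notion of per-contributor accuracy concrete, the sketch below computes common binary accuracy measures for one contributor's tags against reference labels. It is illustrative only: the text names specificity but does not list the full set of measures used, so the inclusion of sensitivity, precision and overall accuracy is an assumption, and the function name and example labels are hypothetical. With only 2804 kilns among 317,995 images (about 0.9%), a contributor who tagged every image as "no kiln" would score roughly 99% overall accuracy with zero sensitivity, which is why class-specific measures matter here.

```python
def binary_accuracy_measures(reference, annotated):
    """Compare one contributor's kiln / no-kiln tags with reference labels.

    Both arguments are equal-length sequences of booleans, where True
    means "image contains a kiln".
    """
    if len(reference) != len(annotated):
        raise ValueError("label sequences must have the same length")
    pairs = list(zip(reference, annotated))
    tp = sum(1 for r, a in pairs if r and a)          # kiln correctly tagged
    tn = sum(1 for r, a in pairs if not r and not a)  # empty image left untagged
    fp = sum(1 for r, a in pairs if not r and a)      # false alarm
    fn = sum(1 for r, a in pairs if r and not a)      # missed kiln

    def ratio(num, den):
        # Undefined ratios (zero denominator) are reported as NaN.
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "precision": ratio(tp, tp + fp),
        "overall": ratio(tp + tn, len(pairs)),
    }


# Hypothetical example: 10 images, 2 of which contain a kiln.
reference = [True, True] + [False] * 8
annotated = [True, False, True] + [False] * 7
measures = binary_accuracy_measures(reference, annotated)
print(measures)
```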

Based on experience gained from the labelling tasks, feedback was provided to try to enhance the labelling. The general trends observed in the second round (AOI 2) were similar to those observed in the first round. For example, a similar number of participants from each community took part in the study: 312 paid and 275 unpaid participants. The unpaid community also tended to contribute fewer annotations. For example, the first and third quartiles of the number of images annotated by the unpaid community were 4.0 and 144.0, while the corresponding values for the paid community were 144.3 and 1238.8, respectively. However, a small number of unpaid contributors again annotated a massive number of images. The maximum number of images annotated by a single participant was 5728 in the paid community and 61,754 in the unpaid community. Finally, it was also evident that the annotations received from the unpaid community were generally more accurate than those from the paid community. As before, for example, the median value of each measure of accuracy was larger for the unpaid than for the paid group. A slight deviation from the general trend was noted, however, with the mean specificity of the paid group slightly larger than that of the unpaid group. A key feature to note was that the accuracy values, for all measures, generally increased in the second round over those observed in the first. This highlights the value of feedback and revision of the challenge posed.

Table 2. Results on tagging accuracy for AOI 2

These findings provide some confidence in using citizen science to provide reference data to be used, for example, in machine learning models for image object detection (see Boyd et al. Citation2021). The production of training sets in this way did require some further refinement by experts, but using citizens reduced the burden of data to annotate at the outset. The study also highlights the importance of fulfilment as a key motivator. This is particularly pertinent for the “Slavery from Space” project: we know we can trust volunteers to help, and we will continue to work with them as we extend the mapping to look for brick kilns using new firing technologies (see Boyd et al. (Citation2021) for further details).

4. Discussion: towards an increased uptake of citizen science in EO (Citizens4EO)

This research has sought to understand the current situation in terms of citizen science data use among the UK EO community. We have begun a dialogue among a community of interest that is committed to expanding its understanding, capability and innovation in terms of use of citizen science in EO projects. Comparing this insight with the findings of Muzumdar, Wrigley and Ciravegna (Citation2017), whose survey was undertaken in 2016, suggests that although those using citizen science were keen to stress the benefits of doing so, overall little progress has been made to remove hesitancy and perceived barriers over the past 5 years. We acknowledge that this study involved a range of EO experts and the 2016 study involved CS experts; however, both studies involved participants who had clear insight into the application of CS in EO. We believe the comparison between the two studies is therefore still useful and, at the same time, was an opportunity to explore the EO community in greater depth. Key points emerging are summarized below and serve as a focus for action, which we offer in order to change the status quo.

Citizen science approaches used within an EO project can support high-quality outcomes. A well-run project that effectively engages and recruits a large volunteer base can make use of a huge amount of an otherwise untapped resource. Successful projects using citizen scientists can be exceptionally good at producing data quickly, and in some cases more quickly than projects delivered solely by experts. This is particularly evident in the spotlighted case study on modern slavery in brick kilns, a project that also highlighted that having motivated and engaged citizens is vital. Ensuring that those volunteering their time to a project are enthusiastic and understand the project’s objectives promotes better data quality, greater levels of involvement and larger data sets. In summary, projects for social good tend not to require much incentivization of the ‘crowd’; data collection techniques that citizens are familiar with, or that are easy to pick up or be trained in, perform well; and clear reasons and messaging surrounding the work help to motivate citizens. This links closely with the altruism and collectivism motivations among the four motivations for community participation of Batson, Ahmad and Tsang (Citation2002): egoism (increasing one’s own welfare), altruism (increasing the welfare of another individual or group), collectivism (increasing the welfare of one’s group) and principlism (upholding one’s principles, e.g. justice, equality). While Batson’s model does not explicitly focus on citizen science, the role of community participation and its motivations is highly relevant in this context (Rotman et al. Citation2012). More studies such as that spotlighted, which demonstrate the value of using citizens to provide supporting data for EO projects, should serve to shift preconceived opinions. One particularly valuable exercise within these would be to compare training and validation data collected within EO projects by experts with those collected by citizen scientists. In short, more experience in CS4EO is required.
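
One simple way to frame the expert-versus-citizen comparison suggested above is a chance-corrected agreement statistic computed over images labelled by both groups. The sketch below uses Cohen's kappa; this is our illustrative choice rather than a method reported in the study, and the function name and the example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two sets of labels for the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Proportion of items on which the two sources agree.
    observed = sum(1 for a, b in zip(labels_a, labels_b) if a == b) / n
    # Agreement expected by chance, from each source's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / (n * n)
    if expected == 1.0:
        return 1.0  # both sources used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels for the same ten images.
expert = ["kiln", "kiln"] + ["none"] * 8
citizen = ["kiln", "none", "kiln"] + ["none"] * 7
kappa = cohens_kappa(expert, citizen)
print(round(kappa, 3))
```

In this toy example the two sources agree on 8 of 10 images, yet kappa is only about 0.375, because most of that agreement is expected by chance when one class dominates.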

Novel technologies and approaches (e.g. mobile applications, sensors) can increase appeal of projects to citizens (Newman et al. Citation2012). It is clear that the innovative use of technologies in some cases has helped engage a volunteer base, and has appeal that reaches beyond the confines of the issue in question, enabling the engagement of a wider audience of citizens. Specific types of users (such as younger participants) might also find the use of technology appealing (Bowser et al. Citation2013). Related to this is that availability of good infrastructure is also important. There is already a large, tried and tested infrastructure for engaging and working with citizens or communities on EO projects that when used has made projects progress smoothly and obtain data (and subsequently results) quickly and efficiently. Leveraging existing citizen science infrastructures can help make projects more technically robust and resilient, thereby reducing risks of introducing underlying frailties potentially arising out of involving volunteer technicians without sufficient resources to support projects (Bowser et al. Citation2020). Respondents to the surveys recommended using available resources to recruit citizens/communities/crowds, such as Zooniverse, as they worked well in obtaining an engaged citizen base. These resources provide opportunities for efficient two-way communication, and reduce time spent attempting to recruit citizens. To quote one of the case studies: “if the infrastructure is already there why not use it”.

Respondents across both surveys (2016 and 2020) cited similar issues and themes, though the balance of concern between the themes has changed (a direct comparison is not valid because different populations were surveyed). For example, when asked to identify barriers to progress, the standardization of citizen/crowd collected data, privacy issues, infrastructure and potential biases were common responses in both 2016 and 2020. Indeed, most respondents (>50%) across the surveys stated that access and opportunity to use citizen science data was difficult and not as simple as it could be, suggesting improvement in these areas is key. In fact, the issues around data quality and standardization have been described as a challenge in citizen science (Alabri and Hunter Citation2010). However, previous studies also demonstrate that data quality in citizen science can be measured and that processes can be set up to enhance data quality within citizen science projects (Kosmala et al. Citation2016b). It was noted that recruitment of a citizen base without use of available resources and infrastructure (i.e. independently) can be very challenging, and often leads to smaller datasets than anticipated, of potentially lower quality owing to a lack of experience in motivating volunteers. Even with use of available infrastructure, aspects of data pre-processing for upload to crowdsourcing sites can be time consuming and draining. Further, access to pre-collected citizen science data from across projects, and data storage in general, have often been seen as challenges to a project’s progress. For example, the General Data Protection Regulation 2016/679 (GDPR) can lead to challenges regarding the use of data already obtained but for new purposes; additionally, the financial and logistical cost of data storage can be prohibitive. To this end, the WeObserve Policy Brief (Masó and Wehn Citation2020) proposes several recommendations as a way forward to address issues around the integration of citizen science data into GEOSS. These recommendations span different levels: project level (e.g. creation of a federation of citizens observatories, joining forces via Citizen Science associations), policy level (e.g. creating a permanent e-infrastructure, sponsoring the creation of common vocabularies and methodologies, showcasing the use of citizen science data) and GEOSS level (e.g. assessing the potential of citizen science data to complement EO data, simplifying the mechanisms for including citizen science data in the GEOSS platform).

The seemingly irregular nature of wider citizen concerns and behaviours means that it is sometimes difficult to ensure sufficient participation and reliable data. Participants in our study commented that crowd members can be unreliable and that it is vital to have measures or abilities to assess the quality of data obtained through citizens; this view was shared by many respondents, and it is a barrier to further uptake. On a similar note, training is vital because different regions (if going national or beyond) often have different systems, and being aware of this is key. In fact, one of the seven procedures that can enhance data quality in citizen science projects is citizen training and testing (Kosmala et al. Citation2016b). Lack of standardization around data and processes is recurrently seen as problematic. Lack of awareness among citizens about the quality of geospatial data available has meant that much time has been spent explaining these issues to volunteers, a point reiterated by many case studies; if citizens are used to ground truth data, this issue can lead to misunderstanding and confusion about the quality and pertinence of EO data. That said, it was recognized that citizen science can be used to educate and inform ‘the public’. Some projects reported that they had specifically built into their programmes the need to raise awareness and understanding of key issues among citizens (Bonney et al. Citation2009; Silvertown Citation2009). As has been observed in the literature and in our study, two features should be a key motivation for EO scientists to use citizen science: its ability to help increase science-informed decisions among the public and participation in positive action (Rossiter et al. Citation2015), and the noticeable shift of volunteer roles from simple data collection to more active involvement as data interpreters and analysts (Buytaert et al. Citation2014; Paul et al. Citation2018).

Advances in available technology and EO data and products, particularly those that are analysis ready, will be at the heart of increasing the use of citizen science in EO projects. There is currently a rapid expansion in new satellite EO sensors that are blurring the trade-off between resolutions (e.g. Planet Pelican, Airbus Pléiades Neo). Key considerations, however, are the interpretability and usability of these products for citizens. Engaging younger audiences and ensuring the approach to citizen science is inclusive to all is very important. Usability, accessibility and user experience are key for engagement (O’Keeffe and Walls Citation2020), and inaccessible and difficult to use digital solutions in crowdsourcing projects can lead to participation inequality and non-representative demographic groups (Haklay Citation2016). Ensuring the quality of citizen science data is key, whether through training or through methods to measure quality. Project messaging is vitally important for ensuring a good citizen base. Understanding how to do this, and understanding citizen motivations, is key to ensuring attractiveness to citizens on larger spatial and temporal scales. By considering each of the above points, three main areas of action towards a better take up of Citizens4EO are suggested.

Action one seeks to address the awareness deficit among the EO community of the benefits of citizen science within their work. In terms of capability, we have identified that there is already sufficient, and expanding, ability and skill in the approaches and techniques for incorporating citizen science data in EO projects. A key issue, however, is that there is a lack of awareness of these among the wider EO community. It is imperative that awareness of current citizen science infrastructure, data quality assurance techniques and other successful aspects of incorporating citizen science in EO research and practice is increased. A community of intent (to further uptake and raise awareness of the value of citizen science in EO) for those in the UK EO community willing to use citizen science data should be established. This could take the form of a Citizens4EO Special Interest Group within an agency such as the Remote Sensing and Photogrammetry Society (RSPSoc). One of the key roles of this working group will be to act as a facilitator for future projects; additionally, the group will coordinate with and learn from other international citizen science working groups and bodies (including, for example, the U.S. Citizen Science Association, the European Citizen Science Association, the WeObserve Citizen Science observatory, and the Group on Earth Observations). A particular focus for awareness raising would be the value added by using citizen scientists to generate training and validation data, and approaches to doing this. Good practice in this regard (e.g. Olofsson et al. Citation2014; Chandler et al. Citation2017) could be centred within a citizen science framework.

Action two focuses on improving the accessibility and flexibility of infrastructure for citizen scientists to engage with EO projects. There is a large swathe of resources for those who wish to engage the crowd and citizen bases for input at various stages of research and practice, yet there are numerous cases where researchers and practitioners have had to develop their own bespoke infrastructure for their particular projects. Many respondents and participants stated that the diversity and apparent lack of standardization across these areas is daunting and confusing. Improving awareness of available infrastructure is an important component of our roadmap, but further attention also needs to be paid to more collective production and development of flexible infrastructure that can meet a broad spectrum of project needs. In order to achieve this, there need to be increased opportunities for accessible training and information on use of available infrastructure. A forum should be established (such as Citizens4EO) for development of more standardized approaches to obtaining, verifying and analysing citizen science data, creating a more open discussion and spreading the reach of this information among the EO community. ‘Hackathon’ style workshops aimed at improving aspects of citizen science infrastructure that often raise problems for EO scientists should be organized.

Action three should explore approaches to addressing the concerns of some of the EO community about citizen reliability, training and information. One of the most insightful findings of our surveys, and the spotlight case study, was the importance of engaging, informing and training citizen scientists across the multitude of settings in which they have been enlisted to work with EO scientists. The projects with seemingly the most sustainable relationships were those that invested time and effort to explain and engage the communities and citizens they were working with, which is often easier when done using available infrastructure. Further, the quality of data collected, and the effort contributed by citizens, was greater when the information flow was reciprocal between researchers and citizens. Good examples of projects that created and maintained sustainable relationships with project partners and citizen scientists were those focused on community mapping (the projects set up by Humanitarian Open Street Map are good cases). In order to overcome concerns around citizen reliability, further attempts should be made to share the experiences of successful projects through consistent and regular communications via the working group and other bodies. Indeed, the afore-mentioned WeObserve policy paper (Masó and Wehn Citation2020) discusses the need to “showcase” the use of citizen science data as a complementary data source to in-situ and remote sensing data in operational use cases. A possible model for engagement could be that of Conservation Optimism (conservationoptimism.org), which has pushed a positive and proactive agenda towards conservation research and practice through constant online and in-person exposure.

5. Conclusion

We initiated this process by seeking to understand how the UK EO community views the challenges, opportunities, and pitfalls in using citizen science data in their projects; to produce demonstrator case studies and start building a community of intent within the UK of EO practitioners and researchers; and, by reporting on this, to facilitate growth in citizen science 4 EO (Citizens4EO). Across data streams we found much agreement between Earth Observation practitioners and researchers on the benefits of using citizen science data in their projects. These included having motivated and engaged citizens who can produce high-quality data for training/validation datasets (the “Slavery from Space” project was spotlighted here), and who in doing so provide the added benefit of knowledge exchange (i.e. educating and informing ‘the public’ on EO). Recent advances in novel technologies, approaches and infrastructure have increased the appeal of Citizens4EO. Additionally, we uncovered common barriers to increased uptake among the wider EO community. These were around the mechanics of deploying citizen science and the unreliability of a potentially misinformed or undertrained citizen base. As such, comparing results with those of a survey undertaken in 2016 as part of the Crowd4Sat project, progress towards optimizing citizen science use in EO has been slow. We suggest that progress would be facilitated by addressing the awareness deficit among the EO community regarding the availability and quality of existing citizen science infrastructure, methodology and approaches, and by improving the availability and adaptability (flexibility) of current and future citizen science infrastructure. We invite you to engage as appropriate.

Acknowledgement

We thank wholeheartedly all those who took part in the surveys and supported this project.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Due to the nature of this research, participants of this study did not agree for their data to be shared publicly, so supporting data is not available.

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

References

- Alabri, A. and J. Hunter. 2010. “Enhancing the Quality and Trust of Citizen Science Data.” 2010 IEEE sixth international conference on e-science, Indianapolis, USA, 81–88, December.

- Anderson, K., B. Ryan, W. Sonntag, A. Kavvada, and F. Lawrence. 2017. “Earth Observation in Service of the 2030 Agenda for Sustainable Development.” Journal of Geo-Spatial Information Science 20 (2): 77–96. doi:https://doi.org/10.1080/10095020.2017.1333230.

- Andries, A., S. Morse, R. Murphy, J. Lynch, E. Woolliams, and J. Fonweban. 2018. “Translation of Earth Observation Data into Sustainable Development Indicators: An Analytical Framework.” Sustainable Development 27 (3): 366–376. doi:https://doi.org/10.1002/sd.1908.

- Baruch, A., A. May, and D. Yu. 2016. “The Motivations, Enablers and Barriers for Voluntary Participation in an Online Crowdsourcing Platform.” Computers in Human Behavior 64: 923–931. doi:https://doi.org/10.1016/j.chb.2016.07.039.

- Batson, C. D., N. Ahmad, and J. A. Tsang. 2002. “Four Motives for Community Involvement.” The Journal of Social Issues 58 (3): 429–445. doi:https://doi.org/10.1111/1540-4560.00269.

- Bonney, R., C. B. Cooper, J. Dickinson, S. Kelling, T. Phillips, K. V. Rosenberg, and J. Shirk. 2009. “Citizen Science: A Developing Tool for Expanding Science Knowledge and Scientific Literacy.” BioScience 59 (11): 977–984. doi:https://doi.org/10.1525/bio.2009.59.11.9.

- Bowser, A., D. Hansen, Y. He, C. Boston, M. Reid, L. Gunnell, and J. Preece. 2013. “Using Gamification to Inspire New Citizen Science Volunteers.” Proceedings of the First International Conference on Gameful Design, Research, and Applications, Waterloo, Canada, 18–25.

- Bowser, A., C. Cooper, A. De Sherbinin, A. Wiggins, P. Brenton, T. R. Chang, and M. Meloche. 2020. “Scaling Up: Citizen Science Engagement and Impacts Beyond the Individual.” Citizen Science: Theory and Practice 5 (1): 1–13. doi:https://doi.org/10.5334/cstp.244.

- Boyd, D. S., B. Perrat, X. Li, B. Jackson, T. Landman, F. Ling, K. Bales, et al. 2021. “Informing Action for United Nations SDG Target 8.7 and Interdependent SDGs: Examining Modern Slavery from Space.” Nature Humanities and Social Sciences Communications 8 (1): 1–14. doi:https://doi.org/10.1057/s41599-021-00792-z.

- Buytaert, W., Z. Zulkafli, S. Grainger, L. Acosta, T. C. Alemie, J. Bastiaensen, and M. Zhumanova. 2014. “Citizen Science in Hydrology and Water Resources: Opportunities for Knowledge Generation, Ecosystem Service Management, and Sustainable Development.” Frontiers in Earth Science 2: 26. doi:https://doi.org/10.3389/feart.2014.00026.

- Callaghan, C. T., J. J. L. Rowley, W. K. Cornwell, A. G. B. Poore, and R. E. Major. 2019. “Improving Big Citizen Science Data: Moving Beyond Haphazard Sampling.” PLoS Biology 17 (6): e3000357. doi:https://doi.org/10.1371/journal.pbio.3000357.

- Chandler, M., L. See, C. D. Buesching, J. A. Cousins, C. Gillies, R. W. Kays, C. Newman, H. M. Pereira, and P. Tiago. 2017. “Involving Citizen Scientists in Biodiversity Observation.” In The GEO Handbook on Biodiversity Observation Networks, edited by M. Walters and R. J. Scholes. doi:https://doi.org/10.1007/978-3-319-27288-7_9.

- Coleman, D., Y. Georgiadou, and J. Labonte. 2009. “Volunteered Geographic Information: The Nature and Motivation of Producers.” International Journal of Spatial Data Infrastructures Research 4 (4): 332–358.

- Comber, A., P. Mooney, R. S. Purves, D. Rocchini, and A. Walz. 2016. “Crowdsourcing: It Matters Who the Crowd Are. The Impacts of Between Group Variations in Recording Land Cover.” PloS One 11 (7): e0158329. doi:https://doi.org/10.1371/journal.pone.0158329.

- Foody, G. M., L. See, S. Fritz, M. van der Velde, C. Perger, C. Schill, D. S. Boyd, and A. Comber. 2016. “Accurate Attribute Mapping from Volunteered Geographic Information: Issues of Volunteer Quantity and Quality.” The Cartographic Journal 52 (4): 336–344. doi:https://doi.org/10.1080/00087041.2015.1108658.

- Fritz, S., C. C. Fonte, and L. See. 2017. “The Role of Citizen Science in Earth Observation.” Remote Sensing 9 (4): 357. doi:https://doi.org/10.3390/rs9040357.

- Goodchild, M. F. and J. A. Glennon. 2010. “Crowdsourcing Geographic Information for Disaster Response: A Research Frontier.” International Journal of Digital Earth 3 (3): 231–241. doi:https://doi.org/10.1080/17538941003759255.

- Grainger, A. 2017. “Citizen Observatories and the New Earth Observation Science.” Remote Sensing 9 (2): 153. doi:https://doi.org/10.3390/rs9020153.

- Haklay, M. E. 2016. Why is Participation Inequality Important?. London: Ubiquity Press.

- Haklay, et al. 2018. “Citizen Science for Observing and Understanding the Earth.” In Earth Observation Open Science and Innovation, edited by P.-P. Mathieu and C. Aubrecht, 69–88. ISSI Scientific Report Series 15. Cham: Springer. doi:https://doi.org/10.1007/978-3-319-65633-5_4.

- Hand, D. J. 2012. “Assessing the Performance of Classification Methods.” International Statistical Review 80 (3): 400–414. doi:https://doi.org/10.1111/j.1751-5823.2012.00183.x.

- Hitchcock, C., H. Vance-Chalcraft, and M. Aristeidou. 2021. “Citizen Science in Higher Education.” Citizen Science: Theory and Practice 6 (1): 22. doi:https://doi.org/10.5334/cstp.467.

- Karagiannopoulou, A., A. Tsertou, G. Tsimiklis, and A. Amditis. 2022. “Data Fusion in Earth Observation and the Role of Citizen as a Sensor: A Scoping Review of Applications, Methods and Future Trends.” Remote Sensing 14 (5): 1263. doi:https://doi.org/10.3390/rs14051263.

- Kaufmann, N., T. Schulze, and D. Veit. 2011. “More Than Fun and Money: Worker Motivation in Crowdsourcing-A Study on Mechanical Turk.” Proceedings of 17th Americas Conference on Information Systems Detroit, USA, Aug 4-7, 11.

- Kavvada, A., G. Metternicht, F. Kerblat, N. Mudau, M. Haldorson, S. Laldaparsad, L. Friedl, A. Held, and E. Chuvieco. 2020. “Towards Delivering on the Sustainable Development Goals Using Earth Observations.” Remote Sensing of Environment 247. doi:https://doi.org/10.1016/j.rse.2020.111930.

- Kazai, G., J. Kamps, and N. Milic-Frayling. 2011. “Worker Types and Personality Traits in Crowdsourcing Relevance Labels.” Proceedings of the 20th ACM International Conference on Information and Knowledge Management, Glasgow, Scotland, 1941–1944.

- Kazai, G., J. Kamps, and N. Milic-Frayling. 2013. “An Analysis of Human Factors and Label Accuracy in Crowdsourcing Relevance Judgments.” Information Retrieval 16 (2): 138–178. doi:https://doi.org/10.1007/s10791-012-9205-0.

- Kirilenko, A. P., T. Desell, H. Kim, and S. Stepchenkova. 2017. “Crowdsourcing Analysis of Twitter Data on Climate Change: Paid Workers Vs. Volunteers.” Sustainability 9 (11): 2019. doi:https://doi.org/10.3390/su9112019.

- Kosmala, M., A. Crall, R. Cheng, K. Hufkens, S. Henderson, and A. D. Richardson. 2016a. “Season Spotter: Using Citizen Science to Validate and Scale Plant Phenology from Near-Surface Remote Sensing.” Remote Sensing 8 (9): 726. doi:https://doi.org/10.3390/rs8090726.

- Kosmala, M., A. Wiggins, A. Swanson, and B. Simmons. 2016b. “Assessing Data Quality in Citizen Science.” Frontiers in Ecology and the Environment 14 (10): 551–560. doi:https://doi.org/10.1002/fee.1436.

- López-Lozano, R. and B. Baruth. 2019. “An Evaluation Framework to Build a Cost-Efficient Crop Monitoring System. Experiences from the Extension of the European Crop Monitoring System.” Agricultural Systems 168 (C): 231–246. doi:https://doi.org/10.1016/j.agsy.2018.04.002.

- Mao, A., E. Kamar, Y. Chen, E. Horvitz, M. E. Schwamb, C. J. Lintott, and A. M. Smith. 2013. “Volunteering versus Work for Pay: Incentives and Tradeoffs in Crowdsourcing.” First AAAI conference on human computation and crowdsourcing, Palm Springs, California, 94–102.

- Masó, J. and U. Wehn. 2020. A Roadmap for Citizen Science in GEO - the Essence Of the Lisbon Declaration. European Union Horizon 2020: WeObserve policy brief 1.

- Mathur, A. and G. M. Foody. 2008. “Crop Classification by Support Vector Machine with Intelligently Selected Training Data for an Operational Application.” International Journal of Remote Sensing 29 (8): 2227–2240. doi:https://doi.org/10.1080/01431160701395203.

- Muzumdar, S., S. Wrigley, and F. Ciravegna. 2017. “Citizen Science and Crowdsourcing for Earth Observations: An Analysis of Stakeholder Opinions on the Present and Future.” Remote Sensing 9 (1): 87. doi:https://doi.org/10.3390/rs9010087.

- Newman, G., A. Wiggins, A. Crall, E. Graham, S. Newman, and K. Crowston. 2012. “The Future of Citizen Science: Emerging Technologies and Shifting Paradigms.” Frontiers in Ecology and the Environment 10 (6): 298–304. doi:https://doi.org/10.1890/110294.

- O’Keeffe, W. and D. Walls. 2020. “Usability Testing and Experience Design in Citizen Science: A Case Study.” Proceedings of the 38th ACM International Conference on Design of Communication, Texas, USA, 1–8.

- Olofsson, P., G. M. Foody, M. Herold, S. V. Stehman, C. E. Woodcock, and M. A. Wulder. 2014. “Good Practices for Estimating Area and Assessing Accuracy of Land Change.” Remote Sensing of Environment 148: 42–57. doi:https://doi.org/10.1016/j.rse.2014.02.015.

- Olteanu-Raimond, A. M., L. See, M. Schultz, G. M. Foody, M. Riffler, T. Gasber, L. Jolivet, et al. 2020. “Use of Automated Change Detection and VGI Sources for Identifying and Validating Urban Land Use Change.” Remote Sensing 12 (7): 1186. doi:https://doi.org/10.3390/rs12071186.

- Parvanta, C., Y. Roth, and H. Keller. 2013. “Crowdsourcing 101: A Few Basics to Make You the Leader of the Pack.” Health Promotion Practice 14 (2): 163–167. doi:https://doi.org/10.1177/1524839912470654.

- Paul, J. D., W. Buytaert, S. Allen, J. A. Ballesteros-Cánovas, J. Bhusal, K. Cieslik, J. Clark, S. Dugar, D. M. Hannah, M. Stoffel, A. Dewulf, M. R. Dhital, W. Liu, J. L. Nayaval, B. Neupane, A. Schiller, P. J. Smith, and R. Supper. 2018. “Citizen Science for Hydrological Risk Reduction and Resilience Building.” Wiley Interdisciplinary Reviews 5: e1262.

- Rogers, E. M. 1962. Diffusion of Innovations. New York: The Free Press of Glencoe.

- Rossiter, D. G., J. Liu, S. Carlisle, and A. X. Zhu. 2015. “Can Citizen Science Assist Digital Soil Mapping?” Geoderma 259: 71–80. doi:https://doi.org/10.1016/j.geoderma.2015.05.006.

- Rotman, D., J. Preece, J. Hammock, K. Procita, D. Hansen, C. Parr, and D. Jacobs. 2012. “Dynamic Changes in Motivation in Collaborative Citizen-Science Projects.” Proceedings of the ACM 2012 Conference on Computer Supported Cooperative Work, Seattle, Washington, 217–226.

- Schepaschenko, D., J. Chave, O. L. Phillips, S. L. Lewis, S. J. Davies, M. Réjou-Méchain, P. Sist, et al. 2019. “The Forest Observation System, Building a Global Reference Dataset for Remote Sensing of Forest Biomass.” Scientific Data 6 (1): 198. doi:https://doi.org/10.1038/s41597-019-0196-1.

- Silberman, M. S., B. Tomlinson, R. LaPlante, J. Ross, L. Irani, and A. Zaldivar. 2018. “Responsible Research with Crowds: Pay Crowdworkers at Least Minimum Wage.” Communications of the ACM 61 (3): 39–41. doi:https://doi.org/10.1145/3180492.

- Silvertown, J. 2009. “A New Dawn for Citizen Science.” Trends in Ecology & Evolution 24 (9): 467–471. doi:https://doi.org/10.1016/j.tree.2009.03.017.

- Zhao, Q., L. Yu, X. C. Li, D. L. Peng, Y. G. Zhang, and P. Gong. 2021. “Progress and Trends in the Application of Google Earth and Google Earth Engine.” Remote Sensing 13 (18): 3778. doi:https://doi.org/10.3390/rs13183778.