ABSTRACT

To obtain complete coverage when scanning a large-scale scene with a laser scanner, pairwise point clouds usually have to be registered by corresponding geometric features when GNSS sensors provide insufficient direct georeferencing information. In this paper, we propose a target-less coarse registration method for pairwise point clouds using corresponding voxel-based planes, which are identified by a pair of conjugate 2-plane bases. First, the voxel-based planes are extracted from the entire point cloud, which is decomposed into 3D cubic grids by octree-based voxelization. Then, the 2-plane bases in each point cloud are constructed, and by comparing the dihedral angles of two 2-plane bases that come from the source and target point clouds, respectively, the conjugate 2-plane base pairs are generated one by one. Next, a set of plane correspondences is identified by a pair of conjugate 2-plane bases, and its corresponding largest consistency planes (LCP) set is calculated. Finally, a series of plane correspondence sets is obtained using the generated pairs of conjugate 2-plane bases, and the one with the highest LCP is used to compute the transformation matrix between the pairwise point clouds. Experimental results revealed that the proposed pairwise coarse registration method is effective for aligning point clouds acquired from indoor and outdoor scenes, with rotation errors less than 0.4 degrees, translation errors less than 0.4 m, root mean square distance (RMSD) less than 0.42 m, and a successful registration rate (SRR) of about 98%. Furthermore, the proposed method was more efficient than the point- and line-based methods under the same hardware and software configuration.

1. Introduction

Light detection and ranging (LiDAR) technology has developed rapidly over the past few decades. It can obtain millions of 3D points in a few seconds, and these data have become widely used in a variety of applications, such as urban planning (Gehrung et al. 2016; Li et al. 2017), scene reconstruction (Choi, Zhou, and Koltun 2015; Dong et al. 2018a), digitization management of cultural heritage (Remondino 2011; Yang and Zang 2014; Sánchez-Aparicio et al. 2018), deformation monitoring (Awrangjeb, Gilani, and Siddiqui 2018; Chen, Yu, and Wu 2018; Kusari et al. 2019; Zang et al. 2019), and forest monitoring (Kelbe et al. 2016; Polewski et al. 2018). Due to the limited view of a laser scanner, a single scan is not sufficient to describe the entire target area. Therefore, multiple scans need to be connected to capture the whole measured object; that is, all individual point clouds should be aligned to a common coordinate system (Dong et al. 2018b), which is an essential technology in 3D data processing (Wang et al. 2021). This process includes two steps: coarse and fine registration. Fine registration usually adopts the well-known iterative closest point (ICP) algorithm (Besl and McKay 1992) or its variants (Li and Song 2015; Tao et al. 2018; Shi, Liu, and Han 2020). However, the ICP-based methods usually require an appropriate initial transformation matrix, and their iterative process is time-consuming. Without a coarse registration as a prior, these methods tend to converge to a local minimum or do not converge at all. Therefore, coarse registration is of vital importance: it calculates the transformation matrix between two scans using only the geometric information obtained directly from the point clouds, without any additional data. In this paper, our goal is to perform the coarse registration based on voxel-based plane congruent sets. Researchers have developed a large number of methods to register pairwise point clouds roughly. These methods compute the transformation matrix with six degrees of freedom (DOF) (i.e. three translations and three rotations) by extracting different geometric features, including points, lines, and planes.

The point-based approaches are the most common, and many algorithms have been proposed. Some methods first extract keypoints from the pairwise point clouds to be matched and then calculate the corresponding local shape descriptors. By comparing the similarity of these descriptors, correspondences between keypoints from different point clouds can be established, which are used to compute the transformation matrix. These methods are called descriptor-based methods. Rusu, Blodow, and Beetz (2009) proposed the fast point feature histogram (FPFH) descriptor; likely correct corresponding points were established by comparing their FPFHs with the sample consensus method, and the transformation matrix was then obtained. Fu et al. (2021a) designed a novel descriptor called the multiscale eigenvalue statistic-based descriptor (MEVS), which was then used to perform coarse registration of mobile laser scanning (MLS) point clouds. Based on the covariance matrix of the keypoints, Fu et al. (2021b) constructed the feature descriptor vector (FDV), with which pairwise terrestrial laser scanner (TLS) point clouds were aligned. Besides the above-mentioned descriptors, many others have been reported in the literature, including the quintuple local coordinate image (QLCI) (Tao et al. 2020a), binary shape context (BSC) (Dong et al. 2018b), rotational contour signature (RCS) (Yang, Xiao, and Cao 2019), and local voxelized structure (LoVS) (Quan et al. 2018). The descriptor-based methods can align pairwise point clouds with large overlap well, but they tend to fail when the overlap is small. There are also point-based methods that do not rely on descriptors. For example, the four-point congruent sets (4PCS) method (Aiger, Mitra, and Cohen-Or 2008) uses the rule of intersection ratios between four points to establish correspondences and calculates the transformation matrix together with a registration score. By repeatedly establishing correspondences and calculating the transformation and its score, the transformation matrix with the highest score is taken as the final registration result. To improve the registration accuracy and efficiency, several improvements to 4PCS have been made, e.g. Super4PCS (Mellado, Aiger, and Mitra 2014), keypoint-based 4PCS (K-4PCS) (Theiler, Wegner, and Schindler 2014) and semantic keypoint-based 4PCS (SK-4PCS) (Ge 2017). The 4PCS-based algorithms are robust to noise, outliers, and small overlap between pairwise point clouds to some extent, but their registration accuracy and efficiency need further improvement. Other point-based algorithms include the coherent point drift (CPD) algorithm (Myronenko and Song 2010) and its variants (Lu et al. 2016; Zang et al. 2020). These methods solve the transformation matrix between pairwise point clouds by probability density estimation, but they cannot handle large point clouds well and are easily affected by noise and outliers. Moreover, deep learning-based methods (Aoki et al. 2019; Zhang et al. 2019; Pujol-Miró, Casas, and Ruiz-Hidalgo 2019) are promising: they can learn high-level information from large amounts of data to register small-scale pairwise point clouds with large overlap, but their results on large-scale point clouds with small overlap are poor.

In short, the point-based methods are versatile and can obtain sufficiently good registration results for point clouds with large overlap, but they have high computational complexity and low robustness to noise, occlusions and limited overlap.

Compared with point features, lines are higher-level geometric features with better robustness in surveying and remote sensing, and many scholars have studied registration methods based on line features. Al-Durgham et al. (2013, 2014) first intersected the planes segmented from the original point cloud to extract 3D line features; then, the included angle and distance between all pairs of lines were calculated and the candidate corresponding lines were selected; finally, the transformation matrix was calculated through a weight correction process (Renaudin, Habib, and Kersting 2011). These two methods (Al-Durgham, Habib, and Kwak 2013; Al-Durgham and Habib 2014) use non-conjugate point pairs on the conjugate 3D lines to compute the transformation parameters, so the accuracy is still poor even after adjustment by the weighting process. Yang et al. (2015) extracted and matched 2D building contours to register different types of urban point clouds. Their method provides a robust and reliable solution for registering TLS and airborne laser scanning (ALS) data, but it is only suitable for specific datasets with many buildings. Ge and Hu (2020), Li et al. (2021a) and Tao et al. (2020b) first projected point clouds along the vertical (z-axis) direction to extract 2D line features; then, they constructed 2D line structural unit pairs for horizontal rough registration; finally, they calculated the vertical alignment and obtained the 3D transformation matrix. These three methods (Ge and Hu 2020; Li et al. 2021a; Tao et al. 2020b) can only register pairwise point clouds that differ in rotation only about the z-axis. Xu, Xu, and Yang (2022) proposed a coarse registration method for urban point clouds based on 2-lines congruent sets (2LCS). First, lines in the vertical and horizontal directions were extracted to construct the 2-lines bases; then, according to their geometric characteristics, the congruent 2-lines base pairs were identified and the 2LCS was formed; finally, the transformation parameters were estimated using the 2LCS. The length and midpoint of each line were used to determine conjugate 2-lines bases. However, because of occlusion and differences in scanning viewpoint, the length of a line may differ between scans, so it is not an invariant characteristic between pairwise point clouds.

Generally speaking, line-based methods can register pairwise point clouds well to some extent, and they are more robust and efficient than point-based methods when aligning large-scale point clouds with small overlap. However, 2D line-based methods can only register point clouds obtained by a strictly levelled TLS, because they assume that only rotation differences about the z-axis exist; 3D line-based methods lack theoretical support, because the points on corresponding line pairs cannot be guaranteed to be conjugate points.

Regarding plane-based methods, the four parameters of a plane (a unit normal vector and a constant term denoting the distance from the origin to the plane) are unique, even when the plane is observed from different scans and only partly. Therefore, these methods have theoretical support when solving the transformation matrix from the parameters of a pair of corresponding planes. Moreover, a point cloud usually contains fewer plane features than point or line features, which indicates that plane-based methods are more time-saving. Thus, the plane-based registration methods are worth studying. Li et al. (2021b; 2022) applied the relationship between point and plane (Li et al. 2021b) and between line and plane (Li et al. 2022) as the constraint, respectively, and realized accurate registration between pairwise point clouds using the duality four elements method. However, the two methods rely on human-computer interaction to select the corresponding primitives, and this low degree of automation makes them difficult to apply widely in remote sensing. Xiao et al. (2013) first segmented the planes from the original point cloud using the cache octree region growth method; then, two planes with similar areas from the source and target point clouds were selected as a pair of potential corresponding planes to calculate the transformation matrix. Liang et al. (2016) first grouped the extracted planes according to the angle between their unit normal vectors; then, the corresponding plane pairs were identified from the pairwise point clouds by comparing their dihedral angles and plane areas; finally, the plane correspondences were used to calculate the transformation matrix. Favre et al. (2021) introduced a new metric based on plane characteristics (e.g. the distance between the projections of the origin on source and target planes, the distance between the centroids of source and target planes, the area ratio between the source and target planes, and the dot product of the normal vectors of the source and target planes) to find the best plane correspondences; then, the optimal transformation was estimated from the plane correspondences through a two-step minimization approach: closed-form optimization and Gauss-Newton minimization. These three methods (Xiao et al. 2013; Liang et al. 2016; Favre et al. 2021) all use the plane area as a constraint for identifying correspondences, which requires each plane to be scanned completely. However, because of occlusion during scanning, a plane is unlikely to be captured completely and with the same area in different scans. Xu et al. (2019) first extracted planar patches from voxelized point clouds by merging plane voxels; then, the ratios of plane normal vectors were used to find candidate corresponding sets of four planar patches from the pairwise point clouds; finally, the voxel-based four-plane congruent sets (V4PCS) method was proposed to register pairwise point clouds. This method uses only plane parameters to identify the correspondences and realize registration, and it can obtain high alignment accuracy. However, the processes of generating V4PCS bases in each point cloud and constructing conjugate V4PCS bases from different point clouds are complicated.

To reduce the high complexity of pairwise point cloud registration while guaranteeing its theoretical foundation and efficiency, this paper proposes a pairwise coarse registration method that traverses voxel-based 2-plane bases, inspired by Xu et al. (2019). First, the point cloud is voxelized by the octree structure and the voxels with planar properties are preserved, but without the merging performed by Xu et al. (2019); second, the corresponding plane pairs are identified by a pair of conjugate 2-plane bases that have similar dihedral angles; finally, a series of transformation matrices is computed from the corresponding plane pairs using the traversing strategy, and the optimal one is selected to register the pairwise point clouds. The main contributions of our work are summarized as follows:

A voxel-based plane extraction method is proposed. The points in the voxel with the planar properties are used to fit the parameters of the voxel-based plane, which will form the basic plane primitives for the proposed registration method.

Algorithms for constructing 2-plane bases and generating conjugate 2-plane base pairs are developed that rely only on the dihedral angle. In addition, a closed-form solution of transformation estimation using all corresponding plane pairs is adopted to optimize the coarse registration matrix.

A largest consistency planes (LCP) criterion is proposed to evaluate the transformation matrices and is used to select the optimal one.

The remainder of this paper is organized as follows. Section 2 explains the proposed registration method in detail; Section 3 presents the experiments together with a discussion and analysis of the results; and Section 4 concludes the paper and outlines the plan for future research.

2. Proposed registration method

Our proposed coarse registration method consists of three core steps: (1) voxel-based planes construction, (2) conjugate 2-plane base pairs generation, and (3) registration using corresponding planes.

Step1: The point clouds of the two scans are each voxelized into a 3D grid structure. For each voxel, the smoothness of the points within the voxel is estimated. Only those voxels that satisfy the curvature criterion are deemed planar voxels, and their plane parameters are calculated to form the voxel-based planes.

Step2: A 2-plane base is formed by two planes that satisfy the dihedral angle constraint in each point cloud. The conjugate 2-plane base pairs, which have similar dihedral angles in the pairwise point clouds, are used to calculate the rotation matrix, by which the initial set of corresponding planes is obtained.

Step3: The initial transformation matrix is estimated using the initial set of corresponding planes, and its corresponding LCP is calculated. In addition, the initial set of corresponding planes is updated by the initial transformation matrix, yielding the optimal set of corresponding planes.

With a pair of conjugate 2-plane bases, an initial transformation matrix and its corresponding LCP can be obtained. All 2-plane bases in the source point cloud are traversed to find their conjugate 2-plane bases in the target point cloud, so that a series of initial transformation matrices and their LCPs can be calculated. The matrix with the highest LCP is optimized using its optimal set of corresponding planes, and the optimized matrix is regarded as the final coarse registration result. The processing workflow is sketched in the accompanying figure.

2.1. Voxel-based planes generation

In this first step, the source and target point clouds are voxelized with a 3D grid structure and the voxels with planar properties are selected; then, the voxel-based planes are generated. This step includes two major procedures: (1) planar voxels selection and (2) voxel-based planes calculation.

2.1.1. Planar voxels selection

Step1: We adopt octree-based voxelization to decompose the entire point cloud into 3D cubic grids. The major advantages of using the octree structure were stated by Boerner, Hoegner, and Stilla (2017) and Xu et al. (2017a). In theory, the smaller the voxel size, the more details of the scan are preserved after voxelization and the more voxel-based planes are extracted. In our method, voxels of different point clouds from the same dataset are equal in size, but point clouds from different datasets can be voxelized with different voxel sizes. In our study, the voxel size is set manually according to the application, and it is one of the two important parameters of the proposed registration method.

Step2: The points in each voxel are counted; if the number of points is smaller than a threshold, the voxel is removed. The threshold represents the minimum number of points in a voxel and is the other of the two important parameters. The aim of setting this threshold is to improve the accuracy of the calculated voxel-based plane parameters, because the more points are used to fit a plane, the more robust its parameters are to noise.

Step3: The voxel property is calculated. Only the voxels with a planar property are preserved, which reduces the number of extracted voxel-based planes and improves the accuracy of the estimated plane parameters; both strongly influence the speed and quality of the registration. For the point set inside a voxel, the covariance matrix can be constructed by Equation (2)

$$\mathbf{C} = \frac{1}{N}\sum_{i=1}^{N}\left(\mathbf{p}_i-\bar{\mathbf{p}}\right)\left(\mathbf{p}_i-\bar{\mathbf{p}}\right)^{\mathrm{T}} \tag{2}$$

where $\mathbf{p}_i$ denotes a point in the voxel; $\bar{\mathbf{p}}$ is the centroid of these points; and $N$ is the number of points in the voxel.

The planar property of the voxel is calculated by Equation (3) from the eigenvalues, sorted in decreasing order of magnitude, obtained by the eigenvalue decomposition (EVD) of the covariance matrix. If the planar property satisfies a threshold, the voxel is regarded as a planar voxel and preserved. The threshold, which determines whether a voxel is planar or not, was set as 0.03 in this paper.
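The planar voxel test can be sketched as follows. This is only an illustration under stated assumptions: the planarity measure is taken here to be the surface variation, i.e. the smallest eigenvalue divided by the sum of the eigenvalues, which may differ from the measure used in Equation (3); the function names and the `min_points` value are hypothetical, while the 0.03 threshold is the value quoted above.

```python
import numpy as np

def voxel_planarity(points):
    """Surface variation (smallest eigenvalue over eigenvalue sum) of an (N, 3) point set.

    ASSUMPTION: surface variation stands in for the planar property of Equation (3).
    """
    points = np.asarray(points, dtype=float)
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / len(points)   # 3x3 covariance matrix
    eigvals = np.linalg.eigvalsh(cov)           # eigenvalues in ascending order
    total = eigvals.sum()
    return float(eigvals[0] / total) if total > 0 else 1.0

def is_planar_voxel(points, min_points=50, threshold=0.03):
    """Keep a voxel only if it has enough points and is sufficiently flat.

    min_points is a hypothetical value; the paper sets it per dataset.
    """
    if len(points) < min_points:
        return False
    return voxel_planarity(points) < threshold
```

A nearly flat patch gives a value close to zero, whereas points spread evenly through the voxel give a value near one third.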

2.1.2. Calculation of voxel-based planes

The Hessian normal form of the plane equation is adopted in this work, i.e. a plane is represented by its unit normal vector, an arbitrary point on the plane, and a constant term denoting the perpendicular distance from the origin to the plane.

The four parameters of the plane of a voxel, namely the unit normal vector and the constant term, are calculated by the random sample consensus (RANSAC) algorithm using the point set inside the voxel.

Step1: Three points are selected randomly from the point set and used to calculate a candidate plane.

Step2: The distance from each point in the point set to the candidate plane is calculated. If the distance is smaller than a threshold, the point is regarded as an inlier; otherwise, as an outlier. The threshold for determining the inliers was set as 0.5 m in this paper.

Step3: The plane is refined using the inliers obtained in Step 2, and the refined plane is set as the plane of the voxel.

Step4: The above three steps are repeated. The iteration stops and the final plane is output when the number of inliers is larger than a threshold, which was set as 0.8 times the number of points in the voxel, or when the number of iterations reaches the maximum, which was set as 50 in this paper.
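The four RANSAC steps above can be sketched as follows, using the thresholds quoted in the text (0.5 m, 0.8 and 50). Several details are assumptions made for illustration: the plane is written as n · p = d, the refinement is a least-squares fit by SVD performed once on the best inlier set, the normal is flipped so that d is non-negative, and all function names are hypothetical.

```python
import numpy as np

def fit_plane_lsq(points):
    """Least-squares plane through points: unit normal n and constant d with n . p = d."""
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]                      # direction of smallest variance
    return normal, float(normal @ centroid)

def ransac_plane(points, dist_thresh=0.5, inlier_ratio=0.8, max_iter=50, seed=0):
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = None
    for _ in range(max_iter):
        # Step 1: candidate plane from three random points
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:
            continue                     # collinear sample, try again
        normal = normal / norm
        # Step 2: inliers are points closer to the plane than dist_thresh
        inliers = np.abs((points - sample[0]) @ normal) < dist_thresh
        if best_inliers is None or inliers.sum() > best_inliers.sum():
            best_inliers = inliers
        # Step 4: stop early once enough of the voxel's points are inliers
        if best_inliers.sum() > inlier_ratio * len(points):
            break
    if best_inliers is None or best_inliers.sum() < 3:
        raise ValueError("no plane could be fitted")
    # Step 3: refine the plane with the inliers
    normal, d = fit_plane_lsq(points[best_inliers])
    if d < 0:                            # ASSUMED orientation convention: d >= 0
        normal, d = -normal, -d
    return normal, d, best_inliers
```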

In theory, any given plane has two normal vectors that point in opposite directions. The choice of normal vector orientation in the source and target coordinate systems may result in an incorrect solution of the transformation matrix or an inconsistent system of equations. Therefore, the direction of the normal vector needs to be defined in a consistent way (Khoshelham 2016). Here, we simply adjust the normal vector so that a fixed sign condition holds, which eliminates the ambiguity of its direction.

2.2. Conjugate 2-plane base pairs construction

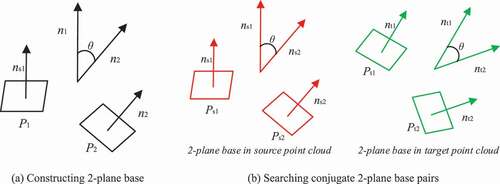

Traditional plane-based registration methods usually select a triple of planes from each scan to form a 3D corner (Xiao et al. 2013; Xu et al. 2017b), which defines a coordinate system that determines six DOFs. By finding the corresponding planes from conjugate triples of planes, the transformation parameters can be obtained (Theiler and Schindler 2012). On the one hand, there are more geometric constraints between three planes, which makes it difficult and complex to recognize the conjugate triples of planes from pairwise point clouds; on the other hand, there are six possible assignments of corresponding plane pairs between two triples of planes, which burdens the calculation of the transformation matrix. If one plane is removed from the triple of planes (forming a 2-plane base), the geometric constraints between planes and the complexity of constructing plane correspondences are reduced significantly, since there are only two possible assignments of corresponding planes between a pair of conjugate 2-plane bases. Based on this idea, we propose congruent voxel-based 2-plane bases for estimating plane correspondences, which are further used to estimate the transformation matrix. Compared with the classic base built from a triple of planes, our method uses neither the simple constraint on the direction of normal vectors nor the positions of the intersection points of planes. Instead, we utilize the invariant congruence formed by the dihedral angle between two planes to find the plane correspondences, which is more efficient and yields reliable correspondences.

2.2.1. Generation of 2-plane bases

The plane set extracted from a point cloud is used to generate 2-plane bases via the dihedral angle of two planes. If the dihedral angle between two planes lies between a lower and an upper angle threshold, the two planes are regarded as a 2-plane base. The two thresholds were set as 10 degrees and 80 degrees in this paper. The lower threshold avoids selecting two approximately parallel planes as a 2-plane base, which cannot be used to calculate the rotation matrix between pairwise point clouds; the upper threshold controls the number of generated 2-plane bases, which determines the registration efficiency.

Each 2-plane base consists of two planes and their dihedral angle, as illustrated in the accompanying figure. In Algorithm 1, we provide a detailed procedure for generating the available 2-plane bases in a point cloud.
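The generation of 2-plane bases can be sketched as a double loop over the extracted planes. This is a minimal illustration, not a reproduction of Algorithm 1: the dihedral angle is assumed here to be folded into the range of 0 to 90 degrees by taking the absolute value of the dot product of the unit normals, and the function names are hypothetical. The thresholds of 10 and 80 degrees are the values quoted above.

```python
import numpy as np

def dihedral_angle_deg(n1, n2):
    """Angle between two planes in degrees, from their unit normals.

    ASSUMPTION: the angle is folded into [0, 90] via the absolute dot product.
    """
    cos_angle = np.clip(abs(float(np.dot(n1, n2))), 0.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle)))

def generate_two_plane_bases(normals, min_deg=10.0, max_deg=80.0):
    """Return (i, j, angle) for every plane pair whose dihedral angle lies in (min_deg, max_deg)."""
    normals = np.asarray(normals, dtype=float)
    bases = []
    for i in range(len(normals)):
        for j in range(i + 1, len(normals)):
            angle = dihedral_angle_deg(normals[i], normals[j])
            if min_deg < angle < max_deg:
                bases.append((i, j, angle))
    return bases
```

Sorting the resulting bases by angle would allow the nearest-angle search in the target point cloud to use a binary search instead of a linear scan.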


2.2.2. Recognition of initial plane correspondences by conjugate 2-plane base pairs

To identify the initial corresponding voxel-based planes from source and target point clouds, the procedure is divided into three successive steps: (1) selecting conjugate 2-plane base pairs, (2) calculating rotation matrix, and (3) identifying initial plane correspondences.

Selecting conjugate 2-plane base pairs

For each 2-plane base in the source point cloud, its nearest neighbour in the target point cloud is found by comparing their dihedral angles, as illustrated in the accompanying figure. Assuming that the two bases are a pair of conjugate 2-plane bases, the first and second planes of the source base can correspond to the first and second planes of the target base, respectively, but they can also correspond in the swapped order. Therefore, two rotation matrices can be calculated from a pair of conjugate 2-plane bases.

Calculating rotation matrix

Taking one of the two possible assignments as an example, an equation can be formulated that relates the unit normal vectors of the corresponding planes through the 3D rotation matrix between the source and target point clouds.

Performing the singular value decomposition (SVD) on Equation (8) then yields the 3D rotation matrix.

Using a rotation matrix calculated from a pair of conjugate 2-plane bases, a set of initial plane correspondences can be identified.
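A rotation can be recovered from paired unit normals with the standard SVD-based (Kabsch) solution, sketched below. The exact matrices used in Equations (8)-(10) are not reproduced here; the cross-covariance construction, the determinant correction that excludes reflections, and the function name are assumptions of this illustration.

```python
import numpy as np

def rotation_from_normals(src_normals, tgt_normals):
    """Rotation R that best maps source unit normals onto target unit normals.

    Rows of the two (k, 3) arrays are corresponding normals; k = 2 non-parallel
    normals are already enough to fix the rotation.
    """
    src = np.asarray(src_normals, dtype=float)
    tgt = np.asarray(tgt_normals, dtype=float)
    h = src.T @ tgt                               # 3x3 cross-covariance of the normals
    u, _, vt = np.linalg.svd(h)
    sign = np.sign(np.linalg.det(vt.T @ u.T))     # +1 or -1; guards against a reflection
    return vt.T @ np.diag([1.0, 1.0, sign]) @ u.T
```

For a pair of conjugate 2-plane bases, the function would be called twice, once for each of the two possible plane assignments, giving the two candidate rotation matrices mentioned above.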

Identifying initial plane correspondences

The corresponding voxel-based planes from the pairwise point clouds are identified using the unit normal vectors of the planes. Consider the sets of original unit normal vectors of the planes in the source and target point clouds, which contain m1 and m2 planes, respectively. The initial plane correspondences are identified using these two sets and the rotation matrix.

Step1: The planes in the source point cloud are rotated using the rotation matrix, giving the set of unit normal vectors of the rotated planes.

Step2: For each rotated normal vector of the source point cloud, if there exists a normal vector in the target set that satisfies the constraint in Equation (11), where the norm is the 3D Euclidean distance, the two planes are considered a pair of corresponding planes. In other words, two planes are identified as an initial plane correspondence only when each normal vector is the nearest neighbour of the other: the target normal is the nearest to the rotated source normal among all target normals, and the rotated source normal is the nearest to that target normal among all rotated source normals. All m initial plane correspondences form the set of initial plane correspondences.

With more than three plane correspondences, the transformation matrix between the source and target point clouds can be computed from the parameters of the corresponding planes, e.g. the unit normal vector and the constant term d.
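The mutual nearest-neighbour rule described in Step 2 can be sketched with a dense distance matrix between the rotated source normals and the target normals. The brute-force search and the function name are choices made for this illustration; any additional distance threshold that Equation (11) may impose is not modelled.

```python
import numpy as np

def mutual_nearest_normals(src_normals, tgt_normals, rotation):
    """Pairs (i, j) where rotated source normal i and target normal j are each other's nearest."""
    rotated = np.asarray(src_normals, dtype=float) @ np.asarray(rotation, dtype=float).T
    tgt = np.asarray(tgt_normals, dtype=float)
    # Euclidean distance between every rotated source normal and every target normal
    dists = np.linalg.norm(rotated[:, None, :] - tgt[None, :, :], axis=2)
    nearest_tgt = dists.argmin(axis=1)   # best target for each source plane
    nearest_src = dists.argmin(axis=0)   # best source for each target plane
    return [(i, int(j)) for i, j in enumerate(nearest_tgt) if nearest_src[j] == i]
```

For point clouds with many planes, a k-d tree over the target normals would replace the dense matrix, at the cost of an extra dependency.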

2.3. Registration using corresponding planes

The registration consists of two essential steps: (1) estimation of initial transformation parameters and (2) optimization of final transformation parameters. The initial transformation parameters are estimated by the initial plane correspondences identified in Section 2.2. The optimized registration parameters are estimated using optimal corresponding planes recognized by the initial transformation matrix.

2.3.1. Estimation of initial transformation parameters

With the initial plane correspondences, Equation (12) can be formulated. For the i-th plane correspondence, it relates the unit normal vectors and the constant terms of the plane in the source and target point clouds through the rotation and translation components of the initial transformation matrix.

The rotation component can be obtained by the SVD method using Equations (8)-(10), and the translation component can be solved using the least squares (LS) algorithm, as shown in Equation (13).

Combining the rotation and translation components, the initial transformation matrix is obtained.
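The least-squares solution for the translation can be sketched as follows. The sketch assumes the plane convention n · p = d; under a rigid transformation p' = R p + t, a plane then maps to n' = R n and d' = d + n' · t, so each correspondence contributes one linear equation n_t · t = d_t - d_s. The sign convention and the function names are assumptions and may differ from Equations (12) and (13).

```python
import numpy as np

def translation_from_planes(tgt_normals, src_d, tgt_d):
    """Solve n_t,i . t = d_t,i - d_s,i for t in the least-squares sense.

    ASSUMPTION: planes are written as n . p = d. At least three correspondences
    with linearly independent normals are needed for a unique solution.
    """
    a = np.asarray(tgt_normals, dtype=float)
    b = np.asarray(tgt_d, dtype=float) - np.asarray(src_d, dtype=float)
    t, *_ = np.linalg.lstsq(a, b, rcond=None)
    return t

def make_transform(rotation, translation):
    """Assemble the 4x4 homogeneous transformation matrix from R and t."""
    transform = np.eye(4)
    transform[:3, :3] = rotation
    transform[:3, 3] = translation
    return transform
```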

The LCP of the initial transformation matrix can then be calculated, and the optimal plane correspondences can be obtained based on it.

Step1: The planes in the source point cloud, described by their plane parameters, are transformed by the initial transformation matrix, giving the transformed planes of the source point cloud.

Step2: Based on the initial transformation matrix, the optimal plane correspondences are identified. First, the nearest planes between the transformed source planes and the planes of the target point cloud are determined from their unit normal vectors by Equation (11). Then, the constant term of the plane parameters is used to confirm whether the nearest planes are corresponding planes. If the nearest planes satisfy the constraint in Equation (16), whose threshold was set as 1 m in this paper, they are regarded as a pair of corresponding planes; otherwise, they are not. The value of the LCP corresponding to the initial transformation matrix is equal to the number of plane correspondences, and all corresponding planes form the set of optimal plane correspondences.
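The LCP evaluation can be sketched by combining the two checks above: mutual nearest normals after the transformation, followed by a comparison of the constant terms against the 1 m threshold. The plane convention n · p = d, the use of mutual (rather than one-sided) nearest neighbours in this step, and the function name are assumptions of this illustration.

```python
import numpy as np

def count_lcp(src_normals, src_d, tgt_normals, tgt_d, rotation, translation, d_thresh=1.0):
    """Return (LCP value, list of (i, j) plane correspondences) for one candidate transformation."""
    src_n = np.asarray(src_normals, dtype=float)
    tgt_n = np.asarray(tgt_normals, dtype=float)
    src_d = np.asarray(src_d, dtype=float)
    tgt_d = np.asarray(tgt_d, dtype=float)
    # Transform source planes: n' = R n, d' = d + n' . t  (ASSUMED convention n . p = d)
    moved_n = src_n @ np.asarray(rotation, dtype=float).T
    moved_d = src_d + moved_n @ np.asarray(translation, dtype=float)
    dists = np.linalg.norm(moved_n[:, None, :] - tgt_n[None, :, :], axis=2)
    nearest_tgt = dists.argmin(axis=1)
    nearest_src = dists.argmin(axis=0)
    pairs = [
        (i, int(j))
        for i, j in enumerate(nearest_tgt)
        if nearest_src[j] == i and abs(moved_d[i] - tgt_d[j]) < d_thresh
    ]
    return len(pairs), pairs
```

In the traversing strategy, this function would be evaluated once per candidate transformation, and the candidate with the largest returned count would be kept.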

2.3.2. Optimization of final transformation parameters

Although the initial rotation and translation parameters have been computed, the optimal transformation parameters still need to be estimated. Here, we apply the optimal plane correspondences to obtain the closed-form solution of the transformation matrix (Khoshelham 2016; Forstner and Khoshelham 2017). It is worth mentioning that our closed-form solution does not apply normalization by mean-centring and scaling: translation normalization (i.e. mean-centring) has already been realized in the estimation of the initial translation parameters, and scaling is not considered in our study because LiDAR technology measures the real distance between points.

Based on the set of optimal plane correspondences, Equation (12) can be updated, and the optimal rotation and translation parameters can be calculated by the SVD and LS algorithms, respectively.

From the above steps of the proposed coarse registration scheme, a pair of conjugate 2-plane bases yields an initial transformation matrix together with its LCP. For each 2-plane base in the source point cloud, its corresponding 2-plane base is found in the target point cloud using the traversing strategy; then, a series of initial transformation matrices with their LCPs is obtained; finally, the transformation matrix with the highest LCP is optimized using its optimal plane correspondences, and the optimized transformation matrix is output as the final coarse registration result. To illustrate our method more clearly, Algorithm 2 provides a detailed procedure for the proposed coarse registration algorithm.


3. Experimental results and discussion

In this section, experiments are performed on five datasets, two of which are indoor datasets and three of which are outdoor datasets, to evaluate the proposed method in terms of time performance, registration accuracy, root mean square distance (RMSD) and successful registration rate (SRR), and to compare it with other state-of-the-art algorithms. All the experiments were carried out on a computer with Windows 10, an Intel(R) Xeon(R) E-2176G CPU at 3.70 GHz and 64.0 GB of RAM.

3.1. Experimental datasets and evaluation metric

3.1.1. Experimental datasets

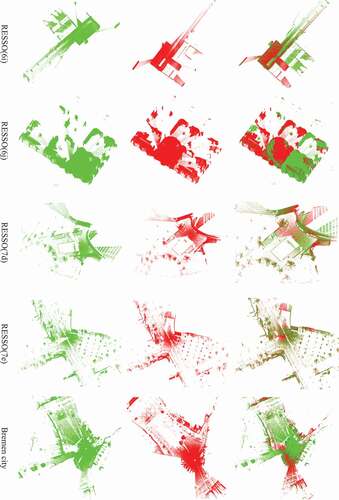

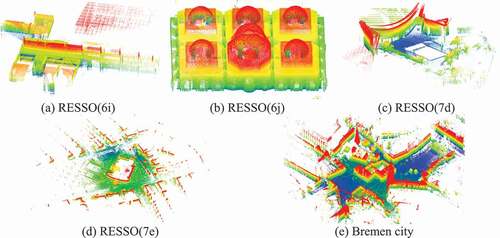

The performance of the proposed registration algorithm is evaluated using five datasets (i.e. RESSO(6i), RESSO(6j), RESSO(7d), RESSO(7e), and Bremen city), all of which include ground truths that allow a proper assessment. The RESSO benchmark is a TLS dataset presented by Chen et al. (2020) for evaluating the registration of point clouds with a small overlap. The Bremen city benchmark is a dataset from the Thermal Mapper project that was acquired by Jacobs University Bremen (Borrmann, Elseberg, and Nüchter 2013). Table 1 provides a detailed description of the five datasets used in this paper, and Figure 3 shows the full view of the experimental datasets.

Figure 3. Full view of the five experimental datasets, with shading representing differences in height. (a), (b), (c), (d) and (e) show the RESSO(6i), RESSO(6j), RESSO(7d), RESSO(7e) and Bremen city datasets respectively.

Table 1. Detailed information of the experimental datasets.

3.1.2. Experimental metric

The registration performance is evaluated in terms of registration accuracy, RMSD, SRR, and time performance. These criteria are commonly adopted by many researchers (Ghorbani et al. 2022; Fu et al. 2021a, 2021b; Xu et al. 2019).

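The registration accuracy includes the rotation error $e_r$ and the translation error $e_t$, which are calculated by comparison with the ground truth. Given the target point cloud $P_t$, the transformation matrix $\mathbf{T}$ from the source point cloud $P_s$ to $P_t$ can be computed via the registration method. The residual transformation $\Delta\mathbf{T}$ from $P_s$ to $P_t$ is defined as

$$\Delta\mathbf{T} = \mathbf{T}\,\mathbf{T}_G^{-1} = \begin{bmatrix} \Delta\mathbf{R} & \Delta\mathbf{t} \\ \mathbf{0}^{\top} & 1 \end{bmatrix}$$

where $\mathbf{T}_G$ is the ground truth.

The rotation error $e_r$ and translation error $e_t$ can be calculated from the corresponding rotational component $\Delta\mathbf{R}$ and translational component $\Delta\mathbf{t}$, as expressed in Equation (18):

$$e_r = \arccos\left(\frac{\operatorname{tr}(\Delta\mathbf{R}) - 1}{2}\right), \qquad e_t = \lVert \Delta\mathbf{t} \rVert \tag{18}$$

where $\operatorname{tr}(\Delta\mathbf{R})$ denotes the trace of $\Delta\mathbf{R}$.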
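The two accuracy measures can be computed as in the minimal sketch below. It assumes 4x4 homogeneous matrices and a residual formed as the estimate multiplied by the inverse of the ground truth; the function name `registration_errors` is hypothetical.

```python
import numpy as np

def registration_errors(T_est, T_gt):
    """Return (rotation error in degrees, translation error) of an estimate.

    Assumption: the residual is T_est @ inv(T_gt), with both inputs given
    as 4x4 homogeneous transformation matrices.
    """
    dT = np.asarray(T_est, dtype=float) @ np.linalg.inv(T_gt)
    dR, dt = dT[:3, :3], dT[:3, 3]
    # Rotation angle from the trace; clip guards against rounding just
    # outside the valid arccos domain.
    cos_angle = np.clip((np.trace(dR) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle))), float(np.linalg.norm(dt))
```

The translation error carries the unit of the point coordinates (metres for the datasets used here).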

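The RMSD is a statistical criterion calculated between the source point cloud after applying the registration algorithm (taken as $P'$) and the same point cloud in its true position (taken as $P^{G}$). The RMSD is computed as follows:

$$\mathrm{RMSD} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} \lVert \mathbf{p}'_i - \mathbf{p}^{G}_i \rVert^{2}}$$

where $\mathbf{p}'_i \in P'$ and $\mathbf{p}^{G}_i \in P^{G}$ are the two positions of the same source point, $\lVert\cdot\rVert$ is the Euclidean distance, and $n$ is the number of points in the source point cloud.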
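As a minimal sketch of this criterion, the source points can be transformed once with the estimated matrix and once with the ground truth, and the per-point distances aggregated. The function name `rmsd` and the 4x4 homogeneous-matrix inputs are assumptions for illustration.

```python
import numpy as np

def rmsd(source_points, T_est, T_gt):
    """Root mean square distance between registered and true positions."""
    pts = np.asarray(source_points, dtype=float)
    homog = np.c_[pts, np.ones(len(pts))]                     # (n, 4)
    registered = (homog @ np.asarray(T_est, float).T)[:, :3]  # after registration
    truth = (homog @ np.asarray(T_gt, float).T)[:, :3]        # true position
    sq_dist = np.sum((registered - truth) ** 2, axis=1)
    return float(np.sqrt(np.mean(sq_dist)))
```

Because both copies come from the same source points, the point-to-point correspondence is known and no nearest-neighbour search is needed.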

The SRR is a percentage of completeness: it indicates how many of the pairwise registrations were successful and can therefore enter the fine registration stage. This criterion is defined in Equation (20):

$$\mathrm{SRR} = \frac{N_s}{N} \times 100\% \tag{20}$$

where $N_s$ and $N$ are the number of successful registrations and the number of pairs of adjacent point clouds to be registered, respectively. (In this paper, a registration result is regarded as successful only when its RMSD is less than 1.0 m.)
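The success criterion translates directly into code. The sketch below assumes one RMSD value per registered pair; the function name and the sample values are made up for illustration.

```python
import numpy as np

def successful_registration_rate(rmsd_values, threshold_m=1.0):
    """Percentage of pairs whose RMSD is below the success threshold."""
    values = np.asarray(rmsd_values, dtype=float)
    return 100.0 * np.count_nonzero(values < threshold_m) / values.size

# Hypothetical RMSD values (m) for four pairs; one exceeds the 1.0 m threshold.
example_srr = successful_registration_rate([0.2, 0.4, 1.5, 0.9])
```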

3.2. Registration result

To clearly demonstrate the registration procedure of the proposed method, Figure 4 shows the results of extracting voxel-based planes, generating 2-plane bases, calculating the rotation matrix from a pair of conjugate 2-plane bases, and computing the transformation matrix using the conjugate voxel-based planes that are recognized by the obtained rotation matrix.

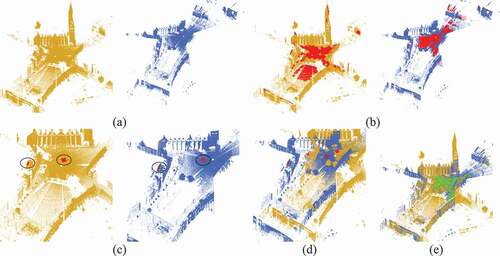

Figure 4. The registration results of the proposed method in each step. (a) Original input point clouds; (b) Voxel-based planes; (c) 2-plane bases; (d) Point clouds aligned by the rotation matrix that is calculated from the conjugate 2-plane base pairs; (e) Point clouds aligned by the transformation matrix that is calculated from the conjugate voxel-based planes.

Figure 4(a) shows the input point clouds, and Figure 4(b) shows the extracted voxel-based planes. A pair of conjugate 2-plane bases is then recognized from the pairwise point clouds, as shown in Figure 4(c). Figure 4(d) shows the point clouds aligned using the rotation matrix calculated from a pair of conjugate 2-plane bases: the orientations of the two point clouds are consistent, but there are obvious displacements along the x-, y- and z-axes. The reason is that a pair of conjugate 2-plane bases, with its five necessary observations (conditions), can only determine a rotation matrix with three DOFs for a pair of point clouds; it cannot determine a transformation matrix with six DOFs. In other words, a pair of conjugate 2-plane bases can compute the rotation matrix, but not the translation vector, between a pair of point clouds. Therefore, more conjugate voxel-based planes (at least three) are recognized according to the obtained rotation matrix and used to align the pairwise point clouds in both rotation and translation, as shown in Figure 4(e).
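The need for at least three planes can be checked numerically. Assuming each matched plane contributes one linear constraint of the form n · t = Δd on the translation t (an assumption of this sketch, with a hypothetical function name), the translation is fully determined only when the stacked normals have rank 3:

```python
import numpy as np

def translation_is_determined(target_normals, tol=1e-6):
    """True when the stacked plane normals constrain all three components of t."""
    N = np.atleast_2d(np.asarray(target_normals, dtype=float))
    return bool(np.linalg.matrix_rank(N, tol=tol) == 3)

two_planes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]                      # one 2-plane base
three_planes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
two_parallel = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
```

With `two_planes` the shift along the intersection line of the two planes stays free, and `two_parallel` shows that three planes are not enough when two of them share a normal direction.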

The accompanying figure shows a few pairs of point clouds registered by our method on the five experimental datasets. As can be seen, the pairwise point clouds are automatically aligned. There is no significant inconsistency between the two registered point clouds; therefore, it can be qualitatively concluded that the proposed method can feasibly register pairwise point clouds.

3.3. Evaluation and comparison

To quantitatively evaluate the proposed method for point cloud registration, the registration results are compared with the ground truths in terms of rotation and translation errors, RMSD, SRR, and time performance. To further analyse the performance of our method, two well-known initial alignment algorithms are introduced as baselines for performance comparison, namely the point-based method FPFH (Rusu, Blodow, and Beetz 2009) and the line-based method 2DLINE (Tao et al. 2020b).

3.3.1. Rotation and translation error evaluation

Table 2 summarizes the rotation and translation errors (i.e. minimum, maximum, average, and root mean square error (RMSE)) on the five testing datasets. Note that only the successful registrations are used to calculate the average and RMSE of the rotation and translation errors (the same holds for the RMSD in the following calculation). These experimental results demonstrate that the proposed method performs well in registering pairwise point clouds, with average rotation error less than 0.4° and translation error less than 0.4 m, which can satisfy the requirements of object extraction and 3D reconstruction.
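The statistics described above can be sketched as follows. Taking the minimum and maximum over the successful registrations as well is an assumption of this example (the text only states it for the average and RMSE), and the function name and sample values are hypothetical.

```python
import numpy as np

def summarize_errors(errors, rmsd_values, threshold_m=1.0):
    """Min, max, average and RMSE of errors over successful registrations."""
    errors = np.asarray(errors, dtype=float)
    ok = np.asarray(rmsd_values, dtype=float) < threshold_m  # success mask
    kept = errors[ok]
    return {
        "min": float(kept.min()),
        "max": float(kept.max()),
        "average": float(kept.mean()),
        "rmse": float(np.sqrt(np.mean(kept ** 2))),
    }

# Made-up rotation errors (degrees) and RMSD values (m) for three pairs;
# the third pair fails the 1.0 m criterion and is excluded.
stats = summarize_errors([0.1, 0.3, 5.0], [0.2, 0.4, 2.0])
```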

Table 2. Quantitative evaluation of the proposed method by rotation and translation error.

3.3.2. SRR, RMSD evaluation and time performance

Table 3 reports the SRR, RMSD and time performance of the proposed method, from which we find that:

Our method registers all pairs of point clouds from the RESSO(6j), RESSO(7d), RESSO(7e) and Bremen city datasets, but fails on one pair from the RESSO(6i) dataset. The total SRR of the proposed method is about 98%.

The average RMSD is less than 0.42 m on every dataset, which demonstrates the good performance of our method.

It takes only a few minutes to register millions of points, which shows that the proposed method is efficient for registering large-scale point clouds with large numbers of points.

Table 3. Quantitative evaluation of the proposed method by SRR, RMSD and time.

In short, the proposed method aligns pairwise point clouds well in both indoor (RESSO(6i) and RESSO(6j) datasets) and outdoor (RESSO(7d), RESSO(7e) and Bremen city datasets) scenes.

3.3.3. Performance comparison and analysis

The point-based method FPFH (Rusu, Blodow, and Beetz 2009) and the line-based method 2DLINE (Tao et al. 2020b) are selected as the benchmark methods for the comparison experiments. Table 4 lists their SRR, rotation and translation errors, RMSD and runtime. The proposed method outperformed these state-of-the-art registration methods on the testing datasets. More specifically, the following observations can be made from the comparison results.

The proposed method (the voxel-plane-based method) outperformed the line-based method (2DLINE; Tao et al. 2020b) and the point-based method (FPFH; Rusu, Blodow, and Beetz 2009) in terms of SRR, registration errors and RMSD, which demonstrates that voxel-based planes are better registration elements than feature lines and feature points.

Our method achieved the best efficiency, which is attributable to two factors. First, the number of generated voxel-based planes is smaller than the number of feature lines generated by the 2DLINE method and the number of feature points used in the FPFH method. Second, compared with the FPFH method, which spends a lot of time calculating distinctive descriptors for the feature points from their neighbours, the proposed method only needs to decompose the entire point cloud into 3D cubic grids by octree-based voxelization and calculate the voxel-based planes, which is a more time-efficient procedure.
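To illustrate why computing voxel-based planes is inexpensive, the sketch below fits one plane per occupied voxel by PCA. It uses a uniform grid rather than an octree, and the planarity test (smallest eigenvalue share below `max_curvature`), the parameter values and the function name are all assumptions for illustration, not the paper's extraction procedure.

```python
import numpy as np

def voxel_planes(points, voxel_size, min_points=10, max_curvature=0.01):
    """Fit one plane (unit normal n, offset d with n . x = d) per planar voxel."""
    points = np.asarray(points, dtype=float)
    # Assign every point to a cubic voxel of a uniform grid.
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True,
                                   return_counts=True)
    order = np.argsort(inverse.ravel(), kind="stable")
    groups = np.split(points[order], np.cumsum(counts)[:-1])

    planes = []
    for pts in groups:
        if len(pts) < min_points:
            continue  # too few points for a stable fit
        centroid = pts.mean(axis=0)
        eigvals, eigvecs = np.linalg.eigh(np.cov((pts - centroid).T))
        total = eigvals.sum()
        if total <= 0.0 or eigvals[0] / total > max_curvature:
            continue  # degenerate or not planar enough
        normal = eigvecs[:, 0]  # direction of least variance
        planes.append((normal, float(normal @ centroid)))
    return planes
```

Each voxel costs one 3x3 eigen-decomposition, with no per-point neighbourhood search or descriptor computation.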

Table 4. Performance comparison.

4. Conclusions

In this paper, we proposed a target-less algorithm for fast coarse registration of pairwise point clouds. The voxel-based planes are extracted from the original point cloud using octree-based voxelization, and the 2-plane bases are then constructed. After that, the conjugate 2-plane bases are generated from the pairwise point clouds by comparing dihedral angles and used to calculate the initial plane correspondences, which are in turn used to calculate the initial transformation matrix and the corresponding LCP. The matrix with the highest LCP is applied to find the optimal plane correspondences, which are then used to optimize that transformation matrix. Because the planes are derived from the geometric features of the points within the voxel structure, the number of selected plane features is significantly smaller than the number of points, which improves the efficiency of registration compared with traditional point-based methods. The use of plane features as the basic registration element can also be more robust when handling point clouds with varying point density. Compared with the line-based method, which lacks theoretical support because it cannot determine the corresponding points from the line features for calculating the translation vector, the proposed registration method using voxel-based planes as the basic element has rigorous mathematical support. The experimental results on different point clouds reveal that our proposed method is effective and outperforms the baseline methods. For the task of fast orientation between two scans, our proposed method achieves a rotation error of less than 0.4 degrees, a translation error of less than 0.4 m, an RMSD of less than 0.42 m, and an SRR of about 98%. The registration results satisfy the goal of coarse registration. In the future, we will study the automatic selection of the voxel size and the minimum number of points, and add robust estimation to the translation vector calculation to further improve its accuracy.

Author contributions

Z. Li designed the method. Y. Fu implemented the method and wrote the original paper. F. Xiong obtained the experimental results. H. He analysed these results. W. Wang and Y. Deng checked and revised the paper. All the authors have read and agreed to publish this version of the manuscript.

Acknowledgments

We would like to thank the authors of the algorithms and open-source code used in this paper, especially Wuyong Tao for sharing with us his ideas and code for the line-based registration algorithm. We would also like to thank the providers of the datasets used in this paper.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Aiger, D., N. J. Mitra, and D. Cohen-Or. 2008. “4-Points Congruent Sets for Robust Pairwise Surface Registration.” ACM Transactions on Graphics 27 (3): 1–10. doi:10.1145/1360612.1360684.

- Al-Durgham, K., and A. Habib. 2014. “Association-Matrix-Based Sample Consensus Approach for Automated Registration of Terrestrial Laser Scans Using Linear Features.” Photogrammetric Engineering and Remote Sensing 80 (11): 1029–1039. doi:10.14358/PERS.80.11.1029.

- Al-Durgham, K., A. Habib, and E. Kwak. 2013. “RANSAC Approach for Automated Registration of Terrestrial Laser Scans Using Linear Features.” ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences II-5/W2: 11–18. doi:10.5194/isprsannals-II-5-W2-13-2013.

- Aoki, Y., H. Goforth, R. Srivatsan, and S. Lucey. 2019. “PointNetlk: Robust & Efficient Point Cloud Registration Using PointNet.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 7163–7172. Long Beach, CA, USA: IEEE Press. doi:10.1109/CVPR.2019.00733.

- Awrangjeb, M., S. Gilani, and F. Siddiqui. 2018. “An Effective Data-Driven Method for 3-D Building Roof Reconstruction and Robust Change Detection.” Remote Sensing 10 (10): 1512. doi:10.3390/rs10101512.

- Besl, P., and D. McKay. 1992. “A Method for Registration of 3-D Shapes.” IEEE Transactions on Pattern Analysis and Machine Intelligence 14 (2): 239–256. doi:10.1109/34.121791.

- Boerner, R., L. Hoegner, and U. Stilla. 2017. “Voxel Based Segmentation of Large Airborne Topobathymetric Lidar Data.” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences XLII-1/W1: 107–114. doi:10.5194/isprs-archives-XLII-1-W1-107-2017.

- Borrmann, D., J. Elseberg, and A. Nüchter. 2013. “Thermal 3D Mapping of Building Façades.” Intelligent Autonomous Systems 12: 173–182. doi:10.1007/978-3-642-33926-4_16.

- Chen, S., L. Nan, R. Xia, J. Zhao, and P. Wonka. 2020. “PLADE: A Plane-Based Descriptor for Point Cloud Registration with Small Overlap.” IEEE Transactions on Geoscience and Remote Sensing 58 (4): 2530–2540. doi:10.1109/TGRS.2019.2952086.

- Chen, X., K. Yu, and H. Wu. 2018. “Determination of Minimum Detectable Deformation of Terrestrial Laser Scanning Based on Error Entropy Model.” IEEE Transactions on Geoscience and Remote Sensing 56 (1): 105–116. doi:10.1109/TGRS.2017.2737471.

- Choi, S., Q. Zhou, and V. Koltun. 2015. “Robust Reconstruction of Indoor Scenes.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5556–5565. Boston, MA, USA: IEEE Press. doi:10.1109/CVPR.2015.7299195.

- Dong, Z., B. Yang, P. Hu, and S. Scherer. 2018a. “An Efficient Global Energy Optimization Approach for Robust 3D Plane Segmentation of Point Clouds.” ISPRS Journal of Photogrammetry and Remote Sensing 137: 112–133. doi:10.1016/j.isprsjprs.2018.01.013.

- Dong, Z., B. Yang, F. Liang, R. Huang, and S. Scherer. 2018b. “Hierarchical Registration of Unordered TLS Point Clouds Based on Binary Shape Context Descriptor.” ISPRS Journal of Photogrammetry and Remote Sensing 144: 61–79. doi:10.1016/j.isprsjprs.2018.06.018.

- Favre, K., M. Pressigout, E. Marchand, and L. Morin. 2021. “A Plane-Based Approach for Indoor Point Clouds Registration.” In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), 7072–7079. Milan, Italy: IEEE Press. doi:10.1109/ICPR48806.2021.9412379.

- Forstner, W., and K. Khoshelham. 2017. “Efficient and Accurate Registration of Point Clouds with Plane to Plane Correspondences.” In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), 2165–2173. Venice, Italy: IEEE Press. doi:10.1109/ICCVW.2017.253.

- Fu, Y., Z. Li, Y. Deng, S. Zhang, H. He, W. Wang, and F. Xiong. 2021b. “Pairwise Registration for Terrestrial Laser Scanner Point Clouds Based on the Covariance Matrix.” Remote Sensing Letters 12 (8): 788–798. doi:10.1080/2150704X.2021.1938734.

- Fu, Y., Z. Li, W. Wang, H. He, F. Xiong, and Y. Deng. 2021a. “Robust Coarse-To-Fine Registration Scheme for Mobile Laser Scanner Point Clouds Using Multiscale Eigenvalue Statistic-Based Descriptor.” Sensors 21 (7): 2431. doi:10.3390/s21072431.

- Ge, X. 2017. “Automatic Markerless Registration of Point Clouds with Semantic-Keypoint-Based 4-Points Congruent Sets.” ISPRS Journal of Photogrammetry and Remote Sensing 130: 344–357. doi:10.1016/j.isprsjprs.2017.06.011.

- Gehrung, J., M. Hebel, M. Arens, and U. Stilla. 2016. “A Framework for Voxel-Based Global Scale Modeling of Urban Environments.” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences XLII-2/W1: 45–51. doi:10.5194/isprs-archives-XLII-2-W1-45-2016.

- Ge, X., and H. Hu. 2020. “Object-Based Incremental Registration of Terrestrial Point Clouds in an Urban Environment.” ISPRS Journal of Photogrammetry and Remote Sensing 161: 218–232. doi:10.1016/j.isprsjprs.2020.01.020.

- Ghorbani, F., H. Ebadi, A. Sedaghat, and N. Pfeifer. 2022. “A Novel 3-D Local Daisy-Style Descriptor to Reduce the Effect of Point Displacement Error in Point Cloud Registration.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 15: 2254–2273. doi:10.1109/JSTARS.2022.3151699.

- Kelbe, D., J. Aardt, P. Romanczyk, M. Leeuwen, and K. Cawse-Nicholson. 2016. “Marker-Free Registration of Forest Terrestrial Laser Scanner Data Pairs with Embedded Confidence Metrics.” IEEE Transactions on Geoscience and Remote Sensing 54 (7): 4314–4330. doi:10.1109/TGRS.2016.2539219.

- Khoshelham, K. 2016. “Closed-Form Solutions for Estimating a Rigid Motion from Plane Correspondences Extracted from Point Clouds.” ISPRS Journal of Photogrammetry and Remote Sensing 114: 78–91. doi:10.1016/j.isprsjprs.2016.01.010.

- Kusari, A., C. Glennie, B. Brooks, and T. Ericksen. 2019. “Precise Registration of Laser Mapping Data by Planar Feature Extraction for Deformation Monitoring.” IEEE Transactions on Geoscience and Remote Sensing 57 (6): 3404–3422. doi:10.1109/TGRS.2018.2884712.

- Liang, D., H. Wang, X. Liu, and Y. Shen. 2016. “Automatic Registration of Building’s Points Clouds Based on Planar Primitive Groups.” Geomatics and Information Science of Wuhan University 41 (11): 1613–1618. doi:10.13203/j.whugis20140682.

- Li, W., and P. Song. 2015. “A Modified ICP Algorithm Based on Dynamic Adjustment Factor for Registration of Point Cloud and CAD Model.” Pattern Recognition Letters 65: 88–94. doi:10.1016/j.patrec.2015.07.019.

- Li, R., X. Yuan, S. Gan, R. Bi, S. Gao, and Y. Guo. 2021b. “A Point Cloud Registration Method Based on Dual Quaternion Description Under the Constraint of Point and Surface Features.” Geomatics and Information Science of Wuhan University. Advance online publication. doi:10.13203/j.whugis20210184.

- Li, R. X., S. Yuan, R. Gan, S. Bi, S. Gao, and L. Hu. 2022. “A Point Cloud Registration Method Based on Dual Quaternion Description of Line-Plane Feature Constraints.” Acta Optica Sinica 42 (2): 167–175. doi:10.3788/AOS202242.0214003.

- Li, Z., L. Zhang, P. Mathiopoulos, F. Liu, L. Zhang, S. Li, and H. Liu. 2017. “A Hierarchical Methodology for Urban Facade Parsing from TLS Point Clouds.” ISPRS Journal of Photogrammetry and Remote Sensing 123: 75–93. doi:10.1016/j.isprsjprs.2016.11.008.

- Li, Z., X. Zhang, J. Tan, and H. Liu. 2021a. “Pairwise Coarse Registration of Indoor Point Clouds Using 2D Line Features.” ISPRS International Journal of Geo-Information 10 (1): 26. doi:10.3390/ijgi10010026.

- Lu, M. J., Y. Zhao, Y. Guo, and Y. Ma. 2016. “Accelerated Coherent Point Drift for Automatic Three-Dimensional Point Cloud Registration.” IEEE Geoscience and Remote Sensing Letters 13 (2): 162–166. doi:10.1109/LGRS.2015.2504268.

- Mellado, N., D. Aiger, and N. Mitra. 2014. “Super 4PCS Fast Global Point Cloud Registration via Smart Indexing.” Computer Graphics Forum 33 (5): 205–215. doi:10.1111/cgf.12446.

- Myronenko, A., and X. Song. 2010. “Point Set Registration: Coherent Point Drift.” IEEE Transactions on Pattern Analysis and Machine Intelligence 32 (12): 2262–2275. doi:10.1109/TPAMI.2010.46.

- Polewski, P., W. Yao, M. Heurich, P. Krzystek, and U. Stilla. 2018. “Learning a Constrained Conditional Random Field for Enhanced Segmentation of Fallen Trees in ALS Point Clouds.” ISPRS Journal of Photogrammetry and Remote Sensing 140: 33–44. doi:10.1016/j.isprsjprs.2017.04.001.

- Pujol-Miró, A., J. Casas, and J. Ruiz-Hidalgo. 2019. “Correspondence Matching in Un-Organized 3D Point Clouds Using Convolutional Neural Networks.” Image and Vision Computing 83: 563–570. doi:10.1016/j.imavis.2019.02.013.

- Quan, S., J. Ma, F. Hu, B. Fang, and T. Ma. 2018. “Local Voxelized Structure for 3D Binary Feature Representation and Robust Registration of Point Clouds from Low-Cost Sensors.” Information Sciences 444: 153–171. doi:10.1016/j.ins.2018.02.070.

- Remondino, F. 2011. “Heritage Recording and 3D Modeling with Photogrammetry and 3D Scanning.” Remote Sensing 3 (6): 1104–1138. doi:10.3390/rs3061104.

- Renaudin, E., A. Habib, and A. Kersting. 2011. “Featured-Based Registration of Terrestrial Laser Scans with Minimum Overlap Using Photogrammetric Data.” Etri Journal 33 (4): 517–527. doi:10.4218/etrij.11.1610.0006.

- Rusu, R., N. Blodow, and M. Beetz. 2009. “Fast Point Feature Histograms (FPFH) for 3D Registration.” In Proceedings of the IEEE International Conference on Robotics and Automation, 3212–3217. Kobe, Japan: IEEE Press. doi:10.1109/ROBOT.2009.5152473.

- Sánchez-Aparicio, L., S. Pozo, L. Ramos, A. Arce, and F. Fernandes. 2018. “Heritage Site Preservation with Combined Radiometric and Geometric Analysis of TLS Data.” Automation in Construction 85: 24–39. doi:10.1016/j.autcon.2017.09.023.

- Shi, X., T. Liu, and X. Han. 2020. “Improved Iterative Closest Point (ICP) 3D Point Cloud Registration Algorithm Based on Point Cloud Filtering and Adaptive Fireworks for Coarse Registration.” International Journal of Remote Sensing 41 (8): 3197–3220. doi:10.1080/01431161.2019.1701211.

- Tao, W., X. Hua, Z. Chen, and P. Tian. 2020b. “Fast and Automatic Registration of Terrestrial Point Clouds Using 2D Line Features.” Remote Sensing 12 (8): 1283. doi:10.3390/rs12081283.

- Tao, W., X. Hua, R. Wang, and D. Xu. 2020a. “Quintuple Local Coordinate Images for Local Shape Description.” Photogrammetric Engineering and Remote Sensing 86 (2): 121–132. doi:10.14358/PERS.86.2.121.

- Tao, W., X. Hua, K. Yu, X. He, and X. Chen. 2018. “An Improved Point-To-Plane Registration Method for Terrestrial Laser Scanning Data.” IEEE Access 6: 48062–48073. doi:10.1109/ACCESS.2018.2866935.

- Theiler, P., and K. Schindler. 2012. “Automatic Registration of Terrestrial Laser Scanner Point Clouds Using Natural Planar Surfaces.” ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences 1-3: 173–178. doi:10.5194/isprsannals-I-3-173-2012.

- Theiler, P. W., J.D. Wegner, and K. Schindler. 2014. “Keypoint-Based 4-Points Congruent Sets – Automated Marker-Less Registration of Laser Scans.” ISPRS Journal of Photogrammetry and Remote Sensing 96: 149–163. doi:10.1016/j.isprsjprs.2014.06.015.

- Wang, J., P. Wang, B. Li, R. Fu, S. Zhao, and H. Zhang. 2021. “Discriminative Optimization Algorithm with Global-Local Feature for LIDAR Point Cloud Registration.” International Journal of Remote Sensing 42 (23): 9003–9023. doi:10.1080/01431161.2021.1975843.

- Xiao, J., B. Adler, J. Zhang, and H. Zhang. 2013. “Planar Segment Based Three-Dimensional Point Cloud Registration in Outdoor Environments.” Journal of Field Robotics 30 (4): 552–583. doi:10.1002/rob.21457.

- Xu, Y., R. Boerner, W. Yao, L. Hoegner, and U. Stilla. 2017b. “Automated Coarse Registration of Point Clouds in 3D Urban Scenes Using Voxel Based Plane Constraint.” ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences IV-2/W4: 185–191. doi:10.5194/isprs-annals-IV-2-W4-185-2017.

- Xu, Y., R. Boerner, W. Yao, L. Hoegner, and U. Stilla. 2019. “Pairwise Coarse Registration of Point Clouds in Urban Scenes Using Voxel-Based 4-Planes Congruent Sets.” ISPRS Journal of Photogrammetry and Remote Sensing 151: 106–123. doi:10.1016/j.isprsjprs.2019.02.015.

- Xu, Y., L. Hoegner, S. Tuttas, and U. Stilla. 2017a. “Voxel- and Graph-Based Point Cloud Segmentation of 3D Scenes Using Perceptual Grouping Laws.” ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences IV (1): 43–50. doi:10.5194/isprs-annals-IV-1-W1-43-2017.

- Xu, E., Z. Xu, and K. Yang. 2022. “Using 2-Lines Congruent Sets for Coarse Registration of Terrestrial Point Clouds in Urban Scenes.” IEEE Transactions on Geoscience and Remote Sensing 60: 1–18. doi:10.1109/TGRS.2021.3128403.

- Yang, J., Y. Xiao, and Z. Cao. 2019. “Aligning 2.5D Scene Fragments with Distinctive Local Geometric Features and Voting-Based Correspondences.” IEEE Transactions on Circuits and Systems for Video Technology 29 (3): 714–729. doi:10.1109/TCSVT.2018.2813083.

- Yang, B., and Y. Zang. 2014. “Automated Registration of Dense Terrestrial Laser-Scanning Point Clouds Using Curves.” ISPRS Journal of Photogrammetry and Remote Sensing 95: 109–121. doi:10.1016/j.isprsjprs.2014.05.012.

- Yang, B., Y. Zang, Z. Dong, and R. Huang. 2015. “An Automated Method to Register Airborne and Terrestrial Laser Scanning Point Clouds.” ISPRS Journal of Photogrammetry and Remote Sensing 109: 62–76. doi:10.1016/j.isprsjprs.2015.08.006.

- Zang, Y., R. Lindenbergh, B. Yang, and H. Guan. 2020. “Density-Adaptive and Geometry-Aware Registration of TLS Point Clouds Based on Coherent Point Drift.” IEEE Geoscience and Remote Sensing Letters 17 (9): 1628–1632. doi:10.1109/LGRS.2019.2950128.

- Zang, Y., B. Yang, J. Li, and H. Guan. 2019. “An Accurate TLS and UAV Image Point Clouds Registration Method for Deformation Detection of Chaotic Hillside Areas.” Remote Sensing 11 (6): 647. doi:10.3390/rs11060647.

- Zhang, Z., L. Sun, R. Zhong, D. Chen, Z. Xu, C. Wang, C. Qin, H. Sun, and R. Li. 2019. “3-D Deep Feature Construction for Mobile Laser Scanning Point Cloud Registration.” IEEE Geoscience and Remote Sensing Letters 16 (12): 1904–1908. doi:10.1109/LGRS.2019.2910546.