ABSTRACT

Edges are distinct geometric features crucial to higher-level object detection and recognition in remote-sensing image processing, which is key to surveillance and to gathering up-to-date geospatial intelligence. Synthetic aperture radar (SAR) is a powerful form of remote sensing. However, edge detectors designed for optical images tend to perform poorly on SAR images because strong speckle noise causes false positives (type I errors). Therefore, many researchers have proposed edge detectors that are tailored specifically to the characteristics of SAR images. Although these edge detectors might achieve effective results in their own evaluations, the comparisons tend to include a very limited number of (simulated) SAR images. As a result, the generalization performance of the proposed methods is not truly reflected, as real-world patterns are much more complex and diverse. This raises another problem: the field lacks a quantitative benchmark. Hence, it is currently not possible to fairly evaluate any edge detection method for SAR images. Thus, in this paper, we aim to close these gaps by providing an extensive experimental evaluation of edge detection on SAR images. To that end, we propose the first benchmark for SAR image edge detection, established by evaluating various freely available methods, including methods that are considered to be the state of the art.

1. Introduction

Remote-sensing satellite images capturing the earth's surface enable surveillance, analysis of infrastructure and agriculture, and natural disaster management (Sharma et al. 2008). Optical and synthetic aperture radar (SAR) sensors acquire the two primary forms of satellite data. Among them, SAR operates at longer wavelengths that penetrate clouds, making it possible to image areas under cloud cover. Moreover, SAR is an active sensor: it transmits signals towards the earth's surface and receives their echoes, which makes it ideal for surveillance and for analysing the earth's surface at any time of the day, even in darkness. Thus, being invariant to cloud cover and daylight, SAR images are widely preferred.

Nonetheless, performing surveillance and natural disaster management manually is infeasible because human resources are expensive and algorithms are much faster than humans at detecting patterns. Therefore, it is beneficial to automate the monitoring tasks by detecting distinct geometric features such as lines, edges and blobs, so that only the important features are analysed. These features are often used as salient image regions for pre-segmentation in object detection and recognition for remote-sensing image processing. For example, roads can be detected by identifying lines (Chen et al. 2018), blob-like structures might give clues about icebergs to avoid risks in ship navigation and offshore installations (Soldal et al. 2019), and linear features can emphasize geological lineaments to analyse the formation of minerals, active faults, groundwater controls, earthquakes and geomorphology (Ahmadi and Pekkan 2021).

Edges are the most fundamental geometric primitives. They can be utilized to identify more advanced geometric and semantic features such as lines, corners, junctions, contours and boundaries. An edge manifests itself as an abrupt change in pixel intensity values, often identified by a significant shift in the first or second derivative. Typically, a convolutional filter (kernel) approximates the gradients or second derivatives of an image. Applying the kernel yields edge responses, and a threshold determines which changes are considered true edges. The most common optical edge filters are the traditional Sobel, Roberts Cross and Prewitt operators (Duda and Hart 1973; Roberts 1963; Prewitt 1970). More advanced ones, such as the Laplacian of Gaussian (LoG) and Canny, include a smoothing operation to prevent noise from being detected as false edges (Marr and Hildreth 1980; Canny 1986). On the other hand, recent state-of-the-art edge detectors use data-driven supervised convolutional neural networks (CNNs) to learn specialized kernels (Xie and Tu 2015; Liu et al. 2019; He et al. 2020).

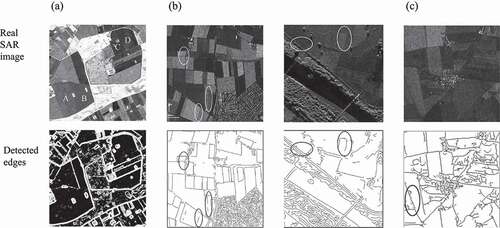

In addition, there exist various SAR-specific edge detectors that deal with the characteristics of speckle noise, such as the ratio-based edge detector (RBED) (Wei and Feng 2015), the multiscale edge detector based on Gabor filters (Xiang et al. 2017a) and the constant false alarm rate (CFAR) edge detector (Schou et al. 2003), as illustrated in Figure 1. Although these edge detectors can achieve robust and effective results in their own evaluations, the comparisons tend to include only a couple of (simulated) SAR images. For instance, Xiang et al. (2017a) use a synthetic image corrupted with speckle noise and a real TerraSAR-X image for the evaluations. Similarly, Luo et al. (2020) use a single simulated synthetic image and two real Mini-SAR images. A number of commonly utilized examples are provided in Figure 2.

Figure 1. a) Ratio-based edge detector using $\sigma_{hh}$ (L-band). Credits: (Schou et al. 2003). b) RBED edge detector. Credits: (Wei and Feng 2015). c) Multiscale edge detector. Credits: (Xiang et al. 2017a).

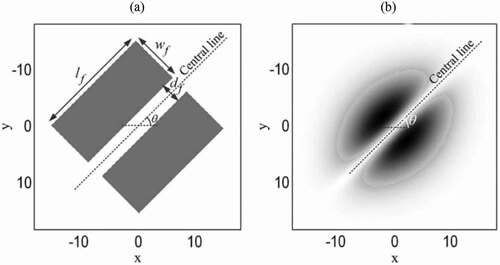

Figure 2. Examples of synthetic images of simple simulated scenarios. a) Wei and Feng (2015). b) Zhan et al. (2013). c) Xiang et al. (2017b).

Synthetically generated images provide ground-truth edge annotations, which allow authors to calibrate the parameters of their algorithms using quantitative evaluations. The parameters achieving the highest performance are selected and applied to real SAR images to provide qualitative evaluations. Nonetheless, the quantitative evaluations and the parameter selection process are based on only a couple of simple images, as presented in Figure 2. Therefore, the generalization performance of the proposed methods is not truly reflected, as real-world patterns are much more complex and diverse. The main reason behind this is the lack of large-scale datasets with ground-truth edge annotations. This leads to another problem: a quantitative benchmark is missing in the field. Thus, at the moment, it is not possible to fully evaluate a (new or existing) method. Moreover, there is simply no fair way to compare results across studies, since different evaluation images are used and mostly only a small number of comparison methods are evaluated. In that sense, Bachofer et al. (2016) provide the only work comparing different combinations of speckle reduction techniques and optical edge detection methods. However, they evaluate four fundamental methods on only four multilooked images. Thus, a comprehensive evaluation of the baseline methods is also missing in the field.

Therefore, in this paper, we aim to close all the aforementioned gaps. Recently, Liu et al. (2020) simulated a large-scale SAR dataset called BSDS500-speckled from an optical dataset of natural scenes to train their CNN for edge detection in SAR images. The dataset is generated by multiplying greyscale intensity optical images with simulated 1-look speckle noise. Including different augmentations, the dataset comprises 28,800 training images and a separate set of test images. Thus, instead of using a couple of simple synthetic images, we propose to use the training set of BSDS500-speckled for parameter tuning. The detectors with the best-performing parameters on the training set are then evaluated on the test set to form a benchmark. With this benchmark, we provide the most extensive experimental evaluation of edge detection on SAR images, thereby addressing the lack of large-scale experimental reviews in the remote-sensing field. The review also includes performance evaluations of a number of denoising algorithms. To that end, we evaluate the following edge detectors: Roberts Cross, Prewitt, Sobel, Scharr, Farid, Frei-Chen, Laplacian of Gaussian (LoG), Difference of Gaussians (DoG), Canny, Gabor filters, a K-means clustering-based edge detector, a 2D discrete wavelet transform-based edge detector, a subpixel edge detection algorithm based on the partial area effect, Touzi, gradient by ratio (GR), Gaussian-Gamma-shaped (GSS) bi-windows, ratio of local statistics with robust inhibition augmented curvilinear operator (ROLSS RUSTICO), a SAR-specific Shearlet transform-based edge detector, and the supervised CNN model of Liu et al. (2020). In addition, for the first time in the literature, we explore a set of decision fusion methods for the task, which combine the outputs of different algorithms. We hope that our work will serve as a baseline for future SAR-specific edge detection algorithms.

2. Related work

2.1. Denoising

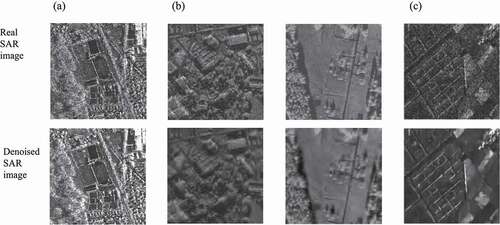

Noise is a random variation of pixel intensities arising from the acquisition process of digital images. Among different noise patterns, speckle noise arises from the random interference between coherent returns caused by surface variations within each pixel (Boncelet 2009). Unlike optical images, SAR images are therefore heavily corrupted by speckle noise. As a result, basic edge detection methods might produce unsatisfactory results on radar images (Touzi et al. 1988). The main problem is that speckle patterns may be identified as false edges. Therefore, although SAR images are widely appreciated for their high resolution, wide-area coverage, and invariance to weather and illumination, they also bring challenges: their speckle characteristics make them exceedingly difficult to process. Denoising algorithms aim to reduce the amount of noise while preserving important structures, and the task remains an active area of research. A number of examples are presented in Figure 3.

Figure 3. a) Denoised with SAR-BM3D. Credits: (Parrilli et al. 2011). b) Denoised with soft thresholding. Credits: (Achim et al. 2003). c) Denoised with SAR-CNN. Credits: (Molini et al. 2020).

Speckled images cannot be entirely denoised (Singh and Shree 2016). Nevertheless, there are various techniques that aim to reduce the speckle. For instance, multi-look images can be created by combining statistically uncorrelated speckle patterns, at the cost of reduced system resolution (Ouchi 1985). In this paper, we consider the more challenging 1-look images. In addition, most denoising algorithms only model additive noise, making them less suited to multiplicative speckle noise. One way to still use additive denoising algorithms is to convert SAR images to the natural logarithm domain before applying them, since the logarithm turns the multiplicative speckle into an additive term.
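As a minimal sketch of this log-domain (homomorphic) strategy, the NumPy snippet below simulates unit-mean Gamma-distributed speckle with L looks, takes the logarithm, applies a simple box average as a stand-in for any additive-noise denoiser, and maps the result back with the exponential. The function names, the 7 x 7 window and the bias correction are illustrative assumptions, not part of the methods evaluated in this paper. The correction relies on the fact that the mean of the logarithm of unit-mean Gamma speckle with L looks is ψ(L) − ln L, which is nonzero, so a plain log/exp round trip underestimates the intensity.

```python
import numpy as np


def simulate_speckle(clean, looks=1, rng=None):
    """Multiply a clean intensity image by unit-mean Gamma speckle with `looks` looks."""
    rng = np.random.default_rng(rng)
    speckle = rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)
    return clean * speckle


def box_mean(img, k=7):
    """k x k moving average with replicated borders (stand-in for an additive denoiser)."""
    p = np.pad(img, k // 2, mode="edge")
    win = np.lib.stride_tricks.sliding_window_view(p, (k, k))
    return win.mean(axis=(-2, -1))


def log_domain_denoise(noisy, looks=1, k=7):
    """Homomorphic filtering: log -> additive-noise denoiser -> exp (hypothetical helper)."""
    log_img = np.log(np.maximum(noisy, 1e-12))  # multiplicative speckle becomes additive
    smoothed = box_mean(log_img, k)
    # E[ln s] = digamma(L) - ln(L) for unit-mean Gamma(L) speckle; for integer L,
    # digamma(L) = -euler_gamma + sum_{j=1}^{L-1} 1/j. Remove this bias before exp.
    digamma_l = -np.euler_gamma + sum(1.0 / j for j in range(1, looks))
    bias = digamma_l - np.log(looks)
    return np.exp(smoothed - bias)


clean = np.full((64, 64), 2.0)
noisy = simulate_speckle(clean, looks=1, rng=0)
denoised = log_domain_denoise(noisy, looks=1)
```

Without the bias term, the 1-look result would be scaled down by roughly exp(−0.577) ≈ 0.56, which is why the correction is included in the sketch.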

The two main categories for the denoising task are spatial and transform domain filtering. The former is further divided into linear and non-linear filters. The non-linear filters do not assume a distribution of the random noise. Transform domain filtering first transforms the noisy image, denoises the transformed coefficients and then maps the result back to the image domain. Preserving image features, including edges and corners, is a major challenge in noise reduction (Jain and Tyagi 2016). Low-level denoising methods use only simple local filtering with hand-crafted filters. Median, mean and Gaussian filters are the most common options, and they have the drawback of smearing out some of the true edges.

Here, we describe a number of methods that we utilize in our experiments. First, Gaussian smoothing convolves an image with a Gaussian kernel: it is a local operation that averages neighbourhoods with weights given by a Gaussian (normal) distribution with a given standard deviation. The advantage of this filter is that blobs are preserved, whereas with a strong mean filter, blobs can blend together. It is among the most commonly used methods. Block-Matching and 3D Filtering (BM3D) is a block-matching algorithm proposed by Dabov et al. (2007). It takes a 2D block of an image and then finds similar blocks within the image. These similar blocks have not only a similar average intensity but also a comparable noise distribution. They are stacked into a 3D array, which is then processed with collaborative filtering. This grouping reveals fine details while preserving important structures. Bilateral filtering is another structure-preserving smoothing method (Tomasi and Manduchi 1998). A plain average of surrounding pixels becomes problematic at edges, since the edges get averaged out. To prevent this, bilateral filtering also takes the variation of intensities into account: each neighbour is weighted by both its spatial distance and its intensity difference from the centre pixel. Anisotropic diffusion also iteratively averages neighbourhoods, even if these neighbourhoods contain edges, but the amount of smoothing decreases with the local gradient magnitude, so that pixels are mainly averaged with pixels on the same side of an edge. Thus, it tends to generate appealing results on the supposedly homogeneous parts (Perona and Malik 1990). Finally, instead of just taking a local average with a small kernel, non-local means denoising (NLMD) searches a much larger region of the image and computes a weighted average in which pixels whose surrounding patches are similar receive higher weights than dissimilar ones. It tends to preserve edges and other details better than local denoising algorithms (Buades et al. 2005). On the other hand, more advanced methods utilize powerful deep-learning models (Zhang et al. 2017, 2018; Kim et al. 2019; Tian et al. 2020; Byun et al. 2021).
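The gradient-dependent smoothing of anisotropic diffusion can be illustrated with a short NumPy sketch of the explicit Perona-Malik scheme. We assume the exponential conductance g(d) = exp(−(d/κ)²), which is one of the two functions proposed by Perona and Malik, four-neighbour differences with replicated borders, and illustrative values for κ, the step size and the number of iterations. It is only an illustration of the principle, not the exact implementation or the SAR-specific variant used in any experiment.

```python
import numpy as np


def perona_malik(img, n_iter=20, kappa=0.1, step=0.2):
    """Explicit Perona-Malik diffusion with exponential conductance (illustrative sketch).

    kappa: gradient magnitude above which diffusion is strongly inhibited.
    step:  time step; step <= 0.25 keeps this 4-neighbour explicit scheme stable.
    """
    u = img.astype(float).copy()

    def conductance(d):
        # Close to 1 for small differences (smooth), close to 0 across strong edges
        return np.exp(-(d / kappa) ** 2)

    for _ in range(n_iter):
        p = np.pad(u, 1, mode="edge")  # replicated borders: no flux across the image boundary
        north = p[:-2, 1:-1] - u
        south = p[2:, 1:-1] - u
        east = p[1:-1, 2:] - u
        west = p[1:-1, :-2] - u
        u = u + step * sum(conductance(d) * d for d in (north, south, east, west))
    return u


rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0                    # vertical step edge
noisy = clean + rng.normal(0.0, 0.05, clean.shape)
smoothed = perona_malik(noisy)
```

With these settings, noise differences of a few hundredths are diffused, while the unit jump at the edge yields a conductance of about exp(−100), so the two sides are barely mixed.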

There also exist SAR-specific denoising methods. For example, SAR extensions of NLMD, BM3D, anisotropic diffusion and bilateral filtering have been proposed (Gupta et al. 2013; D'Hondt et al. 2013; Zhao et al. 2014; Sica et al. 2018). Furthermore, supervised deep-learning methods tend to outperform classic methods. Lattari et al. (2019) present an encoder-decoder CNN for end-to-end denoising. In addition, Cozzolino et al. (2020) present a non-local filtering method powered by deep learning, in which the weights of the neighbour average are learned by a CNN. Moreover, Vitale et al. (2021) propose a CNN with a multi-objective loss function that considers spatial and statistical properties of SAR images. Nonetheless, these CNNs need annotated data for training, which is not always available for real SAR images. To tackle this problem, Molini et al. (2021) recently introduced a self-supervised Bayesian method that performs similarly to or better than supervised training approaches. A detailed review of deep-learning techniques for SAR image denoising and of recent trends can be found in the work of Fracastoro et al. (2021).

2.2. Benchmark datasets

Benchmark datasets are of great importance for the development of machine-learning algorithms. They allow fair evaluations and comparisons between different algorithms using quantitative evaluation metrics. They reveal the algorithms' ability to discover both known and new patterns, their time and space complexities, and thus their respective strengths and weaknesses. Hence, the performance of an algorithm can be assessed against state-of-the-art or other competitive models. One example is the famous MNIST dataset, consisting of 60,000 training images and 10,000 test images of 28 x 28 pixels with human-annotated handwritten digits (LeCun et al. 1998). Modern machine-learning algorithms already achieve an accuracy of around 99% in classifying the digits. As a result, MNIST nowadays serves as a first test for many classifiers: if a model does not perform decently on it, there is little chance that it will work on more complex tasks.

Similarly, large-scale benchmark datasets have enabled computer vision research to make significant progress over recent years, especially with the rise of deep learning. The most famous example is the ImageNet project (Russakovsky et al. 2015), a benchmark for object category classification and detection, which currently includes more than 14 million images labelled into 20,000 categories. The extraordinary performance of the revolutionary deep CNN architecture AlexNet (Krizhevsky et al. 2012) on this benchmark marks the beginning of the deep-learning era in computer vision. Subsequently, many well-known CNN models emerged that aimed to beat AlexNet on the benchmark, such as VGGNet (Simonyan and Zisserman 2015), Inception (Szegedy et al. 2015), ResNet (He et al. 2016), DenseNet (Huang et al. 2017), Squeeze-and-Excitation (Hu et al. 2018) and EfficientNet (Tan and Le 2019). Other popular large-scale computer vision datasets include CIFAR, Microsoft COCO, PASCAL VOC, Cityscapes and SUN (Krizhevsky 2009; Lin et al. 2014; Everingham et al. 2015; Cordts et al. 2016; Xiao et al. 2016). Thanks to their success on real-world large-scale benchmarks, CNNs are nowadays widely used wherever computer vision is applied in daily life, from face recognition to autonomous driving.

In addition, large-scale benchmark datasets are widely utilized for transfer learning. Since it is not always possible to find or collect large-scale data for each problem or use case, models trained on large-scale benchmarks are assumed to learn generic features, such as edges and contours, that can be transferred to different domains. Likewise, instead of random weights, pre-trained model weights can be used to initialize deep models to accelerate the learning process. Finally, large-scale benchmarks can be employed for self-supervised training on proxy tasks for smart model initialization, such as colorization or predicting the relative spatial configuration of a pair of patches (Larsson et al. 2017; Doersch et al. 2015). Similar techniques have also been successfully applied to remote-sensing images (Tao et al. 2020; Stojnic and Risojevic 2021).

In parallel with developments in digital instruments and big data, remote-sensing imagery has become increasingly available, and benchmark datasets have been introduced to boost the development of new and improved algorithms. For instance, Yang and Newsam (2010) provide a 21-class land-use image set called the UC Merced Land Use dataset, with 100 images per class, for land-use classification in high-resolution overhead imagery. Additionally, Xia et al. (2018) produce a large-scale dataset for object detection in aerial images called DOTA, together with a challenge. Its final version spans 18 categories in 11,000 images with around 1,800,000 instances. Similarly, Li et al. (2020) present DIOR, a large-scale benchmark for object detection with around 23,000 images and 190,000 instances of 20 object classes. They also evaluate several state-of-the-art approaches to establish a baseline. Besides, PatternNet comprises 38 classes of 800 images each for remote-sensing image retrieval, and also provides an extensive evaluation of various methods to form a baseline (Zhou et al. 2018). Moreover, SpaceNet provides large-scale labelled satellite imagery and competitions for automated building footprint extraction and road network extraction for disaster management scenarios (van Etten et al. 2019). Likewise, the xBD dataset provides pre- and post-event satellite imagery for assessing building damage in disaster recovery research, together with a challenge (Gupta et al. 2019). Furthermore, the FloodNet dataset provides high-resolution unmanned aerial vehicle imagery captured after Hurricane Harvey over Texas and Louisiana in August 2017 (Rahnemoonfar et al. 2021). It also provides two challenges, (1) image classification and semantic segmentation and (2) visual question answering, to boost developments in the field. A review of benchmarking in photogrammetry and remote sensing can be found in the work of Bakula et al. (2019). Finally, the ImageNet benchmark has also been successfully utilized for remote-sensing applications through transfer learning. For instance, Marmanis et al. (2015) use pretrained ImageNet features to classify remote-sensing data, improving the overall accuracy from 83.1% to 92.4% on the UC Merced Land Use benchmark. Similarly, Salberg (2015) utilizes generic image features extracted from AlexNet trained on the ImageNet database for the automatic detection of seals in remote-sensing images. All in all, the literature shows that existing remote-sensing datasets are mainly limited to high-level tasks such as classification or semantic segmentation.

As for SAR-specific datasets, So2Sat LCZ42 provides a benchmark for global local climate zone classification (Zhu et al. 2020), and OpenSARUrban introduces a benchmark for urban scene classification together with extensive baseline evaluations (Zhao et al. 2020). These can be considered general scene classification challenges. There also exist fine-grained object detection baselines for specific use cases such as ship detection (Huang et al. 2018; Wei et al. 2020). In addition, as part of the SpaceNet data corpus, MSAW presents a dataset, a baseline and a challenge focusing on building footprint extraction (Shermeyer et al. 2020). Moreover, Sen1Floods11 introduces a georeferenced dataset to evaluate methods for flood detection. These can be considered semantic segmentation benchmarks. On the other hand, there are hardly any datasets or benchmarks for low-level image processing tasks such as edge detection, noise reduction, contrast enhancement, image stitching or image sharpening. Consequently, many remote-sensing researchers have compiled their own individual reference data for evaluations. Moreover, although methods might yield robust and effective results on their reference data, the comparisons tend to include only a couple of competing approaches, so a comprehensive evaluation of the baseline methods is also missing in the field. Thus, in this paper, we aim to close these gaps by providing extensive experimental evaluations of edge detection on SAR images to form the first benchmark, thereby addressing the lack of large-scale experimental reviews in the remote-sensing field.

3. Edge detection

Edge detection is one of the oldest operations in image processing and a building block for more complex algorithms (Sponton and Cardelino 2015). Edges can indicate boundaries and contours, and can help describe the (geometric) forms of objects in images. An edge reveals itself as an abrupt change in pixel intensity values. For SAR images, an edge can be characterized as the boundary between two homogeneous regions, such as the border between two different croplands or the boundary between land and sea.

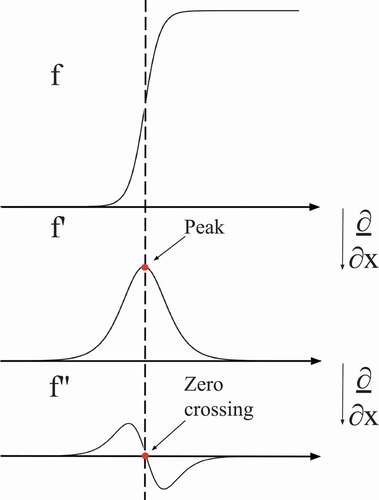

There are two main families of edge detection: first-derivative-based and second-derivative-based methods, of which the former is the most commonly used. With first-derivative-based edge detection, an edge is detected as a peak, whereas with second-derivative-based edge detection, it is detected as a zero crossing, see Figure 4. The computational costs of the methods based on first derivatives (e.g. Sobel, Roberts Cross and Prewitt) are low compared to more complex edge operators. Thus, these detectors are at the bottom of the hierarchy: they are considered low level and straightforward, with no parameters to tune apart from the final threshold. The methods based on second derivatives usually include a smoothing step for noise reduction. Nonetheless, this also brings the challenge of selecting the optimal smoothing parameter. Thus, each edge detector has both advantages and disadvantages.
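The peak versus zero-crossing behaviour can be reproduced on a hypothetical 1D intensity profile. In this minimal NumPy sketch, a logistic function stands in for a smoothed step edge and `np.gradient` approximates both derivatives with central differences; the profile and the 0.5 significance factor used to ignore the flat tails are illustrative assumptions.

```python
import numpy as np

x = np.arange(100, dtype=float)
f = 1.0 / (1.0 + np.exp(-(x - 50.0) / 3.0))  # hypothetical smooth step edge centred at x = 50

d1 = np.gradient(f)   # first derivative: a peak marks the edge
d2 = np.gradient(d1)  # second derivative: a zero crossing marks the edge

peak = int(np.argmax(np.abs(d1)))

# Only look for sign changes where the edge is significant; in the flat tails d2 is ~0
strong = np.abs(d1) > 0.5 * np.abs(d1).max()
crossings = [i for i in range(len(d2) - 1)
             if strong[i] and d2[i] > 0 >= d2[i + 1]]
```

Both criteria locate the same edge; the second-derivative criterion needs no threshold on the peak height, but in practice a significance check like `strong` is still needed to reject spurious sign changes.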

Figure 4. Difference between first- and second-derivative-based edge detection. f indicates the image intensity.

3.1. First-order derivatives

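Since edges are defined by an abrupt change in pixel intensity values, first-order methods search for the (local) maxima of the first derivatives of an image. The most common way to estimate the first derivative is the first-order Taylor expansion with a small step, as given in Equations 1 and 2:

$$\frac{\partial f(x,y)}{\partial x} \approx \frac{f(x+\Delta x,\, y) - f(x,\, y)}{\Delta x} \tag{1}$$

$$\frac{\partial f(x,y)}{\partial y} \approx \frac{f(x,\, y+\Delta y) - f(x,\, y)}{\Delta y} \tag{2}$$

Since digital images are discrete, the small step can be taken as 1 (pixel), i.e. $\Delta x = \Delta y = 1$, which leads to Equations 3 and 4, where $x$ and $y$ now denote pixel coordinates:

$$\frac{\partial f(x,y)}{\partial x} \approx f(x+1,\, y) - f(x,\, y) \tag{3}$$

$$\frac{\partial f(x,y)}{\partial y} \approx f(x,\, y+1) - f(x,\, y) \tag{4}$$

To compute these discrete derivatives (i.e. finite differences), simple 1D filters (kernels) can be utilized:

$$K_x = \begin{bmatrix} -1 & 1 \end{bmatrix}, \qquad K_y = \begin{bmatrix} -1 \\ 1 \end{bmatrix},$$

where the $K_x$ and $K_y$ (convolutional) filters compute the gradient responses $G_x$ and $G_y$ in the horizontal and vertical directions, respectively. Then, the gradient magnitude $G$ (the edge response map) per pixel is calculated by Equation 5:

$$G = \sqrt{G_x^2 + G_y^2} \tag{5}$$

The gradient vector $(G_x, G_y)$ points in the direction of the most significant change in intensity, and its orientation is computed by Equation 6:

$$\theta = \arctan\left(\frac{G_y}{G_x}\right) \tag{6}$$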

Once the gradient magnitude is computed, a threshold decides which pixels of the response map are considered true edges. Setting the threshold too low might capture unnecessary details (e.g. noise), whereas setting it too high might ignore critical structures. Thus, an optimal threshold is desired. The standard procedure of first-order derivative-based edge detection is illustrated in Figure 5.
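Equations 3 to 6 and the thresholding step can be combined into a short NumPy sketch. The helper `correlate2d` is hypothetical (our own, with replicated borders); it applies the 1D kernel [−1, 1] and its transpose as correlations, so that the horizontal response equals f(x+1, y) − f(x, y), with x indexing columns and y indexing rows. We use `np.arctan2` instead of a plain arctangent of the ratio so that the orientation stays defined when the horizontal response is zero and lands in the correct quadrant; the threshold value is arbitrary.

```python
import numpy as np


def correlate2d(img, kernel):
    """Same-size 2D correlation with replicated borders (hypothetical helper)."""
    kh, kw = kernel.shape
    pad = (((kh - 1) // 2, kh // 2), ((kw - 1) // 2, kw // 2))
    win = np.lib.stride_tricks.sliding_window_view(np.pad(img, pad, mode="edge"), (kh, kw))
    return np.einsum("ijkl,kl->ij", win, kernel)


def first_order_edges(img, threshold):
    kx = np.array([[-1.0, 1.0]])      # horizontal: f(x+1, y) - f(x, y), Equation 3
    ky = kx.T                         # vertical:   f(x, y+1) - f(x, y), Equation 4
    gx = correlate2d(img, kx)
    gy = correlate2d(img, ky)
    magnitude = np.hypot(gx, gy)      # Equation 5
    orientation = np.arctan2(gy, gx)  # Equation 6, quadrant-aware
    return magnitude, orientation, magnitude > threshold


img = np.zeros((8, 8))
img[:, 4:] = 1.0                      # vertical step edge between columns 3 and 4
mag, theta, edges = first_order_edges(img, threshold=0.5)
```

Note that the forward difference places the edge on the dark side (column 3); a centred kernel such as [−1, 0, 1] would instead produce a two-pixel-wide response.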

The 1D differentiation filters only measure changes along the horizontal and vertical axes and do not explicitly consider diagonal edges; 2D filters are required to achieve that. The most commonly used 2D filters are the Sobel, Roberts Cross and Prewitt operators (Duda and Hart 1973; Roberts 1963; Prewitt 1970).

3.1.1. Roberts Cross

Instead of approximating gradients along the horizontal and vertical directions, the diagonal directions (i.e. 45° and 135°) can also be considered. To that end, Roberts (1963) offers two kernels to compute the gradient magnitude:

$$K_1 = \begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}, \qquad K_2 = \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}.$$

Therefore, it also generates high responses to changes in diagonal directions. It is mostly preferred for its simplicity. Nonetheless, its small kernels make it very sensitive to noise. Moreover, due to the coefficients of the filters, it can only capture sharp edges.
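To check that the Roberts Cross kernels respond to diagonal structure, the sketch below applies the two standard 2 x 2 kernels (labelled `K1` and `K2` here) to a synthetic image whose edge runs along an anti-diagonal. The `correlate2d` helper with replicated borders and the test image are illustrative assumptions; in the interior, only `K1`, which differences along the main diagonal, fires.

```python
import numpy as np


def correlate2d(img, kernel):
    """Same-size 2D correlation with replicated borders (hypothetical helper)."""
    kh, kw = kernel.shape
    pad = (((kh - 1) // 2, kh // 2), ((kw - 1) // 2, kw // 2))
    win = np.lib.stride_tricks.sliding_window_view(np.pad(img, pad, mode="edge"), (kh, kw))
    return np.einsum("ijkl,kl->ij", win, kernel)


K1 = np.array([[1.0, 0.0], [0.0, -1.0]])  # f(i, j) - f(i+1, j+1): main-diagonal difference
K2 = np.array([[0.0, 1.0], [-1.0, 0.0]])  # f(i, j+1) - f(i+1, j): anti-diagonal difference

rows, cols = np.indices((8, 8))
img = (rows + cols >= 8).astype(float)    # edge running along an anti-diagonal

g1 = correlate2d(img, K1)
g2 = correlate2d(img, K2)
magnitude = np.hypot(g1, g2)
```

The response is confined to the two pixel diagonals adjacent to the edge, which illustrates both the diagonal sensitivity and the very small support (and hence noise sensitivity) of the operator.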

3.1.2. Prewitt

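Different from the Roberts Cross filters, Prewitt (1970) offers 3 x 3 kernels to compute the gradient using eight directions:

$$K_x = \begin{bmatrix} -1 & 0 & 1 \\ -1 & 0 & 1 \\ -1 & 0 & 1 \end{bmatrix}, \qquad K_y = \begin{bmatrix} -1 & -1 & -1 \\ 0 & 0 & 0 \\ 1 & 1 & 1 \end{bmatrix}.$$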

Similar to Roberts Cross, Prewitt is mostly preferred for its simplicity. Moreover, owing to its larger filter size, it provides some robustness to noise by differentiating along one direction and averaging along the orthogonal direction. Nonetheless, due to its coefficients, it is mostly suited to images with high contrast.
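The noise robustness gained by averaging can be quantified with a simple, idealized calculation. For independent, identically distributed unit-variance noise, the standard deviation of a linear filter's output equals the L2 norm of its kernel, while the response to an ideal vertical step (dark left column, bright right column) equals the sum of the right-column weights. The sketch below compares a plain central difference with the horizontal Prewitt kernel under these assumptions; real speckle is multiplicative, so the numbers are only indicative, and the function name is hypothetical.

```python
import numpy as np

central = np.array([[-1.0, 0.0, 1.0]])   # differentiation only
prewitt_x = np.array([[-1.0, 0.0, 1.0],
                      [-1.0, 0.0, 1.0],
                      [-1.0, 0.0, 1.0]])  # differentiation along x, averaging along y


def noise_to_edge_ratio(kernel):
    """Output noise std (unit-variance i.i.d. input) divided by the unit-step response.

    The centre column of both kernels is zero, so the step response is simply
    the sum of the right-column weights. Lower values mean more robustness.
    """
    return np.linalg.norm(kernel) / kernel[:, -1].sum()


ratio_central = noise_to_edge_ratio(central)    # sqrt(2) / 1
ratio_prewitt = noise_to_edge_ratio(prewitt_x)  # sqrt(6) / 3
```

Averaging three rows triples the edge response but increases the noise standard deviation only by a factor of sqrt(3), which is the robustness referred to above.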

3.1.3. Sobel

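Sobel filters are also 3 x 3, but they are biased towards the centre pixel by giving more weight to the centre coefficients of the kernels (Duda and Hart 1973):

$$K_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}, \qquad K_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix}.$$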

Therefore, the averaging gives more weight to the central pixels, which results in smoother responses than Prewitt. Similar to Prewitt, it computes edges in eight directions. In addition, the filters are separable: each can be expressed as the outer product of a 1D column vector and a 1D row vector (for the horizontal filter, the smoothing vector $[1, 2, 1]^T$ times the difference vector $[-1, 0, 1]$), which can be utilized for faster computations.
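The separability can be verified numerically. The sketch builds the horizontal Sobel kernel as the outer product of the smoothing vector [1, 2, 1] and the difference vector [−1, 0, 1], and checks that two 1D passes give the same result as one 3 x 3 pass (3 + 3 instead of 9 multiplications per pixel). The `correlate2d` helper with replicated borders and the random test image are illustrative assumptions.

```python
import numpy as np


def correlate2d(img, kernel):
    """Same-size 2D correlation with replicated borders (hypothetical helper)."""
    kh, kw = kernel.shape
    pad = (((kh - 1) // 2, kh // 2), ((kw - 1) // 2, kw // 2))
    win = np.lib.stride_tricks.sliding_window_view(np.pad(img, pad, mode="edge"), (kh, kw))
    return np.einsum("ijkl,kl->ij", win, kernel)


smooth = np.array([1.0, 2.0, 1.0])  # averaging across the edge direction
diff = np.array([-1.0, 0.0, 1.0])   # central difference along x
sobel_x = np.outer(smooth, diff)    # [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

rng = np.random.default_rng(0)
img = rng.random((16, 16))

full = correlate2d(img, sobel_x)                                         # one 3 x 3 pass
separable = correlate2d(correlate2d(img, diff[None, :]), smooth[:, None])  # two 1D passes
```

With replicated borders, the two approaches agree exactly (up to floating-point rounding), including at the image boundary.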

3.1.4. Scharr

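Scharr filters are very similar to Sobel, but with different coefficients. Unlike Sobel, however, the Scharr filters are explicitly optimized for rotation invariance, which tends to yield more accurate gradient orientations. The weights are derived by optimizing the weighted mean-squared angular error in the Fourier domain (Scharr 2000). Up to a normalization factor, the kernels are:

$$K_x = \begin{bmatrix} -3 & 0 & 3 \\ -10 & 0 & 10 \\ -3 & 0 & 3 \end{bmatrix}, \qquad K_y = \begin{bmatrix} -3 & -10 & -3 \\ 0 & 0 & 0 \\ 3 & 10 & 3 \end{bmatrix}.$$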

3.1.5. Farid

Similar to Scharr, Farid determines the filter weights by optimizing the rotation invariance of the gradient operator in the Fourier domain (Farid and Simoncelli 2004):

Different from the previous filters, Farid's coefficients are floating-point values, which makes the filtering computationally more expensive. Note that we round the weights to 6 decimals for better presentation. Additional decimal places can be found in Python's scikit-image package. Furthermore, Farid's smallest filter size is 5 x 5, which is again computationally more demanding than the previous filters. Nonetheless, a larger mask size might be beneficial in reducing the effects of noise by local averaging within the neighbourhood, yet it might also cause more blurring. As a final remark, extended versions (i.e. 5 x 5 or 7 x 7) of the aforementioned filters also exist (Lateef 2008; Kekre and Gharge 2010; Bogdan et al. 2020), yet we respect the original algorithms and use them without any extensions.

3.1.6. Frei-Chen

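Different from the previous methods, which provide horizontal and vertical filters, the Frei-Chen method offers nine orthogonal kernels that form a complete basis, so that the local (i.e. 3 x 3) neighbourhood is represented as a weighted sum of these nine basis images (Frei and Chen 1977):

$$F_1 = \frac{1}{2\sqrt{2}} \begin{bmatrix} 1 & \sqrt{2} & 1 \\ 0 & 0 & 0 \\ -1 & -\sqrt{2} & -1 \end{bmatrix}, \quad F_2 = \frac{1}{2\sqrt{2}} \begin{bmatrix} 1 & 0 & -1 \\ \sqrt{2} & 0 & -\sqrt{2} \\ 1 & 0 & -1 \end{bmatrix}, \quad F_3 = \frac{1}{2\sqrt{2}} \begin{bmatrix} 0 & -1 & \sqrt{2} \\ 1 & 0 & -1 \\ -\sqrt{2} & 1 & 0 \end{bmatrix},$$

$$F_4 = \frac{1}{2\sqrt{2}} \begin{bmatrix} \sqrt{2} & -1 & 0 \\ -1 & 0 & 1 \\ 0 & 1 & -\sqrt{2} \end{bmatrix}, \quad F_5 = \frac{1}{2} \begin{bmatrix} 0 & 1 & 0 \\ -1 & 0 & -1 \\ 0 & 1 & 0 \end{bmatrix}, \quad F_6 = \frac{1}{2} \begin{bmatrix} -1 & 0 & 1 \\ 0 & 0 & 0 \\ 1 & 0 & -1 \end{bmatrix},$$

$$F_7 = \frac{1}{6} \begin{bmatrix} 1 & -2 & 1 \\ -2 & 4 & -2 \\ 1 & -2 & 1 \end{bmatrix}, \quad F_8 = \frac{1}{6} \begin{bmatrix} -2 & 1 & -2 \\ 1 & 4 & 1 \\ -2 & 1 & -2 \end{bmatrix}, \quad F_9 = \frac{1}{3} \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}.$$

Filters $F_1$, $F_2$, $F_3$ and $F_4$ capture the edge subspace, $F_5$, $F_6$, $F_7$ and $F_8$ capture the line subspace, and $F_9$ captures the average subspace. The method is computationally more expensive than the previous methods utilizing 3 x 3 kernels, as it has seven more kernels, several of which have floating-point coefficients. We exclude the averaging kernel $F_9$, as it does not involve derivatives, and combine all the responses ($F_1, \dots, F_8$) as done in Equation 5.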
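A minimal NumPy sketch of the Frei-Chen basis as written above: it checks that the nine masks are orthonormal and computes the combined response of the first eight masks in the manner of Equation 5. The function name and the replicated-border handling are illustrative choices; note that the classical Frei-Chen formulation is commonly described with the ratio of the edge-subspace projection to the total projection instead, which this sketch does not reproduce.

```python
import numpy as np

r2 = np.sqrt(2.0)
F = np.array([
    np.array([[1, r2, 1], [0, 0, 0], [-1, -r2, -1]]) / (2 * r2),  # F1, edge subspace
    np.array([[1, 0, -1], [r2, 0, -r2], [1, 0, -1]]) / (2 * r2),  # F2, edge subspace
    np.array([[0, -1, r2], [1, 0, -1], [-r2, 1, 0]]) / (2 * r2),  # F3, edge subspace
    np.array([[r2, -1, 0], [-1, 0, 1], [0, 1, -r2]]) / (2 * r2),  # F4, edge subspace
    np.array([[0, 1, 0], [-1, 0, -1], [0, 1, 0]]) / 2,            # F5, line subspace
    np.array([[-1, 0, 1], [0, 0, 0], [1, 0, -1]]) / 2,            # F6, line subspace
    np.array([[1, -2, 1], [-2, 4, -2], [1, -2, 1]]) / 6,          # F7, line subspace
    np.array([[-2, 1, -2], [1, 4, 1], [-2, 1, -2]]) / 6,          # F8, line subspace
    np.ones((3, 3)) / 3,                                          # F9, average subspace
])


def frei_chen_response(img):
    """sqrt of the summed squared projections onto F1..F8 (F9 excluded), cf. Equation 5."""
    p = np.pad(img.astype(float), 1, mode="edge")
    win = np.lib.stride_tricks.sliding_window_view(p, (3, 3))  # shape (H, W, 3, 3)
    proj = np.einsum("ijkl,nkl->nij", win, F[:8])              # shape (8, H, W)
    return np.sqrt((proj ** 2).sum(axis=0))


basis = F.reshape(9, 9)       # each row is one flattened mask
step = np.zeros((6, 6))
step[:, 3:] = 1.0             # vertical step edge between columns 2 and 3
resp = frei_chen_response(step)
```

Because F1 to F8 all sum to zero, flat regions produce no response, and only the pixels whose 3 x 3 neighbourhood straddles the edge respond.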

3.2. Second-order derivatives

It is also possible to reveal edges by detecting the zero crossings of the second-order derivatives. First-order methods search for peaks above a certain threshold, whereas with second-order derivatives, the local maxima of the gradient are identified automatically as zero crossings. Nonetheless, first-order derivatives are more robust to noise than second-order derivatives, as further differentiation amplifies the noise. The most commonly used second-order derivative operators are the Laplacian of Gaussian (LoG) and the Difference of Gaussians (DoG) (Marr and Hildreth 1980).

3.2.1. Laplacian

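One way to calculate the second derivative of an image is to compute the Laplacian. Since the Laplacian is the divergence of the gradient, it represents the second derivative and highlights rapid intensity changes. Similar to the first-order derivatives (in Equations 1, 2, 3 and 4), it can be represented as follows:

$$\nabla^2 f(x,y) = \frac{\partial^2 f(x,y)}{\partial x^2} + \frac{\partial^2 f(x,y)}{\partial y^2} \tag{7}$$

$$\frac{\partial^2 f(x,y)}{\partial x^2} \approx f(x+1,\, y) + f(x-1,\, y) - 2f(x,\, y) \tag{8}$$

$$\frac{\partial^2 f(x,y)}{\partial y^2} \approx f(x,\, y+1) + f(x,\, y-1) - 2f(x,\, y) \tag{9}$$

For an additional comparison between the first and second derivative filters, see Figure 6. To approximate the effect of the Laplacian, the following 3 x 3 discrete convolution kernel can be obtained by adding the vertical and horizontal second-derivative filters in Figure 6:

$$K_{\nabla^2} = \begin{bmatrix} 0 & 1 & 0 \\ 1 & -4 & 1 \\ 0 & 1 & 0 \end{bmatrix}$$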

Figure 6. Examples of directional filters. α is measured counterclockwise from the horizontal axis. Credits: Schowengerdt (2007).

Note that since the Laplacian utilizes a single mask, edge orientation information is not available. Finally, the Laplacian operator is usually not used on its own, as it is more sensitive to noise than first-order methods due to the additional differentiation. Thus, it is common practice to combine it with a smoothing operation.
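The 3 x 3 Laplacian kernel can be assembled exactly as described, by adding the 1D second-difference filter [1, −2, 1] along the horizontal and vertical axes. As a sanity check, the sketch applies it to f(x, y) = x² + y², whose exact Laplacian is 4 everywhere; the test function and grid size are arbitrary choices.

```python
import numpy as np

second_diff = np.array([1.0, -2.0, 1.0])  # f(x-1) - 2 f(x) + f(x+1)
laplacian = np.zeros((3, 3))
laplacian[1, :] += second_diff            # horizontal second derivative
laplacian[:, 1] += second_diff            # vertical second derivative
# laplacian is now [[0, 1, 0], [1, -4, 1], [0, 1, 0]]

y, x = np.mgrid[0:8, 0:8].astype(float)
f = x ** 2 + y ** 2                       # exact Laplacian: 2 + 2 = 4

# Interior pixels only ('valid' correlation, no padding)
win = np.lib.stride_tricks.sliding_window_view(f, (3, 3))
response = np.einsum("ijkl,kl->ij", win, laplacian)
```

For a quadratic function, the second difference is exact, so every interior response equals 4.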

3.2.2. Laplacian of Gaussian (LoG)

The LoG is an extension of the Laplacian filter, in which the Laplace response is combined with a Gaussian filter. The Laplacian is good at detecting thin edges, but it is more sensitive to noise than the first-derivative variants. To keep false edge detections to a minimum, a Gaussian filter is applied to the image before the edges are detected with a Laplacian convolution. Nonetheless, the smoothing might smear out some of the sharp edges, lowering the precision of edge localization. Thus, the smoothing parameter should be chosen carefully. Finally, edges are located at the zero crossings of the response, which is the key feature of this algorithm. To that end, the Gaussian kernel is defined by Equation 10; taking the Laplacian of the Gaussian (Equation 11) then yields the LoG filter in Equation 12:

$$G(x, y) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right) \tag{10}$$

$$\nabla^2 G(x, y) = \frac{\partial^2 G}{\partial x^2} + \frac{\partial^2 G}{\partial y^2} \tag{11}$$

$$\mathrm{LoG}(x, y) = -\frac{1}{\pi\sigma^4}\left(1 - \frac{x^2 + y^2}{2\sigma^2}\right) \exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right) \tag{12}$$

where $\sigma$ denotes the standard deviation of the Gaussian.
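The sketch below, assuming NumPy and SciPy, samples the closed-form LoG of Equation 12 on a finite grid and marks zero crossings between neighbouring pixels. It is illustrative only: Section 4.4 states that the benchmark's own LoG applies a Gaussian blur followed by a Laplace operator in scikit-image, and the grid half-width of 3σ, the round-off clipping and the `min_contrast` threshold used here are assumptions.

```python
import numpy as np
from scipy import ndimage


def log_kernel(sigma: float) -> np.ndarray:
    """Sample the closed-form LoG of Equation 12 on a grid of half-width ceil(3 * sigma)."""
    half = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    r2 = x**2 + y**2
    kernel = -(1.0 / (np.pi * sigma**4)) * (1.0 - r2 / (2 * sigma**2)) * np.exp(-r2 / (2 * sigma**2))
    # Truncation leaves a small non-zero sum; remove it so flat regions respond with ~0
    return kernel - kernel.mean()


def zero_crossings(response: np.ndarray, min_contrast: float = 1e-3) -> np.ndarray:
    """Mark pixels whose right or lower neighbour has the opposite sign."""
    r = np.where(np.abs(response) < 1e-9, 0.0, response)  # treat round-off as exact zero
    edges = np.zeros(r.shape, dtype=bool)
    a, b = r[:, :-1], r[:, 1:]  # horizontal neighbour pairs
    edges[:, :-1] |= (a * b < 0) & (np.abs(a - b) > min_contrast)
    a, b = r[:-1, :], r[1:, :]  # vertical neighbour pairs
    edges[:-1, :] |= (a * b < 0) & (np.abs(a - b) > min_contrast)
    return edges


# Hypothetical test image: a vertical step edge between columns 15 and 16
step = np.zeros((32, 32))
step[:, 16:] = 1.0
response = ndimage.convolve(step, log_kernel(2.0))
edges = zero_crossings(response)
```

Larger σ values suppress more noise but, as noted above, can shift or merge nearby edges.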

3.2.3. Difference of Gaussians (DoG)

The DoG convolves an image with two Gaussians of different variances and takes the difference of the results. Edges are identified by the difference of the convolutions of the two Gaussian kernels with the image as follows:

$$\mathrm{DoG}(x, y) = G_{\sigma_1}(x, y) * I(x, y) - G_{\sigma_2}(x, y) * I(x, y) = \left(G_{\sigma_1} - G_{\sigma_2}\right) * I(x, y) \tag{13}$$

where $I$ denotes the image, $*$ denotes convolution, and $\sigma_1 < \sigma_2$ represent the two standard deviations of the Gaussians. Before the difference is taken, each Gaussian function is normalized so that the area under its curve is one, which makes the DoG kernel zero-mean. In essence, the DoG subtracts a more heavily smoothed version of an image from a less smoothed one, acting as a band-pass filter that suppresses the high-frequency components that are often attributed to noise. Finally, edges are located by zero crossings, similar to the LoG. Additionally, with particular parameter settings, the DoG becomes an approximation of the LoG. In terms of computational complexity, there is no significant difference between the two approaches.
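A minimal DoG sketch follows, assuming SciPy's `ndimage.gaussian_filter` as the Gaussian smoother (the benchmark itself builds DoG filters with scikit-image, according to Section 4.4). The σ2/σ1 ratio of 4 mirrors one of the ratios evaluated in Section 4.4; the step image is hypothetical.

```python
import numpy as np
from scipy import ndimage


def difference_of_gaussians(image: np.ndarray, sigma1: float, ratio: float = 4.0) -> np.ndarray:
    """Equation 13: less-smoothed image minus more-smoothed image, with sigma2 = ratio * sigma1."""
    image = image.astype(float)
    sigma2 = ratio * sigma1
    return ndimage.gaussian_filter(image, sigma1) - ndimage.gaussian_filter(image, sigma2)


# Hypothetical test image: a vertical step edge between columns 31 and 32
step = np.zeros((16, 64))
step[:, 32:] = 1.0
dog = difference_of_gaussians(step, sigma1=1.0, ratio=4.0)
# The response is negative just left of the edge and positive just right of it,
# so the edge is found by the same zero-crossing search as for the LoG.
```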

3.3. Advanced methods

In addition to the commonly used first- and second-order derivative-based filters, we also review a number of advanced methods that use additional features or steps in their algorithms.

3.3.1. Canny

Canny is one of the most widely used edge detection algorithms (Canny 1986). It is composed of noise reduction, gradient calculation, non-maximum suppression, double thresholding and hysteresis. Firstly, it smooths an image with a Gaussian kernel. Secondly, the smoothed image is convolved with a low-level edge detector (e.g. Sobel or Roberts) to obtain the gradient image. Afterwards, non-maximum suppression is applied to extract thin edges by keeping only the pixels whose gradient magnitude is a local maximum along the gradient direction. Then, by setting a high and a low threshold, strong and weak edges are determined. The strong edge pixels are labelled as final edges. In the final stage, hysteresis decides which of the weak edges are considered true edges by tracking their connections to strong edges within a neighbourhood. The non-maximum suppression and hysteresis steps greatly contribute to reducing the number of false edges. Nonetheless, these steps also increase the computation time. Moreover, the algorithm has three main parameters to tune (the sigma for Gaussian smoothing and the two thresholds), which makes it more demanding than the previously mentioned methods.
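The hysteresis stage is the least obvious part of Canny, so the sketch below isolates it, assuming the gradient magnitudes have already been thinned by non-maximum suppression. It uses `scipy.ndimage.label` with 8-connectivity to keep weak pixels only when they are connected to a strong one; the magnitude array and the thresholds (a 2:1 upper:lower ratio, one of the ratios in Section 4.4) are hypothetical. A complete detector is available as `skimage.feature.canny`.

```python
import numpy as np
from scipy import ndimage


def hysteresis(magnitude: np.ndarray, low: float, high: float) -> np.ndarray:
    """Keep weak pixels (> low) only if their 8-connected component contains a strong pixel (> high)."""
    candidates = magnitude > low
    strong = magnitude > high
    labels, n_components = ndimage.label(candidates, structure=np.ones((3, 3), dtype=int))
    keep = np.zeros(n_components + 1, dtype=bool)
    keep[np.unique(labels[strong])] = True  # components touching a strong pixel
    keep[0] = False  # label 0 is the background
    return keep[labels]


# Hypothetical gradient magnitudes after non-maximum suppression
magnitude = np.array([
    [0.9, 0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5, 0.0],
])
edges = hysteresis(magnitude, low=0.4, high=0.8)
```

The weak run in the top row survives because it touches the strong pixel, while the isolated weak run in the bottom row is discarded.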

3.3.2. Gabor filters

Gabor filters are mostly used for texture analysis and feature extraction, as they have been shown to achieve optimal joint localization in the spatial and frequency domains (Daugman 1985). Similar to the DoG, Gabor filters act as adjustable band-pass filters. A Gabor function is a Gaussian function modulated by a complex sinusoidal carrier signal. To extract features of different shapes, orientations and scales, it is common practice to combine multiple filters in a filter bank, as illustrated in Figure 7. This is similar to the case of Frei-Chen, where different kernels are constructed to capture different patterns. Nonetheless, Gabor filters can be created with an arbitrary number of orientations, combinations and smoothing settings. A 2D Gabor function contains real and imaginary parts, as described in Equations 14 and 15:

$$g_{\mathrm{re}}(x, y) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right) \cos\left(2\pi\frac{x'}{\lambda} + \psi\right) \tag{14}$$

$$g_{\mathrm{im}}(x, y) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right) \sin\left(2\pi\frac{x'}{\lambda} + \psi\right) \tag{15}$$

Figure 7. Example of the real parts of the Gabor filters with different orientations and frequencies to capture various patterns. These filters are also used to create one of our filter banks.

where, for the spatial location $(x, y)$, $x' = x\cos\theta + y\sin\theta$ and $y' = -x\sin\theta + y\cos\theta$ are the rotated coordinates, $\lambda$ and $\psi$ denote the wavelength and the phase offset of the sinusoidal carrier signal, $\theta$ controls the orientation, and $\sigma$ and $\gamma$ indicate the standard deviation and the spatial aspect ratio of the Gaussian. Finally, the responses of the convolutions with the real and imaginary Gabor kernels are combined into a single response by accumulating the real and imaginary parts, as given in Equation 16. To create a filter bank, various Gabor kernels are created with different orientations and frequencies. Then, the responses of all kernels can be summed to create a superimposed edge response map (gradient magnitude). Other options include taking the maximum response per pixel or computing the average response over all kernels.
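As an illustration of a filter-bank response, the sketch below builds kernels of the form of Equations 14 and 15 in NumPy and sums the per-kernel responses over several wavelengths and orientations. Several choices are assumptions rather than the benchmark's settings: the real and imaginary parts are combined by their magnitude (one reading of Equation 16), the real kernel's mean is removed so that flat regions give no response, σ is tied to the wavelength for roughly a one-octave bandwidth, and the bank parameters are hypothetical. The benchmark itself uses scikit-image's Gabor implementation.

```python
import numpy as np
from scipy import ndimage


def gabor_pair(wavelength, theta, sigma, gamma=1.0, psi=0.0):
    """Real and imaginary kernels of the form in Equations 14 and 15."""
    half = int(np.ceil(3 * sigma / min(gamma, 1.0)))
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r**2 + gamma**2 * y_r**2) / (2 * sigma**2))
    phase = 2 * np.pi * x_r / wavelength + psi
    real = envelope * np.cos(phase)
    # Assumption: remove the DC term so flat regions give no response
    return real - real.mean(), envelope * np.sin(phase)


def gabor_bank_response(image, wavelengths, n_orientations):
    """Sum of per-kernel magnitudes over a bank of wavelengths and orientations."""
    image = image.astype(float)
    total = np.zeros_like(image)
    for wavelength in wavelengths:
        sigma = 0.56 * wavelength  # roughly a one-octave bandwidth (assumption)
        for k in range(n_orientations):
            g_re, g_im = gabor_pair(wavelength, k * np.pi / n_orientations, sigma)
            re = ndimage.convolve(image, g_re)
            im = ndimage.convolve(image, g_im)
            total += np.hypot(re, im)  # assumed real/imaginary combination
    return total


# Hypothetical test image: a vertical step edge between columns 31 and 32
step = np.zeros((32, 64))
step[:, 32:] = 1.0
resp = gabor_bank_response(step, wavelengths=[4.0, 8.0], n_orientations=4)
```

Replacing the final sum with `np.maximum` or a mean over kernels gives the alternative combinations mentioned above.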

3.3.3. K-means clustering

The K-means clustering algorithm can also be utilized to split an image into a number of clusters based on pixel intensities to detect different regions. The number of clusters is defined by the hyperparameter $k$. This can be particularly useful, as derivative-based edge detection methods using filters are known to be sensitive to noise because they operate over small neighbourhoods. On the other hand, when an image is grouped into a small number of regions, the boundaries between the clusters are expected to reveal true edges. For example, given an image containing only water and land pixels, and using $k = 2$, the algorithm divides the pixel values into two distinct clusters. Then, by applying a low-level edge detector (e.g. Sobel), the boundaries between the clusters can be extracted as edges. It is a suitable option for SAR images, since edges often manifest as boundaries between homogeneous areas.
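The following sketch, assuming NumPy and SciPy, clusters pixel intensities with plain Lloyd iterations, replaces every pixel by its cluster centre and marks the pixels where a Sobel filter responds. The 1-D k-means helper is a hypothetical stand-in for the OpenCV k-means used in the benchmark, and the noisy water/land image is synthetic.

```python
import numpy as np
from scipy import ndimage


def kmeans_1d(values, k, n_iter=20):
    """Lloyd's iterations on scalar intensities (hypothetical stand-in for cv2.kmeans)."""
    centers = np.quantile(values, (np.arange(k) + 0.5) / k)  # deterministic initialisation
    for _ in range(n_iter):
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = values[labels == j].mean()
    return labels, centers


def kmeans_edges(image, k=2):
    """Cluster intensities, replace pixels by their cluster centre, and mark Sobel responses."""
    labels, centers = kmeans_1d(image.ravel().astype(float), k)
    quantised = centers[labels].reshape(image.shape)
    magnitude = np.hypot(ndimage.sobel(quantised, axis=0), ndimage.sobel(quantised, axis=1))
    return magnitude > 0


# Hypothetical "water" (dark) and "land" (bright) halves with mild noise
rng = np.random.default_rng(0)
image = np.where(np.arange(32) < 16, 0.2, 0.8) + rng.uniform(-0.1, 0.1, size=(32, 32))
edges = kmeans_edges(image, k=2)
```

Because the noise is absorbed by the clustering, the Sobel step only sees the two-level boundary between columns 15 and 16.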

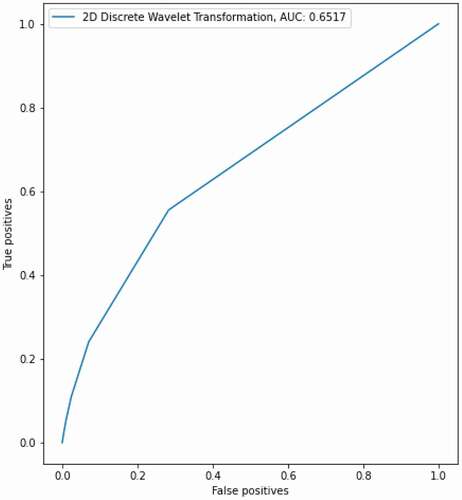

3.3.4. 2D discrete wavelet transformation

A wavelet transformation decomposes image signals into frequency bands at different scales, which may be considered isotropic low-pass and high-pass components (Schowengerdt 2007). A one-level decomposition provides four sub-bands, namely low-low (LL), low-high (LH), high-low (HL) and high-high (HH), from which the original image can be reconstructed. The LL sub-band is an approximation of the original image and can be decomposed further, while the other sub-bands contain the high-frequency information that is expected to highlight edges. Firstly, we apply the 2D discrete wavelet transformation using a biorthogonal wavelet. Then, the LL component is discarded, as it does not contain high-frequency information, and the remaining components are combined into an edge response map, similar to the previous cases, as follows:

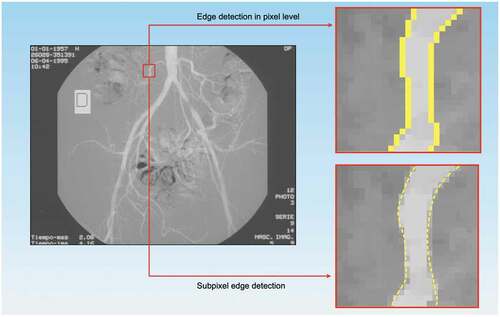

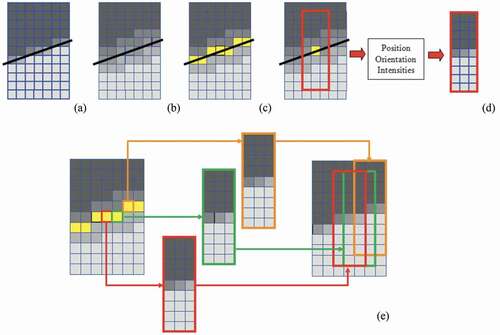
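For a self-contained illustration, the sketch below implements one level of the 2D Haar transform directly in NumPy. Haar is the simplest biorthogonal wavelet (`bior1.1` in PyWavelets), whereas the benchmark uses PyWavelets with a biorthogonal wavelet. Sub-band naming (LH versus HL) differs between libraries, so the detail bands here are named by the direction of intensity change, and combining them by their root-sum-of-squares is an assumption modelled on the previous cases.

```python
import numpy as np


def haar_dwt2(image):
    """One-level orthonormal 2-D Haar DWT; image sides must be even."""
    a = image[0::2, 0::2].astype(float)  # top-left of each 2 x 2 block
    b = image[0::2, 1::2].astype(float)  # top-right
    c = image[1::2, 0::2].astype(float)  # bottom-left
    d = image[1::2, 1::2].astype(float)  # bottom-right
    approx = (a + b + c + d) / 2         # LL: low-pass in both directions
    detail_x = (a - b + c - d) / 2       # change across columns (vertical edges)
    detail_y = (a + b - c - d) / 2       # change across rows (horizontal edges)
    detail_diag = (a - b - c + d) / 2    # diagonal detail
    return approx, detail_x, detail_y, detail_diag


def wavelet_edge_map(image):
    """Discard LL and combine the three detail sub-bands by their root-sum-of-squares."""
    _, dx, dy, dd = haar_dwt2(image)
    return np.sqrt(dx**2 + dy**2 + dd**2)


# Hypothetical test image: the step falls inside the 2 x 2 blocks covering columns 2-3
step = np.zeros((8, 8))
step[:, 3:] = 1.0
edge_map = wavelet_edge_map(step)  # half resolution: 4 x 4
```

Note that the edge map is at half the input resolution; it would need to be upsampled before comparison with full-resolution ground truth.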

3.3.5. Subpixel edge detection

Figure 8 illustrates the difference between subpixel edge detection and the derivative-based methods. Since the derivative-based edge detection methods operate on the discrete structure of digital images, they assign each pixel to a single region. Nonetheless, object shapes may be projected inaccurately or registered ambiguously during the image acquisition process, which might affect the true position of an edge pixel. To address these issues, subpixel methods aim at recognizing the location and orientation of an edge within a pixel with high precision. To that end, Trujillo-Pino et al. (2013) propose a subpixel edge detection algorithm based on the partial area effect, which assumes a particular discontinuity at the edge location and that pixel values are proportional to the intensities and areas on both sides of an edge. It first applies a 3 x 3 averaging filter to smooth the input image. Then, the partial derivatives of the smoothed image are computed. Afterwards, a 9 x 3 window is centred on each pixel of the partial derivatives to ensure that an edge crosses the window from left to right. Later, the sums of the left, middle and right columns of the window are computed to solve a system of three linear equations that yields the edge features: orientation, curvature and the change in intensity on both sides of the edge. Finally, the subpixel position is calculated by measuring the vertical distance from the centre of the pixel to the edge. Edges are detected by a threshold on the minimum intensity change. If a pixel is marked as an edge by the algorithm, a restored subimage containing a perfect edge is generated. The process is applied at each pixel position, and the generated subimages are combined into one final edge map. The whole procedure can be repeated to refine the results of a previous iteration. It is illustrated in Figure 9. We refer the reader to the work of Trujillo-Pino et al. (2013) for mathematical derivations and additional details.

Figure 8. Subpixel edge detection, where edges are precisely located inside the pixels. Credits: Trujillo-Pino et al. (2013).

Figure 9. Subpixel edge detection algorithm based on the partial area effect. a) Input image; b) Smoothed image; c) Detecting edge pixels; d) a synthetic 9×3 subimage is created from the features of each edge pixel; e) Subimages are combined to achieve a complete restored image. Credits: Trujillo-Pino et al. (2013).

3.4. SAR-specific methods

In addition to the traditional optical methods, we analyse eight more edge detectors that are specifically tailored for SAR images: Touzi, gradient by ratio (GR), Gaussian-Gamma-shaped (GSS) bi-windows, the ratio of local statistics with the robust inhibition-augmented curvilinear operator (ROLSS RUSTICO), a SAR-specific shearlet transformation-based edge detector, GRHED (the supervised CNN model of Liu et al. 2020), and two different fusions of (i) the ratio of averages (ROA)-based and the ratio of exponentially weighted averages (ROEWA)-based methods, and (ii) an optical and a SAR-specific method. These methods are designed to better handle the false edge artefacts caused by speckle noise.

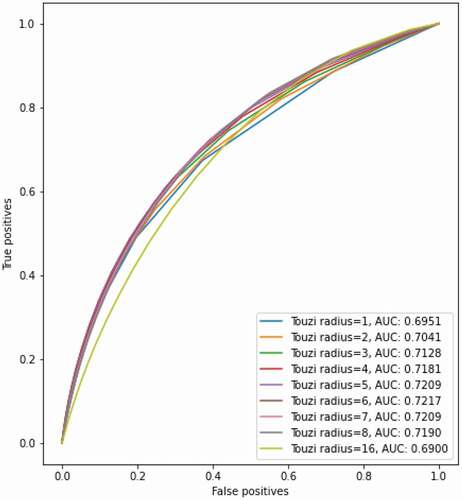

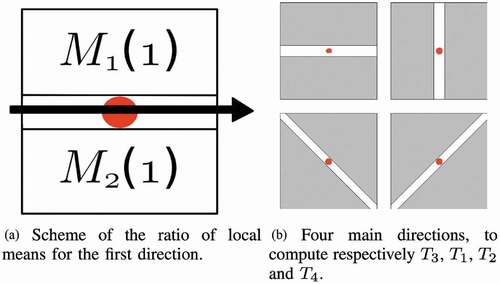

3.4.1. Touzi

Touzi is a constant false alarm rate (CFAR) edge detector based on the ratio of pixel values. It is designed to be more stable against multiplicative speckle noise than traditional edge detectors (Touzi et al. 1988). Therefore, instead of using differentiation, as in the case of first-order derivatives, it uses the ratio of the averages (ROA) of patches, for which the false alarm rate is independent of the average local radiometry. The ratio is computed for the four principal directions (0°, 45°, 90° and 135°) to capture horizontal, vertical and diagonal edges, as the ratio of the means of the two neighbourhoods on opposite sides of a pixel along a direction:

where the μs are the arithmetic means of the two halves of a given window. At a given pixel, the ratio is calculated for each of the directions, and the maximum response is taken as the edge response of that pixel. Finally, thresholding decides which pixels are true edges. The framework for computing the ratios is also demonstrated in Figure 10.
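A minimal sketch of the ROA edge strength follows, assuming NumPy and SciPy. It uses the frequently cited normalised form r = 1 - min(μ1/μ2, μ2/μ1), which may be written differently in the paper's own equation, square windows split along the four principal directions, and a hypothetical radius; the benchmark itself uses the Orfeo ToolBox implementation.

```python
import numpy as np
from scipy import ndimage

# Integer normal vectors (dx, dy) for the four principal directions, in degrees
DIRECTIONS = {0: (1, 0), 45: (1, 1), 90: (0, 1), 135: (-1, 1)}


def half_window_masks(radius, direction):
    """Normalised averaging masks for the two halves of a (2r+1) x (2r+1) window."""
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    dx, dy = DIRECTIONS[direction]
    side = x * dx - y * dy  # signed position along the direction (rows grow downwards)
    first = (side < 0).astype(float)
    second = (side > 0).astype(float)  # pixels on the dividing line are ignored
    return first / first.sum(), second / second.sum()


def touzi_roa(image, radius=3, eps=1e-12):
    """Maximum over the four directions of 1 - min(mu1/mu2, mu2/mu1)."""
    image = image.astype(float)
    response = np.zeros_like(image)
    for direction in DIRECTIONS:
        m1, m2 = half_window_masks(radius, direction)
        mu1 = ndimage.correlate(image, m1) + eps
        mu2 = ndimage.correlate(image, m2) + eps
        response = np.maximum(response, 1.0 - np.minimum(mu1 / mu2, mu2 / mu1))
    return response


# Hypothetical test image: intensity 1 left of column 16 and 4 from column 16 onwards
image = np.where(np.arange(32) < 16, 1.0, 4.0) * np.ones((32, 1))
roa = touzi_roa(image)
```

Scaling the whole image by a constant leaves the output unchanged, which is the property that makes the ratio robust to multiplicative speckle.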

Figure 10. Scheme of the ROA method. Credits: Dellinger et al. (2015).

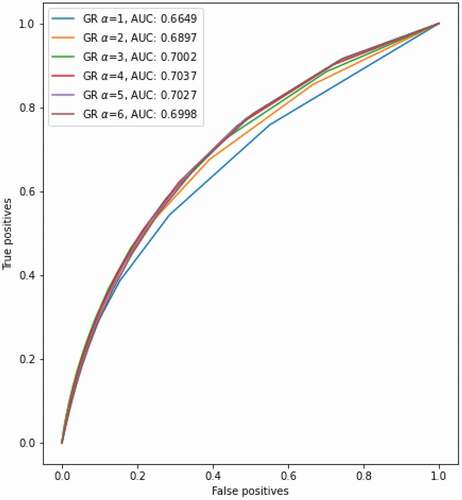

3.4.2. Gradient by ratio (GR)

The gradient by ratio method defines the horizontal and vertical gradient components as follows (Dellinger et al. 2015):

$$G_H = \log\left(R_H\right), \qquad G_V = \log\left(R_V\right)$$

where the Rs are the ratios of exponentially weighted averages (ROEWA) of the two halves of a given window. Unlike the Touzi operator, which uses plain averages, GR weights the averages exponentially with a parameter $\alpha$, which also controls the smoothing of the image at different scales. Finally, the gradient magnitude is calculated, similar to the previous cases, as the square root of the sum of the squared directional gradients:

$$G = \sqrt{G_H^2 + G_V^2}$$
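The sketch below, assuming NumPy and SciPy, computes the two GR components as logarithms of ratios of exponentially weighted means and combines them into the gradient magnitude. The weight exp(-(|x| + |y|)/α), the truncation radius of 3α and the test image are assumptions for illustration; the benchmark uses the MATLAB code provided by Liu et al. (2020).

```python
import numpy as np
from scipy import ndimage


def roewa_masks(alpha, radius):
    """Exponentially weighted, normalised masks for the right (x > 0) and left (x < 0) halves."""
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    weights = np.exp(-(np.abs(x) + np.abs(y)) / alpha)
    right = np.where(x > 0, weights, 0.0)
    left = np.where(x < 0, weights, 0.0)
    return right / right.sum(), left / left.sum()


def gradient_by_ratio(image, alpha=2.0, eps=1e-12):
    """G_H = log(R_H), G_V = log(R_V) and G = sqrt(G_H^2 + G_V^2)."""
    image = image.astype(float)
    radius = int(np.ceil(3 * alpha))  # truncation of the exponential weights (assumption)
    right, left = roewa_masks(alpha, radius)
    # Horizontal component: right half versus left half
    g_h = np.log((ndimage.correlate(image, right) + eps) / (ndimage.correlate(image, left) + eps))
    # Vertical component: transposed masks compare the lower half with the upper half
    g_v = np.log((ndimage.correlate(image, right.T) + eps) / (ndimage.correlate(image, left.T) + eps))
    return np.sqrt(g_h**2 + g_v**2)


# Hypothetical test image: intensity 1 left of column 16 and 4 from column 16 onwards
image = np.where(np.arange(32) < 16, 1.0, 4.0) * np.ones((32, 1))
gr = gradient_by_ratio(image)
```

Taking the logarithm turns the ratio into a signed difference, so the components can be combined like ordinary gradients while keeping invariance to a global intensity scale.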

3.4.3. Gaussian-Gamma-shaped (GSS) bi-windows

Unlike the methods that use traditional rectangular bi-windows to compute local statistics (e.g. Touzi), Shui and Cheng (2012) propose using Gaussian-Gamma-shaped (GGS) bi-windows in ratio-based edge detectors to reduce the number of false edge pixels near true edges. Moreover, the approach provides flexible parameter selection, flexible spacing between the two windows to better capture curvilinear structures, and favourable smoothness in the local statistics estimates. A comparison is presented in Figure 11. The authors demonstrate that rectangular window functions are not reliable 2-D smoothing filters and cause false edges in the proximity of true edges due to unwanted high-frequency speckle noise residuals. To overcome the problem of false edges arising from false local maxima, GGS bi-windows are utilized for the horizontal components as follows:

Figure 11. Difference between (a) rectangular bi-windows and (b) GGS bi-windows at orientation π. Credits: Shui and Cheng (2012).

where the window parameters control the window length, the window width and the spacing of the two windows, respectively, and the remaining variables denote pixel locations. The window is Gaussian-shaped along the horizontal direction and Gamma-shaped along the vertical direction. Then, for each pixel and orientation, the ratios of the local means in the GGS bi-windows are calculated.

3.4.4. Ratio of local statistics based on RUSTICO

In order to make full use of the statistical characteristics of SAR images for the edge detection task, Li et al. (2022) introduce a method based on the ratio of local statistics (ROLSS) combined with the robust inhibition-augmented curvilinear operator (RUSTICO), which is inspired by the push-pull inhibition in the visual cortex that differentiates contrast variations (Strisciuglio et al. 2019b). RUSTICO is recognized for its high robustness to noise and texture in the edge detection task. To compute an initial edge map, the method first utilizes an unweighted circle-shaped window to extract the ratios of local statistics (i.e. the maxima of the mean and standard deviation ratios, similar to Equation 18):

where the two ratio terms are computed from the local statistics over the circle-shaped window and are defined as follows:

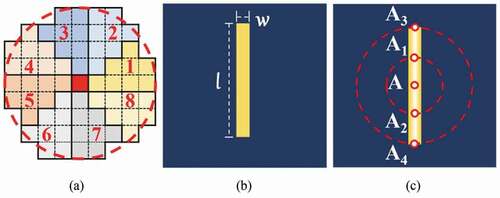

Then, the resulting edge response map is augmented with RUSTICO, which detects contrast variations using a DoG filter for noise-robust curvilinear structure detection. Given a prototype pattern (e.g. a synthetic bar) and a reference point, the response is computed by considering the positions of the local DoG maxima along a number of concentric circles centred on the reference point, as illustrated in Figure 12.

Figure 12. (a) Circular window with a 4-pixel radius, (b) prototype pattern (bar) for RUSTICO, (c) positions of the local DoG maxima along concentric circles. Credits: Li et al. (2022).

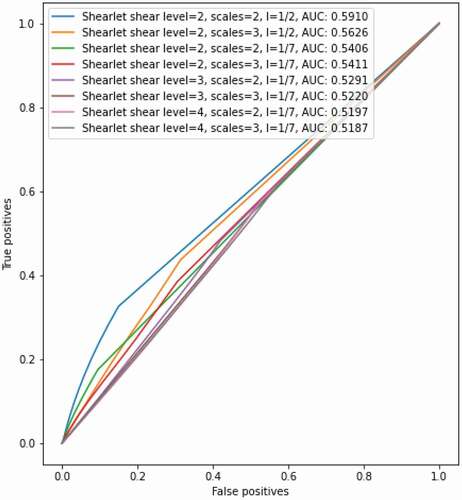

3.4.5. SAR-Shearlet

Standard wavelet transformations are not robust to noise in the edge detection task. Moreover, they tend to have difficulty distinguishing close edges and have poor angular accuracy due to their isotropic nature. To address these limitations, the shearlet transform was proposed; it is highly anisotropic and defined at diverse scales, locations and orientations (Yi et al. 2009). It is an anisotropic directional multiscale transform that produces a directional scale-space decomposition of images. In simple terms, given an image $f$, it is a mapping defined as follows:

$$\mathcal{SH}_{\psi} f(a, s, t) = \langle f, \psi_{a,s,t} \rangle$$

where $a$ denotes the scale, $s$ denotes the orientation (shear), $t$ denotes the pixel location, $\psi$ is the generating function of the well-localized waveforms $\psi_{a,s,t}$, which are obtained by anisotropically scaling, shearing and translating it, and $\mathcal{SH}_{\psi}$ denotes the shearlet transform (Labate et al. 2005). Furthermore, the idea has been extended to SAR images, both for bankline detection, where banklines can be considered curvilinear structures, and for edge detection, achieving promising results (Sun et al. 2021, 2022). To achieve that, the complex shearlet transform is utilized as follows:

$$\psi^{c} = \psi^{e} + i\,\psi^{o}, \qquad \psi^{o} = \mathcal{H}\left(\psi^{e}\right)$$

where $\psi^{e}$ denotes an even-symmetric shearlet, $\psi^{o}$ denotes an odd-symmetric shearlet, and $\mathcal{H}$ denotes the Hilbert transformation. After the complex shearlet transform is applied to an image, the largest absolute value among all the coefficients related to the odd-symmetric shearlet is determined, and the corresponding shearlet direction is set as the main direction of the edge at that pixel. Using this principal direction, the even-symmetric shearlet coefficient of the image is computed. Finally, the probability of a pixel being an edge is calculated per pixel at the main direction as follows:

where the inputs are the related shearlet coefficients and the scale parameter. We refer the reader to the works of Sun et al. (2021, 2022) and Reisenhofer et al. (2016) for additional details and derivations.

3.4.6. GRHED

GRHED is a convolutional neural network (CNN)-based edge detector for 1-look SAR images (Liu et al. 2020). It utilizes the Holistically Nested Edge Detection (HED) (Xie and Tu 2015) model together with a special hand-crafted layer. HED is a CNN-based, end-to-end, deeply supervised edge detection framework. It is designed to learn rich hierarchical features by progressively refining the edge maps produced as side outputs through multi-scale learning. The final output is a weighted fusion of those side outputs. The framework adopts the commonly used VGGNet model with modifications specific to the task at hand. We refer the reader to the original work of Xie and Tu (2015) for the detailed model architecture, parameters and training choices.

Since the HED model is designed for optical images, it is not directly suitable for the SAR image edge detection task, as illustrated by Liu et al. (2020). It has problems with speckle noise characteristics, different texture patterns and bright homogeneous regions. To address these issues, Liu et al. (2020) add a hand-crafted layer before the learnable layers of HED. To that end, GR is utilized as the hand-crafted layer that generates gradient feature maps, which are not affected by the different intensity values and depend only on the ratio of the mean values. Thus, the model first takes a 1-look SAR image as input and processes it with GR to extract gradient features. Finally, the gradient features are processed by a set of learnable convolutional layers to generate a final output containing the predicted edges of the 1-look SAR input.

The model is trained in a supervised fashion with the cross-entropy loss on a large-scale dataset created by simulating SAR-like noise over the optical BSDS500 dataset (Arbelaez et al. 2011). We use the same dataset for our parameter selection process and benchmark experiments, as explained in Section 4.

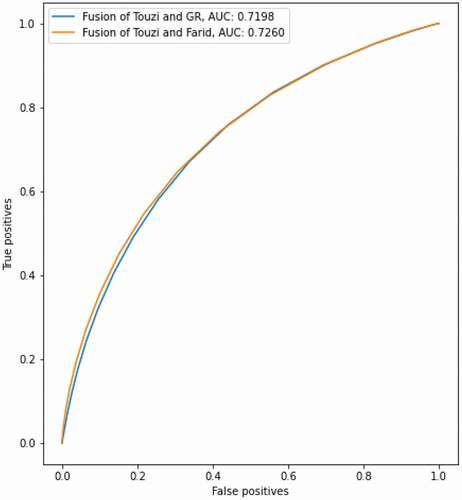

3.4.7. Fusion

Finally, we explore the effect of fusion on performance. The motivation is that each edge detector has both advantages and disadvantages, and we want to combine the advantages of different features while mitigating the possible disadvantages. To that end, we combine (i) the SAR-specific Touzi and the best-performing optical method, Farid, and (ii) the ROA-based Touzi and the ROEWA-based GR, (a) by directly combining the normalized gradient magnitudes (edge responses), and (b) by combining the thresholded normalized gradient magnitudes using a voting scheme. In that sense, for the first time in the literature, we explore a bundle of decision fusion methods for the task. Surprisingly, the effect of fusing two different SAR-specific edge detectors has never been explored before.
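To make the two fusion strategies concrete, the sketch below shows one possible realization in NumPy. Min-max normalization, averaging as the direct combination, and a `min_votes` rule for voting are assumptions, since the exact combination rules are not spelled out in this paragraph; the detector outputs are hypothetical.

```python
import numpy as np


def normalise(response):
    """Min-max scale an edge response to [0, 1]."""
    response = response.astype(float)
    lo, hi = response.min(), response.max()
    return (response - lo) / (hi - lo) if hi > lo else np.zeros_like(response)


def fuse_responses(*responses):
    """Direct fusion, assumed here to be the mean of the normalised gradient magnitudes."""
    return np.mean([normalise(r) for r in responses], axis=0)


def fuse_votes(responses, thresholds, min_votes):
    """Decision fusion: a pixel is an edge if at least min_votes detectors mark it."""
    votes = sum((normalise(r) > t).astype(int) for r, t in zip(responses, thresholds))
    return votes >= min_votes


# Hypothetical outputs of two detectors on a 2 x 2 image
touzi_like = np.array([[0.0, 1.0], [2.0, 4.0]])
farid_like = np.array([[4.0, 0.0], [0.0, 4.0]])
fused = fuse_responses(touzi_like, farid_like)
both_agree = fuse_votes([touzi_like, farid_like], [0.4, 0.4], min_votes=2)
```

With two detectors, `min_votes=2` behaves like a logical AND and `min_votes=1` like an OR, trading recall for precision.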

4. Experimental setup

For the experiments, following the setup of Liu et al. (2020) as a guideline, we utilize a large-scale dataset created by simulating SAR-like noise over the optical BSDS500 dataset (Arbelaez et al. 2011). Then, four different denoising algorithms are applied to the entire dataset as a pre-processing step, and the one yielding the cleanest images is selected. Afterwards, we apply a variety of edge detection methods to the best-denoised images. Finally, by iterating over a set of thresholds and various hyperparameters, we evaluate the performance of the edge detectors and establish a benchmark for future evaluations.

4.1. Approach

The fundamental scheme for the edge detection task is demonstrated in . The idea is to first smooth images to reduce the effect of noise, then enhance (identify) important features or details by gradient magnitude computation. Later, a threshold is applied to identify true edges (edge detection). An extra localization step can also be applied by edge thinning and linking to achieve one pixel wide continuous edges (e.g. Canny). If an extra localization step is not already a part of an algorithm, we do not include it. For example, Sobel does not have a localization step, whereas Canny inherently includes one. For our approach, smoothing is achieved by a set of denoising algorithms that are to be elaborated in Section 4.3. Then, for the enhancement step, each edge detection method has a way of calculating the gradient magnitude as provided in Section 3. Finally, for the detection step, we sample 20 threshold values and search for the value that achieves the best F1-score performance and also report a set of quantitative evaluation metrics for additional insights.
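The detection step can be sketched as a threshold sweep that keeps the best F1-score. The sketch assumes the response is min-max normalised and that the 20 thresholds are evenly spaced in [0, 1); both are assumptions, as is the toy response below.

```python
import numpy as np


def f1_score(pred, truth):
    """F1 = 2TP / (2TP + FP + FN) for boolean edge maps."""
    tp = np.sum(pred & truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    return 2 * tp / (2 * tp + fp + fn) if tp > 0 else 0.0


def best_threshold(response, truth, n_thresholds=20):
    """Sweep evenly spaced thresholds on the normalised response and keep the best F1."""
    r = (response - response.min()) / (response.max() - response.min())
    thresholds = np.linspace(0.0, 1.0, n_thresholds, endpoint=False)
    scores = [f1_score(r > t, truth) for t in thresholds]
    best = int(np.argmax(scores))
    return float(thresholds[best]), float(scores[best])


response = np.array([0.1, 0.2, 0.6, 0.9, 0.3, 0.7])  # hypothetical edge strengths
truth = np.array([False, False, True, True, False, True])
t_best, f1_best = best_threshold(response, truth)
```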

4.2. Dataset

The original Berkeley Segmentation Data Set 500 (BSDS500) contains 500 natural optical images, of which 300 are reserved for training and validation (from now on referred to as the training set) and 200 for testing (Arbelaez et al. 2011). It is widely used as a benchmark for contour and edge detection in optical images. Ground-truth edge maps are manually generated by human annotators. The number of annotations differs per image, with five annotations per image on average. Following common practice, we consider any annotated pixel as ground truth for our evaluations, which results in edges that can sometimes be wider than one pixel, see .

Using the training set of BSDS500, Liu et al. (2020) generate 1-look speckled data by multiplying the images by speckle noise patterns following the widely used Goodman model (Goodman 1975). The training set is further augmented by rotating the images by 16 different angles, flipping them horizontally, and rescaling them to 50%, 100% and 150% of their original sizes. The resulting speckled optical dataset, named BSDS500-speckled, contains 28,800 images for training and 200 images for testing and benchmarking. A number of images are presented in . Using this dataset, Liu et al. (2020) train their supervised CNN model for edge detection on SAR images and achieve better results than the gradient by ratio method.
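For readers who want to reproduce speckled inputs, here is a minimal sketch of multiplicative speckle under the Goodman model, assuming intensity images and fully developed speckle with unit mean, so that an L-look speckle field follows a Gamma(L, 1/L) distribution (exponential for 1 look). Whether BSDS500-speckled was generated on intensities or amplitudes, and with which random generator, is not stated here, so treat this as an illustration rather than the dataset's recipe.

```python
import numpy as np


def add_speckle(image, looks=1, seed=None):
    """Multiply by unit-mean Gamma(L, 1/L) intensity speckle; L = 1 gives exponential speckle."""
    rng = np.random.default_rng(seed)
    speckle = rng.gamma(shape=looks, scale=1.0 / looks, size=image.shape)
    return image * speckle


clean = np.full((512, 512), 0.5)  # hypothetical homogeneous region
noisy = add_speckle(clean, looks=1, seed=0)
cv = noisy.std() / noisy.mean()  # coefficient of variation: about 1/sqrt(L)
```

A coefficient of variation close to 1 in a homogeneous 1-look region is why speckle is so damaging to derivative-based detectors.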

4.3. Denoising

The current literature bases its quantitative evaluations and parameter selection on a couple of simple images, as provided in . As a result, the generalized performance of the proposed methods is not truly reflected, as real-world patterns are much more complex and diverse. The main reason for this has been the lack of large-scale datasets with ground-truth edge annotations. Thus, instead of using a couple of simple synthetic images, we propose to use the training set of the real-world BSDS500-speckled dataset (28,800 images) for parameter tuning. The detectors with the best-performing parameters on the training set are then evaluated on the test set to form a benchmark. With this benchmark, we provide the most extensive experimental evaluation of edge detection for SAR images, thereby addressing the lack of large-scale experimental reviews in the remote-sensing field. We believe that our work will serve as a baseline for future SAR-specific edge detection algorithms by providing a fair experimental evaluation. The dataset and the benchmark are available at https://github.com/readmees/SAR_edge_benchmark.git.

When using denoising as a pre-processing step for edge detection, there is a trade-off to consider: the more noise is removed, the more (micro-)edges disappear, so that some of the true edges are also smeared out. Therefore, we consider denoising algorithms that tend to preserve structures better. To that end, block-matching and 3D filtering (BM3D) (Makinen et al. 2020), bilateral filtering, anisotropic diffusion and non-local means denoising (NLMD) are evaluated. We use a bilateral filtering algorithm designed specifically for SAR (SARBLF) (D’Hondt et al. 2013). Both SARBLF and anisotropic diffusion are iterative algorithms. Thus, the noise (and with it, micro-edges) is expected to be smoothed out more for a higher number of iterations.

All the denoising algorithms are implemented in Python. The BM3D implementation is taken from the original repository.Footnote2 SAR-BM3D, proposed by Parrilli et al. (2012), provides a SAR version of the algorithm based on the 2007 BM3D implementation. In their work, they state that the homomorphic BM3D performs quite similarly. Thus, we assume that the current improved version (2020) of BM3D is also sufficient for SAR images. Additionally, we use the OpenCV implementation of NLMD (Bradski 2000) and the anisotropic diffusion of the MedPy library.Footnote3 Finally, SARBLF is also taken from the original repository provided by its authors.Footnote4

All the denoising algorithms use their default settings unless stated otherwise. For BM3D and NLMD, the standard deviation of the noise is assumed to be 0.5 for all experiments. For anisotropic diffusion and SARBLF, we experiment with different numbers of iterations. Moreover, SARBLF has two parameters: the spatial scale parameter (gs), which adjusts the spatial extent of the filter similar to a window size, and the radiometric scale parameter (gr), which controls the amount of filtering when weighting the local averages of intensities. The code provided by the authors and their article use different values of gs and gr; thus, we evaluate both settings. Finally, for the algorithms assuming additive noise, images are transformed to the logarithmic domain, which turns the multiplicative speckle noise into additive noise.
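The log-domain (homomorphic) strategy can be sketched as follows, assuming NumPy and SciPy. A Gaussian filter is a hypothetical stand-in for BM3D or NLMD, and the small offset `eps` avoids taking the logarithm of zero.

```python
import numpy as np
from scipy import ndimage


def homomorphic_denoise(image, denoiser, eps=1e-6):
    """Run an additive-noise denoiser in the log domain and map the result back."""
    log_image = np.log(image + eps)  # multiplicative speckle becomes additive
    return np.exp(denoiser(log_image)) - eps


def gaussian_denoiser(x):
    """Hypothetical stand-in for BM3D or NLMD operating on additive noise."""
    return ndimage.gaussian_filter(x, sigma=2)


rng = np.random.default_rng(0)
clean = np.full((64, 64), 0.5)
noisy = clean * rng.exponential(size=clean.shape)  # 1-look speckle
restored = homomorphic_denoise(noisy, gaussian_denoiser)
```

Because the mean of the logarithm of 1-look speckle is about -0.577 (minus the Euler-Mascheroni constant) rather than 0, the restored image is biased low; homomorphic schemes commonly apply a bias correction, which this sketch omits.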

For quantitative assessment, the denoised images are compared against the clean images without any speckle (ground truth). To that end, the whole dataset of 29,000 images is evaluated using the mean squared error (MSE), measuring the per-pixel reconstruction quality; the peak signal-to-noise ratio (PSNR), measuring the ratio between the maximum possible signal power and the noise power; and the structural similarity index (SSIM), measuring similarity in a way that considers the perception of the human visual system. Both MSE and SSIM are in the range of [0, 1]. For MSE, 0.0 indicates that the predictions perfectly match the ground truths; thus, the lower the better. On the other hand, for SSIM, 1.0 indicates a perfect match; thus, the higher the better. Similarly, higher PSNR values indicate better reconstruction quality. It is in the range of [0, ∞). As a reference, SAR-BM3D achieves around 25.66 dB in its simulated speckle experiments.
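The two pixel-wise scores can be written in a few lines, assuming images scaled to [0, 1] so that the peak value is 1. The uniform error of 0.1 below is a worked example: it gives an MSE of 0.01 and hence a PSNR of 10·log10(1/0.01) = 20 dB. SSIM is more involved and is available, for example, as `skimage.metrics.structural_similarity`.

```python
import numpy as np


def mse(clean, denoised):
    """Mean squared error per pixel."""
    return float(np.mean((clean.astype(float) - denoised.astype(float)) ** 2))


def psnr(clean, denoised, data_range=1.0):
    """PSNR in dB for images scaled to [0, data_range]."""
    error = mse(clean, denoised)
    return float("inf") if error == 0 else float(10.0 * np.log10(data_range**2 / error))


clean = np.zeros((8, 8))
denoised = np.full((8, 8), 0.1)  # hypothetical uniform error of 0.1
```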

4.4. Edge detection

For the methods that have parameters, the training set of 28,800 (denoised) images is utilized for parameter tuning. Then, the best-performing set of parameters is used to evaluate the test set of 200 (denoised) images to form the benchmark. For quantitative evaluations, we use the receiver operating characteristic (ROC) curve and its area under the curve (AUC), together with metrics derived from confusion matrices. These metrics are precision (PPV), accuracy (ACC), F1-score (F1) and the Fowlkes–Mallows index (FM). For these metrics, 1 indicates the highest possible performance, while 0 indicates the lowest.

The ROC curve presents the change in the true-positive rate (sensitivity or recall) against the false-positive rate (fall-out) as the threshold varies. A perfect classification with no false negatives and no false positives generates a point in the upper left corner of the graph, whereas a random classification generates points along the diagonal line. Accordingly, points above the diagonal indicate good classification results, while points below it indicate algorithms of poor quality. Since different thresholds yield different rates, varying the threshold generates a curve that characterizes the performance of a method; a curve that follows the diagonal line indicates random performance. Finally, the area under the curve (AUC) is computed to summarize the ROC curve.
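A minimal sketch of the ROC curve and its AUC follows, assuming NumPy and an evenly spaced threshold sweep (the number of thresholds is an arbitrary choice here). The toy cases cover a perfect detector (AUC 1), an inverted one (AUC 0) and an uninformative one (AUC 0.5, the diagonal).

```python
import numpy as np


def roc_points(response, truth, n_thresholds=100):
    """(FPR, TPR) pairs for thresholds swept from the highest to the lowest response."""
    scores = response.ravel().astype(float)
    truth = truth.ravel().astype(bool)
    thresholds = np.linspace(scores.max(), scores.min(), n_thresholds)
    fpr, tpr = [0.0], [0.0]
    for t in thresholds:
        pred = scores >= t
        tpr.append(np.sum(pred & truth) / truth.sum())
        fpr.append(np.sum(pred & ~truth) / (~truth).sum())
    return np.array(fpr), np.array(tpr)


def auc(fpr, tpr):
    """Area under the ROC curve with the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))


truth = np.array([0, 0, 1, 1])  # hypothetical labels: two edge pixels, two background
perfect = auc(*roc_points(np.array([0.1, 0.2, 0.8, 0.9]), truth))
```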

For the evaluations, we consider the ranking scheme F1 > FM > PPV > ACC. Following common practice, we rank F1, the harmonic mean of precision and recall, as the most important metric, as it accounts for how the data are distributed in the case of imbalanced classes, i.e. sparse edge pixels vs. dense non-edge pixels. FM, the geometric mean of precision and recall, follows F1. Afterwards, we consider PPV, i.e. precision, as a standalone metric, because it measures the fraction of correctly identified positive cases (edges) among all predicted positive cases. Lastly, accuracy is considered, as it is easy to interpret and summarizes the per-pixel performance of the classification model. It is ranked lowest because it does not account for imbalanced classes. Finally, when there is no clear difference, we use AUC as guidance to determine the best-performing setup.
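The four confusion-matrix metrics can be computed as below, in a sketch that assumes boolean edge maps. The hypothetical counts illustrate the class-imbalance argument: with only 9 edge pixels among 100, accuracy reaches 0.95 while F1 is about 0.71.

```python
import numpy as np


def confusion_counts(pred, truth):
    """TP, FP, FN and TN for boolean edge maps."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    return (int(np.sum(pred & truth)), int(np.sum(pred & ~truth)),
            int(np.sum(~pred & truth)), int(np.sum(~pred & ~truth)))


def edge_metrics(tp, fp, fn, tn):
    """F1, Fowlkes-Mallows, precision (PPV) and accuracy, in the ranking order above."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    fm = (precision * recall) ** 0.5
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"F1": f1, "FM": fm, "PPV": precision, "ACC": accuracy}


# Hypothetical sparse-edge case: 9 edge pixels among 100
scores = edge_metrics(tp=6, fp=2, fn=3, tn=89)
```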

The gradient by ratio (GR) images are generated with the MATLAB code provided by Liu et al. (2020),Footnote5 which the authors present as the baseline for their supervised CNN model. For SAR-Shearlet, we use the MATLAB code provided by its authors, Sun et al. (2021).Footnote6 ROLSS RUSTICO consists of two parts: we implement ROLSS in Python following the description in the paper of Li et al. (2022), and use the MATLAB code of the original RUSTICO paper to apply it on top of the ROLSS results (Strisciuglio et al. 2019).Footnote7 Further, we utilize the MATLAB source code of GSS bi-windows provided by its authors (Shui and Cheng 2012).Footnote8 Apart from that, all the edge detection methods are implemented in Python. The majority of the first-order derivative-based edge detectors are implemented with the scikit-image library (van der Walt et al. 2014). Moreover, for creating Gabor and DoG filters, we use the scikit-image library as well. Furthermore, the discrete 2D wavelet transform is based on the PyWavelets library (Lee et al. 2019). The K-means-based edge detection utilizes the K-means clustering algorithm of the OpenCV library, and the boundaries are extracted with a Sobel filter. For subpixel edge detection, we use a pure Python implementation based on the original MATLAB implementation of Trujillo-Pino et al. (2013).Footnote9 In addition, our LoG implementation consists of applying a Gaussian blur and a Laplace operator, both implemented with scikit-image. For convolutions, we use scipy.ndimage.convolve with default parameters. Finally, the Touzi edge detector is provided by Orfeo ToolBox (Grizonnet et al. 2017).

Low-level edge detectors use their fixed (default) settings. Farid, Prewitt, Roberts, Scharr and Sobel use the defaults of scikit-image. For Frei-Chen, we separately convolve the image with each of the eight non-averaging kernels, as explained in Section 3.1.6. For the K-means-based edge detection, we consider

. Once the clustering is done, we use Sobel (scikit-image) to detect the boundaries between different clusters as edges. For the standard deviation of the Gaussian of the LoG filter, we evaluate for

. The

value of the DoG is composed of

. To determine

values, ratios of 4 and 5 are evaluated for each

. Thus for

,

and

are evaluated and so on. In addition, for the standard deviation of Canny, we evaluate for

and experiment with upper:lower threshold ratios of 2:1 and 3:1. Moreover, we evaluate the subpixel edge detector for

iterations, which are intended for high-noise images. Finally, Gabor filters also rely on Gaussian functions: their sigmas are derived from the frequency parameters by the default implementation of the scikit-image library. Following the setup of Wang et al. (Citation2019), the orientations and frequencies of a filter-bank are determined as follows:

where S and O are the numbers of scales and orientations, respectively. Using these formulations, we consider four different Gabor filter-banks. The first, with S = 5 and O = 8, is used by Wang et al. (Citation2019) and Khan et al. (Citation2019) and represents a Gabor filter-bank with five scales (five different frequencies) and eight orientations. Additionally, we evaluate

,

and

which are utilized by Wang et al. (Citation2019). The real and imaginary responses of each filter are combined by Equation 16. Finally, the combined responses are summed to create a superimposed gradient magnitude.
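The superimposed Gabor gradient magnitude described above can be sketched as follows. This is a minimal illustration, not the configuration used in this study: the frequency schedule f_max / sqrt(2)**s with f_max = 0.25, the orientation spacing pi / O, and the sigma prefactor of about 0.56 (which we believe approximates scikit-image's default one-octave bandwidth) are assumptions rather than the exact settings of Wang et al. (Citation2019), and Equation 16 is assumed to be the usual modulus sqrt(real² + imag²). The helper names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def gabor_kernel(frequency, theta, sigma_prefactor=0.56, n_stds=3):
    """Complex Gabor kernel; sigma = prefactor / frequency (assumed bandwidth rule)."""
    sigma = sigma_prefactor / frequency
    half = int(np.ceil(n_stds * sigma))
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so that the carrier oscillates along direction theta.
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-0.5 * (xr**2 + yr**2) / sigma**2) / (2 * np.pi * sigma**2)
    return envelope * np.exp(2j * np.pi * frequency * xr)


def gabor_bank_magnitude(image, scales=5, orientations=8, f_max=0.25):
    """Sum of per-filter magnitudes over a hypothetical S x O filter-bank."""
    img = np.asarray(image, dtype=float)
    total = np.zeros_like(img)
    for s in range(scales):
        f = f_max / (np.sqrt(2) ** s)  # hypothetical frequency schedule
        for o in range(orientations):
            k = gabor_kernel(f, o * np.pi / orientations)
            real = ndimage.convolve(img, k.real)
            imag = ndimage.convolve(img, k.imag)
            total += np.hypot(real, imag)  # assumed form of Equation 16
    return total
```

The nested loop also makes the runtime concern visible: the cost grows with S × O convolutions, and lower frequencies produce larger kernels.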

Considering the SAR-specific methods, for GR, we utilize the algorithm with . For the Touzi experiments, we sample radius values from

. In addition, for the GSS bi-windows, we evaluate for

, which regulates the window width. The

, which handles the spacing of the two windows, and

, which adjusts the window length, are calculated according to Equations 29 and 30, where we evaluate for

and

for different widths and lengths as follows:

For ROLSS RUSTICO, we use a circular window with a radius of 4 pixels to extract the local statistics. RUSTICO is then applied to the ROLSS responses with , which regulates the size of the image region in which the noise is suppressed, and

, which sets the strength of the suppression. Regarding SAR-Shearlet, we set

to 0.8 for parabolic scaling. The effective support length of the Mexican hat wavelet is set to

of the smaller of the image's width and height, and the effective support length of the Gaussian is set to 1/20 of that same dimension. Using these fixed parameters, we set the shear levels to

and use

scales per octave. Finally, since GRHED already uses the simulated BSDS500 dataset for its parameter selection, we do not repeat the procedure; instead, we directly use the model and parameters provided by the authors, which are calibrated on the simulated BSDS500 dataset and achieve state-of-the-art results. We refer the reader to the work of Liu et al. (Citation2020) for additional details.
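The ratio principle behind the Touzi detector can be sketched as follows. This is a simplified illustration, not the Orfeo ToolBox implementation: it only compares the left/right and top/bottom halves of a square window of the given radius (the toolbox may consider more directions), adds a small eps to avoid division by zero, and the helper names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def _half_window_means(img, radius):
    """Local means of the four half-windows around each pixel (centre row/column excluded)."""
    size = 2 * radius + 1
    halves = {
        "left": (slice(None), slice(0, radius)),
        "right": (slice(None), slice(radius + 1, size)),
        "top": (slice(0, radius), slice(None)),
        "bottom": (slice(radius + 1, size), slice(None)),
    }
    means = {}
    for name, region in halves.items():
        k = np.zeros((size, size))
        k[region] = 1.0
        # correlate (not convolve) so that "left" really samples pixels to the left.
        means[name] = ndimage.correlate(img, k / k.sum(), mode="reflect")
    return means


def ratio_edge_strength(image, radius=2, eps=1e-6):
    """Touzi-style ratio of averages: 1 - min(m1/m2, m2/m1), maximum over two directions."""
    img = np.asarray(image, dtype=float) + eps
    m = _half_window_means(img, radius)
    responses = []
    for a, b in (("left", "right"), ("top", "bottom")):
        ratio = m[a] / m[b]
        responses.append(1.0 - np.minimum(ratio, 1.0 / ratio))
    return np.max(responses, axis=0)
```

Because the response depends on the ratio of the local means rather than on their difference, it reflects relative contrast, which is why ratio-based detectors are favoured under multiplicative speckle.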

For the fusion methods, we experiment with combining both the gradient magnitudes and the thresholded binary edge maps of different edge detectors. The thresholded binary edge maps are combined by (i) single voting, where at least one of the methods has to classify a pixel as an edge, and (ii) complete agreement, where all the methods have to classify a pixel as an edge. Alternatively, instead of combining the binary outputs, we average the real-valued gradient magnitudes. Both fusion schemes consider two setups, each with equal weights: the best-performing non-SAR method combined with a SAR method, and two different SAR methods.
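The two fusion schemes above can be sketched with NumPy as follows: single voting is a pixel-wise logical OR, complete agreement is a pixel-wise logical AND, and magnitude fusion is an equal-weight mean. The function names are hypothetical, and the magnitude averaging assumes each input has already been min-max normalized to [0, 1].

```python
import numpy as np


def fuse_binary(maps, mode="single"):
    """Fuse thresholded binary edge maps.

    'single'   -> single voting: edge if at least one method marks it (logical OR).
    'complete' -> complete agreement: edge only if all methods mark it (logical AND).
    """
    stack = np.stack([np.asarray(m, dtype=bool) for m in maps])
    if mode == "single":
        return stack.any(axis=0)
    if mode == "complete":
        return stack.all(axis=0)
    raise ValueError(f"unknown mode: {mode}")


def fuse_magnitudes(magnitudes):
    """Equal-weight average of gradient magnitudes (each assumed normalized to [0, 1])."""
    return np.mean(np.stack([np.asarray(m, dtype=float) for m in magnitudes]), axis=0)
```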

Each edge detector takes a uint8 denoised image as input and outputs a gradient magnitude (edge response map), which is min-max normalized to the range [0, 1]. Thresholds are then sampled uniformly from [0, 1] with a step of 0.05 to derive the quantitative evaluation metrics and, where applicable, the confusion matrices for the ROC curves. For methods using double thresholding, we set the lower threshold to 0.0 and iterate over the higher threshold. Finally, for GRHED, we set the threshold to 0.5516, as proposed by the authors. Although this value is finer-grained than our 0.05 sampling step and might therefore yield better results, we respect the original implementation and use it as it is.
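The normalization and threshold sweep can be sketched as follows. This minimal version assumes a pixel-wise comparison with the ground truth (no distance tolerance) and counts a pixel as an edge when its normalized response is greater than or equal to the threshold; both choices are our assumptions. It returns raw (TP, FP, TN, FN) counts from which the metrics and ROC points can be derived, and the helper names are hypothetical.

```python
import numpy as np


def min_max_normalize(response):
    """Scale a response map to [0, 1]; a constant map becomes all zeros."""
    r = np.asarray(response, dtype=float)
    lo, hi = r.min(), r.max()
    return np.zeros_like(r) if hi == lo else (r - lo) / (hi - lo)


def confusion_per_threshold(response, ground_truth, step=0.05):
    """Thresholds 0.00, 0.05, ..., 1.00 and the (TP, FP, TN, FN) counts at each one."""
    r = min_max_normalize(response)
    gt = np.asarray(ground_truth, dtype=bool)
    thresholds = np.round(np.arange(0.0, 1.0 + step / 2, step), 10)
    counts = []
    for t in thresholds:
        pred = r >= t  # assumed tie rule: a response equal to t counts as an edge
        tp = int(np.sum(pred & gt))
        fp = int(np.sum(pred & ~gt))
        tn = int(np.sum(~pred & ~gt))
        fn = int(np.sum(~pred & gt))
        counts.append((tp, fp, tn, fn))
    return thresholds, counts
```

For example, a response [[0, 2], [4, 8]] normalizes to [[0, 0.25], [0.5, 1]]; at threshold 0 every pixel is predicted as an edge, and at threshold 1 only the maximum survives.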

5. Evaluation

5.1. Denoising

For this task, we evaluate BM3D, SARBLF, NLMD and anisotropic diffusion on the entire BSDS500-speckled dataset. All 29,000 images are first multiplied by 1-look speckle noise. We then apply the denoising methods and compare their outputs with the clean, non-speckled (ground-truth) images. Table 1 provides the averages of the metrics over the entire dataset, where N denotes the number of iterations.
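The speckle corruption step can be sketched as follows, assuming the common multiplicative intensity model in which L-look speckle follows a gamma distribution with shape L and mean 1 (an exponential distribution for L = 1). The exact procedure used to build BSDS500-speckled, including any clipping or re-quantization to uint8, is not reproduced here, so the helper `add_speckle` (a hypothetical name) is an illustration only.

```python
import numpy as np


def add_speckle(image, looks=1, seed=None):
    """Multiply an image by L-look speckle drawn from Gamma(shape=L, scale=1/L), mean 1."""
    rng = np.random.default_rng(seed)
    img = np.asarray(image, dtype=float)
    noise = rng.gamma(shape=looks, scale=1.0 / looks, size=img.shape)
    return img * noise
```

Under this model the noise variance is 1/L, so 1-look speckle is the hardest case: the standard deviation of a speckled homogeneous region equals its mean.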

Table 1. Evaluation of various denoising methods over the entire BSDS500-speckled dataset.

The results show that SARBLF (N = 3, gs = 2.2, gr = 1.33) achieves the best performance on all metrics. Therefore, SARBLF (N = 3, gs = 2.2, gr = 1.33) is used as a pre-processing (enhancement) step over the entire dataset. The results are also consistent with the findings of the SARBLF authors regarding the number of iterations and the scale parameters (D’Hondt et al. Citation2013). Anisotropic diffusion with 15 iterations is the second-best method, whereas NLMD performs worst.

Additionally, shows that the methods are able to preserve relatively sharp edges. BM3D and NLMD seem to produce more homogeneous areas, but they tend to create artefacts that might be mistaken for edges, especially BM3D. Moreover, both BM3D and NLMD appear to have low contrast and remain darker, even though their pixel values lie in the same range as those of anisotropic diffusion and SARBLF. SARBLF appears significantly less noisy than anisotropic diffusion, and SARBLF (N = 3, gs = 2.2, gr = 1.33) yields stronger edges than SARBLF (N = 3, gs = 2.8, gr = 1.4). Therefore, the qualitative evaluations are consistent with the quantitative ones.

5.2. Edge detection

5.2.1. First-order derivatives

The quantitative evaluation results for the first-order derivatives-based edge detectors on the training set are provided in Table 2.

Table 2. Evaluation of the first-order derivatives-based edge detectors.

The results show that Frei-Chen performs poorly on all metrics. Farid achieves the best F1 and PPV, Scharr the best accuracy, and Prewitt the best FM score, although the differences are marginal. Farid’s success might be attributed to its rotation invariance and its floating-point coefficients, which are able to capture finer details. Conversely, the poor performance of the Frei-Chen operator might be due to its combination of eight basis vectors, each of which is negatively affected by speckle noise artefacts, so that the noise accumulates over all possible directions.
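The Frei-Chen operator can be sketched with the standard nine orthonormal 3 × 3 masks: four edge masks, four line masks and an averaging mask. The edge strength below is the common formulation sqrt(edge energy / total energy), i.e. the cosine of the angle between each 3 × 3 neighbourhood and the edge subspace; this is our assumption about the combination and may differ from the one described in Section 3.1.6. The names `FREI_CHEN` and `frei_chen_edge` are hypothetical.

```python
import numpy as np
from scipy import ndimage

SQRT2 = np.sqrt(2.0)
FREI_CHEN = [
    # Edge subspace (masks 1-4).
    np.array([[1, SQRT2, 1], [0, 0, 0], [-1, -SQRT2, -1]]) / (2 * SQRT2),
    np.array([[1, 0, -1], [SQRT2, 0, -SQRT2], [1, 0, -1]]) / (2 * SQRT2),
    np.array([[0, -1, SQRT2], [1, 0, -1], [-SQRT2, 1, 0]]) / (2 * SQRT2),
    np.array([[SQRT2, -1, 0], [-1, 0, 1], [0, 1, -SQRT2]]) / (2 * SQRT2),
    # Line subspace (masks 5-8).
    np.array([[0, 1, 0], [-1, 0, -1], [0, 1, 0]]) / 2,
    np.array([[-1, 0, 1], [0, 0, 0], [1, 0, -1]]) / 2,
    np.array([[1, -2, 1], [-2, 4, -2], [1, -2, 1]]) / 6,
    np.array([[-2, 1, -2], [1, 4, 1], [-2, 1, -2]]) / 6,
    # Average (mask 9).
    np.ones((3, 3)) / 3,
]


def frei_chen_edge(image, eps=1e-12):
    """Edge strength in [0, 1]: share of local energy lying in the edge subspace."""
    img = np.asarray(image, dtype=float)
    proj = [ndimage.correlate(img, k) ** 2 for k in FREI_CHEN]
    edge_energy = sum(proj[:4])
    total_energy = sum(proj)  # equals the squared norm of each 3x3 patch
    return np.sqrt(edge_energy / (total_energy + eps))
```

Because every mask other than the averaging one sums to zero, speckle fluctuations leak into all eight non-average projections, which is consistent with the accumulation argument above.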

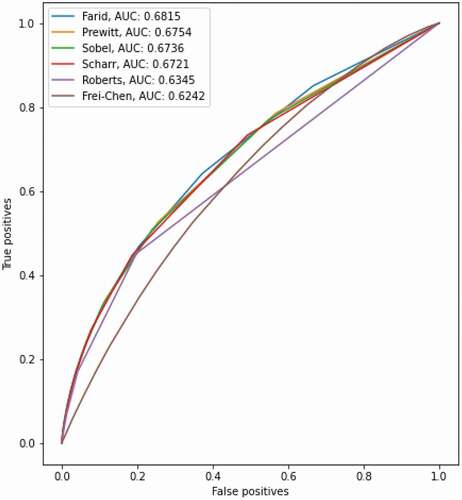

In addition, the ROC curves of the different methods are presented in . They show that Farid achieves the best AUC and Frei-Chen the worst, while Prewitt, Roberts, Scharr and Sobel obtain comparable results. The ROC evaluations therefore support the quantitative results: the Farid operator, which achieves the best F1 and PPV, emerges as the best first-order derivatives-based edge detection method on the dataset.
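Given confusion counts collected at each sampled threshold, the ROC curve and its AUC can be computed as sketched below. The trapezoidal rule and the added end points (0, 0) and (1, 1) are our assumptions, the counts are taken in (TP, FP, TN, FN) order, and the ground truth must contain both edge and non-edge pixels to avoid division by zero. The function name is hypothetical.

```python
import numpy as np


def roc_auc(counts):
    """ROC points and AUC from (TP, FP, TN, FN) tuples of a threshold sweep."""
    tpr = [tp / (tp + fn) for tp, fp, tn, fn in counts]
    fpr = [fp / (fp + tn) for tp, fp, tn, fn in counts]
    # Add the trivial end points, then sort by FPR (ties broken by TPR).
    fpr = np.array([0.0, *fpr, 1.0])
    tpr = np.array([0.0, *tpr, 1.0])
    order = np.lexsort((tpr, fpr))
    fpr, tpr = fpr[order], tpr[order]
    # Trapezoidal rule over consecutive ROC points.
    auc = float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))
    return fpr, tpr, auc
```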

5.2.2. Second-order derivatives

5.2.2.1. Laplacian of Gaussian (LoG)

The quantitative evaluation results for the second-order derivative-based LoG method with different parameters over the training set are provided in Table 3.

Table 3. Evaluation of the different parameters for LoG.

The results show that as sigma increases, accuracy and PPV tend to increase, whereas F1 and FM scores tend to decrease. This suggests that extra smoothing handles false positives better, which raises accuracy, but it also smears out the true edges, thereby lowering the F1 score. Considering our ranking of the metrics, LoG with , which has the best F1 and FM scores, emerges as the best setup. Since we rely on the inherent zero-crossing property of second-order derivatives-based methods for the detection step, ROC curves and the related AUC scores are not computed.
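The zero-crossing detection step for LoG can be sketched as follows. It uses scipy.ndimage.gaussian_filter and scipy.ndimage.laplace as stand-ins for the scikit-image functions mentioned in the implementation details, and the relative jump threshold (1% of the maximum absolute response), which suppresses sign flips caused by numerical noise in flat regions, is an assumption rather than a setting reported here. The function name is hypothetical.

```python
import numpy as np
from scipy import ndimage


def log_zero_crossings(image, sigma=2.0, rel_threshold=0.01):
    """LoG edges: Gaussian blur, Laplace operator, then sign changes between neighbours."""
    img = np.asarray(image, dtype=float)
    response = ndimage.laplace(ndimage.gaussian_filter(img, sigma))
    # Ignore sign flips whose jump is tiny relative to the strongest response.
    min_jump = rel_threshold * np.abs(response).max()
    a, b = response[:, :-1], response[:, 1:]
    horiz = (np.sign(a) * np.sign(b) < 0) & (np.abs(a - b) > min_jump)
    c, d = response[:-1, :], response[1:, :]
    vert = (np.sign(c) * np.sign(d) < 0) & (np.abs(c - d) > min_jump)
    edges = np.zeros(img.shape, dtype=bool)
    edges[:, :-1] |= horiz  # mark the left pixel of a horizontal crossing
    edges[:-1, :] |= vert   # mark the upper pixel of a vertical crossing
    return edges
```

The output is a binary map rather than a graded magnitude, which is why no threshold sweep, and hence no ROC curve, applies to this detection step.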

Compared with the first-order derivatives-based methods of Table 2, the performance of the LoG operator is quite poor. For instance, Farid, with the highest F1 score, achieves 0.3581 and Frei-Chen, with the lowest, achieves 0.3053, whereas the best LoG setting only reaches 0.1894. The same pattern is observed for the PPV and FM metrics. This agrees with the findings of Bachofer et al. (Citation2016) that LoG does not perform well on SAR images because of low gradients caused by the remaining noise, which negatively influences the Gaussian filter. As a result, none of the evaluated LoG settings is well suited to SAR images. Nonetheless, we conclude that is the best LoG option to be evaluated on the test data.

5.2.2.2. Difference of Gaussians (DoG)

The quantitative evaluation results for the second-order derivatives-based DoG method with different parameters over the training set are provided in Table 4.

Table 4. Evaluation of the different parameters for DoG. Ratio indicates the size ratio of the kernels.

The results show that DoG behaves similarly to LoG: as sigma increases, accuracy and PPV tend to increase, while F1 and FM scores tend to decrease. Likewise, extra smoothing reduces false positives and raises accuracy, but it also causes the loss of some of the true edges. The ratios have the same effect: higher ratios achieve higher accuracy and PPV, but lower F1 and MK scores. Therefore, DoG with and

, which achieves the highest F1 and FM scores, is to be evaluated on the test data.
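The DoG response with the kernel size ratio used above can be sketched as follows: the image is blurred with a narrow Gaussian of standard deviation sigma1 and a wider one of standard deviation ratio × sigma1 (4 or 5 in our setup), and the wider result is subtracted from the narrower one. This sketch uses scipy.ndimage.gaussian_filter rather than scikit-image, and the function name is hypothetical; as with LoG, edges would then be located at the zero crossings of this response.

```python
import numpy as np
from scipy import ndimage


def difference_of_gaussians(image, sigma1, ratio=4.0):
    """DoG response: narrow Gaussian blur minus a wider one with sigma2 = ratio * sigma1."""
    img = np.asarray(image, dtype=float)
    sigma2 = ratio * sigma1
    narrow = ndimage.gaussian_filter(img, sigma1)
    wide = ndimage.gaussian_filter(img, sigma2)
    return narrow - wide
```

A larger ratio widens the second Gaussian, so the response integrates over a bigger neighbourhood; this is in line with the observation that higher ratios trade F1 for accuracy and PPV.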

Compared with the LoG results of Table 3, DoG achieves better accuracy (0.7935 vs. 0.7312) and PPV (0.2208 vs. 0.1783), yet LoG obtains better F1 (0.1894 vs. 0.1757) and FM (0.2009 vs. 0.1818) scores. Compared with the first-order derivatives-based methods of Table 2, the performance of the DoG operator is quite poor, as in the case of LoG. The results further suggest that first-order derivatives are more robust to noise than second-order derivatives, since each additional differentiation amplifies the noise. Overall, the evaluations indicate that second-order derivatives-based operators are unreliable for the task.

5.2.3. Advanced methods

5.2.3.1. Canny

The quantitative evaluation results for the advanced Canny method with different parameters over the training set are provided in Table 5.

Table 5. Evaluation of Canny edge detection for different sigma options and threshold ratios.

The results show that, for all sigma options, the upper:lower threshold ratio of 2:1 achieves better results on all metrics, with the exception of the setup. This suggests that the 3:1 ratio is more prone to wrongly classifying weak edges as true edges. As with the second-order derivatives-based methods of Tables 3 and 4, accuracy and PPV tend to increase as the smoothing factor increases, while F1 and FM scores deteriorate until

, where the metrics start to fluctuate. Therefore, Canny with

and a 2:1 ratio, which achieves the highest F1 and FM scores, is to be evaluated on the test data. Finally, the non-maximum suppression, double thresholding and hysteresis steps prevent Canny from producing proper ROC curves; nonetheless, its AUC results are in line with the quantitative results.
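The double thresholding with hysteresis at the upper:lower ratios evaluated above can be sketched as follows. It covers only this final step of Canny (no Gaussian smoothing or non-maximum suppression), assumes 8-connectivity, and works on any gradient-magnitude array; the function name is hypothetical.

```python
import numpy as np
from scipy import ndimage


def hysteresis(magnitude, high, ratio=2.0):
    """Keep weak pixels (>= high / ratio) only if their component contains a strong pixel (>= high)."""
    mag = np.asarray(magnitude, dtype=float)
    strong = mag >= high
    weak = mag >= high / ratio
    labels, n = ndimage.label(weak, structure=np.ones((3, 3)))  # 8-connectivity (assumed)
    keep = np.zeros(n + 1, dtype=bool)
    keep[np.unique(labels[strong])] = True
    keep[0] = False  # label 0 is the background
    return keep[labels]
```

With the same upper threshold, a 3:1 ratio lowers the lower threshold, so the connected components attached to strong pixels grow and more weak responses survive, matching the tendency of the 3:1 ratio to accept weak edges as true edges.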

Compared with the first-order derivatives-based methods of Table 2, Canny achieves higher accuracy, which can be attributed to its additional smoothing step, but it falls behind on the other scores. Canny performs poorly because it generates one-pixel-wide edge maps, whereas our dataset includes edges wider than one pixel, which hinders its quantitative performance on this particular dataset. On the other hand, compared with the best parameter settings of the second-order derivatives-based methods of Tables 3 and 4, Canny achieves significantly better accuracy and PPV, while being on par in F1 and FM. Therefore, Canny appears to be a better option than the second-order derivatives-based methods for the task. As a final note, replacing its Gaussian filter with a bilateral filter might further improve the results (Fawwaz et al. Citation2018).

5.2.3.2. Gabor filters

The quantitative evaluation results for the different Gabor filter-banks with various scales and orientations over the training set are provided in Table 6.

Table 6. Evaluation of Gabor edge detection for different scales (S) and orientations (O).

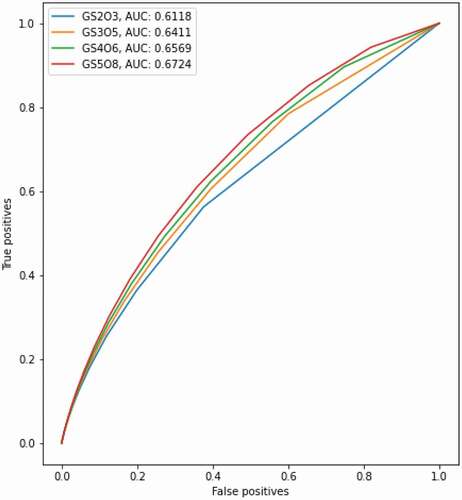

Table 6 shows that the results tend to improve on all metrics as the number of scales and orientations increases, because the filter-banks are able to capture more diverse patterns. Further improvements might be attainable by increasing the scale and orientation coverage; however, the runtime also grows significantly with each additional variation. For instance, it takes around 500 hours to evaluate over the dataset .Footnote10 We therefore do not evaluate additional combinations. The ROC curves for the different filter-banks are presented in .

The ROC curves support the metrics: increasing the number of scales and orientations also improves the AUC. Thus, the Gabor filter-bank with five scales and eight orientations (S = 5, O = 8) emerges as the best setup and is to be evaluated on the test data.

Compared with the first-order derivatives-based edge detection methods of Table 2, the Gabor filter-bank with S = 5 and O = 8 is superior to Frei-Chen on all metrics, and it achieves the second-best FM score after Prewitt. In terms of AUC, it obtains better results than Frei-Chen, Roberts and Scharr, yet it does not perform as well as Sobel, Prewitt or Farid. Similarly, compared with Tables 3 and 4, it achieves better results than the second-order derivatives-based methods on all metrics except accuracy. Finally, compared with the best-performing Canny setting of Table 5, it achieves higher F1, FM and PPV, but lower accuracy. Thus, for applications where attaining high FM levels is more important, the method might be a suitable option. Additionally, as stated by Xiang et al. (Citation2017a), Gabor filters tend to provide better connectivity and smoothness for SAR image edge detection, which might be another aspect to consider. Nonetheless, the computational cost should also be carefully considered, especially since the performance of the Gabor filters is lower than or similar to that of the first-order derivatives-based methods. Using log-Gabor wavelets or fuzzified Gabor filter-banks might further improve the results (Nava et al. Citation2011; Tadic et al. Citation2021).

5.2.3.3. K-means clustering

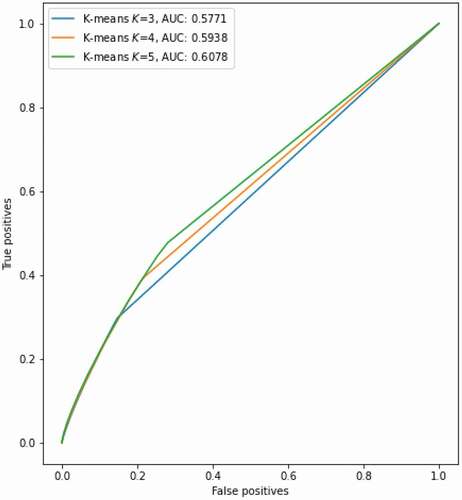

The quantitative evaluation results for the K-means clustering-based edge detection method with different numbers of clusters over the training set are provided in Table 7.

Table 7. Evaluation of different number of clusters for K-means-based edge detection.