ABSTRACT

Evidence-based policy-making has received increasing attention among governments in recent decades. However, comparative research examining patterns of evidence impact and administrative priorities for different forms of evidence remains scarce. In response, this article investigates the impact of different evidence types in two Danish ministries – the Ministry of Employment and the Ministry of Children and Education – characterized by similar analytical capacities but different strategies and criteria for prioritizing and using evidence. Applying a novel document matching method to analyze 1159 research publications and policy decisions from 2015–2021 about Danish active labor market policies and public school policies, the article shows how causal effect evidence has to a greater extent influenced policy decisions in the Ministry of Employment than in the Ministry of Children and Education. The article proposes that the observed variation between the policy domains can be attributed to ministerial evidence strategies, shaping administrative perceptions of the relevance and usefulness of different types of evidence. Based on the results, the article discusses the implications of prioritizing particular evidence types in ministries, considering variations between study designs and their appropriateness for different policy needs and purposes.

Introduction

Responding to widespread pressures for accountability and efficiency, using evidence to inform policy decisions has received increasing attention among governments (Head Citation2016; Liket Citation2017; Reed Citation2018; Sayer Citation2020). Relying on evidence in policy-making has been promoted by international organizations, such as the OECD (Citation2017) and the European Commission (Citation2015), while advances in modern technology and analysis have expanded the supply of research and data to decision-makers (Legrand Citation2012). In relation to this, the notion of “evidence-based policy-making” (EBPM) has been promoted and debated (Botterill and Hindmoor Citation2012; French Citation2019; Pawson Citation2002). EBPM proponents have borrowed heavily from “evidence-based medicine” to argue that evidence of causal effect should take centre stage in policy-making. Systematically consulting evidence about the effects of different policy options to make well-informed decisions has been promoted as contributing to efficient and responsible governance (Andersen and Smith Citation2022; Baron Citation2018; Cairney Citation2016; Hansen and Rieper Citation2010; Head Citation2016; Ingold and Monaghan Citation2016). However, the seemingly frictionless transfer of causal evidence into better policy decisions, as implied by the EBPM notion, has repeatedly been described as problematic. Scholars have emphasized how policy decisions are rarely “based” directly on evidence and problematized how the EBPM notion stipulates a narrow definition of “useful evidence”, favouring evidence of causal effect while marginalizing other forms of knowledge from policy discussions and decision-making processes (Ansell and Geyer Citation2017; Botterill and Hindmoor Citation2012; Daviter Citation2015; Oliver Citation2022; Sanderson Citation2006; Van der Arend Citation2014). 
Significant debates have addressed how to evaluate the relevance and usefulness of study designs for informing policy, what constitutes appropriate types of evidence for various policy needs, and the implications of adopting evidence evaluation criteria from medicine in other policy domains (Dahler-Larsen Citation2017; Nutley, Powell, and Davies Citation2013; Parkhurst Citation2017; Parkhurst and Abeysinghe Citation2016; Petticrew and Roberts Citation2003).

Theoretical contributions to the EBPM literature have elucidated different functions of evidence and forms of utilization (Beyer Citation1997; Nutley, Walter, and Davies Citation2007; Weiss Citation1979), and it has been proposed that different cultures of evidence use exist across policy domains and government institutions (Boswell Citation2015; Lorenc et al. Citation2014). Such cultures reflect different positions on what constitutes useful evidence and the role that different evidence types should play in policy-making. For example, studies have documented how the medical field adheres to a causal hierarchy of evidence, in which randomized controlled trials (RCTs) are considered the gold standard, and how policy-makers in economics tend to display rationalist and technocratic views on policy-making (Boaz et al. Citation2019; Christensen and Mandelkern Citation2022). Oliver (Citation2022, 80) explains how the common worldview in such policy domains is that policy is best made using evidence, RCTs and systematic reviews are the best types of evidence, and researchers should conduct more such studies to maximize their use by policy-makers. Contributions to the EBPM literature have shown how such perceptions concerning the relevance and usefulness of different evidence types have travelled to other policy domains, shaping how policy problems are addressed in government institutions (Andersen Citation2020; Baron Citation2018; Clarence Citation2002; Hansen and Rieper Citation2010; Oliver Citation2022). As such, EBPM has influenced administrative priorities and practices in areas like education, criminal justice, employment, and social policy.

A number of scholars have stressed that the diffusion of evidence evaluation criteria from medicine to other policy domains, through the notion of EBPM, is problematic because an unconditional preference for causal effect evidence in government institutions may marginalize certain perspectives from policy discussions and bias policy decisions towards specific kinds of solutions (Andersen Citation2020; Dahler-Larsen Citation2017; Parkhurst Citation2017; Sayer Citation2020; Triantafillou Citation2015). However, comparative research examining patterns of how different forms of evidence impact policy decisions across government institutions remains scarce, thereby limiting the empirical basis for discussing administrative evidence priorities and choices. Existing studies rely on a limited set of perceptual measures, including surveys of self-reported use of evidence (e.g. Head et al. Citation2014; Jennings and Hall Citation2012; Landry, Lamari, and Amara Citation2003) or qualitative case studies of how particular items of research have shaped policy processes (e.g. Hayden and Jenkins Citation2014; Van Toorn and Dowse Citation2016; Zarkin Citation2021). While these studies have provided important empirical insights, the applied measures entail different biases and limitations. Particularly, it has proved challenging for scholars to separate the priorities and values of policy-makers from whether and how various types of evidence inform policy decisions. There is therefore a need for alternative measures to capture variations between government institutions and policy choices concerning evidentiary priorities and needs.

In response, this article studies how different types of evidence have informed policy decisions in two government institutions in Denmark: the Ministry of Employment and the Ministry of Children and Education. The article does so by examining the nature and forms of evidence reflected in active labor market policies (ALMPs) and public school policies from 2016–2021, considering the evidence available to the two ministries as well as their strategies for prioritizing different evidence types in policy development and decision-making. The article is based on a quantitative content analysis of 1159 research publications and policy decisions and makes a threefold contribution: First, the article introduces a novel analytical approach to examine patterns of how different types of evidence are reflected in subsequent policy decisions to approximate levels of evidence impact. Second, the article empirically documents policy domain variations concerning the impact of different evidence types on policy decisions. Third, the article contributes to debates about EBPM by discussing the implications of prioritizing specific evidence types in ministries, considering variations between study designs and the appropriateness of different methods for different policy needs and purposes.

Conceptualising and measuring the impact of evidence in policy-making

The idea that knowledge rather than interests should inform policy-making is longstanding. In recent decades, this idea has gained popularity via the notion of EBPM, originating from medicine and endorsed by governments and international organizations to address the complex challenges facing societies today (Andersen and Smith Citation2022). Young et al. (Citation2002) define EBPM as a prescription for the increased use of evidence in policy-making as well as the methodological character of this evidence. Baron (Citation2018, 40) further specifies how EBPM encompasses “(1) the application of rigorous research methods, particularly randomized controlled trials (RCTs), to build credible evidence about ‘what works’ to improve the human condition; and (2) the use of such evidence to focus public and private resources on effective interventions.” The EBPM notion hence promotes the reliance on causal effect evidence derived from experimental methods to guide the decisions made by policy-makers and government institutions. Yet the EBPM notion has been criticized by several scholars for overrating the extent to which evidence of causal effect can or should inform policy decisions in democratic systems, considering the versatility of policy issues and needs (Ansell and Geyer Citation2017; Botterill and Hindmoor Citation2012). In light of this, the early aspirations of “basing” policies on evidence have been heavily qualified, with many scholars now applying the more modest expression “evidence-informed policy-making” (Boaz et al. Citation2019; Head Citation2016). Interestingly, however, scholars have documented how the notion of EBPM remains a widespread discourse among policy-makers, shaping their attitudes regarding the relevance and usefulness of different types of evidence (Oliver Citation2022). 
According to Parkhurst (Citation2017), policy-makers tend to display a bias towards favouring quantitative, experimental evidence regardless of the policy issues at stake, which might affect the policy solutions adopted.

While the EBPM literature is long on concepts about the impact of evidence on policy decisions, it is short on measurements (Cozzens and Snoek Citation2010; Knudsen Citation2018). Empirical knowledge to underpin debates about EBPM and the implications of its assumptions is therefore missing. Part of this pertains to a lack of conceptual consistency and clarity in how terms like “evidence”, “utilisation” and “impact” are understood (Blum and Pattyn Citation2022; Boaz and Nutley Citation2019; Cairney Citation2016). For example, a challenge is the lack of distinction between policy-makers deciding to implement a policy that has been tested in a piece of research vis-a-vis policies being informed by or based on evidence. Given the complexity of how evidence is used and influences policy decisions, there have been recent calls for innovation in methods to capture the contribution of evidence to policy-making (Christensen Citation2023; Jørgensen Citation2023; Riley et al. Citation2018). Notably, scholars have emphasized the importance of considering questions of time (when in the policy process to identify impact) and attribution (assuring that a particular item of evidence and not something else has produced a change in policy) (Boaz and Nutley Citation2019). The article deals with these considerations in the following ways.

First, the article considers “evidence” as the written product of research, comprising both quantitative and qualitative studies, but not political know-how, expert advice and professional experience. This is similar to Head’s (Citation2008) definition of scientific (research-based) knowledge; yet the present study is not limited to academic publications. Reports from public and private research organizations and think tanks are included too. Second, the study focuses on national-level “policy decisions”. These decisions comprise various policy instruments, including laws, policy programmes, economic incentives and penalties, public resource allocations, and information campaigns (Cairney Citation2012; Vedung Citation2007). One might object that such a “decisionistic” focus neglects the alternative ways evidence can shape policy processes. There is a wide literature describing how evidence can be used instrumentally, conceptually, and symbolically at various stages of policy-making (Beyer Citation1997; Boswell Citation2009; Knott and Wildavsky Citation1980; Nutley, Walter, and Davies Citation2007). Distinguishing between different forms of utilization and impact, however, has proved methodologically challenging. According to Christensen (Citation2021; Citation2023), the concepts are somewhat overlapping, and separating them may obscure the distinction between the intentions behind using different forms of evidence and their impact. The implications of the different forms of utilization for evidence impact are thus unclear.

Recognizing this, and to enable wide-scale analysis of numerous research publications and policy decisions over time, this study uses a broad conception of “evidence impact” focusing on whether the conclusions and recommendations from research publications are reflected in subsequent policy decisions. This does not equal evidence utilization per se, but it enables an approximation of evidence use across a large number of policy decisions, which would be laborious and time-consuming to document qualitatively. Drawing on Landry, Lamari, and Amara (Citation2003), the analysis seeks to capture the “influence stage” of utilization, in which evidence appears to impact policy decisions. Impact is attributed when there is an apparent match between the conclusions and recommendations of particular research publications and subsequent policy decisions. Because the study samples research publications that are accessible and relevant to the ministries under study, employs systematic procedures for identifying evidence–policy matches, and aggregates the matches across numerous policy decisions, the results are considered good indicators of impact (Jørgensen Citation2023; Knudsen Citation2018).

Administrative priorities regarding different types of evidence

Despite the enthusiasm for EBPM among governments, existing research on the topic provides somewhat ambiguous findings as to whether evidence influences policy decisions and, if so, which types of evidence do. Scholars have identified a range of facilitators and barriers to the influence of evidence on policy (Christensen and Mandelkern Citation2022; Nutley, Walter, and Davies Citation2007; Oliver et al. Citation2014). First, it is commonly recognized that the impact of evidence on policy decisions is positively associated with administrative capacities for producing, accumulating and considering research and data in the policy process (Howlett Citation2009, Citation2015; Jennings and Hall Citation2012; Newman, Cherney, and Head Citation2017). Second, scholars have documented how the timeliness, accessibility and relevance of research are important for whether it finds its way into policy decisions (Oliver et al. Citation2014; Reid et al. Citation2017). Third, an important body of work has focused on the characteristics of the evidence itself to explain why it does or does not inform policy-making. Here, “evidence quality” is considered an important factor for impact (Oliver et al. Citation2014). However, scholars have also shown that different perceptions exist regarding what constitutes relevant and useful evidence for policy-making (Nutley, Powell, and Davies Citation2013; Parkhurst and Abeysinghe Citation2016).

Inspired by practices from medicine, the early proponents of EBPM advocated a “hierarchy of evidence” in which RCTs and systematic reviews were perceived as providing more credible knowledge than research derived from observational or qualitative research approaches (Borgerson Citation2009; Høydal and Tøge Citation2021). Yet as EBPM has spread to other policy domains, this perception of “useful evidence” has been broadened to reflect a wider range of policy concerns and issues (Smith and Haux Citation2017). In light of this development, several scholars have proposed that the relevance and usefulness of different types of evidence should be judged based on their appropriateness for specific policy needs rather than adopting a fixed causal hierarchy with experimental study designs at the top (Nutley, Powell, and Davies Citation2013; Parkhurst and Abeysinghe Citation2016; Petticrew and Roberts Citation2003). Instead of ranking different evidence types based on their ability to document causal effects of policy choices, evidence should be appraised based on how well it serves particular policy needs (Parkhurst et al. Citation2021).

Administrative positions regarding the relevance and usefulness of different evidence types may manifest in the strategies, vision statements and work plans of government institutions. As argued by Parkhurst et al. (Citation2021, 448), institutional logics shape perceptions of the appropriate forms and applications of evidence for policy needs. In this article, these logics are referred to as “evidence strategies” (understood as the criteria employed to accept or reject available evidence), guiding what forms of evidence are consulted in policy-making processes. According to Head (Citation2016), two dominant positions exist among those who advocate for the increased use of evidence in policy-making. The first position adheres to prescriptions from evidence-based medicine, where the relevance and usefulness of evidence are judged based on a causal hierarchy, which favors experimental designs for programme evaluation, while cross-sectional and case-based, qualitative research is given a lower priority. In such a strategy, which is mostly found in policy areas associated with the natural sciences and economics, evidence serves the narrow purpose of documenting “what works” to solve policy problems most effectively and efficiently (Nutley, Powell, and Davies Citation2013). This could be characterized as a “hierarchical strategy” towards evidence use. The second position is more inclusive, maintaining that the objective good is contingent upon the policy issue at stake and accepting a wider range of research designs and knowledge types to inform policy-making. In this perspective, the relevance and usefulness of different evidence types should be evaluated based on how well they contribute to better deliberations about problems and solutions, as emphasized in the programmatic perspective from Parkhurst et al. (Citation2021). This could be labelled an “inclusive strategy”.

Implications of adopting different evidence strategies

When evidence perceptions and practices from medicine travel to other policy domains and inspire administrative practices in areas like education, employment, or social policy, they may promote particular ways of understanding and addressing policy problems. Oliver (Citation2022, 78) explains how EBPM prescriptions from medicine “shape policy appetites” for evidence use among policy workers, motivating an unconditional preference for causal effect evidence, notwithstanding the policy issue in question. Parkhurst (Citation2017) argues that such fixed preferences for specific types of evidence can foster “issue biases”, meaning that some concerns and perspectives are systematically marginalized because of the characteristics of the evidence types favored to inform policy processes. Accordingly, calls for basing policy on specific forms of evidence risk encouraging policy decisions that are based on what has been counted, not necessarily on what counts (Parkhurst Citation2017, 54). Hence, a number of scholars have advocated for replacing fixed hierarchies with a focus on evidence appropriateness for different policy needs (Nutley, Powell, and Davies Citation2013; Parkhurst and Abeysinghe Citation2016; Petticrew and Roberts Citation2003). Such a view does not imply that all study designs are equal, but acknowledges that, for example, RCTs are useful for answering causal effect concerns while qualitative research is appropriate for concerns such as explanation or process understanding. Government institutions should thus not apply fixed hierarchies to evaluate evidence types across all concerns.

While these arguments are reasonable, they are often normatively founded, and there is a lack of comparative empirical research documenting the priorities and criteria regarding evidence adopted by government institutions and whether policy decisions are in fact more influenced by some evidence types than others. The few studies that exist provide somewhat contradictory results on these matters: While some scholars have documented policy-maker preferences for quantitative and experimental study designs (Amara, Ouimet, and Landry Citation2004; Ingold and Monaghan Citation2016; Lingard Citation2011; Monaghan and Ingold Citation2018), others find that causal hierarchies play a minor role among policy-makers (Petticrew et al. Citation2004), that the utilization of different evidence types depends on the purpose of their use (Høydal and Tøge Citation2021), or that other factors than the type of evidence are more important determinants of evidence impact (Landry, Lamari, and Amara Citation2003). More research is therefore needed examining the impact of different evidence types across government institutions and policy domains.

Case selection and expectations

To study variations between government institutions regarding the impact of different evidence types, the Ministry of Employment and the Ministry of Children and Education in Denmark are compared, focusing on policy decisions relating to active labor market policies and public school policies from 2016‒2021. The cases are interesting for comparison for three main reasons:

First, the two ministries share a number of similarities. The ministries are comparable (Lijphart Citation1971, 687) in the sense that both are characterized by high analytical capacities for accumulating and considering research and data as well as affiliated agencies and research organizations that regularly provide research-based evidence. The compilation and dissemination of research is also a distinctively marked budget heading in the annual reports of both ministries (STAR Citation2022; STUK Citation2022). The ministries are thus characterized by having close links to research producers and by having a highly skilled workforce – two factors considered conducive to evidence use in government institutions (Howlett Citation2009; Jennings and Hall Citation2012; Oliver et al. Citation2014; Van der Arend Citation2014).

Second, the employment and education domains are relatively similar concerning governance structures and policy conflict dynamics. Both policy domains operate within a framework of centralized regulation and municipal implementation of policies (Bredgaard and Rasmussen Citation2022; OECD Citation2020). The policy domains also deal with similar areas of tension in the sense that both domains are occupied with a dual task of rendering the system more effective (reducing unemployment; increasing student performance) while ensuring good conditions for the citizens in the system (social support; well-being) (Andersen Citation2020; BUVM Citation2023). This has fostered debates regarding work-first versus human capital approaches in the employment domain and discussions about central learning goals and monitoring vis-à-vis professional autonomy in education. The present study does not offer a comprehensive analysis of the extent to which different types of evidence are required by the two ministries in relation to policy priorities and goals in the studied period – a limitation which should be explored in future studies. However, both ministries deal with policy programmes and solutions in which many types of evidence could be useful for informing a single policy question, such as how to get different citizen groups into employment, how to increase student performance in schools, etc.

Third, there are significant differences between the two ministries regarding their strategy towards evidence use, i.e. the criteria employed to accept or reject available evidence. The Ministry of Employment has been following a formal evidence strategy since 2012, which is closely inspired by the EBPM notion with a focus on basing policies on causal effect evidence and a strong preference for experimental designs (STAR Citation2023a). By contrast, the Ministry of Children and Education is characterized by a more inclusive and less explicit strategy, appraising many forms of evidence and aiming to let the “best available evidence” inform policy decisions broadly (STUK Citation2023).

By comparing two ministries with similar background characteristics, but different strategies regarding the prioritization of specific evidence types, the case selection seeks to capture the role of ministerial evidence strategies for whether different types of evidence influence policy decisions. Due to variations in the ministerial strategies adopted, the article expects that decisions in the two policy domains will be influenced by different forms of evidence: Because the Ministry of Employment has been following a strategy that aims at basing policies on causal effect evidence, the article expects to observe a higher impact of causal effect evidence on policy decisions regarding active labor market policies. By contrast, the article expects a broader prioritization of different evidence types in the Ministry of Children and Education and a less clear focus on causal effect evidence in public school policy decisions. The section below describes how these expectations were examined.

Document matching analysis

The article applies a document-matching approach to study the impact of evidence (Jørgensen Citation2023; Knudsen Citation2018). The analysis involved a sequential process of collecting documents, applying a coding scheme and analyzing the codes using different statistical tools. The analysis sought to identify matches between conclusions and recommendations from research publications and policy decisions, capturing “preference attainment” (Christensen Citation2023, 8) or the “influence stage” of evidence utilization (Landry, Lamari, and Amara Citation2003). The data collection and analysis were carried out in 2022.

Collecting documents

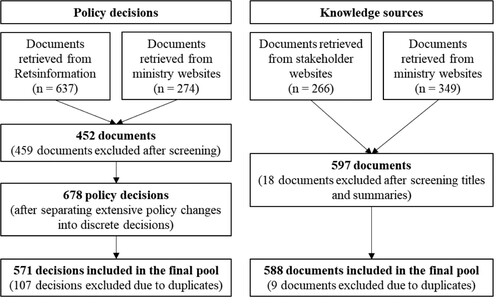

Research publications and policy decisions concerning ALMP and public school policy from 2015‒2021 formed the units of analysis. In early 2022, 588 research publications and 571 policy decisions were retrieved. Collecting the documents required explicit selection criteria. To accommodate issues with timeliness, accessibility and relevance of research (Oliver et al. Citation2014), the collection of research publications was based on a purposive sampling strategy (Krippendorff Citation2013). Nutley, Walter, and Davies (Citation2007, 62) emphasize that policy-makers predominantly utilize knowledge from research, government, and specialist organizations, and Van der Arend (Citation2014, 618) has shown how policy officials generally express difficulty locating relevant evidence unless it is provided by organizations they already know. The document collection therefore aimed to capture the knowledge horizon of the two ministries under study. A similar strategy was applied by Knudsen (Citation2018), which inspired the analysis. The document collection targeted publications that were timely (i.e. issued within a six-year timeframe), on topic and issued by Danish research-producing organizations referred to on the ministry websites or appearing on public hearing lists in relation to recent employment and education reforms. The documents were thus retrieved from the ministry websites (349) or stakeholder websites (266).

The collection of policy decisions was limited to law documents and decisions issued on ministry website news feeds. Only decisions mentioning “active labor market” or “public school” were included. The study exclusively focused on national-level policy decisions made by elected politicians and ministries. Such policy decisions comprise different policy instruments, including public spending levels, economic incentives or penalties, laws, orders, regulations, policy programmes, and information measures (Cairney Citation2012; Vedung Citation2007). The decisions were hence identified in laws, amending acts, orders, agreements and notices about policy initiatives and programme funding. Duplicates of policy decisions were removed, and technical changes in law documents (e.g. due to decisions taken by other authorities) were discarded. COVID-19 decisions enforced by the Danish Epidemic Act were also removed. If a policy document included several decisions, they were separated into discrete units. The 571 documents thus comprised all relevant national-level decisions.
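
The selection rules for policy decisions described above can be illustrated with a minimal Python sketch. It is not the procedure used in the study (the documents were collected and screened for the article, and the analysis was run in R); the function name `select_decisions`, the dictionary fields and the English keyword strings are hypothetical and serve only to make the order of the filtering steps concrete.

```python
# Hypothetical sketch of the selection rules for policy decisions.
# Field names and keywords are illustrative assumptions, not the study's data model.
KEYWORDS = ("active labor market", "public school")


def select_decisions(documents):
    """Return discrete, de-duplicated decision units from policy documents.

    Each document is a dict with:
      'text'             - full document text (str)
      'decisions'        - list of decision statements found in the document
      'technical_change' - optional bool, True for purely technical law changes
      'epidemic_act'     - optional bool, True for COVID-19 Epidemic Act decisions
    """
    seen = set()
    units = []
    for doc in documents:
        text = doc["text"].lower()
        # Keep only documents that mention one of the two policy areas.
        if not any(keyword in text for keyword in KEYWORDS):
            continue
        # Discard technical changes and Epidemic Act decisions.
        if doc.get("technical_change", False) or doc.get("epidemic_act", False):
            continue
        # Split documents holding several decisions into discrete units.
        for decision in doc["decisions"]:
            # Normalize case and whitespace so trivial duplicates collapse.
            key = " ".join(decision.lower().split())
            if key in seen:
                continue
            seen.add(key)
            units.append(decision)
    return units
```

In practice, deciding what counts as a duplicate or a technical change requires human judgement; the flags above simply stand in for that judgement.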

The selection criteria may have implications for the results. The strict delimitation of research publications possibly ignores evidentiary sources that could have informed policy decisions in the studied cases. For example, policy-makers may learn from international sources of evidence (Legrand Citation2012) or from interest groups (Flöthe Citation2020). Yet including international sources is difficult for two reasons. First, defining the boundaries for which international actors to include and identifying important actors from other countries is challenging. Second, restricted access to international journals or other knowledge outlets may limit their availability to Danish policy-makers. It would have been possible to include publications from interest groups; yet not all of these publications would satisfy the definition of “evidence” employed in the study.

The selection criteria might also affect the observed levels of evidence impact. Since the collection exclusively targeted research within the ministries' “knowledge horizons”, the observed impact levels might be higher than in other studies investigating the role of evidence in policy-making. Future studies using this matching method would benefit from including a wider range of knowledge types to capture the role of research vis-à-vis other types of information, or from exploring the kinds of international sources influencing national-level policy decisions.

Applying the coding scheme

The applied coding scheme contained three types of codes: document attributes, thematic codes, and evidence‒policy matches (see Appendix A). The document attribute codes were used to extract descriptive information from the documents. The research publication attributes included their publication date, provider type, provider name and whether the study was commissioned by the ministry. To investigate ministerial priorities regarding the use of different types of evidence, the documents were further coded using a classification scheme of different evidence types with five categories based on study design. The scheme was inspired by existing literature (Nutley, Powell, and Davies Citation2013; Petticrew and Roberts Citation2003) but was also inductively inferred from the search for research publications (i.e. the available types of evidence in the two policy domains). The classification scheme is shown in Table 1.

Table 1. Classification of evidence types.

The policy decision attributes included their release date and whether the decision document included a direct reference to a research publication. Finally, the thematic codes were inductively inferred from systematically browsing the documents, noting down topics and condensing them into larger categories. Ten topics were recorded for each policy domain and validated by cross-checking descriptions on the ministry websites (BUVM Citation2023; STAR Citation2023b). The thematic codes supported the identification of matches between research publications and policy decisions.

The evidence‒policy matches were used to analyze the extent to which conclusions and recommendations from particular research publications align with subsequent policy decisions concerning the same topic (Jørgensen Citation2023; Knudsen Citation2018). The matching was based on whether (1) or not (0) a decision and a research publication addressed the same topic and target group and applied similar wording. A reference to a research publication in a decision was also coded as a match (31 instances). While direct references provide stronger proof of impact, they are assigned the same score as textual correspondences for the sake of simplicity. Contextual factors were considered when coding matches, including whether the research provider had been consulted in previous, equivalent policy decisions and whether the research publication had been commissioned. The example below illustrates the coding process: A report from the EVA (Citation2015) issued in 2015 showed that 25% of 8th-grade students were not ready for upper-secondary education. The report was coded as commissioned evidence, applying observational-descriptive methods, issued by a public research institution. In June 2017, the Ministry of Children and Education introduced a skills development programme for 8th-grade students not ready for upper-secondary education. The topic was coded as future education. The excerpt below derives from the programme announcement:

About one-quarter of all 8th-grade students are not ready for upper-secondary education, meaning that they have poor prerequisites for finishing public school with a result that can help them into upper-secondary education. A programme focusing on short courses for 8th-grade students who are not ready for upper-secondary education will contribute to putting matters right (BUVM Citation2017).
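The binary matching rule described above can be sketched in a few lines of Python. This is only an illustration of the decision logic: the coding in the study was carried out by human coders, and the class names, field names, identifier `EVA-2015` and the `is_match` function are hypothetical constructs introduced here. The judgement of "similar wording" is treated as an input supplied by the coder, not something the sketch computes.

```python
from dataclasses import dataclass


@dataclass
class Publication:
    pub_id: str          # hypothetical identifier
    topic: str           # one of the thematic codes
    target_group: str
    year: int


@dataclass
class Decision:
    topic: str
    target_group: str
    year: int
    similar_wording: bool      # judged manually by the coder
    cited_ids: tuple = ()      # publications referenced directly in the decision


def is_match(pub: Publication, dec: Decision) -> int:
    """Return 1 for an evidence-policy match and 0 otherwise."""
    if dec.year < pub.year:
        return 0  # only decisions made after publication can reflect it
    if pub.pub_id in dec.cited_ids:
        return 1  # a direct reference counts as a match
    same_subject = (pub.topic == dec.topic
                    and pub.target_group == dec.target_group)
    return int(same_subject and dec.similar_wording)


# The EVA example from the text, with assumed field values.
eva_report = Publication("EVA-2015", "future education",
                         "8th-grade students not ready for upper-secondary education",
                         2015)
programme = Decision("future education",
                     "8th-grade students not ready for upper-secondary education",
                     2017, similar_wording=True)
print(is_match(eva_report, programme))
```

Direct references and textual correspondences both return 1 here, mirroring the choice to give them the same score.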

Analysing the results

After coding the 1159 documents, the data were exported into R (version 4.2.2) for statistical analysis. The analysis included descriptive statistics and tests for differences between policy domains and different evidence types using Pearson χ² tests. Linear probability models were further estimated to examine variation between policy domains and evidence types (main parameters of interest), controlling for potential confounding factors, including time, provider type, the number of methods per research publication, and whether the research publications were commissioned. Aggregate levels of evidence impact were measured as the share of research publications matching one or more subsequent policy decisions. The measure produces a value between 0 and 1, accounting for how often available and relevant research publications have been reflected in subsequent policy decisions.
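The aggregate impact measure can be illustrated with a minimal Python sketch. The function name `impact_share` and the toy list of match counts are assumptions made for illustration; they are not data from the study, whose analysis was run in R.

```python
def impact_share(matches_per_publication):
    """Share of publications that match at least one subsequent decision.

    `matches_per_publication` holds, for each research publication,
    the number of policy decisions it was matched with.
    """
    if not matches_per_publication:
        raise ValueError("at least one publication is required")
    matched = sum(1 for n in matches_per_publication if n >= 1)
    return matched / len(matches_per_publication)


# Hypothetical toy data: eight publications, three of them matched.
toy_counts = [0, 2, 0, 1, 0, 0, 3, 0]
print(impact_share(toy_counts))  # 3 of 8 publications -> 0.375
```

A publication matching several decisions counts once in this measure, which is why the result always lies between 0 and 1.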

Findings

First, a descriptive analysis of the evidence types available to the two ministries and whether they are reflected in subsequent policy decisions is performed. The reflection of evidence in ALMP and public school policy decisions is used as an indicator of ministry use of evidence. Second, linear probability models are used to compare impact levels between policy domains and evidence types.

Available types of evidence and how they are reflected in policy decisions

Table 2 displays descriptive information about the 588 research publications in the dataset, representing the evidence types available to the ministries regarding ALMP and public school policy. Following the document collection criteria, the research publications were considered both accessible to and relevant for the ministries under study. Table 2 shows how 246 publications on ALMP and 342 publications on public school policy have been available from 2015–2021. There is no substantial policy domain variation concerning the study designs applied in the research publications, besides a larger share of systematic reviews in the employment domain (16.7%) than in education (7.3%). The systematic reviews on active labor market measures mainly derive from the Ministry of Employment’s evidence bank “Job Effects”, which accumulates causal effect evidence about labor market measures (STAR Citation2023c), while most RCTs and quasi-experimental studies are published by consultancy firms, universities or the Danish Centre for Social Science Research (VIVE). VIVE has also published most of the experimental studies in the education domain. VIVE is a Danish semi-public research organization, which has played a central role in evaluating major employment and education reforms (e.g. Nielsen et al. Citation2020; Pedersen et al. Citation2019; Thuesen, Bille, and Pedersen Citation2017).

Table 2. Available evidence in the two policy domains.

Table 2 displays how 74.8% of the research publications about ALMP have been commissioned by the Ministry of Employment. In the education domain, the Ministry of Children and Education has commissioned a slight majority of the research publications (56.7%). In general, an extensive amount of evidence has been commissioned, which indicates substantial interest in the ministries to accumulate research-based knowledge, as similarly emphasized in the existing literature on the Danish context (e.g. Christensen, Gornitzka, and Holst Citation2017; Rambøll Citation2015).

Table 3 displays the distribution of matched and unmatched research publications across policy domains and different evidence types (see the corresponding results for each policy domain in Appendices B–C). Although significant only at a 10% level (p = 0.054), the results show a noticeable difference between the distribution of matched and unmatched research publications in the two policy domains: A larger share of the available research publications matches a subsequent ALMP decision (29.3%) compared to public school policy decisions (21.9%). Looking across the two policy domains, the share of research publications matching a subsequent policy decision is 25%. While the lack of studies employing similar measures of evidence impact makes it difficult to compare the results directly to previous research, one-fourth of available research publications being reflected in a subsequent policy decision represents a substantial impact of evidence on policy. Yet it is essential to note that the dataset only contains research publications sampled “close” to the ministries and that 64.3% were commissioned. Relatively high levels of evidence impact were thus expected.
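The domain comparison can be retraced with a short Python sketch of a Pearson χ² test on a 2×2 table. Two assumptions are made loudly here. First, the cell counts are back-calculated from the reported shares (29.3% of 246 is about 72 matched ALMP publications; 21.9% of 342 is about 75 matched public school publications) and are not taken from the article's tables. Second, the sketch applies Yates' continuity correction, which is the default for 2×2 tables in R's `chisq.test`; the article does not state whether the correction was used. The function name `chi2_2x2` is hypothetical.

```python
import math


def chi2_2x2(a, b, c, d, yates=True):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]] (df = 1)."""
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Continuity correction; the adjusted term is not allowed to go negative.
        diff = max(0.0, diff - n / 2)
    stat = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Survival function of the chi-square distribution with one degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Assumed counts, back-calculated from the reported percentages.
almp_matched, almp_unmatched = 72, 246 - 72
school_matched, school_unmatched = 75, 342 - 75

stat, p_value = chi2_2x2(almp_matched, almp_unmatched,
                         school_matched, school_unmatched)
print(round(stat, 2), round(p_value, 3))
```

Under these assumptions the corrected test gives a p-value close to the reported 0.054, while the uncorrected statistic is somewhat larger, so the choice of correction matters for a result this near the 5% threshold.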

Table 3. Distribution of matched and unmatched research publications.

Regarding evidence types, Table 3 shows a significant difference (p = 0.01) between the distributions of matched and unmatched research publications. This is due to a larger share of systematic reviews and observational-analytic studies with a match compared to observational-descriptive studies and qualitative studies. Hence, there is a general tendency towards prioritizing quantitative studies to inform policy decisions and to some extent also evidence of causal effect. The match shares, however, are not significantly higher for RCTs/quasi-experimental studies than for observational-analytic studies or observational-descriptive studies. Nevertheless, interesting contrasts between the two policy domains and ministries appear when examining them separately, as described below.

Impact probabilities across policy domains and evidence types

This section presents findings from linear probability models using evidence‒policy matches as the dependent variable; policy domain and evidence types as the main parameters of interest; and fixed effects for provider types, whether the research publications were commissioned, the number of methods per research publication and publication years (see Appendix D for corresponding results using logistic regression). Table 4 shows descriptive statistics about the dataset. Contrary to the tables presented in the above section, this table shows the total number of matches and non-matches. Research publications matching more than one decision thus appear as multiple observations, thereby resulting in a total of 865 observations across the two policy domains.

Table 4. Descriptive statistics for all variables.

Table 5 displays correlations between evidence‒policy matches, policy domains and evidence types (see the full regression table in Appendix E). Model 1 shows how research publications about ALMP have a significantly higher probability of matching with a subsequent policy decision (0.158, SE = 0.039, p < 0.001) compared to research publications relating to public school policy. Model 2 displays how systematic reviews (0.330, SE = 0.053, p < 0.001) and observational-analytic studies (0.190, SE = 0.055, p = 0.001) have significantly higher probabilities of matching with a policy decision compared to qualitative studies. The results are consistent when changing the reference category to observational-descriptive studies. However, the results do not indicate a clear-cut relationship between evidence types and match probabilities in the sense that evidence of causal effect is consistently more prioritized than other types of evidence. For example, RCTs/quasi-experimental studies do not exhibit significantly higher probabilities than other evidence types. The findings from Models 1 and 2 remain significant after including both predictor variables in the same model (Model 3).
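How a linear probability model coefficient should be read can be shown with a minimal Python sketch. It covers only the simplest case, ordinary least squares with a binary outcome and a single dummy predictor, where the slope equals the difference in match shares between the two groups. The models reported in the article include further predictors and controls and were estimated in R; the function name `ols_simple` and the toy data below are hypothetical and do not reproduce the article's estimates.

```python
def ols_simple(x, y):
    """Ordinary least squares with an intercept and one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical toy data: 1 = ALMP, 0 = public school policy.
domain = [1] * 10 + [0] * 10
# 1 = observation is a match, 0 = no match (4 of 10 vs 2 of 10).
match = [1] * 4 + [0] * 6 + [1] * 2 + [0] * 8

intercept, slope = ols_simple(domain, match)
print(round(intercept, 3), round(slope, 3))
```

In this toy example the intercept is the match share in the reference group (0.2) and the slope is the percentage-point gap between the groups (0.4 minus 0.2), which is the sense in which a coefficient such as 0.158 is interpreted as a difference in match probability.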

Table 5. Linear probability models with matches as the dependent variable.

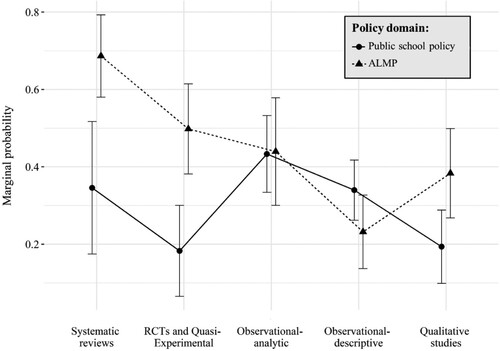

Overall, the results elucidate how the reflection of research publications in policy decisions has been higher for ALMP decisions than for public school policy decisions, and that systematic reviews and observational-analytic studies, respectively, are the most prioritized evidence types to inform policy decisions in the two ministries, although they do not constitute the largest share of available evidence in the studied policy domains. This indicates a stronger orientation towards evidence broadly in the Ministry of Employment than in the Ministry of Children and Education. To further study variations between the two ministries, interactions between policy domains and evidence types were added to the regression model, as displayed in Table 6 (see the full regression table in Appendix F).

Table 6. Linear probability model with interactions.

Based on the interaction model above, the accompanying figure displays the marginal match probabilities across policy domains and evidence types (see Appendix G for documentation). The figure illustrates how systematic reviews (0.71) and RCTs/quasi-experimental studies (0.53) have substantially higher match probabilities for ALMP decisions compared to public school policy decisions (0.35 and 0.19). The marginal probabilities for observational-analytic studies and observational-descriptive studies are similar in the two domains, while qualitative studies have higher match probabilities (0.44) in ALMP decisions than in public school policy decisions (0.12). These results confirm the expectation presented earlier: that evidence prioritization in the Ministry of Employment is more oriented towards evidence of causal effect compared to the Ministry of Children and Education. The formal evidence strategy in the Ministry of Employment, which is inspired by the EBPM notion and strongly values experimental designs for measuring causal effects, seems to have greatly influenced the prioritization of evidence to inform ALMPs in the studied period. By contrast, the impact of different types of evidence in the Ministry of Children and Education seems less determined by strict methodological priorities towards evidence of causal effect, thus reflecting a more inclusive position towards different evidence types. However, the results do not indicate that the more inclusive strategy in the Ministry of Children and Education has fostered a stronger reliance on observational-descriptive or qualitative studies to inform policy decisions compared to the Ministry of Employment.
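The way an interaction model yields a match probability for each combination of policy domain and evidence type can be sketched as follows. In a linear model without further controls, the prediction for a cell is the sum of the intercept, the domain effect, the evidence type effect and their interaction. The coefficients below are not the article's Table 6 estimates: they are back-solved from the reported marginal probabilities for qualitative studies (0.12 and 0.44) and systematic reviews (0.35 and 0.71) purely for illustration, with qualitative studies in public school policy as the assumed reference category. The function name `predicted_probability` and the dictionary layout are hypothetical.

```python
def predicted_probability(coefs, almp, evidence_type):
    """Predicted match probability for one domain-by-evidence-type cell."""
    p = coefs["intercept"]
    p += coefs["type"].get(evidence_type, 0.0)      # evidence type main effect
    if almp:
        p += coefs["almp"]                          # domain main effect
        p += coefs["almp_x_type"].get(evidence_type, 0.0)  # interaction term
    return p


# Illustrative coefficients, back-solved from reported marginal probabilities.
coefs = {
    "intercept": 0.12,                        # qualitative, public school
    "almp": 0.32,                             # 0.44 - 0.12
    "type": {"systematic review": 0.23},      # 0.35 - 0.12
    "almp_x_type": {"systematic review": 0.04},  # 0.71 - 0.12 - 0.32 - 0.23
}

for almp in (False, True):
    for etype in ("qualitative", "systematic review"):
        print(almp, etype, round(predicted_probability(coefs, almp, etype), 2))
```

The marginal probabilities reported in the article additionally average over the control variables, so this sketch shows the additive logic only.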

Discussion and conclusion

According to EBPM proponents, better and more effective policies are achievable if policy decisions are based on evidence of causal effect. This has inspired several governments to promote the reliance on such evidence in public policy-making. Yet scholars have repeatedly documented that basing policies on evidence is more complicated than assumed by the EBPM notion, rendering the influence of evidence highly uneven across policy domains and government institutions (Amara, Ouimet, and Landry Citation2004; Botterill and Hindmoor Citation2012; Greenberg, Michalopoulos, and Robins Citation2006; Jennings and Hall Citation2012; Landry, Lamari, and Amara Citation2003). Moreover, scholars have criticized the strong prioritization of causal effect evidence implied in the EBPM notion, arguing that democratic and responsible evidence use should draw on different research methods based on their appropriateness for policy needs rather than an unconditional preference for causal effect evidence, marginalizing other forms of evidence from consideration (Davies and Nutley Citation2002; Parkhurst Citation2017; Parkhurst and Abeysinghe Citation2016; Parsons Citation2002). However, empirical research underpinning these debates is limited. Systematic examinations of the impact of different types of evidence across policy domains and policy decisions are rare in the literature.

The present article has provided empirical knowledge to these debates by examining how different types of evidence are reflected in policy decisions in two Danish ministries through the analysis of 1159 research publications and policy decisions from 2015–2021. A document matching method was developed to enable a wide-scale analysis of evidence impact patterns, responding to biases and limitations in existing approaches to study evidence impact (Christensen Citation2023). The analysis results indicate how evidence is a central asset for the Ministry of Employment and the Ministry of Children and Education: A large number of research publications related to ALMP (N = 246) and public school policy (N = 342) have been available to the ministries from 2015–2021, while 64.3% of this research has been commissioned by the ministries. The broad availability of research reflects the characterization of Denmark as a leading knowledge economy, as suggested by Christensen, Gornitzka, and Holst (Citation2017). The results reveal how 25% of the 588 research publications in the dataset are reflected in a subsequent decision, and the share is even higher for the Ministry of Employment (29.3%). While the results are not directly comparable to existing studies, which mainly rely on survey and interview methods (Christensen Citation2023), one-quarter of research finding its way into policy decisions represents a substantial contribution of evidence to policy. A likely explanation is that the studied ministries have high analytical capacities, including a skilled workforce, vast resources for research activities, as well as affiliated agencies and research organizations that regularly provide research and data.

Comparing two similar cases, the article expected to observe variations between the policy domains concerning the prioritization of different evidence types in policy decisions, shaped by differences in ministerial evidence strategies. The article expected that the evidence strategy adopted by the Ministry of Employment in 2012, which closely adheres to the EBPM notion, would motivate a stronger prioritization of causal effect evidence in policy decisions, while the more inclusive approach in the Ministry of Children and Education would place more equal weight on different evidence types. The results somewhat confirm these expectations. Marginal match probabilities across policy domains and evidence types indicate that causal effect evidence is more strongly prioritized in the Ministry of Employment compared to the Ministry of Children and Education; the match probabilities for systematic reviews and RCTs/quasi-experimental studies are significantly higher in ALMP decisions than in public school policy decisions. As similarly observed by Andersen (Citation2020), the adoption of an evidence strategy by the Ministry of Employment has motivated a strong orientation towards research designs that document the causal effects of policies. The results further confirm that the reflection of evidence in policy decisions in the Ministry of Children and Education is less systematically oriented towards experimental study designs; yet the inclusive approach has not led to significantly higher match probabilities for other evidence types compared to the Ministry of Employment. Drawing on a study of evidence use and methodological preferences among Norwegian policy workers conducted by Høydal and Tøge (Citation2021), the reason might be that, for example, descriptive and qualitative studies are more often used for enlightenment than for guiding policy decisions directly.

The findings of the present study are informative for discussions about EBPM and evidential priorities in government institutions. For example, the evidence strategy adopted by the Ministry of Employment appears to have fostered a strong reliance on causal effect evidence in ALMP decisions. As Parkhurst (Citation2017) has shown in relation to other policy contexts, this might be problematic, since an uncritical favouring of such evidence can generate issue bias, marginalizing certain perspectives and solutions from consideration during policy development and policy discussions. Accordingly, strong administrative preferences for causal effect evidence might privilege scientific ideals (e.g. laws, causality, and generalisability) at the expense of professional and experiential knowledge about meaning, process understanding, etc., which is essential to understand and address the complexity of many policy issues. For example, activation measures in the unemployment system can have different consequences for socio-economically advantaged and disadvantaged citizens (Dahler-Larsen Citation2017). As Sayer (Citation2020, 250) argues, focusing exclusively on the causal effects of policies may distract policy-makers from more complicated questions regarding public problems and solutions.

In the case of Danish ALMPs, an uncritical orientation towards causal effects may imply that decisions focusing on human capital development (skills enhancement, education) are systematically marginalized, as they have potential retainment effects and do not immediately decrease unemployment levels. By contrast, work-first approaches (rules, sanctions, benefit reductions) are ceteris paribus promoted, as they incentivise people to leave the system and thus reduce the number of people on unemployment benefits. While the latter might increase overall employment figures, the former is essential to support socially disadvantaged people in finding employment. Hence, a recent research report has shown how, despite declining unemployment rates in Denmark, economic costs in job centres have remained high as evidence-based activation measures have been ineffective in moving socially vulnerable and long-term unemployed citizens into employment (Amilon et al. Citation2022).

In Danish public school policy, the prioritization of evidence of causal effect appears less substantial. A reason might be that policy and practice development in the education domain has historically been strongly influenced by professional knowledge and autonomy. However, the results also show that observational-analytic studies appear highly prioritized in public school policy decisions, while recent ministry initiatives indicate a growing interest in causal effect measurements. For example, the Ministry of Children and Education has recently introduced a management tool, which uses causal effect evidence to underpin public budgeting decisions and compare the economic effects of policy options (REFUD Citation2023). Another recent initiative in the Ministry of Children and Education has sought to strengthen quantitative education research (EduQuant Citation2023). The implications of this increased prioritization of causal effect evidence are important. Krejsler (Citation2013) explains how EBPM, which has mainly been encouraged by governments and international agencies, resonates poorly with existing discourses among professionals in the education field. An increasing focus on quantitative measures of causal effect might clash with professional views on what constitutes quality in education. As teachers in Denmark are well-organized and their support is decisive for the implementation of policies, the Ministry of Children and Education might not be able to adopt an evidence strategy equivalent to that of the Ministry of Employment, as it could trigger substantial contestation. Instead, a more inclusive approach oriented towards different types of evidence has so far been adopted.

In summary, the article has provided new empirical knowledge on the impact of different types of evidence in government ministries. Developing and testing novel ways of measuring how different evidence types are reflected in policy decisions and documenting administrative priorities for different study designs is important for underpinning scholarly debates about methodological preferences and appropriate evidence for policy-making. The findings are further enlightening for policy-makers, as they document the extent to which policy decisions reflect available evidence. Since 64.3% of the research publications analyzed in the study have been commissioned by the ministries, it is critical to know whether these research publications actually find their way into policies.

The study has some limitations. First, the comparison of research publications and policy decisions only provides an approximation of whether different types of evidence are used in and impact policy as well as a simplified account of policy processes. This allowed for the analysis of a very high number of policy decisions to produce generalizable results about the impact of evidence in the two policy domains. However, the study provides limited information regarding how the research publications were interpreted by policy-makers, the function they played in the policy process or why some research publications were not used. Combining the results with qualitative interviews and surveys could offer insights into the reasons for relying on different sources of evidence (Cherney et al. Citation2015). Second, the study only considered research produced by actors “close” to the ministries, while other studies have documented a broader range of evidentiary sources used by policy-makers (Head Citation2008; Jennings and Hall Citation2012). For instance, it could have been relevant to include knowledge from interest organizations and trade unions, which play a key role in Danish politics. International evidence, such as research publications from neighbouring countries or international organizations, could equally be interesting. Future studies could also focus on examining other policy arenas, such as how different evidence types influence parliamentary debates, political communication or policy implementation processes. Finally, future studies should explore in more depth the features of the issues involved in different policy cases to evaluate the relative appropriateness of different evidence types for different policy needs. This would imply considering what is the “right” proportion of different evidence types vis-à-vis the nature and balance of decisions made by ministries. Such studies are important to advance the discussion about EBPM and evidence priorities in government institutions.

Acknowledgements

I would like to acknowledge Associate Professor Jesper Dahl Kelstrup, Associate Professor Kim Sass Mikkelsen, and Professor Catherine Lyall for their constructive feedback as well as Student Assistant Ida Marie Nyland Jensen for her contribution to the coding of documents.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Jonas Videbæk Jørgensen

Jonas Videbæk Jørgensen has a Ph.D. degree from the Department of Social Sciences and Business, Roskilde University, Denmark. He has a master’s degree in Sociology from the University of Copenhagen. His Ph.D. project investigates how ministries in Denmark accumulate, consider and utilise evidence in relation to employment and education policies.

Notes

1 The timeframe is expanded to include 2015 for research publications to capture research possibly influencing policy decisions and debates from 2016 onwards.

References

- Amara, Nabil, Mathieu Ouimet, and Réjean Landry. 2004. “New Evidence on Instrumental, Conceptual, and Symbolic Utilization of University Research in Government Agencies.” Science Communication 26 (1): 75–106. https://doi.org/10.1177/1075547004267491.

- Amilon, Anna, Helle Holt, Kurt Houlberg, Jonathan K. Jensen, Emilie H. Jonsen, Helle B. Kleif, Christian H. Mikkelsen, and Emmy H. Topholm. 2022. Jobcentrenes Beskæftigelsesindsats. Copenhagen: VIVE – The Danish Center for Social Science Research.

- Andersen, Niklas A. 2020. “The Constitutive Effects of Evaluation Systems: Lessons from the Policymaking Process of Danish Active Labour Market Policies.” Evaluation 26 (3): 257–274. https://doi.org/10.1177/1356389019876661.

- Andersen, Niklas A., and Katherine Smith. 2022. “Evidence-Based Policy-Making.” In De Gruyter Handbook of Contemporary Welfare States, edited by Bent Greve, 29–44. Berlin: De Gruyter.

- Ansell, Christopher, and Robert Geyer. 2017. “‘Pragmatic Complexity’ A New Foundation for Moving Beyond ‘Evidence-Based Policy Making’?” Policy Studies 38 (2): 149–167.

- Baron, Jon. 2018. “A Brief History of Evidence-Based Policy.” The ANNALS of the American Academy of Political and Social Science 678 (1): 40–50. https://doi.org/10.1177/0002716218763128.

- Beyer, Janice M. 1997. “Research Utilization: Bridging a Cultural Gap Between Communities.” Review of Policy Research 6 (1): 17–22.

- Blum, Sonja, and Valérie Pattyn. 2022. “How Are Evidence and Policy Conceptualised, and How Do They Connect? A Qualitative Systematic Review of Public Policy Literature.” Evidence & Policy 18 (3): 563–582. https://doi.org/10.1332/174426421X16397411532296.

- Boaz, Annette, Huw T.O. Davies, Alec Fraser, and Sandra M. Nutley. 2019. What Works Now? Evidence-Informed Policy and Practice. Bristol: Bristol University Press.

- Boaz, Annette, and Sandra M. Nutley. 2019. “Using Evidence.” In What Works Now? Evidence-Informed Policy and Practice, edited by Annette Boaz, Huw T.O. Davies, Alec Fraser, and Sandra M. Nutley, 251–278. Bristol: Policy Press.

- Borgerson, Kirstin. 2009. “Valuing Evidence: Bias and the Evidence Hierarchy of Evidence-Based Medicine.” Perspectives in Biology and Medicine 52 (2): 218–233. https://doi.org/10.1353/pbm.0.0086.

- Boswell, Christina. 2009. The Political Uses of Expert Knowledge: Immigration Policy and Social Research. Cambridge, UK: Cambridge University Press.

- Boswell, Christina. 2015. “Cultures of Knowledge Use in Policymaking: The Functions of Research in German and UK Immigration Policy.” In Integrating Immigrants in Europe, edited by Peter Scholten, Han Entzinger, Rinus Penninx, and Stijn Verbeek, 19–38. Cham: Springer.

- Botterill, Linda C., and Andrew Hindmoor. 2012. “Turtles All the Way Down: Bounded Rationality in An Evidence-Based Age.” Policy Studies 33 (5): 367–379. https://doi.org/10.1080/01442872.2011.626315.

- Bredgaard, Thomas, and Stine Rasmussen. 2022. Dansk Arbejdsmarkedspolitik. Edited by Thomas Bredgaard and Stine Rasmussen. 3rd ed. Copenhagen: Djøf Publishing.

- BUVM (Ministry of Children and Education). 2017. “Vær med til at afprøve turboforløb for fagligt udfordrede elever.” Accessed March 27, 2023. https://www.uvm.dk/aktuelt/nyheder/uvm/2017/jun/170619-vaer-med-til-at-afproeve-turboforloeb-for-fagligt-udfordrede-elever.

- BUVM (Ministry of Children and Education). 2023. “Folkeskolen.” Accessed March 27, 2023. https://www.uvm.dk/folkeskolen.

- Cairney, Paul. 2012. Understanding Public Policy. Basingstoke: Palgrave Macmillan.

- Cairney, Paul. 2016. The Politics of Evidence-Based Policy Making. London: Palgrave Macmillan.

- Cherney, Adrian, Brian W. Head, Jenny Povey, Michele Ferguson, and Paul Boreham. 2015. “Use of Academic Social Research by Public Officials: Exploring Preferences and Constraints That Impact on Research Use.” Evidence & Policy 11 (2): 169–188. https://doi.org/10.1332/174426514X14138926450067.

- Christensen, Johan. 2021. “Expert Knowledge and Policymaking: A Multi-Disciplinary Research Agenda.” Policy & Politics 49 (3): 455–471. https://doi.org/10.1332/030557320X15898190680037.

- Christensen, Johan. 2023. “Studying Expert Influence: A Methodological Agenda.” West European Politics 46 (3): 600–613. https://doi.org/10.1080/01402382.2022.2086387.

- Christensen, Johan, Åse Gornitzka, and Cathrine Holst. 2017. “Knowledge Regimes in the Nordic Countries.” In The Nordic Models in Political Science: Challenged, But Still Viable?, edited by Oddbjørn Knutsen, 239–252. Oslo: Fagbokforlaget.

- Christensen, Johan, and Ronen Mandelkern. 2022. “The Technocratic Tendencies of Economists in Government Bureaucracy.” Governance 35 (1): 233–257. https://doi.org/10.1111/gove.12578.

- Clarence, Emma. 2002. “Technocracy Reinvented: The New Evidence Based Policy Movement.” Public Policy and Administration 17 (3): 1–11. https://doi.org/10.1177/095207670201700301.

- Cozzens, Susan, and Michele Snoek. 2010. “Knowledge to Policy Contributing to the Measurement of Social, Health, and Environmental Benefits.” Paper Prepared for the Workshop on the Science of Science Measurement, Washington, DC, December 2–3, 2010.

- Dahler-Larsen, Peter. 2017. “Critical Perspectives on Using Evidence in Social Policy.” In Handbook of Social Policy Evaluation, edited by Bent Greve, 242–262. Cheltenham: Edward Elgar.

- Davies, Huw T.O., and Sandra M. Nutley. 2002. “Evidence-Based Policy and Practice: Moving from Rhetoric to Reality.” Discussion Paper, St. Andrews, UK: University of St. Andrews.

- Daviter, Falk. 2015. “The Political Use of Knowledge in the Policy Process.” Policy Sciences 48 (4): 491–505. https://doi.org/10.1007/s11077-015-9232-y.

- EduQuant. 2023. “EduQuant. Research Unit for Quantitative Research on Children and Education.” Accessed March 27, 2023. https://www.economics.ku.dk/research/externally-funded-research_new/uddankvant/.

- European Commission. 2015. Strengthening Evidence Based Policy Making Through Scientific Advice. Brussels: Research and Innovation, European Commission.

- EVA (Danish Evaluation Institute). 2015. UPV i 8. klasse. Deskriptiv analyse af uddannelsesparathedsvurderinger i 8. klasse. Copenhagen: Danish Evaluation Institute.

- Flöthe, Linda. 2020. “Representation Through Information? When and Why Interest Groups Inform Policymakers About Public Preferences.” Journal of European Public Policy 27 (4): 528–546. https://doi.org/10.1080/13501763.2019.1599042.

- French, Richard D. 2019. “Is It Time to Give Up on Evidence-Based Policy? Four Answers.” Policy & Politics 47 (1): 151–168. https://doi.org/10.1332/030557318X15333033508220.

- Greenberg, David H., Charles Michalopoulos, and Philip K. Robins. 2006. “Do Experimental and Non-Experimental Evaluations Give Different Answers About the Effectiveness of Government-Funded Training Programs?” Journal of Policy Analysis and Management 25 (3): 523–552. https://doi.org/10.1002/pam.20190.

- Hansen, Hanne F., and Olaf Rieper. 2010. “The Politics of Evidence-Based Policy-Making: The Case of Denmark.” German Policy Studies 6 (2): 87–112.

- Hayden, Carol, and Craig Jenkins. 2014. “‘Troubled Families’ Programme in England: ‘wicked Problems’ and Policy-Based Evidence.” Policy Studies 35 (6): 631–649. https://doi.org/10.1080/01442872.2014.971732.

- Head, Brian W. 2008. “Three Lenses of Evidence-Based Policy.” Australian Journal of Public Administration 67 (1): 1–11. https://doi.org/10.1111/j.1467-8500.2007.00564.x.

- Head, Brian W. 2016. “Toward More ‘Evidence-Informed’ Policy Making?” Public Administration Review 76 (3): 472–484. https://doi.org/10.1111/puar.12475.

- Head, Brian W., Michele Ferguson, Adrian Cherney, and Paul Boreham. 2014. “Are Policy-Makers Interested in Social Research? Exploring the Sources and Uses of Valued Information among Public Servants in Australia.” Policy and Society 33 (2): 89–101. https://doi.org/10.1016/j.polsoc.2014.04.004.

- Howlett, Michael. 2009. “Policy Analytical Capacity and Evidence-Based Policy-Making: Lessons from Canada.” Canadian Public Administration 52 (2): 153–175. https://doi.org/10.1111/j.1754-7121.2009.00070_1.x.

- Howlett, Michael. 2015. “Policy Analytical Capacity: The Supply and Demand for Policy Analysis in Government.” Policy and Society 34 (3–4): 173–182. https://doi.org/10.1016/j.polsoc.2015.09.002.

- Høydal, Øyunn S., and Anne G. Tøge. 2021. “Evaluating Social Policies: Do Methodological Approaches Determine the Policy Impact?” Social Policy & Administration 55 (5): 954–967. https://doi.org/10.1111/spol.12682.

- Ingold, Jo, and Mark Monaghan. 2016. “Evidence Translation: An Exploration of Policy Makers’ Use of Evidence.” Policy & Politics 44 (2): 171–190. https://doi.org/10.1332/147084414X13988707323088.

- Jennings, Edward T., and Jeremy L. Hall. 2012. “Evidence-Based Practice and the Use of Information in State Agency Decision Making.” Journal of Public Administration Research and Theory 22 (2): 245–266. https://doi.org/10.1093/jopart/mur040.

- Jørgensen, Jonas V. 2023. “Knowledge Utilisation Analysis: Measuring the Utilisation of Knowledge Sources in Policy Decisions.” Evidence & Policy 20 (2): 205–225. https://doi.org/10.1332/174426421X16917585658729.

- Knott, Jack, and Aaron Wildavsky. 1980. “If Dissemination Is the Solution, What Is the Problem?” Knowledge 1 (4): 537–578. https://doi.org/10.1177/107554708000100404.

- Knudsen, Søren B. 2018. “Developing and Testing a New Measurement Instrument for Documenting Instrumental Knowledge Utilisation: The Degrees of Knowledge Utilization (DoKU) Scale.” Evidence & Policy 14 (1): 63–80. https://doi.org/10.1332/174426417X14875895698130.

- Krejsler, John B. 2013. “What Works in Education and Social Welfare? A Mapping of the Evidence Discourse and Reflections upon Consequences for Professionals.” Scandinavian Journal of Educational Research 57 (1): 16–32. https://doi.org/10.1080/00313831.2011.621141.

- Krippendorff, Klaus. 2013. Content Analysis: An Introduction to Its Methodology. Thousand Oaks, CA: SAGE Publications.

- Landry, Réjean, Moktar Lamari, and Nabil Amara. 2003. “The Extent and Determinants of the Utilization of University Research in Government Agencies.” Public Administration Review 63 (2): 192–205. https://doi.org/10.1111/1540-6210.00279.

- Legrand, Timothy. 2012. “Overseas and Over Here: Policy Transfer and Evidence-Based Policy-Making.” Policy Studies 33 (4): 329–348. https://doi.org/10.1080/01442872.2012.695945.

- Lijphart, Arend. 1971. “Comparative Politics and the Comparative Method.” American Political Science Review 65 (3): 682–693. https://doi.org/10.2307/1955513.

- Liket, Ken. 2017. “Challenges for Policy-Makers: Accountability and Cost-Effectiveness.” In Handbook of Social Policy Evaluation, edited by Bent Greve, 183–202. Cheltenham: Edward Elgar.

- Lingard, Bob. 2011. “Policy as Numbers: Ac/Counting for Educational Research.” The Australian Educational Researcher 38: 355–382. https://doi.org/10.1007/s13384-011-0041-9.

- Lorenc, Theo, Elizabeth F. Tyner, Mark Petticrew, Steven Duffy, Fred P. Martineau, Gemma Phillips, and Karen Lock. 2014. “Cultures of Evidence Across Policy Sectors: Systematic Review of Qualitative Evidence.” European Journal of Public Health 24 (6): 1041–1047. https://doi.org/10.1093/eurpub/cku038.

- Monaghan, Mark, and Jo Ingold. 2018. “Policy Practitioners’ Accounts of Evidence-Based Policy Making: The Case of Universal Credit.” Journal of Social Policy 48 (2): 1–18.

- Newman, Joshua, Adrian Cherney, and Brian W. Head. 2017. “Policy Capacity and Evidence-Based Policy in the Public Service.” Public Management Review 19 (2): 157–174. https://doi.org/10.1080/14719037.2016.1148191.

- Nielsen, Chantal P., Vibeke M. Jensen, Mikkel G. Kjer, and Kasper M. Arendt. 2020. Elevernes Læring, Trivsel og Oplevelser af Undervisningen i Folkeskolen – En Evaluering af Udviklingen i Reformårene 2014–2018. Copenhagen: VIVE – The Danish Center for Social Science Research.

- Nutley, Sandra, Alison Powell, and Huw T.O. Davies. 2013. What Counts as Good Evidence. London: Alliance for Useful Evidence.

- Nutley, Sandra M., Isabel Walter, and Huw T.O. Davies. 2007. Using Evidence. How Research Can Inform Public Services. Bristol: Policy Press.

- OECD. 2017. “Conference Summary.” In OECD Conference: Governing Better Through Evidence-Informed Policy Making. Paris: OECD.

- OECD. 2020. Education Policy Outlook Denmark. Paris: Organisation for Economic Co-operation and Development (OECD).

- Oliver, Kathryn. 2022. “How Policy Appetites Shape and Are Shaped by Evidence Production and Use.” In Integrating Science and Politics for Public Health, edited by Patrick Fafard, Adèle Cassola, and Evelyne de Leeuw, 77–101. Cham: Palgrave Macmillan.

- Oliver, Kathryn, Simon Innvar, Theo Lorenc, Jenny Woodman, and James Thomas. 2014. “A Systematic Review of Barriers to and Facilitators of the Use of Evidence by Policymakers.” BMC Health Services Research 14 (1): 1–12. https://doi.org/10.1186/1472-6963-14-1.

- Parkhurst, Justin. 2017. The Politics of Evidence: From Evidence-Based Policy to the Good Governance of Evidence. Abingdon, UK: Routledge.

- Parkhurst, Justin, and Sudeepa Abeysinghe. 2016. “What Constitutes ‘Good’ Evidence for Public Health and Social Policy-Making? From Hierarchies to Appropriateness.” Social Epistemology 30 (5–6): 665–679. https://doi.org/10.1080/02691728.2016.1172365.

- Parkhurst, Justin, Ludovica Ghilardi, Jayne Webster, Jenna Hoyt, Jenny Hill, and Caroline A. Lynch. 2021. “Understanding Evidence Use from a Programmatic Perspective: Conceptual Development and Empirical Insights from National Malaria Control Programmes.” Evidence & Policy 17 (3): 447–466. https://doi.org/10.1332/174426420X15967828803210.

- Parsons, Wayne. 2002. “From Muddling Through to Muddling Up - Evidence Based Policy Making and the Modernisation of British Government.” Public Policy and Administration 17 (3): 43–60. https://doi.org/10.1177/095207670201700304.

- Pawson, Ray. 2002. “Evidence-Based Policy: In Search of a Method.” Evaluation 8 (2): 157–181. https://doi.org/10.1177/1358902002008002512.

- Pedersen, Niels J.M., Niels Ejersbo, Kurt Houlberg, Christophe Kolodziejczyk, and Nicolai Kristensen. 2019. Refusionsomlægning på Beskæftigelsesområdet – Økonomiske og Organisatoriske Konsekvenser. Copenhagen: VIVE – The Danish Center for Social Science Research.

- Petticrew, Mark, and Helen Roberts. 2003. “Evidence, Hierarchies, and Typologies: Horses for Courses.” Journal of Epidemiology & Community Health 57: 527–529. https://doi.org/10.1136/jech.57.7.527.

- Petticrew, Mark, Margaret Whitehead, Sally J. Macintyre, Hilary Graham, and Matt Egan. 2004. “Evidence for Public Health Policy on Inequalities: 1: The Reality According to Policymakers.” Journal of Epidemiology & Community Health 58 (10): 811–816. https://doi.org/10.1136/jech.2003.015289.

- Rambøll. 2015. Den Forskningsbaserede Viden i Politikudviklingen. Rapport til Danmarks Forsknings- og Innovationspolitiske Råd. Copenhagen: Rambøll.

- Reed, Mark S. 2018. The Research Impact Handbook. 2nd ed. St John’s Well: Fast Track Impact.

- REFUD (Calculation Model for Education Investments). 2023. “REFUD. Regnemodel for uddannelsesinvesteringer.” Ministry of Children and Education. Accessed March 27, 2023. https://refud.dk/.

- Reid, Garth, John Connolly, Wendy Halliday, Anne Marie Love, Michael Higgins, and Anita MacGregor. 2017. “Minding the Gap: The Barriers and Facilitators of Getting Evidence Into Policy When Using a Knowledge-Brokering Approach.” Evidence & Policy 13 (1): 29–38. https://doi.org/10.1332/174426416X14526131924179.

- Riley, Barbara L., Alison Kernoghan, Lisa Stockton, Steve Montague, Jennifer Yessis, and Cameron D. Willis. 2018. “Using Contribution Analysis to Evaluate the Impacts of Research on Policy: Getting to ‘Good Enough’.” Research Evaluation 27 (1): 16–27. https://doi.org/10.1093/reseval/rvx037.

- Sanderson, Ian. 2006. “Complexity, ‘Practical Rationality’ and Evidence-Based Policy Making.” Policy & Politics 34 (1): 115–132. https://doi.org/10.1332/030557306775212188.

- Sayer, Philip. 2020. “A New Epistemology of Evidence-Based Policy.” Policy & Politics 48 (2): 241–258. https://doi.org/10.1332/030557319X15657389008311.

- Smith, Katherine, and Tina Haux. 2017. “Evidence-Based Policy-Making (EBPM).” In Handbook of Social Policy Evaluation, edited by Bent Greve, 141–160. Cheltenham: Edward Elgar.

- STAR (Danish Agency for Labour Market and Recruitment). 2022. “Årsrapport 2021 - Styrelsen for Arbejdsmarked og Rekruttering.” Accessed March 27, 2023. https://star.dk/om-styrelsen/publikationer/2022/05/%C3%A5rsrapport-2021-styrelsen-for-arbejdsmarked-og-rekruttering/.

- STAR (Danish Agency for Labour Market and Recruitment). 2023a. “Styrelsen for Arbejdsmarked og Rekrutterings strategi for arbejdet med viden.” Accessed March 27, 2023. https://www.star.dk/viden-og-tal/hvad-virker-i-beskaeftigelsesindsatsen/vidensstrategi/.

- STAR (Danish Agency for Labour Market and Recruitment). 2023b. “Indsatser og ordninger på beskæftigelsesområdet.” Accessed March 27, 2023. https://star.dk/indsatser-og-ordninger/.

- STAR (Danish Agency for Labour Market and Recruitment). 2023c. “Jobeffekter.dk – Studiesøgning.” Accessed March 27, 2023. https://www.jobeffekter.dk/studiesoegning/#/.

- STUK (National Agency for Education and Quality). 2022. “Styrelsen for Undervisning og Kvalitet – Årsrapport 2021.” Accessed March 27, 2023. https://www.uvm.dk/-/media/filer/stuk/pdf22/220426-stuk-aarsrapport.pdf.

- STUK (National Agency for Education and Quality). 2023. “Viden og Forskning.” Accessed November 16, 2023. https://www.stukuvm.dk/kvalitet-i-undervisningen/viden-og-forskning.

- Thuesen, Frederik, Rebekka Bille, and Mogens J. Pedersen. 2017. Styring af den kommunale beskæftigelsesindsats – Instrumenter, motivation og præstationer. Copenhagen: VIVE – The Danish Center for Social Science Research.

- Triantafillou, Peter. 2015. “The Political Implications of Performance Management and Evidence-Based Policymaking.” The American Review of Public Administration 45 (2): 167–181. https://doi.org/10.1177/0275074013483872.

- Van der Arend, Jenny. 2014. “Bridging the Research/Policy Gap: Policy Officials’ Perspectives on the Barriers and Facilitators to Effective Links Between Academic and Policy Worlds.” Policy Studies 35 (6): 611–630. https://doi.org/10.1080/01442872.2014.971731.

- Van Toorn, Georgia, and Leanne Dowse. 2016. “Policy Claims and Problem Frames: A Cross-Case Comparison of Evidence-Based Policy in an Australian Context.” Evidence & Policy 12 (1): 9–24. https://doi.org/10.1332/174426415X14253873124330.

- Vedung, Evert. 2007. “Policy Instruments: Typologies and Theories.” In Carrots, Sticks, and Sermons: Policy Instruments and Their Evaluation, edited by Marie-Louise Bemelmans-Videc, Ray C. Rist, and Evert Vedung, 21–58. Somerset, UK: Taylor and Francis.

- Weiss, Carol H. 1979. “The Many Meanings of Research Utilization.” Public Administration Review 39 (5): 426–431. https://doi.org/10.2307/3109916.

- Young, Ken, Deborah Ashby, Annette Boaz, and Lesley Grayson. 2002. “Social Science and the Evidence-Based Policy Movement.” Social Policy and Society 1 (3): 215–224. https://doi.org/10.1017/S1474746402003068.

- Zarkin, Michael. 2021. “Knowledge Utilization in the Regulatory State: An Empirical Examination of Schrefler’s Typology.” Policy Studies 42 (1): 24–41. https://doi.org/10.1080/01442872.2020.1772220.