ABSTRACT

Anti-malware software must be frequently updated in order to protect the system and the user from attack. Makers of this software must choose between interrupting the user to update immediately or allowing them to update later. In either case, assessing the content of the interruption may still require cognitive investment. However, if users can negotiate a delayed response to these interruptions, they can instead focus on their work. This paper experimentally examines the effect of immediate and negotiated interruptions on user decision time and decision accuracy in multiple-stage tasks. For complex tasks, decision performance is higher when the user can negotiate the onset of and response to interruptions. The option to defer response also results in greater subjective perceptions of control, improved task resumption and reduced feelings of interruption and distraction on the part of the user, even within a short period of time. These findings have practical implications for endpoint security and for other settings where there is a need to mitigate the effects of user interruptions from computer-mediated communication in complex task situations.

1. Introduction

In the next five minutes, some 2,400 new strains of malware will be discovered (McAfee Labs 2018a). This malware aims to discreetly infect and undermine the operational integrity of a user's computing environment, compromising the user's tasks, communications and confidential information, sometimes using seemingly innocuous messages to induce the user to inadvertently infect their own computer. At the desktop endpoint, the user's first line of defense against this malware is anti-malware software, also known as anti-virus or AV software, which must be kept up to date in order to effectively disrupt the myriad attack vectors of email, software and network vulnerabilities (Symantec Labs 2017). To this end, Sophos (2019, 13) argue,

in contemporary malware attacks, the problem is not limited to a small number of executable file types that must be observed, tracked, and have their behavior monitored. With a wide range of file types that include several ‘plain text’ scripts, chained in no particular order and without any predictability, the challenge becomes how to separate the normal operations of a computer from the anomalous behavior of a machine in the throes of a malware infection.

Managers turning to the research literature for insight into this problem may be disappointed. The vast majority of prior work on malware has examined user perceptions, damage resulting from infections, or organisational policies surrounding malware prevention. Very little work has examined the practical realities of how users defend themselves at the cognitive, operational level (e.g. Doherty and Tajuddin 2018). Users are typically not waiting to upgrade; rather, they are engrossed in other tasks. Hence, any realistic approach to modelling decision outcomes requires the user to be undertaking a primary task when responding to an anti-malware update request. However, most prior empirical research into the effects of interruptions on users' task performance (Avrahami and Hudson 2004; Cutrell, Czerwinski, and Horvitz 2001; Czerwinski, Cutrell, and Horvitz 2000a) has focused only on interruptions that demand an immediate response, with less emphasis on interruptions that allow users to delay their response. Knowledge of malware defense must therefore be balanced against an understanding of how users respond while they are completing their tasks.

In this paper, we model two modes of user interaction in the anti-malware context. In the first mode, users must respond immediately to an interruption. In the second mode, users can defer responding to the interruption. We examine these modes against three indicators of decision quality, namely decision comprehension time, decision response time and decision accuracy. Our findings indicate that anti-malware providers should encourage users to defer difficult decisions regarding anti-malware upgrades. The results of our modelling show that, in complex tasks, requiring an immediate response itself leads users to make worse decisions.

This research makes two contributions to knowledge. First, the rise in malware threats requires a greater understanding of the operational level of malware defense in order to mount an effective response to malware threats. Despite much work on malware, mostly at a technical level, very little work has focused on the cognitive response state in which the user interacts with their anti-malware software in its practical role as the user's endpoint defense. Prior research has shown that users underestimate infection risk (Menard, Gatlin, and Warkentin 2014; Teer, Kruck, and Kruck 2007), and to date no study has identified the cognitive implications of this perception at the endpoint security level. To fill this gap, our experimental setup emulates the user's day-to-day operating circumstances in the face of an anti-malware interruption. Second, we identify the role of negotiated interruptions in mitigating the disruptive effects of interruptions across simple and complex tasks. Our contribution in studying the anti-malware context is to identify the moderating effect of ongoing task complexity on interruptive effects, because users are typically engrossed in other tasks and only encounter the anti-malware software interruption incidentally. We incorporate both multiple stages and multiple interruptions in each primary task, together with changes in task complexity. In this regard, the work addresses calls from Basoglu, Fuller, and Sweeney (2009) and Gupta, Sharda, and Greve (2011) to investigate the effects of interruptions across tasks with varying levels of cognitive burden on users.

This paper proceeds as follows. The next section provides background information, followed by an overview of prior work on interruptions. This leads to the study’s research framework and hypotheses, followed by the research design and method. Analysis of the objective experiment and subjective questionnaire is then presented, followed by conclusions.

2. Endpoint security and anti-malware software

Operational security is an important issue for organisational and private user ICTs (Goode et al. 2015; Goode and Lacey 2011). Substantial prior research has highlighted the risks of malware threats to operational security. However, endpoint security from the perspective of the end user has received comparatively little attention in prior work (e.g. Doherty and Tajuddin 2018). To better understand current thinking regarding endpoint security in the end-user context, we searched for and reviewed all published journal articles that examined anti-malware and endpoint security at the user behaviour level. Table 1 presents the outcomes of this review.

Table 1. Prior studies on user behaviour with anti-malware and endpoint security.

The bulk of prior work has been either at a technical level or at the policy level. In the first group, research has been divided into two main streams: first, developing more effective anti-malware software by identifying the exploitation vectors of malware, and second, identifying new techniques for malware detection. These techniques have progressed from traditional techniques such as signature scanning (Liao and Wang 2006) and structural heuristics (Zenkin 2001) to more modern techniques such as data mining (Ye et al. 2017), machine learning (Milosevic, Dehghantanha, and Choo 2017), and behavioural analysis (Faruki et al. 2014). The majority of such work has employed archival data, such as malware signatures (Sukwong, Kim, and Hoe 2011), or simulations (Abazari, Analoui, and Takabi 2016) to test empirical models.

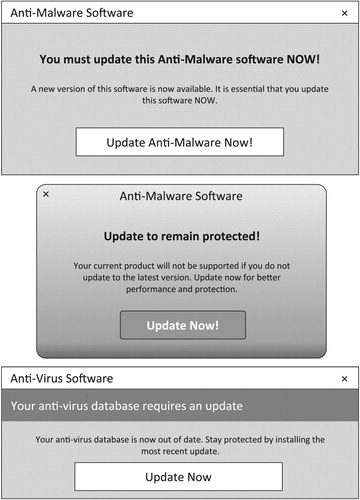

Research in this stream has identified a number of concepts that are relevant to this research. A principal concept underpinning this work is that malware evolves quickly to exploit newly identified vulnerabilities in software. Accordingly, anti-malware software also requires frequent updating in order to maintain a strong defense against this malware. At a technical level, this updating may involve alterations both to malware definition files and, more directly, to the anti-malware detection routines. As anti-malware software is itself often a malware target, most anti-malware software self-protects (Baskerville, Rowe, and Wolff 2018), so alterations to anti-malware applications require elevated user privileges in order to be applied, and these elevated privileges frequently require user intervention and permission to update. Figure 1 illustrates several stylized examples of anti-malware pop-up modal dialog windows that require a user's response before they can resume their tasks. In this stream of work, keeping anti-malware definitions and functionality up to date is an accepted and expected part of endpoint defense.

Research in the second group has focused on two main areas. First, a substantial amount of work has examined the development of organisational security policies, especially with regard to policy effectiveness, completeness and structure (Bonny, Goode, and Lacey 2015). A key goal of this body of work lies in identifying effective techniques for compelling anti-malware use within organisational ICT resources and their users. The second body of work in this stream has focused on assessing the degree of policy compliance among end users, management, operational staff and, to a lesser extent, home and private users. Surveys of end users (Anderson and Agarwal 2010; Dodel and Mesch 2018; McGill and Thompson 2017) and employees (Blythe and Coventry 2018; Chenoweth, Gattiker, and Corral 2019; Williams et al. 2014) have been the dominant approach to empirical testing within this stream of research.

In contrast to evidence from the first body of work, an undercurrent of this research is that there is a gap between organisational expectations regarding endpoint security and the degree to which users will adhere to endpoint defense policies. A core goal of these policies is to compel users to make good decisions regarding operational security in order to preserve operational assets: as a result, organisations strive to make their policies clearer (Herath and Rao 2009), more user-friendly (Höne and Eloff 2002; Safa, Von Solms, and Furnell 2016), for example by accommodating BYOD initiatives (Baillette, Barlette, and Leclercq-Vandelannoitte 2018), or more punitive by increasing penalties for non-compliance (Shropshire, Warkentin, and Johnston 2010). However, independently of the organisational context, private users also appear reluctant to adhere closely to endpoint security directives, such as those established by internet service providers (ISPs).

In both the organisational and private contexts, endpoint policies appear necessary because users may make operational decisions that benefit their immediate user outcomes, rather than those that relate to more distant possibilities such as a potential malware infection or a data breach (Goode et al. 2017). However, very little work has offered an explanation for this reluctance at a cognitive decision level. There is hence an opportunity to study user decision making in the face of software interruptions while the user is involved in other tasks. A study of these interruptions must therefore take the user's other work processes into account when analysing their disruptive effects on decision making.

3. Interruptions

Interruptions are unpredictable stressors that require additional user effort and attention to address (Galluch, Grover, and Thatcher 2015). Interruptions are typically considered disruptive, hindering task performance and effectiveness, especially for interruptions that use the same sensory channels as the individual's working memory (Jett and George 2003; Nystrom et al. 2000). Interruptions test the user's cognitive abilities by forcing them to switch their attention from their primary tasks to another task (Bailey, Konstan, and Carlis 2001; Eyrolle and Cellier 2000; Hodgetts and Jones 2007).

Although the extant literature has attributed differences in the effects of user interruptions on task performance to the type of interruption and to tasks that require varying levels of cognitive processing, few empirical studies have tested these effects directly. To review this evidence, we identified prominent empirical studies of computer-mediated interruptions published in journals and leading conferences. Table 2 summarises the empirical studies on the effects of interruption in computer-mediated contexts.

Table 2. Prior empirical studies on computer-mediated interruption.

Two types of interruptions emerge from prior literature, relating to the onset of the interruption and the amount of control available to the user over when and how to respond (Adamczyk and Bailey 2004; Hodgetts and Jones 2007; Robertson et al. 2004). On the one hand, an immediate interruption is an event that demands user attention and expects the user to suspend their tasks and interact with it at that time. Immediate interruptions strain people's limited cognitive resources by drawing attention immediately (such as an urgent pop-up window that requires a quick response) (Altmann and Trafton 2004). On the other hand, a negotiated interruption gives a user control over when or whether to deal with the interruption, thus minimising disruptive effects (McFarlane and Latorella 2002).

Higher levels of concentration or cognitive effort involved in problem-solving tasks exacerbate the disruptive effects of interruptions (Eyrolle and Cellier 2000; Solingen, Berghout, and Latum 1998). Users will experience greater disruptive effects of interruptions on their task performance when they undertake complex tasks, due to their increased stress and inability to integrate a high number of information cues for accurate decision-making.

Interruptions can adversely affect tasks that require higher levels of concentration (Solingen, Berghout, and Latum 1998), are more difficult (Gillie and Broadbent 1989), or require greater involvement (Franke, Daniels, and McFarlane 2002).

When interruptions occur during simple tasks, stress increases and attention narrows, resulting in the exclusion of irrelevant information cues and thus facilitating decision performance. However, as task complexity increases (Kelton, Pennington, and Tuttle 2010; Li et al. 2011), people's available cognitive resources decrease and attention narrows, resulting in the exclusion of some information cues that may be needed to complete the task successfully. Adverse effects of these interruptions may occur at different stages (phases) of the task being undertaken.

3.1. Interruptions in the anti-malware context

Interruptions from anti-malware software are likely to arise while the user is engaged in other tasks. When anti-malware software issues an update dialog box that requires immediate decision-making (like those shown in Figure 1), the level of complexity in the interruption will be an important factor in determining how disruptive the interruption is. If the interruption allows the user to delay their response, the user may better manage their decision-making strategies to suit the current task, thereby minimising disruptive effects on their other work.

The anti-malware update process consists of two principal stages. The first stage is an announcement stage, which presents the user with a message calling them to action. This stage requires the user to understand and process the anti-malware announcement. The second stage is an action stage in which the user elects to obey or disobey the message. This stage requires the user to review their available options, given the content of the announcement, and to select their choice. Responding to an anti-malware dialog box is therefore likely to involve two decision stages: decision comprehension (understanding and processing the announcement) and decision response (reviewing and selecting an option).

4. Research model and hypotheses

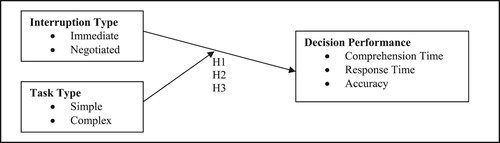

Synthesising prior literature, we expect the effects of user interruptions to differ depending on whether users attend to interruptions immediately or negotiate a delayed response to them. We further expect complex tasks to exacerbate the differential effects of both interruption types on users' decision performance. The research posits that immediate interruptions are more disruptive than negotiated interruptions to users' decision performance in complex tasks, but that there are no differential effects between the two types of interruptions on users' decision performance in simple tasks. Figure 2 shows the research model.

Three hypotheses arise from these theoretical perspectives and are empirically tested in this study. First, interruptions may affect the overall time taken by the user to solve the problem and commit to a course of action (Marsden, Pakath, and Wibowo 2002). During the decision comprehension stage, the interruption may extend the time taken by a participant to identify and understand a problem task (Beynon, Rasmequan, and Russ 2002). This leads to the following hypotheses concerning task complexity.

H1: Task complexity moderates the effects of immediate interruptions and negotiated interruptions on users’ decision comprehension time

H2: Task complexity moderates the effects of immediate interruptions and negotiated interruptions on users’ decision response time

H3: Task complexity moderates the effects of immediate interruptions and negotiated interruptions on users’ decision accuracy

5. Research design and method

As in prior studies of interruption, we used a controlled laboratory experiment to test our hypotheses. As in prior studies of anti-malware, we then used a survey for post-hoc testing. Details of participants, measures and procedure are provided below.

5.1. Participants

A sample size of 40 participants (Cohen's d = 0.8, α = 0.05, power level 1 − β = 0.8) was calculated to be sufficient, and is similar to the sample sizes of previous experiments (e.g. McFarlane 1998). To ease cognitive dissonance and provide a more pragmatic experience for participants (Kim, Barua, and Whinston 2002), each participant received a fixed $10 cash payment as recompense for their time, of which they were aware before attending the experiment.

The 25 male and 15 female participants were between the ages of 18 and 37, with a mean age of 22 years. All participants were undergraduate students studying Information Systems, with skills ranging from intermediate to expert usage of computers and reasonable proficiency with computing tasks and general application software such as email.

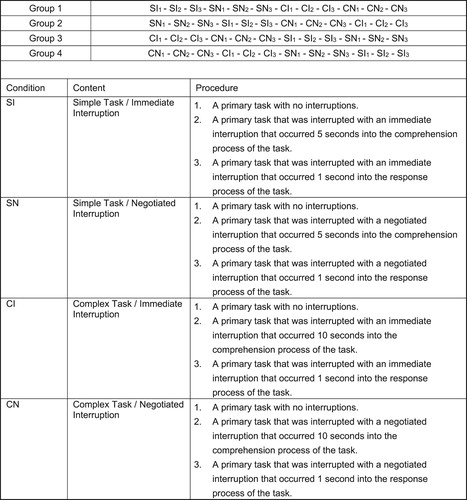

Each participant was randomly assigned to one of four treatment order groups. Each of the four treatments was administered with a discrete combination of the two independent variables: interruption type (immediate and negotiated) and task complexity (simple and complex). Participants received all four treatments, and their decision performance was measured under the four treatment conditions. As shown in Table 3, a Kruskal–Wallis test revealed no significant differences in gender, age, level of proficiency and degree of involvement in computing tasks among participants across the four order groups.

Table 3. Means and standard deviations for participants’ demographics across order groups.
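The order-group comparison above rests on the Kruskal–Wallis H statistic, which ranks all observations together and compares the mean ranks of the groups. The sketch below is a minimal standard-library implementation with the usual tie correction; it is not the authors' analysis code, which is not described. Under the null hypothesis, H is approximately chi-squared with k − 1 degrees of freedom for k groups (three for the four order groups).

```python
from itertools import chain


def average_ranks(values):
    """Assign 1-based ranks, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H with the standard tie correction.

    Undefined (division by zero) when every observation is tied.
    """
    pooled = list(chain.from_iterable(groups))
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    total = 0.0
    start = 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12.0 / (n_total * (n_total + 1)) * total - 3 * (n_total + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N) over tie groups.
    tie_counts = {}
    for v in pooled:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in tie_counts.values())
    return h / (1 - ties / (n_total ** 3 - n_total))
```

Ordinal items such as proficiency ratings produce many ties, which is why the sketch averages tied ranks and applies the correction rather than assuming distinct values.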

5.2. Materials and measures

The tasks were performed on a standard PC (LCD monitor, keyboard and mouse) to emulate a standard home or office operating environment. The tasks were run in a single browser window in the centre of the screen. Each interruption was triggered as a pop-up window in the style of an anti-virus update notification. A script controlled how far the participant could progress before fully completing the current set of tasks, and a script captured participant responses to a database unobtrusively in the background.
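The logging scripts are not described in detail. As a hedged illustration only, the sketch below assumes the script recorded a timestamp when each stage page was shown and when it was submitted, and derives per-stage durations from those events. The `PageEvent` record, its field names and the event labels are hypothetical, and whether the study's comprehension and response times included time spent on interruptions is not stated, so this sketch simply measures elapsed time per page.

```python
from dataclasses import dataclass


@dataclass
class PageEvent:
    """One logged event; field names and event labels are hypothetical."""
    participant: int
    task: int
    stage: str        # "comprehension" or "response"
    event: str        # "shown" or "submitted"
    timestamp: float  # seconds since session start


def stage_durations(events):
    """Return {(participant, task, stage): seconds from 'shown' to 'submitted'}."""
    shown = {}
    durations = {}
    for e in sorted(events, key=lambda e: e.timestamp):
        key = (e.participant, e.task, e.stage)
        if e.event == "shown":
            shown[key] = e.timestamp
        elif e.event == "submitted" and key in shown:
            durations[key] = e.timestamp - shown.pop(key)
    return durations
```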

For the exit survey, we adapted measures of decision quality from prior research, such as comprehension and response time (Bailey, Konstan, and Carlis 2001; Burmistrov and Leonova 2003; McFarlane 2002), and decision accuracy (Eyrolle and Cellier 2000). As shown in Table 4, questions required the respondent to rank the conditions from easiest to hardest at different stages of the decision.

Table 4. Exit survey decision quality measures and questions.

5.3. Design

A two-factor, within-subjects Latin squares experimental design was selected because the dependent variables are measured repeatedly on the same participant under each of the different treatment conditions, thereby reducing error variance due to individual differences. This design also increases statistical power for a given number of subjects compared to a between-subjects design. Table 5 shows the digram-balanced Latin squares ordering used as the counterbalanced grouping scheme in this experiment for simple and complex tasks and immediate and negotiated interruptions. This ordering was chosen because it ensures that each condition follows every other condition exactly once, thereby controlling for possible carryover effects (Brooks 2012).

Table 5. Counterbalanced grouping scheme used in the experiment.
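A digram-balanced (Williams) Latin square for an even number of conditions can be built from a first row of the form 0, 1, n − 1, 2, n − 2, …, with each later row obtained by adding one to every entry modulo n. The sketch below shows this standard construction for the four conditions, labelled A to D as they were for participants; the mapping of letters to interruption type and task complexity, and the exact square in Table 5, may differ from the one generated here.

```python
def balanced_latin_square(n):
    """Williams (digram-balanced) Latin square for an even number of conditions n."""
    if n % 2:
        raise ValueError("a single digram-balanced square requires an even n")
    # First row alternates from the low and high ends: 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    take_low = True
    while len(first) < n:
        if take_low:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
        take_low = not take_low
    # Each subsequent row shifts every entry by one (mod n).
    return [[(c + r) % n for c in first] for r in range(n)]


CONDITIONS = "ABCD"  # treatment labels as given to participants
for group, row in enumerate(balanced_latin_square(4), start=1):
    print(f"Order group {group}: " + " -> ".join(CONDITIONS[c] for c in row))
```

Each condition appears once in every serial position, and each ordered pair of conditions appears exactly once as an adjacent pair, which is the carryover property the design relies on.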

5.3.1. Tasks

As in prior interruption research (e.g. Hodgetts and Jones 2007), we used process backtracking (Xia and Sudharshan 2002) and recursive reasoning (Anderson, Albert, and Fincham 2005) to authentically simulate user situations where cognitive load requirements are high, such as programming and business process analysis.

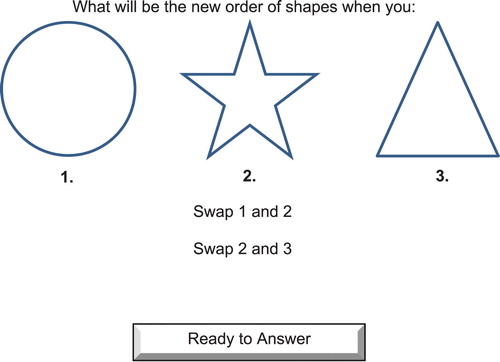

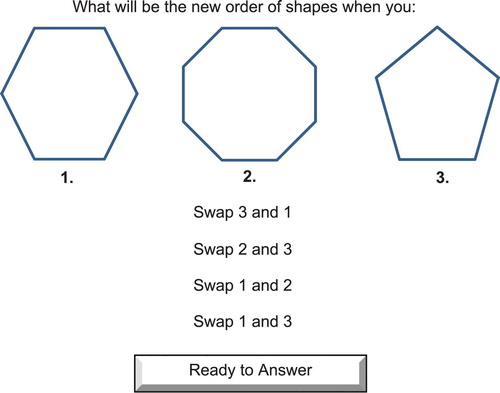

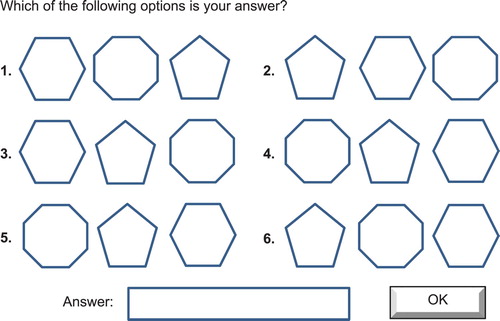

We presented each participant with a sequence of twelve question-and-answer tasks, comprising six simple and six complex tasks. Each task involved three discrete shapes, and participants were given instructions to change the order of the shapes. Following Bonner's (1994) definitions of task complexity, each simple task involved examining at least four information cues (two pairs) and required two transpositions during the comprehension process (Figure 4). Each complex task involved examining at least eight information cues (four pairs) and required four transpositions during the comprehension process (Figure 5). Both simple and complex tasks involved analysing six decision options during the decision response period (Figure 6).

Each task was presented to the participant on a new page. By default, each task had a duration limit of 2.5 min, with a maximum total completion time of 30 min for all tasks. Before commencement, each participant practised two trial tasks to familiarise themselves with the cognitive requirements of the tasks. Task complexity was manipulated through the number of information cues about shape order (Jarvenpaa 1990) and the number of consecutive transpositions required (Russell, Clark, and Stepney 2003). Participants were not able to write down or otherwise record the stages of their problem solving, and so had to manage these information cues and transpositions mentally.
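The exact wording of the shape instructions is not given, so the sketch below is only a hypothetical model of the task structure. It assumes each pair of information cues specifies one swap of two positions, so a simple task applies two swaps and a complex task four. It also assumes the six decision options are the six possible orderings of three shapes (3! = 6), which matches the option count in the text but is not confirmed by it. The shape names are placeholders.

```python
from itertools import permutations

SHAPES = ("circle", "square", "triangle")  # hypothetical shape set


def apply_transpositions(order, swaps):
    """Apply a sequence of position swaps (i, j) to a shape ordering."""
    current = list(order)
    for i, j in swaps:
        current[i], current[j] = current[j], current[i]
    return tuple(current)


def make_task(swaps, start=SHAPES):
    """Build a hypothetical task: swap instructions, correct answer, six options."""
    answer = apply_transpositions(start, swaps)
    options = list(permutations(start))  # all 3! = 6 orderings of three shapes
    return {"start": start, "swaps": swaps, "answer": answer, "options": options}


simple = make_task([(0, 1), (1, 2)])                   # two transpositions
complex_ = make_task([(0, 1), (1, 2), (0, 2), (0, 1)])  # four transpositions
```

Because the participant must hold the intermediate ordering in memory after each swap, doubling the number of swaps roughly doubles the working-memory load, which is the property the complexity manipulation depends on.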

5.3.2. Interruptions

Interruptions were intermittently introduced while participants worked on their primary task. Immediate interruptions were administered as a pop-up window in the form of a modal dialog box in the style of an anti-malware notification, appearing without warning and positioned above the primary task. Negotiated interruptions were administered as a pop-up notification window positioned at the bottom right corner of the screen. Participants responded to a negotiated-interruption notification by clicking its hypertext link, which closed the notification window automatically and displayed a new message window on top of the primary task that the participant was working on. These simulated messages were designed to reflect the appearance and behaviour of interruptions from modern anti-malware software.

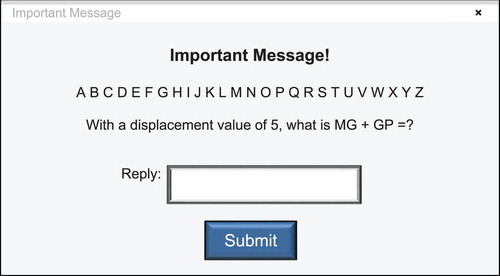

Both interruption types involved arithmetic tasks similar to the mental-arithmetic problems in prior interruption studies (Gillie and Broadbent 1989), which place large demands on working memory (Seitz and Schumann-Hengsteler 2000) and can elicit the disruptive effects of user interruptions on task performance. Calculators were therefore not permitted, and the practice tasks and a pilot study were used to ensure that the mental arithmetic requirements were achievable. The interruption task required participants to add several two-digit numbers, with the numbers coded as letters. To decode the problem, a random displacement value (between two and nine) was given within the message body, indicating which letter represented zero for that task (for example, with a displacement value of two, letter B = 0, C = 1, D = 2, and so on). This random displacement value eliminated learning effects by ensuring that participants could not expect to dismiss each interruption with the same answers, while the restricted value range kept the decoding difficulty comparable across interruptions. The alphabet was displayed in upper-case letters within the message body throughout the interruption task. Participants were required to enter the answer to the problem in digits, and were not expected to recode the answer into letters (Gillie and Broadbent 1989).
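The decoding rule can be made concrete. Following the example in the text, a displacement value d means the d-th letter of the alphabet (counting A as 1) stands for zero, so with d = 2, B = 0, C = 1 and D = 2. The sketch below decodes letter-coded numbers under that rule and sums them; the function names and the example operands are illustrative, and the number of operands per task is not specified in the text.

```python
import string


def decode_letter(letter, displacement):
    """Digit for `letter` when the letter at 1-based position `displacement` is 0."""
    digit = string.ascii_uppercase.index(letter) + 1 - displacement
    if not 0 <= digit <= 9:
        raise ValueError(f"{letter!r} does not encode a digit for displacement {displacement}")
    return digit


def decode_number(code, displacement):
    """Decode a letter-coded number such as 'CD' into an integer."""
    value = 0
    for letter in code:
        value = value * 10 + decode_letter(letter, displacement)
    return value


def solve_interruption(codes, displacement):
    """Sum the letter-coded numbers; the answer is entered in digits."""
    return sum(decode_number(c, displacement) for c in codes)


# Displacement 2: "CD" decodes to 12 and "DB" to 20.
answer = solve_interruption(["CD", "DB"], 2)
```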

The pop-up dialog box featured a similar style to anti-malware dialog boxes that the user might encounter in an operational environment, but without being too similar to any existing anti-malware application so as not to bias responses. Figure 7 shows an example of this interruption task.

Participants were required to respond to immediate-interruption messages without delay, but were given control over when to respond to negotiated-interruption notifications. The message windows for both interruption types were identical in visual appearance, to eliminate extraneous variables that might influence task performance due to visual differences. After an interruption task was completed, the message window closed automatically, and participants were returned to the primary task that they were working on.

5.3.3. Integrating tasks and interruptions

Interruptions were arbitrarily timed to occur five seconds into the decision comprehension process for simple tasks and 10 s into it for complex tasks. During the decision response process, participants were engaged in the processing and output stages as they analysed the decision options presented to them. Here, interruptions were timed to occur one second into the decision response process so that they could arrive before a selection was made. As the comprehension and response processes for problem tasks were displayed on separate pages, these timings appropriately reflect the amount of cognitive processing required of participants at the input, processing and output stages of information processing, and ensured that participants had been involved in the problem tasks long enough for the interruptions to affect them.
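The onset rules above can be written as a small schedule. The sketch below encodes the stated offsets (5 s and 10 s into comprehension for simple and complex tasks, 1 s into response for both) and distinguishes when the arithmetic task actually begins under each interruption type. How the experiment software implemented this is not described, so the function names, the page-timestamp input and the treatment of an unclicked notification (returning `None`) are assumptions.

```python
# Onset offsets in seconds after the stage's page is shown, as stated in the text.
ONSET_SECONDS = {
    ("comprehension", "simple"): 5.0,
    ("comprehension", "complex"): 10.0,
    ("response", "simple"): 1.0,
    ("response", "complex"): 1.0,
}


def interruption_onset(stage, complexity, page_shown_at):
    """Absolute time at which the interruption (or its notification) is triggered."""
    return page_shown_at + ONSET_SECONDS[(stage, complexity)]


def interruption_start(kind, onset, notification_clicked_at=None):
    """When the arithmetic task begins (hypothetical helper).

    Immediate: the modal dialog appears at onset and must be answered.
    Negotiated: only a corner notification appears at onset; the task
    begins when the participant chooses to click it.
    """
    if kind == "immediate":
        return onset
    if kind == "negotiated":
        if notification_clicked_at is None:
            return None  # participant has not yet chosen to respond
        return max(onset, notification_clicked_at)
    raise ValueError(f"unknown interruption type: {kind}")
```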

 summarises the problem tasks, illustrating how participants received their task sequences: four treatment conditions in order, with eight of the tasks administered under the treatments and the remaining four problem tasks serving as base-case controls.

5.4. Procedure

The experimental tasks and treatment conditions were pilot-tested and refined with 15 participants to verify task comprehension and relevance. Most pilot participants managed to perform the tasks within the default timings. However, pilot participants reported that the interruption task was too difficult, and the results of the pilot test showed a relatively high mean error rate on the interruption task (Mean = 3.7, s.d. = 2.5). The difficulty of the interruption task had to be calibrated so that it was complex enough for participants to feel disrupted when they attended to it, but not so complex as to cause participants to despair of performing well (McFarlane 2002). The two primary task complexity levels were deemed appropriate for manipulating task complexity, and the instructions for participants were reworded for clarity.

The experiment was conducted in an isolated, unallocated academic office to remove potential environmental distractions (McFarlane 2002). Participants attended one at a time, and each participant signed a consent form before commencing. Participants were briefed on what the research involved and what would be done with their data after the experiment. Participants completed an entrance questionnaire before commencement, documenting their personal demographics, educational background, proficiency and degree of involvement in computing tasks.

Participants were given written instructions containing pictorial examples of the primary and interruption tasks, as well as a description of the four treatment conditions. The treatment conditions were labelled with letters A to D so as not to imply numerical ranking (McFarlane 2002). Participants performed the primary tasks and interruption tasks with numeric key-presses and proceeded through each task with a mouse-click. On finishing, participants completed an exit questionnaire to record their perceptions of the treatment conditions.

6. Analysis of data

A repeated-measures, two-factor Analysis of Variance (ANOVA) was used to analyse the data, with interruption type and primary task complexity as within-subjects factors. For significant interactions, a post hoc analysis of the main effects using a repeated-measures one-factor ANOVA was used to assess the effects of interruption type at each level of primary task complexity. Effect sizes for ANOVA analyses are reported using partial η² (Cohen 1973; Richardson 2011), which indicates the proportion of variance in the dependent variable attributable to the independent variable after excluding variance explained by other factors, and Cohen's f (Cohen 1988). Cohen's f values of 0.10, 0.25, and 0.50 (or greater) and partial η² values of 0.01, 0.06, and 0.14 (or greater) suggest small, medium, and large effect sizes, respectively (Richardson 2011).
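Partial η² can be recovered from an F ratio and its degrees of freedom as η²ₚ = F·df₁ / (F·df₁ + df₂), and Cohen's f is commonly obtained as f = √(η² / (1 − η²)). The sketch below applies these standard conversions and the benchmarks quoted above. For the comprehension-time interaction reported below (F(1, 39) = 9.811) it gives η²ₚ ≈ 0.20, in line with the reported value. The paper does not state which formula produced its f values, so f computed this way may not match the reported figures.

```python
import math


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def cohens_f(eta_sq):
    """Cohen's f from (partial) eta squared: sqrt(eta^2 / (1 - eta^2))."""
    return math.sqrt(eta_sq / (1 - eta_sq))


def eta_label(eta_sq):
    """Benchmarks quoted in the text: 0.01 small, 0.06 medium, 0.14 large."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"


eta = partial_eta_squared(9.811, 1, 39)  # comprehension-time interaction
```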

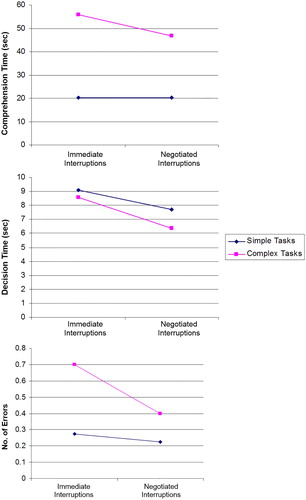

Without interruptions, participants took an average of 18.01 s (s.d. = 5.77 s) to comprehend simple primary tasks, and 42.82 s (s.d. = 12.84 s) to comprehend complex primary tasks. Without interruptions, participants took an average of 6.81 s (s.d. = 1.83 s) for decision responses in simple primary tasks, and 6.21 s (s.d. = 2.07 s) in complex primary tasks. Without interruptions, participants had an average decision error rate of 0.15 in 40 for simple primary tasks and 0.7 in 40 for complex primary tasks. Table 6 presents the means and standard deviations of decision comprehension time, decision response time and decision accuracy across the treatments, with graphical representations shown in Figure 8.

Figure 8. Variations in participants' mean decision comprehension time, decision response time and decision accuracy across experimental treatments.

Table 6. Means and standard deviations of comprehension time, response time and accuracy (n = 40).

Table 6 shows a difference of 0.4% between immediate and negotiated interruptions in participants' mean decision comprehension time for simple primary tasks, but a difference of 19.1% for complex primary tasks. There was a difference of 18.2% between the interruption effects on mean decision response time for simple primary tasks, and a 34.5% difference for complex primary tasks. Mean decision response time was longer under immediate interruptions than under negotiated interruptions for both simple and complex primary tasks. There was a difference of 22.2% between the interruption effects on mean decision accuracy for simple primary tasks, and a difference of 75% for complex primary tasks.
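The text does not state which condition serves as the baseline for these percentage differences, and the choice matters. The sketch below uses hypothetical condition means (not the study's data) to show that the same pair of means yields 25% when the negotiated mean is the baseline but 20% in magnitude when the immediate mean is.

```python
def percent_difference(value, baseline):
    """Relative difference of `value` from `baseline`, in percent."""
    return (value - baseline) / baseline * 100


# Hypothetical condition means in seconds; not the study's data.
immediate, negotiated = 50.0, 40.0
vs_negotiated = percent_difference(immediate, negotiated)  # negotiated as baseline
vs_immediate = percent_difference(negotiated, immediate)   # immediate as baseline
print(vs_negotiated, vs_immediate)
```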

Table 7 shows the analysis of the interruption effects on decision comprehension time, decision response time and decision accuracy across primary task complexity. For decision comprehension time, a significant interaction effect was found between primary task complexity and interruption type (F(1, 39) = 9.811, p = 0.003, partial η² = 0.20, f = 0.46), indicating that the effect of interruption type on decision comprehension time depended on primary task complexity. For decision response time, no significant interaction effect was found between primary task complexity and interruption type (F(1, 39) = 0.519, p = 0.475, partial η² = 0.01, f = 0.00). H2 was therefore rejected. For decision accuracy, a significant interaction effect was found between primary task complexity and interruption type (F(1, 39) = 4.149, p = 0.048, partial η² = 0.09, f = 0.28), indicating that the effect of interruption type on decision accuracy depended on primary task complexity.
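Because both factors have only two levels and both are within-subjects, the interaction F(1, n − 1) in a two-factor repeated measures ANOVA equals the squared one-sample t statistic on each participant's difference-of-differences score. The sketch below uses that equivalence with the Python standard library only; the four participants' scores and the function names are hypothetical, and it is not the authors' analysis script.

```python
from statistics import mean, variance

# Hypothetical per-participant comprehension times (seconds) for the four cells:
# (simple/immediate, simple/negotiated, complex/immediate, complex/negotiated)
participants = [
    (18.0, 18.0, 50.0, 48.0),
    (20.0, 19.0, 55.0, 50.0),
    (17.0, 17.0, 44.0, 41.0),
    (19.0, 18.0, 52.0, 46.0),
]


def interaction_contrast(scores):
    """(complex: immediate - negotiated) - (simple: immediate - negotiated)."""
    s_imm, s_neg, c_imm, c_neg = scores
    return (c_imm - c_neg) - (s_imm - s_neg)


def one_sample_f(values):
    """F(1, n - 1) for H0: mean = 0, i.e. the squared one-sample t statistic."""
    n = len(values)
    v = variance(values)  # sample variance with an n - 1 denominator
    if v == 0:
        raise ValueError("contrast scores have zero variance")
    m = mean(values)
    return n * m * m / v, (1, n - 1)


contrasts = [interaction_contrast(p) for p in participants]  # [2.0, 4.0, 3.0, 5.0]
f_stat, (df1, df2) = one_sample_f(contrasts)
eta_p = f_stat * df1 / (f_stat * df1 + df2)  # partial eta squared
print(f"Interaction: F({df1}, {df2}) = {f_stat:.2f}, partial eta^2 = {eta_p:.2f}")
```

With real data, the p-value would come from the F(1, n − 1) distribution, for example via a statistics package; it is omitted here to keep the sketch dependency-free.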

Table 7. Repeated measures ANOVA results for comprehension time, response time and accuracy with interruptions across primary task complexity.

Because these interaction effects were significant, a post hoc analysis of the main effects of interruption type on decision comprehension time and decision accuracy was conducted for each level of primary task complexity (see Table 8). For decision comprehension time, interruption type had no significant effect in simple primary tasks (F(1, 39) = 0.004, p = 0.951, partial η² = 0.01, f = 0.00). In complex primary tasks, however, decision comprehension time increased significantly when participants received immediate rather than negotiated interruptions (F(1, 39) = 11.594, p = 0.002, partial η² = 0.22, f = 0.50). H1 was therefore accepted. For decision accuracy, interruption type had no significant effect in simple primary tasks (F(1, 39) = 0.494, p = 0.486, partial η² = 0.02, f = 0.00), but decision accuracy decreased significantly when participants received immediate rather than negotiated interruptions in complex primary tasks (F(1, 39) = 6.882, p = 0.012, partial η² = 0.14, f = 0.37). H3 was therefore accepted.
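For a two-level within-subjects factor, each post hoc one-factor repeated measures ANOVA reduces to a paired comparison: F(1, n − 1) is the square of the paired t statistic on the immediate-minus-negotiated differences at that complexity level. The following sketch applies this to hypothetical scores (not the study's data); the function and variable names are illustrative only.

```python
from statistics import mean, variance


def paired_rm_f(immediate, negotiated):
    """One-factor repeated measures ANOVA with two levels.

    Returns F(1, n - 1), which equals the squared paired t statistic.
    """
    if len(immediate) != len(negotiated):
        raise ValueError("each participant needs a score in both conditions")
    diffs = [a - b for a, b in zip(immediate, negotiated)]
    n = len(diffs)
    v = variance(diffs)  # sample variance of the paired differences
    if v == 0:
        raise ValueError("differences have zero variance")
    m = mean(diffs)
    return n * m * m / v, (1, n - 1)


# Hypothetical comprehension times (seconds) for four participants.
# The trailing underscore in complex_ avoids shadowing Python's built-in complex.
simple = {"immediate": [18.0, 20.0, 17.0, 19.0],
          "negotiated": [18.0, 19.0, 17.0, 18.0]}
complex_ = {"immediate": [50.0, 55.0, 44.0, 52.0],
            "negotiated": [48.0, 50.0, 41.0, 46.0]}

for label, cells in (("simple", simple), ("complex", complex_)):
    f_stat, (df1, df2) = paired_rm_f(cells["immediate"], cells["negotiated"])
    print(f"{label}: F({df1}, {df2}) = {f_stat:.2f}")
```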

Table 8. Post-hoc analysis of main effects for comprehension time and decision accuracy.

7. Tests of subjective effects

The subjective measures were derived from participants' rankings of the treatment conditions in the exit questionnaire, based on preference, ease of control, feeling of interruption, distraction and complexity of primary task resumption. A one-way ANOVA was used to analyse the rankings of the treatment conditions at each level of primary task complexity, for both the comprehension process and the decision-making process, to determine whether participants' perceptions of the interruption effects were consistent with the experimental findings. Table 9 presents the results of this testing, showing the outcomes of the subjective decision variables for each decision component.
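A one-way ANOVA compares mean rankings across groups via F = MS_between / MS_within with (k − 1, N − k) degrees of freedom. The sketch below is a minimal standard-library version; the rankings (1 = least distracting, 4 = most distracting) are invented for illustration, and treating each condition's rankings as a separate group is an assumption about how the analysis was set up.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, (df_between, df_within)) for a list of score groups."""
    k = len(groups)
    all_scores = [x for g in groups for x in g]
    n_total = len(all_scores)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more scores than groups")
    grand_mean = mean(all_scores)
    group_means = [mean(g) for g in groups]
    # Between-groups sum of squares: group size times squared deviation of group mean
    ss_between = sum(len(g) * (gm - grand_mean) ** 2
                     for g, gm in zip(groups, group_means))
    # Within-groups sum of squares: deviations of each score from its group mean
    ss_within = sum((x - gm) ** 2
                    for g, gm in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation")
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, (df_between, df_within)


# Hypothetical distraction rankings for two conditions (five rankings each)
immediate = [4, 3, 4, 3, 4]
negotiated = [1, 2, 1, 2, 2]
f_stat, (df_b, df_w) = one_way_anova([immediate, negotiated])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```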

Table 9. One-way ANOVA results for participants’ rankings for subjective effects.

The analysis revealed significant differences in participants' rankings of distraction and interruption, ease of control and complexity of task resumption between immediate and negotiated interruptions, in both simple and complex primary tasks and during both the decision comprehension and decision response stages. These perceptions corroborate the experimental results. As shown in Table 10, participants reported that negotiated interruptions mitigated their feelings of distraction and interruption, allowed greater ease of control and alleviated the complexity of primary task resumption compared to immediate interruptions.

Table 10. Mean participant rankings of treatment conditions.

8. Conclusions

Anti-malware software must be kept up to date in order to be effective. Notifications of anti-malware software updates can disrupt a user’s tasks and thought processes. We simulated a personal desktop processing environment to investigate whether immediate interruptions are more disruptive than negotiated interruptions to users’ decision-making performance.

The results showed that both immediate and negotiated interruptions disrupt users' decision processes and outcomes. Immediate interruptions produced poorer decision performance than negotiated interruptions for decision comprehension time (H1 is supported) and decision accuracy (H3 is supported), but not for decision response time (H2 is not supported). Task complexity exacerbated these negative effects. The results also suggest that these disruptive effects are mitigated when users can negotiate when or whether to deal with the interruptions. The subjective measures from the exit questionnaire also indicate that negotiated interruptions were perceived to be less disruptive, affording both control over interruption effects and mitigation of task complexity. Importantly, because participants had only 20 min to complete the exercise, these outcomes appear even within a short period of time. Table 11 summarises the experimental findings for each decision variable.

Table 11. Summary of findings from the experiment.

Practically, the results indicate that immediate interruptions disrupt and degrade users' decision efficiency and accuracy in complex decision processes. Users also found negotiated interruptions more desirable than immediate interruptions in their decision processes. Anti-malware application designers should therefore incorporate features that enhance existing negotiation mechanisms. The findings suggest that if anti-malware software manufacturers want users to make good decisions regarding endpoint security, they should allow users to negotiate anti-malware updates and notices. Although this finding may seem counter-intuitive, we argue that forcing a user to update without considering the implications of the update may lead to poorer decision-making, which in turn might undermine the user's ability to make other decisions regarding the security of their desktop environment.

More broadly, a key implication of this work is that forcing users to update their anti-malware software immediately in effect replicates the pressure techniques employed by malware authors (Symantec Labs 2017) to compel users to make bad endpoint security decisions. An extension of our findings is that while denying users the ability to negotiate their anti-malware update might result in more immediate operational security, the user's decision-making ability suffers. As malware attacks become more sophisticated, it is likely to be increasingly important to ensure that users feel sufficiently empowered to make good endpoint security decisions that are compatible with their primary task commitments. Our evidence shows that when users can negotiate their anti-malware response, they report greater ease of control and lower feelings of distraction and interruption.

A theoretical implication of this research is that task complexity moderates the differential effects of immediate and negotiated interruptions on decision efficiency and accuracy: as cognitive task complexity increases, the differential effects between the two interruption types widen across decision stages. These findings point to the importance of task complexity as a factor in explaining the differential effects of immediate and negotiated interruptions on users' decision performance, and suggest that interruption effects should not be studied independently of task complexity.

Another implication of this research is that the disruptive effects of immediate interruptions on users' efficiency and accuracy in complex decision processes can be mitigated through negotiated interruptions. The findings suggest that negotiated interruptions can ease the burden on users' limited cognitive resources, thereby mitigating the loss of decision efficiency and accuracy that these interruptions might otherwise cause. This reinforces the notion that negotiated interruptions are more desirable than immediate interruptions for users in complex situations.

The study has two main limitations. First, although controlled experimentation permitted reliable inferences to be made about the findings, the increased control afforded by a laboratory experiment came at the cost of realism and generalizability of the problem tasks. Although the tasks were designed to maximise external validity by defining the way in which users are cognitively engaged (Bonner 1994), generalizability is limited to settings in which an individual systematically engages their cognitive ability in decision processes. In a real-world setting, tasks and decision processes may not be as well-defined as in the experiment and may therefore require varying levels of cognitive processing. Second, we did not impose any skill requirements on participants beyond their technology ability (Plumlee 2002). Users with greater skepticism or risk aversion may approach updating their anti-malware software differently.

The findings suggest several avenues for future research. First, future research could examine the task resumption process that users experience following an interruption, in order to identify the optimal time at which to remind users of their anti-malware updates. In particular, studying the resumption process could clarify the precursors of the disruptive effects. It would also be valuable to examine how and why users use the negotiation mechanism afforded by task-relevant interruptions, and whether there are subsequent effects on their decision processes and outcomes. Second, it would be interesting to examine the potential effect of security interruption alerts on user anxiety and mental wellbeing, particularly in the context of ongoing workflow. Research in this space could also examine the degree to which users avoid flow disruption by acquiescing to endpoint security notifications. A third area for future research could involve understanding security interruptions across user modalities in different device contexts. If mobile devices afford the user a greater variety of task environments than personal computers, then users may also exhibit different security-related avoidance and conformance behaviours. Finally, we selected a modal window design to reflect a general endpoint security software dialogue box; however, changes to the appearance of these dialogue boxes resulting from operating system updates may affect users' propensity to comply with or defy the instruction.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abazari, F., M. Analoui, and H. Takabi. 2016. “Effect of Anti-Malware Software on Infectious Nodes in Cloud Environment.” Computers & Security 58: 139–148.

- Adamczyk, P. D., and B. P. Bailey. 2004. “If Not Now, When? The Effects of Interruption at Different Moments Within Task Execution.” In Proceedings of the ACM Conference on Human Factors in Computing Systems CHI 2004, edited by Elizabeth Dykstra-Erickson and Manfred Tscheligi, 271–278. Vienna.

- Addas, S., and A. Pinsonneault. 2018. “Theorizing the Multilevel Effects of Interruptions and the Role of Communication Technology.” Journal of the Association for Information Systems 19 (11): 1097–1129.

- Adler, R. F., and R. Benbunan-Fich. 2013. “Self-Interruptions in Discretionary Multitasking.” Computers in Human Behavior 29: 1441–1449.

- Al-Saleh, M. I., A. M. Espinoza, and J. R. Crandall. 2013. “Antivirus Performance Characterisation: System-Wide View.” IET Information Security 7: 126–133.

- Altmann, E. M., and J. G. Trafton. 2004. “Task Interruption: Resumption Lag and the Role of Cues.” In Proceedings of the 26th Annual Conference of the Cognitive Science Society, edited by Kenneth Forbus, Dedre Gentner, and Terry Regier, 43–48. Chicago, IL, United States: Lawrence Erlbaum Associates, Inc.

- Anderson, C. L., and R. Agarwal. 2010. “Practicing Safe Computing: A Multimethod Empirical Examination of Home Computer User Security Behavioral Intentions.” MIS Quarterly 34: 613.

- Anderson, J. R., M. V. Albert, and J. M. Fincham. 2005. “Tracing Problem Solving in Real Time: fMRI Analysis of the Subject-Paced Tower of Hanoi.” Journal of Cognitive Neuroscience 17: 1261–1274.

- Avrahami, D., and S. Hudson. 2004. “QnA: Augmenting an Instant Messaging Client to Balance User Responsiveness and Performance.” In ACM Conference on Computer Supported Cooperative Work CSCW’04, edited by Jim Herbsleb and Gary Olson, 515–518. Chicago, IL, United States: Association for Computing Machinery.

- Bailey, B. P., and S. T. Iqbal. 2008. “Understanding Changes in Mental Workload During Execution of Goal-Directed Tasks and its Application for Interruption Management.” ACM Transactions on Computer-Human Interaction 14: 1–28.

- Bailey, B. P., and J. A. Konstan. 2006. “On the Need for Attention-Aware Systems: Measuring Effects of Interruption on Task Performance, Error Rate and Affective State.” Computers in Human Behavior 22: 685–708.

- Bailey, B. P., J. A. Konstan, and J. V. Carlis. 2001. “The Effects of Interruptions on Task Performance, Annoyance and Anxiety.” In Proceedings of INTERACT’01, edited by M. Hirose, 593–601. Tokyo, Japan: IOS Press.

- Baillette, P., Y. Barlette, and A. Leclercq-Vandelannoitte. 2018. “Bring Your Own Device in Organizations: Extending the Reversed IT Adoption Logic to Security Paradoxes for CEOs and End Users.” International Journal of Information Management 43: 76–84.

- Baskerville, R., F. Rowe, and F.-C. Wolff. 2018. “Integration of Information Systems and Cybersecurity Countermeasures: An Exposure to Risk Perspective.” ACM SIGMIS Database: The DATABASE for Advances in Information Systems 49: 33–52.

- Basoglu, K. A., M. A. Fuller, and J. T. Sweeney. 2009. “Investigating the Effects of Computer Mediated Interruptions: An Analysis of Task Characteristics and Interruption Frequency on Financial Performance.” International Journal of Accounting Information Systems 10: 177–189.

- Beynon, M., S. Rasmequan, and S. Russ. 2002. “A New Paradigm for Computer-Based Decision Support.” Decision Support Systems 33: 127–142.

- Blythe, J. M., and L. Coventry. 2018. “Costly but Effective: Comparing the Factors That Influence Employee Anti-Malware Behaviours.” Computers in Human Behavior 87: 87–97.

- Bonner, S. E. 1994. “A Model of the Effects of Audit Task Complexity.” Accounting, Organizations and Society 19: 213–234.

- Bonny, P., S. Goode, and D. Lacey. 2015. “Revisiting Employee Fraud: Gender, Investigation Outcomes and Offender Motivation.” Journal of Financial Crime 22: 447–467.

- Bontchev, V. 1996. “Possible Macro Virus Attacks and How to Prevent Them.” Computers & Security 15: 595–626.

- Brooks, J. L. 2012. “Counterbalancing for Serial Order Carryover Effects in Experimental Condition Orders.” Psychological Methods 17 (4): 600–614.

- Bubaš, G., T. Orehovački, and M. Konecki. 2008. “Factors and Predictors of Online Security and Privacy Behavior.” Journal of Information and Organizational Sciences 32: 79–98.

- Burmistrov, I., and A. Leonova. 2003. “Do Interrupted Users Work Faster or Slower? The Micro-Analysis of Computerized Text-Editing Task.” In Human-Computer Interaction: Theory and Practice (Part 1) – Proceedings of HCI International 2003, edited by J. Jacko and C. Stephanidis, 621–625. Crete, Greece: Lawrence Erlbaum Associates.

- Burns, A. J., C. Posey, T. L. Roberts, and P. Benjamin Lowry. 2017. “Examining the Relationship of Organizational Insiders’ Psychological Capital With Information Security Threat and Coping Appraisals.” Computers in Human Behavior 68: 190–209.

- Chen, A., and E. Karahanna. 2014. “Boundaryless Technology: Understanding the Effects of Technology-Mediated Interruptions Across the Boundaries Between Work and Personal Life.” AIS Transactions on Human-Computer Interaction 6: 16–36.

- Chen, H., and W. Li. 2019. “Understanding Commitment and Apathy in IS Security Extra-Role Behavior from a Person-Organization Fit Perspective.” Behaviour & Information Technology 38: 454–468.

- Chenoweth, T., T. Gattiker, and K. Corral. 2019. “Adaptive and Maladaptive Coping With an IT Threat.” Information Systems Management 36: 24–39.

- Cohen, J. 1973. “Eta-Squared and Partial Eta-Squared in Fixed Factor ANOVA Designs.” Educational and Psychological Measurement 33: 107–112.

- Cohen, J. 1988. Statistical Power Analysis for the Behavioral Sciences. New York, NY: Routledge.

- Cutrell, E., M. Czerwinski, and E. Horvitz. 2001. “Disruption and Memory: Effects of Messaging Interruptions on Memory and Performance.” In Human-Computer Interaction: INTERACT’01, edited by M. Hirose, 263–269. Tokyo: IOS Press (for IFIP).

- Czerwinski, M. E., E. Cutrell, and E. Horvitz. 2000a. “Instant Messaging: Effects of Relevance and Time.” In People and Computers XIV: Proceedings of HCI 2000, edited by Sharon McDonald, Yvonne Waern, and Gilbert Cockton, 71–76. Sunderland, United Kingdom: British Computer Society.

- Czerwinski, M. E., E. Cutrell, and E. Horvitz. 2000b. “Instant Messaging and Interruption: Influence of Task Type on Performance.” In Proceedings of OZCHI 2000: Interfacing Reality in the New Millennium, edited by C. Paris, N. Ozkan, S. Howard, and S. Lu, 356–361. Sydney, Australia: The University of Technology, Sydney.

- Dabbish, L., and R. Kraut. 2003. “Coordinating Communication: Awareness Displays and Interruption.” In Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI’03): Extended Abstracts, edited by Gilbert Cockton and Panu Korhonen, 786–787. New York: ACM Press.

- Dodel, M., and G. Mesch. 2018. “Inequality in Digital Skills and the Adoption of Online Safety Behaviors.” Communication & Society 21: 712–728.

- Doherty, N. F., and S. T. Tajuddin. 2018. “Towards a User-Centric Theory of Value-Driven Information Security Compliance.” Information Technology & People 31: 348–367.

- Eatchel, K. A., H. Kramer, and F. Drews. 2012. “The Effects of Interruption Context on Task Performance.” Proceedings of the Human Factors and Ergonomics Society Annual Meeting 56: 2118–2122.

- Eyrolle, H., and J. M. Cellier. 2000. “The Effects of Interruptions in Work Activity: Field and Laboratory Results.” Applied Ergonomics 31: 537–543.

- Faruki, P., A. Bharmal, V. Laxmi, V. Ganmoor, M. S. Gaur, M. Conti, and M. Rajarajan. 2014. “Android Security: A Survey of Issues, Malware Penetration, and Defenses.” IEEE Communications Surveys & Tutorials 17: 998–1022.

- Fonner, K. L., and M. E. Roloff. 2012. “Testing the Connectivity Paradox: Linking Teleworkers’ Communication Media Use to Social Presence, Stress from Interruptions, and Organizational Identification.” Communication Monographs 79: 205–231.

- Franke, J. L., J. J. Daniels, and D. C. McFarlane. 2002. “Recovering Context After Interruption.” In 24th Annual Meeting of the Cognitive Science Society, edited by Wayne Gray and Christian Schunn, 310–315. Fairfax, VA: Lawrence Erlbaum Associates.

- Furnell, S., and N. Clarke. 2012. “Power to the People? The Evolving Recognition of Human Aspects of Security.” Computers & Security 31: 983–988.

- Galluch, P. S., V. Grover, and J. B. Thatcher. 2015. “Interrupting the Workplace: Examining Stressors in an Information Technology Context.” Journal of the Association for Information Systems 16: 1–47.

- Garrett, R., and J. Danziger. 2008. “Interruption Management? Instant Messaging and Disruption in the Workplace.” Journal of Computer-Mediated Communication 13: 23–42.

- Gartner Research. 2018. Magic Quadrant for Endpoint Protection Platforms. Stamford, CT: Gartner Research Inc.

- Gillie, T., and D. Broadbent. 1989. “What Makes Interruptions Disruptive? A Study of Length, Similarity and Complexity.” Psychological Research 50: 243–250.

- Goode, S., H. Hoehle, V. Venkatesh, and S. A. Brown. 2017. “User Compensation as a Data Breach Recovery Action: An Investigation of the Sony PlayStation Network Breach.” MIS Quarterly 41 (3): 703–727. 10.25300/MISQ.

- Goode, S., and D. Lacey. 2011. “Detecting Complex Account Fraud in the Enterprise: The Role of Technical and Non-Technical Controls.” Decision Support Systems 50: 702–714.

- Goode, S., C. Lin, J. C. Tsai, and J. J. Jiang. 2015. “Rethinking the Role of Security in Client Satisfaction With Software-as-a-Service (SaaS) Providers.” Decision Support Systems 70: 73–85.

- Gupta, A., H. Li, and R. Sharda. 2013. “Should I Send This Message? Understanding the Impact of Interruptions, Social Hierarchy and Perceived Task Complexity on User Performance and Perceived Workload.” Decision Support Systems 55: 135–145.

- Gupta, A., R. Sharda, and R. A. Greve. 2011. “You’ve Got Email! Does it Really Matter to Process Emails Now or Later?” Information Systems Frontiers 13: 637–653.

- Gurung, A., X. Luo, and Q. Liao. 2009. “Consumer Motivations in Taking Action Against Spyware: An Empirical Investigation.” Information Management & Computer Security 17: 276–289.

- Hanus, B., J. C. Windsor, and Y. Wu. 2018. “Definition and Multidimensionality of Security Awareness: Close Encounters of the Second Order.” ACM SIGMIS Database: The DATABASE for Advances in Information Systems 49: 103–133.

- Herath, T., and H. R. Rao. 2009. “Protection Motivation and Deterrence: A Framework for Security Policy Compliance in Organisations.” European Journal of Information Systems 18: 106–125.

- Herold, R. 1995. “A Corporate Anti-Virus Strategy.” International Journal of Network Management 5: 189–192.

- Highland, H. J. 1997. “Procedures to Reduce the Computer Virus Threat.” Computers & Security 16: 439–449.

- Hodgetts, H. M., and D. M. Jones. 2003. “Interruptions in the Tower of London Task: Can Preparation Minimize Disruption?” In 47th Annual Meeting of the Human Factors and Ergonomics Society. Denver, CO: Human Factors and Ergonomics Society.

- Hodgetts, H. M., and D. M. Jones. 2007. “Reminders, Alerts and Pop-Ups: The Cost of Computer-Initiated Interruptions.” In Human-Computer Interaction: Interaction Design and Usability – Proceedings of HCI International 2007, Part I (Lecture Notes in Computer Science), edited by Julie Jacko, 818–826. Berlin: Springer.

- Höne, K., and J. H. P. Eloff. 2002. “What Makes an Effective Information Security Policy?” Network Security 2002: 14–16.

- Huang, D.-L., P.-L. P. Rau, H. Su, N. Tu, and C. Zhao. 2010. “Effects of Communication Style and Time Orientation on Notification Systems and Anti-Virus Software.” Behaviour & Information Technology 29: 483–495.

- Ifinedo, P. 2016. “Critical Times for Organizations: What Should Be Done to Curb Workers’ Noncompliance With IS Security Policy Guidelines?” Information Systems Management 33: 30–41.

- Iqbal, S. T., and E. Horvitz. 2007a. “Disruption and Recovery of Computing Tasks: Field Study, Analysis, and Directions.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’07), edited by Mary Beth Rosson and David Gilmore, 677–686. New York: ACM Press.

- Iqbal, S. T., and E. Horvitz. 2007b. “Conversations Amidst Computing: A Study of Interruptions and Recovery of Task Activity.” In Proceedings of 11th International Conference on User Modeling (UM 2007), (Lecture Notes in Computer Science), edited by C. Conati, K. McCoy, and G. Paliouras, 350–354. Berlin: Springer.

- Jackson, T., R. Dawson, and D. Wilson. 2003. “Reducing the Effect of E-mail Interruptions on Employees.” International Journal of Information Management 23: 55–65.

- Jansen, J., and P. van Schaik. 2018. “Testing a Model of Precautionary Online Behaviour: The Case of Online Banking.” Computers in Human Behavior 87: 371–383.

- Jansen, J., S. Veenstra, R. Zuurveen, and W. Stol. 2016. “Guarding Against Online Threats: Why Entrepreneurs Take Protective Measures.” Behaviour & Information Technology 35: 368–379.

- Jarvenpaa, S. L. 1990. “Graphic Displays in Decision Making – The Visual Salience Effect.” Journal of Behavioral Decision Making 3: 247–262.

- Jett, Q. R., and J. M. George. 2003. “Work Interrupted: A Closer Look at the Role of Interruptions in Organizational Life.” Academy of Management Review 28: 494–507.

- Johnston, A. C., M. Warkentin, M. McBride, and L. Carter. 2016. “Dispositional and Situational Factors: Influences on Information Security Policy Violations.” European Journal of Information Systems 25: 231–251.

- Johnston, A. C., M. Warkentin, and M. Siponen. 2015. “An Enhanced Fear Appeal Rhetorical Framework: Leveraging Threats to the Human Asset Through Sanctioning Rhetoric.” MIS Quarterly 39: 113–134.

- Kelton, A. S., R. R. Pennington, and B. M. Tuttle. 2010. “The Effects of Information Presentation Format on Judgment and Decision Making: A Review of the Information Systems Research.” Journal of Information Systems 24: 79–105.

- Kim, B., A. Barua, and A. B. Whinston. 2002. “Virtual Field Experiments for a Digital Economy: A New Research Methodology for Exploring an Information Economy.” Decision Support Systems 32: 215–231.

- Kim, D. W., P. Yan, and J. Zhang. 2015. “Detecting Fake Anti-Virus Software Distribution Webpages.” Computers & Security 49: 95–106.

- Lee, Y., and K. R. Larsen. 2009. “Threat or Coping Appraisal: Determinants of SMB Executives’ Decision to Adopt Anti-Malware Software.” European Journal of Information Systems 18: 177–187.

- Lee, A. R., S.-M. Son, and K. K. Kim. 2016. “Information and Communication Technology Overload and Social Networking Service Fatigue: A Stress Perspective.” Computers in Human Behavior 55: 51–61.

- Leukfeldt, E. R. 2014. “Phishing for Suitable Targets in The Netherlands: Routine Activity Theory and Phishing Victimization.” Cyberpsychology, Behavior, and Social Networking 17: 551–555.

- Lévesque, F. L., S. Chiasson, A. Somayaji, and J. M. Fernandez. 2018. “Technological and Human Factors of Malware Attacks: A Computer Security Clinical Trial Approach.” ACM Transactions on Privacy and Security 21: 1–30.

- Levy, E. C., S. Rafaeli, and Y. Ariel. 2016. “The Effect of Online Interruptions on the Quality of Cognitive Performance.” Telematics and Informatics 33: 1014–1021.

- Li, H., A. Gupta, X. Luo, and M. Warkentin. 2011. “Exploring the Impact of Instant Messaging on Subjective Task Complexity and User Satisfaction.” European Journal of Information Systems 20: 139–155.

- Liao, H.-C., and Y.-H. Wang. 2006. “A Memory Symptom-Based Virus Detection Approach.” International Journal of Network Security 2: 219–227.

- Mano, R. S., and G. S. Mesch. 2010. “E-mail Characteristics, Work Performance and Distress.” Computers in Human Behavior 26: 61–69.

- Mansi, G., and Y. Levy. 2013. “Do Instant Messaging Interruptions Help or Hinder Knowledge Workers’ Task Performance?” International Journal of Information Management 33: 591–596.

- Marsden, J. R., R. Pakath, and K. Wibowo. 2002. “Decision Making Under Time Pressure With Different Information Sources and Performance-Based Financial Incentives—Part 1.” Decision Support Systems 34: 75–97.

- Martens, M., R. De Wolf, and L. De Marez. 2019. “Investigating and Comparing the Predictors of the Intention Towards Taking Security Measures Against Malware, Scams and Cybercrime in General.” Computers in Human Behavior 92: 139–150.

- Marulanda-Carter, L., and T. W. Jackson. 2012. “Effects of E-mail Addiction and Interruptions on Employees.” Journal of Systems and Information Technology 14: 82–94.

- McAfee Labs. 2018a. McAfee Labs Threats Report, December 2018. Santa Clara, CA: McAfee Labs.

- McAfee Labs. 2018b. McAfee Labs Threats Report, March 2018. Santa Clara, CA: McAfee Labs.

- McFarlane, D. C. 1998. “Interruption of People in Human-Computer Interaction.” PhD diss., George Washington University.

- McFarlane, D. C. 2002. “Comparison of Four Primary Methods for Coordinating the Interruption of People in Human-Computer Interaction.” Human-Computer Interaction 17: 63–139.

- McFarlane, D. C., and K. A. Latorella. 2002. “The Scope and Importance of Human Interruption in Human-Computer Interaction Design.” Human-Computer Interaction 17: 1–61.

- McGill, T., and N. Thompson. 2017. “Old Risks, New Challenges: Exploring Differences in Security Between Home Computer and Mobile Device Use.” Behaviour & Information Technology 36: 1111–1124.

- Menard, P., G. J. Bott, and R. E. Crossler. 2017. “User Motivations in Protecting Information Security: Protection Motivation Theory Versus Self-Determination Theory.” Journal of Management Information Systems 34: 1203–1230.

- Menard, P., R. Gatlin, and M. Warkentin. 2014. “Threat Protection and Convenience: Antecedents of Cloud-Based Data Backup.” Journal of Computer Information Systems 55: 83–91.

- Menard, P., M. Warkentin, and P. B. Lowry. 2018. “The Impact of Collectivism and Psychological Ownership on Protection Motivation: A Cross-Cultural Examination.” Computers & Security 75: 147–166.

- Milosevic, N., A. Dehghantanha, and K.-K. R. Choo. 2017. “Machine Learning Aided Android Malware Classification.” Computers & Electrical Engineering 61: 266–274.

- Min, B., V. Varadharajan, U. Tupakula, and M. Hitchens. 2014. “Antivirus Security: Naked During Updates.” Software: Practice and Experience 44: 1201–1222.

- Moe, W. W. 2006. “A Field Experiment to Assess the Interruption Effect of Pop-Up Promotions.” Journal of Interactive Marketing 20: 34–44.

- Nystrom, L. E., T. S. Braver, F. W. Sabb, M. R. Delgado, D. C. Noll, and J. D. Cohen. 2000. “Working Memory for Letters, Shapes and Locations: fMRI Evidence Against Stimulus-Based Regional Organization in Human Prefrontal Cortex.” NeuroImage 11: 424–446.

- O’Kane, P., S. Sezer, and K. McLaughlin. 2011. “Obfuscation: The Hidden Malware.” IEEE Security & Privacy Magazine 9: 41–47.

- Ortiz de Guinea, A. 2016. “A Pragmatic Multi-Method Investigation of Discrepant Technological Events: Coping, Attributions, and ‘Accidental’ Learning.” Information & Management 53: 787–802. doi:10.1016/j.im.2016.03.003.

- Ou, C. X. J., and R. M. Davison. 2011. “Interactive or Interruptive? Instant Messaging at Work.” Decision Support Systems 52: 61–72.

- Ou, C. X., C. L. Sia, and C. K. Hui. 2013. “Computer-Mediated Communication and Social Networking Tools at Work.” Information Technology & People 26: 172–190.

- Peterson, A. P. 1992. “Counteracting Viruses in an MS-DOS Environment.” Information Systems Security 1: 58–65.

- Plumlee, R. D. 2002. “Discussion of Impression Management with Graphs: Effects on Choices.” Journal of Information Systems 16: 203–206.

- Posey, C., T. L. Roberts, and P. B. Lowry. 2015. “The Impact of Organizational Commitment on Insiders’ Motivation to Protect Organizational Information Assets.” Journal of Management Information Systems 32: 179–214.

- Posey, C., T. L. Roberts, P. B. Lowry, and R. T. Hightower. 2014. “Bridging the Divide: A Qualitative Comparison of Information Security Thought Patterns Between Information Security Professionals and Ordinary Organizational Insiders.” Information & Management 51: 551–567.

- Post, G., and A. Kagan. 1998. “The Use and Effectiveness of Anti-Virus Software.” Computers & Security 17: 589–599.

- Ramachandran, S., C. Rao, T. Goles, and G. Dhillon. 2013. “Variations in Information Security Cultures Across Professions: A Qualitative Study.” Communications of the Association for Information Systems 33 (11): 163–204.

- Rennecker, J., and L. Godwin. 2005. “Delays and Interruptions: A Self-Perpetuating Paradox of Communication Technology Use.” Information and Organization 15: 247–266.

- Richardson, J. T. E. 2011. “Eta Squared and Partial Eta Squared as Measures of Effect Size in Educational Research.” Educational Research Review 6: 135–147.

- Robertson, T. J., S. Prabhakararao, M. Burnett, C. Cook, J. R. Ruthruff, L. Beckwith, and A. Phalgune. 2004. “Impact of Interruption Style on End-User Debugging.” In Proceedings of the 2004 Conference on Human Factors in Computing Systems, edited by Elizabeth Dykstra-Erickson and Manfred Tscheligi, 287–294. Vienna, Austria: Association for Computing Machinery.

- Russell, M. D., J. A. Clark, and S. Stepney. 2003. “Making the Most of Two Heuristics: Breaking Transposition Ciphers with Ants.” In CEC 2003: Proceedings of the 2003 IEEE Congress on Evolutionary Computation, edited by Rahul Sarker, Robert Reynolds, Hussein Abbass, Kay Chen Tan, Bob McKay, Daryl Essam, and Tom Gedeon, 2653–2658. Canberra, Australia: IEEE Press.

- Russell, E., L. M. Purvis, and A. Banks. 2007. “Describing the Strategies Used for Dealing With Email Interruptions According to Different Situational Parameters.” Computers in Human Behavior 23: 1820–1837.

- Safa, N. S., M. Sookhak, R. Von Solms, S. Furnell, N. A. Ghani, and T. Herawan. 2015. “Information Security Conscious Care Behaviour Formation in Organizations.” Computers & Security 53: 65–78.

- Safa, N. S., R. Von Solms, and S. Furnell. 2016. “Information Security Policy Compliance Model in Organizations.” Computers & Security 56: 70–82.

- Salvucci, D. D., and P. Bogunovich. 2010. “Multitasking and Monotasking: The Effects of Mental Workload on Deferred Task Interruptions.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’10, edited by Elizabeth Mynatt, Geraldine Fitzpatrick, Scott Hudson, Keith Edwards, and Tom Rodden, 85–88. New York, NY: Association for Computing Machinery.

- Seitz, K., and R. Schumann-Hengsteler. 2000. “Mental Multiplication and Working Memory.” European Journal of Cognitive Psychology 12: 552–570.

- Shropshire, J. D., M. Warkentin, and A. C. Johnston. 2010. “Impact of Negative Message Framing on Security Adoption.” Journal of Computer Information Systems 51: 41–51.

- Solingen, R., E. Berghout, and F. Latum. 1998. “Interrupts: Just a Minute Never Is.” IEEE Software 15: 97–103.

- Sophos. 2019. Sophos Labs 2019 Threat Report. Abingdon: Sophos, Ltd.

- Speier, C., I. Vessey, and J. S. Valacich. 2003. “The Effects of Interruptions, Task Complexity, and Information Presentation on Computer-Supported Decision-Making Performance.” Decision Sciences 34: 771–797.

- Steinbart, P. J., M. J. Keith, and J. Babb. 2016. “Examining the Continuance of Secure Behavior: A Longitudinal Field Study of Mobile Device Authentication.” Information Systems Research 27: 219–239.

- Stich, J.-F., M. Tarafdar, and C. L. Cooper. 2018. “Electronic Communication in the Workplace: Boon or Bane?” Journal of Organizational Effectiveness: People and Performance 5: 98–106.

- Stich, J.-F., M. Tarafdar, C. L. Cooper, and P. Stacey. 2017. “Workplace Stress from Actual and Desired Computer-Mediated Communication Use: A Multi-Method Study.” New Technology, Work and Employment 32: 84–100.

- Sukwong, O., H. S. Kim, and J. C. Hoe. 2011. “Commercial Antivirus Software Effectiveness: An Empirical Study.” IEEE Computer 44: 63–70.

- Sykes, E. R. 2011. “Interruptions in the Workplace: A Case Study to Reduce Their Effects.” International Journal of Information Management 31: 385–394.

- Symantec Labs. 2017. Internet Security Threat Report – Ransomware 2017. Mountain View, CA: Symantec Corporation.

- Teer, F. P., S. E. Kruck, and G. P. Kruck. 2007. “Empirical Study of Students’ Computer Security Practices/Perceptions.” Journal of Computer Information Systems 47: 105–110.

- Tsai, H. S., M. Jiang, S. Alhabash, R. LaRose, N. J. Rifon, and S. R. Cotten. 2016. “Understanding Online Safety Behaviors: A Protection Motivation Theory Perspective.” Computers & Security 59: 138–150.

- Visinescu, L. L., O. Azogu, S. D. Ryan, Y. “Andy” Wu, and D. J. Kim. 2016. “Better Safe Than Sorry: A Study of Investigating Individuals’ Protection of Privacy in the Use of Storage as a Cloud Computing Service.” International Journal of Human–Computer Interaction 32: 885–900.

- Wachyudy, D., and S. Sumiyana. 2018. “Could Affectivity Compete Better Than Efficacy in Describing and Explaining Individuals’ Coping Behavior: An Empirical Investigation.” The Journal of High Technology Management Research 29: 57–70.

- Wang, Z., P. David, J. Srivastava, S. Powers, C. Brady, J. D’Angelo, and J. Moreland. 2012. “Behavioral Performance and Visual Attention in Communication Multitasking: A Comparison Between Instant Messaging and Online Voice Chat.” Computers in Human Behavior 28: 968–975.

- Warkentin, M., A. C. Johnston, and J. Shropshire. 2011. “The Influence of the Informal Social Learning Environment on Information Privacy Policy Compliance Efficacy and Intention.” European Journal of Information Systems 20: 267–284.

- White, G., T. Ekin, and L. Visinescu. 2017. “Analysis of Protective Behavior and Security Incidents for Home Computers.” Journal of Computer Information Systems 57: 353–363.

- Williams, C. K., D. Wynn, R. Madupalli, E. Karahanna, and B. K. Duncan. 2014. “Explaining Users’ Security Behaviors With the Security Belief Model.” Journal of Organizational and End User Computing 26: 23–46.

- Xia, L., and D. Sudharshan. 2002. “Effects of Interruptions on Consumer Online Decision Processes.” Journal of Consumer Psychology 12: 265–280.

- Ye, Y., T. Li, D. Adjeroh, and S. S. Iyengar. 2017. “A Survey on Malware Detection Using Data Mining Techniques.” ACM Computing Surveys 50: 41.

- Yoo, C. W., G. L. Sanders, and R. P. Cerveny. 2018. “Exploring the Influence of Flow and Psychological Ownership on Security Education, Training and Awareness Effectiveness and Security Compliance.” Decision Support Systems 108: 107–118.

- Yoon, C., J.-W. Hwang, and R. Kim. 2012. “Exploring Factors That Influence Students’ Behaviors in Information Security.” Journal of Information Systems Education 23: 407–415.

- Yoon, C., and H. Kim. 2013. “Understanding Computer Security Behavioral Intention in the Workplace: An Empirical Study of Korean Firms.” Information Technology & People 26: 401–419.

- Zenkin, D. 2001. “Fighting Against the Invisible Enemy: Methods for Detecting an Unknown Virus.” Computers & Security 20: 316–321.

- Zhang, L., and W. C. McDowell. 2009. “Am I Really at Risk? Determinants of Online Users’ Intentions to Use Strong Passwords.” Journal of Internet Commerce 8: 180–197.