ABSTRACT

Surveillance is ubiquitous. It is well known that the presence of other people (in-person or remote, actual or perceived) increases performance on simple tasks and decreases performance on complex tasks (Zajonc 1965). But little is known about these phenomena in the context of video games, with recent work finding that they do not necessarily extend to games (Emmerich and Masuch 2018). In Experiment 1 (N=1489; No Observation vs. Researcher Observing), we find that participants observed by a researcher played significantly longer, and performed significantly better, across three video games. Moreover, we find some evidence that participants observed by a researcher score higher on player experience and intrinsic motivation. In Experiment 2 (N=843; Researcher Observing vs. Professor Observing), we seek to understand whether observer roles that differ in their perceived evaluativeness influence the effects of observation. We find that participants observed by a professor had, at times, significantly lower performance, player experience, intrinsic motivation, and playing time, as well as higher anxiety. In Experiment 3 (N=1358; No Observation vs. Researcher Observing), we further validate Experiment 1 by extending our results to three additional game genres. Here, we provide the largest study on observation in video games to date. The study is also the first to show that observer type can significantly influence player outcomes.

1. Introduction

Video games are ubiquitous. In 2020, the Entertainment Software Association (ESA) reported that 64% of American adults play video games and 75% of Americans have at least one gamer in their household (ESA Citation2020). Increasingly, players are subject to various forms of observation and surveillance, both overt and covert. To varying degrees, the use of analytics to ‘nudge’ and monetise player behaviour is now standard practice in the development of most video games (O'Donnell Citation2014; Nieborg Citation2015). At the same time, e-sports are becoming mainstream, and the popular video game streaming website Twitch, where viewers watch Twitch streamers play video games, has an estimated 140 million monthly viewers (Smith Citation2021), and more than 130 billion minutes of gameplay are watched per month (TwitchTracker Citation2021). Moreover, video game audiences in the form of family, friends, and strangers are common at home and at gaming events. Additionally, in-game spectating is a feature of many games whereby players can watch others to learn, to be entertained, or to pass time while waiting for the next round. Despite the increasing normalisation of various forms of surveillance in games, we still know very little about the effects that knowingly being watched has on players. Understanding how observation influences players can help researchers and designers more purposefully create social settings in games that make the intended impacts. For example, if observation can heighten dimensions such as player performance, motivation, and experience, then incorporating features enabling observation can lead to games that are more engaging. More engaging games can lead to better reviews and increase a game's commercial success. However, researchers have suggested that observer effects may not occur at all in digital games (Emmerich and Masuch Citation2018). Therefore, it is important to first understand if observers have an influence in gaming at all. 
To understand observer effects, we draw from the literature on social facilitation.

Social facilitation refers to the phenomenon whereby individuals perform differently when in the mere presence of others. Social facilitation is known to cause an enhancement of performance (such as speed and accuracy) of well-practiced tasks, but a degradation of performance for less-familiar tasks (Crowne and Liverant Citation1963; Zajonc Citation1965). Researchers posit that this is because the presence of others increases arousal, which in turn increases an individual's tendency to do the things that they are normally inclined to do. For example, a very simple math problem is generally well-learned, so a person's instinctual response is generally the correct response. However, for a complex and novel problem-solving task, a person's instinctual response is often not the correct response. Social facilitation effects are known to be made even stronger when the observers are seen to be acting in an evaluative capacity (Cottrell et al. Citation1968; Nass et al. Citation1995), i.e. judging the performer.

In this paper, we conduct large-scale studies on observation in video games, wherein we explore the applicability of social facilitation effects. We study how the presence of a remote observer influences player experience, intrinsic motivation, anxiety, behaviour, and performance. Player experience of need satisfaction and intrinsic motivation are well-established frameworks that model gaming enjoyment and motivation with vast empirical support across contexts (Ryan, Rigby, and Przybylski Citation2006; Deci and Ryan Citation2012; Deterding Citation2015). When comparing observation by a researcher versus a professor, we additionally measure anxiety. This is because observer status (e.g. faculty versus student observers) has been shown to affect anxiety (Seta et al. Citation1989) which can sometimes help explain social facilitation effects (Bond and Titus Citation1983). We also measure how well players performed, and how long they played. These variables help form a complete picture of different facets of gaming experience, and the influence of observation on those variables. To this end, our overall goal in this work is to investigate the role of observation on performance and related outcomes, including player experience and intrinsic motivation.

2. Related work

Here we describe research in three interrelated domains: social presence, social facilitation, and surveillance.

2.1. Social presence in games

Social presence is ‘the awareness of being socially connected to others’ (Cairns, Cox, and Imran Nordin Citation2014) or ‘the illusion of being present together with a mediated person’ (Weibel et al. Citation2008). Social presence inherently requires surveillance and observation, since other participants or players are aware of and watching each other's activities. Social presence in games can be traced back to early mediated environments, such as telecommunication systems (Short, Williams, and Christie Citation1976). Minsky coined the term telepresence (Minsky Citation1980): ‘the illusion of being present in a mediated room’ (Weibel et al. Citation2008). Lombard and Ditton (Citation2006) examined presence in mediated environments and found three aspects of social presence: presence as social richness, as being a social actor, and as sharing a space with other social actors. These three factors constitute social presence in mediated environments, including digital games.

Social presence in digital games manifests in a variety of forms, from simple two player games to massively multiplayer online games (MMOs) such as World of Warcraft. The interaction can be competitive, collaborative, or both. Weibel et al. (Citation2008) examined the effect of human- versus computer-controlled opponents and found that players who experienced social presence were more likely to report experiences of flow, enjoyment, and immersion. Similarly, Gajadhar, De Kort, and IJsselsteijn (Citation2008) found that increased social presence in games increases enjoyment. Recent work suggests that social presence increases enjoyment but decreases immersion; it may, for example, be a distraction or disruption to feeling present in a different space (Cairns, Cox, and Imran Nordin Citation2014). While social presence defines the ways that people connect to and become aware of others in games, social facilitation explicates the actual mechanisms and effects.

2.2. Social facilitation

Social facilitation refers to the phenomenon whereby people perform differently in the presence of others than when alone. Social facilitation theory posits that people perform better on simple tasks (a facilitation effect), and worse on complex tasks (an inhibition effect) when in the presence of others (Zajonc Citation1965). This has been found in a variety of areas, including sports (Triplett Citation1898), writing (Allport Citation1920), and verbal tasks (Pessin Citation1933). Interestingly, there is support for social facilitation not only in humans (Bond and Titus Citation1983; Baumeister Citation1984; Strauss Citation2002), but also in non-humans, including monkeys (Munkenbeck Fragaszy and Visalberghi Citation1990; Harlow and Yudin Citation1933; Visalberghi and Addessi Citation2001). There are three main theories as to why social facilitation happens: activation, evaluation, and attention. The activation account posits that social facilitation arises primarily from arousal. Zajonc argues that the presence of others increases arousal, and this heightened arousal improves performance on well-learned tasks but impairs performance on not-well-learned tasks. The evaluation account holds that it is not the mere presence of observers, but rather the fear of being evaluated by those observers, that creates social facilitation effects (Henchy and Glass Citation1968). Research has also suggested that social facilitation is a product of people's desire to maintain a positive image in front of other people (Bond Citation1982; Bond and Titus Citation1983). The attention account attributes social facilitation effects to changes in the performer's attention. Researchers argue that observation causes a distraction which increases motivation. This increased motivation improves performance for well-learned tasks.
However, the increase in motivation is unable to compensate for the detrimental effects of distraction on not-well-learned tasks, which are more cognitively demanding (Sanders, Baron, and Moore Citation1978). Researchers argue that when performers try to focus both on the distraction and the difficult task, this causes excess demands on working memory (Strauss Citation2002). Regardless of why social facilitation occurs, it is important for this particular work to understand the applications of social facilitation in digital contexts.

2.3. Virtual social facilitation

Several studies have examined social facilitation in a digital context. In a study by Emmerich and Masuch (Citation2018), participants were randomly assigned to one of three conditions while playing a video game: the presence of the experimenter; the presence of a virtual agent; and a control condition, in which the participant was alone. Overall, they found that there was no evidence of different performance by players across the different conditions. In contrast, a study by Bowman et al. (Citation2013) showed that in the context of a low-challenge first-person shooter, players in the presence of observers had significantly increased game performance (Bowman et al. Citation2013). In the context of more complex tasks, monitoring has been shown to negatively impact performance, such as in website work (Rickenberg and Reeves Citation2000), pattern recognition and categorisation tasks (Hoyt, Blascovich, and Swinth Citation2003), and public speaking (Pertaub, Slater, and Barker Citation2002). Researchers believe that one aspect that strengthens social facilitation effects is the perception of the observers as being evaluative (Hoyt, Blascovich, and Swinth Citation2003). In other words, it is the performer's fear that the observers are judging their performance which increases social facilitation effects, regardless of whether the observers are actual humans (Pertaub, Slater, and Barker Citation2002; Zanbaka et al. Citation2007; Hall and Henningsen Citation2008). Such effects can occur even when the monitoring is electronic.

Aiello and Svec (Citation1993) showed that social facilitation effects occurred through electronic monitoring of a complex task (in this case, solving an anagram), and that performance was significantly impaired for participants who were being monitored. In a study that compared performance in both simple and complex tasks, Park and Catrambone (Citation2007) assigned participants to perform several tasks of varying degrees of difficulty. Participants were randomly assigned to one of three conditions: alone, in the company of another person, or in the company of a virtual human. For easy tasks, participants in the virtual-human conditions performed better than those performing alone; however, those in the alone condition performed better on the difficult tasks compared to the virtual-human condition. Examining the effects of monitoring on task performance, Thompson, Sebastianelli, and Murray (Citation2009) conducted an experiment to determine the impact of monitoring on students who used web-based training to learn online search skills. The researchers found that participants who were told that their training was being monitored performed worse on a post-training skills test than participants who were unaware of the monitoring. The research thus far supports social facilitation theory in non-game contexts. In the context of video games, however, the research is inconclusive. Researchers have argued that games create distinctive universes with the suspension of reality; and as such, standard ‘real world-based’ theories may be less effective (Emmerich and Masuch Citation2018).

This argument requires further investigation to understand whether, and at what point, ‘reality’ might be suspended in game worlds to the extent that standard theories of human behaviour no longer apply (Adams Citation2014; Emmerich and Masuch Citation2018). The findings of the four studies by Emmerich and Masuch led them to challenge the general applicability of social facilitation theory to video games (Emmerich and Masuch Citation2018). In issuing this broad challenge, they acknowledged that the four games used in their study (two puzzle games, a shooter game, and a side-scrolling adventure game) were not representative of all game genres, but they cautioned that the presence of observer effects should not be assumed without investigation. Much prior research in social facilitation dates back to a time when online games, e-sports, Twitch streaming, and embedded surveillance were exceptional cases, rather than the norm, as they are today. However, given the contradictory evidence of social facilitation effects in the context of digital games, it is necessary to first establish whether they occur at all. Our studies contribute to this ongoing discussion through a series of large-scale remote observation experiments. In addition to social facilitation, it is important to understand how surveillance, which implies evaluative observation, affects people.

2.4. General surveillance

Surveillance is increasingly a topic of interest. Researchers have found that increasing public awareness of surveillance can create ‘chilling effects’ on online behaviour (Marthews and Tucker Citation2014; Penney Citation2016; Stoycheff Citation2016). For example, traffic to Wikipedia articles that raise sensitive privacy concerns has significantly decreased since 2013, when an American whistleblower leaked highly classified information regarding the National Security Agency (NSA) (Penney Citation2016). Similarly, employee surveillance is now increasingly common in the workplace. Studies show that 45% of employers track content, keystrokes, and time spent at the computer; 73% use tools to automatically monitor e-mail; and 40% assign individuals to manually read e-mails (American Management Association Citation2008). Employee monitoring has even been codified in scientific-management theory (or ‘Taylorism’), which posits that unobserved workers are less efficient (Saval Citation2014). Games are another area where surveillance and monitoring are becoming commonplace through game analytics, e-sports, and Twitch streaming.

2.5. Surveillance in games

Although few empirical studies exist on the topic, it is clear that surveillance is prevalent in gaming. Surveillance can be understood as being either institutional surveillance or social/peer surveillance (Raynes-Goldie Citation2010). Institutional surveillance takes place when the game company is monitoring player behaviour, while social surveillance takes place when players are monitoring each other's behaviour.

Social surveillance is inherently a part of multiplayer games. For example, in Minecraft, players continuously provide feedback to one another (Hope Citation2016). This trend of social surveillance in the form of feedback, observation, and sharing outside of the game world can be observed more broadly with the popularity of Twitch, Let's Play videos, and e-sports. Other forms of social surveillance include self and social policing, which are a mainstay of Multiplayer Online Battle Arena Games (MOBAs) such as League of Legends and Dota 2, where social policing measures punish players for behaving badly. Social surveillance and policing dynamics can be observed in World of Warcraft through the game's guild system (Collister Citation2014). Similarly, Club Penguin encourages children to become ‘secret agents’ and to undertake secret missions to report players for breaking rules (Club Penguin Citation2009).

As an example of how game companies can leverage and monetise social surveillance, Vie and DeWinter (Citation2016) describe how King, the company behind Candy Crush Saga, designed the game to show the real-time performance of friends on Facebook. This form of peer surveillance lets players see how others are doing by comparison, which encourages competition and thereby drives the growth and viral spread of the game. Similarly, Taylor (Citation2006) describes a mod for World of Warcraft called CTRA (or CT_RaidAssist) which, while being very useful as a tool for coordinating large-scale, in-game events, also allows the leader to watch, ‘at a very micro level,’ each player's performance. In a sense, companies are also using their institutional surveillance tools – the data and in-game analytics that they have collected – and providing them to enhance social surveillance, thereby also changing game dynamics and, thus, player experiences. This, in turn, can also impact how effectively a game is monetised.

Indeed, institutional surveillance is increasingly becoming part of the creation and playing of video games. The use of analytics in games to understand and influence player behaviour, as well as guide monetisation strategies, is now standard practice in the development of video games, especially those categorised as ‘casual’ or ‘free to play’ (O'Donnell Citation2014; Nieborg Citation2015). Specifically, data collection can help companies better understand how to improve game play or influence purchasing behaviours (Hope Citation2018). At the same time, data collection is also becoming a monetisation model in itself. It is, in the case of Niantic's Ingress (a predecessor of the hugely popular Pokémon Go), what Hulsey and Reeves (Citation2014) describe as ‘datafication of one's mobile life in exchange for the gift of play.’ Specifically, Ingress gave players a free game in exchange for their gameplay data. Ingress players took photos and gathered geographical location data, which were then used to populate Pokémon Go. This took the monetisation of surveillance into a new realm of actual content creation in a new product.

While social and institutional surveillance are now ubiquitous in video games through monetisation strategies, Twitch, and e-sports, we still understand very little about surveillance's concrete impacts on in-game behaviour. As a first step, this paper aims to understand the effects of remote observation in a series of single-player digital games.

2.6. Player experience, motivation, and behaviour

To understand the effects of remote observation, we draw upon theories of player experience of need satisfaction (Ryan, Rigby, and Przybylski Citation2006) and intrinsic motivation (McAuley, Duncan, and Tammen Citation1989). Additionally, we measure behavioural outcomes including performance and time played. These measures can be understood to be broadly important in video game play since they refer to the satisfaction of the game play experience (player experience of need satisfaction), the inherent enjoyability of the game (intrinsic motivation), and objective game play metrics (behaviour).

2.6.1. Player experience

The Player Experience of Need Satisfaction (PENS) framework (Ryan, Rigby, and Przybylski Citation2006) posits that the following dimensions are crucial to player experience: Competence, Autonomy, Relatedness, Presence/Immersion, and Intuitive Controls. PENS is based on self-determination theory (SDT) (Deci and Ryan Citation2012). PENS contends that the psychological ‘pull’ of games is largely due to their ability to satisfy three needs – competence (developing mastery (White Citation1959)), relatedness (connecting with others (Baumeister and Leary Citation1995)), and autonomy (having choices and free will (Chirkov et al. Citation2003)) (Ryan, Rigby, and Przybylski Citation2006). PENS is considered a robust framework for assessing player experience (Deterding Citation2015).

2.6.2. Intrinsic motivation

Intrinsic motivation refers to motivation that arises from interest and enjoyment in the task itself (McAuley, Duncan, and Tammen Citation1989). Intrinsic motivation facilitates involvement and performance in an activity and promotes greater well-being (Levesque et al. Citation2010). The Intrinsic Motivation Inventory (IMI) is the primary self-report measure for assessing intrinsic motivation. The IMI assesses dimensions including Interest/Enjoyment (e.g. ‘I enjoyed doing this activity very much’); Effort/Importance (e.g. ‘I put a lot of effort into this’); Pressure/Tension (e.g. ‘I felt very tense while doing this activity’); and Value/Usefulness (e.g. ‘I believe this activity could be of some value to me’) (McAuley, Duncan, and Tammen Citation1989).

2.6.3. Behaviour

The last primary outcome of interest is player behaviour. We collect two crucial measures of behaviour across all experiments: time played (often used in research studies as an objective measure of motivated behaviour, e.g. Sansone et al. (Citation1992)) and performance (an objective measure of in-game achievement, which is an important indicator as to whether social facilitation is occurring).

3. Hypotheses

3.1. Experiment 1

Our first experiment sought to compare players in an observed versus an anonymous condition. Because we used three simple games, we hypothesised that performance would increase in the observed condition, as in prior studies (Bowman et al. Citation2013).

H1. Performance: Participants in the observed condition will outperform participants in the anonymous condition.

Observation, however, may influence gaming outcomes other than performance. For example, higher performance is generally tied to feelings of competence (Ryan, Rigby, and Przybylski Citation2006), and being observed may result in feelings of social connectedness. Observers can also enhance motivation (Bond Citation1982; Bond and Titus Citation1983). Therefore, using the Player Experience of Need Satisfaction (PENS) and Intrinsic Motivation Inventory (IMI), we measure player experience and motivation-related aspects. For Experiment 1, we hypothesise increases in these outcomes due to observation.

H2. Player Experience: Participants in the observed condition will have higher player experience of need satisfaction than participants in the anonymous condition.

H3. Intrinsic Motivation: Participants in the observed condition will have higher intrinsic motivation than participants in the anonymous condition.

Finally, higher levels of competence and relatedness have been shown to be positively associated with hours per week played (Ryan, Rigby, and Przybylski Citation2006). We therefore predict an increase in motivated behaviour.

H4. Motivated Behaviour: Participants in the observed condition will play longer than participants in the anonymous condition.

3.2. Experiment 2

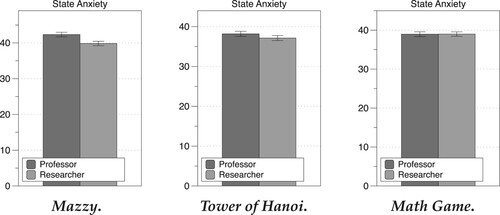

Experiment 2's goal was to assess whether the observer's status could play a role in social facilitation effects. We compared a professor observing the player versus a researcher observing the player. Observer status has been shown to affect anxiety and influence social facilitation (Seta et al. Citation1989); therefore, we hypothesised that the professor would lead to greater anxiety due to being perceived as more evaluative.

H5. Anxiety: Participants in the professor condition will have higher anxiety than participants in the researcher condition.

Greater anxiety in social facilitation contexts is directly related to concerns around self-presentation (Bond Citation1982; Bond and Titus Citation1983) and an increased feeling of needing to impress or meet expectations (Baumeister and Hutton Citation1987). Accordingly, we hypothesised that this anxiety and concern about making a good impression, in a condition with an observer perceived as more judgemental of the performer, would cause social facilitation to induce an inhibition effect on performance. Such an effect would be due to the arousal exceeding the optimal level for this task (Zajonc Citation1965).

H6. Performance: Participants in the researcher condition will outperform participants in the professor condition.

Such an inhibition of performance in the professor condition would lead to a corresponding decrease in player experience of need satisfaction measures, such as competence (Ryan, Rigby, and Przybylski Citation2006). Need satisfaction is a pre-requisite for intrinsic motivation to exist (McAuley, Duncan, and Tammen Citation1989), and hence we would expect to see a corresponding decrease in intrinsic motivation.

H7. Player Experience: Participants in the researcher condition will have higher player experience of need satisfaction than participants in the professor condition.

H8. Intrinsic Motivation: Participants in the researcher condition will have higher intrinsic motivation than participants in the professor condition.

Because player experience of need satisfaction measures are positively associated with time played (Ryan, Rigby, and Przybylski Citation2006), we predict a decrease in motivated behaviour in the professor condition.

H9. Motivated Behaviour: Participants in the researcher condition will play longer than participants in the professor condition.

3.3. Experiment 3

Experiment 3 is a replication of Experiment 1 across three additional game genres. Because we again use relatively simple games, we use identical hypotheses across our measures.

H10. Performance: Participants in the observed condition will outperform participants in the anonymous condition.

H11. Player Experience: Participants in the observed condition will have higher player experience of need satisfaction than participants in the anonymous condition.

H12. Intrinsic Motivation: Participants in the observed condition will have higher intrinsic motivation than participants in the anonymous condition.

H13. Motivated Behaviour: Participants in the observed condition will play longer than participants in the anonymous condition.

4. Experimental setting

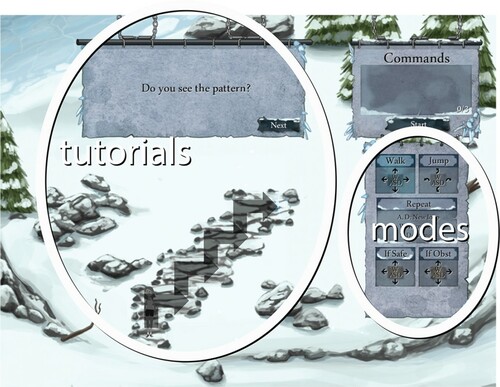

We designed three experiments to measure the effects of observer presence on game players under different conditions. All three experiments were between-subjects studies with two conditions. Experiments 1 and 3 used the following two conditions: Anonymous and Observed. Players were shown text that indicated either that nobody was observing or that a researcher was observing. Experiment 2 used the following two conditions: Researcher and Professor. Players were shown text that indicated either that a researcher was observing or that a professor was observing. In Experiments 1 and 2, players were exposed to three different games (Mazzy, a Tower of Hanoi game, and a math game). In Experiment 3, players were exposed to three additional games (an infinite runner, a 2D platformer, and an action RPG). All games are described in detail below.

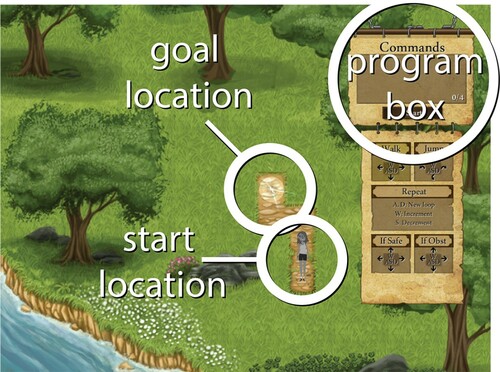

4.1. Mazzy: A CS programming game

Mazzy is a STEM learning game (Kao and Harrell Citation2015a) in which players solve levels by creating short computer programs. In total, there are 12 levels in this version of Mazzy. Levels 1–5 require only basic commands. Levels 6–9 require using loops. Levels 10–12 require using all preceding commands in addition to conditionals (see the accompanying figures). Mazzy has been used previously as an experimental testbed for evaluating the impacts of avatar type on performance and engagement in an educational game (Kao and Harrell Citation2015b, Citation2015c, Citation2015d, Citation2016a, Citation2016b, Citation2016c, Citation2017, Citation2018; Kao, Citation2018).

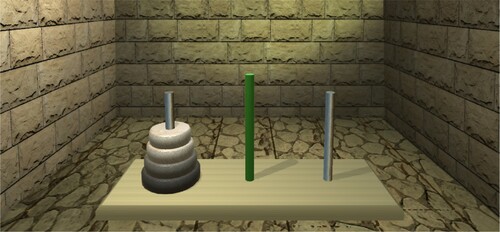

4.2. Tower of Hanoi: a problem-solving puzzle

The Tower of Hanoi is a popular puzzle game that is also widely used as a problem-solving assessment (Kotovsky, Hayes, and Simon Citation1985) (see the accompanying figure). The goal in the game is to move all the disks from the leftmost rod to the middle rod, with the following rules: (1) only one disk can be moved at a time, (2) only the topmost disk on a rod can be moved, and (3) a disk can only be placed on top of a larger disk (or on an empty rod).
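The three rules can be made concrete with a short sketch. The following Python is illustrative only and is not the authors' implementation: the function names, the rod indexing (0 = leftmost, 1 = middle, 2 = rightmost), and the number of disks are assumptions, as is the standard convention that a disk may always be placed on an empty rod.

```python
from typing import List

# Each rod lists its disk sizes from bottom to top (hypothetical representation).
Rods = List[List[int]]


def new_game(num_disks: int) -> Rods:
    """All disks start on the leftmost rod, largest at the bottom."""
    return [list(range(num_disks, 0, -1)), [], []]


def is_legal_move(rods: Rods, src: int, dst: int) -> bool:
    """Rules (2) and (3): only the top disk of src moves, and only onto
    an empty rod or a larger disk."""
    if src == dst or not rods[src]:
        return False
    return not rods[dst] or rods[src][-1] < rods[dst][-1]


def move(rods: Rods, src: int, dst: int) -> None:
    """Rule (1): move exactly one disk, rejecting illegal moves."""
    if not is_legal_move(rods, src, dst):
        raise ValueError(f"illegal move {src} -> {dst}")
    rods[dst].append(rods[src].pop())


def solve(n: int, src: int, dst: int, spare: int, rods: Rods) -> int:
    """Recursively move n disks from src to dst; return the number of moves."""
    if n == 0:
        return 0
    count = solve(n - 1, src, spare, dst, rods)
    move(rods, src, dst)
    return count + 1 + solve(n - 1, spare, dst, src, rods)


def is_solved(rods: Rods, num_disks: int, goal: int = 1) -> bool:
    """The puzzle is solved when every disk sits on the goal (middle) rod."""
    return rods[goal] == list(range(num_disks, 0, -1))
```

Calling `solve(3, 0, 1, 2, new_game(3))` moves three disks from the leftmost to the middle rod using only legal moves; the recursive strategy needs 2^n - 1 moves for n disks, which is the known minimum for the puzzle.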

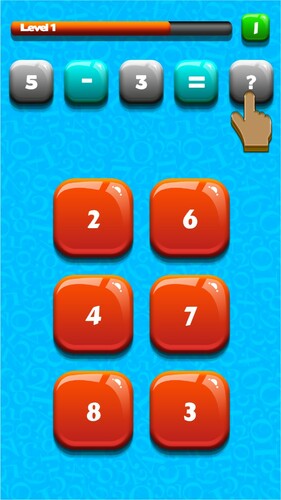

4.3. Math game: a time-pressure math-solving game

In this math game, which has been used in previous studies (Kao Citation2019), players answer increasingly difficult fill-in-the-blank math questions. Questions are timed, and with each level increase, players are given less and less time to complete five questions. If the timer reaches 0 before the player has selected the correct answer, the player loses and the game ends. Incorrect answers decrease the timer by a fixed amount. Players can replay the game as many times as they wish (see the accompanying figure).
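The timer mechanic described above can be summarised as a small state model. This is a hedged sketch rather than the game's actual code: the text does not give the time allowance, the per-level reduction, or the size of the penalty, so every numeric constant below is an assumption, as are the class and function names.

```python
BASE_TIME = 30.0          # assumed seconds available at level 1
TIME_STEP = 2.0           # assumed reduction in available time per level
MIN_TIME = 5.0            # assumed floor on the time allowance
WRONG_PENALTY = 3.0       # assumed fixed penalty for an incorrect answer
QUESTIONS_PER_LEVEL = 5   # from the text: five questions per level


def time_for_level(level: int) -> float:
    """Higher levels give less time to complete the five questions."""
    return max(MIN_TIME, BASE_TIME - TIME_STEP * (level - 1))


class MathGame:
    """Hypothetical model of one play-through of the math game."""

    def __init__(self) -> None:
        self.level = 1
        self.answered = 0
        self.time_left = time_for_level(1)
        self.game_over = False

    def tick(self, seconds: float) -> None:
        """Advance the clock; the game ends when the timer reaches 0."""
        if self.game_over:
            return
        self.time_left = max(0.0, self.time_left - seconds)
        if self.time_left == 0.0:
            self.game_over = True

    def answer(self, correct: bool) -> None:
        """Register an answer; incorrect answers cost a fixed amount of time."""
        if self.game_over:
            return
        if not correct:
            self.tick(WRONG_PENALTY)
            return
        self.answered += 1
        if self.answered == QUESTIONS_PER_LEVEL:
            # Level up and reset the timer to the (shorter) new allowance.
            self.level += 1
            self.answered = 0
            self.time_left = time_for_level(self.level)
```

Modelling the penalty as a call to `tick` means an incorrect answer can itself end the game when little time remains, which matches the description that the game ends once the timer reaches 0.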

4.4. Action RPG

We developed an action role-playing game (RPG) which has been used in previous studies (Kao Citation2020). The game itself plays similarly to other action RPGs (e.g. Diablo). Players can move around a 3-dimensional world, cast spells, and use weapons. Players are provided with quests to complete (e.g. defeat five slimes). They can also improve their character by collecting and equipping different items, such as better weapons, armour, or potions. Players earn experience points from defeating enemies and completing quests. When players level up, they can distribute attribute points (e.g. attack and defence) and learn new abilities/magic.

There are two character types to choose from: Knight or Ranger. The Knight can use abilities such as ‘bash’ and ‘firebolt.’ The Ranger can use abilities such as ‘multi-shot arrow’ and ‘summon wolf.’ The Knight can learn up to 16 different abilities, and the Ranger can learn up to 15 different abilities. (See the accompanying figure for a screenshot of the game.)

4.5. Infinite runner

The infinite runner is a 2D side-scroller which requires the player to navigate a ship while avoiding obstacles. The player's score is increased through collecting coins, which they do by flying over them with their ship. The player increases their ship's altitude by pressing and holding a single button (space bar, or the left mouse button). Releasing the button causes the ship to plummet. (See the accompanying figure for a screenshot of the game.)

4.6. 2D platformer

The 2D platformer is similar to Mario Bros. Players can use melee/ranged attacks to defeat enemies, and they can also jump. There are a total of 36 levels (4 game worlds, 9 levels each). Players can play until they decide to quit, or until they have completed all 36 levels. (See for a screenshot of the game.)

4.7. Difficulty of testbed games

In order to demonstrate that our testbed includes games that are both higher and lower in difficulty, we ran studies with 141 Amazon Mechanical Turk (AMT) participants (for the first three games), and 130 AMT participants (for the last three games), in which participants played the three respective games for as much time as they wanted. They then rated each game on a scale of 1 (Not Difficult) to 5 (Very Difficult). The mean difficulty scores for each game can be seen in . The game difficulty scores range from 2.16 (the math game) to 3.75 (the infinite runner), indicating that our testbed includes games that are both easier and harder in difficulty.

Table 1. Difficulty on a scale of 1 (Not Difficult) to 5 (Very Difficult).

5. Experiment 1: anonymous vs. observed

5.1. Methods

5.1.1. Experiment phrasing

To ensure that the language we used to operationalise the observation conditions was clear, we conducted a study with 105 AMT participants. All participants were given two screenshots in a randomised order. Each screenshot was taken from one of the three games at random and showed either the phrase ‘No researcher connected’ or ‘Researcher0X connected’ (where X was replaced by 1, 2, or 3 at random). Participants were asked to interpret the phrase. Because participants interpreted the phrases as we expected (either that nobody was observing, or that a researcher was observing), the wording was kept. Fewer than 2% of participants had a different interpretation.

5.1.2. Conditions

The two conditions were:

(1) Anonymous

(2) Observed

In the Anonymous condition, participants were shown the phrase ‘No researcher connected’ at the bottom left of the screen on a semi-transparent black bar. In the Observed condition, participants were instead shown the phrase ‘Researcher0X connected,’ where X was replaced by 1, 2, or 3 at random. From a participant's perspective, all other aspects of the experiment were identical (See and ). Condition assignment was done on a per-game basis, so a player might be observed anywhere from zero to three times.

To maintain realism, participants' actions were recorded in real-time. Keystrokes and mouse-clicks were recorded and transmitted to a server, regardless of which condition participants were assigned to for a particular game. However, one data field, anonymous, was set to 1 for participants assigned to the anonymous condition and to 0 for participants assigned to the observed condition. The server then filtered out data from anonymous-condition participants after it was received, so that the researcher assigned to the experiment could see only the data from observed-condition participants. The researcher monitored this data during the experiment.
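The per-game assignment and server-side filtering described above can be sketched as follows. This is a minimal illustration rather than the study's actual software: the function names, the record layout, and the `event` field are hypothetical, and only the on-screen phrases and the `anonymous` flag (1 or 0) come from the description above.

```python
import random

CONDITIONS = ("anonymous", "observed")


def assign_conditions(games, rng):
    """Independently assign each game to one of the two conditions."""
    return {game: rng.choice(CONDITIONS) for game in games}


def banner_text(condition, rng):
    """Return the phrase shown at the bottom left of the screen."""
    if condition == "anonymous":
        return "No researcher connected"
    # X in 'Researcher0X' is drawn from 1, 2, or 3 at random.
    return f"Researcher0{rng.randint(1, 3)} connected"


def make_record(participant_id, game, condition, event):
    """Build one logged input event (hypothetical layout)."""
    return {
        "participant": participant_id,
        "game": game,
        "anonymous": 1 if condition == "anonymous" else 0,
        "event": event,
    }


def visible_to_researcher(records):
    """Server-side filter: keep only records not flagged as anonymous."""
    return [r for r in records if r["anonymous"] == 0]


rng = random.Random(0)
assignment = assign_conditions(["Mazzy", "Tower of Hanoi", "Math Game"], rng)
```

Because each game is assigned independently, a participant can end up observed in zero, one, two, or all three games, which matches the per-game design.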

5.1.3. Quantitative and qualitative measures

Performance. Performance data were collected for each game, including levels completed (Mazzy), completion (Tower of Hanoi), and high score (Math Game).

Player Experience of Need Satisfaction. We use the 21-item Player Experience of Need Satisfaction (PENS) scale (Ryan, Rigby, and Przybylski Citation2006) which measures the following dimensions: Competence, Autonomy, Relatedness, Presence/Immersion, and Intuitive Controls on a scale of 1: Do Not Agree to 7: Strongly Agree. Average Cronbach's alpha for each dimension of the PENS was 0.90, 0.83, 0.74, 0.92, and 0.84 in this paper.

Intrinsic Motivation Inventory. We use four dimensions of the Intrinsic Motivation Inventory (IMI) on a scale of 1: Not At All True to 7: Very True. These dimensions consist of Interest/Enjoyment (e.g. ‘I enjoyed doing this activity very much’); Effort/Importance (e.g. ‘I put a lot of effort into this’); Pressure/Tension (e.g. ‘I felt very tense while doing this activity’); and Value/Usefulness (e.g. ‘I believe this activity could be of some value to me’) (McAuley, Duncan, and Tammen Citation1989). Average Cronbach's alpha for each dimension of the IMI was 0.92, 0.85, 0.87, and 0.97 in this paper.
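For readers unfamiliar with the reliability statistic reported for the PENS and IMI dimensions, Cronbach's alpha can be computed from raw item responses as sketched below. This is a generic textbook implementation, not the analysis code used in the study; the function name and the toy responses are illustrative assumptions.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one scale dimension.

    `responses` is a list of rows, one per participant; each row holds that
    participant's scores on the k items of the dimension.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across participants.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each participant's summed score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("summed scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Toy data (hypothetical): three participants answering a two-item dimension.
toy_alpha = cronbach_alpha([[1, 2], [2, 1], [3, 3]])
```

Values closer to 1 indicate that the items of a dimension vary together across participants.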

Time Played. We directly measure motivation as operationalised by the amount of time spent playing each game.

5.1.4. Participants

A total of 1489 participants were recruited through AMT. The data set consisted of 768 male and 721 female participants. Participants self-identified their races/ethnicities as white (79%), black or African American (8%), Chinese (2%), Asian Indian (2%), American Indian (1%), Filipino (1%), Korean (1%), Japanese (1%), and other (5%). Participants were between the ages of 18 and 84 (M=36.4, SD=11.3), and were all from the United States. Participants were reimbursed $3.00 USD to participate in this experiment.

5.1.5. Design

The condition was a between-subjects factor. Participants were randomly assigned, on a per-game basis, to one of the two conditions.

5.1.6. Protocol

Participants were first informed that their game play may be observed for research purposes. Participants then played all three games in a random order. Participants were informed, before each game, that they could quit at any time without penalty. In two of the three games (Mazzy and the Tower of Hanoi game), the game could also be ended through completion (due to the games' design). For each game played, participants completed both the PENS and the IMI scales. Participants then filled out demographics.

5.1.7. Analysis

Data were extracted and imported into the Statistical Package for the Social Sciences (SPSS) version 22 for analysis using multivariate analysis of variance (MANOVA). A separate MANOVA was run for each game (Mazzy, the Tower of Hanoi game, and the math game) with observation condition (Anonymous or Observed) as the independent variable. The dependent variables were Time Played, Levels Completed (Mazzy), Completed (Tower of Hanoi game), High Score (the math game), the PENS scale, and the IMI. To detect significant differences between conditions, we used one-way MANOVA. Results are reported as significant when p < 0.05 (two-tailed).

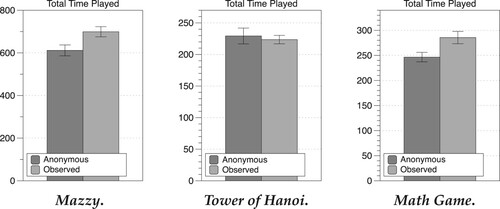

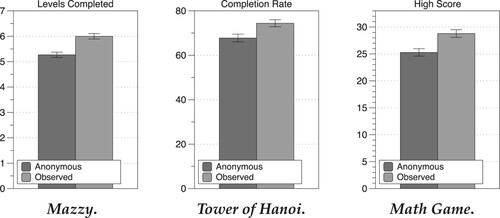

5.2. Results

5.2.1. Mazzy

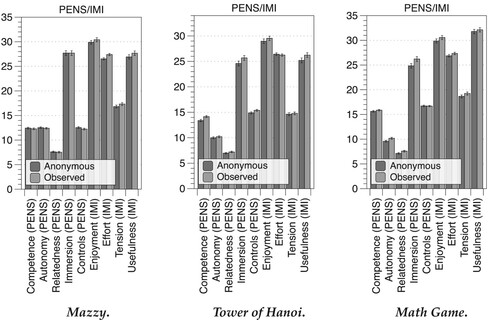

The MANOVA was statistically significant across our two conditions in Mazzy, F(11, 1477) = 3.25, p < .0005; Wilks' , partial .

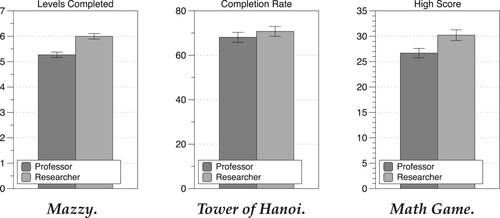

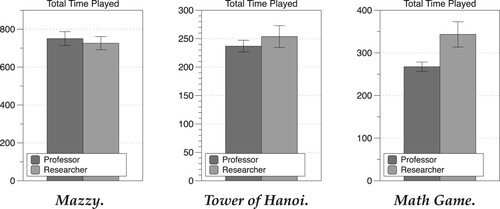

Participants in the observed condition (M = 699.23) played Mazzy for a significantly longer period of time than participants in the anonymous condition (M = 611.75), F(1, 1487) = 6.29; p < .05; partial . (See .) Supports H4.

Participants in the observed condition (M = 6.00) completed significantly more levels in Mazzy than participants in the anonymous condition (M = 5.27), F(1, 1487) = 24.06; p < .0001; partial . (See .) Supports H1.

Participants in the observed condition (M = 5.48) scored significantly higher on effort/importance than participants in the anonymous condition (M = 5.31), F(1, 1487) = 6.81; p < .01; partial . (See .) Supports H3.
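For a univariate follow-up test, partial eta squared can be recovered from a reported F statistic and its degrees of freedom using the standard identity partial η² = (F · df_effect) / (F · df_effect + df_error). The sketch below is a reader-side check under that assumption; the function name is illustrative, and the output is a back-calculation rather than a value taken from the original tables.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Example using the time-played test reported above, F(1, 1487) = 6.29.
eta_time_played = partial_eta_squared(6.29, 1, 1487)
```

With samples of this size, a statistically significant F can correspond to a small partial eta squared, so the effect sizes are worth reading alongside the p-values.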

5.2.2. Tower of Hanoi

The MANOVA was significant for Tower of Hanoi, F(11, 1477) = 1.86, p < .05; Wilks' , partial .

Participants in the observed condition () completed Tower of Hanoi significantly more often than participants in the anonymous condition (), F(1, 1487) = 8.03; p < .005; partial . (See .) Supports H1.

Participants in the observed condition (M = 4.72) scored significantly higher on competence than participants in the anonymous condition (M = 4.46), F(1, 1487) = 7.44; p < .01; partial . (See .) Supports H2.

5.2.3. Math game

The MANOVA was significant for the Math Game, F(11, 1477) = 2.47, p < .005; Wilks' , partial .

Participants in the observed condition (M = 285.57) played the Math Game for a significantly longer period of time than participants in the anonymous condition (M = 246.59), F(1, 1487) = 6.37; p < .05; partial . (See .) Supports H4.

Participants in the observed condition (M = 28.8) ended with a significantly higher high score than participants in the anonymous condition (M = 25.3), F(1, 1487) = 12.20; p < .0005; partial . (See .) Supports H1.

Participants in the observed condition (M = 3.40) scored significantly higher on autonomy than participants in the anonymous condition (M = 3.20), F(1, 1487) = 5.23; p < .05; partial . Participants in the observed condition (M = 2.91) scored significantly higher on presence/immersion than participants in the anonymous condition (M = 2.76), F(1, 1487) = 4.15; p < .05; partial . (See .) Supports H2.

5.2.4. Summary

Our results show that observation consistently increases performance (H1) and time spent (H4) and, in some cases, player experience (H2) and intrinsic motivation (H3).

6. Experiment 2: researcher vs. professor

We wanted to explore how different types of observers might influence player behaviour. We chose to contrast a researcher and a professor since they are both expected roles in the administering of research experiments. Moreover, we suspected that the professor role may be perceived as more highly evaluative than the researcher role, which is one attribute shown to amplify social facilitation effects (Cottrell et al. Citation1968; Nass et al. Citation1995). Other roles could be considered for future studies (such as other players, professional e-sports players, etc.). However, experiment design would have to account for potentially confounding influences (such as competition with a concurrent player which could increase suspicion that the experiment had to do with the observation itself).

6.1. Methods

6.1.1. Experiment phrasing

We validated phrasing in a similar way to Experiment 1. Four studies were conducted (totaling 132 AMT participants) to refine the phrasing for this experiment. All participants were given two screenshots in a randomised order. Each of the screenshots was taken from one of the three games at random, with a phrase that was meant to represent either that a researcher was connected or that a professor was connected. Participants were asked to interpret the phrase. With our initial phrasings, there were often misinterpretations. For instance, ‘Professor connected’ was interpreted by some as a user that literally had the username ‘Professor’. We tweaked the wording until misinterpretations were less than 1%.

6.1.2. Conditions

The two conditions were:

(1) Researcher

(2) Professor

In the Researcher condition, participants were shown the phrase ‘A researcher is monitoring you.’ In the Professor condition, participants were shown ‘A professor is monitoring you.’ The Professor condition essentially replaced the Anonymous condition from Experiment 1, and as such there was no data that was flagged as anonymous and all data collected was visible to the experimenter. From the perspective of participants, all other aspects of the experiment were identical. (See and .) Condition assignment is done on a per-game basis. As with Experiment 1, all participants' actions were recorded in real-time. Finally, the real person monitoring the incoming data had an actual role both as researcher and professor.

6.1.3. Validating evaluativeness

In order to validate that players feel more highly evaluated by a professor versus a researcher, we conducted a validation study with 105 AMT participants. All participants were randomly assigned to either the Researcher or the Professor condition. Participants then played one of the three games at random. Participants were informed that they could quit at any time without penalty. After playing the game, participants were asked to rate the question ‘I felt highly evaluated while playing the game’ on a scale of 1: Not At All to 7: Very Much So. Participants in the Professor condition (M = 5.03, ) scored significantly higher than participants in the Researcher condition (M = 4.24, ); t(103) = 2.66, p = 0.009.
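The reported degrees of freedom (103 for 105 participants) are consistent with an independent-samples t-test using a pooled variance, where df = n1 + n2 - 2. A minimal sketch of that computation from summary statistics follows; the function name is illustrative, and the numbers in the usage line are hypothetical, since the standard deviations and group sizes are not available in this copy of the text.

```python
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and degrees of freedom from summary statistics."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Hypothetical inputs, not the study's data.
t_stat, df = pooled_t(5.0, 1.0, 10, 4.0, 1.0, 10)
```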

6.1.4. Quantitative and qualitative measures

In addition to the measures used in Experiment 1 (performance, the PENS, the IMI, and time played), we use the State-Trait Anxiety Inventory (STAI). The STAI measures the anxiety level of participants after each game. There is considerable evidence for the reliability and validity (Spielberger Citation1989) of the STAI. The scale consists of 20 questions (e.g. I feel calm, I feel secure, I am tense) on a Likert scale of 1: Not At All to 4: Very Much So.

6.1.5. Participants

A total of 843 participants were recruited through AMT. The data set consisted of 390 male and 453 female participants. Participants self-identified their races/ethnicities as white (77%), black or African American (11%), Chinese (2%), Asian Indian (1%), American Indian (1%), Filipino (2%), Korean (1%), Vietnamese (1%), Japanese (1%), and other (3%). Participants were between the ages of 19 and 74 (M = 35.1, SD = 10.6), and were all from the United States. Participants were reimbursed $3.00 USD to participate in this experiment.

6.1.6. Design

The condition was a between-subjects factor. Participants were randomly assigned, on a per-game basis, to one of the two conditions.

6.1.7. Protocol

Participants were first informed that their game play might be observed for research purposes. Participants then played all three games in a randomised order. Participants were informed, before each game, that they could quit at any time without penalty. In two of the three games (Mazzy and the Tower of Hanoi game), the game could also be ended through completion. For each game, participants completed the PENS, the IMI, and the STAI. Participants then filled out demographics.

6.1.8. Analysis

Data were analysed in a similar fashion to Experiment 1. A separate MANOVA was run for each game (Mazzy, the Tower of Hanoi game, and the math game) with audience role (Researcher or Professor) as the independent variable. The dependent variables were Time Played, Levels Completed (Mazzy), Completed (the Tower of Hanoi game), High Score (the math game), the PENS scale, the IMI, and the STAI. To detect significant differences between conditions, we used one-way MANOVA. Results are reported as significant when p < 0.05 (two-tailed).

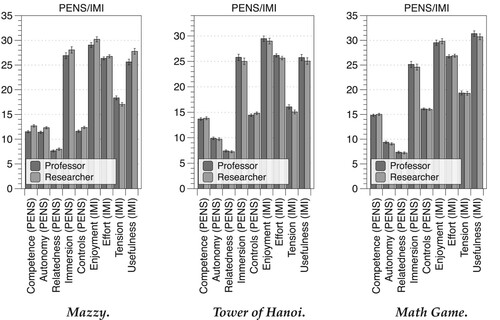

6.2. Results

6.2.1. Mazzy

The MANOVA was statistically significant across our two conditions in Mazzy, F(12, 830) = 1.78, p < .05; Wilks' , partial .

Participants in the researcher condition (M = 4.23) scored significantly higher on the dimension of competence than participants in the professor condition (M = 3.84), F(1, 841) = 12.98; p < .0005; partial . Participants in the researcher condition (M = 4.10) scored significantly higher on the dimension of autonomy than participants in the professor condition (M = 3.80), F(1, 841) = 8.16; p < .005; partial . Participants in the researcher condition (M = 4.13) scored significantly higher on the dimension of controls than participants in the professor condition (M = 3.87), F(1, 841) = 5.91; p < .05; partial . Participants in the researcher condition (M = 3.41) scored significantly lower on the dimension of pressure and tension than participants in the professor condition (M = 3.67), F(1, 841) = 5.86; p < .05; partial . Participants in the researcher condition (M = 3.97) scored significantly higher on the dimension of value and usefulness than participants in the professor condition (M = 3.66), F(1, 841) = 6.81; p < .01; partial . (See .) Supports H7 and H8.

Participants in the researcher condition (M = 39.84) scored significantly lower on state anxiety than participants in the professor condition (M = 42.34), F(1, 841) = 7.90; p < .01; partial . (See .) Supports H5.

6.2.2. Tower of Hanoi

The MANOVA was not statistically significant across our two conditions in Tower of Hanoi, F(12, 830) = 1.78, p > .05; Wilks' , partial .

6.2.3. Math game

The MANOVA was statistically significant across our two conditions in Math Game, F(12, 830) = 1.95, p < .05; Wilks' , partial .

Participants in the researcher condition (M = 30.22) had a significantly higher high score than participants in the professor condition (M = 26.68), F(1, 841) = 6.39; p < .05; partial . (See .) Supports H6.

Participants in the researcher condition (M = 343.50) played Math Game for a significantly longer period of time than participants in the professor condition (M = 267.59), F(1, 841) = 5.84; p < .05; partial . (See .) Supports H9.

6.2.4. Summary

Our results show partial support for each of our hypotheses (H5 – H9).

7. Experiment 3: anonymous vs. observed

The purpose of Experiment 3 was to test whether our findings from Experiment 1 would hold across three additional game genres: an action RPG, an infinite runner, and a platformer. These are popular genres, so including them helps us test the applicability of our research to a broader set of video games. The experiment was conducted in a similar fashion to Experiment 1.

All games were extensively customised for use in this experiment and to ensure consistency across games. For example, we added extensive data tracking affordances. We also simplified the flow of gameplay by removing the main menu, making the gameplay linear, and removing the player death limit.

7.1. Methods

7.1.1. Conditions

The two conditions were:

(1) Anonymous

(2) Observed

In the Anonymous condition, participants were shown the phrase ‘No researcher connected’ at the bottom left of the screen on a semi-transparent black bar. In the Observed condition, participants were instead shown the phrase ‘Researcher0X connected,’ where X was replaced by 1, 2, or 3 at random. From participants' perspectives, all other aspects of the experiment were identical. Condition assignment was done on a per-game basis, so a player might be observed anywhere from zero to three times.

To maintain realism, participants' actions were recorded in real-time. Keystrokes and mouse-clicks were recorded and transmitted to a server, regardless of which condition participants were assigned to for a particular game. However, one data field, anonymous, was set to 1 for participants assigned to the anonymous condition and to 0 for participants assigned to the observed condition. The server then filtered out data from anonymous-condition participants after it was received, so that the researcher assigned to the experiment could see only the data from observed-condition participants. The researcher monitored this data during the experiment.

7.1.2. Quantitative and qualitative measures

In addition to the measures used in Experiment 1 (the PENS scale, the IMI, time played), we tracked additional performance measures in this experiment. For the Action RPG, we tracked Character Level, Total Attacks (Melee/Ranged), Total Magic Uses (Spells/Abilities), Total Monsters Defeated, and Total Keyboard/Mouse Actions. For the 2D Platformer, we tracked Levels Completed, Total Score, Total Attacks (Melee/Ranged), Total Monsters Defeated, Total Player Deaths, and Total Keyboard/Mouse Actions. For the Infinite Runner, we tracked Playthroughs, Total Score, High Score, and Total Keyboard/Mouse Actions.

7.1.3. Participants

A total of 1358 participants were recruited through AMT. The data set consisted of 710 male and 648 female participants. Participants self-identified their races/ethnicities as white (78%), black or African American (10%), Chinese (3%), Asian Indian (2%), American Indian (1%), Filipino (1%), Korean (1%), Japanese (1%), and other (3%). Participants were between the ages of 19 and 78 (M = 36.3, SD = 10.5), and were all from the United States. Participants were reimbursed $3.00 USD to participate in this experiment.

7.1.4. Design

The condition was a between-subjects factor. Participants were randomly assigned, on a per-game basis, to one of the two conditions.

7.1.5. Protocol

Participants were first informed that their game play may be observed for research purposes. Participants then played all three games in a random order. Participants were informed, before each game, that they could quit at any time without penalty. In one of the three games (2D Platformer), the game could also be ended through completion. For each game, participants completed the PENS and the IMI. Participants then filled out demographics.

7.1.6. Analysis

Data were extracted and imported into the Statistical Package for the Social Sciences (SPSS) version 22 for analysis using multivariate analysis of variance (MANOVA). A separate MANOVA was run for each game (Action RPG, 2D Platformer, and Infinite Runner) with observation condition (Anonymous or Observed) as the independent variable. The dependent variables were Time Played, Performance Statistics, the PENS scale, and the IMI. To detect significant differences between conditions, we used one-way MANOVA. Results are reported as significant when p < 0.05 (two-tailed).

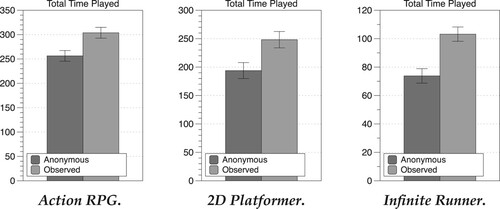

7.2. Results

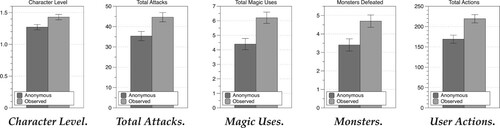

7.2.1. Action RPG

The MANOVA was statistically significant across our two conditions in the Action RPG, F(15, 1342) = 1.89, p < .05; Wilks' , partial .

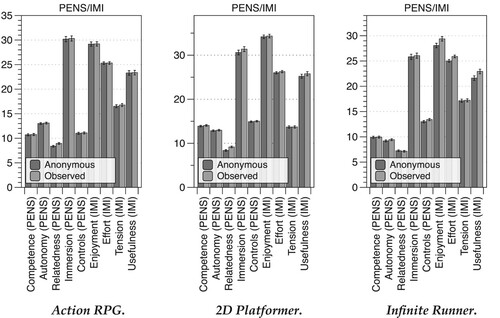

Participants in the observed condition (M = 303.76) played the Action RPG for a significantly longer period of time than participants in the anonymous condition (M = 256.36), F(1, 1356) = 9.22; p < 0.005; partial . (See .) Supports H13.

Participants in the observed condition (M = 1.43) had a significantly higher character level in the Action RPG than participants in the anonymous condition (M = 1.27), F(1, 1356) = 7.12; p < .01; partial . (See .) Supports H10.

Participants in the observed condition (M = 44.61) had a significantly higher number of total attacks in the Action RPG than participants in the anonymous condition (M = 35.33), F(1, 1356) = 8.32; p <.005; partial . (See .) Supports H10.

Participants in the observed condition (M = 6.21) had a significantly higher number of total magic uses in the Action RPG than participants in the anonymous condition (M = 4.40), F(1, 1356) = 10.90; p < .001; partial . (See .) Supports H10.

Participants in the observed condition (M = 4.70) had a significantly higher number of monsters defeated in the Action RPG than participants in the anonymous condition (M = 3.41), F(1, 1356) = 7.51; p < .01; partial . (See .) Supports H10.

Participants in the observed condition (M = 219.33) had a significantly higher number of total actions in the Action RPG than participants in the anonymous condition (M = 168.91), F(1, 1356) = 12.51; p < .0005; partial . (See .) Supports H10.

Participants in the observed condition (M = 2.98) scored significantly higher on relatedness than participants in the anonymous condition (M = 2.80), F(1, 1356) = 4.73; p < .05; partial . (See .) Supports H11.

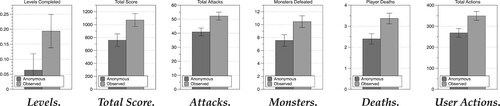

7.2.2. 2D platformer

The MANOVA was significant for the 2D Platformer, F(16, 1341) = 1.87, p < .05; Wilks' , partial .

Participants in the observed condition (M = 248.20) played the 2D Platformer for a significantly longer period of time than participants in the anonymous condition (M = 193.87), F(1, 1356) = 7.30; p < 0.01; partial . (See .) Supports H13.

Participants in the observed condition (M = 1070.27) had a significantly higher total score in the 2D Platformer than participants in the anonymous condition (M = 758.37), F(1, 1356) = 5.05; p < .05; partial . (See .) Supports H10.

Participants in the observed condition (M = 52.22) had a significantly higher number of total attacks in the 2D Platformer than participants in the anonymous condition (M = 40.91), F(1, 1356) = 8.26; p < .005; partial . (See .) Supports H10.

Participants in the observed condition (M = 10.46) had a significantly higher number of total monsters defeated in the 2D Platformer than participants in the anonymous condition (M = 7.54), F(1, 1356) = 5.24; p < .05; partial . (See .) Supports H10.

Participants in the observed condition (M = 3.37) had a significantly higher number of total player deaths in the 2D Platformer than participants in the anonymous condition (M = 2.40), F(1, 1356) = 7.61; p < .01; partial . (See .) Taken with the other results, this result neither supports nor refutes H10.

Participants in the observed condition (M = 349.08) had a significantly higher number of total actions in the 2D Platformer than participants in the anonymous condition (M = 268.27), F(1, 1356) = 7.46; p < .01; partial . (See .) Supports H10.

Participants in the observed condition (M = 3.06) scored significantly higher on relatedness than participants in the anonymous condition (M = 2.79), F(1, 1356) = 9.00; p < .005; partial . (See .) Supports H11.

7.2.3. Infinite runner

The MANOVA was significant for the Infinite Runner, F(14, 1343) = 2.40, p < .005; Wilks' λ = 0.976, partial .
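When a MANOVA compares exactly two groups, the F statistic is an exact transformation of Wilks' lambda: F = ((1 - λ) / λ) · (df_error / df_hypothesis). Inverting this relationship lets a reader cross-check a reported lambda against the reported F. The sketch below assumes this two-group identity; the function name is illustrative and is not taken from SPSS.

```python
def wilks_lambda_from_f(f_value, df_hypothesis, df_error):
    """Wilks' lambda implied by the exact F of a two-group MANOVA."""
    if f_value < 0 or df_hypothesis <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    return 1.0 / (1.0 + f_value * df_hypothesis / df_error)


# Cross-check against the Infinite Runner result, F(14, 1343) = 2.40.
runner_lambda = round(wilks_lambda_from_f(2.40, 14, 1343), 3)
```

For the Infinite Runner, the back-calculated value rounds to the reported 0.976, which suggests the reported F and lambda are mutually consistent.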

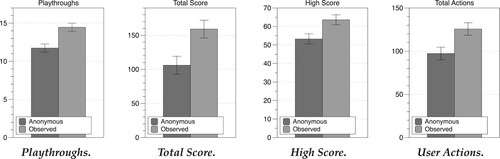

Participants in the observed condition (M = 103.18) played the Infinite Runner for a significantly longer period of time than participants in the anonymous condition (M = 73.81), F(1, 1356) = 16.80; p < .00005; partial . (See .) Supports H13.

Participants in the observed condition (M = 14.46) played the Infinite Runner a significantly higher number of times than participants in the anonymous condition (M = 11.73), F(1, 1356) = 13.01; p < 0.0005; partial . (See .) Supports H10.

Participants in the observed condition (M = 159.04) had a significantly higher total score in the Infinite Runner than participants in the anonymous condition (M = 105.97), F(1, 1356) = 8.20; p < 0.005; partial . (See .) Supports H10.

Participants in the observed condition (M = 63.56) had a significantly higher high score in the Infinite Runner than participants in the anonymous condition (M = 53.24), F(1, 1356) = 7.15; p < 0.01; partial . (See .) Supports H10.

Participants in the observed condition (M = 125.65) had a significantly higher number of total actions in the Infinite Runner than participants in the anonymous condition (M = 97.24), F(1, 1356) = 7.69; p < 0.01; partial . (See .) Supports H10.

Participants in the observed condition (M=4.20) scored significantly higher on interest/enjoyment than participants in the anonymous condition (M = 4.01), F(1, 1356) = 4.35; p < .05; partial . (See .) Supports H12.

Participants in the observed condition (M = 5.18) scored significantly higher on effort/importance than participants in the anonymous condition (M = 5.01), F(1, 1356) = 5.28; p < .05; partial . (See .) Supports H12.

Participants in the observed condition (M = 3.28) scored significantly higher on value/usefulness than participants in the anonymous condition (M = 3.09), F(1, 1356) = 4.04; p < .05; partial . (See .) Supports H12.

7.2.4. Summary and discussion

Our results show that observation increases performance (H10) and time spent (H13) across all three games. However, we found only partial support with respect to player experience (H11) and intrinsic motivation (H12). Overall, the results for Experiment 3 are consistent with Experiment 1, suggesting that our findings are in agreement across a wide variety of game genres.

8. Discussion

Overall, we find that remote observation significantly increases player performance (H1, H10) and time played (H4, H13), and it sometimes increases player experience (H2, H11) and intrinsic motivation (H3, H12). We also find that the type of observer (professor versus researcher) can influence state anxiety (H5), performance (H6), player experience (H7), intrinsic motivation (H8), and time played (H9). This has significant implications for game design. Our results suggest that purposefully integrating observation and surveillance can potentially improve a variety of player experience, motivation, and behavioural outcomes.

In Experiment 1, there is strong support that remote observation increases performance and time spent. This was consistent across all three games. Moreover, we find some support that participants observed by a researcher score higher on player experience and intrinsic motivation. In Experiment 2, we found that different types of observers affect the results. Those participants who were told they were being observed by a professor had at times lower performance, player experience, intrinsic motivation, and time spent, and higher state anxiety, than participants told they were observed by a researcher. Experiment 3 reinforced the results from Experiment 1 in three additional game genres.

Experiment 1 and Experiment 3 demonstrate that when participants are observed, they consistently play longer and perform better. One argument as to why this occurred is that participants simply felt compelled to play longer in order to ensure they received payment. However, it was specifically highlighted that participants could quit at any time without penalty, both at the beginning of the experiment and before each game. Given these clear indicators, and given that feedback responses also did not support the argument that players were worried about not being paid, this is likely not the case. Interestingly, in Experiment 1, we find that participants in the observed condition reported higher autonomy in the math game as compared to participants in the anonymous condition. This is counter-intuitive, since one might assume that surveillance decreases autonomy, but this could be because the observer was viewed as being autonomy-supportive (Deci, Nezlek, and Sheinman Citation1981) rather than controlling (Amabile Citation2018). Realistically, the observer in this scenario does not have control over user actions, and it may have been perceived as such. On the other hand, in Experiment 2, we found that in Mazzy participants being observed by a professor scored lower on autonomy compared to those observed by a researcher, so observer status can moderate whether the observer is perceived as autonomy-thwarting.

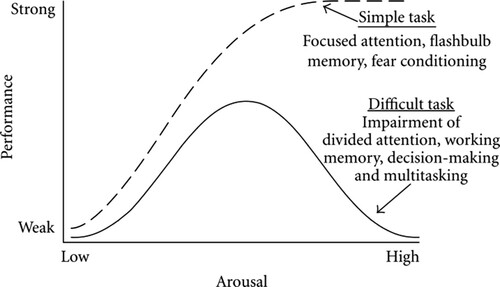

Although the additional arousal from being observed improved outcomes in Experiment 1, this actually worked against players when a professor was observing in Experiment 2. Drive theory, proposed by Zajonc (Citation1965, 1980), suggests that an individual has an optimal level of arousal for a given task. The reasoning is based on the Yerkes–Dodson law (1908), which is illustrated graphically as a bell-shaped curve. Performance increases then decreases with higher levels of arousal. (See .) One explanation is that the added arousal with a professor observing pushed participants past the optimal point for performance. However, such a conclusion also warrants further investigation. While participants felt more highly evaluated by a professor in Experiment 2, the participant assumptions that lead to feeling more evaluated are not clear. These different assumptions could range from believing that the professor has some special expertise in evaluating the task, to believing that if a professor is watching the experiment must be important. Therefore, better elucidating participant thought-processes (e.g. think-aloud) may be useful in future studies to understand participants' mental models of observers.

Figure 24. Yerkes–Dodson law (Yerkes and Dodson Citation1908; Diamond et al. Citation2007).
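The inverted-U relationship can be made concrete with a toy model. The Yerkes–Dodson law is qualitative, so the Gaussian shape, the function name, and the parameter values below are purely illustrative assumptions, chosen only to show how additional arousal can help below the optimum and hurt above it.

```python
from math import exp


def performance(arousal, optimum=0.5, width=0.2):
    """Toy inverted-U curve: performance peaks at `optimum` and falls off on both sides."""
    return exp(-((arousal - optimum) ** 2) / (2 * width ** 2))


# Hypothetical arousal levels for three situations.
unobserved = performance(0.3)       # below the optimum
researcher = performance(0.5)       # at the optimum
professor = performance(0.7)        # past the optimum
```

Under these assumed values, moving from the unobserved level to the researcher level raises performance, while the further increase to the professor level lowers it again, mirroring the explanation offered in the text.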

In demonstrating that different observer types (audience roles) can affect outcomes, Experiment 2 is important both for interpreting existing studies and for conducting future studies on social facilitation in games, as the experimenter(s) present during such studies can impact players in different ways. The effects of varying observer attributes (e.g. gender, status, age, clothing, race) have not been extensively studied in games. Future research could vary these attributes as well as the number of observers present.

Based on these findings, we believe there are several promising future directions for this work in HCI. These include investigating additional game genres (e.g. card games like Hearthstone); investigating additional observer types (e.g. novice players versus expert players); and investigating interactive platforms (e.g. Twitch, and how Twitch's interactive affordances such as chat, donations from viewers, emoticons, channel subscriptions, etc., affect players). For example, because remote observation influenced player experience and intrinsic motivation only in certain games, future research could more systematically study game genre as a moderator.

Bowman et al. (Citation2013) found that the presence of a physical audience positively influenced game performance. This effect was limited to games that were less challenging. In contrast, Emmerich and Masuch (Citation2018) found that the presence of observers did not influence game performance, regardless of game difficulty. The sometimes-conflicting results of social facilitation effects in games deserve further investigation. Games create distinct universes with their own rules and meanings. These universes are sometimes referred to as a ‘magic circle’ in which the reality of the world is temporarily suspended (Huizinga Citation2014; Salen and Zimmerman Citation2004; Adams Citation2014). Social facilitation researchers have argued that within this ‘pretend reality’ that exists inside of games, the social rules of the real world, including social facilitation, could be less effective (Emmerich and Masuch Citation2018). While we do not attempt to invalidate such an argument, our studies in this paper do provide evidence that social facilitation effects exist in digital games. Our study sought to understand, using a large-scale sample, whether social facilitation effects would occur with remote observation. We find that, across all six games used, there was a significant and positive effect of social facilitation, similar to Bowman et al. (Citation2013). However, it may be that certain untested variables, such as the degree of interaction between the observer and the performer, the number of observers present, and/or the characteristics of the observer, render the social facilitation effect stronger or weaker. For example, in Bowman et al. (Citation2013) the audience consisted of two observers in the room who were, ostensibly, other participants waiting their turn, whereas in Emmerich and Masuch (Citation2018) the audience consisted of a single observer who was one of the researchers.
Future research could examine how variables such as the specific social context (environment, game device, spatial positioning of observers relative to the player, observer dialog during gameplay, number of observers, status of observers) moderate social facilitation or lead to its absence. Another promising direction would be to analyse the neurophysiological effects of social facilitation (e.g. using fMRI). Additionally, developing more complex models of how contextual factors (e.g. game genre or modality such as virtual reality (Kao et al. Citation2020; Liu et al. Citation2020)) interact with social facilitation would be illuminating for further work in this area.

Although observation influenced outcomes in a positive direction in this study, we are not advocating that players be observed in games. Players should have the freedom to experience and learn, free from observation and evaluation. But with the rise of Twitch, e-sports, and digital monitoring more generally, this is an increasingly important area of research. Our findings describe some of the implications of observation for gameplay.

Foucault described Bentham's Panopticon as giving those contained within it a ‘state of conscious and permanent visibility’ (Foucault Citation1978). With the advent of technologies such as the Num8, which uses GPS and text messaging for parents to track the locations of their children (Johnson Citation2009), the penetration of surveillance technologies such as CCTV in the UK (more than 4.2 million cameras, or roughly one camera for every 14 people (McCahill and Norris Citation2002)), and the increasingly ubiquitous monitoring and data collection practices of software companies (Hope Citation2016), it can be said we already live in a digital Panopticon. Moreover, players often implicitly invite observation through video game streaming, competition, or social play. For example, the ESA estimates that 65% of video game players play with others (ESA Citation2020). Furthermore, with 64% of American adults playing video games (ESA Citation2020), it has become increasingly important to understand how observation influences players.

8.1. Limitations

Our effect sizes fall in the range of small to medium ($.01 < \eta_p^2 < .06$). We feel that these findings are nonetheless important and impactful for a few reasons. First, given the large number of gamers, even small effect sizes can have wide-ranging consequences. Second, the effects here are likely underestimated given that AMT is a relatively anonymous space (personal and identifying details are not shared), making reputational concerns less of a factor. Finally, observer salience was minimal in our studies (we used text, but observers could also be represented using icons, an avatar, a live-video feed, etc.), as was interaction.
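
To make the small-to-medium range concrete, the sketch below converts an F statistic into an eta-squared-type effect size and labels it using Cohen's conventional benchmarks (.01 small, .06 medium, .14 large). It assumes the effect size in question is partial eta squared, and it uses the standard identity relating partial eta squared to F and its degrees of freedom. The F values and degrees of freedom are hypothetical and are not results from our experiments. The function names are also illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohen_label(eta_sq: float) -> str:
    """Label an effect size using Cohen's conventional benchmarks."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"


# Hypothetical (F, df_effect, df_error) triples, for illustration only.
examples = [(20.0, 1, 1487), (100.0, 1, 1487)]

for f_value, df_effect, df_error in examples:
    eta_sq = partial_eta_squared(f_value, df_effect, df_error)
    print(f"F({df_effect}, {df_error}) = {f_value:.1f} -> "
          f"eta_p^2 = {eta_sq:.3f} ({cohen_label(eta_sq)})")
```

With a large error term, even a sizeable F statistic corresponds to a modest proportion of explained variance. This is why significant effects in large samples can still fall in the small-to-medium range.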

On Twitch, for example, players are not only being observed and evaluated, but there is also a heavy interactive communication component. Twitch viewers can cheer, condemn, or make comments. Future studies could begin to look at interactivity in a controlled manner. For example, a spectator represented by an avatar could be used to provide reactions to the gameplay (reactions could be manually triggered by the observer or automatically through gameplay events).

One limitation of the current study is that all six of the games studied were single-player. This is in contrast to games embedded in social contexts, e.g. League of Legends, World of Warcraft, Candy Crush Saga. Moreover, the games selected in Experiment 1 were mainly educational in nature, involving programming, puzzle, and math problem game mechanics. For this reason, Experiment 3 aimed to broaden the game genres tested to an infinite runner, a platformer game, and an action RPG. Nevertheless, across all six games, there are many mechanics (especially those that are social in nature and might involve collaborating with or competing against others) that are not present. Additionally, typical observers in games are not researchers, but other players. Therefore, our work here is limited in both the scope of gaming context and the type of observer. It would be important for future studies to investigate the influence of typical social elements in games (e.g. collaborating on a mission, spectating a player while waiting to be revived) across a wider variety of gaming contexts. This would further illuminate the influence of social gaming context and observer identity on social facilitation effects. Although our current studies were limited to single-player games, and a researcher as observer, our main goal in these studies was to understand the applicability of social facilitation to digital games.

Finally, it would also be worthwhile to study these effects in a longitudinal context. If participants' gameplay is observed over the course of weeks, months, or years, what types of effects will we see on their game experience? Will there ultimately be benefits, or will the effects become increasingly detrimental? What if observation is administered only occasionally? These questions require long-term studies to answer.

9. Conclusion

In Experiment 1 and Experiment 3, there is strong support for the hypothesis that observation increases performance and time spent. This was consistent across six different games. Moreover, there is some support for the hypothesis that observation increases player experience and intrinsic motivation. In Experiment 2, there is support for the hypothesis that different types of observers affect the results. Participants who were told they were being observed by a professor had, at times, lower performance, player experience, intrinsic motivation, and time spent, and higher state anxiety, than participants who were told they were being observed by a researcher.

Our studies demonstrate that observation affects players of video games. We provide a contribution to research on video games and observers. This is an increasingly important domain of research, with the rise of technologies like Twitch, e-sports, free-to-play games (which rely on player surveillance to inform monetisation strategies and overall game design), and digital observation more generally. Our findings contribute to the ongoing discussion on the applicability of social facilitation to games, and are valuable for game designers, researchers, and platforms, such as Steam and Twitch, in understanding user behaviour and improving the player experience. Here, we've contributed several large-scale studies on observation in the context of games.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 Gameplay video: http://youtu.be/n2rR1CtVal8

References

- Adams, E. 2014. Fundamentals of Game Design. Pearson Education. https://books.google.com/books?hl=en&lr=&id=L6pKAgAAQBAJ&oi=fnd&pg=PR6&dq=adams+2014+fundamentals+of+game+design&ots=1DHnQEX-Tm&sig=9K9Umu-1JU72c8dJM5r2B5FEwF4#v=onepage&q=adams%202014%20fundamentals%20of%20game%20design&f=false

- Aiello, J. R., and C. M. Svec. 1993. “Computer Monitoring of Work Performance: Extending the Social Facilitation Framework to Electronic Presence.” Journal of Applied Social Psychology 23 (7): 537–548.

- Allport, F. H. 1920. “The Influence of the Group Upon Association and Thought.” Journal of Experimental Psychology 3 (3): 159–182.

- Amabile, T. M. 2018. Creativity in Context: Update to the Social Psychology of Creativity. Milton Park: Routledge.

- American Management Association. 2008. “2007 Electronic Monitoring & Surveillance Survey.” Technical Report. http://press.amanet.org/press-releases/177/2007-electronic-monitoring-surveillance-survey.

- Baumeister, R. 1984. “Choking Under Pressure: Self Consciousness and Paradoxical Effects of Incentives on Skilful Performance.” Journal of Personality and Social Psychology 46 (3): 610–620. doi:10.1037/0022-3514.46.3.610.

- Baumeister, R. F., and D. G. Hutton. 1987. “Self-Presentation Theory: Self-Construction and Audience Pleasing.” In Theories of Group Behavior. doi:10.1007/978-1-4612-4634-3_4.

- Baumeister, R. F., and M. R. Leary. 1995. “The Need to Belong: Desire for Interpersonal Attachments As a Fundamental Human Motivation.” Psychological Bulletin 117 (3): 497–529.

- Bond, C. F. 1982. “Social Facilitation: A Self-Presentational View.” Journal of Personality and Social Psychology 42 (6): 1042–1050. doi:10.1037/0022-3514.42.6.1042.

- Bond, C. F., and L. J. Titus. 1983. “Social Facilitation: A Meta-Analysis of 241 Studies.” Psychological Bulletin 94 (2): 265–292. doi:10.1037/0033-2909.94.2.265.

- Bowman, N. D., R. Weber, R. Tamborini, and J. Sherry. 2013. “Facilitating Game Play: How Others Affect Performance At and Enjoyment of Video Games.” Media Psychology 16 (1): 39–64. doi:10.1080/15213269.2012.742360.

- Cairns, P., A. Cox, and A. Imran Nordin. 2014. “Immersion in Digital Games: Review of Gaming Experience Research.” In Handbook of Digital Games. doi:10.1002/9781118796443.ch12.

- Chirkov, V., R. M. Ryan, Y. Kim, and U. Kaplan. 2003. “Differentiating Autonomy From Individualism and Independence: A Self-Determination Theory Perspective on Internalization of Cultural Orientations and Well-Being.” Journal of Personality and Social Psychology 84 (1): 97–110.

- Club Penguin. 2009. “Factual Information Spy Handbook.” https://clubpenguin.fandom.com/wiki/The_F.I.S.H.

- Collister, L. B. 2014. “Surveillance and Community: Language Policing and Empowerment in a World of Warcraft Guild.” Surveillance and Society 12 (3): 337–348. doi:10.24908/ss.v12i3.4956.

- Cottrell, N. B., D. L. Wack, G. J. Sekerak, and R. H. Rittle. 1968. “Social Facilitation of Dominant Responses by the Presence of An Audience and the Mere Presence of Others.” Journal of Personality and Social Psychology 9 (3): 245–250.

- Crowne, D. P., and S. Liverant. 1963. “Conformity Under Varying Conditions of Personal Commitment.” The Journal of Abnormal and Social Psychology 66 (6): 547–555.

- Deci, E. L., J. Nezlek, and L. Sheinman. 1981. “Characteristics of the Rewarder and Intrinsic Motivation of the Rewardee.” Journal of Personality and Social Psychology 40 (1): 1–10.

- Deci, E. L., and R. M. Ryan. 2012. Motivation, Personality, and Development Within Embedded Social Contexts: An Overview of Self-Determination Theory. Oxford University Press.

- Deterding, S. 2015. “The Lens of Intrinsic Skill Atoms: A Method for Gameful Design.” Human-Computer Interaction 30 (3–4): 294–335. doi:10.1080/07370024.2014.993471.

- Diamond, D. M., A. M. Campbell, C. R. Park, J. Halonen, and P. R. Zoladz. 2007. “The Temporal Dynamics Model of Emotional Memory Processing: A Synthesis on the Neurobiological Basis of Stress-Induced Amnesia, Flashbulb and Traumatic Memories, and the Yerkes-Dodson Law.” Neural Plasticity 2007: 60803.

- Emmerich, K., and M. Masuch. 2018. “Watch Me Play: Does Social Facilitation Apply to Digital Games?” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems – CHI'18, 1–12. doi:10.1145/3173574.3173674.

- ESA. 2020. “2020 Essential Facts About the Video Game Industry.” ESA Report 2020.

- Foucault, M. 1978. “Discipline and Punish: The Birth of the Prison.” Contemporary Sociology 7 (5): 566. doi:10.2307/2065008.

- Gajadhar, B. J., Y. A. De Kort, and W. A. IJsselsteijn. 2008. “Influence of Social Setting on Player Experience of Digital Games.” In Proceedings Conference on Human Factors in Computing Systems. doi:10.1145/1358628.1358814.

- Hall, B., and D. D. Henningsen. 2008. “Social Facilitation and Human–Computer Interaction.” Computers in Human Behavior 24 (6): 2965–2971.

- Harlow, H. F., and H. C. Yudin. 1933. “Social Facilitation of Feeding in the Monkey and Its Relation to Attitudes of Ascendance and Submission.” Journal of Comparative Psychology 16 (2): 171–185. doi:10.1037/h0071690.

- Henchy, T., and D. C. Glass. 1968. “Evaluation Apprehension and The Social Facilitation of Dominant and Subordinate Responses.” Journal of Personality and Social Psychology 10 (4): 446–454.

- Hope, A. 2016. “Surveillance Futures.” http://www.tandfebooks.com/isbn/9781315611402.

- Hope, A. 2018. “Creep: The Growing Surveillance of Students' Online Activities.” Education and Society 36 (1): 55–72.