ABSTRACT

We explored the critical factors associated with iLearning that affect students’ learning performance and identified the most influential of these factors to help managers in higher education institutions increase the effectiveness of iLearning for students. We initially synthesised 4 main dimensions (comprising 26 criteria): performance expectancy, lecturers’ influence, quality of service, and personal innovativeness. We then conducted surveys in two stages. First, by surveying a group of students with experience using iLearning at Taiwanese universities, we extracted 5 critical dimensions (comprising 18 criteria) through factor analysis. Second, by surveying a group of senior educators and practitioners in Taiwan, we prioritised the dimensions and criteria using the analytic hierarchy process (AHP). We found that performance expectancy is the most critical dimension, and the top five critical criteria are enhancing learning performance, increasing learning participation, altering learning habits, ensuring access at all times, and enabling prompt use of learning resources. Finally, we offer several recommendations for the relevant managers to enhance students’ iLearning performance.

1. Introduction

Technological advancement has significantly changed the way in which students learn and has challenged traditional pedagogy in higher education (Rossing et al. Citation2012; Thongsri, Shen, and Bao Citation2019). In the past, students had to attend face-to-face classes or read books to learn (Summers, Waigandt, and Whittaker Citation2005). In recent years, however, a growing number of alternative ways to learn have emerged, for example using Facebook, iTunes, and podcasts, or registering for courses offered by online course vendors such as Coursera and Udemy. Students can also learn using portable devices such as mobile phones and laptops (i.e. mLearning; e.g. Al-Emran, Arpaci, and Salloum Citation2020; Irwin et al. Citation2012; McGarr Citation2009; McKinney, Dyck, and Luber Citation2009). Many studies have found that mLearning is a key mode of learning and teaching at universities and that it contributes to students’ learning motivation and performance (e.g. Crompton, Burke, and Gregory Citation2017; Major, Haßler, and Hennessy Citation2017; Miller and Cuevas Citation2017). However, mLearning has been criticised on several grounds. For example, it creates a sense of isolation for students and a sense of being out of the loop for both teachers and students (e.g. Rahman Citation2020), and it lacks systems to monitor learning processes and performance in real time (e.g. Corbeil and Valdes-Corbeil Citation2007). Against this background, intelligent learning (iLearning) has emerged as another mainstream mode of learning and teaching in higher education because it addresses many of the drawbacks of mLearning and provides additional benefits for learning and teaching.

iLearning refers to intelligent learning and teaching enabled by a smart campus and its related applications, such as interactive learning systems, smart classrooms, synchronous distance teaching and learning, virtual learning communities, gaming competitions, and collaborative learning across classrooms (e.g. Aini, Rahardja, and Hariguna Citation2019; Aini, Dhaniarti, and Khoirunisa Citation2019; Abbasy and Quesada Citation2017; Araya et al. Citation2016; AjazMoharkan et al. Citation2017; Marquez et al. Citation2016; Muhamad et al. Citation2017). In the iLearning environment, instructors/lecturers and students participate in the learning process in real time. Although recent studies have indicated that iLearning may contribute to students’ learning performance (e.g. Nik-Mohammadi and Barekat Citation2015; Yarmatov and Ahmedova Citation2020), it remains unclear which factors associated with iLearning platforms influence students’ learning performance and which of them university managers must consider to enhance the effectiveness of iLearning for students. Addressing these gaps in the literature is crucial for universities to improve the overall quality of education and, in turn, their long-term competitive advantage. Because the resources of any organisation are limited, universities need to invest them in the factors that have the greatest impact on students’ learning performance when adopting iLearning.

Considering these aspects, we aimed to explore the critical factors associated with iLearning platforms that may influence students’ learning performance and to identify the factors with the most notable influence. First, based on an extensive literature review, we extracted the factors pertaining to iLearning platforms that have been found to influence students’ learning performance. Using these factors, we conducted a student survey to identify the key factors for iLearning, and we then prioritised these factors through an expert survey of other stakeholders (academic staff and practitioners from different industries and the government) to identify the factors that most strongly influence students’ learning performance. Our contribution is twofold. We present novel insights regarding iLearning by specifying the elements of iLearning platforms that affect students’ learning performance, and we contribute to the higher education literature by demonstrating an efficient way to increase the effectiveness of iLearning for students. Specifically, although iLearning has gained importance in recent years, few studies have attempted to explore the critical elements (e.g. dimensions and criteria) that may influence students’ iLearning performance. We believe that by focusing on these key elements, managers of higher education institutions can optimise students’ iLearning performance efficiently and effectively.

2. Key factors derived from iLearning platforms that influence iLearning for students

Learning is a personal act, and learning performance may be affected by the conditions of the learning environment (Milošević et al. Citation2015). Technological advancements alone may not ensure a student’s learning performance because specific strategies, tools, and resources are necessary to support different types of learning (Milošević et al. Citation2015). Venkatesh and collaborators (Citation2003) introduced four dimensions that may influence individuals’ use of new technology: performance expectancy, effort expectancy, social influence, and facilitating conditions. These dimensions have been tested in existing studies (e.g. Wang et al. Citation2020; Wang, Wu, and Wang Citation2009), and several researchers have demonstrated that performance expectancy deserves particular emphasis relative to effort expectancy (e.g. Oh, Lehto, and Park Citation2009). Additionally, the use of new technology may be affected not only by how effectively and efficiently individuals receive support but also by the overall service experience that the technology delivers (Choi et al. Citation2020). Moreover, recent studies have highlighted that individual differences such as personal innovativeness may influence the effective use of new technology (e.g. Patil et al. Citation2020). Considering these aspects, we adopted performance expectancy, lecturers’ influence, quality of service, and personal innovativeness as the measurement dimensions in our research. Notably, given the nature of our study, rather than examining social influence in general, we focused specifically on lecturers’ influence, since lecturers frequently interact with students during the iLearning journey and are therefore more likely to influence students through teacher–student interactions.

‘Performance expectancy’ concerns the degree to which individuals believe that adopting an information platform/system will help them function effectively in a given environment or achieve a given outcome (Venkatesh et al. Citation2003). When individuals perceive that using a specific information platform/system will help them achieve the desired outcome, they may be more likely to use it (Do Nam Hung, Azam, and Khatibi Citation2019). Empirical studies have supported this observation by demonstrating that enhancing individuals’ performance expectancy may significantly alter their attitude toward a given information platform/system as well as their behavioural intention (e.g. Lowenthal Citation2010; Purwanto and Loisa Citation2020). Similar findings have been obtained in learning-related studies. In an iLearning context, performance expectancy may be viewed as students’ perception of whether using a specific learning platform/system (e.g. an iLearning platform) will benefit their learning performance. Wang, Wu, and Wang (Citation2009) and Chaka and Govender (Citation2017) found that, for learning in a digital and intelligent manner, performance expectancy was a strong determinant motivating students to adopt this mode of learning. Recent studies have indicated that intelligent and digital learning may enrich students’ learning performance by providing new learning experiences, increasing learning participation (e.g. Chen Citation2011; Yarmatov and Ahmedova Citation2020), and facilitating collaborative learning (e.g. Hirsch and Ng Citation2011; Abbasy and Quesada Citation2017) in an integrated environment. Additionally, iLearning may help students strengthen their learning efficiency, enhance their learning performance (e.g. Abu-Al-Aish and Love Citation2013; Wang, Wu, and Wang Citation2009), and extend their learning across different domains (e.g. Karimi Citation2016). Therefore, these elements may plausibly help enhance students’ iLearning performance expectancy.

‘Lecturers’ influence’ originates from social influence (Abu-Al-Aish and Love Citation2013). Social influence concerns the degree to which individuals perceive others’ expectations regarding the use of an information platform/system (Sung et al. Citation2015; Venkatesh et al. Citation2003). In other words, if individuals strongly perceive that others expect them to use a specific information platform/system, they are more likely to be motivated to use it (Alshurideh et al. Citation2020; Liu and Li Citation2010; Lu and Viehland Citation2008; Nassuora Citation2012; Venkatesh and Morris Citation2000). Existing studies have revealed that social influence, like performance expectancy, is a key element that can significantly influence individuals’ behavioural intention to adopt new technology (e.g. Alshurideh et al. Citation2020; Venkatesh and Davis Citation2000; Venkatesh, Morris, and Ackerman Citation2000). The concept of social influence has been widely extended to the education and learning literature (e.g. Chen Citation2011; Fagan Citation2019; Iqbal and Qureshi Citation2012; Sabah Citation2016; Vrana Citation2018).

In a learning context, ‘lecturers’ influence’ is defined as the degree to which instructors/lecturers encourage, support, and motivate students to adopt a specific learning platform/system (Abu-Al-Aish and Love Citation2013). Existing studies have reported findings that support this definition (e.g. Badwelan, Drew, and Bahaddad Citation2016; Izkair, Lakulu, and Mussa Citation2020; Milošević et al. Citation2015). For example, in a study at a Chinese university, Hao, Dennen, and Mei (Citation2017) found that lecturers’ influence may motivate students to use a new learning platform for digital learning. Similarly, Nassuora (Citation2012) indicated that lecturers’ positive word of mouth may enhance students’ willingness to learn through a digital learning platform. Other studies have claimed that to effectively motivate and support students in adopting a digital learning platform (e.g. an iLearning platform), instructors/lecturers may shift their teaching from a traditional in-person method to one that is both intelligent and digital. This shift provides students with higher learning quality through the digital learning platform, enables them to experience mobile learning in which they can learn at any location, and increases real-time discussions between instructors and their students via the platform (e.g. Abu-Al-Aish and Love Citation2013; Badwelan, Drew, and Bahaddad Citation2016; Chen Citation2011; Milošević et al. Citation2015; Iqbal and Qureshi Citation2012; Sabah Citation2016; Vrana Citation2018). Specifically, iLearning platforms allow a teacher to teach in real time, giving students opportunities to interact and hold discussions with the teacher face to face (e.g. Kose and Deperlioglu Citation2012). Such frameworks also allow the teacher to show students how to use the platform to learn more promptly and effectively. Therefore, these elements may enhance lecturers’ influence on students’ iLearning.

The ‘quality of service’ in the information system and human–computer interaction fields is not the same as that in service industry management (Ameen et al. Citation2019; Kuan, Bock, and Vathanophas Citation2008). The former type concerns individuals’ assessment of the reliability and response, content quality, and security of an information platform/system, and the latter type refers to the expectation and satisfaction of customers with regard to the services being provided (Abu-Al-Aish and Love Citation2013; Celesti et al. Citation2019; Rai, Lang, and Welker Citation2002; Venkatesh et al. Citation2003). Existing studies have found that when a service is provided in a satisfactory manner, individuals are more likely to adopt new technology (Abu-Al-Aish and Love Citation2013); however, a low quality of service (e.g. inferior facilitating conditions) may impede the adoption of new technology (Lim and Khine Citation2006). In a learning context, Lee showed that students’ perception of the quality of service of a digital learning platform may significantly affect their willingness to use the platform for learning. Similarly, Abu-Al-Aish and Love (Citation2013) indicated that in a digital learning environment, the quality of service plays a crucial role in predicting the students’ acceptance and adoption of the learning platform in the environment.

To enhance the quality of service of iLearning platforms, recent studies have suggested that instructors should provide ample learning resources (Shukla Citation2021; Swanson Citation2020), enhance their teaching quality (Zakariaa, Maatb, and Khalidc Citation2019), promote a pleasant intelligent campus experience (Lin and Su Citation2020; Wu, Wu, and Su Citation2019), and improve traditional learning methods for students (Ehsanpur and Razavi Citation2020). Moreover, researchers have suggested that learning platform providers should not only ensure the students’ privacy and safety but also assist them in using learning resources efficiently and in logging onto the platform at any time. Additionally, it has been noted that encouraging students to learn via cloud computing technology, increasing the efficiency with which messages are transferred between instructors/lecturers and students, enhancing the operational stability of the learning platform, increasing the number of campus receivers, and making the platform simple and easy to use are critical to advance the quality of service of the learning platform (e.g. Abu-Al-Aish and Love Citation2013; Badwelan, Drew, and Bahaddad Citation2016; Hassanzadeh, Kanaani, and Elahi Citation2012; Kim and Ong Citation2005; Kim et al. Citation2012; Lee Citation2006; Liu, Han, and Li Citation2010; Milošević et al. Citation2015; Ng et al. Citation2010; Mohammadi Citation2015; Salloum et al. Citation2019). These findings may be viewed as elements that may enhance the quality of service of iLearning for students.

‘Personal innovativeness’ concerns the degree to which individuals are willing to try and use a new information platform/system (Agarwal and Prasad Citation1998; Rogers Citation2003). Compared to their counterparts, individuals with a high degree of personal innovativeness are more likely to adopt and deal with the uncertainty associated with a new information platform/system (Abu-Al-Aish and Love Citation2013; Lu, Yao, and Yu Citation2005). Existing studies have reported supportive evidence that indicates that personal innovativeness can motivate an individual to use new technology (e.g. Agarwal and Prasad Citation1998; Agarwal and Karahanna Citation2000; Fang, Shao, and Lan Citation2009; Hung and Chang Citation2005; Lassar, Manolis, and Lassar Citation2005; Lewis, Agarwal, and Sambamurthy Citation2003; Lu, Yao, and Yu Citation2005; Lian and Lin Citation2008; Matute-Vallejo and Melero-Polo Citation2019; Yi et al. Citation2006). Although personal innovativeness has been widely investigated in different fields, such as online shopping and blogs (e.g. Bhagat and Sambargi Citation2019; Bigne-Alcaniz et al. Citation2008; Wang, Chou, and Chang Citation2010), it is particularly crucial in a digital and intelligent learning context (Abu-Al-Aish and Love Citation2013; Agarwal and Prasad Citation1998; Badwelan, Drew, and Bahaddad Citation2016; Handoko Citation2019; Joo, Lee, and Ham Citation2014; Fagan, Kilmon, and Pandey Citation2012; Liu, Li, and Carlsson Citation2010; Milošević et al. Citation2015; Shorfuzzaman et al. Citation2019; Salloum et al. Citation2019).

For example, Fagan, Kilmon, and Pandey (Citation2012) discovered that students with a high level of personal innovativeness are more willing to learn using virtual reality simulations. Abu-Al-Aish and Love (Citation2013) and Karimi (Citation2016) indicated that personal innovativeness is crucial in any learning environment involving information technology. To effectively support students’ personal innovativeness, existing studies have highlighted the importance of altering students’ learning habits, strengthening their willingness to try new approaches, and enhancing their self-efficacy in learning with a new learning platform (e.g. Abu-Al-Aish and Love Citation2013; Badwelan, Drew, and Bahaddad Citation2016; Joo, Lee, and Ham Citation2014; Karimi Citation2016; Milošević et al. Citation2015; Shorfuzzaman et al. Citation2019; Salloum et al. Citation2019). These suggestions may be regarded as elements that can enhance students’ personal innovativeness with regard to iLearning.

In conclusion, through a literature analysis summarising the most frequently cited and commonly used factors in the relevant studies, we propose four dimensions with 26 criteria (i.e. elements that may help enhance the dimensions) for iLearning. Table 1 summarises the proposed dimensions and the sources of the proposed criteria.

Table 1. Dimensions and criteria that influence iLearning of students.

3. Research methods

3.1. Research procedure and strategy

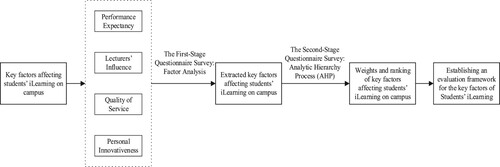

The research procedure of this study is presented in Figure 1. We aimed to explore the critical factors that may affect students’ iLearning on a university campus and to identify the factors with a more notable influence by calculating the relative importance of the critical dimensions and criteria (i.e. factors). To develop an iLearning research framework, we searched for articles for an extensive literature review using keywords such as mLearning, eLearning, iLearning, acceptance and use of new technology, and teaching and learning in a higher education context. The reviewed studies are summarised in Table 1. Based on this review, we developed an iLearning research framework encompassing four critical dimensions with 26 critical criteria, which were used to develop a questionnaire. The study was performed in two stages. In the first stage, the researchers distributed questionnaires in person, through convenience sampling, to respondents (i.e. students who had used iLearning on a university campus). The survey took approximately one month to complete. Subsequently, we performed factor analysis (FA) to extract the critical factors, which were used to construct the hierarchical evaluation structure of the second-stage questionnaire. In the second stage, the analytic hierarchy process (AHP) was adopted to obtain a consensus based on domain expert opinions. Using judgment sampling, the researchers personally consulted experts from three sectors (i.e. industry, government, and academia). Next, we analysed the relative importance of each critical dimension and criterion and prioritised them. Based on the results, we formulated managerial and educational recommendations, and we expect the findings to serve as a useful reference for university managers seeking to enhance the effectiveness of iLearning for students. In the following sections, we briefly introduce the data analysis methods (FA and AHP) used in this study.

3.2. FA

FA is a multivariate technique used to address interdependence among variables. Factor loadings are used to extract the main factors that explain the maximum variance across all variables. The main purpose of FA is to reduce the number of variables without losing the representativeness of the original data, by identifying latent dimensions that may not be directly apparent from the raw data. However, FA results can be contested: different interpretations may be derived from the same factors, so naming or renaming factors requires specialised knowledge on the part of the researchers (Navlani Citation2019). In the first stage, this study used principal component analysis with varimax rotation for factor extraction; because varimax is an orthogonal rotation, the extracted factors remain uncorrelated, which satisfies the requirement of independence among the elements of the hierarchical structure in the second-stage AHP analysis. Based on these results, we examined the reliability of each extracted dimension based on its criteria.
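To make the extraction step concrete, the sketch below computes unrotated principal-component loadings from the item correlation matrix and then applies Kaiser's varimax criterion using the widely used SVD-based iteration. It is an illustrative sketch only, not the software used in the study; the function names, the simulated responses, the six items, and the two latent factors are all hypothetical. Because the rotation matrix is orthogonal, each item's communality (the row sum of squared loadings) is unchanged by rotation.

```python
import numpy as np

def pca_loadings(X, n_factors):
    """Unrotated principal-component loadings from the correlation matrix of X (rows = respondents)."""
    R = np.corrcoef(X, rowvar=False)            # item-by-item correlation matrix
    eigvals, eigvecs = np.linalg.eigh(R)         # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_factors]
    # loading = eigenvector entry * sqrt(eigenvalue)
    return eigvecs[:, order] * np.sqrt(eigvals[order]), eigvals[order]

def varimax(L, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (SVD-based iteration); returns rotated loadings and rotation matrix."""
    p, k = L.shape
    Rot = np.eye(k)
    d_old = 0.0
    for _ in range(max_iter):
        LR = L @ Rot
        # gradient of the varimax criterion with respect to the rotation
        B = L.T @ (LR**3 - LR @ np.diag(np.sum(LR**2, axis=0)) / p)
        U, s, Vt = np.linalg.svd(B)
        Rot = U @ Vt                             # closest orthogonal matrix to B
        d_new = s.sum()
        if d_old != 0 and d_new / d_old < 1 + tol:
            break
        d_old = d_new
    return L @ Rot, Rot

# Hypothetical data: 200 respondents, 6 items driven by 2 latent factors plus noise
rng = np.random.default_rng(0)
f = rng.normal(size=(200, 2))
W = np.array([[0.8, 0], [0.7, 0], [0.75, 0], [0, 0.8], [0, 0.7], [0, 0.75]])
X = f @ W.T + 0.5 * rng.normal(size=(200, 6))

loadings, eigvals = pca_loadings(X, 2)
rotated, Rot = varimax(loadings)
print(np.round(rotated, 2))
print("share of total variance explained:", round(eigvals.sum() / X.shape[1], 4))
```

The last line is the analogue of the 64.65% of total variance reported in Section 4.2: with a correlation matrix, the total variance equals the number of items.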

3.3. AHP

The AHP, first proposed by Saaty in 1971, is a systematic, hierarchical analysis tool that combines qualitative and quantitative methods (Saaty Citation1980). It can practically and effectively solve complex decision-making problems by dividing them into small components. These components are organised in a tree-like hierarchy, and expert opinions are collected to evaluate the relative importance of each component (i.e. dimension/criterion) on a scale from 1 to 9. Specifically, pairwise comparisons are performed between the evaluation factors, and comparison matrices are then established to reflect the decision makers’ subjective preferences. The principal eigenvalue and its eigenvector are calculated for each matrix; the normalised eigenvector gives the relative weights of the criteria, representing the priority of each component (i.e. dimension/criterion) at each level. These weights can provide decision makers with sufficient decision information, help define the relevant criteria and their weights, and reduce the risk of decision errors.
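The eigenvector step can be sketched in a few lines of NumPy. The function `ahp_weights` and the 3×3 judgment matrix below are hypothetical illustrations (entries on Saaty's 1 to 9 scale, with reciprocals below the diagonal), not the study's data or software.

```python
import numpy as np

def ahp_weights(A):
    """Return the AHP priority vector and the principal eigenvalue of a
    positive reciprocal pairwise comparison matrix A."""
    A = np.asarray(A, dtype=float)
    eigvals, eigvecs = np.linalg.eig(A)
    i = np.argmax(eigvals.real)              # index of the principal (Perron) eigenvalue
    w = np.abs(eigvecs[:, i].real)           # principal eigenvector, sign made positive
    return w / w.sum(), eigvals[i].real      # normalise weights to sum to 1

# Hypothetical judgments: criterion 1 is moderately preferred to criterion 2 (3)
# and strongly preferred to criterion 3 (5); criterion 2 is slightly preferred to 3 (2)
A = [[1,   3,   5],
     [1/3, 1,   2],
     [1/5, 1/2, 1]]
weights, lam_max = ahp_weights(A)
print("weights:", np.round(weights, 3), "lambda_max:", round(lam_max, 4))
```

For a perfectly consistent matrix (every entry equal to w_i / w_j) the principal eigenvalue equals the matrix order n and the eigenvector recovers w exactly; departures of λ_max from n are what the consistency check measures.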

In summary, the AHP helps decompose complex problems into hierarchies, thereby facilitating pairwise comparison of factors, and it is a useful tool for addressing ambiguity and complexity in decision problems (Ramanathan Citation2001). Existing studies have highlighted several advantages of the AHP. For example, Macharis and collaborators (Citation2004) proposed that the AHP allows a decision problem to be decomposed into its constituent parts, enabling hierarchies of dimensions and criteria to be developed and thereby clarifying the importance of each dimension and criterion. Zahir (Citation1999) claimed that the AHP enables group decision-making via group consensus. Millet and Wedley (Citation2002) indicated that the AHP is beneficial in uncertain or risky situations because it allows researchers or practitioners to develop scales in cases in which ordinary evaluations cannot be applied. Another advantage of the AHP is that a large sample size is not required to achieve statistically robust results; because the AHP is based on the judgments of field/specialised experts, in several cases the results derived from even a single qualified expert may be sufficiently representative (e.g. Darko et al. Citation2019; Tavares, Tavares, and Parry-Jones Citation2008). The sample size in many AHP studies ranges from 4 to 9 (e.g. Pan, Dainty, and Gibb Citation2012; Akadiri, Olomolaiye, and Chinyio Citation2013; Chou, Pham, and Wang Citation2013), although a few studies have used samples larger than 30 (e.g. Ali and Al Nsairat Citation2009; El-Sayegh Citation2009). In other words, sample selection matters more than sample size in an AHP study, and this common practice supports the appropriateness of the sample size of this study. However, one of the most criticised aspects of the AHP is that it does not consider the interrelationships between dimensions and criteria. In this study, the AHP was used as an analytical framework for establishing an evaluation model to determine the order of importance of the critical factors derived from iLearning platforms that may influence students’ iLearning at a university.
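Group decision-making in the AHP requires combining several experts' pairwise judgments into one matrix. Section 4.3 reports that the experts' assessments were averaged but does not state the averaging rule; the sketch below assumes the element-wise geometric mean, a common choice in the AHP literature because, unlike the arithmetic mean, it keeps the aggregated matrix reciprocal. The function `aggregate_geometric` and the three expert matrices are hypothetical.

```python
import numpy as np

def aggregate_geometric(matrices):
    """Element-wise geometric mean of several experts' pairwise comparison matrices."""
    stack = np.asarray(matrices, dtype=float)    # shape: (n_experts, n, n)
    return np.exp(np.log(stack).mean(axis=0))    # mean of logs, then back-transform

# Three hypothetical experts comparing the same three criteria
experts = [
    [[1, 3, 5], [1/3, 1, 2], [1/5, 1/2, 1]],
    [[1, 2, 4], [1/2, 1, 3], [1/4, 1/3, 1]],
    [[1, 5, 7], [1/5, 1, 1], [1/7, 1, 1]],
]
group = aggregate_geometric(experts)
print(np.round(group, 3))
```

The aggregated matrix can then be passed to the same eigenvector and consistency calculations as an individual expert's matrix.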

Consistency analysis is the main approach used to test the reliability of the experts’ judgments in AHP studies (e.g. Saaty Citation1980; Saaty and Vargas Citation2013). This approach has also been used to support the claim that, although the data collected are subjective judgments, the AHP can reduce bias and ensure that the judgments are reliable (Abudayyeh et al. Citation2007; Hsu, Wu, and Li Citation2008). In this research, based on the AHP results, we applied consistency analysis to the studied dimensions and to the overall research framework to test their reliability, using the threshold suggested by Saaty (Citation1980), namely a consistency ratio (C.R.) below 0.1.
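The consistency ratio is computed from the principal eigenvalue λ_max of each comparison matrix as CI = (λ_max − n)/(n − 1) and C.R. = CI/RI(n), where RI(n) is Saaty's random index for a matrix of order n. The sketch below hard-codes the commonly tabulated RI values for n from 3 to 10; the function name and both example matrices are hypothetical.

```python
import numpy as np

# Saaty's random index RI(n) by matrix order n (commonly tabulated values)
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}

def consistency_ratio(A):
    """C.R. = CI / RI(n), with CI = (lambda_max - n) / (n - 1)."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    if n <= 2:
        return 0.0                     # reciprocal 1x1 and 2x2 matrices are always consistent
    lam_max = np.linalg.eigvals(A).real.max()
    ci = (lam_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]

acceptable = [[1, 3, 5], [1/3, 1, 2], [1/5, 1/2, 1]]   # hypothetical, nearly consistent
cyclic = [[1, 9, 1/9], [1/9, 1, 9], [9, 1/9, 1]]       # hypothetical, intransitive judgments
for name, M in [("acceptable", acceptable), ("cyclic", cyclic)]:
    cr = consistency_ratio(M)
    print(f"{name}: C.R. = {cr:.3f} ->", "consistent" if cr < 0.1 else "revise judgments")
```

The second matrix says criterion 1 beats 2, 2 beats 3, and 3 beats 1, all strongly, so its C.R. is far above 0.1; judgments like these would normally be returned to the expert for revision.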

4. Data analysis and results

4.1. Respondents’ demographics

The study adopted questionnaire surveys administered in two stages. In the first stage, FA was performed to analyse the data collected by convenience sampling from university students with experience using iLearning. The participants’ characteristics for both the pretest (50 valid responses) and the actual study (355 valid responses) are summarised in Table 2.

Table 2. Profiles of the respondents in the first-stage surveys.

In the second stage, the AHP was employed to determine the priorities of the dimensions and criteria based on expert consensus. Judgment sampling was used to solicit feedback from experts with work experience in fields related to iLearning. A total of 17 valid responses were obtained from experts: 5 from industry, 5 from government, and 7 from academia. Table 3 details the backgrounds of the responding experts. Approximately half of the experts had 10 or more years of relevant work experience.

Table 3. Profiles of the responding experts in the second-stage AHP survey.

4.2. Critical factors extracted using FA

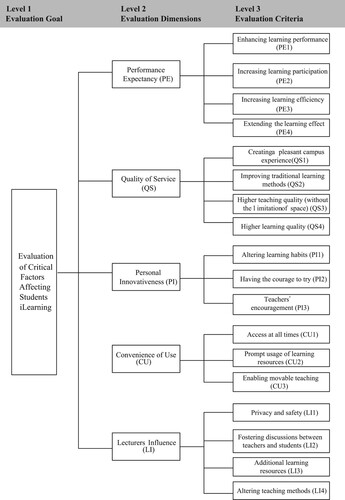

During the first stage, the questionnaire (4 dimensions and 26 criteria) was designed based on the important factors that may affect students’ iLearning, with reference to the criteria shown in Table 1. Participants rated each item on a 5-point Likert scale ranging from ‘strongly agree’ (5) to ‘strongly disagree’ (1). After the pretest, one item (numerous campus receivers [C6]) was deleted. In the actual survey, principal component analysis (PCA) with varimax rotation was applied iteratively, and the factors (5 dimensions and 18 criteria) were extracted after eliminating all criteria with factor loadings below 0.5. The five dimensions were labelled ‘performance expectancy’ (PE) (4 criteria), ‘quality of service’ (QS) (4 criteria), ‘personal innovativeness’ (PI) (3 criteria), ‘convenience of use’ (CU) (3 criteria), and ‘lecturers’ influence’ (LI) (4 criteria); together they accounted for 64.65% of the total variance. The factor loadings of each criterion, Cronbach’s α values, and the variance explained by each factor (dimension) are summarised in Table 4. The extracted critical factors (5 dimensions and 18 criteria) were used to construct a hierarchical structure (Figure 2).
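The screening and reliability steps described above can be sketched as follows: each item is assigned to the factor on which it loads most strongly and is dropped if that loading is below 0.5, and internal consistency is computed with the standard Cronbach's α formula, α = k/(k − 1) · (1 − Σσ²_item / σ²_total). The function names, item codes, loadings, and responses are hypothetical illustrations, not the study's data.

```python
import numpy as np

def retain_items(rotated_loadings, names, cutoff=0.5):
    """Map each retained item to the factor with its largest absolute loading."""
    L = np.abs(np.asarray(rotated_loadings, dtype=float))
    best = L.argmax(axis=1)
    return {name: int(f) for name, f, row in zip(names, best, L) if row.max() >= cutoff}

def cronbach_alpha(items):
    """Cronbach's alpha for a respondents x k matrix of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_var = items.var(axis=0, ddof=1).sum()   # sum of item variances
    total_var = items.sum(axis=1).var(ddof=1)    # variance of the summed scale
    return k / (k - 1) * (1 - item_var / total_var)

# Hypothetical rotated loadings for four items on two factors
loadings = [[0.81, 0.12], [0.74, 0.20], [0.35, 0.42], [0.10, 0.77]]
print(retain_items(loadings, ["X1", "X2", "X3", "X4"]))   # X3 falls below 0.5 and is dropped

# Hypothetical 5-point Likert responses (rows = respondents) for one retained dimension
scores = [[5, 4, 5], [4, 4, 4], [3, 2, 3], [2, 2, 1], [4, 5, 4]]
print("alpha =", round(cronbach_alpha(scores), 3))
```

Sample variances (`ddof=1`) are used for both the items and the summed scale, so the ratio inside the formula is unaffected by the choice as long as it is applied consistently.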

Table 4. Results of the extracted critical dimensions and criteria of iLearning.

4.3. Relative weights of the critical factors examined in the AHP

In the second stage, as shown in Figure 2, the AHP was employed to prioritise the factors, i.e. the dimensions and criteria. Experts with work experience and knowledge regarding iLearning performed pairwise comparisons among the factors. The opinions (assessments) of the 17 experts were averaged to obtain expert consensus. The consistency ratio (C.R.) values of the comparison matrices for the 5 dimensions, i.e. PE, QS, PI, CU, and LI, were 0.000, 0.076, 0.080, 0.091, and 0.059, respectively; all are below 0.1 and thus satisfy the threshold of consistency suggested by Saaty (Citation1980). The C.R. for the whole hierarchy (0.062) was also below 0.1. Therefore, the experts’ opinions were considered consistent, and the AHP results reliable. Table 5 presents the relative weights (importance) and rankings of the dimensions and criteria. The most critical dimension affecting students’ iLearning was PE (weight = 0.349), followed by CU (0.185), PI (0.169), QS (0.168), and LI (0.130). The top five critical criteria were enhancing the learning performance (PE1) (0.140), increasing the learning participation (PE2) (0.110), altering learning habits (PI1) (0.092), ensuring access at all times (CU1) (0.075), and enabling prompt usage of learning resources (CU2) (0.067).
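In a two-level AHP hierarchy, a criterion's global weight is its local weight multiplied by the weight of its parent dimension, so the figures reported above can be cross-checked with simple arithmetic. The sketch below uses only the weights quoted in this section; the local weights it prints are back-calculated for illustration and are not values reported by the study, and the helper `local_weight` is hypothetical.

```python
# Weights quoted in Section 4.3 (dimension weights and global weights of the top five criteria)
dimension_weight = {"PE": 0.349, "CU": 0.185, "PI": 0.169, "QS": 0.168, "LI": 0.130}
global_weight = {"PE1": 0.140, "PE2": 0.110, "PI1": 0.092, "CU1": 0.075, "CU2": 0.067}

def local_weight(criterion):
    """Back-calculate a criterion's weight within its dimension: global / dimension."""
    dimension = criterion[:2]                    # e.g. "PE1" -> "PE"
    return global_weight[criterion] / dimension_weight[dimension]

for crit in sorted(global_weight, key=global_weight.get, reverse=True):
    print(f"{crit}: global = {global_weight[crit]:.3f}, "
          f"local within {crit[:2]} = {local_weight(crit):.3f}")

# Dimension weights should sum to 1 up to rounding of the reported values
print("sum of dimension weights:", round(sum(dimension_weight.values()), 3))
```

For example, PE1's global weight of 0.140 corresponds to roughly 40% of the PE dimension's weight of 0.349, which is why two PE criteria can top the overall ranking.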

Table 5. Results of the prioritised critical factors for iLearning.

5. Discussion

This study aimed to explore the critical factors associated with iLearning platforms that may affect students’ learning performance and to identify the criteria with a more notable impact, enabling university managers to enhance the effectiveness of iLearning for students. First, through an extensive literature review, we synthesised four critical dimensions (i.e. performance expectancy, lecturers’ influence, quality of service, and personal innovativeness) and 26 critical criteria. Subsequently, by surveying a group of students from universities in Taiwan, we extracted five critical dimensions with 18 critical criteria; convenience of use emerged from the FA results as a new dimension. We further prioritised these criteria by surveying a group of senior educators and practitioners in Taiwan. Our results indicate that enhancing learning performance, increasing learning participation, altering learning habits, ensuring access at all times, and enabling prompt usage of learning resources are the criteria that university managers should emphasise to enhance the effectiveness of iLearning for students.

In terms of the dimensions, our results indicated that PE is the most crucial dimension that may motivate students to adopt iLearning, which aligns with existing related findings (e.g. Chaka and Govender Citation2017; Lowenthal Citation2010; Wang, Wu, and Wang Citation2009). In other words, to successfully implement iLearning in a university, it is crucial for the managers of the university to place emphasis on helping students perceive that they can make progress in their learning performance through iLearning, which in turn may stimulate the students to be more willing to learn by adopting iLearning. In addition, our results indicated that enhancing the learning performance and increasing learning participation are two key factors that strengthen the students’ iLearning PE, which is in line with the findings of many existing studies (e.g. Abu-Al-Aish and Love Citation2013; Chen Citation2011; Wang, Wu, and Wang Citation2009). This finding implies that to effectively help students perceive that they can enhance their learning performance with iLearning, the design of iLearning may need to be goal oriented. For example, a clear yet challenging learning goal may be needed to ensure that the extent to which the students’ learning performance is enhanced can be measured by instructors/lecturers and perceived by the students.

Furthermore, this finding indicates that iLearning may need to be designed to stimulate students to participate proactively throughout their learning journey, thereby strengthening the cognitive connection between using iLearning and the resulting learning performance. Empirical studies have shown that students who are engaged in learning are more likely to perceive improved learning outcomes (e.g. Blasco-Arcas et al. Citation2013; McLaughlin et al. Citation2013). However, learning participation and learning performance may not always increase in proportion. Therefore, as a managerial implication, the units responsible for managing iLearning should focus not only on students’ learning performance but also on whether iLearning can increase students’ involvement and inputs in learning. Existing studies have indicated that students who find that their inputs have been refined or advanced are more likely to perceive better learning outcomes (e.g. Chemosit Citation2012). Among the relevant factors, enhancing the learning performance and increasing the learning participation are the most crucial and should be treated as the main goals when implementing iLearning in a university setting.

According to our results, CU is the second most important dimension, in line with existing findings on the importance of using an information platform/system efficiently in digital and intelligent learning contexts (e.g. Abu-Al-Aish and Love Citation2013; Badwelan, Drew, and Bahaddad Citation2016; Hassanzadeh, Kanaani, and Elahi Citation2012; Kim and Ong Citation2005; Kim et al. Citation2012; Lee Citation2006; Liu et al., 2010; Milošević et al. Citation2015; Ng et al. Citation2010; Mohammadi Citation2015; Salloum et al. Citation2019). This finding implies that the ease of use of iLearning can increase students’ willingness to use, and continue using, iLearning. Our results also indicate that ensuring access at all times and enabling the prompt use of learning resources are critical to increasing students’ efficiency in using iLearning platforms. This suggests that it may be crucial for university managers to allow students to learn at their own pace: because students learn at different paces, they may be more likely to learn effectively and successfully when they can set their own pace (Chou and Liu Citation2005; Vermunt Citation2005). Other studies focusing on iLearning platform infrastructure have suggested investing in smart campuses (e.g. Hirsch and Ng Citation2011), ensuring seamless connectivity, and supporting integrated learning across various devices (laptops, tablets, and smartphones) for ubiquitous learning (e.g. Atif, Mathew, and Lakas Citation2015).

Additionally, our findings reflect the need for instructors/lecturers to upload learning resources in a timely manner and to ensure consistency between teaching content and learning resources, so that students can use the resources efficiently when needed. Supporting this point, existing studies have highlighted the importance of the timely provision of learning resources and the usefulness of learning from/with these resources, especially in digital and intelligent learning environments (e.g. Ma et al. Citation2010; Otieno Citation2010; Ross and Grinder Citation2002). Certain studies have also emphasised the need for iLearning platforms to allow students to use learning resources from any device (e.g. Atif, Mathew, and Lakas Citation2015; Hirsch and Ng Citation2011). Notably, ensuring access at all times and enabling prompt usage of learning resources both rank among the top five criteria and should therefore be considered main goals when implementing iLearning in a university setting.

PI ranks among the top three dimensions in this study, echoing existing studies that highlight its importance in digital and intelligent learning environments (Abu-Al-Aish and Love Citation2013; Agarwal and Prasad Citation1998; Badwelan, Drew, and Bahaddad Citation2016; Joo, Lee, and Ham Citation2014; Fagan, Kilmon, and Pandey Citation2012; Liu et al., 2010; Milošević et al. Citation2015; Shorfuzzaman et al. Citation2019; Salloum et al. Citation2019). This finding implies that, to implement iLearning effectively, university managers may need to cultivate students’ interest in trying and adopting iLearning. Our results further indicate that altering students’ learning habits is crucial in this task. Previous studies have suggested that iLearning should be designed to give students frequent opportunities to interact with learning content, since interactive design and applications in digital and intelligent learning environments can help change students’ behaviour in specific ways (e.g. Ibrahim, Halim, and Ibrahim Citation2012).

Overall, among the 18 criteria, the top six (enhancing the learning performance, increasing the learning participation, altering learning habits, ensuring access at all times, enabling prompt usage of learning resources, and increasing the learning efficiency) have an accumulated importance weight of 0.538, i.e. more than half of the total importance weight. Apart from altering learning habits, which ranks third, the remaining five of these criteria all belong to the PE and CU dimensions. This result is consistent with Milošević et al. (Citation2015), who showed that, particularly in higher education, iLearning should be an integral mode of learning that provides prompt access to learning resources to enhance the learning performance. For higher education institutions, investment in iLearning technologies and specialists to enhance learning performance is therefore a notable concern, and the role of iLearning design in changing students’ learning habits and increasing their learning participation should be emphasised. One implication may be that university students are no longer satisfied with traditional ways of learning.
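The accumulated weight above is a sum of global weights. Assuming the usual multiplicative aggregation of a two-level AHP hierarchy, a criterion's global weight is its local weight within its dimension multiplied by that dimension's weight. The sketch below illustrates this aggregation and the top-k sum with a deliberately small, hypothetical hierarchy (four dimensions, seven criteria). The dimension abbreviations are borrowed from the text, but every number and criterion key is an invented placeholder, so the output is not meant to reproduce 0.538.

```python
# Hypothetical illustration only: all weights below are invented placeholders,
# not the AHP results reported in this study.

def global_weights(dimension_weights, local_weights):
    """Global weight of a criterion = weight of its dimension x its local weight."""
    result = {}
    for dim, d_w in dimension_weights.items():
        for crit, l_w in local_weights[dim].items():
            result[crit] = d_w * l_w
    return result


def top_k_share(weights, k):
    """Return the names of the k highest-weighted criteria and their accumulated weight."""
    ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)
    top = ranked[:k]
    return [name for name, _ in top], sum(w for _, w in top)


# Dimension weights sum to 1, and local weights sum to 1 within each dimension.
dimension_weights = {"PE": 0.40, "CU": 0.30, "PI": 0.19, "LI": 0.11}
local_weights = {
    "PE": {"performance": 0.5, "participation": 0.3, "efficiency": 0.2},
    "CU": {"access": 0.6, "prompt_resources": 0.4},
    "PI": {"habits": 1.0},
    "LI": {"teaching_methods": 1.0},
}

gw = global_weights(dimension_weights, local_weights)
names, share = top_k_share(gw, 3)
print(names, round(share, 3))
```

Because the local weights are normalised within each dimension, the global weights again sum to 1, which is what makes a statement such as "more than half of the overall importance weight" meaningful.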

Interestingly, the LI dimension has a relatively low impact on students’ iLearning. One possible explanation is that most university students are older than 18 and have their own thoughts and opinions; they are therefore likely to adopt iLearning because they perceive that it enhances their learning performance beyond what lectures provide. Notably, however, although LI is the least important dimension and contains the lowest-ranked criterion (altering teaching methods), in practice the teaching methods of instructors/lecturers still influence students to a certain extent, because students may not proactively adopt this mode of learning without the cooperation of instructors/lecturers. Therefore, instructors/lecturers should be encouraged to adopt new teaching methods built around iLearning application systems to facilitate students’ use of iLearning. By acting through the CU dimension, which ranks second and contains the fourth- and fifth-ranked criteria (i.e. access at all times and prompt usage of learning resources), instructors/lecturers can help alter students’ learning habits, the third most important criterion.

6. Research limitations and future recommendations

Although we strove to make this study rigorous, it has certain limitations, which suggest the following directions for future research. First, because iLearning is still developing despite its growing global application and technological advancement, further dimensions and criteria may be identified in research and practice in the near future. We therefore suggest that future research expand our framework by integrating additional relevant dimensions and criteria into the assessment, which may make the results more practical and applicable. Second, future research could ask student respondents to prioritise the dimensions and criteria and compare the results with those obtained in this study. The viewpoints of senior educators and practitioners are likely to differ from those of students. Since students are the primary users of iLearning platforms, implementing iLearning in universities may be more effective and efficient if the critical factors are identified by incorporating students’ opinions.

Third, when prioritising the dimensions and criteria for iLearning, we did not consider the interrelationships among them in our framework, although a change in one factor may influence others. We therefore suggest that future research adopt the analytic network process (ANP) for prioritisation, because the ANP is better suited to multicriteria decision-making problems, such as the one investigated in this study, in which the dimensions and/or criteria may be interdependent (Liao et al. Citation2018). Fourth, the generalisability of this study could be improved. Owing to limited resources and time, we focused only on students and experts in Taiwan; future research may therefore diversify the respondents by studying students and experts from other countries. Fifth, future research could consolidate the dimensions and criteria by using other techniques to assess their relative significance before retesting our framework. Finally, subsequent research may be supplemented by case studies, interviews, and other qualitative analyses to better understand and implement iLearning on campus.
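To illustrate how the ANP accommodates interdependence, the sketch below shows its core computational step under simplifying assumptions: influences among elements are encoded in a column-stochastic weighted supermatrix, which is raised to successive powers until it converges, and each column of the resulting limit supermatrix then gives the elements' overall priorities. The 3×3 matrix is hypothetical and stands for three interdependent criteria; a real ANP application would build the supermatrix from pairwise comparisons within and across clusters, and cyclic supermatrices (which do not converge) require an averaging step that this sketch omits.

```python
import numpy as np


def limit_supermatrix(W, tol=1e-10, max_iter=1000):
    """Raise a column-stochastic supermatrix W to successive powers until it stops changing."""
    W = np.asarray(W, dtype=float)
    if not np.allclose(W.sum(axis=0), 1.0):
        raise ValueError("each column of the weighted supermatrix must sum to 1")
    current = W.copy()
    for _ in range(max_iter):
        nxt = current @ W  # next power of W
        if np.max(np.abs(nxt - current)) < tol:
            return nxt
        current = nxt
    raise RuntimeError("supermatrix did not converge; it may be cyclic")


# Hypothetical weighted supermatrix for three interdependent criteria:
# column j holds the influence of criterion j on each criterion (columns sum to 1).
W = np.array([
    [0.2, 0.5, 0.3],
    [0.5, 0.2, 0.4],
    [0.3, 0.3, 0.3],
])
limit = limit_supermatrix(W)
priorities = limit[:, 0]  # all columns of the limit matrix are (approximately) identical
print(np.round(priorities, 3))
```

Unlike the AHP, where each criterion is weighted only within its own branch of the hierarchy, the limit supermatrix lets feedback among criteria shift their final priorities.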

7. Conclusion

Technology has significantly changed our way of life, including the way in which individuals gain knowledge. In recent years, students at many universities have moved away from conventional ways of learning and have increasingly adopted iLearning on campus. While the importance of iLearning has been highlighted in research and practice, little is known about how iLearning-related factors influence university students’ learning performance or which factors relevant managers should focus on to enhance the effectiveness of iLearning for students. To address these gaps, we first proposed a framework of four critical dimensions with 26 critical criteria based on an extensive literature review. Then, through questionnaire surveys in two stages, we refined the framework and prioritised the critical dimensions and criteria for iLearning. The results may provide university managers with a valuable reference not only for promoting the use of iLearning on campus but also for enhancing its effectiveness for students.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

References

- Abbasy, M. B., and E. V. Quesada. 2017. “Predictable Influence of IoT (Internet of Things) in the Higher Education.” International Journal of Information and Education Technology 7 (12): 914–920.

- Abu-Al-Aish, A., and S. Love. 2013. “Factors Influencing Students’ Acceptance of m-Learning: An Investigation in Higher Education.” The International Review of Research in Open and Distance Learning 14 (5): 86–111. doi:10.19173/irrodl.v14i5.1631.

- Abudayyeh, O., S. J. Zidan, S. Yehia, and D. Randolph. 2007. “Hybrid Prequalification-Based, Innovative Contracting Model Using AHP.” Journal of Management in Engineering 23 (2): 88–96. doi:10.1061/(ASCE)0742-597X(2007)23:2(88).

- Agarwal, R., and E. Karahanna. 2000. “Time Flies When You're Having Fun: Cognitive Absorption and Beliefs About Information Technology Usage.” MIS Quarterly 24 (4): 665–694. doi:10.2307/3250951.

- Agarwal, R., and J. Prasad. 1998. “A Conceptual and Operational Definition of Personal Innovativeness in the Domain of Information Technology.” Information Systems Research 9 (2): 204–215. doi:10.1287/isre.9.2.204.

- Aini, Q., I. Dhaniarti, and A. Khoirunisa. 2019. “Effects of iLearning Media on Student Learning Motivation.” Aptisi Transactions on Management 3 (1): 1–12.

- Aini, Q., U. Rahardja, and T. Hariguna. 2019. “The Antecedent of Perceived Value to Determine of Student Continuance Intention and Student Participate Adoption of iLearning.” Procedia Computer Science 161: 242–249. doi:10.1016/j.procs.2019.11.120.

- AjazMoharkan, Z., T. Choudhury, S. C. Gupta, and G. Raj. 2017, February. “Internet of Things and its Applications in E-Learning.” In 2017 3rd International Conference on Computational Intelligence & Communication Technology (CICT) (pp. 1-5). IEEE. doi:10.1109/CIACT.2017.7977333.

- Akadiri, P. O., P. O. Olomolaiye, and E. A. Chinyio. 2013. “Multi-criteria Evaluation Model for the Selection of Sustainable Materials for Building Projects.” Automation in Construction 30: 113–125. doi:10.1016/j.autcon.2012.10.004.

- Al-Emran, M., I. Arpaci, and S. A. Salloum. 2020. “An Empirical Examination of Continuous Intention to use m-Learning: An Integrated Model.” Education and Information Technologies 25 (4): 2899–2918. doi:10.1007/s10639-019-10094-2.

- Alam, T., and M. Aljohani. 2020. “M-Learning: Positioning the Academics to the Smart Devices in the Connected Future.” International Journal on Informatics Visualization 4 (2): 76–79. doi:10.30630/joiv.4.2.347.

- Ali, H. H., and S. F. Al Nsairat. 2009. “Developing a Green Building Assessment Tool for Developing Countries–Case of Jordan.” Building and Environment 44 (5): 1053–1064. doi:10.1016/j.buildenv.2008.07.015.

- Almahri, F. A. J., D. Bell, and M. Merhi. 2020. “Understanding Student Acceptance and Use of Chatbots in the United Kingdom Universities: A Structural Equation Modelling Approach.” In 2020 6th International Conference on Information Management (ICIM) (pp. 284-288). IEEE. doi:10.1109/ICIM49319.2020.244712.

- Almaiah, M. A., and A. Al Mulhem. 2019. “Analysis of the Essential Factors Affecting of Intention to use of Mobile Learning Applications: A Comparison Between Universities Adopters and non-Adopters.” Education and Information Technologies 24 (2): 1433–1468. doi:10.1007/s10639-018-9840-1.

- Almaiah, M. A., and O. A. Alismaiel. 2019. “Examination of Factors Influencing the use of Mobile Learning System: An Empirical Study.” Education and Information Technologies 24 (1): 885–909. doi:10.1007/s10639-018-9810-7.

- Alshurideh, M., B. Al Kurdi, S. A. Salloum, I. Arpaci, and M. Al-Emran. 2020. “Predicting the Actual use of m-Learning Systems: a Comparative Approach Using PLS-SEM and Machine Learning Algorithms.” Interactive Learning Environments, 1–15. doi:10.1080/10494820.2020.1826982.

- Alshurideh, M., S. A. Salloum, B. Al Kurdi, A. A. Monem, and K. Shaalan. 2019. “Understanding the Quality Determinants That Influence the Intention to use the Mobile Learning Platforms: A Practical Study.” International Journal of Interactive Mobile Technologies 13 (11): 157–183.

- Ameen, A., K. Alfalasi, N. A. Gazem, and O. Isaac. 2019, December. “Impact of System Quality, Information Quality, and Service Quality on Actual Usage of Smart Government.” In 2019 First International Conference of Intelligent Computing and Engineering (ICOICE) (pp. 1-6). IEEE. doi:10.1109/ICOICE48418.2019.9035144.

- Araya, R., C. Aguirre, M. Bahamondez, P. Calfucura, and P. Jaure. 2016, September. “Social Facilitation due to Online Inter-Classrooms Tournaments.” In European Conference on Technology Enhanced Learning (pp. 16-29). Springer, Cham. doi:10.1007/978-3-319-45153-4_2.

- Aremu, B. V. 2019. “Availability and Utilization of M-Learning Devices for Mathematics at the Universities in Southwest, Nigeria.” FUOYE Journal of Education 2 (2).

- Atif, Y., S. S. Mathew, and A. Lakas. 2015. “Building a Smart Campus to Support Ubiquitous Learning.” Journal of Ambient Intelligence and Humanized Computing 6 (2): 223–238. doi:10.1007/s12652-014-0226-y.

- Badwelan, A., S. Drew, and A. A. Bahaddad. 2016. “Towards Acceptance m-Learning Approach in Higher Education in Saudi Arabia.” International Journal of Business and Management 11 (8): 12–30. doi:10.5539/ijbm.v11n8p12.

- Bai, H. 2019. “Pedagogical Practices of Mobile Learning in K-12 and Higher Education Settings.” TechTrends 63 (5): 611–620. doi:10.1007/s11528-019-00419-w.

- Bhagat, R., and S. Sambargi. 2019. “Evaluation of Personal Innovativeness and Perceived Expertise on Digital Marketing Adoption by Women Entrepreneurs of Micro and Small Enterprises.” International Journal of Research and Analytical Reviews 6 (1): 338–351.

- Bigne-Alcaniz, E., C. Ruiz-Mafé, J. Aldás-Manzano, and S. Sanz-Blas. 2008. “Influence of Online Shopping Information Dependency and Innovativeness on Internet Shopping Adoption.” Online Information Review 32 (5): 648–667. doi:10.1108/14684520810914025.

- Blasco-Arcas, L., I. Buil, B. Hernández-Ortega, and F. J. Sese. 2013. “Using Clickers in Class. The Role of Interactivity, Active Collaborative Learning and Engagement in Learning Performance.” Computers & Education 62: 102–110. doi:10.1016/j.compedu.2012.10.019.

- Buabeng-Andoh, C. 2021. “Exploring University Students’ Intention to use Mobile Learning: A Research Model Approach.” Education and Information Technologies 26 (1): 241–256. doi:10.1007/s10639-020-10267-4.

- Cavus, N. 2020. “Evaluation of MobLrN m-Learning System: Participants’ Attitudes and Opinions.” World Journal on Educational Technology: Current Issues 12 (3): 150–164.

- Celesti, A., D. Mulfari, A. Galletta, M. Fazio, L. Carnevale, and M. Villari. 2019. “A Study on Container Virtualization for Guarantee Quality of Service in Cloud-of-Things.” Future Generation Computer Systems 99: 356–364. doi:10.1016/j.future.2019.03.055.

- Chaka, J. G., and I. Govender. 2017. “Students’ Perceptions and Readiness Towards Mobile Learning in Colleges of Education: A Nigerian Perspective.” South African Journal of Education 37 (1): 1–12. doi:10.15700/saje.v37n1a1282.

- Chemosit, C. C. 2012. College Experiences and Student Inputs: Factors That Promote the Development of Skills and Attributes That Enhance Learning among College Students. Ann Arbor: Illinois State University.

- Chen, J. L. 2011. “The Effects of Education Compatibility and Technological Expectancy on e-Learning Acceptance.” Computers & Education 57 (2): 1501–1511. doi:10.1016/j.compedu.2011.02.009.

- Choi, Y., M. Choi, M. Oh, and S. Kim. 2020. “Service Robots in Hotels: Understanding the Service Quality Perceptions of Human-Robot Interaction.” Journal of Hospitality Marketing & Management 29 (6): 613–635. doi:10.1080/19368623.2020.1703871.

- Chou, S. W., and C. H. Liu. 2005. “Learning Effectiveness in a Web-Based Virtual Learning Environment: a Learner Control Perspective.” Journal of Computer Assisted Learning 21 (1): 65–76. doi:10.1111/j.1365-2729.2005.00114.x.

- Chou, J. S., A. D. Pham, and H. Wang. 2013. “Bidding Strategy to Support Decision-Making by Integrating Fuzzy AHP and Regression-Based Simulation.” Automation in Construction 35: 517–527. doi:10.1016/j.autcon.2013.06.007.

- Corbeil, J. R., and M. E. Valdes-Corbeil. 2007. “Are you Ready for Mobile Learning?” Educause Quarterly 30 (2): 51.

- Crompton, H., D. Burke, and K. H. Gregory. 2017. “The use of Mobile Learning in PK-12 Education: A Systematic Review.” Computers & Education 110: 51–63. doi:10.1016/j.compedu.2017.03.013.

- Darko, A., A. P. C. Chan, E. E. Ameyaw, E. K. Owusu, E. Pärn, and D. J. Edwards. 2019. “Review of Application of Analytic Hierarchy Process (AHP) in Construction.” International Journal of Construction Management 19 (5): 436–452. doi:10.1080/15623599.2018.1452098.

- Darmaji, D., D. Kurniawan, A. Astalini, A. Lumbantoruan, and S. Samosir. 2019. “Mobile Learning in Higher Education for the Industrial Revolution 4.0: Perception and Response of Physics Practicum.” International Association of Online Engineering. Retrieved March 14, 2021 from https://www.learntechlib.org/p/216574/.

- Do Nam Hung, J. T., S. F. Azam, and A. A. Khatibi. 2019. “An Empirical Analysis of Perceived Transaction Convenience, Performance Expectancy, Effort Expectancy and Behavior Intention to Mobile Payment of Cambodian Users.” International Journal of Marketing Studies 11 (4): 77–90. doi:10.5539/ijms.v11n4p77.

- Ehsanpur, S., and M. R. Razavi. 2020. “A Comparative Analysis of Learning, Retention, Learning and Study Strategies in the Traditional and M-Learning Systems.” European Review of Applied Psychology 70 (6): 100605. doi:10.1016/j.erap.2020.100605.

- El-Sayegh, S. M. 2009. “Multi-Criteria Decision Support Model for Selecting the Appropriate Construction Management at Risk Firm.” Construction Management and Economics 27 (4): 385–398.

- Fagan, M. H. 2019. “Factors Influencing Student Acceptance of Mobile Learning in Higher Education.” Computers in the Schools 36 (2): 105–121. doi:10.1080/07380569.2019.1603051.

- Fagan, M., C. Kilmon, and V. Pandey. 2012. “Exploring the Adoption of a Virtual Reality Simulation: The Role of Perceived Ease of use, Perceived Usefulness and Personal Innovativeness.” Campus-Wide Information Systems 29 (2): 117–127. doi:10.1108/10650741211212368.

- Fang, J., P. Shao, and G. Lan. 2009. “Effects of Innovativeness and Trust on web Survey Participation.” Computers in Human Behavior 25 (1): 144–152. doi:10.1016/j.chb.2008.08.002.

- Gonzalez, D. C. B., C. L. B. Gonzalez, and C. B. Huidobro. 2019. “Intelligent Learning Ecosystem in M-Learning Systems.” In International Congress of Telematics and Computing (pp. 213-229). Springer, Cham. doi:10.1007/978-3-030-33229-7_19.

- Handoko, B. L. 2019. “Technology Acceptance Model in Higher Education Online Business.” Journal of Entrepreneurship Education 22 (5): 1–9.

- Hao, S., V. P. Dennen, and L. Mei. 2017. “Influential Factors for Mobile Learning Acceptance among Chinese Users.” Educational Technology Research and Development 65 (1): 101–123. doi:10.1007/s11423-016-9465-2.

- Hassanzadeh, A., F. Kanaani, and S. Elahi. 2012. “A Model for Measuring e-Learning Systems Success in Universities.” Expert Systems with Applications 39 (12): 10959–10966. doi:10.1016/j.eswa.2012.03.028.

- Hirsch, B., and J. W. P. Ng. 2011. “Education Beyond the Cloud: Anytime-Anywhere Learning in a Smart Campus Environment.” International Conference for Internet Technology and Secured Transactions, Abu Dhabi, United Arab Emirates, 718–723.

- Hsu, P. F., C. R. Wu, and Z. R. Li. 2008. “Optimizing Resource-Based Allocation for Senior Citizen Housing to Ensure a Competitive Advantage Using the Analytic Hierarchy Process.” Building and Environment 43 (1): 90–97. doi:10.1016/j.buildenv.2006.11.028.

- Hung, S. Y., and C. M. Chang. 2005. “User Acceptance of WAP Services: Test of Competing Theories.” Computer Standards & Interfaces 28: 359–370. doi:10.1016/j.csi.2004.10.004.

- Ibrahim, N., S. A. Halim, and N. Ibrahim. 2012. “The Design of Persuasive Learning Pills for m-Learning Application to Induce Enthusiastic Learning Habits among Learners.” In 2012 4th International Congress on Engineering Education (pp. 1-5). IEEE.

- Ichaba, M., F. Musau, and S. Mwendia. 2020. “An Empirical Approach to Mobile Learning on Mobile Ad Hoc Networks (MANETs).” In 2020 IST-Africa Conference (IST-Africa) (pp. 1-14). IEEE.

- Iqbal, S., and I. A. Qureshi. 2012. “M-learning Adoption: A Perspective from a Developing Country.” The International Review of Research in Open and Distributed Learning 13 (3): 147–164. doi:10.19173/irrodl.v13i3.1152.

- Irwin, C., L. Ball, B. Desbrow, and M. Leveritt. 2012. “Students’ Perceptions of Using Facebook as an Interactive Learning Resource at University.” Australasian Journal of Educational Technology 28 (7). doi:10.14742/ajet.798.

- Izkair, A. S., M. M. Lakulu, and I. H. Mussa. 2020. “Intention to Use Mobile Learning in Higher Education Institutions.” International Journal of Education, Science, Technology, and Engineering 3 (2): 78–84.

- Johnson, L., S. A. Becker, V. Estrada, and A. Freeman. 2015. NMC Horizon Report: 2015 Library Edition, 1–54. Austin, Texas: The New Media Consortium.

- Joo, Y. J., H. W. Lee, and Y. Ham. 2014. “Integrating User Interface and Personal Innovativeness Into the TAM for Mobile Learning in Cyber University.” Journal of Computing in Higher Education 26 (2): 143–158. doi:10.1007/s12528-014-9081-2.

- Joseph, A. F., O. S. Sunday, S. Jarkko, and T. Markku. 2019. “Smart Learning Environment for Computing Education: Readiness for Implementation in Nigeria.” In EdMedia+ Innovate Learning, edited by J. Theo Bastiaens, 1382–1391. Amsterdam: Association for the Advancement of Computing in Education (AACE).

- Kacetl, J., and B. Klímová. 2019. “Use of Smartphone Applications in English Language Learning—A Challenge for Foreign Language Education.” Education Sciences 9 (3): 179. doi:10.3390/educsci9030179.

- Karimi, S. 2016. “Do Learners’ Characteristics Matter? An Exploration of Mobile-Learning Adoption in Self-Directed Learning.” Computers in Human Behavior 63: 769–776. doi:10.1016/j.chb.2016.06.014.

- Kim, G. M., and S. M. Ong. 2005. “An Exploratory Study of Factors Influencing m-Learning Success.” Journal of Computer Information Systems 46 (1): 92–97.

- Kim, K., S. Trimi, H. Park, and S. Rhee. 2012. “The Impact of CMS Quality on the Outcomes of e-Learning Systems in Higher Education: An Empirical Study.” Decision Sciences Journal of Innovative Education 10 (4): 575–587. doi:10.1111/j.1540-4609.2012.00360.x.

- Kose, U., and O. Deperlioglu. 2012. “Intelligent Learning Environments within Blended Learning for Ensuring Effective C Programming Course.” arXiv preprint arXiv:1205.2670. doi:10.5121/ijaia.2012.3109.

- Kuan, H. H., G. W. Bock, and V. Vathanophas. 2008. “Comparing the Effects of Website Quality on Customer Initial Purchase and Continued Purchase at e-Commerce Websites.” Behaviour & Information Technology 27 (1): 3–16. doi:10.1080/01449290600801959.

- Kumar, J. A., and B. Bervell. 2019. “Google Classroom for Mobile Learning in Higher Education: Modelling the Initial Perceptions of Students.” Education and Information Technologies 24 (2): 1793–1817. doi:10.1007/s10639-018-09858-z.

- Kumar, B. A., and S. S. Chand. 2019. “Mobile Learning Adoption: A Systematic Review.” Education and Information Technologies 24 (1): 471–487. doi:10.1007/s10639-018-9783-6.

- Lassar, W. M., C. Manolis, and S. S. Lassar. 2005. “The Relationship Between Consumer Innovativeness, Personal Characteristics, and Online Banking Adoption.” International Journal of Bank Marketing 23 (2): 176–199. doi:10.1108/02652320510584403.

- Latip, M. S. A., I. Noh, M. Tamrin, and S. N. N. A. Latip. 2020. “Students’ Acceptance for e-Learning and the Effects of Self-Efficacy in Malaysia.” International Journal of Academic Research in Business and Social Sciences 10 (5): 658–674. doi:10.6007/IJARBSS/v10-i5/7239.

- Lee, Y. C. 2006. “An Empirical Investigation Into Factors Influencing the Adoption of an e-Learning System.” Online Information Review 30 (5): 517–541. doi:10.1108/14684520610706406.

- Lewis, W., R. Agarwal, and V. Sambamurthy. 2003. “Sources of Influence on Beliefs About Information Technology use: an Empirical Study of Knowledge Workers.” MIS Quarterly 27: 657–678. doi:10.2307/30036552.

- Li, X. 2020. “Students’ Acceptance of Mobile Learning: An Empirical Study Based on Blackboard Mobile Learn.” In Mobile Devices in Education: Breakthroughs in Research and Practice, 354–373. IGI Global. doi:10.4018/978-1-7998-1757-4.ch022.

- Lian, J., and T. Lin. 2008. “Effects of Consumer Characteristics on Their Acceptance of Online Shopping: Comparisons among Different Product Types.” Computers in Human Behavior 24 (1): 48–65. doi:10.1016/j.chb.2007.01.002.

- Liao, H., X. Mi, Z. Xu, J. Xu, and F. Herrera. 2018. “Intuitionistic Fuzzy Analytic Network Process.” IEEE Transactions on Fuzzy Systems 26 (5): 2578–2590. doi:10.1109/TFUZZ.2017.2788881.

- Lim, C. P., and M. Khine. 2006. “Managing Teachers’ Barriers to ICT Integration in Singapore Schools.” Journal of Technology and Teacher Education 14 (1): 97–125.

- Lin, X., and S. Su. 2020. “Chinese College Students’ Attitude and Intention of Adopting Mobile Learning.” International Journal of Education and Development Using Information and Communication Technology 16 (2): 6–21.

- Liu, Y., S. Han, and H. Li. 2010. “Understanding the Factors Driving m-Learning Adoption: A Literature Review.” Campus-Wide Information Systems 27 (4): 210–226. doi:10.1108/10650741011073761.

- Liu, Y., and H. Li. 2010. “Mobile Internet Diffusion in China: An Empirical Study.” Industrial Management & Data Systems 110 (3): 309–324. doi:10.1108/02635571011030006.

- Liu, Y., H. Li, and C. Carlsson. 2010. “Factors Driving the Adoption of m-Learning: An Empirical Study.” Computers & Education 55 (3): 1211–1219. doi:10.1016/j.compedu.2010.05.018.

- Lowenthal, J. 2010. “Using Mobile Learning: Determinates Impacting Behavioral Intention.” The American Journal of Distance Education 24 (4): 195–206. doi:10.1080/08923647.2010.519947.

- Lowenthal, P. R. 2010. “The Evolution and Influence of Social Presence Theory on Online Learning.” In Social computing: Concepts, Methodologies, Tools, and Applications, 113–128. IGI Global.

- Lu, X., and D. Viehland. 2008. “Factors Influencing the Adoption of Mobile Learning.” ACIS 2008 Proceedings, 56.

- Lu, J., J. E. Yao, and C. S. Yu. 2005. “Personal Innovativeness, Social Influences and Adoption of Wireless Internet Services via Mobile Technology.” Journal of Strategic Information Systems 14 (3): 245–268. doi:10.1016/j.jsis.2005.07.003.

- Ma, H., Z. Zheng, F. Ye, and S. Tong. 2010, November. “The Applied Research of Cloud Computing in the Construction of Collaborative Learning Platform Under e-Learning Environment.” In 2010 International Conference on System Science, Engineering Design and Manufacturing Informatization, Vol. 1 (pp. 190-192). IEEE. doi:10.1109/ICSEM.2010.58.

- Macharis, C., J. Springael, K. De Brucker, and A. Verbeke. 2004. “Promethee and AHP: The Design of Operational Synergies in Multicriteria Analysis. Strengthening Promethee with Ideas of AHP.” European Journal of Operational Research 153: 307–317. doi:10.1016/S0377-2217(03)00153-X.

- Major, L., B. Haßler, and S. Hennessy. 2017. “Tablet use in Schools: Impact, Affordances and Considerations.” In Handbook on Digital Learning for K-12 Schools, 115–128. Springer, Cham. doi:10.1007/978-3-319-33808-8_8.

- Malik, S., M. Al-Emran, R. Mathew, R. Tawafak, and G. AlFarsi. 2020. “Comparison of E-Learning, M-Learning and Game-Based Learning in Programming Education–A Gendered Analysis.” International Journal of Emerging Technologies in Learning 15 (15): 133–146.

- Marquez, J., J. Villanueva, Z. Solarte, and A. Garcia. 2016. “IoT in Education: Integration of Objects with Virtual Academic Communities.” In New Advances in Information Systems and Technologies, 201–212. Springer, Cham. doi:10.1007/978-3-319-31232-3_19.

- Matute-Vallejo, J., and I. Melero-Polo. 2019. “Understanding Online Business Simulation Games: The Role of Flow Experience, Perceived Enjoyment and Personal Innovativeness.” Australasian Journal of Educational Technology 35 (3): 71–85. doi:10.14742/ajet.3862.

- McGarr, O. 2009. “A Review of Podcasting in Higher Education: Its Influence on the Traditional Lecture.” Australasian Journal of Educational Technology 25 (3). doi:10.14742/ajet.1136.

- McKinney, D., J. L. Dyck, and E. S. Luber. 2009. “iTunes University and the Classroom: Can Podcasts Replace Professors?” Computers & Education 52 (3): 617–623. doi:10.1016/j.compedu.2008.11.004.

- McLaughlin, J. E., L. M. Griffin, D. A. Esserman, C. A. Davidson, D. M. Glatt, M. T. Roth, N. Gharkholonarehe, and R. J. Mumper. 2013. “Pharmacy Student Engagement, Performance, and Perception in a Flipped Satellite Classroom.” American Journal of Pharmaceutical Education 77 (9): 196. doi:10.5688/ajpe779196.

- Miller, H. B., and J. A. Cuevas. 2017. “Mobile Learning and its Effects on Academic Achievement and Student Motivation in Middle Grades Students.” International Journal for the Scholarship of Technology Enhanced Learning 1 (2): 91–110.

- Millet, I., and W. C. Wedley. 2002. “Modelling Risk and Uncertainty with the Analytic Hierarchy Process.” Journal of Multi-Criteria Decision Analysis 11: 97–107.

- Milošević, I., D. Živković, D. Manasijević, and D. Nikolić. 2015. “The Effects of the Intended Behavior of Students in the Use of M-Learning.” Computers in Human Behavior 51: 207–215. doi:10.1016/j.chb.2015.04.041.

- Mohammadi, H. 2015. “Investigating Users’ Perspectives on e-Learning: An Integration of TAM and IS Success Model.” Computers in Human Behavior 45: 359–374. doi:10.1016/j.chb.2014.07.044.

- Muhamad, W., N. B. Kurniawan, S. Suhardi, and S. Yazid. 2017. “Smart Campus Features, Technologies, and Applications: A Systematic Literature Review.” In 2017 International Conference on Information Technology Systems and Innovation (ICITSI), 384–391. IEEE. doi:10.1109/ICITSI.2017.8267975.

- Nassuora, A. B. 2012. “Students Acceptance of Mobile Learning for Higher Education in Saudi Arabia.” American Academic & Scholarly Research Journal 4 (2): 24–30.

- Navlani, A. 2019. “Introduction to Factor Analysis in Python.” DataCamp. Accessed March 2021. https://www.datacamp.com/community/tutorials/introduction-factor-analysis.

- Ng, J. W., N. Azarmi, M. Leida, F. Saffre, A. Afzal, and P. D. Yoo. 2010. “The Intelligent Campus (iCampus): End-to-End Learning Lifecycle of a Knowledge Ecosystem.” In 2010 Sixth International Conference on Intelligent Environments, 332–337. IEEE. doi:10.1109/IE.2010.68.

- Nielit, S. G., and S. Thanuskodi. 2020. “E-Discovery Components of E-Teaching and M-Learning: An Overview.” In Mobile Devices in Education: Breakthroughs in Research and Practice, 928–936. doi:10.4018/978-1-7998-1757-4.ch053.

- Nik-Mohammadi, F., and Q. H. Barekat. 2015. “Evaluating the Effect of Creating Smart Schools with Educational Performance of Teachers at Elementary Schools in Dezful City.” International Journal on New Trends in Education and Literature 7 (1): 37–50.

- Nyembe, B. Z. M., and G. R. Howard. 2019, August. “The Utilities of Prominent Learning Theories for Mobile Collaborative Learning (MCL) with Reference to WhatsApp and m-Learning.” In 2019 International Conference on Advances in Big Data, Computing and Data Communication Systems (icABCD), 1–6. IEEE. doi:10.1109/ICABCD.2019.8851042.

- Oh, S., X. Y. Lehto, and J. Park. 2009. “Travelers’ Intent to Use Mobile Technologies as a Function of Effort and Performance Expectancy.” Journal of Hospitality Marketing & Management 18 (8): 765–781. doi:10.1080/19368620903235795.

- Okai-Ugbaje, S., K. Ardzejewska, A. Imran, A. Yakubu, and M. Yakubu. 2020. “Cloud-Based M-Learning: A Pedagogical Tool to Manage Infrastructural Limitations and Enhance Learning.” International Journal of Education and Development Using Information and Communication Technology 16 (2): 48–67.

- Otieno, K. O. 2010. “Teaching/Learning Resources and Academic Performance in Mathematics in Secondary Schools in Bondo District of Kenya.” Asian Social Science 6 (12): 126.

- Palalas, A., and N. Wark. 2020. “The Relationship Between Mobile Learning and Self-Regulated Learning: A Systematic Review.” Australasian Journal of Educational Technology 36 (4): 151–172. doi:10.14742/ajet.5650.

- Pan, W., A. R. Dainty, and A. G. Gibb. 2012. “Establishing and Weighting Decision Criteria for Building System Selection in Housing Construction.” Journal of Construction Engineering and Management 138 (11): 1239–1250. doi:10.1061/(ASCE)CO.1943-7862.0000543.

- Patil, P., K. Tamilmani, N. P. Rana, and V. Raghavan. 2020. “Understanding Consumer Adoption of Mobile Payment in India: Extending Meta-UTAUT Model with Personal Innovativeness, Anxiety, Trust, and Grievance Redressal.” International Journal of Information Management 54: 102144. doi:10.1016/j.ijinfomgt.2020.102144.

- Purwanto, E., and J. Loisa. 2020. “The Intention and Use Behaviour of the Mobile Banking System in Indonesia: UTAUT Model.” Technology Reports of Kansai University 62 (6): 2757–2767.

- Rahman, M. M. 2020. “EFL Learners’ Perceptions About the Use of Mobile Learning During COVID-19.” Journal of Southwest Jiaotong University 55 (5): 1–7. doi:10.35741/issn.0258-2724.55.5.10.

- Rai, A., S. S. Lang, and R. B. Welker. 2002. “Assessing the Validity of IS Success Models: An Empirical Test and Theoretical Analysis.” Information Systems Research 13 (1): 50–69. doi:10.1287/isre.13.1.50.96.

- Ramanathan, R. 2001. “A Note on the Use of the Analytic Hierarchy Process for Environmental Impact Assessment.” Journal of Environmental Management 63: 27–35. doi:10.1006/jema.2001.0455.

- Rogers, E. M. 2003. Diffusion of Innovations. New York: The Free Press.

- Ross, R. J., and M. T. Grinder. 2002. “Hypertextbooks: Animated, Active Learning, Comprehensive Teaching and Learning Resources for the Web.” In Software Visualization, edited by S. Diehl, 269–283. Berlin, Heidelberg: Springer.

- Rossing, J. P., W. M. Miller, A. K. Cecil, and S. E. Stamper. 2012. “iLearning: The Future of Higher Education? Student Perceptions on Learning with Mobile Tablets.” Journal of the Scholarship of Teaching and Learning 12 (2): 1–26.

- Saaty, T. L. 1980. The Analytic Hierarchy Process. New York: McGraw-Hill.

- Saaty, T. L., and L. G. Vargas. 2013. The Logic of Priorities: Applications of Business, Energy, Health and Transportation. New York: Springer Science & Business Media.

- Sabah, N. M. 2016. “Exploring Students’ Awareness and Perceptions: Influencing Factors and Individual Differences Driving m-Learning Adoption.” Computers in Human Behavior 65: 522–533. doi:10.1016/j.chb.2016.09.009.

- Salloum, S. A., M. Al-Emran, K. Shaalan, and A. Tarhini. 2019. “Factors Affecting the E-Learning Acceptance: A Case Study from UAE.” Education and Information Technologies 24 (1): 509–530. doi:10.1007/s10639-018-9786-3.

- Sekaran, K., M. S. Khan, R. Patan, A. H. Gandomi, P. V. Krishna, and S. Kallam. 2019. “Improving the Response Time of m-Learning and Cloud Computing Environments Using a Dominant Firefly Approach.” IEEE Access 7: 30203–30212. doi:10.1109/ACCESS.2019.2896253.

- Senaratne, S. I., S. M. Samarasinghe, and G. Jayewardenepura. 2019. “Factors Affecting the Intention to Adopt m-Learning.” International Business Research 12 (2): 150–164. doi:10.5539/ibr.v12n2p150.

- Seralidou, E., C. Douligeris, and C. Gralista. 2019. “EduApp: A Collaborative Application for Mobile Devices to Support the Educational Process in Greek Secondary Education.” In 2019 IEEE Global Engineering Education Conference (EDUCON), 189–198. IEEE. doi:10.1109/EDUCON.2019.8725175.

- Shorfuzzaman, M., M. S. Hossain, A. Nazir, G. Muhammad, and A. Alamri. 2019. “Harnessing the Power of Big Data Analytics in the Cloud to Support Learning Analytics in Mobile Learning Environment.” Computers in Human Behavior 92: 578–588. doi:10.1016/j.chb.2018.07.002.

- Shukla, S. 2021. “M-learning Adoption of Management Students’: A Case of India.” Education and Information Technologies 26 (1): 279–310.

- Sidik, D., and F. Syafar. 2020. “Exploring the Factors Influencing Student’s Intention to Use Mobile Learning in Indonesia Higher Education.” Education and Information Technologies 25 (6): 4781–4796. doi:10.1007/s10639-020-10271-8.

- Summers, J. J., A. Waigandt, and T. A. Whittaker. 2005. “A Comparison of Student Achievement and Satisfaction in an Online Versus a Traditional Face-to-Face Statistics Class.” Innovative Higher Education 29 (3): 233–250. doi:10.1007/s10755-005-1938-x.

- Sung, H., D. Jeong, Y. S. Jeong, and J. I. Shin. 2015. “The Relationship among Self-Efficacy, Social Influence, Performance Expectancy, Effort Expectancy, and Behavioral Intention in Mobile Learning Service.” International Journal of u-and e-Service, Science and Technology 8 (9): 197–206. doi:10.14257/ijunesst.2015.8.9.21.

- Swanson, J. A. 2020. “Assessing the Effectiveness of the Use of Mobile Technology in a Collegiate Course: A Case Study in M-Learning.” Technology, Knowledge and Learning 25 (2): 389–408. doi:10.1007/s10758-018-9372-1.

- Tavares, R. M., J. L. Tavares, and S. L. Parry-Jones. 2008. “The Use of a Mathematical Multicriteria Decision-Making Model for Selecting the Fire Origin Room.” Building and Environment 43 (12): 2090–2100. doi:10.1016/j.buildenv.2007.12.010.

- Thomas, B. J., and J. Hajiyev. 2020. “The Direct and Indirect Effects of Personality on Data Breach in Education Through the Task-Related Compulsive Technology Use: M-Learning Perspective.” International Journal of Computing and Digital Systems 9 (3): 459–469. doi:10.12785/ijcds/090310.

- Thongsri, N., L. Shen, and Y. Bao. 2019. “Investigating Factors Affecting Learner’s Perception Toward Online Learning: Evidence from ClassStart Application in Thailand.” Behaviour & Information Technology 38 (12): 1243–1258. doi:10.1080/0144929X.2019.1581259.

- Venkatesh, V., and F. D. Davis. 2000. “A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies.” Management Science 46 (2): 186–204.

- Venkatesh, V., and M. G. Morris. 2000. “Why Don’t Men Ever Stop to Ask for Directions? Gender, Social Influence, and Their Role in Technology Acceptance and Usage Behavior.” MIS Quarterly 24 (1): 115–139. doi:10.2307/3250981.

- Venkatesh, V., M. G. Morris, and P. L. Ackerman. 2000. “A Longitudinal Field Investigation of Gender Differences in Individual Technology Adoption Decision Making Processes.” Organizational Behavior and Human Decision Processes 83 (1): 33–60. doi:10.1006/obhd.2000.2896.

- Venkatesh, V., M. G. Morris, G. B. Davis, and F. D. Davis. 2003. “User Acceptance of Information Technology: Toward a Unified View.” MIS Quarterly 27 (3): 425–478. doi:10.2307/30036540.

- Vermunt, J. D. 2005. “Relations Between Student Learning Patterns and Personal and Contextual Factors and Academic Performance.” Higher Education 49 (3): 205–234. doi:10.1007/s10734-004-6664-2.

- Vrana, R. 2018. “Acceptance of Mobile Technologies and m-Learning in Higher Education Learning: An Explorative Study at the Faculty of Humanities and Social Science at the University of Zagreb.” In 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), 738–743. IEEE. doi:10.23919/MIPRO.2018.8400137.

- Wang, C. Y., S. C. T. Chou, and H. C. Chang. 2010. “Exploring an Individual’s Intention to Use Blogs: The Roles of Social, Motivational and Individual Factors.” In Proceedings of the Pacific Asia Conference on Information Systems (PACIS), 161.

- Wang, H., D. Tao, N. Yu, and X. Qu. 2020. “Understanding Consumer Acceptance of Healthcare Wearable Devices: An Integrated Model of UTAUT and TTF.” International Journal of Medical Informatics 139: 104156. doi:10.1016/j.ijmedinf.2020.104156.

- Wang, Y., M. Wu, and H. Wang. 2009. “Investigating the Determinants and Age and Gender Differences in the Acceptance of Mobile Learning.” British Journal of Educational Technology 40 (1): 92–118. doi:10.1111/j.1467-8535.2007.00809.x.

- Wu, H. Y., H. S. Wu, and Y. P. Su. 2019, June. “Exploring the Critical Factors Affecting College Students’ Intelligent Learning.” In EdMedia+ Innovate Learning, edited by J. Theo Bastiaens, 806–817. Amsterdam, Netherlands: Association for the Advancement of Computing in Education (AACE).

- Yarmatov, R., and M. Ahmedova. 2020. “The Formation of Positive Motivation through Intellectual Games in Teaching English in Continuous Education.” Архив Научных Публикаций JSPI [Archive of Scientific Publications JSPI], 1–7.

- Yi, M. Y., J. D. Jackson, J. S. Park, and J. C. Probst. 2006. “Understanding Information Technology Acceptance by Individual Professionals: Toward an Integrative View.” Information & Management 43 (3): 350–363. doi:10.1016/j.im.2005.08.006.

- Zahir, S. 1999. “Clusters in Group: Decision Making in the Vector Space Formulation of the Analytic Hierarchy Process.” European Journal of Operational Research 112: 620–634. doi:10.1016/S0377-2217(98)00021-6.

- Zakaria, M. I., S. M. Maat, and F. Khalid. 2019. “A Systematic Review of M-Learning in Formal Education.” People 7 (11): 1–24.