ABSTRACT

The use of virtual reality (VR) has grown significantly in recent years and presents opportunities for use in many domain areas. Head-mounted displays (HMDs) also present unique opportunities for implementing audio feedback that is congruent with head and body movements, thus matching intuitive expectations. However, audio in VR is still undervalued, and audio-centred research in VR lacks consistency. To address this shortcoming and present an overview of this area of research, we conducted a scoping review (n = 121) focusing on the use of audio in HMD-based VR and its effects on user/player experience. The results show a lack of standardisation for common measures such as pleasantness and emphasise the context-specific ability of audio to influence a variety of affective, cognitive, and motivational measures. Findings for presence are mixed, and both research on social experiences and descriptive research are generally lacking.

1. Introduction

The use of audio in virtual reality (VR) applications presents opportunities for novel uses and has received considerable attention (Kiss et al. 2020; Serafin et al. 2018). Audio can serve several functions in VR applications, such as providing action sounds based on interactions (Serafin et al. 2018), which can be sourced/localized to specific virtual objects (Kiss et al. 2020) and can be used to direct users’ gaze and attention (Eames 2019; McArthur et al. 2017; Ulsamer et al. 2020). Audio can also create environment sounds or soundscapes that provide information about the surrounding virtual environment (Serafin et al. 2018) and play a crucial role in constructing perceived virtual spaces (Nordahl and Nilsson 2014). However, while the importance of audio for the overall VR user experience is recognized (Kern and Ellermeier 2020; Serafin et al. 2018), there is still a lack of secondary research reviewing the existing body of work on how audio strategies affect the user experience (UX) and player experience (PX) of VR applications.

In the broader context of human–computer interaction (HCI), audio has received considerable attention for enhancing a range of contexts through the sonification of interfaces and information (Papadopoulos et al. 2016; Sanchez 2010). Using audio as part of interaction mechanisms has many advantages: audio feedback is often linked with shorter interaction times than visual feedback (Westerberg and Schoenau-Fog 2015); it is perceived omnidirectionally and constantly, since we cannot close our ears (Serafin et al. 2018); it does not clutter the visual real estate (Palani and Giudice 2014); and it can cater to users with visual impairments (Guillen et al. 2021; Rocchesso et al. 2019).

In the context of audio delivery and manipulation, VR affords more intuitive interactions than typical desktop computer environments by supporting sensorimotor contingencies (SCs), such as using head tracking as an input mechanism for perception within a 3-D environment (Slater 2009). These SCs afford unique interactions relating to the psychoacoustic perception of audio; for example, VR users can use head movements to help localise audio in 3-D virtual spaces (Noisternig et al. 2003), which can enhance the sense of ‘kinesonic congruence’ between physical actions and the resulting audio cues (Collins 2013, 57). Audio can also help solve some of the problems of content delivery in headset-based VR: because users can move their heads freely, designers cannot frame content visually, but audio can take on this framing role instead (McArthur et al. 2017).

While previous work in VR has investigated the effects of various qualities of visual displays on UX (Dickinson et al. 2019; Latoschik et al. 2019; Men et al. 2017), comparatively little attention has been given to the audio modality, which is often neglected in the development of media projects (McArthur et al. 2017). There is also still a lack of clear design guidelines that consider the effects of various aspects of audio on UX in different VR contexts (Kelling et al. 2018; Kiss et al. 2020; McArthur et al. 2017) and, as such, there is a general consensus that the audio modality is still underutilised in VR (Serafin et al. 2018).

Previous review papers in the broad area of virtual environments and audio have investigated the role of audio in games (Guillen et al. 2021) and provided an overview of audio games, i.e. games driven primarily by audio feedback (Urbanek and Güldenpfennig 2019). Specific to VR, review papers have investigated the UX of VR systems in a healthcare context (Mäkinen et al. 2022) and more generally (Kim, Rhiu, and Yun 2020). However, none of these papers focused on the role of audio in UX, which points toward a lack of comprehensive studies analyzing how audio affects the user experience of VR. Filling this gap would help identify the domain areas in which audio is currently being studied and, in turn, those in which such a focus is lacking. Similarly, investigating which aspects of user/player experience are being studied, and how the introduction of audio feedback affects them, would provide further insight. Finally, such knowledge would have implications for researchers of audio-focused VR, such as pointing toward opportunities for more focused systematic reviews (Arksey and O’Malley 2005), and would give practitioners insight into how audio might be used in VR applications. Therefore, this article aims to provide a basis for the use of sound in VR through a scoping review that identifies the range of contexts and outcomes being studied and how they are being studied (Munn et al. 2018), as well as an overview of the experiential outcomes.

The literature review was guided by the following research questions:

RQ1: In which contexts are the effects of audio in HMD-based VR being studied?

RQ2: Which aspects of experience are being studied with regard to audio in HMD-based VR research?

RQ3: What insights does the research literature provide about the effect of audio on HMD-based VR experiences?

RQ4: What implications can be drawn from the literature regarding the design of future HMD-based VR audio research?

RQ1–3 all pertain to the current state of the research and are addressed in the Results and Discussion sections, whereas RQ4 pertains to future work and the research agenda for audio in VR and is addressed in the Future Agenda section.

1.1. Defining VR

Although interest in VR has grown significantly since its inception, there is still little consensus on what the term refers to (Bujic and Hamari 2020; Høeg et al. 2021). For example, the term is often used to refer to ‘immersive’ technologies that use head-mounted displays (HMDs) (Hruby 2019; Rogers et al. 2018) or CAVE-based systems (Sahai et al. 2012), but also to more traditional desktop-and-screen systems (e.g. Buele et al. 2020; Lim et al. 2017), and some have even posited that a wide variety of media, such as film, photography, and music, can also be considered a type of ‘virtual reality’ (Blascovich and Bailenson 2011, chap. 3; van Elferen 2011). Furthermore, reality and virtuality can be mixed to varying degrees in different modalities, such as by providing a visual augmented reality and an aural virtual reality (Larsson et al. 2010). Thus, to define our scope more clearly, we use the term VR to refer to systems that use a variety of display and tracking devices to allow for a set of intuitive interactions, i.e. valid SCs, thus facilitating a degree of natural interaction with a virtual environment (VE), and that provide the user with feedback relevant to the VE in any number of modalities (Larsson et al. 2010; Nordahl and Nilsson 2014; Slater 2009). We further limit our scope to HMD-based VR for the reasons explained below.

1.2. Audio in VR

Perhaps the most frequently discussed topic in the context of VR audio is binaural or spatial audio, which refers to audio delivered in such a way as to mimic human perception of audio in real 3-D environments, thus providing each 3-D object with a localisable 3-D audio source (Geronazzo et al. 2013). Binaural audio presents opportunities for novel uses in VR, such as giving virtual objects their own localisable audio sources, which can make better use of the spatiality of VR environments without being as invasive as visual feedback (Kiss et al. 2020). Spatialization of this kind is enabled by high-fidelity tracking technology, which caters for valid SCs in the form of moving one's head and hearing the resulting change in audio (Noisternig et al. 2003). One audio-focused area where this might be valuable is soundscape studies, which focus on the impact of sound and acoustics on the experiential properties of architectural and urban spaces (Brambilla and Di Gabriele 2009; Oberman, Bojanić Obad Šćitaroci, and Jambrošić 2018). This includes investigating different types of sounds, such as natural vs. artificial, and the effects they might have on human experiences, such as annoyance or restorativeness (Harriet and Murphy 2015; Uebel et al. 2021).

The use of VR in various contexts presents many opportunities but also new challenges, some of which can be addressed by the effective use of audio (McArthur et al. 2017; Serafin et al. 2018). In a narrative context where users/players are free to look around their environment, audio cues can be used to direct users’ gaze and attention to critical points (Eames 2019; McArthur et al. 2017; Ulsamer et al. 2020), to avoid cluttering the limited visual real estate (Kiss et al. 2020; Shaw et al. 1993), and to provide a constant stream of information for discerning spatial attributes of the environment itself (Nordahl and Nilsson 2014). Sound is also ‘always turned on’ (Larsson et al. 2010; Nordahl and Nilsson 2014), can convey spatial characteristics, such as through reverberation (Larsson et al. 2010), and has an omnidirectional quality (Jørgensen 2006; Turner et al. 2009) that does not rely on the limited visual field of view of display devices such as HMDs (Collins and Kapralos 2012). In contrast to visual feedback, which might be static, the temporal nature of sound creates feedback that is always changing and ‘in motion’ (Larsson et al. 2010; Serafin et al. 2018). Combined with the spatial properties of sound, this is especially relevant for facilitating the ‘place illusion’, i.e. the illusion of being in a different physical environment, with minimal cognitive effort (Slater 2009; Turner et al. 2009). Relatedly, our ability to judge temporal differences is much more precise in the auditory than in the visual domain (Serafin 2020).
However, while these place-like qualities of effective audio feedback give sound the potential to be a critical factor in making users feel present in VEs, the size of this effect, and how it varies across audio types, remains unclear (Kern and Ellermeier 2020).

Conversely, some properties of VR technology have the potential to be advantageous in various audio-driven contexts, such as music listening (Janer et al. 2016) and music composition (Turchet, Carraro, and Tomasetti 2022). For music listening, VR offers the potential for immersive experiences of recorded performances and could provide a degree of flexibility, such as focusing on specific instruments in an orchestra (Janer et al. 2016). For music and soundscape composition, the immersive aspect of VR has been found to increase the enjoyment of and engagement with the experience (Turchet, Carraro, and Tomasetti 2022), and VR's gestural tracking offers the possibility of extending the currently limited range of gesture-controlled instruments (Cavdir and Wang 2022), as well as the flexibility to customise one's environment (Schlagowski et al. 2022). However, these areas are still relatively underexplored, and it remains unclear how best to use VR in them (Schlagowski et al. 2022; Turchet, Carraro, and Tomasetti 2022). A logical starting point for addressing such concerns is a deeper understanding of the experience created by audio feedback in VR.

The tight coupling between interaction in the form of head movements and perceiving a change in the environment is closely tied to one of the most-discussed topics in VR research, namely presence (Kern and Ellermeier 2020; Nordahl and Nilsson 2014), since VR users adopt an ‘egocentric virtual body representation’ (Slater and Usoh 1993) or a sense of ‘body ownership’ (Nordahl and Nilsson 2014). This allows them to experience the virtual environment as if from a virtual body and allows for a sense of ‘kinesonic congruity’ between embodied player action and the resulting action sounds (Collins 2013, 63). For this reason, the current study focuses on HMDs with head tracking, since their high-fidelity tracking allows for valid SCs in the form of head movements and more closely resembles a natural listening experience (Olko et al. 2017; Ooi et al. 2017). HMD-based systems also generally rely on gestural input, which may strengthen users’ identification with virtual characters/avatars (Isbister 2016, 102). Since the focus of this research is on audio, we also include audio-only VR solutions, provided they make use of head tracking and adapt audio accordingly (e.g. Garcia et al. 2015).

1.3. Shortcomings in VR audio research

Although interest in audio has grown significantly in HCI (Rocchesso et al. 2019), in VR (Kiss et al. 2020; Serafin et al. 2018), and in games (Collins 2020; van Elferen 2020), there is still much confusion and contradiction regarding how audio affects user and player experience in HMD-based VR (Kapralos et al. 2017; Kern and Ellermeier 2020; Rogers 2017; Rogers et al. 2020). There are many possible reasons for this, including the novelty bias associated with newer technologies such as HMDs (Rogers et al. 2020) and a limited understanding of various concepts in audio design (Jørgensen 2011; Ribeiro et al. 2020), especially as they pertain to VR (Jain et al. 2021).

Research in VR audio is often limited to purely performance-driven metrics, such as the ability to accurately localise audio in 3-D space, at the expense of other perceptual/experiential measures that might benefit from further quantitative and qualitative investigation (Jenny and Reuter 2021). There is also a general lack of comparability between studies owing to differences in research design, such as how audio cues were presented, which aspects were studied, which technologies were used, and the differing roles played by audio (Jørgensen 2017; Kern and Ellermeier 2018; Kern and Ellermeier 2020; Kern et al. 2020). Audio classification also tends to focus on the characteristics of sound sources at the expense of other potentially useful factors, such as the intent behind a sound (Jain et al. 2021). By investigating the use of audio in various HMD-based VR contexts and conducting secondary research on the experiences created in these contexts, we aim to provide insight into which aspects of user and player experience are being studied and how, as well as to provide a basis for future research on audio for HMD-based VR.

2. Method

This study uses a scoping review to assess the effects of audio on user experience in HMD-based VR. The goal of the review is to present and analyze the current state of the art in this area using a protocol that allows for rigour and accountability. More specifically, a scoping review aims to map the findings from an often-heterogeneous area of research, providing an overview of the range of research and pointing out gaps (Arksey and O’Malley 2005). A scoping review was selected because the broader topic of audio-driven VR has not been explored in previous reviews; as such, a broader, exploratory approach that provides an entry point into this area was deemed appropriate (Chang 2018). The following three sections detail the protocol for searching and screening articles and for analyzing and presenting data, which was based on the PRISMA-ScR checklist for scoping reviews (Tricco et al. 2018).

2.1. Search strategy

A search string was created targeting the three main areas being investigated, namely VR, audio, and user/player experience. To cover the various ways audio feedback is referred to in the literature, a variety of audio-related terms was used in the search string, as shown below. Furthermore, to include the widest possible interpretation of the concepts of ‘user experience’ and ‘player experience’, the more general term ‘experience’ was used instead. The two databases targeted were Scopus and the ACM Digital Library (ACM DL), and the search strings retrieved matches from the title, abstract, and/or keywords. The exact search strings used for each database were as follows:

Scopus:

TITLE-ABS-KEY ((VR OR (virtual AND reality)) AND (sound* OR music* OR audio* OR audito* OR aural* OR acoustic* OR sonic*) AND (experience* OR UX OR PX)) AND (LIMIT-TO (DOCTYPE,"cp") OR LIMIT-TO (DOCTYPE,"ar") OR LIMIT-TO (DOCTYPE,"ch"))

ACM:

(Abstract: (VR OR (virtual AND Reality)) OR Title: (VR OR (virtual AND Reality)) OR Keywords: (VR OR (virtual AND Reality)))

AND

(Abstract: (sound* OR music* OR audio* OR audito* OR aural* OR acoustic* OR sonic*) OR Title: (sound* OR music* OR audio* OR audito* OR aural* OR acoustic* OR sonic*) OR Keywords: (sound* OR music* OR audio* OR audito* OR aural* OR acoustic* OR sonic*))

AND

(Abstract: (experience* OR UX OR PX) OR Title: (experience* OR UX OR PX) OR Keywords: (experience* OR UX OR PX))
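Both strings combine the same three term groups: synonyms are joined with OR inside a group, and the groups are joined with AND. The Python sketch below rebuilds the strings from those term lists to make that structure explicit. The helper names (`or_group`, `scopus_query`, `acm_query`) are hypothetical and belong to neither database's API; the code only assembles query text and does not contact Scopus or the ACM DL. It also writes "reality" in lowercase, whereas the ACM string above capitalises it.

```python
# Hypothetical helpers (not part of any Scopus or ACM API): they only build query text.
VR_TERMS = ["VR", "(virtual AND reality)"]
AUDIO_TERMS = ["sound*", "music*", "audio*", "audito*", "aural*", "acoustic*", "sonic*"]
EXPERIENCE_TERMS = ["experience*", "UX", "PX"]


def or_group(terms: list[str]) -> str:
    """Join synonyms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def scopus_query(groups: list[list[str]], doc_types: list[str]) -> str:
    """AND the term groups inside TITLE-ABS-KEY and restrict the document types."""
    core = " AND ".join(or_group(g) for g in groups)
    limits = " OR ".join(f'LIMIT-TO (DOCTYPE,"{d}")' for d in doc_types)
    return f"TITLE-ABS-KEY ({core}) AND ({limits})"


def acm_query(groups: list[list[str]],
              fields: tuple[str, ...] = ("Abstract", "Title", "Keywords")) -> str:
    """Repeat each term group once per field (OR), then AND the groups together."""
    clauses = []
    for g in groups:
        group = or_group(g)
        clauses.append("(" + " OR ".join(f"{field}: {group}" for field in fields) + ")")
    return "\n\nAND\n\n".join(clauses)


if __name__ == "__main__":
    groups = [VR_TERMS, AUDIO_TERMS, EXPERIENCE_TERMS]
    print(scopus_query(groups, ["cp", "ar", "ch"]))
    print(acm_query(groups))
```

Generating both strings from a single set of term lists means that adding or removing a synonym changes both queries consistently, which is harder to guarantee when the ACM string's repeated field clauses are edited by hand.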

2.2. Review procedure

The following criteria were used to include or exclude retrieved papers:

1. The paper is published in a peer-reviewed venue

2. The paper is in English

3. The full paper is available

4. The paper discusses the influence of audio on user or player experience in the context of HMD-based virtual reality. Checking whether papers were in scope thus involved three sub-criteria:

VR: the study made use of or discussed the use of HMDs or, for purely audio-based solutions, head-tracking systems

Audio: the effects of audio were studied on their own, either quantitatively or qualitatively

Experience: one or more aspects of user or player experience were studied empirically

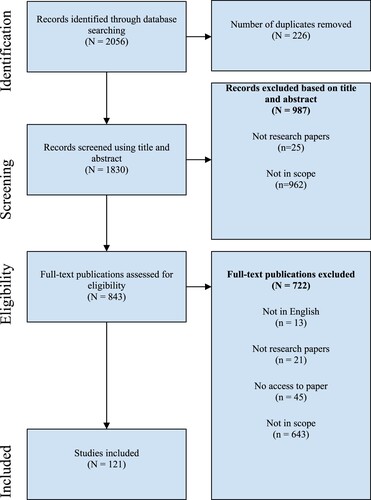

The procedure for finalising the list of articles to analyze was as follows. First, the search strings were used to retrieve all records from the aforementioned databases; these were compiled into a single file and duplicates were removed, leaving 1830 papers. This list was then screened against the selection criteria listed above using the title and abstract of each publication, which resulted in 987 articles being excluded. Of these, 25 were records identified as not being research papers (criterion 1), such as calls for proposals, while the remaining 962 did not fit the scope of the review (criterion 4).

The remaining publications were then assessed against the selection criteria using the full text. Where we did not have access to a publication, we contacted the authors via email or ResearchGate; 33 publications were accessed this way. During the full-text assessment, an additional 722 publications were excluded: 21 because they were not full research papers, such as extended abstracts (criterion 1), 13 because they were not in English (criterion 2), 45 because we could not access them (criterion 3), and the remaining 643 because they did not fit the scope of the review (criterion 4). This left 121 publications for analysis.
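Because each screening stage reports its exclusions per criterion, the counts can be checked for internal consistency by subtracting them in order. The following Python sketch does this under one stated assumption: the 962 scope-based exclusions at the title/abstract stage are derived as 987 − 25 rather than taken from a separately reported figure. The `ScreeningStage` class and `records_remaining` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScreeningStage:
    """One screening pass and its exclusions, keyed by selection criterion."""
    name: str
    exclusions: dict[str, int]

    @property
    def excluded(self) -> int:
        return sum(self.exclusions.values())


def records_remaining(start: int, stages: list[ScreeningStage]) -> list[tuple[str, int]]:
    """Subtract each stage's exclusions in order and report what is left after it."""
    left = start
    trail = []
    for stage in stages:
        left -= stage.excluded
        if left < 0:
            raise ValueError(f"{stage.name}: more exclusions than records")
        trail.append((stage.name, left))
    return trail


stages = [
    # 962 is derived as 987 - 25 (records excluded for scope at this stage).
    ScreeningStage("title/abstract", {"criterion 1": 25, "criterion 4": 962}),
    ScreeningStage("full text", {"criterion 1": 21, "criterion 2": 13,
                                 "criterion 3": 45, "criterion 4": 643}),
]

print(records_remaining(1830, stages))
# [('title/abstract', 843), ('full text', 121)]
```

The trail reproduces the reported flow: 1830 deduplicated records, 843 after title/abstract screening, and 121 after full-text assessment.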

2.3. Analysis

The variables extracted from each article, as per the PRISMA-ScR requirements (Tricco et al. 2018), are described in Table 1:

Table 1. Extracted data from articles.

These variables were selected to provide a broad overview of the area of study and to point out possible gaps/shortcomings. The publication venue and domain area indicate the context in which each study was conducted, which was expected to provide insights into areas where audio-driven research might be lacking. Study design, data collection tools, and analysis methods provide an overview of the methodological choices that guide the research and can inform specific suggestions for addressing methodological concerns in future research. Measured outcomes, questionnaires/scales, and effect of audio specify the topical focus of the various studies and the insights gleaned from each, which point toward experiential outcomes for which audio is effective, ineffective, or unexplored.

The variables were extracted from each article by the first author. During this process, the authors communicated frequently to discuss and resolve issues relating to scope, data coding, and reporting. The synthesis of results began with open coding of all variables except publication venue and year, and categories were inductively created for each variable through author discussion, in a process similar to that of recent review papers (e.g. Pater et al. 2021). This was followed by axial coding (Scott and Medaugh 2017) for the domain areas, measured outcomes, and effects of audio, in which codes were grouped into higher-level categories and existing classifications were retroactively updated. Finally, the data were compiled into tables using these categories, which were used to derive insights and implications.
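The axial-coding step can be pictured as a mapping from open codes to higher-level categories, applied per paper. The Python sketch below illustrates this, assuming a simple dictionary mapping; it is not the authors' tooling. The paper IDs, open codes, and mapping are hypothetical, although the category names echo domain areas discussed in Section 3.3. The sketch also shows why category totals can exceed the number of papers when one paper maps to several categories.

```python
from collections import Counter

# Hypothetical axial-coding map: open code -> higher-level category.
AXIAL_MAP = {
    "music": "Entertainment",
    "games": "Entertainment",
    "narrative": "Entertainment",
    "urban noise": "Soundscape studies",
    "exposure therapy": "Psychotherapy",
    "mindfulness": "Psychotherapy",
    "museum": "Arts and cultural heritage",
}


def categorise(open_codes: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each paper's open codes to a set of categories.

    An unmapped code raises KeyError, flagging it for author discussion.
    """
    return {paper: {AXIAL_MAP[c] for c in codes} for paper, codes in open_codes.items()}


def tally(categories: dict[str, set[str]]) -> Counter:
    """Count papers per category; a multi-category paper counts once in each."""
    return Counter(cat for cats in categories.values() for cat in cats)


papers = {  # hypothetical papers, not drawn from the corpus
    "P1": {"music"},
    "P2": {"mindfulness", "music"},
    "P3": {"urban noise"},
    "P4": {"music", "games"},
}
counts = tally(categorise(papers))
print(counts["Entertainment"], sum(counts.values()))  # 3 5
```

Using sets means a paper with two codes in the same category (P4) is counted only once for that category. P2, however, contributes to two categories, so the five category counts exceed the four papers.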

3. Results

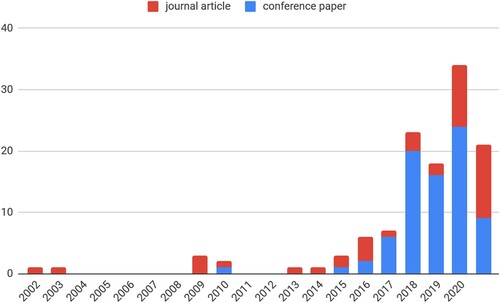

3.1. Publication year

As Figure 2 shows, the number of studies focusing on the use of audio in VR applications has grown significantly over the past 20 years. Note that the database searches were conducted in September 2020, so the number of publications for 2020 is substantially lower than it would be for a full year.

3.2. Publication venue

The breakdown of publication venues for the final list of included publications is given in Table 2.

Table 2. Publication venues of included papers.

The corpus was drawn from 83 unique venues. The most frequent were the INTER-NOISE conference (8 papers), the ACM CHI conference (5 papers), the IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (5 papers), the Liebert Cyberpsychology, Behavior, and Social Networking journal (4 papers), IOS Press Studies in Health Technology and Informatics (3 papers), the IEEE VR Workshop on Sonic Interactions for Virtual Environments (SIVE) (3 papers), and the Elsevier Landscape and Urban Planning journal (3 papers). All other venues had one or two papers each. The corpus did not include any book chapters, possibly because of the inclusion requirement that papers empirically study one or more experiential aspects.

3.3. Domain areas

The domain areas of all included publications are listed in Table 3. Note that the list of domain areas was created inductively and iteratively during the coding process through discussion between all authors, and that some papers fall into more than one domain category.

Table 3. Domain areas of included papers.

The most frequent purpose for studying the effects of audio on VR experiences is entertainment. Likely owing to the focus on audio, this is most often done in studies related to music, such as enabling new techniques or interfaces for musical expression (Chang, Kim, and Kim 2018; Deacon, Stockman, and Barthet 2017), music education in the form of ear training (Fletcher, Hulusic, and Amelidis 2019; Pedersen et al. 2020), and new ways of viewing and listening to recorded music (Droste, Letellier, and Sieck 2018; Holm, Väänänen, and Battah 2020; Kasuya et al. 2019; Lind et al. 2017; Rees-Jones and Daffern 2019; Shin et al. 2019). The corpus also contains a relatively small number of studies that focus specifically on audio and player experience within VR games (Bombeke et al. 2018; Rogers et al. 2018; Wedoff et al. 2019), although this small number may stem from the choice of databases consulted. There is also a focus on audio in other types of narrative experiences, such as linear, video-based experiences (Dumlu and Demir 2020; Gospodarek et al. 2019; Vosmeer and Schouten 2017) or interactive, audio-driven ones (Geronazzo et al. 2019), and, finally, one study using novel interaction techniques to create an immersive scuba diving experience (Jain et al. 2016).

Another popular area for studying the effects of audio on VR experiences is soundscape studies. Soundscape research in this context generally focuses on the effects of different types of sounds in architectural and urban contexts, using VR as a tool for visually and aurally recreating various environments. This includes studies of the effects of artificial noise in urban environments (Aalmoes and Zon 2019; Cranmer et al. 2020; Echevarria Sanchez et al. 2017a, 2017b; Guang-yu, Yu-an, and Haochen 2019; Jeon and Jo 2019; Letwory, Aalmoes, and Miltenburg 2018; Maffei et al. 2013; Masullo, Maffei, and Pellegrino 2019; White et al. 2014) and, to a much lesser extent, of natural soundscapes (Lindquist et al. 2020).

Where audio is studied in the broader domain of psychotherapy, the most frequently studied use of VR is exposure therapy, such as for the treatment of post-traumatic stress disorder (PTSD) (Difede and Hoffman 2002; Josman et al. 2008), phobias (Brinkman et al. 2009; North et al. 2008), and disorders related to sensory processing (Johnston, Egermann, and Kearney 2020; Rossi et al. 2018), as well as the general study of underlying processes related to exposure therapy (Andreano et al. 2009). Mindfulness practice is also a topic of key interest, both in a rehabilitative or analgesic context (Gomez et al. 2017; Navarro-Haro et al. 2016; Pizzoli et al. 2019) and, more generally, in investigating how VR technologies might contribute to mindfulness practice (Prpa et al. 2017, 2018; Seabrook et al. 2020). The experiential role of audio in VR is also studied in various other therapeutic and/or rehabilitative contexts (Byrns et al. 2020; Optale et al. 2001; Park et al. 2020; Sorkin et al. 2008; Zeroth, Dahlquist, and Foxen-Craft 2019). Physical activity and/or rehabilitation is also studied, albeit to a much lesser extent, specifically physical rehabilitation through music therapy (Baka et al. 2018) and the effect of VR and music on the experience of exercise (Bird et al. 2019, 2020).

In the arts and cultural heritage domain, audio is used in the design of experiences that aim to emulate traditional arts/museum experiences (Bialkova and Gisbergen 2017; Kelling et al. 2018; Y. Li, Tennent, and Cobb 2019; Rzayev et al. 2019; Sprung et al. 2018; Tennent et al. 2020), as well as to create new immersive experiences, such as exploring archeological sites (Falconer et al. 2020) and using breath input for novel purposes (Prpa et al. 2017, 2018). In the domain of engineering, audio is used for simulating diagnostics (Barlow et al. 2019; Michailidis et al. 2019) and enabling multimodal feedback for assembly tasks (Yin et al. 2019). In a built environment context, VR is used to study building acoustics (Ishikawa 2019) and traffic crossings (Wu et al. 2018). There is also one instance of using VR to investigate experience in a culinary context (Ibrahim and Wan Bashir 2019).

Lastly, most papers that include some investigation of audio effects on the experience of VR did so in a broader context, not specific to a single domain/discipline, but rather as part of research into the medium of VR itself. This includes studies that focus on accessible or assistive technologies, including audio-only VR for people with visual impairments (Bălan et al. 2018; Picinali et al. 2014; Siu et al. 2020; Wedoff et al. 2019), and investigations of age (Souza Silva et al. 2019) and visual impairments (Dong, Wang, and Guo 2017) with regard to audio feedback in VR. Many of these medium-specific studies focus on technical aspects of audio, investigating the experiential effects of technological factors such as 3-D/binaural audio (Brinkman, Hoekstra, and van Egmond 2015; Davies et al. 2017; Hong et al. 2018; Huang et al. 2019; Olko et al. 2017; Rummukainen et al. 2017; Schoeffler et al. 2015; Ulsamer et al. 2020; Yan, Wang, and Li 2019) and more specific factors within this area, such as head-related transfer functions (HRTFs) (Geronazzo et al. 2018; Suarez et al. 2017), head tracking (Kurabayashi et al. 2014; Steadman et al. 2019), room acoustical properties (Garcia et al. 2015; Stecker et al. 2018), sound source displacement (McArthur 2016; Moraes et al. 2020), and different sound propagation techniques (Mehra et al. 2015). The remaining medium-focused studies, categorised as ‘no domain’, investigate other aspects of the VR medium.

3.4. Study design

The number of papers using each study design is given in Table 4. Note that the total number of studies exceeds 121, since three papers consisted of two (Rogers et al. 2018), two (Wu et al. 2018), and three (Ghosh et al. 2018) separate studies, respectively, that were within the scope of this review, and one paper (Shin et al. 2019) used a design combining a quasi-experiment and a randomised crossover.

Table 4. Study designs of included papers.

The overwhelming majority of this research involves exposing participants to some type of technological intervention, either developed/recorded for the purposes of the study (n = 110) or using existing VR content (n = 13), and then, in the case of experimental or crossover studies, collecting quantitative data regarding the experience. Note that some studies used more than one VR application. The phenomenological studies also involved exposing participants to some type of technological intervention/exhibition but focused on qualitative experiential data. Descriptive studies investigated populations interacting with existing technologies; in other words, they did not involve exposing participants to any intervention. Finally, one study (Ghosh et al. 2018) employed a co-creative workshop design to collect qualitative data.

3.5. Data type

The list of data collection tools is given in Table 5.

Table 5. Data collection tools used in the included papers.

Reflecting the predominance of experimental and crossover designs, most studies collected quantitative data, and many combined several questionnaires in a single study. In total, questionnaires were used 223 times across all studies: 79 of these instances involved existing questionnaires and the remaining 144 involved questionnaires created for the purposes of the study. Note that this count does not collapse repeated uses of the same existing questionnaire, but it does assume that each custom questionnaire created to measure a single experiential outcome is unique. Creating new quantitative self-report instruments was thus a common approach, possibly due to the large number of experiential measures and the fact that many of these measures are not commonly studied.
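The counting rule above (every use is an instance; existing questionnaires can recur, while each custom questionnaire is treated as unique) can be illustrated with a minimal Python sketch. The study IDs and instrument labels below are hypothetical toy records invented for illustration, not the review's actual coding sheet, and the `QuestionnaireUse` record and `tally` helper are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuestionnaireUse:
    study_id: str    # hypothetical study identifier
    instrument: str  # questionnaire name, or a label for a custom instrument
    existing: bool   # True if a previously published instrument


def tally(uses):
    """Return (total instances, existing instances, custom instances, distinct existing)."""
    total = len(uses)
    existing = sum(1 for u in uses if u.existing)
    custom = total - existing
    # Repeated uses are collapsed only for existing questionnaires; custom
    # instruments are assumed unique per study and outcome, so never merged.
    distinct_existing = len({u.instrument for u in uses if u.existing})
    return total, existing, custom, distinct_existing


# Hypothetical toy data, for illustration only.
uses = [
    QuestionnaireUse("S1", "SSQ", True),
    QuestionnaireUse("S1", "IPQ", True),
    QuestionnaireUse("S1", "custom: pleasantness", False),
    QuestionnaireUse("S2", "SSQ", True),
    QuestionnaireUse("S2", "custom: pleasantness", False),
    QuestionnaireUse("S3", "custom: realism", False),
]
print(tally(uses))  # (6, 3, 3, 2)
```

Applied to the full corpus, the first three values would correspond to the 223, 79, and 144 figures reported above, and the last to the number of different existing questionnaires.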

Usage data broadly refer to data gathered from system use, whereas performance data specifically refer to cases where usage was framed as having a better/worse outcome; both were only considered if their use was explicitly linked to some experiential outcome. Interviews and qualitative surveys were the most common instruments for collecting qualitative data, although observations were also commonly used in the form of direct observation or post-hoc analysis of audio and/or video material. Focus groups were used in only one study (Ghosh et al. Citation2018), which also involved the generation and analysis of visual sketches as participant output from a co-creative workshop exercise.

3.6. Analysis methods

The analysis techniques for quantitative and qualitative data used in the corpus are listed in Table 6 and Table 7 respectively.

Table 6. Quantitative analysis techniques used.

Table 7. Qualitative analysis techniques used.

For quantitative data, the most common analysis methods relied on descriptive statistics and quantitative comparisons, owing to the large number of studies that developed multiple VR-based implementations and compared some experiential aspects between them. While eight studies relied solely on descriptive statistics to report results (Byrns et al. Citation2020; Falconer et al. Citation2020; Hong et al. Citation2018; Kelling et al. Citation2018; Michailidis et al. Citation2019; Yan, Wang, and Li Citation2019; Zhang et al. Citation2018; Zhao et al. Citation2017), the majority of studies used descriptive statistics in conjunction with other methods.

Analysis of qualitative data largely made use of thematic analysis or grounded theory to identify common themes in the data, with two studies using Cohen's kappa (Oh, Herrera, and Bailenson Citation2019; Wedoff et al. Citation2019) and one using Krippendorff's alpha (Jain et al. Citation2016) as reliability measures. Other approaches for verbal data included using Linguistic Inquiry and Word Count (LIWC) to measure affective valence (Oh, Herrera, and Bailenson Citation2019) and using verbal protocol analysis to generate semantic scales for audio attributes (Olko et al. Citation2017). Finally, three studies analysed observation data: based on user interactions (Deacon, Stockman, and Barthet Citation2017), based on the ‘camera’ movement generated by users (Dumlu and Demir Citation2020), and by creating and analysing transition diagrams representing decisions made (Brinkman et al. Citation2009).
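As a reminder of what the kappa-based reliability checks above compute, Cohen's kappa corrects the observed agreement between two coders, p_o, for the agreement expected by chance from each coder's code frequencies, p_e: κ = (p_o − p_e) / (1 − p_e). The following minimal Python sketch implements that textbook formula for two coders and nominal codes; the function name and the coded excerpts are hypothetical and are not drawn from the cited studies. Krippendorff's alpha, which also handles more than two coders and missing codes, is not shown.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items both raters coded identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from the product of each rater's marginal code frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1:
        # Both raters used the same single code throughout; kappa is 0/0 here,
        # and returning 1.0 is a convention chosen for this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical theme codes assigned by two coders to four interview excerpts.
coder_1 = ["calm", "calm", "fear", "fear"]
coder_2 = ["calm", "fear", "fear", "fear"]
print(cohens_kappa(coder_1, coder_2))  # 0.5
```

Here the coders agree on three of four excerpts (p_o = 0.75), but half of that agreement would be expected by chance (p_e = 0.5), which yields κ = 0.5.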

3.7. Measured outcomes

The experiential outcomes measured in the corpus are listed in Table 8 below. During the coding process, categories were inductively and iteratively derived from the outcomes listed in the corpus through discussion between the authors. Similarly, outcome variables considered equivalent, such as fear and anxiety, or inversely equivalent, such as comfort and discomfort, were grouped inductively through discussion between the authors. Since this list specifically refers to discretely measurable outcomes, it does not include qualitative data from the corpus. Where objective measures were explicitly linked to an experiential outcome, that outcome is listed.

Table 8. Measured outcomes organised by categories related to experience.

When combining self-reported and other measures, the experiential outcome measured most often across all studies was presence (n = 25), followed by realism (n = 15) and immersion (n = 13). Pleasantness was also frequently measured (n = 12), which is largely attributable to the frequency of soundscape research in the corpus, as nine of the studies that include pleasantness as an outcome fall under this domain. Although activation/arousal (n = 10) was measured with self-reports, it was also the outcome most often measured using physiological measures, such as skin conductance level, galvanic skin response, and electrodermal activity.

For measures relating to emotion/affect, considerably more emphasis was placed on outcomes generally associated with positive affect than on those associated with negative affect. Of all instances of measuring emotion/affect, 71 outcomes were positive affective measures, such as pleasantness, enjoyment, and liking/affective attraction, while 43 outcomes related to negative affect, such as simulator sickness, fear/anxiety, and tension/annoyance (with two outcomes more broadly investigating valence). Three common focus areas related to affect were perceived stimulation from audio stimuli, i.e. activation, arousal, eventfulness, excitement, and stimulation; perceived interest in the stimuli, i.e. interest, novelty, and fascination; and calming effects of audio stimuli, i.e. relaxation and calmness.

Many perceptual measures involving audio related to perceived quality and to how well experiences conformed to expectations. This was commonly measured as perceived realism or naturalness, with one instance of believability. More general measures of perceived overall quality and preference arguably relate more closely to personal preferences. Other measures in this category refer either to perceived aspects of the environment or to embodied experience. The former includes the effect of audio on perceived audio/visual synchrony, integration of visual elements into the environment, removal of audio/visual stimuli, virtual crowd density, and extent of biodiversity. The latter includes ownership of and identification with an avatar, but mostly focuses on specific bodily sensations, including awareness of bodily movement, heart rate, and parasympathetic activity, as well as an illusory sense of movement, haptic feedback, and increased weight.

Perhaps unsurprisingly, the most common cognitive measures are presence and immersion. Other aspects in this category generally relate to the ability to understand and interact with virtual environments through mediating technologies, such as general usability/perspicuity, task load, ability to recall information, and understandability or recognition of stimuli for these purposes. Conversely, some measures focused on distraction or disturbance from tasks, or on restorativeness gained during respite from performing tasks. The most common outcomes related to motivation concerned absorption within tasks, including flow, focus, engagement, and involvement. The ability of audio to aid in task completion was studied through perceived competence, efficiency, sense of task performance accuracy, and helpfulness of stimuli in completing tasks. Measures of a call to action included aspects of the stimuli themselves, specifically noticeability and urgency; enabling factors toward behaviour change, specifically perceived support for creativity and recreational value; and direct behaviour changes in the form of an intent toward financial support.

With the exception of loudness and audio quality, outcomes related to audio properties most often concerned the spatial perception of audio, such as sound source distance, width, and reverberance, perception of sound as coming from a specific direction or as originating outside one's head, and a sense of accuracy in identifying the spatial position of audio sources. Another common audio-related focus was listener preference, such as preference for specific ambiences, ambience levels, and different models for presenting audio. The least-studied experiential outcome category was that of parasocial/interpersonal experiences. These outcomes could generally be grouped into those related to the perception of other social beings, i.e. social presence, parasocial interaction, and peripersonal space perception, and social perception or affective measures, i.e. perceived humanness, interpersonal liking, and the minimum comfortable distance to robots. Finally, some physiological measures were not explicitly linked to experiential outcomes but were reported as-is, since they can arguably still be directly related to specific experiences.

As mentioned in section 3.5 above, there were 79 instances of existing questionnaires being used, including some questionnaires used across several different studies. In total, 49 different questionnaires were used. The full list of questionnaires and how often each was used is given in Table 9. Again, items with the same number of instances are listed together for brevity.

Table 9. Questionnaires used.

Perhaps the most notable disparity between the frequency of outcomes measured and that of questionnaires used is that while simulator sickness (n = 7) was not as commonly measured as several other experiential outcomes, the simulator sickness questionnaire (SSQ) was one of the most commonly used questionnaires. This can be attributed to the relatively standardised way of measuring simulator sickness, especially when compared to other outcomes such as presence or positive affect, which were measured with eight and seven different existing questionnaires respectively, in addition to those created for individual studies. In contrast, none of the questionnaires listed was used to study perceived pleasantness; in other words, although pleasantness was one of the most commonly investigated outcomes, it was exclusively studied with questionnaires created for the purpose of each study. The same applies to outcomes for Audio Properties, with the exception of the magnitude estimation method for perceived loudness (Ong et al. Citation2017), the spatial audio quality inventory (SAQI) for perceived quality (Geronazzo et al. Citation2018), the overall listening experience questionnaire for perceived overall listening experience (Schoeffler et al. Citation2015), and the Witmer and Singer questionnaire for perceived sound source identification (Shin et al. Citation2019).

It should also be noted that most questionnaires used twice were used in two related studies sharing one or more authors. Specifically, this includes the feeling scale (FS), felt arousal scale (FAS), Borg CR10 scale, physical activity enjoyment scale (PACES), and Tammen’s attentional scale used in (Bird et al. Citation2019, Citation2020) and the use of DBT® diary cards in (Gomez et al. Citation2017; Navarro-Haro et al. Citation2016). As such, of the 49 different questionnaires used, only the following 12 were used more than once in unrelated studies: simulator sickness questionnaire (SSQ), Igroup presence questionnaire (IPQ), Witmer and Singer questionnaire, positive and negative affect schedule (PANAS), subjective unit of discomfort scale (SUD), game experience questionnaire (GEQ), Slater Usoh Steed questionnaire (SUS), self-assessment manikin (SAM), NASA TLX questionnaire, state-trait anxiety inventory (STAI), ITC sense of presence inventory (ITC-SOPI), and user experience questionnaire (UEQ).
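The "unrelated studies" criterion above can be made explicit with a small Python sketch. It assumes one plausible reading of the rule: two studies are related if they share at least one author, so a questionnaire counts as reused across unrelated studies if at least one pair of studies using it has no author in common. The questionnaire labels and author sets are hypothetical placeholders, and the function name is likewise hypothetical; this is not the procedure the review reports using.

```python
from itertools import combinations


def reused_across_unrelated_studies(usage):
    """Return questionnaires used by at least one pair of studies with no shared author.

    `usage` maps a questionnaire label to a list of author sets, one set per
    study that used it. Sharing any author is assumed to make studies related.
    """
    qualifying = []
    for name, author_sets in usage.items():
        # A single study yields no pairs, so any() over an empty iterator is False.
        if any(a.isdisjoint(b) for a, b in combinations(author_sets, 2)):
            qualifying.append(name)
    return sorted(qualifying)


# Hypothetical questionnaire labels and author sets, for illustration only.
usage = {
    "Q_a": [{"A", "B"}, {"C"}, {"D"}],  # includes unrelated pairs
    "Q_b": [{"E", "F"}, {"E", "G"}],    # both studies share author E
    "Q_c": [{"H"}, {"I"}],              # two unrelated studies
    "Q_d": [{"J"}],                     # used only once
}
print(reused_across_unrelated_studies(usage))  # ['Q_a', 'Q_c']
```

Under this reading, a questionnaire such as Q_b, reused only within one author group, is excluded in the same way as the instruments used by Bird et al. or by Gomez et al. and Navarro-Haro et al.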

3.8. Study outcomes – effects of audio

The measured outcomes and how each was affected by the introduction of audio are given in Table 10. As above, the list only includes discretely measurable outcomes and thus does not include qualitative data. Positive and negative effects indicate that a measured outcome was significantly increased or decreased by the introduction of audio stimuli. Furthermore, these results only represent instances where the effect of introducing audio can be isolated, as opposed to instances where differences between factors such as types/implementations of audio or participant characteristics are studied; case studies are also excluded.

Table 10. The effects of audio on user/player experience organised by categories related to experience.

One notable finding is that some experiential outcomes are affected in seemingly contradictory ways, i.e. they are both positively and negatively affected by audio in the same study, such as pleasantness (Echevarria Sanchez et al. Citation2017a; Hong et al. Citation2019; Jo and Jeon Citation2020), positive affect (Park et al. Citation2020), and discomfort, relaxation, and calmness (Jo and Jeon Citation2020). This can be attributed to the fact that all these studies fall within the domain of soundscape research and all study the differences between natural and artificial/human ambiences. In each case listed here, natural ambience is found to increase positive affect (pleasantness, relaxation, calmness) and decrease negative affect (discomfort), while artificial/human ambience has the inverse effect. Other soundscape research also reports natural ambiences as increasing pleasantness or restorativeness and artificial/human ambiences as decreasing pleasantness and increasing anxiety (Li and Xie Citation2018; Ong et al. Citation2017; Ooi et al. Citation2017). Relatedly, one study (Lindquist et al. Citation2020) compared generic ambiences, which had no effect on perceived recreational value, with detailed ambiences created for specific visuals, which increased perceived recreational value.

The large variability in study outcomes for emotional/affective measures can be partially explained by the differing uses of audio in this area, for example, using it to produce short-term increases in discomfort or fear/anxiety (Brinkman, Hoekstra, and van Egmond Citation2015; Josman et al. Citation2008) as well as to decrease them through repeated exposure (Johnston, Egermann, and Kearney Citation2020; North et al. Citation2008). While one study (Lee, Bruder, and Welch Citation2017) found no effect of audio on fear/anxiety, it should be noted that baseline levels of negative affect in that study were perceived as relatively high, which might explain the lack of a significant change. Another example of seemingly contradictory findings is using audio to reduce arousal/stimulation and increase calmness, such as for meditation practices (Pizzoli et al. Citation2019), or to have the opposite effect, such as for entertainment or exercise purposes (Bird et al. Citation2019; Chen et al. Citation2017a). There are also some notable outcomes on which the introduction of audio had no effect, such as enjoyment, awe, and, across a relatively large number of studies, presence. Finally, there is also a shortage of research investigating the role of audio in parasocial/interpersonal experiences.

4. Discussion

This paper has reviewed the literature regarding how audio is used to affect aspects of the user/player experience in VR in various domains.

4.1. Domain areas of concern

This section serves as an answer to RQ1 (In which contexts are the effects of audio in HMD-based VR being studied?). The largest group of studies focused on the medium of VR itself; among the studies that did focus on a specific domain area, most addressed entertainment, soundscape research, and therapy/rehabilitation. Within this discussion, however, the choice of databases consulted must be acknowledged as a potential source of bias.

4.1.1. Entertainment focuses on new approaches for music but does not represent the size of the games market

The corpus shows that the effects of audio are commonly studied in the domains of entertainment (19 studies), soundscape research (18 studies), and therapy (18 studies). The research in entertainment generally focused on music, with 10 of the 19 studies in this category enabling new ways of listening to, training, or performing music (Chang, Kim, and Kim Citation2018; Deacon, Stockman, and Barthet Citation2017; Droste, Letellier, and Sieck Citation2018; Fletcher, Hulusic, and Amelidis Citation2019; Holm, Väänänen, and Battah Citation2020; Kasuya et al. Citation2019; Lind et al. Citation2017; Pedersen et al. Citation2020; Rees-Jones and Daffern Citation2019; Shin et al. Citation2019). The corpus contains only three studies focusing on games (Bombeke et al. Citation2018; Rogers et al. Citation2018; Wedoff et al. Citation2019), which is surprising given not only the current size of the market for VR games (Grand View Research Citation2020) but also the fact that most of the studies were published between 2017 and 2020, by which point the VR games market was already worth several million USD (Statista Citation2022). The role of audio in games is often noted as being underutilised (Ekman Citation2005; Ribeiro et al. Citation2020), and research is disproportionately slanted toward certain genres, especially horror games (Ribeiro et al. Citation2020; Rogers et al. Citation2018). While our choice of databases must be acknowledged as a potential influence on how this field is represented in the corpus, there is still a notable discrepancy between the size of the market (as a proxy measure for interest in the field) and the number of studies focusing on audio.

4.1.2. Soundscape research emphasizes the dichotomy between artificial and natural soundscapes but lacks a validated measure for pleasantness

Soundscape research generally made use of VR to reproduce urban/architectural environments and study the effects of noise (Aalmoes and Zon Citation2019; Cranmer et al. Citation2020; Echevarria Sanchez et al. Citation2017a, Citation2017b; Guang-yu, Yu-an, and Haochen Citation2019; Jeon and Jo Citation2019; Letwory, Aalmoes, and Miltenburg Citation2018; Maffei et al. Citation2013; Masullo, Maffei, and Pellegrino Citation2019; White et al. Citation2014) and generally confirmed the utility of using VR for this purpose. The large representation of soundscape studies in the corpus is not surprising given the focus on audio in the search terms. This is also the area where the most consistent and generalisable findings can be found, i.e. natural ambiences are associated with positive affect measures, most commonly pleasantness (Echevarria Sanchez et al. Citation2017a; Hong et al. Citation2019; Jo and Jeon Citation2020; Park et al. Citation2020), while artificial/human ambiences are associated with negative affect measures, such as discomfort and tension/annoyance (Jeon and Jo Citation2019; Maffei et al. Citation2013; White et al. Citation2014). Although it has been argued within soundscape research that a dichotomous categorisation of natural and artificial ambiences is overly simplistic (Harriet and Murphy Citation2015), the corpus not only suggests that this dichotomy is still common in VR soundscape research but also confirms the pleasant/restorative effects of natural ambiences (Echevarria Sanchez et al. Citation2017a; Hong et al. Citation2019; Jo and Jeon Citation2020; Park et al. Citation2020) and the opposite effect of artificial/human ambiences (Jeon and Jo Citation2019; Maffei et al. Citation2013; White et al. Citation2014).

Previous research has suggested that increased immersion would benefit this field and has proposed VR as one possible tool to improve soundscape research (Erfanian et al. Citation2019). While comparing different approaches, e.g. VR vs. in-situ, was out of the scope of the current study, the results for soundscape studies in VR agree with the findings of in-situ studies, e.g. the restorativeness of natural ambience and the opposite effect of artificial ambience (Uebel et al. Citation2021), which provides evidence for the usefulness of VR as a tool for soundscape appraisal and for investigating the psychological effects of different sound environments. However, this field of research also highlights what is perhaps the largest methodological shortcoming in the corpus, namely the lack of a validated pleasantness questionnaire, as discussed in section 4.3.1 below.

4.1.3. Audio is effective in exposure therapy, but the focus is primarily on passive listening

The two therapeutic areas most represented were exposure therapy (7 studies) and mindfulness practice (6 studies). Audio was found to be effective both in inducing anxiety in the short term (Brinkman, Hoekstra, and van Egmond Citation2015; Josman et al. Citation2008) and in reducing it through repeated exposure (Johnston, Egermann, and Kearney Citation2020; North et al. Citation2008), which provides supporting evidence for the effectiveness of audio in a virtual reality exposure therapy (VRET) context. There was also some support for the use of audio in a mindfulness context to increase relaxation and decrease activation/arousal (Gomez et al. Citation2017; Navarro-Haro et al. Citation2016; Pizzoli et al. Citation2019; Prpa et al. Citation2017, Citation2018; Seabrook et al. Citation2020), although most of these results were based on qualitative data, so reporting and analysing them was out of the scope of the current study; they might be explored further in other types of review, such as narrative reviews. Other studies highlight the potential for audio to aid in various therapeutic outcomes, such as using music therapy to reduce frustration and improve recall in patients with Alzheimer's disease (Byrns et al. Citation2020) and using audio as a distraction to reduce pain intensity (Zeroth, Dahlquist, and Foxen-Craft Citation2019). While these findings highlight the importance of audio in creating the desired experiential outcomes, closer examination shows that these studies focus overwhelmingly on passive listening rather than on active participation in the creation of audio.
The implications of this are not clear, since both active participation and passive listening have been linked to improvements in mental wellbeing (Rogers and Nacke Citation2017), although active participation affords other potential benefits such as social interactions (a category of experiences which is severely lacking in the corpus). Examples of other audio-focused phenomena that present the potential to be useful in a therapeutic context include binaural beats (Rahman et al. Citation2021) or autonomous sensory meridian response (ASMR) (Fredborg et al. Citation2021); the effects of these in VR, however, have not been studied.

4.1.4. Accessibility highlights the promise of VR to create audio-only experiences for those with visual impairments

While relatively sparse, the six accessibility-focused studies show some evidence of the potential utility of VR as an accessibility tool (Bălan et al. Citation2018; Dong, Wang, and Guo Citation2017; Picinali et al. Citation2014; Siu et al. Citation2020; Souza Silva et al. Citation2019; Wedoff et al. Citation2019). Four of these studies specifically use ‘audio-only’ VR in which head-tracking controls audio feedback (Bălan et al. Citation2018; Picinali et al. Citation2014; Siu et al. Citation2020; Wedoff et al. Citation2019), which bears some resemblance to audio-based games that deliver information about the virtual environment using audio either primarily, e.g. Beowulf (Liljedahl, Papworth, and Lindberg Citation2007), or exclusively, e.g. Papa Sangre (Collins and Kapralos Citation2012). In traditional gaming contexts, these games demonstrate the effectiveness of audio as a tool to convey spatial information and to create immersive gameplay experiences for those with visual impairments (Collins and Kapralos Citation2012). These games, however, are still quite limited compared to the affordances of VR technology. For example, Papa Sangre, a mobile game, uses the device's built-in accelerometer to let the player ‘look around’ by moving the device. While novel, this implementation is comparatively limited, as it cannot provide the accuracy or intuitiveness of moving one's own head relative to the environment. The head-tracking technology of VR devices thus provides opportunities to apply the designs of these existing games in a variety of contexts, including games. In a more theoretical sense, such audio-only solutions also make it possible to study the effect of audio in HMD-based VR in isolation from visual feedback.
However, the six studies listed focus either on qualitative data or on comparisons of audio types or participant characteristics, which were outside the scope of this study. Further research in accessible VR, specifically for those with visual impairments, might thus examine the effects of such systems through more focused systematic reviews.

4.1.5. Arts and cultural heritage primarily focus on recreating museum experiences although other possibilities are being explored

Of nine studies exploring the use of VR in contexts related to arts and cultural heritage, six focused on creating a virtual version of a traditional, i.e. brick-and-mortar, museum (Bialkova and Gisbergen Citation2017; Kelling et al. Citation2018; Li, Tennent, and Cobb Citation2019; Rzayev et al. Citation2019; Sprung et al. Citation2018; Tennent et al. Citation2020). The implications for the use of sound in these contexts are unclear, with music having both a positive effect on pleasantness (Kelling et al. Citation2018) and a negative effect on liking/affective attraction (Bialkova and Gisbergen Citation2017). Most of these studies also focused on qualitative data, which was out of the scope of the current study. Rather than recreating museum spaces, one study used VR to create an archeological space (Falconer Citation2017), and two studies explored a completely different use of VR technology by creating an art installation (Prpa et al. Citation2017, Citation2018). While the use of VR to create art installations and exhibitions is receiving increased attention (Kim and Lee Citation2022; Parker and Saker Citation2020), the effect of VR on the experience of these contexts remains comparatively unexplored. Furthermore, the focus remains on recreating existing spaces rather than exploring new possibilities afforded by VR technologies, including the use of audio feedback.

4.1.6. VR as a medium is rapidly growing but mass adoption is still far away

The largest group of studies, however, did not fall into a clear domain area but rather investigated the use of audio in VR or, more broadly, the medium of VR itself (Çamci Citation2019; Chen et al. Citation2017a, Citation2017b, Citation2018; Chirico and Gaggioli Citation2019; Chittaro Citation2012; Feng, Dey, and Lindeman Citation2016; Gao, Kim, and Kim Citation2018; Ghosh et al. Citation2018; Kruijff et al. Citation2016; Lee, Bruder, and Welch Citation2017; Lee and Lee Citation2017; Liao et al. Citation2020; Narciso et al. Citation2019; Oh, Herrera, and Bailenson Citation2019; O’Hagan, Williamson, and Khamis Citation2020; Peng et al. Citation2020; Sawada et al. Citation2020; Shimamura et al. Citation2020; Sikström et al. Citation2015, Citation2016a, Citation2016b; Van den Broeck, Pamplona, and Fernandez Langa Citation2017; Zhang et al. Citation2018; Zhao et al. Citation2017). This serves as an indication that, while the medium of VR has seen substantial growth, there is still much uncertainty for both researchers and practitioners as to how this medium should be used and studied. There is still a common view that VR technology is in its infancy (Radianti et al. Citation2020; Zaini et al. Citation2022) and the large number of studies that do not focus on VR in a specific domain area but rather investigate audio or VR at large suggests that mass adoption of VR technologies in domain areas is yet to be achieved.

4.2. Notable effects of audio

This section provides answers to RQ2 (Which aspects of experience are being studied with regards to audio in HMD-based VR research?) and RQ3 (How does audio affect various aspects of experience in HMD-based VR?). The aspects of experience studied most frequently are presence, realism, immersion, and pleasantness. The effect of audio on pleasantness is one of the most consistent findings across the corpus, i.e. natural and artificial soundscapes are perceived as pleasant and unpleasant respectively. The effect of audio on realism is also very consistent, although perhaps unsurprising: the introduction of audio increases perceived realism. What is notable here, however, is the narrow focus on verisimilitude rather than on perspectives of realism that accommodate unreal or impossible scenarios. Perhaps more surprisingly, the sense of presence is mostly unaffected by the introduction of audio, and only one study isolated the effect of introducing audio on perceived immersion. Furthermore, it must be acknowledged that the search string required certain keywords, which could have affected the results obtained.

4.2.1. Presence remains the most important measure in VR studies but is often unaffected by audio along with enjoyment and awe

The fact that presence is the most-studied outcome in the corpus (n = 25) concurs with the long- and widely held stance that presence is one of the most important aspects of a VR experience (Heineken and Schulte Citation2007; Ijsselsteijn et al. Citation2000; Kern and Ellermeier Citation2020; Steuer Citation1992). The term, however, is not without contention (Slater Citation2009), and its meaning in VR research is often confused or equated with that of immersion (Slater Citation2003). There is also a large number of results showing no increase in certain outcomes, including presence (Bialkova and Gisbergen Citation2017; Feng, Dey, and Lindeman Citation2016; Oh, Herrera, and Bailenson Citation2019; Sawada et al. Citation2020; Wu et al. Citation2018), enjoyment (Bird et al. Citation2019, Citation2020; Sikström et al. Citation2016a), and awe (Chirico and Gaggioli Citation2019), which is perhaps surprising given the emphasis on audio in entertainment (Collins Citation2020; Kauhanen et al. Citation2017) and the widely held view emphasizing the role of audio in perceiving space (Larsson et al. Citation2010; Nordahl and Nilsson Citation2014). It is important to note that each of the studies on awe and enjoyment used music, selected through different processes, and that the effects of music might vary with participants' preferences (Chirico and Gaggioli Citation2019). More rigorous protocols for audio selection, especially music, would thus be a valuable contribution to improving the validity of audio studies in VR.

4.2.2. VR experiences are frequently evaluated in terms of verisimilitude with real environments

One of the most frequently measured outcomes was realism (n = 15), i.e. the level of verisimilitude between some aspect of the VR experience and its counterpart in physical reality. Audio is found to consistently increase the sense of realism, which highlights the importance of audio in contexts where realism is a desirable outcome. However, this view of realism as verisimilitude has several flaws. First, what we perceive to be realistic often deviates from reality: in sound design for film and games, foley often creates sound effects from completely unrelated source materials, such as bunched-up audio tape used to create footstep sounds (Garner Citation2017, 76). Users of VR can also attribute a sense of realism to physically impossible scenarios and adapt their sensory responses accordingly, such as experiencing a sense of touch from blocks floating in mid-air (Bosman, de Beer, and Bothma Citation2021). Perhaps more importantly, VR is not limited to recreating reality but can create entirely fictional experiences, which limits the usefulness of realism as a construct (Bergstrom et al. Citation2017; Rogers et al. Citation2022). As such, several alternatives to realism have been proposed, including credibility (Bouvier Citation2008), authenticity (Gilbert Citation2016), plausibility (Bergstrom et al. Citation2017), and believability (Togelius et al. Citation2012); for an overview of how the term ‘realism’ is used across various media/domains, the reader is referred to (Rogers et al. Citation2022). Nevertheless, the corpus shows that realism is still commonly assessed within the category of outcomes related to perception and/or embodiment within VEs, with only a few instances of alternatives such as naturalness (Bialkova and Gisbergen Citation2017; Chang, Kim, and Kim Citation2018; Shimamura et al. Citation2020; Sikström et al. Citation2016b) or believability (Gao, Kim, and Kim Citation2018).

4.2.3. Parasocial/interpersonal experiences are underexplored

With regard to the types of experience being studied, there is a shortage of research investigating the role of audio in parasocial/interpersonal experiences. Given the recent significant increase in the use of remote collaboration, not only for work but for all manner of social interaction, due largely to the COVID-19 pandemic (Choi and Diehm 2021), VR has gained new relevance as a medium for meaningful social interaction (Choi and Diehm 2021; Lee, Joo, and Jun 2021), as reflected in the recent resurgence of interest in the concept of immersive, interconnected, virtual social spaces known as the Metaverse (Mystakidis 2022). Previous work in social VR has investigated the role of haptic feedback (Hoppe et al. 2020), gaze input (Seele et al. 2017), and biofeedback (Desnoyers-Stewart et al. 2019). A variety of audio properties, both verbal and non-verbal, play a key role in the general experience of social interactions and social environments (Poudyal et al. 2021), and social interaction, in turn, plays a key role in some audio-driven practices such as music (D’Ausilio et al. 2015). Given the importance of audio properties for social interaction, such as the ability to localise audio (Wie, Pripp, and Tvete 2010), an investigation into the range of audio possibilities and their effects on social experiences would help guide the design of audio in social VR applications.

4.3. Methodological concerns

This section provides a preliminary answer to RQ4 (What implications from the literature can be drawn regarding the design of future HMD-based VR audio research?). There is a general reliance on subjective self-reports in the corpus, although many of these instruments were developed specifically for the study in question, which might bring the validity of their results into question. Novelty bias is also noted as a likely confounding variable, although the possibility of simulator sickness makes its implications for study design less clear. The vast majority of studies were laboratory-based and made use of experimental or crossover designs, which leads to a lack of descriptive research that draws on natural settings. Finally, there were some instances of alternative data collection and analysis methods, which might prove beneficial for measuring the most common outcomes in particular, such as presence. The implications for future research are discussed in depth in Section 5.

4.3.1. Most studies relied on subjective self-reports but often used questionnaires created for the study

While pleasantness is one of the most common outcomes in soundscape studies, none of the studies investigating perceived pleasantness used a validated questionnaire for this purpose, which might bring the validity and generalizability of these results into question. The creation and validation of a pleasantness questionnaire would thus be an invaluable contribution to soundscape research. Similarly, most outcomes listed in the ‘Audio Properties’ category were measured using a questionnaire created for the purposes of each study. More generally, the frequent use of questionnaires created for individual studies might impact the validity of results gleaned from these instruments, since they are generally not validated and often rely on single-item scales. However, given the large variety of outcomes in the corpus, a broad standardisation of instruments across studies would require a more focused analysis that considers the types and roles of audio in this context.

Some outcomes were measured with more standardised instruments, such as the simulator sickness questionnaire (SSQ). While this standardisation can be considered beneficial for the generalizability of the results obtained, the use of the SSQ in HMD-based VR has been criticised (Hirzle et al. 2021). Similar criticisms have been raised against the game experience questionnaire (GEQ) (Law, Brühlmann, and Mekler 2018) and against how presence is measured in VR research (Slater 2009), with the suggestion that subjective and objective measures should be combined (Ijsselsteijn et al. 2000). This argument is also supported by evidence of a disconnect between self-reported predictions of behaviour and actual behaviour in VR (Slater et al. 2020). While such a combined approach is used sporadically to measure presence and social presence, the overwhelming majority of studies rely primarily on subjective self-reports for presence measurement.

4.3.2. Novelty bias is a likely confounding variable

Another possible reason why certain outcomes did not increase with the introduction of audio is the novelty bias associated with using an HMD to experience VR: the experience might initially be new or unusual to many participants, who then acclimatise with repeated exposure, leading to unexpected results. Several of the included studies note this as a potential confounding factor (e.g. Lind et al. 2017; McArthur 2016; Moraes et al. 2020; Oberman, Bojanić Obad Šćitaroci, and Jambrošić 2018; Rogers et al. 2018; Sikström et al. 2016a; Suarez et al. 2017; Vosmeer and Schouten 2017). This effect is expected to be more pronounced for outcomes connected to audio feedback than for those connected to visual feedback, since mediated binaural/spatial audio, which is standard in many VR experiences, is likely to be novel to many participants within the context of media experiences (Lind et al. 2017). This novelty might limit participants’ conceptual framework for understanding and expressing their experiences of such sensory feedback within these types of experiences (Garner 2017, 38).

The impact of initial novelty/unfamiliarity that dissipates over the course of data collection is especially noteworthy given the large number of crossover studies, in which the same participants were exposed to two or more experimental conditions. Furthermore, highly subjective responses to stimuli such as music (Rogers et al. 2018; Schoeffler et al. 2015) and the ability of different technologies, such as headphones, to influence experiential factors (Larsson et al. 2010) likely explain some of the variability in results. This emphasizes the need for thorough familiarisation tasks prior to data collection to reduce the effects of novelty bias (Moraes et al. 2020; Suarez et al. 2017), as well as for considering participants’ level of experience with VR (Sikström et al. 2016b; Vosmeer and Schouten 2017). However, the implications for future research are complicated by the possibility that prolonged exposure induces simulator sickness, which might itself introduce a confounding factor (Bird et al. 2019).

4.3.3. There is an overall lack of descriptive research

Most studies involved exposing participants to some type of technological intervention. Consequently, there is a general lack of descriptive research drawing on the large VR user/player base, and thus limited insight into how these technologies are used and experienced outside of laboratory/exhibition settings. Previous descriptive research on game music, for example, has revealed insights that might challenge designers’ assumptions about players’ habits with regard to in-game audio (e.g. Rogers and Weber 2019). The overrepresentation of research in lab environments relative to natural settings also has negative implications for ecological validity (Kong et al. 2020). Given the large market for consumer VR hardware (Kugler 2021; Baker 2022), similar research would be feasible and might provide useful insights and design guidelines for VR audio. For example, the impact of different audio types and roles (sound effects/music, diegetic/non-diegetic, etc.) could be investigated through descriptive research to provide design guidelines for practitioners. While guidelines and practitioner examples do exist for some audio properties, such as audio diegesis (Phillips 2018; Stein and Goodwin 2019), these tend to be speculative and would benefit from empirical research contextualised in their respective domain areas.

4.3.4. Alternative data collection and analysis methods present a promising supplement to subjective self-reports

The corpus also includes a few instances of exploratory research that make use of alternative data collection methods, such as collaborative workshops (Ghosh et al. 2018), as well as alternative data analysis methods, such as Linguistic Inquiry and Word Count (Oh, Herrera, and Bailenson 2019), verbal protocol analysis (Olko et al. 2017), and interaction analysis (Deacon, Stockman, and Barthet 2017). Given the dynamic nature of interactive VR experiences, the use of conventional techniques to measure audio quality in these contexts has been criticised (Yan, Wang, and Li 2019), and alternative approaches that incorporate varying degrees of interactivity have been employed (Robotham et al. 2022). Alternative analysis techniques have also been explored in game studies to assess experiential outcomes, such as using grounded theory to investigate immersion (Farkas et al. 2020) and facial expression analysis to measure emotions (Roohi et al. 2018). In VR research, previous work has investigated the use of linguistic analysis to measure presence (Kramer, Oh, and Fussell 2006) as well as audio analysis to measure valence and arousal (Alzayat, Hancock, and Nacenta 2019). This suggests that such approaches could also provide fruitful alternatives for collecting and analyzing experience data in audio-driven VR research. Future research could thus employ more such approaches, possibly alongside more standardised methods such as subjective self-reports.

5. Future agenda

The previous section discussed the results from the corpus within the wider context of various domain areas and pointed out gaps in these areas. This section summarises these gaps in the form of future agendas for research on the effects of audio in HMD-based VR. By providing a thematic and methodological agenda for future audio-focused research in VR, we provide an answer to RQ4 (What implications from the literature can be drawn regarding the design of future HMD-based VR audio research?).

5.1. Thematic agenda

While it could be argued that audio can benefit any of the domain areas listed in , including those with very few studies, the most notable discrepancy between commercial and research attention is found in games, a domain that receives considerable attention commercially but comparatively little in research. While there is no shortage of previous work emphasizing the importance of audio in digital games at large (Collins 2020), digital games research seems slow to catch up with regard to the audio modality in particular. There is also a severe lack of investigation into the use of audio in parasocial/interpersonal VR, a growing area of interest owing to the resurgence of the Metaverse concept, which promotes multi-user VR across interconnected virtual environments (Mystakidis 2022). It bears restating that such audio is not limited to verbal sounds but may include any number of non-verbal sounds, such as music and environmental sounds (Poudyal et al. 2021). Further research is thus needed to understand the role of audio in both of these rapidly growing markets.

While there is more research in the broader domain of therapeutic studies, future research in this area can address the lack of studies that make use of interactive audio. Interactive media allow users to participate in shaping the audio stimuli they receive, which creates opportunities for co-creation rather than passive consumption of such stimuli (van Elferen 2020). One notable benefit of such co-creative affordances in the therapeutic domain is the potential to create social experiences, which might be especially suitable for combating the social isolation brought on by the COVID-19 pandemic (Choi and Diehm 2021). The audio domain at large has also seen increased interest in other audio-focused phenomena, such as binaural beats and autonomous sensory meridian response (ASMR), which could prove useful in therapeutic or mindfulness contexts; future research might investigate the use of such practices in VR-driven therapy. In terms of arts and cultural heritage, the small number of studies focused primarily on recreating existing spaces for art exhibits and thus does not realise the full potential of immersive VR to reimagine how arts and heritage may be experienced. Future research might utilise interdisciplinary approaches to designing art exhibitions and experiences, and a logical starting point would be a systematic review of the design of such installations and exhibitions.