?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Recent crises like the COVID-19 pandemic provoked an increasing appearance of misleading information, emphasising the need for effective user-centered countermeasures as an important field in HCI research. This work investigates how content-specific user-centered indicators can contribute to an informed approach to misleading information. In a threefold study, we conducted an in-depth content analysis of 2382 German tweets on Twitter (now X) to identify topical (e.g. 5G), formal (e.g. links), and rhetorical (e.g. sarcasm) characteristics through manual coding, followed by a qualitative online survey to evaluate which indicators users already use autonomously to assess a tweet's credibility. Subsequently, in a think-aloud study participants qualitatively evaluated the identified indicators in terms of perceived comprehensibility and usefulness. While a number of indicators were found to be particularly comprehensible and useful (e.g. claim for absolute truth and rhetorical questions), our findings reveal limitations of indicator-based interventions, particularly for people with entrenched conspiracy theory views. We derive four implications for digitally supporting users in dealing with misleading information, especially during crises.

1. Introduction

In an era of complex global crises, including the Russian war of aggression against Ukraine and the COVID-19 pandemic, the world is struggling with an overabundance of accurate and false information, leading to what the World Health Organisation has coined an ‘infodemic’ (World Health Organization Citation2020). Social media channels such as Twitter (now X), TikTok, and Facebook have emerged as essential and compelling platforms for individuals to share information (Reuter and Kaufhold Citation2018). However, the dissemination of misleading information is increasingly facilitated by social media because of characteristics such as low costs and anonymity (Steinebach et al. Citation2020). Experts have studied misleading information during crises from various perspectives and disciplines. Our work is interested in misleading information that was deliberately created as well as unintentionally, following Geeng, Yee, and Roesner (Citation2020) and their understanding of misinformation to collect false information ‘agnostic to the intention of the original creator or poster’ for our sample. In line with previous scholarship (Geeng, Yee, and Roesner Citation2020; Giglietto et al. Citation2016), we define misleading information as an umbrella term encompassing both intentionally misleading information (i.e. ‘disinformation’ or ‘fake news’) and unintentionally misleading information (i.e. ‘misinformation’). In our understanding, misleading information comprises false information and misleading satire, whereas non-misleading information encompasses true information, opinions that are not absolute, or satire that is not misleading in the specific context.

Research in Human-Computer Interaction (HCI), psychology, and information systems has started to investigate how to tackle the pervasive issue of misleading information using technology. This multifaceted exploration encompasses the development of very technical, often machine-learning-based, automatic detection approaches (Shu et al. Citation2017; Wu and Liu Citation2018) alongside approaches with stronger user-centered HCI focus on post-detection decisions or aspects detached from detection (e.g. providing corrections).

Digital misinformation interventions are employed to assist users in processing misleading information online and are typically applied after automatic (and sometimes manual) pre-filtering problematic content. They build upon and expand educational and journalistic initiatives. The term ‘digital misinformation intervention’ has gained recognition within the research community (Bak-Coleman et al. Citation2022; Saltz, Barari, et al. Citation2021; Saltz, Leibowicz, and Wardle Citation2021), where user-centered misinformation interventions ‘go beyond a purely algorithmic back-end solution and exert a direct influence on the user in the form of information presentation or withholding’ (Hartwig, Doell, and Reuter Citation2023, 2). For instance, some approaches correct misinformation by displaying a link to a fact-checking website, debunking videos, or corrections within the comment section (Ardevol-Abreu, Delponti, and Rodriguez-Wanguemert Citation2020; Bhargava et al. Citation2023; Martel, Mosleh, and Rand Citation2021), and others provide binary labels to flag false content (Barua et al. Citation2019). Even after extensive research on interventions to combat misleading information and the contentious debates surrounding their effectiveness, the field has yet to come to a definitive solution. Therefore, it is vital to persist in examining users' particular requirements, abilities, and viewpoints in a user-focused manner.

Previous research suggests that transparency plays a critical role in establishing trust among users in digital interventions (Kirchner and Reuter Citation2020) and minimising the likelihood of reactance or other backfire effects (Müller and Denner Citation2019; Nyhan and Reifler Citation2010). In response to this need, some studies have started to develop and evaluate indicator-based interventions that present cues for users to evaluate information. These interventions have demonstrated promising outcomes and continue to be a topic of controversy and investigation. Indicator-based misinformation interventions are defined as digital countermeasures using identifiable misinformation indicators to assess if a piece of information is credible (Hartwig et al. Citation2024). Typically, these indicators (e.g. emotional wording, hashtags including conspiracy theories) are displayed to users for immediate feedback and educational purposes, facilitating the development of skills to assess the credibility of content autonomously (Hartwig et al. Citation2024). Often, indicator-based interventions involve highlighting indicators within the content using color-code (Martino et al. Citation2020).

The objective of our article is to contribute to the design of user-centered interventions based on indicators by determining which indicators are suitable in terms of perceived usefulness and comprehensibility. Addressing this research gap in HCI research, our paper offers a comprehensive exploration of qualitative and user-centered insights into an indicator-based approach. We apply this approach specifically to short-text content on Twitter, aiming to understand its applicability in real-world scenarios. As previous studies have suggested that misinformation during crises varies from everyday content, we apply our analyses and user studies to the particular long-term crisis of the COVID-19 pandemic. Additionally, we demonstrate how our approach can be applied to the Russian war of aggression against Ukraine as a distinct crisis. Our perspective on German content contributes to the predominantly U.S.-focused research on misinformation.

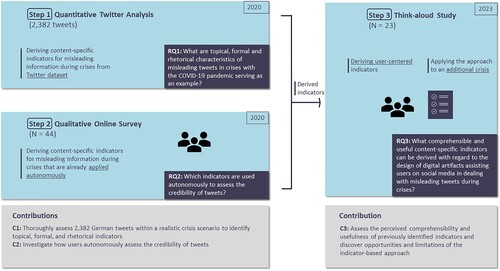

Our study consists of a threefold research design, comprising (1) a content analysis of Twitter data to get a sense of what misleading information in our crisis context looks like, (2) an online survey to investigate which indicators users have already been applying autonomously to assess tweets, and (3) a think-aloud study to evaluate the comprehensibility and perceived usefulness of previously identified indicators. The sequential studies offer a chance to triangulate data and obtain comprehensive findings.

We advance misinformation research by applying existing knowledge about indicator-based interventions to specific crises and preserving a user perspective of a diverse group of participants. Our core contributions (C) and findings (F) are first (C1) thoroughly assessing 2382 German tweets within a realistic crisis context. In doing so, we identified (F1) topical indicators including context-specific themes like ‘Restriction of Liberty’, formal indicators such as links and hashtags that occur in similar measure in credible tweets but are a valuable cue for misleading information when being nudged to take a closer look at what they refer to, and rhetorical indicators for misleading information like claim to ‘Absolute Truth’. We then (C2) conducted an online survey to investigate how users autonomously assess the credibility of tweets. Through this survey, we (F2) gained insights into several indicators that users apply autonomously (e.g.buzzwords like ‘mainstream media’ as topical indicator), and found that they are in particular not aware of rhetorical indicators of misleading information, revealing the benefit to display them in an assisting tool. Finally, we (C3) assessed the comprehensibility and usefulness of our previously identified indicators and discovered (F3) a positive perception of the overall indicator-based approach. Evaluating in particular how the topical, formal, and rhetorical indicators enhance the perception and evaluation of users when presented as a digital intervention, we gained (F4) positive feedback regarding comprehensibility and perceived usefulness for instance for topical indicators when kept rather general, emotional emojis, and capitalisation, but also criticism for others (e.g. ‘Punctuation’ being too excluding for non-native speakers). Additionally, we identified (F5) the opportunities, challenges, and limitations associated with the indicator-based approach for short-text content, such as indicators not being valid if within a quote and drawing attention to misleading content even more when highlighting specific parts.

2. Related work and research gap

Our work contributes to the design of user-centered digital interventions to counter misleading information on Twitter/X, which is widely used by people during crises to access as well as share information. We discuss related work regarding misleading information during crises and shed light on crisis informatics as a research area (see Section 2.1). We further present different ways to assist users of social media in dealing with misleading information from an HCI perspective, including digital interventions of various forms that strongly motivated the user-centered proceeding of our work (see Section 2.2). Subsequently, we illustrate research gaps and our research questions (see Section 2.3).

2.1. Misleading information in crises

As we investigate misleading information on social media during crises like the COVID-19 pandemic and the Russian war of aggression against Ukraine, it is crucial to shed light on previous works of crisis informatics which have focused on different user perspectives and their motivation to create misleading content in both long- and short-term crises. While we focus on the user perspective and resulting implications for the design of digital interventions, many existing studies investigate motivations for creating misleading information which are partly founded in antisemitism (e.g. believing in an international elite controlling government) (Hansson Citation2017; Starbird Citation2017) or an anti-globalist view (Molina, Sundar, and Lee Citation2021; Starbird Citation2017), or propagating actors and communities (Memon and Carley Citation2020). Other studies focus on specific conspiracy theories (e.g. 5G theory) (Ahmed et al. Citation2020) or contents of misleading narratives in English, Hindi, Bangla, or Telugu tweets during the COVID-19 pandemic (Akbar et al. Citation2021; Sharma et al. Citation2020). These contributions are especially valuable for our research goals as topics and, therefore, reflected motivations may allow us to derive comprehensible indicators for users with different backgrounds and varying degrees of vulnerability towards certain discourses and deception strategies. We conducted our study based on these findings, identifying similarities and complementing insights.

There are valid assumptions that misleading information during crises differs from misleading information in everyday life (Huang et al. Citation2015; Mendoza, Poblete, and Castillo Citation2010), for instance in regard to the speed at which it is transmitted (Zeng, Starbird, and Spiro Citation2016). In addition, the information needs during a public health crisis differ from those of other types of crises (Gui et al. Citation2017). Moreover, information, especially in the early stages of the crisis, is often incomplete and contradictory (Gui et al. Citation2017). This period is particularly characterised by great uncertainty (Burel et al. Citation2020). Considering worldwide spread of COVID-19-related misinformation, studies have frequently focused on English-speaking or US-centric debates (Schmid-Petri et al. Citation2023). For example, Evanega et al. (Citation2020) identified themes such as ‘New World Order’, ‘Wuhan Lab / Bioweapon’, ‘Bill Gates’, ‘5G’, ‘Antisemitic Conspiracies’, and ‘Plandemic’ to be prominent. While reflecting several of these themes in our study as well, regional differences can be identified as our German-language data set did not comprise tweets referring to ‘Democratic Party Hoax’ or ‘Dr. Anthony Fauci’ (Evanega et al. Citation2020). Another study, concerned with the perception of legitimacy of vaccine-related disinformation in the context of the COVID-19 pandemic, is also based on data from the U.S. (Di Domenico, Nunan, and Pitardi Citation2022). Connecting to works on the German COVID-19 social media debate (Schmid-Petri et al. Citation2023; Zeng and Chan Citation2021), it is indicated that overarching topics such as science denial or conspiracies can be seen as phenomena across the globe, with context-specific signifiers (e.g. politicians) diverging according to the national context.

Dedicated to the cooperative and collaborative use of information and communication technology in disruptive situations (Palen and Anderson Citation2016), crisis informatics has focused on the spread of false information in the course or aftermath of crises (Burnap et al. Citation2014; Goggins, Mascaro, and Mascaro Citation2012; Vieweg Citation2012). Further, Starbird, Arif, and Wilson (Citation2019) argue that information operations on social media, often led by political actors, are an important part of HCI research: such information operations ‘function to undermine the integrity of the information space and reduce human agency by overwhelming our capacity to make sense of information’. Indeed, finding adequate ways to assist users in dealing with misleading information (in crises) is a socio-technological challenge within the HCI research community. Characteristics of misleading information identified in the previously presented studies are a central part of developing effective countermeasures. In the following, we present how HCI works and related research designs have evaluated user-centered digital interventions.

2.2. Assisting users in dealing with misleading information

The dissemination of misleading information represents a worldwide issue with efforts from multiple perspectives to combat its impact. Potential solutions encompass professional fact-checking, media literacy campaigns, policy implementations, and digital interventions on social media. The strategies differ depending on various factors, including geographical location, culture, socioeconomic context, and political climate (Haque et al. Citation2020). Additionally, the type of assistance required can vary among individuals, for instance, based on their political ideology and age (Guess et al. Citation2020). The computer science research community, including HCI, addresses the challenge of misleading information by supporting media literacy efforts, policy implementations, and professional journalism by implementing technological solutions. These may comprise of automatic detection approaches (Shu et al. Citation2017), especially applying machine learning techniques, but also user-centered decisions and implementations on what to do with successfully (manually or automatically) detected misleading information. In particular, post-detection digital misinformation interventions (Bak-Coleman et al. Citation2022; Saltz, Barari, et al. Citation2021) have been investigated taking various forms (Hartwig, Doell, and Reuter Citation2023). This includes corrections, for instance underneath a social media post, in the comment section, or as a link to fact-checking websites (Bhargava et al. Citation2023), visibility reduction by reducing opacity or size (Ardevol-Abreu, Delponti, and Rodriguez-Wanguemert Citation2020), giving (binary) labels to mark content as right or wrong (Barua et al. Citation2019), or highlighting specific characteristics of misleading content as cues for users (Hartwig et al. Citation2024; Bhuiyan, Whitley, et al. Citation2021; Schmid et al. Citation2022).

While digital interventions vary widely; research suggests that users prefer those that come with a certain degree of transparency, allowing for informed decisions and the ability to comprehend why content is potentially misleading (Kirchner and Reuter Citation2020). Giving explanations or comprehensible cues can be significant to establish trust in the intervention (Hartwig et al. Citation2024; Kirchner and Reuter Citation2020) and counter feelings of reactance or related backfire effects (Nyhan and Reifler Citation2010) that are controversially discussed in research (Wood and Porter Citation2019). Indeed, a higher level of transparency can be used to enhance the media literacy and critical thinking competence of users to establish their own assessment strategies instead of forcing a total dependency on the intervention's feedback. In line with other researchers, we define media literacy as the ability to decode, evaluate, analyze, and produce media and internalise ‘critical autonomy’ (Aufderheide Citation1993). While the concept of media literacy has received some criticism for oversimplifying the complex information space (Hassoun et al. Citation2023), relying too much on rationality (Boyd Citation2017), and creating a false sense of confidence (Bulger and Davison Citation2018), research has demonstrated its beneficial effects to alter behaviour for the better (Spezzano Citation2021; Webb and Martin Citation2012) – an insight we draw on in our study.

In the context of different digital interventions to enhance media literacy (e.g. giving generic tips on how to detect misleading contents before users are even confronted with social media posts or news articles (Domgaard and Park Citation2021; Guess et al. Citation2020; Hameleers Citation2022)), indicator-based interventions have gained some attention in related HCI research (Hartwig et al. Citation2024; Martino et al. Citation2020). These indicator-based approaches may be applied in two ways:

| (1) | Integrated into a fully automatic tool that aims to detect misleading information (e.g. using machine learning) and, in addition, automatically detects previously defined user-centered indicators on successfully detected misleading content. This allows us to make the output comprehensible and useful for laypersons. | ||||

| (2) | Integrated in combination with manual professional fact-checking, where fact-checkers manually label user-centered indicators in addition to a binary decision or conduct the detection of previously defined user-centered indicators automatically (e.g. via natural language processing). | ||||

Several studies have started to investigate how characteristics of misleading or credible content can be derived and utilised either for statistic-based automatic detection approaches or as a more user-centered intervention. These characteristics or indicators can refer to diverse parts of a social media post, for instance, information about the author (Di Domenico, Nunan, and Pitardi Citation2022) (e.g. suspicious profile, name, followers, other posts), interaction with the posts (Hartwig et al. Citation2024) (e.g.likes, comments), or the content itself (Diaz Ruiz and Nilsson Citation2023) (e.g.rhetorical strategies, linking to questionable websites). For example, Shu et al. (Citation2017) have pointed out different topics, lexical, and syntactical features of fake news and Horne and Adali (Citation2017) evaluated how fake news articles differ from reliable content and found that fake news articles tend to be shorter, use less punctuation and more lexical redundancy, and titles can be considered a ‘strong differentiating factor between fake and real news’ (Horne and Adali Citation2017). Schoenmueller, Blanchard, and Johar (Citation2023) have investigated the relations of socio-demographic factors, social media activity, and personality traits of disinformation-sharing of users with textual and linguistic features based on their post-histories. They identified distinctive characteristics such as linguistic markers, high-arousal negative emotions (e.g. anger and anxiety), religion, and power-related vocabulary.

Recent attempts to capture specific rhetorical structures and tactics have been pursued by Diaz Ruiz and Nilsson (Citation2023) who have identified how identity-driven controversies and pre-existing culture wars are rhetorically exploited by the disseminators of disinformation. Their focus is on the particular example of flat Earth conspiracies, but more fundamentally, they unravel interconnections with broader narratives of faith and religion, societal agency, anti-elitism, and anti-intellectualism, leaving a much more diverse group of social media users susceptible to such tactics. Central to this is their perception that disinformation intertwines with general beliefs (Diaz Ruiz and Nilsson Citation2023). Di Domenico, Nunan, and Pitardi (Citation2022) additionally found through their study of social media users' behaviour in the context of vaccination misinformation in the U.S. that sharing behaviour depends on how legitimate users consider a piece of information to be. They explain how the perception of legitimacy can be described by five dimensions (cognitive, pragmatic, moral, expert, and algorithmic legitimacy), which must be addressed differently in an intervention. Furthermore, they identify specific ‘themes’ and cues through which the legitimisation can be realised (e.g. ‘Power Dynamics’, ‘Media-State Agenda-Setting’ and ‘Outsiders Thinking’ for cognitive legitimacy) and emphasise particularly how ‘expert cues’ (i.e. the representation of an author as an expert in the field) increase perceived legitimacy of misinformation. Our work builds on existing studies on misinformation indicators by systematically applying the derivation and user-centered evaluation of content-specific indicators (differentiating topical, formal, and rhetorical forms) referring to a specific crisis from an HCI perspective. We thereby were strongly inspired by related work (see ). We distinguish topical content, i.e. the meaning communicated by a post, from more formal indicators, such as punctuation, which is usually also considered as part of news content (Shu et al. Citation2017). Additionally, we search for rhetorical indicators as related structures have been proposed to complement detection based on lexicosemantic analyses (Rubin and Lukoianova Citation2015).

Table 1. The coding scheme is oriented by related scholarly contributions, as indicated by references, as well as tweets (*).

In addition to the deriviation of diverse indicators from text, images or videos, research has started to implement them within digital interventions, often using color-code to highlight indicators as cues for end users for immediate feedback and education (Bhuiyan, Horning, et al. Citation2021). Martino et al. (Citation2020) developed a system that analyses text and automatically highlights propaganda techniques such as exaggeration or oversimplification within the text in different colors. Similarly, Schmid et al. (Citation2022) present a web app that allows users to explore and comprehend patterns of potentially misleading and non-misleading tweets based on a social network analysis, revealing the potential to encourage the development of their own assessment skills. Evaluating derived indicators from the perspective of end users (e.g. regarding their comprehensibility) is necessary to develop user-centered and effective interventions. Some studies have started to investigate within this research direction (e.g. for video content (Hartwig et al. Citation2024), for voice messages (Hartwig, Sandler, and Reuter Citation2024), or for expert annotators instead of layperson (Zhang et al. Citation2018)).

Indeed, research on indicator-based interventions has revealed promising findings regarding trust (Bhuiyan, Whitley, et al. Citation2021; Kirchner and Reuter Citation2020), utility (Grandhi, Plotnick, and Hiltz Citation2021), and the development of autonomous assessment skills (Hartwig et al. Citation2024; Schmid et al. Citation2022), but also revealed challenges and limitations (e.g. not being transparent enough or having a biased design Grandhi, Plotnick, and Hiltz Citation2021). We build on those findings by complementing them with a crisis-related perspective and a specific focus on social media users as laypersons.

The majority of existing research on indicators has focused on social media content in everyday life instead of crises. However, specific conditions such as indicators or user requirements, may vary during a crisis (Plotnick et al. Citation2019). These conditions are essential for technology-based user assistance in both automatic detection and subsequent countermeasures. van der Meer and Jin (Citation2020) investigate the efficacy of correcting misleading information as a countermeasure against its spread specifically in crisis contexts. The authors posit that such measures may prove even more effective during crises, as the dissemination of misleading information concerns new information only, and therefore would not require individuals to alter their attitudes, identity, or ideology, but only their beliefs regarding the current crisis situation. It is uncertain whether this assumption is applicable to a crisis such as the COVID-19 pandemic, as a long-term and disruptive event. Furthermore, Plotnick et al. (Citation2019) conducted an online survey to assess general user behaviour and attitudes towards fake news. The analysis results indicate that users consider grammar and the sender's trustworthiness as key indicators of trustworthy content. Additionally, the majority of participants expressed a desire for a color-coded graphic to aid them in evaluating the trustworthiness of information on social media. Although the authors did not specifically concentrate on crises, they underscore the significance of evaluating the credibility of social media content during such situations. For example, they propose examining whether grammar can still effectively indicate untrustworthy content during a crisis when content creation time is limited. These findings drive our research and seeks to understand user perspectives on comprehensible and useful indicators as potential interventions during crises, which represent exceptional and extraordinary circumstances for misleading information.

2.3. Research gaps and research questions

Our work contributes to existing literature on HCI regarding misleading information during crises by shedding light on the user perspective, filling the following two gaps:

1st gap: Prior studies have investigated misleading information with respect to the COVID-19 pandemic, yet clearly focused on the U.S.. We offer a vantage point for research on transnational discourses of misleading information, revealing regional specifics or patterns of German tweets that are similar to U.S. conspiracist narratives (e.g. Akbar et al. Citation2021; Sharma et al. Citation2020) on COVID-19. We differentiate between topical, formal, and rhetorical indicators. This also allows us to reveal relationships between these three types of characteristics and accompany studies that have been interested in social media behaviour with regards to different topics.

2nd gap: Studies have already investigated features of misleading contents in terms of applicability for automatic detection (Zeng, Starbird, and Spiro Citation2016), the motivations for the creation of misleading information (Starbird Citation2012, Citation2017), and a user perspective on (digital) misinformation interventions (Aslett et al. Citation2022; Horne and Adali Citation2017; Martino et al. Citation2020; Pennycook, Epstein, et al. Citation2021). However, the user perspective regarding countermeasures is still to be addressed even more extensively (Gui et al. Citation2017; Kirchner and Reuter Citation2020) regarding indicator-based approaches to encourage media literacy. By identifying user-centered, i.e. comprehensible and useful content-specific indicators for misleading tweets, we contribute to existing research (Kahne and Bowyer Citation2017; Kirchner and Reuter Citation2020; Mihailidis and Viotty Citation2017; Potthast et al. Citation2017) that focuses on the subsequent countermeasures after (automatic or manual) detection. Recently, researchers have stressed the importance of designing user-centered measures that counter reactance (Müller and Denner Citation2019; Nyhan and Reifler Citation2010) and follow the needs and preferences of end users for comprehensible and transparent information (Kirchner and Reuter Citation2020). Building on other approaches for user assistance (Bhuiyan et al. Citation2018; Fuhr et al. Citation2018; Hartwig and Reuter Citation2019), we follow up on those findings and complement them with a focus on specific crises. As the time at the beginning of a crisis is particularly relevant for the investigation of misleading information due to high uncertainty (Burel et al. Citation2020), we focus on this period in our study when thoroughly analysing tweets. Instead of working towards an explanation of AI models, we are specifically interested in comprehensible content-specific indicators, independently from the preceding detection method.

We are convinced that approaches governing users' online activities need to offer the necessary ground for reflection or learning and that research on assistance for users in dealing with misleading information online during a crisis may help to achieve this. Thus, our overarching goal is to answer the following research questions:

| RQ1: | What are topical, formal, and rhetorical characteristics of misleading tweets in crises, with the COVID-19 pandemic serving as an example? | ||||

| RQ2: | Which indicators are used autonomously to assess the credibility of tweets? | ||||

| RQ3: | What comprehensible and useful content-specific indicators can be derived with regard to the design of digital artifacts assisting users on social media in dealing with misleading tweets during crises? | ||||

We present our contribution within a threefold study design. First, we perform a content analysis of Twitter data to get a sense of what misleading information in the context of crises such as the COVID-19 pandemic looks like and to identify content-specific characteristics. Second, we conduct an online survey to qualitatively assess which indicators users already apply autonomously to estimate the credibility of tweets. Third, our think-aloud study gains qualitative insights into how comprehensible and useful people from different backgrounds perceive the previously identified indicators to be, extending our approach to the Russian war of aggression against Ukraine as an additional recent crisis event.

3. Methodology

In this paper, we employed a threefold study design to provide the foundation for future digital misinformation interventions after (manual or automatic) pre-filtering. Our primary goal was to derive user-centered indicators to help users evaluate the credibility of tweets, specifically regarding misinformation during crises like the COVID-19 pandemic or the Russian war of aggression against Ukraine. The study did not develop any intervention but aimed to identify and evaluate potential indicators for integration into subsequent interventions. The threefold methodology is motivated by related work (Saaty et al. Citation2022) that emphasises using social media data as a source of information to provide an extension to traditional methods such as the think-aloud method.

Step 1 of the study entailed a quantitative Twitter analysis. Here, we established a thorough knowledge base to ‘get a sense of what [misleading information] looks like in the context that [we] are interested in’ (Pennycook, Binnendyk, et al. Citation2021). Tweets concerning COVID-19 were gathered and examined by researchers, who distinguished between misleading and non-misleading content. A content analysis was carried out, identifying several characteristics of misleading tweets, which were sorted into topical, rhetorical, and formal characteristics. These characteristics could be potential indicators assisting users in assessing the credibility of tweets. However, to evaluate this, the subsequent user-centered steps are still necessary.

Moving on to Step 2, the researchers aimed to discover the characteristics that Twitter users utilise when assessing the credibility of tweets autonomously. They conducted a qualitative online survey, where participants rated the credibility of a set of tweets and were asked to provide explanations for the characteristics or indicators used for their credibility assessments. This phase provided researchers with insights into the indicators that individuals autonomously use when evaluating the credibility of tweets and might be particularly beneficial for users with lower media literacy (e.g.due to young age) to be displayed as cues.

In Step 3, the study integrated the findings from Steps 1 and 2. The identified characteristics of misleading tweets from Step 1, both on the topical, rhetorical, and formal levels, were combined with the indicators autonomously used by participants in Step 2. In this final phase, we examined in a think-aloud study how users perceived the usefulness and comprehensibility of these indicators when presented alongside actual tweets regarding multiple current crises. This encompasses indicators that can be considered rather definitive for credibility assessment and others that are more subtle but still provide a fuller picture of creators' intentions or beliefs, and overall encourage critical reflection. In the following, we present Twitter as our case selection and a detailed description of the methodologies in Steps 1 to 3 (for a graphical representation of our threefold study design see ).

Figure 1. Graphical representation of our threefold study design comprising of (1) a quantitative Twitter analysis, (2) a qualitative online survey, and (3) a think-aloud study.

3.1. Case selection

Twitter (now X) as an important social media platform (Economic and Social Research Council Citation2020) allows fast information dissemination across national borders. Assuming the network constitutes a sphere of public discourse, comprising users with different levels of educational and political backgrounds, we decided to select Twitter during crises as our research case. We addressed this case in three different case analyses that build on each other (see Section 3 and ). We conducted an analysis of tweets regarding the COVID-19 crisis in 2020. Focusing on German tweets may reveal important insights as both conspiracy theories and vivid debates by civil society have a long history. It further allows for the derivation of indicators in the German language. To gain additional insights into the transferability to other recent crises, we extended our stimuli to the Russian war of aggression against Ukraine in Step 3. While the COVID-19 pandemic as a health-related crisis primarily revolves around public health and safety, tweets regarding the Russian war against Ukraine are fundamentally of a more security-related nature. Despite their differences, both crises share commonalities in their political relevance, the occurrence of common conspiracy theories, the exploitation of the insecure situation by manipulative groups, and their high potential to influence public opinion. As many other social media platforms, Twitter has consistently adapted its handling of misleading information during the pandemic, reaching from removal or labeling of harmful contents to generic warnings that the information conflicts with public health experts' guidance (Coleman Citation2020). While less specific warnings may be time-efficient, users could have problems understanding their reasoning (Haasch Citation2021). Recently, Twitter has started the pilot project ‘Birdwatch’ in the USA as a community-based approach (Coleman Citation2020). However, at the time of this study, this approach was not yet accessible in Germany. While this work was conducted when the platform was still named Twitter, recent changes have demonstrated an increasing interaction with disinformation sources after accounts controlled by a state are no longer marked as such. This shows a wider relevance of the platform now named X for misleading content in general (Sadeghi, Brewster, and Wang Citation2023).

3.2. Step 1: Quantitative twitter study updated -- deriving content-specific indicators for misleading tweets during crises

Step 1 aims to contribute to the knowledge base on characteristics of misleading tweets during the COVID-19 pandemic as a specific crisis. Thus, we derived characteristics on different levels as potential indicators to assist users in assessing tweets' credibility.

3.2.1. Data collection

To create our sample, we used a Twitter API, scraping a wide range of COVID-19-related tweets using the query ‘Coronavirus OR Corona OR Covid OR Coronakrise OR coronadeutschland OR Corona19 OR covid19 OR covid-19 OR #COVID-19’. We initially collected a total of about 360,000 tweets. The first data collection phase on Twitter took part during a very early stage of the pandemic (27 February 2020 to 6 March 2020) while the second data collection phase on Twitter took part from 14 April 2020 to 22 April 2020. Public life in Germany was still quite ordinary during the first observation period, and a strong focus of reporting was on foreign countries such as China and Italy. Only after the first observation period were contagions detected in all German states, and Chancellor Merkel announced the first Germany-wide lockdown. During the second observation period, more and more COVID-19 cases were recorded in Germany. Germany was still in the first lockdown, and public life had reached a standstill. However, a slow return to public life had already been introduced. The debate about compulsory vaccination was present in the public discourse.

3.2.2. Data processing

To extract a meaningful number of tweets for subsequent examination for indicators, we used Python to process the tweets as follows. (1) We excluded all non-German tweets and eliminated duplicated content like retweets. (2) We generated a random sample to include all potential kinds of misleading information, independent of topic and motivation. (3) We ensured that a sufficient number of misleading tweets was included during a group of researchers' initial review of the tweets. (4) A thorough introduction and a background in political science qualified the researchers to label the tweets with the corresponding codes. A detailed description of the individual steps can be found in the appendix (see Section A.1). To gain a balanced proportion, we randomly excluded some non-misleading tweets from the analysis as they were overrepresented after the final coding. Hence, our final sample for analysis consisted of 2382 tweets in total, including 50% misleading information such as false information or misleading satire, and 50% tweets that were marked as not misleading. This entails tweets that were labeled as true information, opinions that are not absolute, or satire that is not misleading in the COVID-19 context, both for the sample of the early stage and the second stage of the crisis.

3.2.3. Analysis

After extracting a reasonable amount of tweets, we conducted a manual content analysis to thoroughly derive characteristics of misleading in comparison to non-misleading tweets. The content analysis (Flick Citation2014) consisted of a manual coding process using RQDA. We chose to perform the analysis manually instead of computationally, to not overlook any previously unexpected characteristics and allow for diving deep into the data. A training phase initiated the coding process to obtain a common understanding of the codes. Code categories, codes, and sub-codes were developed both deductively and inductively, taking into consideration both theory and the empirical material (see Coding Scheme in ). It was an iterative process, enabled by a research group of three. Three researchers with expertise in political science and misleading information, as well as HCI, labeled the tweets according to a pre-established coding scheme by one researcher. Each tweet was at least tagged as misleading or non-misleading. As we are interested in identifying potential indicators for misleading tweets, many non-misleading tweets were not assigned to any of the codes reflecting topics.

We were looking for immediately visible and potentially comprehensible indicators for misleading tweets and thereby differentiated between topical, formal, and rhetorical characteristics of tweets as potential indicators, inspired by related work (see Coding Scheme in and, for examples, see , App.). With topical indicators, we aim to capture topics that are recurring in misleading posts. Thus, we want to gain insight into what message posts communicate and differentiate lexical or syntactical indicators, such as punctuation, from a post's meaning. We also identify related formal indicators, which may also represent system functions, such as external links or hashtags (Shu et al. Citation2017). Codes capturing these formal and more general content indicators were inspired by the literature on the detection of misleading information (Castillo, Mendoza, and Poblete Citation2011) and related contemporary ideological narratives (Hansson Citation2017; Starbird Citation2017). In contrast to topical and rhetorical characteristics and the majority of formal characteristics, the mere existence of hashtags or links is not an indicator of misleading information. Instead, users can conclude a closer look at the hashtags or links contained, e.g. whether they lead to conspiracy theory websites. Specific topical indicators (e.g. ‘Laboratory Wuhan’) were based on the exploration of tweets as were the majority of codes capturing rhetorical indicators of tweets. Thus, regarding the latter, we did not dive into rhetorical structure theory (Rubin and Lukoianova Citation2015) or intend to expose the existence of more abstract underlying rhetorical tactics (e.g. the construction of echo chambers (Diaz Ruiz and Nilsson Citation2023)). Instead, our focus lies on more basic stylistic means, (e.g. ‘Exaggeration’, ‘Sarcasm’), which could be identified in the empirical data. We thereby implicitly agree with the assumption that the dissemination of disinformation can operate through underlying rhetorical tactics, but instead focus on identifying the specific stylistic and rhetorical devices employed in the process.

Coders were free to add codes to the existing coding scheme whenever necessary. In that case, the information on the new code was shared with the other coders. In all other cases, after the training phase, the three coders worked independently to achieve a sufficient level of objectivity. Subsequent analyses were based on majority decisions, determining the codings. Intercoder-reliability was tested and reached a value of .

3.3. Step 2: Qualitative online survey updated -- autonomous assessment strategies of tweets during crises

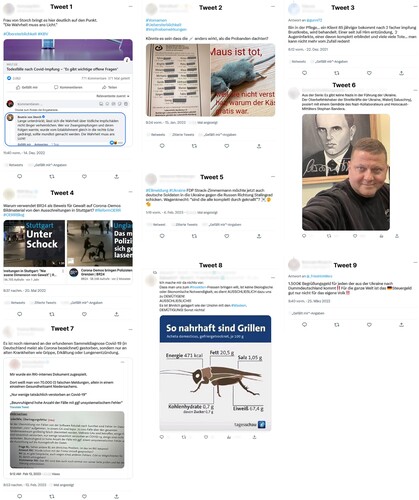

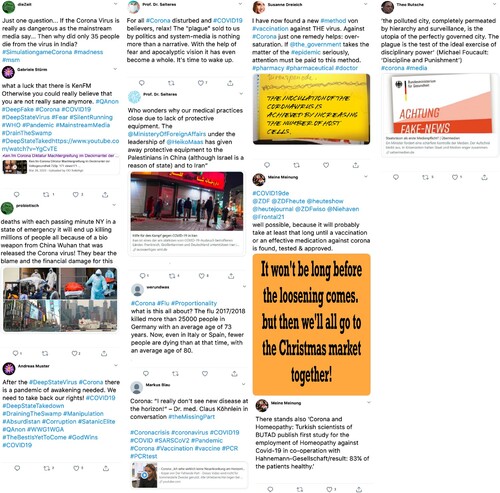

Step 2 extends the discovery of characteristics of misleading tweets during the quantitative Twitter analysis of Step 1 using a user-centered approach. This phase enables us to gain insights into the indicators that individuals already autonomously utilise to evaluate tweet credibility. We created twelve tweets (see ; App.) inspired by real-world examples. Tweet contents such as text and referenced images, links or videos were maintained. However, the original real-world profile names and pictures were changed to include potentially interesting characteristics (e.g. tweets by an unknown fictional doctor) and avoid biases towards well-known personalities or the exposure of real-world Twitter users.

3.3.1. Participants

We asked 49 participants of different socio-demographic backgrounds whether they thought statements to be true or false as well as, openly, how they came to their respective conclusions. This was conducted in an online survey format (SoSci Survey), with adult participants getting an expense allowance of €25 each. For the analysis, we excluded participants who withdrew prematurely from the study, resulting in N=44 valid data sets. The sample size is in accordance with common user studies in HCI research (Caine Citation2016). Participants were acquired mainly through a university website and Facebook groups. Of the participants, 38% were male; 62% were female. The age was collected in clusters with most participants being between 20 and 29 years old (38%) or older than 54 years (33%). Regarding the highest formal education, five participants had completed vocational training (‘Lehre’), two had a (general) certificate of secondary education, four had a vocational diploma, nine had A levels, and 17 had a university diploma. Seven participants made no entry for education. Our goal was to receive a diverse qualitative input, effects of socio-demographic differences were not evaluated. The questionnaire was part of a longer study spanning over seven days, with the fourth day focusing on the issue of online misleading information regarding the COVID-19 pandemic. Our study design did not explicitly introduce the context of misleading information beforehand to minimise a framing effect. The survey answers were collected in open text format.

3.3.2. Analysis

We used RQDA for a qualitative content analysis of the open-text questions. Consensus coding (Wilson, Zhou, and Starbird Citation2018) with discussion to resolve disagreements was conducted by two researchers trained in social sciences, referring to a coding scheme similar to the one of the first quantitative study (for an excerpt of survey answers and their according codes see , App.).

3.4. Step 3: Think-aloud study updated -- perceived comprehensibility and usefulness of content-specific indicators

Building on the derived potential indicators during the content analysis in Step 1 and the additional insights on autonomously applied indicators during the online survey in Step 2, we investigated if and how the derived indicators are perceived as comprehensible and useful when assessing tweets. Thus, in Step 3, we get a thorough user perspective on identified indicators as central characteristics to be applicable within a user-centered digital misinformation intervention. Comprehensibility and usefulness are central concepts when gaining first insights into the perceived effectiveness of an intervention in contrast to evaluating the objective and quantitatively measurable effectiveness, for instance, in large-scale field studies or experiments. Comprehensibility refers to the extent to which our proposed indicators are easily understandable in general and in regard to a specific tweet to assess its credibility. On the other hand, perceived usefulness refers to the extent to which our proposed indicators are helpful in achieving the intended goal of enabling users to assess the credibility of a tweet.

3.4.1. Stimuli

We included a total of nine tweets as stimuli in our think-aloud study. This includes one tweet with completely correct information (tweet 4) and one tweet, that although having the potential to cause harm, has been professionally checked and consists of correct information as well (i.e. the picture and names mentioned are correct, however the picture is cropped). All remaining seven tweets were officially identified as misleading. For all nine tweets, we have resorted to official fact-checking websites that have performed professional fact-checking on the content mentioned. To allow for comparison with Step 1 and 2, we focused on recent tweets concerning COVID-19 and added three tweets concerning the Russian-Ukranian war as another current crisis. Inspired by the guidelines by Pennycook, Binnendyk, et al. (Citation2021) for selecting misleading and non-misleading tweets we visited official fact-checking websites to first identify suitable topics that are not completely likely to be familiar to participants. We further made sure to select content that had not already been outdated when conducting the study. After identifying recent and relevant topics on the fact-checking website, we searched Twitter for current tweets referring to COVID-19 from both a favourable and critical perspective on official COVID-19 measures, as well as tweets referring to the war from both a pro-Russian and pro-Ukrainian perspective. To avoid biases towards well-known personalities and to focus solely on the tweet content which is our special interest in this study, we blurred profile pictures and profile names of the authors as well as statistics (i.e. number of likes, shares, and retweets). However, when a tweet content included a name or profile picture, we kept the original display as we consider this part of the content itself. Information on the posting date was kept visible as well (see all tweets in ).

Figure 2. Tweets that were used as stimuli during the think-aloud study. Tweet 4 contains accurate information that has been officially fact checked by Correctiv (https://correctiv.org/faktencheck/2022/06/03/br-tauschte-irrefuehrendes-vorschaubild-in-video-beitrag-ueber-corona-demos-aus/). While tweet 6 has the potential to cause harm, official fact-checking found that the picture and mentioned names are correct (https://correctiv.org/faktencheck/2023/01/04/ja-auf-diesem-foto-steht-ein-ukrainischer-general-vor-einem-stepan-bandera-gemaelde/). All other tweets contain misleading information that was officially disproved.

3.4.2. Procedure

The study was conducted online using Zoom, where sessions were recorded separately. See Section A.2 in the Appendix for a detailed description of the study procedure including all items. At first, we assessed demographic information and political attitudes in a questionnaire. We then presented nine real-world tweets. Participants were instructed to keep thinking aloud while performing the following tasks and were told that there was no wrong or right, we were only interested in their individual assessment (Fonteyn, Kuipers, and Grobe Citation1993). We asked the 23 participants about their familiarity with the tweet, its perceived importance, and its perceived credibility. For the two tweets containing reliable information, we asked the participants to imagine that a reliable fact checker (human, organisation or algorithm) found the tweet‘s content to be correct. In contrast, for all seven tweets containing misleading information, we asked the participants to imagine that the reliable fact checker found the tweet to be misleading and therefore, in addition, an algorithm looked for indicators as guidance on evaluating misleading tweets. We then presented a list of indicators next to the given tweet and asked to elaborate on the perceived comprehensibility and usefulness of each indicator. For this, we highlighted the indicators and referred parts of the tweet with color (see ). Afterwards, participants were asked again to rate the tweet's credibility before the researcher informed participants about the actual truthfulness of the tweet. After all nine tweets were addressed, we asked the participants to summarise their overall impression of the comprehensibility and usefulness of the approach of highlighting indicators for misleading tweets and if it made a difference if decisions on tweets’ credibility were performed by an algorithm or by a fact-checking human/organisation. We did (not) explicitly introduce the topic of misleading information to avoid biases at the beginning of the study. However, the context of the study became clear very early on.

3.4.3. Participants

A total of 23 participants took part in our think-aloud study, ranging from 20 to 66 years (Median = 32) and covering a diverse set of educational levels (six participants with basic education, ten participants with medium level of education and seven with high level of education). The sample size is in accordance with common user studies in HCI research (Caine Citation2016). We explicitly decided against recruiting solely college students as these are already overrepresented in misinformation studies due to their easy accessibility, and they do not represent the average user. Of the participants, 14 were male, eight were female, and one was of diverse gender. All participants, except for two, stated German to be their native language. To gain information on the social media usage and expertise of our participants, we asked how often they use social media. The majority stated using social media daily (N = 20) or several times a week (N = 3). More specifically, six stated using Twitter daily, eleven several times a week or weekly, three once or two times a month and six never use Twitter. As our stimuli address misleading and reliable information on the topics of COVID-19 and the Russian war against Ukraine, we further evaluated central general attitudes towards these topics and the general political orientation of our participants. Of the participants, 20 fully or partially agree with COVID-19 measures being useful overall, while three tend to disagree. All participants fully or partially agree with the statement that the coronavirus exists. All participants, despite one, fully agree with Russia waging a war of aggression against Ukraine. The single participant stated to fully disagree. We asked our participants to place themselves on a scale from 1 (extreme left) to 11 (extreme right) regarding politics. Six participants placed themselves on the far left between 1 and 2, nine rather left between 3 and 4, eight in the middle between 5 and 7, and none further right than this. Participants were acquired through the panel provider Prolific and got an expense allowance of €12 for an average duration of 60 minutes.

3.4.4. Analysis

For data analysis, we automatically transcribed speech to text using whisperFootnote1 and manually checked for better quality. We then performed a content analysis with two researchers regarding the dimensions of perceived comprehensibility and usefulness of each indicator as well as more general findings regarding the perceived comprehensibility and usefulness of the whole indicator-based approach. Thus, Step 3 results in a thorough overview with which previously derived indicators are perceived as useful and comprehensible and can be considered suitable to be integrated into a future indicator-based misinformation intervention.

4. Results

In the following, we present the results of our threefold study. We start by reporting the identified characteristics of misleading information disseminated on Twitter in the course of the COVID-19 pandemic (RQ1) which we assessed in the quantitative content analysis of tweets as Step 1. Subsequently, we present our findings on which indicators are already being used autonomously by users to assess tweet credibility (Step 2: qualitative online survey) to answer RQ2. Finally, we report how users perceive the comprehensibility and usefulness of identified indicators (Step 3: think-aloud study) to answer RQ3.

4.1. Deriving content-specific indicators for misleading tweets during crises

In Step 1, we systematically derived characteristics of misleading tweets in comparison to non-misleading tweets on the topical, formal, and rhetorical levels to create a knowledge base on what misleading information during a specific crisis looks like. Our sample consisted of misleading tweets (N = 1191), which contain claims of holding true information while they are inaccurate. Some of these tweets may have been formulated for satirical reasons, yet they may mislead perceiving users in case they do not clearly reflect satire while referring to virus-related issues. For example, some tweets indicated the healing potential of alcohol: ‘In Russia, nobody has come down with COVID-19. So, don't prep yourself with any cans of sausages, of fish, any ready-to-serve meals or water. Buy a lot of vodka!’ (#0046) Because people have believed similar statements with regard to the origin, effect, and options of healing of COVID-19, we categorised such tweets as misleading. The second group of tweets (N = 1077) consists of accurate information, own and thus non-falsifiable experiences or feelings as well as satirical tweets which do not suggest misleading users. Satirical posts (N = 114) like ‘To be fair, there is nothing more frightening than German bureaucracy. I am not sure whether the #coronavirus wants to deal with it. Viruses also have standards’. (#0779) are assumed to be not misleading as they, again, personalise the COVID-19 virus and put it in an unrelated semantic field (i.e. bureaucracy) to criticise politics.

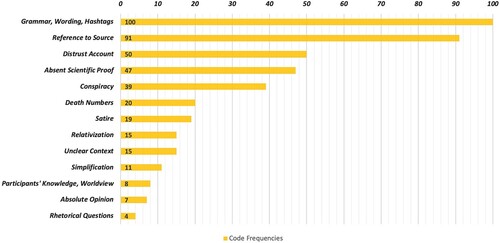

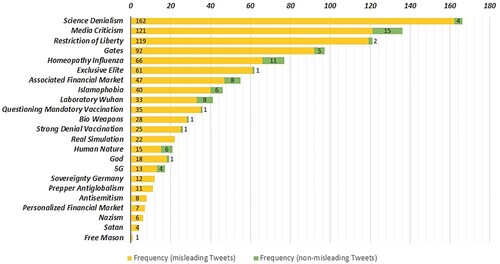

In the following, we present topical, formal as well as rhetorical indicators of both misleading and non-misleading tweets related to the COVID-19 crisis in Germany (see for an overview of all indicators).

4.1.1. Topical indicators for misleading tweets

Regarding the first group of topical indicators, the content analysis revealed that codes of the category of ‘Traditional Conspiracies’ like ‘Free Mason’, ‘Nazism’, ‘Prepper AntiGlobalism’, ‘Sovereignty Germany’ appeared very rarely while codes referring to new, more context-specific themes (e.g. ‘Restriction of Liberty’ or ‘Gates’) were much more prevalent (see ). At the same time, misleading tweets were still characterised by some traditional conspiracist topics like the ‘Exclusive Elite’. For example, one user made clear that

[i]t is about something else, but you will soon learn about it; it is not about the coronavirus. Soon, the hospitals will be overcrowded with children, who will be freed from the underground, where they have been living in underground bunkers for years. Who of you knows about adrenochrome, just google it. (#1331)

Attesting confidence about having complete, yet exclusive, knowledge of the world is a conventional feature of conspiracy theories, which, in this instance, was put into a contemporary context, referring to adrenochrome (a metabolite which plays an important role for the QAnon conspiracy theory). Related statements may also carry antisemitic content: ‘There are some people who have strong doubts about the official version of China regarding the coronavirus (…). There are theories and assumptions that, for example, Soros has something to do with the virus, which would perhaps be very interesting’. (#0271). Further, new trending themes like ‘Gates’ or comparing COVID-19 to conventional influenza regarding its effects (cf. ‘Homeopathy Influenza’) may follow more established science denialist, anti-vaccination narratives:

This is a very big #put-on of the German people! Whether ‘refugees’, whether #corona, whether the new app and the compulsory vaccination with a chip, everything is a plan by criminals like the #WHO #BillGates #RKI #Merkel #Spahn #Rothschild #Rockefeller and their organisations! (#1219)

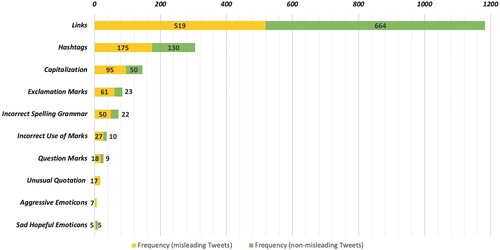

4.1.2. Formal indicators of misleading tweets

Regarding formal indicators, we observed a relatively balanced distribution across types of tweets (see ). Links were used in 1183 tweets, of which 519 were misleading. Although links may make a tweet seem more credible, this may not always be the case. Thus, taking a closer look at the referenced link can lead to a suitable indicator for misleading content (e.g. a link to a conspiracy website). The same applies to hashtags, which on their own are not an indicator of misleading information. Again, however, a nudge to look more closely can lead to a helpful indicator (e.g. hashtag naming a conspiracy theory). Interested in cross-media use and the types of media shared by embedded URLs in tweets, it was revealed that links in misleading tweets were most frequently referring to YouTube videos and images (see , App.). While we did not perform statistical analysis on that particular topic, our findings reveal some first impressions. For instance, 2.56% of non-misleading tweets contained an embedded link to a YouTube video; for misleading tweets, that was the case in 21.58% – such a large difference cannot be seen in any other type of embedded links. Well-known online news sites (Focus, N-TV) were shared to a similar extent across non-misleading and misleading tweets. This may be due to the rather superficial, short-term nature of fast online journalism. Additionally, smaller, less professionally held blogs were used as references. While we derived the share of links that related to URLs containing the word ‘blog’, we did not list the various, relatively unestablished homepages that may account for the greatest share of links in misleading tweets. At the same time, ‘traditional’ conspiracist or misleading websites (Russia Today, Sputnik, Anonymous, pi-news) were not used as much as expected in misleading, but never in non-misleading tweets. Formal indicators common for crisis situations, like capitalisation of words or incorrect spelling, were comparatively more present in misleading tweets. Yet, and potentially as the COVID-19 pandemic is no short-term event fueling overwhelmingly highly emotional tweets, misleading tweets were rarely characterised by such formal elements. Looking at misleading tweets, the use of links was comparatively most frequent, suggesting users' interest in legitimisation or increased spread of content being important. All formal indicators indicating incorrect language use were more indicative of misleading content than non-misleading, with the use of unusual quotations being solely present in misleading tweets. Thereby, users questioned the existence of the ‘virus’ (#1795) or related ‘deaths’ (#0024).

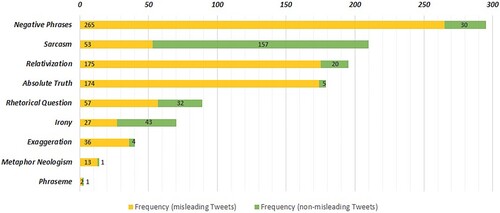

4.1.3. Rhetorical indicators for misleading tweets

The third group of indicators comprised rhetorical devices that are applied commonly in the context of the creation of misleading information (see ). With respect to negative, absolute language that may intensify conversational interactions, we made out two recurring themes of swear words. First, terms relating to fascism, dictatorship, or the Weimar Republic were used to target the current political system and incumbents (#1398, #1720). Thus, one user notes that ‘[t]he Merkel Regime is finally dropping its masks and turning out as an axis-mirrored fascist dictatorship of “Mini-fascists” End the masquerade [sic]! #corona #FRG2020’. (#1668)

Second, stigmatising political measures and (scientific) public discourse on the COVID-19 pandemic was performed by pathologization. For example, ‘hysteria of speculators’ (#1383) was defined as the reason for the outbreak of COVID-19 while support for political measures was achieved because of irrational fears or (creation of) panic (#1749). In sum, 7.81% of misleading tweets contained the words ‘panic’, ‘hyster*’, ‘insane’, or ‘delusional’. Such discursive undertakings were also reflected by the constructions of neologisms like ‘Merkill’ (#1170), ‘schizophrenic framing-channel’ (#0028), or ‘Coronavirus-Hysteria’ (#0108). While ‘Sarcasm’, ‘Irony’, and ‘Rhetorical Questions’ were also, partly predominantly, used in non-misleading tweets, all other rhetorical indicators could most frequently be studied by looking at misleading tweets.

4.2. Autonomous assessment strategies of tweets during crises

To shed more light on the user perspective, the aim of our online survey (OS) in Step 2 was to investigate which content-specific indicators people have already been using autonomously for assessment of tweets, assuming that these are indicators from which they might benefit when highlighted in an assisting tool. We see this step as a complement of the identified characteristics from our quantitative Twitter analysis which allows us to potentially add additional indicators, conform others, or identify particular relevance. While some indicators were autonomously identified by the participants, others, such as specific rhetorical indicators, were not. Adding those indicators to a user-centered assisting tool might have the potential to help users expand their knowledge and encourage media literacy through offering an explanation of ‘tendentious’ (OS02:17) content. In the following, we address topical indicators, formal indicators, and rhetorical indicators successively.

Regarding topical indicators, we can observe that ‘Science Denialism’ indeed played a significant role in our participants identifying tweets as misleading. The absence of scientific references when stating alleged scientific information was emphasised (see ). The participants were often ‘skeptic in general’ (OS02:01) when vaccination statements were combined with COVID-19 topics, especially when scientific references were missing. As instances of ‘Strong Denial of Vaccination’ were also exclusively prevalent in misleading tweets of our second period of observation, it is indicated that users may identify misleading information of a potentially more deliberate nature more easily. In our large sample of tweets for the quantitative study, many were tagged as containing explicit media criticism, referring to the ‘mainstream media’. To our participants, this buzzword constituted a well-known indicator of problematic content (OS13:02). However, some participants assessed tweets by critical journalism as misleading information as well, solely based on the occurrence of the word ‘media’ (OS02:01; OS02:03; OS13:04). Hence, further investigations on how to help differentiate between media ‘criticism’ in misleading information and media criticism that is a form of critical journalism may be a valid effort.

Figure 4. Overview of code frequencies in study 2. For participants, tendentious phrasing was mostly an indicator of misleading information, followed by an absence of references supporting the respective statements or critical opinions regarding sources. Only one participant was convinced by a tweet because the reference suggested proof of accurate information. More specific stylistic or rhetorical indicators were less often relied upon when assessing tweets.

While we observed a strikingly large co-occurrence of different conspiracy theories in the same tweets (e.g. ‘Gates’ and ‘Science Denialism’), participants in our survey did not explicitly reflect on specific conspiracy theories or their combined appearance. Instead, they rather mentioned the dominance of conspiracy theories in general (OS02:05; OS02:06; OS02:07). This suggests that some users may find a more general indication of the ‘Conspiracy Theory’ category satisfactory while highlighting more well-known conspiracist vocabulary or strong statements may also increase comprehensibility. At the same time, evaluation of the answers to the online survey also indicates that it is not necessary to overload users with information on relationships between conspiracist topics and narratives.

When looking at the formal indicators, our quantitative results revealed that, although a relevant system feature, ‘Links’ were not a distinguishing characteristic of misleading content. Participants also considered links in their assessment and found links to traditional news to be a comprehensible indicator of non-misleading content (OS03:07). During our survey, it became clear that the content was decisive. Participants referred to ‘#DeepState’ or ‘#Simulation Game’ (OS02:08; OS13:09) as an indicator. Thus, respondents stressed their problematic content while also emphasising that hashtags may be relatively easily identifiable as relevant formal indicators. Some survey participants considered a lack of punctuation or excessive punctuation (e.g. ‘…’) indicative of misleading information (OS02:10; OS02:11; OS02:12; OS02:13). This is consistent with results of the Twitter analysis, which show the prevalence of incorrect spelling or grammar in misleading tweets, and backed by prior research (Plotnick et al. Citation2019).

Regarding rhetorical indicators, while they appear to be significant indicators for misleading information, respondents did not make many statements. Individuals named provoking language and contentious terms as indicators or declared that they ‘assess this statement as extremely exaggerated’ (OS03:14). However, they did not evaluate tweets as misleading based on the occurrence of absolute phrases (i.e.‘Absolute Truth’) or ‘Relativization’. Considering the online survey, it appears difficult to transparently evaluate tweets as misleading, referring to these rhetorical indicators. ‘Exaggeration’, ‘Absolute Truth’, ‘Relativization’, and ‘Negative Phrases’ (including swear words) were valid indicators for misleading tweets in our quantitative analysis. Hence, interested in comprehensible user assistance, future HCI research may benefit from looking deeper into how these rhetorical indicators can be used as indicators for end users, providing a learning effect regarding the identification of misleading information.

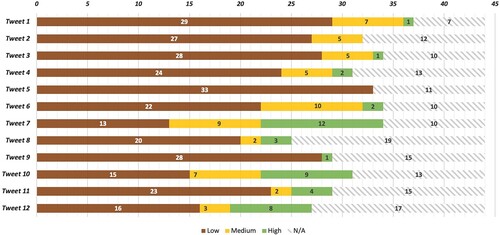

Summarizing the findings of our online survey, the strong indication of sources as indicators implies that users may not want to invest too much time into assessing content and rather prefer to quickly evaluate based on obvious indicators. At the same time, regarding each tweet, roughly one-fifth of participants evaluated the tweet incorrectly (see , App.). These participants, in particular, might benefit from our user-centered approach of giving comprehensible content-specific indicators to encourage media literacy instead of labeling tweets as misleading without explanation. We use the insights gained here on indicators already applied autonomously and not yet used consciously as input for our final Step 3.

4.3. Perceived comprehensibility and usefulness of content-specific indicators

Building on our findings in the two previous steps, we made sure to include tweets containing the following formal indicators: ‘Emoticons’, ‘Capitalization’, ‘Punctuation Marks’, and ‘Incorrect Spelling Grammar’. We decided to include ‘Hashtags’ and ‘Links’ as formal indicators as well, as they were specifically mentioned as helpful in Step 2. For rhetorical indicators, we included ‘Negative Phrases’, ‘Rhetorical Question’, ‘Exaggeration’, ‘Relativization’, and ‘Absolute Truth’. We decided to exclude ‘Irony’, ‘Satire’, ‘Sarcasm’, and ‘Phraseme’ as they did not appear significantly more often in misleading tweets and were not mentioned by participants in Step 2. ‘Metaphor Neologism’ was excluded as it appeared only 14 times in sum within our comprehensive sample in Step 1 and could not be identified for any additional tweets for Step 3. The assessment of rhetorical indicators in Step 3 is of special interest, as they were barely used by participants in Step 2 while being highly relevant. For topical indicators, Step 1 revealed an overabundance of all indicators in misleading tweets. We exemplarily included ‘Restriction of Liberty’ and ‘Science Denialism’ as topical indicators. See our final selection of stimuli in and an example of a stimulus with guidance in .

4.3.1. Comprehensibility and usefulness of topical indicators

The comprehensibility of the included topical indicators ‘Restriction of Liberty’ and ‘Science Denialism’ was overall very high. For instance, in tweet 1, 20 participants were fully able to explain correctly what ‘Restriction of Liberty’ meant in that particular tweet and in general, indicating a high level of comprehensibility. Regarding the perceived usefulness of the indicators to assess a tweet's credibility, the results were also very positive, but with a few drawbacks. For example in tweet 8, 16 participants (fully) agreed with ‘Restriction of Liberty’ being useful, while three participants completely or partly disagreed. Reasons for participants disagreeing with the usefulness of ‘Restriction of Liberty’ was having an inappropriate naming, not being specific enough, or referring to an unsuitable part of the tweet: ‘Exactly, and in this case I would even say that if this is recognised as a restriction of freedom, then someone would have to look at the algorithm again’ (P15). For ‘Science Denialism’, some participants referred to this indicator as being the most useful for assessing tweets, while other participants did not agree and stated that it was not useful at all, mostly because they rated the referred part of the tweet as not suitable.

Building on our findings of Step 2, we kept topical indicators very general instead of naming specific conspiracy theories. With a few exceptions, this approach was rated as positive by our participants. As we included a study part where participants were asked to explain in detail how they assessed the tweets' credibility before showing our indicators, we found that many participants autonomously referred to their prior knowledge on a specific topic as central, not yet naming ‘Science Denialism’ or the ‘Restriction of Liberty’ specifically as own criteria. However, when having seen the topical indicators in a previous tweet, participants referred to them autonomously in subsequent tweets, often embedded as part of their prior knowledge on the topic. While we did not aim to quantitatively evaluate efficacy (e.g. to reduce the sharing of misleading information), this indicates a tendency towards a potential learning effect for this short-time situation. Indeed, this tendency for applying indicators to novel content is repeated for other indicators as well.

4.3.2. Comprehensibility and usefulness of formal indicators

The overall comprehensibility and perceived usefulness of formal indicators was rather positive with a few exceptions and limitations. All but one participant rated the comprehensibility of ‘Emotional Emojis’ very high and were easily able to explain what this indicator refers to for both tweets it occurred in. Similarly, the usefulness was overall rated very positively with few exceptions, emphasising the unprofessional character of using highly emotion evoking emojis within a tweet. It is noticeable that participants find this indicator particularly helpful when there are a lot of emojis in the tweet and do not only pay attention to whether the emoji is particularly emotional (e.g. red siren or screaming emoji).

For ‘Capitalization’, all participants were fully able to explain what this indicator stands for, emphasising a high level of comprehensibility. The vast majority further fully agreed or tended to agree that it is useful to assess the credibility of a tweet.

Exactly, I think that's a good hint. […] Because capitalisation on the Internet is easy to put with shouting in real life. And who must shout, however, in the discussion, has simply no arguments. And so that's simply an exclusion criterion for me when someone writes like that. (P12)

When evaluating the indicator ‘Punctuation’ we found that the majority of participants again were able to understand what it means and why excessive punctuation (e.g. ‘!!!’) might indicate misleading information. However, some criticised that this may include wrong punctuation as well (e.g. missing commas in a sentence) and emphasised that this can easily be found in non-misleading tweets as well, as not everyone is capable of writing correctly. Again, ‘Incorrect spelling’ was easily comprehensible as an indicator, as all participants were fully able to explain what it means and what it refers to. However, there were mixed results regarding its usefulness, as seven participants for tweet 5 and five participants for tweet 3 fully or partly disagreed with it being a useful indicator to assess a tweet's credibility. Some highlighted the relevance of non-native speakers when considering spelling and punctuation or:

I wouldn't want to reconcile the content with the spelling. So the credibility doesn't get better because somebody has better spelling. So that would be a fallacy for me. So I can also believe a person who has a worse spelling. (P4)

While the previous indicators were overall very comprehensible without further explanation, ‘Hashtags’ and ‘Links’ were often not immediately self-explanatory. Many participants were unsure if it highlighted all ‘Links’ and ‘Hashtags’ within a tweet regardless of their content or quality or if it highlighted only suspicious ‘Links’ and ‘Hashtags’. As links to credible sources can be a highly relevant indicator of reliable content, it is crucial to more clearly nudge users to take a closer look at the link's quality and not make the mere appearance of links look suspicious. The same applies to hashtags. Still, the overall concept was rated rather positively and participants were able to understand and explain its meaning as an indicator easily after having seen an example in a previous tweet.

4.3.3. Comprehensibility and usefulness of rhetorical indicators

We were particularly interested in rhetorical indicators, as these were identified as highly relevant from existing literature and confirmed by us in Step 1, but were not consciously attended to at all by participants in Step 2. When taking a closer look at ‘Negative Phrases’, our findings reveal a high comprehensibility. For instance, all participants were fully able to explain what this indicator means in tweets 8 and 9, and only two participants slightly struggled to explain it within the other tweets. The usefulness of ‘Negative Phrases’ as an indicator to assess a tweet's credibility was perceived as high as well for 10 to 19 participants. Participants further noted, however, that this depends on the context of the actual tweet, leaving two to six participants rather critical.