ABSTRACT

Internet of Things (IoT) devices have become increasingly important within the smart home domain, making the security of the devices a critical aspect. The majority of IoT devices are black-box systems running closed and pre-installed firmware. This raises concerns about the trustworthiness of these devices, especially considering that some of them are shipped with a microphone or a camera. Remote attestation aims at validating the trustworthiness of these devices by verifying the integrity of the software. However, users cannot validate whether the attestation has actually taken place and has not been manipulated by an attacker, raising the need for HCI research on trust and understandability. We conducted a qualitative study with 35 participants, investigating trust in the attestation process and whether this trust can be improved by additional explanations in the application. We developed an application that allows users to attest a smart speaker using their smartphone over an audio channel to identify the attested device and observe the attestation process. In order to observe the differences between the applications with and without explanations, we performed A/B testing. We discovered that trust increases when additional explanations of the technical process are provided, improving the understanding of the attestation process.

1. Introduction

Due to their variety, scalability, and simplicity, Internet of Things (IoT) devices face many security challenges that are difficult to cope with (Alrawi et al. Citation2019; Hassan Citation2019; Zhang et al. Citation2014). For instance, the limited computing power of IoT systems impedes the execution of antivirus software (Zhang et al. Citation2014). Furthermore, the majority of IoT devices are equipped with closed and pre-installed firmware, which makes them black-box systems. As a consequence, the user has little assurance that the system is indeed performing its intended task and not performing malicious tasks (e.g. spying). Incidents such as the well-known Mirai botnet, which targeted consumer devices like routers and IP cameras, have demonstrated the large impact of attacks against vulnerable IoT devices (Kolias et al. Citation2017). Hence, it comes as no surprise that users have little trust in IoT devices, especially if these devices are equipped with microphones or cameras, like smart speakers or smart screens (Zeng, Mare, and Roesner Citation2017; Zheng et al. Citation2018).

One prominent research direction to increase trust and security of IoT devices is remote attestation (Ammar, Crispo, and Tsudik Citation2020; Carpent, Rattanavipanon, and Tsudik Citation2018; Conti, Dushku, and Mancini Citation2019; Dushku et al. Citation2020; Kuang et al. Citation2019, Citation2020). Remote attestation is a security service that allows a device, the verifier, to verify the integrity of a remote, untrusted device, the prover. To perform the attestation, the prover contains a self-measurement routine. Remote attestation is typically implemented using a challenge-response protocol: the verifier sends an attestation request to the prover, which performs a self-measurement and sends the result back to the verifier (Nunes et al. Citation2019). Remote attestation can provide different security guarantees, ranging from firmware integrity to more complex run-time properties such as control-flow (Abera, Asokan, Davi, Ekberg et al. Citation2016) or data-flow integrity (Abera et al. Citation2019; Kuang et al. Citation2020; Nunes, Jakkamsetti, and Tsudik Citation2021), thereby detecting attacks like persistent malware (Nunes et al. Citation2019), return-oriented programming attacks (Roemer et al. Citation2012), and even data-oriented programming techniques that do not alter the control flow (Hu et al. Citation2016). Remote attestation is an abstract concept. Its security relies on cryptographic protocols and functions, to which users are often oblivious. Existing research shows that users tend to discard or circumvent mechanisms they do not understand or trust (Angela Sasse and Flechais Citation2005). Users, however, are a critical part of the IoT ecosystem as they are responsible for the devices and their integrity. Many security mechanisms eventually rely on the user correctly using them, such as virus scanners, firewalls, and passwords (Furnell and Clarke Citation2012).
Today, many security risks in IoT devices already arise due to incautious users that utilise insecure or default passwords, do not apply updates, or configure devices insecurely (OWASP Citation2018).

However, so far, all remote attestation schemes have been black boxes, which can lead to misuse by users who do not understand and trust the system (backfire effect; Nyhan and Reifler Citation2010). Bringing the user into the context of remote attestation can increase trust in these security mechanisms. Therefore, HCI research is needed to design new usable security solutions. Recently, the first user-observable remote attestation scheme was presented (Surminski et al. Citation2023). The authors conducted a user study and reported good usability. However, this still raises the question of whether remote attestation can help to increase trust in IoT devices, e.g. by removing security concerns like the leakage of sensitive information to third parties (Babun et al. Citation2021; Mare, Roesner, and Kohno Citation2020). In particular, it has not been investigated how users treat compromised devices and devices that were formerly compromised but have been restored to a benign state. Hence, it remains largely unclear whether users trust the abstract cryptographic concepts of remote attestation schemes, raising the demand for usable security mechanisms.

In this paper, we investigate for the first time whether user-friendly and understandable techniques as well as transparency enhance users' understanding of and trust in remote attestation. Examples of user-friendly and understandable techniques are explanations that can be understood by non-experts and an easy-to-use application design. The concept of trust used in this study entails the users' trust in the correctness and effectiveness of the attestation process, as well as the users' trust that the attested device runs as intended and that no third-party privacy violations are possible. We aim to use transparency as an HCI intervention mechanism that informs the users about the attestation and enables them to comprehend the process and the promises of remote attestation. Therefore, our main research question is: ‘Does transparency enhance trust in remote attestation?’ We integrated an application of a recently developed user-observable remote attestation scheme (Surminski et al. Citation2023) into a custom-made demonstrator including a real smart speaker and evaluated it in a user study.

2. Background and related work

In this section, we provide background information on the topics of attestation and trust in IoT devices and present related research. We conclude by pointing out the research gap resulting from the presented related work.

2.1. Remote attestation

Remote attestation allows the verification of the integrity of an untrusted device (Aman et al. Citation2020). For example, a server can verify whether a client system runs an uncompromised version of a piece of software. The main problem in attestation is obtaining a trustworthy measurement of an untrusted device. Even if the system is completely compromised, this measurement must not be altered. To this end, the attested device requires logic to prove its integrity, which is why it is referred to as the prover, while the device verifying the reports of the prover is called the verifier (Aman et al. Citation2020). Depending on their architecture, attestation schemes fall into three categories: hardware-based, software-based, and hybrid attestation schemes (Abera, Asokan, Davi, Koushanfar et al. Citation2016). Hardware-based attestation schemes rely on a trusted computing base inside the untrusted device (Architecture ARM Citation2009; Costan and Devadas Citation2016; Kil et al. Citation2009; Parno, McCune, and Perrig Citation2010). Software-based attestation schemes rely on precise timing measurements to perform the attestation (Seshadri et al. Citation2004; Surminski et al. Citation2021). Hybrid attestation schemes combine hardware and software designs to perform trusted self-measurements (Brasser et al. Citation2015; Koeberl et al. Citation2014; Nunes et al. Citation2019). These attestation schemes were developed for a setting in which a trusted verifier attests one or more prover devices. Typically, remote attestation schemes employ a challenge-response protocol. The verifier starts the attestation by sending a nonce to the prover to ensure freshness and prevent replay attacks (Nunes et al. Citation2019).
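The challenge-response pattern described above can be illustrated with a minimal sketch. It is not the protocol of any cited scheme: the pre-shared key, the HMAC-based measurement, and all names (`measure`, `verify`, `FIRMWARE`, `SHARED_KEY`) are assumptions made for illustration. Real schemes additionally protect the measurement routine itself through a hardware trust anchor or strict timing checks, which this sketch omits.

```python
import hashlib
import hmac
import secrets

# Hypothetical firmware image and pre-shared key, for illustration only.
FIRMWARE = b"example firmware image v1.0"
SHARED_KEY = b"demo-key-not-for-production"


def measure(firmware: bytes, nonce: bytes, key: bytes) -> bytes:
    """Prover side: keyed digest over the fresh nonce and the firmware."""
    return hmac.new(key, nonce + firmware, hashlib.sha256).digest()


def verify(expected_firmware: bytes, nonce: bytes, key: bytes, report: bytes) -> bool:
    """Verifier side: recompute the digest from the known-good firmware."""
    expected = measure(expected_firmware, nonce, key)
    return hmac.compare_digest(expected, report)


# The verifier picks a fresh random nonce for every attestation run.
nonce = secrets.token_bytes(16)
report = measure(FIRMWARE, nonce, SHARED_KEY)
benign_ok = verify(FIRMWARE, nonce, SHARED_KEY, report)

# A modified firmware image produces a different report.
tampered_report = measure(FIRMWARE + b"malware", nonce, SHARED_KEY)
tampered_ok = verify(FIRMWARE, nonce, SHARED_KEY, tampered_report)

# An old report replayed against a new nonce is rejected.
replay_ok = verify(FIRMWARE, secrets.token_bytes(16), SHARED_KEY, report)
```

Because the nonce is part of the measured input, a report recorded during an earlier run cannot be reused for a later challenge, which is the freshness property mentioned above.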

For IoT devices, there exist many attestation schemes with different advantages. Since IoT systems often consist of many different devices, most existing remote attestation schemes lack the scalability to attest a network of IoT devices. Therefore, the Collective Remote Attestation (CRA) scheme was developed, which can perform the attestation of IoT networks (Ambrosin et al. Citation2020). Kuang et al. (Citation2019) propose a many-to-one remote attestation scheme that eliminates the need for a fixed verifier and thus reduces the possibility for an attacker to tamper with the attestation process. For the specific threat of roving malware, i.e. malware that relocates itself in memory, Aman et al. (Citation2020) developed an attestation scheme that can detect this malware. During most remote attestation schemes, the device must not be interrupted. This means that in order to attest a network of devices, all devices must interrupt their normal operation until the attestation is performed. In order to avoid such a network-wide interruption, Dushku et al. (Citation2020) developed an asynchronous attestation scheme allowing parts of the network to operate normally while another part of the network is being attested. Attestation is not only used for IoT devices but also, among other things, for hardware security tokens (HST). It was shown by Pfeffer et al. (Citation2021) that existing authenticity checks have usability issues that cause users to base their trust on ineffective security features such as visual damage to a product. They suggested using combinations of automation and user interaction to improve the security of attestation procedures.

2.2. Trust in IoT

Trust in IoT devices is essential since they are gaining importance in the everyday lives of many people. Smart light bulbs, for example, create easily adjustable ambient light, smart speakers that can be controlled by voice make it easy to adjust the music playing in the background, and smart thermostats allow users to control the temperature at home from anywhere at any time. However, IoT devices are notoriously insecure (Alrawi et al. Citation2019). Many prominent examples, such as insecure security cameras (Seralathan et al. Citation2018), attacks on smart speakers (Yan et al. Citation2022), and large-scale attacks such as the Mirai botnet (Kolias et al. Citation2017) have customers worried about the security of home IoT devices (Lafontaine, Sabir, and Das Citation2021; Nemec Zlatolas, Feher, and Hölbl Citation2022; Zubiaga, Procter, and Maple Citation2018). Users are also worried that sensitive or personal information could be leaked to third parties (Asplund and Nadjm-Tehrani Citation2016; Babun et al. Citation2021; Mare, Roesner, and Kohno Citation2020; Park, Kim, and Jeong Citation2018). Furthermore, IoT devices are designed as black-box systems to the user (Zeng, Mare, and Roesner Citation2017) and are used as is, which is why users do not have control over the software running on the devices. Research showed that security, besides other factors, is an important aspect for people in order to trust an IoT device (AlHogail Citation2018). The generally positive attitude of users towards using an IoT device (Korneeva, Olinder, and Strielkowski Citation2021) can then be affected by this trust (Alraja, Farooque, and Khashab Citation2019) as well as the complexity associated with the usage (van Deursen et al. Citation2021). Recent work has also shown that many users are unaware of the privacy risk posed by devices that do not have a camera or microphone (Zheng et al. Citation2018) as well as IoT devices in general (Emami-Naeini et al. Citation2021; McDermott, Isaacs, and Petrovski Citation2019; Oser et al. Citation2020; Remesh et al. Citation2020), leading to incomplete threat models (Abdi, Ramokapane, and Such Citation2019; Gerber, Reinheimer, and Volkamer Citation2018). It was also shown that users often express low privacy concerns (Nemec Zlatolas, Feher, and Hölbl Citation2022; Williams, Nurse, and Creese Citation2017) even when using applications which pose security and privacy threats (Park, Kim, and Jeong Citation2018; Saeidi et al. Citation2021). This is amplified by the fact that many owners of smart home devices do not fully understand their own data practices (Alshehri et al. Citation2022; Oser et al. Citation2020; Williams, Nurse, and Creese Citation2017). Consequently, while users see privacy and security risks with devices that have built-in cameras or microphones, they often trade them for functionality (Abbott et al. Citation2022; Remesh et al. Citation2020; Zeng, Mare, and Roesner Citation2017) or availability (Asplund and Nadjm-Tehrani Citation2016; Williams, Nurse, and Creese Citation2017). This shows the need for solutions that increase the privacy and security of users but minimise the costs of using them (Abbott et al. Citation2022; Edu, Such, and Suarez-Tangil Citation2020). In particular, it was shown that these solutions need to establish not only privacy and security but also trust in the devices (Aldowah, Rehman, and Umar Citation2021).

2.3. Research gap

Trust in an IoT device plays an important role during the adoption process (AlHogail Citation2018; Menard and Bott Citation2020). Since security is an integral aspect for people in order to trust an IoT device, mechanisms which increase the security of IoT devices are needed. A key part of security responsibility lies with user awareness, as careless behaviour can lead to security breaches (Mahmoud et al. Citation2015). Consequently, HCI research towards aiding user security awareness and behaviour in dealing with IoT is necessary. While there are mechanisms enhancing the security of consumer IoT devices (Aman et al. Citation2020; Ambrosin et al. Citation2020; Dushku et al. Citation2020; Kuang et al. Citation2019), there is also a need for mechanisms that are easy for end users of the devices to use (Lafontaine, Sabir, and Das Citation2021). Here, one way to improve the security of IoT devices is remote attestation. A problem with many traditional remote attestation concepts is that the remote attestation process is completely nontransparent to the user. The user is neither part of the attestation process nor able to observe it, and thus often has an incomplete threat and security model (Oser et al. Citation2020; Zeng, Mare, and Roesner Citation2017). Previous work showed that it is possible to make remote attestation user-observable (Surminski et al. Citation2023). However, it has yet to be shown that this increases trust in the process. Therefore, we investigate whether users trust the attestation process and explore whether making it more transparent can further improve the trust in remote attestation. Furthermore, we examine another important aspect of remote attestation, namely how to deal with a compromised device. Remote attestation by design can only detect a compromise but does not specify how to react to it.
In end-user scenarios, it is therefore relevant to determine whether users trust the attestation and whether they trust benign – but formerly compromised – devices.

3. Attestation application

In this section, we present the attestation application used for our study. Based on a remote attestation scheme (Surminski et al. Citation2023), we built an application that can be used to simulate the attestation process of a smart speaker. We chose a smart speaker because these devices are widely used and have built-in microphones, which pose privacy risks but enable attestation via an audio side channel.

3.1. User-observable attestation

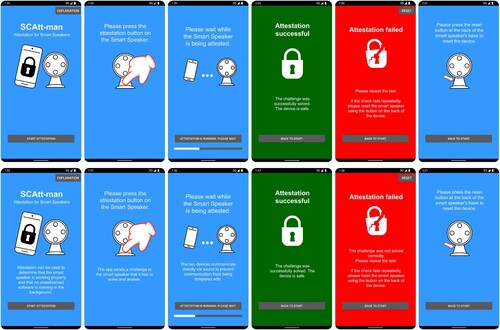

SCAtt-man is a remote attestation solution specifically designed for IoT devices (Surminski et al. Citation2023). To solve the problem of lack of device authentication in software-based attestation and ensure reliable one-hop communication, side channels such as sound and light are used. In SCAtt-man, the missing device authentication is replaced by the user. By using user-observable communication channels, the user can monitor the attestation process and identify the device currently being attested. Figure 1 shows a depiction of this process. To perform the attestation, users can use their smartphones with an attestation app. For practical usage, this functionality could potentially also be integrated into the configuration apps already used for smart speakers, such as the Amazon Alexa app. The app guides the user through the attestation process. First, the user presses the attestation button on the device they want to attest. Then, the app performs the attestation and asks the user to wait. After completing the attestation, the app informs the user about the result. If the attestation fails, the app shows the user how to resolve the problem. A failed attestation can have different reasons: either there has been a communication failure, in the audio communication or the network transmission, or the device has actually been compromised. To rule out transmission problems, the user can repeat the attestation process. After multiple failures, the user can resolve a compromise by resetting the device to factory settings and repeating the attestation process to verify the device's integrity. In an initial user study, it was shown that SCAtt-man has good usability and people trust the attestation (Surminski et al. Citation2023). However, the prototype only had limited smart speaker functionality as the focus was on the development of the attestation scheme.
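The guidance given to the user after each attestation run can be summarised as a small decision function. The following is a sketch under assumptions, not the app's actual logic: the names `Advice` and `next_step` are hypothetical, and the threshold of three failures is a placeholder, since the description only speaks of "multiple failures".

```python
from enum import Enum


class Advice(Enum):
    TRUSTED = "device passed attestation"
    RETRY = "repeat the attestation"
    RESET = "reset to factory settings, then attest again"


# Hypothetical threshold; the text only says "after multiple failures".
MAX_FAILURES_BEFORE_RESET = 3


def next_step(results: list[bool]) -> Advice:
    """Map the history of attestation results (True = passed) to advice."""
    if results and results[-1]:
        return Advice.TRUSTED
    # Count the failures since the last successful attestation.
    consecutive_failures = 0
    for passed in reversed(results):
        if passed:
            break
        consecutive_failures += 1
    if consecutive_failures >= MAX_FAILURES_BEFORE_RESET:
        return Advice.RESET
    # A single failure may be a transmission error, so try again first.
    # An empty history also ends up here, i.e. the attestation should be run.
    return Advice.RETRY
```

A failure followed by a success after a reset, e.g. `next_step([False, False, False, True])`, yields `Advice.TRUSTED`, mirroring the step in which the repeated attestation verifies the restored device.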

3.2. Smart speaker for attestation

To tackle the problem of limited functionality, we re-implemented the SCAtt-man attestation scheme on a state-of-the-art smart speaker. As smart speakers such as Amazon Alexa and Google Home are closed systems, a full implementation of SCAtt-man was not possible, as this requires full access to the hardware and software. Therefore, the only solution was to imitate the behaviour of the SCAtt-man attestation scheme.

We developed a smart speaker application for the Amazon Alexa voice assistant that resembles the SCAtt-man attestation process. Amazon Alexa is the most popular smart speaker with a market share of 28.3% (Business Wire Citation2021). Amazon Alexa offers a wide range of services and keywords and can be used for daily tasks, such as setting timers and alarms, creating shopping lists, telling jokes, or playing music. Many IoT devices allow integration so that Amazon Alexa can be used to control smart homes with voice commands. It can also be used for more complex tasks such as online shopping. Amazon Alexa allows third-party apps and integrations. These apps are called skills and can further extend the functionality of Amazon Alexa. Our implementation consists of three parts: (1) An Alexa skill to implement the attestation behaviour of the smart speaker; (2) The installation of the attestation app on the smartphone running Android 13; (3) A coordination service to allow communication between the app and the skill.

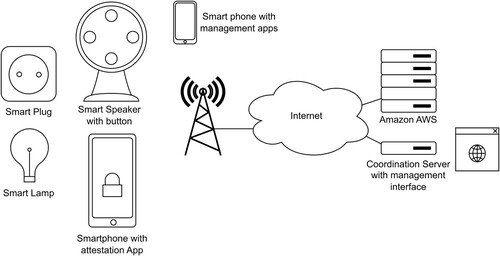

Figure 2 shows the architecture of our setup. For the smart speaker, we used a standard Amazon Echo Dot. We built a smart home setup with a smart lamp and a smart plug. To start the attestation, we required a button. For this, we used a Flic button that communicates over Bluetooth LE using a companion app. We used a hardware button to trigger the attestation because we wanted the attestation feature to be easily accessible to the users. Since we investigate user-observable attestation, it would be detrimental to hide the attestation feature inside a menu. A voice command would be an alternative, but in a real-world scenario, this would require every user to read the manual to find this function. We ran the app for the Flic button as well as all other management apps on a dedicated Android smartphone to separate these apps from the user. We designed a custom 3D-printed stand for the Echo Dot and the Flic button to provide the look and feel of a complete, professional device. shows a photo of the final setup. Furthermore, we enhanced the case with a reset button to perform a reset process during the user study. The Alexa skill, like any other Alexa skill, was deployed to Amazon AWS. It was not possible to implement actual audio communication between smartphone and smart speaker due to limitations in audio processing in the Alexa API. We, therefore, developed a coordination service that allows coordination between the app and the Alexa skill. This setup allows straightforward usage anywhere as long as Internet connectivity is available. We implemented this service using PHP and an SQL database. This service also has a dedicated web interface to allow the experiment supervisor to influence the attestation result (success/failure) and to activate the extended explanations in the smartphone app, thereby allowing simple control of the complete experiment.
We modified the smartphone app so that instead of audio communication it uses our coordination server to start the attestation process. Furthermore, it was extended with explanations. These explanations as well as the result of the attestation can be controlled by the coordination service. The setup for our user study is described in detail in Section 4.1.3.
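The role of the coordination service can be sketched as follows. The actual service was implemented in PHP with an SQL database; the Python class below is an in-memory stand-in, and its name, methods, and status strings are hypothetical and chosen only to illustrate how the supervisor, the smartphone app, and the Alexa skill could share state.

```python
from dataclasses import dataclass, field


@dataclass
class CoordinationService:
    """In-memory stand-in for the coordination service (hypothetical API)."""

    forced_result: bool = True        # attestation outcome set by the supervisor
    show_explanations: bool = False   # toggles the transparent app version
    sessions: dict[str, str] = field(default_factory=dict)

    def configure(self, result: bool, explanations: bool) -> None:
        """Supervisor web interface: choose outcome and app version."""
        self.forced_result = result
        self.show_explanations = explanations

    def start_attestation(self, device_id: str) -> dict[str, bool]:
        """Called by the smartphone app in place of audio communication."""
        self.sessions[device_id] = "running"
        return {"explanations": self.show_explanations}

    def skill_status(self, device_id: str) -> str:
        """Queried by the Alexa skill to decide whether to play its tones."""
        return self.sessions.get(device_id, "idle")

    def finish_attestation(self, device_id: str) -> bool:
        """Ends the run and returns the outcome the supervisor configured."""
        self.sessions[device_id] = "idle"
        return self.forced_result
```

In this sketch, a supervisor preparing the failing device would call `configure(result=False, explanations=True)` before the participant starts the attestation, so that the app shows the extended explanations and ends on the failure screen.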

3.3. Application design

We modified the smartphone application (Surminski et al. Citation2023) to make it work with our simulated attestation process.

3.3.1. Layout

In , the different screens of the application for different steps in the attestation process are shown. On the start screen, the user sees the application logo and two buttons. After clicking the explanation button, a pop-up window is shown which explains the attestation process using the following text:

Attestation is used to check the security of devices. To do this, you send a device a task that it must solve and return the result. If the result is correct, the device is safe. However, how can you get a reliable reading from a hacked device? After all, if there is a virus on the device, it can influence the measurement and claim that everything is OK. In order to prevent this, the device is sent a mathematically so complex task that the capacities of the device are fully utilised by it. In this way, any additional load caused by malicious code is noticed immediately. That is why the measurement takes a few seconds. However, it is necessary to prevent the device from forwarding the task to another device, on which the solution for the task could be calculated. Therefore, during the scan, the device is automatically disconnected from the Internet and communication is performed over sound. This way you can tell which device is responding and no virus can forward the task.

The other button starts the attestation process. After it is clicked, a screen with instructions on how to start the attestation process on the smart speaker is displayed. The instruction is supported by a picture indicating where the attestation button is located on the smart speaker. After clicking the attestation button on the smart speaker, the simulated audio communication is started. First, the smartphone emits a sequence of tones, then the smart speaker sends a sequence of tones. During this process, the user sees a picture indicating the communication and a text saying that the user should wait until the communication and thus the attestation is done. The progress of the attestation is visualised by a progress bar at the bottom of the display (see third picture in ). When the attestation process is completed, the user is shown the result of the attestation. In case of a successful attestation, the user observes a green result screen saying that the attestation was successful (see fourth picture in ). The ‘failed’ screen in red colour informs the user that the attestation may have failed either because the device is compromised or because of a transmission error (see fifth picture in ). In this case, it is recommended to the user to conduct the attestation again. For this purpose, the user can get back to the start screen by clicking the respective button. If the attestation repeatedly fails, the user should reset the smart speaker by pressing the reset button. A button in the top left corner of the ‘failed’ screen can be pressed to get further information on where the reset button is located (see sixth picture in ).

Besides the standard version of the application, we also developed a transparent version of the app (cf. bottom row). This application consists of the same screens as the standard version but with additional explanations. At each step, additional explanations are displayed describing what is done in the background to inform the users about the mechanism behind the attestation. Solely the success screen and the screen providing explanations on the location of the reset button were not altered. We developed this version of the application to be able to examine whether additional explanations can improve the understanding of and trust in the attestation process.

4. Qualitative evaluation

In this section, we present the design of our study on user-observable remote attestation and the results from the 35 interviews we conducted.

4.1. Methodology

As mentioned in Section 1, the main research question we aim to address is: ‘Does transparency enhance trust in remote attestation?’ In order to answer this question, it is necessary to understand how trust is generated. We investigate trust in two dimensions: the trust users place in the correctness of the attestation and the trust users place in their devices. Literature indicates that users have less trust when confronted with a black-box system or when they do not understand how their data is processed (Alshehri et al. Citation2022; Zeng, Mare, and Roesner Citation2017). In other words, users are more likely to trust concepts that they can comprehend. Therefore, understanding a concept is key to building trust. As related work shows (Zeng, Mare, and Roesner Citation2017), software systems are often black-box systems, which makes it hard to understand how a mechanism works. We aim to make the internal functionality transparent to end users in order to support their comprehension of security mechanisms. However, literature (Bawden and Robinson Citation2020) shows that too much information can cause information overload and confuse the user, which would be detrimental to trust. From this, we derive our first sub-question: ‘How does transparency affect the users’ understanding of remote attestation?’

In this study, we use two approaches to provide the users with transparency: First, we provide users with two different versions of the app: a version providing just the basic functionality, serving as a control group, and a transparent version which contains information on what is happening during each step. Other researchers showed that too much information can lead to information overflow and thus contradict our aim of educating the users about security mechanisms. Therefore, we chose to add small text in every step of the attestation process, explaining what is happening at the moment. Due to space limitations, we only used short text, which should not be overwhelming for users. The investigation of abstract concepts like understanding and trust is prone to biases such as the acquiescence bias (Moss Citation2016). To combat this, we use a two-fold approach: We construct questionnaire items (see ) to measure the self-reported understanding of the participants and secondly, we interview the participants after the interaction with the demonstrators and ask them to reiterate how they think the attestation works. We then can compare the self-reported understanding with their ability to reiterate the process in the experiment. This way we can limit biases and validate the self-reported understanding to get a more detailed impression of the actual understanding of the participants. Questionnaire items B1 (I understood how the app works) and B2 (I understood how the process behind attestation works) investigate the degree of understanding of the process of remote attestation. We decided to split these items in two, because participants could confuse the usage of the app with the theory behind the process if not asked for both separately. All items are presented with a 6-point Likert scale and a ‘no answer’-option. To answer the first sub-question for the first transparency condition, we compare the derived level of understanding of both groups of the A/B testing.
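The comparison of self-reported understanding between the two groups can be illustrated with a short sketch. The responses below are made-up placeholders, not data from the study, and the use of the median as the summary statistic as well as the names `responses` and `item_median` are assumptions for illustration. The sketch mainly shows how ‘no answer’ responses can be excluded before comparing items B1 and B2.

```python
from statistics import median
from typing import Optional

# Placeholder responses on the 6-point Likert scale (None = 'no answer').
# These values are invented for illustration and are NOT study data.
responses: dict[str, dict[str, list[Optional[int]]]] = {
    "standard": {"B1": [5, 6, None, 4], "B2": [3, 4, 2, None]},
    "transparent": {"B1": [6, 5, 5, 6], "B2": [5, None, 4, 5]},
}


def item_median(values: list[Optional[int]]) -> Optional[float]:
    """Median of the answered responses; None if nobody answered."""
    answered = [v for v in values if v is not None]
    return median(answered) if answered else None


# One summary value per group and questionnaire item.
summary = {
    group: {item: item_median(vals) for item, vals in items.items()}
    for group, items in responses.items()
}
```

Such a summary only covers the self-reported side; as described above, it is meant to be read alongside the participants' ability to reiterate the process in the interview.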

For the second approach, we embedded an explanation into the app (cf. Section 3.3). On the title screen, users could use the ‘explanation’-button in the top right corner to read the explanation text on how the remote attestation works. This text describes the process behind the attestation in an easily accessible way and was designed for users who would like to learn more about the process. Similar to the first examination, we investigated the effect of reading the explanation text on the understanding of the attestation process by utilising the combined approach of questionnaire items and interview to compare the understanding between the participants who read the explanation text and those who did not.

Since we are investigating whether transparency improves understanding, we also have to investigate whether understanding leads to more trust in order to answer our main research question. From this, we derive our second sub-question: ‘How does the users’ trust change by gaining a deeper understanding of remote attestation?’

To investigate this, we interviewed the participants about their prior experience with smart speakers and how they perceived the attestation process. For this purpose, we utilised the guiding questions from our interview guideline (‘Do you use smart home devices at home?’; ‘How does attestation change your interaction with smart home devices?’; ‘How well were you able to understand how the attestation process works? (Please reiterate in your own words how you think the attestation works.)’). Depending on their reports on prior knowledge, as well as their ability to reiterate the process, we validated whether key features such as audio transmission were understood and whether this understanding increased the participants' trust in the attestation.

Finally, to test the trust in remote attestation, we have to consider the case of a compromised device. Therefore, our third sub-question is: ‘Do users trust formerly compromised devices that have been restored and verified with remote attestation?’

To answer this, we use two smart speakers in our setup. The user interacts with both, but the first one that is attested is set to pass the attestation, while the second one is set to fail. The participant then resets the failing device and repeats the attestation. In the interview, we investigate with our guiding questions (see Appendix A.1) whether the users would change their behaviour towards the formerly compromised device and which role the attestation process plays in their answer.

4.1.1. Ethical considerations

Since we are gathering personal and personally identifiable information from our participants, we consulted our university's ethics committee and obtained its approval (date of approval and approval number deleted for anonymization). To inform the participants about the aims of the study and the data to be collected, we provided an informed consent form. Following the guidelines of the ethics committee, the form contained information on how the data would be processed, when it would be deleted, and whom to contact to withdraw consent. Personal data collected from the participants were age, gender, education degree, and their IBAN to be able to transfer the compensation of 20. The consent form was mailed to the participants before the evaluation to allow them to read it unaffected by a study situation in which it would be more difficult to reject the terms of the consent form. Before each session, participants were given a hard copy of the consent form, and they were given time to review and sign the document. At the end of the study session, the participants were informed that the attestation process was simulated and no actual attestation was performed. The reason for this is that in our evaluation scenario, one device had to appear compromised. Since we consider it unethical to use real malware in this evaluation setting, we chose to simulate the attestation results. After this debriefing, we asked the participants whether the simulation would change their view on the attestation process. None of the participants stated that it would change their opinion on the attestation process, while some even stated that they expected something like this since they were in a study setting. We concluded each session by asking the participants to keep the content of the study confidential, because the usability evaluation would be rendered useless if other participants knew in advance how to use the app. 
Explaining why it is important not to disclose the study content to other participants proved successful: multiple participants told us that they had asked earlier participants what to expect, but were turned down because of our request.

4.1.2. Participants

We recruited 35 participants for our study from the university environment by advertising our study on campus and providing a QR code linking to a self-registration page for the available time slots. The ages of the participants ranged from 20 to 42 years, with a mean age of 24.6 years, a standard deviation of 4.07, and a median of 23 years. Nine of them identified as female, 26 as male. Although the subject areas of the participants covered a wide variety of topics, the recruitment led to a bias in the sample due to the mostly young and educated participants (cf. Appendix A.2, Table A1). However, this participant group is similar to the main user group of smart speakers. Of interest for our study is that the participants had different levels of experience with smart devices (cf. Appendix A.2, Table A2). Thus, we can examine differences between users who have experience with smart speakers and those who do not.

Table A1. Demographic information on the participants.

Table A2. Prior experience of the participants with smart devices on a scale from 1–6 (Fully Disagree – Fully Agree).

4.1.3. Materials

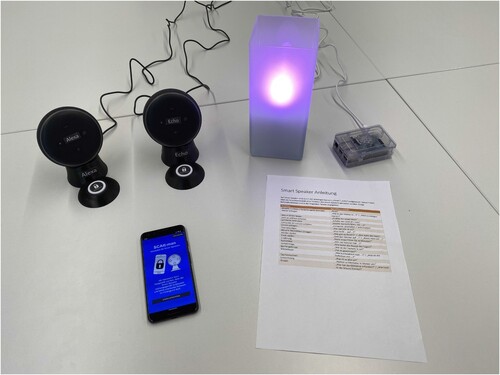

For our study, we built a setup that allowed the participants to get used to a smart speaker and to compare the behaviour of compromised and non-compromised smart speakers. The setup consists of the two Amazon Echo Dots with stands described in Section 3.2, a lamp with a smart light bulb, and a smart plug connected to a Raspberry Pi to show the function of turning devices on and off via a smart speaker. In this way, we simulated a smart home environment. A picture of the evaluation setup is shown in the figure above. Both smart speakers were required for our study, because in our scenario, one of the smart speakers was compromised, i.e. its simulated attestation would fail. Thus, we could examine how a user reacts when finding out that one smart speaker is compromised. Additionally, we were able to observe and ask the participants how much trust they have in the compromised smart speaker and how that changes when the smart speaker is reset. After a press on the reset button, the attestation would succeed because the simulated malware would be removed by resetting the device. We provided the participants with a laptop to fill out the questionnaires for our study, which we prepared in a self-hosted instance of LimeSurvey.
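The simulated behaviour of the two speakers can be sketched in a few lines of Python. This is only an illustration of the scenario logic described above, not the code used in the study: the class `SimulatedSpeaker`, the function `run_scenario`, and the speaker names are hypothetical, and the attestation result is derived from a flag rather than from any real integrity check.

```python
from dataclasses import dataclass


@dataclass
class SimulatedSpeaker:
    """Hypothetical stand-in for one smart speaker in the study setup."""
    name: str
    compromised: bool = False

    def attest(self) -> bool:
        # Simulated result only: no real integrity check is performed.
        return not self.compromised

    def reset(self) -> None:
        # Pressing the reset button removes the simulated malware.
        self.compromised = False


def run_scenario() -> list[tuple[str, bool]]:
    """Replay the scripted sequence: pass, fail, failed retry, reset, pass."""
    first = SimulatedSpeaker("speaker-1")
    second = SimulatedSpeaker("speaker-2", compromised=True)

    log = [(first.name, first.attest())]        # first attested device passes
    log.append((second.name, second.attest()))  # second device fails
    log.append((second.name, second.attest()))  # a retry fails as well
    second.reset()
    log.append((second.name, second.attest()))  # passes after the reset
    return log


for name, passed in run_scenario():
    print(name, "passed" if passed else "failed")
```

Modelling the compromise as a single flag mirrors the fact that the outcome in the study depended only on the order of attestation and on whether the reset button had been pressed.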

4.1.4. Procedure

The study was conducted between 18 January 2023 and 31 January 2023. Each session consisted of several parts which were completed by a single participant in about 30 to 50 minutes. In the beginning, we asked participants for demographic information and four questions about their experience with smart speakers (cf. Appendix A.2). All participants were given a short introduction, explaining the scenario and telling them about their tasks. Afterward, the participants were asked to interact with both smart speakers. In order to know what they could do with the devices, a handout with possible actions was provided. The described actions were regular smart speaker commands such as setting a timer or telling a joke. The actions we included to simulate a smart home environment were turning on and off a lamp, changing its colour, and activating a connected device (a Raspberry Pi in our setup) via a smart plug. After the phase of familiarising with the smart speakers, users were asked to perform an attestation. We alternated the versions of the app for each participant, i.e. every participant had either the transparent version or the standard version. Initially, we assigned the app versions alternately to the time slots for the study, but when some participants did not show up, we alternated the app versions in the order of appearance. In the study scenario, the participant chooses one of the smart speakers to be attested. This first attestation succeeds and the participant is asked to attest the second smart speaker. When attesting this second device, the attestation fails. On the ‘fail’ screen, the participant is informed about the next steps. The first recommendation is to retry the attestation in case of a transmission error. When the attestation fails repeatedly, the participant should reset the device using the button on the back of the device. After resetting the device, the participant is asked to attest the device again. 
This time, the attestation succeeds showing the successful reset of the smart speaker. After testing the attestation app, the participants answered the UEQ-S (Schrepp, Hinderks, and Thomaschewski Citation2017) giving us information about the user experience when using the app. Additionally, the questionnaire contains questions about the understanding of the process (see Appendix A.3.2). We continued with a semi-structured interview for which we prepared some guiding questions to get more information about the users' perceptions. The study material can be found in Appendix A.3.
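The alternating assignment of app versions in order of appearance can be illustrated with a short Python sketch. The function name `assign_versions`, the participant labels, and the choice of which version comes first are assumptions made for illustration; the procedure above does not state which version the first participant received.

```python
from itertools import cycle


def assign_versions(arrival_order, versions=("transparent", "standard")):
    """Alternate the app versions over participants in order of appearance.

    Which version is handed out first is an assumption of this sketch.
    """
    return {pid: version for pid, version in zip(arrival_order, cycle(versions))}


# Hypothetical participant labels in the order in which they showed up.
assignment = assign_versions(["A", "B", "C", "D", "E"])
for pid, version in assignment.items():
    print(pid, version)
```

Assigning by order of appearance rather than by booked time slot keeps the two groups balanced even when some participants do not show up.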

4.2. Results

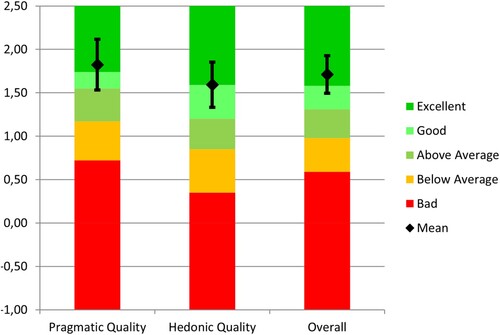

Since we used a smartphone application to evaluate the attestation mechanism, we wanted to ensure that the usability of our application was high enough to not influence our results. For this reason, we used the UEQ-S questionnaire. The results (see the figure above) show that our application has a good to excellent usability and thus does not influence our evaluation negatively.

4.2.1. Measuring understanding based on the app version

In order to examine the effects of the app version on the understanding of the attestation process, we analysed the Think-Aloud study. There, we found evidence that many participants with the nontransparent application asked for more explanations on what to do. Participant P1 asked, for example: ‘Are there more explanations?’ Similarly, P3 told us that we should implement an explanation in the app. P5, P13, and P28 asked for more information on ‘Why should I do the attestation process?’, which shows that the meaning behind attestation was not understood. Some participants requested to be able to follow individual process steps better. For example, P9 wished ‘to see what the application tests at the moment’ and said that ‘there were no explanations what I have done or how the attestation checks that the system is secure’. Similar comments were made by P16, who asked for information ‘that explain what is happening and why’ and stated that ‘I could see that something is happening but not what exactly happens’ and P28 remarked ‘What is happening in the background and what do the tones mean?’. Confusion was also expressed by P10, who stated: ‘Normally I am no fan of tutorials but in this case, a more obvious explanation would be great’. P25 admitted ‘I only have a vague guess’ about what is happening during the attestation process and wished for ‘a more transparent overview of the methodology of the attestation’. P30 said ‘I have not understood what the application does’ and wished for ‘small pieces of information on every screen’, which describes our transparent version. P34 described the missing information as a reason for lack of trust in the process, as he is ‘not as convinced as he would be when having a technical explanation’. P14 even concluded that the process was ‘not at all’ comprehensible.

On the other hand, participants who received the transparent version of the application but did not read the explanatory text also asked for more information. Here, P4 stated: ‘I don't know what I did or what the background behind this process is.’ and P29 said ‘I think I did not understand well how this works’. P12 reflected on the fact that the explanation button was not initially seen or used, and thus the attestation process could not be comprehended. More generally, P27 wished to have more information on why attestation should be used and explained that he knew what to do because of the texts provided by the transparent version, but could understand the process ‘only a little bit’. The type of information requested can be categorised into more general information on the attestation process, and technical details for further information on the topic. One participant (P1) asked for both. Six of the participants with the standard version and four of the transparent version asked for general information on the process. Nine participants with the standard version and eight with the transparent version asked for further technical details.

We measured the understanding of the process in two different ways: the self-rating of the participants using questionnaire item B2 (‘I understood how the process behind attestation works’) and the interview question asking them to describe the process in their own words. Regarding questionnaire item B2, participants with the transparent version answered with a total score of 71, which is a mean score of 3.94 (4 = ‘Slightly Agree’), whereas the participants with the nontransparent version answered with a total score of 59, resulting in a mean score of 3.47 (3 = ‘Slightly Disagree’). When the participants were asked in the interview how well they could comprehend the attestation process, there was a slight difference between the participants with the transparent and the nontransparent version. While those who had little to no understanding at all were roughly evenly distributed among the versions (6 transparent; 8 nontransparent), those who were able to freely reiterate how the process works, which we considered high understanding, were more likely to have used the transparent version (8 transparent; 2 nontransparent). Participants with medium understanding were considered as those who had a general idea but could not explain the process in detail (4 transparent; 7 nontransparent). We also found discrepancies between the self-rating and the observed understanding of some participants: P4, P10, P14 and P29 claimed to understand the process in the questionnaire, but could not explain it in the interview. On the other hand, P19 underestimated her understanding in the questionnaire.
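As a consistency check, the reported mean scores can be recomputed from the total scores and the group sizes. The sketch below assumes that every participant falls into exactly one of the three understanding levels, so that the group size is the sum of the reported counts (18 transparent, 17 nontransparent); the variable and function names are illustrative.

```python
# Understanding levels observed in the interviews, per app version.
level_counts = {
    "transparent": {"low": 6, "medium": 4, "high": 8},
    "nontransparent": {"low": 8, "medium": 7, "high": 2},
}

# Total scores for questionnaire item B2 (scale 1-6), per app version.
b2_totals = {"transparent": 71, "nontransparent": 59}


def group_size(version: str) -> int:
    # Assumption: each participant is counted in exactly one level.
    return sum(level_counts[version].values())


def mean_b2(version: str) -> float:
    return b2_totals[version] / group_size(version)


for version in level_counts:
    print(f"{version}: n={group_size(version)}, mean B2={mean_b2(version):.2f}")
# transparent: n=18, mean B2=3.94
# nontransparent: n=17, mean B2=3.47
```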

4.2.2. Measuring understanding based on the explanation text

For the examination of the influence of the explanation text on the understanding of the attestation process, we analysed the interviews and compared the results to the self-reported understanding in the questionnaire. One remarkable finding is that some users who did not read the explanation wished for an explanation. P4 said that it would be great if he could read somewhere about how the process works. And P5 objected to the missing ‘?’-button for an explanation of functions. Similarly, P16 wished for an ‘i-button which explains what is happening and why’. P33 liked that the app ‘is very simple’, but would like ‘to have a possibility to get more information’.

The positive influence of the explanations on the understanding of the attestation process was confirmed by several participants. For example, P11 read the explanation and reflected after conducting the attestation that before reading the explanation, he was not sure whether the attestation would work. This was confirmed by P21 who said ‘You could see that something is happening but not what exactly happens; with the explanations, it became clear what happens’. P15 said that the process could be well comprehended and ‘I have a plan [of what is happening]’. P17 elaborated that ‘there was an explanation button’ and that the process was ‘explained well’. In addition, the suitability even for technology non-experts was noted. In this regard, P17 elaborated that the process ‘seems to be plausible, also for people who have not a high affinity for technology’ and it is ‘quite foolproof’. P18 explained that the process could be comprehended well and that ‘the idea could be understood’. P19 said that ‘the explanation was very good, short but detailed and not overloaded’ and ‘I could follow it [the explanation] as a student who does not study computer science’. P32 stated ‘I think the explanation is good. I am not so familiar with the devices. I study politics. I think it is well explained for people who know almost nothing [about IT-Security].’ P22 who only read the explanation after conducting the attestation (using the nontransparent application) said ‘I would have understood the process better if I read the explanation first’. P23 confirmed ‘it was nice that there was an explanation; without that, I would have pushed a button without knowing what was happening’. Thus the process could be ‘100% comprehended’. P35 said ‘I understood how it works; the explanation was fairly clear’. Several other participants (P15, P17, P18, P35) concluded that because of the explanations, the process as well as the general idea behind the process could be well comprehended.

Similar to the analysis of the app version's impact, we analysed those who asked for more information in the app. Out of ten participants who requested general information on the process, only one read the explanation, while nine did not. Among the 17 who wanted further technical details on the topic, eleven read the explanation and six did not. Regarding questionnaire item B2 (‘I understood how the process behind attestation works’), participants who read the explanation answered with a total score of 74, which is a mean score of 4.63 (5 = ‘Agree’), whereas the participants who did not read the explanation answered with a total score of 56, resulting in a mean score of 2.95 (3 = ‘Slightly Disagree’). When the participants were asked in the interview how well they could comprehend the attestation process, there was likewise a clear difference between the participants who read the explanation and those who did not. In total, 16 participants read the explanation and 19 did not. Thirteen of those who had little to no understanding at all did not read the explanation, while only one of the participants who read the explanation could not comprehend how the attestation process worked. Furthermore, six of those who did not read the explanation and five of those who read it had a medium understanding of the process. Most notably, ten of those who read the explanation were able to freely reiterate how the process worked, while none of those who did not read the explanation were able to do so.
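The counts above form a small cross-tabulation of reading behaviour against understanding level, which can be summarised as shares per group. The following sketch only rearranges the reported numbers and adds no statistical test; the names `counts`, `b2_totals`, and `summarise` are illustrative. The mean is rounded half up with `decimal`, because Python's built-in `round` would turn 74/16 = 4.625 into 4.62.

```python
from decimal import Decimal, ROUND_HALF_UP

# Understanding levels from the interviews, split by reading behaviour.
counts = {
    "read": {"low": 1, "medium": 5, "high": 10},
    "not_read": {"low": 13, "medium": 6, "high": 0},
}

# Total scores for questionnaire item B2 (scale 1-6), per group.
b2_totals = {"read": 74, "not_read": 56}


def summarise(group: str):
    """Return group size, share of each understanding level, and mean B2."""
    n = sum(counts[group].values())
    shares = {level: c / n for level, c in counts[group].items()}
    mean = (Decimal(b2_totals[group]) / Decimal(n)).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP
    )
    return n, shares, mean


for group in counts:
    n, shares, mean = summarise(group)
    pretty = ", ".join(f"{level} {share:.0%}" for level, share in shares.items())
    print(f"{group}: n={n}, mean B2={mean}, {pretty}")
```

In this tabulation, 10 of the 16 readers (62.5%) reached high understanding, whereas 13 of the 19 non-readers (about 68%) showed little to no understanding.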

4.2.3. The connection of trust and understanding

Please note that the evaluation of the perceived trust and the understanding is indirectly affected by the two different app versions and by whether the explanation was read, since these conditions affected the understanding. In the following, we will not distinguish between the versions since these effects are already covered above.

General trust issues with companies were brought up by several participants: P12 said: ‘I lose the control over my data and do not know what the companies actually do with it’. The struggle to minimise the amount of data that is given away is described by P28: ‘I try to minimise tracking in my life. I make the three extra clicks to decline tracking in cookie banners and get angry with my friends when they click on ‘accept all’. I studied computer science and am aware of the risks associated with data collection. However, sometimes you get ads for something you were talking about a few days earlier and then you know: somewhere data was collected’. The fear that companies may monetise the collected data was shared by P5, who stated the concern that people can be manipulated to buy products. On the other hand, P1 had few concerns about giving away data: ‘I know that companies get my data but I am interested in the technology. Furthermore, I am young and have nothing to hide’. Another factor for indifference towards data collection despite lacking trust in companies was stated by multiple participants: resignation. After experiencing that their data had already been disclosed to companies, many could only ‘trust’ the companies not to misuse their data.

Tracking happens everywhere: via smartphone, browsers, or social networks. This could provide benefits to save time but then you get a suspicious offer for things you may need and then it gets creepy. It all depends on what is actually done with our data by the companies (P30).

‘I am aware that companies gather my data. It is the same issue with my smartphone’ (P33). This attitude was shared by P11. P29 continued: ‘Google has my data anyway via my phone. You can't do anything against it’. Similarly, P27 stated:

They know all of us already. As soon as you start using the service of one company you lose all inhibitors. In my social environment, I frequently hear: ‘Google already has my data thus I do not care anymore about my data’.

A similar statement was made by P9. However, even those who were not concerned about the data collected by companies showed signs of increased trust in smart speakers after learning about attestation: For example, P17 does not think that Amazon cares about what he talks about in his living room, but still finds the concept of a device listening to his voice a bit creepy: ‘You do not know who else is listening’. Therefore, attestation is useful to increase the trust of users who think similarly.

When investigating the trust of the participants towards the security provided by attestation, it became clear that the mental models of the users played a major role. These depended on how the participants imagined the motives and possibilities of different actors. P3 and P7 thought that hackers were no threat to them because they were not interesting enough to be targeted. P21 shared this belief, but still wanted to take action to prepare: ‘I do not believe that I get personally hacked, but I do want to secure my data’. P35 distinguished between different devices to form a threat model: ‘Regarding privacy invasion I am more afraid of my smartphone than a smart speaker. The smart speaker is only in one room, but my phone is always by my side’. And finally, many still were convinced that data collection by companies is a bigger threat than malware and hacker attacks: ‘I am not sure whether a hacker attack is the biggest problem. I am more afraid that a company misuses my data. […] Big companies gathering my data is more scary than hackers’ (P35). P24 underlined the perceived threat by data collection: ‘I think a bigger threat than a private hacker is the power amassed by big companies. This could pose a threat to society’. The evaluation of different actors and their capabilities was summarised by P21:

I am not concerned that secret services are getting data from smart speakers. I got a smartphone after all. I am not afraid of governmental surveillance, but I do not want companies to use my data for marketing; My biggest fear is not a specific ‘hacker organisation’ but security breaches in general.

These differences in the perceived power of different actors are similar to the misplaced illusionary trust in attestation. Users have specific mental models and these influence their threat models and the level of trust they place in someone or something. Therefore, prior knowledge is a major factor in evaluating threats and trust. Such knowledge can be gained through transparency during the interaction with a tool or through prior experience, as P34 illustrates:

I turn a blind eye to the influence of big companies in my everyday life. I once requested my data from Google and they had data from my childhood. Until now nothing bad happened to me, but it depends on how much you trust the company.

4.2.4. Trust in formerly compromised devices

Regarding our third sub-question, we examined (1) whether device handling would change due to the possibility of attestation and (2) whether the interaction with the device hacked in our study would change after successful attestation following a reset (see guiding questions (iv) and (vi) in Appendix A.1). Regarding the first question, six participants stated that the attestation increased their trust in the smart speaker (P5, P20, P21, P23, P25, P28). Although they described an improved trust in the device, some users stated that the handling of the smart speaker still would be the same (P20, P21, P25). Three users reported that the attestation leads to an increased sense of security (P6, P16, P35). The greatest influence of attestation was on five participants, who stated that the possibility of attestation would be a reason to purchase the device, although they do not currently own a smart speaker because they do not trust them (P11, P12, P18, P19, P28).

For the second question, 17 participants responded that a formerly compromised device would not be handled any differently than a non-compromised device after a reset and subsequent successful attestation. For participant P4, the reset with a successful attestation would increase the trust in the device. Seven participants stated that they would be more skeptical about the formerly compromised device and would do the attestation more frequently. Four participants would ask themselves how the smart speaker could be compromised (P5, P6, P22, P33). For six participants the compromised device would lose their trust and would not be used anymore. Two of those would also lose trust in similar devices, even if they had not been compromised (P1, P7). In conclusion, attestation influences the trust and sense of security positively and can also be a reason to buy a device. One participant even stated that he would pay extra for a device with a built-in attestation function (P4): In case of a device costing 50, he would be willing to pay 10–15 extra for the attestation function. Otherwise, a fundamental skepticism towards smart speakers and their manufacturers cannot be changed even by attestation. However, most of the participants trust the attestation when a formerly compromised device is attested successfully after a reset.

5. Discussion and conclusion

In this section, we reflect on the results to test our hypotheses and answer our research questions.

5.1. Findings and implications

First, we examined the difference in understanding of the attestation process between users of the transparent version of the app and those using the nontransparent version. During the interviews, we observed that participants with the nontransparent version of the app asked more frequently for more information on the process of the attestation. However, this might be an effect of the sample size, the design of the versions themselves, or the expectations of the participants. The latter can be derived from the quotes of P5 and P16 who asked for a ‘?’- or ‘i’-button for more information. This implies that users do not expect transparency in each step but a central source of information. In the interviews, we could observe that participants who had the transparent version of the app had only a slightly better understanding of the process than those who received the nontransparent version. Because this difference was small, we formulate the hypothesis that the transparency difference in the app version does not have a relevant influence on the understanding of the attestation process.

Second, we analysed the difference in understanding of the attestation process between users who read the explanation text and those who did not. We could show that the majority of those who wanted general information on the process did not read the explanation, while the majority of those who read it requested further technical details to dive deeper into the topic. Additionally, we could confirm that all participants who were able to reiterate how the process works in the interview had read the explanation. Overall, we formulate the hypothesis that users understand the process of attestation better when reading the explanation text in the app.

Therefore, we show that making security mechanisms transparent for end users supports their understanding of the overall mechanism. This combats the problem of users not understanding how their data is processed, as stated by Alshehri et al. (Citation2022) and the practice of designing smart speakers as black-box devices (Zeng, Mare, and Roesner Citation2017). Therefore, we can answer our first research question (‘How does transparency affect the users’ understanding of remote attestation?’) with: Increased transparency led to an increase in understanding in our sample.

When investigating how understanding and trust are related, we found that the mental models of the participants played a major role. Those who had experience with security issues or heard of privacy violations were more skeptical than others. Furthermore, many participants thought that they were not interesting enough to be targeted by hackers. However, in the context of IoT, this is not the correct threat model. Users are more likely to fall victim to broadly deployed ransomware or botnets than to be targeted as individuals by hacker groups. Therefore, educating these users is highly important to achieve actual security. By utilising transparency, the understanding of users can be enhanced, which proved to be essential for building trust in the security mechanism and for reducing false mental models such as misplaced trust. This illusionary trust was placed in the attestation process due to false mental models and by attributing properties to the system due to lacking understanding.

Therefore, we can answer the second research question (‘How does the users’ trust change by gaining a deeper understanding of remote attestation?’) with: Understanding affects the mental models of the users and enables them to estimate the correct level of protection. Furthermore, false attributions are revoked and real trust can be built.

Our third sub-goal was the investigation of whether participants would trust a formerly compromised device after it was reset and successfully attested. During the interviews we received mixed feedback: On the one hand, resetting and attesting a corrupted device increased the trust of some participants. On the other hand, some participants indicated that they would become suspicious of all similar devices if they discovered a corrupted device in their vicinity. Most participants agreed that the possibility of attestation does not affect how they interact with IoT devices in general. Some indicated that their trust is strengthened, but they would not share more information with the smart speaker than they would without attestation. Even if the device was compromised and restored, the behaviour towards the device would not change. However, participants stated that they would be alarmed and conduct the attestation process more often after the discovery of a corrupted device. Some stated that they would test the device once a day for at least some weeks after regaining trust in the device. Others stated that they would stop using the smart speaker if it was compromised again after the reset. This aligns with findings from literature pointing out that security is an important factor for users to trust IoT devices (AlHogail Citation2018). With these findings, we can answer our third research question (‘Do users trust formerly compromised devices, that have been restored and verified with remote attestation?’) with: Yes, most users do trust formerly compromised devices if they have been restored and verified via remote attestation.

Overall, we found that transparency indeed increased the trust in remote attestation. By giving the users the possibility to comprehend the process, they were able to evaluate the result of the attestation themselves and could take action as they deemed necessary. The fact that most participants of our study would use a formerly compromised device after reset and successful attestation shows the level of trust towards the attestation.

5.2. Limitations and future work

Our study is subject to several limitations. First, our sample consists of relatively young and well-educated participants. Although this closely resembles the target group of smart speakers, our results are not representative of a broader population. Second, our methodology is qualitative. While qualitative studies allow for a more in-depth investigation of a topic, their findings cannot be generalised on a large scale. Because we reached saturation during the interviews, i.e. participants no longer provided new input, we can claim that most perspectives are represented. However, even though we interviewed participants of different nationalities, a sample from a different country may yield other results, since risk cultures differ with people's backgrounds. For example, Reuter et al. (2019) showed that Germany had the strongest privacy concerns of all investigated European countries when interacting with social media. Therefore, we encourage other researchers to use our study design as a basis for comparative or even quantitative studies in future work to broaden the research community's perspective on this topic. Third, some participants suspected that the smart speakers were not really compromised. This indicates that they perceived the study situation differently from a real-world situation, which could result in different behaviour in the real world.

Future work could also develop other methods of transparency and evaluate their effect on users' trust in attestation. Another interesting approach would be to test the limits of transparency. In our study, we found that transparency enhances users' understanding and thus their trust in the attestation process. The results of the usability scale, and the fact that participants could roughly reiterate how the process worked, led us to conclude that our setup provided a useful amount of information. However, including too much information could lead to information overload and thus reduce the level of understanding. Identifying how much information is helpful and the point at which it becomes detrimental could greatly advance research on information overload and decision fatigue.

5.3. Conclusion

Finally, we summarise our findings to answer our main research question: Does transparency enhance trust in remote attestation? In our study, we confirmed that transparency as an HCI intervention increases the understanding of processes such as remote attestation. We were also able to confirm that understanding the process of remote attestation leads to increased trust in the overall process. One example is the audio communication between the devices. Most participants initially thought it was merely feedback showing that the attestation was in progress. However, once they realised that audio communication helps to guard against remote attackers, they trusted the attestation process more. This trust even allows users to rebuild trust in formerly compromised devices that were restored and verified by remote attestation. We therefore conclude that trust is based on prior knowledge as well as mental models. Transparency affects both: on the one hand, it increases the understanding of, and thus the knowledge about, a security mechanism; on the other hand, it reduces illusionary trust that originates from mental models and false attributions. Thus, transparency helps build real trust in the actual functionality of security and privacy features. It helps users calibrate their trust to the trustworthiness of the system, and we can answer our main research question in the affirmative: transparency enhances trust in remote attestation.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abbott, Jacob, Jayati Dev, Donginn Kim, Shakthidhar Gopavaram, Meera Iyer, Shivani Sadam, Shrirang Mare, et al. 2022. “Privacy Lessons Learnt from Deploying an IoT Ecosystem in the Home.” In Proceedings of the 2022 European Symposium on Usable Security (Karlsruhe, Germany) (EuroUSEC '22), 98–110. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3549015.3554205.

- Abdi, Noura, Kopo M. Ramokapane, and Jose M. Such. 2019. “More than Smart Speakers: Security and Privacy Perceptions of Smart Home Personal Assistants.” In Fifteenth Symposium on Usable Privacy and Security (SOUPS 2019), 451–466. Santa Clara, CA: USENIX Association. https://www.usenix.org/conference/soups2019/presentation/abdi.

- Abera, Tigist, N. Asokan, Lucas Davi, Jan-Erik Ekberg, Thomas Nyman, Andrew Paverd, Ahmad-Reza Sadeghi, and Gene Tsudik. 2016. “C-FLAT: Control-Flow Attestation for Embedded Systems Software.” In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (Vienna, Austria) (CCS '16), 743–754. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/2976749.2978358.

- Abera, Tigist, N. Asokan, Lucas Davi, Farinaz Koushanfar, Andrew Paverd, Ahmad-Reza Sadeghi, and Gene Tsudik. 2016. “Invited: Things, Trouble, Trust: On Building Trust in IoT Systems.” In Proceedings of the 53rd Annual Design Automation Conference, 1–6. Austin, TX, USA: ACM, IEEE. https://doi.org/10.1145/2897937.2905020.

- Abera, Tigist, Raad Bahmani, Ferdinand Brasser, Ahmad Ibrahim, Ahmad-Reza Sadeghi, and Matthias Schunter. 2019. “DIAT: Data Integrity Attestation for Resilient Collaboration of Autonomous Systems.” In NDSS, 1–15. San Diego, CA, USA: Network and Distributed Systems Security (NDSS).

- Aldowah, Hanan, Shafiq Ul Rehman, and Irfan Umar. 2021. “Trust in IoT Systems: A Vision on the Current Issues, Challenges, and Recommended Solutions.” In Advances on Smart and Soft Computing, edited by Faisal Saeed, Tawfik Al-Hadhrami, Fathey Mohammed, and Errais Mohammed, 329–339. Singapore: Springer Singapore.

- AlHogail, Areej. 2018. “Improving IoT Technology Adoption Through Improving Consumer Trust.” Technologies 6 (3): 64. https://doi.org/10.3390/technologies6030064.

- Alraja, Mansour Naser, Murtaza Mohiuddin Junaid Farooque, and Basel Khashab. 2019. “The Effect of Security, Privacy, Familiarity, and Trust on Users' Attitudes Toward the Use of the IoT-based Healthcare: The Mediation Role of Risk Perception.” IEEE Access 7:111341–111354. https://doi.org/10.1109/Access.6287639.

- Alrawi, Omar, Chaz Lever, Manos Antonakakis, and Fabian Monrose. 2019. “SoK: Security Evaluation of Home-Based IoT Deployments.” In IEEE Symposium on Security and Privacy (SP), 1362–1380. San Francisco, CA, USA: IEEE. https://doi.org/10.1109/SP.2019.00013.

- Alshehri, Ahmed, Joseph Spielman, Amiya Prasad, and Chuan Yue. 2022. “Exploring the Privacy Concerns of Bystanders in Smart Homes From the Perspectives of Both Owners and Bystanders.” Proceedings on Privacy Enhancing Technologies 2022 (3): 99–119. https://doi.org/10.56553/popets-2022-0064.

- Aman, Muhammad Naveed, Mohamed Haroon Basheer, Siddhant Dash, Jun Wen Wong, Jia Xu, HW Lim, and Biplab Sikdar. 2020. “HAtt: Hybrid Remote Attestation for the Internet of Things with High Availability.” IEEE Internet of Things Journal 7 (8): 7220–7233. https://doi.org/10.1109/JIoT.6488907.

- Ambrosin, Moreno, Mauro Conti, Riccardo Lazzeretti, Md. Masoom Rabbani, and Silvio Ranise. 2020. “Collective Remote Attestation At the Internet of Things Scale: State-of-the-Art and Future Challenges.” IEEE Communications Surveys & Tutorials 22 (4): 2447–2461. https://doi.org/10.1109/COMST.9739.

- Ammar, Mahmoud, Bruno Crispo, and Gene Tsudik. 2020. “SIMPLE: A Remote Attestation Approach for Resource-Constrained IoT Devices.” In 2020 ACM/IEEE 11th International Conference on Cyber-Physical Systems (ICCPS), 247–258. Sydney, NSW, Australia: IEEE. https://doi.org/10.1109/ICCPS48487.2020.00036.

- Architecture ARM. 2009. ARM Security Technology: Building a Secure System Using TrustZone Technology. White paper. ARM Limited.

- Asplund, Mikael, and Simin Nadjm-Tehrani. 2016. “Attitudes and Perceptions of IoT Security in Critical Societal Services.” IEEE Access 4:2130–2138. https://doi.org/10.1109/ACCESS.2016.2560919.

- Babun, Leonardo, Z. Berkay Celik, Patrick McDaniel, and A. Selcuk Uluagac. 2021. “Real-Time Analysis of Privacy-(un) Aware IoT Applications.” Proceedings on Privacy Enhancing Technologies 2021 (1): 145–166. https://doi.org/10.2478/popets-2021-0009.

- Bawden, D., and L. Robinson. 2020. “Information Overload: An Overview.” In Oxford Encyclopedia of Political Decision Making. Oxford: Oxford University Press. https://doi.org/10.1093/acrefore/9780190228637.013.1360.

- Brasser, Ferdinand, Brahim El Mahjoub, Ahmad-Reza Sadeghi, Christian Wachsmann, and Patrick Koeberl. 2015. “TyTAN: Tiny Trust Anchor for Tiny Devices.” In Proceedings of the 52nd annual Design Automation Conference, 1–6. San Francisco, CA, USA: ACM, IEEE. https://doi.org/10.1145/2744769.2744922.

- Business Wire. 2021. Strategy Analytics: Global Smart Speaker Sales Cross 150 Million Units for 2020 Following Robust Q4 Demand. Business Wire, Inc. https://www.businesswire.com/news/home/20210303005852/en/Strategy-Analytics-Global-Smart-Speaker-Sales-Cross-150-Million-Units-for-2020-Following-Robust-Q4-Demand.

- Carpent, Xavier, Norrathep Rattanavipanon, and Gene Tsudik. 2018. “Remote Attestation of IoT Devices via SMARM: Shuffled Measurements Against Roving Malware.” In 2018 IEEE International Symposium on Hardware Oriented Security and Trust (HOST), 9–16. Washington, DC, USA: IEEE.

- Conti, Mauro, Edlira Dushku, and Luigi V. Mancini. 2019. “RADIS: Remote Attestation of Distributed IoT Services.” In 2019 Sixth International Conference on Software Defined Systems (SDS), 25–32. Rome, Italy: IEEE.

- Costan, Victor, and Srinivas Devadas. 2016. “Intel SGX Explained.” Cryptology ePrint Archive, Paper 2016/086. https://eprint.iacr.org/2016/086.

- Dushku, Edlira, Md. Masoom Rabbani, Mauro Conti, Luigi V. Mancini, and Silvio Ranise. 2020. “SARA: Secure Asynchronous Remote Attestation for IoT Systems.” IEEE Transactions on Information Forensics and Security 15:3123–3136. https://doi.org/10.1109/TIFS.10206.

- Edu, Jide S., Jose M. Such, and Guillermo Suarez-Tangil. 2020. “Smart Home Personal Assistants: A Security and Privacy Review.” ACM Computing Surveys (CSUR) 53 (6): 1–36. https://doi.org/10.1145/3412383.

- Emami-Naeini, Pardis, Janarth Dheenadhayalan, Yuvraj Agarwal, and Lorrie Faith Cranor. 2021. “Which Privacy and Security Attributes Most Impact Consumers' Risk Perception and Willingness to Purchase IoT Devices?” In 2021 IEEE Symposium on Security and Privacy (SP), 519–536. San Francisco, CA, USA: IEEE.

- Furnell, Steven, and Nathan Clarke. 2012. “Power to the People? The Evolving Recognition of Human Aspects of Security.” Computers & Security 31 (8): 983–988. https://doi.org/10.1016/j.cose.2012.08.004.

- Gerber, Nina, Benjamin Reinheimer, and Melanie Volkamer. 2018. “Home Sweet Home? Investigating Users' Awareness of Smart Home Privacy Threats.” In Proceedings of an Interactive Workshop on the Human aspects of Smarthome Security and Privacy (WSSP). Baltimore, MD, USA: USENIX Association.

- Hassan, Wan Haslina. 2019. “Current Research on Internet of Things (IoT) Security: A Survey.” Computer Networks 148:283–294. https://doi.org/10.1016/j.comnet.2018.11.025.

- Hu, Hong, Shweta Shinde, Sendroiu Adrian, Zheng Leong Chua, Prateek Saxena, and Zhenkai Liang. 2016. “Data-Oriented Programming: On the Expressiveness of Non-Control Data Attacks.” In 2016 IEEE Symposium on Security and Privacy (SP), 969–986. San Jose, CA, USA: IEEE.

- Kil, Chongkyung, Emre C. Sezer, Ahmed M. Azab, Peng Ning, and Xiaolan Zhang. 2009. “Remote Attestation to Dynamic System Properties: Towards Providing Complete System Integrity Evidence.” In 2009 IEEE/IFIP International Conference on Dependable Systems & Networks, 115–124. Lisbon, Portugal: IEEE. https://doi.org/10.1109/DSN.2009.5270348.

- Koeberl, Patrick, Steffen Schulz, Ahmad-Reza Sadeghi, and Vijay Varadharajan. 2014. “TrustLite: A Security Architecture for Tiny Embedded Devices.” In Proceedings of the Ninth European Conference on Computer Systems (EuroSys '14), 14. Amsterdam, Netherlands: ACM. https://doi.org/10.1145/2592798.2592824.

- Kolias, Constantinos, Georgios Kambourakis, Angelos Stavrou, and Jeffrey Voas. 2017. “DDoS in the IoT: Mirai and Other Botnets.” Computer 50 (7): 80–84. https://doi.org/10.1109/MC.2017.201.

- Korneeva, Elena, Nina Olinder, and Wadim Strielkowski. 2021. “Consumer Attitudes to the Smart Home Technologies and the Internet of Things (IoT).” Energies 14 (23): 7913. https://doi.org/10.3390/en14237913.

- Kuang, Boyu, Anmin Fu, Shui Yu, Guomin Yang, Mang Su, and Yuqing Zhang. 2019. “ESDRA: An Efficient and Secure Distributed Remote Attestation Scheme for IoT Swarms.” IEEE Internet of Things Journal 6 (5): 8372–8383. https://doi.org/10.1109/JIoT.6488907.

- Kuang, Boyu, Anmin Fu, Lu Zhou, Willy Susilo, and Yuqing Zhang. 2020. “DO-RA: Data-Oriented Runtime Attestation for IoT Devices.” Computers & Security 97:101945. https://doi.org/10.1016/j.cose.2020.101945.

- Lafontaine, Evan, Aafaq Sabir, and Anupam Das. 2021. “Understanding People's Attitude and Concerns Towards Adopting IoT Devices.” In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (Yokohama, Japan) (CHI EA '21), Article 307, 10. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3411763.3451633.

- Mahmoud, Rwan, Tasneem Yousuf, Fadi Aloul, and Imran Zualkernan. 2015. “Internet of Things (IoT) Security: Current Status, Challenges and Prospective Measures.” In 2015 10th International Conference for Internet Technology and Secured Transactions (ICITST), 336–341. London, UK: IEEE. https://doi.org/10.1109/ICITST.2015.7412116.

- Mare, Shrirang, Franziska Roesner, and Tadayoshi Kohno. 2020. “Smart Devices in Airbnbs: Considering Privacy and Security for Both Guests and Hosts.” Proceedings on Privacy Enhancing Technologies 2020 (2): 436–458. https://doi.org/10.2478/popets-2020-0035.

- McDermott, Christopher D., John P. Isaacs, and Andrei V. Petrovski. 2019. “Evaluating Awareness and Perception of Botnet Activity Within Consumer Internet-of-Things (IoT) Networks.” In Informatics, Vol. 6, 8. Basel, Switzerland: MDPI.

- Menard, Philip, and Gregory J. Bott. 2020. “Analyzing IOT Users' Mobile Device Privacy Concerns: Extracting Privacy Permissions Using a Disclosure Experiment.” Computers & Security 95:101856. ISSN: 0167-4048. https://doi.org/10.1016/j.cose.2020.101856.

- Moss, Simon. 2016. Acquiescence Bias. Sicotests. Accessed 23 May, 2023. https://www.sicotests.com/newpsyarticle/Acquiescence-bias.

- Nemec Zlatolas, Lili, Nataša Feher, and Marko Hölbl. 2022. “Security Perception of IoT Devices in Smart Homes.” Journal of Cybersecurity and Privacy 2 (1): 65–73. https://doi.org/10.3390/jcp2010005.

- Nunes, Ivan De Oliveira, Karim Eldefrawy, Norrathep Rattanavipanon, Michael Steiner, and Gene Tsudik. 2019. “VRASED: A Verified Hardware/Software Co-Design for Remote Attestation.” In Proceedings of the 28th USENIX Conference on Security Symposium (Santa Clara, CA, USA) (SEC'19), 1429–1446. USA: USENIX Association.

- Nunes, Ivan De Oliveira, Sashidhar Jakkamsetti, and Gene Tsudik. 2021. “Dialed: Data Integrity Attestation for Low-End Embedded Devices.” In 2021 58th ACM/IEEE Design Automation Conference (DAC), 313–318. San Francisco, CA, USA: IEEE.

- Nyhan, Brendan, and Jason Reifler. 2010. “When Corrections Fail: The Persistence of Political Misperceptions.” Political Behavior 32 (2): 303–330. https://doi.org/10.1007/s11109-010-9112-2.

- Oser, Pascal, Sebastian Feger, Paweł W. Woźniak, Jakob Karolus, Dayana Spagnuelo, Akash Gupta, Stefan Lüders, Albrecht Schmidt, and Frank Kargl. 2020. “SAFER: Development and Evaluation of an IoT Device Risk Assessment Framework in a Multinational Organization.” Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 4 (3): 1–22. https://doi.org/10.1145/3414173.

- OWASP. 2018. Internet of Things (IoT) Top. Accessed 3 July, 2022. https://owasp.org/www-pdf-archive/OWASP-IoT-Top-10-2018-final.pdf.

- Park, Chankook, Yangsoo Kim, and Min Jeong. 2018. “Influencing Factors on Risk Perception of IoT-based Home Energy Management Services.” Telematics and Informatics 35 (8): 2355–2365. ISSN: 0736-5853. https://doi.org/10.1016/j.tele.2018.10.005.