Abstract

This paper addresses the drawbacks of the macroeconomic approach to estimating downturn loss given default (LGD) that is currently used in EU banking regulation. Using a large dataset of more than 186,000 defaulted credit exposures covering a time span of 18 years, we demonstrate by Monte Carlo simulations that the macroeconomic approach proposed by the European Banking Authority (EBA) does not reach a confidence level of 99.9%. As an alternative, we develop a downturn LGD estimation based on a latent variable approach. Our simulations demonstrate that this approach outperforms the macroeconomic approach in two dimensions: it induces capital requirements that are sufficient to cover potential losses at a 99.9% confidence level, and, compared to all regulatory eligible macroeconomic approaches that provide sufficient loss coverage, it requires the least amount of capital. The latent variable approach developed in this paper is applicable to market-based LGDs as well as to workout LGDs, i.e., to defaulted exposures with a long workout period. We argue that banking regulation as well as banks' risk management can be improved if the latent variable approach is used in addition to the traditional macroeconomic approach for estimating downturn LGDs.

1. Introduction

Regulatory capital requirements for banks are designed to ensure that financial institutions' own funds are sufficient to absorb potential losses. Under the Advanced Internal Ratings Based Approach (AIRBA), financial institutions are allowed to determine the potential unexpected credit portfolio losses from defaulted loans by using their own estimates of the exposure at default, the probability of default (PD), and the loss given default (LGD), i.e., the fraction of the exposure at default that is expected to be lost if a default occurs. The most challenging part of calculating a portfolio credit loss is estimating the stochastic dependencies between all loan exposures.

Under the AIRBA, this problem is solved elegantly for the PD by a latent variable approach. The latent variable approach captures the idea that the occurrence of a default event depends both on a systematic component affecting all credit exposures and on idiosyncratic features. The systematic component is modelled by an unobservable latent variable that represents the overall macroeconomic situation. While the idiosyncratic part of the loss risk can be diversified away by holding a finely granular credit portfolio, the systematic part is decisive for determining the credit portfolio risk. In the AIRBA, systematic risk is measured as the sensitivity of each exposure to the latent variable, and these sensitivities at the same time determine the default correlations of all exposures within a credit portfolio. Setting the latent variable to its 0.1% quantile, i.e., to a scenario that is exceeded in severity with a probability of only 0.1%, then yields the credit portfolio loss at the 99.9% confidence level. The latent variable approach has several advantages: First, it is not necessary to specify the exact macroeconomic conditions governing the systematic risk. Second, it allows a capital requirement to be allocated to each credit exposure in accordance with its contribution to the portfolio risk, the so-called portfolio invariance. Third, it is easy to implement in banking regulation, because the PD conditional on the latent variable can be expressed in closed form.
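For illustration, a minimal Python sketch of such a closed formula, assuming the standard one-factor (Vasicek) form with a sensitivity p towards a standard normal latent variable (so the asset correlation is p²). Setting the latent variable to its 0.1% quantile gives the stressed PD at the 99.9% level. The function names are our own and hypothetical; only the Python standard library is used.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution N(0, 1)


def conditional_pd(pd: float, p: float, x: float) -> float:
    """PD conditional on the latent variable x in the one-factor model
    A = p * X + sqrt(1 - p^2) * eps, with default iff A < Phi^{-1}(pd)."""
    threshold = STD_NORMAL.inv_cdf(pd)
    return STD_NORMAL.cdf((threshold - p * x) / sqrt(1.0 - p * p))


def stressed_pd(pd: float, p: float, confidence: float = 0.999) -> float:
    """Conditional PD with the latent variable set to its (1 - confidence)
    quantile, i.e. a scenario that is only exceeded in severity with
    probability 1 - confidence."""
    x_stress = STD_NORMAL.inv_cdf(1.0 - confidence)  # about -3.09 for 99.9%
    return conditional_pd(pd, p, x_stress)


# Example: PD of 1% and an asset correlation of 12%, i.e. p = sqrt(0.12).
print(round(stressed_pd(0.01, sqrt(0.12)), 4))
```

With p = 0 the latent variable has no influence and the stressed PD equals the unconditional PD; larger sensitivities push the stressed PD up, which is how the systematic risk enters the capital requirement.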

Despite its merits, the latent variable approach is not used for calculating unexpected losses with respect to the LGD. Instead, the regulatory approach laid down in the Capital Requirements Regulation (CRR) relies on the concept of a macroeconomic downturn LGD. The European Banking Authority (EBA) has recently specified the nature and severity of an economic downturn as the most severe values observed on a list of macroeconomic and credit-related factors over the last 20 years. Among these factors are GDP growth, the unemployment rate, and aggregate default rates. The 20-year time span is chosen to cover approximately two economic cycles (EBA, 2018). Depending on banks' data availability, the EBA has proposed several methods to estimate a downturn LGD (EBA, 2019). This macroeconomic approach has several drawbacks: First, the macroeconomic parameters identifying an economic downturn rely solely on intuition and lack empirical support. Second, the approach does not adequately address the stochastic dependencies among the LGDs of individual exposures or between LGDs and PDs. Koopman et al. (2011) report that even more than 100 macroeconomic covariates are not sufficient to replace latent components. Betz et al. (2018) show that macroeconomic variables, in general, are not suitable for capturing the true systematic effects when modelling LGDs. Third, the 20-year time span is incompatible with the 99.9% confidence level normally used for regulatory purposes, since a scenario that occurs roughly once in a thousand years cannot be identified from 20 years of observations. Fourth, the treatment of downturn LGDs is inconsistent with the treatment of PDs in the AIRBA.

In this paper, we demonstrate the inadequacy of the downturn LGD methods proposed by the EBA. We propose a latent variable based approach for downturn LGDs that is consistent with the regulatory treatment of PDs and performs better than the EBA's macroeconomic approach. Performance is measured by the ability to correctly estimate unexpected losses with respect to the LGD. More precisely, we are looking for an approach ensuring 1) that potential losses are covered by own funds at a confidence level of 99.9% (henceforth called the survival criterion) and 2) that this is achieved with minimal regulatory capital requirements (henceforth called the waste criterion). The waste criterion reflects that a bank's average cost of capital increases with its equity ratio. A higher average cost of capital leads to higher loan interest rates, thus decreasing investment and dampening GDP growth. To foster economic development, it is therefore important that regulatory capital requirements are smart in the sense that they ensure financial stability without being too burdensome. In addition, a better estimation of unexpected losses helps financial institutions improve their internal capital allocation.

To achieve this, the research goal of this paper can be divided into two steps. First, we study the relationship between the expected LGD and the latent variable. Second, we test the performance of the macroeconomic approach and of our alternative approach. The first step is in general more challenging for LGDs than for PDs. Whereas considering only the state of the economy at the time of default may be sufficient for market-based recovery rates, i.e., for recoveries based on the market price at which defaulted exposures typically trade 30 days after default, the situation is more complicated for workout recovery rates, i.e., for recoveries calculated as the present value of all cash flows stemming from a defaulted exposure during the workout process. The workout process often lasts several years, and during that period the state of the economy may fluctuate and affect the LGD systematically. Thus, more than one point in time may be needed to model the workout LGD. The central idea is to incorporate a time series of latent variables in the LGD model instead of a single latent variable.

Based on a database containing more than 186,000 default cases between 2000 and 2017, our results confirm that the LGD sensitivities towards the latent variables vary with the default age. We then construct some basic latent variable based downturn LGD estimation methods and test their performance by Monte Carlo simulations. Our alternative method for downturn LGD maintains the 99.9% survival rate, whereas the EBA's macroeconomic approach shows a survival chance of only 81%. Compared with the Foundation IRBA (FIRBA), our method likewise passes the survival test, but it is superior in terms of waste, i.e., it avoids excessive capital requirements (our method: 16%, FIRBA: 22%).

The results of this paper are particularly interesting for policymakers and risk practitioners as well as for academics specialising in credit risk. Regarding downturn LGDs, this paper clearly shows the inadequacy of the macroeconomic approach, an observation relevant for both regulators and banks. We offer an alternative approach that risk practitioners can use as a benchmark in parallel with the EBA's macroeconomic approach. For policymakers, this paper contains insights useful for developing an adequate downturn LGD guideline. From a research perspective, this paper contributes to the literature on latent variable based downturn LGDs.

The remainder of this paper is structured as follows: section 2 reviews the literature with a focus on LGD models based on a latent variable approach; section 3 describes our methods, the performance test via Monte Carlo simulations, and the data; section 4 presents the results of the model, introduces our alternative downturn LGD models, and compares their performance with the FIRBA and the AIRBA; and lastly, section 5 closes this paper with a discussion.

2. Review of the literature

Early attempts to model the downturn LGD with latent variables can be found, for example, in Frye (2000a), Frye (2000b), Pykhtin and Dev (2002), and Pykhtin (2003). The central idea of modelling LGDs with a latent variable approach is that a single common systematic factor (denoted by X) drives both the default event and the expected LGD. Whereas the impact of X on the default event is mediated by the asset value, the impact of X on the LGD is mediated by the collateral value. The use of X originates from the asymptotic single risk factor (ASRF) model, which serves as the theoretical backbone of the IRBA.

Using US corporate bond data, Frye (2000b) estimates a sensitivity of 23% with respect to the asset value and 17% with respect to the collateral value. Düllmann and Gehde-Trapp (2004) use a database containing bonds, corporate loans, and debt instruments in the US and report 20% and 2%–3% for the respective sensitivities, depending on the distributional assumptions. Aside from single-factor LGD models, some papers introduce multi-factor models to provide more model flexibility. These factors can be assumed to be independent, as in Pykhtin (2004), or to follow a particular dependence structure, as in Schönbucher (2001). Variants that use a time series of latent variables may assume a point-in-time dependence structure, as in Bade et al. (2011), or an autoregressive process, as in Betz et al. (2018).

One of the obstacles in modelling the systematic impact is to identify the relationship between the expected LGD and X. This additional assumption is necessary to derive the theoretical form of the downturn LGD. The simplest choice is the linear relationship introduced by Frye (2000a), Frye (2000b), and Pykhtin and Dev (2002). If, as usually assumed, X is normally distributed, linearity implies that the conditional LGD is normally distributed as well. Motivated by range restrictions on the modelled quantity, an exponential transformation can be used instead to obtain a log-normally distributed variable, as in Pykhtin (2003) and Barco (2007). Other suggestions include the application of a beta distribution, as in the work of Tasche (2004), or modelling the LGD directly from the PD, as in the work of Giese (2005), Hillebrand (2006), and Frye and Jacobs Jr (2012). Furthermore, Frye (2013) suggests that modelling the systematic risk in the LGD can be replaced by modelling the LGD as a function of default rates. The alternatives proposed in section 4.2 use a multilinear relationship. We argue that using more than one latent variable matters more than the choice of a specific functional relationship.

Compared to other studies in the literature, our methods are superior in two ways: 1) they are designed to be applicable under the IRBA, and 2) they are tested for adequacy (in the sense of a 99.9% confidence level) rather than accuracy (typically in the sense of an accuracy measure, e.g., $R^2$ in a regression analysis), using a large dataset with mixed facility types that resembles the portfolio of a real bank.

3. Methods and data

3.1. Methods for analysis

To accommodate a long workout duration, this paper extends the ASRF model to include more than one latent variable for the LGD. Let $R_i$ denote the recovery capability of a credit facility $i$; $R_i$ is calculated as the present value of all recoveries divided by the exposure at default. We propose that $R_i$ is influenced by the latent variables $X_t, X_{t+1}, \dots, X_{t+T}$ of the default year $t$ and the subsequent workout years, where $T$ denotes the workout duration, with sensitivities $q = (q_0, q_1, \dots, q_T)$, and by a component $\varepsilon_i$ capturing the idiosyncratic risk. The LGD thus reflects the accumulated impact of the latent variables over the whole workout duration. Inherited from the ASRF model, we assume that the asset value $A_i$ at the time of default depends on the realisation of the latent variable at that time and on an idiosyncratic component $\eta_i$, both standard normal:

$$A_i = p\,X_t + \sqrt{1-p^2}\,\eta_i .$$

The coefficient vector $q$ lies inside the $(T+1)$-dimensional unit ball, excluding the origin. Unlike other latent variable based models in the literature, we do not impose any assumption on how $X_t$ and $X_s$ depend on each other for any given years $t$ and $s$. In the ASRF model, an obligor defaults if its asset value falls below the threshold $\Phi^{-1}(PD_i)$, where $PD_i$ is the probability of default of credit facility $i$. A similar link can also be assumed between $R_i$ and the expected LGD. This paper diverges from other studies in that we do not assume a specific function for this link (see section 2).
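The recovery rate described above (present value of all recoveries divided by the exposure at default) can be computed as in the following Python sketch. The flat annual discount rate, the cash-flow layout and the function names are assumptions made for illustration; the text does not specify how recoveries are discounted.

```python
def workout_recovery_rate(cash_flows, ead, discount_rate):
    """Present value of all workout cash flows divided by the exposure at default.

    cash_flows: iterable of (years_after_default, amount); workout costs enter
        as negative amounts, so the result is not restricted to [0, 1].
    discount_rate: hypothetical flat annual rate (an assumption of this sketch).
    """
    present_value = sum(
        amount / (1.0 + discount_rate) ** years for years, amount in cash_flows
    )
    return present_value / ead


def workout_lgd(cash_flows, ead, discount_rate):
    """Realised workout LGD = 1 - recovery rate."""
    return 1.0 - workout_recovery_rate(cash_flows, ead, discount_rate)


# Hypothetical default: EAD of 100, recoveries of 50 after one year and 30 after
# two years, workout costs of 5 after two years, discounted at 5% p.a.
flows = [(1.0, 50.0), (2.0, 30.0), (2.0, -5.0)]
print(round(workout_lgd(flows, ead=100.0, discount_rate=0.05), 4))
```

Because costs can exceed recoveries, the sketch deliberately does not clip the result, which matches the observation that workout LGDs outside the [0, 1] interval occur in practice.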

The coefficient $p$ describes the sensitivity of the asset value towards $X_t$. Restricting $p$ to be positive reflects the assumption that financial distress, i.e., a low realisation of the latent variable, causes a higher default rate. Similarly, the coefficients $q_0, \dots, q_T$ describe the sensitivity of $R_i$ towards the latent variables during the workout years. From a technical point of view, $q_t$ need not be positive for every $t$. Especially for workout LGDs, a negative $q_t$ cannot be ruled out for exposures with a long workout duration. To include these cases as well, we refrain from restricting $q_t$ to be positive. Furthermore, we impose no restriction on the length of the workout process because the model is intended to cover a typical bank, which may hold a mixed portfolio containing exposures with workout LGDs and exposures with market-based LGDs.

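To estimate $p$, this paper applies the maximum likelihood (ML) method, similar to Frye (2000b). According to Gordy and Heitfield (2002), the ML method produces less bias (arising from a lack of data) than the method of moments. The likelihood function can be derived from the theoretical distribution of the conditional PD, since

$$g_A(X_t) = P\left(A_i < \Phi^{-1}(PD_i) \mid X_t\right) = \Phi\left(\frac{\Phi^{-1}(PD_i) - p\,X_t}{\sqrt{1-p^2}}\right)$$

and $g_A$ is invertible and differentiable in $X$. With $X_t \sim N(0,1)$, the change-of-variable technique yields the density function of the conditional default rate $D = g_A(X_t)$, which is

$$f_D(d) = \frac{\sqrt{1-p^2}}{p}\,\frac{\varphi\left(\dfrac{\Phi^{-1}(PD_i) - \sqrt{1-p^2}\,\Phi^{-1}(d)}{p}\right)}{\varphi\left(\Phi^{-1}(d)\right)},$$

where $\varphi$ is the density function of the standard normal distribution. If the parameter $PD_i$ is known, the estimation of $p$ reduces to a one-dimensional problem. The maximum of the likelihood function can be approximated numerically, which yields the estimated $p$. Inverting $g_A$ with the estimated $p$ gives the implied latent variable for each year $t$ from the observed default rate $DR_t$:

$$X_t = \frac{\Phi^{-1}(PD_i) - \sqrt{1-p^2}\,\Phi^{-1}(DR_t)}{p}.$$

The coefficient $q$, however, cannot be estimated with the ML method without an assumption on the dependence structure of the latent variables.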
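The ML step for p and the implied latent variables can be sketched in Python as follows, assuming a single known PD and yearly default rates from an infinitely granular portfolio. The sketch maximises the log of the density $f_D$ above over a grid of p values (a grid search instead of a numerical optimiser) and then inverts $g_A$. Function names, the grid and the synthetic data are illustrative assumptions, not the paper's implementation.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def conditional_default_rate(pd, p, x):
    """g_A(x): default rate of an infinitely granular portfolio given X = x."""
    return STD_NORMAL.cdf((STD_NORMAL.inv_cdf(pd) - p * x) / math.sqrt(1 - p * p))


def implied_latent(dr, pd, p):
    """Invert g_A: latent variable implied by an observed default rate dr."""
    return (STD_NORMAL.inv_cdf(pd) - math.sqrt(1 - p * p) * STD_NORMAL.inv_cdf(dr)) / p


def log_likelihood(p, z_values, threshold):
    """Sum of log f_D(d) with z = Phi^{-1}(d); the normal constants cancel."""
    s = math.sqrt(1 - p * p)
    total = 0.0
    for z in z_values:
        x = (threshold - s * z) / p  # implied latent variable for this year
        total += math.log(s / p) - 0.5 * x * x + 0.5 * z * z
    return total


def estimate_p(default_rates, pd, grid_size=999):
    """Grid-search ML estimate of p in (0, 1), assuming pd is known."""
    z_values = [STD_NORMAL.inv_cdf(d) for d in default_rates]
    threshold = STD_NORMAL.inv_cdf(pd)
    grid = [k / (grid_size + 1) for k in range(1, grid_size + 1)]
    return max(grid, key=lambda p: log_likelihood(p, z_values, threshold))


# Synthetic check with hypothetical parameters: p = 0.3, PD = 2%.
rng = random.Random(42)
rates = [conditional_default_rate(0.02, 0.3, rng.gauss(0.0, 1.0)) for _ in range(500)]
p_hat = estimate_p(rates, pd=0.02)
print(p_hat, [round(implied_latent(d, 0.02, p_hat), 2) for d in rates[:3]])
```

Precomputing $\Phi^{-1}(d)$ once per observation keeps the grid search cheap; with real data, the estimate depends on how many years of default rates are available, which is the bias issue raised by Gordy and Heitfield (2002).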

Without a dependence structure for the latent variables and a link between $R_i$ and the LGD, no likelihood function can be derived. To solve this issue, the coefficient $q$ is estimated by regressing the observed average LGD for a given default year and workout duration on the realisations of the latent variables $X_t, \dots, X_{t+T}$. If the expected LGD can be approximated by a linear function of these latent variables, we obtain a linear regression model whose OLS coefficients estimate the sensitivity of the average LGD towards the latent variable of each workout year. The coefficient $q$ can then be solved algebraically from these estimates, since the model also determines the variance of the residuals.

To keep the model simple, it is sufficient to test whether the expected LGD can be adequately approximated by a linear function of the latent variables by checking whether the resulting downturn LGD methods achieve the required 99.9% survival rate (see section 4.2). From the perspective of adequacy, there is no need to find the true function: if the linear approximation fails the test, it is inadequate, and non-linear alternatives should be considered. Linearity is chosen for its simplicity, since an overly complex function is unlikely to be adopted by regulators.
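The regression step can be sketched with NumPy as below. The data layout (one row per combination of default year and workout duration, one column per default age) is a hypothetical choice; cells whose workout is shorter than the longest horizon would need the missing ages handled, which is not shown. The sketch stops at the OLS estimates and the residual variance and does not reproduce the algebraic mapping to q.

```python
import numpy as np


def ols_sensitivities(avg_lgd, latent_by_age):
    """OLS of cell-average LGDs on the latent variables of each workout year.

    avg_lgd:       shape (n_cells,), observed average LGD per
                   (default year, workout duration) cell
    latent_by_age: shape (n_cells, n_ages), implied latent variable of
                   default age 0, 1, ... for each cell (hypothetical layout)
    Returns the intercept, the slope per default age and the residual variance.
    """
    y = np.asarray(avg_lgd, dtype=float)
    design = np.column_stack([np.ones(len(y)), np.asarray(latent_by_age, dtype=float)])
    coef, _, _, _ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    # Divide by n minus the number of estimated coefficients (unbiased variance).
    resid_var = residuals @ residuals / (len(y) - design.shape[1])
    return coef[0], coef[1:], resid_var


# Synthetic data with hypothetical true slopes: LGD falls when latent variables rise.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 3))
true_slopes = np.array([-0.05, -0.03, -0.01])
y = 0.40 + X @ true_slopes + 0.005 * rng.standard_normal(200)
intercept, slopes, resid_var = ols_sensitivities(y, X)
print(round(intercept, 3), np.round(slopes, 3), resid_var)
```

Negative slopes here correspond to positive sensitivities of the recovery capability, since higher latent variables (better economic conditions) lower the LGD.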

3.2. Monte Carlo simulations

The performance analysis is carried out via Monte Carlo simulations, in which a random portfolio is drawn to represent a randomly chosen bank. The simulation tests whether the downturn LGD is at least as high as the realised LGD. For each year t, every parameter required to calculate a downturn LGD (or its alternative) is calculated out-of-time, i.e., only data available up to t are used. In our view, a loss history of five years is the absolute minimum for any meaningful estimate. Therefore, we evaluate the performance test only from 2005 onwards. In each iteration, 1,000 default cases are drawn at random from the population of defaults resolved in year t. The process is repeated 10,000 times.
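The sampling scheme for one evaluation year can be sketched as follows. The record layout, the unweighted portfolio means and drawing without replacement within a portfolio are assumptions of this sketch; `downturn_lgd` is a hypothetical placeholder for any of the methods compared later and must be calibrated only on data available up to t.

```python
import random
from statistics import fmean


def simulate_year(resolved_defaults, downturn_lgd, n_draws=1000, n_iter=10000, seed=0):
    """Monte Carlo draws of random bank portfolios for one evaluation year t.

    resolved_defaults: facilities resolved in year t, each a dict with at least
        the key "realised_lgd" (hypothetical record layout).
    downturn_lgd: function mapping a facility to its downturn LGD, calibrated
        only on data available up to t (out-of-time).
    Returns one (mean downturn LGD, mean realised LGD) pair per iteration.
    """
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_iter):
        # Assumption: facilities are drawn without replacement within a portfolio.
        portfolio = rng.sample(resolved_defaults, n_draws)
        mean_dlgd = fmean(downturn_lgd(f) for f in portfolio)
        mean_realised = fmean(f["realised_lgd"] for f in portfolio)
        outcomes.append((mean_dlgd, mean_realised))
    return outcomes


# Usage with a hypothetical pool and a constant 50% downturn LGD.
pool = [{"realised_lgd": v} for v in (0.1, 0.2, 0.6, 0.9)] * 300
pairs = simulate_year(pool, downturn_lgd=lambda f: 0.5, n_draws=100, n_iter=20)
print(pairs[:2])
```

`random.sample` raises a `ValueError` if fewer than `n_draws` defaults are resolved in a year, so thin years would need a smaller portfolio size or sampling with replacement.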

This article introduces two concepts for performance measurement: the institution's survival chance and waste. In the LGD context, an institution survives if, at the portfolio level, the downturn LGD at t for the portfolio composition at t is at least as high as the realised LGD of all defaults resolved at t. If the calculated downturn LGD is lower than the realised one, we refer to this event as a failure. In line with the requirements under the IRBA, we require a 99.9% survival rate.

With waste, we want to measure the overestimation of the LGD, since an overly conservative downturn LGD implies a higher capital requirement. From the banks' perspective, higher capital requirements are associated with higher weighted average costs of capital (WACC), as shown empirically by Cosimano and Hakura (2011) and Miles et al. (2013). Van den Heuvel (2008) shows a surprisingly high welfare cost of capital requirements. Mikkelsen and Pedersen (2017) argue that increasing capital requirements entails a trade-off between benefits and social costs. For downturn LGD methods, this translates into a trade-off between survival chance and waste. At some point, the bank's survival chance is so high that being even more conservative increases social costs more than social benefits. Regulatory requirements usually imply that social costs and benefits are regarded as balanced if the confidence level of a bank's survival reaches 99.9%. For a given downturn LGD method, its waste describes the degree of LGD overestimation. By definition, the downturn LGD should always be higher than the expected LGD, so some degree of overestimation compared to the realised LGD is to be expected. In particular, we define the waste of a surviving institution as the difference between the mean calculated downturn LGD and the mean realised LGD for a particular portfolio at t.

An overly conservative rule would obviously produce downturn LGD estimates above the realised LGD in expectation; e.g., setting the downturn LGD to 100% for all cases would ensure high survivability but also high waste.
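Given simulated pairs of mean downturn LGD and mean realised LGD, the two performance measures can be computed as below. Averaging waste over surviving portfolios only is our assumption about how the per-portfolio definition is aggregated; the function name is hypothetical.

```python
from statistics import fmean


def survival_and_waste(outcomes):
    """Survival rate and average waste over simulated portfolios.

    outcomes: iterable of (mean downturn LGD, mean realised LGD) pairs.
    A portfolio survives if its downturn LGD is at least the realised LGD;
    waste is averaged over surviving portfolios only (an assumption).
    """
    outcomes = list(outcomes)
    survivors = [(d, r) for d, r in outcomes if d >= r]
    survival_rate = len(survivors) / len(outcomes)
    waste = fmean(d - r for d, r in survivors) if survivors else float("nan")
    return survival_rate, waste


# The 100% rule from the text: it always survives, but wastes 1 - realised LGD.
always_full_loss = [(1.0, 0.35), (1.0, 0.42), (1.0, 0.28)]
print(survival_and_waste(always_full_loss))
```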

3.3. Data

We obtain two databases from Global Credit Data (GCD; see Footnote 1): 1) the PD and Rating Platform and 2) the LGD and EAD Platform. The former contains the observed numbers of defaults counted by banks within predefined segments; the latter contains contract-level information on credit failures from which the LGD and EAD are derived. All participating banks are obliged to define default and loss in the same way to ensure data comparability within the sample. The definitions of default and loss are set by the relevant authorities to ensure comparable PDs and LGDs across institutions.

3.3.1. PD and rating platform

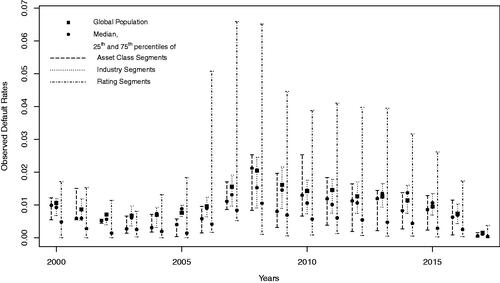

In the PD and Rating Platform, the numbers of defaulted and non-defaulted loans in defined segments are reported from 1994 to 2017. These numbers are low before 2000, so their representativeness in these early years is uncertain. Starting from 2000, the yearly number of loans rises to over 50,000 and peaks at over 710,000 in 2014. The composition of the dataset by rating, asset class, and industry segment fluctuates from year to year.

The platform contains pooled numbers of defaulted and non-defaulted loans in various segments. In total, the dataset contains over 6.2 million non-defaulted loan-years distributed over the 18-year interval. Assuming a typical time to maturity or default of about two years, the dataset contains information on over 3 million different loans internationally. The three most represented asset classes are SME (50.71%), Large Corporates (22.14%), and Banks and Financial Companies (14.67%), and the three most represented industries are Finance and Insurance (15.33%), Real Estate, Rental, and Leasing (14.88%), and Wholesale and Retail Trade (13.62%) (see Footnote 2).

Figure 1 shows the observed default rates in the dataset between 2000 and 2016. A detailed description of the dataset is given by Thakkar and Crecel (2019). The dataset classifies every default by the following categories:

asset class: SME, Large Corporates, Banks and Financial Companies, Ship Finance, Aircraft Finance, Real Estate Finance, Project Finance, Commodities Finance, Sovereigns, Public Services, Private Banking;

industry: Agriculture, Hunting and Forestry, Fishing and its Products, Mining, Manufacturing, Utilities, Construction, Wholesale and Retail, Hotels and Restaurants, Transportation and Storage, Communications, Finance and Insurance, Real Estate and Rental and Leasing, Professional, Scientific and Technical Services, Public Administration and Defence, Education, Health and Social Services, Community, Social and Personal Services, Private Sector Services, Extra-territorial Services, and Individual; and

rating: mapped to S&P rating categories (from AAA to C), as well as defaulted.

Figure 1. Observed default rates in the global population and segmented by asset class, industry, and rating.

In each category, Figure 1 shows the observed default rates of the 25% best and the 25% worst segments, as well as the median (only for subcategories with at least 100 loans).

3.3.2. LGD and EAD platform

The LGD platform contains extensive loan-level information about defaults of non-retail exposures, including information on collateral. The LGD is pre-calculated based on the realised loss per outstanding unit. Slightly different LGD definitions do not affect the results significantly.

The dataset contains over 186,000 defaulted loans after 2000, both resolved (92.5%) and unresolved cases (7.5%). The number of resolved loans between 2000 and 2017 with non-zero exposure is 161,365 from 93,775 different obligors with an average EAD of 3 million Euros. The three most represented asset classes are SME (62.57%), Large Corporates (16.61%), and Real Estate Financing (12.42%). The three most represented industries are Manufacturing (16.44%), Real Estate, Rental, and Leasing (15.94%) and Wholesale and Retail Trade (14.01%).

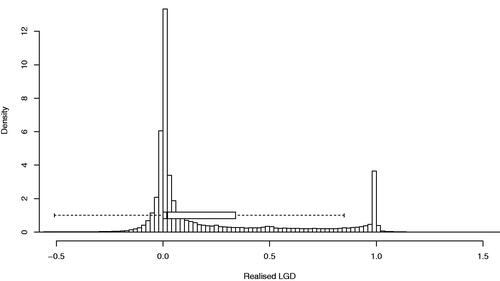

LGD samples outside the [0, 1] interval are possible and are not rare for workout LGDs. Typically, realised workout LGDs at loan level exhibit a bimodal distribution, as is also the case in our dataset. The mean realised LGDs (by default year) are highly correlated with the observed default rates. However, some of the defaults in the dataset are not yet resolved, which leaves low average realised LGDs in the last five years. A report on the LGD distribution of Large Corporate exposures in this dataset (Rainone & Brumma, 2019) and a study of a downturn effect in the LGD pattern (Brumma & Rainone, 2020) are also available.

4. Results

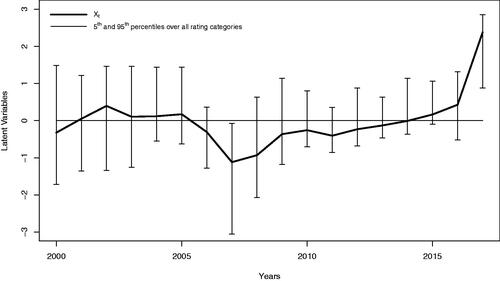

For the observed default rates, the maximum likelihood method yields an estimate $\hat{p}$, equivalent to an asset correlation of $\hat{p}^2$. For comparison, Frye (2000b) reports a $p$ of 23% (for bonds), and Düllmann and Gehde-Trapp (2004) report $p$ to be approximately 20% (for bonds and loans). Under the IRBA, institutions have to apply an asset correlation between 3% and 24% in most cases.

The implied time series of latent variables reflects the global financial crisis that started in 2007: the downturn effect is observed soon after the collapse of Lehman Brothers in 2008, and in both years the implied latent variables are at their lowest points. In comparison, the implied latent variable in 2017 is high due to the low observed default rate in 2017, as shown in Figure 1.

4.1. LGD’s systematic sensitivity

To avoid a potential bias arising from cases with an extraordinarily long workout duration, samples with an excessively long workout duration are excluded. About 95% of the resolved defaults in the database have workout durations of less than six years, so we choose six years as the cut-off. We successively extend the maximum workout duration in the analysis to replicate the portfolio of a randomly chosen financial institution. Due to the model design, the results should be interpreted at the portfolio level.

The results presented in Table 1 confirm that the economic conditions during the workout years influence the expected LGD. Thus, an approach using only a single latent variable for the LGD might be inappropriate. The sensitivity is highest in the default year and diminishes with increasing default age.

Table 1. LGD’s systematic sensitivity coefficient towards the latent variables from different years during the workout period.

4.2. Performance analysis

The results of the previous section suggest that incorporating multiple latent variables from several workout years into a downturn LGD estimation increases its explanatory power. We choose four basic downturn LGD estimation procedures based on a latent variable approach. The idiosyncratic risk is not evaluated, since the focus of downturn LGD methods is the measurement of systematic risk. The performance measures must be independent of bias correction methods (in case the data contain any bias). Otherwise, one could argue that the good performance of our method stems not only from the latent variable approach but also from the chosen bias correction. Thus, neither a bias correction nor a conservatism add-on is applied.

The performance of the following downturn LGD estimations is compared for the year t (we refer to t as today). The latent variables are assumed to be available up to t.

(1) A forward-looking single-factor estimation. This procedure assumes that the expected LGD depends only on today's (fully weighted) latent variable, which is stressed (set to $\Phi^{-1}(0.001)$).

(A1)


(2) A backward-looking single-factor estimation. This procedure assumes that the expected LGD depends only on the (fully weighted) latent variable of the default year. The latent variable is stressed only if the default year is t.

(A2)


(3) A three-year-factor estimation. Unlike the previous methods, this procedure incorporates multiple latent variables. If the default age is shorter than three years, the last latent variable is stressed. Otherwise, only the first three realised latent variables from the default year onward are included in the estimation, and none of them is stressed. This procedure weights the relevant latent variables equally to avoid overfitting.

(A3)


(4) A complete-history based estimation. Unlike A3, this procedure incorporates the complete history of past latent variables within the workout duration and stresses only today's latent variable. All latent variables are equally weighted.

(A4)


The two parameters of these procedures, a location and a scale parameter, are estimated by the expected value and the standard deviation of the institution's portfolio LGD, respectively, using only past information for an out-of-time analysis. In practice, these estimates are heavily influenced by resolution bias, which generally leads to an underestimation of the LGD. Instead of the q estimated in Table 1, we use equal weights in all of these estimators to avoid overfitting, since the dataset used in the Monte Carlo simulations is identical to the one used to estimate q.

It is not difficult to give a first assessment of the degree of conservatism of the four procedures. The number of exposures in a random portfolio that are affected by the stressed latent variable differs between the procedures. A1 is the most conservative procedure (likely borderline excessive), since it always includes a stressed component for all exposures, while A2 is the least conservative, because it stresses the latent variable only if the loan defaults in the current year. A3 and A4 lie in between in terms of conservatism. For instance, if today is 2020 and a loan defaulted in 2017 but is still unresolved, A1 considers only a single stressed component (2020), A2 considers only the (unstressed) 2017 latent variable, A3 considers the (unstressed) 2017–2019 latent variables, and A4 considers the (unstressed) 2017–2019 latent variables and the stressed component (2020).
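To make this example concrete, the following sketch lists, for each procedure, which years' latent variables enter the estimate for one unresolved facility and whether they are stressed. Weights, the location and scale parameters and the stress value itself are left out. The treatment of A3 for default ages below three years (all years from the default year up to today, with today stressed) is our reading of the description; the function name is hypothetical.

```python
def latent_years(method, default_year, today):
    """Latent-variable years used by A1-A4 for one unresolved facility.

    Returns a list of (year, stressed) pairs, where stressed=True means the
    latent variable of that year is replaced by its stress value.
    """
    age = today - default_year
    if method == "A1":  # today only, always stressed
        return [(today, True)]
    if method == "A2":  # default year only, stressed only if it is today
        return [(default_year, default_year == today)]
    if method == "A3":
        if age >= 3:  # first three realised years from default, none stressed
            return [(default_year + k, False) for k in range(3)]
        # Assumption: default age below three years, today's value is stressed.
        return [(y, y == today) for y in range(default_year, today + 1)]
    if method == "A4":  # complete history since default, today stressed
        return [(y, y == today) for y in range(default_year, today + 1)]
    raise ValueError(f"unknown method: {method}")


# Example from the text: default in 2017, still unresolved, today is 2020.
for m in ("A1", "A2", "A3", "A4"):
    print(m, latent_years(m, 2017, 2020))
```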

4.2.1. Comparison to the FIRBA

In this section, we compare the performance of the LGD estimation methods A1–A4 with the LGD methodology under the FIRBA. The FIRBA will become more important, because the recent Basel reforms (Basel Committee on Banking Supervision, 2017) prohibit the use of the AIRBA for some asset classes, such as exposures to corporates with consolidated revenues above €500 million and exposures to financial institutions. Under the FIRBA, senior claims on financial institutions and on other corporates are assigned an LGD of 45% and 40%, respectively. The LGD applicable to a collateralised transaction is calculated as the exposure-weighted average of the LGD applicable to the unsecured part and the LGD applicable to the collateralised part of the exposure.

The asset classes in the dataset that are relevant for the FIRBA are Large Corporates, Banks and Financial Companies, Sovereigns, and Private Banking. Where assumptions are necessary to calculate LGDs under the FIRBA, they are always chosen generously, i.e., so that the simulation produces the least possible waste. For secured loans, the collateral is always treated as eligible, and the haircut on financial collateral is assumed to be 0%. Nonetheless, both A3 and A4 produce significantly lower waste than the regulatory downturn LGD under the FIRBA, while maintaining a similar survival chance.

The average of the survival rates is calculated as a geometric mean, whereas the average of the waste is an arithmetic mean, reflecting the nature of each measure. As expected, the FIRBA achieves the 99.9% survival rate on average, since it is a very conservative approach. However, even under generous assumptions, Table 2 confirms that the LGD based on the FIRBA is wasteful compared to the other methods. In fact, on average its performance is similar to that of A1 in terms of both survival chance and waste. However, A1 adapts to the economic situation as more information becomes available: its waste is higher than the FIRBA's only during 2005–2008. As more data become available, A1 may achieve a more accurate estimate than the FIRBA. In summary, the downturn LGD is overestimated by about 22% under the FIRBA, whereas methods A3 and A4 result in an overestimation of approximately 13% and 16%, respectively, while at the same time maintaining a high survival rate of 99.9%.
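The two averaging rules can be written as follows. The yearly inputs in the usage line are hypothetical and not taken from Table 2; the explicit zero check avoids taking the logarithm of a zero survival rate.

```python
import math
from statistics import fmean


def average_performance(yearly_survival, yearly_waste):
    """Geometric mean of yearly survival rates, arithmetic mean of yearly waste."""
    if any(s == 0.0 for s in yearly_survival):
        geo = 0.0  # a single year with certain failure drives the product to zero
    else:
        geo = math.exp(fmean(math.log(s) for s in yearly_survival))
    return geo, fmean(yearly_waste)


# Hypothetical yearly values for illustration only.
print(average_performance([1.0, 0.999, 0.998], [0.12, 0.15, 0.18]))
```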

Table 2. Comparison of survival chance and waste of different downturn LGD estimation models with the FIRBA for relevant asset classes in % for each year from 2005 until 2017.

4.2.2. Comparison to the AIRBA

Analogously to the previous simulation, we compare our proposed methods with the AIRBA. According to the Basel Committee on Banking Supervision (2017), supervisors may permit the use of the AIRBA for corporate exposures with consolidated revenues of less than €500 million. In our dataset, the relevant asset class is exposures to SMEs.

Although the EBA has made considerable efforts to harmonise the calculation of capital requirements under the AIRBA (EBA, 2019), institutions still have some leeway in determining their own downturn LGD estimates. Choosing one specific downturn LGD estimation method for the AIRBA could therefore reduce the representativeness of our analysis. Instead, we choose three methods that are eligible under the EBA guideline and represent different levels of conservatism:

(AIRBA-L)

(AIRBA-M)

(AIRBA-H)

where

denotes the maximum,

the second maximum observed LGD until t, and

the observed average LGD of facilities defaulted in s and resolved in t. Assuming downturn periods are associated with high LGDs, the

should be a good proxy of the worst downturn year. By construction, $DLGD_L$ yields the lowest downturn LGD and $DLGD_H$ is the most conservative method, which is introduced as a backstop for cases in which the two other methods cannot be applied.
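The AIRBA-L/M/H formulas themselves are not reproduced here. As a hedged illustration of their inputs only, the Python sketch below computes, for each default year s, the average realised LGD of facilities resolved no later than year t, together with the largest and second-largest of these cohort averages. Reading "observed until t" as "resolved by t" and using a simple unweighted average are assumptions of this sketch, and the facility data are hypothetical. The example also shows the resolution lag discussed below: in 2010 the 2009 cohort contains only its quickly resolved facility, while its slower, high-loss workouts enter only by 2013.

```python
from collections import defaultdict


def cohort_average_lgds(facilities, t):
    """Average realised LGD per default year s, using facilities resolved by year t.

    facilities: iterable of (default_year, resolution_year, lgd) tuples.
    Facilities still in workout at t (resolution_year > t) are not yet observed.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for s, r, lgd in facilities:
        if s <= t and r <= t:
            sums[s] += lgd
            counts[s] += 1
    return {s: sums[s] / counts[s] for s in sums}


def max_and_second_max(cohort_lgds):
    """Largest and second-largest cohort average LGD (None where unavailable)."""
    values = sorted(cohort_lgds.values(), reverse=True)
    first = values[0] if values else None
    second = values[1] if len(values) > 1 else None
    return first, second


# Hypothetical facilities: (default year, resolution year, realised LGD).
data = [
    (2006, 2007, 0.20), (2007, 2008, 0.25),
    (2009, 2010, 0.30), (2009, 2012, 0.60), (2009, 2013, 0.70),
]
for t in (2010, 2013):
    print(t, max_and_second_max(cohort_average_lgds(data, t)))
```

In this toy panel the maximum cohort average rises from 0.30 at t = 2010 to about 0.53 at t = 2013, although the underlying defaults were already known in 2010.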

The simulation is performed both with and without the LGD input floors introduced by the Basel Committee on Banking Supervision (2017). The input floors are 25% for unsecured exposures and vary between 0% and 15% for secured exposures, depending on the type of collateral.
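A simplified Python sketch of how such an input floor could constrain an own LGD estimate follows. The floor for a partially secured exposure is modelled here as the exposure-weighted average of the 25% unsecured floor and a collateral-specific secured floor, and the estimate is raised to the floor where necessary. The assignment of collateral types to values within the 0% to 15% range is an illustrative assumption (the binding values should be checked against the Basel text), and haircuts are ignored.

```python
# Illustrative secured floors within the 0%-15% range; the mapping is an assumption.
HYPOTHETICAL_SECURED_FLOORS = {
    "financial": 0.00,
    "receivables": 0.10,
    "real_estate": 0.10,
    "other_physical": 0.15,
}
UNSECURED_FLOOR = 0.25


def floored_lgd(lgd_estimate, exposure, secured_part=0.0, collateral=None):
    """Raise an own LGD estimate to an exposure-weighted input floor (no haircuts)."""
    if exposure <= 0:
        raise ValueError("exposure must be positive")
    secured = min(max(secured_part, 0.0), exposure)
    if secured > 0 and collateral not in HYPOTHETICAL_SECURED_FLOORS:
        raise ValueError("unknown collateral type for the secured part")
    secured_floor = HYPOTHETICAL_SECURED_FLOORS[collateral] if secured > 0 else 0.0
    # Unsecured part gets the 25% floor, secured part its collateral-specific floor.
    floor = ((exposure - secured) * UNSECURED_FLOOR + secured * secured_floor) / exposure
    return max(lgd_estimate, floor)


print(floored_lgd(0.18, 100.0))                             # unsecured exposure
print(floored_lgd(0.05, 100.0, 60.0, "other_physical"))     # partially secured
```

For an exposure of 100 with 60 secured by other physical collateral, the floor is (40 · 25% + 60 · 15%) / 100 = 19%, so an own estimate of 5% would be raised to 19%. Because the floor ignores the bank's own risk profile, it binds regardless of how low the estimated risk is.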

The results shown in Table 3 are very concerning. In brief, the downturn LGD methods proposed by the EBA (AIRBA-L and AIRBA-M) are inadequate to ensure a 99.9% survival chance. Most of the failures occur in the post-crisis period. Banks will most likely identify 2008/2009 as the downturn period in compliance with the proposed regulatory technical standard (EBA, 2018). Even though the proposed guideline (EBA, 2019) requires institutions to conduct a thorough impact assessment, the information on the downturn impact associated with the financial crisis is simply not yet available in 2010, because the workout duration can exceed two years. The impacts observed up to that point are therefore still inadequate. Even the AIRBA-M estimate shows a survival rate of only 80.9% without input floors, mostly due to failures during post-crisis periods.

Table 3. Comparison of survival chance and waste of different downturn LGD estimation models with the AIRBA for SME asset class in % for each year from 2005 until 2017.

The inclusion of input floors raises the survival chance to 99.4%. On the one hand, this is reassuring: input floors help to approach the 99.9% survivability, assuming banks adopt at least an (M)-type estimate. On the other hand, they should be understood as a makeshift solution that does not resolve the conceptual drawbacks of a macroeconomic downturn LGD estimation. Moreover, because input floors are not risk sensitive, they generally give low-risk banks the wrong incentive to increase their risks.

In comparison, method A4 comes remarkably close to the required 99.9% survivability without any input floors. As expected, the LGD calculated under AIRBA-H is also sufficiently conservative, but it overestimates the LGD by 10%, compared to a waste of 5% for A4.

While the results presented in Tables 2 and 3 are calculated under the assumption of a large, diversified loan portfolio with negligible idiosyncratic risk, our calculations show that they also hold for smaller default portfolios (see Table 4).

Table 4. Average survival chance and average waste in % when only default cases are resolved per year.

4.3. Regulatory application

The latent variable approach can be used to improve banking regulation as well as banks’ internal risk management. As the LGD values prescribed under the FIRBA are not based on a rigorous econometric approach, our results can serve regulatory authorities as a benchmark indicating whether these LGD values are adequately conservative or overly burdensome. The latent variable approach requires as inputs the long-run average LGD at the portfolio level, its associated standard deviation, the default year $t_d$, the current year $t$, and the latent variables of all relevant years.

These parameters can be estimated from the data that banks are obliged to provide to regulatory authorities, whereas the latent variables can be estimated from the default rates of a given system, independently of banks.
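How the latent variables are obtained from default rates is not spelled out in this section. One standard technique, used here purely as an assumption and not necessarily the estimator behind the results above, is to invert the one-factor Vasicek model $DR_t = \Phi\big((\Phi^{-1}(PD) - \sqrt{\rho}\,z_t)/\sqrt{1-\rho}\big)$ for the systematic factor $z_t$, where low values of $z_t$ represent bad economic states. The following Python sketch does this. The function names, the long-run default probability `pd`, the asset correlation `rho`, and the default rates in the example are hypothetical.

```python
from statistics import NormalDist

_N = NormalDist()  # standard normal distribution


def latent_factor(default_rate, pd, rho):
    """Systematic factor z implied by an observed default rate (Vasicek inversion).

    z = (Phi^-1(pd) - sqrt(1 - rho) * Phi^-1(default_rate)) / sqrt(rho);
    default rates above their typical level map to negative z (bad states).
    """
    if not (0 < default_rate < 1 and 0 < pd < 1 and 0 < rho < 1):
        raise ValueError("rates and correlation must lie strictly in (0, 1)")
    return (_N.inv_cdf(pd) - (1 - rho) ** 0.5 * _N.inv_cdf(default_rate)) / rho ** 0.5


def conditional_default_rate(z, pd, rho):
    """Default rate implied by a factor realisation z in the same model."""
    return _N.cdf((_N.inv_cdf(pd) - rho ** 0.5 * z) / (1 - rho) ** 0.5)


# Hypothetical yearly default rates of a banking system; pd and rho are illustrative.
rates = {2007: 0.012, 2008: 0.025, 2009: 0.031}
for year, dr in rates.items():
    print(year, round(latent_factor(dr, pd=0.015, rho=0.12), 3))
```

Because the inputs are system-wide default rates, such factor estimates could in principle be supplied by a supervisor to all banks, leaving the idiosyncratic component to the institutions.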

The results presented in Table 3 suggest that downturn LGDs following the EBA guidelines fail the 99.9% criterion. The macroeconomic approach adopted by the EBA should therefore be complemented by a latent variable approach based on method A4 as a kind of backstop. Regulatory authorities should provide banks with the input parameters relevant for the latent components, since these represent the overall economic condition. To allow for some degree of model flexibility, institutions should be permitted to estimate their own idiosyncratic factor. The result of the performance test is independent of the idiosyncratic factor model a bank chooses to adopt.

5. Conclusion and discussion

A recently published guideline (EBA, 2019) specifies a macroeconomic approach to estimating downturn LGDs. This approach introduces a methodological mismatch in the downturn definitions and may result in an underestimation of capital requirements. A latent variable approach provides a remedy for these weaknesses. It is able to estimate downturn LGDs at a 99.9% confidence level, whereas a macroeconomic approach with a medium level of conservatism reaches a confidence level of only 99.4%, and only if input floors are imposed. Input floors, however, cannot remedy the conceptual flaws of the macroeconomic downturn approach and create disincentives. A confidence level of 99.9% can be reached if the downturn LGD is determined using an overly conservative macroeconomic approach, e.g., the backstop method introduced in the guideline. However, this method requires a significantly higher level of capital than the latent variable approach, which in turn reduces a bank’s lending capacity and leads to higher borrowing costs. A latent variable approach is efficient in the sense that it ensures a given level of safety, i.e., a confidence level of 99.9%, with lower capital requirements than any other method proposed in the aforementioned guideline. By using a time series of latent variables rather than a single latent variable, a latent variable approach can be applied to estimate market-based LGDs for defaulted exposures with a short workout period as well as workout LGDs for defaulted exposures with a long workout period.

In spite of its strengths, the latent variable approach has a major drawback that may impede its use in regulatory requirements and in banks’ risk management: the concept of a latent variable is less intuitive than a macroeconomic approach and may be perceived as a kind of black box. For this reason, we recommend complementing rather than replacing the macroeconomic approach with the latent variable approach proposed in this paper.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes

1 GCD is a non-profit association owned by its member banks from around the world and active in data-pooling for historical credit data. As of 2020, it has 55 members across Europe, Africa, North America, Asia, and Australia. For details: https://www.globalcreditdata.org

2 Counted in loan-years. Assuming the typical duration to maturity or default time is similar throughout the segments, the composition remains unchanged when counted in number of loans.

References

- Bade, B., Rösch, D., & Scheule, H. (2011). Default and recovery risk dependencies in a simple credit risk model. European Financial Management, 17(1), 120–144. Retrieved from https://doi.org/10.1111/j.1468-036X.2010.00582.x

- Barco, M. (2007). Going downturn. Risk, 20(8), 70–75.

- Basel Committee on Banking Supervision. (2017). Basel III: finalising post-crisis reforms.

- Betz, J., Kellner, R., & Rösch, D. (2018). Systematic effects among loss given defaults and their implications on downturn estimation. European Journal of Operational Research, 271(3), 1113–1144. Retrieved from https://doi.org/10.1016/j.ejor.2018.05.059

- Brumma, N., & Rainone, N. (2020). Downturn LGD study 2020. Global Credit Data.

- Cosimano, T., & Hakura, D. (2011). Bank behavior in response to Basel III: A cross-country analysis. IMF Working Papers, 11(119), 1. Retrieved from https://doi.org/10.5089/9781455262427.001

- Düllmann, K., & Gehde-Trapp, M. (2004). Systematic risk in recovery rates: an empirical analysis of US corporate credit exposures. Deutsche Bundesbank, Discussion Paper Series 2: Banking and Financial Supervision.

- European Banking Authority (EBA). (2018). Final Draft Regulatory Technical Standards: On the specification of the nature, severity and duration of an economic downturn in accordance with Articles 181(3)(a) and 182(4)(a) of Regulation (EU) No 575/2013.

- European Banking Authority (EBA). (2019). Final Report: guidelines for the estimation of LGD appropriate for an economic downturn (‘Downturn LGD estimation’).

- Frye, J. (2000a). Collateral damage: A source of systematic credit risk. Risk, 13(4), 91–94.

- Frye, J. (2000b). Depressing recoveries. Risk, 13(11), 106–111.

- Frye, J. (2013). Loss given default as a function of the default rate. Federal Reserve Bank of Chicago.

- Frye, J., & Jacobs Jr, M. (2012). Credit loss and systematic loss given default. Journal of Credit Risk, 8(1), 1–32. Retrieved from https://doi.org/10.1142/9789814417501_0011

- Giese, G. (2005). The impact of PD/LGD correlations on credit risk capital. Risk, 18(4), 79–84.

- Gordy, M., & Heitfield, E. (2002). Estimating default correlations from short panels of credit rating performance data. [Working paper].

- Hillebrand, M. (2006). Modeling and estimating dependent loss given default. Risk, 19(9), 120–125.

- Koopman, S. J., Lucas, A., & Schwaab, B. (2011). Modeling frailty-correlated defaults using many macroeconomic covariates. Journal of Econometrics, 162(2), 312–325. Retrieved from https://doi.org/10.1016/j.jeconom.2011.02.003

- Mikkelsen, J. G., & Pedersen, J. (2017). A cost-benefit analysis of capital requirements for the Danish economy. Danmarks Nationalbank: Working Paper, 123.

- Miles, D., Yang, J., & Marcheggiano, G. (2013). Optimal bank capital. The Economic Journal, 123(567), 1–37. Retrieved from https://doi.org/10.1111/j.1468-0297.2012.02521.x

- Pykhtin, M. (2003). Unexpected recovery risk. Risk, 16(8), 74–79.

- Pykhtin, M. (2004). Multi-factor adjustment. Risk, 17(3), 85–90.

- Pykhtin, M., & Dev, A. (2002). Analytical approach to credit risk modelling. Risk, 15(3), S26–S32.

- Rainone, N., & Brumma, N. (2019). LGD report 2019 – Large corporate borrowers. Global Credit Data.

- Schönbucher, P. (2001). Factor models: Portfolio credit risks when defaults are correlated. The Journal of Risk Finance, 3(1), 45–56. Retrieved from https://doi.org/10.1108/eb043482

- Tasche, D. (2004). The single risk factor approach to capital charges in case of correlated loss given default rates. [Working paper].

- Thakkar, D., & Crecel, R. (2019). PD benchmarking report 2019. Global Credit Data.

- Van den Heuvel, S. J. (2008). The welfare cost of bank capital requirements. Journal of Monetary Economics, 55(2), 298–320. Retrieved from https://doi.org/10.1016/j.jmoneco.2007.12.001