Abstract

Throughout its history, Operational Research has evolved to include methods, models and algorithms that have been applied to a wide range of contexts. This encyclopedic article consists of two main sections: methods and applications. The first summarises the up-to-date knowledge and provides an overview of the state-of-the-art methods and key developments in the various subdomains of the field. The second offers a wide-ranging list of areas where Operational Research has been applied. The article is meant to be read in a nonlinear fashion and used as a point of reference by a diverse pool of readers: academics, researchers, students, and practitioners. The entries within the methods and applications sections are presented in alphabetical order. The authors dedicate this paper to the 2023 Turkey/Syria earthquake victims. We sincerely hope that advances in OR will play a role towards minimising the pain and suffering caused by this and future catastrophes.

Operations research is neither a method nor a technique; it is or is becoming a science and as such is defined by a combination of the phenomena it studies. Ackoff (Citation1956)

1. IntroductionFootnote1

The year 2024 marks the 75th anniversary of the Journal of the Operational Research Society, formerly known as Operational Research Quarterly. It is the oldest Operational Research (OR) journal worldwide. On this occasion, my colleague Fotios Petropoulos from University of Bath proposed to the editors of the journal to edit an encyclopedic article on the state of the art in OR. Together, we identified the main methodological and application areas to be covered, based on topics included in the major OR journals and conferences. We also identified potential authors who responded enthusiastically and whom we thank wholeheartedly for their contributions.

Modern OR originated in the United Kingdom during World War II out of a need to support the operations of early radar-detecting systems and was later applied to other operations (McCloskey, Citation1987). However, one could argue that it precedes this period in history since it is partly rooted in several mathematical fields such as probability theory and statistics, calculus, and linear algebra, developed much earlier. For example, the Fourier-Motzkin elimination method (Fourier, Citation1826a, Citation1826b) constitutes the main basis of linear programming. Queueing theory, which plays a central role in telecommunications and computing, has existed as a distinct field of study since the early 20th century (Erlang, Citation1909), and other concepts, such as the economic order quantity (Harris, Citation1913), were developed more than a century ago. Interestingly, while many recent advances in OR are rooted in theoretical or algorithmic concepts, we are now witnessing a return to the practical roots of OR through the development of new disciplines such as business analytics.

After the war ended, several industrial applications of OR arose, particularly in the manufacturing and mining sectors which were then going through a renaissance. The transportation sector is without doubt the field that has most benefited from OR, mostly since the 1960s. The aviation, rail, and e-commerce industries could simply not operate at their current scale without the support of massive data analysis and sophisticated optimisation techniques. The application of OR to maritime transportation is more recent, but it is fast gaining in importance. Other areas that are less visible, such as telecommunications, also deeply depend on OR. The success of OR in these fields is partly explained by their network structures which make them amenable to systematic analysis and treatment through mathematical optimisation techniques. In the same vein, OR also plays a major role in various branches of logistics and project management, such as facility location, forecasting, inventory planning, scheduling, and supply chain management.

The public sector and service industries also benefit greatly from OR. Healthcare is the first area that comes to mind because of its very large scale and complexity. Decision making in healthcare is more decentralised than in transportation and manufacturing, for example, and the human issues involved in this sector add a layer of complexity. OR methodologies have also been applied to diverse areas such as education, sports management, natural resources, environment and sustainability, political districting, safety and security, energy, finance and insurance, revenue management, auctions and bidding, and disaster relief, most of which are covered in this article.

Among OR methodologies, mathematical programming occupies a central place. The simplex method for linear programming, conceived by Dantzig in 1947 but apparently first published later (Dantzig, Citation1951), is arguably the single most significant development in this area. Over time, linear programming has branched out into several fields such as nonlinear programming, mixed integer programming, network optimisation, combinatorial optimisation, and stochastic programming. The techniques most frequently employed for the exact solution of mathematical programs are based on branch-and-bound, branch-and-cut, branch-and-price (column generation), and dynamic programming. Game theory and data envelopment analysis are firmly rooted in mathematical programming. Control theory is also part of continuous mathematical optimisation and relies heavily on differential equations.

Complexity theory is fundamental in optimisation. Most problems arising in combinatorial optimisation are NP-hard and typically require the application of heuristics for their solution. Much progress has been made in the past 40 years or so in the development of metaheuristics based on local search, genetic search, and various hybridisation schemes. Many problems in fields such as vehicle routing, location analysis, cutting and packing, set covering, and set partitioning can now be solved to near optimality for realistic sizes by means of modern heuristics. A recent trend is the use of open-source software which not only helps disseminate research results, but also contributes to ensuring their accuracy, reproducibility and adoption.

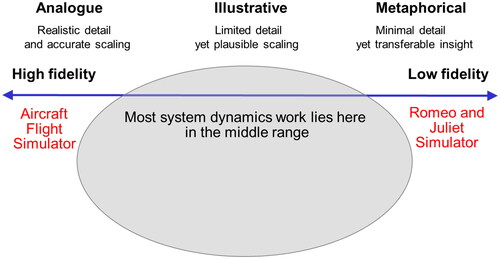

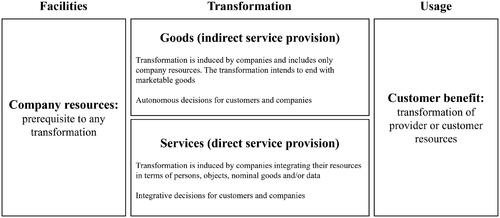

Several modelling paradigms such as systems thinking and systems dynamics approach problems from a high-level perspective, examining the inter-relationships between multiple elements. Complex systems can often be analysed through simulation, which is also commonly used to assess the performance of heuristics. Decision analysis provides a useful framework for structuring and solving complex problems involving soft and hard criteria, behavioural OR, stochasticity, and dynamism. Recently, issues related to ethics and fairness have come to play an increasing role in decision making.

Because the various topics of this review paper are listed in alphabetical order, the subsection on “Artificial intelligence, machine learning and data science” comes first, but this topic constitutes one of the latest developments in the field. It holds great potential for the future and is likely to reshape parts of the OR discipline. Already, machine learning-based heuristics are competitive for the solution of some hard problems.

This paper begins with a quote from Russell L. Ackoff who has been a pioneer of OR. In 1979, he published in this journal two articles (Ackoff, Citation1979a, Citation1979b) that presented a rather pessimistic view of our discipline. The author complained about the lack of communications between academics and practitioners, and about the fact that some OR curricula in universities did not sufficiently prepare students for practice, which is still true to some extent. One of his two articles is entitled “The Future of Operational Research is Past”, which may be perceived as an overreaction to this diagnosis. In my view, the present article provides clear evidence to the contrary. Soon after the publication of the two Ackoff papers, we witnessed the development of micro-computing, the Internet and the World Wide Web. It has become much easier for researchers in our community to access information, software and computing facilities, and for practitioners to access and use our research results. We are now fortunate to have access to sophisticated open-source software, data bases, bibliographic sources, editing and visualisation tools, and communication facilities. Our field is richer than it has ever been, both in terms of theory and applications. It is constantly evolving in interaction with other disciplines, and it is clearly alive and well and has a promising future.

2. Methods

2.1. Artificial intelligence, machine learning, and data scienceFootnote2

Machine learning (ML) comprises techniques for modelling predictive tasks, i.e., tasks that involve the prediction of an unknown quantity from other observed quantities. Ideas of learning in an artificial system and the term machine learning were first discussed in the 1950s (Samuel, Citation1959) and their development and popularity have seen enormous growth over the last two decades in part due to the availability of large-scale datasets and increased computational resources to model them.

Mitchell (Citation1997) provides this concrete definition of machine learning: “A computer program is said to learn from experience E with respect to some class of tasks T, and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E”. The program is a model or a function and its experience E is the type of data it has access to. There are three types of experience: supervised, unsupervised, and reinforcement learning. The performance measure (P) allows for model evaluation and comparison, including model selection.

Supervised learning is an experience where a model aims at predicting one or more unobserved target (dependent) variables given observed input (independent) variables. In other words, a supervised model is a function that maps inputs to outputs. The process of solving a supervised problem involves first learning a model, that is, adjusting its parameters using a training dataset with both input and target variables. The training set is drawn IID (independently and identically distributed) from an underlying distribution over inputs and targets. Once trained, the model can provide target predictions for new unseen samples from the same distribution. The most common tasks in supervised learning are regression (real dependent variable) and classification (categorical dependent variable). Evaluating a supervised system is usually performed using held-out data referred to as the test data, while held-out validation data is used for model development and selection using procedures such as k-fold cross-validation.
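The train-then-evaluate-on-held-out-data procedure described above can be sketched in a few lines. The example below is only an illustration under stated assumptions: the data are synthetic, the model is a one-variable least-squares line, and the helper names (`fit_line`, `mse`, `k_fold_mse`) are hypothetical rather than taken from any library.

```python
import random


def fit_line(xs, ys):
    """Least-squares fit of y = a + b * x for a single input variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def mse(model, xs, ys):
    """Mean squared error of the fitted line on the given samples."""
    a, b = model
    return sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def k_fold_mse(xs, ys, k=5, seed=0):
    """Average held-out MSE over k folds (k-fold cross-validation)."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k disjoint index sets
    scores = []
    for held_out in folds:
        held = set(held_out)
        train = [i for i in idx if i not in held]
        # Learn parameters on the training part only ...
        model = fit_line([xs[i] for i in train], [ys[i] for i in train])
        # ... and measure performance on the held-out part.
        scores.append(mse(model, [xs[i] for i in held_out],
                          [ys[i] for i in held_out]))
    return sum(scores) / k


# Synthetic regression data (an assumption for illustration):
# y = 2 + 3x plus Gaussian noise with standard deviation 0.5.
rng = random.Random(42)
xs = [i / 10 for i in range(100)]
ys = [2.0 + 3.0 * x + rng.gauss(0, 0.5) for x in xs]
cv_error = k_fold_mse(xs, ys, k=5)
```

Here the cross-validated error estimates how the model would perform on new samples from the same distribution; comparing `cv_error` across candidate models is one simple form of model selection.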

Supervised models can be dichotomised into linear and nonlinear models. Linear models perform a linear mapping from inputs to outputs (e.g., linear regression). Machine learning mostly investigates nonlinear supervised models including deep neural network (DNN) models (Goodfellow et al., Citation2016). DNNs are composed of a succession of parametrised nonlinear transformations called layers and each layer contains a set of transformations called neurons. Layers successively transform an input datum into a target. The parameters of the layers are adjusted iteratively to obtain better predictions by gradient descent, with the required gradients computed by a procedure called backpropagation (Goodfellow et al., Citation2016, §6.5). DNNs are state-of-the-art methods for many large-scale unstructured datasets across domains (see also §3.19). DNNs can be adapted to different sizes of inputs and targets as well as variable types. They can also be specialised for specific types of data. Recurrent neural networks (RNNs) are auto-regressive models for sequential data (Rumelhart et al., Citation1986). The sequential data are tokenised and an RNN transforms each token sequentially along with a transformation of the previous tokens. Convolutional neural networks (CNNs) are specialised networks for modelling data that is arranged on a grid (e.g., an image; LeCun, Citation1989). Their layers contain a convolution operation between an input and a parameterised filter followed by a nonlinear transformation, and a pooling operation. Each layer processes data locally and so requires fewer parameters compared to vanilla DNNs. As a result, CNNs can model higher-dimensional data. Graph neural networks (GNNs) are specialised architectures for modelling graph data (e.g., a social network; Scarselli et al., Citation2009). In GNNs, the data are transformed by following the topology of the graph.

Last, attention is a mechanism that treats its inputs (tokens) as unordered and combines them dynamically based on their values. Transformer models use successions of attention and feed-forward layers to model sequential input and output data (Vaswani et al., Citation2017). Transformers are more efficient to train than RNNs on very large-scale datasets and can be trained on internet-scale data given enormous computational power. The availability of such broad datasets, especially in the text and image domains, has given rise to a class of very-large-scale models (also referred to as foundation models) that display an ability to adapt to and obtain high performance across a diversity of downstream supervised tasks (Bommasani et al., Citation2021).
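To make the idea of attention concrete, the following is a minimal sketch of scaled dot-product self-attention in the spirit of Vaswani et al. (Citation2017). It makes a strong simplifying assumption: the query, key, and value maps are the identity, whereas a real Transformer layer learns these projections. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    Each output is a weighted average of all tokens, with weights that
    depend on the token values themselves (assumption: no learned
    query/key/value matrices, unlike an actual Transformer layer).
    """
    d = len(tokens[0])
    outputs = []
    for q in tokens:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in tokens]
        weights = softmax(scores)
        # Combine the tokens dynamically according to the weights.
        outputs.append([sum(w * v[j] for w, v in zip(weights, tokens))
                        for j in range(d)])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = self_attention(tokens)
```

Reordering the input tokens simply reorders the outputs in the same way, which is the sense in which attention treats its inputs as unordered; Transformers therefore typically add positional information to the tokens when order matters.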

Neural networks currently outperform other methods when learning from unstructured data (e.g., images and text). For tabular data, that is, data that are naturally encoded in a table and that have heterogeneous features (Grinsztajn et al., Citation2022), the best-performing methods use ideas first proposed in tree-based classifiers, bagging, and boosting. They include random forests (Breiman, Citation2001) and XGBoost (Chen & Guestrin, Citation2016), both of which scale to large datasets, as well as kernel methods including support vector machines (SVMs; see, e.g., Schölkopf et al., Citation2018) and probabilistic Gaussian processes (GPs; see, e.g., Rasmussen & Williams, Citation2005). These methods are used across regression and classification tasks.

In unsupervised learning, the second type of experience, the data consist of independent variables (features or covariates) alone. The aim of unsupervised learning is to model the structure of the data to better understand their properties. As a result, evaluating an unsupervised model is often task- and application-dependent (Murphy, Citation2022, §1.3.4). The prototypical unsupervised-learning task is clustering. It involves learning a function that groups similar data together according to a similarity measure and desiderata often expressed as an objective function. Several standard algorithms exist, divided into hierarchical and non-hierarchical methods. The former use the similarity between all pairs of data and find a hierarchy of clustering solutions with different numbers of clusters using either a bottom-up or a top-down approach. Agglomerative clustering is a standard hierarchical approach. Non-hierarchical methods tend to be more computationally efficient in terms of dataset size. For example, K-means clustering is a well-known non-hierarchical method that finds a single solution using K clusters (MacQueen, Citation1967). Other unsupervised learning tasks include dimensionality reduction, for example for visualisation or to prepare data for further analysis. Density modelling is another unsupervised task where a probabilistic model learns to assign a probability to each datum (Murphy, Citation2022, §1.3). Probabilistic models can be used to learn the hidden structure in large quantities of data (e.g., Hoffman et al., Citation2013). Further, probabilistic models are also used to generate high-dimensional data (e.g., images of human faces or English text) with high fidelity (Karras et al., Citation2021) and are often referred to in this context as generative models. Large Language Models are examples of such generative models (Bommasani et al., Citation2021).
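As an illustration of non-hierarchical clustering, the sketch below implements the usual alternating scheme for K-means (often called Lloyd's algorithm): assign each datum to its nearest centroid, then move each centroid to the mean of its members. The data, the explicit initial centroids, and the function names are assumptions made for this example; the result of K-means depends on the initialisation, so practical implementations typically use several random restarts or a more careful seeding.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(points, initial_centroids, max_iters=100):
    """K-means clustering starting from the given initial centroids."""
    centroids = [tuple(c) for c in initial_centroids]
    assignment = None
    for _ in range(max_iters):
        # Assignment step: nearest centroid for every point.
        new_assignment = [
            min(range(len(centroids)),
                key=lambda c: squared_distance(pt, centroids[c]))
            for pt in points
        ]
        if new_assignment == assignment:
            break  # no point changed cluster: converged
        assignment = new_assignment
        # Update step: each centroid becomes the mean of its members.
        for c in range(len(centroids)):
            members = [pt for pt, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(col) / len(members)
                                     for col in zip(*members))
    return centroids, assignment


# Two well-separated groups of points (synthetic, for illustration).
points = [(0.0, 0.0), (0.2, 1.0), (1.1, 0.1), (0.9, 1.2),
          (10.0, 10.3), (10.4, 11.0), (11.2, 9.9), (10.8, 11.1)]
centroids, labels = k_means(points, [points[0], points[-1]])
```

With one initial centroid taken from each group, the procedure stabilises after a single update and the two centroids sit at the means of the two groups.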

Reinforcement learning (RL) is the third type of experience. RL models collect their own data by executing actions in their environment to maximise their reward. RL is a sequential decision-making task and is formalised using Markov decision processes (MDPs) (Sutton & Barto, Citation2018, §3.8). An MDP encodes a set of states, a set of available actions, a distribution over next states given the current state and action, a reward function, and a discount factor. Partially observable MDPs (or POMDPs) extend the formalism to environments where the exact current state is unknown (Kaelbling et al., Citation1998). In RL, an agent’s objective is to learn a policy, a distribution over actions for each state in an environment. Tasks are defined by rewards attached to different states. Exact and approximate methods exist for solving RL problems. Whereas exact solutions are appropriate for smaller tabular problems only, deep neural networks are widely used for solving larger-scale problems that require approximate solutions, yielding a set of techniques known as deep reinforcement learning (Mnih et al., Citation2015). An RL agent can also learn to imitate an expert either by learning a mapping from states/observations to actions as in supervised learning (a technique known as imitation learning; for a survey, see Hussein et al., Citation2017) or by trying to learn the expert’s reward function (inverse reinforcement learning; Russell, Citation1998).
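For small tabular problems, an exact solution can be computed when the MDP is fully known. The sketch below applies value iteration, a standard dynamic-programming method, to a hypothetical two-state MDP; the states, actions, probabilities, and rewards are invented purely for illustration, and the function name is an assumption. Unlike RL proper, this example assumes the transition distribution and reward function are given rather than learned from interaction.

```python
def value_iteration(transitions, gamma, tol=1e-8):
    """Value iteration for a finite MDP.

    `transitions[state][action]` is a list of
    (probability, next_state, reward) triples; `gamma` is the discount
    factor. Values are updated in place until the largest change in a
    sweep falls below `tol`.
    """
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, actions in transitions.items():
            # Bellman optimality update: best expected discounted return.
            best = max(
                sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)
                for outcomes in actions.values()
            )
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values (a deterministic
    # policy, i.e. a special case of a distribution over actions).
    policy = {
        s: max(actions,
               key=lambda a: sum(p * (r + gamma * values[s2])
                                 for p, s2, r in actions[a]))
        for s, actions in transitions.items()
    }
    return values, policy


# Hypothetical MDP: staying in state 1 earns a reward of 1 per step;
# "move" switches state with probability 0.8 and otherwise has no effect.
transitions = {
    0: {"stay": [(1.0, 0, 0.0)],
        "move": [(0.8, 1, 0.0), (0.2, 0, 0.0)]},
    1: {"stay": [(1.0, 1, 1.0)],
        "move": [(0.8, 0, 0.0), (0.2, 1, 0.0)]},
}
values, policy = value_iteration(transitions, gamma=0.9)
```

In this toy problem the optimal behaviour is to move out of state 0 and then remain in state 1, so the value of state 1 approaches 1 / (1 - 0.9) = 10 and the value of state 0 solves v = 0.9 (0.8 × 10 + 0.2 v), i.e. v = 7.2 / 0.82.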

In addition to learning models for solving prediction tasks using one of the three experiences above, machine learning also studies methods for enabling the reuse of information learned from one or multiple datasets and environments to other similar ones. Representation learning studies how to learn such reusable information and it can use both supervised and unsupervised experiences (Murphy, Citation2023, §32). When using a deep learning model, a representation is obtained after one or more layer transformations of the data. Representation learning is used in a variety of situations including for transfer learning tasks, where a trained model is reused to solve a different supervised task (for a survey, see Zhuang et al., Citation2021).

In the last decade, machine learning models have achieved high performance on a variety of tasks including perceptual ones (e.g., recognising objects in images and words from speech) as well as natural language processing ones, thereby becoming a core component of artificial intelligence (AI) methods. The goal of AI methods is to develop intelligent systems. Some of these advances shine a bright light on the ethical aspects of machine learning techniques and are active areas of study (see, e.g., Dignum, Citation2019; Barocas et al., Citation2019). Another area of active study is explainability (Phillips et al., Citation2021). Some of the most effective ML tools make predictions and recommendations that are hard to explain to users (for example when neural networks are employed). Clearly, lack of explainability slows down ML use in those contexts where decisions made due to those predictions and recommendations are life changing and involve a human in the loop: healthcare (applying a treatment), finance (refusing a mortgage), or justice (granting parole), to mention a few. So, explainability is currently one of the most crucial challenges for ML and AI and, at the same time, a tremendous opportunity for their wider applicability.

Further, advances in machine learning alongside statistics, data management, and data processing, as well as the wider availability of datasets from a variety of domains have led to the popularisation and development of data science (DS), a discipline whose goal is to extract insights and knowledge from these data. DS uses statistics and machine-learning techniques for inference and prediction, but it also aims at enabling and systematising the analysis of large quantities of data. As such, it includes components of data management, visualisation, as well as the design of (efficient) data processing algorithms (Grus, Citation2019).

2.1.1. Resources

Murphy (Citation2022) provides a thorough introduction to the field following a probabilistic approach and its sequel (Murphy, Citation2023) introduces advanced topics. Goodfellow et al. (Citation2016) provide a self-contained introduction to the field of deep learning (the field evolves rapidly and more advanced topics are covered through recent papers and in Murphy, Citation2023). Open-source software packages in Python and other languages are essential. They include data-wrangling libraries such as pandas (McKinney, Citation2010) and plotting ones such as matplotlib (Hunter, Citation2007). The library scikit-learn (Pedregosa et al., Citation2011) in Python offers an extensive API that includes data processing, a toolbox of standard supervised and unsupervised models, and evaluation routines. For deep learning, PyTorch (Paszke et al., Citation2019) and TensorFlow (Abadi et al., Citation2015) are the standard.

2.1.2. Learning for combinatorial optimisation

The impressive success of machine learning in the last decade made it natural to explore its use in many scientific disciplines, such as drug discovery and material sciences. Combinatorial optimisation (CO; §2.4) is no exception to this trend and we have witnessed an intense exploration (or, better, revival) of the use of machine learning for CO. Two lines of work have strongly emerged. On the one side, ML has been used to learn crucial decisions within CO algorithms and solvers. This includes imitating an algorithmic expert that is computationally expensive like in the case of strong branching for branch and bound, the single application that has attracted the largest amount of interest (Lodi & Zarpellon, Citation2017; Gasse et al., Citation2019). The interested reader is referred to two recent surveys (Bengio et al., Citation2021; Cappart et al., Citation2021), the latter highlighting the relevance of GNNs for effective CO representation. On the other side, ML has been used end to end, i.e., for solving CO problems directly or leveraging ML to devise hybrid methods for CO. The area is surveyed in Kotary et al. (Citation2021).

2.2. Behavioural ORFootnote3

Behavioural OR (BOR) is concerned with the study of human behaviour in OR-supported settings. Specifically, BOR examines how the behaviour of individuals affects, or is affected by, an OR-supported interventionFootnote4. The individuals of interest are those who, acting in isolation or as part of a team, design, implement and engage with OR in practice. These individuals include OR practitioners playing specific intervention roles (e.g., modellers, facilitators, consultants), and other individuals with varying interests and stakes in the intervention (e.g., users, clients, domain experts, sponsors).

A concern with the behavioural aspects of the OR profession can be traced back to past debates in the 1960s, 1970s and 1980s (Churchman, Citation1970; Dutton & Walton, Citation1964; Jackson et al., Citation1989). Although these debates dwindled down in subsequent years, the emergence of BOR as a field of study represents a return to these earlier concerns (Franco & Hämäläinen, Citation2016; Hämäläinen et al., Citation2013). What motivates this resurgence is the recognition that the successful deployment of OR in practice relies heavily on our understanding of human behaviour. For example, overconfidence, competing interests, and the willingness to expend effort in searching, sharing, and processing information are three behavioural issues that can negatively affect the success of OR activities. Attention to behavioural issues has been central in disciplines such as economics, psychology and sociology for decades, and BOR studies draw heavily from these reference disciplines (Franco et al., Citation2021).

It is important to distinguish between the specific focus of BOR and the broader focus of behavioural modelling. The creation of models that capture human behaviour has a long tradition within OR, but it is not necessarily concerned with the study of human behaviour in OR-supported settings. For example, in the last 20 years operational researchers have produced an increasing number of robust analytical models that describe behaviour in, and predict its impact on, operations management settings (Cui & Wu, Citation2018; Donohue et al., Citation2020; Loch & Wu, Citation2007). Operational researchers also have produced simulation models that capture human behaviour within a system with different levels of complexity. For example, systems dynamics models incorporate high-level variables representing average behaviour (Morecroft, Citation2015; Sterman, Citation2000, §2.22), and discrete event simulation models capture human processes controlled by simple behavioural rules (Brailsford & Schmidt, Citation2003; Robinson, Citation2014, §2.19). More complex agent-based simulation models represent behaviour as emergent from the interactions of agents with particular behavioural attributes (Sonnessa et al., Citation2017; Utomo et al., Citation2018, §2.19). Overall, behavioural modelling within the OR field is concerned with examining human behaviour in a system of interest in order to improve that systemFootnote5. In contrast, BOR takes an OR-supported intervention as the core system of interest where human behaviour is examined. The ultimate goal of BOR is to generate an improved understanding of the behavioural dimension of OR practice, and use this understanding to design and implement better OR-supported interventions.

Another important distinction worth stating is that between BOR and Soft OR. At first glance, this distinction may seem unnecessary as BOR is a field of study within OR, while Soft OR refers to a specific family of problem structuring approaches (§2.20). Soft OR approaches have been developed to help groups reach agreements on problem structure and, often, appropriate responses to a problem of concern (Franco & Rouwette, Citation2022; Rosenhead & Mingers, Citation2001). However, while Soft OR intervention design and implementation typically require the consideration of behavioural issues, this is not the same as choosing human behaviour in a Soft OR intervention context as the unit of analysis. Of course, a study with such a focus would certainly fall within the BOR remit (e.g., Tavella et al., Citation2021). But note that BOR is also concerned with the study of human behaviour in other OR-supported settings, such as those involving the use of ‘hard’ and ‘mixed-method’ OR approaches.

Studies of behaviour in OR-supported settings assume implicitly or explicitly that human behaviour is either influenced by cognitive and external factors, or is in itself an influencing factor (Franco et al., Citation2021). In the first case, observed individual and collective action is taken to be guided by cognitive structures (e.g., personality traits, cognitive styles) manifested during OR-related activity – behaviour is influenced. In contrast, the second case assumes that individuals and collectives are responsible for determining how OR-related activity will unfold – behaviour is influencing. This raises the practical possibility that the same OR methodology, technique, or model could be used in distinctive ways by various individuals or groups according to their cognitive orientations, goals and interests (Franco, Citation2013). Whilst behaviour in practice is likely to lie somewhere between the influenced and influencing assumptions, BOR studies tend to foreground one of the extremes as the focus, while backgrounding the other.

BOR studies can adopt three different research methodologies to examine behaviour: variance, process, and modelling. A variance methodology uses variables that represent the important aspects or attributes of the OR-supported activity being examined. Variance explanations of behavioural-related phenomena take the form of causal statements captured in a theoretically-informed research model that incorporates these variables (e.g., A causes B, which causes C). The research model is then tested with data generated by the activity, and the research findings are assessed in terms of their generality (Poole, Citation2004). Adopting a variance research methodology typically requires the implementation of experimental, quasi-experimental, or survey research designsFootnote6. This involves careful selection of independent variables, which might be either manipulated or left untreated, and of dependent variables that act as surrogates for specific behaviours. Once information about all variables is collected, data is quantitatively analysed using a wide range of variance-based methods (e.g., analysis of variance, regression, structural equation modelling).

Behavioural studies that use a variance research methodology can produce a good picture of the generative mechanisms underpinning behavioural processes if they test hypotheses about those mechanisms. For example, variance studies in BOR have examined the impact of individual differences in cognitive motivation and cognitive style on the conduct of OR-supported activity (Fasolo & Bana e Costa, Citation2014; Franco et al., Citation2016b; Lu et al., Citation2001). There is also a long tradition of testing the behavioural effects of reconfiguring different aspects of OR-supported activity such as varying model or information displays (Bell & O’Keefe, Citation1995; Gettinger et al., Citation2013), and preference elicitation procedures (Cavallo et al., Citation2019; Hämäläinen & Lahtinen, Citation2016; Pöyhönen et al., Citation2001; von Nitzsch & Weber, Citation1993).

A process methodology is used to examine OR-supported activity as a series of events that bring about or lead to some behaviour-related outcome. Specifically, it considers as the unit of analysis an evolving individual or group whose behaviour is led by, or leading, the occurrence of events (Poole, Citation2004). Process explanations take the form of theoretical narratives that account for how event dynamics lead to a final outcome (Poole, Citation2007). These narratives are often derived from observation, but it is also possible to use an established narrative (e.g., a theory) to guide observation that further specifies the narrative.

Diverse and eclectic research designs are used to implement a process research methodology. Central to these designs is the task of identifying or reconstructing the process through the analysis of events taking place over time. For example, there is an important stream of BOR studies that examines the process of building models by experts and novices (Tako, Citation2015; Tako & Robinson, Citation2010; Waisel et al., Citation2008; Willemain, Citation1995; Willemain & Powell, Citation2007). There is also an increasing interest in using process methodologies to take a closer look at actual behaviour in OR-supported settings before, during and after OR-related activity is undertaken (Franco & Greiffenhagen, Citation2018; Käki et al., Citation2019; Velez-Castiblanco et al., Citation2016; White et al., Citation2016).

The variance and process approaches may seem opposite to each other, but they should instead be seen as complementary (Franco et al., Citation2021; Van de Ven & Poole, Citation2005). BOR studies using a variance research methodology can explore and test the mechanisms that drive process explanations of behaviour, while BOR studies adopting a process research methodology can explore and test the narratives that ground variance explanations of behaviour. One way of combining a variance and process approach within a single BOR study is by adopting modelling as a research methodology. A modelling approach would create models that capture the mechanisms that generate a process of interest such as, for example, trust in an OR-derived solution, and the model can be run to generate the characteristics of that process. Model parameters and structure can then be varied systematically to enable variance-based comparisons of trust levels. Furthermore, the trajectory of trust levels over time can be used to gain insights into the nature of the trust development process. As already mentioned, there is a long behavioural modelling tradition within OR but, as far as we know, its potential as a research methodology tool to specifically examine behaviour in OR-supported settings is yet to be realised.

In sum, the variance, process and modelling methodologies offer rich possibilities for the study of human behaviour in OR-supported settings. Which is best for a particular study will depend on the types of question being addressed by BOR researchers, their assumptions about human behaviour, and the data they have access to. Ultimately, a thorough understanding of behaviour in OR-supported settings is likely to require all three research methodologies.

For a detailed review of BOR studies the reader is referred to Franco et al. (Citation2021). A review of behavioural studies in the context of OR in health has been written by Kunc et al. (Citation2020). There are also two collections edited by Kunc et al. (Citation2016) and White et al. (Citation2020). The European Journal of Operational Research published a feature cluster on BOR edited by Franco and Hämäläinen (Citation2016a). Finally, BOR-related news and events can be found on the sites of the European Working Group on Behavioural ORFootnote7, and the UK BOR Special Interest GroupFootnote8.

2.3. Business analyticsFootnote9

Business Analytics has its origins in practice, rather than theory, as illustrated by some of the earliest publications on the subject (e.g., Kohavi et al., Citation2002). Senior executives began to realise the importance of analytics in the first decade of the new millennium because of the ready availability of large amounts of data, the maturity of business performance management, the emergence of self-service analytics and business intelligence, and the declining cost of computing power, data storage and bandwidth (Acito & Khatri, Citation2014).

Davenport and Harris (Citation2007) gave examples of companies becoming ‘analytical competitors’ by using analytics to support distinctive organisational capabilities. To achieve this level of maturity, it was argued that analytics needs to become a strategic competency. In the 1990s, Fildes and Ranyard (Citation1997) reported on the closure or dispersal of Operational Research groups. Davenport et al. (Citation2010) reflected a reversal of that trend, by focusing on how analytical talent can be organised as an internal resource. They suggested that there are four categories of people to be considered when finding, developing and managing analysts: champions, professionals, semi-professionals and amateurs. In 2012/13, the Institute for Operations Research and the Management Sciences (INFORMS) introduced the Certified Analytics Professional program and examination. This covers the broad spectrum of skills required of analytics professionals, including business problem framing, analytics problem framing, data (handling), methodology selection, model building, deployment and lifecycle management (INFORMS, Citation2022).

The development of talent is just one of the prerequisites for Business Analytics to create value. Vidgen et al. (Citation2017) recommended ‘coevolutionary change’, in which organisations align their analytics strategy with their strategies for Information and Communications Technology, human resources and the whole business. This helps to ensure that the necessary data assets are available, the right culture is developed to build data and analytics skills, and that there is alignment with the business strategy for value creation. Hindle and Vidgen (Citation2018) proposed a Business Analytics Methodology based on four activities, namely problem situation structuring, business model mapping, business analytics leverage and analytics implementation. They advocated a soft OR approach, Soft Systems Methodology (Checkland & Poulter, Citation2006), to support structuring and mapping activities.

Many definitions of Business Analytics have been proposed; for a review of early definitions, see Holsapple et al. (Citation2014). According to Davenport (Citation2013), “By analytics we mean the extensive use of data, statistical and quantitative analysis, explanatory and predictive models, and fact-based management to drive decisions and actions” (p. 7). Mortenson et al. (Citation2015) suggested that analytics is at the intersection of quantitative methods, technologies and decision making. Rose (Citation2016) considered analytics as the union of Data Science (which is data centric) and Operational Research (which is problem centric). Power et al. (Citation2018) proposed the following definition: “Business Analytics is a systematic thinking process that applies qualitative, quantitative and statistical computational tools and methods to analyse data, gain insights, inform and support decision-making”. Delen and Ram (Citation2018) pointed out that, although analytics includes analysis, it also involves synthesis and subsequent implementation. These broad perspectives, emphasising synthesis as well as analysis, and qualitative as well as quantitative approaches, are consistent with earlier writings on the use of a broad range of methods in Management Science (e.g., Mingers & Brocklesby, Citation1997; Pidd, Citation2009).

Business Analytics can be viewed from different orientations. From a methodological viewpoint, the subject covers descriptive, predictive and prescriptive methods (Lustig et al., Citation2010). These three categories are sometimes extended to four, with a distinction being drawn between ‘descriptive’ and ‘diagnostic’ analytics, following the Gartner analytics ascendancy model (Maoz, Citation2013). Lepenioti et al. (Citation2020) argue that it is preferable to maintain the threefold categorisation to ensure consistency, with each category addressing both ‘What?’ and ‘Why?’ questions (Descriptive: ‘What happened?’, ‘Why did it happen?’; Predictive: ‘What will happen?’, ‘Why will it happen?’; Prescriptive: ‘What should I do to make it happen?’, ‘Why should I make it happen?’). For detailed literature reviews on descriptive, predictive and prescriptive analytics, the reader is directed to Duan and Xiong (Citation2015), Lu et al. (Citation2017), and Lepenioti et al. (Citation2020), respectively.

From a technological viewpoint, Business Analytics is facilitated by the integration of transactional data with big data streaming from social media platforms and the Internet of Things into a unified analytics system (Shi & Wang, Citation2018). These authors suggest that this integration can be achieved in two stages, starting with integration of traditional Enterprise Resource Planning (ERP) and big data, and proceeding to integration of big-data ERP with Business Analytics. Ruivo et al. (Citation2020) reported that analytics ranked second in extended ERP capabilities (behind collaboration) according to the views of 20 experts engaged in a Delphi study. Romero and Abad (Citation2022) suggested that cloud-based big data analytics software will not provide competitive advantage to firms that have not installed a large ERP system, although it will ensure that they do not lag further behind their sector-leading competitors.

From an ethical viewpoint, Business Analytics faces a number of challenges. Davenport et al. (Citation2010) recognised that issues of data privacy can be difficult to address, especially if an organisation operates in a wide range of territories or industries. Ram Mohan Rao et al. (Citation2018) summarised major privacy threats in data analytics, namely surveillance, disclosure, discrimination, and personal embarrassment and abuse, and reviewed privacy preservation methods, including randomisation and cryptographic techniques. A further ethical issue is that AI algorithms are likely to replicate and reinforce existing social biases (O’Neil, Citation2016). Such algorithmic bias is said to occur when the outputs of an algorithm benefit or disadvantage certain individuals or groups more than others without a justified reason. Kordzadeh and Ghasemaghaei (Citation2022) reviewed the literature on algorithmic bias and showed that most studies had examined the issue from a conceptual standpoint, with only a limited number of empirical studies. Similarly, Vidgen et al. (Citation2020) reviewed papers on ethics in Business Analytics and found that most were at the level of guiding principles and frameworks, with little of direct applicability for the practitioner. Their case study demonstrated how ethical principles (utility, rights, justice, common good and virtue) can be embedded in analytics development. For further discussions on ethics and OR, the reader is referred to Ormerod and Ulrich (Citation2013), Le Menestrel and Van Wassenhove (Citation2004), and Mingers (Citation2011a) but also §3.8.

Analytics maturity models have been developed to describe, explain and evaluate the development of analytics in an organisation. Król and Zdonek (Citation2020) reviewed 11 maturity models and assessed them in terms of the number of assessment dimensions, scoring mechanism, number of maturity levels, and the public availability of the methodology. They found that the most common assessment dimensions were technical infrastructure, analytics culture and human resources, including staff’s analytics competencies. Lismont et al. (Citation2017) undertook a survey of companies, based on the DELTA maturity model (Davenport et al., Citation2010) of data, enterprise, leadership, targets and analysts. They identified four analytics maturity levels from their survey. The most advanced companies tended to use a wider variety of analytics techniques and applications, to organise analytics more holistically, and to have a more mature data governance policy.

A crucial empirical question is whether Business Analytics adds value to an organisation. An early study on the effect of Business Analytics on supply chain performance was conducted by Trkman et al. (Citation2010). They examined over 300 companies, showing a statistically significant relationship between self-assessed analytical capabilities and performance. Oesterreich et al. (Citation2022) conducted a meta-analysis of 125 firm-level studies, spanning ten years of research in 26 countries. They found evidence of Business Analytics having a positive impact on operational, financial and market performance. They also found that human resources, management capabilities and organisational culture were major determinants of value creation, whereas technological factors were less important.

2.4. Combinatorial optimisationFootnote10

A Combinatorial Optimisation (CO) problem consists of searching for the optimal element in a finite collection of elements. More formally, given a set of elements and a family of its subsets, each defining a feasible solution and having an associated value, a CO problem is to find a subset having the minimum (or, alternatively, the maximum) value. The subsets may be proper, as in the knapsack problem, or represented by permutations, as in the assignment problem (see below). Typically, the feasible solutions are not explicitly listed, but are described in a concise manner (such as a set of equalities and inequalities, or a graph structure) and their number is huge, so scanning all feasible solutions to select the optimal one is intractable. A CO problem can usually be modelled as an Integer Program (IP, see also §2.15) with linear or nonlinear objective function and constraints, in which the variables can take a finite number of integer values.

Consider for example the problem of assigning n tasks to n agents, knowing the time that each agent needs to complete each task, with the objective of finding a solution that minimises the overall time needed to complete all tasks (Assignment Problem, AP). The solution could obviously be found by enumerating all permutations of the integers $1, 2, \dots, n$ and selecting the best one. However, the number of permutations, $n!$, is so huge that such an approach is ruled out even for small-size problem instances: for n = 30, we have

$30! \approx 2.65 \times 10^{32}$

and the fastest supercomputer on earth would need millions of years to scan all solutions. The challenge is thus to find more efficient methods. For example, one of the most famous CO algorithms (the Hungarian algorithm) can solve assignment problem instances with millions of variables in a few seconds on a standard PC.

The algorithm mentioned above can be implemented so as to solve any AP instance in a time of order $n^3$, i.e., in a time bounded by a polynomial function of the input size. Unfortunately, algorithms with this property are known for relatively few CO problems, while for most of them ($\mathcal{NP}$-hard problems) the best known algorithms can take, in the worst case, a time that grows exponentially in the size of the instance. In addition, Complexity theory (see also §2.5) suggests that the existence of polynomial-time algorithms for such problems is unlikely. On the other hand, CO problems arise in many industrial sectors (manufacturing, crew scheduling, telecommunication, distribution, to mention a few) and hence there is a pressing practical need to obtain good quality solutions, especially to large-size instances, in reasonable times.
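The factorial growth of the enumeration approach described above can be made concrete with a minimal Python sketch. It solves a tiny AP instance by scanning all permutations and then prints the number of permutations for n = 30. The 3×3 time matrix and the function name are illustrative assumptions, and the objective is taken to be the sum of the assigned times; this is not the Hungarian algorithm.

```python
from itertools import permutations
from math import factorial


def brute_force_assignment(times):
    """Solve a small assignment problem by full enumeration.

    times[i][j] is the time agent i needs for task j (hypothetical data).
    Returns (best_total_time, best_permutation), where best_permutation[i]
    is the task given to agent i.
    """
    n = len(times)
    best_value, best_perm = None, None
    for perm in permutations(range(n)):  # n! candidate solutions
        value = sum(times[i][perm[i]] for i in range(n))
        if best_value is None or value < best_value:
            best_value, best_perm = value, perm
    return best_value, best_perm


example_times = [
    [4, 1, 3],
    [2, 0, 5],
    [3, 2, 2],
]
best_value, best_perm = brute_force_assignment(example_times)
print(best_value, best_perm)  # 3! = 6 permutations are scanned here
print(factorial(30))          # number of permutations to scan for n = 30
```

With n = 3 only six permutations are evaluated; the second printed number has 33 digits, which is why enumeration stops being an option long before instances reach practical size.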

2.4.1. Origins

Many problems arising on graphs and networks (see §2.12) belong to CO (the AP discussed above can be described as that of finding a minimum weight perfect matching in a bipartite graph), and hence the origins of CO date back to the eighteenth century. In the following, we narrow our focus to modern CO (see Schrijver, Citation2005). Its roots can be found in the first decades of the past century, when Central European mathematicians developed seminal studies on matching problems (König, Citation1916), paths (Menger, Citation1927), and Shortest Spanning Trees (SST) (Jarník, Citation1930; Borůvka, Citation1926, results independently rediscovered by Prim, Citation1957 and Kruskal, Citation1957). The Fifties produced major results on the AP (Kuhn, Citation1955; Citation1956, on the basis of the results by König, Citation1916 and Egerváry, Citation1931, also see Martello, Citation2010), the Travelling Salesman Problem (Dantzig et al., Citation1954), and Network Flows (Ford & Fulkerson, Citation1962), as well as fundamental studies on basic methodologies: dynamic programming (DP; Bellman, Citation1957, see §2.9), cutting planes (Gomory, Citation1958, see §2.15), and branch-and-bound (Land & Doig, Citation1960).

2.4.2. Problems and complexity

The most important CO problems for which polynomial algorithms are known are the basic graph-theory problems mentioned in the previous section. Other important problems, which are relevant both from the theoretical point of view and from that of real-world applications, are instead $\mathcal{NP}$-hard. The main $\mathcal{NP}$-hard CO problems arise in the following areas.

Scheduling. Given a set of tasks which must be processed on a set of processors, a scheduling problem asks to find a processing schedule that satisfies prescribed conditions and minimises (or maximises) an objective function, frequently related to the time needed to complete all tasks. This huge area, which includes literally hundreds of problems and variants (mostly $\mathcal{NP}$-hard), is also discussed in §3.27.

Travelling Salesman Problem (TSP). Given a weighted (directed or undirected) graph, the problem is to find a circuit that visits each vertex exactly once (Hamiltonian tour) and has minimum total weight. This is one of the most intensively studied problems of CO, and is treated in detail in §2.12.

Vehicle Routing Problems (VRP). A VRP is a generalisation of the TSP which consists of finding a set of routes for a fleet of vehicles, based at one or more depots, to deliver goods to a given set of customers by satisfying a set of conditions and minimising the overall transportation cost.

Facility Location. These problems require finding the best placement of facilities on the basis of geographical demands, installation costs, and transportation costs, so as to satisfy a set of conditions and to minimise the total cost (see §3.13 for a detailed treatment).

Steiner Trees. Given a weighted graph and a subset S of vertices, the problem is to find an SST connecting all vertices in S (possibly containing additional vertices). These problems, which generalise both the shortest path problem and the SST, are treated in detail in §2.12.

Set Covering. Given a set of elements and a collection of its subsets, each having a cost, we want to find the least cost sub-collection whose union includes (covers) all the elements.

Maximum Clique (MC). A clique is a complete subgraph of a graph (i.e., it is defined by a subset of vertices all adjacent to each other). Given a graph, the MC problem is to find a clique of maximum cardinality (or, if the graph is weighted, a clique of maximum weight). We refer the reader to §2.12 for a detailed analysis.

Cutting and Packing (C&P). Given a set of “small” items, and a set of “large” containers, a problem in this area asks for an optimal arrangement of the items into the containers. Items and containers can be in one dimension (Knapsack Problems (KP), Bin Packing problems) or in more, usually two or three, dimensions (C&P). See §3.3 for more details.

Quadratic Variants of CO problems. A currently hot research area concerns CO problems whose “normal” linear objective function is replaced by a quadratic one. This greatly increases difficulty: in most cases problems which, in their linear formulation, can be solved in polynomial time (e.g., the AP) or in pseudo-polynomial time (e.g., the KP) become strongly $\mathcal{NP}$-hard.

2.4.3. Exact methods for $\mathcal{NP}$-hard problems

For heuristic and approximation algorithms, we refer the reader to §2.13 and §2.5. With the exception of DP methods (§2.9), most exact algorithms for $\mathcal{NP}$-hard CO problems, as well as most commonly used ILP solvers, are based on implicit enumeration. In the worst case, they can require the evaluation of all feasible solutions, and hence computing times that grow exponentially with the problem size. The most common methods can be classified as

Branch-and-Bound (B&B);

Branch-and-Cut (B&C);

Branch-and-Price (B&P).

We will describe B&B; the other methods (and their combinations, such as Branch-and-Cut-and-Price) are extensions of it and are described in §2.15.

We consider a maximisation CO problem having an IP model with inequality constraints of ‘$\le$’ type. For a problem P, having feasible solution set F(P), z(P) denotes its optimal solution value, and ub(P) an upper bound on z(P). The main ingredients of B&B are the branching scheme and the upper bound computation.

Branching scheme. The solution is obtained as follows:

subdivide P into m subproblems, each having the same objective function as P and a feasible solution set contained in F(P), such that the union of their feasible solution sets is F(P). The optimal solution of P is thus given by the optimal solution of the subproblem having the maximum objective function value;

iteratively, if a subproblem cannot be immediately solved, subdivide it into additional subproblems.

The resulting method can be represented through a branch-decision tree, where the root node corresponds to P and each node corresponds to a subproblem.

A node of the tree can be eliminated if the feasible solution set of the corresponding subproblem is empty, or its upper bound is not greater than the value of the best feasible solution to P found so far.

Upper bound computation. A valid upper bound ub(P) can be computed as the optimal solution value of a Relaxation of the IP model of P, defined by:

a feasible solution set containing F(P);

an objective function whose value is not smaller than that of P for any solution in F(P).

A relaxation is “good” if the resulting upper bound ub(P) is “close” to z(P) (i.e., if the gap between the two values is “small”), and the relaxed problem is “easy” to solve, i.e., its optimal solution can be obtained with a computational effort much smaller than that required to solve P.
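The two ingredients above can be sketched on the 0-1 knapsack problem. The following minimal Python sketch is an illustration under stated assumptions rather than a reference implementation: it branches on including or excluding the next item, and it computes the upper bound from the linear relaxation of the remaining items (items sorted by profit/weight ratio, last item taken fractionally). The function name, the depth-first exploration order and the test data are assumptions, and all weights are assumed to be positive.

```python
def knapsack_branch_and_bound(profits, weights, capacity):
    """Depth-first branch-and-bound for the 0-1 knapsack problem (maximisation).

    Branching: fix item k in (x_k = 1) or out (x_k = 0) of the knapsack.
    Bounding: linear relaxation of the remaining items.
    """
    # Sort items by non-increasing profit/weight ratio (weights assumed positive).
    order = sorted(range(len(profits)),
                   key=lambda j: profits[j] / weights[j], reverse=True)
    p = [profits[j] for j in order]
    w = [weights[j] for j in order]
    n = len(p)
    best = 0  # value of the best feasible solution found so far

    def upper_bound(k, value, residual):
        # Optimal value of the linear relaxation over items k..n-1.
        for j in range(k, n):
            if w[j] <= residual:
                residual -= w[j]
                value += p[j]
            else:
                return value + p[j] * residual / w[j]
        return value

    def explore(k, value, residual):
        nonlocal best
        best = max(best, value)  # items k..n-1 left out gives a feasible solution
        if k == n:
            return
        # Eliminate the node if its bound is not greater than the incumbent.
        if upper_bound(k, value, residual) <= best:
            return
        if w[k] <= residual:  # branch x_k = 1, only if the item still fits
            explore(k + 1, value + p[k], residual - w[k])
        explore(k + 1, value, residual)  # branch x_k = 0

    explore(0, 0, capacity)
    return best


print(knapsack_branch_and_bound([60, 100, 120], [10, 20, 30], 50))
```

The tighter the bound, the more nodes are eliminated early; with a trivial bound the same code degenerates into full enumeration of all subsets.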

2.4.4. Relaxations

The most common relaxation methods are:

Constraint elimination: a subset of constraints is removed from the IP model of P, so that the resulting problem is easy to solve. The most widely used case is the linear relaxation;

Linear relaxation: when the model is an Integer Linear Program (ILP), removing the constraints that impose integrality of the variables leads to a Linear Program (LP), which is polynomially solvable. This relaxation is commonly used in ILP solvers (see §2.15);

Surrogate relaxation: a subset of inequality constraints is replaced by a single surrogate inequality, so that the corresponding relaxed problem is easy to solve. The surrogate inequality is obtained by multiplying both sides of each inequality of the subset by a non-negative constant, and summing, respectively, the left-hand and right-hand sides of the resulting inequalities;

Lagrangian relaxation: a subset of inequality constraints is removed from the model and “embedded”, in a Lagrangian fashion, into the objective function. For each inequality of the subset, the difference between left-hand and right-hand sides (slack) multiplied by a non-negative constant is added to the objective function.

The relaxations can be strengthened by adding one or more valid inequalities (cuts) to the IP model of P, such that they are redundant for the IP model, but can become active when the IP model is relaxed (see §2.15).
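As a hedged illustration of the surrogate and Lagrangian relaxations listed above, consider a generic maximisation model whose constraints are split into a "complicating" block $Ax \le b$ and a remaining block $Dx \le d$. The symbols $A$, $b$, $D$, $d$, $\pi$, $\lambda$ and the names $ub_S$, $ub_L$ are assumptions introduced only for this sketch; the slack is written as right-hand side minus left-hand side, which is the sign that yields an upper bound when the constraints are of ‘$\le$’ type.

```latex
% Generic model (notation assumed for illustration):
z(P) = \max \{ c^{\top} x : A x \le b,\; D x \le d,\; x \in \mathbb{Z}^n_{+} \}

% Surrogate relaxation of A x \le b with multipliers \pi \ge 0:
ub_{S}(\pi) = \max \{ c^{\top} x : \pi^{\top} A x \le \pi^{\top} b,\; D x \le d,\; x \in \mathbb{Z}^n_{+} \}

% Lagrangian relaxation of A x \le b with multipliers \lambda \ge 0:
ub_{L}(\lambda) = \max \{ c^{\top} x + \lambda^{\top} (b - A x) : D x \le d,\; x \in \mathbb{Z}^n_{+} \}
```

Every solution in F(P) satisfies $\pi^{\top} A x \le \pi^{\top} b$ and has $\lambda^{\top}(b - Ax) \ge 0$, so both problems have a feasible set containing F(P) and an objective that is not smaller on F(P); hence $ub_S(\pi) \ge z(P)$ and $ub_L(\lambda) \ge z(P)$ for any non-negative multipliers.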

2.4.5. Further readings

We refer the reader to the following selection of references for more details on the topics covered in this section. Well known, pre-1990 books are those by Garfinkel and Nemhauser (Citation1972, IP), Christofides (Citation1975, algorithmic graph theory), Garey and Johnson (Citation1979, complexity), Burkard and Derigs (Citation1980, AP), Lawler et al. (Citation1985, TSP), and the CO specific volumes by Lawler (Citation1976), Christofides et al. (Citation1979), Papadimitriou and Steiglitz (Citation1982), Martello et al. (Citation1987), and Nemhauser and Wolsey (Citation1988). We list more recent contributions in the order in which the topics were introduced:

CO: Cook et al. (Citation1998), Schrijver (Citation2003);

AP: Burkard et al. (Citation2012) for linear and quadratic AP, Cela (Citation2013) for quadratic AP;

Network Flows: Ahuja et al. (Citation1993);

Scheduling: Błażewicz et al. (Citation2001, Citation2007), Pinedo (Citation2012);

TSP: Gutin and Punnen (Citation2006), Applegate et al. (Citation2007), Cook (Citation2011);

VRP: Golden et al. (Citation2008), Toth and Vigo (Citation2014);

Facility Location: Mirchandani and Francis (Citation1990), Laporte et al. (Citation2015);

Steiner trees: Hwang et al. (Citation1992), Prömel and Steger (Citation2012). Also see the recent survey by Ljubić (Citation2021);

Cutting and packing: Martello and Toth (Citation1990), Kellerer et al. (Citation2004). Also see the recent survey by Cacchiani et al. (Citation2022a, Citation2022b).

2.5. Computational complexityFootnote11

Operational Research develops models and solution methods for problems arising from practical decision making scenarios. Often, these solution methods are algorithms. The difficulty of a problem can be assessed empirically by evaluating the running times of corresponding algorithms, which requires careful implementations and meaningful test data. Moreover, this can be time-consuming and yields insights that depend on the skills of the programmer and are limited to the available test instances. Computational complexity represents an alternative approach that allows for a more general assessment of a problem’s difficulty that is independent of specific problem instances or solution algorithms.

2.5.1. Problem encoding and running times of algorithms

In complexity theory, the running time of an algorithm is expressed in terms of the size of the input, i.e., the amount of data necessary to encode an instance of the problem. Since computers store data in the form of binary digits (bits), the standard binary encoding represents all data of a problem instance in the form of binary numbers. The number of required bits (the encoding length) of an integer is roughly given by the binary logarithm of its absolute value. As an example, consider the binary encoding of instances of the well-known 0-1 knapsack problem (KP). An instance of KP consists of n items, each with a non-negative integer weight and profit, and a positive integer knapsack capacity c. We can assume that all n item weights are bounded by the capacity c and denote the value of the largest item profit by $p_{\max}$. Then, the encoding length of a KP instance is bounded by roughly $(n+1)\log_2 c + n \log_2 p_{\max}$.
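As a small illustration of the binary encoding length described above, the following Python sketch counts the bits of every integer in a KP instance and compares the total with a crude bound built from the capacity and the largest profit. The instance data and function names are hypothetical, and sign bits and separators between numbers are ignored.

```python
def bits(value):
    """Number of bits in the binary representation of a non-negative integer."""
    return max(1, value.bit_length())


def knapsack_encoding_length(weights, profits, capacity):
    """Total number of bits needed to write down all integers of a KP instance."""
    return (sum(bits(v) for v in weights)
            + sum(bits(v) for v in profits)
            + bits(capacity))


weights = [10, 20, 30]
profits = [60, 100, 120]
capacity = 50
n = len(weights)

length = knapsack_encoding_length(weights, profits, capacity)
# Crude bound: n weights and the capacity need at most bits(capacity) bits each
# (weights are assumed not to exceed the capacity), and the n profits need
# at most bits(largest profit) bits each.
bound = (n + 1) * bits(capacity) + n * bits(max(profits))
print(length, bound)
```

Doubling the capacity adds only one bit to its encoding, which is why a running time proportional to the capacity itself is not polynomial in the input size.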

Rational numbers can be straightforwardly represented by their (integer) numerator and denominator, but their presence in the input might already influence a problem’s computational complexity (Wojtczak, Citation2018). Irrational numbers cannot be encoded in binary without rounding them appropriately, which means that a different kind of complexity theory is required when general real numbers are part of the input (see Blum et al., Citation1998, for details). Hence, the following exposition is restricted to the case of integer inputs, where the encoding length of an instance can be bounded by the number of integers needed to represent it multiplied by the binary logarithm of the largest among their absolute values (see the bound for KP instances provided above as an example).

To allow universal running time analyses of algorithms that are independent of specific computer architectures, asymptotic running time bounds described using the so-called $\mathcal{O}$-notation (Cormen et al., Citation2009) are used. Informally, every polynomial in $n$ with largest exponent $k$ is in $\mathcal{O}(n^k)$. All terms with exponents smaller than $k$ and the constant coefficient of $n^k$ are ignored. One is then often interested in polynomial-time algorithms whose running time is in $\mathcal{O}(|I|^k)$ for some constant $k$, where $|I|$ denotes the encoding length of instance $I$. A less preferred outcome would be a pseudopolynomial-time algorithm, where the running time is only required to be polynomial in the number of integers in the input and the largest among their absolute values (or, equivalently, in the exponentially larger encoding length of the input when using unary encoding, where the encoding length of an integer is roughly its absolute value).

2.5.2. The complexity classes $\mathcal{P}$ and $\mathcal{NP}$

Most application scenarios encountered in Operational Research ultimately lead to an optimisation problem (often a combinatorial problem, see §2.4), where a feasible solution is sought that minimises or maximises a given objective function. Every optimisation problem immediately yields an associated decision problem, asking a yes-no question. For example, a minimisation problem consisting of a set $X$ of feasible solutions and an objective function $f$ can be written as $\min \{ f(x) : x \in X \}$. For a given target value $v$, the associated decision problem then asks: Does there exist a feasible solution $x \in X$ such that $f(x) \le v$? Solving an optimisation problem to optimality trivially answers the associated decision problem for any given $v$. On the other hand, every algorithm for the decision problem can be used to solve the underlying optimisation problem. Given upper and lower bounds, the optimal solution value can be identified in polynomial time by performing binary search between these bounds using the decision problem to answer the query in every iteration of the binary search (assuming that the range of objective function values and the encoding lengths of the bounds are polynomially bounded).
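The binary search argument can be sketched in a few lines of Python. This is a minimal illustration assuming integer objective values and a black-box decision procedure; the function name, the toy list of solution values and the bounds 0 and 127 are hypothetical.

```python
def minimise_with_decision_oracle(decide, lower, upper):
    """Find the optimal value of a minimisation problem with integer objective
    values in [lower, upper], using only a yes/no oracle.

    decide(v) must return True iff a feasible solution with value <= v exists.
    Assumes decide(upper) is True.
    """
    calls = 0
    while lower < upper:
        mid = (lower + upper) // 2
        calls += 1
        if decide(mid):
            upper = mid      # a solution of value <= mid exists
        else:
            lower = mid + 1  # every feasible solution has value > mid
    return lower, calls


# Hypothetical toy problem: the feasible solutions have these objective values.
solution_values = [17, 42, 23, 99]
optimum, calls = minimise_with_decision_oracle(
    lambda v: any(value <= v for value in solution_values), 0, 127)
print(optimum, calls)
```

The number of oracle calls is logarithmic in the width of the interval, i.e., linear in the encoding length of the bounds, so a polynomial-time decision algorithm yields a polynomial-time optimisation algorithm.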

Motivated by the above, the computational complexity of an optimisation problem follows from the complexity of its associated decision problem. Here, the most relevant complexity classes in Operational Research are probably $\mathcal{P}$ and $\mathcal{NP}$, which are often used to draw the line between “easy” and “hard” problems in this context. Formally, the class $\mathcal{P}$ (“polynomial”) consists of all decision problems for which a polynomial-time solution algorithm exists on a deterministic Turing machine (or, equivalently, in any other “reasonable” deterministic model of computation), while the class $\mathcal{NP}$ (“nondeterministic polynomial”) consists of all decision problems for which the same holds on a nondeterministic Turing machine. Equivalently, $\mathcal{NP}$ is the class of all decision problems such that, for any yes instance $I$, there exists a certificate with encoding length polynomial in $|I|$ and a deterministic algorithm that, given the certificate, can verify in polynomial time that the instance is indeed a yes instance. Since the most natural certificate is often a (sufficiently good) solution of the problem, $\mathcal{NP}$ can informally be defined as the class of decision problems for which solutions can be verified in polynomial time. For example, when considering the travelling salesman problem (TSP) on a given edge-weighted graph, the associated decision problem asks whether or not there exists a tour (Hamiltonian cycle) of at most a given length $v$. While no polynomial-time algorithm for this decision problem is known to date, the problem can easily be seen to be in $\mathcal{NP}$ since the natural certificate to provide for a yes instance is simply a tour with length at most $v$, whose feasibility and length can be easily verified in polynomial time.
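The verification step for the TSP certificate can be sketched as follows. The matrix encoding of the graph (with None marking a missing edge), the function name and the four-vertex example are assumptions made for illustration; the graph is assumed to have at least three vertices.

```python
def verify_tsp_certificate(weights, tour, v):
    """Check in polynomial time that `tour` certifies a yes instance.

    weights: symmetric matrix, weights[i][j] is the edge weight, or None
             if the edge {i, j} is absent (hypothetical encoding).
    tour:    proposed order in which the vertices are visited.
    v:       target length of the decision problem.
    """
    n = len(weights)
    # The tour must visit every vertex exactly once.
    if sorted(tour) != list(range(n)):
        return False
    total = 0
    for k in range(n):
        i, j = tour[k], tour[(k + 1) % n]  # wrap around to close the cycle
        if weights[i][j] is None:          # edge not in the graph
            return False
        total += weights[i][j]
    return total <= v


graph = [
    [None, 2, 9, 10],
    [2, None, 6, 4],
    [9, 6, None, 3],
    [10, 4, 3, None],
]
print(verify_tsp_certificate(graph, [0, 1, 3, 2], 18))  # tour of length 18
print(verify_tsp_certificate(graph, [0, 1, 2, 3], 18))  # tour of length 21
```

The check needs one sort and one pass over the tour, so it is clearly polynomial; what is not known is how to find such a tour in polynomial time.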

Observe that these definitions directly imply that $\mathcal{P} \subseteq \mathcal{NP}$. Most researchers believe that $\mathcal{P} \neq \mathcal{NP}$ or, equivalently, that there are problems in $\mathcal{NP}$ that do not admit polynomial-time solution algorithms. However, formally proving that $\mathcal{P} \neq \mathcal{NP}$ (or that $\mathcal{P} = \mathcal{NP}$) is still one of the most famous open problems in theoretical computer science to date.

This so-called $\mathcal{P}$ versus $\mathcal{NP}$ problem can be equivalently expressed using the well-known notion of $\mathcal{NP}$-completeness (see, e.g., Garey & Johnson, Citation1979). Intuitively, $\mathcal{NP}$-complete problems are the hardest problems in $\mathcal{NP}$ in the sense that, if one of these problems admits a polynomial-time solution algorithm, then so does every problem in $\mathcal{NP}$ (and, thus, we would obtain $\mathcal{P} = \mathcal{NP}$). A decision problem (not necessarily in $\mathcal{NP}$) with this property is also called $\mathcal{NP}$-hard. This means that a problem is $\mathcal{NP}$-complete if and only if it is both $\mathcal{NP}$-hard and contained in $\mathcal{NP}$. The first problem shown to be $\mathcal{NP}$-complete in Cook’s famous theorem (Cook, Citation1971) is the (Boolean) satisfiability problem (SAT). Shortly after, Karp (Citation1972a) gave a list of 21 fundamental problems that are $\mathcal{NP}$-complete. While Cook’s proof that SAT is $\mathcal{NP}$-complete required considerable effort, proving that further problems are $\mathcal{NP}$-complete became significantly easier with this knowledge, and hundreds, if not thousands, of problems were shown to be $\mathcal{NP}$-complete.

A decision problem is $\mathsf{NP}$-complete if and only if (1) it is contained in $\mathsf{NP}$ and (2) some $\mathsf{NP}$-complete problem (and, therefore, all problems in $\mathsf{NP}$) can be reduced to it via a polynomial-time reduction. Such a polynomial-time reduction works as follows: For any instance of the known $\mathsf{NP}$-complete problem (e.g., SAT or TSP), one has to construct an instance of the investigated problem in polynomial time such that the two instances are equivalent, i.e., the constructed instance is a yes instance if and only if the given instance is a yes instance. Note that the requirement that the instance must be constructed in polynomial time (and, therefore, have encoding length polynomial in the encoding length of the original instance) is crucial. A common error in reductions is that the encoding length of the constructed instance depends polynomially on the size of numerical values in the given instance (instead of their encoding length).
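To make the two requirements of a reduction concrete, the following sketch reduces the Hamiltonian cycle problem (which is known to be hard in the above sense) to the TSP decision problem. The choice of this particular pair of problems, the function names, and the brute-force checker are assumptions made for illustration; the checker runs in exponential time and serves only to confirm the equivalence of the two instances on tiny examples.

```python
from itertools import combinations, permutations
from typing import Dict, List, Tuple


def hamiltonian_cycle_to_tsp(n: int, edges: List[Tuple[int, int]]):
    """Build an equivalent TSP decision instance in polynomial time.

    Edges of the given graph get weight 1, all other vertex pairs weight 2.
    A tour of length at most n then exists exactly when the graph has a
    Hamiltonian cycle. Assumes n >= 3 and vertices labelled 0..n-1.
    """
    edge_set = {(min(a, b), max(a, b)) for a, b in edges}
    weights: Dict[Tuple[int, int], int] = {
        pair: (1 if pair in edge_set else 2)
        for pair in combinations(range(n), 2)
    }
    return weights, n  # the length bound v equals n


def tsp_decision_brute_force(n: int, weights, v) -> bool:
    """Exponential-time check, used here only to illustrate equivalence."""
    for perm in permutations(range(1, n)):
        tour = (0,) + perm  # fix the start vertex to avoid rotated duplicates
        length = 0
        for i in range(n):
            a, b = tour[i], tour[(i + 1) % n]
            length += weights[(min(a, b), max(a, b))]
        if length <= v:
            return True
    return False
```

The constructed instance has one weight per vertex pair, each of constant size, so its encoding length is polynomial in that of the original graph, which is the requirement stressed above.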

The importance of the encoding can be illustrated by the 0-1 knapsack problem (KP), which is $\mathsf{NP}$-hard if binary encoding is used, but can be solved in polynomial time (via dynamic programming) if unary encoding is used (so $\mathsf{NP}$-hardness of the unary-encoded version would imply that $\mathsf{P} = \mathsf{NP}$). Problems like this, i.e., problems whose binary-encoded version is $\mathsf{NP}$-hard, but whose unary-encoded version can be solved in polynomial time, are called weakly $\mathsf{NP}$-hard, while problems (such as SAT) that remain $\mathsf{NP}$-hard also under unary encoding are called strongly $\mathsf{NP}$-hard. The existence of a pseudopolynomial-time algorithm is possible for weakly $\mathsf{NP}$-hard problems, but not for strongly $\mathsf{NP}$-hard problems (unless $\mathsf{P} = \mathsf{NP}$).
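The dynamic programme mentioned above for the 0-1 knapsack problem can be sketched as follows. The function name and the assumption of non-negative integer weights and an integer capacity are illustrative choices.

```python
from typing import List


def knapsack_dp(weights: List[int], profits: List[int], capacity: int) -> int:
    """Optimal profit of a 0-1 knapsack instance via dynamic programming.

    Assumes non-negative integer weights and an integer capacity.
    best[c] holds the largest profit achievable with total weight at most c.
    """
    best = [0] * (capacity + 1)
    for w, p in zip(weights, profits):
        # Iterate capacities downwards so that each item is used at most once.
        for c in range(capacity, w - 1, -1):
            best[c] = max(best[c], best[c - w] + p)
    return best[capacity]
```

The table has capacity + 1 entries and is updated once per item, so the running time is proportional to the number of items times the numerical value of the capacity. This is polynomial in the unary encoding length of the capacity but exponential in its binary encoding length, which is exactly the pseudopolynomial behaviour discussed above.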

2.5.3. Approximation algorithms

While some realistic-size instances of $\mathsf{NP}$-hard problems might still be solvable in reasonable time, this is not the case for all instances. In general, one can deal with $\mathsf{NP}$-hardness by relaxing the requirement of finding an optimal solution and instead settling for a “good-enough” solution. This leads to heuristics, whose aim is producing good-enough solutions in reasonable time (see §2.13 for details) and approximation algorithms (Vazirani, 2001; Williamson & Shmoys, 2011; Ausiello et al., 1999). Given $\alpha \geq 1$, an $\alpha$-approximation algorithm for an optimisation problem is a polynomial-time algorithm that, for each instance of the problem, produces a solution whose objective value is at most a factor $\alpha$ worse than the optimal objective value. The factor $\alpha$, which can be a constant or a function of the instance size, is then called the approximation ratio or performance guarantee of the approximation algorithm. While it is standard to use $\alpha \geq 1$ for minimisation problems, there is no clear consensus in the literature as to whether $\alpha \geq 1$ or $\alpha \leq 1$ should be used for maximisation problems. For example, the simple extended greedy algorithm for the knapsack problem produces a solution with at least half of the optimal objective value on each instance, i.e., it is a 1/2- or a 2-approximation algorithm.
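One common formulation of the extended greedy algorithm mentioned above is sketched below: items are packed in order of decreasing profit-to-weight ratio, and the result is compared with the single most profitable item. The function name, the assumption of strictly positive weights, and the filtering of items that do not fit on their own are choices made for this illustration.

```python
from typing import List


def extended_greedy(weights: List[float], profits: List[float],
                    capacity: float) -> float:
    """Return a knapsack profit that is at least half of the optimum.

    Assumes strictly positive weights. Items that exceed the capacity
    on their own can never be packed and are discarded first.
    """
    items = [(w, p) for w, p in zip(weights, profits) if w <= capacity]
    if not items:
        return 0
    # Greedy pass: consider items by decreasing profit per unit of weight.
    items.sort(key=lambda wp: wp[1] / wp[0], reverse=True)
    remaining, greedy_value = capacity, 0
    for w, p in items:
        if w <= remaining:
            remaining -= w
            greedy_value += p
    # Extension: a single valuable item may beat the greedy packing.
    best_single = max(p for _, p in items)
    return max(greedy_value, best_single)
```

For weights (1, 10), profits (2, 10) and capacity 10, the greedy pass alone packs only the first item, while the comparison with the best single item recovers the optimal profit of 10. Without that comparison, the greedy pass can be arbitrarily far from the optimum.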

While inapproximability results can be shown for some $\mathsf{NP}$-hard problems (see Hochbaum, 1997, ch. 10), others allow for approximation algorithms with approximation ratios arbitrarily close to one, i.e., they admit a polynomial-time approximation scheme (PTAS). A PTAS is a family of algorithms that contains a $(1+\varepsilon)$-approximation algorithm for every $\varepsilon > 0$. If the running time is additionally polynomial in $1/\varepsilon$, the PTAS is called a fully polynomial-time approximation scheme (FPTAS). If all objective function values are integers, every FPTAS can be turned into a pseudopolynomial-time exact algorithm, so strongly $\mathsf{NP}$-hard problems do not admit an FPTAS (unless $\mathsf{P} = \mathsf{NP}$). Conversely, pseudopolynomial-time algorithms, in particular dynamic programming algorithms, often serve as a starting point for designing an FPTAS (Woeginger, 2000; Pruhs & Woeginger, 2007).
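As an illustration of how a dynamic programme can serve as the starting point for an FPTAS, the sketch below applies the classical profit-scaling idea to the 0-1 knapsack problem. It is a simplified textbook-style variant under stated assumptions (non-negative weights, positive profits, 0 < eps <= 1), with illustrative names, and it uses the maximisation convention in which the returned profit is at least (1 - eps) times the optimum.

```python
from typing import List


def knapsack_fptas(weights: List[float], profits: List[float],
                   capacity: float, eps: float) -> float:
    """Profit of a knapsack solution within a factor (1 - eps) of optimal.

    Profits are rounded down to multiples of eps * p_max / n, and a dynamic
    programme over the rounded profits finds, for each rounded profit value,
    a selection of minimum weight. Assumes 0 < eps <= 1.
    """
    items = [(w, p) for w, p in zip(weights, profits)
             if w <= capacity and p > 0]
    if not items:
        return 0
    n = len(items)
    p_max = max(p for _, p in items)
    scale = eps * p_max / n
    scaled = [int(p // scale) for _, p in items]
    total = sum(scaled)

    inf = float("inf")
    min_weight = [inf] * (total + 1)   # least weight reaching scaled profit q
    true_profit = [0] * (total + 1)    # unscaled profit of that selection
    min_weight[0] = 0
    for (w, p), s in zip(items, scaled):
        # Downward iteration ensures each item is used at most once.
        for q in range(total, s - 1, -1):
            candidate = min_weight[q - s] + w
            if candidate < min_weight[q]:
                min_weight[q] = candidate
                true_profit[q] = true_profit[q - s] + p
    # Report the best unscaled profit among selections that fit.
    return max(true_profit[q] for q in range(total + 1)
               if min_weight[q] <= capacity)
```

The table has at most about n^2/eps entries and is updated once per item, so the running time is polynomial in both the number of items and 1/eps, in contrast to the exact dynamic programme whose running time depends on the numerical values themselves.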

2.5.4. Further complexity classes

Theoretical computer science developed a wide range of complexity classes far beyond the $\mathsf{P}$ vs. $\mathsf{NP}$ dichotomy. Considering algorithms requiring polynomial space, i.e., for which the encoding length of the data stored at any time during the algorithm’s execution is polynomial in the encoding length of the input (but no bound on the running time is required), gives rise to the class $\mathsf{PSPACE}$. It is widely believed that $\mathsf{NP} \neq \mathsf{PSPACE}$, but even whether $\mathsf{P} \neq \mathsf{PSPACE}$ holds is not known.

In the theoretical analysis of bilevel optimisation problems (see, e.g., Labbé & Violin, 2016) the complexity class $\Sigma_2^p$ plays an important role (see Woeginger, 2021). Here, a yes instance $I$ is characterised by the existence of a certificate of encoding length polynomial in $|I|$ such that a certain polynomial-time-verifiable property holds true for all elements of a given set. As an example, consider the 2-quantified (Boolean) satisfiability problem. Here, an instance consists of two sets $X$ and $Y$ of Boolean variables and a Boolean formula over $X \cup Y$. The question then is whether there exists a truth assignment of the variables in $X$ such that the formula evaluates to true for all possible truth assignments of the variables in $Y$. This definition immediately sets the stage for a bilevel problem, where the decision $x$ of the upper level (the leader) should guarantee a certain outcome for every possible decision $y$ at the lower level (the follower). It is widely believed that $\mathsf{NP} \neq \Sigma_2^p$, although $\Sigma_2^p$-hardness does not rule out the existence of a PTAS (Caprara et al., 2014). Under this assumption, $\Sigma_2^p$-hardness does, however, rule out the existence of a compact ILP-formulation, which can be a valuable finding for bilevel optimisation problems.
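The "exists, then for all" structure of the 2-quantified satisfiability problem can be made explicit with a brute-force check. The sketch below is exponential in the number of variables and is meant only to show the nesting of the two quantifiers; representing the formula as a Python callable taking two tuples of truth values is an assumption made for the example.

```python
from itertools import product
from typing import Callable, Tuple

Assignment = Tuple[bool, ...]


def exists_forall_sat(num_x: int, num_y: int,
                      formula: Callable[[Assignment, Assignment], bool]) -> bool:
    """Decide whether some assignment of X makes the formula true for all of Y.

    Brute force over all 2**num_x leader assignments and, for each of them,
    all 2**num_y follower assignments.
    """
    for x in product([False, True], repeat=num_x):       # leader's decision
        if all(formula(x, y)                              # every follower reply
               for y in product([False, True], repeat=num_y)):
            return True
    return False
```

For instance, the formula "x1 or y1" is a yes instance (set x1 to true), whereas "x1 equals y1" is a no instance, because the follower can always pick the opposite value.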

For some $\mathsf{NP}$-hard problems, one can construct algorithms with running time $f(k) \cdot |I|^{O(1)}$ for an arbitrary computable function $f$, where the parameter $k$ describes a property of the instance $I$. Such problems are called fixed-parameter tractable. For example, the satisfiability problem SAT is fixed-parameter tractable with respect to the parameter $k$ that represents the tree-width of the primal graph of the SAT instance. In this graph, the vertices are the variables and two vertices are joined by an edge if the associated variables occur together in at least one clause, see Szeider (2003). This parametric point of view is captured in the W-hierarchy of complexity classes; see Niedermeier (2006) and the seminal book by Downey and Fellows (1999).
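The tree-width-based algorithm for SAT is too involved for a short example, so the sketch below uses a different and simpler standard illustration of fixed-parameter tractability: deciding whether a graph has a vertex cover of size at most k by a bounded search tree. The problem choice and the function name are assumptions made for illustration and are not taken from the references above.

```python
from typing import List, Tuple


def has_vertex_cover(edges: List[Tuple[int, int]], k: int) -> bool:
    """Decide whether at most k vertices touch every edge.

    Bounded search tree: any cover must contain an endpoint of the first
    uncovered edge, so branch on its two endpoints. The recursion depth is
    at most k, which yields roughly 2**k * len(edges) elementary steps.
    """
    if not edges:
        return True            # nothing left to cover
    if k == 0:
        return False           # edges remain but the budget is exhausted
    u, v = edges[0]
    for chosen in (u, v):
        # Choosing a vertex covers all edges incident to it.
        rest = [e for e in edges if chosen not in e]
        if has_vertex_cover(rest, k - 1):
            return True
    return False
```

The exponential part of the running time depends only on the parameter k, while the dependence on the size of the graph stays polynomial, which is the pattern described by the running-time bound above.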

2.6. Control theory [Footnote 12]

Control theory deals with designing a control signal so that the state or output variables of a system meet certain criteria. It is a broad umbrella term that covers a variety of theories and techniques. Control theory has been widely applied in economics (Tustin, 1953; Grubbström, 1967), operations management (Simon, 1952; Vassian, 1955; see also Sarimveis et al., 2008, for a recent review), and finance (Sethi & Thompson, 1970). Here, we do not intend to provide an exhaustive review. Instead, we organise the concepts and techniques commonly applied in operations research and omit technical details. We direct interested readers to a number of textbooks in the reference list and, for the development of control theory, to an excellent review by Åström and Kumar (2014).

The major distinction between control theory and other optimisation theories is that the control variable to be designed is normally a time-varying, dynamic function. The control signal can either be dependent on the state variables (which is referred to as feedback control or closed-loop control) or independent of them (feedforward control or open-loop control). The design of control signals and control policies (defined as the function between the state of the system and the control, also known as “control laws” or “decision rules”) is based on the structure of the system to be controlled (sometimes called the “plant” in the control engineering literature). Thus, the type of the dynamical system often defines the type of control problem. In continuous systems, the time variable is defined on the real axis, which is suitable for describing continuous processes such as fluid processing and finance. In discrete systems, time takes integer values, which is suitable in cases such as production and inventory control, where the production quantity is released every day. Linear systems consist of linear (or affine) state equations, while nonlinear systems contain nonlinear elements. Nonlinear systems are more difficult to analyse and control, and may lead to complex system behaviours such as bifurcation, chaos and fractals (Strogatz, 2018). However, there are linearisation strategies that approximate the nonlinear system locally by linear systems (Slotine et al., 1991). Based on whether random input is present, dynamical systems can be categorised as deterministic or stochastic.

There are two fundamental methods in the analysis of the system and control. The first relies on time-frequency transformations (the Laplace transform for continuous systems and the z-transform for discrete systems). A transfer function in the frequency domain can be used to represent and analyse the system (Towill, 1970). This method saves computational effort; however, it can only deal with linear system models, and each transfer function only describes the relation between a single input and a single output (SISO). The second method directly tackles the state equations in the time domain and describes the movement of the system state in the state space. It is suitable for nonlinear systems and multi-input-multi-output (MIMO) systems. With the advancement of computing technology, the computational burden faced by the time-based method has become less significant. The literature refers to the frequency-based method as classic control theory (Ogata et al., 2010) and the time-based method as modern control theory.

The system under the effect of the control policy must be examined with respect to its properties and dynamic performance. Stability is the property that the system returns to its steady state after receiving a finite external disturbance. Stability is a fundamental precondition that almost all control designs must meet, with few exceptions such as clocks and metronomes, where a periodic or cyclic response is desired. The stability criterion is straightforward to derive for linear systems, where both frequency-based (e.g., the Routh-Hurwitz stability criterion and Jury’s inners approach, Jury & Paynter, 1975) and time-based (e.g., the eigenvalue approach) methods exist. However, stability analysis for nonlinear systems is more challenging (Bacciotti & Rosier, 2005). Other important properties of the control system include controllability, defined as the ability to move the system to a preferred state using only the control signal; and observability, defined as the ability to infer the system state using the observable output signals (Gilbert, 1963).
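For a linear discrete-time system with state equation x(t+1) = A x(t) + B u(t), the eigenvalue approach and the controllability check can be illustrated on a two-state, single-input example. The restriction to a 2x2 matrix, the function names, and the numerical tolerance are assumptions made to keep the sketch free of external libraries.

```python
import cmath
from typing import Sequence, Tuple

Matrix2 = Sequence[Sequence[float]]


def eigenvalues_2x2(a: Matrix2) -> Tuple[complex, complex]:
    """Eigenvalues of a 2x2 matrix from its characteristic polynomial."""
    (a11, a12), (a21, a22) = a
    trace = a11 + a22
    det = a11 * a22 - a12 * a21
    disc = cmath.sqrt(trace * trace - 4 * det)
    return (trace + disc) / 2, (trace - disc) / 2


def is_stable_discrete(a: Matrix2) -> bool:
    """A discrete-time linear system is asymptotically stable when all
    eigenvalues of A lie strictly inside the unit circle."""
    return all(abs(lam) < 1 for lam in eigenvalues_2x2(a))


def is_controllable(a: Matrix2, b: Sequence[float], tol: float = 1e-12) -> bool:
    """Two-state, single-input case: the pair (A, B) is controllable when
    the matrix [B, AB] has full rank, i.e. a non-zero determinant."""
    (a11, a12), (a21, a22) = a
    b1, b2 = b
    ab1 = a11 * b1 + a12 * b2
    ab2 = a21 * b1 + a22 * b2
    return abs(b1 * ab2 - b2 * ab1) > tol
```

With A = [[0.5, 0.1], [0, 0.8]], the system is stable, and it is controllable through an input acting on the second state but not through an input acting only on the first state, since the second state then evolves independently of the control. A pure rotation such as A = [[0, -1], [1, 0]] has eigenvalues on the unit circle and produces the kind of sustained oscillation mentioned above for clocks and metronomes.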

In addition to these intrinsic properties, the system can also be evaluated by its response to some characteristic input functions. The step function (sometimes referred to as the Heaviside function) takes the value of zero before the reference time point, and one thereafter. The impulse function (the Dirac function) takes the value of infinity at the reference time point and zero otherwise. These two input functions usually represent an abrupt change in the external environment. The sinusoidal function can be used to describe periodic and seasonal externalities. The Bode plot describes the amplitude ratio and phase shift between the sinusoidal input and output. For stochastic environments, white noise is used to mimic random disturbances. It is a random signal whose values are independent and identically distributed (iid), here Gaussian, and whose power spectrum is constant. The noise bandwidth of the system determines the ratio between output and input variances when the input is iid. The value of the noise bandwidth can be derived from either the transfer function or the state space representation. This concept is used in analysing the amplification phenomena in supply chains (see §3.24).
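For a stable discrete-time linear system driven by iid input, the ratio of output variance to input variance equals the sum of the squared impulse-response coefficients. The sketch below computes this ratio numerically for a first-order exponential smoothing system, y(t) = (1 - alpha) y(t-1) + alpha u(t), which is chosen here purely as an illustrative assumption, and compares it with the closed form alpha / (2 - alpha) obtained from the geometric series.

```python
from typing import List


def impulse_response(alpha: float, horizon: int) -> List[float]:
    """Response of y(t) = (1 - alpha) * y(t-1) + alpha * u(t) to a unit
    impulse at time 0 (the discrete analogue of the impulse input)."""
    y, response = 0.0, []
    for t in range(horizon):
        u = 1.0 if t == 0 else 0.0
        y = (1 - alpha) * y + alpha * u
        response.append(y)
    return response


def variance_ratio(alpha: float, horizon: int = 1000) -> float:
    """Output-to-input variance ratio under iid input, approximated by
    truncating the sum of squared impulse-response coefficients."""
    return sum(h * h for h in impulse_response(alpha, horizon))
```

For alpha = 0.2 both the truncated sum and the closed form give approximately 0.111, so this system attenuates the input variance. A ratio above one would indicate amplification of the kind studied in supply chains.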

In practice, the system state and even the system structure may be unknown. Therefore, statistical techniques, known as state estimation and system identification, have been developed. State estimation uses observable output data to estimate the unobservable system states. A popular technique for this purpose is the Kalman filter (Kalman, 1960), essentially an adaptive estimator that can be applied not only in linear, time-invariant cases (LTI, where the system is linear and does not change over time), but also (with suitable extensions) in non-linear and time-variant cases. For example, it has been applied to estimate the demand process from the observed sales data (Aviv, 2003). System identification attempts to “guess” the structure of the system from the input and output.
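A scalar Kalman filter illustrates the predict-and-update cycle behind state estimation. The sketch assumes a simple local-level model, in which the hidden state follows a random walk with process-noise variance q and is observed with measurement-noise variance r; the model, the parameter names, and the default initial values are assumptions for illustration and are not taken from the cited applications.

```python
from typing import List, Sequence


def kalman_filter_1d(observations: Sequence[float], q: float, r: float,
                     x0: float = 0.0, p0: float = 1.0) -> List[float]:
    """Filtered state estimates for the model
        x(t) = x(t-1) + process noise     (variance q)
        z(t) = x(t)   + measurement noise (variance r).
    x0 and p0 are the initial estimate and its error variance.
    """
    x, p = x0, p0
    estimates = []
    for z in observations:
        # Predict: the state estimate is unchanged, its uncertainty grows.
        p = p + q
        # Update: weight the new observation by the Kalman gain.
        gain = p / (p + r)
        x = x + gain * (z - x)
        p = (1 - gain) * p
        estimates.append(x)
    return estimates
```

The gain adapts over time: it is large while the estimate is uncertain and shrinks as observations accumulate, which is the sense in which the filter acts as an adaptive estimator. In a demand-estimation setting, the observations would be the sales data and the hidden state the underlying demand level.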

Along with the development of control theory, various control strategies have been proposed. They are designed to fit the structure of the system, the objective of the control, and most importantly, to offer a paradigm to design the control policy. In what follows, we provide a brief summary of control strategies. Linear control strategies can be represented linearly (in the form of a transfer function). They offer great analytical tractability and satisfactory performance, especially when the open-loop system is also linear. Two widely adopted policies in this family are proportional-integral-derivative (PID) control and full-state feedback (FSF) control. In PID control, an error signal between the output and the reference input (e.g., a Heaviside function) is computed. The control signal is a linear combination of the error, its integral, and its derivative. These three components can also be used separately. Proportional control has been applied in mechanical and managerial mechanisms such as the centrifugal governor and production planning (Chen & Disney, 2007). Full-state feedback control defines the control signal as a linear combination of the full system state vector, where the coefficient vector (the “gain”) shares the same dimensionality as the state vector. By tuning the gain, the poles of the closed-loop system (the eigenvalues of the transition matrix or the roots of the characteristic equation) change their position in the complex plane, adjusting the system performance. The full-state feedback policy can also be applied in production and inventory control (Gaalman, 2006).
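The structure of a PID controller can be illustrated with a short discrete-time simulation of the response to a step reference. The first-order plant y(t+1) = a y(t) + (1 - a) u(t), the gains, and all names below are hypothetical choices made for the example; they are not drawn from the cited studies.

```python
from typing import List


def simulate_pid(kp: float, ki: float, kd: float, steps: int = 200,
                 reference: float = 1.0, plant_pole: float = 0.9) -> List[float]:
    """Closed-loop response of an assumed first-order plant under
    discrete PID control with a constant (step) reference."""
    y = 0.0                      # plant output
    integral = 0.0               # running sum of the error
    prev_error = 0.0
    outputs = []
    for _ in range(steps):
        error = reference - y
        integral += error
        derivative = error - prev_error
        # Control signal: linear combination of the three error terms.
        u = kp * error + ki * integral + kd * derivative
        prev_error = error
        # Assumed plant dynamics with unit steady-state gain.
        y = plant_pole * y + (1 - plant_pole) * u
        outputs.append(y)
    return outputs
```

With purely proportional control (kp = 1, ki = kd = 0), the output of this plant settles at 0.5 and a permanent offset from the reference remains. Adding an integral term (for example ki = 0.2) drives the output to the reference value of 1, which shows why the three components are often combined.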