?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

With a large number of baseline covariates, we propose a new semiparametric modeling strategy for heterogeneous treatment effect estimation and individualized treatment selection, which are two major goals in personalized medicine. We achieve the first goal through estimating a covariate-specific treatment effect (CSTE) curve modeled as an unknown function of a weighted linear combination of all baseline covariates. The weight or the coefficient for each covariate is estimated by fitting a sparse semiparametric logistic single-index coefficient model. The CSTE curve is estimated by a spline-backfitted kernel procedure, which enables us to further construct a simultaneous confidence band (SCB) for the CSTE curve under a desired confidence level. Based on the SCB, we find the subgroups of patients that benefit from each treatment, so that we can make individualized treatment selection. The innovations of the proposed method are 3-fold. First, the proposed method can quantify variability associated with the estimated optimal individualized treatment rule with high-dimensional covariates. Second, the proposed method is very flexible to depict both local and global associations between the treatment and baseline covariates in the presence of high-dimensional covariates, and thus it enjoys flexibility while achieving dimensionality reduction. Third, the SCB achieves the nominal confidence level asymptotically, and it provides a uniform inferential tool in making individualized treatment decisions. Supplementary materials for this article are available online.

1 Introduction

Personalized medicine aims to tailor medical treatments according to patient characteristics, and it has gained much attention in modern biomedical research. The success of personalized medicine crucially depends on the development of reliable statistical tools for estimating an optimal treatment regime given the data collected from clinical trials or observational studies (Kosorok and Laber Citation2019). In the literature, there are two general statistical approaches and their hybrids for deriving an optimal individualized treatment rule (ITR) based on clinical trial data. The first general approach targets at direct optimization of the population average outcome under an ITR. Several independent research groups have proposed a new framework of deriving the ITR that recasts the problem of maximizing treatment benefit as a weighted classification problem (Rubin and van der Laan Citation2012; Zhang et al. Citation2012; Zhao et al. Citation2012; Chen, Zeng, and Kosorok Citation2016; Zhou et al. Citation2017). Several semiparametric and nonparametric methods have been further proposed under this framework Zhao et al. Citation2012; Huang and Fong Citation2014; Huang Citation2015; Laber and Zhao Citation2015; Zhu, Huang, and Zhou Citation2018, and they are more robust against model misspecification than the parametric approaches. Under this framework, the convergence rates of the resulting estimators were thoroughly studied in Zhao et al. (Citation2012) and Zhang et al. (Citation2018), among others, but it is generally difficult to build statistical inference upon the estimated ITRs (Laber and Qian Citation2019). To solve this problem, Jiang et al. (Citation2019) proposed an entropy learning method, and Wager and Athey (Citation2018) established asymptotic normality of a causal forest estimator.

The second general approach is to model the difference in average outcomes between two treatment groups conditional on predictive biomarkers that provide information about the effect of a therapeutic intervention. There are hybrids from the two approaches using mean-modeling Zhang et al. Citation2012; Taylor, Cheng, and Foster Citation2015; Zhang and Zhang Citation2018; Luckett et al. Citation2020, but the hybrid methods also inherit the difficulty of developing inferential tools from the first general approach. In this article, we focus on the idea of the second approach. With a single predictive biomarker, several authors have proposed to plot the estimated conditional treatment difference against the biomarker’s values or its percentiles (e.g., a covariate-specific treatment effect (CSTE) curve) obtained from nonparametric smoothing, along with its confidence bands, to derive an optimal ITR (Zhou and Ma Citation2012; Janes et al. Citation2014; Ma and Zhou Citation2014; Han, Zhou, and Liu Citation2017). It provides a direct and effective visual way of using the predictive biomarker for deriving an ITR, but the nonparametric smoothing method suffers from “curse of dimensionality.” With multiple biomarkers, Qian and Murphy (Citation2011) considered a parametric conditional mean model that involves higher order terms, and employed a penalized method for estimation. Cai et al. (Citation2011) proposed a two-step method, and Kang, Janes, and Huang (Citation2014) devised a boosting iterative algorithm to reduce the bias caused by the model misspecification of a working model. Moreover, Shi et al. (Citation2018) proposed a penalized multi-stage A-learning, and Zhu, Zeng, and Song (Citation2019) developed a high-dimensional Q-learning method to simultaneously estimate the optimal dynamic treatment regimes and select important variables. The existing methods try to either provide a flexible modeling strategy by relaxing the restrictive structural assumption embodied in parametric models, or handle high-dimensional covariates, but not both.

In our article, we aim at developing a new method that estimates the outcome model in a flexible way as well as selecting important variables simultaneously, when a large number of baseline characteristics such as genetic variables are present. Moreover, we would like to provide a uniform inferential procedure that takes into account uncertainty associated with the estimated optimal ITR, and graphically explores heterogeneity of treatment effects based on varying levels of biomarkers. To achieve these goals, we propose a generalization of the CSTE curve suitable for high dimensional baseline covariates. The CSTE curve represents the predictive ability of covariates in evaluating whether a patient responds better to one treatment over another. It depicts the heterogeneous treatment effects on the outcome through an unknown function of covariates, and thus it can visually represent the magnitude of the predictive ability of the covariates.

We propose a penalized semiparametric modeling approach for estimating the CSTE curve and selecting variables simultaneously. The proposed semiparametric model enjoys flexibility while achieving dimensionality reduction. It is motivated by the logistic varying-coefficient model considered in Han, Zhou, and Liu (Citation2017) for treatment selection with one covariate, and it meets the immediate needs from modern biomedical studies which can have a large number of baseline covariates. To make use of all covariates, one simple but effective way is to derive a weighted linear combination of all covariates as a summary predictor, and model the CSTE curve as an unknown function of this summary predictor. The weight or the coefficient for each covariate represents how important the covariate is for the prediction of the outcome. As a result, our proposed model involves two sets of high-dimensional coefficients fed into additive unknown functions for the two treatment groups. The development of the estimation procedure and the associated statistical properties is challenging and it needs different tools from the high-dimensional single-index model (Radchenko Citation2015). Moreover, we establish different convergence rates for the estimators of the high-dimensional coefficients and the estimators for the unknown additive functions, respectively. This new theoretical result makes it possible to further construct a simultaneous confidence band (SCB) for the CSTE curve based on the asymptotic extreme value distribution of a spline-backfitted kernel estimator (Wang and Yang Citation2007; Liu, Yang, and Härdle Citation2013; Zheng et al. Citation2016) for the unknown function of interest, while Radchenko (Citation2015) and other related works only provide a nonseparable convergence rate for the estimators of the coefficients and the unknown function in single-index models. Based on the SCB, we identify the subgroups of patients that benefit from each treatment, and the proposed method is flexible enough to depict both local and global associations between the treatment and baseline covariates.

The rest of the article is organized as follows. In Section 2, we introduce the CSTE curve and the proposed semiparametric logistic single-index coefficient model. Section 3 presents the estimation procedure and the asymptotic properties of the proposed estimators. Section 4 illustrates the application of the CSTE curves and the SCBs for treatment selection. In Section 5, we evaluate the finite sample properties of the proposed method via simulation studies, while Section 6 illustrates the usefulness of the proposed method through the analysis of a real data example. A discussion is given in Section 7. All technical proofs are relegated to the online supplementary materials.

2 Methodology

We consider a sample of n subjects, a binary treatment, denoted by Zi

= 1 if the subject i is assigned to treatment and otherwise, a p-dimensional vector of covariates, denoted by Xi

, and binary-valued outcomes, denoted by Yi

. Let

, be independent and identically distributed (iid) copies of

. The goal is to estimate the optimal treatment regime using the observed data. We consider the CSTE curve given in Han, Zhou, and Liu (Citation2017), which has the following form:

where

, and Y(1) and Y(0) denote the potential outcomes if the active treatment and the control treatment are received, respectively (Rubin Citation2005). Moreover,

. Under the unconfoundeness assumption such that

, the CSTE curve can be re-expressed as

Denoting , we model the logarithm of odds ratio as

(1)

(1) where

and

are unknown single-valued functions of p variables,

and

are two p-vectors of unknown parameters. We see

The two sets of high-dimensional coefficients and the two unknown functions in (1) are chosen to simultaneously maximize the log-likelihood function of the binomial distribution. The proposed model is a flexible semiparametric model and is robust against model misspecification. We call it sparse logistic single index coefficient model (SLSICM). Our SLSICM contains the varying coefficient (VC) model considered in Han, Zhou, and Liu (Citation2017) as a special case. We have the same form when p = 1. As an extension of the VC model, the varying-index coefficient model considered in Ma and Song (Citation2015) can be applied to the cases with several covariates and continuous responses. Moreover, an important and related work, Song et al. (Citation2017), proposed a semiparametric model in which g1 has a single-index structure and g2 is a pure nonparametric function of X. This model suffers from the curse of dimensionality when the number of covariates is large. In our article, we allow the number of covariates to be much larger than sample size. As a result, our proposed SLSICM involves two sets of high-dimensional unknown coefficients built into additive unknown functions with a nonlinear link function, and it is considered as a hybrid of the high-dimensional single index model (HSIM) and the nonparametric additive model. Developing the estimation procedure and the statistical theories for SLSICM is challenging, and it needs different tools from HSIM. It is worth noting that estimation of HSIM has been studied in the past several years, and most existing works use a sliced inverse regression approach to estimate the index coefficients in HSIM under a linearity condition on the covariates; see Jiang and Liu (Citation2014) and Neykov, Liu, and Cai (Citation2016), among others. This method is not applicable to our setting, and it does not directly estimate the unknown function. Radchenko (Citation2015) proposed to estimate the coefficients and the unknown function in HSIM jointly, but they only provide a nonseparable convergence rate for the resulting estimators of the coefficients and the unknown function. In our proposed SLSICM, we establish different convergence rates for the estimators of the coefficients β1 and β2 and the estimators of the unknown functions g1 and g2, respectively. This new theoretical result makes it possible to further construct the asymptotic SCB based on the asymptotic extreme value distribution of a spline-backfitted kernel estimator for the CSTE curve.

Unlike parametric models, the parameter vectors βk

are not identified without further assumptions. For the purpose of model identification, we assume that βk

for k = 1, 2 belong to the parameter space where

denotes the l2 norm of a vector. This restriction together with Assumption 2 given in Section 3.2 will make the model (1) identifiable. We will provide a formal proof of the model identifiability in Section 1.1 of the supplementary materials. Based on the constraint that

, we eliminate the first component in βk

and obtain the resulting parameter space:

. Let

. The derivative with respect to the coefficients

can be easily obtained using the chain rule under the above parameter space. For high-dimensional problems (with a large number of covariates), p can be much larger than n but only a small number of covariates are important or relevant for treatment selection. To this end, we assume that the number of nonzero elements increases as n increases, but it is much smaller than n. Without loss of generality, we assume that only the first

components of βk

are nonzeros, that is, we can write the true values as βk

. For model identifiability, we require one component in βk

to be nonzero. Without loss of generality, we let the first component

to be nonzero.

3 Estimation and Theory

3.1 Algorithm

In this subsection, we discuss the estimation of model (1). We minimize the negative log-likelihood function simultaneously with respect to the parameters βk

and the functions gk

for (k = 1, 2). The SLSICM has the form Therefore, we seek the minimizer of the following negative log-likelihood function given

:

(2)

(2)

To overcome the problem of high-dimensional covariates, we exploit the sparsity through parameter regularization. With the sparsity constraint of ’s, we minimize the following penalized negative log-likelihood

(3)

(3) where

is a penalty function with a tuning parameter λ that controls the level of sparsity in βk

, k = 1, 2.

The functions , k = 1, 2, are unspecified and are estimated using B-splines regression. Next, we introduce the B-splines that will be used to approximate the unknown functions. For k = 1, 2, we assume the support of

is

Let

be an equally spaced partition of

, called interior knots. Then,

is divided into subintervals

and

, satisfying

uniformly in n for some constant

, where

increases with the sample size n. We write the normalized B spline basis of this space (de Boor Citation2001) as

, where the number of spline basis functions is

, and qk

is the spline order. For computational convenience, we let

and

so that

. In practice, cubic splines with order q = 4 are often used. By the result in de Boor (Citation2001), the nonparametric function can be approximated well by a spline function such that

for some

For notational simplicity, we write

as

. Therefore, the estimates of the unknown index parameters βk

and the spline coefficients δk

are the minimizers of

(4)

(4)

Denote for k = 1, 2. For notational simplicity, we use U to denote Uk

by suppressing k. To allow for possibly unbounded support

of U, according to the method given in Chen and Christensen (Citation2015), we can weigh the B-spline basis functions by a sequence of nonnegative weighting functions

given by

if

and

otherwise, where

is compact, convex and has nonempty interior and

for all n. For the choices of

, we refer to Chen and Christensen (Citation2015) for the discussions. When

is compact, we simply set

for all

. Then we obtain a set of new B-spline basis functions:

, and replace

by

in the above minimization problem for obtaining the estimators

and

. As given in Chen and Christensen (Citation2015), the asymptotic properties including consistency and asymptotic distribution for the estimator of the unknown function

still hold for

.

In principle, we would like to obtain the estimates of βk

and δk

by minimizing the penalized negative log-likelihood given in (4). In practice, this minimization is different to achieve, and thus an iterative algorithm (Watson and Engle Citation1983) is often applied. To this end, we obtain the estimates of and

through an iterative algorithm described as follows, although our theoretical properties given in Section 3.2 are established for the estimators which minimize (4).

Step 1: Given βk

, the solution of δk

is easily obtained. Reexpressing the model gives

This can be viewed as a logistic regression model using as the regressors without intercept term.

Step 2: Given δk

, it remains to find the solution that minimizes (3) with respect to βk

. Let and

be the current estimates for βk

and

, respectively. Let

. We approximate

where

is the Jacobian matrix of size p by p – 1. To obtain the sparse estimates of

, we carry out a regularized logistic regression with

as the regressors with a known intercept term given as

This produces an updated vector . Then we set

, for k = 1, 2. Steps 1 and 2 are repeated until convergence.

We obtain the initial value of βk

through fitting a regularized logistic regression by assuming that . In Step 2, we use the coordinate descent algorithm (Breheny and Huang Citation2011) to fit the regularized regression. Moreover, we choose to use the nonconvex penalties such as MCP and SCAD which induce nearly unbiased estimators. The MCP (Zhang Citation2010) has the form

and the SCAD (Fan and Li Citation2001) penalty is

where γ is a parameter that controls the concavity of the penalty functions. In particular, both penalties converge to the L1 penalty as

. We put γ in the subscript to indicate the dependence of these penalty functions on it. In practice, we treat

as a fixed constant. The B-spline basis functions and their derivatives are calculated using the bsplineS function in R package fda.

From the above algorithm, we obtain the spline estimators of the functions and

. However, the spline estimator only has convergence rates but its asymptotic distribution is not available in the additive model settings with multiple unknown functions (Stone Citation1985), so no measures of confidence can be assigned to the estimators for conducting statistical inference (Wang and Yang Citation2007). The spline-backfitted kernel (SBK) estimator is designed to overcome this issue for generalized additive models (Liu, Yang, and Härdle Citation2013), which combines the strengths of kernel and spline smoothing, is easy to implement and has asymptotic distributions. Denote the SBK estimator of

as

. We obtain

for a new input vector X0 as follow:

Step 3: Given the spline estimate , the loss criterion for a local linear logistic regression can be expressed as the following negative quasi-likelihood function:

where

is a kernel function with bandwidth h. We obtain the estimate

by minimizing the above loss function. Then the predicted CSTE value at X0 is

(5)

(5)

Based on the above SBK estimator of CSTE, we can construct a SCB which is used for optimal treatment selection. The details will be discussed in Section 4.

3.2 Asymptotic Analysis

For any positive sequences and

, let

denote

for a constant

and

denote

. For a vector

denote

and

. For a matrix

, denote

, and

, where Ai

is the ith row. For a symmetric matrix A, let

and

be the smallest and largest eigenvalues of A, respectively. We assume that the nonsparsity size

and the dimensionality satisfies

for some

. Denote

Then we write

Denote the Jacobian matrix as

, and

which is a block diagonal matrix. We use the superscript “0” to represent the true values.

Denote the first components of Xi

as

and the last

components as

Denote

as the sigmoid function. Then the true expected value of the response given (X, Z) is

Define the space as a collection of functions with finite L

norm on

by

For a given random variable U, define its projection onto the space as

where

and

. For a vector

, let

Moreover, we definewhere

for

, and

where

.

To establish asymptotic properties, we need the following regularity conditions.

Assumption 1.

The penalty function is a nondecreasing symmetric function and concave on

. For some constant a > 0,

is a constant for all

and

.

exists and is continuous except for a finite number of t and

.

Assumption 2.

For k = 1, 2, for any given Θ, gk

is a nonconstant function on

, where

is compact, convex and has nonempty interior, and

for some r > 1, where

is the collection of all functions such that the

order derivative satisfies the Hölder condition of order γ with

and

, that is, for any

, there is a

such that

for all u1 and u2 in the support of gk

.

Assumption 3.

For for some constant

. For

, for some

and

.

Assumption 4.

There exist such that

almost surely, and

, where

, for

.

Assumption 5.

Let Assume that

.

Assumption 1 is a typical condition on the penalty function, see Fan and Lv (Citation2011). The concave penalties such as SCAD and MCP satisfy Assumption 1. Assumption 2 is a typical smoothness condition on the unknown nonparametric function, see, for instance, Condition (C3) in Ma and He (Citation2016). Moreover, we require that the unknown functions be nonconstant functions to identify the index parameters βk

. Assumption 3 is required for the covariates, see Condition (A5) in Ma and Yang (Citation2011). Moreover, the design matrix needs to satisfy Assumption 4. A similar condition can be found in Fan and Lv (Citation2011). Assumption 5 assumes that half of the minimum nonzero signal in is bounded by some thresholding value, which is allowed to go to zero as

. This assumption is needed for variable selection consistency established in Theorem refth1.

Denote . Let

. Theorem refth1 establishes the consistency for the parameters in model (1).

Theorem 1.

Under Assumptions (A1)–(A5), and ,

, there exists a strict local minimizer

of the loss function given in (3) such that

for

with probability approaching 1 as

, and

.

Remark.

Based on the assumption given in Theorem refth1, the number of spline basis functions needs to satisfy .

The following Theorem presents the convergence rate for the spline estimator of the unknown functions.

Theorem 2.

Under conditions given in Theorem refth1, we have , for k = 1, 2.

To estimate the SCB, we need the following assumptions, see Assumptions (A4) and (A6) in Zheng et al. (Citation2016).

Assumption 6.

Let r = 2. The kernel function K is symmetric probability density function supported on and has bounded derivative. The bandwidth h satisfies

and

for some constant

.

Assumption 7.

The joint density of and

is a bounded and continuous function. The marginal probability density functions have continuous derivatives and the same bound as the joint density. When

, the probability density function of

is bounded, continuous, and has continuous derivative.

We borrow some notations in Zheng et al. (Citation2016):(7)

(7)

where and f(u) is the density function of

. Define the quantile function

for any

, where

and

. Without loss of generality, assume

is in the range

and let

be a set of x such that

. Theorem refband:thm is an adaptation from Theorem 1 in Zheng et al. (Citation2016). It provides a method to construct the SCB for g1.

Theorem 3.

Under Assumptions 1–7, and and

, we have

where

.

Remark 1.

Based on the proof for Theorem refband:thm, we can readily obtain that in distribution, for given

. Thus, the

pointwise confidence interval for

is

, where

is the

quantile of the standard normal distribution.

Remark 2.

For details of implementations of kernel and spline estimation for functions in (7), we refer to Zheng et al. (Citation2016). As suggested in Zheng et al. (Citation2016), we use a data-driven under smoothing bandwidth and

is given in Zheng et al. (Citation2016). We let the number of spline interior knots be

. The

SCB for

is

Remark 3.

From Theorem refband:thm, we see that the width of the SCB has the order of . The width of the pointwise confidence interval given in Remark 1 has the order of

. Based on the assumption for the bandwidth h, the SCB is wider than the pointwsie confidence interval asymptotically. In deriving the asymptotic distribution of the SBK estimator for the unknown function, it also involves the estimation error from the parameter estimation, which is given in Theorem refth1. We assume that s increases with n in a slow rate, so that this estimation error is negligible in the construction of the asymptotic SCB. Our interest focuses on constructing the SCB for the CSTE curve, based on which we make treatment recommendations to a group of new patients. For conducting inference for the parameters, it can be a future interesting research topic to explore.

The theoretical result in Theorem refband:thm is obtained from the asymptotic extreme value distribution of the SBK estimator for the CSTE curve. This is the key result for obtaining our asymptotic SCB, which achieves the nominal confidence level asymptotically. It is worth noting that we provide different convergence rates for the estimators of the high-dimensional coefficients and the estimators for the unknown functions in Theorems 1 and 2, respectively. This theoretical result enables us to further derive the asymptotic distribution of , when the number of important covariates satisfies certain order given in Theorem refband:thm. The SCB provides an important uniform inferential tool for identifying subgroups of patients that benefit from each treatment and make treatment recommendations to the subgroups, while the pointwise confidence interval can only provide treatment selection for a given patient.

4 Treatment Selection via Confidence Bands

In this section, attention is focused on making individualized treatment decision rule for patients. We provide an example to illustrate how to select the optimal treatment based on the CSTE curve and its SCBs. The goal of treatment selection is to find which group of patients will benefit from new treatment based on their covariates. By the definition of CSTE curve, if we assume that the outcome of interest is death, the CSTE curve is the odds ratio of the treatment effect in reducing the probability of death. That is, a positive value means that the patients will not benefit from new treatment since they may have a higher death rate than patients who receive old treatment. We define the cutoff points as the places where the upper and lower confidence bands equal to 0. Based on these cutoff points, we are able to identify the regions with the positive and negative values of

, respectively, so that it will guide us to select the best treatment for a future patient. To summarize, this treatment selection method consists of the following steps:

Step 1. Use the existing dataset to obtain the SBK estimator of the CSTE curve and the corresponding SCBs

at a given confidence level

.

Step 2. Identify the cutoff points for and the regions of positive and negative values of the constructed SCBs, as illustrated in .

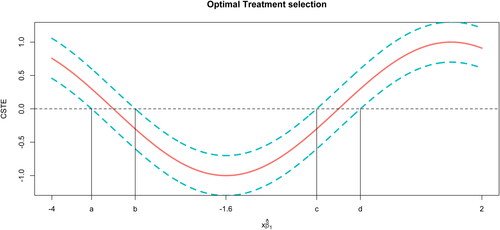

Fig. 1 A simulated example, demonstrating CSTE estimation, SCBs, and cutoff points. The solid curve is the estimate of the CSTE function. The dashed curves are the corresponding SCBs of the CSTE curve. Four vertical lines indicate the locations of the cutoff points.

Step 3. For a new patient, we first calculate , where

is the observed value of the covariates for this new patient. Then we make treatment recommendation for this patient based on what region the value of

falls into.

We use the following example to illustrate the method of using the SCBs to select optimal treatment for patients. We assume that has a range of

. In , the solid line represents a CSTE curve and dashed lines above and below the curve are the corresponding 95% SCBs. We assume that the outcome variable Y is the indicator of death. As shown in , the CSTE is decreasing when the value of

is from –4 to –1.6 and it is increasing when the value of

is from –1.6 to 2. In general, when the

value of a patient is within the range of

, a larger value implies that the patient more likely benefits from the new treatment than from the old treatment. On the other hand, if the patient’s

value falls into

, the new treatment is more beneficial when a smaller value of

is observed. Moreover, the results in also imply that we are 95% confident that a new patient with the

value falling into the region

or

will benefit from the old treatment, since the lower bands are all above zero in this region. If

falls into region

, then we have 95% confidence that the patient should receive the new treatment, as the upper bands are all below zero in this region. For the intervals

and

, zero is covered in the confidence band indicating that there is no significant difference between the effects of the new and old treatments.

It is worth nothing that the regions of indifference can also be constructed based on a parametric model using the limiting distribution of the parametric estimators; see more discussions in Wu (Citation2016). However, this approach is only directly applicable to the settings with low-dimensional covariates and to each given patient instead of a group of patients. Han, Zhou, and Liu (Citation2017) defined a modified version of the CSTE curve using the quantile of the covariate to compare covariate’s capacities for predicting responses to a treatment. Then they use the “best” covariate as a guidance to select treatment. This may be time-consuming when there is a large number of covariates. Our method selects relevant covariates automatically in the estimation procedure and combines information from all covariates through a weighted combination of those covariates with the weights estimated from the data. The weight reflects how important the corresponding covariate is for predicting the response. As a result, our method is more convenient and flexible in selecting optimal treatment.

5 Simulation Study

In this section, we investigate the finite-sample performance of our proposed method via simulated datasets. We run all simulations in R in a linux cluster. We consider the following examples:

Example 1.

Example 2.

Example 3.

Example 4.

Example 5.

.

The simulated data are generated as follows: the outcome Y is sampled from a binomial distribution with probability of success equals to ; the covariates X are generated from a truncated multivariate normal distribution with mean vector 0, covariance matrix with

, and each covariate is truncated by

; the binary scale covariate Z is sampled from Binomial

, which means that each subject is randomly assigned to either control or treatment group. We set

, and

. For Examples 1–3, we set

, and sample size n = 500, 750, 1000. For Examples 4 and 5, we consider that g2 and g1 are misspecified respectively. We choose p = 50, n = 1000, and

. For each pair of n and p, we repeat the simulations J = 300 times.

To obtain sparse estimates of βk

for high-dimensional cases, we choose SCAD as the penalty function and let (Fan and Li Citation2001). The optimal tuning parameter λ is chosen from a geometrically increasing sequence of 30 parameters by minimizing the modified Bayesian information criterion (Wang, Li, and Leng Citation2009):

where

is the loss function in (3),

is the number of nonzero elements in βk

,

.

We evaluate the following metrics for the nonparametric function based on 200 equally spaced grid points on the range of

: the mean squared error (MSE) of its estimator; the mean absolute error (MAE) of its estimator; the average coverage probability (CP) of its SCBs. Since the models in Example 1–3 are correctly specified, we compute the average number of parameters that are incorrectly estimated to be nonzero, the average number of parameters that are incorrectly estimated as zero; the proportions that all relevant covariates are correctly selected and the proportions that some relevant covariates are not selected.

We define that the oracle estimator of is obtained when the true indexes of nonzero components in β1 and β2 are given in Examples 1–3. The results are summarized in and . In , we see that the coverage probabilities are slightly less than 95% but close to 90% when the sample size is 750. When the sample is 1000, the empirical coverage is close to the nominal 95% confidence level. The mean square error and mean absolute error also decrease as sample size n increases. This indicates that the estimates of confidence bands become more accurate as the sample size increases. shows that the proposed asymptotic SCB performs well when the sample size is relatively large. However, with small sample size, a bootstrap procedure is recommended to construct the SCB. This can be a future research topic to explore. In , when c is small (i.e., the degree of misspecification is small), the numerical results are comparable to those for c = 0 with no misspecification. However, the performance deteriorates when c becomes large. Moreover, the method is more robust to the misspecification of g2 than that of g1. The model selection results are summarized in . The false positive and false negative are decreasing when n increases. It is worth noting that in practice we often need to make a reliable treatment recommendation strategy to a group of patients instead of a specific given patient, and the SCBs can serve the former purpose well. In , we also compare the performance of the pointwise confidence interval (CI) with that of the SCB for a group of randomly generated new patients with group size 1, 10, 100, 200, 500, respectively. The CI and SCB are constructed based on the data with sample

. We see that the pointwise CI has low empirical coverage rates when the group size is greater than 1. The pointwise CI can work well when we only have one patient. However, when we have a group of patients with different covariate values, we can make an incorrect treatment decision based on the pointwise CI for these patients.

Table 1 Simulation results for Examples 1–3.

Table 2 Simulation results for Examples 4 and 5 (misspecified cases) with p = 50 and n = 1000.

Table 3 Model selection results for Examples 1–3.

Table 4 Comparisons between the pointwise confidence interval and the SCBs for the empirical coverage rate of a group of randomly generated new patients with group size 1, 10, 100, 200, and 500, respectively.

6 A Real Data Example

In this section, we present and discuss the results of applying the procedure described in previous sections to a real dataset. We illustrate the applications of the CSTE curve in a real-world example and provide some insight into the interpretation of the results. The goal is to analyze the effect of Zhengtianwan in the treatment of migraines. This is a multicenter, randomized, double-blind, placebo-controlled trial on the effectiveness of Zhengtian pill on treating patients with migraines. A migraine is a common neurological disorder characterized by recurrent headache attacks. Migraine treatment involves acute and prophylactic therapy. The objective of this study is to evaluate the efficacy and safety of Zhengtian Pill for migraine prophylaxis. Zhengtian Pill, a Chinese Patented Medicine approved by the State Food and Drug Administration of China in 1987, has been used in clinical practice for more than 20 years in China to stimulate blood circulation, dredge collaterals, alleviate pains, and nourish the liver. To evaluate the effectiveness of Zhengtian Pill in preventing the onset of migraine attacks, a large-scale, randomized and prospective clinical study was conducted. Eligible patients were monitored during a baseline period of four weeks, during which the headache characteristics were recorded as baseline data. In this period, any use of migraine preventive medications was prohibited. After the baseline period, a 12-week treatment period and four-week follow-up period were carried out. Patients were requested to keep a headache diary throughout the whole study period, from which investigators were able to extract detailed information of migraine attacks including migraine days, frequency, duration, and intensity as well as the use of acute medication during the study period. The outcome measures were evaluated at 4, 8, and 12 weeks, and during the follow-up period. The patients who met the inclusion criteria were randomly assigned into the experimental group and control group in a 1:1 ratio using a computer-generated stochastic system.

In our analysis, the response variable Y is a binary outcome indicating if the number of days that headaches occur has decreased 8 weeks after patients were treated. Z is another indicator: Z = 1 means the subject is assigned in the experimental group; Z = 0 means in the control group. The covariates x1 to x3 are gender (0 for male, 1 for female), height and body weight, x4 to x12 are overall scores of Traditional Chinese Medicine Syndromes (Huozheng, Fengzheng, Xueyu, Tanshi, Qixu, Yuzheng, Xuexu, Yinxu, and Yangxu) for migraine. Those scores, with a range of 0–20, are commonly used to describe the severity of migraine symptoms in Traditional Chinese Medicine. The higher of the score means that the syndrome is more severe. All covariates are centered and standardized as input. We have 204 observations where 99 are in the experimental group and 105 are in the control group. The purpose of this exercise is to model the odds ratio as a function of those covariates. The number of covariates p = 12 might be regarded as small, so we estimate the CSTE curve using the algorithm in Section 3.1 with and without model selection. We use SCAD as our penalty function and the optimal tuning parameter is selected via the modified BIC criterion. The corresponding SCBs for the CSTE curve are calculated. The results are not intended as definitive analyses of these data.

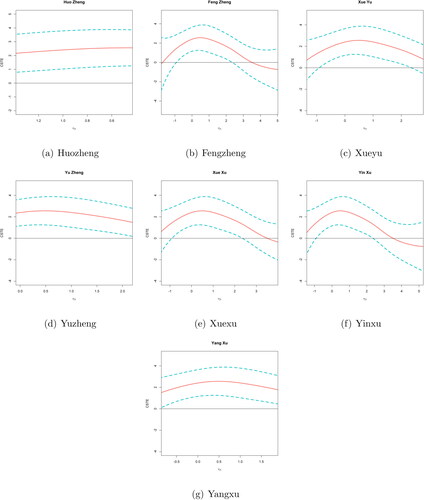

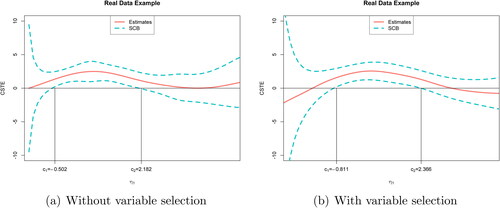

summarizes the point estimates of β1 and the corresponding standard errors. shows two estimated CSTE curve and their SCBs: (a) using all 12 variables; (b) using all variables except x7 and x8 (not selected). To aid in interpretation for each covariate, we depict each covariate versus g1 where other covariates are projected onto their mean values in . As we can see, Huozheng has a monotonic dependence on the CSTE, but the corresponding relationships for other overall scores are highly nonlinear; most of them have a quadratic appearance. On the basis of these figures, we can conclude that the odds ratio does depend on the linear combination of the covariates in a nonlinear manner.

Fig. 2 Real data example. Red curve is the CSTE curve; blue dashed curves are the SCBs. (a) Two cutoff points are –0.502 and 2.182; (b) two cutoff points are –0.811 and 2.366.

Table 5 Estimates of β1 and the corresponding standard errors (in parentheses).

When all variables are used to estimate the curve, the two cutoff points are . The estimates of the overall rating of biomarker values are

where the majority of the points (>95%) fall into the interval

. Two cutoff points divide this interval into three parts. Since the response variable y = 1 represents headache improvements after 8 weeks treatment, higher CSTE value means that the patient more likely benefits from the treatment. Suppose a new patient with biomarker value x0. When

falls into

and

, the treatment does not have a significant effect. When it falls into (c1, c2), then this treatment can improve the migraine of this patient. In the Zhengtianwan data, there are 37.7% of patients fall into the interval. On the other hand, when x7, x8 are removed from the model, we obtain a new estimate

of β1. As we can see from , the positive region of the CSTE curve becomes wider than that in (a), and the two cutoff points become –0.811 and 2.366. The majority of

falls into

. When

is in the range of

, the patient should receive Zhengtianwan treatment and 50.98% of all subjects in the training dataset fall into this interval.

The number of covariates is not very large in this dataset, so it is possible to fit a sparse linear model with high order terms and make treatment recommendation based on the parametric estimate of the CSTE curve. To this end, we model gk

for k = 1, 2 as a multivariate polynomial function of the covariates X with degree of two, that is, We obtain the estimates (

) of the unknown coefficients (

) by fitting regularized L1 logistic regression with the tuning parameter selected by 3-fold cross-validation. For a new patient with the observed covariates

, we obtain the predicted value for the CSTE curve as

. For this parametric modeling method, we can only use the predicted value for g1 to make treatment recommendation. Thus, based on the rule that a subject would benefit from the treatment if

is positive, 67.64% of the subjects should receive the treatment. We see that this percentage is higher than that obtained by our proposed method, as the parametric method only uses the point estimate for treatment selection. The construction of confidence intervals for g1 by the parametric method is very challenging, as it involves finding the joint asymptotic distribution of (

) after model selection, which is a difficult problem. Moreover, SCBs are not available based on the parametric modeling method. As discussed and shown in the simulation studies given in Section 5, SCBs are more reliable than the pointwise CIs for treatment recommendation for a group of patients.

7 Discussion

Both the simulation and real-world studies in Sections 5 and 6 suggest that the modeling procedure for CSTE curve can successfully detect and model complicated nonlinear relationships between binary response and high-dimensional covariates. In practice, the nonlinear dependencies we suggest are not characteristic of all situations. We adapt the spline-backfitted kernel smoothing to construct the SCBs for the nonlinear functions to choose the optimal treatment. Moreover, the SCBs can be used to verify the presence of nonlinear relationships as well.

Our model is motivated by the desire to provide an individualized decision rule for patients along with the ability to deal with high-dimensional covariates when the outcome is binary. The semiparametric modeling approach can be viewed as a generalization of the CSTE curve with one covariate proposed in Han, Zhou, and Liu (Citation2017) in the sense that the odds ratio depends on a weighted linear combination of all covariates. Although we consider a single decision with two treatment options, our model can be readily generalized to multiple treatment arms.

To identify model (1), we require that the unknown functions and

be nonconstant functions on their supports. Otherwise, the index parameters β1 and β2 are not identified. That is, if

for all

, then βk

can take an arbitrary value. Our treatment recommendation method is proposed based on the prior information that at least one baseline covariate is useful, and the treatment effect is not zero for some values of the covariates. Based on this prior information, our goal is to estimate the optimal treatment regimes and provide recommendations for individual patients based on their observed baseline covariates. For example, and show that the estimated values of

are nonzero in some regions, so that one can apply our method to identify those regions and then make treatment recommendations to patients. In practice, if we conjecture that all covariates are irrelevant, then for an arbitrarily given value of βk

,

for all

. We can conduct a test for this conjecture by choosing a value for βk

, estimating the unknown function

and then testing whether the function is a constant or not. Likewise, we can use this approach to testing whether

for all

, that is, whether the treatment has effect at all. If they are rejected, next we can fit our model to more precisely find the optimal value of βk

. Since this article focuses on making individualized treatment recommendations to patients based on their available covariates, we leave this interesting topic as a future work to explore.

7 Acknowledgments

The authors are grateful to the editor, the associate editor, and two anonymous reviewers for their constructive comments that helped us improve the article substantially.

uasa_a_1865167_sm3131.pdf

Download PDF (290.1 KB)7 Supplementary Materials

Supplementary materials include the technical proofs for all the theoretical results.

Additional information

Funding

References

- Breheny, P., and Huang, J. (2011), “Coordinate Descent Algorithms for Nonconvex Penalized Regression, With Applications to Biological Feature Selection,” The Annals of Applied Statistics, 5, 232–253. DOI: 10.1214/10-AOAS388.

- Cai, T., Tian, L., Wong, P. H., and Wei, L. J. (2011), “Analysis of Randomized Comparative Clinical Trial Data for Personalized Treatment Selections,” Biostatistics, 12, 270–282. DOI: 10.1093/biostatistics/kxq060.

- Chen, G., Zeng, D., and Kosorok, M. R. (2016), “Personalized Dose Finding Using Outcome Weighted Learning,” Journal of the American Statistical Association, 111, 1509–1547. DOI: 10.1080/01621459.2016.1148611.

- Chen, X., and Christensen, T. M. (2015), “Optimal Uniform Convergence Rates and Asymptotic Normality for Series Estimators Under Weak Dependence and Weak Conditions,” Journal of Econometrics, 188, 447–465. DOI: 10.1016/j.jeconom.2015.03.010.

- de Boor, C. (2001), A Practical Guide to Splines, Applied Mathematical Sciences, New York: Springer-Verlag.

- Fan, J., and Li, R. (2001), “Variable Selection via Nonconcave Penalized Likelihood and Its Oracle Properties,” Journal of the American Statistical Association, 96, 1348–1360. DOI: 10.1198/016214501753382273.

- Fan, J., and Lv, J. (2011), “Nonconcave Penalized Likelihood With NP-Dimensionality,” IEEE Transactions on Information Theory, 57, 5467–5484. DOI: 10.1109/TIT.2011.2158486.

- Han, K., Zhou, X.-H., and Liu, B. (2017), “CSTE Curve for Selection the Optimal Treatment When Outcome Is Binary,” Scientia Sinica Mathematica, 47, 497–514.

- Huang, Y. (2015), “Identifying Optimal Biomarker Combinations for Treatment Selection Through Randomized Controlled Trials,” Clinical Trials, 12, 348–56. DOI: 10.1177/1740774515580126.

- Huang, Y., and Fong, Y. (2014), “Identifying Optimal Biomarker Combinations for Treatment Selection via a Robust Kernel Method,” Biometrics, 70, 891–901. DOI: 10.1111/biom.12204.

- Janes, H., Brown, M. D., Huang, Y., and Pepe, M. S. (2014), “An Approach to Evaluating and Comparing Biomarkers for Patient Treatment Selection,” The International Journal of Biostatistics, 10, 99–121. DOI: 10.1515/ijb-2012-0052.

- Jiang, B., and Liu, J. (2014), “Variable Selection for General Index Models via Sliced Inverse Regression,” The Annals of Statistics, 42, 1751–1786. DOI: 10.1214/14-AOS1233.

- Jiang, B., Song, R., Li, J., and Zeng, D. (2019), “Entropy Learning for Dynamic Treatment Regimes,” Statistica Sinica, 29, 1633–1655.

- Kang, C., Janes, H., and Huang, Y. (2014), “Combining Biomarkers to Optimize Patient Treatment Recommendations,” Biometrics, 70, 695–707. DOI: 10.1111/biom.12191.

- Kosorok, M. R., and Laber, E. B. (2019), “Precision Medicine,” Annual Review of Statistics and Its Application, 6, 263–286. DOI: 10.1146/annurev-statistics-030718-105251.

- Laber, E. B., and Qian, M. (2019), “Generalization Error for Decision Problems,” Wiley StatsRef: Statistics Reference Online (accepted).

- Laber, E. B., and Zhao, Y. Q. (2015), “Tree-Based Methods for Individualized Treatment Regimes,” Biometrika, 102, 501–514. DOI: 10.1093/biomet/asv028.

- Liu, R., Yang, L., and Härdle, W. K. (2013), “Oracally Efficient Two-Step Estimation of Generalized Additive Model,” Journal of the American Statistical Association, 108, 619–631. DOI: 10.1080/01621459.2013.763726.

- Luckett, D. J., Laber, E. B., Kahkoska, A. R., Maahs, D. M., Mayer-Davis, E., and Kosorok, M. R. (2020), “Estimating Dynamic Treatment Regimes in Mobile Health Using V-Learning,” Journal of the American Statistical Association, 115, 692–706. DOI: 10.1080/01621459.2018.1537919.

- Ma, S., and He, X. (2016), “Inference for Single-Index Quantile Regression Models With Profile Optimization,” The Annals of Statistics, 44, 1234–1268. DOI: 10.1214/15-AOS1404.

- Ma, S., and Song, P. X.-K. (2015), “Varying Index Coefficient Models,” Journal of the American Statistical Association, 110, 341–356. DOI: 10.1080/01621459.2014.903185.

- Ma, S., and Yang, L. (2011), “A Jump-Detecting Procedure Based on Spline Estimation,” Journal of Nonparametric Statistics, 23, 67–81. DOI: 10.1080/10485250903571978.

- Ma, Y., and Zhou, X.-H. (2014), “Treatment Selection in a Randomized Clinical Trial via Covariate-Specific Treatment Effect Curves,” Statistical Methods in Medical Research, 26, 124–141. DOI: 10.1177/0962280214541724.

- Neykov, M., Liu, J., and Cai, T. (2016), “L1-Regularized Least Squares for Support Recovery of High Dimensional Single Index Models With Gaussian Designs,” Journal of Machine Learning Research, 17, 1–37.

- Qian, M., and Murphy, S. A. (2011), “Performance Guarantees for Individualized Treatment Rules,” The Annals of Statistics, 39, 1180–1210. DOI: 10.1214/10-AOS864.

- Radchenko, P. (2015), “High Dimensional Single Index Models,” Journal of Multivariate Analysis, 139, 266–282. DOI: 10.1016/j.jmva.2015.02.007.

- Rubin, D. B. (2005), “Causal Inference Using Potential Outcomes,” Journal of the American Statistical Association, 100, 322–331. DOI: 10.1198/016214504000001880.

- Rubin, D. B., and van der Laan, M. J. (2012), “Statistical Issues and Limitations in Personalized Medicine Research With Clinical Trials,” The International Journal of Biostatistics, 8, 18. DOI: 10.1515/1557-4679.1423.

- Shi, C., Fan, A., Song, R., and Lu, W. (2018), “High-Dimensional a-Learning for Optimal Dynamic Treatment Regimes,” The Annals of Statistics, 46, 925–957. DOI: 10.1214/17-AOS1570.

- Song, R., Luo, S., Zeng, D., Zhang, H., Lu, W., and Li, Z. (2017), “Semiparametric Single-Index Model for Estimating Optimal Individualized Treatment Strategy,” Electronic Journal of Statistics, 11, 364–384. DOI: 10.1214/17-EJS1226.

- Stone, C. (1985), “Additive Regression and Other Nonparametric Models,” The Annals of Statistics, 13, 689–705. DOI: 10.1214/aos/1176349548.

- Taylor, J. M. G., Cheng, W., and Foster, J. C. (2015), “Reader Reaction to ‘a Robust Method for Estimating Optimal Treatment Regimes’ by Zhang et al. (2012),” Biometrics, 71, 267–273. DOI: 10.1111/biom.12228.

- Wager, S., and Athey, S. (2018), “Estimation and Inference of Heterogeneous Treatment Effects Using Random Forests,” Journal of the American Statistical Association, 113, 1228–1242. DOI: 10.1080/01621459.2017.1319839.

- Wang, H., Li, B., and Leng, C. (2009), “Shrinkage Tuning Parameter Selection With a Diverging Number of Parameters,” Journal of the Royal Statistical Society, Series B, 71, 671–683. DOI: 10.1111/j.1467-9868.2008.00693.x.

- Wang, L., and Yang, L. (2007), “Spline-Backfitted Kernel Smoothing of Nonlinear Additive Autoregression Model,” The Annals of Statistics, 35, 2474–2503. DOI: 10.1214/009053607000000488.

- Watson, M. W., and Engle, R. F. (1983), “Alternative Algorithms for the Estimation of Dynamic Factor, Mimic and Varying Coefficient Regression Models,” Journal of Econometrics, 23, 385–400. DOI: 10.1016/0304-4076(83)90066-0.

- Wu, T. (2016), “Set Valued Dynamic Treatment Regimes,” Ph.D. thesis, The University of Michigan.

- Zhang, B., Tsiatis, A. A., Davidian, M., Zhang, M., and Laber, E. (2012), “Estimating Optimal Treatment Regimes From a Classification Perspective,” Stat, 1, 103–114. DOI: 10.1002/sta.411.

- Zhang, B., and Zhang, M. (2018), “C-Learning: A New Classification Framework to Estimate Optimal Dynamic Treatment Regimes,” Biometrics, 74, 891–899. DOI: 10.1111/biom.12836.

- Zhang, C.-H. (2010), “Nearly Unbiased Variable Selection Under Minimax Concave Penalty,” The Annals of Statistics, 38, 894–942. DOI: 10.1214/09-AOS729.

- Zhang, Y., Laber, E. B., Davidian, M., and Tsiatis, A. A. (2018), “Interpretable Dynamic Treatment Regimes,” Journal of the American Statistical Association, 113, 1541–1549. DOI: 10.1080/01621459.2017.1345743.

- Zhao, Y., Zeng, D., Rush, A. J., and Kosorok, M. R. (2012), “Estimating Individualized Treatment Rules Using Outcome Weighted Learning,” Journal of the American Statistical Association, 107, 1106–1118. DOI: 10.1080/01621459.2012.695674.

- Zheng, S., Liu, R., Yang, L., and Härdle, W. K. (2016), “Statistical Inference for Generalized Additive Models: Simultaneous Confidence Corridors and Variable Selection,” TEST, 25, 607–626. DOI: 10.1007/s11749-016-0480-8.

- Zhou, X., Mayer-Hamblett, N., Khan, U., and Kosorok, M. R. (2017), “Residual Weighted Learning for Estimating Individualized Treatment Rules,” Journal of the American Statistical Association, 112, 169–187. DOI: 10.1080/01621459.2015.1093947.

- Zhou, X.-H., and Ma, Y. B. (2012), “BATE Curve in Assessment of Clinical Utility of Predictive Biomarkers,” Science China Mathematics, 55, 1529–1552. DOI: 10.1007/s11425-012-4473-0.

- Zhu, K., Huang, Y., and Zhou, X.-H. (2018), “Tree-Based Ensemble Methods for Individualized Treatment Rules,” Biostatistics & Epidemiology, 2, 61–83.

- Zhu, W., Zeng, D., and Song, R. (2019), “Proper Inference for Value Function in High-Dimensional Q-Learning for Dynamic Treatment Regimes,” Journal of the American Statistical Association, 114, 1404–1417. DOI: 10.1080/01621459.2018.1506341.