?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

We study empirical Bayes estimation of the effect sizes of N units from K noisy observations on each unit. We show that it is possible to achieve near-Bayes optimal mean squared error, without any assumptions or knowledge about the effect size distribution or the noise. The noise distribution can be heteroscedastic and vary arbitrarily from unit to unit. Our proposal, which we call Aurora, leverages the replication inherent in the K observations per unit and recasts the effect size estimation problem as a general regression problem. Aurora with linear regression provably matches the performance of a wide array of estimators including the sample mean, the trimmed mean, the sample median, as well as James-Stein shrunk versions thereof. Aurora automates effect size estimation for Internet-scale datasets, as we demonstrate on data from a large technology firm.

1 Introduction

Empirical Bayes (EB) (Efron Citation2012; Robbins Citation1956, Citation1964) and related shrinkage methods are the de facto standard for estimating effect sizes in many disciplines. In genomics, EB is used to detect differentially expressed genes when the number of samples is small (Smyth Citation2004; Love, Huber, and Anders Citation2014). In survey sampling, EB improves noisy estimates of quantities, like the average income, for small communities (Rao and Molina Citation2015). The key insight of EB is that one can often estimate unit-level quantities better by sharing information across units, rather than analyzing each unit separately.

Formally, EB models the observed data as arising from the following generative process:

(1)

(1)

The goal here is to estimate the mean parameters, for

, from the observed data

. If G and F are fully specified, then the optimal estimator (in the sense of mean squared error) is the posterior mean

, which achieves the Bayes risk. EB deals with the case where F or G is unknown, so the Bayes rule cannot be calculated. Most modern EB methods (Jiang and Zhang Citation2009; Brown and Greenshtein Citation2009; Muralidharan Citation2012; Saha and Guntuboyina Citation2020) assume that F is known (say,

) and construct estimators

that asymptotically match the risk of the unknown Bayes rule, without making any assumptions about the unknown prior G.

We examine the same problem of estimating the μi s when the likelihood F is also unknown. Indeed, knowledge of F is an assumption that requires substantial domain expertise. For example, it took many years for the genomics community to agree on an EB model for detecting differences in gene expression based on microarray data (Baldi and Long Citation2001; Lönnstedt and Speed Citation2002; Smyth Citation2004). Then, once this technology was superseded by bulk RNA-Seq, the community had to devise a new model from scratch, eventually settling on the negative binomial likelihood (Love, Huber, and Anders Citation2014; Gierliński et al. Citation2015).

Unfortunately, there is no way to avoid making such strong assumptions when there is no information besides the one Zi

per μi

. If F is even slightly underspecified, then it becomes hopeless to disentangle F from G. To appreciate the problem, consider the Normal–Normal model:(2)

(2)

Here, Zi

is marginally distributed as , and the observations Zi

only provide information about

. Now, when

is known, A can be estimated by first estimating the marginal variance and subtracting

. Indeed, Efron and Morris (1973) showed that by plugging in a particular estimate of A into the Bayes rule

, one recovers the celebrated James-Stein estimator (James and Stein Citation1961). Yet, as soon as

is unknown, then A (and hence, G) is unidentified, and there is no hope of approximating the unknown Bayes rule.

However, as any student of random effects knows, the Normal–Normal model (2) becomes identifiable if we simply have independent replicates Zij for each unit i. The driving force behind this work is an analogous observation in the context of empirical Bayes estimation: replication makes it possible to estimate μi with essentially no assumptions on F or G. The method we propose, described in the next section, performs well in practice and nearly matches the risk of the Bayes rule, which depends on the unknown F and G.

2 The Aurora Method

First, we formally specify the EB model when replicates are available.

(3)

(3)

Again, the quantity of interest is the mean parameter . The additional parameter αi

is a nuisance parameter that allows for heterogeneity across the units, while preserving exchangeability (Galvao and Kato Citation2014; Okui and Yanagi Citation2020). For example, αi

is commonly taken to be the conditional variance

to allow for heteroscedasticity. However, αi

could even be infinite-dimensional—for instance, a random element from a space of distributions. The αi

have no impact on our estimation strategy and are purely a technical device.

Given data from model (3), one approach would be to collapse the replicates into a single observation per unit—say, by taking their mean—which would bring us back to the setting of model (1). We could appeal to the central limit theorem to justify knowing that the likelihood is Normal. An important message of this paper is that we can do better by using the replicates.

2.1 Proposed Method

Since we have replicates, we can split the Zij

into two groups for each i. First, consider the case of K = 2 replicates so that we can write (Xi

, Y i

) for . Now, Xi

and Yi

are conditionally independent given

. illustrates the relationship between Xi

and Yi

under two different settings. The key insight is that the conditional mean

is (almost surely) identical to the posterior mean

, by the following calculation (Krutchkoff Citation1967). (For convenience, we suppress the dependence of the expected values on G, F.)

(4)

(4)

Fig. 1 EB with replicates: Two simulations with N = 1000 and K = 2. First, we draw , where

in the left panel and G is the uniform distribution on the discrete set

in the right panel. Then, for each i, we draw

and plot the points (Xi

, Y i

). The line shows the posterior mean

, which in light of (4), is identical to the conditional mean

.

![Fig. 1 EB with replicates: Two simulations with N = 1000 and K = 2. First, we draw μi∼G, where G=N(0.5,4) in the left panel and G is the uniform distribution on the discrete set {−3, 0, 3} in the right panel. Then, for each i, we draw Xi,Yi | μi ∼iid N(μi,1) and plot the points (Xi , Y i ). The line shows the posterior mean E[μi | Xi], which in light of (4), is identical to the conditional mean E[Yi | Xi].](/cms/asset/8c91677f-b837-4093-92e4-32dfc5c1c3ec/uasa_a_1967164_f0001_c.jpg)

This suggests that we can estimate the Bayes rule based on Xi

(i.e., the posterior mean ) by simply regressing Yi

on Xi

using any black-box predictive model, such as a local averaging smoother. Let

be the fitted regression function; our estimate of each μi

is then just

.

To extend this method to K > 2, we can again split the replicates into two parts:

(5)

(5) where

is an arbitrary fixed index for now. (We suppress j in the notation below.) Now, one approach is to summarize the vector

by the mean of its values

and regress Yi

on

to learn

(Coey and Cunningham Citation2019). This works for essentially the same reason as the K = 2 case. However, if

is not sufficient for

in model (3) with K – 1 replicates, then

may be different from and suboptimal to

.

Instead, we propose learning directly. The rationale is contained in the following result.

Proposition 1.

Let Zij

be generated according to EquationEquation (3)(3)

(3) and assume that

. Define

and Yi

as in EquationEquation (5)

(5)

(5) . Let

be the vector of order statistics of

:

That is, is simply a sorted version of

. Then, almost surely

(6)

(6)

Proof.

The same argument as EquationEquation (4)(4)

(4) shows that

almost surely. Now, under exchangeable sampling, the order statistics

are sufficient for

and therefore it follows that

almost surely, with no assumptions on F. □

EquationEquation (6)(6)

(6) suggests that we should regress Yi

on the order statistics

to learn a function

that approximates the posterior mean. The fitted values

can be used to estimate μi

. There is one more detail worth mentioning. The estimate

depends on the arbitrary choice of j in EquationEquation (5)

(5)

(5) . To reduce the variance of the estimate, we average over all choices of

. This method, summarized in , is called Aurora, which stands for “Averages of Units by Regressing on Ordered Replicates Adaptively.”

Table 1 A summary of Aurora, which is the proposed method for estimating the means μi when the data come from model (3).

3 Related Work

The Aurora method is closely related to the extensive literature on empirical Bayes, which we cite throughout this paper. One work that is worth emphasizing is Stigler (Citation1990), who motivated empirical Bayes estimators such as James-Stein, through the lens of regression to the mean; for us, regression is not just a motivation but the estimation strategy itself. We were surprised to find a similar idea to Aurora in a forgotten manuscript, uncited to date, that Johns (Citation1986) contributed to a symposium for Herbert Robbins (Van Ryzin Citation1986). Although Johns (Citation1986) used a fairly complex predictive model (projection pursuit regression, Friedman and Stuetzle Citation1981), we show theoretically (Sections 4 and 5) and empirically (Sections 6 and 7) that the order statistics encode enough structure that linear regression and k-nearest neighbor regression can be used as the predictive model.

Models similar to EquationEquation (3)(3)

(3) with replicated noisy measurements of unobservable random quantities have been studied in the context of deconvolution and error-in-variables regression (Devanarayan and Stefanski Citation2002; Schennach Citation2004). In econometrics, panel data with random effects are often modelled as in EquationEquation (3)

(3)

(3) with the additional potential complication of time dependence, that is,

indexes time, while

may correspond to different geographic regions (Horowitz and Markatou Citation1996; Hall and Yao Citation2003; Neumann Citation2007; Jochmans and Weidner 2018). Fithian and Ting (Citation2017) used observations from model (3) to learn a low-dimensional smooth parametric model that describes the data well. However, their goal was testing, whereas our goal is mean estimation.

The Aurora method is also related to a recent line of research that leverages black-box prediction methods to solve statistical tasks that are not predictive in nature. For example, Chernozhukov et al. (Citation2018) considered inference for low dimensional causal quantities when high dimensional nuisance components are estimated by machine learning. Boca and Leek (Citation2018) reinterpreted the multiple testing problem in the presence of informative covariates as a regression problem and estimated the proportion of null hypotheses conditionally on the covariates. The estimated conditional proportion of null hypotheses may then be used for downstream multiple testing methods (Ignatiadis and Huber Citation2021). Black-box regression models can also be used to improve empirical Bayes point estimates in the presence of side-information (Ignatiadis and Wager Citation2019).

Finally, a crucial ingredient in Aurora is data splitting, which is a classical idea in statistics (Cox Citation1975), typically used to ensure honest inference for low-dimensional parameters. In the context of simultaneous inference, Rubin, Dudoit, and Van der Laan (Citation2006) and Habiger and Peña (Citation2014) used data-splitting to improve power in multiple testing.

4 Properties of the Aurora Estimator

We provide theoretical guarantees for the general Aurora estimator described in Section 2. Throughout this section, we split into

and

as in EquationEquation (5)

(5)

(5) , and we write

for the order statistics of

. We also write

and

for the concatenation of all the

and

, respectively. As before, we omit j whenever a definition does not depend on the specific choice of j.

4.1 Three Oracle Benchmarks

In this section, we define three benchmarks for assessing the quality of a mean estimator in model (3) (in terms of mean squared error). These benchmarks provide the required context to interpret the theoretical guarantees of Aurora that we establish in Section 4.2.

First, it is impossible to improve on the Bayes rule, so the Bayes risk serves as an oracle. We denote the Bayes risk (based on all K replicates) by(7)

(7)

As explained in Proposition 1, the function we seek to mimic (for each j in the loop of the Aurora algorithm in ) is the oracle Bayes rule based on the order statistics of K – 1 replicates,(8)

(8)

The risk of is the Bayes risk based on

replicates,

(9)

(9)

In the Aurora algorithm, we average over the choice of held-out replicate j. Thus, we also define an oracle rule that averages over all j. We use the following notation for this oracle and its risk:

(10)

(10)

It is immediate that . Also, by the definition of

in EquationEquation (10)

(10)

(10) and by Jensen’s inequality, it holds that,

so

. This bound can be improved through the following insight:

are jackknife estimates of the posterior mean

and

is their average. By a fundamental result for the jackknife (Efron and Stein Citation1981, theor. 2), it holds that1

. Armed with this insight, in Supplement A.1 we prove the following Proposition.

Proposition 2.

Under model (3) with , it holds that

(11)

(11)

Remark 1.

For sufficiently “regular” problems, will typically be of order

, while

will be of order

; we make this argument rigorous for location families in Section 5.2 and Supplement E. The correction term on the right-hand side of EquationEquation (11)

(11)

(11) will also be of order

in such problems. In our next example, we demonstrate the importance of this additional term.

Example 1

(Normal likelihood with Normal prior). We consider the Normal–Normal model (2) from the introduction with prior variance A and noise variance , and with replicates

as in EquationEquation (3)

(3)

(3) . In this case,2 we can analytically compute that

, and so it follows that

. The right-most inequality in (11) is an equality and

. We conclude that, in the Normal-Normal model, the averaged oracle

has risk closer to the full Bayes estimator than to the Bayes estimator based on K – 1 replicates.

4.2 Regret Bound for Aurora

In this section, we derive our main regret bound for Aurora. The basic idea is that the in-sample error of the regression method directly translates to bounds on the estimation error for the effect sizes μi

, and thus, Aurora can leverage black-box predictive models. To this end, we define the in-sample prediction error for estimating the averaged oracle

,

(12)

(12)

When the predictive mechanism of the Aurora algorithm () is the same for all j,3 then Jensen’s inequality provides the following convenient upper bound on EquationEquation (12)(12)

(12) :

(13)

(13)

is the in-sample error from approximating

by

. We are ready to state our main result:

Theorem 3.

The mean squared error of the Aurora estimator (described in ) satisfies the following regret bound under model (3) with

:

(i.e., the Aurora estimator based on a single held-out response replicate) satisfies the above regret bound with

replaced by

.

As elaborated in Remark 1, the second error term in the above decomposition will typically be negligible compared to ; this is the price we pay for making no assumptions about F and G. Hence, beyond the irreducible Bayes error, the main source of error depends on how well we can estimate

. Crucially, this error is the in-sample estimation error of

, which is often easier to analyze and smaller in magnitude than out-of-sample estimation error (Hastie, Tibshirani, and Friedman Citation2009; Chatterjee Citation2013; Rosset and Tibshirani Citation2020).

4.3 Aurora With k-Nearest-Neighbors is Universally Consistent

Theorem 3 d

emonstrated that a regression model with small in-sample error translates, through Aurora, to mean estimates with small mean squared error. Now, we combine this result with results from nonparametric regression to prove that under model (3), it is possible to asymptotically (in N) match the oracle risk (10) and in view of Proposition 2, to outperform the Bayes risk based on K – 1 replicates.

Theorem 4

(Universal consistency with k-Nearest-Neighbor (kNN) estimator). Consider model (3) with . We estimate μi

with the Aurora algorithm where

is the k-Nearest-Neighbor (kNN) estimator with

, that is, the nonparametric regression estimator which predicts4

and is the Euclidean norm. If

satisfies

as

, then

This result is a consequence of universal consistency in nonparametric regression (Stone Citation1977; Györfi et al. Citation2002). It demonstrates that Aurora can asymptotically nearly match the Bayes risk with substantial generality,5 and suggests the power and expressivity of the Aurora algorithm.

To apply Aurora with kNN, a data-driven choice of k is required. In Supplement B.2 we describe a procedure that chooses the number of nearest neighbors kj per held-out response replicate j through leave-one-out (LOO) cross-validation on the units, and we study its performance empirically in the simulations of Section 6. Nevertheless, Aurora-kNN tuned by LOO, is computationally involved. This motivates our next section; there we study a procedure that is interpretable, easy to implement and that scales well to large datasets.

5 Aurora With Linear Regression

In this section, we analyze the Aurora algorithm when linear regression is used as the predictive model. That is, is a linear function of the order statistics

(14)

(14) where

are the ordinary least squares coefficients of the linear regression

. We call the method Auroral, with the final “l” signifying “linear” and write

for the resulting estimates of μi

. Our main result is that Auroral matches the performance of the best estimator that is linear in the order statistics based on K – 1 replicates. To state this result formally, we first define the minimum risk among estimators in class

:

(15)

(15)

The class of interest to us specifically is the class of estimators linear in the order statistics(16)

(16)

This is a broad class that includes all estimators of μi

based on that proceed through the following two steps: (i) summarization, where an appropriate summary statistic of the likelihood F is used, that is linear in the order statistics, and (ii) linear shrinkage, where the summary statistic is linearly shrunk toward a fixed location. Schematically

The summarization step can apply nonlinear functions of the original data, such as the median and the trimmed mean, because the input to the linear model are the order statistics, rather than the original data. Such linear combinations of the order statistics are known as L-statistics, which is a class so large that it includes efficient estimators of the mean in any smooth, symmetric location family, see Van der Vaart (Citation2000) and Section 5.2.

We now show that Auroral matches asymptotically in N in the setting of Theorem 3.

Theorem 5

(Regret over linear estimators).

1. Assume there exists CN

> 0 such that ,6 then:

2. Assume there exists , such that

, then:

If , then the conclusion of Theorem 3 holds for

with the term

bounded by

(under Assumption (1)), resp.

(under (2)).

In datasets, we often encounter . Then, Theorem 5 implies that Auroral will almost match the risk

of the best L-statistic based on K – 1 replicates. This result is in the spirit of restricted empirical Bayes (Griffin and Krutchkoff Citation1971; Maritz Citation1974; Norberg Citation1980; Robbins Citation1983), which seeks to find the best estimator among estimators in a given class, such as the best Bayes linear estimators of Hartigan (Citation1969). In these works, linearity typically refers to linearity in

and not

; Lwin (Citation1976), however, used empirical Bayes to learn the best L-statistic from the class

, when the likelihood takes the form of a known location-scale family.

5.1 Examples of Auroral Estimation

In this section, we give three examples in which Auroral satisfies strong risk guarantees.

Example 2

(Point mass prior). Suppose the prior on μ is a point mass at ; that is,

. Then, the Bayes rule based on the order statistics,

, has risk 0 and is trivially a member of

, so

. Therefore, by Theorem 5, provided that

almost surely (

), the risk of the Auroral estimator satisfies

Example 3

(Normal likelihood with Normal prior). We revisit Example 1,7 wherein we argued that the averaged oracle (10) has risk equal to the Bayes risk

plus

. Auroral attains this risk, plus the ordinary least squares estimation error that decays as

.

In addition to Auroral, we consider two estimators, that take advantage of the fact that is sufficient for μi

in the Normal model with K replicates:

Coey and Cunningham (Citation2019) (CC-L): We proceed similarly to the Auroral algorithm with one modification. For the jth held-out replicate, we regress

on

using ordinary least squares to obtain

and

. Finally we estimate μi by

.

James and Stein (Citation1961) (JS): We estimate μi by

.

CC-L implicitly uses the assumption of Normal likelihood by reducing the replicates to their mean, which is the sufficient statistic. Similar to Auroral, its risk is equal to plus

and the least-square estimation error, which in this case is

(only the slope and intercept need to be estimated for each held-out replicate). James-Stein makes full use of the model assumptions (Normal prior, Normal likelihood, and known variance) and is expected to perform best. JS achieves the Bayes risk based on K observations,

plus an error term that decays as

. The price Auroral pays compared to CC-L and JS for using no assumptions whatsoever, when reduction to the mean was possible, is modest.

Example 4

(Exponential families with conjugate priors). Let ν be a σ-finite measure on the Borel sets of and

be the interior of the convex hull of the support of ν. Let

. Suppose

is open, that both

are nonempty and that

is differentiable for

with strictly increasing derivative

.

Now, suppose are generated from model (3) as follows (with G, F implicitly defined). First, θi

is drawn from a prior with Lebesgue density proportional to

on Θ for some

and

. Next,

and

is drawn from the distribution with ν-density equal to

, where

. Diaconis and Ylvisaker (Citation1979, theor. 2) then prove that,

Thus, and Auroral matches the risk of

up to the error term in Theorem 5.

5.2 Auroral Estimation in Location Families

In this section, we provide another example of Auroral estimation. In contrast to the rest of this article, which treats K as fixed, we consider an asymptotic regime in which both K and N tend to (with K growing substantially slower than N). In doing so, we hope to provide the following conceptual insights: first, we provide a concrete setting in which Auroral dominates any method that first summarizes

as

. Second, we elaborate on the expressivity of the class

(16).

We consider model (3) with for a smooth prior G and

, where

has density

with respect to the Lebesgue measure and

is a density that is symmetric around 0.

We proceed with a heuristic discussion, that we will make rigorous in the formal statements below. Suppose for now that is sufficiently regular with Fisher Information,8

(18)

(18)

Then, by classical parametric theory, we expect that as

9. On the other hand, another classical result in the theory of robust statistics (Bennett Citation1952; Jung Citation1956; Chernoff, Gastwirth, and Johns Citation1967; Van der Vaart Citation2000) states that for smooth location families, there exists an L-statistic (i.e., a linear combination of the order statistics) that is asymptotically efficient. By Theorem 5, we thus anticipate that the risk of Auroral is equal to

.

In contrast, if we first summarize by

, then the best estimator we can possibly use is the posterior mean,

. However, for K large (so that the likelihood swamps the prior),

, and so the risk will behave roughly as

, where

. We summarize our findings in the Corollary below, and provide the formal proofs in Supplement E.

Corollary 6 (Smooth location families). Suppose that G satisfies regularity Assumption 1 and satisfies regularity Assumption 2 (where both assumptions are stated in Supplement E.1). Then, in an asymptotic regime with

, it holds that,

where

can be either the CC-L estimator

or the Bayes estimator

.

Recalling that with equality when

is the Gaussian density, we see that Auroral adapts10 to the unknown density

and outperforms any estimator that first averages

.

What about location families that are not regular? Below we give an example, namely the Rectangular location family with , B > 0 in which a similar conclusion to Corollary 6 holds. The advantage of Auroral is even more pronounced in this case (

risk for Auroral, versus

for any method that first averages

).

Corollary 7 (Rectangular location family). Suppose is the uniform density on

for a B > 0, that G satisfies regularity Assumption 1 in Supplement E.1 and that

. Then,

6 Empirical Performance in Simulations

In this section, we study the empirical performance of Aurora and competing empirical Bayes algorithms in three scenarios: homoscedastic location families (Section 6.1), heteroscedastic location families (Section 6.2) and a heavy-tailed likelihood (Section 6.3).

6.1 Homoscedastic Location Families

We start by empirically studying the location family problem from Section 5.2 for K = 10 replicates and units. We first generate the means μi

from one of two possible priors G, parameterized by a simulation parameter A: the Normal prior

and the three-point discrete prior that assigns equal probabilities to

. Both prior distributions have variance A.

We then generate the replicates Zij

around each mean μi

from one of three location families: Normal, Laplace, or Rectangular. The parameters of these distributions are chosen so that the noise variance is .

We compare eight estimators of μi :

Aurora-type methods: Auroral, Aurora-kNN (Aur-kNN) with k chosen by leave-one-out cross-validation from the set

(as described in Supplement B.2) and CC-L (described in Example 3).

Standard estimators of location: The mean

, the median and the midrange (that is,

).

Standard empirical Bayes estimators applied to the averages

: James-Stein (positive-part) shrinking toward

(which we provide with oracle knowledge of

) and the nonparametric maximum likelihood estimator (NPMLE) of Koenker and Mizera (Citation2014) (as implemented in the REBayes package [Koenker and Gu Citation2017] in the function “GLmix”), which is a convex programming formulation of the estimation scheme of Kiefer and Wolfowitz (Citation1956) and Jiang and Zhang (Citation2009). For the NPMLE we estimate the standard deviation for each unit by the sample standard deviation

over its replicates and use the working approximation

.

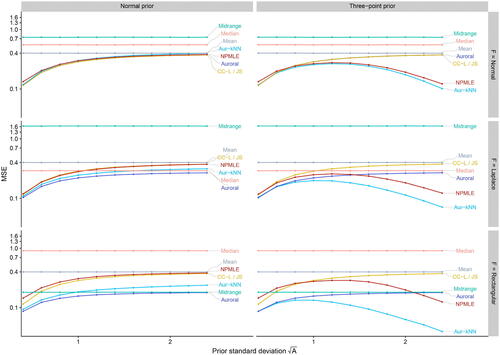

The results11 are shown in . The standard location estimators have constant mean squared error (MSE) in all panels, since they do not make use of the prior.

Fig. 2 Homoscedastic location families: The MSE of the 8 estimators, as a function of the prior standard deviation. Each column represents a different prior distribution: Normal (left) and a three-point distribution (right). Each row represents a different location family for the likelihood (Normal, Laplace, Rectangular).

We discuss the case of a Normal prior first: here the MSE of all methods is non-decreasing in A. Auroral closely matches the best estimator for every A and likelihood. In the case of Normal likelihood, James-Stein (with oracle knowledge of ), CC-L and Auroral perform best,12 followed closely by Aurora-kNN and the NPMLE. The standard location estimators are competitive when the prior is relatively uninformative (i.e., A is large). Among these, the mean performs best for the Normal likelihood, the median for the Laplace likelihood and the midrange for the Rectangular likelihood.

The three component prior highlights a case in which nonlinear empirical Bayes shrinkage can be helpful. Here, Aurora-kNN performs best across all settings, closely followed by the NPMLE in the case of the Normal likelihood13. The NPMLE is also the second most competitive method for the Laplace likelihood with large prior variance A. The behavior of all other methods tracks closely with their behavior under a Normal prior.

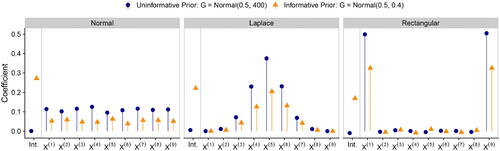

One may at this point wonder: How do the Auroral weights (coefficients) in EquationEquation (14)

(14)

(14) look like? In , we show these weights from a single replication (with

and K = 10) for each of the three likelihoods considered above and for two Normal priors. First, we focus on the points in blue, which correspond to an uninformative prior (

). When the likelihood F is Normal, the Auroral weights are roughly constant and equal to 1/9. In other words,

, that is the Auroral estimate with held-out replicate j, is (approximately) the sample mean

of

. When the likelihood F is Laplace, the Auroral weights pick out the median

and a few order statistics around it. When the likelihood F is Rectangular, Auroral assigns approximately 1/2 weight each to the minimum and the maximum and 0 weight to all of the other order statistics. In other words,

is (approximately) the midrange of

. Notice that Auroral did not know the likelihood F in any of these examples. Rather, it adaptively learned an appropriate summary from the data.

Fig. 3 The coefficients of the intercept and the order statistics in the linear regression model for in Auroral: The colors represent different choices of prior G (defined in the legend), while the panels represent different choices of likelihood F. The coefficients shown have been averaged over the held-out replicate j of the Auroral algorithm ().

Next, we examine the difference between using informative versus uninformative priors G. When the prior is informative (, orange in ), Auroral automatically learns a nonzero intercept, which is determined by the prior mean, and the remaining weights are shrunk toward zero.

6.2 Heteroscedastic Location Families

In our second simulation setting, we study location families where is also random and so we find ourselves in the heteroscedastic location family problem. Again we benchmark Auroral, Aurora-kNN (k chosen by leave-one-out cross-validation from the set

), CC-L, the Gaussian NPMLE and the sample mean. We also consider two estimators which have been proposed specifically for the heteroscedastic Normal problem, the SURE (Stein’s Unbiased Risk Estimate) method of Xie, Kou, and Brown (Citation2012) that shrinks toward the grand mean and the GL (Group-linear) estimator of Weinstein et al. (Citation2018). We apply these estimators to the averages

. Both of these estimators have been developed under the assumption that the analyst has exact knowledge of

; so we provide them with this oracle knowledge (SURE (or.) and GL (or.)—the other methods are not provided this information). Furthermore, we apply the Group-linear method that uses the sample variance

calculated based on the replicates.

We use three simulations, inspired by simulation settings (a), (c) and (f) of Weinstein et al. (Citation2018): in all three simulations we let . First we draw

, where

is a simulation parameter that we vary. Then for the first setting we draw

, while for the last two settings we let

. Weinstein et al. (Citation2018) used the latter as a model of strong mean–variance dependence. The methods that have access to

can in principle predict perfectly (i.e., the Bayes risk is equal to 0). Finally we draw

where

and F is either the Normal location-scale family (first two settings) or the Rectangular location-scale family (last setting).

Results from the simulations are shown in . The oracle SURE and oracle Group-linear estimators perform best in the first panel and oracle Group-linear strongly outperforms all other methods in the last two panels. This is not surprising, since oracle Group-linear has oracle access to and the method was developed for precisely such settings with strong mean-variance relationship. Among the other methods, Auroral and Aurora-kNN remain competitive. In the first panel, they match CC-L, while in the last two panels they outperform Group-linear with estimated variances. We point out that Auroral outperforms CC-L in the second panel, despite the Normal likelihood. This is possible, because the mean is no longer sufficient in the heteroscedastic problem.

Fig. 4 Heteroscedastic location families: Data are generated as follows: First , with

varying on the x-axis. Then

(in the left panel) or

. Finally

,

where K = 10,

and

is a Normal location-scale family (first two panels) or Rectangular (last panel). The y-axis shows the mean squared error of the estimation methods.

![Fig. 4 Heteroscedastic location families: Data are generated as follows: First σ¯i2∼U[0.1,σ¯max2], with σ¯max2 varying on the x-axis. Then μi∼N(0,0.5) (in the left panel) or μi=σ¯i2. Finally Zij|μi,σi2∼F(·|μi,σi2), j=1,…,K where K = 10, σi2=σi¯2K and F(·|μi,σi2) is a Normal location-scale family (first two panels) or Rectangular (last panel). The y-axis shows the mean squared error of the estimation methods.](/cms/asset/c3b5e872-8774-46d5-aa9b-c028f1d65238/uasa_a_1967164_f0004_c.jpg)

6.3 A Pareto Example

For our third example, we consider a Pareto likelihood, which is heavy tailed and non-symmetric. Concretely, we let (with

a varying simulation parameter) and

is the Pareto distribution with tail index α = 3 and mean μi

. We compare Auroral, Aurora-kNN (Aur-kNN) with k chosen by leave-one-out cross-validation from the set

(as described in Supplement B.2), CC-L, the sample mean and median, as well as the maximum likelihood estimator for the Pareto distribution (assuming the tail index is unknown). For this example we also vary

. The results are shown in . Throughout all settings, Auroral performs best, followed by Aurora-kNN. All methods improve as K increases. Auroral and Aurora-kNN also improve as N increases.

7 Application: Predicting Treatment Effects at Google

In this section, we apply Auroral to a problem encountered at Google and other technology companies—estimating treatment effects at a fine-grained level. All major technology firms run large randomized controlled experiments, often called A/B tests, to study interventions and to evaluate policies (Tang et al. Citation2010; Kohavi et al. Citation2013; Kohavi and Longbotham Citation2017; Athey and Luca Citation2019). Estimation of the average treatment effect from such an experiment (e.g., comparing a metric between treated users and control users) is a well-understood statistical task (Wager et al. Citation2016; Athey and Imbens Citation2017). In the application we consider below, instead, interest lies in estimating treatment effects on fine-grained groups – a separate treatment effect for each of thousands of different online advertisers. In this setting, EB techniques, such as Auroral, can stabilize estimates by sharing information across advertisers.

To apply Auroral for the task of fine-grained estimation of treatment effects, we require replicates. Interestingly, the data from the technology firm we work with is routinely organized and analyzed using “streaming buckets,” that is, the experiment data is divided into K chunks of approximately equal size that are called streaming buckets. The buckets correspond to (approximately) disjoint subsets of users, partitioned at random, and so data across different buckets are independent to sufficient approximation. We refer to Chamandy et al. (Citation2012) for a detailed description of the motivation for using streaming buckets to deal with the data’s scale and structure, as well as statistical and computational issues involved in the analysis of streaming bucket data. For the purpose of our application, each streaming bucket corresponds to a replicate in EquationEquation (3)(3)

(3) .

We model the statistical problem of our application as follows. The metric of interest is the cost-per-click (CPC), which is the price a specific advertiser pays for an ad clicked by a user. The goal of the experiment is to estimate the change in CPC before and after treatment. We have data for each of N advertisers and two treatment arms: control (w = 0) and treated (w = 1). The data are further divided into K buckets. For each advertiser , bucket

, and treatment arm w = 0, 1, we record the total number of clicks

and the total cost of the clicks Aijw

. We define

, the empirical cost-per-click for advertiser i in bucket j and treatment arm w.

In this application, the advertisers are the units and the buckets are the replicates. Each observation is , and the goal is to estimate the treatment effect

(19)

(19)

from model (3) capture advertiser-level idiosyncrasies. We consider the following estimation strategies:

The aggregate estimate: we pool the data in all buckets and then compute the difference in CPCs, that is,

.

The mean of the Zij , that is,

.

The CC-L estimator, wherein for each j we use ordinary least squares to regress

onto the aggregate estimate in the other K – 1 buckets, that is, onto

.

The CC-L estimator, wherein for each j we use ordinary least squares to regress

onto the mean of the other K – 1 buckets, that is, onto

The Auroral estimator that (for each j) regresses

on the order statistics

.

We empirically evaluate the methods as follows: we use data from an experiment running at Google for one week, retaining only the top advertisers based on number of clicks, resulting in 50,000. The number of replicates is equal to K = 4. As ground truth, we use the aggregate estimate based on experiment data from the 3 preceding and 3 succeeding weeks. Then, we compute the mean squared error of the estimates (calculated from the one week data) against the ground truth and report the percent change compared to the aggregate estimate.

The results are shown in . The table also shows the results of the same analysis applied to a second metric, the change in click-through rate (CTR), which is the proportion of times that an ad by a given advertiser which is shown to a user is actually clicked. We observe that the improvement in estimation error through Auroral is substantial. Furthermore, Auroral outperforms both variants of CC-L, which in turn outperform estimators that do not share information across advertisers (i.e., the aggregate estimate and the mean of the Zij ).

Table 2 Empirical performance on the advertiser-level estimation problem: Percent change in mean squared error for estimating change in cost-per-click () and change in click-through rate (

) compared to the aggregate estimate (± standard errors).

8 Conclusion

We have presented a general framework for constructing empirical Bayes estimators from K noisy replicates of N units. The basic idea of our method, which we term Aurora, is to leave one replicate out and regress this held-out replicate on the remaining replicates. We then repeat this process over all choices of held-out replicate and average the results. We have shown that if the

replicates are first sorted, then even linear regression produces results that are competitive with the best methods, which usually make parametric assumptions, while our method is fully nonparametric.

We conclude by mentioning some direct extensions of Aurora that are suggested by its connection to regression.

8.1 More Powerful Regression Methods

In this article, we have used linear regression and k-Nearest Neighbor regression to learn . But we can go further; for example, we could use isotonic regression [Guntuboyina and Sen Citation2018] based on a partial order on

. Or we could combine linear and isotonic regression by considering single index models with nondecreasing link function (Balabdaoui, Durot, and Jankowski Citation2019), that is, predictors of the form

, where

and t is an unknown non-decreasing function. Other possibilities include recursive partitioning (Breiman et al. Citation1984; Zeileis, Hothorn, and Hornik Citation2008), in which linear regression is fit on the leaves of a tree, or even random forests aggregated from such trees (Friedberg et al. Citation2021; Künzel et al. Citation2019).

8.2 More General Targets

We have only considered estimation of in EquationEquation (3)

(3)

(3) . As pointed out in Johns (Citation1957, Citation1986) this naturally extends to parameters

where h is a known function. The only modification needed to estimate θi

is that we fit a regression model to learn

instead. We may further extend Vernon Johns’ observation to arbitrary U-statistics. Concretely, given

and a fixed function

, we can use Aurora to estimate

. In this case we need to hold out r replicates to form the response. For example, with r = 2 and

, we can estimate the conditional variance

. Denoising the variance with empirical Bayes is an important problem that proved to be essential for the analysis of genomic data (Smyth Citation2004; Lu and Stephens Citation2016). However, these articles assumed a parametric form of the likelihood, while Aurora would permit fully nonparametric estimation of the variance parameter.

8.3 External Covariates

Model (3) posits a priori exchangeability of the N units. However, in many applications, domain experts also have access to side-information ζi

about each unit. Hence, multiple authors (Fay and Herriot Citation1979; Tan Citation2016; Kou and Yang 2017; Banerjee, Mukherjee, and Sun Citation2020; Coey and Cunningham Citation2019; Ignatiadis and Wager Citation2019) have developed methods that improve mean estimation by utilizing information in the ζi

. Aurora can be directly extended to accommodate such side information. Instead of regressing Yi

on , one regresses Yi

on both

and ζi

.

9 Software

We provide reproducible code for our simulation results in the following Github repository: https://github.com/nignatiadis/AuroraPaper. A package implementing the method is available at https://github.com/nignatiadis/Aurora.jl. The package has been implemented in the Julia programming language (Bezanson et al. Citation2017).

Supplemental Material

Download PDF (469 KB)Acknowledgments

We are grateful to Niall Cardin, Michael Sklar, and Stefan Wager for helpful discussions and comments on the article. We would also like to thank the associate editor and the anonymous reviewers for their insightful and helpful suggestions.

Supplementary Material

The supplementary materials contain details and proofs for theoretical claims made in the article and a description of the implementation of Aurora with k-Nearest-Neighbors.

Notes

1 Here we use the fact that the entries of are independent conditionally on

. This inequality is also known in the theory of U-statistics (Hoeffding Citation1948, theor. 5.2).

2 The details are given in Supplement D.

3 That is, the map is the same for all j. This does not imply that

for

, since the training data changes.

4 The definition below assumes no ties. In the proof we explain how to randomize to deal with ties.

5 Vernon Johns (Citation1957) established a result similar to Theorem 4. We find this remarkable, since Johns (Citation1957) anticipated later developments in nonparametric regression, independently proving universal consistency for partition-based regression estimators as an intermediate step.

6 By the law of total variance, almost surely, it holds that(17)

(17)

Thus, for example, when have bounded support, there exists C > 0 such that

almost surely and so, the stated assumption holds with

.

7 See Supplement D for details regarding the regret bounds we discuss here.

8 In location families, the Fisher information is constant as a function of the location parameter μi.

9 Since , the likelihood swamps the prior. Furthermore, we will place regularity assumptions on the prior to rule out the possibility of superefficiency.

10 This adaptivity is perhaps expected, in light of existing theory on semiparametric efficiency in location families. For example, it is known that even for N = 1 one can asymptotically (as ) match the variance of the parametric maximum likelihood estimator in symmetric location families, even without precise knowledge of F (Stein Citation1956; Bickel et al. Citation1998). However, the simulations of Section 6.1 demonstrate that Auroral adapts to the unknown likelihood already for K = 10, while semiparametric efficiency results are truly asymptotic in K, requiring an initial nonparametric density estimate.

11 Throughout this section, we calculate the mean squared error by averaging over 100 Monte Carlo replicates.

12 CC-L and James-Stein perform so similarly in the simulations of this subsection that they are indistinguishable in all panels of with the difference of their MSEs smaller than in all cases. In the first panel (Normal likelihood), Auroral is also indistinguishable from CC-L and James-Stein.

13 Even for the Normal likelihood, the assumptions of the implemented NPMLE are not fully satisfied, since we use estimated standard deviations for each unit.

References

- Athey, S., and Imbens, G. W. (2017), “The Econometrics of Randomized Experiments,” in Handbook of Economic Field Experiments, Vol. 1, pp. 73–140. Elsevier.

- Athey, S., and Luca, M. (2019), “Economists (and Economics) in Tech Companies,” Journal of Economic Perspectives, 33, 209–30. DOI: 10.1257/jep.33.1.209.

- Balabdaoui, F., Durot, C., and Jankowski, H. (2019), “Least Squares Estimation in the Monotone Single Index Model,” Bernoulli, 25, 3276–3310. DOI: 10.3150/18-BEJ1090.

- Baldi, P., and Long, A. D. (2001), “A Bayesian Framework for the Analysis of Microarray Expression Data: Regularized t-Test and Statistical Inferences of Gene Changes,” Bioinformatics, 17, 509–519. DOI: 10.1093/bioinformatics/17.6.509.

- Banerjee, T., Mukherjee, G., and Sun, W. (2020), “Adaptive Sparse Estimation With Side Information,” Journal of the American Statistical Association, 115, 2053–2067. DOI: 10.1080/01621459.2019.1679639.

- Bennett, C. A. (1952), “Asymptotic Properties of Ideal Linear Estimators,” Unpublished dissertation, University of Michigan.

- Bezanson, J., Edelman, A., Karpinski, S., and Shah, V. B. (2017), “Julia: A Fresh Approach to Numerical Computing,” SIAM Review, 59, 65–98. DOI: 10.1137/141000671.

- Bickel, P. J., Klaassen, C. A., Ritov, Y., and Wellner, J. A. (1998), Efficient and Adaptive Estimation for Semiparametric Models. Johns Hopkins Series in the Mathematical Sciences. New York: Springer.

- Boca, S. M., and Leek, J. T. (2018), “A Direct Approach to Estimating False Discovery Rates Conditional on Covariates,” PeerJ, 6, e6035. DOI: 10.7717/peerj.6035.

- Breiman, L., Friedman, J., Stone, C. J., and Olshen, R. A. (1984), Classification and Regression Trees, Boca Raton, FL: CRC Press.

- Brown, L. D., and Greenshtein (2009), “Nonparametric Empirical Bayes and Compound Decision Approaches to Estimation of a High-Dimensional Vector of Normal Means,” The Annals of Statistics, 1685–1704. DOI: 10.1214/08-AOS630.

- Chamandy, N., Muralidharan, O., Najmi, A., and Naidu, S. (2012), “Estimating Uncertainty for Massive Data Streams,” Technical report, Google. https://research.google/pubs/pub43157/

- Chatterjee, S. (2013), “Assumptionless Consistency of the Lasso,” arXiv:1303.5817.

- Chernoff, H., Gastwirth, J. L., and Johns, M. V. (1967), “Asymptotic Distribution of Linear Combinations of Functions of Order Statistics With Applications to Estimation,” The Annals of Mathematical Statistics, 38, 52–72. DOI: 10.1214/aoms/1177699058.

- Chernozhukov, V., Chetverikov, D., Demirer, M., Duflo, E., Hansen, C., Newey, W., and Robins, J. (2018), “Double/Debiased Machine Learning for Treatment and Structural Parameters,” The Econometrics Journal, 21, C1–C68. DOI: 10.1111/ectj.12097.

- Coey, D., and Cunningham, T. (2019), “Improving Treatment Effect Estimators Through Experiment Splitting,” in The World Wide Web Conference (WWW ’19), pp. 285–295. New York, NY: Association for Computing Machinery. DOI: 10.1145/3308558.3313452.

- Cox, D. R. (1975), “A Note on Data-Splitting for the Evaluation of Significance Levels,” Biometrika, 62, 441–444. DOI: 10.1093/biomet/62.2.441.

- Devanarayan, V., and Stefanski, L. A. (2002), “Empirical Simulation Extrapolation for Measurement Error Models With Replicate Measurements,” Statistics & Probability Letters, 59, 219–225.

- Diaconis, P., and Ylvisaker, D. (1979), “Conjugate Priors for Exponential Families,” The Annals of Statistics, 7, 269–281. DOI: 10.1214/aos/1176344611.

- Efron, B. (2012), Large-Scale Inference: Empirical Bayes Methods for Estimation, Testing, and Prediction, Vol. 1. Cambridge: Cambridge University Press.

- Efron, B., and Morris, C. (1973), “Stein’s Estimation Rule and Its Competitors—An Empirical Bayes Approach,” Journal of the American Statistical Association, 68, 117–130.

- Efron, B., and Stein, C. (1981), “The Jackknife Estimate of Variance,” The Annals of Statistics, 9, 586–596,. DOI: 10.1214/aos/1176345462.

- Fay, R. E., and Herriot, R. A. (1979), “Estimates of Income for Small Places: An Application of James-Stein Procedures to Census Data,” Journal of the American Statistical Association, 74, 269–277. DOI: 10.1080/01621459.1979.10482505.

- Fithian, W., and Ting, D. (2017), “Family Learning: Nonparametric Statistical Inference With Parametric Efficiency,” arXiv:1711.10028.

- Friedberg, R., Tibshirani, J., Athey, S., and Wager, S. (2021), “Local Linear Forests,” Journal of Computational and Graphical Statistics, 30, 503–517. DOI: 10.1080/10618600.2020.1831930.

- Friedman, J. H., and Stuetzle, W. (1981), “Projection Pursuit Regression,” Journal of the American statistical Association, 76, 817–823. DOI: 10.1080/01621459.1981.10477729.

- Galvao, A. F., and Kato, K. (2014), “Estimation and Inference for Linear Panel Data Models Under Misspecification When Both n and t Are Large,” Journal of Business & Economic Statistics, 32, 285–309, 2014. DOI: 10.1080/07350015.2013.875473.

- Gierliński, M., Cole, C., Schofield, P., Schurch, N. J., Sherstnev, A., Singh, V., Wrobel, N., Gharbi, K., Simpson, G., and Owen-Hughes, T. (2015), “Statistical Models for RNA-seq Data Derived From a Two-Condition 48-Replicate Experiment,” Bioinformatics, 31, 3625–3630. DOI: 10.1093/bioinformatics/btv425.

- Griffin, B. S., and Krutchkoff, R. G. (1971), “Optimal Linear Estimators: An Empirical Bayes Version With Application to the Binomial Distribution,” Biometrika, 58, 195–201. DOI: 10.1093/biomet/58.1.195.

- Guntuboyina, A., and Sen, B. (2018), “Nonparametric Shape-Restricted Regression,” Statistical Science, 33, 568–594. DOI: 10.1214/18-STS665.

- Györfi, L., Kohler, M., Krzyzak, A., and Walk, H. (2002), A Distribution-Free Theory of Nonparametric Regression, New York: Springer, 88.

- Habiger, J. D., and Peña, E. A. (2014), “Compound p-value Statistics for Multiple Testing Procedures,” Journal of Multivariate Analysis, 126, 153–166. DOI: 10.1016/j.jmva.2014.01.007.

- Hall, P., and Yao, Q. (2003), “Inference in Components of Variance Models With Low Replication,” The Annals of Statistics, 31, 414–441. DOI: 10.1214/aos/1051027875.

- Hartigan, J. (1969), “Linear Bayesian Methods,” Journal of the Royal Statistical Society, Series B, 31, 446–454. DOI: 10.1111/j.2517-6161.1969.tb00804.x.

- Hastie, T., Tibshirani, R., and Friedman, J. H. (2009), The Elements of Statistical Learning: Data Mining, Inference, and Prediction (2nd ed.), Springer Series in Statistics. New York: Springer.

- Hoeffding, W. (1948), “A Class of Statistics With Asymptotically Normal Distribution,” Annals of Mathematical Statistics, 19, 293–325. DOI: 10.1214/aoms/1177730196.

- Horowitz, J. L. and Markatou, M. (1996), “Semiparametric Estimation of Regression Models for Panel Data,” The Review of Economic Studies, 63, 145–168. DOI: 10.2307/2298119.

- Ignatiadis, N., and Huber, W. (2021), “Covariate Powered Cross-Weighted Multiple Testing,” Journal of the Royal Statistical Society, Series B, DOI: 10.1111/rssb.12411.

- Ignatiadis, N., and Wager, S. (2019), “Covariate-Powered Empirical Bayes Estimation,” in Advances in Neural Information Processing Systems, pp. 9620–9632.

- James, W., and Stein, C. (1961), “Estimation With Quadratic Loss,” in Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Vol. 1, pp. 361–379.

- Jiang, W., and Zhang, C.-H. (2009), “General Maximum Likelihood Empirical Bayes Estimation of Normal Means,” The Annals of Statistics, 37,1647–1684. DOI: 10.1214/08-AOS638.

- Jochmans, K., and Weidner, (2018), “Inference on a Distribution From Noisy Draws,” arXiv:1803.04991.

- Johns, M. V. (1957), “Non-Parametric Empirical Bayes Procedures,” The Annals of Mathematical Statistics, 28, 649–669. DOI: 10.1214/aoms/1177706877.

- Johns, M. V. (1986), “Fully Nonparametric Empirical Bayes Estimation Via Projection Pursuit,” in Adaptive Statistical Procedures and Related Topics, eds. John Van Ryzin, pp. 164–178. Hayward, CA: Institute of Mathematical Statistics.

- Jung, J. (1956), “On Linear Estimates Defined by a Continuous Weight Function,” Arkiv für Matematik, 3, 199–209. DOI: 10.1007/BF02589406.

- Kiefer, J., and Wolfowitz, J. (1956), “Consistency of the Maximum Likelihood Estimator in the Presence of Infinitely Many Incidental Parameters,” The Annals of Mathematical Statistics, 27, 887–906. DOI: 10.1214/aoms/1177728066.

- Koenker, R., and Gu, J. (2017), “REBayes: Empirical Bayes Mixture Methods in R,” Journal of Statistical Software, 82, 1–26. DOI: 10.18637/jss.v082.i08.

- Koenker, R., and Mizera, I. (2014), “Convex Optimization, Shape Constraints, Compound Decisions, and Empirical Bayes Rules,” Journal of the American Statistical Association, 109, 674–685. DOI: 10.1080/01621459.2013.869224.

- Kohavi, R., and Longbotham, R. (2017), “Online Controlled Experiments and A/B Testing,” Encyclopedia of Machine Learning and Data Mining, 7,922–929.

- Kohavi, R., Deng, A., Frasca, B., Walker, T., Xu, Y., and Pohlmann, N. (2013), “Online Controlled Experiments at Large Scale,” in Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1168–1176.

- Kou, S., and Yang, J. J. (2017), “Optimal Shrinkage Estimation in Heteroscedastic Hierarchical Linear Models,” in Big and Complex Data Analysis, eds. S. Ahmed. Springer, Cham. DOI: 10.1007/978-3-319-41573-4.

- Krutchkoff, R. G. (1967), “A Supplementary Sample Non-Parametric Empirical Bayes Approach to Some Statistical Decision Problems,” Biometrika, 54, 451–458. DOI: 10.1093/biomet/54.3-4.451.

- Künzel, S. R., Saarinen, T. F., Liu, E. W., and Sekhon, J. S. (2019), “Linear Aggregation in Tree-Based Estimators,” arXiv:1906.06463.

- Lönnstedt, I., and Speed, T. (2002), “Replicated Microarray Data,” Statistica Sinica, 12, 31–46.

- Love, M. I., Huber, W., and Anders, S. (2014), “Moderated Estimation of Fold Change and Dispersion for RNA-seq Data With DESeq2,” Genome Biology, 15, 550. DOI: 10.1186/s13059-014-0550-8.

- Lu, M., and Stephens, M. (2016), “Variance Adaptive Shrinkage (vash): Flexible Empirical Bayes Estimation of Variances,” Bioinformatics, 32, 3428–3434. DOI: 10.1093/bioinformatics/btw483.

- Lwin, T. (1976), “Optimal Linear Estimators of Location and Scale Parameters Using Order Statistics and Related Empirical Bayes Estimation,” Scandinavian Actuarial Journal, 1976, 79–91. DOI: 10.1080/03461238.1976.10405604.

- Maritz, J. S. (1974), “Aligning of Estimates: An Alternative to Empirical Bayes Methods,” Australian Journal of Statistics, 16, 135–143. DOI: 10.1111/j.1467-842X.1974.tb00928.x.

- Muralidharan, O. (2012), “High Dimensional Exponential Family Estimation Via Empirical Bayes,” Statistica Sinica, 22, 1217–1232.

- Neumann, M. H. (2007), “Deconvolution From Panel Data With Unknown Error Distribution,” Journal of Multivariate Analysis, 98, 1955–1968. DOI: 10.1016/j.jmva.2006.09.012.

- Norberg, R. (1980), “Empirical Bayes Credibility,” Scandinavian Actuarial Journal, 1980, 177–194. DOI: 10.1080/03461238.1980.10408653.

- Okui, R., and Yanagi, T. (2020), “Kernel Estimation for Panel Data With Heterogeneous Dynamics,” The Econometrics Journal, 23, 156–175. DOI: 10.1093/ectj/utz019.

- Rao, J., and Molina, I. (2015), Small Area Estimation. Wiley Series in Survey Methodology. Wiley.

- Robbins, H. (1956), “An Empirical Bayes Approach to Statistics,” in Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics, ed. J. Neyman, pp. 157–163. Berkeley and Los Angeles: University of California Press.

- Robbins, H. (1964), “The Empirical Bayes Approach to Statistical Decision Problems,” Annals of Mathematical Statistics, 35, 1–20.

- Robbins, H. (1983), “Some Thoughts on Empirical Bayes Estimation,” The Annals of Statistics, 11, 713–723.

- Rosset, S., and Tibshirani, R. J. (2020), “From Fixed-X to Random-X Regression: Bias-Variance Decompositions, Covariance Penalties, and Prediction Error Estimation,” Journal of the American Statistical Association, 115, 138–151. DOI: 10.1080/01621459.2018.1424632.

- Rubin, D., Dudoit, S., and Van der Laan, M. (2006), “A Method to Increase the Power of Multiple Testing Procedures Through Sample Splitting,” Statistical Applications in Genetics and Molecular Biology, 5, . DOI: 10.2202/1544-6115.1148.

- Saha, S., and Guntuboyina, A. (2020), “On the Nonparametric Maximum Likelihood Estimator for Gaussian Location Mixture Densities With Application to Gaussian Denoising,” Annals of Statistics, 48, 738–762.

- Schennach, S. M. (2004), “Estimation of Nonlinear Models With Measurement Error,” Econometrica, 72, 33–75. DOI: 10.1111/j.1468-0262.2004.00477.x.

- Smyth, G. K. (2004), “Linear Models and Empirical Bayes Methods for Assessing Differential Expression in Microarray Experiments,” Statistical Applications in Genetics and Molecular Biology, 3, 1–25. DOI: 10.2202/1544-6115.1027.

- Stein, C. (1956), “Efficient Nonparametric Testing and Estimation,” in Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics, eds. J. Neyman, pp. 187–195. Berkeley and Los Angeles: University of California Press.

- Stigler, S. M. (1990), “The 1988 Neyman Memorial Lecture: A Galtonian Perspective on Shrinkage Estimators,” Statistical Science, 5, 147–155. DOI: 10.1214/ss/1177012274.

- Stone, C. J. (1977), “Consistent Nonparametric Regression,” The Annals of Statistics, 5, 595–620. DOI: 10.1214/aos/1176343886.

- Tan, Z. (2016), “Steinized Empirical Bayes Estimation for Heteroscedastic Data,” Statistica Sinica, 5, 1219–1248,. DOI: 10.5705/ss.202014.0069.

- Tang, D., Agarwal, A., O’Brien, D., and Meyer, M. (2010), “Overlapping Experiment Infrastructure: More, Better, Faster Experimentation,” in Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 17–26.

- Van der Vaart, A. W. (2000), Asymptotic Statistics, Vol. 3. Cambridge: Cambridge University Press.

- Van Ryzin, J. (1986), Adaptive Statistical Procedures and Related Topics: Proceedings of a Symposium in Honor of Herbert Robbins, June 7–11, 1985, Brookhaven National Laboratory, Upton, New York: Institute of Mathematical Statistics.

- Wager, S., Du, W., Taylor, J., and Tibshirani, R. J. (2016), “High-Dimensional Regression Adjustments in Randomized Experiments,” Proceedings of the National Academy of Sciences, 113, 12673– 12678. DOI: 10.1073/pnas.1614732113.

- Weinstein, A., Ma, Z., Brown, L. D., and Zhang, C.-H. (2018), “Group-Linear Empirical Bayes Estimates for a Heteroscedastic Normal Mean,” Journal of the American Statistical Association, 113, 698–710. DOI: 10.1080/01621459.2017.1280406.

- Xie, X., Kou, S., and Brown, L. D. (2012), “SURE Estimates for a Heteroscedastic Hierarchical Model,” Journal of the American Statistical Association, 107, 1465–1479. DOI: 10.1080/01621459.2012.728154.

- Zeileis, A., Hothorn, T., and Hornik, K. (2008), “Model-Based Recursive Partitioning,” Journal of Computational and Graphical Statistics, 17, 492–514. DOI: 10.1198/106186008X319331.

![Fig. 5 Pareto distribution example: Data are generated as μi∼U[2,μmax], with μmax varying on the x-axis and Zij|μi∼F(·|μi), where F(·|μi) is the Pareto distribution with mean μi and tail index α = 3. The panels correspond to different choices for K and N. The y-axis shows the mean squared error of the estimation methods.](/cms/asset/d8caf191-eea8-4c85-ab39-538f189c9c84/uasa_a_1967164_f0005_c.jpg)