?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

We propose a contemporaneous bilinear transformation for a p × q matrix time series to alleviate the difficulties in modeling and forecasting matrix time series when p and/or q are large. The resulting transformed matrix assumes a block structure consisting of several small matrices, and those small matrix series are uncorrelated across all times. Hence, an overall parsimonious model is achieved by modeling each of those small matrix series separately without the loss of information on the linear dynamics. Such a parsimonious model often has better forecasting performance, even when the underlying true dynamics deviates from the assumed uncorrelated block structure after transformation. The uniform convergence rates of the estimated transformation are derived, which vindicate an important virtue of the proposed bilinear transformation, that is, it is technically equivalent to the decorrelation of a vector time series of dimension max(p, q) instead of p × q. The proposed method is illustrated numerically via both simulated and real data examples. Supplementary materials for this article are available online.

1 Introduction

Let be a p × q matrix time series, that is, there are p × q recorded values at each time from, for example, p individuals and over q indices or variables. Data recorded in this form are increasingly common in this information age, due to the demand to solve practical problems from, among others, signal processing, medical imaging, social networks, IT communication, genetic linkages, industry production and distribution, economic activities and financial markets. Extensive developments on statistical inference for matrix data under iid settings can be found in Negahban and Wainwright (Citation2011), Rohde and Tsybakov (Citation2011), Xia and Yuan (Citation2019), and the references therein. Many matrix sequences are recorded over time, exhibiting significant serial dependence which is valuable for modeling and future prediction. The surge of development in analyzing matrix time series includes bilinear autoregressive models (Hoff Citation2015; Chen, Xiao, and Yang Citation2021; Xiao, Han, Chen Citation2022), factor models based on Tucker’s decomposition for tensors (Wang, Liu, and Chen Citation2019; Chen, Tsay, and Chen Citation2020; Chen et al. Citation2020; Han et al. Citation2020; Chen, Yang, and Zhang Citation2022; Han, Chen, and Zhang Citation2022), and factor models based on the tensor CP decomposition (Chang et al. Citation2021; Han, Zhang, and Chen Citation2021; Han and Zhang Citation2022).

A common feature in the aforementioned approaches is dimension-reduction, as the so-called “curse-of-dimensionality” is more pronounced in modeling time series than iid observations, which is exemplified by the limited practical usefulness of vector ARMA (VARMA) models. Note that an unregularized VAR(1) model with dimension p involves at least p2 parameters. Hence, finding an effective way to reduce the number of parameters is of fundamental importance in modeling and forecasting high dimensional time series. In the context of vector time series, most available approaches may be divided into three categories: (i) regularized VAR or VARMA methods incorporating LASSO or alternative penalties (Basu and Michailidis Citation2015; Guo, Wang, and Yao Citation2016; Lin and Michailidis Citation2017; Zhou and Raskutti Citation2018; Gao et al. Citation2019; Ghosh, Khare, and Michailidis Citation2019; Han and Tsay Citation2020; Han, Chen, and Wu Citation2020; Han, Tsay, and Wu Citation2023), (ii) factor models of various forms (Pena and Box Citation1987; Bai and Ng Citation2002; Forni et al. Citation2005; Lam and Yao Citation2012), (iii) various versions of independent component analysis (Back and Weigend Citation1997; Tiao and Tsay Citation1989; Matteson and Tsay Citation2011; Huang and Tsay Citation2014; Chang, Guo, and Yao Citation2018). Note that the literature in each of the three categories is large, it is impossible to list all the relevant references here.

In this article we propose a new parsimonious approach for analyzing matrix time series, which is in the spirit of independent component analysis. It transforms a p × q matrix series into the new matrix series of the same size by a contemporaneous bilinear transformation (i.e., the values at different time lags are not mixed together). The new matrix series is divided into several submatrix series and those submatrix series are uncorrelated across all time lags. Hence, an overall parsimonious model can be developed as those submatrix series can be modeled separately without the loss of information on the overall linear dynamics. This is particularly advantageous for forecasting future values. In general cross-serial correlations among different component series are valuable for future prediction. However, the gain from incorporating those cross-serial correlations directly in a moderately high dimensional model is typically not enough to offset the error resulted from estimating the additional large number of parameters. This is why forecasting a large number of time series together based on a joint time series model can often be worse than that forecasting each component series separately by ignoring cross-serial correlations completely. The proposed transformation alleviates the problem by channeling all cross-serial correlations into the transformed submatrix series and those subseries are uncorrelated with each other across all time lags. Hence, the relevant information can be used more effectively in forecasting within each small models. Note that the transformation is one-to-one, therefore, the good forecasting performance of the transformed series can be easily transformed back to the forecasting for the original matrix series. Our empirical study in Section 4 also demonstrates that, even when the underlying model does not exactly follow the assumed uncorrelated block structure, the decorrelation transformation often produces superior out-of-sample prediction, as the transformation enhances the within block autocorrelations, and the cross-correlations between the blocks are weaker or too weak to be practically useful.

The basic idea of the proposed transformation is similar to the so-called principal component analysis for time series (TS-PCA) of Chang, Guo, and Yao (Citation2018). Actually one might be tempted to stack a p × q matrix series into a vector time series, and apply TS-PCA directly. This requires to search for a

transformation matrix. In contrast, the proposed bilinear transformation is facilitated by two matrices of size, respectively, p × p and q × q. Indeed our asymptotic analysis indicates that the proposed new bilinear transformation is technically equivalent to a TS-PCA transformation with dimension max(p, q) instead of

; see Remark 6 in Section 3. Furthermore the bilinear transformation does not mix rows and columns together, as they may represent radically different features in the data. See, for example, the example in Section 4.2 for which the bilinear transformation also outperforms the vectorized TS-PCA approach in a post-sample forecasting.

The rest of the article is organized as follows. The targeted bilinear decorrelation transformation is characterized in Section 2. We introduce a new normalization under which the required transformation consists of two orthogonal transformations. The orthogonality allows us to accumulate the information from different time lags without cancelation. The estimation of the bilinear transformation boils down to the eigenanalysis of two positive-definite matrices of sizes p × p and q × q, respectively. Hence, the computation can be carried out on a laptop/PC with p and q equal to a few thousands. Theoretical properties of the proposed estimation are presented in Section 3. The non-asymptotic error bounds of the estimated transformation are derived based on some concentration inequalities (e.g., Rio Citation2000; Merlevède, Peligrad, Rio Citation2011); further leading to the uniform convergence rates of the estimation. Numerical illustration with both simulated and real data is reported in Section 4, which shows superior performance in forecasting over the methods without transformation and TS-PCA. Once again it reinforces the fact that the cross-serial correlations are important and useful information for future prediction for a large number of time series, and, however, it is necessary to adopt the proposed decorrelation transformation (or other dimension-reduction techniques) in order to use the information effectively. All technical proofs are relegated to a supplementary material.

2 Methodology

2.1 Decorrelation Transformations

Again, let be a p × q matrix time series. We assume that Xt is weakly stationary in the sense that all the first two moments are finite and time-invariant. Our goal is to seek for a bilinear transformation such that the transformed matrix series admits the following segmentation structure:

(1)

(1) where A and B are unknown, respectively, q × q and p × p invertible constant matrices, and random matrix Ut is unobservable,

is a

matrix with unknown pi and qj,

, and

. Furthermore, cov

for any

and any integer τ, that is, all those submatrix series are uncorrelated with each other across all time lags.

Remark 1.

The decorrelation bilinear transformation (1) is in the same spirit as TS-PCA of Chang, Guo, and Yao (Citation2018) which transforms linearly a vector time series to a new vector time series of the same dimension but segmented into several subvector series, and those subvectors are uncorrelated across all time lags. Thus, as far as the linear dynamics is concerned, one can model each of those subvector time series separately. It leads to appreciable improvement in future forecasting, as the transformed process encapsulates all the cross-serial correlations into the auto-correlations of those uncorrelated subvector processes.

In the matrix time series setting, one would be tempted to stack all the elements of Xt into a long vector and to apply TS-PCA of Chang, Guo, and Yao (Citation2018) directly, though it destroys the original matrix structure. This requires to search for the

decorrelation transformation matrix

such that

. The proposed model (1) is to impose a low-dimensional structure

, where ⊗ denotes the matrix Kronecker product, such that the technical difficulty is reduced to that of estimating two transformation matrices of size p × p and q × q, respectively, with significant faster convergence rate; see Remark 6 in Section 3. The empirical results in Section 4 also demonstrate the benefit of maintaining the matrix structure in out-sample prediction performance.

The segmentation structure in (1) may be too rigid and the division of the submatrices may not be that regular. In practice a small number of row blocks and/or column blocks may be resulted from applying the estimation method proposed in Section 2.3. Then applying the same method again to each submatrix series

may lead to a finer segmentation with irregularly sized small blocks.

Similar to TS-PCA, the desired segmentation structure (1) may not exist in real applications. Then the estimated bilinear transformation, by the method in Section 2.3, leads to an approximate segmentation which encapsulates most significant correlations across different component series into the segmented submatrices while ignoring some small correlations. With enhanced auto-correlations, those submatrix processes become more predictable while those ignored small correlations are typically practically negligible. Consequently the improvement in future prediction still prevails in spite of the lack of an exact segmentation structure. See Chang, Guo, and Yao (Citation2018), and also a real data example in Section 4.2 in which the three versions of segmentation (therefore, at least two of them are approximations) uniformly outperform the various methods without the transformation. A simulation study in Section 4.1 also shows that when the underlying true model deviates from the desired segmentation structure by a moderate amount, the approximated segmentation model produces superior out-sample prediction performance than those without the transformation.

The use of bilinear form in (1) is common in matrix and tensor data analysis. For example, it is used in regression with matrix-type covariates (Basu et al. Citation2012; Zhou, Li, Zhu Citation2013; Wang, Zhang, and Dunson Citation2019), matrix autoregressive model (Hoff Citation2015; Chen, Xiao, and Yang Citation2021) and matrix factor model (Wang, Liu, and Chen Citation2019; Chen, Tsay, and Chen Citation2020; Chen, Yang, and Zhang Citation2022).

For dealing with time series with structural changes, it is possible to allow A and B to be time varying, which is technically more demanding and will be explored elsewhere.

2.2 Normalization and Identification

To simplify the statements, let . This amounts to center the data by the sample mean first, which will not affect the asymptotic properties of the estimation under the stationarity assumption. Thus,

.

The terms , and

on the RHS of (1) are not uniquely defined, and any

, and

satisfying the required condition will serve the decorrelation purpose. Therefore, we can take the advantage from this lack of uniqueness to identify the “ideal” A and B to improve the estimation effectiveness. More precisely, by applying a new normalization, we identify A and B to be orthogonal, which facilitates the accumulation of the information from different time lags without cancelation. To this end, we first list some basic facts as follows.

in (1) can be replaced by

for any invertible block diagonal matrices D1 and D2 with the block sizes, respectively,

and

. In particular, D1 and D2 can be the permutation matrices which permute the columns and rows within each block. In addition, D1 and D2 can be the block matrices that permute nr row blocks and nc column blocks, respectively. Note both

and

are defined by (1) only up to any permutation.

The q columns of

can be divided into nc uncorrelated blocks of sizes

, that is, the columns of

resemble the same pattern of the uncorrelated blocks as those of

. Thus,

is a block diagonal matrix with the block sizes

. In the same vein,

is a block diagonal matrix with the block sizes

.

Put

and

, where Bi is

and Ai is

. Then linear spaces

and

are uniquely defined by (1) up to any permutation, though B and A are not, where

denotes the linear space spanned by the columns of matrix G. Note that for any q × q positive definite matrix

and p × p positive definite matrix

,

Put and

. Proposition 1 indicates that if we replace Xt in (1) by a normalized version

, we can treat both A and B in (1) as orthogonal matrices. This orthogonality plays a key role in combining together the information from different time lags in our estimation (see the proof of Proposition 2 in the Appendix, supplementary materials).

Proposition 1.

Let both and

be invertible. Then it holds that

(2)

(2) where

admits the same segmentation structure as

in (1), and

and

are, respectively,

and p × p orthogonal matrices. More precisely,

(3)

(3)

The proof of Proposition 1 is almost trivial. First note that both are invertible. Then (2) follows from (1) and (3) directly. The orthogonality of, for example,

follows from equality

, (2) and (3). Also note that

and

are of the same block diagonal structure as, respectively,

and

. This implies that

admits the same segmentation structure as

.

Write , where

has qj columns and

has pi columns. Then

. Note that

in (2) are (still) not uniquely defined, similar to the property (i) above. In fact, only the linear spaces

and

are uniquely defined. Proposition 2 shows that we can take the orthonormal eigenvectors of two properly defined positive definite matrices as the columns of

and

. With

and

specified, the segmented

can be solved from (2) directly. Let

where

is the unit matrix with 1 at position (i, j) and 0 elsewhere, and

is p × p in the first equation, and q × q in the second equation. For a prespecified integer

, let

(4)

(4)

Proposition 2.

Let both and

be invertible, and all the eigenvalues of

be distinct, i = 1, 2. Also let

. Then the q orthonormal eigenvectors of

can be taken as the columns of

, and the p orthonormal eigenvectors of

can be taken as the columns of

.

The proposition above does not give any indication on how to arrange the columns of and

, which should be ordered according to the latent uncorrelated block structure (1). We address this issue in Section 2.3.

Remark 2.

The representation (1) suffers from the indeterminacy due to the fact that the column blocks and row blocks can be permuted, and the columns and rows within each block can be rotated, see property (i) above. However, this indeterminacy does not have material impact on identifying the desired structure (1), as any one of such representations will serve the purpose. The developed asymptotic theory guarantees that the proposed estimator converges to a representation which fulfills the conditions imposed on (1).

Remark 3.

In case that have tied eigenvalues and the corresponding eigenvectors across different blocks, Proposition 2 no longer holds. Then the blocks sharing the tied eigenvalues cannot be separated. To avoid the tied eigenvalues, we may use different values of τ0, or different form of

. For example, we may replace

by

where f(V) is a function of a symmetric matrix V in which

is the eigen-decomposition for V, and

is the diagonal-element-wise transformation of the diagonal matrix D of the eigenvalues.

In fact the condition that all eigenvalues of are different can be relaxed. For example, we only require any two eigenvalues of

corresponding two different blocks to be different while the eigenvalues corresponding to the same black can be the same. See also a similar condition in the eigen-gap Δ in (14) in Section 3.

Remark 4.

In practice, we need to specify τ0 in (4). In principle any can be used for the purpose of segmentation. A larger τ0 may capture more lag-dependence, but may also risk adding more “noise” when the dependence decays fast. Since autocorrelation is typically at its strongest at small lags, a relatively small τ0, such as

, is often sufficient (Lam, Yao, and Bathia Citation2011; Chang, Guo, and Yao Citation2018; Wang, Liu, and Chen Citation2019; Chen, Yang, and Zhang Citation2022).

2.3 Estimation

The estimation for and

, defined in (3), is based on the eigenanalysis of the sample versions of matrices defined in (4). To this end, let

(5)

(5)

(6)

(6)

(7)

(7)

Performing the eigenanalysis for , and arranging the order of the resulting q orthonormal eigenvectors

by the algorithm below, we take the reordered eigenvectors as the columns of

. The estimator

is obtained in the same manner via the eigenanalysis for

. Then by (2), we obtain the transformed matrix series

(8)

(8)

Note that the estimation for and that for

are carried out separately. They do not interfere with each other.

Now we present an algorithm to determine the order of the columns for . By (2), the columns of

are divided into the nc uncorrelated blocks. Define

(9)

(9)

We divide the columns of into uncorrelated blocks according to the pairwise maximum cross-correlations between the columns. Specifically, the maximum cross-correlation between the kth and

th columns is defined as

(10)

(10)

The second equality above follows from (6), (7), and (9). In the above expression, is a user-defined tuning parameter (See Remark 5). To determine all significantly correlated pairs of variable, rearrange

, in the descending order:

and define

(11)

(11) where

is a small constant. We take the

pair of columns corresponding to

as correlated pairs, and treat the rest as uncorrelated pairs. The intuition is that

is

if

but

. The use of δT is to smooth out the large variation of

when both

and

are small. Similar ideas have also been used in determining the number of factors in Lam and Yao (Citation2012), Ahn and Horenstein (Citation2013), and Han, Chen, and Zhang (Citation2022).

With the identified significantly correlated pairs, a connection graph is built, with the column indexes as the vertices and the edges between the identified correlated column pairs. The number of unconnected sub-graphs is the estimated number of blocks

, and each of maximum connected sub-graphs forms the estimated groups

. The corresponding

groups of

are taken as the columns of

,

. Algorithmically, start with q groups with one column in each group; then iteratively check all pairs of groups and merge two groups together if there exists at least one pair of columns (one in each group) are significantly correlated; and stop when no groups can be merged.

The finite sample performance of the above algorithm can be improved by prewhitening each column time series of . This makes

, for different

, more comparable. See Remark 2(iii) of Chang, Guo, and Yao (Citation2018). In practice the prewhitening can be carried out by fitting each column time series a VAR model with the order between 0 and 5 determined by AIC. The resulting residual series is taken as a prewhitened series.

Remark 5.

The ratio estimator in (11) picks the

most correlated pairs of columns, and ignores the other small correlations in constructing the segmentation structure. Theorem 3 in Section 3 shows that the partition of

into

, determined by the above algorithm, provides a consistent estimation for the column segmentation of

.

There are two tuning parameters used in the procedure, the maximum lag τ0 used in constructing

and

in (4) and the maximum lag τ1 used in measuring the dependency between two columns (rows) in (10). Lag τ1 is usually a sufficiently large integer, for example, between 10 and 20, in the spirit of the rule of thumb of Box and Jenkins (Citation1970). Different values of τ0 and τ1 may, or may not, lead to different segmentation. However, the impact on, for example, future prediction is minimum, as the most information on linear dynamics is encapsulated in the most correlated pairs, as indicated by numerical examples in the Appendix, supplementary materials.

The ordering for columns of is arranged, in the same manner as above, by examining the pairwise correlations among the columns of

where

are now the p orthonormal eigenvectors of

.

3 Theoretical Properties

To gain more appreciation of the methodology, we will show the consistency of the proposed detection method of uncorrelated components. We mainly focus on the estimation error and the ordering of , as those for

are similar. Denote by

the ith row of Xt, and

the (i, j)th element of Xt. For matrix

, let

denote the spectrum norm, and

. We introduce some regularity conditions first.

Assumption 1.

Assume exponentially decay coefficients of the strong α-mixing condition, for some constant

and

, where

(12)

(12)

Here for any random variable/vector/matrix X, is understood to be the σ-field generated by X. Assumption 1 allows a very general class of time series models, including causal ARMA processes with continuously distributed innovations; see Bradley (2002), and also Section 2.6 of Fan and Yao (Citation2003). The restriction

is introduced only for simple presentation.

Assumption 2.

Assume . There exist certain finite constants

, such that

(13)

(13)

Note that and

. Assumption 2 on the eigenvalues is a common assumption in the high dimensional setting, for instance, Bickel and Levina (Citation2008a, 2008b) and Xia, Cai, and Cai (Citation2018).

Assumption 3.

For any x > 0, for some constant

and

.

Assumption 3 requires that the tail probability of each individual series of Xt decay exponentially fast. In particular, when , each

is sub-Gaussian.

Write the eigenvalue-eigenvector decomposition of as

where

with

, and

. By the structure assumption in (1), the index set

is partitioned into nc subsets

such that the columns of different sub-matrices

are uncorrelated with each other across all time lags. Define eigen-gap

(14)

(14)

The following theorem provides the nonasymptotic bounds for the estimators of and

,

under both exponential decay α mixing condition and exponential tail condition of Xt.

Theorem 1.

Suppose Assumptions 1–3 hold with constants , and τ0 is a finite constant. Let

and

. Then,

(15)

(15)

(16)

(16) in an event ΩT with probability at least

, where

(17)

(17)

C1 is a constant depending on only. Moreover, there exists

of which the columns are a permutation of

such that

(18)

(18) in the same event ΩT provided

, where C2 is a constant depending on

only.

Remark 6.

Let PG be the projection operator onto the column space of G, which can be written as

. Under the conditions of Theorem 1, we can obtain

for each sub-group

, where the columns of

are a permutation of the orthonormal eigenvectors of

. If we stack all the elements of Xt into a long vector such that

and apply TS-PCA of Chang, Guo, and Yao (Citation2018) directly, the estimation of the decorrelation transformation matrix would satisfy

for

and

. Obviously,

has much sharper rate which is equivalent to that for the TS-PCA estimation with dimension max(p, q).

The consistency of

requires

. When A and B, or equivalently

and

, have certain sparsity structures, this condition can be further relaxed. In TS-PCA, threshold estimator of Bickel and Levina (Citation2008a) is employed to construct a sparse

, and then the convergence rates are improved to allow the dimension to be much larger than T (Chang, Guo, and Yao Citation2018). The similar extension can be established in our setting.

When the decays of the α-mixing coefficients and the tail probabilities of Xt are slower than Assumptions 1 and 3, we impose the following Assumptions 4 and 5 instead. These conditions ensure the Fuk-Nagaev-type inequalities for α-mixing processes; see also Rio (Citation2000), Liu, Xiao, and Wu (Citation2013), Wu and Wu (Citation2016), and Zhang and Wu (Citation2017).

Assumption 4.

Let the α-mixing coefficients satisfy the condition where

is defined in (12),

and

.

Assumption 5.

For any x > 0, for some constant

and

.

Theorem 2 presents the uniform convergence rate for and

. It is intuitively clear that the rate is much slower than that in Theorem 1.

Theorem 2.

Suppose Assumptions 2, 4, and 5 hold with constants , and τ0 is a finite constant. Let

. Then, (15) and (16) hold in an event ΩT with probability at least

, where

(19)

(19) and C1 depends on

only. Similarly, if

, then there exists

of which the columns are a permutation of

such that (18) holds in the same event ΩT for some positive constant C2 depending on

only.

Theorem 3 implies that the partition of into

in Section 2.3 provides a consistent estimation for the column segmentation of

. To this end, let

(20)

(20)

See also (10). By (2), the columns of are divided into the nc uncorrelated blocks. We assume that those nc blocks can also be obtained by the algorithm in Section 2.3 with

replaced by

for some constant

. Denote by

, respectively, the indices of the components of Zt in each of those nc blocks. Denote by

, respectively, the indices of the components of

in each of the

uncorrelated blocks identified by the algorithm in Section 2.3. Rearrange of the order of

if necessary. Then the theorem below implies that

and

for

.

Theorem 3.

Suppose conditions of Theorems 1 or 2 hold and τ1 is a finite constant. Suppose

(21)

(21) where

are certain positive constants depending on

only,

is defined in (17) or (19) and depends on ϵT. Then in an event ΩT with probability at least

, we have

Remark 7.

The first inequality in (21) requires that the minimum eigen-gap Δ between different uncorrelated groups is sufficiently larger than the estimation error , such that all the groups are identifiable. The second inequality in (21) ensures that there are no cross-group edges among

. The constants κ1 and κ2 are specified in the proof of the theorem.

4 Numerical Properties

4.1 Simulation

We illustrate the proposed decorrelation method with simulated examples. We always set in (7), and

in (10). The prewhitening, as stated at the end of Section 2.3, is always applied in determining the groups of the columns of

and

. As the true transformation matrices

and

defined in (3) cannot be computed easily even for simulated examples, we use the following proxies instead

where are given in (5), and

To abuse the notation, we still use to denote their proxies in this section since the true

never occur in our computation. For each setting, we replicate the simulation 1000 times.

Example 1.

We consider model (1) in which all the component series of are independent and ARMA(1, 2) of the form:

(22)

(22) where b is drawn independently from the uniform distribution on

, a1, a2 are drawn independently from the uniform distribution on

, and ϵt are independent and N(0, 1). The elements of A and B are drawn independently from

. For this example, we assume that we know nr = p and nc = q in (1). The focus is to investigate the impact of p and q on the estimation for B and A.

Performing the eigenanalysis for and

defined in (7), we take the resulting two sets of orthonormal eigenvectors as the columns of

and

, respectively. To measure the estimation error, we define

where

is the (i, j)-h element of matrix

. Note that

is always between 0 and 1, and

if

is a column permutation of

.

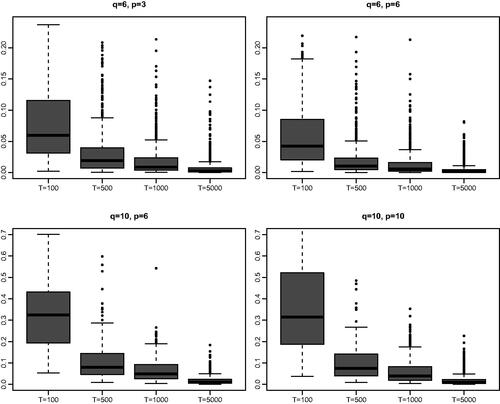

We set , and

. The means and standard errors of

and

over the 1000 replications are reported in . As expected, the estimation errors decrease as T increases. Furthermore the error in estimating

increases as q increases, and that in estimating

increases as p increases. Also noticeable is the fact that the quality of the estimation for

depends on q only and that for

depends on p only, as

is about the same for

and

, and

is about the same for

and

. When

, each A and B contains 1024 unknown parameters. The quality of their estimation with T = 100 is not ideal, but not substantially worse than that for

, and the estimation is very accurate with T = 5000. For

, matrix time series Xt contains 1600 component series. The estimation for

is not accurate enough as q = 100, while the estimation for

remains as good as that for

, and is clearly better than that for

.

Table 1 Example 1—Means and standard errors (SE) of and

in a simulation with 1000 replications.

Example 2.

To examine the performance of the proposed method for identifying uncorrelated blocks, we consider now a only column-segmentation model

where

are p × q matrix time series. Note that adding the row transformation matrix B in the model entails the application of the same method twice without adding any new insights on the performance of the method, as the estimation for

and that for

are performed separately. See Section 2.3.

The transformation matrix A in the above model is generated in the same way as in Example 1. All the element time series of , except those in columns 2, 3, and 5, are simulated independently from ARMA(1,2) model (22). Denoted by

the jth column of

, we let

Hence, the first three columns of forms one block of size 3, columns 4 and 5 forms a block of size 2, and the rest of the columns are uncorrelated with each other, and are uncorrelated to the first two blocks.

We set . For each sample size, we set

, (6, 6), (6, 10) and (10, 10). Hence, the number of groups is

and 7, respectively. For each setting, we perform the eigenanalysis for

defined as (7). We then apply the algorithm in Section 2.3 to arrange the orders of the q resulting orthonormal eigenvectors to form estimator

, for which the number of the correlated pairs of columns is determined by (11). reports the relative frequencies of the correct specification of all the nc uncorrelated blocks. We also report the relative frequencies of two types of wrong segmentation: (a) Merging with

, that is,

blocks are correctly specified, and the remaining two blocks are put into one; (b) Splitting with

, that is,

blocks are correctly specified, and the remaining block incorrectly splits into two. indicates that the relative frequency of the correct specification increases as the sample size T increases, and it decreases as q increases while the performance is much less sensitive to the increase of p. It is noticeable that the probability of the event

is very small especially when T is large. With q = 6, the probability for the correct specification is not smaller than 64% with T = 500, and is greater than 70% with T = 1000. Furthermore the probability for

or

is over 92% for

. Note that when

, an effective dimension reduction is still achieved in spite of missing a split.

Table 2 Example 2—Relative frequencies correct and incorrect segmentation in a simulation with 1000 replications.

For the instances with the correct specification, we also calculate the estimation errors for the nc subspaces. More precisely, put and

. We measure the estimation error by

Note that is always between 0 and 1. Furthermore it is equal to 1 if

, and 0 if the two spaces are perpendicular with each other; see, for example, Stewart and Sun (Citation1990) and Pan and Yao (Citation2008). The boxplots of

presented in indicate that the estimation errors decrease when T increases, and the errors with large q are greater than those with small q.

Example 3.

In this example we examine the gain in post-sample forecasting due to the decorrelation transformation. Now in model (1) all the elements of A and B are drawn independently from , with (p, q) equal to (6, 6), (6, 8), (8, 8), and (10, 10). For each (p, q) combination, the first three rows (or columns) form one block, the next two rows (or columns) form a second block, and all the other row (or column) is independent of the rest of the rows (or columns). shows the configuration of

for

.

Table 3 Example 3: Block configuration of for the case of

.

Each univariate block of (e.g.,

) is an AR(1) process with the autoregressive coefficient drawn from the

and independent and N(0, 1) innovations. For each block of size (3, 1), (1, 3), (2, 1), and (1, 2), a vector AR(1) model is used. All elements of the coefficient matrix is drawn independently from

, then normalized so its singular values are within

. The vector innovations consist of independent and N(0, 1) random variables. Each of the remaining four blocks follow a matrix AR(1) (i.e., MAR(1)) model of Chen, Xiao, and Yang (Citation2021), that is,

(23)

(23) where

and

are the coefficient matrices with unit spectral norm, all elements of

are independent and N(0, 1). Matrices

and

are constructed such that their singular values are all one and the left and right singular matrices are orthonormal, and the scalar coefficient

controls the level of auto-correlation and its values for the four blocks are marked in .

We set sample sizes , and

. For each setting, we simulate a matrix time series of length T + M with M = 10. To perform one-step ahead post-sample forecasting, for each

, we use the first T observations for identifying the uncorrelated blocks and for computing

and

defined in (8). Depending on its shape/size, we fit each identified block in

with an MAR(1), VAR(1), or AR(1) model. The fitted models are used to predict

. The predicted value for

is then obtained by

(24)

(24)

We then compute the mean squared error:

(25)

(25) where

denote the one-step ahead predicted value for the (i, j)th element

of Xs, and

. We compare

with

, instead of

, to remove the impact of the noise in the observed

. We report overall the mean and the standard deviation of MSE over 1000 replications.

For the comparison purpose, we also include several other models in our simulation: (i) VAR(1) model, that is, Xt is stacked into a vector of length pq and a VAR(1) is fitted to the vector series directly, (ii) MAR(1) model, that is, MAR(1) of Chen, Xiao, and Yang (Citation2021), is fitted directly to Xt, and (iii) TS-PCA, that is, all the elements of Xt are stacked into a long vector and the segmentation method of Chang, Guo, and Yao (Citation2018) is applied to the vector series. In addition, we also include two oracle models: (O1) the true segmentation blocks are used to model ; and (O2) True

and true

are used and the true segmentation blocks are used to model

.

The mean and standard deviations (in bracket) of the MSEs are listed in . It is clear that the forecasting based on the proposed decorrelation transformation is more accurate than those based on MAR(1), VAR(1), and TS-PCA directly. The improvement is often substantial. The only exception is the case of and T = 5000: the 36-dimension VAR(1) performs marginally better (i.e., the decrease of 0.002 in MSE) than the transformation based method. On the other hand, the improvement of the transformation based forecast over VAR(1) for sample size

is more significant. The relative poor performance of MAR(1) is due to the substantial discrepancy between MAR(1) model and the data generating mechanism used in this example. Note that there are

free parameters in VAR(1) while there are merely

parameters in MAR(1). With large T, VAR(1) is more flexible than MAR(1). TS-PCA performs poorly, due to the large error in estimating the large transformation matrix of size pq × pq. See also Remark 6. In addition, TS-PCA also requires to fit VAR models for several large blocks of size 9, 6, 6 and etc.

Table 4 Example 3—One-step ahead post-sample forecasting: means and standard deviations (in bracket) of MSEs in a simulation with 1000 replications.

indicates that the oracle model O1 performs only slightly better than segmentation when sample size T = 500 or 1000. Oracle Model O2 only deviates from the true model in terms of the estimation error of the coefficients in the AR models, hence, performs the best. The MSE of O2 is very close to 0, since the only source of error in MSE is the estimation error of the AR models for each blocks of Ut, which goes to zero quickly as T increases since these models are of small dimensions. When T is large, the VAR(1), proposed segmentation and O1 all perform about the same, which is worse than O2.

Example 4.

Next, we assess the sensitivity of the proposed matrix segmentation method with respective to the Kronecker product structure (). We use the same model setting in Example 3 and choose

. Instead of model (1), we consider

, where

is a perturbation matrix with N none-zero elements. We also set each nonzero element of

to be

Rademacher distribution. shows MSEs and standard deviations of VAR(1) and the proposed matrix segmentation method over 1000 replications, for several combinations of N and c. Again, N controls the number of nonzero perturbations and c controls the level of the perturbations, comparing to the average size of the elements in

. When c = 0, it becomes the original case in . From , it is seen that the segmentation is still useful in prediction when the model is deviated from the assumed block structure within certain perturbation. The prediction performance of the VAR model is not affected by the perturbation since it does not assume any block structure. It is seen that the segmentation outperforms the VAR model when c < 0.3 when N = 10 and c < 0.2 when N = 100. This feature demonstrates the benefit of dimension reduction through segmentation, even when the underlying model does not have the exact Kronecker product structure.

Table 5 Sensitivity of the Kronecker product structure using different N and c.

4.2 Real Data Analysis

We now illustrate the proposed decorrelation method with a real data example. The data concerned is a 17 × 6 matrix time series consisting of the logarithmic daily averages of the six air pollutant concentration readings (i.e., PM , PM10, SO2, NO2, CO, O3) from the 17 monitoring stations in Beijing and its surrounding areas (i.e., Tianjin and Hebei Province) in China. The sampling period is January 1, 2015–December 31, 2016 for a total of T = 731 days. The readings of different pollutants at different locations are crossly correlated.

We first remove the seasonal mean of the 17 × 6 matrix time series. Setting in (7), we apply the proposed bilinear transformation to discover the uncorrelated block structure. The finding is stable: with

in (10), the transformed matrix series admits

uncorrelated column blocks with sizes

, respectively, and

uncorrelated row blocks with two blocks of size 2 and the rest of size 1. Overall there are 15 × 4 blocks, among which there are 2 blocks of size 2 × 3, 13 blocks of size 1 × 3, 6 blocks of size 2 × 1 and 39 blocks of size 1 × 1.

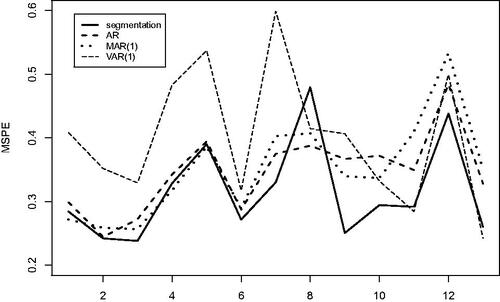

To check the post-sample forecasting performance, we calculate the rolling one-step, and two-step ahead out-sample forecasts for each of the daily readings in the last three months in 2016 (total 92 days). The methods included in the comparison are (i) the forecasting based on the segmentation derived from the proposed decorrelation transformation by fitting an MAR(1), VAR(1) or AR(1) to each identified block according to its size and shape; (ii) MAR(1) for the original 17 × 6 matrix series; (iii) VAR(1) for the vectorized original series, (iv) univariate AR(1) for each of the 17 × 6 original series, and (v) the TS-PCA (Chang, Guo, and Yao Citation2018) for the vectorized original series. The TS-PCA leads to the segmentation consisting of one group of size 3, three groups of size 2, and 93 groups of size 1. For each group, a VAR(1) model is used for prediction.

For the two segmentation approaches, we fix the needed transformation obtained using data up to September 30, 2016, and the corresponding segmentation structure. The time series model for each individual block is updated throughout the rolling forecasting period.

In addition to the identified segmentation with 15 × 4 blocks, we also compute the forecasts based on two other segmentations: one with 14 × 3 blocks, and another with 16 × 5 blocks. The former is obtained by merging two most correlated single row blocks in the discovered segmentation into one block and two most correlated single column blocks into one block. The latter is obtained by splitting one of the two blocks with two rows in the discovered segmentation into two single row blocks and splitting the block with three columns into two blocks. This will reveal the impact of slightly “wrong” segmentations on forecasting performance.

Let the mean squared forecast error for sth observation be

(26)

(26) where

denote the one-step ahead predicted value for the (i, j)th element

of Xs. Two-step ahead MSPEs is defined similarly. The means and the standard deviations of MSPEs of one-step and two-step ahead post-sample forecasts for the pollution readings in the last 92 days are listed in . The prediction based on the bilinear decorrelation transformation (even using “wrong” segmentations) is clearly more accurate than those without transformation as well as that of the TS-PCA. Using the “wrong” segmentation deteriorates the performance only slightly, indicating that a partial (instead of total) decorrelation still leads to significant gain in prediction. Applying TS-PCA to the vectorized series requires to estimate a 102 × 102 transformation matrix (instead of the 17 × 17 and 6 × 6 matrices by using the matrix time series structure). It leads to larger estimation errors (see Remark 6 in Section 3). Nevertheless it still provides more accurate predicts than those without transformation. Also note that fitting each of the original 17 × 6 time series with a univariate AR(1) separately leads to a better performance than those from fitting MAR(1) and VAR(1) to the original matrix series jointly. This indicates that the cross-serial correlation is useful and important information for future forecasting. However, to make efficient use of the information, it is necessary to adopt some effective independent component analysis or dimension-reduction techniques such as the proposed decorrelation transformation which pushes correlations across different series into the autocorrelations of some transformed series.

Table 6 One-step and two-step ahead post-sample forecasting for the air pollution data: means and standard deviations (in bracket) of MSPEs, regrets and adjusted ratios of the various methods.

Also included in the table are “regret” defined as the difference between MSPE and the in-sample residual variance of the fitted VAR(1) model for the vectorized data (i.e., a vector time series of dimension 17 × 6 = 102), and “adjusted ratio” defined as the ratio of the regret to the regret of the best prediction model (i.e., the segmentation with 15 × 4 blocks). The regret tries to measure the forecasting error on the predictable signal, removing the impact from unpredictable noise in the model. Since the fitted VAR(1) model uses more than 10,000 parameters, its sample residual variance underestimates the noise variance in the model, and is taken as a proxy for the latter. The adjusted ratios shows that the “regret” of TS-PCA is 14.4% worse than the 15 × 4 segmentation for one-step ahead forecast, and 6.4% worse than the 15 × 4 segmentation for two-step ahead forecast.

To further evaluate the forecasting stability, shows weekly average of one-step rolling forecast MSPE within the forecasting period under various models. It is seen that the proposed segmentation outperforms the other three methods in most of the periods in terms of prediction.

Fig. 2 Weakly averaged one-step forecast errors of the univariate AR(1) models for each component series (AR), the matrix AR(1) model (MAR(1)), the vector AR(1) for vectorized series (VAR(1)), the method based on the bilinear decorrelation transformation (segmentation).

In this example, the identified segmentation of for the original 17 × 6 matrix series is more likely to be an approximation of the underlying dependence structure rather than a true model. The fact that the two “wrong” segmentation models (though quite close to the discovered one) as well as the aggressive segmentation via vectorized TS-PCA transformation provide comparable, though inferior, post-sample forecasting performance lends further support to the claim that the proposed decorrelation method makes the transformed series more predictable than the original ones, regardless model (1) holds or not. See Remark 1(iv) in Section 2.1.

Supplementary Materials

The supplemental material contain additional simulation results, additional information on the air pollutant example and all proofs.

Supplemental Material

Download MS Word (41.8 KB)Supplemental Material

Download Zip (649.8 KB)Acknowledgements

The authors sincerely thank the joint editor, associate editor, and referees for their valuable comments that helped improve the article substantially.

Additional information

Funding

References

- Ahn, S. C., and Horenstein, A. R. (2013), “Eigenvalue Ratio Test for the Number of Factors,” Econometrica, 81, 1203–1227.

- Back, A. D., and Weigend, A. S. (1997), “A First Application of Independent Component Analysis to Extracting Structure from Stock Returns,” International Journal of Neural Systems, 8, 473–484. DOI: 10.1142/s0129065797000458.

- Bai, J., and Ng, S. (2002), “Determining the Number of Factors in Approximate Factor Models,” Econometrica, 70, 191–221.

- Basu, S., Dunagan, J., Duh, K., and Muniswamy-Reddy, K.-K. (2012), “Blr-d: Applying Bilinear Logistic Regression to Factored Diagnosis Problems,” ACM SIGOPS Operating Systems Review, 45, 31–38.

- Basu, S., and Michailidis, G. (2015), “Regularized Estimation in Sparse High-Dimensional Time Series Models,” The Annals of Statistics, 43, 1535–1567.

- Bickel, P. J., and Levina, E. (2008a), “Covariance Regularization by Thresholding,” The Annals of Statistics, 36, 2577–2604.

- ——— (2008b), “Regularized Estimation of Large Covariance Matrices,” The Annals of Statistics, 36, 199–227.

- Box, G. E. P., and Jenkins, G. M. (1970), Times Series Analysis. Forecasting and Control, San Francisco, CA.-London-Amsterdam: Holden-Day.

- Bradley, R. C. (2005), “Basic Properties of Strong Mixing Conditions. A Survey and Some Open Questions,” Probability Surveys, 2, 107–144.

- Chang, J., Guo, B., and Yao, Q. (2018), “Principal Component Analysis for Second-Order Stationary Vector Time Series,” The Annals of Statistics, 46, 2094–2124.

- Chang, J., He, J., Yang, L., and Yao, Q. (2021), “Modelling Matrix Time Series via a Tensor CP-Decomposition,” arXiv:2112.15423.

- Chen, E. Y., Tsay, R. S., and Chen, R. (2020), “Constrained Factor Models for High-Dimensional Matrix-Variate Time Series,” Journal of the American Statistical Association, 115, 775–793.

- Chen, E. Y., Yun, X., Chen, R., and Yao, Q. (2020), “Modeling Multivariate Spatial-Temporal Data with Latent Low-Dimensional Dynamics,” arXiv preprint arXiv:2002.01305.

- Chen, R., Xiao, H., and Yang, D. (2021), “Autoregressive Models for Matrix-Valued Time Series,” Journal of Econometrics, 222, 539–560.

- Chen, R., Yang, D., and Zhang, C.-H. (2022), “Factor Models for High-Dimensional Tensor Time Series,” Journal of the American Statistical Association, 117, 94–116.

- Fan, J., and Yao, Q. (2003), Nonlinear Time Series: Nonparametric and Parametric Methods. Springer Series in Statistics. New York: Springer.

- Forni, M., Hallin, M., Lippi, M., and Reichlin, L. (2005), “The Generalized Dynamic Factor Model: One-Sided Estimation and Forecasting,” Journal of the American Statistical Association, 100, 830–840.

- Gao, Z., Ma, Y., Wang, H., and Yao, Q. (2019), “Banded Spatio-Temporal Autoregressions,” Journal of Econometrics, 208, 211–230.

- Ghosh, S., Khare, K., and Michailidis, G. (2019), “High-Dimensional Posterior Consistency in Bayesian Vector Autoregressive Models,” Journal of the American Statistical Association, 114, 735–748. DOI: 10.1080/01621459.2018.1437043.

- Guo, S., Wang, Y., and Yao, Q. (2016), “High-Dimensional and Banded Vector Autoregressions,” Biometrika, 103, 889–903.

- Han, Y., Chen, L., and Wu, W. (2020), “Sparse Nonlinear Vector Autoregressive Models,” Technical Report.

- Han, Y., Chen, R., Yang, D., and Zhang, C.-H. (2020), “Tensor Factor Model Estimation by Iterative Projection,” arXiv preprint arXiv:2006.02611.

- Han, Y., Chen, R., and Zhang, C.-H. (2022), “Rank Determination in Tensor Factor Model,” Electronic Journal of Statistics, 16, 1726–1803.

- Han, Y., and Tsay, R. S. (2020), “High-Dimensional Linear Regression for Dependent Observations with Application to Nowcasting,” Statistica Sinica, 30, 1797–1827.

- Han, Y., Tsay, R. S., and Wu, W. B. (2023), “High Dimensional Generalized Linear Models for Temporal Dependent Data,” Bernoulli, 29, 105–131.

- Han, Y., and Zhang, C.-H. (2022), “Tensor Principal Component Analysis in High Dimensional CP Models,” IEEE Transactions on Information Theory, forthcoming.

- Han, Y., Zhang, C.-H., and Chen, R. (2021), “CP Factor Model for Dynamic Tensors,” arXiv:2110.15517.

- Hoff, P. D. (2015), “Multilinear Tensor Regression for Longitudinal Relational Data,” Annals of Applied Statistics, 9, 1169–1193. DOI: 10.1214/15-AOAS839.

- Huang, D., and Tsay, R. (2014), “A Refined Scalar Component Approach to Multivariate Time Series Modeling,” Manuscript.

- Lam, C., and Yao, Q. (2012), “Factor Modeling for High-Dimensional Time Series: Inference for the Number of Factors,” The Annals of Statistics, 40, 694–726.

- Lam, C., Yao, Q., and Bathia, N. (2011), “Estimation of Latent Factors for High-Dimensional Time Series,” Biometrika, 98, 901–918.

- Lin, J., and Michailidis, G. (2017), “Regularized Estimation and Testing for High-Dimensional Multi-Block Vector-Autoregressive Models,” The Journal of Machine Learning Research, 18, 4188–4236.

- Liu, W., Xiao, H., and Wu, W. B. (2013), “Probability and Moment Inequalities under Dependence,” Statistica Sinica, 23, 1257–1272.

- Matteson, D. S., and Tsay, R. S. (2011), “Dynamic Orthogonal Components for Multivariate Time Series,” Journal of the American Statistical Association, 106, 1450–1463.

- Merlevède, F., Peligrad, M., and Rio, E. (2011), “A Bernstein Type Inequality and Moderate Deviations for Weakly Dependent Sequences,” Probability Theory and Related Fields, 151, 435–474.

- Negahban, S., and Wainwright, M. J. (2011), “Estimation of (near) Low-Rank Matrices with Noise and High-Dimensional Scaling,” The Annals of Statistics, 39, 1069–1097.

- Pan, J., and Yao, Q. (2008), “Modelling Multiple Time Series via Common Factors,” Biometrika, 95, 365–379.

- Pena, D., and Box, G. E. (1987), “Identifying a Simplifying Structure in Time Series,” Journal of the American statistical Association, 82, 836–843.

- Rio, E. (2000), “Théorie asymptotique des processus aléatoires faiblement dépendants, volume 31 of Mathématiques & Applications (Berlin) [Mathematics & Applications]. Berlin: Springer.

- Rohde, A., and Tsybakov, A. B. (2011), “Estimation of High-Dimensional Low-Rank Matrices,” The Annals of Statistics, 39, 887–930.

- Stewart, G. W., and Sun, J. G. (1990), Matrix Perturbation Theory. Computer Science and Scientific Computing. Boston, MA: Academic Press, Inc.

- Tiao, G. C., and Tsay, R. S. (1989), “Model Specification in Multivariate Time Series,” Journal of the Royal Statistical Society, Series B, 51, 157–195.

- Wang, D., Liu, X., and Chen, R. (2019), “Factor Models for Matrix-Valued High-Dimensional Time Series,” Journal of Econometrics, 208, 231–248.

- Wang, L., Zhang, Z., and Dunson, D. (2019), “Symmetric Bilinear Regression for Signal Subgraph Estimation,” IEEE Transactions on Signal Processing, 67, 1929–1940.

- Wu, W.-B., and Wu, Y. N. (2016), “Performance Bounds for Parameter Estimates of High-Dimensional Linear Models with Correlated Errors,” Electronic Journal of Statistics, 10, 352–379.

- Xia, D., and Yuan, M. (2019), “Statistical Inferences of Linear Forms for Noisy Matrix Completion,” arXiv preprint arXiv:1909.00116.

- Xia, Y., Cai, T., and Cai, T. T. (2018), “Multiple Testing of Submatrices of a Precision Matrix with Applications to Identification of between Pathway Interactions,” Journal of the American Statistical Association, 113, 328–339. DOI: 10.1080/01621459.2016.1251930.

- Xiao, H., Han, Y., and Chen, R. (2022), “Reduced Rank Autoregressive Models for Matrix Time Series,” Journal of Business & Economic Statistics, forthcoming.

- Zhang, D., and Wu, W. B. (2017), “Gaussian Approximation for High Dimensional Time Series,” The Annals of Statistics, 45, 1895–1919.

- Zhou, H., Li, L., and Zhu, H. (2013), “Tensor Regression with Applications in Neuroimaging Data Analysis,” Journal of the American Statistical Association, 108, 540–552.

- Zhou, H. H., and Raskutti, G. (2018), “Non-parametric Sparse Additive Auto-Regressive Network Models,” IEEE Transactions on Information Theory, 65, 1473–1492.