?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Detection limits (DLs), where a variable cannot be measured outside of a certain range, are common in research. DLs may vary across study sites or over time. Most approaches to handling DLs in response variables implicitly make strong parametric assumptions on the distribution of data outside DLs. We propose a new approach to deal with multiple DLs based on a widely used ordinal regression model, the cumulative probability model (CPM). The CPM is a rank-based, semiparametric linear transformation model that can handle mixed distributions of continuous and discrete outcome variables. These features are key for analyzing data with DLs because while observations inside DLs are continuous, those outside DLs are censored and generally put into discrete categories. With a single lower DL, CPMs assign values below the DL as having the lowest rank. With multiple DLs, the CPM likelihood can be modified to appropriately distribute probability mass. We demonstrate the use of CPMs with DLs via simulations and a data example. This work is motivated by a study investigating factors associated with HIV viral load 6 months after starting antiretroviral therapy in Latin America; 56% of observations are below lower DLs that vary across study sites and over time. Supplementary materials for this article are available online including a standardized description of the materials available for reproducing the work.

1 Introduction

Detection limits (DLs) are not uncommon in biomedical research and other fields. For example, radiation doses may only be detected above a certain threshold (Wing et al.Citation1991), antibody concentrations may not be measured below certain levels (Wu et al.Citation2001), and X-rays may have lower limits of detection (Pan et al.Citation2017). In HIV research, viral load can only be detected above certain levels. To complicate matters, DLs often vary by assay and may change over time.

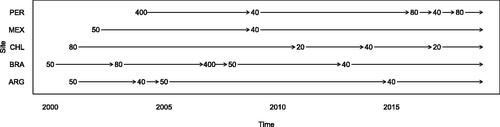

As a motivating example, we consider estimating the association between patient factors at initiation of antiretroviral therapy (ART) and viral load 6 months after starting ART among adults with HIV. Viral load (VL) measures the amount of virus circulating in a person with HIV. A high VL after ART initiation may indicate nonadherence or an ineffective regimen that should be switched. We study the association between VL 6 months after ART initiation and variables measured at ART initiation (baseline). The data include 5301 adults living with HIV starting ART at one of 5 study centers in Latin America between 2000 and 2018. The DLs for the outcome VL differed by site and calendar time. shows the most frequent lower DL values for each year and at each site. There are five distinct lower DLs in this database: 20, 40, 50, 80, and 400 copies/mL. A total of 2992 (56%) patients had 6-month VL censored at a DL: 45%, 54%, 52%, 65%, and 57% at study sites in Argentina, Brazil, Chile, Mexico, and Peru, respectively.

A traditional analysis in the HIV literature would dichotomize VL as detectable or undetectable and perform logistic regression (Jiamsakul et al.Citation2017). There are a few issues that make this analysis less than ideal. First, all VLs above the DL (nearly half of all observations) would be collapsed into a “detectable” category resulting in well-known loss of information due to dichotomizing continuous variables (Fedorov, Mannino, and Zhang Citation2009). Second, because the DL varies with time and by site, the analyst is forced to dichotomize at the largest DL (in this case 400 copies/mL) or else perform an analysis where values above the DL at one site are treated differently than they would be treated at another site. For example, a VL of 300 copies/mL measured in Mexico in 2005 would be measured as “” that same year in Peru; assigning this value as “

” results in lost information but leaving it as “detectable” would make the outcome variable different across time and sites.

Another common approach for handling DLs is substitution, where all nondetects are imputed with a single constant and a linear regression model is fit. The imputed constant may be, for example, the DL itself, DL/2, DL/ (Hornung and Reed Citation1990; Lubin et al.Citation2004; Helsel Citation2011), or the expectation of the measurement conditional on being outside the DL under some assumed parametric model (Garland et al.Citation1993). For example, DL/2 corresponds to the expectation of a uniform distribution between 0 and the DL. Although simple, these substitution approaches typically result in biased estimation, underestimated variances, and thus sometimes wrong conclusions (Baccarelli et al.Citation2005; Fiévet and Della Vedova Citation2010). With multiple DLs, additional questions arise about whether to assign all values below any DL the same constant or to use the same function of the DL (e.g., 400/2 = 200 copies/mL for all values below any DL, or DL/2); there are obvious problems with either approach.

A more parametric analysis might assume that the VL follow a specified distribution (e.g., log-normal distribution) and fit the censored data likelihood or multiply impute values below the DL from the assumed distribution to obtain estimated regression coefficients (Baccarelli et al.Citation2005; Harel, Perkins, and Schisterman Citation2014). However, distributional assumptions for values below the DL are strong and untestable; goodness of fit of a parametric model inside DLs does not ensure goodness of fit outside the DLs. These parametric assumptions may be particularly dangerous in settings with high rates of censoring such as our HIV application (Lubin et al.Citation2004; Zhang et al.Citation2009).

To avoid strong parametric assumptions, nonparametric methods such as Kaplan–Meier, score, and rank-based methods have been proposed in two-sample comparisons (Helsel Citation2011). Zhang et al. (Citation2009) explored the use of the Wilcoxon rank sum test, other weighted rank tests, Gehan and Peto-Peto tests, and a novel nonparametric method for location-shift inference with DLs. Although attractive for two-sample tests, these nonparametric methods generally do not permit inclusion of covariates.

In this manuscript, we propose a new approach for analyzing data subject to multiple detection limits. Data with DLs effectively follow a mixture distribution, where those below a lower DL can be thought of as belonging to a discrete category, those above an upper DL belonging to another discrete category, while those inside the DLs are continuous. Whether discrete or continuous, the values are orderable. In earlier work, Liu et al. (Citation2017) showed that continuous response variables can be modeled using a popular model for ordinal outcomes, namely the cumulative probability model (CPM), also known as the ‘cumulative link model’ (Agresti Citation2013). CPMs are a type of semiparametric linear transformation model (Zeng and Lin Citation2007), in which the continuous response variable after some unspecified monotonic transformation is assumed to follow a linear model, and the transformation is nonparametrically estimated. These models are very flexible and can handle a wide variety of outcomes, including variables with DLs. Importantly, when fitting CPMs to data with DLs, minimal assumptions are made on the distribution of the response variable outside the DLs as these models are based on ranks, and values below/above DLs are simply the lowest/highest rank values. Because of their relationship to the Wilcoxon rank sum test (McCullagh Citation1980), the CPM can be thought of as a semiparametric extension to permit covariates to the approaches that Zhang et al. (Citation2009) found effective for handling DLs in two-sample comparisons. Finally, as will be shown, because CPMs model the conditional cumulative distribution function (CDF), it is easy to extract many different measures of conditional association from a single fitted model, including conditional quantiles, conditional probabilities, odds ratios, and probabilistic indexes, which permits flexible and compatible interpretation.

Methods proposed by Cai and Cheng (Citation2004) and Shen (Citation2011) could also be used to fit semiparametric linear transformation models for data with DLs. Specifically, these authors developed methods to address doubly censored data, of which data subject to both lower and upper DLs are a special case. Like CPMs, these methods are robust and powerful approaches. Unfortunately, implementation of the methods of Cai and Cheng (Citation2004) and Shen (Citation2011) is rare/nonexistent in practice, perhaps because of the complexity of fitting these models and a lack of software. These methods estimate model parameters using estimating equations. In contrast, the CPM that we present obtains nonparametric maximum likelihood estimates that are more efficient than those of Cai and Cheng (Citation2004) and Shen (Citation2011) while making the same assumptions.

In Section 2, we review the CPM, illustrate its use for simple settings where there is a lower and/or upper DL for all subjects, and then show how CPMs can be extended to address multiple DLs. We also propose a new method for estimating the conditional quantile from a CPM. In Section 3, we illustrate and demonstrate the advantages of the proposed approach applied to our HIV study with multiple detection limits. In Section 4, we demonstrate the performance of our method and compare it to other approaches with simulations. The final section contains a discussion of the strengths and limitations of our method and future work. An R package, multipleDL, permits fitting CPMs in settings with multiple DLs.

2 Methods

2.1 Cumulative Probability Models

Transformation is often needed for the regression of a continuous outcome variable Y to satisfy model assumptions, but specifying the correct transformation can be difficult. In a linear transformation model, the outcome is modeled as , where

is an unknown monotonically increasing transformation, X is a vector of covariates, and

follows a known distribution with CDF

. This linear transformation model can be equivalently expressed in terms of the conditional CDF,

Let and

;

is monotonically increasing but otherwise unknown. Then

(1)

(1) where G serves as a link function and the model becomes a cumulative probability model (CPM). The intercept function

is the transformation of the response variable such that

The

coefficients indicate the association between the response variable and covariates: fixing other covariates, a positive/negative

means that an increase in

is associated with a stochastic increase/decrease in the distribution of the response variable.

In the CPM (1), the intercept function can be nonparametrically estimated with a step function (Zeng and Lin Citation2007; Liu et al.Citation2017). This allows great model flexibility. Consider an iid dataset

. The nonparametric likelihood is

(2)

(2) where

. In nonparametric maximum likelihood estimation, the probability mass given any x will be distributed over the discrete set of observed outcome values. Thus, we only need to consider functions for

such that

is a discrete distribution over the observed values. Let J be the number of distinct outcome values, denoted as

. Let

. These serve as the anchor points for the nonparametric likelihood. Let

; then

. The nonparametric likelihood (2) can be written as

(3)

(3)

(3)

(3)

Maximizing (3), we obtain the nonparametric maximum likelihood estimates (NPMLEs), , where

. Note the multinomial form of the likelihood (3); because the probabilities in a multinomial likelihood add to one,

is not estimated. Note also that the likelihood in (3) is identical to that of cumulative link models for ordinal data if the outcome Y is treated as ordinal with categories

. Liu et al. (Citation2017) and Tian et al. (Citation2020) have shown that CPMs can be fit to and work well for continuous responses (

) and mixed types of responses. Under mild conditions including boundedness of the response variable, CPM estimates are consistent and asymptotically normal, with variance consistently estimated with the inverse of the information matrix (Li et al.Citation2023). The NPMLEs and their estimated variances can be efficiently computed with the orm() function in the rms package in R (Harrell Citation2020), which takes advantage of the tridiagonal nature of the Hessian matrix using Cholesky decomposition (Liu et al.Citation2017).

CPMs have several nice features. Some widely used regression methods model only one aspect of the conditional distributions (e.g., conditional mean for linear regression and conditional quantile for quantile regression). With the NPMLEs , we can estimate the conditional CDFs as

where j is the index such that

; standard errors can be obtained by the delta method. Since conditional CDFs are directly modeled, other characteristics of the distribution, such as the conditional quantiles and conditional expectations, can be easily derived (Liu et al.Citation2017). Depending on the choice of link function,

may be interpretable; for example, with the logit link function, exp

is an odds ratio. Probabilistic indexes (De Neve, Thas, and Gerds Citation2019), which are defined as

, can also be easily derived; for example, with the logit link,

. With the transformation

nonparametrically estimated, CPMs are invariant to any monotonic transformation of the outcome; therefore, no pre-transformation is needed. With a single binary covariate and the logit link function, the score test for the CPM is nearly identical to the Wilcoxon rank sum test (McCullagh Citation1980); see supplementary materials S1.1. Because only the order of the outcome values but not the specific values matter when estimating

in the CPM, it can handle any ordinal, continuous, or a mixture of ordinal and continuous distributions, which can be useful for analyzing data with DLs.

2.2 Single Detection Limits

In this section, we first present our method for the simple scenario that there is a single lower DL and/or a single upper DL. We will describe the general approach for multiple DLs in the next section.

Consider a dataset with a lower DL, l, and an upper DL, u. The outcome Y is observed if it is inside the DLs (i.e., ) or censored if it is outside the DLs. The J distinct values of the observed outcomes are denoted as

. When there are no observations outside the DLs, these values are treated as ordered categories in CPMs and they are the anchor points in the nonparametric likelihood (3), and correspondingly there are

alpha parameters,

. With observations outside the DLs, the likelihood (3) needs to be modified accordingly.

When there are observations below the lower DL, we do not know their values except that they are . As there is no way to distinguish them, we treat them as a single category, denoted as

. Note that

is not a value but a symbol for the additional category below

. The nonparametric likelihood for a subject outcome censored at the lower DL l is

where

is the extra alpha parameter corresponding to category

such that

. Because

, the previously lowest category, now has a category below it, the nonparametric likelihood for a subject with

becomes

Similarly, when there are observations above the upper DL, they are also treated as a single category, denoted as , which is a symbol for the additional category above

. The nonparametric likelihood for a subject censored at the upper DL u is

Because is no longer the highest category,

will need to be estimated, and the likelihood for a subject with

is now

Put together, with observed data subject to a single lower DL and a single upper DL, the CPM likelihood is

(4)

(4) which is equivalent to (3) except with two new anchor points,

and

. Therefore, (4) is maximized in an identical manner to (3), with outcomes below the lower DL and outcomes above the upper DL simply assigned to categories

and

, respectively.

In summary, when there are data censored below the lower DL, we add a new anchor point and a new parameter

; when there are data censored above the upper DL, we add a new anchor point

and a new parameter

. The alpha parameters to be estimated are

when there are no DLs or no data censored at DLs,

when both categories

and

are added,

when only

is added, and

when only

is added.

In practice, one can fit the NPMLE in these settings using the orm() function by setting outcomes below the lower DL to some arbitrary number and outcomes above the upper DL to some arbitrary number

. Note that unlike single imputation approaches for dealing with DLs, the CPM estimation procedure is invariant to the choice of these numbers assigned to values outside the DLs. The CPM (1) assumes that after some unspecified transformation, the outcome follows a linear model both within and outside the DLs. In contrast, parametric approaches to deal with DLs assume the full distribution of the outcome conditional on covariates is known, both within and outside DLs. Hence, CPMs make much weaker assumptions than fully parametric approaches.

2.3 Multiple Detection Limits

We now consider the general situation where data may be collected from multiple study sites. A site may have no DL, only one DL, or both lower and upper DLs. Each site may have different lower DLs and different upper DLs, which may change over time.

Every subject has a vector X of covariates and three underlying random variables , where Y is the true outcome and

are the lower and upper DLs. When there is no upper DL,

, and when there is no lower DL,

.

and

are assumed to be independent of Y conditional on X; the vector X may contain variables for study sites or calendar time. This non-informative censoring assumption is typically plausible as DLs are determined by available equipment/assays.

We assume the CPM (1) holds for all subjects. Due to DLs, we may not always observe Y. Instead, we only observe , where

and

is a variable indicating whether Y is observed or censored at a DL:

and

if Y is observed,

and

if

, and

and

if

.

Given a dataset (

), we first determine how many anchor points are needed to support the nonparametric likelihood of the CPM. Let J be the number of distinct values of

among those with

; they are denoted as

. For data without any DLs, these points are the anchor points for the nonparametric likelihood, and they are effectively treated as ordered categories in a CPM. Let

be the set of these values. When there are data with

, let l be the smallest

with

. Similarly, when there are data with

, let u be the largest

with

. If

, we add a category into S below

, denoted as

; note that it is not a value but a symbol for the additional category in S below

. Similarly, if

, we add

into S, which is a symbol for the additional category above

. Depending on the data, the number of ordered categories can be J,

, or

.

Consider the situation where both and

have been added to S (i.e.,

). When

, the nonparametric likelihood for

is

(5)

(5) where j is the index such that

. When

, the nonparametric likelihood for

is

(6)

(6)

where j is the index such that

when

. When

, the nonparametric likelihood for

is

(7)

(7)

where j is the index such that

when

. The overall nonparametric likelihood is the product of these individual likelihoods over all subjects. Note that if there are no uncensored observations between two lower (or upper) DLs, the two DLs are effectively treated as the same DL. A toy example to illustrate our definition is provided in supplementary materials Table S1.

Slight modifications will be applied when no or only one additional category is added to S. When there is no need to add to S (i.e., when

or there are no lower DLs), only the second row in the likelihood (6) for

will be employed, and the likelihood for

with

is

. When there is no need to add

to S (i.e., when

or there are no upper DLs), only the second row in the likelihood (7) for

will be employed, and the likelihood for

with

is

.

Similar to the likelihood of CPM for data without DLs, the individual likelihoods presented above involve either one alpha parameter or two adjacent alpha parameters. As a result, the Hessian matrix continues to be tridiagonal, allowing us to use Cholesky decomposition to solve for the NPMLEs and efficiently estimate their variances using asymptotic approximations from which p-values and confidence intervals (CIs) can be computed. We have developed an R package, multipleDL available on the Comprehensive R Archive Network (CRAN), which uses the optimizing() function in the rstan package to maximize the likelihood (Stan Development Team Citation2020).

As mentioned in the Introduction, there are other existing techniques for fitting semiparametric linear transformation models with doubly censored data (Cai and Cheng Citation2004; Shen Citation2011). Similar to our proposed CPMs, both of these methods assume a model of the form of (1) with known and

, and with

nonparametrically estimated using a step function. These two methods rely on estimating equations expressed with counting process notation. Both methods consistently estimate

and

under the same assumptions as the CPM, but they are less efficient than our NPMLE, which attains the semiparametric efficiency bound (Li et al.Citation2023). The method of Shen (Citation2011) treats the left-censored data below lower detection limits as left-truncated, thus, discarding information. The approach of Cai and Cheng (Citation2004) also loses efficiency as individuals only contribute information for times between

and

; for example, if an observation is observed between

and

, when estimating

for

, this observation is not used even though it is known that it was above the lower detection limit. Details are given in supplementary materials S1.3, and simulations comparing CPMs to these approaches are presented in Section 4.

2.4 Interpretable Quantities and Conditional Quantiles

Interpretation of results after fitting CPMs to outcomes with DLs is similar to settings without DLs. Depending on the link function, may be directly interpretable. The conditional CDF, probabilistic indexes, and conditional quantiles are also easily derived. Note, however, that without additional assumptions on the distribution of the outcome outside DLs, conditional expectations cannot be estimated.

We now describe how to infer conditional quantiles from a CPM fitted on data with DLs. The conditional CDF from a CPM for a given x can be computed as where j is the index such that

; if there is no

such that

, then

. For ease of presentation, we fix x and let

(

); for convenience, let

. Our goal is to define a quantile function

, where

, for the conditional distribution given x.

The quantile function for a CDF is typically defined as

. A plug-in estimator for an estimated CDF,

, is

. When applied to our setting,

when

. This estimator may not be suitable for CPMs because

is a step function and therefore

only takes values at the anchor points, which can be undesirable for continuous outcomes, especially when there is a large gap between adjacent anchor points.

Liu et al. (Citation2017) proposed to estimate quantiles for CPMs with linear interpolation. Specifically, given a fixed p, let be the index such that

. When

,

and define

, which is a linear interpolation between

and

. When

,

is set to be

. Recall that

is not a value but a symbol for being below the lower DL, l; we thus relabel it as “

”, so when

,

“

”. For the linear interpolation between

and

, we set

to be l. Similarly,

is labeled “

” and assigned the value u for the linear interpolation between

and

.

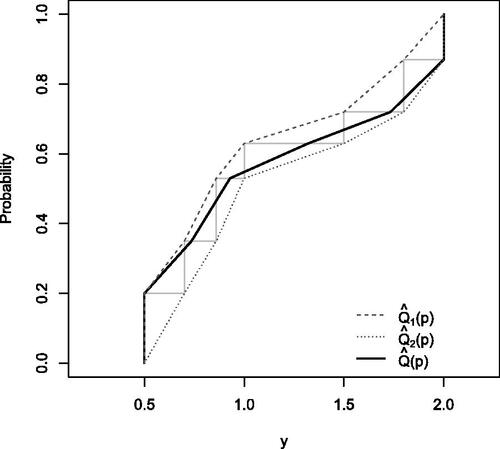

is illustrated as the dashed line in . An alternative definition is to interpolate between

and

:

when

and

“

” when

.

is illustrated as the dotted lines in . For continuous data without DLs,

and

converge as the sample size increases. However, they are problematic for continuous data with DLs because

for all

and

for all

even though there are nonzero estimated probabilities at the lower DL

and upper DL

.

Figure 2: Illustration of three approaches for conditional quantiles. The dataset has a lower DL 0.5, an upper DL 2, and five observed values of y: 0.7, 0.86, 1, 1.5, 1.8. Thus, . The dashed lines are for

, the dotted lines are for

, the solid black lines are for

, and the solid gray lines are for the empirical CDF. Here,

when

, and

when

.

We propose a new quantile estimator as a weighted average between and

,

(8)

(8) where

when

, 0 when

, and 1 when

. This definition is shown as the black curve in . Note that

equals

“

” when

, and equals

“

” when

. It can be shown that similar to

and

,

is also piecewise linear with transition points at

(

). Note that with multiple DLs, the definition seamlessly applies with l and u being the smallest lower and largest upper DLs, respectively. In situations where there is only a lower DL or an upper DL, our definition of

is similar. Confidence intervals for conditional quantiles can be estimated by applying a weighted linear interpolation to the confidence intervals of the conditional CDF in a similar manner (Liu et al.Citation2017).

3 Application

We fit a CPM to study factors associated with viral load 6 months after starting ART in Latin America among 5301 adults with HIV, as described in the Introduction. Our CPM included age, sex, study center, probable route of HIV infection, the indicator that the patient had an AIDS event prior to ART initiation, their CD4 count and viral load at ART initiation (baseline), the ART regimen that they started, the calendar year of ART initiation, and the time between their baseline VL and the 6-month VL measurement. (The baseline VL was the measurement closest to ART initiation within a window of –180 to 30 days; the 6-month VL was the measurement closest to 180 days within a window of 90 days.) Baseline CD4 was square-root transformed and baseline VL was log

transformed. The CPM used a logit link function. The response variable, 6-month VL, had

distinct observed values.

Results are shown in . With the logit link, the parameters can be interpreted as log odds ratios and are presented as odds ratios along with 95% CIs. p-values are likelihood ratio test p-values. The results suggest that study center, route of infection, prior AIDS event, baseline CD4, baseline VL, ART regimen, months to VL measurement, and calendar year are all associated with VL at 6 months. For example, holding other variables constant, a 10-fold increase in VL at baseline is associated with a 44% increase in the odds of having a higher VL at 6 months (95% CI 34%–54%). Initiating a more modern ART regimen based on integrase strand transfer inhibitors (INSTIs) resulted in a 45% decreased odds of having a higher 6-month VL (95% CI 25%–60% decreased odds) compared with regimens based on older non-nucleoside reverse transcriptase inhibitors (NNRTIs). This result was seen after fixing all other variables, including calendar year of ART initiation. In addition, holding all other variables constant, a person starting ART one calendar year later had an estimated 11% lower odds of having a higher 6-month VL (95% CI 9%–12%).

Table 1: The coefficients in CPMs can be interpreted as log odds ratios.

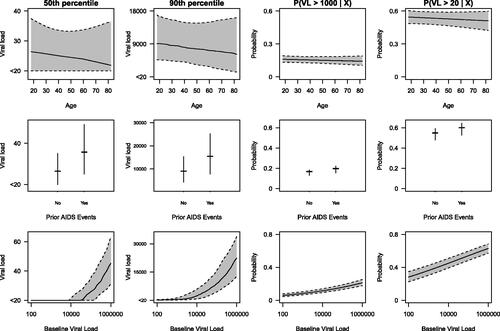

Quantiles and cumulative probabilities are also easily extracted from the CPM. The first row of shows the estimated conditional 50th and 90th percentiles of 6-month VL and the conditional probabilities for 6-month VL being greater than 1000 and 20 copies/mL as functions of age. The plots show that VL at 6 months is fairly similar across age after fixing the other covariates. The second row of contains the estimated conditional quantiles and probabilities as functions of whether a patient had an AIDS event prior to starting ART. People with a prior AIDS event (36%) tended to have a higher VL at 6 months. The third row of are the estimated conditional quantiles and probabilities as functions of baseline VL. People with a higher baseline VL tended to have a higher VL at 6 months. The smallest DL is 20 copies/mL, and all VL values less than this DL belong to the smallest ordered category, which we label as “”. The flat line at “

” in the lower left plot suggests that for those with a baseline VL

copies/mL, the estimated median 6-month VL, as well as its 95% confidence interval, is

copies/mL. Note that our analyses make no attempt to estimate the specific values below the lower DL because the data contain no information on values below 20 copies/mL.

Figure 3: The estimated conditional 50th and 90th percentiles of 6-month VL and the conditional probability of 6-month VL being greater than 1000 and 20 as functions of age (top row), prior AIDS events (middle row), and baseline VL (bottom row) while keeping other covariates at their medians (for continuous variables) or modes (for categorical variables) based on our method.

Figures S1 and S2 of the supplementary materials show diagnostic plots examining model fit using probability-scale residuals (Shepherd, Li, and Liu Citation2016). From QQ-plots, the logit link function appears reasonable and yields a higher log-likelihood than other link functions considered (probit, loglog, and cloglog). Residual-by-predictor plots show no strong relationship between residuals and continuous predictor variables, suggesting model fit may be adequate. Supplementary materials Table S2 and Figure S3 show results from a similar CPM, except with continuous covariates expanded using restricted cubic splines to relax linearity assumptions as a sensitivity analysis. The results are fairly similar.

From , we note that 6-month VL was similar across all study centers except for the center in Mexico. In sensitivity analyses, we repeated the analyses of comparing results using data only from Mexico () versus using data pooled across the other four sites (

). Results are in Table S3 in supplementary materials. The only association that substantially differed between Mexico and the other sites was the association with initial ART regimen. In Mexico, patients starting an INSTI- versus an NNRTI-based regimen tended to have similar 6-month viral loads (OR = 1.0, 95% CI 0.51–1.93), whereas at the other sites, those starting an INSTI- versus an NNRTI-based regimen tended to have lower 6-month viral loads (OR = 0.50, 95% CI 0.36–0.71). Interestingly, among patients starting an INSTI-based regimen, most at our Mexican site used a different drug (bictegravir) than those at the other four sites (dolutegravir). Because the study covers nearly 20 years, as an additional sensitivity analysis we compared estimates among those starting ART from 2000 to 2009 (

) versus those starting ART from 2010 to 2018 (

). Results are in Table S4 in supplementary materials. Estimated odds ratios were fairly similar between the time periods with two exceptions: First, the odds ratio for our Chilean site changed quite a bit, with starting ART in Chile pre-2010 associated with higher viral loads (OR for Chile vs. Peru = 2.34, 95% CI 1.45–3.77) whereas after 2010 associated with lower viral loads (OR = 0.87, 95% CI 0.72–1.05). Second, the odds ratio for INSTI-based regimens appeared to differ pre- versus post-2010 (OR for INSTI- vs. NNRTI-based ART = 1.75, 95% CI 0.46–6.65 pre-2010; OR = 0.57, 95% CI 0.41–0.78 post-2010). Pre-2010, use of INSTI-based regimens was rare (hence, the wide confidence interval) and likely among people with worse health as a sort of experimental salvage drug.

For comparison, we also analyzed the data using competing approaches for addressing DLs described earlier. First, we fit logistic regression to 6-month VL values dichotomized as versus

copies/mL, corresponding to the highest DL. Results are in Table S5 of supplementary materials. The

coefficients in the CPM and the logistic regression model represent identical quantities on the latent logistic distribution scale, the location shift due to covariates (Agresti Citation2013). Whereas the logistic regression estimates arise from a single dichotomization of the outcome variable, the CPM estimates can be thought of as weighted averages of log odds ratio estimates for all possible orderable dichotimizations of the observed response values (Foresi and Peracchi Citation1995). In our HIV application, the CPM and the logistic regression model tended to give similar estimates of the

coefficients, although there were some differences and 95% CIs from the CPM tended to be narrower, as expected. For example, the odds ratios for the cumulative probability and logistic regression models for prior AIDS were 1.24 and 1.27, respectively. However, the 95% confidence interval (on the log odds ratio scale) was 29% narrower with the CPM than with the logistic regression model. The odds ratios for an INSTI- versus NNRTI-based regimen were 0.55 and 0.44 for the CPM and the logistic regression model, respectively, whereas the width of the 95% CI (log odds ratio scale) for the CPM was 36% narrower.

We also fit a full likelihood-based model assuming the outcome variable was normally distributed after transformation (supplementary materials Table S6). Note that even the log-transformed 6-month VL was still quite skewed (supplementary materials Figure S4), and hence the assumptions of this fully parametric approach are questionable. The parameters in this approach and those from the CPM with the logit link are not directly comparable because they are on different scales; however, the directions of associations were similar.

4 Simulations

Extensive simulations of CPMs with continuous data have been reported elsewhere (Liu et al.Citation2017; Tian et al.Citation2020). Here we present a limited set of simulations investigating the performance of CPMs with data subject to multiple DLs.

Data were generated for sample sizes of and

such that the outcome Y followed a normal linear model after log-transformation in the following manner:

Data were simulated to mimic a setting with three equal-sized sites, where the DL of Y and the mean of X, , could vary by site. We considered the following five scenarios:

No DL,

for all sites.

Lower DLs 0.16, 0.30, and 0.50 for the three sites (about 17%, 20%, and 20% censored), and

and 0.5 for site 1, 2, and 3, respectively.

Upper DLs 0.16, 0.30, and 0.50 for the three sites (about 90%, 80%, and 70% censored), and

for all sites.

Lower DLs 0.2, 0.3, and

(13%, 20%, and 0% censored) and upper DLs at

, 4, and 3.5 (0%, 19%, and 16% censored) for the three sites, and

for all sites.

Lower DLs 0.4, 1.0, and 2.5 (38%, 50%, and 62% censored), and

and 0.5 for site 1, 2, and 3, respectively.

Additional simulations considered scenario 2 with (i) to evaluate Type I error; (ii) link function misspecification; (iii) misspecification of the functional form of the model; (iv) comparison with other methods for handling DLs.

CPMs were fit to the observed data without using any knowledge of the correct transformation or

. We simulated 10,000 replications under each scenario. Bias, root mean squared error (RMSE), and coverage of 95% CIs were estimated with respect to

, conditional quantiles for

, and conditional CDFs for

. The choice of quantile and CDF levels varied based on the simulation scenario to ensure that we were estimating a quantity that could be well-estimated based on the scenario-specific detection limits. Specifically, in scenarios 2 and 5, because of the high censoring rates, we estimated the quantiles at

and 0.9 (i.e., the 3rd and 90th percentiles) and CDFs at

and 3.0, respectively.

shows results under correctly specified models (i.e., probit link function and X correctly included). CPMs resulted in nearly unbiased estimation and good CI coverage for , conditional quantiles

, and conditional CDFs

for all five scenarios. As the sample size increased, both the bias and RMSE decreased. The RMSE for

tended to be higher in scenarios with substantial censoring (e.g., scenario 5), as expected. In a simulation under scenario 2 with

set to zero, the Type I error rate was preserved with both

and

(coverage of 95% confidence intervals of 0.948 and 0.947, respectively, and approximately uniformly distributed p-values, supplementary materials Figure S5).

Table 2: Simulation results for multiple DLs.

Supplementary materials Table S7 shows the performance of CPMs for the data generated in scenario 2 under moderate and severe link function misspecification. Link function misspecification is equivalent to misspecification of the distribution of because

. Specifically, we fit CPMs with logit and loglog link functions. Compared to the correct probit link, the logit is moderate misspecification (similar shape and skewness as the true distribution of

), while the loglog represents severe misspecification (very different shape and skewness from the true distribution of

). The performance of CPMs was reasonable with moderate link function misspecification with bias for the conditional median and the conditional CDF

under 6% and coverage of 95% CI generally close to 0.95 with

, although as low as 0.85 with

. With severe link function misspecification, the performance of CPMs was noticeably worse, with bias as high as 8% and coverage as low as 0.46 for the conditional median at

. CPMs, like other regression models, were not very robust to model misspecification due to leaving out a variable (details in supplementary materials Table S8).

shows results under scenario 2 with comparing CPMs with some widely used approaches for handling DLs, specifically single imputation with

, single imputation with

, multiple imputation, and fully parametric maximum likelihood estimation (MLE). For all non-CPM approaches, we first correctly assumed that the outcome variable followed a log-normal distribution. With the imputation approaches, unobserved values were imputed, then a linear regression model was fit on the log-transformed outcome to obtain the

estimate, and median regression was used to estimate conditional medians. In multiple imputation, the correct tail distribution was used for imputing data and 10 iterations were performed for each dataset. For the MLE approach, the medians were computed using the estimated parameters of the fully parametric model. As expected, the MLE performed the best with the lowest bias and RMSE, and highest efficiency because the distributional assumptions matched the true distribution. The performance of multiple imputation was similar to that of the MLE, but with higher RMSE. As a semiparametric method, the CPM also resulted in minimal bias and correct coverage, but had slightly larger variance and RMSE. In contrast, the single imputation estimators were biased and tended to have poor coverage, especially for estimating

. We also evaluated the approaches under misspecification of the transformation. Specifically, we generated data in a manner similar to scenario 2 except using a different transformation,

, where

is the probability density function of the standard normal distribution, and

is the inverse of the CDF for a Chi-square distribution with degrees of freedom equal to 5. Lower DLs were set to be 2, 3, and 4 (corresponding to approximately 14%, 23%, and 30% censored) for sites 1, 2, and 3, respectively. The non-CPM approaches assumed a normal linear model after an incorrectly specified log-transformation. As shown in the lower half of , only the CPM resulted in unbiased estimates, because it is the only approach that does not require pre-transformation or strict distributional assumptions.

Table 3: Comparison of CPM with common approaches for addressing DLs under with the unknown transformation correctly and incorrectly specified.

Finally, we compared our method with two existing methods for fitting semiparametric linear transformation models to doubly censored data (Cai and Cheng Citation2004; Shen Citation2011). The efficiency gains of our method over these other approaches are seen in , which compares estimates of with data generated under a proportional hazards model under various levels of censoring similar to those in scenarios 2–5. (Details are in the supplementary materials S3.1.) For scenario 1 (no censoring) and scenarios 2, 4, and 5 (all have some left-censoring), the bias of the CPM was similar to that of the other two approaches, but the empirical standard error and RMSE was lower for the CPM. The improved efficiency was particularly notable as the proportion of censored observations increased. For example, in scenario 2 (approximately 20% left-censored) with

, the empirical SE for our estimator of

was 0.040 compared to 0.054 and 0.051 for the estimators of Cai and Cheng (Citation2004) and Shen (Citation2011), respectively. Under scenario 5 (approximately 50% left-censored) with

, the empirical SE for our estimator was 0.044 compared to 0.069 for both of the other estimators. Under scenario 3 (approximately 80% right-censored), the empirical SE and RMSE for

were smaller for the method of Shen (Citation2011) with

but they were approximately the same between our method and that of Shen (Citation2011) with

. This finding is similar to that observed by Liu et al. (Citation2017) with uncensored data generated under a proportional hazards model, where with small sample sizes Cox regression had lower RMSE for estimating

than CPM, but similar RMSE as the sample size increased. Under scenario 3, the RMSE for the estimator of Cai and Cheng (Citation2004) was higher than the other two methods.

Table 4: Simulation results for multiple DLs comparing CPMs with the methods proposed by Cai and Cheng (Citation2004) (labeled Cai) and Shen (Citation2011) (labeled Shen).

5 Discussion

In this article, we have described an approach to address multiple detection limits in response variables using CPMs. We illustrated the method with a study looking at factors associated with HIV viral load after antiretroviral therapy initiation, where a large percentage of people had measurements below lower detection limits, which varied over time and by study site. CPMs have several advantages over commonly employed approaches for addressing DLs. They make minimal distributional assumptions, they yield interpretable parameters, and they are invariant to the value assigned to measures outside DLs. Any values outside the lowest/highest DLs are simply assigned to the lowest/highest ordinal categories, and estimation proceeds naturally. From simulation studies we saw that CPMs performed well, even with high censoring rates and relatively small sample sizes, and that they were more generally more efficient than previously proposed methods for fitting semiparametric transformation models to censored data.

Our focus has been on addressing settings with multiple detection limits. However, as highlighted in Section 2.2, CPMs are an effective analysis approach that can also be easily applied to address response data subject to a single detection limit. The supplementary materials contain an example analysis using HIV data subject to a single detection limit (Section S2.2) and a set of simulations demonstrating the excellent performance of CPMs in settings with a single detection limit (Section S3.2).

CPMs have some limitations. Because we do not make distributional assumptions outside DLs, we are not able to estimate conditional expectations after fitting a CPM; however, with DLs, conditional quantiles are probably more reasonable statistics to report anyway. Although CPMs do not make distributional assumptions on the response variable, the link function must still be specified, which corresponds to making an assumption on the distribution of the response variable after an unspecified transformation. Performance can be poor with severe link function misspecification; however, CPMs appear to be fairly robust to moderate misspecification. Note that the latent variable is typically assumed to follow a standard distribution (e.g., standard normal or standard logistic). If in our model, for example, after an unspecified transformation the data are assumed to follow a normal distribution with variance , then the transformation is simply a rescaling of what it would be if the latent variable distribution had variance 1. Specifically, if

with

, and

with

, then

and

. Similarly, if the model had an intercept term, for example,

with

, the intercept term would also be absorbed by the transformation:

. Notice that

and

are not identifiable in these latent variable models where we leave

unspecified. By assuming the latent variable follows a standard distribution, the

coefficient is more interpretable than it would be otherwise. For example, suppose we assumed that

with

. Then

(not

) would have the usual odds ratio interpretation for a 1-unit increase in X.

Further research could consider extensions of CPMs to handle clustered or longitudinal data with DLs. The website, https://github.com/YuqiTian35/DetectionLimitCode, contains code for our application examples and simulations. The website also contains a synthetic dataset similar to the original dataset on which our application analysis code can be run. Our R package, multipleDL, currently handles probit, logit, loglog, and cloglog link functions.

Supplementary Materials

Supplementary materials include details about the relationship between CPMs and the Wilcoxon test, a demonstration of the likelihood in a toy example with multiple detection limits, extended descriptions of other approaches for fitting semiparametric transformation models with censoring, additional results for the HIV viral load study, additional simulation results, and a second application in a setting with a single detection limit.

Acknowledgments

The authors gratefully acknowledge CCASAnet investigators for providing data for the HIV study.

Disclosure Statement

The authors report there are no competing interests to declare.

Additional information

Funding

References

- Agresti, A. (2013), Categorical Data Analysis (3rd ed.), Hoboken, NJ: Wiley.

- Baccarelli, A., Pfeiffer, R., Consonni, D., Pesatori, A. C., Bonzini, M., Patterson Jr, D. G., Bertazzi, P. A., and Landi, M. T. (2005), “Handling of Dioxin Measurement Data in the Presence of Non-detectable Values: Overview of Available Methods and their Application in the Seveso Chloracne Study,” Chemosphere, 60, 898–906. DOI: 10.1016/j.chemosphere.2005.01.055.

- Cai, T., and Cheng, S. (2004), “Semiparametric Regression Analysis for Doubly Censored Data,” Biometrika, 91, 277–290. DOI: 10.1093/biomet/91.2.277.

- De Neve, J., Thas, O., and Gerds, T. A. (2019), “Semiparametric Linear Transformation Models: Effect Measures, Estimators, and Applications,” Statistics in Medicine, 38, 1484–1501. DOI: 10.1002/sim.8078.

- Fedorov, V., Mannino, F., and Zhang, R. (2009), “Consequences of Dichotomization,” Pharmaceutical Statistics, 8, 50–61. DOI: 10.1002/pst.331.

- Fiévet, B., and Della Vedova, C. (2010), “Dealing with Non-detect Values in Time-Series Measurements of Radionuclide Concentration in the Marine Environment,” Journal of Environmental Radioactivity, 101, 1–7. DOI: 10.1016/j.jenvrad.2009.07.007.

- Foresi, S., and Peracchi, F. (1995), “The Conditional Distribution of Excess Returns: An Empirical Analysis,” Journal of the American Statistical Association, 90, 451–466. DOI: 10.1080/01621459.1995.10476537.

- Garland, M., Morris, J. S., Rosner, B. A., Stampfer, M. J., Spate, V. L., Baskett, C. J., Willett, W. C., and Hunter, D. J. (1993), “Toenail Trace Element Levels as Biomarkers: Reproducibility Over a 6-year Period,” Cancer Epidemiology and Prevention Biomarkers, 2, 493–497.

- Harel, O., Perkins, N., and Schisterman, E. F. (2014), “The Use of Multiple Imputation for Data Subject to Limits of Detection,” Sri Lankan Journal of Applied Statistics, 5, 227–246. DOI: 10.4038/sljastats.v5i4.7792.

- Harrell, F. (2020), rms: Regression Modeling Strategies, R package version 6.1.0.

- Helsel, D. R. (2011), Statistics for Censored Environmental Data Using Minitab and R (Vol. 77), Hoboken, NJ: Wiley.

- Hornung, R. W., and Reed, L. D. (1990), “Estimation of Average Concentration in the Presence of Nondetectable Values,” Applied Occupational and Environmental Hygiene, 5, 46–51. DOI: 10.1080/1047322X.1990.10389587.

- Jiamsakul, A., Kariminia, A., Althoff, K. N., Cesar, C., Cortes, C. P., Davies, M.-A., Do, V. C., Eley, B., Gill, J., Kumarasamy, N., et al. (2017), “HIV Viral Load Suppression in Adults and Children Receiving Antiretroviral Therapy–Results from the IeDEA Collaboration,” Journal of Acquired Immune Deficiency Syndromes (1999), 76, 319–329. DOI: 10.1097/QAI.0000000000001499.

- Li, C., Tian, Y., Zeng, D., and Shepherd, B. E. (2023), “Asymptotic Properties for Cumulative Probability Models for Continuous Outcomes,” Mathematics, 11, 4896. DOI: 10.3390/math11244896.

- Liu, Q., Shepherd, B. E., Li, C., and Harrell Jr, F. E. (2017), “Modeling Continuous Response Variables Using Ordinal Regression,” Statistics in Medicine, 36, 4316–4335. DOI: 10.1002/sim.7433.

- Lubin, J. H., Colt, J. S., Camann, D., Davis, S., Cerhan, J. R., Severson, R. K., Bernstein, L., and Hartge, P. (2004), “Epidemiologic Evaluation of Measurement Data in the Presence of Detection Limits,” Environmental Health Perspectives, 112, 1691–1696. DOI: 10.1289/ehp.7199.

- McCullagh, P. (1980), “Regression Models for Ordinal Data,” Journal of the Royal Statistical Society, Series B, 42, 109–127. DOI: 10.1111/j.2517-6161.1980.tb01109.x.

- Pan, W., Wu, H., Luo, J., Deng, Z., Ge, C., Chen, C., Jiang, X., Yin, W.-J., Niu, G., Zhu, L., et al. (2017), “Cs2AgBiBr6 Single-Crystal X-ray Detectors with a Low Detection Limit,” Nature Photonics, 11, 726–732. DOI: 10.1038/s41566-017-0012-4.

- Shen, P.-S. (2011), “Semiparametric Analysis of Transformation Models with Doubly Censored Data,” Journal of Applied Statistics, 38, 675–682. DOI: 10.1080/02664760903563635.

- Shepherd, B. E., Li, C., and Liu, Q. (2016), “Probability-Scale Residuals for Continuous, Discrete, and Censored Data,” Canadian Journal of Statistics, 44, 463–479. DOI: 10.1002/cjs.11302.

- Stan Development Team. (2020), RStan: the R interface to Stan, R package version 2.21.2.

- Tian, Y., Hothorn, T., Li, C., Harrell Jr, F. E., and Shepherd, B. E. (2020), “An Empirical Comparison of Two Novel Transformation Models,” Statistics in Medicine, 39, 562–576. DOI: 10.1002/sim.8425.

- Wing, S., Shy, C. M., Wood, J. L., Wolf, S., Cragle, D. L., and Frome, E. (1991), “Mortality Among Workers at Oak Ridge National Laboratory: Evidence of Radiation Effects in Follow-Up Through 1984,” JAMA, 265, 1397–1402.

- Wu, L., Thompson, D. K., Li, G., Hurt, R. A., Tiedje, J. M., and Zhou, J. (2001), “Development and Evaluation of Functional Gene Arrays for Detection of Selected Genes in the Environment,” Applied and Environmental Microbiology, 67, 5780–5790. DOI: 10.1128/AEM.67.12.5780-5790.2001.

- Zeng, D., and Lin, D. (2007), “Maximum Likelihood Estimation in Semiparametric Regression Models with Censored Data,” Journal of the Royal Statistical Society, Series B, 69, 507–564. DOI: 10.1111/j.1369-7412.2007.00606.x.

- Zhang, D., Fan, C., Zhang, J., and Zhang, C.-H. (2009), “Nonparametric Methods for Measurements Below Detection Limit,” Statistics in Medicine, 28, 700–715. DOI: 10.1002/sim.3488.